Abstract

An experiment consisting of a network of sensors can endow several advantages over an experiment with a single sensor: improved sensitivity, error corrections, spatial resolution, etc. However, there is often a question of how to optimally set up the network to yield the best results. Here, we consider a network of devices that measure a vector field along a given axis; namely for magnetometers in the Global Network of Optical Magnetometers for Exotic physics searches (GNOME). We quantify how well the network is arranged, explore characteristics and examples of ideal networks, and characterize the optimal configuration for GNOME. We find that by re-orienting the sensitive axes of existing magnetometers, the sensitivity of the network can be improved relative to the past science runs.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Various experiments make use of a network of sensors in lieu of a single, centralized device. A network of sensors can come with several advantages such as having better sensitivity than a single device, being able to catch and correct errors, and achieving superior spatial resolution. However, there are also a few challenges and complexities that arise when involving many devices. In addition to the logistical challenges of managing multiple devices at once and making sense of several data streams, there is the foundational question of how to best arrange the network. This question is explored for a network consisting of a specific class of sensors: ones that measure a vector field.

A common motivational interest in designing these network experiments is in measuring spatially extended phenomena. For example, interferometer networks used to measure gravitational waves [1,2,3], gravimeters used for geodesy [4], and magnetometers used for geophysics [5,6,7]; all of which measure vector-field phenomenaFootnote 1 on scales the size of the Earth or larger. Networks have also been used to search for direct evidence of dark matter, which is believed to dominate the mass of the galaxy but only weakly interacts with visible matter; see, e.g., reviews in Refs. [8,9,10]. This includes both gravimeters [11,12,13,14] that search for the motion of dark matter captured by the Earth as well as magnetometers [15,16,17,18,19,20] that search for coupling between dark and visible matter.

For this work, the Global Network of Optical Magnetometers for Exotic physics searches (GNOME) [15,16,17,18] is of particular interest. GNOME consists of shielded magnetometers around the Earth and has the goal of finding new, exotic (vector) fields that couple to fermionic spin. For example, GNOME searches for axion-like particle (ALP) domain walls [15, 17, 18] via the coupling of the ALP field gradient to nucleon spin. The gradient, in this case, is in effect a vector field; albeit with typical constraints, such as having a vanishing curl.

This paper is organized as follows: a method of calculating the network sensitivity is described in Sect. 2, a quantification of network quality is described in Sect. 3, ideal and optimized networks are described in Sect. 4, and concluding remarks are given in Sect. 5. Throughout this paper, the network under consideration will consist of the GNOME magnetometers. However, the principles explored here can be extended to other network experiments.

2 Sensitivity

The magnetometers in the network each possess a “sensitive axis” that results in the attenuation of a signal when the vector field is not parallel or anti-parallel to the sensitive axis. Denote the sensitive axis of magnetometer i with \(\varvec{d_i}\). The magnitude of this vector reflects the strength of the coupling such that a vector (field) \({\varvec{m}}\) will induce a signal \(s_i=\varvec{d_i}\cdot {\varvec{m}}\) in the \(i^\text {th}\) magnetometer. Consider the case in which only one vector \({\varvec{m}}\) describes the signal. For a domain wall, this could be the gradient at the center of the wall with the timing of the signal adjusted to account for delays as the domain wall crosses the network. The signals observed by the network can be simplified into the linear equation,

where D is a matrix whose rows are \(\{\varvec{d_i}\}\) and similarly \({\varvec{s}}=\{ s_i \}\). To avoid a trivial case, it is assumed that the network consists of at least one operating sensor; so there exists a vector \({\varvec{m}}\) such that \(D{\varvec{m}}\ne {\varvec{0}}\).

In practice, one will measure a set of signals \({\varvec{s}}\) with some error. Let \(\Sigma _s\) be the covariance matrix in the measurements \({\varvec{s}}\) that characterizes this error; the matrix will generally be diagonal as noise between the GNOME magnetometers is uncorrelated. An approximate solution to Eq. (1) given the error in measurement is obtained by minimizing \(\chi ^2=(D{\varvec{m}}-{\varvec{s}})^T\Sigma _s^{-1}(D{\varvec{m}}-{\varvec{s}})\). This solution is given by

where \(\Sigma _m\) is the covariance matrix for \({\varvec{m}}\). As long as \(\Sigma _s\) is a positive-definite matrix, as is the case for a realistic network, \(\Sigma _s^{-1}\) is well-defined. However, \(\Sigma _m\) will not be well-defined if D has a non-trivial kernel, \(D{\varvec{m}}=0\) for \({\varvec{m}}\ne {\varvec{0}}\); in other words, the network has a “blind spot.” In this pathological case, one cannot uniquely reconstruct \({\varvec{m}}\), though one can still salvage a meaningful definition of sensitivity.

The network sensitivity is defined as the magnitude necessary to induce a \(|{\varvec{m}}|\) signal-to-noise ratio \(\zeta =\sqrt{{\varvec{m}}^T \Sigma _m^{-1} {\varvec{m}}}\) of one. Thus, the sensitivity in the direction \(\hat{{\varvec{m}}}\) is

This will vary by direction. However, one can find the range of sensitivities over different directions by solving for the eigenvalues of the symmetric, positive semi-definite matrix \(\Sigma _m^{-1} = \left( D^T \Sigma _s^{-1} D \right) \) — the smallest eigenvalue \(\lambda _\text {min} = \beta _0^{-2}\) giving the “worst-case” sensitivity as a large signal \(\beta _0\) would be needed to induce a significant signal. Likewise the largest eigenvalue \(\lambda _\text {max} = \beta _1^{-2}\) gives the “best-case,” and the corresponding eigenvectors are the directions that induce such signals. If the network has a blind spot, then \(\lambda _\text {min} = 0\) so \(\beta \rightarrow \infty \) along the corresponding direction.

3 Quality factor

Given the sensitivity defined by Eq. (3), there remains the question of how to optimize the network; in particular, how to optimize the directions \(\{\varvec{d_i}\}\) for the best network. If there is distribution of directions of interest for the vector field, one could define the optimization by performing some weighted average of Eq. (3) over this distribution. If one is ambivalent about the direction of the signal, the worst-case direction indicates a bound on sensitivity.

Ideally, the magnetometers in the network will be oriented to evenly cover all directions. If there is a preferred and unpreferred direction, one could improve the sensitivity in the unpreferred direction by rotating the sensitive axis of magnetometers toward this direction. Under practical conditions, it is not possible to have GNOME always operating under optimal conditions because the noise in individual sensors varies over time and magnetometers will occasionally activate and deactivate.

The quality factor over time for Science Runs 1–5. Solid lines represent 1 day rolling averages. a The number of active sensors over time. b The quality factors over time. The color indicates the number of active sensors. c The approximate factor by which the network could be improved if the sensors were optimally oriented using the approximate optimized sensitivity, Eq. (5)

To judge how well the GNOME network is performing, it helps to define some quantitative “quality factor.” This factor would ideally reflect how optimally the network is set up with the magnetometers available and not the absolute sensitivity of the network. That is, the quality of the network refers to how well the magnetometers are oriented and is not affected by improving all magnetometers by a constant factor. One possibility is the quotient of the best and worst sensitivity,

This factor will be zero if network has a blind spot (\(\beta _0\rightarrow \infty \)) and one if the network has no preferred direction. Generally, a more optimally oriented network has a larger \(q_0\). The quality factor for GNOME during the Science Runs is given in Fig. 1b.

A few terms are defined here based on the quality of a network. Namely, an “ideal” network is one for which \(q_0=1\), while an “optimal” network is one in which the sensitivity \(\beta _0\) cannot be improved by re-orienting the sensors in the network.

Given a set of magnetometers with covariance matrix \(\Sigma _s\) and known coupling, it should be possible to determine a theoretical best sensitivity. For this, we will consider a network with \(n\) independent magnetometers that are all described by a single sensitive axis \({\varvec{d}}_i\) with coupling strength \(\kappa _i := | {\varvec{d}}_i |\). Consider the case in which the angle between any vector signal and any sensitive axis is random (this would be the case for many randomly oriented sensors). Because  , for \(d=3\) spatial dimensions, Eq. (3) in this case becomes,

, for \(d=3\) spatial dimensions, Eq. (3) in this case becomes,

This may not be the optimal sensitivity, but it provides a heuristic for an optimal network. The quotient between the observed and optimal sensitivity for GNOME over time is given in Fig. 1c.

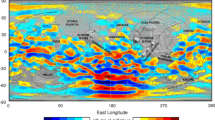

Average sensitivity \(\beta \) of GNOME during Science Run 5 (23 August–31 October 2021). A position on the Earth represents the direction perpendicular to that point on the Earth. The direction of the sensitive axes of the stations is represented by \(\odot \) (parallel) and \(\otimes \) (anti-parallel). That is, if a sensor was moved to the corresponding geographical location, then its sensitive axis would be vertical. The \(\odot \) markers are labeled with name of the station, given by the city in which the sensor is physically located. The marker color is a visual aid to associate the pair of markers on opposite sides of the Earth representing the same station

With the quality factor in mind, it helps to consider exactly how sensitivity varies with direction. The network has been fairly stable with many active sensors during the recent Science Run 5. A map of the average sensitivityFootnote 2,  , in different directions is shown in Fig. 2. The network quality could be improved by improving the quality/reliability of stations sensitive to insensitive directions (e.g., Moxa or Daejeon) or rotating/adding additional sensor(s) toward the worst direction.

, in different directions is shown in Fig. 2. The network quality could be improved by improving the quality/reliability of stations sensitive to insensitive directions (e.g., Moxa or Daejeon) or rotating/adding additional sensor(s) toward the worst direction.

4 Ideal and optimized networks

With the quantitative definition of network quality given in the previous section, various optimized and ideal networks are given here. In particular, we consider properties and explicit arrangements of ideal networks as well as numerical optimizations of more realistic networks.

To better understand the characteristics of networks, it helps to define some additional formalism. Define a network with a given set of orientations as a pair of \((n\times d)\) directional matrix and \((n\times n)\) covariance matrix \(N=\{D,\Sigma \}\); for n sensitive axes and \(d=3\) spatial dimensions. Two networks \(N_0 = \{D_0,\Sigma _0\}\) and \(N_1 = \{D_1,\Sigma _1\}\) can be considered equivalent \(N_0\cong N_1\) if there exists a permutation matrix P such that \(D_1 = PD_0\) and \(\Sigma _1 = P\Sigma _0P^T\); that is, they are the same up to ordering. Additionally, a network can be decomposed into two complementary subnetworks \(N\cong N_A\oplus N_B\) if

Observe that the subnetworks \(N_A\) and \(N_B\) are independent/uncorrelated.

In addition to the basic equivalence relation described above, there are some additional symmetries for a network. First, the sensitivity \(\beta (\hat{{\varvec{m}}})\) is invariant with respect to parity reversal of a subnetwork; i.e., \(D_A\mapsto - D_A\) for \(N\cong N_A\oplus N_B\). Further, though the sensitivity \(\beta (\hat{{\varvec{m}}})\) of a (non-ideal) network can change under arbitrary rotation of the whole network \(D^T\mapsto RD^T\), the value of the worst sensitivity, best sensitivity, and quality factor do not.

4.1 Ideal networks

There are a few useful characteristics of ideal networks that are worth considering. For an ideal network, \(q_0=1\) so the smallest and largest eigenvalues of \(D^T\Sigma ^{-1} D\) are the same which implies that this matrix is proportional to the identity matrix. In particular, \(D^T\Sigma ^{-1} D = \beta ^{-2}\mathbb {1}\). It also follows that the sensitivity of an ideal network is independent of global rotations.

Consider, now, how ideal networks are combined. Let \(N_A\) and \(N_B\) be two complementary, ideal subnetworks of N, then

That is, the network \(N\cong N_A \oplus N_B\) is also ideal with sensitivity \(\left( \beta _A^{-2} + \beta _B^{-2} \right) ^{-1/2}\). Further, because an ideal network remains ideal under rotations, one can rotate either ideal subnetwork without affecting the sensitivity of the network N. Further, if \(N=N_A\oplus N_B\) is an ideal network and \(N_A\) is an ideal subnetwork, then \(N_B\) is also an ideal subnetwork. An ideal network that cannot be separated into ideal subnetworks is “irreducible.” Because an ideal network needs at least d sensitive axes (for \(d=3\) spatial dimensions), an ideal network with \(n<2d\) is irreducible, because it cannot be split into two ideal networks.

One can consider certain explicit cases of ideal networks with some simplified conditions. In particular, let \(N=\{ D, \Sigma \}\) be composed of n identical, independent, single-axis sensors — that is, \(\Sigma = \sigma ^2\mathbb {1}\) and \(|{\varvec{d}}_i| = \kappa \) where \({\varvec{d}}_i\) is the \(i^\text {th}\) row of D. If there are \(d=3\) sensors oriented such that their sensitive axes are orthogonal, then the resulting network will be ideal with sensitivity \(\beta =\sigma /\kappa \). Heuristically, one would like to orient the magnetometers to evenly cover all directions. One way to do this is to take some inspiration from the Platonic solids by designing a network in which the sensitive axes of the sensors are oriented from the center of the solid to each of the vertices; see Table 1. Most of these solids will generate a network with two ideal subnetworks having opposite sensitive axes. Thus, one can obtain ideal networks with three (octahedron), four (tetrahedron and cube), six (icosahedron), and ten (dodecahedron) sensors through this method; denoted \(N_3\), \(N_4\), \(N_6\), and \(N_{10}\). These arrangements have the sensitivity \(\beta =\frac{\sigma /\kappa }{\sqrt{n/3}}\) and are irreducible. With these networks alone, it is evident that there are multiple unique ways of orienting a given number of sensors that do not rely on using the same set of ideal subnetworks; for example, six sensors can be arranged as \(N_6\) or \(N_3 \oplus N_3\).

Combining \(N_3\) and \(N_4\) subnetworks, one can design ideal networks with three, four, six, or more sensors. What seems to remain is a way to orient five identical, independent sensors into an ideal network. One can show that two such network arrangements \(N_{5a}\) and \(N_{5b}\) are given by

each with sensitivity \(\beta = \sqrt{\sigma /\kappa }{\sqrt{5/3}}\). These networks are unique, even when considering parity reversal of individual sensors, reordering, and global rotations; this is evident because \(D_{5b}\) has orthogonal sensors while \(D_{5a}\) does not, and orthogonality of two vectors is invariant under these operations. Further, these are irreducible ideal networks because \(n<6\). Along with \(N_3\) and \(N_4\), one can generate any ideal network with at least three identical, independent sensors using these arrangements.

Though one can always arrange three or more identical, independent sensors into an ideal network, it is not always the case that a given set of sensors can be arranged into an ideal network. For example, consider a set of \(n\ge d=3\) sensors all with the same coupling \(\kappa =1\), but \(n-1\) sensors have noise \(\sigma _0\) and the last sensor has noise \(\sigma _1 < \sigma _0/\sqrt{(n-1)/2}\). An optimized network would be arranged with the first \(n-1\) sensors evenly oriented around the plane orthogonal to the last sensor and have a quality \(q_0=\frac{\sigma _1}{\sigma _0}\sqrt{\frac{n-1}{2}} < 1\) and sensitivity \(\beta _0=\frac{\sigma _0}{\sqrt{(n-1)/2}}\). The least-sensitive direction is orthogonal to the last sensor’s sensitive axis, and any adjustments to the sensors’ orientations would worsen the sensitivity in this plane. The problem in this scenario is that one sensor is much more sensitive than the others, so they cannot compensate, even collectively. The extreme case of this would be if the first \(n-1\) sensors were so noisy that they were effectively inoperable; this arrangement would not be much different than an \(n=1\) network, which cannot be ideal.

4.2 Optimizing networks

Regardless of whether a given set of magnetometers can be arranged into an ideal network, their arrangement can always be optimized; at least at a given time. In practice, the noise in each GNOME magnetometer varies over time and magnetometers turn on and off throughout the experiment, so an optimal arrangement at one time may not be optimal at another.

A non-optimized network can still be improved via an algorithm. An example of a “greedy” algorithm would be one in which, each step, a sensor is randomly selected, removed from the network, and re-inserted in the least-sensitive direction for the network without the removed sensor. This step can then be repeated many times until reaching some optimization condition. For multi-axis sensors that always have the same relative angle between the sensitive axes, the orientation by which to re-insert the sensor is a bit more complicated. Roughly, one would apply a rotation to the sensor to align the best- and worst-directions for the multi-axis sensor and the rest of the network (see Appendix A).

This algorithm will also work regardless of whether an ideal network arrangement exists, though the resulting network may not be optimal. Subsequent steps of the algorithm may converge to a non-optimal network or alternate between network configurations.

after applying a 1.67 mHz high-pass filter, 20 s averaging, and notch filters to remove powerline frequencies. This choice in how the average noise is calculated suppresses the influence of brief periods in which there was a spike in noise. The columns contain the run number, number of sensors used in the optimization, network characteristics, optimized network characteristics, theoretical optimized sensitivity from Eq. (5), and factor by which the optimization algorithm improved sensitivity

after applying a 1.67 mHz high-pass filter, 20 s averaging, and notch filters to remove powerline frequencies. This choice in how the average noise is calculated suppresses the influence of brief periods in which there was a spike in noise. The columns contain the run number, number of sensors used in the optimization, network characteristics, optimized network characteristics, theoretical optimized sensitivity from Eq. (5), and factor by which the optimization algorithm improved sensitivityThe optimization can be applied to the GNOME network to better understand the potential of the experiment. In particular, this was performed for GNOME’s official Science Runs:

-

Science Run 1: 6 June–5 July 2017.

-

Science Run 2: 29 November–22 December 2017.

-

Science Run 3: 1 June 2018–10 May 2019.

-

Science Run 4: 30 January–30 April 2020.

-

Science Run 5: 23 August–31 October 2021.

The results of the optimization are given in Table 2 in which the coupling was assumed to be one. For most of the Science Runs, the improvement was less than a factor of two with the largest improvement being by a factor of 2.7 from Science Run 4. Additionally, the Science Run 2 network could be made ideal with the same sensitivity as predicted in Eq. (5). It should be noted that the networks used in the optimization included sensors available at least 25 % of the run, while it is not typical for all these sensors to be active at the same time. Another example of network optimization is given in Appendix B wherein only a couple sensors are reoriented and an additional sensor is added.

5 Conclusions

In this paper, we have considered a network of sensors with directional sensitivity and how the collective sensitivity of the network is affected by the choice in how the sensors are oriented. To do this, a “quality factor” was introduced to quantify how efficiently the network sensors are oriented regardless of their underlying sensitivity. Various properties and examples of ideal networks were presented, along with a means of optimizing an existing network. By optimizing GNOME, we can show some modest improvement in the network sensitivity without requiring additional sensors or improvements in existing sensors.

In practice, there are still some limitations in how sensors can be oriented. For example, depending on how the sensor is built, it may not be possible/practical to reorient it, or it may only be possible to orient its sensitive axis vertically or horizontally. Additionally, what may be an optimal network under some set of conditions may not be optimal under later conditions depending on how the sensitivities of the sensors change and which ones are active. However, if there are many decent/reliable sensors, the network as a whole will not usually deviate too much from its optimal arrangement.

This work only considered the manner in which the sensors were oriented, not their position. The relative positions and distances between the sensors are not relevant to understanding the sensitivity of the network as a whole. Briefly, the optimal placement of the sensors in a network differs depending on the goal of the experiment. It is generally simpler to observe a signal that crosses a network of nearby sensors; because the potential crossing time is shorter, less data need to be compared between the sensors. However, measuring the direction and speed of some phenomena crossing the network can be done more accurately when the sensors are more distant from one another.

The work described in this paper can be applied to any experiment involving a network of directionally sensitive devices. These networks are useful in detecting features in a vector field or gradient that traverse a spatial region. Both the design and improvement of these networks can be meaningfully improved through careful consideration of how sensors are oriented.

Moving forward, this study can have a direct influence on Advanced GNOME; a planned general upgrade to the GNOME experiment. In particular, this study provides the tools to understand how to orient new sensors and re-orient existing sensors as to optimize the network sensitivity—taking advantage of the major upgrade period to make improvements to the collective network. The Advanced GNOME upgrade includes the addition of SERF comagnetometers [21] with the option to operate in two-axis mode. The use of multi-axis sensors can be incorporated into the work presented here by treating them as multiple sensors with correlated noise. These sensors also have the constraint that the sensitive axes must remain orthogonal. Optimization with multi-axis sensors is explored in Appendix A considering this constraint.

Data Availability Statement

This manuscript has no associated data or the data will not be deposited. [Authors’ comment: GNOME data can be made available upon reasonable request. Data are displayed at https://budker.uni-mainz.de/gnome/.]

Notes

The interferometer networks can be understood as measuring something closer to a tensorial deformation in spacetime. However, similar to vector-field sensors that measure a vector only along one axis, these interferometers are only sensitive to certain polarizations of gravitational waves.

Using the average of the inverse sensitivity accounts for blind spots \(\beta ^{-1}\rightarrow 0\).

References

W.G. Anderson, P.R. Brady, J.D.E. Creighton, É.É. Flanagan, Excess power statistic for detection of burst sources of gravitational radiation. Phys. Rev. D 63, 042003 (2001)

R.X. Adhikari, Gravitational radiation detection with laser interferometry. Rev. Mod. Phys. 86, 121–151 (2014)

S. Klimenko et al., Method for detection and reconstruction of gravitational wave transients with networks of advanced detectors. Phys. Rev. D 93, 042004 (2016)

Boy, J.-P., Barriot, J.-P., Förste, C., Voigt, C. & Wziontek, H. Achievements of the First 4 Years of the International Geodynamics and Earth Tide Service (IGETS) 2015–2019. in International Association of Geodesy Symposia (Springer, Berlin, Heidelberg, 2020), pp. 1–6

J.W. Gjerloev, A Global Ground-Based Magnetometer Initiative. EOS Trans. Am. Geophys. Union 90, 230–231 (2009)

J.W. Gjerloev, The SuperMAG data processing technique. J. Geophys. Res. Space Phys. 117, 1069 (2012)

A. Bergin, S.C. Chapman, J.W.A.E. Gjerloev, DST, and Their SuperMAG Counterparts: The Effect of Improved Spatial Resolution in Geomagnetic Indices. J. Geophys. Res. Space Phys. 125, 2020JAe027828 (2020)

J.L. Feng, Dark matter candidates from particle physics and methods of detection. Ann. Rev. Astron. Astrophys. 48, 495–545 (2010)

P. Gorenstein, W. Tucker, Astronomical signatures of dark matter. Adv. High Energy Phys. 2014, 1–10 (2014)

D.J.E. Marsh, Axion cosmology. Phys. Rep. 643, 1–79 (2016)

C.J. Horowitz, R. Widmer-Schnidrig, Gravimeter search for compact dark matter objects moving in the earth. Phys. Rev. Lett. 124, 051102 (2020)

W. Hu et al., A network of superconducting gravimeters as a detector of matter with feeble nongravitational coupling. Eur. Phys. J. D 74, 115 (2020)

R.L. McNally, T. Zelevinsky, Constraining domain wall dark matter with a network of superconducting gravimeters and LIGO. Eur. Phys. J. D 74, 61 (2020)

N.L. Figueroa, D. Budker, E.M. Rasel, Dark matter searches using accelerometer-based networks. Quantum Sci. Technol. 6, 034004 (2021)

M. Pospelov et al., Detecting domain walls of Axionlike models using terrestrial experiments. Phys. Rev. Lett. 110, 021803 (2013)

e D.F. Jackson Kimball et al., Searching for axion stars and Q-balls with a terrestrial magnetometer network. Phys. Rev. D 97, 043002 (2018)

H. Masia-Roig et al., Analysis method for detecting topological defect dark matter with a global magnetometer network. Phys. Dark Universe 28, 100494 (2020)

S. Afach et al., Search for topological defect dark matter with a global network of optical magnetometers. Nat. Phys. 1–6, 2102.13379 (2021)

M.A. Fedderke, P.W. Graham, K.D.F. Jackson, S. Kalia, Earth as a transducer for dark-photon dark-matter detection. Phys. Rev. D 104, 075023 (2021)

M.A. Fedderke, P.W. Graham, D.F. Jackson Kimball, S. Kalia, Search for dark-photon dark matter in the SuperMAG geomagnetic field dataset. Phys. Rev. D 104, 095032 (2021)

V. Shah, J. Osborne, J. Orton, O. Alem, Fully integrated, standalone zero field optically pumped magnetometer for biomagnetism, in Steep Dispersion Engineering and Opto-Atomic Precision Metrology XI, 51 (SPIE. ed. by S.M. Shahriar, J. Scheuer (United States, San Francisco, 2018)

Acknowledgements

Work for this paper was made possible through discussions with the GNOME collaboration as well as characterization data from the experiment. Discussions with Dr. Derek Jackson Kimball, Dr. Dmitry Budker, Dr. Szymon Pustelny, Dr. Ibrahim Sulai, and Hector Masia-Roig greatly aided in the completion of this work. JAS has no funding sources to declare.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Authors and Affiliations

Contributions

All authors have equally contributed to this work.

Corresponding author

Appendices

Appendix A: Multi-axis sensors

Consider a multi-axis magnetometer that can only be reoriented by rotating the entire sensor—one cannot adjust individual sensitive axes. Some effort is needed to understand how to orient such a sensor with respect to a larger network.

Let \(N_a=\{D_a,\Sigma _a\}\) describe the orientation of the multi-axis sensor and \(N_b=\{D_b,\Sigma _b\}\) describe the rest of the network. The matrices \(D_i^T\Sigma _i^{-1} D_i\) (for \(i\in \{a,b\}\)) can be diagonalized as \(U_i\Lambda _i U_i^T\) where the \(\Lambda _i\) is a diagonal matrix whose \(j^\text {th}\) element along the diagonal is the \(j^\text {th}\)-smallest eigenvalue \(\lambda _{ij}\), and \(U_i\) is an orthogonal matrix (\(U_i^{-1}=U_i^T\)) whose \(j^\text {th}\) column is the respective (normalized) eigenvector \({\varvec{u}}_{ij}\). The orthogonal matrices have the effect of rotating the coordinate axes to the best- and worst-directions (i.e., most- and least-sensitive) for the respective network: \(\varvec{\hat{x}}\mapsto \) the worst-direction and \(\varvec{\hat{z}}\mapsto \) the best-direction. Finally, define \({\tilde{U}}_i\) as \(U_i\) with its columns reversed; this also reverses which coordinate axis will be rotated to which direction.

When orienting the multi-axis sensor to be included in the rest of the network, it is optimal to orient the respective best-direction of the multi-axis sensor with the worst-direction of the rest of the network and vice-versa. This is accomplished by rotating the multi-axis sensor as follows:

This rotation maps:

For the diagonal matrix with the eigenvalues in descending order \({\tilde{\Lambda }}_i\) (\(i\in \{a,b\}\)), the combined network will have \(D_{ab}^T\Sigma _{ab}^{-1}D_{ab}=U_b\Lambda _{ab}U_b^T\) for \(\Lambda _{ab} = {\tilde{\Lambda }}_a + \Lambda _b\), a diagonal matrix of eigenvalues (not necessarily ordered). The quality factor is given by the ratio of the smallest and largest eigenvalue \(q_0=\sqrt{\frac{\lambda _\text {min}}{\lambda _\text {max}}}\). The eigenvalues for the combined network consist of the sum of the smallest eigenvalues for one subnetwork and the largest eigenvalues for the other. This decreases the range of eigenvalues and optimize the network.

For example, the Hayward, Krakow, and Mainz sensors could operate as two-orthogonal-axis magnetometers during Science Run 5; though at the cost of worse sensitivity, roughly doubling the variance. Replacing these three sensors during the Science Run with (uncorrelated) two-axis sensors and including them with optimal orientations improves the sensitivity by a factor of 1.35 to \(\beta _0 = 0.18\,\text {pT}\). This is slightly better than the 1.21 factor of improvement for optimizing the three sensors in single-axis mode.

Appendix B: Applied optimization

It is useful to consider an explicit examples of optimizing parts of the network. Specifically, consider reorienting the Mainz and Krakow magnetometers and adding another magnetometer with 0.2 pT standard deviation noise after filtering/averaging. The new magnetometer will be located in Berkeley, CA, USA. This optimization will use the Science Run 5 characteristics.

Locally, the orientation is expressed in horizontal coordinates; using altitude (alt) and azimuthal (az) angles expressed as the pair (alt, az). The altitude is the angle relative to the horizon, while the azimuth is the angle relative to noise (measured clockwise). The Mainz sensor is oriented with \(\text {alt}=-90^\circ \), while the Krakow sensor is oriented as \(\text {alt}=+90^\circ \) during Science Run 5.

Optimizing the direction of the three magnetometers would result in a sensitivity of \(\beta _0=0.17\,\text {pT}\) with \(q_0=0.89\). Using Eq. (5), the optimal sensitivity would be \(\beta _\text {opt} = 0.16\,\text {pT}\). When optimized, the Mainz magnetometer is oriented as \((74^\circ , 99^\circ )\), the Krakow magnetometer is oriented as \((6^\circ , -19^\circ )\), and the Berkeley magnetometer is oriented as \((10^\circ , 123^\circ )\).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Smiga, J.A. Assessing the quality of a network of vector-field sensors. Eur. Phys. J. D 76, 4 (2022). https://doi.org/10.1140/epjd/s10053-021-00328-9

Received:

Accepted:

Published:

DOI: https://doi.org/10.1140/epjd/s10053-021-00328-9