Abstract

The prospects are presented for precise measurements of the branching ratios of the purely leptonic \(B_c^+ \rightarrow \tau ^+ \nu _\tau \) and \(B^+ \rightarrow \tau ^+ \nu _\tau \) decays at the Future Circular Collider (FCC). This work is focused on the hadronic \(\tau ^{+} \rightarrow \pi ^+ \pi ^+ \pi ^- {\bar{\nu }}_\tau \) decay in both \(B_c^+ \rightarrow \tau ^+ \nu _\tau \) and \(B^+ \rightarrow \tau ^+ \nu _\tau \) processes. Events are selected with two Boosted Decision Tree algorithms to optimise the separation between the two signal processes as well as the generic hadronic Z decay backgrounds. The range of the expected precision for both signals are evaluated in different scenarios of non-ideal background modelling. This paper demonstrates, for the first time, that the \(B^+ \rightarrow \tau ^+ \nu _\tau \) decay can be well separated from both \(B_c^+ \rightarrow \tau ^+ \nu _\tau \) and generic \(Z\rightarrow b{\bar{b}}\) processes in the FCC-ee collision environment and proposes the corresponding branching ratio measurement as a novel way to determine the CKM matrix element \(|V_{ub}|\). The theoretical impacts of both \(B^+ \rightarrow \tau ^+ \nu _\tau \) and \(B_c^+ \rightarrow \tau ^+ \nu _\tau \) measurements on New Physics cases are discussed for interpretations in the generic Two-Higgs-doublet model and leptoquark models.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

While the Standard Model (SM) has been reigning uncontested since its formulation over 50 years ago [1,2,3], our community has long been searching for evidence of physics beyond it (BSM). To this end, particularly useful probes are leptonic decays of heavy flavour mesons, such as \({{B} _{c} ^+} \rightarrow {\tau ^+} {{\nu } _\tau } \) or \({{B} ^+} \rightarrow {\tau ^+} {{\nu } _\tau } \).Footnote 1 The description of such decays in the SM is very simple, with the meson decay constants as the only hadronic inputs to the computation of their branching ratios. The decay constants for \(B^+_c /{{B} ^+} \rightarrow {\tau ^+} {{\nu } _\tau } \) decays have been computed with extreme precision, thanks to the help of simulations performed employing lattice QCD (LQCD) [4], resulting in very clean and precise theoretical predictions.

The study of these channels has been gathering further interest in recent years, especially for the case of \(B_c^+\rightarrow \tau ^+\nu _\tau \) decays [5,6,7,8], due to recently reported discrepancies in semileptonic B-meson decays mediated at the quark level by the same process, namely the \(b\rightarrow c \ell \nu _\ell \) transitions. In particular, the lepton flavour universality ratio

have been measured at LHC and the B-factories [9,10,11,12,13,14,15,16,17,18,19], showing a combined \(3.2~\sigma \) deviation between the experimental average and their SM prediction [20]. A similar deviation, albeit not as statistically significant and experimentally precise, has been observed in \(\mathcal{R}(J/\psi )= {\mathcal {B}}(B_c^+ \rightarrow J/\psi \tau ^+ \nu _\tau )/{\mathcal {B}}(B_c^+\rightarrow J/\psi \mu ^+ \nu _\mu )\) [21]. It is worth mentioning that, lately, a first experimental measurement for the ratio \(\mathcal{R}(\Lambda _c)={\mathcal {B}}(\Lambda _b \rightarrow \Lambda _c \tau {{\bar{\nu }}})/{\mathcal {B}}(\Lambda _b \rightarrow \Lambda _c \ell {{\bar{\nu }}})\) has been performed by LHCb [22]. Despite being described at the parton level by the same transition [7, 8], this measurement demonstrated a different behaviour from the \(\mathcal{R}(J/\psi )\) results. However, further analyses are required for \(\mathcal{R}(\Lambda _c)\), particularly due to how the normalisation employed in the experimental measurement could impact its final determination [23, 24].

It is also interesting to notice that, going potentially beyond the \(\mathcal{R}(D^{(*)})\) anomalies, \({{B} _{c} ^+} \rightarrow {\tau ^+} {{\nu } _\tau } \) and \({{B} ^+} \rightarrow {\tau ^+} {{\nu } _\tau } \) decays are in general excellent probes of New Physics (NP) contributions due to an extended pseudoscalar sector, as predicted by BSM theories like Two-Higgs-Doublet Models (2HDM) [25] or certain leptoquark models (LQ) [26, 27].

The measurement of \({{B} _{c} ^+} \rightarrow {\tau ^+} {{\nu } _\tau } \) and \({{B} ^+} \rightarrow {\tau ^+} {{\nu } _\tau } \) decays are of particular interest not only in the context of BSM searches but also as a test of an exquisitely SM feature, the extraction of Cabibbo-Kobayashi-Maskawa ***(CKM) elements. In the Standard Model, the branching ratio responsible for this class of decays is determined by

where \(\tau _{B_q^+}\) denotes the \(B_q^+\) meson lifetime, \(G_F\) is the Fermi constant, and \(m_{B_q^+}\) and \(m_\tau \) are the masses of the \(B_q^+\) meson and the \(\tau ^+\) lepton, respectively. The \(B_q^+\) meson decay constants are \(f_{B_q^+}\): for the charmed meson, the latest result computed via LQCD [28, 29] gives \(f_{B_{c}} =427(6)\) MeV, while the latest LQCD \(N_f=2+1+1\) calculations for the \(B^+\) decay constant [30,31,32,33] yield to the averaged value \(f_{B^+} =190.0(1.3)\) MeV [4]. Finally, \({{B} _{c} ^+} \rightarrow {\tau ^+} {{\nu } _\tau } \) and \({{B} ^+} \rightarrow {\tau ^+} {{\nu } _\tau } \) are sensitive to \(\vert V_{cb} \vert \) and \(\vert V_{ub} \vert \), respectively, whose precise determination has been however hindered by the long-standing inclusive vs. exclusive puzzle [34, 35]. Several ways to extract these CKM elements have been indeed advocated in the past, differentiated by whether the study of a specific exclusive channel or rather an inclusive determination, would be used to this end. While these different approaches are expected yield to compatible values for the extracted CKM elements, it has not been the case for either \(\vert V_{cb} \vert \) or \(\vert V_{ub} \vert \), for both of which the average of the exclusive determinations differs from the inclusive one at the \(3.3~\sigma \) level [20]. Taking as an example the latest determinations for the CKM elements from the global SM CKM fit performed by the UTfit collaboration [34], namely \(|V_{cb}|^\mathrm {excl.}=42.22(51)\times 10^{-3}\) and \(|V_{ub}|^\mathrm {excl.}=3.70(11)\times 10^{-3}\), we obtain the SM predictions for the branching ratios

where the main sources of uncertainty come from the CKM matrix elements and the meson decay constants. Therefore, the aforementioned cleanness of the leptonic \({{B} _{c} ^+} \rightarrow {\tau ^+} {{\nu } _\tau } \) and \({{B} ^+} \rightarrow {\tau ^+} {{\nu } _\tau } \) decays makes them a theoretically perfect choice for an independent extraction of these CKM elements, potentially capable to play a relevant role in understanding the inclusive vs. exclusive puzzle, provided a measurement with enough precision is achievable at colliders.

Unfortunately, the level of theoretical precision is not yet matched on the experimental side, particularly for the \(B_c^+ \rightarrow \tau ^+ \nu _\tau \) decay. In spite of its sizeable branching ratio in the SM (\(\approx 2\%\)), a search for the \({{B} _{c} ^+} \rightarrow {\tau ^+} {{\nu } _\tau } \) decay is very challenging at current collider experiments. The selection and profiling of such decay rely on the reconstruction of \(\tau \) decay vertices as well as the measurement of the missing energy. At hadron collider experiments, due to the high multiplicity of simultaneous interactions in each bunch crossing and the lack of knowledge of the centre-of-mass energy of the \(b{\bar{b}}\) production process, it is practically impossible to separate \({{B} _{c} ^+} \rightarrow {\tau ^+} {{\nu } _\tau } \) events from the overwhelming background processes. The current \(e^+e^-\) B-factories, in spite of their relatively clean environments and the full knowledge of the centre-of-mass energy, operate at energies (for example \(\Upsilon (5S)\)) lower than the production threshold of the \(B_c\) meson. In comparison, future Z-factories, with a large number of \(Z\rightarrow b{\bar{b}}\) events and an exquisite reconstruction of event kinematics, would provide the ideal environment to study this decay. Meanwhile, the measurement of \({{B} ^+} \rightarrow {\tau ^+} {{\nu } _\tau } \), albeit having been conducted at B-factories with the current best precision of 20% [20], can also benefit significantly from the large dataset at Z-factories.

The Future Circular Collider (FCC) project [36,37,38] aims at a design of a multi-stage collider complex for \(e^+e^-\), pp, and ep collisions hosted in a 91 km tunnel near CERN, Geneva. The first stage, FCC-ee, is envisioned for \(e^+e^-\) collisions at four different centre-of-mass energy windows: around 91 GeV for the Z production, around 161 GeV for the \(W^+W^-\) production, about 240 GeV for the ZH production, and more than 350 GeV for the \(t{\bar{t}}\) production. The Z-pole operation offers an unprecedented dataset not only for precision measurements of electroweak parameters related to the properties of the Z boson itself, but also the properties of all of its decay products, such as the b and c quarks, and the \(\tau \) lepton.

The integrated luminosity of the FCC-ee Z-pole data is proposed to be 150 \(\text {ab}^{-1}\) in the original conceptual design reports [37, 38], corresponding to \(N_Z \approx 5\times 10^{12}\). Recent studies in the FCC-ee design have updated such expectations to 180 \(\text {ab}^{-1}\) and \(N_Z \approx 6\times 10^{12}\). A dedicated study of \({{B} _{c} ^+} \rightarrow {\tau ^+} {{\nu } _\tau } \) decay at FCC-ee based on the original design has been reported in Ref. [39], and a similar study at the Circular Electron Position Collider (CEPC) [40, 41] has been presented in Ref. [42]. Both have demonstrated promising physics prospects in this channel. In this work, we revise the analysis for \({{B} _{c} ^+} \rightarrow {\tau ^+} {{\nu } _\tau } \) at FCC-ee and extend the work to a combined measurement of both \({{B} _{c} ^+} \rightarrow {\tau ^+} {{\nu } _\tau } \) and \({{B} ^+} \rightarrow {\tau ^+} {{\nu } _\tau } \) decays. As done in Ref. [39], we focus on the hadronic \(\tau ^+ \rightarrow \pi ^+ \pi ^+ \pi ^- {\bar{\nu }}_\tau \) decay for both \({{B} _{c} ^+} \rightarrow {\tau ^+} {{\nu } _\tau } \) and \({{B} ^+} \rightarrow {\tau ^+} {{\nu } _\tau } \) cases.

It is worth mentioning at this point that, in the case of the \({{B} _{c} ^+} \rightarrow {\tau ^+} {{\nu } _\tau } \) decay, the measurement of its branching ratio is hampered by the lack of knowledge of the \(B_c^+\) meson hadronisation fraction, \(f(B_c^\pm ) \equiv f(b\rightarrow B_c^\pm )\) [43]. The strategy followed in this work is the same as the one adopted in Ref. [39], which consists in using \(B_c^+\rightarrow J/\psi \mu ^+ \nu _\mu \) as a normalisation mode and therefore relies on its LQCD prediction [44, 45] to extract a value for \({\mathcal {B}}(B_c^+ \rightarrow \tau ^+ \nu _\tau )\). As will be reported in Sect. 3, the relative precision of the LQCD prediction for this normalisation will be one of the main limiting factors in the determination of \({\mathcal {B}}(B_c^+ \rightarrow \tau ^+ \nu _\tau )\). On the other hand, the \(B^+\) meson hadronisation fraction, \(f(B^\pm ) \equiv f(b\rightarrow B^\pm )\), being known at the percent level [46], does not pose an impediment for the measurement of \({\mathcal {B}}(B^+ \rightarrow \tau ^+ \nu _\tau )\).

The remainder of this paper is organised as follows: Sect. 2 summarises the experimental setup, describes the analysis workflow, and provides estimates of the range of signal precision in different scenarios; Sect. 3 illustrates the phenomenological impact of these measurements, both in the SM case and in selected relevant NP benchmarks.

The work in this paper has been conducted following the recommendation of the European Strategy Update for Particle Physics to investigate the technical and financial feasibility of a future hadron collider at CERN with a centre-of-mass energy of at least 100 TeV and with an electron-positron Higgs and electroweak factory as a possible first stage. This article provides a detailed description of the key ingredients for physics analyses at FCC-ee.

2 Analysis

The procedure of this analysis is modified from the preceding work on the measurement of the \(B_c^+ \rightarrow \tau ^+ \nu _\tau \) at FCC-ee, reported in Ref. [39]. Major changes in the procedure are listed as follows:

-

Multi-classification, instead of binary classification, is deployed in the second-stage training, which achieves good separation between \(B^+ \rightarrow \tau ^+ \nu _\tau \), \(B_c^+ \rightarrow \tau ^+ \nu _\tau \), and background processes and ensures orthogonality in later selections.

-

The procedure for selection efficiency estimate is fully revised to minimise potential biases from the limited number of events that pass the final selection. Additional background samples are generated in the signal-enriched phase-space to further improve the robustness of the efficiency estimate.

-

The method for the final fit is updated to allow for the simultaneous evaluation of \(B^+ \rightarrow \tau ^+ \nu _\tau \) and \(B_c^+ \rightarrow \tau ^+ \nu _\tau \) yields. Additional validation studies are performed to make sure the selection procedure does not introduce shifts in the background shapes.

-

Additional studies are performed to estimate the potential impact of background inflation and fluctuation to the signal precision in order to understand the range of expected sensitivity of these measurements.

This section provides a description of the full analysis. The detector concepts, simulated samples, and the analysis setup are described in detail in Ref. [39] and not repeated here. Additional simulated samples used in this work are summarised in Sect. 2.1 and the updated analysis tools are publicly available in Ref. [47]. The main kinematic features to characterise events in this analysis are discussed in Sect. 2.2. In this analysis, events are selected with a series of rectangular selections on their kinematic properties, followed by two boosted decision tree (BDT) classifiers described in Sects. 2.3 and 2.4. The BDT-based final selection, detailed Sect. 2.5, separates the \(B_c^+ \rightarrow \tau ^+ \nu _\tau \) and \(B^+ \rightarrow \tau ^+ \nu _\tau \) signals into two categories with high signal-to-background ratios (S/B) and little cross-contamination. A template fit is performed simultaneously for two signal modes to estimate their individual yields and precisions, as described in Sect. 2.6. Considerations of potential systematic uncertainties and the expected experimental precision in scenarios with nonideal background modelling are also discussed in Sects. 2.7 and 2.8. Finally, Sect. 2.9 discusses the projected precision in case of a smaller dataset.

2.1 Signal and background samples

Event samples are generated with Pythia 8.303 [48] and EvtGen 02.00.00 [49] and the detector responses are simulated with DELPHES [50], following the configurations detailed in Ref. [39]. This work uses the full set of samples described in Ref. [39]. A few additional samples, listed in Appendix A, are produced for exclusive background processes in order to improve the estimates of background efficiencies.

Orthogonal sets of samples are deployed for the BDT training and the statistical analysis to avoid potential biases from overtraining. The training sample set comprises signal samples and inclusive \(Z \rightarrow b{\bar{b}}\), \(c{\bar{c}}\), and \(q{\bar{q}}\) processes, where \(q \in \{u,d,s\}\). The analysis sample set is composed of signal samples, inclusive background decays, and a selected list of exclusive background processes. The inclusive decay samples, each containing \(10^9\) events, are used to estimate background yields and shapes. They become insufficient for estimating background yields towards final selections, where backgrounds are rejected at more than the \(10^9\) level. A collection of exclusive decay modes are chosen to provide a good representation of a subset of background processes, which share with signals more similarities of the kinematic profile. The exclusive samples are used to estimate the background efficiency under high BDT requirements.

The exclusive b-hadron decay modes in the \(Z \rightarrow b{\bar{b}}\) process considered in this analysis are listed as follows:

-

\(Z \rightarrow b{\bar{b}}\), \(B \rightarrow D \tau ^+ \nu _\tau \)

-

\(Z \rightarrow b{\bar{b}}\), \(B \rightarrow D^* \tau ^+ \nu _\tau \)

-

\(Z \rightarrow b{\bar{b}}\), \(B \rightarrow D e^+ \nu _e\)

-

\(Z \rightarrow b{\bar{b}}\), \(B \rightarrow D^* e^+ \nu _e\)

-

\(Z \rightarrow b{\bar{b}}\), \(B \rightarrow D \mu ^+ \nu _\mu \)

-

\(Z \rightarrow b{\bar{b}}\), \(B \rightarrow D^* \mu ^+ \nu _\mu \)

-

\(Z \rightarrow b{\bar{b}}\), \(B \rightarrow D \pi ^+ \pi ^+ \pi ^-\)

-

\(Z \rightarrow b{\bar{b}}\), \(B \rightarrow D^*\pi ^+\pi ^+ \pi ^-\)

-

\(Z \rightarrow b{\bar{b}}\), \(B \rightarrow D D_s^+\)

-

\(Z \rightarrow b{\bar{b}}\), \(B \rightarrow D^* D_s^+\)

-

\(Z \rightarrow b{\bar{b}}\), \(B \rightarrow D^* D_s^{*+}\)

The B in the list stands for \(\{B^0, B^+, B_s^0, \Lambda _b^0\}\) and the corresponding D represents \(\{D^-, {{\bar{D}} {}^0}, D_s^-, \Lambda _c^-\}\). In each of the exclusive b-hadron samples, all of the b-hadron decay products are decayed further inclusively. The list of exclusive decays considered is not exhaustive and covers around 24% of the decay width for each b-hadron. Hence, they are not used to estimate the absolute background yields, but only to evaluate the relative efficiency from the baseline selection to the final selections, as detailed in Sect. 2.5.

The exclusive \(Z \rightarrow c{\bar{c}}\) decay modes considered in this analysis are:

-

\(D^+ \rightarrow \tau ^+\nu _\tau \)

-

\(D^+ \rightarrow K^0 \pi ^+\pi ^+\pi ^-\)

-

\(D_s^+ \rightarrow \tau ^+\nu _\tau \)

-

\(D_s^+ \rightarrow \rho ^+ \eta ^\prime \)

-

\(\Lambda _c^+ \rightarrow \Lambda ^0 e^+\nu _e\)

-

\(\Lambda _c^+ \rightarrow \Lambda ^0 \mu ^+\nu _\mu \)

-

\(\Lambda _c^+ \rightarrow \Lambda ^0 \pi ^+\pi ^+\pi ^-\)

-

\(\Lambda _c^+ \rightarrow \Sigma ^+ \pi ^+\pi ^-\)

All remaining unstable particles are decayed inclusively. Among these samples, the \(D^+ \rightarrow K^0 \pi ^+\pi ^+\pi ^-\), \(D_s^+ \rightarrow \rho ^+ \eta ^\prime \), \(\Lambda _c^+ \rightarrow \Lambda ^0 \pi ^+\pi ^+\pi ^-\), and \(\Lambda _c^+ \rightarrow \Sigma ^+ \pi ^+\pi ^-\) modes are chosen to represent the high multiplicity hadronic decays. Similar to the \(Z \rightarrow b{\bar{b}}\) case, all exclusive samples are only used to evaluate the relative efficiency from the baseline selection to the final selection and not for the absolute yield.

2.2 Thrust axis and event hemisphere definitions

In \(Z \rightarrow b{\bar{b}}\) events at FCC-ee, the net momentum of the Z boson is expected to be 0 and the two b quarks are boosted in opposite directions along the Z decay axis. The event can therefore be divided into two hemispheres, with one b quark on each side. The hemispheres are defined event-by-event, by a plane normal to the event thrust axis, which is reconstructed as the unit vector \(\hat{{\textbf {n}}}\) to maximise

where \({\textbf {p}}_i\) is the momentum vector of the ith reconstructed particle. Reconstructed particles are assigned to either hemisphere based on the angle between their momentum vector and the thrust axis.

In this analysis, the signal decays involve significant missing energy due to the presence of two neutrinos in the \(B^+/B_c^+ \rightarrow \tau ^+ \nu _\tau \) and \(\tau ^+ \rightarrow \pi ^+ \pi ^+ \pi ^- {\bar{\nu }}_\tau \) decays, while a generic b-hadron decay usually have a higher fraction of reconstructed energy. This leads to a sizeable energy imbalance between two hemispheres in events containing a signal decay on one side and a generic decay on the other side, in contrast to generic background events, where the energy distributions from two generic decays are well-balanced. Furthermore, the significant missing energy in the signal hemisphere also skews the reconstructed thrust axis and biases the reconstruction of other kinematic properties of the event. Such interplay introduces further discrimination between the signals and backgrounds in variables that are not directly related to the signal decays. In the rest of this work, we designate the hemisphere with less energy as the signal (positive) hemisphere, and the other as the background (negative) hemisphere.

2.3 First-stage BDT

The first-stage training (BDT1) is designed to loosely separate signals from generic backgrounds using event level kinematic features and not the specific decay processes. The training follows the procedure described in Ref. [39], the only change being the inclusion of the \(B^+ \rightarrow \tau ^+ \nu _\tau \) process in the signal sample set. The BDT is trained with XGBoost[51] using the following features, which are chosen to provide the complete information of the overall event topology:

-

Total reconstructed energy in each hemisphere;

-

Total charged and neutral reconstructed energies in each hemisphere;

-

Charged and neutral particle multiplicities in each hemisphere;

-

Number of tracks in the reconstructed PV;

-

Number of reconstructed \(3\pi \) candidates in the event;

-

Number of reconstructed vertices in each hemisphere;

-

Minimum, maximum, and average radial distance of all decay vertices from the PV.

Distributions of all input features are summarised in Appendix B.

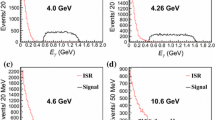

The optimal configuration of this is BDT found to feature 1000 trees, with a maximum number of cuts of 5 and a learning rate of 0.3. It is found to have a receiver operating characteristic (ROC) curve area of 0.983, indicating an excellent separation between signals and backgrounds. The BDT performance is illustrated in Fig. 1, where the BDT distributions in \(B_c^+ \rightarrow \tau ^+ \nu _\tau \), \(B^+ \rightarrow \tau ^+ \nu _\tau \), and each type of inclusive Z background are shown alongside their corresponding efficiency profiles. The signal efficiency for \(B^+ \rightarrow \tau ^+ \nu _\tau \) is slightly worse than that of \(B_c^+ \rightarrow \tau ^+ \nu _\tau \) because its kinematic properties are more similar to generic \({{B} ^+} \) decays in \(Z \rightarrow b{\bar{b}}\) backgrounds.

A comparison of BDT performances on training and testing datasets is also provided in the right plot of Fig. 1. For this purpose, the testing dataset is a randomly sampled subset of the analysis samples, which are statistically independent of the training samples. Each line in this plot is profiled with \(5\times 10^5\) events. A good agreement in the efficiency profiles of training and testing samples is seen for efficiencies down to \(10^{-3}\). When background efficiencies go below \(10^{-3}\), the efficiency profiles of training and testing samples start to mildly deviate from each other, due to the limited number of remaining events.

As will be shown in Sect. 2.5, very tight BDT selections are applied in the final statistical analysis, corresponding to BDT1 \(\gtrsim 0.9999\) and BDT1 efficiencies of O(\(10^{-5}\)) for backgrounds. There are not enough events in the training samples to precisely evaluate background efficiencies to this level. However, this should not inflict a concern about an over-optimistic efficiency estimate for the final analysis, because the efficiency estimates are always conducted with analysis samples, which are free from any statistical bias in the training. Nonetheless, any potential discrepancy between the real data and the simulated samples in this analysis would lead to an inaccurate expectation of the BDT performance on the actual data. To address the lack of knowledge of the data expected to be collected a few decades in the future, instead of quoting the BDT efficiencies as is for simulations, we evaluate the range of the final precision in a few scenarios in which the BDT performance on data is worse than its expectation. The study is described in detail in Sect. 2.8.

2.4 Second-stage BDT

Prior to the second-stage training, events are required to have the first-stage BDT \(>0.6\). This selection is over 80% efficient for both signals and removes more than 90% of each type of background. In addition, the energy difference between the background and signal hemispheres is required to exceed 10 GeV, in order to further remove background-like events with little energy imbalance.

The second-stage BDT (BDT2) is designed to further refine signal selection using the full kinematic properties of the reconstructed \(3\pi \) candidate for the \(\tau \) decay as well as properties of other reconstructed decay vertices in the event. The \(\tau ^+ \rightarrow \pi ^+ \pi ^+ \pi ^- {\bar{\nu }}_\tau \) candidate is chosen as the \(3\pi \) vertex with the smallest vertex fit \(\chi ^2\) in the signal hemisphere. It is further required to have an invariant mass below that of the \(\tau \) lepton, and have at least one \(m(\pi ^+\pi ^-)\) combination within the range 0.6-\(-\)1.0 GeV. These selections retain candidates consistent with the \(a_1(1260)^+ \rightarrow (\rho ^0 \rightarrow \pi ^+\pi ^-)\pi ^+\) decay, via which all \(\tau ^+ \rightarrow \pi ^+ \pi ^+ \pi ^- {\bar{\nu }}_\tau \) decays proceed.

The setup of the second-stage training is similar to that in Ref. [39]. Instead of using a binary classifier for the signal-background separation, the BDT2 in this work is a multi-classifier with three labels: Bc for \(B_c^+ \rightarrow \tau ^+ \nu _\tau \), Bu for \(B^+ \rightarrow \tau ^+ \nu _\tau \), and Bkg for backgrounds. The full size of \(B_c^+ \rightarrow \tau ^+ \nu _\tau \) and \(B^+ \rightarrow \tau ^+ \nu _\tau \) training samples after the aforementioned selections are used in the training, corresponding to about \(1\times 10^6\) events for \(B_c^+ \rightarrow \tau ^+ \nu _\tau \) and \(6\times 10^5\) for \(B^+ \rightarrow \tau ^+ \nu _\tau \). The background training sample is a combination of \(Z \rightarrow b{\bar{b}}\), \(Z \rightarrow c{\bar{c}}\), and \(Z \rightarrow q{\bar{q}}\) events, whose proportions are based on their branching ratios and their efficiencies after previous selections, leading to roughly \(1 \times 10^4\) \(Z \rightarrow q{\bar{q}}\), \(1 \times 10^5\) \(Z \rightarrow c{\bar{c}}\), and \(1 \times 10^6\) \(Z \rightarrow b{\bar{b}}\) events.

Input variables to the second-stage BDT include the ones used in Ref. [39], which are designed to describe the full kinematic information of the \(3\pi \) candidate as well as its correlation with other displaced vertices. A few variables are added characterising extra D meson decays in the event. D mesons are expected in the signal hemisphere of \(B_c^+ \rightarrow \tau ^+ \nu _\tau \) events because of the presence of charm quarks in the hadronisation of the \(B_c^+\) meson. Variables explicitly tagging D meson candidates have shown to provide a small gain in selecting the \(B_c^+ \rightarrow \tau ^+ \nu _\tau \) signal. The D meson candidate is selected as the secondary vertex in the signal hemisphere with the invariant mass closest to 1.85 GeV.

A summary of all features used in the second-stage training is listed as follows:

-

\(3\pi \) candidate mass, and masses of the two \(\pi ^+\pi ^-\) combinations;

-

Number of \(3\pi \) candidates in the event;

-

Radial distance of the \(3\pi \) candidate from the PV;

-

Vertex \(\chi ^2\) of the \(3\pi \) candidate;

-

Momentum magnitude, momentum components, and impact parameter (transverse and longitudinal) of the \(3\pi \) candidate;

-

Angle between the \(3\pi \) candidate and the thrust axis;

-

Minimum, maximum, and average impact parameter (longitudinal and transverse) of all other reconstructed decay vertices in the event;

-

Mass of the PV;

-

Nominal B meson energy, defined as the Z mass minus all reconstructed energy apart from the \(3\pi \) candidate;

-

The mass and vertex displacement of the D meson candidate.

Distributions of all input features are summarised in Appendix B.

The optimal configuration of this is BDT found to feature 1000 trees, with a maximum number of cuts of 3 and a learning rate of 0.3. This BDT achieves a high level of separation between backgrounds and each signal, which is illustrated in the distribution of different processes in the Bc score vs Bu score plane in Fig. 2. The output of the multi-classifier is regulated such that the Bc score, Bu score and background score sum up to 1. The closer to the origin of the Bc-Bu plane, the higher the background score. The area under the Bc vs non-Bc ROC curve is found to be 0.921 and the area under the Bu vs non-Bu ROC curve to be 0.886.

The second-stage BDT performance is shown in Fig. 3, along with a comparison between training samples and testing samples. The top left plot shows the selection efficiency of events with Bc score greater than different cut values, the top right plot shows the efficiency with regard to the Bu score and the bottom plot regarding the Bkg score. Each line for \(B_c^+ \rightarrow \tau ^+ \nu _\tau \), \(B^+ \rightarrow \tau ^+ \nu _\tau \), and \(Z \rightarrow b{\bar{b}}\) processes is profiled with \(5\times 10^5\) events. The \(Z \rightarrow c{\bar{c}}\) and \(Z \rightarrow q{\bar{q}}\) are already rejected with high rates by selections prior to the second-stage training and have fewer remaining events in the training samples. As a results, \(2\times 10^5\) events are used for each line of \(Z \rightarrow c{\bar{c}}\) and \(2\times 10^4\) for \(Z \rightarrow q{\bar{q}}\).

The BDT2 performance is found to agree well between the training and testing samples in the Bc score plot and the Bkg score plot. In the Bu-enriched region, some deviations are observed between the training and testing samples for the \(B_c^+ \rightarrow \tau ^+ \nu _\tau \) and background processes. The largest difference is found to be about a factor of 5 in the efficiency of the \(B_c^+ \rightarrow \tau ^+ \nu _\tau \) process on the Bu score selection around BDT2 Bu \(= 0.95\). The discrepancy is avoided in the final analysis, detailed in Sect. 2.5, as the final selection criterion on the Bu score is kept at 0.64. Furthermore, similar to the case discussed in Sect. 2.3, we do not assume the BDT performance on current simulations to be a precise depiction of what it will be on real data. The impact of non-ideal data modelling is addressed in Sect. 2.8.

2.5 Final selection

To select each signal process with high purity, two orthogonal event categories are selected targeting the \(B_c^+ \rightarrow \tau ^+ \nu _\tau \) and \(B^+ \rightarrow \tau ^+ \nu _\tau \) signals, respectively. Each category is defined with a combined selection on the BDT1, BDT2 signal,Footnote 2 and BDT2 background scores. The selection criteria are optimised by evaluating the yields for different processes under the selection combinations.

The yields for two signal modes are evaluated directly with simulated samples. For backgrounds, an indirect measure is taken because the final background rejection is beyond \(10^9\) level, and the number of events in the simulated samples is insufficient for a direct estimate. The background yields are evaluated with a three-step procedure:

-

The inclusive samples are used to estimate a yield with a baseline selection (BDT1 \(> 0.9\), BDT2 signal \(> 0.6\), and BDT2 bkg \(< 0.1\)).

-

The inclusive samples become statistically limited after the baseline selection. The samples for exclusive decays listed in Sect. 2.1 are used to estimate the efficiencies from this baseline selection to a tight selection (BDT1 \(> 0.99\), BDT2 signal \(> 0.6\), and BDT2 bkg \(<0.05\)). Each exclusive decay is weighted by its cross section multiplied by its efficiency regarding previous steps. The combination of all exclusive samples provides the best approximation to the inclusive phase-space.

-

Beyond the tight selection, the exclusive samples also become limited in statistical precision. To estimate efficiencies with further selections, the first-stage and second-stage BDTs are assumed to be independent of each other and their efficiencies are evaluated separately with the exclusive samples. The efficiency profiles with regard to BDT1 are evaluated with a series of cut values and smoothed with splines. Similarly, the efficiency profiles with regard to BDT2 are evaluated on a 2D grid (BDT2 signal, BDT2 background) and smoothed with 2D splines. The efficiencies with regard to two BDTs are multiplied to give the total efficiency, as shown in Eq. (6).

$$\begin{aligned} \begin{aligned} \epsilon _1(\alpha )&= \epsilon (\text {BDT1}> \alpha \hspace{0.1cm}|\hspace{0.1cm} \text {tight selection}), \\ \epsilon _2(\beta , \gamma )&= \epsilon (\text {BDT2}_\text {sig} > \beta , \\&\quad \text {BDT2}_\text {bkg} < \gamma \hspace{0.1cm}|\hspace{0.1cm} \text {tight selection}), \\ \epsilon _{tot}(\alpha , \beta , \gamma )&= \epsilon _1(\alpha ) \times \epsilon _2(\beta , \gamma ) \end{aligned} \end{aligned}$$(6)

The efficiency profiles of \(Z \rightarrow b{\bar{b}}\) and \(Z \rightarrow c{\bar{c}}\) processes are evaluated independently. The light flavour \(Z \rightarrow q{\bar{q}}\) decay is already rejected at \(10^9\) level with the baseline selection and is therefore not considered for further steps.

The final selection criteria are optimised separately for two categories by scanning through a three-dimensional grid of BDT1, BDT2 signal, and BDT2 background scores to find the best-expected signal purity, defined as \(P = S/(S+B)\), where S and B are the expected signal and background yields. The optimal selections are found to be BDT1 > 0.99993, BDT2 Bc > 0.782, and BDT2 background < 0.0088 for the Bc category, and BDT1 > 0.99946, BDT2 Bu > 0.640, and BDT2 background < 0.0123 for the Bu category. The efficiencies for \(Z \rightarrow b{\bar{b}}\) and \(Z \rightarrow c{\bar{c}}\) processes factorised for each step are listed in Table 1. The signal efficiencies factorised for each step are listed in Table 2. The corresponding expected yields, in the scenario of \(N_Z = 6 \times 10^{12}\), are listed in Table 3.

2.6 Fit to measure the signal yield

The signal yield is measured by fitting the distributions of certain kinematic variables, which discriminate signals from backgrounds. The kinematic distributions are modelled directly for signals after the final selection. For the background, however, there is not enough simulated events after final selections, so its fit variable shapes need to be extrapolated from looser selections. Such extrapolation relies on the assumption that there is no significant variation in the background shapes between loose and tight BDT selections. Since the two BDTs utilise the full kinematic description of the event, all kinematic variables are, to different extents, correlated with the BDT scores. However, as will be discussed below, some variable shapes are found to be more sculpted by very loose BDT selections and become stable against tighter cuts.

The first-stage BDT imposes strong selection on the decay processes in both hemispheres of background events. Semileptonic decays, which include large missing energy and have more kinematic resemblance to signals, are found to be significantly preferred in both hemispheres. This effect leads to intrinsic differences between the signals and backgrounds in the non-signal hemisphere, which is otherwise supposed to be random decays. The second-stage BDT focuses on the signal-like hemisphere, in which the signal decays feature more missing energy and larger angle to the thrust axis than backgrounds. As mentioned in Sect. 2.2, this indirectly changes the shape of the non-signal hemisphere energy, therefore the energy variable still exhibits discriminating power after BDT selections. Most of the background process filtering and the kinematic variable morphing described above is attained at low BDT regions, and the background composition become rather stable towards tight BDT selections. On the other hand, the discriminating power in the energy of the signal hemisphere is preserved after BDT selections because very few background processes contain as much missing energy as the signals.

Therefore the sum of energy in the signal hemisphere (minimum hemisphere energy) and that in the non-signal hemisphere (maximum hemisphere energy) are chosen as candidates for the final fit, as described in Ref. [39]. The candidate variables are test against the assumption of stable background shapes, with details provided in Appendix C. From Appendix C, no significant variation is found for the shape of maximum hemisphere energy under further BDT selections, while the minimum hemisphere energy is clearly sculpted. Therefore only the maximum hemisphere energy is considered in the final fit.

The fit is constructed as a combined maximum likelihood fit across two event categories, each with four binned distribution templates for the four processes: \(B_c^+ \rightarrow \tau ^+ \nu _\tau \), \(B^+ \rightarrow \tau ^+ \nu _\tau \) signals, and \(Z \rightarrow b{\bar{b}}\), \(Z \rightarrow c{\bar{c}}\) backgrounds. The shape templates for signals are directly modelled by simulations. Whereas for backgrounds, as mentioned in the previous section, there are not enough events in simulations after the final selection to make a direct model of their shapes. The background shape templates are taken as the shapes of the inclusive samples after baseline BDT selections (BDT1 \(> 0.9\), BDT2 signal \(> 0.6\), and BDT2 bkg \(<0.1\)) and are modelled separately for \(Z \rightarrow b{\bar{b}}\) and \(Z \rightarrow c{\bar{c}}\) processes. Since the fit variable is independent of BDT selections, we do not expect the template shapes to change from the baseline to the final selection.

The template shapes in both categories are shown in Fig. 4. Signal distributions are in general more centred towards 45 GeV than the background ones. The two signal modes have similar shapes and their individual contributions are mainly determined by their contrasting expected yields in different categories. The background shapes, featuring a further spread over low-energy regions, can in general be distinguished from signals in the fit. The only exception is the \(Z \rightarrow c{\bar{c}}\) background in the Bu category, shown as the orange line in the right plot of Fig. 4, which resembles signals in shape. Given the very small anticipated \(Z \rightarrow c{\bar{c}}\) yield in this category, as provided in Table 3, we do not expect it to lead to a visible bias in the signal estimates as long as the best-fit value of the \(Z \rightarrow c{\bar{c}}\) events stays in a reasonable range. This is ensured by posing a very loose constraint on the \(Z \rightarrow c{\bar{c}}\) yield in the Bu category in the likelihood fit, which is detailed in the following part of this section as well as Sect. 2.7.

All templates are normalised by their expected yields listed in Table 3. In total, 6 parameters are considered in the fit:

-

\(\mu (B_c^+ \rightarrow \tau ^+ \nu _\tau )\) and \(\mu (B^+ \rightarrow \tau ^+ \nu _\tau )\): The signal strength modifiers relative to their expected yields. They are correlated across two categories and are fully floating.

-

\(\mu _\text {Bc}(Z \rightarrow b{\bar{b}})\), \(\mu _\text {Bc}(Z \rightarrow c{\bar{c}})\), \(\mu _\text {Bu}(Z \rightarrow b{\bar{b}})\), \(\mu _\text {Bu}(Z \rightarrow c{\bar{c}})\): The background strength modifiers relative to their expected yields in each category. They only appear in their designated category and are uncorrelated from each other. The modifiers \(\mu _\text {Bc}(Z \rightarrow b{\bar{b}})\), \(\mu _\text {Bc}(Z \rightarrow c{\bar{c}})\), and \(\mu _\text {Bu}(Z \rightarrow b{\bar{b}})\) are fully floating. The modifier \(\mu _\text {Bu}(Z \rightarrow c{\bar{c}})\) is allowed to float without boundary but with a penalty in the log-likelihood of the fit, which corresponds to a lognormal uncertainty equal to 10 times its expected yields.

Figure 5 shows an example of the fit performed on a pseudo-dataset. Pseudo-datasets are generated from the sum of the expected templates of all processes, with a bin-by-bin random Poisson fluctuation based on the event count in each bin.

To evaluate statistical uncertainties and potential biases from the fit, 4000 pseudo-datasets are generated and the corresponding fit results are summarised for statistical tests. Figure 6 shows the distributions of the best-fit signal strengths from these 4000 pseudo-experiments. This distribution is fit with a double-sided Gaussian function to examine its central value and uncertainties. The mean values for both signal strengths have roughly 0.1% level deviations from 1.0, which are 10 times smaller than the corresponding signal uncertainties. This indicates that there is no visible bias in the fit results from this approach. The relative uncertainties on signal strengths are found to be \({}^{+1.4\%}_{-1.7\%}\) for \(B_c^+ \rightarrow \tau ^+ \nu _\tau \) signal and \({}^{+1.5\%}_{-2.1\%}\) for \(B^+ \rightarrow \tau ^+ \nu _\tau \) signal. Uncertainties extracted at this stage are purely statistical. Considerations of systematic uncertainties are discussed in the next section.

Statistical variation of the signal shape for the \(B_c^+ \rightarrow \tau ^+ \nu _\tau \) (left) and \(B^+ \rightarrow \tau ^+ \nu _\tau \) (right) processes. The upper panels show the distributions of the energy in the maximum hemisphere in three equal subsets of the signal simulation. The lower panels compare the ratio of the distributions in the second and third subsets (dashed and dotted lines) with regard to the distribution in the first subset. The Poisson uncertainties based on the expected yields in data are also shown as the grey band in the lower panel

2.7 Considerations for systematic uncertainties

In this section, we discuss the a few main types of systematic uncertainties and their potential impacts on the results. Note that such considerations are base on the current knowledge of FCC, which is largely speculative at this early stage. We aim to inspect whether there are major ineluctable uncertainties that would impair the feasibility of these measurements. Detailed investigations on detector responses and data conditions will be required to provide accurate estimates of systematic uncertainties in the future.

-

Particle identification and vertex reconstruction efficiency: Signal events feature one displaced vertex in the signal hemisphere with 3 charged pions. The successful reconstruction of signal events relies on the identification of pions and reconstruction of secondary vertices. In this analysis, particle identification is assumed to be perfect. A prospective study [52] has shown that pions and kaons can be distinguished at above \(3 \sigma \) level in the full kinematic phase-space relevant to this analysis. Therefore we expect the signal uncertainty from particle identification to be at per mille level or smaller. In this analysis, the vertex reconstruction is seeded with true decay vertices from simulation. With real data, we do not expect there to be a significant change of signal vertexing efficiency, as the signal \(\tau ^+ \rightarrow \pi ^+ \pi ^+ \pi ^- {\bar{\nu }}_\tau \) vertex is very displaced from other hadron activities, but we do expect the imperfect vertexing to lead to a non-negligible amount of combinatorial backgrounds. Impacts of additional backgrounds is discussed in Sect. 2.8.

-

Signal shape uncertainty: The stability of signal shapes after the final selection is tested by splitting the signal samples into three equal subsets and comparing the signal shape in each subset. Figure 7 illustrates the level of signal shape variation in different subsets as well as the bin-by-bin Poisson uncertainty based on the expected event yields. The shapes in the three subsets are found to be consistent within the statistical fluctuation. To evaluate the impact of such variations, a test is performed including these shape variations as a systematic uncertainty on the signal templates with 4000 pseudo-experiments following the procedure described in Sect. 2.6. The combined uncertainties are found to be \({}^{+1.4\%}_{-1.7\%}\) for \(B_c^+ \rightarrow \tau ^+ \nu _\tau \) and \({}^{+1.5\%}_{-2.1\%}\) for \(B^+ \rightarrow \tau ^+ \nu _\tau \), in which the exact numbers differ from those in Sect. 2.6 only in the third digit and are omitted after rounding. At the current stage, there is very limited information to speculate how the shape uncertainties would be in an analysis with real data. This test example provides a rough estimate of the robustness of the signal precision against shape uncertainties. We conclude that signal shape variations do not introduce a significant source of uncertainty to the best of our knowledge.

-

Potential background shape sculpting from BDT: As explained in Appendix C, the background shape is very stable against tight BDT selections. Although there is not enough simulated events to provide a quantitative estimate of background shape variations in the final selection, we expect such variations to be very small and not to lead to a visible change in the fit results.

-

Background efficiency: The background efficiency estimate relies on many assumptions: the stability of BDT performance at high BDT values as discussed in Sects. 2.3 and 2.4, the agreement between inclusive background efficiencies and exclusive background efficiencies, and other potential background sources that are not taken into consideration, for example, the combinatorial backgrounds mentioned above. In the fit approach described in Sect. 2.6, the background yields are freely floating.Footnote 3 Since the fit does not constrain background yields to their expected values in simulations, any bias in the background efficiency should not affect the fit as a systematic uncertainty.

-

Uncertainty of branching ratios of \(B \rightarrow DD\) components: as described in Sect. 2.1, several \(B\rightarrow DD\) decay samples are considered as the exclusive decays, which are used for background efficiency estimates in Sect. 2.5. The branching ratios of these decay are not yet known to high precision, and such uncertainties should be propagated into the efficiency estimates and background shape variations. We consider a 30% uncertainty on the branching ratios of all \(B \rightarrow DD\) processes and check for the impact on the signal sensitivity. The impact turns out to be negligible. This study is summarised in Appendix D.

Overall, we do not expect any significant systematic uncertainty from known sources. However, to make a prediction on the experimental sensitivity, as performed in Sect. 2.6, pseudo-datasets are generated based on the expected background yields in simulations. We evaluate the lack of knowledge in the exact background yields in a series of nonideal scenarios and provide a range of expected experimental sensitivity in Sect. 2.8.

2.8 Fit performance for nonideal scenarios

In this analysis, we consider two treatments on the background yields to provide sensitivity estimates in several nominal scenarios, spanning optimistic and very pessimistic cases.

-

Inflation factor: The expected background yields, as reported in Sect. 2.5, are multiplied by the inflation factor. In each pseudo-dataset, the same inflation factor is applied to both \(Z \rightarrow b{\bar{b}}\) and \(Z \rightarrow c{\bar{c}}\) backgrounds in both the Bc and Bu categories. Scenarios of \(N_\text {bkg}=\) [1,2,5,10]\(\times N_\text {bkg}^\text {exp}\) are considered.

-

Random fluctuation: When generating pseudo-datasets, in addition to the Poisson fluctuation based on the event yields, a random factor following a lognormal distribution is applied to each background process. The lognormal distribution has a central value (value at 50% quantile) at 1.0 and a 68% coverage band of \([1/\sigma _\text {bkg}^\text {fluc}, \sigma _\text {bkg}^\text {fluc}]\), where \(\sigma _\text {bkg}^\text {fluc}\) is the relative background yield fluctuation. In each pseudo-dataset, the fluctuation parameter of each background process in each category is independently sampled from the same lognormal distribution. Scenarios of \(\sigma _\text {bkg}^\text {fluc}=\)[1,2,5,10] are considered.Footnote 4

The signal sensitivity is evaluated with 4000 pseudo-experiments in each scenario of background inflation and fluctuation. Figure 8 summarises the expected sensitivity for both \(B_c^+ \rightarrow \tau ^+ \nu _\tau \) (left) and \(B^+ \rightarrow \tau ^+ \nu _\tau \) (right) signals. The relative uncertainties shown in Fig. 8 are symmetrised from their up and down uncertainties. The scenario with \(\sigma _\text {bkg}^\text {fluc}=1\) and \(N_\text {bkg}=N_\text {bkg}^\text {exp}\) is the idealistic case as demonstrated in Sect. 2.6.

The experimental sensitivity is shown to be reasonably stable against background inflation and fluctuations. From the idealistic case to the most pessimistic case, the \(B_c^+ \rightarrow \tau ^+ \nu _\tau \) precision deteriorates from \(1.6\%\) to \(2.3\%\) and the \(B^+ \rightarrow \tau ^+ \nu _\tau \) precision from \(1.8\%\) to \(3.6\%\). This high level of resilience against exaggerated backgrounds is a result of the high signal-to-background ratios in both categories. We expect the background inflation and fluctuation in pessimistic cases to be large enough to account for most of the uncertainties discussed in Sect. 2.7 and such results to provide a reasonable expectation of the achievable precision range at FCC-ee.

2.9 Expected performance with different data sizes

All experimental predictions shown in previous sections are based on an expected nominal FCC-ee Z-pole dataset of 180 ab\(^{-1}\), containing \(6\times 10^{12}\) Z bosons. Note that the nominal luminosity is updated since the previous work on the \(B_c^+ \rightarrow \tau ^+ \nu _\tau \) decay [39]. This change of expected luminosity leads to a marginal improvement of the experimental precision, while the main improvement of the \(B_c^+ \rightarrow \tau ^+ \nu _\tau \) precision comparing to the previous work comes from the updated categorisation strategy. In this paper we only provide results based on \(6\times 10^{12}\) Z bosons, while similar predictions on experimental sensitivities with different sample sizes, in case of further changes of the FCC design or operation schedule, can be inferred with simple calculations. The uncertainties evaluated in Sect. 2.8 are purely statistical uncertainties, which scale inversely with the square root of the sample size, \(\propto 1/\sqrt{N_Z}\). Therefore predictions on the experimental precision, assuming the same analysis strategy, can be calculated by scaling the numbers provided by Sect. 2.8.

3 Phenomenological Implications in the Standard Model and Beyond

In this section, we inspect the phenomenological implications that the measurements of \({\mathcal {B}}(B^+\rightarrow \tau ^+ \nu _\tau )\) and \({\mathcal {B}}(B_c^+\rightarrow \tau ^+ \nu _\tau )\) would have in the Standard Model and Beyond. Our predictions are based on the projected precision with which these modes will be measured at FCC-ee at 180 ab\(^{-1}\), as studied in the previous section and summarised in Fig. 8. For \(N(B^+\rightarrow \tau ^+ \nu _\tau )\), we will consider two different benchmarks corresponding to precisions of \(\approx 2\%\) and \(\approx 4\%\) in the idealistic and pessimistic case respectively. Whereas for \(N(B_c^+\rightarrow \tau ^+ \nu _\tau )\), as will be discussed in this section, theoretical uncertainties from other sources are non-negligible, and the difference between the idealistic (\(1.6\%\)) and pessimistic (\(2.3\%\)) experimental precisions becomes insignificant. Therefore we adopt a single benchmark corresponding to a \(\approx 2\%\) precision. As we stated in the introduction it is worth to mention that, while for the \(B^+\) channel its signal yield can be easily translated into a branching ratio measurement and employed to infer phenomenological implications, this is not the case for the \(B_c^+\) one due to the poor knowledge of its hadronisation fraction \(f(B_c^\pm )\).

In order to circumvent this issue, we choose to factor out \(f(B_c^\pm )\) by normalising the \(B_c^+ \rightarrow \tau ^+ \nu _\tau \) decay to the semileptonic decay mode \(B_c^+ \rightarrow J/\psi \mu ^+\nu _\mu \). As explained in our previous work [39], the normalisation ratio

is measured purely from the experiment, in which the experimental uncertainty on \(N(B_c^+ \rightarrow J/\psi \mu ^+\nu _\mu )\) is expected to be significantly smaller than that of \(N(B_c^+\rightarrow \tau ^+ \nu _\tau )\). In this work, we assume that the normalisation channel is not affected by NP, which is a well justified choice since we will consider scenarios where NP couples only to taus, and not to light leptons. Here we place a \(\approx 1.5\%\) uncertainty for \(N(B_c^+ \rightarrow J/\psi \mu ^+\nu _\mu )\), which leads to a \(\approx 3\%\) uncertainty for \({\mathcal {R}}\). In this manner, we obtain the relative precision of \({\mathcal {B}}(B^+\rightarrow \tau ^+ \nu _\tau )\) from \({\mathcal {R}}\) as well as a theoretical external input \(\Gamma _{\textrm{theo}}(B_c^+ \rightarrow J/\psi \mu ^+\nu _\mu )/|V_{cb}|^2\). For this input the current prediction obtained employing LQCD yields a relative uncertainty of \(\approx 7 \%\) [44]. However, current estimates consider achievable to cut down this uncertainty to \(\approx 2 \%\) already in the next decade [53], hence it is conceivable to assume such level of precision for our study. Our final expected precision on \(\Gamma (B_c^+ \rightarrow J/\psi \mu ^+\nu _\mu )/|V_{cb}|^2\) therefore corresponds to \(\approx 3.5 \%\). It is interesting to notice that, if LQCD will be able to improve the precision on \(\Gamma _{\textrm{theo}}(B_c^+ \rightarrow J/\psi \mu ^+\nu _\mu )/|V_{cb}|^2\) beyond the current estimates, this will translate in an increase of the expected precision for \(\Gamma (B_c^+ \rightarrow J/\psi \mu ^+\nu _\mu )/|V_{cb}|^2\) as well.

Current determinations of \(\vert V_{ub} \vert \) from various sources, and future predictions. In the lower part of the plot, we report from bottom to top the current values extracted by a fit to exclusive channels [20], a determination from the inclusive channel [20] based on the method originally developed in Ref. [54], the values obtained by the UTfit and CKMfitter global fits [34, 35], and the determination extracted by the average of current measurements of \(B^+\rightarrow \tau ^+ \nu _\tau \) [20]. In the upper part of the plot, we give the predicted extractions by future measurements of \(B^+\rightarrow \tau ^+ \nu _\tau \) both at Belle II and at FCC-ee: for the former, we assume a precision of \(5\%\) in the determination of the branching ratio [55], while for the latter we give both the pessimistic and idealistic cases of precision, namely \(4\%\) and \(2\%\). We give for each of these scenarios three different predictions according to the choice employed for the central value of \(\vert V_{ub} \vert \), namely the current exclusive determination, the current UTfit result or the current \(B^+\rightarrow \tau ^+ \nu _\tau \) determination, identified by a circle, a square or a star marker, respectively. To facilitate a comparison, the grey and ochre vertical bands represent the current exclusive and inclusive determinations of \(\vert V_{ub} \vert \), respectively

3.1 Extraction of \(\vert V_{ub} \vert \)

The measurement of \({\mathcal {B}}(B^+\rightarrow \tau ^+ \nu _\tau )\) allows us to perform a direct determination of \(\vert V_{ub} \vert \), which we define as \(\vert V_{ub} \vert ^{\textrm{lep}}\), by inverting Eq. (2). Observing that the \(B^+\) meson lifetime is currently determined with a per mille accuracy [46], the only other source of the uncertainty in determining \(\vert V_{ub} \vert ^{\textrm{lep}}\) would come from the theory prediction of the decay constant \(f_{B^+} \). As stated in the introduction, the average of its latest LQCD determinations is equal to \(f_{B^+} =190.0(1.3)\) MeV [4], with a \(\approx 0.6\%\) relative error. However, according to present estimates this uncertainty could be halved within the next 10 years [53], well before the FCC timeline. Therefore, the precision of the future extraction of \(\vert V_{ub} \vert ^{\textrm{lep}}\) will be fully determined by the experimental error.

We report our findings in Fig. 9. In the lower part of this plot, we show the current status of the determination of \(\vert V_{ub} \vert \) giving the values presently extracted by exclusive and inclusive [20] channel determinations, employing the method originally developed in Ref. [54] for the latter, respectively dubbed below as \(\vert V_{ub} \vert ^\mathrm{excl.}\) and \(\vert V_{ub} \vert ^{\mathrm{incl.}}\), together with the results from global fits performed by the UTfit [34] and the CKMfitter [35] collaborations, \(\vert V_{ub} \vert ^{\textrm{fit}}\). Moreover, we also show the determination extracted by the average of current measurements of \({\mathcal {B}}(B^+\rightarrow \tau ^+ \nu _\tau )\) [20]. The current value extracted for \(\vert V_{ub} \vert ^{\textrm{lep}}\) is compatible with the present inclusive determination, but with a large error due the current precision of the \({\mathcal {B}}(B^+\rightarrow \tau ^+ \nu _\tau )\) determination, equal to \(\approx 20\%\). An incompatibility with the exclusive determination of the CKM element can therefore be hardly claimed. Hence, this channel currently plays no relevant role neither in the determination of \(\vert V_{ub} \vert ^{\mathrm{excl.}}\), nor in the attempt to understand and reconciling the long-standing \(\vert V_{ub} \vert ^{\mathrm{incl.}}\) vs. \(\vert V_{ub} \vert ^{\mathrm{excl.}}\) puzzle.

This situation will change in the future. Belle II expected precision on the determination of \({\mathcal {B}}(B^+\rightarrow \tau ^+ \nu _\tau )\) is equal to \(\approx 5\%\) [55], and as we shown in the previous section, FCC-ee will bring it down potentially to \(\approx 2\%\). Given the accuracy of the theoretical inputs described above, this will directly reflect into a significant increase in the precision of the extraction of \(\vert V_{ub} \vert ^{\textrm{lep}}\), as the reader can see from the upper part of Fig. 9.Footnote 5 In this section of the plot, we give predictions for Belle II and both the pessimistic and the idealistic scenarios at FCC-ee. Given the obtained level of accuracy, we present each of these predictions in three different benchmarks, differentiated by the choice adopted for the central value of \(\vert V_{ub} \vert ^{\textrm{lep}}\): for the first benchmark, we assume that \({\mathcal {B}}(B^+\rightarrow \tau ^+ \nu _\tau )\) will align to the other exclusive modes, and hence employ the current exclusive average for the central value of \(\vert V_{ub} \vert ^{\textrm{lep}}\); in the second benchmark, we forecast that the channel to converge to what is currently suggested from global CKM fits, and assume a central value for the CKM element in according to the current UTfit determination; for the last benchmark, we instead keep the central value as the same of the current average of \({\mathcal {B}}(B^+\rightarrow \tau ^+ \nu _\tau )\) measurements. These benchmarks are identified in the plot by a circle, a square and a star marker, respectively.

Several considerations are now in order. First and foremost, the level of accuracy with which \(\vert V_{ub} \vert ^{\textrm{lep}}\) could be extracted at FCC-ee in the idealistic case is less than a third of the current precision stemming from exclusive average, while about a half in the pessimistic case. This implies that, contrary to the current situation, \({\mathcal {B}}(B^+\rightarrow \tau ^+ \nu _\tau )\) will start to play a role in the extraction of the determination of \(\vert V_{ub} \vert ^{\mathrm{excl.}}\) in the future. Moreover, if the central value will be similar to what we assumed for our third benchmark, we would obtain a theoretically clean exclusive determination in accordance with the current inclusive one. This could be a hint to the reason behind the long-standing exclusive vs. inclusive discrepancy: on the one hand, the semileptonic decays currently employed for the \(\vert V_{ub} \vert ^{\mathrm{excl.}}\) are prone to theory errors due to the presence of form factors; on the other hand, the theoretical prediction of the fully inclusive rate used for the extraction of \(\vert V_{ub} \vert ^{\mathrm{incl.}}\) is cleaner, but also hindered by the need to perform phase space cuts due to experimental limitations. A measurement of \(\vert V_{ub} \vert ^\textrm{lep}\) compatible with \(\vert V_{ub} \vert ^{\mathrm{incl.}}\) could therefore point of a better control of the latter theoretical errors, rather than the former ones. If we will instead observe a result in accordance to the first or second benchmark, this would strengthen the case of the exclusive determination of \(\vert V_{ub} \vert ^{\mathrm{excl.}}\), which is currently the preferred one given the better accordance with results coming from global CKM fits.

In conclusion, the determination of \(\vert V_{ub} \vert ^{\textrm{lep}}\) through the measurement of \({\mathcal {B}}(B^+\rightarrow \tau ^+ \nu _\tau )\) at FCC-ee will be of extreme relevance, potentially playing a key role in the resolution of the long-standing \(\vert V_{ub} \vert ^\mathrm{incl.}\) vs. \(\vert V_{ub} \vert ^{\mathrm{excl.}}\) puzzle.

3.2 New Physics Implications

We proceed now to study the implications that the measurements of \({\mathcal {B}}(B^+ \rightarrow \tau ^+ \nu _\tau )\) and \({\mathcal {B}}(B_c^+ \rightarrow \tau ^+ \nu _\tau )\) at FCC-ee would have on some specific NP models. In order to perform this kind of analysis, we start by introducing the most general dimension-six effective Hamiltonian for \(b\rightarrow q\tau \nu \) transitions, with \(q=u,c\), namely

where the effective coefficients \(C^q_{\alpha }\equiv C^q_{\alpha }(\mu )\) with \(\alpha \in \lbrace V_{L(R)},S_{L(R)},T\rbrace \) are evaluated at the renormalisation scale \(\mu =m_b\). The normalisation is chosen in such a way that the SM can be recovered by setting \(C^q_{\alpha }=0\) for all coefficients. Note also that here and below we are assuming that NP couples only to taus, and not to light leptons.

Starting from this Hamiltonian, the NP contribution to the leptonic \(B_q^+\) decays can be written as

which can also be expressed in terms of common combinations \(C^q_{A} \equiv C^q_{V_R}- C^q_{V_L}\) and \(C^q_{P} \equiv C^q_{S_R}- C^q_{S_L}\). It is worth mentioning that, due to chiral enhancement, \({\mathcal {B}}(B_q^+ \rightarrow \tau ^+ \nu _\tau )\) is particularly sensitive to contributions from the scalar coefficient \(C^q_{P}\). The combinations \(C^q_{V} \equiv C^q_{V_R}+ C^q_{V_L}\) and \(C^q_{S} \equiv C^q_{S_R}+ C^q_{S_L}\), or the coupling \(C^q_T\), cannot be probed by leptonic \(B^+_q\) decays, but are on the other hand accessible in decays involving a pseudoscalar meson in the final state like, e.g., \(B^0 \rightarrow D^-(\pi ^-) \tau ^+ \nu _\tau \) decays, for \(q=c(u)\) respectively. Moreover, complementary information can be obtained by decays with a vector in the final state, such as \(B^0 \rightarrow D^{* -} \tau ^+ \nu _\tau \) and \(B_c^+ \rightarrow J/\psi \tau ^+ \nu _\tau \), or \(B^0 \rightarrow \rho ^- \tau ^+ \nu _\tau \) and \(B^+ \rightarrow \omega \tau ^+ \nu _\tau \), respectively for the \(q=c\) and \(q=u\) channels. For a recent global analysis in \(b\rightarrow c\) and \(b\rightarrow u\) transitions, see e.g. Refs. [57] and [58], respectively.

Left diagram: additional tree-level contribution to \(b\rightarrow q \tau \nu _\tau \) transitions due to the presence of a charged Higgs, \(H^-\). Central diagram: additional tree-level contribution to \(b\rightarrow q \tau \nu _\tau \) transitions due to the presence of the scalar leptoquark \(S_1\). Right diagram: additional tree-level contribution to \(b\rightarrow q \tau \nu _\tau \) transitions due to the presence of the vector leptoquark \(U_1\)

The effective couplings introduced in Eq. (8) can be used to parametrize at the low scale NP effects stemming from new heavy fields, once they are integrated out, and hence constrain such models. In this section, we focus particularly on two specific extensions of the Standard Model, namely the generic Two-Higgs-doublet model (G2HDM) [25] and specific models containing scalar and vector LQs [26, 27]. This choice is justified by the fact that these models predict a non-negligible value for the \(C^q_{P}\) coefficients, particularly in light of a potential explanation of the anomalies observed in \(b\rightarrow c\) transitions. Details on the results obtained for such models as discussed in the following sections.

3.2.1 G2HDM

The first NP model that we consider here is obtained by extending the Higgs sector with an additional Higgs doublet, carrying the same quantum numbers under the SM gauge group [25]. This addition will enrich the scalar sector of the SM with 4 new states: a second \(C\!P\)-even scalar, a neutral \(C\!P\)-odd one, and a charged Higgs. This last particle could mediate the same kind of transitions which occurs in the SM via the exchange of a W boson, and hence would contribute to \(B_q^+\rightarrow \tau ^+ \nu _\tau \) decays as well, as depicted at the partonic level in the left diagram of Fig. 10. Allowing both Higgs doublets to couple to all fermions leads however to tree level flavour changing neutral currents, which are heavily suppressed in the SM. For this reason, often one imposes some kind of discrete symmetry on the fermions and scalar fields, such that only fermions of a specific chirality and hypercharge should couple to a single Higgs doublet [59]. However, it was observed that a G2HDM where such a symmetry is not imposed can be used to address the \(b\rightarrow c\) anomalies, given it allows to evade bounds such the ones discussed in Ref. [60], and is currently the only realisation of 2HDM still capable to explain such measurements, see e.g. Refs. [61, 62] and references therein.

In particular, defining the simplified Lagrangian

where we introduced the complex coefficients \(y^q_Q\) and \(y_\tau \) which are mediating the interaction between the charged Higgs boson and the SM fermions, we can relate such a Lagrangian to the effective coefficients appearing at Eq. (8) once integrating out the heavy charged Higgs. In particular, it is possible to write

where the coefficients are related to the couplings introduced in Eq. (10) and the mass of the charged Higgs mass \(m_{H^-}\).

Left panel: allowed region for the G2HDM induced complex \(C_{S_L}^c\) coefficient. The grey region represents the constraint coming from current constraint on \({\mathcal {B}}(B_c^+\rightarrow \tau ^+ \nu _\tau )\), while the hashed region corresponds to the future constraint induced by FCC-ee. In blue are shown the 1\(\sigma \), 2\(\sigma \) and 3\(\sigma \) regions (darker to lighter) inferred on the couplings from \(b\rightarrow c\) anomalies. Right panel: analogous to the left panel, but for the \(B^+\rightarrow \tau ^+ \nu _\tau \) channel

The expected FCC-ee constraints on the plane \(\text {Re}(C_{S_L}^q)\) vs. \(\text {Im}(C_{S_L}^q)\) are shown in Fig. 11, where we report on the left panel the bounds for the c sector and on the right panel the ones for the u one. In this plots, we show both current and future constraints coming from \(B_q^+\rightarrow \tau ^+ \nu _\tau \) measurements. In particular, for the current constraint on the still-to-be observed \({{B} _{c} ^+} \) tauonic decay we employed the conservative estimate \({\mathcal {B}}(B_c^+ \rightarrow \tau ^+ \nu _\tau )\le 60\%\) [7, 8], which is the reason why the unconstrained region appears as a disk and not as an annulus, while for the \({{B} ^+} \) measurement we can rely on the average \({\mathcal {B}}(B^+ \rightarrow \tau ^+ \nu _\tau )= (1.094 \pm 0.208 \pm 0.043) \times 10^{-4}\) [20]. The future constraints are inferred employing for their central values the current SM prediction in the \({{B} _{c} ^+} \) channel and the current experimental average in the \({{B} ^+} \) one, and from a measurement with a relative precision of \(3.5\%\) and \(4\%\), respectively. Here and below, we refrain ourselves to show the results obtained assuming a \(2\%\) precision in the \({{B} ^+} \) system as well, in order not to impede the readability of the plots by the reader. As can be observed from Fig. 11, the future measurements at FCC-ee in these channels will strongly constrain the currently allowed parameter space for the G2HDM. Of particular relevance in the \({{B} _{c} ^+} \) sector, due to its relation to the aforementioned \(b\rightarrow c\) anomalies. To this end, we show in the plot also the current 1\(\sigma \), 2\(\sigma \) and 3\(\sigma \) regions required in order to address such anomalies: while the current limit on \({\mathcal {B}}(B_c^+ \rightarrow \tau ^+ \nu _\tau )\) already imposes a stringent constraint on the allowed parameter space, FCC-ee will be able to fully probe the 1\(\sigma \) and 2\(\sigma \) regions, leaving only a marginal part of the 3\(\sigma \) one untested. The measurement of this channel at FCC-ee will be therefore of extreme relevance in the context of the current B-meson charged current anomalies, capable of playing a major, independent role in confirming or rebutting the presence of NP in such channels. It is worth mentioning that, while for \(m_{H^-} \ge 400\,\)GeV stringent constraints are imposed by \(\tau \nu \) resonance searches, this is not the case for \(m_{H^-} \le 400\,\)GeV where the lack of constraints still allows the explanation of the \(b\rightarrow c\) anomalies [63]. We therefore assume a mass for the charged Higgs below this value, hence evading current constraints.

3.2.2 Leptoquarks

The second class of NP models considered in our analysis are obtained adding to the SM field contents a new class of particles, named leptoquarks, which couple directly to leptons and quarks in the same vertices. Several different realisation of LQs exists, given their spin and their quantum number under the SM gauge group [26]. In our analysis we will focus on two specific models, which have been recently object of extensive study due to their ability to satisfactorily address the aforementioned \(b\rightarrow c\) anomalies, see e.g. Ref. [57] and references therein. We classify these models by the LQ quantum numbers \((SU(3)_c,SU(2)_L,U(1)_Y)\), where \(Q=Y+T_3\) is the electric charge, Y denotes the hypercharge and \(T_3\) is the third component of weak isospin.

The first LQ that we study here is the scalar Leptoquark \(S_1\), characterised by the quantum numbers \((\bar{{\textbf{3}}},\textbf{1},1/3)\). \(S_1\) is therefore an \(SU(2)_L\) singlet, capable to mediate at tree-level the transition \(b\rightarrow q\tau \nu \) being this kind of interaction induced by the Lagrangian

where \(\tau _2\) is the second Pauli matrix and \(y_{L,R}^{ij}\) are generic Yukawa complex couplings. For a diagrammatic depiction of such a contribution, see the central diagram of Fig. 10. Once \(S_1\) is integrated out of the theory, its low energy footprints in \(b\rightarrow q \tau \nu \) transitions are mediated by the couplings

where the matching is performed at the scale of the \(S_1\) mass, \(\mu _{\textrm{LQ}}=\text {\,m} _{S_1}\). Both the vectorial and the combined scalar/tensor couplings are independently capable to address the \(b\rightarrow c\) anomalies. However, since the former would also induce a tree-level contribution to \(b\rightarrow s\nu \nu \) due to the \(SU(2)_L\) symmetry implying additional constraint, we will focus below only on the latter.

Left panel: allowed region for the \(S_1\) induced complex \(C_{S_L}^c\) coefficient. The grey region represents the constraint coming from current constraint on \({\mathcal {B}}(B_c^+\rightarrow \tau ^+ \nu _\tau )\), while the grey hashed region corresponds to the future constraint induced by FCC-ee. The green region shows the constraint coming from current direct searches at LHC, with future limits from HL-LHC given in hashed green. In blue are shown the 2\(\sigma \) and 3\(\sigma \) regions (darker to lighter) inferred on the couplings from \(b\rightarrow c\) anomalies. Right panel: analogous to the left panel, but for the \(B^+\rightarrow \tau ^+ \nu _\tau \) channel

We report in Fig. 12 the implications of future measurements of \(B_q^+\rightarrow \tau ^+ \nu _\tau \). In our analysis we assumed \(\text {\,m} _{S_1}=2\) TeV, which modifies the relation between the scalar and the tensor coefficients to \(C_{S_L}^q= - 8.9 C_T^q\) due to renormalisation group evolution (RGE), once the coefficients are evolved down to \(\mu _b=m_b\) [64]. Following the same procedure employed in the previous section, we show both present and future exclusion coming from the measurement of the leptonic decays branching ratios. On top of those, we also overlay the collider limits coming from LQ mediated high \(P_T\) mono-\(\tau \) search at the LHC [65], together with the projected sensitivity for HL-LHC [65, 66]. Finally, we report the current 2\(\sigma \) and 3\(\sigma \) regions required for the coefficients in order to address the charged current anomalies. Different considerations are in order for the two channels here analysed. For the \(B_c^+\rightarrow \tau ^+ \nu _\tau \), given the present lack of a measurement for its branching ratio, the current main constraints come from direct searches at LHC. However this picture is going to change in the future, where FCC-ee and HL-LHC will play complementary roles in constraining the currently available parameter space, strongly reducing the possibility of an \(S_1\) explanation of the anomalies but not able to completely rule it out. On the other hand, having already a measurement for \({\mathcal {B}}(B^+\rightarrow \tau ^+ \nu _\tau )\) makes current LHC constraint non competitive. Moreover, the suppression coming from a different CKM normalisation in the coefficients of Eq. (13) compared to the \(B^+_c\) channel is capable to overcome the PDF enhancement of this channel. Therefore, even after the advent of HL-LHC this picture will not be altered, making the measurement of \({\mathcal {B}}(B^+\rightarrow \tau ^+ \nu _\tau )\) at FCC-ee of particular interest.

The second LQ model that we inspect is the singlet vector Leptoquark \(U_1=(\textbf{3},\textbf{1},2/3)\). As depicted at in the right diagram of Fig. 10, also this field can mediate at tree-level the transition \(b\rightarrow q\tau \nu \), being described by the Lagrangian

where the generic complex couplings \({\hat{z}}_{L,R}^{ij}\) are defined in the interaction basis. Integrating out now the vector LQ and going to the mass basis, one obtains

with the matching performed at the \(U_1\) mass scale, \(\mu _\textrm{LQ}=\text {\,m} _{U_1}\). Being the \(U_1\) LQ a massive vector state, it requires some sort of UV completion to explain the origin of its mass: a common realisation consists into considering it as a gauge boson of a new U(2) symmetry [67], and assuming that in the interaction basis it couples only to SM fields of the third generation, i.e., \({\hat{z}}_L^{b\tau }={\hat{z}}_R^{b\tau }\ne 0\), and vanishing otherwise. Rotating now to the mass basis will induce two consequences: first, the appearance of couplings like \(z_L^{c\tau }\) or \(z_L^{u\tau }\); second, the mis-alignment of left- and right-handed couplings due to the physical phase \(\beta _R\), coming from the rotation matrices and implying \(z_R^{b\tau }= e^{-i\beta _R} z_L^{b\tau }\). We therefore obtain, in a UV completion of the \(U_1\) LQ by means of a U(2) symmetry, the following relation:

where we have taken into account the RGE effects [64]. The implications of future measurements of \(B_q^+\rightarrow \tau ^+ \nu _\tau \) on this model are reported in Fig. 13. Analogously to what done for the previous cases, we show present and future bounds on the parameter space coming both from \({\mathcal {B}}(B_q^+\rightarrow \tau ^+ \nu _\tau )\) and high \(P_T\) mono-\(\tau \) searches at LHC. Similarly to the \(S_1\) scenario, it is interesting to notice that in the \(B_c\) channel FCC-ee and HL-LHC will play complementary roles in constraining this model. Of particular interest is the capability of FCC-ee to constrain the CP-violating phase \(\beta _R\), which is instead not probed at HL-LHC. This will fully enable the probe of the 1\(\sigma \) and 2\(\sigma \) regions identified by the \(b\rightarrow c\) anomalies, leaving only a minimal part of the 3\(\sigma \) parameter space not probed. Once again, the high precision with which FCC-ee will be capable to measure \({\mathcal {B}}(B_c^+\rightarrow \tau ^+ \nu _\tau )\) will allow it to play a prominent role into either confirming or refuting these anomalies. Regarding the \(B^+\) channel, due to the different CKM normalisation present also in this scenario, HL-LHC will not have a meaningful impact in constraining the model, contrarily to the strong probing power of FCC-ee.

Left panel: allowed region for the \(U_1\) induced \(C_{V_L}^c\) real coefficient and \(\beta _R^q\) complex phase, see text for details. The colour scheme is the same of Fig. 12. Right panel: analogous to the left panel, but for the \(B^+\rightarrow \tau ^+ \nu _\tau \) channel

4 Conclusion