Abstract

The mass of the top quark is measured in 36.3\(\,\text {fb}^{-1}\) of LHC proton–proton collision data collected with the CMS detector at \(\sqrt{s}=13\,\text {Te}\hspace{-.08em}\text {V} \). The measurement uses a sample of top quark pair candidate events containing one isolated electron or muon and at least four jets in the final state. For each event, the mass is reconstructed from a kinematic fit of the decay products to a top quark pair hypothesis. A profile likelihood method is applied using up to four observables per event to extract the top quark mass. The top quark mass is measured to be \(171.77\pm 0.37\,\text {Ge}\hspace{-.08em}\text {V} \). This approach significantly improves the precision over previous measurements.

1 Introduction

The top quark [1, 2] is the most massive fundamental particle and its mass, \(m_{\textrm{t}}\), is an important free parameter of the standard model (SM) of particle physics. Because of its large Yukawa coupling, the top quark dominates the higher-order corrections to the Higgs boson mass and a precise determination of \(m_{\textrm{t}}\) sets strong constraints on the stability of the electroweak vacuum [3, 4]. In addition, precise measurements of \(m_{\textrm{t}}\) can be used to test the internal consistency of the SM [5,6,7].

At the CERN LHC, top quarks are produced predominantly in quark–antiquark pairs (\(\hbox {t}\bar{\hbox {t}} \)), which decay almost exclusively into a bottom (b) quark and a W boson. Each \(\hbox {t}\bar{\hbox {t}} \) event can be classified by the subsequent decay of the W bosons. For this paper, the lepton + jets channel is analyzed, where one W boson decays hadronically, and the other leptonically. Hence, the minimal final state consists of a muon or electron, at least four jets, and one undetected neutrino. This includes events where a muon or electron from a tau lepton decay passes the selection criteria.

The mass of the top quark has been measured with increasing precision using the reconstructed invariant mass of different combinations of its decay products [8]. The measurements by the Tevatron collaborations led to a combined value of \(m_{\textrm{t}} =174.30\pm 0.65\,\text {Ge}\hspace{-.08em}\text {V} \) [9], while the ATLAS and CMS Collaborations measured \(m_{\textrm{t}} =172.69\pm 0.48\,\text {Ge}\hspace{-.08em}\text {V} \) [10] and \(m_{\textrm{t}} =172.44\pm 0.48\,\text {Ge}\hspace{-.08em}\text {V} \) [11], respectively, from the combination of their most precise results at \(\sqrt{s}=7\) and 8 TeV (Run 1). The LHC measurements achieved a relative precision on \(m_{\textrm{t}}\) of 0.28%. These analyses extract \(m_{\textrm{t}}\) by comparing data directly to Monte Carlo simulations for different values of \(m_{\textrm{t}}\). An overview of the discussion of this mass definition and its relationship to a theoretically well-defined parameter is presented in Ref. [12].

In the lepton + jets channel, \(m_{\textrm{t}}\) was measured by the CMS Collaboration with proton–proton (pp) collision data at \(\sqrt{s} = 13\,\text {Te}\hspace{-.08em}\text {V} \). The result of \(m_{\textrm{t}} = 172.25\pm 0.63\,\text {Ge}\hspace{-.08em}\text {V} \) [13] was extracted using the ideogram method [14, 15], which had previously been employed in Run 1 [11]. In contrast to the Run 1 analysis, in the analysis of \(\sqrt{s} = 13\,\text {Te}\hspace{-.08em}\text {V} \) data, the renormalization and factorization scales in the matrix-element (ME) calculation and the scales in the initial- and final-state parton showers (PS) were varied separately, in order to evaluate the corresponding systematic uncertainties. In addition, the impacts of extended models of color reconnection (CR) were evaluated. These models were not available for the Run 1 measurements and their inclusion resulted in an increase in the systematic uncertainty [13].

In this paper, we use a new mass extraction method on the same data, corresponding to 36.3\(\,\text {fb}^{-1}\), that were used in Ref. [13]. In addition to developments in the mass extraction technique, the reconstruction and calibration of the analyzed data have been improved, and updated simulations are used. For example, the underlying event tune CP5 [16] and the jet flavor tagger DeepJet [17] were not available for the previous analysis of these data. The new analysis employs a kinematic fit of the decay products to a \(\hbox {t}\bar{\hbox {t}} \) hypothesis. For each event, the best matching assignment of the jets to the decay products is used. A profile likelihood fit is performed using up to four different observables for events that are well reconstructed by the kinematic fit and one observable for the remaining events. The additional observables are used to constrain the main sources of systematic uncertainty. The model for the likelihood incorporates the effects of variations of these sources, represented by nuisance parameters based on simulation, as well as the finite size of the simulated samples. This reduces the influence of systematic uncertainties on the measurement. Tabulated results are provided in the HEPData record for this analysis [18].

2 The CMS detector and event reconstruction

The central feature of the CMS apparatus is a superconducting solenoid of 6\(\,\text {m}\) internal diameter, which provides a magnetic field of 3.8\(\,\text {T}\). Within the solenoid volume are a silicon pixel and strip tracker, a lead tungstate crystal electromagnetic calorimeter (ECAL), and a brass and scintillator hadron calorimeter (HCAL), each composed of a barrel and two endcap sections. Forward calorimeters extend the pseudorapidity (\(\eta \)) coverage provided by the barrel and endcap detectors. Muons are measured in gas-ionization detectors embedded in the steel flux-return yoke outside the solenoid. A more detailed description of the CMS detector, together with a definition of the coordinate system used and the relevant kinematic variables, can be found in Ref. [19].

The primary vertex is taken to be the vertex corresponding to the hardest scattering in the event, evaluated using tracking information alone, as described in Section 9.4.1 of Ref. [20]. The particle-flow (PF) algorithm [21] aims to reconstruct and identify each individual particle in an event, with an optimized combination of information from the various elements of the CMS detector. The energy of photons is obtained from the ECAL measurement. The energy of electrons is determined from a combination of the electron momentum at the primary interaction vertex as determined by the tracker, the energy of the corresponding ECAL cluster, and the energy sum of all bremsstrahlung photons spatially compatible with originating from the electron track. The energy of muons is obtained from the curvature of the corresponding track. The energy of charged hadrons is determined from a combination of their momentum measured in the tracker and the matching ECAL and HCAL energy deposits, corrected for the response function of the calorimeters to hadronic showers. Finally, the energy of neutral hadrons is obtained from the corresponding corrected ECAL and HCAL energy deposits.

Jets are clustered from PF candidates using the anti-\(k_{{\textrm{T}}}\) algorithm with a distance parameter of 0.4 [22, 23]. The jet momentum is determined as the vectorial sum of all particle momenta in the jet, and is found from simulation to be, on average, within 5 to 10% of the true momentum over the whole transverse momentum (\(p_{{\textrm{T}}}\)) spectrum and detector acceptance. Additional pp interactions within the same or nearby bunch crossings (pileup) can contribute additional tracks and calorimetric energy depositions, increasing the apparent jet momentum. To mitigate this effect, tracks identified as originating from pileup vertices are discarded and an offset correction is applied to correct for remaining contributions. Jet energy corrections are derived from simulation studies so that the average measured energy of jets becomes identical to that of particle level jets. In situ measurements of the momentum balance in dijet, photon + jet, \(\hbox {Z}+\)jet, and multijet events are used to determine any residual differences between the jet energy scale in data and in simulation, and appropriate corrections are made [24]. Additional selection criteria are applied to each jet to remove jets potentially dominated by instrumental effects or reconstruction failures. The jet energy resolution amounts typically to 15–20% at 30\(\,\text {Ge}\hspace{-.08em}\text {V}\), 10% at 100\(\,\text {Ge}\hspace{-.08em}\text {V}\), and 5% at 1 TeV [24]. Jets originating from b quarks are identified using the DeepJet algorithm [17, 25, 26]. This has an efficiency of approximately 78%, at a misidentification probability of 1% for light-quark and gluon jets and 12% for charm-quark jets [17, 26].

The missing transverse momentum vector, \({\vec p}_{{\textrm{T}}}^{\hspace{1.0pt}\text {miss}}\), is computed as the negative vector sum of the transverse momenta of all the PF candidates in an event, and its magnitude is denoted as \(p_{{\textrm{T}}} ^\text {miss}\) [27]. The \({\vec p}_{{\textrm{T}}}^{\hspace{1.0pt}\text {miss}}\) is modified to account for corrections to the energy scale of the reconstructed jets in the event.
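The definition of the missing transverse momentum, and the propagation of jet energy corrections into it, can be sketched as follows. This is a minimal illustration, not CMS software: each PF candidate is reduced to a hypothetical (pT, phi) pair, and the correction is assumed to take the commonly used form in which the change of each corrected jet's transverse momentum vector is subtracted, since the text does not spell out the procedure.

```python
import math


def missing_pt(candidates):
    """Negative vector sum of the transverse momenta of all candidates.

    `candidates` is a list of hypothetical (pt, phi) pairs. Returns
    (px_miss, py_miss, pt_miss).
    """
    sum_px = sum(pt * math.cos(phi) for pt, phi in candidates)
    sum_py = sum(pt * math.sin(phi) for pt, phi in candidates)
    px_miss, py_miss = -sum_px, -sum_py
    return px_miss, py_miss, math.hypot(px_miss, py_miss)


def propagate_jec(px_miss, py_miss, raw_jets, corrected_jets):
    """Subtract the change of each jet's transverse momentum vector.

    raw_jets and corrected_jets are parallel lists of (pt, phi); the jet
    direction is assumed to be unchanged by the correction.
    """
    for (pt_raw, phi), (pt_corr, _) in zip(raw_jets, corrected_jets):
        px_miss -= (pt_corr - pt_raw) * math.cos(phi)
        py_miss -= (pt_corr - pt_raw) * math.sin(phi)
    return px_miss, py_miss
```

Recomputing `missing_pt` with the corrected jet in place of the raw one gives the same vector as `propagate_jec`, which is the consistency such a correction is meant to preserve.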

The momentum resolution for electrons with \(p_{{\textrm{T}}} \approx 45\,\text {Ge}\hspace{-.08em}\text {V} \) from \(\hbox {Z} \rightarrow \hbox {ee}\) decays ranges from 1.6 to 5.0%. It is generally better in the barrel region than in the endcaps, and also depends on the bremsstrahlung energy emitted by the electron as it traverses the material in front of the ECAL [28, 29].

Muons are measured in the pseudorapidity range \(|\eta | < 2.4\), with detection planes made using three technologies: drift tubes, cathode strip chambers, and resistive plate chambers. Matching muons to tracks measured in the silicon tracker results in a relative transverse momentum resolution, for muons with \(p_{{\textrm{T}}}\) up to 100\(\,\text {Ge}\hspace{-.08em}\text {V}\), of 1% in the barrel and 3% in the endcaps. The \(p_{{\textrm{T}}}\) resolution in the barrel is better than 7% for muons with \(p_{{\textrm{T}}}\) up to 1 TeV [30].

3 Data samples and event selection

The analyzed data sample has been collected with the CMS detector in 2016 at a center-of-mass energy \(\sqrt{s} = 13\,\hbox {TeV}\). It corresponds to an integrated luminosity of 36.3\(\,\text {fb}^{-1}\) [31]. Events are required to pass a single-electron trigger with a \(p_{{\textrm{T}}}\) threshold for isolated electrons of 27\(\,\text {Ge}\hspace{-.08em}\text {V}\) or a single-muon trigger with a minimum threshold on the \(p_{{\textrm{T}}}\) of an isolated muon of 24\(\,\text {Ge}\hspace{-.08em}\text {V}\) [32].

Simulated \(\hbox {t}\bar{\hbox {t}} \) signal events are generated with the powheg v2 ME generator [33,34,35], pythia8.219 PS [36], and use the CP5 underlying event tune [16] with top quark mass values, \(m_{\textrm{t}}^\text {gen}\), of 169.5, 172.5, and 175.5\(\,\text {Ge}\hspace{-.08em}\text {V}\). To model parton distribution functions (PDFs), the NNPDF3.1 next-to-next-to-leading order (NNLO) set [37, 38] is used with the strong coupling constant set to \(\alpha _\textrm{S} = 0.118\). The various background samples are simulated with the same ME generators and matching techniques [39,40,41,42,43] as in Ref. [13]. The background processes are \(\hbox {W}/\hbox {Z}\) + jets, single-top, diboson, and events composed uniquely of jets produced through the strong interaction, referred to as quantum chromodynamics (QCD) multijet events. For the background samples, the PS simulation and hadronization are performed with pythia8, using the CUETP8M1 tune [44].

All of the simulated samples are processed through a full simulation of the CMS detector based on Geant4 [45] and are normalized to their predicted cross sections, as described in Refs. [46,47,48,49]. The effects of pileup are included in the simulation and the pileup distribution in simulation is weighted to match the pileup in the data. The jet energy response and resolution in simulated events are corrected to match the data [24]. In addition, the b-jet identification (b tagging) efficiency and misidentification rate [25], and the lepton trigger and reconstruction efficiencies are corrected in simulation [28, 30].

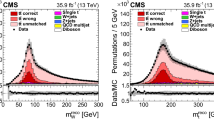

Fig. 1 The top quark mass distribution before (upper) and after (lower) the \(P_\text {gof} > 0.2\) selection and the kinematic fit. For the simulated \(\hbox {t}\bar{\hbox {t}} \) events, the jet-parton assignments are classified as correct, wrong, and unmatched permutations, as described in the text. The uncertainty bands contain statistical uncertainties in the simulation, normalization uncertainties due to luminosity and cross section, jet energy correction uncertainties, and all uncertainties that are evaluated from event-based weights. A large part of the depicted uncertainties in the expected event yields are correlated. The lower panels show the ratio of data to the prediction. A value of \(m_{\textrm{t}}^\text {gen} = 172.5 \,\text {Ge}\hspace{-.08em}\text {V} \) is used in the simulation.

Events are selected with exactly one isolated electron (muon) with \(p_{{\textrm{T}}} > 29\) (26)\(\,\text {Ge}\hspace{-.08em}\text {V}\) and \(|\eta |< 2.4\) that is separated from PF jet candidates with \(\varDelta R=\sqrt{\smash [b]{{(\varDelta \eta )}^2+{(\varDelta \phi )}^2}} >0.3\) (0.4), where \(\varDelta \eta \) and \(\varDelta \phi \) are the differences in pseudorapidity and azimuth (in radians) between the jet and lepton candidate. The four leading jet candidates in each event are required to have \(p_{{\textrm{T}}} >30\,\text {Ge}\hspace{-.08em}\text {V} \) and \(|\eta |<2.4\). Only these four jets are used in further reconstruction. Exactly two b-tagged jets are required among the four selected jets, yielding 287 842 (451 618) candidate events in the electron + jets (muon + jets) decay channel.
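The kinematic part of this selection can be sketched as below, using the thresholds quoted above. It is a simplified illustration under stated assumptions: lepton isolation and identification, the "exactly one lepton" requirement, and the jet quality criteria are not modeled, and all function names and input formats are hypothetical.

```python
import math

# Lepton thresholds quoted in the text: (min pT in GeV, min Delta R to jets)
LEPTON_CUTS = {"e": (29.0, 0.3), "mu": (26.0, 0.4)}
MAX_ABS_ETA = 2.4


def delta_phi(phi1, phi2):
    """Azimuthal difference wrapped into [-pi, pi)."""
    return (phi1 - phi2 + math.pi) % (2.0 * math.pi) - math.pi


def delta_r(eta1, phi1, eta2, phi2):
    """Delta R = sqrt(Delta eta^2 + Delta phi^2)."""
    return math.hypot(eta1 - eta2, delta_phi(phi1, phi2))


def lepton_passes(flavor, pt, eta, phi, jets):
    """pT, |eta|, and jet-separation requirements for one lepton.

    `jets` is a list of (eta, phi) pairs for the PF jet candidates.
    """
    pt_min, dr_min = LEPTON_CUTS[flavor]
    if pt <= pt_min or abs(eta) >= MAX_ABS_ETA:
        return False
    return all(delta_r(eta, phi, j_eta, j_phi) > dr_min for j_eta, j_phi in jets)


def jets_pass(jets, n_btag_required=2):
    """jets: list of (pt, eta, is_btagged), sorted by decreasing pT."""
    if len(jets) < 4:
        return False
    leading = jets[:4]  # only the four leading jets are used further
    if not all(pt > 30.0 and abs(eta) < MAX_ABS_ETA for pt, eta, _ in leading):
        return False
    return sum(1 for _, _, tagged in leading if tagged) == n_btag_required
```

The wrapping in `delta_phi` matters for jets and leptons on opposite sides of phi = ±pi, where a naive difference would overestimate the separation.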

To check the compatibility of an event with the \(\hbox {t}\bar{\hbox {t}} \) hypothesis, and to improve the resolution of the reconstructed quantities, a kinematic fit [50] is performed. For each event, the inputs to the algorithm are the momenta of the lepton and of the four leading jets, \({\vec p}_{{\textrm{T}}}^{\hspace{1.0pt}\text {miss}}\), and the resolutions of these variables. The fit constrains these quantities to the hypothesis that two heavy particles of equal mass are produced, each one decaying to a b quark and a W boson, with the invariant mass of the latter constrained to 80.4\(\,\text {Ge}\hspace{-.08em}\text {V}\). The kinematic fit then minimizes \(\chi ^{2} \equiv ({\textbf{x}}-{\textbf{x}}^{m})^{\textrm{T}}G({\textbf{x}}-{\textbf{x}}^{m})\), where \({\textbf{x}}^{m}\) and \({\textbf{x}}\) are the vectors of the measured and fitted momenta, respectively, and G is the inverse covariance matrix, which is constructed from the uncertainties in the measured momenta. The masses are fixed to 5\(\,\text {Ge}\hspace{-.08em}\text {V}\) for the b quark and to zero for the light quarks and leptons. The two b-tagged jets are candidates for the b quarks in the \(\hbox {t}\bar{\hbox {t}} \) hypothesis, while the two jets that are not b tagged serve as candidates for the light quarks from the hadronically decaying W boson. This leads to two possible parton-jet assignments, each with two solutions for the longitudinal component of the neutrino momentum, and hence to four different permutations per event. For simulated \(\hbox {t}\bar{\hbox {t}} \) events, the parton-jet assignments can be classified as correct permutations, wrong permutations, and unmatched permutations, where, in the latter case, at least one quark from the \(\hbox {t}\bar{\hbox {t}} \) decay is not unambiguously matched within a distance of \(\varDelta R <0.4\) to any of the four selected jets.
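The origin of the two neutrino solutions and of the four permutations can be illustrated with a short sketch. It solves the W boson mass constraint for the longitudinal neutrino momentum, assuming a massless lepton and identifying the neutrino transverse momentum with the missing transverse momentum. It is not the kinematic fit of Ref. [50], which also varies the measured momenta within their resolutions, and the function names are hypothetical.

```python
import math
from itertools import product


def neutrino_pz_solutions(lep, met_x, met_y, m_w=80.4):
    """Both solutions for the neutrino p_z from (l + nu)^2 = m_W^2.

    lep = (px, py, pz) of a massless lepton. If the discriminant is
    negative, the imaginary part is dropped (a common convention, assumed here).
    """
    lpx, lpy, lpz = lep
    e_l2 = lpx**2 + lpy**2 + lpz**2
    pt_l2 = lpx**2 + lpy**2
    pt_nu2 = met_x**2 + met_y**2
    # Quadratic pt_l2 * pz^2 - 2 a lpz * pz + (e_l2 * pt_nu2 - a^2) = 0
    a = 0.5 * m_w**2 + lpx * met_x + lpy * met_y
    centre = a * lpz / pt_l2
    disc = centre**2 - (e_l2 * pt_nu2 - a**2) / pt_l2
    root = math.sqrt(disc) if disc > 0.0 else 0.0
    return centre - root, centre + root


def permutations(b_jets, light_jets, pz_solutions):
    """Enumerate the hypotheses tested per event.

    Swapping the two light jets leaves the hadronic W candidate unchanged,
    so only the choice of which b-tagged jet belongs to the hadronic top
    quark (2 options) times the neutrino solutions (2) remains: 4 in total.
    """
    b1, b2 = b_jets
    assignments = [(b1, b2), (b2, b1)]  # (b for hadronic top, b for leptonic top)
    return [
        {"b_had": b_had, "b_lep": b_lep, "light": tuple(light_jets), "nu_pz": pz}
        for (b_had, b_lep), pz in product(assignments, pz_solutions)
    ]
```

In practice one of the two roots reproduces the true neutrino p_z when the W boson is on shell, but which one is not known event by event; this is why both are kept as separate permutations.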

The goodness-of-fit probability, \(P_\text {gof} = \exp (-\chi ^{2}/2)\), is used to determine the most likely parton-jet assignment. For each event, the observables from the permutation with the highest \(P_\text {gof}\) value are the input to the \(m_{\textrm{t}}\) measurement. In addition, the events are categorized as either \(P_\text {gof} < 0.2\) or \(P_\text {gof} > 0.2\), matching the value chosen in Ref. [13]. Requiring \(P_\text {gof} > 0.2\) yields 87 265 (140 362) \(\hbox {t}\bar{\hbox {t}} \) candidate events in the electron + jets (muon + jets) decay channel, with a predicted signal fraction of 95%. This selection increases the fraction of correctly reconstructed events from 20 to 47%. Figure 1 shows the distribution of the invariant mass of the hadronically decaying top quark candidate before (\(m_{\textrm{t}}^\text {reco}\)) and after (\(m_{\textrm{t}}^\text {fit}\)) the \(P_\text {gof} > 0.2\) selection and the kinematic fit. Because a large part of the depicted uncertainties in the expected event yields are correlated, the overall normalization of the simulation agrees with the data within the uncertainties, although the simulation predicts 10% more events in all distributions. For the final measurement, the simulation is normalized to the number of events observed in data.
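The permutation choice and categorization described above reduce to a few lines once the minimized chi-square of each permutation is known. The sketch below assumes those chi-square values are given; the function names and category labels are hypothetical.

```python
import math

P_GOF_CUT = 0.2  # category boundary used in the text


def p_gof(chi2):
    """Goodness-of-fit probability as defined in the text: exp(-chi2 / 2)."""
    return math.exp(-0.5 * chi2)


def select_event(chi2_per_permutation):
    """Pick the permutation with the highest P_gof and assign its category.

    Returns (best index, best P_gof, category label).
    """
    probs = [p_gof(c) for c in chi2_per_permutation]
    best = max(range(len(probs)), key=probs.__getitem__)
    label = "Pgof>0.2" if probs[best] > P_GOF_CUT else "Pgof<0.2"
    return best, probs[best], label


# Equivalent cut on chi2 of the best permutation: P_gof > 0.2 <=> chi2 < -2 ln 0.2
CHI2_CUT = -2.0 * math.log(P_GOF_CUT)  # about 3.22
```

Because P_gof decreases monotonically with chi-square, picking the highest P_gof is the same as picking the lowest chi-square, and the category boundary corresponds to chi-square of about 3.22.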

4 Observables and systematic uncertainties

Different observables are used per event based on its \(P_\text {gof} \) value. The cases and observables are listed in Table 1.

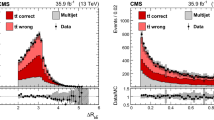

Fig. 2 The distributions of the reconstructed W boson mass for the \(P_\text {gof} > 0.2\) category (upper) and of the invariant mass of the lepton and the jet assigned to the semileptonically decaying top quark for the \(P_\text {gof} < 0.2\) category (lower). The uncertainty bands contain statistical uncertainties in the simulation, normalization uncertainties due to luminosity and cross section, jet energy correction uncertainties, and all uncertainties that are evaluated from event-based weights. A large part of the depicted uncertainties in the expected event yields are correlated. The lower panels show the ratio of data to the prediction. A value of \(m_{\textrm{t}}^\text {gen} = 172.5 \,\text {Ge}\hspace{-.08em}\text {V} \) is used in the simulation.

For events with \(P_\text {gof} > 0.2\), the mass of the top quark candidates from the kinematic fit, \(m_{\textrm{t}}^\text {fit}\), depends very strongly on \(m_{\textrm{t}}\) and is the main observable in this analysis. Only events with \(m_{\textrm{t}}^\text {fit}\) values between 130 and 350\(\,\text {Ge}\hspace{-.08em}\text {V}\) are used in the measurement. The high-mass region is included to constrain the contribution of unmatched events to the peak. For events with \(P_\text {gof} < 0.2\), the invariant mass of the lepton and the b-tagged jet assigned to the semileptonically decaying top quark, \(m_{\ell {\textrm{b}}}^\text {reco}\), is shown in Fig. 2 (lower). For most \(\hbox {t}\bar{\hbox {t}} \) events, a low \(P_\text {gof}\) value is caused by assigning a wrong jet to the W boson candidate, while the correct b-tagged jets are the candidates for the b quark in 60% of these events. Hence, \(m_{\ell {\textrm{b}}}^\text {reco}\) retains a substantial \(m_{\textrm{t}}\) dependence and adds sensitivity to the measurement. The measurement is limited to \(m_{\ell {\textrm{b}}}^\text {reco}\) values between 0 and 300\(\,\text {Ge}\hspace{-.08em}\text {V}\). While a similar observable has routinely been used in \(m_{\textrm{t}}\) measurements in the dilepton channel [51, 52], this is the first application of this observable in the lepton + jets channel.
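As a sketch of how the two mass observables and their fit ranges could be evaluated, the code below computes the invariant mass of summed (E, px, py, pz) four-vectors, for example a lepton and a b-tagged jet for the lepton-b mass, and checks the ranges quoted above. The four-vector layout, the function names, and the half-open treatment of the range edges are assumptions.

```python
import math

# Observable ranges quoted in the text, in GeV
M_T_FIT_RANGE = (130.0, 350.0)  # m_t^fit, P_gof > 0.2 category
M_LB_RANGE = (0.0, 300.0)       # m_lb^reco, P_gof < 0.2 category


def invariant_mass(*four_vectors):
    """Invariant mass of the sum of (E, px, py, pz) four-vectors."""
    e, px, py, pz = (sum(v[i] for v in four_vectors) for i in range(4))
    m2 = e * e - px * px - py * py - pz * pz
    return math.sqrt(max(m2, 0.0))  # guard against tiny negative values from rounding


def in_range(value, bounds):
    """Half-open range check; the treatment of the edges is an assumption."""
    low, high = bounds
    return low <= value < high
```

A typical call would be `m_lb = invariant_mass(lepton_p4, b_jet_p4)`, where both arguments are hypothetical four-vectors of the lepton and of the b-tagged jet assigned to the semileptonically decaying top quark.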

Additional observables are used in parallel for the mass extraction to constrain systematic uncertainties. In previous analyses by the CMS Collaboration in the lepton + jets channel [11, 13], the invariant mass of the two untagged jets before the kinematic fit, \(m_{{\textrm{W}}}^\text {reco}\), has been used together with \(m_{\textrm{t}}^\text {fit}\), mainly to reduce the uncertainty in the jet energy scale and the jet modeling. Its distribution is shown in Fig. 2 (upper), and the region between 63 and 110\(\,\text {Ge}\hspace{-.08em}\text {V}\) is used in the measurement. As \(m_{{\textrm{W}}}^\text {reco}\) is only sensitive to the energy scale and modeling of light-flavor jets, two additional observables are employed to improve the sensitivity to the scale and modeling of jets originating from b quarks. These are the ratio \(m_{\ell {\textrm{b}}}^\text {reco}/m_{\textrm{t}}^\text {fit} \) and the ratio of the scalar sum of the transverse momenta of the two b-tagged jets (\(\hbox {b}1\), \(\hbox {b}2\)) to that of the two non-b-tagged jets (\(\hbox {q}1\), \(\hbox {q}2\)), \(R_{{\textrm{bq}}}^\text {reco} = (p_{{\textrm{T}}} ^{\hbox {b}1} + p_{{\textrm{T}}} ^{\hbox {b}2})/(p_{{\textrm{T}}} ^{\textrm{q}1}+p_{{\textrm{T}}} ^{\textrm{q}2})\). Their distributions are shown in Fig. 3. While \(m_{\textrm{t}}^\text {fit}\) and \(m_{{\textrm{W}}}^\text {reco}\) have been used by the CMS Collaboration in previous analyses in the lepton + jets channel, \(m_{\ell {\textrm{b}}}^\text {reco}\), \(m_{\ell {\textrm{b}}}^\text {reco}/m_{\textrm{t}}^\text {fit} \), and \(R_{{\textrm{bq}}}^\text {reco}\) are new additions. However, \(R_{{\textrm{bq}}}^\text {reco}\) has previously been used in the lepton + jets channel by the ATLAS Collaboration [10, 53].
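The two b-jet-sensitive observables are simple ratios. The sketch below computes them and uses hypothetical jet pT values to illustrate the reasoning above: a common shift of the b-jet energy scale moves the pT-sum ratio by the same fraction, while the two light jets, which are the only inputs to the reconstructed W boson mass, are untouched. The function names are hypothetical.

```python
def r_bq(pt_b1, pt_b2, pt_q1, pt_q2):
    """R_bq^reco: scalar pT sum of the two b-tagged jets over that of the two other jets."""
    return (pt_b1 + pt_b2) / (pt_q1 + pt_q2)


def m_lb_over_m_t_fit(m_lb, m_t_fit):
    """Ratio m_lb^reco / m_t^fit."""
    return m_lb / m_t_fit


# Toy illustration with hypothetical jet pT values in GeV: a 2% shift of the
# b-jet energy scale changes R_bq by 2%, while the light jets are unchanged.
nominal = r_bq(60.0, 40.0, 50.0, 30.0)
shifted = r_bq(60.0 * 1.02, 40.0 * 1.02, 50.0, 30.0)
```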

Fig. 3 The distributions of \(m_{\ell {\textrm{b}}}^\text {reco}/m_{\textrm{t}}^\text {fit} \) (upper) and of \(R_{{\textrm{bq}}}^\text {reco}\) (lower), both for the \(P_\text {gof} > 0.2\) category. The uncertainty bands contain statistical uncertainties in the simulation, normalization uncertainties due to luminosity and cross section, jet energy correction uncertainties, and all uncertainties that are evaluated from event-based weights. A large part of the depicted uncertainties in the expected event yields are correlated. The lower panels show the ratio of data to the prediction. A value of \(m_{\textrm{t}}^\text {gen} = 172.5 \,\text {Ge}\hspace{-.08em}\text {V} \) is used in the simulation.

The distributions of the five observables are affected by uncertainties in the modeling and the reconstruction of the simulated events. These sources of systematic uncertainties are nearly identical to those in the previous measurements [13, 54]. The only difference is that we no longer include a systematic uncertainty related to the choice of ME generator as this uncertainty would overlap with the uncertainty we find from varying the default ME generator parameters. The considered sources are summarized in the categories listed below.

-

Method calibration: In the previous measurements [13, 54], the limited size of the simulated samples for different values of \(m_{\textrm{t}}^\text {gen}\) led to an uncertainty in the calibration of the mass extraction method. In the new profile likelihood approach, the statistical uncertainty in the top quark mass dependence due to the limited sample size is included via nuisance parameters.

-

Jet energy correction (JEC): Jet energies are scaled up and down according to the \(p_{{\textrm{T}}}\)- and \(\eta \)-dependent data/simulation uncertainties [24, 55]. Each of the 25 individual uncertainties in the jet energy corrections is represented by its own nuisance parameter.

-

Jet energy resolution (JER): Since the JER measured in data is worse than in simulation, the simulation is modified to correct for the difference [24, 55]. The jet energy resolution in the simulation is varied up and down within the uncertainty. The variation is evaluated independently for two \(|\eta _{\text {jet}} |\) regions, split at \(|\eta _{\text {jet}} |=1.93\).

-

b tagging: The \(p_{{\textrm{T}}}\)-dependent uncertainties in the b-tagging efficiencies and misidentification rates of the DeepJet tagger [17, 26] are taken into account by reweighting the simulated events accordingly.

-

Pileup: To estimate the uncertainty from the determination of the number of pileup events and the reweighting procedure, the inelastic pp cross section [56] used in the determination is varied by \(\pm 4.6\%\).

-

Background (BG): The main uncertainty in the background stems from the uncertainty in the measurements of the cross sections used in the normalization. The normalization of the background samples is varied by ±10% for the single top quark samples [57, 58], ±30% for the \(\hbox {W}+\)jets samples [59], ±10% for the \(\hbox {Z}+\)jets [60] and for the diboson samples [61, 62], and ±100% for the QCD multijet samples. The size of the variations is the same as in the previous measurement [13] in this channel. The uncertainty in the luminosity of 1.2% [31] is negligible compared to these variations.

-

Lepton scale factors (SFs) and momentum scale: The simulation-to-data scale factors for the trigger, reconstruction, and selection efficiencies for electrons and muons are varied within their uncertainties. In addition, the lepton energy in simulation is varied up and down within its uncertainty.

-

JEC flavor: The difference between Lund string fragmentation and cluster fragmentation is evaluated by comparing pythia 6.422 [63] and herwig++ 2.4 [64]. The jet energy response is compared separately for each jet flavor [24].

-

b-jet modeling (bJES): The uncertainty associated with the fragmentation of b quarks is split into four components. The Bowler–Lund fragmentation function is varied symmetrically within its uncertainties, as determined by the ALEPH and DELPHI Collaborations [65, 66]. The difference between the default pythia setting and the center of the variations is included as an additional uncertainty. As an alternative model of the fragmentation into b hadrons, the Peterson fragmentation function is used, and the difference obtained relative to the Bowler–Lund fragmentation function is assigned as an uncertainty. Finally, the semileptonic b-hadron branching fraction is varied by \({-}0.45\) and \({+}0.77\%\), motivated by measurements of \(\hbox {B}^{0}/\hbox {B}^{+}\) decays and their corresponding uncertainties [8] and by a comparison of the measured branching fractions with those in pythia.

-

PDF: The default PDF set in the simulation, NNPDF3.1 NNLO [37, 38], is replaced with the CT14 NNLO [67] and MMHT 2014 NNLO [68] PDFs via event weights. In addition, the default set is varied with 100 Hessian eigenvectors [38], and the \(\alpha _\textrm{S} \) value is changed to 0.117 and 0.119. All described variations are evaluated for their impact on the measurement, and variations with negligible impact are subsequently omitted to reduce the number of nuisance parameters.

-

Renormalization and factorization scales: The renormalization and factorization scales for the ME calculation are varied independently and simultaneously by factors of 2 and 1/2. This is achieved by reweighting the simulated events. The independent variations were checked, and it was found sufficient to include only the simultaneous variations as a nuisance parameter.

-

ME to PS matching: The matching of the powheg ME calculations to the pythia PS is varied by shifting the parameter \(h_{\text {damp}}=1.58^{+0.66}_{-0.59}\) [69] within its uncertainty.

-

ISR and FSR: For initial-state radiation (ISR) and final-state radiation (FSR), 32 decorrelated variations of the renormalization scale and nonsingular terms for each branching type (\({\textrm{g}}\rightarrow {\textrm{gg}}\), \({\textrm{g}}\rightarrow \textrm{q}\bar{\textrm{q}}\), \(\textrm{q}\rightarrow {\textrm{qg}}\), and \({\textrm{X}} \rightarrow {\textrm{Xg}}\) with \({\textrm{X}}= \hbox {t}\text { or }\hbox {b}\)) are applied using event weights [70]. The scale variations correspond to a change of the respective PS scale in pythia by factors of 2 and 1/2. This approach is new compared to the previous analysis [13], which only evaluated correlated changes in the FSR and ISR PS scales.

-

Top quark \(p_{{\textrm{T}}}\): Recent calculations suggest that the top quark \(p_{{\textrm{T}}}\) spectrum is strongly affected by NNLO effects [71,72,73]. The \(p_{{\textrm{T}}}\) of the top quark in simulation is varied to match the distribution measured by CMS [74, 75]. The default simulation is not corrected for this effect, but this variation is included via a nuisance parameter in the \(m_{\textrm{t}}\) measurement.

-

Underlying event: Measurements of the underlying event have been used to tune pythia parameters describing nonperturbative QCD effects [16, 44]. The parameters of the tune are varied within their uncertainties.

-

Early resonance decays: Modeling of color reconnection introduces systematic uncertainties, which are estimated by comparing different CR models and settings. In the default sample, the top quark decay products are not included in the CR process. This setting is compared to the case of including the decay products by enabling early resonance decays in pythia8.

-

CR modeling: In addition to the default model used in pythia8, two alternative CR models are used, namely a model with string formation beyond leading color (“QCD inspired”) [76] and a model allowing the gluons to be moved to another string (“gluon move”) [77]. Underlying event measurements are used to tune the parameters of all models [78]. For each model, an individual nuisance parameter is introduced.
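The background normalization variations listed under Background (BG) can be expressed as nuisance-parameter-dependent scale factors, using the convention of Sect. 5 that a nuisance value of 0 is nominal and ±1 is a one-standard-deviation shift. The linear form and the clipping at zero are assumptions of this sketch, and the process keys are hypothetical labels.

```python
# Relative normalization uncertainties (one standard deviation) quoted in the text
BG_NORM_UNC = {
    "single_top": 0.10,
    "w_jets": 0.30,
    "z_jets": 0.10,
    "diboson": 0.10,
    "qcd_multijet": 1.00,
}


def varied_yields(nominal, theta):
    """Scale nominal background yields by (1 + delta * theta) per process.

    theta maps a process name to its nuisance value in units of one standard
    deviation (0 = nominal); missing entries are treated as 0. Yields are
    clipped at zero, so a -1 sigma QCD multijet variation removes that sample.
    """
    return {
        name: max(0.0, n * (1.0 + BG_NORM_UNC[name] * theta.get(name, 0.0)))
        for name, n in nominal.items()
    }
```

Since the fit uses only shape information, such a variation matters through the change in the signal-to-background composition of each histogram rather than through the overall yield.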

5 Mass extraction method

A maximum likelihood (ML) fit to the selected events is employed to measure \(m_{\textrm{t}}\). The evaluated likelihood ratio \(\lambda (m_{\textrm{t}}, \vec {\theta }, \vec {\beta }, \varOmega | \text {data})\) depends not only on \(m_{\textrm{t}}\), but also on three sets of nuisance parameters. The systematic nuisance parameters, \(\vec {\theta }\), incorporate the uncertainties in systematic effects, while the statistical nuisance parameters, \(\vec {\beta }\) and \(\varOmega \), incorporate the statistical uncertainties in the simulation. The parameters \(\vec {\beta }\) account for the limited size of the default simulated sample, and the parameters \(\varOmega \) account for the limited size of the simulated samples for variations of \(m_{\textrm{t}}\) and of the uncertainty sources. All nuisance parameters are normalized such that a value of 0 represents the absence of the systematic effect and the values \(\pm 1\) correspond to a variation of the systematic effect by one standard deviation up or down. The RooFit [79] package is used to define and evaluate all the functions. The minimum of \(-2 \ln \lambda (m_{\textrm{t}}, \vec {\theta }, \vec {\beta }, \varOmega | \text {data})\) is found with the Minuit2 package [80].
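The profiling idea can be illustrated with a toy, pure-Python example: a hypothetical three-bin shape model that depends linearly on the mass and on one systematic nuisance parameter, a multinomial shape likelihood, and a unit-Gaussian constraint term that encodes the "±1 is one standard deviation" convention. A golden-section search stands in for Minuit2, and RooFit is not used; all numbers and names are illustrative assumptions, not the analysis model.

```python
import math

# Hypothetical three-bin shape model, linear in m_t and in one nuisance theta.
MT_REF = 172.5                  # default m_t^gen in GeV
F0 = (0.30, 0.40, 0.30)         # nominal bin fractions (sum to 1)
S_MT = (-0.010, 0.000, 0.010)   # change of each fraction per GeV (sum to 0)
S_TH = (0.020, -0.030, 0.010)   # change per unit theta, i.e. per 1 sigma (sum to 0)


def fractions(mt, theta):
    """Bin fractions for given m_t and theta; they sum to 1 by construction."""
    return [f + s * (mt - MT_REF) + t * theta for f, s, t in zip(F0, S_MT, S_TH)]


def nll2(mt, theta, counts):
    """-2 ln L: multinomial shape term plus a unit-Gaussian constraint on theta."""
    fr = fractions(mt, theta)
    if min(fr) <= 0.0:
        return math.inf
    return -2.0 * sum(n * math.log(f) for n, f in zip(counts, fr)) + theta ** 2


def golden_min(func, low, high, tol=1e-7):
    """Golden-section search for the minimum of a unimodal function on [low, high]."""
    g = (math.sqrt(5.0) - 1.0) / 2.0
    a, b = low, high
    c, d = b - g * (b - a), a + g * (b - a)
    while b - a > tol:
        if func(c) < func(d):
            b, d = d, c
            c = b - g * (b - a)
        else:
            a, c = c, d
            d = a + g * (b - a)
    x = 0.5 * (a + b)
    return x, func(x)


def profiled_nll2(mt, counts):
    """Profile out theta at fixed m_t."""
    return golden_min(lambda th: nll2(mt, th, counts), -5.0, 5.0)[1]


def fit_mt(counts):
    """Return the m_t value minimizing the profiled -2 ln L."""
    return golden_min(lambda m: profiled_nll2(m, counts), 165.0, 180.0)[0]
```

Fitting pseudo-data built from `fractions(173.0, 0.0)` returns a mass close to 173 GeV. Because the mass and nuisance templates are partly correlated here, profiling theta widens the mass interval relative to fixing theta at 0, which is the effect the nuisance parameters are meant to capture.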

The data are characterized by up to four observables per event, as mentioned in Sect. 4. The events are split into the electron + jets and the muon + jets channels. The input to the ML fit is a set of one-dimensional histograms of the observables, \(x_i\), in the two \(P_\text {gof}\) categories. For each histogram, a suitable probability density function \(P(x_i |m_{\textrm{t}}, \vec {\theta }, \vec {\beta }, \varOmega )\) is derived from simulation.

The probability density function for the \(m_{\textrm{t}}^\text {fit}\) histograms is approximated by the sum of a Voigt profile (the convolution of a Cauchy–Lorentz distribution and a Gaussian distribution) for the correctly reconstructed \(\hbox {t}\bar{\hbox {t}} \) candidates and Chebyshev polynomials for the remaining events. For all other observables, a binned probability density function is used that returns the relative fraction of events per histogram bin. Here, eight bins are used for each observable, and the bin widths are chosen so that each bin contains a similar number of selected events in the default simulation (\(m_{\textrm{t}}^\text {gen} = 172.5\,\text {Ge}\hspace{-.08em}\text {V} \)). In the following, we denote the parameters of the probability density functions as \(\vec {\alpha } \). All the functions \(P_i(x_i | \vec {\alpha })\) are normalized to the number of events in the histogram for the observable \(x_i\), so only shape information is used in the ML fit. Hence, the parameters \(\vec {\alpha } \) are correlated even for the binned probability density function. The dependence of these parameters on \(m_{\textrm{t}}\) and \(\vec {\theta }\) is assumed to be linear. The full expression for a component \(\alpha _k\) of \(\vec {\alpha }\) is

with l indicating the nuisance parameter. For the nuisance parameters corresponding to the FSR PS scale variations, the linear term, \(s_k^l \ \theta _l\), is replaced with a second-order polynomial. With these expressions for the parameters \(\alpha _k\), the probability density function, \(P_i(x_i | \vec {\alpha })\), for an observable \(x_i\) becomes the function \(P_i(x_i | m_{\textrm{t}}, \vec {\theta }, \vec {\beta }, \varOmega )\) mentioned above.
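The equal-population binning used for the binned probability density functions can be illustrated with a short Python sketch. It assumes that the inner bin edges are taken from empirical quantiles of the observable in the default simulation, with the outer edges at the sample minimum and maximum; the function names and the Gaussian toy sample are hypothetical and not part of the analysis software.

```python
import bisect
import random


def equal_population_edges(values, n_bins=8):
    """Return n_bins + 1 edges so that each bin holds ~len(values)/n_bins entries.

    Inner edges sit at empirical quantiles of `values` (assumed to be the
    observable evaluated on the default simulation); the outer edges are the
    sample minimum and maximum.
    """
    ordered = sorted(values)
    n = len(ordered)
    edges = [ordered[0]]
    for b in range(1, n_bins):
        edges.append(ordered[(b * n) // n_bins])
    edges.append(ordered[-1])
    return edges


def bin_fractions(values, edges):
    """Relative fraction of events per bin, i.e. the binned shape used in the fit."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        # bisect_right - 1 gives the bin index; the maximum is clamped into the last bin
        idx = min(bisect.bisect_right(edges, v) - 1, len(counts) - 1)
        if idx >= 0:
            counts[idx] += 1
    total = sum(counts)
    return [c / total for c in counts]


if __name__ == "__main__":
    rng = random.Random(1)
    # Toy stand-in for one observable in the default simulation
    sim = [rng.gauss(84.0, 10.0) for _ in range(8000)]
    edges = equal_population_edges(sim)
    print([round(f, 3) for f in bin_fractions(sim, edges)])
```

Applied to the toy sample itself, every bin receives one eighth of the events by construction; the model then describes how these per-bin fractions change with \(m_{\textrm{t}}\) and the nuisance parameters.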

The model parameter \(\alpha _k^0\) is determined by a fit to the default simulation, while the linear dependencies of \( \alpha _k\) on \(m_{\textrm{t}}\) or a component \( \theta _l\) of \(\vec {\theta }\) are expressed with the model parameters \(s_k^0\) and \(s_k^l\), respectively. The parameter \(s_k^0\) is determined from a simultaneous fit to simulated samples, where \(m_{\textrm{t}}^\text {gen}\) is varied by ±3\(\,\text {Ge}\hspace{-.08em}\text {V}\) from the default value. Along the same lines, the parameters \(s_k^l\) are obtained from fits to the simulation of the systematic effect corresponding to the nuisance parameter \(\theta _l\). The values of \(C_k\) and \(d_k\) are chosen ad hoc so that the results of the fits of \(\alpha _k^0\), \(s_k^0\), and the \(s_k^l\) are all of the same order of magnitude and with a similar statistical uncertainty. This improves the numerical stability of the final ML fit.
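As a simplified stand-in for the simultaneous fit to the \(m_{\textrm{t}}^\text {gen}\)-varied samples, one can picture a weighted straight-line fit of a single model parameter against \(m_{\textrm{t}}^\text {gen}\). In this Python sketch the intercept at 172.5\(\,\text {Ge}\hspace{-.08em}\text {V}\) plays the role of \(\alpha _k^0\), the slope that of \(s_k^0\), and the standard deviation of the slope that of \(\sigma _k^0\). The per-sample input values and their uncertainties are assumed to come from separate fits, whereas the analysis fits the samples simultaneously; the function name is hypothetical.

```python
import math


def weighted_line_fit(m_gen, values, sigmas, m_ref=172.5):
    """Weighted least-squares fit of values = a + b * (m_gen - m_ref).

    Returns (a, b, sigma_a, sigma_b): a ~ alpha_k^0, b ~ s_k^0 (per GeV),
    sigma_b ~ sigma_k^0 in the language of the text.
    """
    w = [1.0 / s**2 for s in sigmas]
    u = [m - m_ref for m in m_gen]
    S = sum(w)
    Su = sum(wi * ui for wi, ui in zip(w, u))
    Suu = sum(wi * ui * ui for wi, ui in zip(w, u))
    Sy = sum(wi * yi for wi, yi in zip(w, values))
    Suy = sum(wi * ui * yi for wi, ui, yi in zip(w, u, values))
    det = S * Suu - Su**2
    a = (Suu * Sy - Su * Suy) / det
    b = (S * Suy - Su * Sy) / det
    # Standard errors of intercept and slope for known per-point uncertainties
    return a, b, math.sqrt(Suu / det), math.sqrt(S / det)


if __name__ == "__main__":
    # Toy values of one parameter in samples with m_t^gen = 169.5, 172.5, 175.5 GeV
    print(weighted_line_fit([169.5, 172.5, 175.5], [0.4, 1.0, 1.6], [0.1, 0.1, 0.1]))
```

For three samples at 169.5, 172.5, and 175.5\(\,\text {Ge}\hspace{-.08em}\text {V}\) with identical uncertainties \(\sigma \), the slope uncertainty reduces to \(\sigma /\sqrt{18}\), since \(\sum (m - 172.5)^2 = 18\,\text {Ge}\hspace{-.08em}\text {V}^2\).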

The limited size of the simulated samples for different \(m_{\textrm{t}}^\text {gen}\) values gives rise to a calibration uncertainty in \(m_{\textrm{t}}\). Hence, additional statistical nuisance parameters, \(\beta _k\) and \(\varOmega _k^0\), are introduced that account for the statistical uncertainty in the model parameters \(\alpha _k^0\) and \(s_k^0\), similar to the Barlow–Beeston approach [81, 82]. They are scaled by \(\sigma ^\alpha _k\) and \(\sigma _k^0\), which are the standard deviations of \(\alpha _k^0\) and \(s_k^0\) obtained from the fits to the default simulation or the \(m_{\textrm{t}}^\text {gen}\)-varied samples, respectively. Hence, a statistical nuisance parameter with value \(\varOmega _k^0=\pm 1\) changes the corresponding \(\alpha _k\), i.e., the shape or the bin content of an observable, by the statistical uncertainty in the \(m_{\textrm{t}}\) dependence evaluated at a shift in \(m_{\textrm{t}}\) of 1\(\,\text {Ge}\hspace{-.08em}\text {V}\). Similarly, the parameters \(s_k^l\) contain random fluctuations if they are determined from simulated samples of limited size that are statistically independent of the default simulation. These fluctuations can lead to overconstraints on the corresponding nuisance parameter \(\theta _l\) and, hence, to an underestimation of the systematic uncertainty. The nuisance parameters \(\varOmega _k^l\) are added to counter these effects and are scaled by parameters \(\sigma _k^l\), which are the standard deviations of the \(s_k^l\) parameters from the fits to the corresponding samples for the systematic effect. As in the \(m_{\textrm{t}}\) case, a value of \(\varOmega _k^l=\pm 1\) changes the corresponding \(\alpha _k\) by the statistical uncertainty in the \(\theta _l\) dependence evaluated at a shift in \(\theta _l\) of 1. Unlike the systematic nuisance parameters \(\theta _l\), which affect all \(\alpha _k\) collectively, there are individual \(\varOmega _k^l\) parameters for each \(\alpha _k\). While this drastically increases the number of fitted parameters in the ML fit to data, it also ensures that the \(\varOmega _k^l\) parameters are hardly constrained by the fit to data and that the uncertainty in \(m_{\textrm{t}}\) includes the statistical uncertainty in the impact of the systematic effect.
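The linear parameter model with its statistical nuisance parameters can be written as a small Python class. This sketch assumes the form \(\alpha _k = C_k(\alpha _k^0 + \beta _k\sigma ^\alpha _k + (s_k^0 + \varOmega _k^0\sigma _k^0)(m_{\textrm{t}} - 172.5\,\text {Ge}\hspace{-.08em}\text {V}) + \sum _l (s_k^l + \varOmega _k^l\sigma _k^l)\theta _l) + d_k\), adds a hypothetical quadratic coefficient for the FSR PS scale terms, and uses illustrative names (`ParamModel`, `value`, the dictionary keys) that do not come from the analysis code.

```python
from dataclasses import dataclass, field

M_REF = 172.5  # GeV, m_t^gen of the default simulation


@dataclass
class ParamModel:
    """Hypothetical model of one probability-density-function parameter alpha_k."""

    alpha0: float       # alpha_k^0 from the fit to the default simulation
    sigma_alpha: float  # std. dev. of alpha_k^0, scales beta_k
    s0: float           # slope s_k^0 in m_t (per GeV)
    sigma_s0: float     # std. dev. of s_k^0, scales Omega_k^0
    slopes: dict = field(default_factory=dict)        # l -> s_k^l
    sigma_slopes: dict = field(default_factory=dict)  # l -> sigma_k^l
    quad: dict = field(default_factory=dict)  # l -> 2nd-order coefficient (FSR, hypothetical)
    C: float = 1.0  # scale C_k
    d: float = 0.0  # offset d_k

    def value(self, mt, theta, beta=0.0, omega0=0.0, omega=None):
        """alpha_k for m_t in GeV, theta as {l: value}, beta_k, Omega_k^0, {l: Omega_k^l}."""
        omega = omega or {}
        inner = self.alpha0 + beta * self.sigma_alpha
        inner += (self.s0 + omega0 * self.sigma_s0) * (mt - M_REF)
        for l, th in theta.items():
            slope = self.slopes.get(l, 0.0) + omega.get(l, 0.0) * self.sigma_slopes.get(l, 0.0)
            inner += slope * th + self.quad.get(l, 0.0) * th * th
        return self.C * inner + self.d


if __name__ == "__main__":
    model = ParamModel(alpha0=1.0, sigma_alpha=0.1, s0=0.5, sigma_s0=0.05,
                       slopes={"cr": 0.2, "fsr_qqg": 0.3}, sigma_slopes={"cr": 0.04},
                       quad={"fsr_qqg": 0.1})
    print(model.value(173.5, {"cr": 1.0}))
```

With \(C_k = 1\), setting `omega0=1` at \(m_{\textrm{t}} = 173.5\,\text {Ge}\hspace{-.08em}\text {V}\) shifts the value by exactly `sigma_s0`, mirroring the statement that \(\varOmega _k^0=\pm 1\) corresponds to the statistical uncertainty in the \(m_{\textrm{t}}\) dependence at a 1\(\,\text {Ge}\hspace{-.08em}\text {V}\) shift.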

For a single histogram in a set, the products of Poisson probabilities for the prediction \(\mu _{i,j} = n_{\text {tot},i}P_i(x_{i,j}|m_{\textrm{t}}, \vec {\theta }, \vec {\beta }, \varOmega )\) and for an alternative model with an adjustable parameter per bin, \({{\hat{\mu }}}_{i,j} = n_{i,j}\), are used to compute the likelihood ratio \(\lambda _i\) [8], where \(x_i\) is the observable, \(n_{i,j}\) is the content of bin j with bin center \(x_{i,j}\), and \(n_{\text {tot},i}\) is the total number of entries. Then the combined likelihood ratio for a set with observables \(\vec {x}\) is
\[ \lambda (m_{\textrm{t}}, \vec {\theta }, \vec {\beta }, \varOmega | \text {data}) = \prod _i \lambda _i(m_{\textrm{t}}, \vec {\theta }, \vec {\beta }, \varOmega | x_i) \, \prod _l P(\theta _l) \, P(\vec {\beta }) \, P(\varOmega ), \]

where \(P(\theta _l)\), \(P(\vec {\beta })\), and \(P(\varOmega )\) are the pre-fit probability density functions of the nuisance parameters \(\theta _l\), \(\vec {\beta } \), and \(\varOmega \). The product of the likelihood ratios can be used on the right-hand side of the equation, as all observables are independent in most phase space regions. The probability density functions of the nuisance parameters related to the sources of systematic uncertainties, \(P(\theta _l)\), are standard normal distributions. All model parameters \(\alpha _k^0\), \(s_k^0\), or \(s_k^l\) that are related to the same observable and nuisance parameter are obtained together by one fit to the corresponding simulation samples. To take the correlations between these parameters into account, the statistical nuisance parameters \(\vec {\beta } \) or \(\varOmega \) that incorporate the statistical uncertainty in these parameters are constrained by centered multivariate normal distributions. The covariances of the distributions are set to the correlation matrices obtained by the fits of the corresponding parameters. The latter nuisance parameters and constraints are only included if the model parameters are determined from samples that are statistically independent of the default simulation, as, for example, for the alternative color reconnection models. If the model parameters are determined from samples obtained by varying the default simulation with event-based weights or by scaling or smearing the jet energies, the corresponding \(\varOmega \) parameters are fixed to zero and the constraint is removed from \(\lambda (m_{\textrm{t}}, \vec {\theta }, \vec {\beta }, \varOmega | \text {data})\).
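Writing out the ratio of Poisson probabilities gives \(-2\ln \lambda _i = 2\sum _j [\mu _{i,j} - n_{i,j} + n_{i,j}\ln (n_{i,j}/\mu _{i,j})]\), with the logarithmic term set to zero for empty bins. The Python sketch below combines this with the constraint terms, taking \(-2\ln P(\theta _l) = \theta _l^2\) and \(-2\ln P = \vec {v}^{\,T} R^{-1}\vec {v}\) for a group \(\vec {v}\) of statistical nuisance parameters with correlation matrix \(R\), both up to additive constants. It is a plain-Python illustration under these assumptions, not the RooFit implementation used in the analysis; all function names are hypothetical.

```python
import math


def neg2_log_lambda_hist(counts, probs):
    """-2 ln lambda_i for one histogram.

    counts: observed bin contents n_ij
    probs:  model probabilities per bin (summing to 1), so mu_ij = n_tot * p_j
    """
    n_tot = sum(counts)
    total = 0.0
    for n, p in zip(counts, probs):
        mu = n_tot * p
        total += mu - n
        if n > 0:  # n ln(n/mu) -> 0 for an empty bin
            total += n * math.log(n / mu)
    return 2.0 * total


def _cholesky(a):
    """Lower-triangular L with a = L L^T for a symmetric positive-definite a."""
    n = len(a)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(a[i][i] - s) if i == j else (a[i][j] - s) / L[j][j]
    return L


def mvn_penalty(v, corr):
    """v^T corr^-1 v: -2 ln of a centered multivariate normal, up to a constant."""
    L = _cholesky(corr)
    z = []
    for i in range(len(v)):  # forward substitution L z = v, so v^T corr^-1 v = z.z
        z.append((v[i] - sum(L[i][k] * z[k] for k in range(i))) / L[i][i])
    return sum(zi * zi for zi in z)


def neg2_log_lambda_total(histograms, theta, stat_groups):
    """Sum over histograms plus the nuisance-parameter constraint terms.

    histograms:  list of (counts, probs) pairs, one per observable x_i
    theta:       values of the systematic nuisance parameters theta_l
    stat_groups: list of (values, correlation matrix) for beta/Omega groups
    """
    total = sum(neg2_log_lambda_hist(c, p) for c, p in histograms)
    total += sum(t * t for t in theta)
    total += sum(mvn_penalty(v, r) for v, r in stat_groups)
    return total
```

The histogram term vanishes when the model reproduces every bin exactly, and the correlated constraint penalizes two statistical nuisance parameters that move together less than the same values would be penalized if they were uncorrelated and the correlation were negative.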

The mass of the top quark is determined with the profile likelihood fit for different sets of data histograms. The sets and their labels are listed in Table 1.

The expected total uncertainty in \(m_{\textrm{t}}\) is evaluated for each set defined in Table 1 with pseudo-experiments using the default simulation. The results of the pseudo-experiments are shown in Fig. 4 (upper). The improvements in the data reconstruction and calibration, event selection, simulation, and mass extraction method reduce the uncertainty in the 1D measurement from 1.09 to 0.63\(\,\text {Ge}\hspace{-.08em}\text {V}\), when compared to the previous measurement [13]. The uncertainty in the 2D measurement improves from 0.63 to 0.50\(\,\text {Ge}\hspace{-.08em}\text {V}\). The additional observables and the split into categories further reduce the expected uncertainty down to 0.37\(\,\text {Ge}\hspace{-.08em}\text {V}\) for the 5D set.

The statistical uncertainty is obtained from fits that only have \(m_{\textrm{t}}\) as a free parameter. From simulation studies, it is expected to be 0.07, 0.06, and 0.04\(\,\text {Ge}\hspace{-.08em}\text {V}\) in the electron + jets, muon + jets, and combined lepton + jets channels, respectively.

Upper: Comparison of the expected total uncertainty in \(m_{\textrm{t}}\) in the combined lepton + jets channel and for the different observable-category sets defined in Table 1. Lower: The difference between the measured and generated \(m_{\textrm{t}}\) values, divided by the uncertainty reported by the fit from pseudo-experiments without (red) or with (blue) the statistical nuisance parameters \(\vec {\beta }\) and \(\varOmega \) in the 5D ML fit. Also included in the legend are the \(\mu \) and \(\sigma \) parameters of Gaussian functions (red and blue lines) fit to the histograms

The applied statistical model is verified with additional pseudo-experiments. Here, the data for one pseudo-experiment are generated using probability density functions \(P(x_i |m_{\textrm{t}}, \vec {\theta })\) that have the same functional form as the ones used in the ML fit, but whose model parameters \(\vec {\alpha }\) and slopes \(s_k^l\) are determined from statistically fluctuated simulations. For the generation of a pseudo-experiment, \(m_{\textrm{t}}\) is drawn from a uniform distribution with a mean of 172.5\(\,\text {Ge}\hspace{-.08em}\text {V}\) and the same standard deviation as is assumed for the calibration uncertainty. The values of the nuisance parameters \(\vec {\theta }\) are drawn from standard normal distributions. The same ML fit that is applied to the collider data is then performed on the pseudo-data, in two configurations: with and without the statistical nuisance parameters \(\vec {\beta }\) and \(\varOmega \) in the ML fit. Figure 4 (lower) shows the distribution of the differences between the measured and generated \(m_{\textrm{t}}\) values, divided by the uncertainty reported by the fit, for both cases. Without the statistical nuisance parameters \(\vec {\beta }\) and \(\varOmega \), the measurement uncertainty is underestimated by nearly 40%, while consistency is observed for the method that is employed on data.
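The logic of this pull test can be illustrated with a toy in Python. Here the ML fit is replaced by a Gaussian smearing of the generated mass with a true spread, while a possibly different uncertainty is reported. The generated mass is drawn from a uniform distribution centered on 172.5\(\,\text {Ge}\hspace{-.08em}\text {V}\) whose standard deviation is set by the hypothetical parameter `calib_sigma`, which fixes its half-width to \(\sqrt{3}\) times that value. The sample mean and standard deviation of the pulls are used instead of a Gaussian fit, and all numbers are illustrative.

```python
import math
import random
import statistics


def pull_study(n_exp, true_sigma, reported_sigma, calib_sigma=0.1, seed=42):
    """Return (mean, std. dev.) of pulls (m_meas - m_gen) / reported_sigma.

    m_gen is drawn uniformly around 172.5 GeV with standard deviation
    calib_sigma (half-width sqrt(3) * calib_sigma); the toy 'fit result'
    m_meas scatters around m_gen with true_sigma.
    """
    rng = random.Random(seed)
    half_width = math.sqrt(3.0) * calib_sigma
    pulls = []
    for _ in range(n_exp):
        m_gen = rng.uniform(172.5 - half_width, 172.5 + half_width)
        m_meas = rng.gauss(m_gen, true_sigma)
        pulls.append((m_meas - m_gen) / reported_sigma)
    return statistics.mean(pulls), statistics.stdev(pulls)


if __name__ == "__main__":
    print(pull_study(20000, true_sigma=0.5, reported_sigma=0.5))  # consistent case
    print(pull_study(20000, true_sigma=0.5, reported_sigma=0.3))  # underestimated case
```

If the reported uncertainty is only 60% of the true spread, the pull width comes out near \(1/0.6 \approx 1.67\) instead of 1; a correctly calibrated fit yields pulls with zero mean and unit width.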

In addition, single-parameter fits were performed on pseudo-data sampled from simulation to verify that the mass extraction method is unbiased and reports the correct uncertainty. These tests were done for fits of \(m_{\textrm{t}}\) with samples corresponding to mass values of 169.5, 172.5, and 175.5\(\,\text {Ge}\hspace{-.08em}\text {V}\), as well as on the simulation of different systematic effects for the fits of the corresponding nuisance parameter.

6 Results

The results of the profile likelihood fits to data are shown in Fig. 5 for the electron + jets, muon + jets, and lepton + jets channels and for the different sets of observables and categories, as defined in Table 1. The observables \(m_{{\textrm{W}}}^\text {reco}\), \(m_{\ell {\textrm{b}}}^\text {reco}/m_{\textrm{t}}^\text {fit} \), and \(R_{{\textrm{bq}}}^\text {reco}\) provide constraints on the modeling of the \(\hbox {t}\bar{\hbox {t}} \) decays in addition to the observables \(m_{\textrm{t}}^\text {fit}\) and \(m_{\ell {\textrm{b}}}^\text {reco} |_{P_\text {gof} <0.2}\), which are highly sensitive to \(m_{\textrm{t}}\). With the profile likelihood method, these constraints not only reduce the uncertainty in \(m_{\textrm{t}}\), but also change the measured \(m_{\textrm{t}}\) value, as they effectively alter the parameters of the reference \(\hbox {t}\bar{\hbox {t}} \) simulation. When additional observables are included, the measurement in the lepton + jets channel yields a smaller mass value than the separate channels because of the correlations between the channels.

Measurement of \(m_{\textrm{t}}\) in the three different channels for the different sets of observables and categories as defined in Table 1

The 5D fit to the selected events results in the best precision and yields in the respective channels:

The comparisons of the data distributions and the post-fit 5D model are shown in Fig. 6. While the binned probability density functions of the model describe the corresponding observables well, significant deviations between the data and the model can be observed in the peak region of the \(m_{\textrm{t}}^\text {fit}\) observable. These deviations are also observed in simulation and stem from the fact that effectively only two parameters, the peak position and its average width, are used in the model to describe the peak. Tests with simulation show that these deviations do not bias the extracted \(m_{\textrm{t}}\) value. In fact, the small number of parameters should increase the robustness of the measurement, as the model is not sensitive to finer details of the peak shape that might be difficult to simulate correctly.

Distribution of \(m_{\textrm{t}}^\text {fit}\) (upper) and the additional observables (lower) that are the input to the 5D ML fit and their post-fit probability density functions for the combined fit to the electron+jets (left) and muon+jets (right) channels. For the additional observables results, the events in each bin are divided by the bin width. The lower panels show the ratio of data and post-fit template values. The green and yellow bands represent the 68 and 95% confidence levels in the fit uncertainty

Measurement of \(m_{\textrm{t}}\) in the combined lepton + jets channel using the 5D set of observables and categories. The left plot shows the post-fit pulls on the most important nuisance parameters and the numbers quote the post-fit uncertainty in the nuisance parameter. The right plot shows their pre-fit (lighter colored bars) and post-fit impacts (darker colored bars) on \(m_{\textrm{t}}\) for up (red) and down (blue) variations. The post-fit impacts of systematic effects that are affected by the limited size of simulation samples include the contribution from the additional statistical nuisance parameters accounting for the effect. The size of the additional contribution from the statistical nuisance parameters is called MC stat. and shown as gray-dotted areas. The average of the post-fit impacts in \(\,\text {Ge}\hspace{-.08em}\text {V}\) for up and down variations is printed on the right. The rows are sorted by the size of the averaged post-fit impact. The statistical uncertainty in \(m_{\textrm{t}}\) is depicted in the corresponding row

Figure 7 shows the pulls on the most important systematic nuisance parameters \(\theta \) and their impacts on \(m_{\textrm{t}}\), \(\varDelta m_{\textrm{t}} \), after the fit with the 5D model. The pulls are defined as \(({\hat{\theta }}- \theta _0)/\varDelta \theta \), where \({\hat{\theta }}\) is the measured nuisance parameter value and \(\theta _0\) and \(\varDelta \theta \) are the mean and standard deviation of the nuisance parameter before the fit. The pre-fit impacts are evaluated by repeating the ML fit with the studied nuisance parameter \(\theta \) fixed to \({\hat{\theta }} \pm \varDelta \theta \) and taking the difference in \(m_{\textrm{t}}\) between the result of these fits and the measured \(m_{\textrm{t}}\). In most cases, the post-fit impacts are evaluated in the same way, but with \(\theta \) fixed to \({\hat{\theta }} \pm \widehat{\varDelta \theta }\), where \(\widehat{\varDelta \theta }\) is the uncertainty in the nuisance parameter after the fit. However, if the studied systematic nuisance parameter \(\theta \) has statistical nuisance parameters \(\varOmega \) that account for the statistical uncertainty in the \(\theta \)-dependence of the model, the combined impact of the systematic and statistical nuisance parameters is plotted in Fig. 7 as the post-fit impact. To estimate this combined impact, the likelihood fit is repeated with the corresponding systematic and statistical nuisance parameters fixed to their post-fit values, and the quadratic difference between the overall \(m_{\textrm{t}}\) uncertainties of the default fit and of this fit is taken. The quadratic difference between the combined impact and the post-fit impact of only the systematic nuisance parameter is interpreted as the effect of the limited size of the systematic simulation samples.
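The pull and impact definitions reduce to a few lines of arithmetic. In the Python sketch below, `refit` is a hypothetical callable standing in for a repeated ML fit with one nuisance parameter fixed, and the toy numbers are not results of this analysis.

```python
import math


def pull(theta_hat, theta0=0.0, delta_theta=1.0):
    """(theta_hat - theta_0) / Delta theta, with pre-fit mean theta_0 and std. dev. Delta theta."""
    return (theta_hat - theta0) / delta_theta


def impacts(refit, mt_hat, theta_hat, delta):
    """(up, down) impacts on m_t from refits with theta fixed to theta_hat +/- delta.

    Use the pre-fit Delta theta for pre-fit impacts and the post-fit
    uncertainty for post-fit impacts.
    """
    return refit(theta_hat + delta) - mt_hat, refit(theta_hat - delta) - mt_hat


def quadrature_difference(a, b):
    """sqrt(a^2 - b^2), clipped at zero to guard against rounding."""
    return math.sqrt(max(a * a - b * b, 0.0))


def toy_refit(theta):
    """Hypothetical refit in which m_t responds linearly to the fixed theta."""
    return 172.0 + 0.2 * theta


if __name__ == "__main__":
    print(pull(0.5), impacts(toy_refit, toy_refit(0.5), 0.5, 1.0))
    # Combined impact from the m_t uncertainty with and without the
    # parameters floating, then its MC-stat part (toy values in GeV)
    combined = quadrature_difference(0.40, 0.36)
    print(combined, quadrature_difference(combined, 0.10))
```

The last line mirrors the two-step procedure described above: the combined impact is a quadratic difference of total uncertainties, and the part attributed to the limited simulation size is a second quadratic difference with the systematic-only post-fit impact.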

Most nuisance parameters are consistent with their pre-fit values. The largest effect on the measured mass value corresponds to the FSR scale of the \({\textrm{q}}\rightarrow {\textrm{qg}}\) branching type. The effect is caused by the difference in the peak position of \(m_{{\textrm{W}}}^\text {reco}\) seen in Fig. 2 (upper). The previous measurements in this channel by the CMS Collaboration assumed correlated FSR PS scales, with the same scale choice for jets induced by light quarks and by b quarks [11, 13]. In that case, a lower peak position in the \(m_{{\textrm{W}}}^\text {reco}\) distribution would also cause the \(m_{\textrm{t}}^\text {fit}\) peak position to be lower than expected from simulation for a given \(m_{\textrm{t}}\) value, resulting in a higher measured top quark mass. In fact, a 5D fit to data assuming fully correlated FSR PS scale choices yields \(m_{\textrm{t}} = 172.20 \pm 0.31\,\text {Ge}\hspace{-.08em}\text {V} \). This value is very close to the previous measurement on the same data of \(m_{\textrm{t}} = 172.25 \pm 0.63\,\text {Ge}\hspace{-.08em}\text {V} \) [13].

The measurement is repeated for different correlation coefficients (\(\rho _{\text {{FSR}}}\)) in the pre-fit covariance matrix between the FSR PS scales for the different branching types. The result of this study is shown in Fig. 8. The final result strongly depends on the choice of the correlation coefficient between the FSR PS scales, because the fitted FSR PS scale for the \({\textrm{q}}\rightarrow {\textrm{qg}}\) branching deviates significantly from its value in the default simulation. However, the assumption of strongly correlated FSR PS scale choices would also significantly reduce the overall uncertainty, as the impacts from the scale choice for gluon radiation from b quarks (\(\hbox {X}\rightarrow {\textrm{Xg}}\)) and light quarks (\({\textrm{q}}\rightarrow {\textrm{qg}}\)) partially cancel. In addition, there is a tension between the measured nuisance parameter values for the different FSR PS scales, which disfavors a strong correlation. As there is only a small dependence on the FSR PS scale correlations at low correlation coefficients (\(\rho _{\text {{FSR}}} < 0.5\)), and uncorrelated nuisance parameters for the FSR PS scales receive the least constraint from the fit to data, we assume uncorrelated FSR PS scales for this measurement.
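The effect of the assumed correlation can be seen from the pre-fit constraint term for two FSR PS scale nuisance parameters with unit variances and correlation coefficient \(\rho _{\text {{FSR}}}\): up to a constant, \(-2\ln P = (\theta _a^2 - 2\rho _{\text {{FSR}}}\theta _a\theta _b + \theta _b^2)/(1-\rho _{\text {{FSR}}}^2)\). The Python sketch below evaluates this bivariate normal penalty for hypothetical values that pull in opposite directions. It illustrates why a tension between the two scales disfavors a strong correlation and is not a reproduction of Fig. 8; the function name and input values are assumptions.

```python
def fsr_prior_penalty(theta_a, theta_b, rho):
    """-2 ln P (up to a constant) for two nuisance parameters with unit
    pre-fit variances and correlation coefficient rho, |rho| < 1."""
    if not -1.0 < rho < 1.0:
        raise ValueError("rho must lie strictly between -1 and 1")
    return (theta_a**2 - 2.0 * rho * theta_a * theta_b + theta_b**2) / (1.0 - rho**2)


if __name__ == "__main__":
    # Hypothetical values for the q->qg and X->Xg scales pulling in opposite directions
    for rho in (0.0, 0.5, 0.9):
        print(rho, round(fsr_prior_penalty(-1.0, 0.5, rho), 3))
```

For \(\theta _a = -1\) and \(\theta _b = 0.5\), the penalty grows from 1.25 at \(\rho _{\text {{FSR}}} = 0\) to about 2.33 at 0.5 and about 11.3 at 0.9, so a strongly correlated prior forces opposite-sign pulls toward each other.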

Most of the other nuisance parameters that show a strong post-fit constraint correspond to systematic uncertainties that are evaluated on independent samples of limited size. The small sample sizes are expected to bias these nuisance parameters and to lead to underestimated uncertainties. Hence, these nuisance parameters are accompanied by additional statistical nuisance parameters. A comparison of the pre-fit and post-fit impacts, where the post-fit impacts include the impact of these statistical nuisance parameters, shows that the fit constrains the corresponding systematic uncertainties only minimally.

The largest constraint of a nuisance parameter without additional statistical nuisance parameters corresponds to the JER uncertainty. This is expected, as the energy resolution of jets from \(\hbox {t}\bar{\hbox {t}} \) decays can be measured much better from the width of the \(m_{{\textrm{W}}}^\text {reco}\) distribution than by the extrapolation of the resolution measurement with dijet topologies at much higher transverse momenta [24].

Table 2 compares the measurements by the 2D and 5D methods with the previous result [13, 54] for the same data-taking period. The JEC uncertainties are grouped following the recommendations documented in Ref. [83]. The uncertainty in \(m_{\textrm{t}}\) for one source (row) in this table is evaluated from the covariance matrix of the ML fit by taking the square root of \(\text {cov}(m_{\textrm{t}},X) \text {cov}(X,X)^{-1} \text {cov}(X,m_{\textrm{t}})\), where \(\text {cov}(m_{\textrm{t}},X)\), \(\text {cov}(X,X)\), \(\text {cov}(X,m_{\textrm{t}})\) are the parts of the covariance matrix related to \(m_{\textrm{t}}\) or the set of nuisance parameters X contributing to the source, respectively. The statistical and calibration uncertainties are obtained differently by computing the partial covariance matrix on \(m_{\textrm{t}}\) where all other nuisance parameters are removed. The quadratic sum of all computed systematic uncertainties is larger than the uncertainty in \(m_{\textrm{t}}\) from the ML fit, as the sum ignores the post-fit correlations between the systematic uncertainty sources.

The 5D method is the only method that surpasses the strong reduction in the JEC uncertainty achieved by the previous analysis, which determined \(m_{\textrm{t}}\) together with an overall jet energy scale factor (JSF) in situ. Moreover, the measurement presented here constrains the jet energy resolution uncertainty, which was unaffected by the JSF. The new observables and the additional events with a low \(P_\text {gof}\) reduce most modeling uncertainties, but lead to a slight increase in some experimental uncertainties. While the use of weights for the PS variations removes the previously significant statistical component of the PS uncertainties, the introduction of separate PS scales leads to a large increase in the uncertainty in the FSR PS scale, despite the tight constraint on the corresponding nuisance parameters shown in Fig. 7.

The result presented here achieves a considerable improvement compared to all previously published top quark mass measurements. Hence, it supersedes the previously published measurement in this channel on the same data set [13]. The analysis demonstrates the precision that is achievable in direct measurements of the top quark mass. As the uncertainty in the relationship between the mass measured directly with simulation templates and a theoretically well-defined top quark mass is currently of similar size, the measurement should motivate further theoretical studies on the topic.

7 Summary

The mass of the top quark is measured using LHC proton–proton collision data collected in 2016 with the CMS detector at \(\sqrt{s}=13\,\text {Te}\hspace{-.08em}\text {V} \), corresponding to an integrated luminosity of 36.3\(\,\text {fb}^{-1}\). The measurement uses a sample of \(\hbox {t}\bar{\hbox {t}} \) events containing one isolated electron or muon and at least four jets in the final state. For each event, the mass is reconstructed from a kinematic fit of the decay products to a \(\hbox {t}\bar{\hbox {t}} \) hypothesis. A likelihood method is applied using up to four observables per event to extract the top quark mass and constrain the influences of systematic effects, which are included as nuisance parameters in the likelihood. The top quark mass is measured to be \(171.77\pm 0.37\,\text {Ge}\hspace{-.08em}\text {V} \). This result achieves a considerable improvement compared to all previously published top quark mass measurements and supersedes the previously published measurement in this channel on the same data set.

Data Availability Statement

This manuscript has no associated data or the data will not be deposited. [Authors’ comment: Release and preservation of data used by the CMS Collaboration as the basis for publications is guided by the CMS policy as stated in “CMS data preservation, re-use and open access policy” (https://cms-docdb.cern.ch/cgi-bin/PublicDocDB/RetrieveFile?docid=6032&filename=CMSDataPolicyV1.2.pdf&version=2).]

References

CDF Collaboration, Observation of top quark production in \(\bar{p}p\) collisions. Phys. Rev. Lett. 74, 2626 (1995). https://doi.org/10.1103/PhysRevLett.74.2626. arXiv:hep-ex/9503002

D0 Collaboration, Observation of the top quark. Phys. Rev. Lett. 74, 2632 (1995). https://doi.org/10.1103/PhysRevLett.74.2632. arXiv:hep-ex/9503003

G. Degrassi et al., Higgs mass and vacuum stability in the standard model at NNLO. JHEP 08, 098 (2012). https://doi.org/10.1007/JHEP08(2012)098. arXiv:1205.6497

F. Bezrukov, M.Y. Kalmykov, B.A. Kniehl, M. Shaposhnikov, Higgs boson mass and new physics. JHEP 10, 140 (2012). https://doi.org/10.1007/JHEP10(2012)140. arXiv:1205.2893

The ALEPH, DELPHI, L3, and OPAL Collaborations and the LEP Electroweak Working Group, Electroweak measurements in electron–positron collisions at W-boson-pair energies at LEP. Phys. Rep. 532, 119 (2013). https://doi.org/10.1016/j.physrep.2013.07.004. arXiv:1302.3415

M. Baak et al., The electroweak fit of the standard model after the discovery of a new boson at the LHC. Eur. Phys. J. C 72, 2205 (2012). https://doi.org/10.1140/epjc/s10052-012-2205-9. arXiv:1209.2716

M. Baak et al., The global electroweak fit at NNLO and prospects for the LHC and ILC. Eur. Phys. J. C 74, 3046 (2014). https://doi.org/10.1140/epjc/s10052-014-3046-5. arXiv:1407.3792

Particle Data Group, P.A. Zyla et al., Review of particle physics. Prog. Theor. Exp. Phys. 2020, 083C01 (2020). https://doi.org/10.1093/ptep/ptaa104

CDF and D0 Collaborations, Combination of CDF and D0 results on the mass of the top quark using up to \(9.7\,{{\rm fb}}^{-1}\) at the Tevatron. FERMILAB-CONF-16-298-E (2016). arXiv:1608.01881

ATLAS Collaboration, Measurement of the top quark mass in the \(t\bar{t}\rightarrow \) lepton+jets channel from \(\sqrt{s}=8\) TeV ATLAS data and combination with previous results. Eur. Phys. J. C 79, 290 (2019). https://doi.org/10.1140/epjc/s10052-019-6757-9. arXiv:1810.01772

CMS Collaboration, Measurement of the top quark mass using proton–proton data at \({\sqrt{s}} = 7\) and 8 TeV. Phys. Rev. D 93, 072004 (2016). https://doi.org/10.1103/PhysRevD.93.072004. arXiv:1509.04044

A.H. Hoang, What is the top quark mass? Annu. Rev. Nucl. Part. Sci. 70, 225 (2020). https://doi.org/10.1146/annurev-nucl-101918-023530. arXiv:2004.12915

CMS Collaboration, Measurement of the top quark mass with lepton+jets final states using \({{\rm pp}}\) collisions at \(\sqrt{s}=13\,\text{ TeV } \). Eur. Phys. J. C 78, 891 (2018). https://doi.org/10.1140/epjc/s10052-018-6332-9. arXiv:1805.01428

DELPHI Collaboration, Measurement of the mass and width of the W boson in \({{\rm e}}^{+}{{\rm e}}^{-}\) collisions at \(\sqrt{s}\) = 161-209 GeV. Eur. Phys. J. C 55, 1 (2008). https://doi.org/10.1140/epjc/s10052-008-0585-7. arXiv:0803.2534

CMS Collaboration, Measurement of the top-quark mass in \(\text{ t }\bar{\text{ t }}\) events with lepton+jets final states in pp collisions at \(\sqrt{s}=7\) TeV. JHEP 12, 105 (2012). https://doi.org/10.1007/JHEP12(2012)105. arXiv:1209.2319

CMS Collaboration, Extraction and validation of a new set of CMS pythia tunes from underlying-event measurements. Eur. Phys. J. C 80, 4 (2020). https://doi.org/10.1140/epjc/s10052-019-7499-4. arXiv:1903.12179

E. Bols et al., Jet flavour classification using DeepJet. JINST 15, P12012 (2020). https://doi.org/10.1088/1748-0221/15/12/P12012. arXiv:2008.10519

HEPData record for this analysis (2023). https://doi.org/10.17182/hepdata.127993

CMS Collaboration, The CMS experiment at the CERN LHC. JINST 3, S08004 (2008). https://doi.org/10.1088/1748-0221/3/08/S08004

CMS Collaboration, Technical proposal for the Phase-II upgrade of the Compact Muon Solenoid. CMS Technical Proposal CERN-LHCC-2015-010, CMS-TDR-15-02 (2015). http://cds.cern.ch/record/2020886

CMS Collaboration, Particle-flow reconstruction and global event description with the CMS detector. JINST 12, P10003 (2017). https://doi.org/10.1088/1748-0221/12/10/P10003. arXiv:1706.04965

M. Cacciari, G.P. Salam, G. Soyez, The anti-\(k_{{\rm T}}\) jet clustering algorithm. JHEP 04, 063 (2008). https://doi.org/10.1088/1126-6708/2008/04/063. arXiv:0802.1189

M. Cacciari, G.P. Salam, G. Soyez, FastJet user manual. Eur. Phys. J. C 72, 1896 (2012). https://doi.org/10.1140/epjc/s10052-012-1896-2. arXiv:1111.6097

CMS Collaboration, Jet energy scale and resolution in the CMS experiment in pp collisions at 8 TeV. JINST 12, P02014 (2017). https://doi.org/10.1088/1748-0221/12/02/P02014. arXiv:1607.03663

CMS Collaboration, Identification of heavy-flavour jets with the CMS detector in pp collisions at 13 TeV. JINST 13, P05011 (2018). https://doi.org/10.1088/1748-0221/13/05/P05011. arXiv:1712.07158

CMS Collaboration, Performance of the DeepJet b tagging algorithm using 41.9/fb of data from proton–proton collisions at 13 TeV with Phase 1 CMS detector. CMS Detector Performance Note CMS-DP-2018-058 (2018). http://cds.cern.ch/record/2646773

CMS Collaboration, Performance of missing transverse momentum reconstruction in proton–proton collisions at \(\sqrt{s} = 13\) TeV using the CMS detector. JINST 14, P07004 (2019). https://doi.org/10.1088/1748-0221/14/07/P07004. arXiv:1903.06078

CMS Collaboration, Electron and photon reconstruction and identification with the CMS experiment at the CERN LHC. JINST 16, P05014 (2021). https://doi.org/10.1088/1748-0221/16/05/P05014. arXiv:2012.06888

CMS Collaboration, ECAL 2016 refined calibration and Run2 summary plots. CMS Detector Performance Note CMS-DP-2020-021 (2020). https://cds.cern.ch/record/2717925

CMS Collaboration, Performance of the CMS muon detector and muon reconstruction with proton–proton collisions at \(\sqrt{s}= 13\) TeV. JINST 13, P06015 (2018). https://doi.org/10.1088/1748-0221/13/06/P06015. arXiv:1804.04528

CMS Collaboration, Precision luminosity measurement in proton–proton collisions at \(\sqrt{s} = 13\) TeV in 2015 and 2016 at CMS. Eur. Phys. J. C 81, 800 (2021). https://doi.org/10.1140/epjc/s10052-021-09538-2. arXiv:2104.01927

CMS Collaboration, The CMS trigger system. JINST 12, P01020 (2017). https://doi.org/10.1088/1748-0221/12/01/P01020. arXiv:1609.02366

P. Nason, A new method for combining NLO QCD with shower Monte Carlo algorithms. JHEP 11, 040 (2004). https://doi.org/10.1088/1126-6708/2004/11/040. arXiv:hep-ph/0409146

S. Frixione, P. Nason, C. Oleari, Matching NLO QCD computations with parton shower simulations: the POWHEG method. JHEP 11, 070 (2007). https://doi.org/10.1088/1126-6708/2007/11/070. arXiv:0709.2092

S. Alioli, P. Nason, C. Oleari, E. Re, A general framework for implementing NLO calculations in shower Monte Carlo programs: the POWHEG BOX. JHEP 06, 043 (2010). https://doi.org/10.1007/JHEP06(2010)043. arXiv:1002.2581

T. Sjöstrand et al., An introduction to pythia8.2. Comput. Phys. Commun. 191, 159 (2015). https://doi.org/10.1016/j.cpc.2015.01.024. arXiv:1410.3012

J. Butterworth et al., PDF4LHC recommendations for LHC Run II. J. Phys. G 43, 023001 (2016). https://doi.org/10.1088/0954-3899/43/2/023001. arXiv:1510.03865

NNPDF Collaboration, Parton distributions from high-precision collider data. Eur. Phys. J. C 77, 663 (2017). https://doi.org/10.1140/epjc/s10052-017-5199-5. arXiv:1706.00428

S. Alioli, P. Nason, C. Oleari, E. Re, NLO single-top production matched with shower in POWHEG: \(s\)- and \(t\)-channel contributions. JHEP 09, 111 (2009). https://doi.org/10.1088/1126-6708/2009/09/111. arXiv:0907.4076. [Erratum: https://doi.org/10.1007/JHEP02(2010)011]

E. Re, Single-top Wt-channel production matched with parton showers using the POWHEG method. Eur. Phys. J. C 71, 1547 (2011). https://doi.org/10.1140/epjc/s10052-011-1547-z. arXiv:1009.2450

J. Alwall et al., The automated computation of tree-level and next-to-leading order differential cross sections, and their matching to parton shower simulations. JHEP 07, 079 (2014). https://doi.org/10.1007/JHEP07(2014)079. arXiv:1405.0301

J. Alwall et al., Comparative study of various algorithms for the merging of parton showers and matrix elements in hadronic collisions. Eur. Phys. J. C 53, 473 (2008). https://doi.org/10.1140/epjc/s10052-007-0490-5. arXiv:0706.2569

R. Frederix, S. Frixione, Merging meets matching in MC@NLO. JHEP 12, 061 (2012). https://doi.org/10.1007/JHEP12(2012)061. arXiv:1209.6215

P. Skands, S. Carrazza, J. Rojo, Tuning pythia8.1: the Monash 2013 tune. Eur. Phys. J. C 74, 3024 (2014). https://doi.org/10.1140/epjc/s10052-014-3024-y. arXiv:1404.5630

GEANT4 Collaboration, Geant4—a simulation toolkit. Nucl. Instrum. Methods A 506, 250 (2003). https://doi.org/10.1016/S0168-9002(03)01368-8

M. Czakon, A. Mitov, Top++: a program for the calculation of the top-pair cross-section at hadron colliders. Comput. Phys. Commun. 185, 2930 (2014). https://doi.org/10.1016/j.cpc.2014.06.021. arXiv:1112.5675

Y. Li, F. Petriello, Combining QCD and electroweak corrections to dilepton production in FEWZ. Phys. Rev. D 86, 094034 (2012). https://doi.org/10.1103/PhysRevD.86.094034. arXiv:1208.5967

M. Aliev et al., HATHOR: HAdronic Top and Heavy quarks crOss section calculatoR. Comput. Phys. Commun. 182, 1034 (2011). https://doi.org/10.1016/j.cpc.2010.12.040. arXiv:1007.1327

P. Kant et al., HATHOR for single top-quark production: updated predictions and uncertainty estimates for single top-quark production in hadronic collisions. Comput. Phys. Commun. 191, 74 (2015). https://doi.org/10.1016/j.cpc.2015.02.001. arXiv:1406.4403

D0 Collaboration, Direct measurement of the top quark mass at D0. Phys. Rev. D 58, 052001 (1998). https://doi.org/10.1103/PhysRevD.58.052001. arXiv:hep-ex/9801025

ATLAS Collaboration, Measurement of the top quark mass in the \({t}\bar{{t}}\rightarrow \) dilepton channel from \(\sqrt{s}=8\) TeV ATLAS data. Phys. Lett. B 761, 350 (2016). https://doi.org/10.1016/j.physletb.2016.08.042. arXiv:1606.02179

CMS Collaboration, Measurement of the \({{\rm t}}\bar{{\rm t}}\) production cross section, the top quark mass, and the strong coupling constant using dilepton events in pp collisions at \(\sqrt{s} = 13\) TeV. Eur. Phys. J. C 79, 368 (2019). https://doi.org/10.1140/epjc/s10052-019-6863-8. arXiv:1812.10505

ATLAS Collaboration, Measurement of the top quark mass in the \(t\bar{t}\rightarrow \text{ lepton }+\text{ jets } \) and \(t\bar{t}\rightarrow \text{ dilepton } \) channels using \(\sqrt{s}=7\) TeV ATLAS data. Eur. Phys. J. C 75, 330 (2015). https://doi.org/10.1140/epjc/s10052-015-3544-0. arXiv:1503.05427

CMS Collaboration, Measurement of the top quark mass in the all-jets final state at \(\sqrt{s} = 13\) TeV and combination with the lepton+jets channel. Eur. Phys. J. C 79, 313 (2019). https://doi.org/10.1140/epjc/s10052-019-6788-2. arXiv:1812.10534

CMS Collaboration, Jet energy scale and resolution performance with 13 TeV data collected by CMS in 2016–2018. CMS Detector Performance Note CMS-DP-2020-019 (2020). https://cds.cern.ch/record/2715872

CMS Collaboration, Measurement of the inelastic proton-proton cross section at \( \sqrt{s}=13 \) TeV. JHEP 07, 161 (2018). https://doi.org/10.1007/JHEP07(2018)161. arXiv:1802.02613

CMS Collaboration, Measurement of the \(t\)-channel single-top-quark production cross section and of the \(|{V}_{{\rm tb}}|\) CKM matrix element in pp collisions at \(\sqrt{s} = 8\) TeV. JHEP 06, 090 (2014). https://doi.org/10.1007/JHEP06(2014)090. arXiv:1403.7366

CMS Collaboration, Cross section measurement of \(t\)-channel single top quark production in pp collisions at \(\sqrt{s} = 13\) TeV. Phys. Lett. B 772, 752 (2017). https://doi.org/10.1016/j.physletb.2017.07.047. arXiv:1610.00678

CMS Collaboration, Measurement of the production cross section of a W boson in association with two b jets in pp collisions at \(\sqrt{s} = 8\,{\rm TeV }\). Eur. Phys. J. C 77, 92 (2017). https://doi.org/10.1140/epjc/s10052-016-4573-z. arXiv:1608.07561

CMS Collaboration, Measurements of the associated production of a Z boson and b jets in pp collisions at \({\sqrt{s}} = 8\,\text{ TeV }\). Eur. Phys. J. C 77, 751 (2017). https://doi.org/10.1140/epjc/s10052-017-5140-y. arXiv:1611.06507

CMS Collaboration, Measurement of the WZ production cross section in pp collisions at \({\sqrt{s}} = 13\) TeV. Phys. Lett. B 766, 268 (2017). https://doi.org/10.1016/j.physletb.2017.01.011. arXiv:1607.06943

CMS Collaboration, Measurements of the \({{\rm pp}}\rightarrow {{\rm ZZ}}\) production cross section and the \({{\rm Z}}\rightarrow 4\ell \) branching fraction, and constraints on anomalous triple gauge couplings at \(\sqrt{s} = 13\,\text{ TeV }\). Eur. Phys. J. C 78, 165 (2018). https://doi.org/10.1140/epjc/s10052-018-5567-9. arXiv:1709.08601

T. Sjöstrand, S. Mrenna, P.Z. Skands, PYTHIA 6.4 physics and manual. JHEP 05, 026 (2006). https://doi.org/10.1088/1126-6708/2006/05/026. arXiv:hep-ph/0603175

M. Bähr et al., Herwig++ physics and manual. Eur. Phys. J. C 58, 639 (2008). https://doi.org/10.1140/epjc/s10052-008-0798-9. arXiv:0803.0883

DELPHI Collaboration, A study of the b-quark fragmentation function with the DELPHI detector at LEP I and an averaged distribution obtained at the Z pole. Eur. Phys. J. C 71, 1557 (2011). https://doi.org/10.1140/epjc/s10052-011-1557-x. arXiv:1102.4748

ALEPH Collaboration, Study of the fragmentation of b quarks into B mesons at the Z peak. Phys. Lett. B 512, 30 (2001). https://doi.org/10.1016/S0370-2693(01)00690-6. arXiv:hep-ex/0106051

S. Dulat et al., New parton distribution functions from a global analysis of quantum chromodynamics. Phys. Rev. D 93, 033006 (2016). https://doi.org/10.1103/PhysRevD.93.033006. arXiv:1506.07443

L.A. Harland-Lang, A.D. Martin, P. Motylinski, R.S. Thorne, Parton distributions in the LHC era: MMHT 2014 PDFs. Eur. Phys. J. C 75, 204 (2015). https://doi.org/10.1140/epjc/s10052-015-3397-6. arXiv:1412.3989

CMS Collaboration, Investigations of the impact of the parton shower tuning in PYTHIA 8 in the modelling of \({{\rm t}}{\bar{{\rm t}}}\) at \(\sqrt{s}=8\) and 13 TeV. CMS Physics Analysis Summary CMS-PAS-TOP-16-021 (2016). https://cds.cern.ch/record/2235192

S. Mrenna, P. Skands, Automated parton-shower variations in PYTHIA 8. Phys. Rev. D 94, 074005 (2016). https://doi.org/10.1103/PhysRevD.94.074005. arXiv:1605.08352

M. Czakon, D. Heymes, A. Mitov, High-precision differential predictions for top-quark pairs at the LHC. Phys. Rev. Lett. 116, 082003 (2016). https://doi.org/10.1103/PhysRevLett.116.082003. arXiv:1511.00549

M. Czakon et al., Top-pair production at the LHC through NNLO QCD and NLO EW. JHEP 10, 186 (2017). https://doi.org/10.1007/JHEP10(2017)186. arXiv:1705.04105

S. Catani et al., Top-quark pair production at the LHC: fully differential QCD predictions at NNLO. JHEP 07, 100 (2019). https://doi.org/10.1007/JHEP07(2019)100. arXiv:1906.06535

CMS Collaboration, Measurement of differential cross sections for top quark pair production using the lepton+jets final state in proton–proton collisions at 13 TeV. Phys. Rev. D 95, 092001 (2017). https://doi.org/10.1103/PhysRevD.95.092001. arXiv:1610.04191

CMS Collaboration, Measurement of normalized differential \( {{\rm t}}{\bar{\rm t}} \) cross sections in the dilepton channel from pp collisions at \( \sqrt{s}=13 \) TeV. JHEP 04, 060 (2018). https://doi.org/10.1007/JHEP04(2018)060. arXiv:1708.07638

J.R. Christiansen, P.Z. Skands, String formation beyond leading colour. JHEP 08, 003 (2015). https://doi.org/10.1007/JHEP08(2015)003. arXiv:1505.01681

S. Argyropoulos, T. Sjöstrand, Effects of color reconnection on \(\text{ t }\bar{\text{ t }}\) final states at the LHC. JHEP 11, 043 (2014). https://doi.org/10.1007/JHEP11(2014)043. arXiv:1407.6653

CMS Collaboration, CMS PYTHIA 8 colour reconnection tunes based on underlying-event data. Eur. Phys. J. C 83, 587 (2023). https://doi.org/10.1140/epjc/s10052-023-11630-8. arXiv:2205.02905

W. Verkerke, D.P. Kirkby, The RooFit toolkit for data modeling, in Proceedings of the 13th International Conference for Computing in High-Energy and Nuclear Physics (CHEP03) (2003). arXiv:physics/0306116. [eConf C0303241, MOLT007]

F. James, M. Roos, Minuit: a system for function minimization and analysis of the parameter errors and correlations. Comput. Phys. Commun. 10, 343 (1975). https://doi.org/10.1016/0010-4655(75)90039-9

R.J. Barlow, C. Beeston, Fitting using finite Monte Carlo samples. Comput. Phys. Commun. 77, 219 (1993). https://doi.org/10.1016/0010-4655(93)90005-W

J.S. Conway, Incorporating nuisance parameters in likelihoods for multisource spectra, in PHYSTAT 2011 (2011), p. 115. https://doi.org/10.5170/CERN-2011-006.115. arXiv:1103.0354

ATLAS and CMS Collaborations, Jet energy scale uncertainty correlations between ATLAS and CMS at 8 TeV. ATL-PHYS-PUB-2015-049, CMS-PAS-JME-15-001 (2015). http://cds.cern.ch/record/2104039

Acknowledgements

We congratulate our colleagues in the CERN accelerator departments for the excellent performance of the LHC and thank the technical and administrative staffs at CERN and at other CMS institutes for their contributions to the success of the CMS effort. In addition, we gratefully acknowledge the computing centers and personnel of the Worldwide LHC Computing Grid and other centers for delivering so effectively the computing infrastructure essential to our analyses. Finally, we acknowledge the enduring support for the construction and operation of the LHC, the CMS detector, and the supporting computing infrastructure provided by the following funding agencies: BMBWF and FWF (Austria); FNRS and FWO (Belgium); CNPq, CAPES, FAPERJ, FAPERGS, and FAPESP (Brazil); MES and BNSF (Bulgaria); CERN; CAS, MoST, and NSFC (China); MINCIENCIAS (Colombia); MSES and CSF (Croatia); RIF (Cyprus); SENESCYT (Ecuador); MoER, ERC PUT and ERDF (Estonia); Academy of Finland, MEC, and HIP (Finland); CEA and CNRS/IN2P3 (France); BMBF, DFG, and HGF (Germany); GSRI (Greece); NKFIH (Hungary); DAE and DST (India); IPM (Iran); SFI (Ireland); INFN (Italy); MSIP and NRF (Republic of Korea); MES (Latvia); LAS (Lithuania); MOE and UM (Malaysia); BUAP, CINVESTAV, CONACYT, LNS, SEP, and UASLP-FAI (Mexico); MOS (Montenegro); MBIE (New Zealand); PAEC (Pakistan); MES and NSC (Poland); FCT (Portugal); JINR (Dubna); MON, RosAtom, RAS, RFBR, and NRC KI (Russia); MESTD (Serbia); MCIN/AEI and PCTI (Spain); MOSTR (Sri Lanka); Swiss Funding Agencies (Switzerland); MST (Taipei); MHESI and NSTDA (Thailand); TUBITAK and TENMAK (Turkey); NASU (Ukraine); STFC (United Kingdom); DOE and NSF (USA). Individuals have received support from the Marie-Curie program and the European Research Council and Horizon 2020 Grant, contract Nos. 675440, 724704, 752730, 758316, 765710, 824093, 884104, and COST Action CA16108 (European Union); the Leventis Foundation; the Alfred P. 
Sloan Foundation; the Alexander von Humboldt Foundation; the Belgian Federal Science Policy Office; the Fonds pour la Formation à la Recherche dans l’Industrie et dans l’Agriculture (FRIA-Belgium); the Agentschap voor Innovatie door Wetenschap en Technologie (IWT-Belgium); the F.R.S.-FNRS and FWO (Belgium) under the “Excellence of Science – EOS” – be.h project n. 30820817; the Beijing Municipal Science and Technology Commission, No. Z191100007219010; the Ministry of Education, Youth and Sports (MEYS) of the Czech Republic; the Hellenic Foundation for Research and Innovation (HFRI), Project Number 2288 (Greece); the Deutsche Forschungsgemeinschaft (DFG), under Germany’s Excellence Strategy – EXC 2121 “Quantum Universe” – 390833306, and under project number 400140256 - GRK2497; the Hungarian Academy of Sciences, the New National Excellence Program - ÚNKP, the NKFIH research grants K 124845, K 124850, K 128713, K 128786, K 129058, K 131991, K 133046, K 138136, K 143460, K 143477, 2020-2.2.1-ED-2021-00181, and TKP2021-NKTA-64 (Hungary); the Council of Science and Industrial Research, India; the Latvian Council of Science; the Ministry of Education and Science, project no. 2022/WK/14, and the National Science Center, contracts Opus 2021/41/B/ST2/01369 and 2021/43/B/ST2/01552 (Poland); the Fundação para a Ciência e a Tecnologia, grant CEECIND/01334/2018 (Portugal); the National Priorities Research Program by Qatar National Research Fund; the Ministry of Science and Higher Education, projects no. 0723-2020-0041 and no. 
FSWW-2020-0008 (Russia); MCIN/AEI/10.13039/501100011033, ERDF “a way of making Europe”, and the Programa Estatal de Fomento de la Investigación Científica y Técnica de Excelencia María de Maeztu, grant MDM-2017-0765 and Programa Severo Ochoa del Principado de Asturias (Spain); the Chulalongkorn Academic into Its 2nd Century Project Advancement Project, and the National Science, Research and Innovation Fund via the Program Management Unit for Human Resources & Institutional Development, Research and Innovation, grant B05F650021 (Thailand); the Kavli Foundation; the Nvidia Corporation; the SuperMicro Corporation; the Welch Foundation, contract C-1845; and the Weston Havens Foundation (USA).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

The original online version of this article was revised. The following dedication has been added: “We dedicate this paper to the memory of our friend and colleague Thomas Ferbel, whose innovative work on precision measurements of the top quark mass laid the foundation for this publication.”

We dedicate this paper to the memory of our friend and colleague Thomas Ferbel, whose innovative work on precision measurements of the top quark mass laid the foundation for this publication.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

Funded by SCOAP3. SCOAP3 supports the goals of the International Year of Basic Sciences for Sustainable Development.

About this article

Cite this article

Tumasyan, A., Adam, W., Andrejkovic, J.W. et al. Measurement of the top quark mass using a profile likelihood approach with the lepton + jets final states in proton–proton collisions at \(\sqrt{s}=13\,\text {Te}\hspace{-.08em}\text {V} \). Eur. Phys. J. C 83, 963 (2023). https://doi.org/10.1140/epjc/s10052-023-12050-4

DOI: https://doi.org/10.1140/epjc/s10052-023-12050-4