Abstract

The identification of b-jets, referred to as b-tagging, is an important part of many physics analyses in the ATLAS experiment at the Large Hadron Collider, and an accurate calibration of its performance is essential for high-quality physics results. This publication describes the calibration of the light-flavour jet mistagging efficiency in a data sample of proton–proton collision events at \(\sqrt{s}=13\) TeV corresponding to an integrated luminosity of 139 fb\(^{-1}\). The calibration is performed in a sample of Z bosons produced in association with jets. Because the mistagging efficiency for light-flavour jets is low, this work uses modified versions of the b-tagging algorithms, referred to as flip taggers. A fit to the jet-flavour-sensitive secondary-vertex mass is performed to extract a scale factor from data that corrects the light-flavour jet mistagging efficiency in Monte Carlo simulations, while simultaneously correcting the b-jet efficiency. With this procedure, uncertainties coming from the modelling of jets from heavy-flavour hadrons are considerably lower than in previous calibrations of the mistagging scale factors, where they were dominant. The scale factors obtained in this calibration are consistent with unity within uncertainties.

1 Introduction

Many analyses in ATLAS [1], such as measurements or searches involving top quarks or Higgs bosons, rely on the identification of jets containing b-hadrons (b-jets) with high tagging efficiency and low mistagging efficiency for jets containing c-hadrons (c-jets) or containing neither b- nor c-hadrons (light-flavour jets). The relatively long lifetime and high mass of b-hadrons together with the large track multiplicity of their decay products is exploited by b-tagging algorithms to identify b-jets.

The b-tagging algorithms are trained using Monte Carlo (MC) simulated events and therefore need to be calibrated in order to correct for efficiency differences between data and simulation that may arise from an imperfect description of the data, e.g. in the parton shower and fragmentation modelling or in the detector and response simulation. The efficiency of identifying a b-jet (\(\varepsilon ^{b}\)) and the mistagging efficiencies (\(\varepsilon ^{c}\) and \(\varepsilon ^{\text {light}}\)), which are the probabilities that other jets are wrongly identified by the algorithms as b-jets, are measured in data and compared with the predictions of the simulation. These tagging and mistagging efficiencies are defined as

\[\varepsilon ^{f} = \frac{N_{\textrm{pass}}^{f}}{N_{\textrm{all}}^{f}},\]

where \(f \in \{b,~c,~\text {light}\}\), \(N_{\textrm{pass}}^{f}\) is the number of jets of flavour f selected by the b-tagging algorithm and \(N_{\textrm{all}}^{f}\) is the number of all jets of flavour f. The flavour-tagging efficiency in data (\(\varepsilon ^{f}_{\textrm{data}}\)) is compared with the efficiency in MC simulation (\(\varepsilon ^{f}_{\textrm{MC}}\)) and a calibration factor, also called the scale factor (SF), is defined as

\[\text {SF}^{f} = \frac{\varepsilon ^{f}_{\textrm{data}}}{\varepsilon ^{f}_{\textrm{MC}}}. \qquad (1)\]

The calibration factors correct the efficiencies and mistagging efficiencies in simulation to the ones in data and are applied to all physics analyses in ATLAS that use b-tagging. The b-tagging efficiencies and the calibration SFs depend on the jet kinematics. The b-jet efficiency (\(\varepsilon ^{b}\)) is calibrated using the method described in Ref. [2], where the SFs\(^{b}\) are extracted from a sample of events containing top-quark pairs decaying into a final state with two charged leptons and two b-jets. The c-jet mistagging efficiency (\(\varepsilon ^{c}\)) is calibrated via the method described in Ref. [3], where the SFs\(^{c}\) are extracted from events containing top-quark pairs decaying into a final state with exactly one charged lepton and several jets. The events are reconstructed using a kinematic likelihood technique and include a hadronically decaying W boson, whose decay products are rich in c-jets.
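
As a purely illustrative sketch of the efficiency and scale-factor definitions, both quantities can be computed from jet counts as shown below. The class name, function names and example counts are hypothetical and are not taken from the ATLAS software.

```python
from dataclasses import dataclass


@dataclass
class FlavourCounts:
    """Jet counts for one flavour f (hypothetical container)."""
    n_pass: int  # jets of flavour f selected by the b-tagging algorithm
    n_all: int   # all jets of flavour f


def efficiency(counts):
    """Tagging (or mistagging) efficiency: N_pass / N_all."""
    if counts.n_all <= 0:
        raise ValueError("n_all must be positive")
    return counts.n_pass / counts.n_all


def scale_factor(data, mc):
    """Scale factor: efficiency in data divided by efficiency in simulation."""
    return efficiency(data) / efficiency(mc)


# Invented example counts: 3.0% mistag rate in data, 2.5% in simulation.
sf_light = scale_factor(FlavourCounts(30, 1000), FlavourCounts(25, 1000))
print(f"SF = {sf_light:.2f}")
```

In practice the data efficiency cannot be obtained by simple counting, because the jet flavour is unknown in data; this is why a dedicated calibration method is needed.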

This paper describes the measurement of the light-flavour jet mistagging efficiency (\(\varepsilon ^{\text {light}}\)) of the DL1r b-tagger, which is widely used in ATLAS Run 2 physics analyses and is discussed in Sect. 5. The SFs\(^{\text {light}}\) are extracted from particle-flow jets [4] using 139 \(\textrm{fb}^{-1}\) of proton–proton (pp) collision data collected by the ATLAS detector during Run 2 of the LHC.

The mistagging efficiency \(\varepsilon ^{\text {light}}\) is difficult to calibrate because after applying a b-tagging requirement, the obtained sample of jets is strongly dominated by b-jets and the fraction of light-flavour jets passing a selection on the b-tagging score is too low to estimate \(\varepsilon ^{\text {light}}_{\text {data}}\). In order to extract an unbiased and precise SF\(^{\text {light}}\), a sample enriched in mistagged light-flavour jets is required.

In this paper, the Negative Tag method [5, 6], which relies on a modified tagger with a reduced \(\varepsilon ^{b}\) and \(\varepsilon ^{c}\) but similar \(\varepsilon ^{\text {light}}\) with respect to the nominal tagger, is used. The method, described in detail in Sect. 6, has already been used to calibrate \(\varepsilon ^{\text {light}}\) by using dijet events in 2015–2016 data [7] from the early part of Run 2.

In this work, jets produced in association with Z bosons (Z+jets) are used instead, allowing the use of unprescaled lepton triggers instead of the prescaled single-jet triggers which were used in the previous calibration. The calibration precision is improved by extracting the SF\(^{\text {light}}\) in a fit that simultaneously corrects \(\varepsilon ^{b}\) using data. Previously, \(\varepsilon ^{b}\) was estimated from simulation, resulting in large modelling uncertainties which impacted the precision of the result. An alternative approach [7] to the Negative Tag method exists in which \(\varepsilon ^{\text {light}}_{\text {data}}\) is estimated from simulation by applying data-driven corrections to the input quantities of the low-level b-tagging algorithms. This method is used to assess the extrapolation uncertainty between the modified and nominal tagger as described in Sect. 7.

The paper is structured as follows. Section 2 describes the ATLAS detector. Section 3 presents the dataset and simulations used in this calibration. The reconstruction of jets, electrons and muons is summarised in Sect. 4, while the b-tagging algorithms are described in detail in Sect. 5. The calibration method is detailed in Sect. 6. Systematic uncertainties and results are presented, respectively, in Sects. 7 and 8 and conclusions are given in Sect. 9.

2 ATLAS detector

The ATLAS experiment [1] at the LHC is a multipurpose particle detector with a forward–backward symmetric cylindrical geometry and a near \(4\pi \) coverage in solid angle.\(^{1}\) It consists of an inner tracking detector (ID) surrounded by a thin superconducting solenoid providing a 2 T axial magnetic field, electromagnetic and hadron calorimeters, and a muon spectrometer with a toroidal magnet system.

The inner tracking detector, which provides full coverage of a pseudorapidity range \(|\eta | < 2.5\), consists of silicon pixel, silicon microstrip, and transition radiation tracking detectors. The high-granularity silicon pixel detector covers the vertex region and typically provides four measurements per track, the first measurement normally being in the insertable B-layer (IBL) installed before Run 2, primarily to enhance the b-tagging performance [8, 9]. Sampling calorimeters, made of lead and liquid argon (LAr), provide electromagnetic (EM) energy measurements with high granularity in the pseudorapidity region \(|\eta | < 3.2\). A steel/scintillator-tile hadron calorimeter covers the central pseudorapidity range (\(|\eta | < 1.7\)) and a copper/LAr hadron calorimeter covers the range \(1.5< |\eta | < 3.2\). The forward region is instrumented with LAr calorimeters in the range \(3.1< |\eta | < 4.9\), measuring both electromagnetic and hadronic energies in copper/LAr and tungsten/LAr modules. The muon spectrometer surrounds the calorimeters and is based on three large superconducting air-core toroidal magnets with eight coils each. The field integral of the toroids ranges between 2.0 and 6.0 Tm across most of the detector. The muon spectrometer includes a system of precision tracking chambers and fast detectors for triggering.

A two-level trigger system is used to select events. The first-level trigger is implemented in hardware and uses a subset of the detector information to accept events at a rate below 100 kHz. This is followed by a software-based trigger that reduces the accepted event rate to 1 kHz on average depending on the data-taking conditions [10].

An extensive software suite [11] is used in data simulation, in the reconstruction and analysis of real and simulated data, in detector operations, and in the trigger and data acquisition systems of the experiment.

3 Data and simulated samples

The presented results are based on data from pp collisions with a centre-of-mass energy of \(\sqrt{s}=13\) TeV and a 25 ns proton bunch crossing interval. The data were collected between 2015 and 2018 with the ATLAS detector, corresponding to an integrated luminosity of 139 \({\hbox {fb}^{-1}}\) [12, 13]. A set of single-electron [14] and single-muon triggers [15] was used, with transverse momentum (\(p_{\text {T}}\)) thresholds in the range 20–26 GeV, depending on the lepton flavour and data-taking period. All detector subsystems are required to have been operational during data taking and to fulfil data quality requirements [16].

Expected Standard Model (SM) processes were modelled using MC simulation. A summary of the simulated samples used in this calibration and their basic parameter settings is provided in Table 1. All samples were produced using the ATLAS simulation infrastructure [17] and Geant4 [18]. The effect of additional pp interactions per bunch crossing (pile-up) is accounted for by overlaying the hard-scattering process with minimum-bias events generated with Pythia 8.186 [19] using the NNPDF2.3lo set of parton distribution functions (PDF) [20] and the A3 set of tuned parameters [21]. Different pile-up conditions between data and simulation are taken into account by reweighting the mean number of interactions per bunch crossing in simulation to the number observed in data.
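
One common way to realise a pile-up reweighting of this kind is to weight each simulated event by the ratio of the normalised data and simulation distributions of the mean number of interactions per bunch crossing. The sketch below assumes binned distributions with identical binning; the function name and the example histograms are hypothetical, and the actual ATLAS procedure may differ in detail.

```python
def pileup_weights(data_counts, mc_counts):
    """Per-bin weights that reshape the simulated distribution to the data one.

    Both inputs are histograms of the mean number of interactions per bunch
    crossing with identical binning. Bins that are empty in simulation get
    weight 0.0 (an assumption of this sketch).
    """
    if len(data_counts) != len(mc_counts):
        raise ValueError("histograms must share the same binning")
    data_total = sum(data_counts)
    mc_total = sum(mc_counts)
    weights = []
    for n_data, n_mc in zip(data_counts, mc_counts):
        if n_mc > 0:
            weights.append((n_data / data_total) / (n_mc / mc_total))
        else:
            weights.append(0.0)
    return weights


# Invented example: simulation has too many low pile-up events.
weights = pileup_weights([10, 30, 60], [20, 20, 10])
```

With these weights the total simulated yield is unchanged, while its shape follows the data distribution.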

The events used in this study originate mostly from the production of a Z boson in association with jets. This process is simulated with two different generators. Nominal predictions of \(Z/\gamma ^{*}\) production were generated using Sherpa 2.2.1 [22] with the NNPDF3.0nnlo PDF [23]. Diagrams with up to two additional parton emissions were simulated with next-to-leading-order (NLO) precision in QCD, and those with three or four additional parton emissions to leading-order accuracy. Matrix elements were merged with the Sherpa parton shower using the MEPS@NLO formalism [24,25,26,27] with a merging scale of 20 GeV and the five-flavour numbering scheme (5FNS). The sample used to evaluate the tagging efficiencies predicted by the MC simulation was produced with the MadGraph 2.2.3 [28] event generator with up to four additional partons at matrix-element level interfaced with Pythia 8.210 to model the parton shower, hadronisation, and underlying event with the NNPDF3.0nlo PDF for the processes where the Z boson decays into muons or electrons, and with MadGraph 2.2.2 interfaced with Pythia 8.186 with the NNPDF2.3lo PDF [20] for Z boson decays into \(\tau \)-leptons. The predictions of the Z+jets MadGraph program are based on calculations using the 5FNS scheme. Sherpa 2.2.1 and MadGraph Z+jets simulations are normalised to the overall cross-section prediction at NNLO precision in QCD [29].

In addition to Z+jets production, some minor backgrounds contribute to the final event sample used for the calibration. These backgrounds consist mostly of diboson production (\(ZZ \) and \(ZW \)) and top-quark pair production with subsequent decays into final states with two charged leptons. The \(ZW \) production and the \(ZZ \) production via quark–antiquark annihilation were simulated with Sherpa 2.2.1 with a MEPS@NLO configuration similar to what was used in the simulation of the Z+jets process described above, while loop-induced ZZ processes initiated by gluon–gluon fusion were generated with Sherpa 2.2.2. Both the \(ZZ \) and \(ZW \) simulation samples used the NNPDF3.0nnlo PDF set. Top-quark pair production was simulated using the Powheg Box v2 generator at NLO precision in QCD [30] interfaced with Pythia 8.230 to model the parton shower, hadronisation, and underlying event, using the A14 set of tuned parameters [31]. This simulation was used to model top-quark pair production with subsequent decays into final states with two charged leptons and into final states with one charged lepton. The latter is used to evaluate the difference between the SFs obtained from the modified (DL1rFlip) and nominal (DL1r) b-tagging algorithms.

The decays of b- and c-hadrons were handled by EvtGen 1.6.0 [32] in all simulations, except for those generated using Sherpa, for which the default configuration recommended by the Sherpa authors was used.

4 Object and event selection

Tracks of charged particles are reconstructed from energy deposits in the material of the ID [33]. Events are required to contain at least one vertex with two or more associated tracks that must have \(p_{\text {T}} > 500\) MeV. Among all vertices, the vertex with the highest \(p_{\text {T}}^2\) sum of the associated tracks is taken as the primary vertex (PV) [34]. The transverse (\(d_{0}\)) track impact parameter (IP) is defined as the distance of closest approach of the track-trajectory to the PV in the transverse plane. The longitudinal track IP (\(z_{0}\)) is defined as the distance in the z-direction between the PV and the track trajectory at the point of the closest approach in the x–y plane.
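
The primary-vertex choice described above can be sketched as follows. This is a minimal illustration in which each vertex is represented only by the transverse momenta of its associated tracks; the function name is hypothetical, and restricting the \(p_{\text {T}}^2\) sum to tracks above the 500 MeV threshold is an assumption of the sketch.

```python
def select_primary_vertex(vertices, min_tracks=2, min_track_pt_mev=500.0):
    """Return the index of the vertex with the highest sum of track pT^2.

    vertices: list of lists, each holding the pT (in MeV) of the tracks
    associated with one vertex. Vertices with fewer than `min_tracks`
    tracks above `min_track_pt_mev` are skipped. Returns None if no vertex
    qualifies, in which case the event would be rejected.
    """
    best_index = None
    best_sum = -1.0
    for index, track_pts in enumerate(vertices):
        good_pts = [pt for pt in track_pts if pt > min_track_pt_mev]
        if len(good_pts) < min_tracks:
            continue
        sum_pt2 = sum(pt * pt for pt in good_pts)
        if sum_pt2 > best_sum:
            best_index = index
            best_sum = sum_pt2
    return best_index


# Invented example: the third vertex has the largest sum of pT^2.
pv_index = select_primary_vertex([[600.0, 700.0], [400.0, 3000.0], [1000.0, 1000.0, 800.0]])
```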

Jets containing b- and c-hadrons are characterised by having in-flight decays due to the relatively long lifetime of the heavy-flavour hadrons and give rise to secondary vertices (SV) with associated tracks. To exploit the presence of in-flight decays in the jet direction, the signed IP is defined for each track within a jet. As shown in Fig. 1, a track has a positive IP if the angle between the jet direction and the line joining the PV to the point of closest approach to the track is less than \(\pi /2\) and negative otherwise. The Single Secondary Vertex Finder (SSVF) algorithm [35] is used for the reconstruction of the SV.
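
The sign convention can be illustrated with a short sketch restricted to the transverse plane. The angle between the jet direction and the line from the PV to the point of closest approach is below \(\pi /2\) exactly when their scalar product is positive. The function name and the coordinate inputs are hypothetical.

```python
import math


def signed_transverse_ip(pv_xy, poca_xy, jet_phi):
    """Signed transverse impact parameter of a track relative to a jet.

    pv_xy: (x, y) of the primary vertex.
    poca_xy: (x, y) of the track's point of closest approach to the PV.
    jet_phi: azimuthal angle of the jet axis in radians.
    The magnitude is the PV-to-POCA distance; the sign is positive if that
    line points into the jet hemisphere and negative otherwise.
    """
    dx = poca_xy[0] - pv_xy[0]
    dy = poca_xy[1] - pv_xy[1]
    magnitude = math.hypot(dx, dy)
    projection = dx * math.cos(jet_phi) + dy * math.sin(jet_phi)
    return magnitude if projection > 0 else -magnitude


# A track displaced along the jet direction gets a positive sign,
# one displaced against it gets a negative sign.
forward = signed_transverse_ip((0.0, 0.0), (0.1, 0.0), 0.0)
backward = signed_transverse_ip((0.0, 0.0), (-0.1, 0.0), 0.0)
```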

Electron candidates are reconstructed from energy clusters in the EM calorimeter matched to an ID track [36]. Candidates must satisfy \(p_{\text {T}} > 18\) GeV and \(|\eta | < 2.47\), excluding the barrel–endcap transition region of 1.37\(< |\eta | < \)1.52, and their associated tracks must fulfil \(|d_{0}|/\sigma _{d_{0}} < 5\) and \(|z_{0}|\sin \theta < 0.5\) mm, where \(\sigma _{d_{0}}\) is the uncertainty in \(d_{0}\). Electrons are identified using a likelihood-based discriminant that combines information about shower shapes in the EM calorimeter, track properties and the quality of the track-to-cluster matching [36]. Electrons must fulfil a ‘Tight’ identification selection. The gradient isolation criteria are applied to reject non-prompt electrons, using energy deposits in the ID and calorimeter within a cone around the electron. Their efficiency is 90% (99%) for electrons from \(Z\rightarrow ee\) decays at \(p_{\text {T}}=25~(60)\) GeV. Efficiency scale factors measured in data [36] are used to correct for differences in reconstruction, identification, isolation and trigger selection efficiencies between data and simulation.

Muon candidates are reconstructed by combining tracks in the ID with tracks in the muon spectrometer [37]. Only muons with \(|\eta | < 2.5\) and \(p_{\text {T}} > 18\) GeV are used. They have to fulfil the ‘Tight’ identification selection criteria [38] and satisfy \(|d_{0}| / \sigma _{d_{0}} < 3\) and \(|z_{0}|\sin \theta <0.5\) mm. The isolation requirement reduces the contamination from non-prompt muons by placing an upper bound on the amount of energy measured in the tracking detectors and the calorimeter (combined using the particle-flow algorithm [4]) within a cone of variable size (for \(p_{\text {T}}^\mu < 50\) GeV) or fixed size (for \(p_{\text {T}}^\mu > 50\) GeV) around the muon. Efficiency scale factors are used to correct for differences in muon reconstruction, identification, vertex association, isolation and trigger efficiencies between simulation and data [38].

Jets are reconstructed from particle-flow objects combining information from the tracker and calorimeter [4], using the anti-\(k_{t}\) algorithm [39, 40] with a radius parameter of \(R=0.4\). The jet energy is calibrated to the particle scale by using a sequence of corrections, including simulation-based corrections and in situ calibrations [41]. The jet-vertex tagging technique (JVT) [42], which uses a multivariate likelihood approach, is applied to jets with \(|\eta | < 2.4\) and \(p_{\text {T}} < 60\) GeV to suppress jets from pile-up activity. The ‘Tight’ selection criterion, corresponding to a JVT score > 0.5, is used. Scale factors are applied to match the JVT MC efficiencies to those in data. All selected jets must have \(p_{\text {T}} > 20\) GeV and \(|\eta |< 2.5\).

The b-tagging algorithms use tracks matched to jets as input. This matching uses the angular separation between the track momenta, defined at the point of closest approach to the PV, and the jet axis, \(\Delta R(\mathrm {track,jet~axis})\). The selection requirement on \(\Delta R(\mathrm {track,jet~axis})\) varies as a function of the jet \(p_{\text {T}}\) because the b-hadron decay products are more collimated at larger hadron \(p_{\text {T}}\) [2].

The jet-flavour labelling in simulation is based on an angular matching of reconstructed jets to generator-level b-hadrons, c-hadrons and \(\tau \)-leptons with \(p_{\text {T}} > 5\) GeV. If a b-hadron is found within a cone of size \(\Delta R = 0.3\) around the jet axis, the jet is labelled as a b-jet. If no matching to any b-hadron is possible, the matching procedure is repeated sequentially for c-hadrons and \(\tau \)-leptons, and the matched jets are called c-jets and \(\tau \)-jets, respectively. A jet is labelled as a light-flavour jet by default if no matching to any of these particles was successful.
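
The sequential labelling can be sketched as follows. This is a simplified illustration with hypothetical function names and input tuples; it assumes the same cone size and \(p_{\text {T}}\) threshold for b-hadrons, c-hadrons and \(\tau \)-leptons.

```python
import math


def delta_r(eta1, phi1, eta2, phi2):
    """Angular separation, with the azimuthal difference wrapped to [-pi, pi)."""
    dphi = (phi1 - phi2 + math.pi) % (2.0 * math.pi) - math.pi
    return math.hypot(eta1 - eta2, dphi)


def label_jet(jet_eta, jet_phi, particles, cone=0.3, min_pt_gev=5.0):
    """Label a simulated jet as 'b', 'c', 'tau' or 'light'.

    particles: list of (kind, pt_gev, eta, phi) generator-level objects,
    with kind in {'b', 'c', 'tau'}. The priority order is b, then c,
    then tau; a jet with no match is labelled 'light'.
    """
    matched = {
        kind
        for kind, pt, eta, phi in particles
        if pt > min_pt_gev and delta_r(jet_eta, jet_phi, eta, phi) < cone
    }
    for kind in ("b", "c", "tau"):
        if kind in matched:
            return kind
    return "light"


# Invented example: the b-hadron is too soft, so the nearby c-hadron decides.
label = label_jet(0.0, 3.1, [("b", 4.0, 0.0, 3.1), ("c", 10.0, 0.1, -3.1)])
```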

A series of requirements on the angular separation \(\Delta R\) between muons, electrons and jets are applied to remove overlaps between objects. If an electron candidate shares an ID track with a muon candidate, the electron candidate is rejected. Jets within a cone of \(\Delta R = 0.2\) around a lepton are rejected, unless the lepton is a muon and the jet has more than three associated tracks, in which case the muon is rejected. Finally, lepton candidates that are found to be \(0.2< \Delta R < 0.4\) from any remaining jet are discarded.

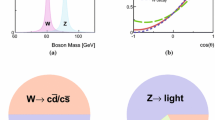

A jet sample enriched in light-flavour jets is needed for the calibration. A sample constructed using the leading jet in \(p_{\text {T}}\) in a Z+jets selection is expected to contain a 7%–8% fraction of c-jets and a 4%–6% fraction of b-jets, depending on the jet \(p_{\text {T}}\). The mistagging efficiency calibration is performed on the leading jet in a sample of Z+jets candidate events. The distinct signature of a Z boson decaying into either two electrons or two muons allows a clean sample of Z+jets events to be selected. Events are selected for further analysis using single-lepton triggers, where one of the leptons must be matched to an object that triggered the recording of the event. The event must contain exactly two prompt leptons with opposite charges and the same flavour (i.e. either exactly two electrons or exactly two muons). The leading lepton must have \(p_{\text {T}} > 28\) GeV and the invariant mass of the dilepton system, \(m_{\ell \ell }\), must satisfy \(81< m_{\ell \ell } < 101\) GeV. Only events with a reconstructed Z boson with \(p_{\textrm{T}}^{Z} > 50\) GeV are considered because the overall modelling is better in this range [43]. The event must also contain at least one jet with \(p_{\text {T}} > 20\) GeV and \(|\eta | < 2.5\).
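
The kinematic part of this selection can be sketched as below. The sketch assumes that trigger matching, lepton identification and isolation have already been applied upstream, treats the leptons as massless, and uses hypothetical function names and dictionary keys.

```python
import math


def massless_four_vector(pt, eta, phi):
    """Return (E, px, py, pz) in GeV for a lepton treated as massless."""
    return (pt * math.cosh(eta), pt * math.cos(phi),
            pt * math.sin(phi), pt * math.sinh(eta))


def passes_z_jets_selection(leptons, jets):
    """Apply the kinematic Z+jets requirements quoted in the text.

    leptons: list of dicts with keys 'flavour', 'charge', 'pt', 'eta', 'phi'.
    jets: list of dicts with keys 'pt' and 'eta'. Momenta are in GeV.
    """
    # Exactly two leptons of the same flavour and opposite charge.
    if len(leptons) != 2:
        return False
    first, second = leptons
    if first["flavour"] != second["flavour"]:
        return False
    if first["charge"] * second["charge"] >= 0:
        return False
    # Leading lepton pT above 28 GeV.
    if max(first["pt"], second["pt"]) <= 28.0:
        return False
    # Build the dilepton system and test its mass and transverse momentum.
    vectors = [massless_four_vector(l["pt"], l["eta"], l["phi"]) for l in leptons]
    energy, px, py, pz = (a + b for a, b in zip(*vectors))
    mass = math.sqrt(max(energy**2 - px**2 - py**2 - pz**2, 0.0))
    z_pt = math.hypot(px, py)
    if not 81.0 < mass < 101.0:
        return False
    if z_pt <= 50.0:
        return False
    # At least one jet with pT > 20 GeV and |eta| < 2.5.
    return any(j["pt"] > 20.0 and abs(j["eta"]) < 2.5 for j in jets)
```

For example, two oppositely charged electrons with \(p_{\text {T}}\) of 80 and 50 GeV at \(\eta = 0\), separated by 1.6 rad in azimuth, give a dilepton mass of about 91 GeV and pass the selection if accompanied by a central jet above 20 GeV.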

The event yields obtained after applying all selection requirements are listed in Table 2, while comparisons between the predictions by the simulation and the data are shown in Fig. 2 for selected variables. The predictions of the MC simulation are normalised to data for these comparisons. The shapes of the distributions predicted by the simulation are consistent with those observed within statistical uncertainties for most of the considered kinematic range. The discrepancy observed in the SV mass (\(m_{\textrm{SV}}\)) distribution is expected because it is affected by a known mismodelling of the jet-flavour fractions [44, 45]; this is mitigated by the fit described in Sect. 6. The \(m_{\textrm{SV}}\) observable is calculated using the SSVF algorithm. Negative values of \(m_{\textrm{SV}}\) indicate that no SV was found in the jet. The mismodelling in jet \(p_{\text {T}}\) does not affect the calibration.

Fig. 2 Comparisons between data and simulation for the most relevant kinematic variables after event selection. The MC expectations are normalised to data. The shaded area corresponds to the statistical uncertainties of the MC simulations. The Z+jets events are simulated using Sherpa 2.2.1. Negative values of \(m_{\textrm{SV}}\) indicate that no SV was found in the jet.

5 The DL1r b-tagging algorithm

Properties of b-hadrons, such as their relatively high mass, long lifetime and large multiplicity of reconstructed tracks from their decay products, are exploited to distinguish b-jets from c-jets and light-flavour jets. Individual low-level taggers [46, 47] are designed to target the specific properties of b-jets. The IP2D, IP3D and RNNIP algorithms exploit the individual properties of the tracks from charged particles matched to the jet, especially the track \(d_{0}\) and \(z_{0}\). RNNIP is based on a recurrent neural network and it explores the correlations between the IPs of different selected tracks in the jet. The SV1 algorithm exploits the output of the SSVF algorithm and JetFitter [48] reconstructs secondary and tertiary vertices, following the expected topology of b-hadron decays. The DL1r algorithm is a deep neural network that takes the output of the low-level taggers and jet kinematics as input and builds a single discriminant [49, 50].

ATLAS analyses use selection requirements defining a lower bound on the DL1r discriminant to select b-jets with a certain efficiency. Four of these so-called single-cut operating points (OPs) are defined, corresponding to b-jet selection efficiencies of 85%, 77%, 70% and 60%. The OPs are evaluated in a sample of b-jets from simulated \(t\bar{t}\) events. These selection requirements divide the DL1r score into five intervals to form the pseudo-continuous OPs, where the lower edge of the lowest interval corresponds to 100% DL1r b-tagging efficiency, and the upper edge of the highest interval corresponds to 0% efficiency.
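
The mapping from a discriminant value to a pseudo-continuous interval can be sketched with a sorted list of thresholds. The threshold values below are invented placeholders, not the actual DL1r operating-point thresholds, and the treatment of a score exactly on a threshold (counted as passing) is an assumption of this sketch.

```python
from bisect import bisect_right

# HYPOTHETICAL thresholds for the 85%, 77%, 70% and 60% operating points,
# in increasing order of discriminant value. Not the real DL1r values.
OP_THRESHOLDS = [0.5, 2.0, 3.0, 4.5]
OP_INTERVALS = ["100-85%", "85-77%", "77-70%", "70-60%", "60-0%"]


def pseudo_continuous_interval(score, thresholds=OP_THRESHOLDS):
    """Return the efficiency interval that contains the given score.

    Four thresholds split the discriminant axis into five intervals.
    A score equal to a threshold is assigned to the higher interval.
    """
    return OP_INTERVALS[bisect_right(thresholds, score)]


def passes_single_cut(score, op_index, thresholds=OP_THRESHOLDS):
    """Single-cut operating point: lower bound on the discriminant."""
    return score >= thresholds[op_index]
```

A jet in the "77-70%" interval therefore passes the 85% and 77% single-cut operating points but fails the 70% and 60% ones.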

The single-cut and pseudo-continuous OPs are calibrated in order to correct for efficiency differences between data and simulation. The calibration SF, defined in Eq. (1), is measured relative to a reference efficiency \(\varepsilon ^{f}_{\textrm{MC}}\). The performance of the b-tagging algorithm in simulation is affected by the hadronisation and fragmentation model used in the parton shower simulation [43]. To account for their differences, simulation-to-simulation SFs are applied to those simulated samples that have a fragmentation model different from the default. The calculation of these simulation-to-simulation correction factors is described in Ref. [51]. The top-quark pair production sample produced with Powheg Box v2 + Pythia 8.230 is used to define the reference MC efficiencies in the b- and c-jet calibrations. As no equivalent Z+jets simulation produced with the Powheg Box v2 generator is available, the Z+jets MadGraph + Pythia 8 simulation is used for the definition of the reference efficiency in the calculation of SF\(^{\text {light}}\) for light-flavour jets. Since the Z+jets MadGraph + Pythia 8 simulation uses the same parton shower model and set of tuned parameters as the top-quark pair production simulation, the light-flavour jet results and the b- and c-jet calibrations are obtained relative to similar reference models.

6 The negative tag method

The DL1r algorithm rejects 97.52% and 99.96% of the light-flavour jets, respectively, at the 85% and 60% OPs, according to simulated \(t\bar{t}\) events. Therefore, the fraction of light-flavour jets passing the threshold is too low to estimate \(\varepsilon ^{\text {light}}\) in data. The Negative Tag method [5, 6] calibrates \(\varepsilon ^{\text {light}}_{\text {data}}\) using a modified version of the DL1r algorithm (called DL1rFlip) that achieves lower \(\varepsilon ^{b}\) to \(\varepsilon ^{\text {light}}\) ratios without changing \(\varepsilon ^{\text {light}}\) significantly.
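
To make the scale of the problem explicit, the quoted rejection fractions can be converted into mistagging efficiencies predicted by the simulated \(t\bar{t}\) events:

\[\varepsilon ^{\text {light}}_{85\%} = 1 - 0.9752 = 0.0248, \qquad \varepsilon ^{\text {light}}_{60\%} = 1 - 0.9996 = 0.0004.\]

In other words, roughly one light-flavour jet in 40 passes the 85% OP and one in 2500 passes the 60% OP, since \(1/0.0248 \approx 40\) and \(1/0.0004 = 2500\).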

Tracks matched to b-jets have relatively large and positively signed IPs due to the long lifetime of the b-hadrons and the presence of displaced decay vertices. In contrast, tracks matched to light-flavour jets typically have IP values consistent with zero within the IP resolution such that a more symmetric\(^{2}\) IP distribution is expected. The expected IP distributions of the tracks associated with b-jets, c-jets or light-flavour jets are shown in Fig. 3. The Negative Tag method assumes that the probability for a light-flavour jet to be mistagged remains almost the same when inverting the IP signs of all tracks and displaced vertices. This is based on the assumption that light-flavour jets are misidentified as b-jets mainly due to resolution effects in the track reconstruction which result in tracks and vertices from tracks with positive IPs inside the jet. Given the symmetric IP distributions, the fractions of tracks and vertices from tracks with positive IPs remain stable after inverting the IP signs of all tracks and vertices. The presence of the positive tail in the IP distribution challenges this assumption and its impact is taken into account by a dedicated uncertainty, which is called the DL1rFlip-to-DL1r extrapolation uncertainty in the following.

Fig. 3 Signed-\(d_{0}\) (left) and signed-\(z_{0}\) (right) distributions for tracks matched to b-jets, c-jets and light-flavour jets in simulated \(t\bar{t}\) events. The selected tracks are matched to particle-flow jets with \(p_{\text {T}}\,> 20\) GeV and \(|\eta |< 2.5\), and pass the jet-vertex tagger selection. The distributions are normalised to unity. Statistical uncertainties are also shown.

The DL1rFlip algorithm inverts the signs of the track IPs and the decay length,\(^{3}\) while using the same algorithm training and OP-threshold definitions as the DL1r algorithm. The \(\varepsilon ^{\text {light}}\) values obtained by the two algorithms are approximately the same, while the DL1rFlip algorithm selects a smaller fraction of b-jets than the nominal version. The discriminants of the DL1r and DL1rFlip algorithms are compared between data and simulation in Fig. 4. These comparisons show that the heavy-flavour fractions are much lower for high DL1rFlip values than for high DL1r values. At the 85% (60%) single-cut OP, the DL1r algorithm selects a sample of jets in which 25% (1%) are light-flavour jets, while the DL1rFlip algorithm selects a sample in which a higher fraction, 38% (6%), are light-flavour jets at the same OP.

Fig. 4 Comparisons between data and simulation for the distributions of the DL1r discriminant (left) and DL1rFlip discriminant (right). Statistical uncertainties of the yields are shown.

The calibration is performed independently in jet \({p_{\text {T}}}\) intervals in order to account for the \({p_{\text {T}}}\) dependence of \(\varepsilon ^{\text {light}}\). A simultaneous binned fit to the \(m_{\textrm{SV}}\) distribution in each pseudo-continuous interval of the DL1rFlip discriminant is performed in order to simultaneously determine \(\varepsilon ^{b}_{\text {data}}\) and \(\varepsilon ^{\text {light}}_{\text {data}}\) in the DL1rFlip discriminant intervals. The sensitivity of the fit does not allow the SFs of all three jet flavours to be derived simultaneously. Therefore, \(\varepsilon ^{c}_{\text {data}}\) is constrained to the MC predictions and SF\(^{c}\) is fixed to unity within an uncertainty of 30%, as suggested by studies of the c-jet mistagging efficiency calibration [3].

For a given interval of jet \({p_{\text {T}}}\), the expected number of jets for a defined discriminant interval i is given by

\[N_{i}(m_{\text {SV}}) = C \cdot N_{i,\text {MC}} \cdot \sum _{f} F^{f} \cdot \varepsilon ^{f}_{i,\text {MC}} \cdot \text {SF}^{f}_{i} \cdot P_{i}^{f}\!(m_{\text {SV}}),\]

where \(N_{i,\text {MC}}\) is the predicted flavour-inclusive event yield for each discriminant interval; C is a global normalisation factor and \(F^{f}\) are the jet-flavour fractions; \(P_{i}^{f}\!(m_{\text {SV}})\) is the probability density function of \(m_{\text {SV}}\) for jet flavour f in the i-th DL1rFlip discriminant interval, taken from simulation. The \(P_{i}^{f}\!(m_{\text {SV}})\) is defined so that it includes an additional bin (\(m_{\text {SV}}<0\) GeV) containing the events in which no secondary vertex is found. The \(m_{\text {SV}}\) is computed by the SSVF algorithm using tracks with their nominal IP signs as input.

The C, \(F^{f}\) and \(\text {SF}^{b,\text {light}}_{i}\) parameters are allowed to float in the fit, while \(N_{i,\text {MC}}\) and \(\varepsilon ^{f}_{i,\text {MC}}\) are fixed to the predictions from simulated events and \(\text {SF}^{c}_{i}\) is set to \(1.0\pm 0.3\). The constraints \(\sum _{i=1}^{5} \varepsilon _{i, \text {MC}}^{f} \times \text {SF}_{i}^{f} = 1\), where the contributions to the first bin (corresponding to the 100%−85% OP interval) are allowed to vary such that unitarity is preserved, and \(F^{\text {light}} = 1 - F^{b} - F^{c}\) are applied, reducing the number of free parameters to 11. Figure 5 shows the post-fit \(m_{\textrm{SV}}\) and DL1rFlip discriminant distributions for the 50–100 GeV \({p_{\text {T}}}\) interval. The parameters of interest, i.e. the \(\text {SF}^{\text {light}}_{i}\), are obtained from the DL1rFlip algorithm. In order to apply this calibration to the DL1r algorithm, an extrapolation uncertainty is added to account for the differences between these two tagging algorithms. Details of this DL1rFlip-to-DL1r extrapolation uncertainty are presented in Sect. 7.
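
The unitarity constraint can be illustrated with a short sketch in which the scale factor of the first interval (100%−85%) is not free but follows from the other four. The function name and the numerical values are hypothetical and chosen only for illustration.

```python
def first_interval_scale_factor(mc_efficiencies, tagged_scale_factors):
    """Solve sum_i eps_i * SF_i = 1 for the scale factor of the first interval.

    mc_efficiencies: five simulated efficiencies for one jet flavour, one per
    pseudo-continuous interval, ordered from the 100-85% interval upwards.
    tagged_scale_factors: the four scale factors of intervals 2 to 5.
    """
    eps_first, *eps_tagged = mc_efficiencies
    if len(eps_tagged) != len(tagged_scale_factors):
        raise ValueError("expected one scale factor per tagged interval")
    tagged_sum = sum(e * s for e, s in zip(eps_tagged, tagged_scale_factors))
    return (1.0 - tagged_sum) / eps_first


# Invented light-flavour efficiencies per interval (they sum to one)
# and invented scale factors for the four tagged intervals.
eps_mc = [0.90, 0.05, 0.03, 0.015, 0.005]
sf_tagged = [1.2, 1.1, 1.0, 0.9]
sf_first = first_interval_scale_factor(eps_mc, sf_tagged)
```

Because the untagged interval holds most light-flavour jets, its scale factor stays close to unity even when the tagged intervals shift appreciably.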

Fig. 5 Post-fit distribution of the DL1rFlip discriminant (left) and the \(m_{\textrm{SV}}\) distribution in the 77–70% OP interval (right). Both distributions are shown for the 50–100 GeV \(p_{\text {T}}\) interval. The shaded area corresponds to the total statistical uncertainty. The binning in \(m_{\textrm{SV}}\) allows good separation between the jet flavours and also uses the minimum number of bins necessary to constrain the free parameters of the fit. Choosing the coarsest binning possible allows the statistical uncertainties to be minimised.

7 Systematic uncertainties

The measurement of SF\(^{\text {light}}\) is affected by four types of uncertainties, including those due to experimental effects, the modelling of the Z+jets and background processes, and the limited number of events in data and simulation. For each source of uncertainty, one parameter of the fit model is varied at a time, and the effect of this variation on SF\(^{\text {light}}\) is evaluated. This approach is chosen to prevent the fit from using the data to constrain the impact of individual systematic uncertainties. The probability density functions in \(m_{\textrm{SV}}\) are derived from the Sherpa 2.2.1 Z+jets simulation sample. The probability density function for each flavour component is separately normalised to unity, so only the shape of the \(m_{\textrm{SV}}\) distributions is estimated using the Sherpa 2.2.1 Z+jets simulation. The simulated efficiencies from the MadGraph Z+jets simulation listed in Table 1 are used in the likelihood definition to predict the expected number of events for each flavour category. Given that the flavour fractions and most SFs are extracted from the data, the systematic uncertainty calculation considers mainly effects on the template shapes. An additional uncertainty accounts for differences between SF\(^{\text {light}}\) values from the DL1rFlip and DL1r algorithms to ensure the applicability of this calibration to the DL1r algorithm.
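
The one-at-a-time variation procedure can be sketched generically as below. Here `fit` stands for any callable that maps a dictionary of fit-model inputs to a scale factor; it is a hypothetical placeholder for the actual template fit, and the input names are invented.

```python
def one_at_a_time_shifts(fit, nominal_inputs, variations):
    """Evaluate the shift in the fitted scale factor for each variation.

    fit: callable taking a dict of model inputs and returning a scale factor.
    nominal_inputs: dict with the nominal model inputs.
    variations: dict mapping a systematic name to the inputs it overrides.
    Each variation is applied on top of the nominal inputs on its own, so the
    data cannot constrain several sources simultaneously.
    """
    nominal_value = fit(nominal_inputs)
    shifts = {}
    for name, overrides in variations.items():
        varied_inputs = {**nominal_inputs, **overrides}
        shifts[name] = fit(varied_inputs) - nominal_value
    return shifts


# Toy stand-in for the fit, used only to exercise the bookkeeping.
def toy_fit(inputs):
    return inputs["template_scale"] * inputs["flavour_yield"]


shifts = one_at_a_time_shifts(
    toy_fit,
    {"template_scale": 1.0, "flavour_yield": 2.0},
    {"scale_up": {"template_scale": 1.5}, "yield_down": {"flavour_yield": 1.0}},
)
```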

Uncertainties in the modelling of the detector response have a negligible impact on the calibration results. The impact of the jet energy scale uncertainties was estimated by globally shifting the jet energy scale (JES) by 5%, in accord with the most conservative estimate of the JES uncertainty in \({p_{\text {T}}}\) derived in Ref. [41]. This conservative assumption about the magnitude of the jet energy scale uncertainties leads to an estimated impact of less than 1% on the calibration results, owing to the low \({p_{\text {T}}}\) dependence of the scale factors. The impact of \(d_{0}\) and \(z_{0}\) IP resolution modelling uncertainties on the \(m_{\textrm{SV}}\) templates was estimated by applying data-driven corrections to the simulated \(d_{0}\) and \(z_{0}\) in a sample of simulated top-quark pairs with a method similar to the one in Ref. [7]. The impact of these corrections is transferred to the fit templates and is symmetrised around the central value. This approach was chosen in order to mitigate the effect of statistical fluctuations in the available Z+jets samples. Other experimental uncertainties were found to be negligible because they are not correlated with the discriminant used in the fit.

Simulations of Z+jets via Sherpa 2.2.1 are used as the nominal model to derive the fit templates for b-jets, c-jets and light-flavour jets in the \(m_{\textrm{SV}}\) distribution. In order to assess the impact of the resulting choice of parton shower model, shower matching scheme and order of the perturbative QCD calculations on the template shapes, SFs\(^{\text {light}}\) are derived using an alternative model, provided by the Z+jets MadGraph + Pythia 8 simulation. The difference between the obtained SFs\(^{\text {light}}\) is taken as the estimate of the MC modelling uncertainty. The effect of QCD scale uncertainties is estimated by independently doubling or halving the renormalisation and factorisation scales. The impact of choosing a particular PDF set is estimated by propagating its uncertainties. However, the impacts of the QCD scale and PDF set uncertainties are found to be covered by the statistical uncertainties of the MC simulation and these uncertainties are therefore not included.

The effect of the 30% uncertainty in SF\(^{c}\), which was fixed to a value of 1.0 in the fit, is estimated by repeating the fit with SF\(^{c}\) set to 1.3 and 0.7 instead. The mean impact of the two variations on SF\(^{\text {light}}\) is taken as the impact of the charm calibration uncertainty on the light-flavour jet calibration.
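The charm variation described above can be sketched in a few lines of Python. The sketch assumes that the "mean impact" is the average of the absolute shifts in SF\(^{\text {light}}\) for the two SF\(^{c}\) variations; the function name and all numerical values are hypothetical.

```python
def charm_calibration_uncertainty(sf_nominal, sf_charm_up, sf_charm_down):
    """Mean absolute shift of SF^light for the SF^c = 1.3 and SF^c = 0.7 fits."""
    shift_up = abs(sf_charm_up - sf_nominal)
    shift_down = abs(sf_charm_down - sf_nominal)
    return 0.5 * (shift_up + shift_down)


# Hypothetical fit results (not from the paper): SF^light with SF^c = 1.0,
# 1.3 and 0.7 respectively.
sf_nominal = 1.10
sf_charm_up = 1.04
sf_charm_down = 1.17

abs_unc = charm_calibration_uncertainty(sf_nominal, sf_charm_up, sf_charm_down)
rel_unc = abs_unc / sf_nominal
```

Averaging the two shifts gives a single symmetric uncertainty even when the responses to the up and down variations differ.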

Fig. 6 Comparison of the light-flavour jet efficiency scale factors for the DL1r and DL1rFlip taggers obtained with the MC-based calibration on a Powheg Box v2 + Pythia 8.230 \(t{\bar{t}}\) MC simulation, for the 85% and 70% operating points, as a function of the jet \({p_{\text {T}}}\). The error bars correspond to MC statistical uncertainties. The lower panel shows the ratio of the DL1rFlip to DL1r MC-based calibration scale factors. The envelope of this ratio across the considered jet \({p_{\text {T}}}\) interval is used to estimate the impact of tracking-variable mismodelling on the difference between the DL1rFlip and DL1r calibrations. This estimate is one part of the DL1rFlip-to-DL1r extrapolation uncertainty, which covers the effect of applying the calibrations derived for the DL1rFlip algorithm to the DL1r algorithm.

Fig. 7 SFs to calibrate the \(\varepsilon ^{\text {light}}\) of the pseudo-continuous OPs. The size of the uncertainty bands in the direction of the jet \({p_{\text {T}}}\) axis is arbitrary and corresponds to the choice of the calibration intervals in \({p_{\text {T}}}\).

Fig. 8 SFs to calibrate the \(\varepsilon ^{\text {light}}\) of the single-cut OPs. The size of the uncertainty bands in the direction of the jet \(p_{\text {T}}\) axis is arbitrary and corresponds to the choice of the calibration intervals in \(p_{\text {T}}\).

Fig. 9 DL1r mistagging efficiency calibration uncertainties. The breakdown of the different uncertainties is shown for the different OPs.

The \(\varepsilon ^{\text {light}}\) value is assumed to be the same for DL1rFlip and DL1r to first order, as described in Sect. 6. However, mismodelling of the IP resolution, the fake-track rate or the parton shower can have different effects on the tagging performance and SF\(^{\text {light}}\) values of the DL1r and DL1rFlip algorithms. A DL1rFlip-to-DL1r extrapolation uncertainty is added to account for residual differences in SF\(^{\text {light}}\) between the two algorithms. SF\(^{\text {light}}\) cannot be measured in data for the DL1r algorithm. Therefore, a method similar to the one in Ref. [7] is used to derive SF\(^{\text {light}}\) for DL1rFlip and DL1r from MC simulation in order to compare the two calibrations. The DL1rFlip-to-DL1r extrapolation uncertainty consists of two components. One component estimates the impact of the modelling of tracking variables on the DL1rFlip and DL1r tagging performance, evaluated in a bottom-up approach correcting the simulation, and the second component estimates the impact of the shower and hadronisation model on the tagging performance difference between DL1rFlip and DL1r. The impact of the modelling of tracking variables is evaluated by applying data-driven corrections to underlying tracking variables affecting the b-tagging performance in a sample of simulated \(t{\bar{t}}\) events generated with Powheg Box v2 + Pythia 8.230. The impact on \(\varepsilon ^{\text {light}}\) of correcting these observables is evaluated with respect to the uncorrected simulation. Only corrections which have been shown to have a significant impact are considered [7]. These include the IP resolution and fake-track rate modelling [53]. The total impact on the mistagging efficiency is obtained by multiplying the effects of all corrections. 
The total impact on \(\varepsilon ^{\text {light}}_{\text {MC}}\) is compared between DL1r and DL1rFlip and the relative difference is assigned as the first component of the DL1rFlip-to-DL1r extrapolation uncertainty. A comparison of these simulation-based calibration factors is shown in Fig. 6. The impact of the parton shower modelling on the difference between SF\(^{\text {light}}\) in DL1r and DL1rFlip is estimated by multiplying the SFs\(^{\text {light}}\) for DL1r (DL1rFlip), derived using the corrected Powheg Box v2 + Pythia 8.230 simulation, by the simulation-to-simulation SFs defined as the ratio of the DL1r (DL1rFlip) \(\varepsilon ^{\text {light}}\) for the Sherpa 2.2.1 and the MadGraph + Pythia 8 simulations. The simulation-to-simulation SFs have been derived using the Z+jets samples listed in Table 1. This gives an estimate of the DL1rFlip-to-DL1r extrapolation uncertainty for an alternative shower model. The difference between this estimate and the estimate obtained using the corrected Powheg Box v2 + Pythia 8.230 simulation is used as the component assessing the impact of the shower and hadronisation model on the tagging performance difference between DL1rFlip and DL1r. The two components are added in quadrature to obtain the DL1rFlip-to-DL1r extrapolation uncertainty. The envelope over \({p_{\text {T}}}\) of the DL1rFlip-to-DL1r extrapolation uncertainty is used for the final uncertainty estimate. The shower modelling makes the largest contribution to the DL1rFlip-to-DL1r extrapolation uncertainty and ranges up to 10%. The largest uncertainty contribution in correcting the MC simulation is obtained from the track IP resolution modelling corrections, ranging up to 10% as well. The total DL1rFlip-to-DL1r extrapolation uncertainty is 10%–12% depending on the b-tagging OP and is the dominant systematic uncertainty overall.
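The combination described above, a quadrature sum of the two components followed by an envelope over \({p_{\text {T}}}\), can be sketched as follows. This is a minimal illustration that interprets the envelope as the largest per-interval value; the function name and the input numbers are hypothetical relative uncertainties, not results from the analysis.

```python
import math


def extrapolation_uncertainty(tracking, shower):
    """Add the two components in quadrature per pT interval and return
    (envelope over pT, list of per-interval values)."""
    if len(tracking) != len(shower):
        raise ValueError("components must cover the same pT intervals")
    per_interval = [math.hypot(t, s) for t, s in zip(tracking, shower)]
    return max(per_interval), per_interval


# Hypothetical relative uncertainties in four jet pT intervals.
tracking = [0.03, 0.04, 0.05, 0.06]   # tracking-variable modelling component
shower = [0.08, 0.09, 0.10, 0.07]     # shower and hadronisation component

envelope, per_interval = extrapolation_uncertainty(tracking, shower)
```

Taking the envelope yields a single \({p_{\text {T}}}\)-independent value per OP, which is conservative for the intervals where the combined uncertainty is smaller.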

Statistical uncertainties of the data and of the \(m_{\textrm{SV}}\) templates derived from simulated events are taken into account. The statistical uncertainties of the MC-based fit templates are implemented following the light-weight Beeston–Barlow method [54]. As their effect is non-negligible, cross-check studies were performed using a ‘toy’ approach. The fit template bin entries were fluctuated randomly around the nominal value according to a Gaussian probability distribution with a standard deviation equal to the MC statistical uncertainty in the template bins. SF\(^{\text {light}}\) was extracted for each of these new fit templates and the standard deviation of the resulting SF\(^{\text {light}}\) distribution was taken as the statistical uncertainty. The statistical uncertainty estimates from the Beeston–Barlow method and the toy approach were found to be consistent.
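The toy approach can be illustrated with a deliberately simplified Python sketch. It replaces the binned likelihood fit of the analysis with a one-parameter least-squares fit of a light-flavour template on top of a fixed heavy-flavour contribution, and it fluctuates only the light-flavour template. All function names, bin contents and uncertainties are hypothetical.

```python
import random
import statistics


def fit_light_sf(light, heavy, data):
    """One-parameter least-squares fit of data ~ sf * light + heavy."""
    num = sum(l * (d - h) for l, h, d in zip(light, heavy, data))
    den = sum(l * l for l in light)
    return num / den


def toy_sf_spread(light, light_err, heavy, data, n_toys=1000, seed=7):
    """Standard deviation of the fitted SF over Gaussian-fluctuated templates."""
    rng = random.Random(seed)
    fitted = []
    for _ in range(n_toys):
        # Fluctuate each template bin around its nominal value.
        toy = [rng.gauss(l, e) for l, e in zip(light, light_err)]
        fitted.append(fit_light_sf(toy, heavy, data))
    return statistics.stdev(fitted)


# Hypothetical m_SV template contents (events per bin) and MC statistical errors.
light = [700.0, 200.0, 80.0, 20.0]
light_err = [14.0, 8.0, 5.0, 3.0]
heavy = [150.0, 180.0, 120.0, 60.0]
# Pseudo-data built with a scale factor of 1.1 for the light-flavour template.
data = [1.1 * l + h for l, h in zip(light, heavy)]

sf_nominal = fit_light_sf(light, heavy, data)
sf_spread = toy_sf_spread(light, light_err, heavy, data)
```

The spread of the fitted values over the toys plays the role of the MC statistical uncertainty; a fixed seed keeps the sketch reproducible.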

8 Results

The calibration SFs\(^{\text {light}}\) of the DL1r algorithm are presented. The 85%, 77% and 70% OPs were successfully calibrated in data by using the Negative Tag method. However, it is not feasible to calibrate the 60% OP in data because of insufficient statistics and the relatively large contamination by heavy-flavour jets.

Due to these limitations and since the measured SFs for the closest 77–70% and 70–60% pseudo-continuous OPs do not show any significant deviation from unity compared to their respective measurement uncertainties, the assumption is made that the same must hold for the 60% OP. The SF for this OP is thus set to 1. Its dominant uncertainty, the DL1rFlip-to-DL1r extrapolation uncertainty, is derived specifically for the 60% OP, and found to be slightly larger than for the 70% OP. The other (sub-leading) uncertainties are assumed to be identical to the 70% OP, since their evaluation suffers from large uncertainties. It should also be noted that extracting very precise SFs\(^\text {light}\) for the 60–0% pseudo-continuous OP interval is not critical to most physics analyses as only around 0.5 per mille of all light-flavour jets are b-tagged at this OP [49].

The results for the mistagging efficiency calibration of light-flavour jets from the DL1r tagger, using particle-flow jets in Run 2 data, are shown in Fig. 7 for the pseudo-continuous OPs of the DL1r algorithm. The calibration of the DL1r single-cut OPs, which is derived from the calibration results of the pseudo-continuous OPs, is shown in Fig. 8. Furthermore, Fig. 9 shows a breakdown of the uncertainties in the SFs\(^{\text {light}}\) for each jet \(p_{\text {T}}\) interval and single-cut OP.

The measured SFs\(^{\text {light}}\) are consistent with unity within uncertainties, except for the 85–77% pseudo-continuous OP and the 85% single-cut OP, where the data prefer SFs\(^{\text {light}}\) which differ from one by about one standard deviation. The results therefore indicate that the MC simulation predicts \(\varepsilon ^{\text {light}}\) to be similar to that in data.

Mistags of light-flavour jets are mostly caused by the presence of fake tracks and the limited impact parameter resolution [7]. The MC simulation underestimates the fake-track rate and overestimates the quality of the impact parameter resolution, i.e. the simulated resolution is better than that in data [7, 53]. An increase in the fake-track rate and a degradation of the impact parameter resolution both give rise to larger mistagging efficiencies [7, 55]. Observed SFs slightly larger than unity are therefore expected.

Tables 3, 4, 5 and 6 feature a detailed breakdown of the uncertainties in the results. Overall, the largest systematic uncertainty is the DL1rFlip-to-DL1r extrapolation uncertainty, with values of 10–12%, depending on the b-tagging OP. The impact of the charm calibration uncertainty amounts to 5–10% depending on the jet \({p_{\text {T}}}\) and the b-tagging OP, and is therefore one of the larger uncertainties. The MC modelling uncertainty is a few percent in most cases, but reaches its maximum of 11% for the 70% single-cut OP in the \(20 \le \text {jet}~{p_{\text {T}}} \le 50\) GeV interval.

The effect of the \(d_{0}\) and \(z_{0}\) impact parameter resolution modelling uncertainty is generally of the order of a few percent and therefore mostly subdominant. However, the effect can range up to 14% for low jet \(p_{\text {T}}\) and tight OPs. The data statistical uncertainties give subdominant contributions except for high jet \({p_{\text {T}}}\) and tight OPs, where contributions range from 0.5 to 9.5%. The MC statistical uncertainty is one of the dominant contributions, ranging from 0.4 to 13%, depending on the OP and the jet \({p_{\text {T}}}\). The total uncertainty in SF\(^{\text {light}}\) is between 11 and 23%. In general, higher precision is obtained for the looser OPs and the size of the dominant uncertainties does not depend significantly on the jet \({p_{\text {T}}}\).

In the previous calibration with the Negative Tag method using dijet events in 2015–2016 data, the total uncertainty in SF\(^{\text {light}}\) ranged from 14% to 76% for jets with \({p_{\text {T}}}\) between 20 and 300 GeV. Dominant uncertainties arose from the modelling of the track impact parameter resolution and the uncertainty in the fraction of heavy-flavour jets which was estimated using simulation. The reduced uncertainty in the present calibration stems from both improved modelling of the track impact parameters in simulation and the \(m_{\text {SV}}\) fit, which allows the extraction of SF\(^{\text {light}}\) while simultaneously correcting \(\varepsilon ^{b}\) and the jet-flavour fractions using data. Previously, \(\varepsilon ^{b}\) and the heavy-flavour fractions were estimated from simulation, resulting in large modelling uncertainties, which were a limiting factor in the precision of the result.

9 Conclusions

The light-flavour jet mistagging efficiency \(\varepsilon ^{\text {light}}\) of the DL1r b-tagging algorithm has been measured with a 139 \({\hbox {fb}^{-1}}\) sample of \(\sqrt{s}=13~\hbox {TeV}\) pp collision events recorded during 2015–2018 by the ATLAS detector at the LHC. The measurement is based on an improved method applied to a sample of Z+jets events. The Negative Tag method, based on the application of an alternative b-tagging algorithm, designed to facilitate the measurement of the light-flavour jet mistagging efficiency, is used. Data-to-simulation scale factors for correcting \(\varepsilon ^{\text {light}}\) in simulation are measured in four different jet transverse momentum intervals, ranging from 20 to 300 GeV, for four separate quantiles of the b-tagging discriminant. The scale factors typically exceed unity by around 10–20%, with total uncertainties ranging from 11 to 23%, and do not exhibit any strong dependence on jet transverse momentum. These calibration uncertainties are considerably lower than the previous 14% to 76% uncertainties from the Negative Tag method using 2015–2016 data.

Data Availability Statement

This manuscript has no associated data or the data will not be deposited. [Authors’ comment: “All ATLAS scientific output is published in journals, and preliminary results are made available in Conference Notes. All are openly available, without restriction on use by external parties beyond copyright law and the standard conditions agreed by CERN. Data associated with journal publications are also made available: tables and data from plots (e.g. cross section values, likelihood profiles, selection efficiencies, cross section limits, ...) are stored in appropriate repositories such as HEPDATA (http://hepdata.cedar.ac.uk/). ATLAS also strives to make additional material related to the paper available that allows a reinterpretation of the data in the context of new theoretical models. For example, an extended encapsulation of the analysis is often provided for measurements in the framework of RIVET (http://rivet.hepforge.org/).” This information is taken from the ATLAS Data Access Policy, which is a public document that can be downloaded from http://opendata.cern.ch/record/413.]

Notes

ATLAS uses a right-handed coordinate system with its origin at the nominal interaction point in the centre of the detector and the \(z\)-axis along the beam pipe. The \(x\)-axis points from the interaction point to the centre of the LHC ring, and the \(y\)-axis points upwards. Cylindrical coordinates \((r,\phi )\) are used in the transverse plane, \(\phi \) being the azimuthal angle around the \(z\)-axis. The pseudorapidity is defined in terms of the polar angle \(\theta \) as \(\eta = -\ln \tan (\theta /2)\). The angular distance is measured in units of \(\Delta R \equiv \sqrt{(\Delta \eta )^{2} + (\Delta \phi )^{2}}\).

The tracks matched to light-flavour jets have a slight bias towards positive-sign values due to the presence of some long-lived particles (e.g. \(K_\text {S}\) or \(\Lambda \)). The contribution from the mismodelling of long-lived particles is expected to be negligible relative to the mismodelling of the \(d_0\) and \(z_0\) resolutions [7].

In this paper, vertices are assigned a negative decay length if they have an angle between the jet direction and the line joining the primary vertex to the secondary vertex of more than \(\pi /2\) (see Sect. 14.2 of Ref. [52] for more details).

References

ATLAS Collaboration, The ATLAS Experiment at the CERN Large Hadron Collider. JINST 3, S08003 (2008)

ATLAS Collaboration, ATLAS \(b\)-jet identification performance and efficiency measurement with \(t{\bar{t}}\) events in \(pp\) collisions at \(\sqrt{s}=13\) TeV. Eur. Phys. J. C 79, 970 (2019). arXiv:1907.05120 [hep-ex]

ATLAS Collaboration, Measurement of the \(c\)-jet mistagging efficiency in \(t{\bar{t}}\) events using \(pp\) collision data at \(\sqrt{s}=13\) TeV collected with the ATLAS detector. Eur. Phys. J. C 82, 95 (2022). arXiv:2109.10627 [hep-ex]

ATLAS Collaboration, Jet reconstruction and performance using particle flow with the ATLAS Detector. Eur. Phys. J. C 77, 466 (2017). arXiv:1703.10485 [hep-ex]

ATLAS Collaboration, Calibration of the performance of \(b\)-tagging for \(c\) and light-flavour jets in the 2012 ATLAS data, ATLAS-CONF-2014-046 (2014). https://cds.cern.ch/record/1741020

D0 Collaboration, Measurement of the \(t{\bar{t}}\) production cross section in \(p{\bar{p}}\) collisions at \(\sqrt{s}=1.96\) TeV using secondary vertex \(b\) tagging. Phys. Rev. D 74, 112004 (2006). arXiv:hep-ex/0611002

ATLAS Collaboration, Calibration of light-flavour \(b\)-jet mistagging rates using ATLAS proton–proton collision data at \(\sqrt{s}=13\) TeV, ATLAS-CONF-2018-006 (2018). https://cds.cern.ch/record/2314418

ATLAS Collaboration, ATLAS Insertable B-Layer: Technical Design Report, ATLAS-TDR-19; CERN-LHCC-2010-013 (2010). https://cds.cern.ch/record/1291633 (Addendum: ATLAS-TDR-19-ADD-1; CERN-LHCC-2012-009, 2012, url: https://cds.cern.ch/record/1451888)

B. Abbott et al., Production and integration of the ATLAS Insertable B-Layer. JINST 13, T05008 (2018). arXiv:1803.00844 [physics.ins-det]

ATLAS Collaboration, Performance of the ATLAS Trigger System in 2015. Eur. Phys. J. C 77, 317 (2017). arXiv:1611.09661 [hep-ex]

ATLAS Collaboration, The ATLAS Collaboration Software and Firmware, ATL-SOFT-PUB-2021-001 (2021). https://cds.cern.ch/record/2767187

ATLAS Collaboration, Luminosity determination in \(pp\) collisions at \(\sqrt{s}=13\) TeV using the ATLAS detector at the LHC (2022). arXiv:2212.09379 [hep-ex]

G. Avoni et al., The new LUCID-2 detector for luminosity measurement and monitoring in ATLAS. JINST 13, P07017 (2018)

ATLAS Collaboration, Performance of electron and photon triggers in ATLAS during LHC Run 2. Eur. Phys. J. C 80, 47 (2020). arXiv:1909.00761 [hep-ex]

ATLAS Collaboration, Performance of the ATLAS muon triggers in Run 2. JINST 15, P09015 (2020). arXiv:2004.13447 [hep-ex]

ATLAS Collaboration, ATLAS data quality operations and performance for 2015–2018 data-taking. JINST 15, P04003 (2020). arXiv:1911.04632 [physics.ins-det]

ATLAS Collaboration, The ATLAS Simulation Infrastructure. Eur. Phys. J. C 70, 823 (2010). arXiv:1005.4568 [physics.ins-det]

S. Agostinelli et al., Geant4: a simulation toolkit. Nucl. Instrum. Methods A 506, 250 (2003)

T. Sjöstrand, S. Mrenna, P. Skands, A brief introduction to PYTHIA 8.1. Comput. Phys. Commun. 178, 852 (2008). arXiv:0710.3820 [hep-ph]

NNPDF Collaboration, R.D. Ball et al., Parton distributions with LHC data. Nucl. Phys. B 867, 244 (2013). arXiv:1207.1303 [hep-ph]

ATLAS Collaboration, The Pythia 8 A3 tune description of ATLAS minimum bias and inelastic measurements incorporating the Donnachie–Landshoff diffractive model, ATL-PHYS-PUB-2016-017 (2016). https://cds.cern.ch/record/2206965

T. Gleisberg et al., Event generation with SHERPA 1.1. JHEP 02, 007 (2009). arXiv:0811.4622 [hep-ph]

NNPDF Collaboration, R.D. Ball et al., Parton distributions for the LHC run II. JHEP 04, 040 (2015). arXiv:1410.8849 [hep-ph]

S. Catani, F. Krauss, B.R. Webber, R. Kuhn, QCD matrix elements + parton showers. JHEP 11, 063 (2001). arXiv:hep-ph/0109231

S. Höche, F. Krauss, M. Schönherr, F. Siegert, QCD matrix elements + parton showers. The NLO case. JHEP 04, 027 (2013). arXiv:1207.5030 [hep-ph]

S. Höche, F. Krauss, S. Schumann, F. Siegert, QCD matrix elements and truncated showers. JHEP 05, 053 (2009). arXiv:0903.1219 [hep-ph]

S. Höche, F. Krauss, M. Schönherr, F. Siegert, A critical appraisal of NLO+PS matching methods. JHEP 09, 049 (2012). arXiv:1111.1220 [hep-ph]

J. Alwall et al., The automated computation of tree-level and next-to-leading order differential cross sections, and their matching to parton shower simulations. JHEP 07, 079 (2014). arXiv:1405.0301 [hep-ph]

C. Anastasiou, L. Dixon, K. Melnikov, F. Petriello, High-precision QCD at hadron colliders: Electroweak gauge boson rapidity distributions at next-to-next-to leading order. Phys. Rev. D 69, 094008 (2004). arXiv:hep-ph/0312266

S. Frixione, P. Nason, C. Oleari, Matching NLO QCD computations with parton shower simulations: the POWHEG method. JHEP 11, 070 (2007). arXiv:0709.2092 [hep-ph]

ATLAS Collaboration, ATLAS Pythia 8 tunes to 7 TeV data, ATL-PHYS-PUB-2014-021 (2014). https://cds.cern.ch/record/1966419

D.J. Lange, The EvtGen particle decay simulation package. Nucl. Instrum. Methods A 462, 152 (2001)

ATLAS Collaboration, Performance of the ATLAS track reconstruction algorithms in dense environments in LHC Run 2. Eur. Phys. J. C 77, 673 (2017). arXiv:1704.07983 [hep-ex]

ATLAS Collaboration, Vertex Reconstruction Performance of the ATLAS Detector at \(\sqrt{s}=13\) TeV, ATL-PHYS-PUB-2015-026 (2015). https://cds.cern.ch/record/2037717

ATLAS Collaboration, Secondary vertex finding for jet flavour identification with the ATLAS detector, ATL-PHYS-PUB-2017-011 (2017). https://cds.cern.ch/record/2270366

ATLAS Collaboration, Electron and photon performance measurements with the ATLAS detector using the 2015–2017 LHC proton–proton collision data. JINST 14, P12006 (2019). arXiv:1908.00005 [hep-ex]

ATLAS Collaboration, Muon reconstruction performance of the ATLAS detector in proton–proton collision data at \(\sqrt{s}=13\) TeV. Eur. Phys. J. C 76, 292 (2016). arXiv:1603.05598 [hep-ex]

ATLAS Collaboration, Muon reconstruction and identification efficiency in ATLAS using the full Run 2 \(pp\) collision data set at \(\sqrt{s}=13\) TeV. Eur. Phys. J. C 81, 578 (2021). arXiv:2012.00578 [hep-ex]

M. Cacciari, G.P. Salam, G. Soyez, The anti-\(k_{t}\) jet clustering algorithm. JHEP 04, 063 (2008). arXiv:0802.1189 [hep-ph]

M. Cacciari, G.P. Salam, G. Soyez, FastJet user manual. Eur. Phys. J. C 72, 1896 (2012). arXiv:1111.6097 [hep-ph]

ATLAS Collaboration, Jet energy scale and resolution measured in proton–proton collisions at \(\sqrt{s}=13\) TeV with the ATLAS detector. Eur. Phys. J. C 81, 689 (2021). arXiv:2007.02645 [hep-ex]

ATLAS Collaboration, Performance of pile-up mitigation techniques for jets in \(pp\) collisions at \(\sqrt{s}=8\) TeV using the ATLAS detector. Eur. Phys. J. C 76, 581 (2016). arXiv:1510.03823 [hep-ex]

ATLAS Collaboration, Measurements of \(b\)-jet tagging efficiency with the ATLAS detector using \(t{\bar{t}}\) events at \(\sqrt{s}=13\) TeV. JHEP 08, 089 (2018). arXiv:1805.01845 [hep-ex]

ATLAS Collaboration, Measurements of the production cross-section for a \(Z\) boson in association with \(b\)-jets in proton–proton collisions at \(\sqrt{s}=13\) TeV with the ATLAS detector. JHEP 07, 044 (2020). arXiv:2003.11960 [hep-ex]

CMS Collaboration, Measurement of differential cross sections for \(Z\) bosons produced in association with charm jets in \(pp\) collisions at \(\sqrt{s}=13\) TeV. JHEP 04, 109 (2021). arXiv:2012.04119 [hep-ex]

ATLAS Collaboration, Optimisation of the ATLAS \(b\)-tagging performance for the 2016 LHC Run, ATL-PHYS-PUB-2016-012 (2016). https://cds.cern.ch/record/2160731

ATLAS Collaboration, Identification of Jets Containing \(b\)-Hadrons with Recurrent Neural Networks at the ATLAS Experiment, ATL-PHYS-PUB-2017-003 (2017). https://cds.cern.ch/record/2255226

ATLAS Collaboration, Topological \(b\)-hadron decay reconstruction and identification of \(b\)-jets with the JetFitter package in the ATLAS experiment at the LHC, ATL-PHYS-PUB-2018-025 (2018). https://cds.cern.ch/record/2645405

ATLAS Collaboration, Expected performance of the 2019 ATLAS \(b\)-taggers (2019). http://atlas.web.cern.ch/Atlas/GROUPS/PHYSICS/PLOTS/FTAG-2019-005/

ATLAS Collaboration, Optimisation and performance studies of the ATLAS \(b\)-tagging algorithms for the 2017-18 LHC run, ATL-PHYS-PUB-2017-013 (2017). https://cds.cern.ch/record/2273281

ATLAS Collaboration, Monte Carlo to Monte Carlo scale factors for flavour tagging efficiency calibration, ATL-PHYS-PUB-2020-009 (2020). https://cds.cern.ch/record/2718610

ATLAS Collaboration, Performance of \(b\)-jet identification in the ATLAS experiment, JINST 11, P04008 (2016). arXiv:1512.01094 [hep-ex]

ATLAS Collaboration, Early Inner Detector Tracking Performance in the 2015 Data at \(\sqrt{s}=13\) TeV, ATL-PHYS-PUB-2015-051 (2015). https://cds.cern.ch/record/2110140

K. Cranmer, G. Lewis, L. Moneta, A. Shibata, W. Verkerke, HistFactory: a tool for creating statistical models for use with RooFit and RooStats, CERN-OPEN-2012-016 (2012). https://cds.cern.ch/record/1456844

ATLAS Collaboration, Expected Performance of the ATLAS Experiment – Detector, Trigger and Physics (2009). arXiv:0901.0512 [hep-ex]

ATLAS Collaboration, ATLAS Computing Acknowledgements, ATL-SOFT-PUB-2021-003 (2021). https://cds.cern.ch/record/2776662

Acknowledgements

We thank CERN for the very successful operation of the LHC, as well as the support staff from our institutions without whom ATLAS could not be operated efficiently. We acknowledge the support of ANPCyT, Argentina; YerPhI, Armenia; ARC, Australia; BMWFW and FWF, Austria; ANAS, Azerbaijan; CNPq and FAPESP, Brazil; NSERC, NRC and CFI, Canada; CERN; ANID, Chile; CAS, MOST and NSFC, China; Minciencias, Colombia; MEYS CR, Czech Republic; DNRF and DNSRC, Denmark; IN2P3-CNRS and CEA-DRF/IRFU, France; SRNSFG, Georgia; BMBF, HGF and MPG, Germany; GSRI, Greece; RGC and Hong Kong SAR, China; ISF and Benoziyo Center, Israel; INFN, Italy; MEXT and JSPS, Japan; CNRST, Morocco; NWO, Netherlands; RCN, Norway; MEiN, Poland; FCT, Portugal; MNE/IFA, Romania; MESTD, Serbia; MSSR, Slovakia; ARRS and MIZŠ, Slovenia; DSI/NRF, South Africa; MICINN, Spain; SRC and Wallenberg Foundation, Sweden; SERI, SNSF and Cantons of Bern and Geneva, Switzerland; MOST, Taiwan; TENMAK, Turkey; STFC, UK; DOE and NSF, United States of America. In addition, individual groups and members have received support from BCKDF, CANARIE, Compute Canada and CRC, Canada; PRIMUS 21/SCI/017 and UNCE SCI/013, Czech Republic; COST, ERC, ERDF, Horizon 2020 and Marie Skłodowska-Curie Actions, European Union; Investissements d’Avenir Labex, Investissements d’Avenir Idex and ANR, France; DFG and AvH Foundation, Germany; Herakleitos, Thales and Aristeia programmes co-financed by EU-ESF and the Greek NSRF, Greece; BSF-NSF and MINERVA, Israel; Norwegian Financial Mechanism 2014-2021, Norway; NCN and NAWA, Poland; La Caixa Banking Foundation, CERCA Programme Generalitat de Catalunya and PROMETEO and GenT Programmes Generalitat Valenciana, Spain; Göran Gustafssons Stiftelse, Sweden; The Royal Society and Leverhulme Trust, UK. 
The crucial computing support from all WLCG partners is acknowledged gratefully, in particular from CERN, the ATLAS Tier-1 facilities at TRIUMF (Canada), NDGF (Denmark, Norway, Sweden), CC-IN2P3 (France), KIT/GridKA (Germany), INFN-CNAF (Italy), NL-T1 (Netherlands), PIC (Spain), ASGC (Taiwan), RAL (UK) and BNL (USA), the Tier-2 facilities worldwide and large non-WLCG resource providers. Major contributors of computing resources are listed in Ref. [56].

Author information

D. Bruncko, I. A. Budagov, E. F. Torregrosa, V. Gratchev, M. Lokajicek, J. Olszowska, L. Perini, S. Yu. Sivoklokov.

Authors and Affiliations

Department of Physics, University of Adelaide, Adelaide, Australia

M. Amerl, E. K. Filmer, P. Jackson, A. X. Y. Kong, H. Potti, T. A. Ruggeri, E. X. L. Ting & M. J. White

Department of Physics, University of Alberta, Edmonton, AB, Canada

D. M. Gingrich, J. H. Lindon, N. Nishu & J. L. Pinfold

Department of Physics, Ankara University, Ankara, Türkiye

O. Cakir, H. Duran Yildiz, S. Kuday & I. Turk Cakir

Division of Physics, TOBB University of Economics and Technology, Ankara, Türkiye

S. Sultansoy

LAPP, Université Savoie Mont Blanc, CNRS/IN2P3, Annecy, France

C. Adam Bourdarios, N. Berger, F. Costanza, M. Delmastro, L. Di Ciaccio, C. Goy, T. Guillemin, T. Hryn’ova, S. Jézéquel, I. Koletsou, J. Levêque, D. J. Lewis, J. D. Little, N. Lorenzo Martinez, G. Poddar, E. Rossi, A. Sanchez Pineda, E. Sauvan & L. Selem

APC, Université Paris Cité, CNRS/IN2P3, Paris, France

G. Bernardi, M. Bomben, R. Bouquet, G. Di Gregorio, A. Li, G. Marchiori, Q. Shen & Y. Zhang

High Energy Physics Division, Argonne National Laboratory, Argonne, IL, USA

S. Chekanov, S. Darmora, W. H. Hopkins, J. Hoya, E. Kourlitis, T. LeCompte, J. Love, J. Metcalfe, A. S. Mete, A. Paramonov, J. Proudfoot, P. Van Gemmeren, R. Wang & J. Zhang

Department of Physics, University of Arizona, Tucson, AZ, USA

S. Berlendis, E. Cheu, Z. Cui, A. Ghosh, K. A. Johns, W. Lampl, R. E. Lindley, P. Loch, J. P. Rutherfoord, J. Sardain, E. W. Varnes, H. Zhou & Y. Zhou

Department of Physics, University of Texas at Arlington, Arlington, TX, USA

D. Bakshi Gupta, B. Burghgrave, J. C. J. Cardenas, K. De, A. Farbin, H. K. Hadavand, A. J. Myers, N. Ozturk, G. Usai & A. White

Physics Department, National and Kapodistrian University of Athens, Athens, Greece

S. Angelidakis, D. Fassouliotis, L. Fountas, I. Gkialas & C. Kourkoumelis

Physics Department, National Technical University of Athens, Zografou, Greece

T. Alexopoulos, C. Bakalis, I. Drivas-koulouris, E. N. Gazis, C. Kitsaki, S. Maltezos, C. Paraskevopoulos, M. Perganti & P. Tzanis

Department of Physics, University of Texas at Austin, Austin, TX, USA

T. Andeen, C. D. Burton, K. Choi, P. U. E. Onyisi, D. K. Panchal, M. Tost, M. Unal & A. F. Webb

Institute of Physics, Azerbaijan Academy of Sciences, Baku, Azerbaijan

N. Huseynov

Institut de Física d’Altes Energies (IFAE), Barcelona Institute of Science and Technology, Barcelona, Spain

M. N. Agaras, M. Bosman, J. I. Carlotto, M. P. Casado, L. Castillo Garcia, S. Epari, V. Gautam, S. Gonzalez Fernandez, C. Grieco, S. Grinstein, A. Juste Rozas, S. Kazakos, I. Korolkov, J. Mamuzic, M. Martinez, P. Martinez Suarez, L. M. Mir, J. L. Munoz Martinez, N. Orlando, A. Pacheco Pages, C. Padilla Aranda, I. Riu, A. Salvador Salas, A. Sonay & S. Terzo

Institute of High Energy Physics, Chinese Academy of Sciences, Beijing, China

J. Barreiro Guimarães da Costa, Y. Cai, X. Chu, H. Cui, Y. Fan, Y. Fang, F. Guo, Y. F. Hu, Y. Huang, X. Jia, M. Li, S. Li, Z. Li, Z. Liang, B. Liu, P. Liu, X. Lou, G. Lu, F. Lyu, A. F. Mohammed, L. Y. Shan, J. Wu, S. Xin, D. Xu, Z. Xu, X. Yang, M. Zhai, K. Zhang, P. Zhang, C. Zhu, H. Zhu & X. Zhuang

Physics Department, Tsinghua University, Beijing, China

X. Chen, M. Feng, H. Pang, M. Xia & Y. Xu

Department of Physics, Nanjing University, Nanjing, China

H. Chen, S. J. Chen, A. De Maria, L. Han, X. Huang, Z. Jia, S. Jin, H. Li, Y. Liu, F. L. Lucio Alves, T. Min, X. Wang, Y. Wang, L. Xia, H. Ye, B. Zhang & L. Zhang

University of Chinese Academy of Science (UCAS), Beijing, China

Y. Cai, X. Chu, H. Cui, Y. Fang, F. Guo, Y. F. Hu, X. Jia, M. Li, S. Li, Z. Li, Y. Liu, X. Lou, G. Lu, A. F. Mohammed, K. Ran, J. Wu, S. Xin, M. Zhai, K. Zhang, P. Zhang & C. Zhu

Institute of Physics, University of Belgrade, Belgrade, Serbia

E. Bakos, L. J. Beemster, J. Jovicevic, V. Maksimovic, Dj. Sijacki, N. Vranjes, M. Vranjes Milosavljevic & L. Živković

Department for Physics and Technology, University of Bergen, Bergen, Norway

T. Buanes, J. I. Djuvsland, G. Eigen, N. Fomin, S. K. Huiberts, G. R. Lee, A. Lipniacka, B. Martin dit Latour, B. Stugu & A. Traeet

Physics Division, Lawrence Berkeley National Laboratory, Berkeley, CA, USA

D. J. A. Antrim, R. M. Barnett, J. Beringer, P. Calafiura, F. Cerutti, A. Ciocio, A. Dimitrievska, G. I. Dyckes, K. Einsweiler, I. Ene, M. G. Foti, L. G. Gagnon, M. Garcia-Sciveres, C. Gonzalez Renteria, H. M. Gray, C. Haber, S. Han, T. Heim, I. Hinchliffe, X. Ju, K. Krizka, C. Leggett, Z. Marshall, M. Muškinja, B. P. Nachman, G. J. Ottino, S. Pagan Griso, V. R. Pascuzzi, M. Pettee, E. Pianori, E. D. Resseguie, E. Reynolds, B. R. Roberts, S. N. Santpur, M. Shapiro, B. Stanislaus, V. Tsulaia, C. Varni, H. Wang, J. Xiong, T. Yamazaki, W.-M. Yao, B. K. Yeo & Z. Zhang

Institut für Physik, Humboldt Universität zu Berlin, Berlin, Germany

C. Appelt, N. B. Atlay, M. Bahmani, D. Battulga, A. Cortes-Gonzalez, S. Grancagnolo, C. Issever, T. J. Khoo, K. Kreul, H. Lacker, T. Lohse, M. Michetti, A. Nayaz, Y. S. Ng, V. H. Ruelas Rivera, C. Scharf, F. Schenck, P. Seema, T. Theveneaux-Pelzer & H. A. Weber

Albert Einstein Center for Fundamental Physics and Laboratory for High Energy Physics, University of Bern, Bern, Switzerland

H. P. Beck, M. Chatterjee, L. Franconi, L. Halser, S. Haug, A. Ilg, R. Müller, A. P. O’Neill, M. M. Schefer & M. S. Weber

School of Physics and Astronomy, University of Birmingham, Birmingham, UK

P. P. Allport, A. D. Auriol, P. Bellos, G. A. Bird, J. Bracinik, D. G. Charlton, A. S. Chisholm, H. G. Cooke, P. M. Freeman, W. F. George, L. Gonella, F. Gonnella, N. A. Gorasia, C. M. Hawkes, S. J. Hillier, M. Marinescu, A. M. Mendes Jacques Da Costa, T. J. Neep, P. R. Newman, K. Nikolopoulos, J. M. Silva, A. Stampekis, J. P. Thomas, P. D. Thompson, G. S. Virdee, R. J. Ward, A. T. Watson & M. F. Watson

Department of Physics, Bogazici University, Istanbul, Türkiye

E. Celebi, B. Gokturk, S. Istin, K. Y. Oyulmaz & V. E. Ozcan

Department of Physics Engineering, Gaziantep University, Gaziantep, Türkiye

A. Bingul & Z. Uysal

Department of Physics, Istanbul University, Istanbul, Türkiye

A. Adiguzel

Istinye University, Sariyer, Istanbul, Türkiye

A. J. Beddall, S. A. Cetin, S. Ozturk & S. Simsek

Facultad de Ciencias y Centro de Investigaciónes, Universidad Antonio Nariño, Bogotá, Colombia

G. Navarro & Y. Rodriguez Garcia

Departamento de Física, Universidad Nacional de Colombia, Bogotá, Colombia

C. Sandoval

Dipartimento di Fisica e Astronomia A. Righi, Università di Bologna, Bologna, Italy

G. Carratta, N. Cavalli, L. Clissa, S. De Castro, F. Del Corso, L. Fabbri, M. Franchini, A. Gabrielli, L. Rinaldi, A. Sbrizzi, N. Semprini-Cesari, M. Sioli, K. Todome, S. Valentinetti, M. Villa & A. Zoccoli

INFN Sezione di Bologna, Bologna, Italy

G. L. Alberghi, F. Alfonsi, L. Bellagamba, D. Boscherini, A. Bruni, G. Bruni, M. Bruschi, G. Cabras, G. Carratta, N. Cavalli, A. Cervelli, L. Clissa, S. De Castro, F. Del Corso, L. Fabbri, M. Franchini, A. Gabrielli, B. Giacobbe, F. Lasagni Manghi, L. Massa, M. Negrini, A. Polini, L. Rinaldi, M. Romano, C. Sbarra, A. Sbrizzi, N. Semprini-Cesari, A. Sidoti, M. Sioli, K. Todome, S. Valentinetti, M. Villa & A. Zoccoli

Physikalisches Institut, Universität Bonn, Bonn, Germany

A. Bandyopadhyay, S. Bansal, P. Bauer, P. Bechtle, F. Beisiegel, F. U. Bernlochner, I. Brock, K. Desch, C. Deutsch, F. G. Diaz Capriles, C. Dimitriadi, J. Dingfelder, P. J. Falke, C. Grefe, S. Gurbuz, M. Hamer, L. M. Herrmann, F. Hinterkeuser, T. Holm, M. Huebner, F. Huegging, C. Kirfel, O. Kivernyk, P. T. Koenig, H. Krüger, K. Lantzsch, T. Lenz, C. Nass, D. Pohl, M. Standke, C. Vergis, E. Von Toerne & N. Wermes

Department of Physics, Boston University, Boston, MA, USA

J. M. Butler & Z. Yan

Department of Physics, Brandeis University, Waltham, MA, USA

S. V. Addepalli, J. R. Bensinger, P. Bhattarai, C. Blocker, D. Camarero Munoz, F. Capocasa, E. R. Duden, G. Frattari, M. Goblirsch-Kolb, Z. M. Schillaci, G. Sciolla, D. A. Trischuk & D. T. Zenger Jr

Transilvania University of Brasov, Brasov, Romania

S. Popa

Horia Hulubei National Institute of Physics and Nuclear Engineering, Bucharest, Romania

C. Alexa, A. Chitan, D. A. Ciubotaru, I.-M. Dinu, O. A. Ducu, A. E. Dumitriu, A. A. Geanta, A. Jinaru, V. S. Martoiu, J. Maurer, A. Olariu, D. Pietreanu, M. Renda, M. Rotaru, G. Stoicea, G. Tarna, I. S. Trandafir, A. Tudorache, V. Tudorache, M. E. Vasile & S. Younas

Department of Physics, Alexandru Ioan Cuza University of Iasi, Iasi, Romania

C. Agheorghiesei & P. Postolache

National Institute for Research and Development of Isotopic and Molecular Technologies, Physics Department, Cluj-Napoca, Romania

G. A. Popeneciu

University Politehnica Bucharest, Bucharest, Romania

A. A. Geanta & R. Hobincu

West University in Timisoara, Timisoara, Romania

P. M. Gravila

Faculty of Mathematics, Physics and Informatics, Comenius University, Bratislava, Slovak Republic

R. Astalos, D. Babal, P. Bartos, M. Dubovsky, B. Eckerova, S. Hyrych, L. Keszeghova, I. Sykora, S. Tokár & T. Ženiš

Department of Subnuclear Physics, Institute of Experimental Physics of the Slovak Academy of Sciences, Kosice, Slovak Republic

D. Bruncko, F. Sopkova, P. Strizenec & J. Urban

Physics Department, Brookhaven National Laboratory, Upton, NY, USA

S. H. Abidi, K. Assamagan, G. Barone, M. Begel, D. P. Benjamin, M. Benoit, R. Bi, D. Boye, E. Brost, V. Cavaliere, H. Chen, G. D’amen, S. J. Das, J. Elmsheuser, B. Garcia, V. Garonne, Y. Go, G. Iakovidis, C. W. Kalderon, A. Klimentov, E. Lançon, D. Lynn, H. Ma, T. Maeno, C. Mc Ginn, C. Mwewa, J. L. Nagle, P. Nilsson, M. A. Nomura, D. Oliveira Damazio, J. Ouellette, D. V. Perepelitsa, M.-A. Pleier, V. Polychronakos, S. Protopopescu, S. Rajagopalan, G. Redlinger, T. T. Rinn, J. Roloff, S. Snyder, P. Steinberg, S. A. Stucci, A. Tricoli, E. N. Umaka, A. Undrus, C. Weber, T. Wenaus & S. Ye

Universidad de Buenos Aires, Facultad de Ciencias Exactas y Naturales, Departamento de Física, y CONICET, Instituto de Física de Buenos Aires (IFIBA), Buenos Aires, Argentina

M. F. Daneri, G. Otero y Garzon, R. Piegaia & M. Toscani

California State University, CA, USA

K. Grimm, J. Moss & J. Veatch

Cavendish Laboratory, University of Cambridge, Cambridge, UK

W. K. Balunas, J. R. Batley, O. Brandt, J. T. P. Burr, J. D. Chapman, J. W. Cowley, W. J. Fawcett, L. Henkelmann, B. H. Hodkinson, L. B. A. H. Hommels, D. M. Jones, P. Jones, C. G. Lester, J. K. K. Liu, C. Malone, D. L. Noel, H. A. Pacey, M. A. Parker, C. J. Potter, D. Robinson, D. Rousso, R. Tombs & S. Williams

Department of Physics, University of Cape Town, Cape Town, South Africa

R. J. Atkin, K. N. Barends, J. M. Keaveney & S. Yacoob

Department of Mechanical Engineering Science, University of Johannesburg, Johannesburg, South Africa

M. Bhamjee, M. P. Connell, S. H. Connell, N. Govender, L. L. Leeuw & L. Truong

National Institute of Physics, University of the Philippines Diliman (Philippines), Quezon City, Philippines

M. Flores

School of Physics, University of the Witwatersrand, Johannesburg, South Africa

F. Carrio Argos, T. Chowdhury, L. D. Christopher, S. Dahbi, M. G. D. Gololo, D. Kar, M. Kumar, R. P. Mckenzie, B. R. Mellado Garcia, G. Mokgatitswane, O. Mtintsilana, E. K. Nkadimeng, N. P. Rapheeha, X. Ruan, E. M. Shrif, E. Sideras Haddad, S. Sinha & S. H. Tlou

Department of Physics, Carleton University, Ottawa, ON, Canada

A. Bachiu, A. Bellerive, B. Davis-Purcell, D. Gillberg, K. Graham, J. Heilman, C. E. Jessiman, S. Kaur, J. S. Keller, C. Klein, T. Koffas, L. S. Miller, M. Naseri, B. J. Norman, F. G. Oakham, D. A. Pizzi, E. J. Staats, M. G. Vincter & N. Zakharchuk

Faculté des Sciences Ain Chock, Réseau Universitaire de Physique des Hautes Energies-Université Hassan II, Casablanca, Morocco

B. Aitbenchikh, D. Benchekroun, F. Bendebba, K. Bouaouda, Z. Chadi, A. El Moussaouy, S. Ezzarqtouni, H. Imam & S. Zerradi

Faculté des Sciences, Université Ibn-Tofail, Kénitra, Morocco

Y. El Ghazali & M. Gouighri

LPMR, Faculté des Sciences, Université Mohamed Premier, Oujda, Morocco

J. Assahsah, M. Ouchrif & S. Ridouani

Faculté des sciences, Université Mohammed V, Rabat, Morocco

A. Aboulhorma, M. Ait Tamlihat, S. Batlamous, R. Cherkaoui El Moursli, H. El Jarrari, F. Fassi, H. Hamdaoui, B. Ngair, Z. Soumaimi, Y. Tayalati & M. Zaazoua

CERN, Geneva, Switzerland

Y. Afik, A. Ahmad, M. Aleksa, C. Amelung, J. K. Anders, N. Aranzabal, A. J. Armbruster, G. Avolio, M.-S. Barisits, T. A. Beermann, C. Beirao Da Cruz E Silva, J. F. Beirer, L. Beresford, T. Bisanz, D. Bogavac, J. D. Bossio Sola, J. Boyd, V. M. M. Cairo, N. Calace, S. Camarda, T. Carli, A. Catinaccio, L. D. Corpe, A. Cueto, P. Czodrowski, V. Dao, A. Dell’Acqua, A. Di Girolamo, F. Dittus, A. Dudarev, M. Dührssen, N. Ellis, M. Elsing, L. F. Falda Ulhoa Coelho, S. Falke, D. Francis, D. Froidevaux, P. Gessinger-Befurt, F. Giuli, L. Goossens, B. Gorini, C. A. Gottardo, S. Guindon, G. Gustavino, R. J. Hawkings, C. Helsens, A. M. Henriques Correia, L. Hervas, A. Hoecker, M. Huhtinen, J. J. Junggeburth, M. Kiehn, P. Klimek, T. Klioutchnikova, A. Koulouris, A. Krasznahorkay, S. Kuehn, F. Lanni, M. Lassnig, M. LeBlanc, G. Lehmann Miotto, S. Manzoni, A. Marzin, M. Mentink, A. Milic, M. Mlynarikova, A. K. Morley, L. Morvaj, P. Moschovakos, B. Moser, A. M. Nairz, M. Nessi, N. Nikiforou, S. Palestini, T. Pauly, O. Penc, H. Pernegger, S. Perrella, B. A. Petersen, N. E. Pettersson, L. Pezzotti, L. Pontecorvo, M. E. Pozo Astigarraga, M. Raymond, C. Rembser, S. Rettie, P. Riedler, S. Roe, A. Rummler, D. Salamani, A. Salzburger, S. Schlenker, J. Schovancova, A. Sharma, M. V. Silva Oliveira, R. Simoniello, I. Siral, J. Smiesko, C. A. Solans Sanchez, P. Sommer, G. Spigo, G. A. Stewart, M. C. Stockton, A. N. Tuna, G. Unal, T. Vafeiadis, M. Van Rijnbach, W. Vandelli, T. Vazquez Schroeder, C. Vittori, B. Vormwald, R. Vuillermet, T. Wengler, H. G. Wilkens & L. Zwalinski

Affiliated with an institute covered by a cooperation agreement with CERN, Geneva, Switzerland

A. V. Akimov, M. Aliev, A. V. Anisenkov, E. M. Baldin, S. Barsov, K. Beloborodov, K. Belotskiy, N. L. Belyaev, V. S. Bobrovnikov, A. G. Bogdanchikov, O. Bulekov, A. R. Buzykaev, S. P. Denisov, F. Dubinin, A. Ezhilov, R. M. Fakhrutdinov, O. L. Fedin, G. Fedotov, A. B. Fenyuk, I. L. Gavrilenko, A. Gavrilyuk, L. K. Gladilin, D. Golubkov, P. A. Gorbounov, V. Gratchev, S. Harkusha, A. N. Karyukhin, V. F. Kazanin, A. G. Kharlamov, T. Kharlamova, N. Korotkova, A. S. Kozhin, V. A. Kramarenko, O. Kuchinskaia, Y. Kulchitsky, A. Kupich, Y. A. Kurochkin, A. Kurova, M. Levchenko, V. P. Maleev, A. L. Maslennikov, D. A. Maximov, O. Meshkov, A. A. Minaenko, A. G. Myagkov, I. Naryshkin, P. Y. Nechaeva, V. Nikolaenko, S. V. Peleganchuk, M. Penzin, A. Petukhov, D. Pudzha, D. Pyatiizbyantseva, E. Ramakoti, O. L. Rezanova, A. Romaniouk, A. Ryzhov, V. A. Schegelsky, P. B. Shatalov, S. Yu. Sivoklokov, S. Yu. Smirnov, Y. Smirnov, L. N. Smirnova, A. A. Snesarev, E. Yu. Soldatov, A. A. Solodkov, V. Solovyev, E. A. Starchenko, V. V. Sulin, L. Sultanaliyeva, A. A. Talyshev, V. Tikhomirov, Yu. A. Tikhonov, S. Timoshenko, P. V. Tsiareshka, I. I. Tsukerman, K. Vorobev, O. Zenin, K. Zhukov & V. Zhulanov

Affiliated with an international laboratory covered by a cooperation agreement with CERN, Geneva, Switzerland

F. Ahmadov, I. N. Aleksandrov, V. A. Bednyakov, I. R. Boyko, I. A. Budagov, G. A. Chelkov, A. Cheplakov, M. V. Chizhov, D. V. Dedovich, M. Demichev, A. Gongadze, M. I. Gostkin, S. N. Karpov, Z. M. Karpova, U. Kruchonak, V. Kukhtin, E. Ladygin, V. Lyubushkin, T. Lyubushkina, S. Malyukov, M. Mineev, E. Plotnikova, I. N. Potrap, N. A. Rusakovich, M. Shiyakova, A. Soloshenko, S. Turchikhin, T. Turtuvshin, I. Yeletskikh, A. Zhemchugov & N. I. Zimine

Enrico Fermi Institute, University of Chicago, Chicago, IL, USA

R. W. Gardner, M. D. Hank, Y. K. Kim, D. W. Miller, J. T. Offermann, M. J. Oreglia, J. L. Rainbolt, B. J. Rosser, D. Schaefer, M. J. Shochet, E. A. Smith, C. Tosciri & I. Vukotic

LPC, Université Clermont Auvergne, CNRS/IN2P3, Clermont-Ferrand, France

D. Boumediene, A. M. Burger, D. Calvet, S. Calvet, J. Donini, R. Madar, T. Megy, O. Perrin, C. Santoni, O. V. Solovyanov, A. Tnourji, L. Vaslin & F. Vazeille

Nevis Laboratory, Columbia University, Irvington, NY, USA

K. Al Khoury, A. Angerami, G. Brooijmans, E. L. Busch, B. Cole, A. Emerman, B. J. Gilbert, J. L. Gonski, D. A. Hangal, Q. Hu, A. Kahn, K. E. Kennedy, D. J. Mahon, S. Mohapatra, J. A. Parsons, B. D. Seidlitz, A. C. Smith, P. M. Tuts, D. M. Williams, P. Yin & W. Zou

Niels Bohr Institute, University of Copenhagen, Copenhagen, Denmark

A. Camplani, M. Dam, J. B. Hansen, J. D. Hansen, P. H. Hansen, T. C. Petersen, C. Wiglesworth & S. Xella

Dipartimento di Fisica, Università della Calabria, Rende, Italy

E. Bisceglie, M. Capua, G. Crosetti, F. Curcio, D. Malito, A. Mastroberardino, E. Meoni, D. Salvatore, M. Schioppa & E. Tassi

INFN Gruppo Collegato di Cosenza, Laboratori Nazionali di Frascati, Frascati, Italy