Abstract

Searching for new physics (NP) is one of the most data-intensive tasks in high-energy physics. At the same time, quantum computing offers potentially large advantages in processing large amounts of data. The principal component analysis (PCA) algorithm may be one of the bridges connecting these two areas: on the one hand, it can be used for anomaly detection, and on the other hand, quantum algorithms for PCA exist. In this paper, we investigate how PCA can be used to search for NP. Taking anomalous quartic gauge couplings in the tri-photon process at muon colliders as an example, we find that PCA can indeed be used to search for NP. Compared with the traditional event selection strategy, the PCA-based event selection strategy yields even tighter expected constraints on the operator coefficients.



1 Introduction

Since its establishment, the Standard Model (SM) has withstood the challenge of high-precision experimental measurements of strong, electromagnetic and weak interaction phenomena. Its theoretical predictions agree almost perfectly with experimental observations, but some important fundamental problems remain difficult to explain within the framework of the SM [1,2,3,4,5]. The search for new physics (NP) beyond the SM has become one of the most active and important topics in high energy physics (HEP) [6].

As collider performance improves and luminosities increase, efficient ways to process large amounts of data become increasingly important. One such way is to use machine learning (ML) algorithms. ML is a general term for a class of algorithms that draw on probability theory, statistics and other disciplines. Because of their advantages in processing complex data, ML algorithms have been applied in many fields, including HEP [7,8,9,10,11,12,13,14,15,16,17,18]. Many applications have shown that anomaly detection (AD) ML methods can be effectively applied in phenomenological studies of NP. One advantage is that, when AD is applied to search for NP, the implementation is often independent of the NP model being searched for [19,20,21,22,23,24,25,26]. Nevertheless, it should be pointed out that the tunable parameters of AD methods often depend on the NP models and processes under study.

In addition, quantum computing is another efficient way to process large amounts of complex data. Many ML algorithms can be accelerated by quantum computing [27,28,29]. One example is the principal component analysis (PCA) algorithm [30, 31]. PCA can also be used for AD, but it is not clear whether the PCA anomaly detection (PCAAD) algorithm is useful for searching for NP. If it is, this would strongly suggest that quantum PCA can also be used to search for NP signals, which would likely provide a way to discover NP with quantum computers. There are other examples, such as the autoencoder (AE) [32,33,34,35]. The AE performs better than PCA at data dimensionality reduction, so it also has good potential to outperform PCA in AD [21, 22]. The AE also has the potential for quantum acceleration [36,37,38,39]. However, PCA has a more explicit geometric interpretation, since the events are mainly distributed along the eigenvectors of the covariance matrix. Moreover, PCAAD does not need to be combined with other algorithms. Therefore, we focus on PCAAD in this paper and leave the study of the AE for future work.

To test whether the PCAAD algorithm is feasible, we apply it to the search for dimension-8 operators in the SM effective field theory (SMEFT) that contribute to anomalous quartic gauge couplings (aQGCs). Note that ML approaches targeting the SMEFT have already been shown to be able to enhance the signal significance [13, 17, 18, 23, 40].

There are many reasons to consider dimension-8 operators contributing to aQGCs. For example, the dimension-8 operators are important from the convex geometry perspective on the operator space [41,42,43]. Moreover, various NP models generate dimension-8 effective operators relevant for aQGCs [44,45,46,47,48,49,50,51,52,53], and there are distinct cases in which dimension-6 operators are absent but dimension-8 operators show up [54,55,56]. As a result, aQGCs have received a lot of attention [57,58,59,60,61,62,63,64,65,66,67,68,69,70,71,72,73,74,75,76,77]. Nonzero aQGCs would cause the tri-photon process at muon colliders to deviate from the SM predictions [78]. Recently, muon colliders have also drawn a lot of attention [79,80,81,82,83,84,85,86,87,88,89,90]. Although PCAAD might be applicable to various cases, as a test bed we use it to search for the signals of aQGCs in the tri-photon process at muon colliders.

The rest of this paper is organized as follows. In Sect. 2, the aQGCs and the tri-photon process are briefly reviewed. The event selection strategy using PCAAD is discussed in Sect. 3. Since that section focuses on the features of the SM and NP events, the interference is ignored there and the SM and NP contributions are considered separately; in our approach, the eigenvectors are determined solely by the SM events. Section 4 presents the expected constraints on the operator coefficients, with the interference between the SM and the NP taken into account. Section 5 summarizes our main conclusions.

2 The effect of aQGCs in the tri-photon process

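Because only the transverse operators \(O_{T_i}\) contribute to the tri-photon process [78], we consider only the \(O_{T_i}\) operators in this paper. They enter the Lagrangian as \({\mathcal {L}}_{\textrm{aQGC}}=\sum _i (f_{T_i}/\Lambda ^4)\, O_{T_i}\), with [91, 92]

\[\begin{aligned} O_{T_0} &= \textrm{Tr}\left[ {\widehat{W}}_{\mu \nu }{\widehat{W}}^{\mu \nu }\right] \times \textrm{Tr}\left[ {\widehat{W}}_{\alpha \beta }{\widehat{W}}^{\alpha \beta }\right] ,\\ O_{T_1} &= \textrm{Tr}\left[ {\widehat{W}}_{\alpha \nu }{\widehat{W}}^{\mu \beta }\right] \times \textrm{Tr}\left[ {\widehat{W}}_{\mu \beta }{\widehat{W}}^{\alpha \nu }\right] ,\\ O_{T_2} &= \textrm{Tr}\left[ {\widehat{W}}_{\alpha \mu }{\widehat{W}}^{\mu \beta }\right] \times \textrm{Tr}\left[ {\widehat{W}}_{\beta \nu }{\widehat{W}}^{\nu \alpha }\right] ,\\ O_{T_5} &= \textrm{Tr}\left[ {\widehat{W}}_{\mu \nu }{\widehat{W}}^{\mu \nu }\right] \times B_{\alpha \beta }B^{\alpha \beta },\\ O_{T_6} &= \textrm{Tr}\left[ {\widehat{W}}_{\alpha \nu }{\widehat{W}}^{\mu \beta }\right] \times B_{\mu \beta }B^{\alpha \nu },\\ O_{T_7} &= \textrm{Tr}\left[ {\widehat{W}}_{\alpha \mu }{\widehat{W}}^{\mu \beta }\right] \times B_{\beta \nu }B^{\nu \alpha },\\ O_{T_8} &= B_{\mu \nu }B^{\mu \nu }\times B_{\alpha \beta }B^{\alpha \beta },\\ O_{T_9} &= B_{\alpha \mu }B^{\mu \beta }\times B_{\beta \nu }B^{\nu \alpha }, \end{aligned}\]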

where \({\widehat{W}}\equiv \vec {\sigma }\cdot {\vec W}/2\), \(\vec {\sigma }\) are the Pauli matrices, \({\vec W}=\{W^1,W^2,W^3\}\), \(B_{\mu }\) and \(W_{\mu }^i\) are the \(U(1)_{\textrm{Y}}\) and \(SU(2)_{\textrm{I}}\) gauge fields, and \(B_{\mu \nu }\) and \(W_{\mu \nu }\) are the corresponding field strength tensors. Table 1 shows the constraints on the coefficients obtained at the Large Hadron Collider (LHC).

At muon colliders, which are also called gauge boson colliders [86], the vector boson scattering/fusion (VBS/VBF) processes are expected to be the dominant production modes for both the SM and NP starting from energies of a few TeV, because of the logarithmic enhancement from gauge boson radiation [85,86,87]. For the \(O_{T_i}\) operators, dimensional analysis of the cross section gives \(\sigma _{NP}\sim s^3 (f/\Lambda ^4)^2\); apart from the logarithmic enhancement, both the VBS and tri-boson contributions grow as \(s^3\), i.e., the momentum dependence in the Feynman rule of the aQGCs cancels the 1/s of the propagator in the tri-boson process. Besides, unlike at the LHC, where the tri-boson contributions from the aQGCs are further suppressed because they must be initiated by sea-quark partons, no such suppression occurs at muon colliders, except that the s-channel propagator cannot be a W boson. It has been shown that, at muon colliders, for \(O_{T_5}\) the tri-boson contribution is competitive with the VBS contribution to the process \(\mu ^+\mu ^-\rightarrow \gamma \gamma \nu {\bar{\nu }}\): it surpasses the VBS when \(\sqrt{s}<5\;\textrm{TeV}\), and is about 1/3 of the VBS when \(\sqrt{s}=30\;\textrm{TeV}\) [78]. Moreover, in the tri-photon process there are no subsequent decays of the final-state particles. As a result, the sensitivity of the tri-photon process at muon colliders to the \(O_{T_i}\) operators is competitive with, or even better than, that of the VBS processes. For simplicity, we consider only the tri-photon process in this paper.
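As a quick consistency check of the scaling quoted above, the mass dimensions can be counted as follows. This is an order-of-magnitude sketch only: natural units (\(\hbar = c = 1\)) are assumed, and couplings, logarithmic factors and cut effects are dropped.

```latex
\begin{aligned}
&[f_{T_i}/\Lambda^4] = -4, \qquad [s] = 2, \qquad [\sigma] = -2,\\
&\sigma_{NP} \sim s^3\left(f_{T_i}/\Lambda^4\right)^2 :\quad 3\times 2 + 2\times(-4) = -2,\\
&\frac{\sigma_{NP}(\sqrt{s}=30\;\textrm{TeV})}{\sigma_{NP}(\sqrt{s}=3\;\textrm{TeV})}
  \sim \left(\frac{30^2}{3^2}\right)^3 = 10^6 .
\end{aligned}
```

The last line holds at fixed \(f_{T_i}/\Lambda^4\) and illustrates why the higher-energy stages are so much more sensitive to these operators.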

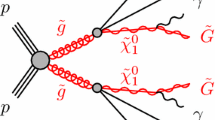

The left panel of Fig. 1 shows the Feynman diagrams induced by the \(O_{T_i}\) operators, while the right panel shows the SM background. The contributions of the \(O_{T_{1,6}}\) operators are exactly equal to those of the \(O_{T_{0,5}}\) operators, respectively. Therefore, in the following we consider only the \(O_{T_{0,2,5,7,8,9}}\) operators.

3 PCA assistant event selection strategy

PCA is one of the most commonly used linear dimensionality reduction methods. It linearly transforms a large number of original variables into a smaller number of important variables that capture most of the information in the original data-set.

Denote the points in a data-set as \(\vec {p}_i=(p_i^1, p_i^2, \ldots , p_i^m)\), where \(1 \le i \le N\), N is the number of points and m is the number of dimensions of each point (also called the number of features). The PCA algorithm starts by standardizing the points. In this paper, we use z-score standardization, \(x_i^j=(p_i^j - {\bar{p}}^j)/\epsilon ^j\), where \({\bar{p}}^j\) and \(\epsilon ^j\) are the mean and standard deviation of the j-th feature over the whole data-set. The data-set can then be expressed as the \(m\times N\) matrix \(X=(\vec {x}_1^T, \vec {x}_2^T, \ldots , \vec {x}_N^T)\), whose columns are the standardized points, and the \(m\times m\) covariance matrix is obtained as \(C=XX^T\). A normalization factor such as \(1/(N-1)\) is omitted here, since it rescales the eigenvalues but leaves the eigenvectors unchanged. Next, the eigenvectors of C are calculated. Let \(\vec {\eta }_j\) denote the unit-normalized eigenvector corresponding to the eigenvalue \(\lambda _j\), with the \(\{\lambda _j\}\) in descending order. The PCA algorithm projects each point onto the \(\vec {\eta }_j\) to obtain the new features \({\tilde{x}}_i^j = \vec {\eta }_j \cdot \vec {x}_i\).
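The steps above (z-score standardization, \(C=XX^T\), eigen-decomposition, projection) can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' code: the helper names `fit_pca` and `project` are hypothetical, points are stored as rows (so \(X\) is the transpose of the row array), and the population standard deviation (`ddof=0`) is assumed for \(\epsilon ^j\).

```python
import numpy as np


def fit_pca(p):
    """Fit PCA to a data-set p of shape (N, m), one point per row.

    Returns the feature means, feature standard deviations, the eigenvalues
    of C = X X^T in descending order, and the matching unit-norm
    eigenvectors stored as the columns of `eta`.
    """
    mean = p.mean(axis=0)
    std = p.std(axis=0)            # assumption: population std (ddof=0)
    x = (p - mean) / std           # z-score standardization, shape (N, m)
    X = x.T                        # columns are the standardized points, shape (m, N)
    C = X @ X.T                    # m x m matrix, no 1/(N-1) normalization
    lam, eta = np.linalg.eigh(C)   # C is symmetric; eigh returns ascending eigenvalues
    order = np.argsort(lam)[::-1]  # re-sort into descending order
    return mean, std, lam[order], eta[:, order]


def project(x, eta, m_prime):
    """New features x~_i^j = eta_j . x_i for the first m_prime eigenvectors."""
    return x @ eta[:, :m_prime]


# Usage on a toy correlated data-set (not physics data).
rng = np.random.default_rng(0)
p = rng.normal(size=(500, 5)) @ rng.normal(size=(5, 5))
mean, std, lam, eta = fit_pca(p)
x_tilde = project((p - mean) / std, eta, 2)   # shape (500, 2)
```

`numpy.linalg.eigh` is used rather than `eig` because \(C\) is symmetric, which guarantees real eigenvalues and orthonormal eigenvectors.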

Reducing the dimension of the data with PCA is equivalent to projecting the points onto the directions that retain the largest amount of information. Each eigenvector \(\vec {\eta }_j\) defines a projection axis, and the corresponding eigenvalue \(\lambda _j\) is, up to the normalization of C, the variance of the data projected onto that axis. According to the maximum-variance criterion, a larger variance means that more information is retained; to keep the information loss small, one should therefore choose the projection axes with the largest variances, i.e. the \(\vec {\eta }_j\) corresponding to the largest \(\lambda _j\). By selecting the \(m'\) eigenvectors corresponding to the first \(m'\) eigenvalues, the dimension of each point is reduced from m for \(\vec {x}_i\) to \(m'\) for \(\vec {{\tilde{x}}}_i\). The components of \(\vec {{\tilde{x}}}_i\), i.e. \({\tilde{x}}_i^j\) with \(1\le j \le m'\), are the first \(m'\) principal components of the data-set.
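The statement that each eigenvalue measures the variance along its axis can be checked numerically. With the unnormalized \(C=XX^T\) and z-scored data (population standard deviation assumed), the variance of the j-th projected feature equals \(\lambda _j/N\), and \(\sum _j \lambda _j = Nm\) because every standardized feature has unit variance. The toy data and the helper name `pca_spectrum` below are hypothetical and serve only as an illustration.

```python
import numpy as np


def pca_spectrum(p):
    """Z-score p (N, m), then return (x, eigenvalues, eigenvectors) of C = X X^T, descending."""
    x = (p - p.mean(axis=0)) / p.std(axis=0)
    lam, eta = np.linalg.eigh(x.T @ x)
    order = np.argsort(lam)[::-1]
    return x, lam[order], eta[:, order]


# Toy data (hypothetical): 6 features driven mainly by 2 hidden directions plus small noise.
rng = np.random.default_rng(1)
latent = rng.normal(size=(2000, 2)) * np.array([5.0, 3.0])
p = latent @ rng.normal(size=(2, 6)) + 0.1 * rng.normal(size=(2000, 6))

x, lam, eta = pca_spectrum(p)
N, m = x.shape
proj_std = (x @ eta).std(axis=0)       # standard deviation of each projected feature
retained = np.cumsum(lam) / lam.sum()  # fraction of the total variance kept by the first m'
```

Comparing `proj_std` across components is the kind of inspection used later in this paper to choose \(m'\): components whose standard deviation is much smaller than the leading ones carry little of the background variance.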

The eigenvectors give the directions along which the original data vary, and each eigenvalue measures the variance of the data along the corresponding direction. Since the background is mainly distributed along the leading eigenvectors, background points are expected to lie close to the axes defined by these eigenvectors after centering (i.e., after the mean is subtracted). If the signal is distributed differently from the background, the distances between the signal points and the eigenvectors will be larger. Therefore, once the \(\vec {\eta }_j\) are obtained, we use the distances between the points and the \(\vec {\eta }_j\) (denoted as \(d_{i,j}\)) as anomaly scores to search for signal events.
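The geometric idea can be illustrated on a two-dimensional toy example: background points spread along one direction stay close to the leading eigenvector, while points displaced perpendicular to it have large distances. The data, displacement and helper name `distance_to_axis` are hypothetical; the toy background is generated with zero mean, so no explicit centering step is shown.

```python
import numpy as np


def distance_to_axis(x, eta):
    """Distance of each row of x to the line through the origin along the unit vector eta."""
    proj = x @ eta
    return np.sqrt(np.maximum(np.sum(x**2, axis=1) - proj**2, 0.0))  # clip rounding below 0


# Hypothetical 2D toy: background stretched along (1, 1)/sqrt(2), signal displaced sideways.
rng = np.random.default_rng(2)
axis = np.array([1.0, 1.0]) / np.sqrt(2.0)
perp = np.array([1.0, -1.0]) / np.sqrt(2.0)
bg = 3.0 * rng.normal(size=(5000, 1)) * axis + 0.3 * rng.normal(size=(5000, 1)) * perp
sig = 3.0 * rng.normal(size=(500, 1)) * axis + (2.0 + 0.3 * rng.normal(size=(500, 1))) * perp

# Leading eigenvector of the (already nearly centered) background; eigh sorts ascending.
eta1 = np.linalg.eigh(bg.T @ bg)[1][:, -1]
d_bg = distance_to_axis(bg, eta1)
d_sig = distance_to_axis(sig, eta1)
```

The distance is unchanged if the eigenvector's sign flips, which matters because eigen-solvers fix the sign of an eigenvector arbitrarily.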

3.1 Data preparation

To test the feasibility of the PCAAD algorithm for searching for aQGCs, we build the data-set by Monte Carlo (MC) simulation with the MadGraph5_aMC@NLO toolkit [93,94,95], followed by a muon-collider-like detector simulation with Delphes [96]. To avoid infrared divergences, the standard cuts are applied by default in this section; the cuts associated with infrared divergences are

where \(p_{T,\gamma }\) and \(\eta _{\gamma }\) are the transverse momentum and pseudo-rapidity of each photon, respectively, and \(\Delta R_{\gamma \gamma }=\sqrt{\Delta \phi ^2 + \Delta \eta ^2}\), with \(\Delta \phi \) and \(\Delta \eta \) the differences in azimuthal angle and pseudo-rapidity between two photons, respectively. The signal events are generated for one operator at a time, and in this section the coefficients are set to the upper bounds listed in Table 1. We require each event to contain at least three photons. The three hardest photons are selected and arranged in descending order of energy, so that each event is represented by 12 numbers, the components of the four-momenta of the three photons, i.e. by a 12-dimensional vector. In this section, we generate 600,000 events for the SM and 30,000 events for NP. Note that the interference is ignored in this section, because here we concentrate on the features of the background and the signal.
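A sketch of how one event could be turned into the 12-dimensional vector described above. The input format (rows of \((E, p_x, p_y, p_z)\)) and the function name `event_features` are hypothetical. The text does not define "hardest"; here it is assumed to mean largest transverse momentum, after which the three selected photons are ordered by descending energy as stated.

```python
import numpy as np


def event_features(photons):
    """Map one event's photons to a 12-component vector, or None if fewer than 3 photons.

    photons: array-like of shape (n, 4) with rows (E, px, py, pz) -- hypothetical format.
    """
    ph = np.asarray(photons, dtype=float).reshape(-1, 4)
    if len(ph) < 3:
        return None                                      # at least three photons are required
    pt = np.hypot(ph[:, 1], ph[:, 2])                    # transverse momentum of each photon
    hardest = ph[np.argsort(pt)[::-1][:3]]               # assumption: "hardest" = largest pT
    ordered = hardest[np.argsort(hardest[:, 0])[::-1]]   # descending energy
    return ordered.reshape(12)                           # (E1, px1, py1, pz1, E2, ..., pz3)
```

Events for which the function returns `None` are simply dropped before PCA is applied.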

Note that we deliberately do not use the physical information contained in the data, because we want to verify that our method is independent of the physical content. For example, for each photon the four components of the four-momentum are not independent of each other, and neither are the four-momenta of the three photons. We assume that PCAAD does not know that these data represent the four-momenta of photons and do not use the above relationships; the data are simply treated as vectors of 12 numbers.

3.2 Event selection strategy

To search for NP in the target data-set based on PCAAD, we adopt the following procedure:

1. Prepare a data-set for the SM using MC. The SM data-set is denoted as \(p^{\textrm{SM}}\), and the target data-set as \(p^{\textrm{tar}}\).

2. Apply the z-score standardization to the SM data-set, \(x_i^{\textrm{SM},j}=(p_i^{\textrm{SM},j} - {\bar{p}}^{\textrm{SM},j})/\epsilon ^{\textrm{SM},j}\), where \({\bar{p}}^{\textrm{SM},j}\) and \(\epsilon ^{\textrm{SM},j}\) are the mean and the standard deviation of the j-th feature of the SM data-set. The target data-set is standardized with the same SM quantities, \(x_i^{\textrm{tar},j}=(p_i^{\textrm{tar},j} - {\bar{p}}^{\textrm{SM},j})/\epsilon ^{\textrm{SM},j}\), so that both data-sets undergo exactly the same transformation.

3. Denote \(X^{\textrm{SM}}=((\vec {x}_1^{\textrm{SM}})^T, (\vec {x}_2^{\textrm{SM}})^T, \ldots , (\vec {x}_{N^{\textrm{SM}}}^{\textrm{SM}})^T)\) and \(X^{\textrm{tar}}=((\vec {x}_1^{\textrm{tar}})^T, (\vec {x}_2^{\textrm{tar}})^T, \ldots , (\vec {x}_{N^{\textrm{tar}}}^{\textrm{tar}})^T)\), where \(N^{\textrm{SM}}\) and \(N^{\textrm{tar}}\) are the numbers of points in the SM and target data-sets, respectively. Calculate the covariance matrix of the SM as \(C^{\textrm{SM}}=X^{\textrm{SM}}(X^{\textrm{SM}})^T\).

4. Compute the eigenvalues and eigenvectors of the covariance matrix \(C^{\textrm{SM}}\).

5. Sort the eigenvalues in descending order and select the eigenvectors \(\vec {\eta } _j^{\textrm{SM}}\) corresponding to the \(m'\) (\(m'< m=12\)) largest eigenvalues. As explained below, we use \(m'=4\) in this paper.

6. Obtain the \(m'\) new features by projecting both \(X^{\textrm{SM}}\) and \(X^{\textrm{tar}}\) onto the \(\vec {\eta } _j^{\textrm{SM}}\), i.e. \({\tilde{x}}_i^{\textrm{SM},j}=\vec {\eta } _j^{\textrm{SM}}\cdot \vec {x}_i^{\textrm{SM}}\) and \({\tilde{x}}_i^{\textrm{tar},j}=\vec {\eta } _j^{\textrm{SM}} \cdot \vec {x}_i^{\textrm{tar}}\).

7. The distance from a point \(\vec {x}_i\) to the axis defined by the unit eigenvector \(\vec {\eta }_j\) is \(d_{i,j} = \sqrt{|\vec {x}_i|^2 -\left( \vec {x}_i\cdot \vec {\eta }_j\right) ^2 }= \sqrt{|\vec {x}_i|^2 -({\tilde{x}}_i^j)^2 }\). The \(d_{i,j}\) of the points in the SM and target data-sets are denoted as \(d_{i,j}^{\textrm{SM}}\) and \(d_{i,j}^{\textrm{tar}}\), respectively.

8. Apply cuts on \(d_{i,j}\) to select events.
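Steps 2 to 8 can be condensed into a short sketch. It is an illustration under stated assumptions, not the authors' implementation: the function names are hypothetical, points are stored as rows, the population standard deviation is used, and the direction of the cut in `passes_cut` (keeping events whose distances are all larger than a threshold, motivated by the larger target distances) is an assumption that should be flipped to match whatever criterion is actually applied.

```python
import numpy as np


def pcaad_distances(p_sm, p_tar, m_prime=4):
    """Steps 2-7: fit on the SM sample only; return d_{i,j} (shape (N, m_prime)) for SM and target."""
    mean, std = p_sm.mean(axis=0), p_sm.std(axis=0)   # SM statistics only (step 2)
    x_sm = (p_sm - mean) / std
    x_tar = (p_tar - mean) / std                      # target uses the SM mean and std
    lam, eta = np.linalg.eigh(x_sm.T @ x_sm)          # C^SM = X^SM (X^SM)^T (steps 3-4)
    eta = eta[:, np.argsort(lam)[::-1][:m_prime]]     # top m' eigenvectors (step 5)

    def dist(x):
        proj = x @ eta                                # new features x~_i^j (step 6)
        norm2 = np.sum(x**2, axis=1, keepdims=True)
        return np.sqrt(np.maximum(norm2 - proj**2, 0.0))  # d_{i,j} (step 7)

    return dist(x_sm), dist(x_tar)


def passes_cut(d, d_cut):
    """Step 8 sketch: keep events whose m' distances all exceed d_cut (cut direction assumed)."""
    return np.all(d > d_cut, axis=1)
```

Fitting the mean, standard deviation and eigenvectors on the SM sample alone means that the target events never influence the reference axes, so any NP events stand out relative to a fixed SM description.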

Note that the PCAAD event selection strategy requires an SM data-set and is therefore a supervised machine learning algorithm. In this section, we use the NP data-set as the target data-set.

Taking the case of \(O_{T_0}\) at \(\sqrt{s} = 3\), 10, 14 and \(30\;\textrm{TeV}\) as examples, the standard deviations of \({\tilde{x}}_i^{\textrm{SM},j}\) (denoted as \({\tilde{\epsilon }}^{\textrm{SM},j}\)) are shown in Fig. 2. It can be seen that the \({\tilde{\epsilon }}^{\textrm{SM},j}\) with \(1\le j\le 4\) are much larger than the others, which indicates that the background points are mainly distributed along the first four eigenvectors. Therefore, we use \(m'=4\) in this paper. We have also verified that the improvement of the expected constraints on the operator coefficients achievable with a larger \(m'\) is negligible compared with the case \(m'=4\). The normalized distributions of \(d_{i,j}^{\textrm{SM}}\) and \(d_{i,j}^{\textrm{tar}}\) are shown in Fig. 3. As expected, \(d_{i,j}^{\textrm{tar}}\) are generally larger than \(d_{i,j}^{\textrm{SM}}\). In this paper, we therefore use \(d_{i,j}\) as the anomaly scores to discriminate the signal from the background. The means and standard deviations of \(p^{\textrm{SM}}\) used in the z-score standardization are listed in Sect. 1, as are the components of \(\vec {\eta } ^{\textrm{SM}}_{1,2,3,4}\).

Although the physical meanings of \(d_{i,1}\), \(d_{i,2}\), \(d_{i,3}\) and \(d_{i,4}\) are not needed when searching for NP signals with an AD approach such as PCAAD, there is still an interesting and noteworthy phenomenon. For the SM, the peaks of \(d_{i,1}\), \(d_{i,2}\), \(d_{i,3}\) and \(d_{i,4}\) are shifted to around 2, and the peak of \(d_{i,2}\) is closer to 0 than the others. After centering, the origin corresponds to the mean of the SM points, which has large energies but small momenta and therefore cannot represent the four-momenta of photons, which must be light-like. This is why \(d_{i,1}^{\textrm{SM}}\) cannot be too close to 0. Moreover, \(\vec {\eta } _2^{\textrm{SM}}\) approximately corresponds to the directions of all photon momenta along the \(\textbf{z}\)-axis. Thus, the fact that \(d_{i,2}\) is distributed closer to 0 shows that the photons tend to be aligned with the \(\textbf{z}\)-axis, which can be seen as a consequence of the infrared divergence. Meanwhile, \(d_{i,2}\) still peaks at a position larger than 0, rather than at 0, because of the cuts used to avoid the infrared divergence.

Another noteworthy result is that \({\tilde{\epsilon }} ^{\textrm{SM},9,10,11,12}\approx 0\), i.e., PCA automatically identifies redundant information. Ignoring the effect of the detector simulation, 4 of the 12 variables are linearly related to the others by \(\sum _n p_n^{\gamma } = (\sqrt{s},0,0,0)\), where \(p_{n=1,2,3}^{\gamma }\) are the four-momenta of the photons, and there are 3 further nonlinear relations \((p_n^{\gamma })^2=0\). The numerical results for \(\vec {\eta } ^{\textrm{SM}}_{9,10,11,12}\) at \(\sqrt{s}=3\;\textrm{TeV}\) indicate that,

where \(E_{1,2,3}\) are the energies of the three photons and \(p_{1,2,3}^{x,y,z}\) are the components of \(p_n^{\gamma }\). \({\tilde{\epsilon }} ^{\textrm{SM},9,10,11,12}\approx 0\) indicates that \({\tilde{x}}_{9,10,11,12}^{\textrm{SM}}\) are almost constant, which corresponds to \(\sum _n p_n^{\gamma } = (\sqrt{s},0,0,0)\). However, PCA is a linear method, so cleanly removing the nonlinear redundancies \((p_n^{\gamma })^2=0\) would require nonlinear PCA [97].
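The appearance of exactly four near-zero eigenvalues can be reproduced with a toy sample that is not a physical phase-space simulation. In the sketch below (the generator `toy_three_photon_events` is hypothetical), random three-momenta are forced to sum to zero, each photon is made massless, and all momenta are rescaled so that the energies sum to \(\sqrt{s}\). After z-score standardization, the four linear conservation laws survive as four null directions of \(C\), while the three nonlinear relations \((p_n^{\gamma })^2=0\) do not produce zero eigenvalues, consistent with the discussion above.

```python
import numpy as np


def toy_three_photon_events(n, sqrt_s, rng):
    """Hypothetical toy events (not a physical phase-space sample) obeying
    sum of four-momenta = (sqrt_s, 0, 0, 0) and E_k = |p_k| for all three photons."""
    p1 = rng.normal(size=(n, 3))
    p2 = rng.normal(size=(n, 3))
    p3 = -(p1 + p2)                                    # total three-momentum is zero
    mom = np.stack([p1, p2, p3], axis=1)               # shape (n, 3, 3)
    energy = np.linalg.norm(mom, axis=2)               # massless: E_k = |p_k|
    scale = sqrt_s / energy.sum(axis=1)                # rescale so that sum E_k = sqrt_s
    mom *= scale[:, None, None]
    energy *= scale[:, None]
    four = np.concatenate([energy[:, :, None], mom], axis=2)  # (n, 3, 4) as (E, px, py, pz)
    order = np.argsort(-four[:, :, 0], axis=1)         # descending energy within each event
    four = np.take_along_axis(four, order[:, :, None], axis=1)
    return four.reshape(n, 12)


rng = np.random.default_rng(4)
p = toy_three_photon_events(2000, 3000.0, rng)
x = (p - p.mean(axis=0)) / p.std(axis=0)
lam = np.sort(np.linalg.eigvalsh(x.T @ x))[::-1]
rel = lam / lam[0]  # the last four ratios are ~0: the four linear conservation laws
```

With real detector-level events the four eigenvalues are small rather than exactly zero, since resolution effects and the selection of only the three hardest photons break the conservation laws slightly.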

4 Constraints on the coefficients

When no NP signal is found, the PCAAD event selection strategy can also be used to constrain the coefficients of the NP operators. To this end, we generate events with the coefficients listed in Table 2. In this section, the target data-sets consist of events generated with the SM, the NP, and the interference between them all included. In Ref. [78], \(p_{T,\gamma } > 0.12 E_{\textrm{beam}}\) is used as part of the event selection strategy, where \(E_{\textrm{beam}}\) is the beam energy. To reduce the number of events that must be generated, the standard cut \(p_{T,\gamma } > 0.1 E_{\textrm{beam}}\) is required at generation level, while the other standard cuts are the same as those in Eq. (2).

Fig. 5 \(N_s/N_{\textrm{bg}}\) in the conservative case for \(O_{T_0}\) as functions of d, with \(f_{T_0}\) set to the upper bounds of Table 2. Top-left: \(\sqrt{s}=3\;\textrm{TeV}\); top-right: \(\sqrt{s}=10\;\textrm{TeV}\); bottom-left: \(\sqrt{s}=14\;\textrm{TeV}\); bottom-right: \(\sqrt{s}=30\;\textrm{TeV}\).

Fig. 6 Same as Fig. 5 but for \({\mathcal {S}}_{\textrm{stat}}\).

The cross-section can be expressed as a quadratic function of \(f_{T_{i}}/\Lambda ^{4}\), \(\sigma =\sigma _{\textrm{SM}}+ \sigma _{\textrm{int}} f_{T_{i}}/\Lambda ^{4}+ \sigma _{\textrm{NP}} \left( f_{T_{i}}/\Lambda ^{4}\right) ^2\), where \(\sigma _{\textrm{SM}}\) is the SM contribution, \(\sigma _{\textrm{int}} f_{T_{i}}/\Lambda ^{4}\) is the interference between the SM and the aQGCs, and \(\sigma _{\textrm{NP}} \left( f_{T_{i}}/\Lambda ^{4}\right) ^2\) is the contribution induced by the aQGCs alone. After obtaining the cross section after cuts for each coefficient value, we fit the cross sections to this quadratic form using the least-squares method. For simplicity, we use the same criterion on \(d_{i,1\le j \le 4}\) for each \(\sqrt{s}\) (denoted as d). Taking \(d_{i,1\le j \le 4}<4.2\) at \(\sqrt{s}=3\;\textrm{TeV}\) and \(d_{i,1\le j \le 4}<4.8\) at \(\sqrt{s}=10\), 14, and \(30\;\textrm{TeV}\) as examples, the cross-sections after the event selection strategy and the fitted cross-sections are shown in Fig. 4. The cross-sections after cuts are still well described by the quadratic form.
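The least-squares fit of the cross sections after cuts to the quadratic form can be done with `numpy.polyfit`. The helper name `fit_quadratic` and the input numbers below are hypothetical; they only show the bookkeeping, in particular that `polyfit` returns the coefficients starting from the highest power.

```python
import numpy as np


def fit_quadratic(f, sigma):
    """Least-squares fit sigma(f) = s_sm + s_int * f + s_np * f**2.

    f: coefficient values f_Ti / Lambda^4; sigma: cross sections after cuts.
    Returns (s_sm, s_int, s_np).
    """
    s_np, s_int, s_sm = np.polyfit(f, sigma, deg=2)  # polyfit returns the highest power first
    return s_sm, s_int, s_np


# Hypothetical inputs: made-up cross sections at a few coefficient values.
f = np.linspace(-2.0, 2.0, 9)
sigma = 1.5 + 0.2 * f + 0.7 * f**2
s_sm, s_int, s_np = fit_quadratic(f, sigma)
```

In practice the fitted \(\sigma _{\textrm{SM}}\) can also be compared with the cross section of the pure SM sample after the same cuts as a sanity check.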

To study the effect of different criteria, the PCAAD event selection strategy for \(O_{T_0}\) at \(\sqrt{s}=3\), 10, 14, and \(30\;\textrm{TeV}\) is investigated, and the cross sections after cuts corresponding to different d are fitted. The expected constraints on the coefficients are estimated with the fitted cross-sections and the statistical sensitivity defined as \({\mathcal {S}}_{\textrm{stat}}=\sqrt{2 \left[ (N_{\textrm{bg}}+N_{s}) \ln (1+N_{s}/N_{\textrm{bg}})-N_{s}\right] }\) [98, 99], where \(N_s=(\sigma -\sigma _{\textrm{SM}})L\), \(N_{\textrm{bg}}=\sigma _{\textrm{SM}}L\), and L is the integrated luminosity. The luminosities in the conservative case are 1, 10, 10 and \(10\;\textrm{ab}^{-1}\) for \(\sqrt{s}=3\), 10, 14, and \(30\;\textrm{TeV}\), respectively [86]. In the conservative case, \(N_s/N_{\textrm{bg}}\) and \({\mathcal {S}}_{\textrm{stat}}\) are calculated from the fitted cross sections with \(f_{T_0}\) set to the upper bounds in Table 2, and are shown in Figs. 5 and 6, respectively. \({\mathcal {S}}_{\textrm{stat}}\) peaks at \(d=4.2\) at \(\sqrt{s}=3\;\textrm{TeV}\) and at \(d=4.8\) at \(\sqrt{s}=10\), 14, and \(30\;\textrm{TeV}\). Consequently, these values of d are chosen as the criteria for the PCAAD event selection strategy.
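A sketch of how \({\mathcal {S}}_{\textrm{stat}}\) and expected bounds could be computed from the fitted quadratic cross section. Several choices here are assumptions made for illustration only: the helper names are hypothetical, the significance threshold `z=2.0` is not taken from this section, the inputs are made-up numbers (\(\sigma \) in fb, \(L\) in \(\textrm{fb}^{-1}\)), and the bounds are found by a simple grid scan rather than a root finder.

```python
import numpy as np


def s_stat(n_s, n_bg):
    """S_stat = sqrt(2[(N_bg + N_s) ln(1 + N_s/N_bg) - N_s]), defined for N_s > -N_bg."""
    n_s = np.asarray(n_s, dtype=float)
    arg = 2.0 * ((n_bg + n_s) * np.log1p(n_s / n_bg) - n_s)
    return np.sqrt(np.maximum(arg, 0.0))  # clip tiny negative rounding errors near N_s = 0


def expected_bounds(s_sm, s_int, s_np, lumi, z=2.0, f_max=10.0, n_grid=200001):
    """Grid scan of f = f_Ti/Lambda^4; return the smallest and largest f with S_stat < z.

    If a returned value equals +/- f_max, the scan range is too small.
    """
    f = np.linspace(-f_max, f_max, n_grid)
    n_bg = s_sm * lumi
    n_s = (s_int * f + s_np * f**2) * lumi   # N_s = (sigma - sigma_SM) L
    sig = np.full_like(f, np.inf)
    valid = n_s > -n_bg                      # the logarithm requires N_s > -N_bg
    sig[valid] = s_stat(n_s[valid], n_bg)
    allowed = f[sig < z]
    return allowed.min(), allowed.max()


# Hypothetical inputs: sigma in fb, f in TeV^-4, L = 1 ab^-1 = 1000 fb^-1.
lower, upper = expected_bounds(s_sm=1.0, s_int=0.0, s_np=0.5, lumi=1000.0)
```

For \(N_s \ll N_{\textrm{bg}}\) the expression reduces to the familiar \(N_s/\sqrt{N_{\textrm{bg}}}\); the logarithmic form remains valid when \(N_s\) is comparable to or larger than \(N_{\textrm{bg}}\), which is why it is used when tight cuts leave few background events.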

In this paper, both the conservative and the optimistic luminosity scenarios [86] are considered, and the corresponding expected constraints are listed in Tables 3 and 4, respectively. The energies and luminosities are the same as those used in Ref. [78]. Compared with the traditional event selection strategy used there, the constraints are of the same order of magnitude but generally tighter, especially the lower bounds.

5 Summary

Searching for NP signals at the LHC and future colliders requires processing large amounts of data, and quantum computers have great potential for processing such data. In this paper, we propose a PCAAD event selection strategy to search for NP signals, based on the PCA algorithm, which can be accelerated by quantum computers.

The PCAAD is an automatic event selection strategy that does not require prior knowledge of the physics content of the NP. Since both the aQGCs and the muon colliders are of interest to the HEP community, we use the tri-photon process at muon colliders as an example. Our results show that PCAAD is useful and efficient in searching for NP signals: the expected constraints on the operator coefficients of the aQGCs are generally tighter than those obtained with a traditional event selection strategy.

Data Availability Statement

This manuscript has no associated data or the data will not be deposited. [Authors’ comment: Our data come from Monte Carlo simulations using MadGraph5_aMC@NLO and can therefore be easily reproduced.]

References

O. Cremonesi, Neutrino masses and neutrinoless double beta decay: status and expectations (2010). arXiv:1002.1437

A. de Gouvea, A. Friedland, P. Huber, I. Mocioiu, Opportunities in neutrino theory—a snowmass white Paper (2013). arXiv:1309.7338

G.W. Bennett, B. Bousquet, H.N. Brown, G. Bunce, R.M. Carey, P. Cushman, G.T. Danby, P.T. Debevec, M. Deile, H. Deng, W. Deninger, S.K. Dhawan, V.P. Druzhinin, L. Duong, E. Efstathiadis, F.J.M. Farley, G.V. Fedotovich, S. Giron, F.E. Gray, D. Grigoriev, M. Grosse-Perdekamp, A. Grossmann, M.F. Hare, D.W. Hertzog, X. Huang, V.W. Hughes, M. Iwasaki, K. Jungmann, D. Kawall, M. Kawamura, B.I. Khazin, J. Kindem, F. Krienen, I. Kronkvist, A. Lam, R. Larsen, Y.Y. Lee, I. Logashenko, R. McNabb, W. Meng, J. Mi, J.P. Miller, Y. Mizumachi, W.M. Morse, D. Nikas, C.J.G. Onderwater, Y. Orlov, C.S. Özben, J.M. Paley, Q. Peng, C.C. Polly, J. Pretz, R. Prigl, G. zu Putlitz, T. Qian, S.I. Redin, O. Rind, B.L. Roberts, N. Ryskulov, S. Sedykh, Y.K. Semertzidis, P. Shagin, Y.M. Shatunov, E.P. Sichtermann, E. Solodov, M. Sossong, A. Steinmetz, L.R. Sulak, C. Timmermans, A. Trofimov, D. Urner, P. von Walter, D. Warburton, D. Winn, A. Yamamoto, D. Zimmerman, Final report of the E821 muon anomalous magnetic moment measurement at BNL. Phys. Rev. D 73, 072003 (2006). https://doi.org/10.1103/PhysRevD.73.072003

M. Huschle et al., Measurement of the branching ratio of \(\bar{B} \rightarrow D^{(\ast )} \tau ^- \bar{\nu }_\tau \) relative to \(\bar{B} \rightarrow D^{(\ast )} \ell ^- \bar{\nu }_\ell \) decays with hadronic tagging at Belle. Phys. Rev. D 92(7), 072014 (2015). https://doi.org/10.1103/PhysRevD.92.072014. arXiv:1507.03233

T. Aaltonen et al., High-precision measurement of the \(W\) boson mass with the CDF II detector. Science 376(6589), 170–176 (2022). https://doi.org/10.1126/science.abk1781

J. Ellis, Outstanding questions: physics beyond the Standard Model. Philos. Trans. R. Soc. Lond. A 370, 818–830 (2012). https://doi.org/10.1098/rsta.2011.0452

A. Radovic, M. Williams, D. Rousseau, M. Kagan, D. Bonacorsi, A. Himmel, A. Aurisano, K. Terao, T. Wongjirad, Machine learning at the energy and intensity frontiers of particle physics. Nature 560(7716), 41–48 (2018). https://doi.org/10.1038/s41586-018-0361-2

P. Baldi, P. Sadowski, D. Whiteson, Searching for exotic particles in high-energy physics with deep learning. Nat. Commun. 5, 4308 (2014). https://doi.org/10.1038/ncomms5308. arXiv:1402.4735

J. Ren, L. Wu, J.M. Yang, J. Zhao, Exploring supersymmetry with machine learning. Nucl. Phys. B 943, 114613 (2019). https://doi.org/10.1016/j.nuclphysb.2019.114613. arXiv:1708.06615

M. Abdughani, J. Ren, L. Wu, J.M. Yang, Probing stop pair production at the LHC with graph neural networks. JHEP 08, 055 (2019). https://doi.org/10.1007/JHEP08(2019)055. arXiv:1807.09088

R. Iten, T. Metger, H. Wilming, L. del Rio, R. Renner, Discovering physical concepts with neural networks. Phys. Rev. Lett. 124, 010508 (2020). https://doi.org/10.1103/PhysRevLett.124.010508

J. Ren, L. Wu, J.M. Yang, Unveiling CP property of top-Higgs coupling with graph neural networks at the LHC. Phys. Lett. B 802, 135198 (2020). https://doi.org/10.1016/j.physletb.2020.135198. arXiv:1901.05627

Y.-C. Guo, L. Jiang, J.-C. Yang, Detecting anomalous quartic gauge couplings using the isolation forest machine learning algorithm. Phys. Rev. D 104(3), 035021 (2021). https://doi.org/10.1103/PhysRevD.104.035021. arXiv:2103.03151

M.A. Md Ali, N. Badrud’din, H. Abdullah, F. Kemi, Alternate methods for anomaly detection in high-energy physics via semi-supervised learning. Int. J. Mod. Phys. A 35(23), 2050131 (2020). https://doi.org/10.1142/S0217751X20501316

E. Fol, R. Tomás, J. Coello de Portugal, G. Franchetti, Detection of faulty beam position monitors using unsupervised learning. Phys. Rev. Accel. Beams 23(10), 102805 (2020). https://doi.org/10.1103/PhysRevAccelBeams.23.102805

R.T. D’Agnolo, A. Wulzer, Learning new physics from a machine. Phys. Rev. D 99(1), 015014 (2019). https://doi.org/10.1103/PhysRevD.99.015014. arXiv:1806.02350

J.-C. Yang, X.-Y. Han, Z.-B. Qin, T. Li, Y.-C. Guo, Measuring the anomalous quartic gauge couplings in the W\(^{+}\)W\(^{-}\rightarrow {}\) W\(^{+}\)W\(^{-}\) process at muon collider using artificial neural networks. JHEP 09, 074 (2022). https://doi.org/10.1007/JHEP09(2022)074. arXiv:2204.10034

J.-C. Yang, J.-H. Chen, Y.-C. Guo, Extract the energy scale of anomalous \(\gamma \gamma \rightarrow W^+W^-\) scattering in the vector boson scattering process using artificial neural networks. JHEP 09, 085 (2021). https://doi.org/10.1007/JHEP09(2021)085. arXiv:2107.13624

G. Kasieczka et al., The LHC Olympics 2020: a community challenge for anomaly detection in high energy physics. Rep. Prog. Phys. 84(12), 124201 (2021). https://doi.org/10.1088/1361-6633/ac36b9. arXiv:2101.08320

M. Kuusela, T. Vatanen, E. Malmi, T. Raiko, T. Aaltonen, Y. Nagai, Semi-supervised anomaly detection—towards model-independent searches of new physics. J. Phys. Conf. Ser. 368, 012032 (2012). https://doi.org/10.1088/1742-6596/368/1/012032. arXiv:1112.3329

M. Farina, Y. Nakai, D. Shih, Searching for new physics with deep autoencoders. Phys. Rev. D 101(7), 075021 (2020). https://doi.org/10.1103/PhysRevD.101.075021. arXiv:1808.08992

O. Cerri, T.Q. Nguyen, M. Pierini, M. Spiropulu, J.-R. Vlimant, Variational autoencoders for new physics mining at the large hadron collider. JHEP 05, 036 (2019). https://doi.org/10.1007/JHEP05(2019)036. arXiv:1811.10276

J.-C. Yang, Y.-C. Guo, L.-H. Cai, Using a nested anomaly detection machine learning algorithm to study the neutral triple gauge couplings at an \(e^{+}e^{-}\) collider. Nucl. Phys. B 977, 115735 (2022). https://doi.org/10.1016/j.nuclphysb.2022.115735. arXiv:2111.10543

M. van Beekveld, S. Caron, L. Hendriks, P. Jackson, A. Leinweber, S. Otten, R. Patrick, R. Ruiz De Austri, M. Santoni, M. White, Combining outlier analysis algorithms to identify new physics at the LHC. JHEP 09, 024 (2021). https://doi.org/10.1007/JHEP09(2021)024. arXiv:2010.07940

M. Crispim Romão, N.F. Castro, R. Pedro, Finding new physics without learning about it: anomaly detection as a tool for searches at colliders. Eur. Phys. J. C 81(1), 27 (2021). https://doi.org/10.1140/epjc/s10052-020-08807-w. arXiv:2006.05432

S. Zhang, J.-C. Yang, Y.-C. Guo, Using k-means assistant event selection strategy to study anomalous quartic gauge couplings at muon colliders (2023). arXiv:2302.01274

J. Biamonte, P. Wittek, N. Pancotti, P. Rebentrost, N. Wiebe, S. Lloyd, Quantum machine learning. Nature 549, 195–202 (2017). https://doi.org/10.1038/nature23474

M. Schuld, I. Sinayskiy, F. Petruccione, An introduction to quantum machine learning. Contemp. Phys. 56(2), 172–185 (2015). https://doi.org/10.1080/00107514.2014.964942

D.P. García, J. Cruz-Benito, F.J. García-Peñalvo, Systematic literature review: quantum machine learning and its applications (2022). arXiv:2201.04093

I.T. Jolliffe, J. Cadima, Principal component analysis: a review and recent developments. Philos. Trans. A Math. Phys. Eng. Sci. 374(2065), 20150202 (2016). https://doi.org/10.1098/rsta.2015.0202

S. Lloyd, M. Mohseni, P. Rebentrost, Quantum principal component analysis. Nat. Phys. 10, 631–633 (2014). https://doi.org/10.1038/nphys3029

C.-Y. Liou, J.-C. Huang, W.-C. Yang, Modeling word perception using the Elman network. Neurocomputing 71(16), 3150–3157 (2008). https://doi.org/10.1016/j.neucom.2008.04.030

C.-Y. Liou, W.-C. Cheng, J.-W. Liou, D.-R. Liou, Autoencoder for words. Neurocomputing 139, 84–96 (2014). https://doi.org/10.1016/j.neucom.2013.09.055

D.P. Kingma, M. Welling, Auto-encoding variational Bayes (2013). arXiv:1312.6114

Y. Burda, R. Grosse, R. Salakhutdinov, Importance weighted autoencoders (2015). arXiv:1509.00519

J. Romero, J.P. Olson, A. Aspuru-Guzik, Quantum autoencoders for efficient compression of quantum data. Quantum Sci. Technol. 2(4), 045001 (2017). https://doi.org/10.1088/2058-9565/aa8072. arXiv:1612.02806

C. Bravo-Prieto, Quantum autoencoders with enhanced data encoding. Mach. Learn. Sci. Technol. 2(3), 035028 (2021). https://doi.org/10.1088/2632-2153/ac0616

D. Bondarenko, P. Feldmann, Quantum autoencoders to denoise quantum data. Phys. Rev. Lett. 124, 130502 (2020). https://doi.org/10.1103/PhysRevLett.124.130502

A. Khoshaman, W. Vinci, B. Denis, E. Andriyash, H. Sadeghi, M.H. Amin, Quantum variational autoencoder. Quantum Sci. Technol. 4, 014001 (2019). https://doi.org/10.1088/2058-9565/aada1f

S. Chen, A. Glioti, G. Panico, A. Wulzer, Parametrized classifiers for optimal EFT sensitivity. JHEP 05, 247 (2021). https://doi.org/10.1007/JHEP05(2021)247. arXiv:2007.10356

C. Zhang, S.-Y. Zhou, Positivity bounds on vector boson scattering at the LHC. Phys. Rev. D 100(9), 095003 (2019). https://doi.org/10.1103/PhysRevD.100.095003. arXiv:1808.00010

Q. Bi, C. Zhang, S.-Y. Zhou, Positivity constraints on aQGC: carving out the physical parameter space. JHEP 06, 137 (2019). https://doi.org/10.1007/JHEP06(2019)137. arXiv:1902.08977

C. Zhang, S.-Y. Zhou, Convex geometry perspective to the (standard model) effective field theory space. Phys. Rev. Lett. 125(20), 201601 (2020). https://doi.org/10.1103/PhysRevLett.125.201601. arXiv:2005.03047

D. Espriu, F. Mescia, Unitarity and causality constraints in composite Higgs models. Phys. Rev. D 90(1), 015035 (2014). https://doi.org/10.1103/PhysRevD.90.015035. arXiv:1403.7386

R. Delgado, A. Dobado, M. Herrero, J. Sanz-Cillero, One-loop \(\gamma \gamma \rightarrow \) W\(_{L}^{+}\) W\(_{L}^{-}\) and \(\gamma \gamma \rightarrow \) Z\(_{L}\) Z\(_{L}\) from the Electroweak Chiral Lagrangian with a light Higgs-like scalar. JHEP 07, 149 (2014). https://doi.org/10.1007/JHEP07(2014)149. arXiv:1404.2866

S. Fichet, G. von Gersdorff, Anomalous gauge couplings from composite Higgs and warped extra dimensions. JHEP 03, 102 (2014). https://doi.org/10.1007/JHEP03(2014)102. arXiv:1311.6815

T.D. Lee, A theory of spontaneous T violation. Phys. Rev. D 8, 1226–1239 (1973). https://doi.org/10.1103/PhysRevD.8.1226

J.-C. Yang, M.-Z. Yang, Effect of the charged Higgs bosons in the radiative leptonic decays of \(B^-\) and \(D^-\) mesons. Mod. Phys. Lett. A 31(03), 1650012 (2015). https://doi.org/10.1142/S0217732316500127. arXiv:1508.00314

X.-G. He, G.C. Joshi, H. Lew, R. Volkas, Simplest Z-prime model. Phys. Rev. D 44, 2118–2132 (1991). https://doi.org/10.1103/PhysRevD.44.2118

J.-X. Hou, C.-X. Yue, The signatures of the new particles \(h_2\) and \(Z_{\mu \tau }\) at e-p colliders in the \(U(1)_{L_\mu -L_\tau }\) model. Eur. Phys. J. C 79(12), 983 (2019). https://doi.org/10.1140/epjc/s10052-019-7432-x. arXiv:1905.00627

K. Mimasu, V. Sanz, ALPs at colliders. JHEP 06, 173 (2015). https://doi.org/10.1007/JHEP06(2015)173. arXiv:1409.4792

C.-X. Yue, M.-Z. Liu, Y.-C. Guo, Searching for axionlike particles at future \(ep\) colliders. Phys. Rev. D 100(1), 015020 (2019). https://doi.org/10.1103/PhysRevD.100.015020. arXiv:1904.10657

C.-X. Yue, X.-J. Cheng, J.-C. Yang, Charged-current non-standard neutrino interactions at the LHC and HL-LHC*. Chin. Phys. C 47(4), 043111 (2023). https://doi.org/10.1088/1674-1137/acb993. arXiv:2110.01204

M. Born, L. Infeld, Foundations of the new field theory. Proc. R. Soc. Lond. A 144(852), 425–451 (1934). https://doi.org/10.1098/rspa.1934.0059

J. Ellis, S.-F. Ge, Constraining gluonic quartic gauge coupling operators with \(gg\rightarrow \gamma \gamma \). Phys. Rev. Lett. 121(4), 041801 (2018). https://doi.org/10.1103/PhysRevLett.121.041801. arXiv:1802.02416

J. Ellis, N.E. Mavromatos, T. You, Light-by-light scattering constraint on Born–Infeld theory. Phys. Rev. Lett. 118(26), 261802 (2017). https://doi.org/10.1103/PhysRevLett.118.261802. arXiv:1703.08450

B. Henning, X. Lu, T. Melia, H. Murayama, Higher dimension operators in the SM EFT. JHEP 08, 016 (2017). https://doi.org/10.1007/JHEP08(2017)016. arXiv:1512.03433 [Erratum: JHEP 09, 019 (2019)]

D.R. Green, P. Meade, M.-A. Pleier, Multiboson interactions at the LHC. Rev. Mod. Phys. 89(3), 035008 (2017). https://doi.org/10.1103/RevModPhys.89.035008. arXiv:1610.07572

G. Perez, M. Sekulla, D. Zeppenfeld, Anomalous quartic gauge couplings and unitarization for the vector boson scattering process \(pp\rightarrow W^+W^+jjX\rightarrow \ell ^+\nu _\ell \ell ^+\nu _\ell jjX\). Eur. Phys. J. C 78(9), 759 (2018). https://doi.org/10.1140/epjc/s10052-018-6230-1. arXiv:1807.02707

Y.-C. Guo, Y.-Y. Wang, J.-C. Yang, C.-X. Yue, Constraints on anomalous quartic gauge couplings via \(W\gamma jj\) production at the LHC. Chin. Phys. C 44(12), 123105 (2020). https://doi.org/10.1088/1674-1137/abb4d2. arXiv:2002.03326

Y.-C. Guo, Y.-Y. Wang, J.-C. Yang, Constraints on anomalous quartic gauge couplings by \(\gamma \gamma \rightarrow W^+W^-\) scattering. Nucl. Phys. B 961, 115222 (2020). https://doi.org/10.1016/j.nuclphysb.2020.115222. arXiv:1912.10686

J.-C. Yang, Y.-C. Guo, C.-X. Yue, Q. Fu, Constraints on anomalous quartic gauge couplings via \(Z\gamma jj\) production at the LHC. Phys. Rev. D 104(3), 035015 (2021). https://doi.org/10.1103/PhysRevD.104.035015. arXiv:2107.01123

G. Aad et al., Evidence for electroweak production of \(W^{\pm }W^{\pm }jj\) in \(pp\) collisions at \(\sqrt{s}=8\) TeV with the ATLAS detector. Phys. Rev. Lett. 113(14), 141803 (2014). https://doi.org/10.1103/PhysRevLett.113.141803. arXiv:1405.6241

A.M. Sirunyan et al., Measurements of production cross sections of WZ and same-sign WW boson pairs in association with two jets in proton-proton collisions at \(\sqrt{s} =\) 13 TeV. Phys. Lett. B 809, 135710 (2020). https://doi.org/10.1016/j.physletb.2020.135710. arXiv:2005.01173

M. Aaboud et al., Studies of \(Z\gamma \) production in association with a high-mass dijet system in \(pp\) collisions at \(\sqrt{s}=\) 8 TeV with the ATLAS detector. JHEP 07, 107 (2017). https://doi.org/10.1007/JHEP07(2017)107. arXiv:1705.01966

V. Khachatryan et al., Measurement of the cross section for electroweak production of Z\(\gamma \) in association with two jets and constraints on anomalous quartic gauge couplings in proton-proton collisions at \(\sqrt{s} = 8\) TeV. Phys. Lett. B 770, 380–402 (2017). https://doi.org/10.1016/j.physletb.2017.04.071. arXiv:1702.03025

A.M. Sirunyan et al., Measurement of the cross section for electroweak production of a Z boson, a photon and two jets in proton-proton collisions at \(\sqrt{s} =\) 13 TeV and constraints on anomalous quartic couplings. JHEP 06, 076 (2020). https://doi.org/10.1007/JHEP06(2020)076. arXiv:2002.09902

V. Khachatryan et al., Measurement of electroweak-induced production of W\(\gamma \) with two jets in pp collisions at \( \sqrt{s}=8 \) TeV and constraints on anomalous quartic gauge couplings. JHEP 06, 106 (2017). https://doi.org/10.1007/JHEP06(2017)106. arXiv:1612.09256

A.M. Sirunyan et al., Measurement of vector boson scattering and constraints on anomalous quartic couplings from events with four leptons and two jets in proton-proton collisions at \(\sqrt{s}=\) 13 TeV. Phys. Lett. B 774, 682–705 (2017). https://doi.org/10.1016/j.physletb.2017.10.020. arXiv:1708.02812

A.M. Sirunyan et al., Measurement of differential cross sections for Z boson pair production in association with jets at \(\sqrt{s} =\) 8 and 13 TeV. Phys. Lett. B 789, 19–44 (2019). https://doi.org/10.1016/j.physletb.2018.11.007. arXiv:1806.11073

M. Aaboud et al., Observation of electroweak \(W^{\pm }Z\) boson pair production in association with two jets in \(pp\) collisions at \(\sqrt{s} =\) 13 TeV with the ATLAS detector. Phys. Lett. B 793, 469–492 (2019). https://doi.org/10.1016/j.physletb.2019.05.012. arXiv:1812.09740

A.M. Sirunyan et al., Measurement of electroweak WZ boson production and search for new physics in WZ + two jets events in pp collisions at \(\sqrt{s} =\) 13 TeV. Phys. Lett. B 795, 281–307 (2019). https://doi.org/10.1016/j.physletb.2019.05.042. arXiv:1901.04060

V. Khachatryan et al., Evidence for exclusive \(\gamma \gamma \rightarrow W^+ W^-\) production and constraints on anomalous quartic gauge couplings in \(pp\) collisions at \( \sqrt{s}=7 \) and 8 TeV. JHEP 08, 119 (2016). https://doi.org/10.1007/JHEP08(2016)119. arXiv:1604.04464

A.M. Sirunyan et al., Observation of electroweak production of same-sign W boson pairs in the two jet and two same-sign lepton final state in proton-proton collisions at \(\sqrt{s} = \) 13 TeV. Phys. Rev. Lett. 120(8), 081801 (2018). https://doi.org/10.1103/PhysRevLett.120.081801. arXiv:1709.05822

A.M. Sirunyan et al., Search for anomalous electroweak production of vector boson pairs in association with two jets in proton-proton collisions at 13 TeV. Phys. Lett. B 798, 134985 (2019). https://doi.org/10.1016/j.physletb.2019.134985. arXiv:1905.07445

A.M. Sirunyan et al., Observation of electroweak production of W\(\gamma \) with two jets in proton-proton collisions at \(\sqrt{s}\) = 13 TeV. Phys. Lett. B 811, 135988 (2020). https://doi.org/10.1016/j.physletb.2020.135988. arXiv:2008.10521

A.M. Sirunyan et al., Evidence for electroweak production of four charged leptons and two jets in proton-proton collisions at \(\sqrt{s}\) = 13 TeV. Phys. Lett. B 812, 135992 (2021). https://doi.org/10.1016/j.physletb.2020.135992. arXiv:2008.07013

J.-C. Yang, Z.-B. Qing, X.-Y. Han, Y.-C. Guo, T. Li, Tri-photon at muon collider: a new process to probe the anomalous quartic gauge couplings. JHEP 07, 053 (2022). https://doi.org/10.1007/JHEP07(2022)053. arXiv:2204.08195

D. Buttazzo, D. Redigolo, F. Sala, A. Tesi, Fusing vectors into scalars at high energy lepton colliders. JHEP 11, 144 (2018). https://doi.org/10.1007/JHEP11(2018)144. arXiv:1807.04743

J.P. Delahaye, M. Diemoz, K. Long, B. Mansoulié, N. Pastrone, L. Rivkin, D. Schulte, A. Skrinsky, A. Wulzer, Muon colliders (2019). arXiv:1901.06150

M. Lu, A.M. Levin, C. Li, A. Agapitos, Q. Li, F. Meng, S. Qian, J. Xiao, T. Yang, The physics case for an electron-muon collider. Adv. High Energy Phys. 2021, 6693618 (2021). https://doi.org/10.1155/2021/6693618. arXiv:2010.15144

R. Franceschini, M. Greco, Higgs and BSM physics at the future muon collider. Symmetry 13(5), 851 (2021). https://doi.org/10.3390/sym13050851. arXiv:2104.05770

R. Palmer et al., Muon collider design. Nucl. Phys. B Proc. Suppl. 51, 61–84 (1996). https://doi.org/10.1016/0920-5632(96)00417-3. arXiv:acc-phys/9604001

S.D. Holmes, V.D. Shiltsev, Muon Collider (Springer, Heidelberg, pp. 816–822, 2013). https://doi.org/10.1007/978-3-642-23053-0_48. arXiv:1202.3803

A. Costantini, F. De Lillo, F. Maltoni, L. Mantani, O. Mattelaer, R. Ruiz, X. Zhao, Vector boson fusion at multi-TeV muon colliders. JHEP 09, 080 (2020). https://doi.org/10.1007/JHEP09(2020)080. arXiv:2005.10289

H. Al Ali et al., The muon Smasher’s guide. Rep. Prog. Phys. 85(8), 084201 (2022). https://doi.org/10.1088/1361-6633/ac6678

T. Han, Y. Ma, K. Xie, High energy leptonic collisions and electroweak parton distribution functions. Phys. Rev. D 103(3), L031301 (2021). https://doi.org/10.1103/PhysRevD.103.L031301. arXiv:2007.14300

T. Han, Y. Ma, K. Xie, Quark and gluon contents of a lepton at high energies. JHEP 02, 154 (2022). https://doi.org/10.1007/JHEP02(2022)154. arXiv:2103.09844

C. Aime et al., Muon collider physics summary (2022). arXiv:2203.07256

W. Yin, M. Yamaguchi, Muon g-2 at a multi-TeV muon collider. Phys. Rev. D 106(3), 033007 (2022). https://doi.org/10.1103/PhysRevD.106.033007. arXiv:2012.03928

O. Eboli, M. Gonzalez-Garcia, J. Mizukoshi, \(p p \rightarrow j j e^{\pm } \mu ^{\pm } \nu \nu \) and \(j j e^{\pm } \mu ^{\mp } \nu \nu \) at \(\mathcal{O}(\alpha _{\textrm{em}}^6)\) and \(\mathcal{O}(\alpha _{\textrm{em}}^4 \alpha _s^2)\) for the study of the quartic electroweak gauge boson vertex at CERN LHC. Phys. Rev. D 74, 073005 (2006). https://doi.org/10.1103/PhysRevD.74.073005. arXiv:hep-ph/0606118

O.J.P. Éboli, M.C. Gonzalez-Garcia, Classifying the bosonic quartic couplings. Phys. Rev. D 93(9), 093013 (2016). https://doi.org/10.1103/PhysRevD.93.093013. arXiv:1604.03555

J. Alwall, R. Frederix, S. Frixione, V. Hirschi, F. Maltoni, O. Mattelaer, H.S. Shao, T. Stelzer, P. Torrielli, M. Zaro, The automated computation of tree-level and next-to-leading order differential cross sections, and their matching to parton shower simulations. JHEP 07, 079 (2014). https://doi.org/10.1007/JHEP07(2014)079. arXiv:1405.0301

N.D. Christensen, C. Duhr, FeynRules—Feynman rules made easy. Comput. Phys. Commun. 180, 1614–1641 (2009). https://doi.org/10.1016/j.cpc.2009.02.018. arXiv:0806.4194

C. Degrande, C. Duhr, B. Fuks, D. Grellscheid, O. Mattelaer, T. Reiter, UFO—the Universal FeynRules output. Comput. Phys. Commun. 183, 1201–1214 (2012). https://doi.org/10.1016/j.cpc.2012.01.022. arXiv:1108.2040

J. de Favereau, C. Delaere, P. Demin, A. Giammanco, V. Lemaître, A. Mertens, M. Selvaggi, DELPHES 3. A modular framework for fast simulation of a generic collider experiment. JHEP 02, 057 (2014). https://doi.org/10.1007/JHEP02(2014)057. arXiv:1307.6346

M. Linting, J.J. Meulman, P.J.F. Groenen, A.J. van der Kooij, Nonlinear principal components analysis: introduction and application. Psychol. Methods 12(3), 336–358 (2007). https://doi.org/10.1037/1082-989X.12.3.336

G. Cowan, K. Cranmer, E. Gross, O. Vitells, Asymptotic formulae for likelihood-based tests of new physics. Eur. Phys. J. C 71, 1554 (2011). https://doi.org/10.1140/epjc/s10052-011-1554-0. arXiv:1007.1727 [Erratum: Eur. Phys. J. C 73, 2501 (2013)]

P. Zyla et al., Review of particle physics. PTEP 2020(8), 083C01 (2020). https://doi.org/10.1093/ptep/ptaa104

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China under Grant No. 12147214, the Natural Science Foundation of the Liaoning Scientific Committee No. LJKZ0978 and the Outstanding Research Cultivation Program of Liaoning Normal University (No. 21GDL004).

Appendix A: The z-score standardization and eigenvectors used in this paper

The data sets are standardized using the means and standard deviations of \(p^{\textrm{SM}}\), which are listed in Tables 5 and 6, respectively. The components of the eigenvectors \(\vec {\eta } _{1,2,3,4}^{\textrm{SM}}\) are listed in Tables 7, 8, 9, and 10, respectively.
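To illustrate how such tabulated quantities are typically produced and then applied, the following is a minimal NumPy sketch, not the authors' code. It assumes that \(p^{\textrm{SM}}\) is an array of shape (number of events, number of features) built from SM events, that the z-score uses the population standard deviation, and that the eigenvectors are those of the covariance matrix of the standardized SM sample, ordered by decreasing eigenvalue. The function names `fit_sm_reference` and `project` and the random toy data are hypothetical.

```python
import numpy as np


def fit_sm_reference(p_sm: np.ndarray, n_components: int = 4):
    """Return SM means, standard deviations, and leading eigenpairs.

    p_sm: array of shape (n_events, n_features) from SM events
    (the feature content is a hypothetical placeholder here).
    """
    mean = p_sm.mean(axis=0)
    std = p_sm.std(axis=0)              # population std (ddof=0), an assumption
    z = (p_sm - mean) / std             # z-score standardization
    cov = np.cov(z, rowvar=False)       # feature-feature covariance matrix
    eigvals, eigvecs = np.linalg.eigh(cov)  # eigh returns ascending eigenvalues
    order = np.argsort(eigvals)[::-1]       # reorder to descending variance
    keep = order[:n_components]
    return mean, std, eigvals[keep], eigvecs[:, keep]


def project(p: np.ndarray, mean: np.ndarray, std: np.ndarray,
            eta: np.ndarray) -> np.ndarray:
    """Standardize any data set with the SM statistics, then project onto eta."""
    return ((p - mean) / std) @ eta


# Toy usage with random numbers standing in for SM events.
rng = np.random.default_rng(0)
p_sm = rng.normal(size=(1000, 6)) * np.arange(1, 7) + 10.0
mean, std, eigvals, eta = fit_sm_reference(p_sm, n_components=4)
scores = project(p_sm[:5], mean, std, eta)  # shape (5, 4)
```

The key design point, consistent with the appendix, is that both the standardization constants and the eigenvectors are fixed from the SM sample alone, so that any other data set (for example, events including NP contributions) is transformed with exactly the same SM-derived quantities.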

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

Funded by SCOAP3. SCOAP3 supports the goals of the International Year of Basic Sciences for Sustainable Development.

About this article

Cite this article

Dong, YF., Mao, YC. & Yang, JC. Searching for anomalous quartic gauge couplings at muon colliders using principal component analysis. Eur. Phys. J. C 83, 555 (2023). https://doi.org/10.1140/epjc/s10052-023-11719-0

DOI: https://doi.org/10.1140/epjc/s10052-023-11719-0