Abstract

This paper presents the observation of four-top-quark (\(t\bar{t}t\bar{t}\)) production in proton-proton collisions at the LHC. The analysis is performed using an integrated luminosity of 140 \(\hbox {fb}^{-1}\) at a centre-of-mass energy of 13 TeV collected using the ATLAS detector. Events containing two leptons with the same electric charge or at least three leptons (electrons or muons) are selected. Event kinematics are used to separate signal from background through a multivariate discriminant, and dedicated control regions are used to constrain the dominant backgrounds. The observed (expected) significance of the measured \(t\bar{t}t\bar{t}\) signal with respect to the standard model (SM) background-only hypothesis is 6.1 (4.3) standard deviations. The \(t\bar{t}t\bar{t}\) production cross section is measured to be \(22.5^{+6.6}_{-5.5}\) fb, consistent with the SM prediction of \(12.0 \pm 2.4\) fb within 1.8 standard deviations. Data are also used to set limits on the three-top-quark production cross section, being an irreducible background not measured previously, and to constrain the top-Higgs Yukawa coupling and effective field theory operator coefficients that affect \(t\bar{t}t\bar{t}\) production.


1 Introduction

The top quark is the heaviest elementary particle in the Standard Model (SM) and has a strong connection to the SM Higgs boson as well as potentially new particles in various theories beyond the SM (BSM). It is therefore relevant to study rare processes involving the top quark, such as the production of four top quarks (\(t\bar{t}t\bar{t}\)), which is predicted by the SM but has not been observed yet. The \(t\bar{t}t\bar{t}\) cross section could be enhanced in many BSM models, including gluino pair production in supersymmetric theories [1, 2], scalar-gluon pair production [3, 4], the associated production of a heavy scalar or pseudoscalar boson with a top-quark pair in two-Higgs-doublet models [5,6,7], or in top-quark-compositeness models [8]. Additionally, the \(t\bar{t}t\bar{t}\) cross section is sensitive to the strength of the top-quark Yukawa coupling, and its charge conjugation and parity (CP) properties [9, 10]. It is also sensitive to various four-fermion interactions [11,12,13,14] and the Higgs oblique parameter [15] in the context of an effective field theory (EFT) framework.

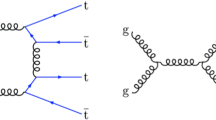

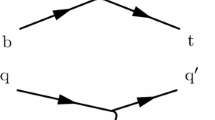

Within the SM, the predicted cross section of \(t\bar{t}t\bar{t}\) production in proton–proton (pp) collisions at a centre-of-mass energy \(\sqrt{s}=13\) TeV is \(\sigma _{t\bar{t}t\bar{t}}=12.0\) fb at next-to-leading order (NLO) in QCD including electroweak corrections, with a relative scale uncertainty of \(\pm \,20\%\) [16,17,18]. This prediction is used as a reference throughout this paper, as in the previous publications [19, 20]. Including threshold resummation at next-to-leading logarithmic accuracy increases the total production cross section by approximately 12% and reduces the scale uncertainty significantly, leading to \(\sigma _{t\bar{t}t\bar{t}}=13.4^{+1.0}_{-1.8}\) fb [21]. Illustrative Feynman diagrams for SM \(t\bar{t}t\bar{t}\) production are shown in Fig. 1. Other rare multi-top-quark production processes that have not yet been observed, such as three-top-quark production (\(t\bar{t}t\)), are expected to have cross sections of O(1 fb) in the SM, an order of magnitude smaller than that of \(t\bar{t}t\bar{t}\) production. They can also be sensitive to BSM effects.

The \(t\bar{t}t\bar{t}\) process results in various final states depending on the top quark decays. These final states are classified based on the number of electrons or muons produced in the semileptonic top quark decays, including those originating from subsequent \(\tau \) leptonic decays. This paper focuses on two types of events: those with exactly two same-charge isolated leptons (2LSS) and those with at least three isolated leptons (3L). In the SM, 7% and 5% of the produced four top-quark events result in 2LSS and 3L final states, respectively. While these channels, referred to as 2LSS/3L, are relatively rare final states, they are advantageous due to low levels of background. The \(t\bar{t}t\bar{t}\) topology is also characterised by a high light-jet and b-jet multiplicity and a large rest mass of the event, amounting to about \(700~\hbox {GeV}\).
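As a rough illustration of how rare these final states are, the sketch below multiplies the reference SM cross section (12.0 fb) by the integrated luminosity of the data set (\(140~\hbox {fb}^{-1}\)) and by the quoted 2LSS and 3L fractions. It gives the number of produced events only; detector acceptance and selection efficiency are not modelled, and the function name is purely illustrative.

```python
def produced_events(cross_section_fb, luminosity_fb_inv, fraction=1.0):
    """Number of produced events: sigma * L * fraction (no acceptance or efficiency)."""
    return cross_section_fb * luminosity_fb_inv * fraction


SIGMA_TTTT_FB = 12.0  # reference SM prediction used in this paper
LUMI_FB_INV = 140.0   # integrated luminosity of the data set

total = produced_events(SIGMA_TTTT_FB, LUMI_FB_INV)
n_2lss = produced_events(SIGMA_TTTT_FB, LUMI_FB_INV, 0.07)  # 7% of events are 2LSS
n_3l = produced_events(SIGMA_TTTT_FB, LUMI_FB_INV, 0.05)    # 5% of events are 3L

print(f"{total:.0f} produced, {n_2lss:.0f} in 2LSS, {n_3l:.0f} in 3L")
```

Under these assumptions, only about two hundred of the produced four-top-quark events fall into the 2LSS/3L channels before any selection is applied.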

The ATLAS and CMS experiments have reported evidence for \(t\bar{t}t\bar{t}\) production in 13 TeV pp collisions at the LHC. The latest ATLAS result combines two analyses using \(139~\hbox {fb}^{-1}\) at \(\sqrt{s}=13\) TeV: one in the 2LSS/3L channel [19] and the other in the channel comprising events with one lepton or two leptons with opposite electric charge. This combination results in a measured cross-section of \(24^{+7}_{-6}\) fb, corresponding to an observed (expected) signal significance of 4.7 (2.6) standard deviations over the background-only predictions [20]. The latest CMS result is from a combination of several measurements using \(138~\hbox {fb}^{-1}\) at \(\sqrt{s}=13\) TeV, in the channels with zero, one and two electrons or muons with opposite-sign electric charges and the 2LSS/3L channel, yielding an observed (expected) significance of 4.0 (3.2) standard deviations [22]. The \(t\bar{t}t\bar{t}\) cross section measured by the CMS collaboration is \(17 \pm 5\) fb.

This paper presents a re-analysis of the \(140~\hbox {fb}^{-1}\) data set at \(\sqrt{s}=13~\hbox {TeV}\) in the 2LSS/3L channel with the ATLAS detector and supersedes the result of Ref. [19]. Compared to the previous result that showed evidence for \(t\bar{t}t\bar{t}\) production [19], this new measurement brings several improvements: an optimised selection with lower cuts on the leptons’ and jets’ transverse momenta; improved b-jet identification; a new data-driven estimation of the \(t\bar{t}W\)+jets background, one of the main backgrounds in this channel; a revised set of systematic uncertainties; an improved treatment of the \(t\bar{t}t\) background and a more powerful multivariate discriminant to separate the signal from background. This paper also presents limits under several BSM scenarios, such as limits on the three-top-quark production cross section, on the top-Higgs Yukawa coupling, and on EFT operators corresponding to heavy-flavour four-fermion interactions and the Higgs oblique parameter.

2 The ATLAS detector

The ATLAS detector [23] at the LHC covers nearly the entire solid angle around the collision point. It consists of an inner tracking detector surrounded by a thin superconducting solenoid, electromagnetic and hadron calorimeters, and a muon spectrometer incorporating three large superconducting air-core toroidal magnets.

The inner-detector system (ID) is immersed in a 2 T axial magnetic field and provides charged-particle tracking in the range \(|\eta | < 2.5\). The high-granularity silicon pixel detector covers the vertex region and typically provides four measurements per track, the first hit normally being in the insertable B-layer (IBL) installed before Run 2 [24, 25]. It is followed by the silicon microstrip tracker (SCT), which usually provides eight measurements per track. These silicon detectors are complemented by the transition radiation tracker (TRT), which enables radially extended track reconstruction up to \(|\eta | = 2.0\). The TRT also provides electron identification information based on the fraction of hits (typically 30 in total) above a higher energy-deposit threshold corresponding to transition radiation.

The calorimeter system covers the pseudorapidity range \(|\eta | < 4.9\). Within the region \(|\eta |< 3.2\), electromagnetic calorimetry is provided by barrel and endcap high-granularity lead/liquid-argon (LAr) calorimeters, with an additional thin LAr presampler covering \(|\eta | < 1.8\) to correct for energy loss in material upstream of the calorimeters. Hadron calorimetry is provided by the steel/scintillator-tile calorimeter, segmented into three barrel structures within \(|\eta | < 1.7\), and two copper/LAr hadron endcap calorimeters. The solid angle coverage is completed with forward copper/LAr and tungsten/LAr calorimeter modules optimised for electromagnetic and hadronic energy measurements respectively.

The muon spectrometer (MS) comprises separate trigger and high-precision tracking chambers measuring the deflection of muons in a magnetic field generated by the superconducting air-core toroidal magnets. The field integral of the toroids ranges between 2.0 and 6.0 T m across most of the detector. Three layers of precision chambers, each consisting of layers of monitored drift tubes, cover the region \(|\eta | < 2.7\), complemented by cathode-strip chambers in the forward region, where the background is highest. The muon trigger system covers the range \(|\eta | < 2.4\) with resistive-plate chambers in the barrel, and thin-gap chambers in the endcap regions.

Interesting events are selected by the first-level trigger system implemented in custom hardware, followed by selections made by algorithms implemented in software in the high-level trigger [26]. The first-level trigger accepts events from the 40 MHz bunch crossings at a rate below 100 kHz, which the high-level trigger further reduces in order to record events to disk at about 1 kHz.

An extensive software suite [27] is used in data simulation, in the reconstruction and analysis of real and simulated data, in detector operations, and in the trigger and data acquisition systems of the experiment.

3 Data and Monte Carlo simulation samples

This analysis was performed using the pp collision data collected by the ATLAS detector between 2015 and 2018 at \(\sqrt{s} = {13}\,\hbox {TeV}\). After the application of data-quality requirements [28], the data set corresponds to an integrated luminosity of \(140~\hbox {fb}^{-1}\).

Samples of simulated, or Monte Carlo (MC), events were produced to model the different signal and background processes. Additional samples were produced to estimate the modelling uncertainties for each process. The configurations used to produce the samples are summarised in Table 1. The effects of the additional \(pp\) collisions in the same or a nearby bunch crossing (pile-up) were modelled by overlaying minimum bias events simulated using Pythia8.186 [29] with the A3 set of tuned parameters (tune) [30] on events from hard-scatter processes. The MC events were weighted to reproduce the distribution of the average number of interactions per bunch crossing observed in the data. All samples generated with PowhegBox [31,32,33,34] and MadGraph5_aMC@NLO [35] were interfaced to Pythia8 to simulate the parton shower, fragmentation, and underlying event with the A14 tune [36] and the  distribution function (PDF) set. Samples using Pythia8 and Herwig7 have heavy-flavour hadron decays modelled by EvtGen v1.2.0 or EvtGen v1.6.0 [37]. All samples include leading-logarithm photon emission, either modelled by the parton shower generator or by PHOTOS [38]. The masses of the top quark, \(m_{\textrm{top}}\), and of the SM Higgs boson, \(m_H\), are set to \(172.5~\hbox {GeV}\) and \(125~\hbox {GeV}\), respectively. The signal and background samples were processed through the simulation of the ATLAS detector geometry and response using Geant4 [39], and then reconstructed using the same software as for collider data. Some of the alternative samples used to evaluate systematic uncertainties were instead processed through a fast detector simulation making use of parameterised showers in the calorimeters [40].


The nominal sample used to model the SM \(t\bar{t}t\bar{t}\) signal was generated using the MadGraph5_aMC@NLO v2.6.2 [45] generator, which provides matrix elements at NLO in QCD. The renormalisation (\(\mu _R\)) and factorisation (\(\mu _F\)) scales are set to \(\mu _R = \mu _F = m_{\texttt {T}}/4\), where \(m_{\texttt {T}}\) is the scalar sum of the transverse masses \(\sqrt{p_{\texttt {T}}^{2} + m^{2}}\) of the particles generated from the matrix element calculation, following Ref. [16]. An alternative \(t\bar{t}t\bar{t}\) sample generated with the same MadGraph5_aMC@NLO set-up, but interfaced to Herwig7.04 [46, 47] with the H7UE tune [47], is used to evaluate uncertainties due to the choice of parton shower and hadronisation model. Another \(t\bar{t}t\bar{t}\) sample was produced at NLO using the Sherpa 2.2.11 [48] generator to account for the modelling uncertainty from an alternative generator choice. The scales for this sample are set to \(\mu _R=\mu _F=\hat{H}_\text {T}/2\), where \(\hat{H}_\text {T}\) is defined as the scalar sum of the transverse momenta of all final state particles. To mitigate the effect of the large fraction of events with negative weights present in the nominal sample that would be detrimental to the training of the multivariate discriminant used to separate signal from background, an additional sample with settings similar to the nominal ones was generated using leading order (LO) matrix elements (MEs). The \(t\bar{t}t\bar{t}\) MC samples with different BSM effects such as non-SM top Yukawa coupling and various EFT operators, including the four-fermion and the Higgs oblique operators, were generated at LO with MadGraph5. They are referred to as “\(t\bar{t}t\bar{t}\) \(\kappa _t\)” and “\(t\bar{t}t\bar{t}\) EFT” in Table 1. Dedicated UFO models implemented in MadGraph5 with FeynRules [49, 50] are used to simulate these BSM effects. The alternative Yukawa coupling scenarios were simulated using the Higgs characterisation model [51]. 
The effects of the four-fermion and Higgs oblique operators are simulated using the SMEFT@NLO model [52] and the dedicated UFO model from Ref. [15], respectively.

The \(t\bar{t}W\) process was simulated using the Sherpa 2.2.10 [48, 53] generator. The MEs were calculated for up to one additional parton at NLO and up to two partons at LO using Comix [42] and  [41], and merged with the Sherpa parton shower [43] using the MEPS@NLO prescription [44] with a merging scale of \(30~\hbox {GeV}\). The choice of scales is \(\mu _R = \mu _F = m_\text {T}/2\). In addition to the nominal prediction at NLO in QCD, higher-order corrections related to electroweak (EW) contributions are also included. Event-by-event correction factors are applied that provide virtual NLO EW corrections to O(\(\alpha ^{2}\alpha _{\text {s}} ^{2}\)) and LO corrections to O(\(\alpha ^3\)) [48, 54, 55]. An independent Sherpa 2.2.10 sample was produced at LO to account for the sub-leading EW corrections to O(\(\alpha ^{3}\alpha _{\text {s}} \)) [16]. The NLO QCD and NLO EW contributions from Sherpa are combined following the method of Ref. [56]. The \(t\bar{t}W\) samples are normalised using a cross section of 722 fb computed at NLO including the hard non-logarithmically enhanced radiation at NLO in QCD [56]. This normalisation is used to determine the contribution of the \(t\bar{t}W\)+jets background before additional data-driven corrections are derived. The impact of the systematic uncertainty associated with the choice of generator is evaluated using an alternative \(t\bar{t}W\) sample generated with up to one additional parton in the final state at NLO accuracy in QCD using MadGraph5_aMC@NLO. In this sample, the different jet multiplicities were merged using the FxFx NLO matrix-element and parton-shower merging prescription [35] with a merging scale of \(30~\hbox {GeV}\). A separate MadGraph5 \(t\bar{t}W\)+jets sample including only the sub-leading EW corrections to O(\(\alpha ^{3}\alpha _{\text {s}} \)) is also included.

The \(t\bar{t}Z/\gamma ^*\) sample was generated at NLO, including off-shell Z contributions with \(m(\ell ^+\ell ^-) > 1~\hbox {GeV}\), and is normalised to the calculation of Ref. [57], extrapolated to take into account off-shell effects down to \(m(\ell ^+\ell ^-) = 1~\hbox {GeV}\). The \(t\bar{t}H\) process was generated at NLO QCD using the five-flavour scheme with the \(h_{\textrm{damp}}\) parameter set to \(0.75\times (2m_{\textrm{top}} +m_H)\). It is normalised to the cross section computed at NLO QCD plus NLO EW [57, 58]. The production of \(t\bar{t}\) and single-top-quark events was modelled at NLO QCD using PowhegBox. For the \(t\bar{t}\) sample, the \(h_{\textrm{damp}}\) parameter is set to 1.5 \(m_{\textrm{top}}\) [59]. The \(t\bar{t}\) and single-top-quark simulated samples are normalised to the cross sections calculated at next-to-next-to-leading order (NNLO) QCD including the resummation of next-to-next-to-leading logarithmic (NNLL) soft-gluon terms [60,61,62,63]. The overlap between the \(t\bar{t}\) and the single top tW final states is removed using the diagram-removal scheme [64]. The \(t\bar{t}t\) sample was generated at LO QCD using the five-flavour scheme, and includes \(t\bar{t}tW\) and \(t\bar{t}tq\) processes. The \(t\bar{t}tW\) and \(t\bar{t}tq\) samples are normalised to cross sections of 1.02 fb and 0.65 fb, respectively, computed at NLO using MadGraph5_aMC@NLO, with a central scale choice of \(\hat{H}_{\texttt {T}}/2\) and the NNPDF23 PDF set. Rare and small background contributions including \(tWZ\), \(tZq\), \(t\bar{t}VV\), \(t\bar{t}H H\), \(t\bar{t}W H\), V, VV, VVV and VH are normalised using their NLO theoretical cross sections.

4 Objects and event selection

Events were selected using single-lepton or dilepton triggers with variable electron and muon transverse momentum (\(p_{\text {T}}\)) thresholds, and various identification and isolation criteria depending on the lepton flavour and the data-taking period [65,66,67,68]. Events are required to have at least one vertex with at least two associated ID tracks with \(p_{\text {T}} > 0.5\) GeV. In each event, the primary vertex is defined as the reconstructed vertex that has the highest scalar sum of squared \(p_{\text {T}}\) of associated tracks [69] and is consistent with the average beam-spot position.
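The primary-vertex choice described above can be sketched as follows. This is a minimal illustration, not the ATLAS reconstruction code: each vertex is represented simply as a list of track \(p_{\text {T}}\) values in GeV (a hypothetical data layout), only tracks above the 0.5 GeV threshold enter the sum (an assumption of this sketch), and the beam-spot consistency requirement is not modelled.

```python
MIN_TRACK_PT_GEV = 0.5  # track pT threshold quoted in the text
MIN_TRACKS = 2          # minimum number of associated tracks per vertex


def select_primary_vertex(vertices):
    """Return the index of the vertex with the highest sum of squared track pT.

    `vertices` is a list of lists of track pT values in GeV (hypothetical layout).
    Returns None if no vertex has at least two tracks above threshold.
    """
    best_index, best_sum = None, -1.0
    for index, track_pts in enumerate(vertices):
        good = [pt for pt in track_pts if pt > MIN_TRACK_PT_GEV]
        if len(good) < MIN_TRACKS:
            continue  # vertex does not satisfy the track requirement
        sum_pt2 = sum(pt * pt for pt in good)
        if sum_pt2 > best_sum:
            best_index, best_sum = index, sum_pt2
    return best_index


# Toy event: the third vertex has a hard track but only one track in total.
toy_vertices = [[1.0, 2.0], [10.0, 0.6], [50.0]]
primary = select_primary_vertex(toy_vertices)
```

In the toy event, the second vertex is selected because the single-track vertex fails the track-multiplicity requirement.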

Electron candidates are reconstructed from energy deposits in the EM calorimeter associated with ID tracks [70] and are required to consist of a calorimeter energy cluster with a pseudorapidity of \(|\eta _{\text {cluster}}|<2.47\), excluding candidates in the transition region between the barrel and the endcap calorimeters (\({|\eta _{\text {cluster}}|\not \in [1.37,1.52]}\)). Electrons are required to meet the ‘tight’ likelihood-based identification criterion [70]. Muon candidates are reconstructed by combining tracks in the ID with tracks in the muon spectrometer [71]. They are required to have \(|\eta |<2.5\) and meet the ‘medium’ cut-based identification criterion.

The electron and muon candidates are required to have \(p_{\text {T}}>\) 15 GeV and meet the tight working point of the prompt-lepton isolation discriminant [72], trained to separate prompt and non-prompt leptons. The transverse impact parameter divided by its estimated uncertainty, \({|d_{0} |/\sigma (d_{0})}\), is required to be lower than five (three) for electron (muon) candidates. The longitudinal impact parameter must satisfy \({|z_{0} \sin (\theta ) |<0.5}\) mm for both lepton flavours. To suppress the background arising from incorrect charge assignment, an additional requirement is imposed on electrons based on the score of a boosted decision tree (BDT) discriminant that uses their calorimeter energy clusters and track properties [70]. Electrons and muons passing these criteria are referred to as ‘tight’ in the following. ‘Loose’ electrons are required to pass the same set of requirements as tight electrons except that they satisfy the ‘medium’ likelihood-based identification and loose isolation instead. Similarly, for ‘loose’ muons, the isolation requirement is relaxed.

Jets are reconstructed using a particle flow algorithm [73, 74] by combining measurements from both the ID and the calorimeter. The anti-\(k_{t}\) algorithm [75, 76] with a radius parameter of \(R=0.4\) is used. Jets are required to have \(p_{\text {T}} >{20}\,\hbox {GeV}\) and \(|\eta |<2.5\) and are calibrated as described in Ref. [74]. To reduce the effect from pile-up, the jet-vertex tagger [77], which identifies jets originating from the selected primary vertex, is applied to jets with \(p_{\text {T}} < 60~\hbox {GeV}\) and \(|\eta |<2.4\).

Jets containing b-hadrons are identified (b-tagged) via the DL1r algorithm [78, 79]. This algorithm uses deep-learning neural networks exploiting the distinct features of b-hadrons in terms of track impact parameters and displaced vertices reconstructed in the ID. A jet is considered b-tagged if it passes the operating point corresponding to 77% average efficiency for b-quark jets in simulated \(t\bar{t}\) events, with rejection factors against light-quark/gluon jets and c-quark jets of 192 and 6, respectively. Three additional operating points are defined with average b-tagging efficiencies of 85%, 70% and 60%. To fully exploit the b-tagging information of an event, each jet is assigned a pseudo-continuous b-tagging (PCBT) score that defines if a jet passes a given operating point but fails the adjacent tighter one. A score of two, three, four or five is assigned to a jet for which the tightest operating point passed is the 85%, 77%, 70% or 60% one, respectively. If a jet does not pass any operating point, a score of one is assigned.
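The PCBT score assignment can be sketched as below. The input format (the set of operating-point efficiencies that a jet passes) and the function names are hypothetical, and the sketch assumes the operating points are nested, so that a jet passing a tighter point also passes every looser one.

```python
# Operating points ordered from loosest to tightest: (efficiency in %, PCBT score).
OPERATING_POINTS = [(85, 2), (77, 3), (70, 4), (60, 5)]


def pcbt_score(passed_efficiencies):
    """Return the PCBT score (1 to 5) for a jet.

    `passed_efficiencies` is the set of operating points (in %) the jet passes.
    """
    score = 1  # jet fails every operating point
    for efficiency, point_score in OPERATING_POINTS:
        if efficiency not in passed_efficiencies:
            break  # nested points assumed: tighter ones fail as well
        score = point_score
    return score


def is_b_tagged(score):
    """A jet counts as b-tagged if it passes the 77% operating point (score >= 3)."""
    return score >= 3


scores = [pcbt_score(s) for s in (set(), {85}, {85, 77}, {85, 77, 70, 60})]
```

For the four toy jets, the scores are 1, 2, 3 and 5, and only the last two jets count as b-tagged.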

The missing transverse momentum in the event, whose magnitude is denoted in the following by \(E_{\text {T}}^{\text {miss}}\), is defined as the negative vector sum of the \(p_{\text {T}}\) of the reconstructed and calibrated objects in the event [80]. This sum also includes the momenta of the ID tracks that are matched to the primary vertex but are not associated with any other reconstructed objects.

A sequential overlap removal procedure, described in Ref. [19] and based on loose leptons, is applied so that the same calorimeter energy deposit or the same track is not used in two different reconstructed objects.

The event selection closely follows that of Ref. [19]. Each event must have at least one reconstructed lepton that matches a lepton that fired the trigger. The highest-\(p_{\text {T}}\) lepton is required to have \(p_{\text {T}} > 28\) GeV so that it is always above the trigger threshold. The events are required to have one same-sign tight lepton pair or at least three tight leptons, and at least one b-tagged jet. Events with two same-sign electrons are required to have an invariant mass satisfying \(m_{ee}>15~\hbox {GeV}\) and \(|m_{ee}-91~\hbox {GeV}|>10~\hbox {GeV}\), to reduce the charge mis-assignment background originating from low-mass resonances and Z boson decays. In events with at least three leptons, all opposite-sign same-flavour lepton pairs are required to satisfy \(|m_{\ell \ell }-91~\hbox {GeV}|>10~\hbox {GeV}\) to reduce the contamination from Z boson decays.
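The two invariant-mass requirements can be written compactly as in the following sketch. It assumes the pair masses (in GeV) have already been computed from the selected leptons; the function and constant names are illustrative and not taken from the analysis software.

```python
Z_MASS_GEV = 91.0     # reference Z boson mass used in the vetoes
Z_WINDOW_GEV = 10.0   # half-width of the excluded mass window
LOW_MASS_GEV = 15.0   # lower bound on the same-sign dielectron mass


def passes_same_sign_ee_veto(m_ee):
    """Same-sign ee events: m_ee > 15 GeV and outside the Z mass window."""
    return m_ee > LOW_MASS_GEV and abs(m_ee - Z_MASS_GEV) > Z_WINDOW_GEV


def passes_trilepton_z_veto(ossf_pair_masses):
    """3L events: every opposite-sign same-flavour pair must lie outside the Z window."""
    return all(abs(m - Z_MASS_GEV) > Z_WINDOW_GEV for m in ossf_pair_masses)


# Toy examples (masses in GeV)
ee_ok = passes_same_sign_ee_veto(50.0)
ee_on_z = passes_same_sign_ee_veto(95.0)
trilepton_ok = passes_trilepton_z_veto([60.0, 120.0])
trilepton_on_z = passes_trilepton_z_veto([60.0, 88.0])
```

An event with no opposite-sign same-flavour pair passes the trilepton veto trivially, because `all` over an empty list returns `True`.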

Events in the signal region (SR) are required to contain at least six jets, at least two of which are b-tagged. The scalar sum of the transverse momenta of the selected leptons and jets in the event (\(H_{\text {T}}\)) is required to be above \(500\,\hbox {GeV}\). This requirement is motivated by the high light-flavour jet and b-jet multiplicity as well as the large overall event activity characteristic of the \(t\bar{t}t\bar{t}\) process.
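A minimal sketch of the SR requirements is given below. It assumes that the jets and leptons passed in have already satisfied the object selection, that all momenta are in GeV, and that the b-tagged jet requirement is "at least two", as stated for the SR in Sect. 5.1. The function signature is hypothetical.

```python
def in_signal_region(jet_pts, n_btagged, lepton_pts, ht_min=500.0):
    """Check the SR requirements: >= 6 jets, >= 2 b-tagged jets, H_T > 500 GeV.

    H_T is the scalar sum of the pT of the selected jets and leptons.
    """
    n_jets = len(jet_pts)
    h_t = sum(jet_pts) + sum(lepton_pts)
    return n_jets >= 6 and n_btagged >= 2 and h_t > ht_min


# Toy events (pT values in GeV, illustrative only)
busy_event = in_signal_region([120, 90, 80, 60, 40, 30], 2, [50, 35])  # H_T = 505
soft_event = in_signal_region([100, 80, 60, 50, 40, 30], 2, [40, 30])  # H_T = 430
```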

The main sources of physics background are \(t\bar{t}W\)+jets, \(t\bar{t}Z\)+jets and \(t\bar{t}H\)+jets processes. Smaller backgrounds, for which all selected leptons are from W or Z boson decays or from leptonic \(\tau \)-lepton decays, include diboson or triboson production, single-top-quark production in association with a Z boson (\(tWZ\), \(tZq\)) and rare processes such as \(t\bar{t}t\), \(t\bar{t}H H\), \(t\bar{t}W H\) and \(t\bar{t}VV\), where \(V=W,Z\). These backgrounds, except for \(t\bar{t}W\)+jets production, are evaluated using simulated events. The \(t\bar{t}W\)+jets background is evaluated based on the information from the simulation and a data-driven technique described in Sect. 5. This is motivated because the \(t\bar{t}W\)+jets process is notoriously challenging to model accurately using only simulation [16], and because existing measurements of its cross section [19, 81, 82] are consistently above the theoretical predictions. The background events originating from \(t\bar{t}\)+jets and tW+jets production pass the selection if prompt leptons have a mis-assigned charge (QmisID) or leptons are fake/non-prompt. The QmisID background is evaluated using a data-driven technique while the fake/non-prompt background estimation is based on the template method utilising inputs from both simulation and data as described in Sect. 5 and already used in Ref. [19].

The estimated yield for each source of background is given in Sect. 8.

5 Data-driven background estimation

5.1 Estimation of the \(t\bar{t}W\) background

The theoretical modelling of the \(t\bar{t}W\) background at high jet multiplicity, corresponding to the phase space of this analysis, suffers from large uncertainties. To mitigate this effect, the normalisation of this background in jet multiplicity bins is determined using a data-driven approach, while other characteristics of the \(t\bar{t}W\) events are modelled by simulated events. The uncertainties related to the modelling of the kinematic distributions taken from simulation are discussed in Sect. 7.3. The estimate of the normalisation in each jet bin is based on a functional form that describes the evolution of the number of \(t\bar{t}W\) events N as a function of the jet multiplicity j, \(R(j)=N(j+1)/N(j)\) [83,84,85,86]. At high jet multiplicity, R(j) follows the so-called ’staircase scaling’ with R(j) being a constant denoted \(a_0\). This behaviour implies a fixed probability of additional jet radiation. For lower jet multiplicities, Poisson scaling is expected [86] with \(R(n) = a_1 / (1+n)\), where \(a_1\) is a constant and n is the number of additional jets to the hard process. It was derived in the Abelian limit of QCD by considering gluon radiation off hard quark legs and validated using experimental data [86]. The transition point between these scaling behaviours depends on the jet kinematic selection. Since \(t\bar{t}W\) production in the phase-space region of this analysis is dominated by events that include at least four jets in the matrix element at tree level, the parameterisation of the \(t\bar{t}W\) events starts at the fourth jet (\(n=j-4\)). Independent normalisation factors (NF) for the expected number of \(t\bar{t}W^{+}\) and \(t\bar{t}W^{-}\) events are introduced to take into account different production rates of these processes in \(pp\) collisions. The normalisation factor for \(t\bar{t}W\) events with \(j>4\) is then given by

\[ \textrm{NF}_{ t\bar{t}W (j)} = \left( \textrm{NF}_{ t\bar{t}W^+ (\textrm{4jet})} + \textrm{NF}_{ t\bar{t}W^- (\textrm{4jet})} \right) \times \prod _{j'=4}^{j-1} \left( a_0 + \frac{a_1}{1+(j'-4)} \right) . \qquad (1) \]

Dedicated control regions (CR) labelled as CR \(t\bar{t}W^+\)+jets, CR \(t\bar{t}W^-\)+jets, CR 1b(+) and CR 1b(-) are defined to determine the \(a_0\) and \(a_1\) scaling parameters and the overall number of \(t\bar{t}W^+\) and \(t\bar{t}W^-\) events with exactly 4 jets, \(\textrm{NF}_{ t\bar{t}W^+ (\textrm{4jet})}\) and \(\textrm{NF}_{ t\bar{t}W^- (\textrm{4jet})}\), respectively. The definition of these CRs is summarised in Table 2. The CR \(t\bar{t}W^+\)+jets and CR \(t\bar{t}W^-\)+jets regions are primarily used to adjust the \(t\bar{t}W\) normalisation in a phase space with at least two b-tagged jets as in the SR, while the CR 1b(+) and CR 1b(-) regions, which include events with \(N_j \ge 4\), are mainly used to determine the \(t\bar{t}W\) jet multiplicity spectrum.
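To make the role of the fitted parameters concrete, the sketch below propagates a 4-jet normalisation to higher jet multiplicities. It assumes that the staircase and Poisson terms are combined additively, so that the ratio of consecutive jet bins is \(a_0 + a_1/(1+n)\) with \(n=j'-4\). The numerical values of \(a_0\), \(a_1\) and the 4-jet yield are arbitrary illustrations, not fit results, and the function names are hypothetical.

```python
def ttw_jet_scaling(j, a0, a1, first_bin=4):
    """Yield in jet bin j relative to the 4-jet bin.

    Multiplies the assumed bin-to-bin ratio R = a0 + a1 / (1 + n), n = j' - 4,
    for j' running from the first bin up to j - 1.
    """
    if j < first_bin:
        raise ValueError("parameterisation starts at the fourth jet")
    scale = 1.0
    for j_prime in range(first_bin, j):
        n = j_prime - first_bin  # number of jets beyond the fourth
        scale *= a0 + a1 / (1.0 + n)
    return scale


def ttw_norm_factor(j, nf_4jet, a0, a1):
    """Normalisation in jet bin j given the total 4-jet normalisation."""
    return nf_4jet * ttw_jet_scaling(j, a0, a1)


# Illustrative parameters only (not taken from the fit)
A0, A1, NF_4JET = 0.3, 0.5, 100.0
yields = {j: ttw_norm_factor(j, NF_4JET, A0, A1) for j in range(4, 8)}
```

With these toy parameters the bin-to-bin ratio falls from 0.8 towards the staircase value \(a_0=0.3\) as the jet multiplicity increases, which is the behaviour the two scaling regimes are meant to capture.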

The \(t\bar{t}W\) CRs, along with the CRs defined in Sect. 5.2, are included in the likelihood fit to data, together with the SR (see Sect. 6). Figure 2 shows the jet-multiplicity distributions in each of the four \(t\bar{t}W\) CRs. After the fit, the predictions provide a good description of the data.

As mentioned above, unlike the signal and other background processes, \(t\bar{t}W\) production has a pronounced charge asymmetry in \(pp\) collisions: \(t\bar{t}W^{+}\) events are produced approximately twice as abundantly as \(t\bar{t}W^{-}\) events. This property is used to validate the data-driven estimation of this background by examining the difference between the number of events with all tight leptons positively charged (positive events) and the number of events with all tight leptons negatively charged (negative events). The charge-symmetric \(t\bar{t}t\bar{t}\) signal and most non-\(t\bar{t}W\) backgrounds are expected to cancel in this difference, providing a validation of the \(t\bar{t}W\) background. The distribution of the number of jets obtained from this procedure for events entering the four \(t\bar{t}W\) CRs and the SR defined in Table 2 is shown in Fig. 3. A good description of the jet multiplicity distribution in data confirms that it can be predicted by two scaling parameters, as given in Eq. 1.
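The charge-difference validation can be illustrated with the toy sketch below. The yields are invented for illustration, and the uncertainty \(\sqrt{N_+ + N_-}\) assumes independent Poisson counts, which is an assumption of this sketch rather than the treatment used in the analysis.

```python
import math


def charge_difference(n_positive, n_negative):
    """Return (N+ - N-, uncertainty) for each jet-multiplicity bin.

    The uncertainty sqrt(N+ + N-) assumes independent Poisson counts.
    """
    return [(n_pos - n_neg, math.sqrt(n_pos + n_neg))
            for n_pos, n_neg in zip(n_positive, n_negative)]


# Toy yields per jet bin (illustrative numbers only, not from the analysis)
symmetric = [12, 8, 5]    # charge-symmetric processes: same yield in both samples
ttw_plus = [20, 10, 4]    # ttW+ contribution to positive events
ttw_minus = [10, 5, 2]    # ttW- contribution to negative events

n_pos = [s + w for s, w in zip(symmetric, ttw_plus)]
n_neg = [s + w for s, w in zip(symmetric, ttw_minus)]
differences = charge_difference(n_pos, n_neg)
```

In this toy example the charge-symmetric contribution cancels exactly, so the differences in each bin equal the \(t\bar{t}W^{+}\) minus \(t\bar{t}W^{-}\) yields. In data, the cancellation holds only on average and within statistical fluctuations.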

Fig. 2 Post-fit distributions for the number of jets (\(N_{j}\)) in each of the four \(t\bar{t}W\) CRs: (a) CR \(t\bar{t}W^+\)+jets, (b) CR \(t\bar{t}W^-\)+jets, (c) CR 1b(+) and (d) CR 1b(-). The ratio of the data to the total post-fit prediction is shown in the lower panel. The dashed blue lines show the pre-fit prediction in the upper panel and the ratio of the data to the total pre-fit prediction in the lower panel. The shaded band represents the total post-fit uncertainty in the prediction.

Fig. 3 Post-fit distribution for the difference between the number of positive events and the number of negative events (\(N_+-N_-\)) as a function of the number of jets (\(N_\text {j}\)) in the sum of four \(t\bar{t}W\) CRs and the SR. The uncertainties on the normalisation factors and on the \(t\bar{t}W\) modelling parameters are represented by the shaded band. The ratio of the data to the total post-fit prediction is shown in the lower panel.

5.2 Fake/non-prompt lepton background

A template method is used to estimate the fake/non-prompt background. This method relies on simulation samples to model the kinematic distributions of the background processes arising from fake and non-prompt leptons and on CRs to determine the normalisation of these components. These CRs are included in the fit, together with the \(t\bar{t}W\) CRs and the SR, and the normalisation factors are determined simultaneously with the \(t\bar{t}t\bar{t}\) signal. The uncertainties related to the modelling of the kinematic distributions predicted in the simulation are discussed in Sect. 7.4. The method is similar to that used in the previous analysis [19].

The following main contributions of the fake/non-prompt background are distinguished:

- Events with a single reconstructed non-prompt electron (muon) from heavy-flavour decay; this contribution is referred to as \(\text {HF}~e\) (\(\text {HF}~\mu \)).
- Events with a single reconstructed non-prompt electron originating from photon conversions in the detector material (\(\text {Mat. Conv.}\)).
- Events with a virtual photon (\(\gamma ^*\)) leading to an \(e^{+}e^{-}\) pair where only one of the electrons is reconstructed (\(\text {Low}~m_{\gamma ^*}\)).

Minor contributions to the fake/non-prompt background arising from events with a lepton originating from light-meson decay or with a jet mis-identified as a lepton are determined using simulated events.

As summarised in Table 2, the CRs labelled as CR HF e and CR HF \(\mu \) are defined to determine the normalisation of the \(\text {HF}~e\) and \(\text {HF}~\mu \) backgrounds, respectively. Similarly, the CRs labelled as CR Mat. Conv. and CR Low \(m_{\gamma ^*}\) are defined to determine the normalisation of \(\text {Mat. Conv.}\) and \(\text {Low}~m_{\gamma ^*}\) backgrounds, respectively. Each region is designed to have a dominant background component.

Figure 4 shows the distributions and event yields used in the template method: the third-highest lepton \(p_{\text {T}}\) and the number of events. A good description of the data distributions by the fitted predictions is observed in all CRs.

5.3 Charge mis-assignment background

The QmisID background affects only the ee and \(e\mu \) channels of the 2LSS region, and is estimated using the same method as in the previous analysis [19]. The probability for an electron to have its charge incorrectly assigned is estimated using a data sample of \(Z\rightarrow ee\) events requiring the invariant mass of the electron pair to be within 10 GeV of the Z boson mass and without any requirement on the charge of the two electrons. The charge mis-assignment rate, extracted from the fraction of events with same-charge electrons within this sample, is parameterised as a function of the electron transverse momentum and pseudorapidity. The rate varies from 0.004% to 4% depending on the electron \(p_{\text {T}}\) and \(|\eta |\). The expected number of events arising from the QmisID background is determined by applying the measured charge mis-assignment rate to data events satisfying the requirements of the kinematic selection of the 2LSS channel, except that the two leptons are required to be of opposite charge.
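A minimal sketch of how a per-electron mis-assignment rate could be turned into an event weight is given below. The weight formula is a commonly used convention and is an assumption here, since the text does not spell it out. The function names are hypothetical, and the example rates are simply the two ends of the quoted 0.004% to 4% range.

```python
def flip_probability(eps1, eps2):
    """Probability that exactly one of the two leptons has its charge flipped."""
    return eps1 * (1.0 - eps2) + eps2 * (1.0 - eps1)

def qmisid_weight(eps1, eps2):
    """Weight applied to an observed opposite-charge event to estimate the
    same-charge yield: P(exactly one flip) / P(zero or two flips)."""
    p = flip_probability(eps1, eps2)
    return p / (1.0 - p)

# ee event: both electrons can flip (illustrative rates of 0.004% and 4%).
w_ee = qmisid_weight(0.00004, 0.04)
# e-mu event: the muon rate is set to zero in this sketch.
w_emu = qmisid_weight(0.04, 0.0)
print(w_ee, w_emu)
```

Summing such weights over the opposite-charge data events passing the rest of the selection would give the estimated QmisID yield in this simplified picture.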

Post-fit distributions for the fitted variables in the CRs for the fake/non-prompt lepton background. For a CR HF e and b CR HF \(\mu \), the third-highest lepton \(p_{\text {T}}\) is fitted, while for c CR Mat. Conv. and d CR Low \(m_{\gamma ^*}\), the number of events is used. The ratio of the data to the total post-fit prediction is shown in the lower panel. The dashed blue lines show the pre-fit prediction in the upper panel and the ratio of the data to the total pre-fit prediction in the lower panel. The shaded band represents the total post-fit uncertainty in the prediction. In a, b, the last bin contains the overflow

6 Signal extraction and cross section measurement

The background composition of the SR is largely dominated by the production of top-quark pairs in association with bosons. A multivariate discriminant built with a Graph Neural Network (GNN) [87], implemented using the graph_nets library from TensorFlow [88], is used to separate the \(t\bar{t}t\bar{t}\) signal from the background. From each event, a fully connected graph is constructed with ‘nodes’ corresponding to reconstructed jets, electrons, muons, and the missing transverse momentum of the event. The features of each node include the four-momentum of the object, assumed to be massless (footnote 4), the jet PCBT score, the lepton charge, and an integer labelling the type of object represented by the node. The ‘edges’ between nodes carry three features with information about the angular separation between the objects they connect. Additionally, the jet multiplicity is treated as a ‘global’ feature. The nodes, edges, global feature and the GNN hyperparameters are optimised to maximise the integral under the receiver operating characteristic curve of the GNN event classifier.
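The graph construction described above can be sketched in plain Python. This is an illustration only and does not use the graph_nets API. The three edge features are assumed to be \(\Delta \eta \), \(\Delta \phi \) and \(\Delta R\), which the text does not specify, and the dictionary keys and the example event are hypothetical.

```python
import math
from itertools import permutations

def delta_phi(phi1, phi2):
    """Azimuthal difference wrapped into [-pi, pi)."""
    return (phi1 - phi2 + math.pi) % (2.0 * math.pi) - math.pi

def build_graph(objects):
    """Build a fully connected directed graph from reconstructed objects.

    objects: list of dicts with keys 'type', 'pt', 'eta', 'phi'.
    Returns (nodes, edges, global_features).
    """
    nodes = [(o["pt"], o["eta"], o["phi"], o["type"]) for o in objects]
    edges = {}
    for i, j in permutations(range(len(objects)), 2):
        deta = objects[i]["eta"] - objects[j]["eta"]
        dphi = delta_phi(objects[i]["phi"], objects[j]["phi"])
        # Assumed edge features: (delta eta, delta phi, delta R).
        edges[(i, j)] = (deta, dphi, math.hypot(deta, dphi))
    n_jets = sum(1 for o in objects if o["type"] == "jet")
    return nodes, edges, {"n_jets": n_jets}

# Hypothetical event with two jets and one muon.
event = [
    {"type": "jet", "pt": 120.0, "eta": 0.5, "phi": 3.0},
    {"type": "jet", "pt": 80.0, "eta": -1.2, "phi": -3.0},
    {"type": "muon", "pt": 45.0, "eta": 0.1, "phi": 0.4},
]
nodes, edges, global_features = build_graph(event)
print(len(nodes), len(edges), global_features)
```

A fully connected graph with \(n\) nodes has \(n(n-1)\) directed edges, so the number of edge features grows quadratically with the object multiplicity.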

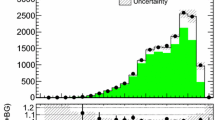

The GNN training is performed for events passing the SR requirements. The LO \(t\bar{t}t\bar{t}\) simulated signal sample is used in the training. The MC simulated samples, corresponding to all background components, represent the background in the training. The GNN discriminant is chosen as the observable of the analysis to extract the \(t\bar{t}t\bar{t}\) signal. Figure 5 shows the distribution of the GNN score in the signal region.

Following the strategy of the previous publication [19], a BDT discriminant is also trained as a cross-check in the SR by combining several input observables, of which the sum of the four highest PCBT scores among all jets in the event has the highest discriminating power. The separation power of the \(t\bar{t}t\bar{t}\) signal from the background is slightly better for the GNN, leading to a 10% higher expected significance for this method.

The \(t\bar{t}t\bar{t}\) production cross-section, the normalisation factors of the backgrounds, and the \(t\bar{t}W\) modelling parameters defined in Sect. 5.1 are determined via a binned likelihood fit to the discriminant score distribution in the SR and to the event yields or to the discriminating variable distributions in the eight CRs listed in Table 2. The maximum-likelihood fit is performed with the RooFit package [89] based on a likelihood function built on the Poisson probability that the observed data are compatible with the model prediction. The value of each nuisance parameter, describing the systematic uncertainties for both signal and background processes (see Sect. 7), is constrained by a Gaussian penalty term present in the likelihood function, while all normalisation factors and \(t\bar{t}W\) modelling parameters, described in Sect. 5, are unconstrained.
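The structure of such a likelihood can be sketched with a toy model: a Poisson term per bin, one unconstrained signal strength, and one nuisance parameter with a Gaussian penalty. This is a simplified illustration and not the RooFit model of the analysis. The bin contents, the 10% background uncertainty and the grid scan are all hypothetical choices.

```python
import math

def nll(mu, theta, data, signal, background, bkg_syst):
    """Negative log-likelihood of a binned Poisson model.

    Expected yield per bin: mu * s + b * (1 + bkg_syst * theta), where mu is
    unconstrained and theta has a unit-width Gaussian penalty term.
    """
    total = 0.5 * theta ** 2  # Gaussian constraint on the nuisance parameter
    for n, s, b in zip(data, signal, background):
        expected = mu * s + b * (1.0 + bkg_syst * theta)
        if expected <= 0.0:
            return math.inf
        total += expected - n * math.log(expected) + math.lgamma(n + 1.0)
    return total

# Hypothetical three-bin templates; pseudo-data generated at mu = 1.9, theta = 0.
signal = [1.0, 3.0, 8.0]
background = [50.0, 20.0, 5.0]
data = [1.9 * s + b for s, b in zip(signal, background)]

# Crude grid scan standing in for a proper minimiser.
grid = [(i / 10.0, j / 10.0) for i in range(41) for j in range(-20, 21)]
best_mu, best_theta = min(
    grid, key=lambda p: nll(p[0], p[1], data, signal, background, 0.1)
)
print(best_mu, best_theta)
```

Because the pseudo-data are built exactly at the chosen parameter values, the scan returns them as the best-fit point; a real fit would use a numerical minimiser and many nuisance parameters.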

7 Systematic uncertainties

Systematic uncertainties arise from the modelling of the signal processes, from theoretical or data-driven predictions of the background processes, as well as from experimental effects. These uncertainties affect both the shape and the normalisation of the estimations. In addition, they can lead to event migration between different regions. The different sources of systematic uncertainty are described below and their impact on the \(t\bar{t}t\bar{t}\) cross section measurement after the fit is shown in Table 6.

7.1 Experimental uncertainties

The dominant experimental uncertainties arise from the measurement of the b-tagging efficiencies and mis-tagging rates [90,91,92]. Corrections applied to simulated samples to match the performance in data and the associated uncertainties are determined for each jet flavour separately in five PCBT score bins and in bins of jet \(p_{\text {T}}\). The resulting uncertainties are decomposed into 40 independent components (referred to as eigenvector components) for b-jet tagging, 20 for c-jet and 20 for light-jet mis-tagging. Due to a lack of data for b-/c-/light jets calibration with \(p_{\text {T}} >400/300/250\) GeV, the corrections for these jets are extrapolated from the highest \(p_{\text {T}}\) bin below these thresholds; uncertainties in this extrapolation are determined from the simulation, independently for b-/c-/light jets.

Uncertainties in the calibration of the jet energy scale and resolution [93,94,95] play a subleading role among the experimental uncertainties. An additional minor uncertainty arises from the correction applied to simulated events associated with the jet-vertex-tagger selection [96].

Other experimental uncertainties have minor impacts on the measurements. The uncertainty in the combined 2015–2018 integrated luminosity is 0.83% [97], obtained using the LUCID-2 detector [98], complemented by measurements using the inner detector and calorimeters. An uncertainty in the corrections on the pile-up profile in the simulated samples is also considered. Uncertainties in electrons and muons arise from the calibration of the simulated samples to data. The calibrations correct for the efficiencies of the trigger, reconstruction, identification and isolation requirements, as well as the energy scale and resolution [70, 71]. For electrons, an additional uncertainty associated with the efficiency of the electric-charge identification is considered. Finally, an uncertainty in the measurement of \(E_{\text {T}}^{\text {miss}}\) is assigned due to a possible mis-calibration of its soft-track component [80].

Comparison between data and the predictions after a fit to data for the GNN distribution in the SR. The first bin contains underflow events. The ratio of the data to the total post-fit prediction is shown in the lower panel. The dashed blue lines show the pre-fit prediction in the upper panel and the ratio of the data to the total pre-fit prediction in the lower panel. The shaded band represents the total post-fit uncertainty in the prediction

7.2 Signal modelling uncertainties

Uncertainties in the modelling of SM \(t\bar{t}t\bar{t}\) production have the dominant impact on the measurements. They affect both the signal acceptance and the shape of the GNN discriminant. The two leading uncertainties are determined by comparing the nominal prediction with alternative samples generated with  and with MadGraph5_aMC@NLO+Herwig7. These uncertainties mainly cover two effects: different matching schemes between the matrix element and parton shower generators as well as different parton shower, hadronisation and fragmentation models. The uncertainty due to missing higher-order QCD corrections in simulated events is estimated by varying the renormalisation and factorisation scales in the matrix element individually or simultaneously up or down by a factor of two, and taking the maximum up and down variations. The PDF uncertainty is 1%. It is calculated as the RMS of the predictions from the 100 replicas of the NNPDF30_nlo_as_0118 PDF set following the PDF4LHC prescription [99]. The effect of PDF variation on the shape of the fitted distributions was found to be negligible.
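The two prescriptions mentioned above, the envelope of scale variations and the RMS over PDF replicas, can be written compactly. The sketch below is an assumption-laden illustration: the RMS is taken around the nominal prediction, and all input numbers are hypothetical.

```python
import math

def scale_envelope(nominal, variations):
    """Largest relative upward and downward shifts among the scale variations."""
    up = max(max(v - nominal for v in variations), 0.0)
    down = max(max(nominal - v for v in variations), 0.0)
    return up / nominal, down / nominal

def pdf_rms_uncertainty(nominal, replicas):
    """Relative RMS spread of the replica predictions around the nominal one."""
    mean_sq = sum((r - nominal) ** 2 for r in replicas) / len(replicas)
    return math.sqrt(mean_sq) / nominal

# Hypothetical predictions in arbitrary units.
up_rel, down_rel = scale_envelope(12.0, [10.0, 12.5, 15.0])
pdf_rel = pdf_rms_uncertainty(12.0, [11.0, 13.0])
print(up_rel, down_rel, pdf_rel)
```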


7.3 Uncertainties in irreducible backgrounds

The dominant uncertainty in the background predictions arises from the modelling of \(t\bar{t}W\), \(t\bar{t}H\) and \(t\bar{t}Z/\gamma ^*\) events. Uncertainties due to generator choices are determined by comparing the nominal predictions with those obtained with the alternative matrix element and/or parton shower generators specified in Table 1. Given that the \(N_\text {j}\) distribution in \(t\bar{t}W\) production is estimated using a data-driven method, the alternative MC sample simulated using MadGraph5_aMC@NLO is weighted to have the same \(N_\text {j}\) distribution as the nominal prediction. This avoids double counting of the systematic effects in \(N_\text {j}\) modelling. Minor uncertainties due to missing higher-order QCD corrections and PDF variations for each of the three processes are studied and determined using the same method as for \(t\bar{t}t\bar{t}\) production.

The uncertainties in the predicted cross sections of the background processes have a minor impact on the measurements. The uncertainty in the \(t\bar{t}t\) production cross section is set to 35%. This includes the effect of varying the renormalisation and factorisation scales up and down by a factor of two in the NLO QCD prediction, and the uncertainties from the PDF choice, EW LO contribution and the acceptance difference between MC samples generated using the five-flavour and the four-flavour schemes.

Uncertainties of 12% and 10% are included for the \(t\bar{t}Z/\gamma ^*\) and \(t\bar{t}H\) production cross sections, respectively [57]. An uncertainty of 30% is applied to the sum of \(tZq\) and \(tWZ\) processes [100,101,102]. The uncertainty in VV production is taken as the discrepancies between the measured differential cross section as a function of the jet multiplicity and the prediction from  [103]. For VV events with \(\le 3/4/\ge 5\) jets, an uncertainty of 20%/50%/60% is applied. The three components are considered as uncorrelated. An uncertainty of 50% is considered for \(t\bar{t}WW\) production based on the NLO prediction [45]. For all other minor background processes with negligible impact including the production of VH, VVV, \(t\bar{t}ZZ\), \(t\bar{t}WZ\), \(t\bar{t}H H\) and \(t\bar{t}W H\), a single uncertainty of 50% is considered. This value is based on previous analyses in a similar final state [19, 104], and is large enough to cover the different predictions listed in Ref. [45].


For all background contributions estimated based on simulations, additional uncertainties are considered for events produced with associated b-jets. This is motivated by the expected high b-jet multiplicities in signal-like events and the general difficulty in modelling additional heavy-flavour jets in simulations. For each background process, an uncorrelated uncertainty of 50% is assigned to events with exactly three ‘true’ b-jets (footnote 5) and to events with at least four true b-jets. Since \(t\bar{t}t\) events have three b-jets arising from top-quark decays, an uncertainty of 50% is only assigned to \(t\bar{t}t\) events if they have at least four true b-jets. These estimates are based on the measurement of \(t\bar{t}\)+jets production with additional heavy-flavour jets [105] and on comparisons between data and prediction in \(t \bar{t} \gamma \) events with three and four b-tagged jets.

7.4 Uncertainties in reducible backgrounds

Uncertainties in the estimate of the fake/non-prompt and QmisID backgrounds have a minor impact. These uncertainties are derived and treated in the same way as in Ref. [19]. The resulting uncertainties for the QmisID background range from 5% to 30% depending on the \(p_{\text {T}}\) and \(\eta \) of the lepton. For leptons from heavy-flavour hadron decay, the uncertainties range from 20% to 100%. For material conversion and virtual photon conversion, the uncertainties are 30% and 21%, respectively. Conservative uncertainties are applied to other smaller contributions, including an uncertainty of 100% for events with fake/non-prompt leptons from light jets, and a 30% uncertainty for all other sources of fake/non-prompt background. These values are taken from previous analyses in a similar final state [19, 104].

Given that \(t\bar{t}\)+jets events are the main source of fake/non-prompt and QmisID backgrounds, additional uncertainties are considered for \(t\bar{t}\)+jets events produced in association with additional b-jets. An uncorrelated uncertainty of 50% is assigned to events with three b-jets and to events with at least four b-jets.

8 Result for the \(t\bar{t}t\bar{t}\) cross section measurement

A maximum-likelihood fit is performed to the GNN score distribution in the SR and the different distributions used in the CRs (see Table 2). Figure 5 shows the distribution of the GNN score in the SR before and after performing the fit. Good agreement is observed between data and the prediction after the fit. The best-fit value of the signal strength \(\mu \), defined as the ratio of the measured \(t\bar{t}t\bar{t}\) cross section to the SM expectation of \(\sigma _{t\bar{t}t\bar{t}} = 12.0 \pm 2.4 ~\text {fb}\) from Ref. [16], is found to be \(\mu = 1.9\).

The corresponding measured \(t\bar{t}t\bar{t}\) production cross section is:

\[\sigma _{t\bar{t}t\bar{t}} = 22.5^{+6.6}_{-5.5}~\text {fb}.\]

The systematic uncertainty is determined by subtracting the statistical uncertainty in quadrature from the total uncertainty. The statistical uncertainty is obtained from a fit where all nuisance parameters are fixed to their post-fit values. The systematic uncertainty on the signal strength includes the theoretical uncertainty on the SM \(t\bar{t}t\bar{t}\) cross section. The measured \(t\bar{t}t\bar{t}\) production cross section is consistent within 1.8 standard deviations with the SM prediction of Ref. [16], and within 1.7 standard deviations with the resummed \(\sigma _{t\bar{t}t\bar{t}} \) calculation of Ref. [21].
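The quadrature subtraction used to obtain the systematic component can be written as a one-line helper. The sketch below uses toy inputs rather than the measured uncertainties, and the function name is hypothetical.

```python
import math

def systematic_from_total(total, stat):
    """Systematic component obtained by subtracting stat from total in quadrature."""
    if stat > total:
        raise ValueError("statistical uncertainty exceeds total uncertainty")
    return math.sqrt(total ** 2 - stat ** 2)

# Toy numbers, not the measured values: total = 5.0, stat = 3.0.
syst = systematic_from_total(5.0, 3.0)
print(syst)  # 4.0
```

For asymmetric uncertainties, the same subtraction would be applied separately to the upward and downward components.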

The probability for the background-only hypothesis to result in a signal-like excess at least as large as seen in data is derived using the profile-likelihood ratio following the procedure described in Ref. [106]. From this, the significance of the observed signal is found to be 6.1 standard deviations, while 4.3 standard deviations are expected using the SM cross section of \(\sigma _{t\bar{t}t\bar{t}} = 12.0 \pm 2.4 ~\text {fb}\) from Ref. [16]. Using the SM cross section of \(13.4^{+1.0}_{-1.8}\) fb from Ref. [21], the expected significance would be 4.7 standard deviations. The goodness-of-fit evaluated using a saturated model [107, 108] yields a probability of 76%. Compared to the previous result of Ref. [19], the gain in expected sensitivity comes from the updated lepton and jet selection and uncertainties, from the use of the GNN discriminant and from the improved treatment of the \(t\bar{t}t\) background. This leads to a better purity of the signal and a smaller uncertainty on the background in the signal-enriched region. The overall uncertainty on the cross section is slightly smaller than the result in Ref. [19], mainly because of an updated treatment of the systematic uncertainty for signal modelling.
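The relation between a significance expressed in standard deviations and the corresponding background-only probability can be sketched as follows. A one-sided Gaussian tail is assumed here, which is the usual convention for discovery significances; the function name is hypothetical.

```python
import math

def p_value_from_significance(z):
    """One-sided Gaussian tail probability for a significance of z standard deviations."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))

p_observed = p_value_from_significance(6.1)
p_expected = p_value_from_significance(4.3)
print(p_observed, p_expected)
```

Under this convention, the observed significance of 6.1 standard deviations corresponds to a far smaller background-only probability than the expected 4.3 standard deviations, since the tail probability falls steeply with the significance.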

The normalisation factors of the different fake/non-prompt lepton background sources and the parameters of the data-driven \(t\bar{t}W\) background model determined from the fit are shown in Tables 3 and 4. The background and signal yields before and after the fit, as well as those for events with a GNN score equal to or higher than 0.6, are shown in Table 5. The post-fit number of \(t\bar{t}W\) events with 6 and 7 jets is smaller than at the pre-fit level, while the number of \(t\bar{t}W\) events with \(\ge \)9 jets is increased compared to the pre-fit prediction. The overall number of fitted \(t\bar{t}W\) events is in agreement with the \(t\bar{t}W\) cross section measurement in Ref. [82]. The number of background events from material conversion is also increased. The post-fit normalisation factors from Tables 3 and 4 agree with their nominal value of 1, except for \(\text {NF}_{\text {Mat.\ Conv.}}\). They provide good agreement with data as shown in Table 5 and Fig. 5.

Figure 6 presents the distributions of the number of jets, the number of b-jets, the sum of the four highest PCBT scores of jets in the event and \(H_{T}\), in the signal-enriched region for events with a GNN score equal to or higher than 0.6. Good agreement between data and the post-fit predictions is observed.

Comparison between data and prediction after the fit to data in the signal-enriched region with GNN\(\ge 0.6\) for the distributions of a the number of jets, b the number of b-jets, c the sum of the four highest PCBT scores of jets in the event, and d the sum of transverse momenta over all jets and leptons in the event (\(H_{T}\)). The ratio of the data to the total post-fit computation is shown in the lower panel. The shaded band represents the total post-fit uncertainty in the prediction. The first and last bins contain underflow and overflow events, respectively

As a cross-check, a maximum-likelihood fit to the BDT score distribution in data is also performed. It yields the same signal strength, corresponding to an observed significance of 6.0 standard deviations, while 3.9 standard deviations are expected.

The largest systematic uncertainties in the measurement of \(\sigma _{t\bar{t}t\bar{t}}\) arise from the modelling of the \(t\bar{t}t\bar{t}\) signal, with the uncertainty from the choice of the MC generator being by far the largest followed by the uncertainty from the parton shower and hadronisation model. The uncertainty in the data-driven \(t\bar{t}W\) background estimate is also significant. Among the experimental uncertainties, the largest effects come from the b-jet tagging and the jet energy scale. The parameter associated with the \(t\bar{t}t\bar{t}\) MC generator choice is pulled by around −0.5 standard deviations after the fit. No other nuisance parameter is found to be significantly adjusted or constrained by the fit. The uncertainties impacting the \(t\bar{t}t\bar{t}\) cross section measurement are summarised in Table 6.

This analysis derives 95% confidence level (CL) intervals on the cross section of the \(t\bar{t}t\) process, \(\sigma _{t\bar{t}t}\). In the SM, triple top quarks are always produced in association with other particles, and can be split into the \(t\bar{t}tW\) and \(t\bar{t}tq\) processes [109,110,111]. These processes give rise to an experimental signature and kinematic properties of the event similar to that of the \(t\bar{t}t\bar{t}\) signal, resulting in a similar GNN shape for \(t\bar{t}t\bar{t}\) and \(t\bar{t}t\) production. Varying the normalisation of the \(t\bar{t}t\bar{t}\) and \(t\bar{t}t\) processes in the likelihood fit simultaneously results in an anti-correlation between the two processes of −93%. Figure 7 shows the two-dimensional negative log-likelihood contour for the likelihood fit where the \(t\bar{t}t\) and \(t\bar{t}t\bar{t}\) cross sections are treated as free parameters. Although the best-fit value in this case is consistent with a \(t\bar{t}t\bar{t}\) cross section of zero, it is also consistent with the SM prediction at the level of 2.1 standard deviations.

The two components of the \(t\bar{t}t\) process (\(t\bar{t}tW\) and \(t\bar{t}tq\)) have different sensitivity to possible BSM effects. In particular, a number of higher-dimensional operators generating flavour changing neutral currents involving the top quark [112, 113] lead to the production of \(t\bar{t}t\) events without an associated W boson in the final state. Thus it is interesting to derive 95% CL intervals on the cross sections of the \(t\bar{t}tW\) and \(t\bar{t}tq\) processes separately. Under the assumption of a \(t\bar{t}t\bar{t}\) signal strength of 1 and of 1.9, the 95% CL intervals on \(t\bar{t}t\), \(t\bar{t}tW\) and \(t\bar{t}tq\) are shown in Table 7. The 95% CL intervals for the \(t\bar{t}tq\) process are wider than those for the \(t\bar{t}tW\) process because the \(t\bar{t}tq\) process is characterised by lower lepton and jet multiplicities in the final state, which results in a lower selection efficiency.

Two-dimensional negative log-likelihood contour for the \(t\bar{t}t \) cross section (\(\sigma _{t\bar{t}t}\)) versus the \(t\bar{t}t\bar{t} \) cross section (\(\sigma _{t\bar{t}t\bar{t}}\)) when the normalisations of both processes are treated as free parameters in the fit. The blue cross shows the SM expectation of \(\sigma _{t\bar{t}t\bar{t}} =12\) fb from Ref. [16] and \(\sigma _{t\bar{t}t}=1.67\) fb (see Sect. 3), both computed at NLO, while the black cross shows the best-fit value. The observed (expected) exclusion contours at 68% (black) and 95% CL (red) are shown in solid (dashed) lines. The gradient-shaded area represents the observed likelihood value as a function of \(\sigma _{t\bar{t}t}\) and \(\sigma _{t\bar{t}t\bar{t}}\)

9 Interpretations

Limits and 95% CL intervals are also obtained on the top-quark Yukawa coupling, on EFT operators that parameterise BSM \(t\bar{t}t\bar{t}\) production, and on a Higgs oblique parameter.

9.1 Limits on the top-quark Yukawa coupling

The top-quark Yukawa coupling enters the electroweak \(t\bar{t}t\bar{t}\) Feynman diagram where a pair of top quarks is mediated by a Higgs boson (see Fig. 1). This makes \(t\bar{t}t\bar{t}\) production sensitive to the top-quark Yukawa coupling, including the coupling strength and its CP properties. An additional dependence on the Yukawa coupling comes through the \(t\bar{t}H\) background. The \(t\bar{t}t\bar{t}\) cross section can be parameterised as a function of two parameters: the top Yukawa coupling strength modifier \(\kappa _t\), and the CP-mixing angle \(\alpha \) [10, 114]. The SM corresponds to a pure CP-even coupling with \(\alpha \) = 0 and \(\kappa _t\) = 1, while a CP-odd coupling is realised when \(\alpha \) = 90\(^{\circ }\). In case of a pure CP-even coupling, \(\kappa _t\) can be expressed as a ratio of the top-quark Yukawa coupling \(y_t\) to the SM prediction \(y_t^\textrm{SM}\). The \(t\bar{t}H\) cross section also depends on these parameters, but unlike \(t\bar{t}t\bar{t}\) production, the \(t\bar{t}H\) kinematic distributions change only when the CP-odd term \(\kappa _t\sin (\alpha )\) is non-zero. The \(t\bar{t}t\bar{t}\) and \(t\bar{t}H\) yields in each bin of the GNN distribution are parameterised as a function of \(\kappa _t\) and \(\alpha \). The observed (expected) 95% CL limits are shown in Fig. 8 in the two-dimensional parameter space (\(|\kappa _t\cos (\alpha )|, |\kappa _t\sin (\alpha )|\)). Fixing the top-quark Yukawa coupling to be CP-even only (i.e. \(\alpha =0\)), the following observed (expected) limits are extracted: \(|\kappa _t| < 1.8\) (1.6). This limit is less stringent than that reported in Ref. [115] using a similar technique, due to a slightly higher measured SM \(t\bar{t}t\bar{t}\) cross section than the prediction. As a check to probe the effect from the \(t\bar{t}H\) process, an alternative fit is performed. 
As opposed to the fit used to obtain the nominal result, the \(t\bar{t}H\) background yields are not parameterised, whilst the normalisation of the \(t\bar{t}H\) background is treated as a free parameter of the fit. This leads to an observed (expected) limit of \(|\kappa _t| < 2.2\) (1.8).

Two-dimensional negative log-likelihood contours for |\(\kappa _t\cos (\alpha )\)| versus |\(\kappa _t\sin (\alpha )\)| at 68% and 95%, where \(\kappa _t\) is the top-Higgs Yukawa coupling strength parameter and \(\alpha \) is the mixing angle between the CP-even and CP-odd components. The gradient-shaded area represents the observed likelihood value as a function of \(\kappa _t\) and \(\alpha \). Both the \(t\bar{t}t\bar{t}\) signal and \(t\bar{t}H\) background yields in each fitted bin are parameterised as a function of \(\kappa _t\) and \(\alpha \). The blue cross shows the SM expectation, while the black cross shows the best fit value

9.2 Limits on EFT operators and the Higgs oblique parameter

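Within the EFT framework, the \(t\bar{t}t\bar{t}\) process is sensitive to four heavy-flavour fermion operators \(O_{tt}^1\), \(O_{QQ}^1\), \(O_{Qt}^1\) and \(O_{Qt}^8\), which can probe the BSM models that enhance interactions between the third-generation quarks [12]. The \(t\bar{t}t\bar{t}\) production cross section can be approximated by:

\[\sigma _{t\bar{t}t\bar{t}} = \sigma _{t\bar{t}t\bar{t}}^{\text {SM}} + \frac{1}{\Lambda ^2}\sum _{i} C_{i}\,{\sigma }_{i}^{(1)} + \frac{1}{\Lambda ^4}\sum _{i\le j} C_{i}C_{j}\,{\sigma }_{i,j}^{(2)} \qquad (2)\]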

where \(C_{i}\) denotes the coupling parameters of the four heavy-flavour fermion operators, \(C_{i}{\sigma }_{i}^{(1)} \) is the linear term that represents the interference of dimension-6 operators with SM operators, and \(C_{i}C_{j}{\sigma }_{i,j}^{(2)}\) is the quadratic term that also includes the interference between different EFT operators. The 95% CL intervals on the EFT parameters are extracted by parameterising the \(t\bar{t}t\bar{t}\) yield in each bin of the GNN score distribution as a quadratic function of the coefficient of the corresponding EFT operator (\(C_i/\Lambda ^2\)) and performing the fit to data. The fit is carried out assuming that only one operator contributes to the \(t\bar{t}t\bar{t}\) cross section, while the coefficients of the other three operators are fixed to the SM value of zero. The expected and observed 95% CL intervals on the coefficients are summarised in Table 8. To probe the importance of the different terms in Eq. 2, the limits are also extracted assuming only the linear terms as a test. The resulting upper limits on the absolute values of the coefficients (\(|C_i/\Lambda ^2|\)) of \(O_{QQ}^1\), \(O_{Qt}^1\), \(O_{tt}^1\) and \(O_{Qt}^8\) are 5.3, 3.3, 2.4 and 8.8 \(\hbox {TeV}^{-2}\), respectively, at 95% CL. Comparable limits on these EFT parameters can be found in Ref. [116].
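The single-operator case of this parameterisation can be illustrated with a toy calculation. The sketch below works on the inclusive cross section only, whereas the analysis parameterises the yield in each bin of the GNN score. The coefficients \(\sigma ^{(1)}\) and \(\sigma ^{(2)}\), the cross-section bound and the function names are all hypothetical.

```python
import math

def eft_cross_section(c, sigma_sm, sigma_lin, sigma_quad):
    """Cross section with one operator switched on; c stands for C_i / Lambda^2."""
    return sigma_sm + c * sigma_lin + c * c * sigma_quad

def allowed_interval(sigma_limit, sigma_sm, sigma_lin, sigma_quad):
    """Range of c for which the cross section stays below sigma_limit.

    Solves the quadratic equation sigma(c) = sigma_limit, assuming sigma_quad > 0.
    Returns None if no value of c satisfies the bound.
    """
    disc = sigma_lin ** 2 - 4.0 * sigma_quad * (sigma_sm - sigma_limit)
    if disc < 0.0:
        return None
    root = math.sqrt(disc)
    return ((-sigma_lin - root) / (2.0 * sigma_quad),
            (-sigma_lin + root) / (2.0 * sigma_quad))

# Hypothetical inputs: sigma_SM = 12, sigma^(1) = 1, sigma^(2) = 2, bound = 22.
low, high = allowed_interval(22.0, 12.0, 1.0, 2.0)
print(low, high)  # -2.5 2.0
```

The interval is asymmetric around zero because of the linear interference term; dropping the quadratic term would instead give a one-sided bound in this toy.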

An oblique parameter is a self-energy correction term applied to electroweak propagators in the SM. The BSM additions to such a correction can be expressed as an EFT expansion, with the self-energy correction to the Higgs boson parameterised by the parameter \(\hat{H}\), where \(\hat{H}=0\) corresponds to the SM prediction [15]. The \(\hat{H}\) parameter affects the off-shell Higgs interaction, and thus the \(t\bar{t}t\bar{t}\) cross section, as well as processes involving a Higgs boson, in particular \(t\bar{t}H\) production, which is a significant background to the \(t\bar{t}t\bar{t}\) measurement. To account for this effect, the \(t\bar{t}H\) contribution is parameterised as a function of \(\hat{H}\) [15]: \(\mu _{t\bar{t}H} = 1-\hat{H}\), where \(\mu _{t\bar{t}H}\) is the \(t\bar{t}H\) normalisation factor with respect to the SM cross section. A limit on \(\hat{H}\) is extracted from the likelihood scans shown in Fig. 9. The observed (expected) upper limit on the \(\hat{H}\) value is 0.20 (0.12) at 95% CL. The observed limit coincides with the largest value of this parameter that preserves unitarity in the perturbative theory. Previously, limits on the \(\hat{H}\) parameter were reported in Refs. [15, 115, 117].

10 Conclusion

This paper presents a measurement of \(t\bar{t}t\bar{t}\) production using \(140~\hbox {fb}^{-1}\) of data at \(\sqrt{s}=13~\hbox {TeV}\) with the ATLAS detector at the LHC. Events are selected with exactly two same-charge isolated leptons or at least three isolated leptons. The normalisation of the \(t\bar{t}W\) background in jet multiplicity bins is determined using a data-driven approach. A Graph Neural Network is used to separate the \(t\bar{t}t\bar{t}\) signal from the background. Four-top production is observed with a significance of 6.1 standard deviations with respect to the background-only hypothesis. The expected significance is 4.3 or 4.7 standard deviations depending on the assumed SM cross section. The measured \(t\bar{t}t\bar{t}\) production cross section is \(22.5^{+6.6}_{-5.5}\) fb. It is consistent with the SM predictions within 1.8 or 1.7 standard deviations and with the previous ATLAS measurement. In addition, 95% confidence level intervals on the cross section for inclusive \(t\bar{t}t\) production and its subprocesses \(t\bar{t}tq\) and \(t\bar{t}tW\) are provided, for the scenarios assuming the SM \(t\bar{t}t\bar{t}\) production cross section and the measured \(t\bar{t}t\bar{t}\) cross section.

The results are used to set limits on several new physics scenarios. Constraints on the CP properties of the top-quark Yukawa coupling are obtained in the form of limits in the two-dimensional parameter space \(\left( |\kappa _t\cos (\alpha )|, |\kappa _t\sin (\alpha )|\right) \). Assuming a pure CP-even coupling (\(\alpha =0\)), the observed upper limit on \(|\kappa _t|=|y_t/y_t^\textrm{SM}|\) at 95% CL is 1.8. Constraints at 95% CL are obtained on the four dimension-6 heavy-flavour fermion operators. Assuming that only one operator takes effect at a time, the observed constraints on the coefficients (\(C_i/\Lambda ^2\)) of \(O_{QQ}^1\), \(O_{Qt}^1\), \(O_{tt}^1\) and \(O_{Qt}^8\) are \([-3.5, 4.1]\), \([-3.5, 3.0]\), \([-1.7, 1.9]\) and \([-6.2, 6.9]\) \(\hbox {TeV}^{-2}\), respectively. An observed upper limit of 0.20 at 95% CL is obtained for the Higgs oblique parameter \(\hat{H}\); this limit coincides with the largest value that preserves unitarity in the perturbative theory.

Data availability statement

This manuscript has no associated data or the data will not be deposited. [Authors’ comment: All ATLAS scientific output is published in journals, and preliminary results are made available in Conference Notes. All are openly available, without restriction on use by external parties beyond copyright law and the standard conditions agreed by CERN. Data associated with journal publications are also made available: tables and data from plots (e.g. cross section values, likelihood profiles, selection efficiencies, cross section limits, ...) are stored in appropriate repositories such as HEPDATA (http://hepdata.cedar.ac.uk/). ATLAS also strives to make additional material related to the paper available that allows a reinterpretation of the data in the context of new theoretical models. For example, an extended encapsulation of the analysis is often provided for measurements in the framework of RIVET (http://rivet.hepforge.org/).]

Change history

15 February 2024

An Erratum to this paper has been published: https://doi.org/10.1140/epjc/s10052-024-12458-6

Notes

In the following, \(t\bar{t}t\) is used to denote both \(t\bar{t}t\) and its charge conjugate, \(\bar{t}t\bar{t}\). Similar notation is used for other processes, as appropriate.

ATLAS uses a right-handed coordinate system with its origin at the nominal interaction point (IP) in the centre of the detector and the \(z\)-axis along the beam pipe. The \(x\)-axis points from the IP to the centre of the LHC ring, and the \(y\)-axis points upwards. Cylindrical coordinates \((r,\phi )\) are used in the transverse plane, \(\phi \) being the azimuthal angle around the \(z\)-axis. The pseudorapidity is defined in terms of the polar angle \(\theta \) as \(\eta = -\ln \tan (\theta /2)\). Angular distance is measured in units of \(\Delta R \equiv \sqrt{(\Delta \eta )^{2} + (\Delta \phi )^{2}}\).
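The definitions of \(\eta \) and \(\Delta R\) in this note can be sketched in a few lines of Python. This is a minimal illustration, not ATLAS software: the function names are hypothetical, and wrapping \(\Delta \phi \) into \((-\pi , \pi ]\) is a common convention assumed here rather than something stated in the note.

```python
import math


def pseudorapidity(theta: float) -> float:
    """eta = -ln tan(theta/2), with theta the polar angle in radians (0 < theta < pi)."""
    return -math.log(math.tan(theta / 2.0))


def delta_phi(phi1: float, phi2: float) -> float:
    """Azimuthal difference wrapped into (-pi, pi] (assumed convention)."""
    dphi = (phi1 - phi2) % (2.0 * math.pi)  # now in [0, 2*pi)
    if dphi > math.pi:
        dphi -= 2.0 * math.pi
    return dphi


def delta_r(eta1: float, phi1: float, eta2: float, phi2: float) -> float:
    """Angular distance Delta R = sqrt((Delta eta)^2 + (Delta phi)^2)."""
    return math.hypot(eta1 - eta2, delta_phi(phi1, phi2))


# A direction perpendicular to the beam (theta = pi/2) has eta close to 0.
print(pseudorapidity(math.pi / 2.0))
# Two objects on either side of the phi = +-pi boundary are close in Delta R.
print(delta_r(0.0, 3.0, 0.0, -3.0))
```

Without the wrapping step, two objects at \(\phi = 3.0\) and \(\phi = -3.0\) would appear to be separated by 6.0 in azimuth rather than by \(2\pi - 6.0\).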

The \(h_{\textrm{damp}}\) parameter controls the transverse momentum \(p_{\text {T}}\) of the first additional emission beyond the leading-order Feynman diagram in the parton shower and therefore regulates the high-\(p_{\text {T}}\) emission against which the \(t\bar{t}\) system recoils.

The energy of each object is computed from the four momentum assuming that its mass is zero. For jets, this avoids any dependence on the jet mass calibration.
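One way to read this note is that the energy is set equal to the magnitude of the three-momentum, \(E = |\vec{p}| = p_{\text {T}}\cosh \eta \). The Python sketch below illustrates that reading; the function names are hypothetical, and the exact computation used in the analysis software is not given in the text.

```python
import math


def massless_energy(px: float, py: float, pz: float) -> float:
    """Energy of an object treated as massless: E = |p| (assumed interpretation)."""
    return math.sqrt(px * px + py * py + pz * pz)


def massless_energy_from_pt_eta(pt: float, eta: float) -> float:
    """Equivalent form in detector coordinates: E = pT * cosh(eta)."""
    return pt * math.cosh(eta)


# Both forms agree, since px = pT cos(phi), py = pT sin(phi), pz = pT sinh(eta).
pt, eta, phi = 50.0, 1.2, 0.7
e_cartesian = massless_energy(pt * math.cos(phi), pt * math.sin(phi), pt * math.sinh(eta))
e_detector = massless_energy_from_pt_eta(pt, eta)
print(e_cartesian, e_detector)
```

Because the mass term is dropped, the result depends only on the momentum components, which is why no jet mass calibration enters.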

‘True’ b-jets are identified in simulated events as jets that are ghost-associated with b-hadrons [75].

References

H.P. Nilles, Supersymmetry, supergravity and particle physics. Phys. Rep. 110, 1 (1984). https://doi.org/10.1016/0370-1573(84)90008-5

G.R. Farrar, P. Fayet, Phenomenology of the production, decay, and detection of new hadronic states associated with supersymmetry. Phys. Lett. B 76, 575 (1978). https://doi.org/10.1016/0370-2693(78)90858-4

T. Plehn, T.M.P. Tait, Seeking sgluons. J. Phys. G 36, 075001 (2009). https://doi.org/10.1088/0954-3899/36/7/075001. arXiv:0810.3919 [hep-ph]

S. Calvet, B. Fuks, P. Gris, L. Valéry, Searching for sgluons in multitop events at a center-of-mass energy of 8 TeV. JHEP 04, 043 (2013). https://doi.org/10.1007/JHEP04(2013)043. arXiv:1212.3360 [hep-ph]

D. Dicus, A. Stange, S. Willenbrock, Higgs decay to top quarks at hadron colliders. Phys. Lett. B 333, 126 (1994). https://doi.org/10.1016/0370-2693(94)91017-0. arXiv:hep-ph/9404359

N. Craig, F. D’Eramo, P. Draper, S. Thomas, H. Zhang, The hunt for the rest of the Higgs bosons. JHEP 06, 137 (2015). https://doi.org/10.1007/JHEP06(2015)137. arXiv:1504.04630 [hep-ph]

N. Craig, J. Hajer, Y.-Y. Li, T. Liu, H. Zhang, Heavy Higgs bosons at low \(\text{ tan }\,\beta \): from the LHC to 100 TeV. JHEP 01, 018 (2017). https://doi.org/10.1007/JHEP01(2017)018. arXiv:1605.08744 [hep-ph]

A. Pomarol, J. Serra, Top quark compositeness: feasibility and implications. Phys. Rev. D 78(7), 074026 (2008). https://doi.org/10.1103/PhysRevD.78.074026. arXiv:0806.3247 [hep-ph]

Q.-H. Cao, S.-L. Chen, Y. Liu, Probing Higgs width and top quark Yukawa coupling from \(t\bar{t}H\) and \(t\bar{t}t\bar{t}\) productions. Phys. Rev. D 95, 053004 (2017). https://doi.org/10.1103/PhysRevD.95.053004. arXiv:1602.01934 [hep-ph]

Q.-H. Cao, S.-L. Chen, Y. Liu, R. Zhang, Y. Zhang, Limiting top quark-Higgs boson interaction and Higgs-boson width from multitop productions. Phys. Rev. D 99, 113003 (2019). https://doi.org/10.1103/PhysRevD.99.113003. arXiv:1901.04567 [hep-ph]

C. Degrande, J.-M. Gerard, C. Grojean, F. Maltoni, G. Servant, Non-resonant new physics in top pair production at hadron colliders. JHEP 03, 125 (2011). https://doi.org/10.1007/JHEP03(2011)125. arXiv:1010.6304 [hep-ph]

C. Zhang, Constraining qqtt operators from four-top production: a case for enhanced EFT sensitivity. Chin. Phys. C 42, 023104 (2018). https://doi.org/10.1088/1674-1137/42/2/023104. arXiv:1708.05928 [hep-ph]

G. Banelli, E. Salvioni, J. Serra, T. Theil, A. Weiler, The present and future of four top operators. JHEP 02, 043 (2021). https://doi.org/10.1007/JHEP02(2021)043. arXiv:2010.05915 [hep-ph]

R. Aoude, H. El Faham, F. Maltoni, E. Vryonidou, Complete SMEFT predictions for four top quark production at hadron colliders. JHEP 10, 163 (2022). https://doi.org/10.1007/JHEP10(2022)163. arXiv:2208.04962 [hep-ph]

C. Englert, G.F. Giudice, A. Greljo, M. Mccullough, The \(\hat{H}\)-parameter: an oblique Higgs view. JHEP 09, 041 (2019). https://doi.org/10.1007/JHEP09(2019)041. arXiv:1903.07725 [hep-ph]

R. Frederix, D. Pagani, M. Zaro, Large NLO corrections in \(t\bar{t}W^{\pm }\) and \(t\bar{t}t\bar{t}\) hadroproduction from supposedly subleading EW contributions. JHEP 02, 031 (2018). https://doi.org/10.1007/JHEP02(2018)031. arXiv:1711.02116 [hep-ph]

G. Bevilacqua, M. Worek, Constraining BSM Physics at the LHC: four top final states with NLO accuracy in perturbative QCD. JHEP 07, 111 (2012). https://doi.org/10.1007/JHEP07(2012)111. arXiv:1206.3064 [hep-ph]

T. Ježo, M. Kraus, Hadroproduction of four top quarks in the powheg box. Phys. Rev. D 105, 114024 (2022). https://doi.org/10.1103/PhysRevD.105.114024. arXiv:2110.15159 [hep-ph]

ATLAS Collaboration, Evidence for \(t\bar{t}t\bar{t}\) production in the multilepton final state in proton-proton collisions at \(\sqrt{s}=13\,TeV\) with the ATLAS detector. Eur. Phys. J. C 80, 1085 (2020). https://doi.org/10.1140/epjc/s10052-020-08509-3. arXiv:2007.14858 [hep-ex]

ATLAS Collaboration, Measurement of the \(t \overline{t} t \overline{t} \) production cross section in \(pp\) collisions at \(\sqrt{s}= 13\,TeV\) with the ATLAS detector. JHEP 11, 118 (2021). https://doi.org/10.1007/JHEP11(2021)118. arXiv:2106.11683 [hep-ex]

M. van Beekveld, A. Kulesza, L.M. Valero, Threshold resummation for the production of four top quarks at the LHC. (2022). arXiv:2212.03259 [hep-ph]

CMS Collaboration, Evidence for four-top quark production in proton-proton collisions at \(\sqrt{s} = 13\,TeV\). (2023). arXiv:2303.03864 [hep-ex]

ATLAS Collaboration, The ATLAS Experiment at the CERN Large Hadron Collider, JINST 3, S08003 (2008). https://doi.org/10.1088/1748-0221/3/08/S08003. Accessed 27 Mar 2023

ATLAS Collaboration, ATLAS Insertable B-Layer: Technical Design Report, ATLAS-TDR-19; CERN-LHCC-2010-013, 2010, https://cds.cern.ch/record/1291633, Addendum: ATLAS-TDR-19-ADD-1; CERN-LHCC-2012-009, 2012. https://cds.cern.ch/record/1451888

B. Abbott et al., Production and integration of the ATLAS Insertable B-Layer. JINST 13, T05008 (2018). https://doi.org/10.1088/1748-0221/13/05/T05008. arXiv:1803.00844 [physics.ins-det]

ATLAS Collaboration, Performance of the ATLAS trigger system in 2015, Eur. Phys. J. C 77, 317 (2017). https://doi.org/10.1140/epjc/s10052-017-4852-3. arXiv:1611.09661 [hep-ex]

ATLAS Collaboration, The ATLAS Collaboration Software and Firmware, ATL-SOFT-PUB-2021-001. (2021). https://cds.cern.ch/record/2767187. Accessed 27 Mar 2023

ATLAS Collaboration, ATLAS data quality operations and performance for 2015-2018 data-taking, JINST 15, P04003 (2020). https://doi.org/10.1088/1748-0221/15/04/P04003. arXiv:1911.04632 [physics.ins-det]

T. Sjöstrand et al., An introduction to PYTHIA 8.2. Comput. Phys. Commun. 191, 159 (2015). https://doi.org/10.1016/j.cpc.2015.01.024. arXiv:1410.3012 [hep-ph]

ATLAS Collaboration, The Pythia 8 A3 tune description of ATLAS minimum bias and inelastic measurements incorporating the Donnachie-Landshoff diffractive model, ATL-PHYS-PUB-2016-017. (2016). https://cds.cern.ch/record/2206965. Accessed 27 Mar 2023

P. Nason, A new method for combining NLO QCD with shower Monte Carlo algorithms. JHEP 11, 040 (2004). https://doi.org/10.1088/1126-6708/2004/11/040. arXiv:hep-ph/0409146

S. Frixione, G. Ridolfi, P. Nason, A positive-weight next-to-leading-order Monte Carlo for heavy flavour hadroproduction. JHEP 09, 126 (2007). https://doi.org/10.1088/1126-6708/2007/09/126. arXiv:0707.3088 [hep-ph]

S. Frixione, P. Nason, C. Oleari, Matching NLO QCD computations with parton shower simulations: the POWHEG method. JHEP 11, 070 (2007). https://doi.org/10.1088/1126-6708/2007/11/070. arXiv:0709.2092 [hep-ph]

S. Alioli, P. Nason, C. Oleari, E. Re, A general framework for implementing NLO calculations in shower Monte Carlo programs: the POWHEG BOX. JHEP 06, 043 (2010). https://doi.org/10.1007/JHEP06(2010)043. arXiv:1002.2581 [hep-ph]

R. Frederix, S. Frixione, Merging meets matching in MC@NLO. JHEP 12, 061 (2012). https://doi.org/10.1007/JHEP12(2012)061. arXiv:1209.6215 [hep-ph]

ATLAS Collaboration, ATLAS Pythia 8 tunes to 7 TeV data, ATL-PHYS-PUB-2014-021. (2014). https://cds.cern.ch/record/1966419. Accessed 27 Mar 2023

D.J. Lange, The EvtGen particle decay simulation package. Nucl. Instrum. Methods A 462, 152 (2001). https://doi.org/10.1016/S0168-9002(01)00089-4

P. Golonka, Z. Was, PHOTOS Monte Carlo: a precision tool for QED corrections in \(Z\) and \(W\) decays. Eur. Phys. J. C 45, 97 (2006). https://doi.org/10.1140/epjc/s2005-02396-4. arXiv:hep-ph/0506026

GEANT4 Collaboration, S. Agostinelli et al., Geant4—a simulation toolkit. Nucl. Instrum. Methods A 506, 250 (2003). https://doi.org/10.1016/S0168-9002(03)01368-8

ATLAS Collaboration, The simulation principle and performance of the ATLAS fast calorimeter simulation FastCaloSim, ATL-PHYS-PUB-2010-013. (2010). https://cds.cern.ch/record/1300517. Accessed 27 Mar 2023

F. Cascioli, P. Maierhöfer, S. Pozzorini, Scattering amplitudes with open loops. Phys. Rev. Lett. 108, 111601 (2012). https://doi.org/10.1103/PhysRevLett.108.111601. arXiv:1111.5206 [hep-ph]

T. Gleisberg, S. Höche, Comix, a new matrix element generator. JHEP 12, 039 (2008). https://doi.org/10.1088/1126-6708/2008/12/039. arXiv:0808.3674 [hep-ph]

S. Schumann, F. Krauss, A parton shower algorithm based on Catani–Seymour dipole factorisation. JHEP 03, 038 (2008). https://doi.org/10.1088/1126-6708/2008/03/038. arXiv:0709.1027 [hep-ph]

S. Höche, F. Krauss, M. Schönherr, F. Siegert, QCD matrix elements + parton showers. The NLO case. JHEP 04, 027 (2013). https://doi.org/10.1007/JHEP04(2013)027. arXiv:1207.5030 [hep-ph]

J. Alwall et al., The automated computation of tree-level and next-to-leading order differential cross sections, and their matching to parton shower simulations. JHEP 07, 079 (2014). https://doi.org/10.1007/JHEP07(2014)079. arXiv:1405.0301 [hep-ph]

M. Bahr et al., Herwig++ physics and manual. Eur. Phys. J. C 58, 639 (2008). https://doi.org/10.1140/epjc/s10052-008-0798-9. arXiv:0803.0883 [hep-ph]

J. Bellm et al., Herwig 7.0/Herwig++ 3.0 release note, Eur. Phys. J. C 76, 196 (2016). https://doi.org/10.1140/epjc/s10052-016-4018-8. arXiv:1512.01178 [hep-ph]

E. Bothmann et al., Event generation with Sherpa 2.2. SciPost Phys. 7, 034 (2019). https://doi.org/10.21468/SciPostPhys.7.3.034. arXiv:1905.09127 [hep-ph]

A. Alloul, N.D. Christensen, C. Degrande, C. Duhr, B. Fuks, FeynRules 2.0—a complete toolbox for tree-level phenomenology. Comput. Phys. Commun. 185, 2250 (2014). https://doi.org/10.1016/j.cpc.2014.04.012. arXiv:1310.1921 [hep-ph]

C. Degrande et al., UFO—The Universal FeynRules Output. Comput. Phys. Commun. 183, 1201 (2012). https://doi.org/10.1016/j.cpc.2012.01.022. arXiv:1108.2040 [hep-ph]

P. Artoisenet et al., A framework for Higgs characterisation. JHEP 11, 043 (2013). https://doi.org/10.1007/JHEP11(2013)043. arXiv:1306.6464 [hep-ph]

C. Degrande et al., Automated one-loop computations in the SMEFT. Phys. Rev. D 103, 096024 (2021). https://doi.org/10.1103/PhysRevD.103.096024. arXiv:2008.11743 [hep-ph]

T. Gleisberg et al., Event generation with SHERPA 1.1. JHEP 02, 007 (2009). https://doi.org/10.1088/1126-6708/2009/02/007. arXiv:0811.4622 [hep-ph]

S. Kallweit, J.M. Lindert, P. Maierhöfer, S. Pozzorini, M. Schönherr, NLO QCD+ EW predictions for V+jets including off-shell vector-boson decays and multijet merging. JHEP 04, 021 (2016). https://doi.org/10.1007/JHEP04(2016)021. arXiv:1511.08692 [hep-ph]

C. Gütschow, J.M. Lindert, M. Schönherr, Multi-jet merged top-pair production including electroweak corrections. Eur. Phys. J. C 78, 317 (2018). https://doi.org/10.1140/epjc/s10052-018-5804-2. arXiv:1803.00950 [hep-ph]