Abstract

In a special run of the LHC with \(\beta ^{\star } = 2.5\) km, proton–proton elastic-scattering events were recorded at \(\sqrt{s} = 13\) TeV with an integrated luminosity of \(340~\upmu {\text {b}}^{-1}\) using the ALFA subdetector of ATLAS in 2016. The elastic cross section was measured differentially in the Mandelstam t variable in the range from \(-t = 2.5 \cdot 10^{-4}\) GeV\(^{2}\) to \(-t = 0.46\) GeV\(^{2}\) using 6.9 million elastic-scattering candidates. This paper presents measurements of the total cross section \(\sigma _{\text {tot}}\), parameters of the nuclear slope, and the \(\rho \)-parameter defined as the ratio of the real part to the imaginary part of the elastic-scattering amplitude in the limit \(t \rightarrow 0\). These parameters are determined from a fit to the differential elastic cross section using the optical theorem and different parameterizations of the t-dependence. The results for \(\sigma _{\text {tot}}\) and \(\rho \) are

The uncertainty in \(\sigma _{\text {tot}}\) is dominated by the luminosity measurement, and in \(\rho \) by imperfect knowledge of the detector alignment and by modelling of the nuclear amplitude.

1 Introduction

Measurements of elastic scattering at hadron colliders give unique experimental access to non-perturbative dynamics, which cannot be calculated from first principles. Of particular importance are the total hadronic cross section, \(\sigma _{\text {tot}} \), and the ratio of the real part to the imaginary part of the elastic-scattering amplitude (\(\rho \)-parameter), which probes Coulomb–nuclear interference (CNI). These observables are related by dispersion relations derived from foundational unitarity and analyticity arguments for scattering amplitudes. Dispersion relations connect the \(\rho \)-parameter at a certain energy to the energy evolution of \(\sigma _{\text {tot}} \) both below and above this energy. The \(\rho \)-parameter at the LHC energy has recently received significant interest because of a measurement at \(\sqrt{s} =13~\text {TeV} \) by the TOTEM experiment [1] which measured a lower value of the \(\rho \)-parameter than would be expected assuming a \(\ln ^{2}s\) rise of the total cross section, s being the centre-of-mass energy squared.

The \(\rho \)-parameter is sensitive not only to the high-energy evolution of the total hadronic cross section but also to the fundamental structure of the elastic-scattering amplitude. Traditionally, the elastic-scattering amplitude at energies well above \(100~\text {GeV} \) has been thought to be dominated by an exchange of Pomerons in the t-channel (see e.g. Ref. [2]). In QCD the Pomeron is represented by a two-gluon colourless state with spin–parity–charge quantum numbers J\(^{\text {PC}} = 0^{++}\). The additional possible presence of a three-gluon colourless state with J\(^{\text {PC}} = 1^{--}\), the so-called Odderon, can also influence the value of the \(\rho \)-parameter. Thus, measurements of the \(\rho \)-parameter at the highest energy of the LHC are essential.

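The \(\rho \)-parameter is defined as the ratio of the real part to the imaginary part of the elastic-scattering amplitude in the limit \(t\rightarrow 0\), i.e.
\[ \rho = \left. \frac{\text {Re}\left[ f_{\text {el}}(t)\right] }{\text {Im}\left[ f_{\text {el}}(t)\right] }\right| _{t\rightarrow 0}, \]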

where \(f_{\text {el}}(t)\) is the elastic-scattering amplitude and where t stands for the four-momentum transfer in the reaction.

Normally, the \(\rho \)-parameter is determined by measuring the differential elastic cross section at such small values of |t| that the amplitude is sensitive both to the Coulomb amplitude and the strong amplitude, and thus also to the interference between the two. The phase of the Coulomb amplitude is known, and therefore the value of \(\rho \) can be extracted from the measured size of the interference term.

The ATLAS experiment has previously measured elastic scattering at 7 and \(8~\text {TeV} \) [3, 4] using the ATLAS Roman Pot system ALFA [5]. However, those measurements did not extend to the region of very small |t|-values where the differential cross section is sensitive to the \(\rho \)-parameter. Such small |t|-values require measurements of angles in the microradian range, which in turn need even smaller divergence of the beam at the interaction point (IP). Moreover, they require that the vertically movable Roman Pot detectors approach to within millimetres of the beam.

This paper presents a new measurement using pp collision data at \(\sqrt{s}= 13\) \(\text {TeV}\), corresponding to an integrated luminosity of 340 \(\upmu \)b\(^{-1}\). For the first time, the ATLAS measurement is extended by an order of magnitude towards lower \(-t\), to such small scattering angles that the Coulomb interaction starts to play a role. Here, the differential elastic cross section is measured down to \(-t=2.5 \cdot 10^{-4}~\text {GeV} ^2\). The acceptance for elastic events is very small at such low values of |t|; if an acceptance greater than 10% is required, the lower limit is \(-t=4.5 \cdot 10^{-4}~\text {GeV} ^2\). The required small divergence of the beam at the IP is achieved by using very high-\(\beta ^{\star } \) optics (\(\beta ^{\star } =2.5\) km), producing a large beam spot size but very small beam divergence.

The measurements rely upon the very accurate luminosity determination that ATLAS provides for all cross-section measurements [6]. The TOTEM experiment has a different approach, using the so-called luminosity-independent method for the normalization of the differential cross section. The main uncertainty in using this method comes from the Monte Carlo estimate of the non-measurable forward diffractive cross section. In addition, ATLAS has introduced data-driven methods to constrain the parameters of the detector alignment. The alignment uncertainty and the luminosity uncertainty together constitute the dominant uncertainty in the measurement of the differential elastic cross section.

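The optical theorem connects the hadronic component of the total cross section \(\sigma _{\text {tot}} \) to the imaginary part of the scattering amplitude in the forward direction,
\[ \sigma _{\text {tot}} \propto \text {Im}\left[ f_{\text {el}}\left( t\rightarrow 0\right) \right] . \tag{1} \]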

In the same manner as in the ATLAS measurements at \(7~\text {TeV} \) and \(8~\text {TeV} \), the optical theorem is also used in the present analysis to determine \(\sigma _{\text {tot}} \) by extrapolating the differential cross section to \(t\rightarrow 0\). The total hadronic elastic cross section, in turn, is obtained from an integration of the measured differential elastic cross section. Using the trivial relation, \(\sigma _{\text {inel}} = \sigma _{\text {tot}}-\sigma _{\text {el}} \), the total inelastic cross section can also be calculated.

Another aspect of interest is whether the differential elastic cross section can be described by a simple exponential in the region where the strong interaction dominates or if higher-order corrections are needed (for an overview of the experimental situation, see the introduction of Ref. [7]). Such tendencies were already observed at the Intersecting Storage Rings (ISR) but the sample size was insufficient to draw a definite conclusion. With the large sample available here at 13 \(\text {TeV}\), ATLAS has an opportunity to establish such a non-exponential behaviour. TOTEM has reported observations of non-exponential behaviour at both \(8~\text {TeV} \) [7] and \(13~\text {TeV} \) [8].

The paper is organized in the following way. Section 2 presents the experimental set-up and includes a brief description of the ALFA subdetector. The data sets and the data-taking conditions are discussed in Sect. 3, where the measurement method and detector alignment are also explained. The theoretical framework and simulation tools for the description of elastic scattering are presented in Sect. 4. The main points of the data analysis are discussed in Sect. 5. Section 6 is devoted to the determination of the differential elastic cross section and the physics parameters. The interpretation of the results is discussed in Sect. 7. The conclusions are given in Sect. 8.

2 Experimental set-up

The ALFA detector is a specific part of the ATLAS experiment [9] designed to measure the elastic scattering of protons. Because the protons are elastically scattered at very small angles the tracking detectors need to be placed close to the beam and far from the IP. For this purpose two stations with tracking detectors are located on both sides of the central ATLAS detector.

The conceptual layout of the detectors in the two stations on one side of the ATLAS detector is shown in Fig. 1. In each station, two main tracking detectors (MDs) measure the trajectory of scattered protons in the upper or lower half of the station. Overlap detectors (ODs) are placed on the right and left sides of each MD. They are used to measure the vertical distance between the upper and lower MDs by recording halo protons passing through the left-side or right-side ODs on the two MDs. The MDs and ODs are supplemented by trigger counters made of 3 mm scintillator tiles. The detectors are housed in so-called Roman Pots (RPs), an upper one and a lower one, which are movable and can approach the circulating beam in the vertical direction to within 1 mm. Due to the layout of the LHC accelerator, with two parallel beam pipes close to each other in the horizontal plane, a choice of vertically moving RPs was natural.

Each MD consists of 10 modules with 64 scintillating fibres of 0.5 mm size glued on both the front and back sides of a titanium support plate. The fibres on both sides are arranged orthogonally in a uv-geometry at \(\pm 45^{\circ }\) to the y-axis. The module layers are staggered in steps of a tenth of the fibre size. The spatial resolution of the MDs is measured to be about \(32~\upmu \)m. The ODs consist of only three layers of 0.5 mm fibres. A detailed description of the ALFA detector can be found in Ref. [5].

The schematic layout of the ALFA stations in the LHC is shown in Fig. 2. Two stations at distances of 237 m and 245 m on each side of the interaction point ensure the reconstruction of the scattered proton’s trajectory. The stations on the right side of ATLAS measure the scattered protons along the outgoing LHC beam 1 line (C-side), while stations on the left side cover the outgoing LHC beam 2 line (A-side). The eight MDs form two independent spectrometer arms. Arm 1 consists of the upper detectors in the two stations on the left side combined with the lower detectors in the two stations on the right side of the IP. Arm 2 consists of the two lower detectors on the left side combined with the two upper detectors on the right side. The preselection of elastic-scattering events requires triggers to have fired in at least one MD on either side of a spectrometer arm.

Fig. 2 A sketch of the experimental set-up, not to scale, showing the positions of the ALFA Roman Pot stations in the outgoing LHC beams, and the quadrupole (Q1–Q6) and dipole (D1–D2) magnets situated between the IP and ALFA. The ALFA detectors are numbered A1–A8, and are combined into inner stations A7R1 and A7L1, which are closer to the IP, and outer stations B7R1 and B7L1. The arrows in the top panel indicate the beam directions and in the bottom panel the scattered proton directions. The stations A7R1 and B7R1 measure the scattered protons along the outgoing LHC beam 1 line (C-side) while stations A7L1 and B7L1 cover the outgoing LHC beam 2 line (A-side).

In the LHC Long Shutdown period from 2013 to 2014 (known as LS1) some major technical investments were made to ensure data taking with ALFA in Run 2. In Run 1, heating of the fibre detectors close to the damage level was observed at higher beam intensities. To protect the detectors, all stations were equipped with an active air cooling system and additional passive cooling components. Another modification was necessary to reach the high-\(\beta ^{\star }\) value of 2.5 km: two additional water-cooled power cables were installed to allow more flexible quadrupole operation.

For physics analysis the most relevant modification was the relocation of the outer stations B7L1 and B7R1. The new positions increased the distance between inner and outer stations from 4 m to about 8 m. As a consequence the track angular resolution improved significantly.

3 Experimental method

3.1 Measurement principle

The data were recorded with special beam optics characterized by a \(\beta ^{\star } \) of 2.5 km [10] at the IP resulting in small beam divergence and providing parallel-to-point focusing in the vertical plane. In parallel-to-point beam optics the betatron oscillation has a phase advance \(\Psi \) of \(90^{\circ }\) between the IP and the RPs, such that all particles scattered at the same angle are focused to the same position in the detector, independent of their production vertex position. This focusing is only achieved in the vertical plane.

The beam optics parameters are needed for the reconstruction of the scattering angle \(\theta ^{\star }\) at the IP. In elastic scattering at high energies the four-momentum transfer t is calculated from \(\theta ^{\star }\) by:
\[ t = -\left( \theta ^{\star }\, p\right) ^{2}, \]

where p is the nominal LHC beam momentum of \(6.5~\text {TeV} \) [11] and \(\theta ^{\star }\) is reconstructed from the proton trajectories in ALFA. The trajectories are measured in the beam coordinate system, where the transverse positions x and y are determined relative to the nominal orbit. A formalism based on transport matrices relates the positions and angles of particles at two different points of the magnetic lattice.

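The trajectory \((w(\xi ), \theta _w(\xi )),\) where \(w\in \{x,y\}\) is the transverse position at a distance \(\xi \) from the IP and \(\theta _w\) is the angle in the w direction between the particle trajectory and the nominal orbit, is given by the transport matrix \({\textbf{M}}\) and the coordinates at the IP (\(w^{\star }\), \(\theta _w^{\star }\)):
\[ \begin{pmatrix} w(\xi ) \\ \theta _w(\xi ) \end{pmatrix} = {\textbf{M}} \begin{pmatrix} w^{\star } \\ \theta _w^{\star } \end{pmatrix} = \begin{pmatrix} M_{11} & M_{12} \\ M_{21} & M_{22} \end{pmatrix} \begin{pmatrix} w^{\star } \\ \theta _w^{\star } \end{pmatrix} . \]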

Here the elements of the transport matrix can be calculated from the optical function \(\beta \) and its derivative with respect to \(\xi \) and \(\Psi \). The transport matrix \({\textbf{M}}\) must be calculated separately in x and y and depends on the longitudinal position \(\xi \); the corresponding indices have been dropped for clarity. The focusing properties of the beam optics in the vertical plane enable a reconstruction of the scattering angle with good precision using only \(M_{12}\), but in the horizontal plane the phase advance is close to \(180^{\circ }\) and different reconstruction methods are investigated.
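To make the parallel-to-point property concrete, the following minimal sketch evaluates the two first-row matrix elements from the textbook Twiss-parameter expressions \(M_{11} = \sqrt{\beta /\beta ^{\star }}\,(\cos \Psi + \alpha ^{\star }\sin \Psi )\) and \(M_{12} = \sqrt{\beta \beta ^{\star }}\,\sin \Psi \). These expressions, the function names, the choice \(\alpha ^{\star } = 0\) and the value \(\beta = 100\) m at the detector are assumptions made for illustration; only \(\beta ^{\star } = 2.5\) km and \(\Psi = 90^{\circ }\) are taken from the text.

```python
import math


def m11(beta_m, beta_star_m, psi_rad, alpha_star=0.0):
    """Textbook expression for M11 (dimensionless); alpha_star = 0 is assumed."""
    return math.sqrt(beta_m / beta_star_m) * (
        math.cos(psi_rad) + alpha_star * math.sin(psi_rad)
    )


def m12(beta_m, beta_star_m, psi_rad):
    """Textbook expression for the lever arm M12 in metres."""
    return math.sqrt(beta_m * beta_star_m) * math.sin(psi_rad)


def position_at_detector(w_star_m, theta_star_rad, beta_m, beta_star_m, psi_rad):
    """First row of the transport equation: w = M11 * w_star + M12 * theta_star."""
    return (
        m11(beta_m, beta_star_m, psi_rad) * w_star_m
        + m12(beta_m, beta_star_m, psi_rad) * theta_star_rad
    )


BETA_STAR_M = 2500.0    # beta* = 2.5 km, from the text
BETA_DET_M = 100.0      # hypothetical beta-function value at the detector
PSI_RAD = math.pi / 2   # 90 degree phase advance (vertical plane)

# Two protons with the same scattering angle but vertices 1 mm apart
y_vertex_0 = position_at_detector(0.0, 10e-6, BETA_DET_M, BETA_STAR_M, PSI_RAD)
y_vertex_1 = position_at_detector(1e-3, 10e-6, BETA_DET_M, BETA_STAR_M, PSI_RAD)
```

With a phase advance of \(90^{\circ }\) the term proportional to the vertex position vanishes up to floating-point rounding, so both protons arrive at the same detector position, which depends only on \(M_{12}\,\theta ^{\star }\).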

The ALFA detector was designed to use the ‘subtraction’ method, exploiting the fact that for elastic scattering the particles are back-to-back, so that the scattering angles on the A-side and C-side are the same in magnitude and opposite in sign, and that the protons originate from the same vertex. The beam optics was optimized to maximize the lever arm \(M_{12}\) in the vertical plane to access the smallest possible scattering angle. The positions measured with ALFA on the A-side and C-side of ATLAS have the same magnitude to within 50–100 \(\upmu \)m but opposite signs, and in the subtraction method the scattering angle is calculated according to:
\[ \theta ^{\star }_{w} = \frac{w_{\text {A}} - w_{\text {C}}}{M_{12,\text {A}} + M_{12,\text {C}}} . \]

The measurements from inner and outer stations are averaged in y to obtain the final value of \(\theta ^{\star }\). The subtraction method is the nominal method in both planes and yields the best t-resolution. In contrast to previous analyses using 90 m optics [3, 4], the lever arm \(M_{12}\) in the horizontal plane at the inner stations is so small that its contribution to the scattering angle determination introduces an unacceptable degradation of the resolution. Therefore, a method named ‘subtraction light’ is used instead, which uses y everywhere in the \(\theta ^{\star }\)-reconstruction but ignores the x-measurement at the inner stations and only uses x at the outer stations. However, the x measurement is used for event selection purposes and for the local track angle used in the method described below.

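An alternative method for the reconstruction of the horizontal scattering angle uses the ‘local angle’ \(\theta _w\) of the tracks measured between the inner and outer stations on the same side:
\[ \theta ^{\star }_{w} = \frac{\theta _{w,\text {A}} - \theta _{w,\text {C}}}{M_{22,\text {A}} + M_{22,\text {C}}} . \]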

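Another method performs a ‘local subtraction’ of measurements at the inner station at 237 m and the outer station at 245 m, separately on the A-side and C-side, before combining the two sides:
\[ \theta ^{\star }_{w,S} = \frac{M_{11,S}^{245}\, w_{237,S} - M_{11,S}^{237}\, w_{245,S}}{M_{11,S}^{245}\, M_{12,S}^{237} - M_{11,S}^{237}\, M_{12,S}^{245}}, \qquad S = \text {A}, \text {C}. \]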

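Finally, the ‘lattice’ method uses both the measured positions and the local angle to reconstruct the scattering angle by the inversion of the transport matrix
\[ \begin{pmatrix} w^{\star } \\ \theta _w^{\star } \end{pmatrix} = {\textbf{M}}^{-1} \begin{pmatrix} w \\ \theta _w \end{pmatrix}, \]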

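and from the second row of the inverted matrix the scattering angle is determined to be
\[ \theta ^{\star }_{w} = \left( {\textbf{M}}^{-1}\right) _{21}\, w + \left( {\textbf{M}}^{-1}\right) _{22}\, \theta _w . \]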

The lattice method yields a scattering angle measurement at each station, and the average value is taken for the t-reconstruction. All methods using the local angle suffer from limited resolution due to a moderate angular resolution of about 5 \(\upmu \)rad. These alternative methods are nevertheless used to cross-check the subtraction method and determine beam optics parameters.
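The four reconstruction methods can be compared on a toy event. The sketch below is written from the description in this section together with the generic two-by-two transport relation; the class and function names, the matrix element values and the toy vertex and angle are hypothetical and do not correspond to the actual \(\beta ^{\star } = 2.5\) km optics. The outer-station matrix is obtained from the inner one by an 8 m drift, so both have unit determinant.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Transport:
    """Transport matrix elements for one station and one plane (toy values)."""
    m11: float
    m12: float  # metres
    m21: float  # 1/metres
    m22: float

    def apply(self, w_star, theta_star):
        """Propagate IP coordinates to (position, local angle) at the station."""
        return (self.m11 * w_star + self.m12 * theta_star,
                self.m21 * w_star + self.m22 * theta_star)


def subtraction(w_a, w_c, m_a, m_c):
    """Positions on the A- and C-side combined with the lever arms M12."""
    return (w_a - w_c) / (m_a.m12 + m_c.m12)


def local_angle(theta_a, theta_c, m_a, m_c):
    """Local track angles on the A- and C-side combined with M22."""
    return (theta_a - theta_c) / (m_a.m22 + m_c.m22)


def local_subtraction(w_inner, w_outer, m_inner, m_outer):
    """Eliminate the vertex position using two stations on the same side."""
    num = m_outer.m11 * w_inner - m_inner.m11 * w_outer
    den = m_outer.m11 * m_inner.m12 - m_inner.m11 * m_outer.m12
    return num / den


def lattice(w, theta_w, m):
    """Second row of the inverted transport matrix applied to (w, theta_w)."""
    det = m.m11 * m.m22 - m.m12 * m.m21
    return (-m.m21 * w + m.m11 * theta_w) / det


# Hypothetical optics, identical on both sides of the IP
INNER = Transport(0.1, 200.0, -0.004, 2.0)
OUTER = Transport(0.068, 216.0, -0.004, 2.0)  # INNER followed by an 8 m drift

W_STAR, THETA_STAR = 1e-4, 20e-6  # toy vertex offset (m) and angle (rad)
wa_in, ta_in = INNER.apply(W_STAR, THETA_STAR)    # A-side proton: +theta*
wa_out, _ = OUTER.apply(W_STAR, THETA_STAR)
wc_in, tc_in = INNER.apply(W_STAR, -THETA_STAR)   # C-side proton: -theta*
wc_out, _ = OUTER.apply(W_STAR, -THETA_STAR)

theta_sub = subtraction(wa_in, wc_in, INNER, INNER)
theta_loc = local_angle(ta_in, tc_in, INNER, INNER)
theta_locsub = 0.5 * (local_subtraction(wa_in, wa_out, INNER, OUTER)
                      - local_subtraction(wc_in, wc_out, INNER, OUTER))
theta_lat = lattice(wa_in, ta_in, INNER)
```

In this noise-free toy all four estimates return the generated angle. With finite position and angular resolution they would differ, which is the reason for preferring the subtraction method where the lever arm is large.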

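For all methods, t is calculated from the scattering angles as follows:
\[ -t = \left( \left( \theta ^{\star }_{x}\right) ^{2} + \left( \theta ^{\star }_{y}\right) ^{2}\right) p^{2}, \]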

where \(\theta ^{\star }_{y}\) is always reconstructed with the subtraction method, because of the parallel-to-point focusing in the vertical plane, while the four methods are used for \(\theta ^{\star }_{x}\).
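As a quick numerical orientation, the small-angle relation \(-t = (\theta _x^{\star 2} + \theta _y^{\star 2})\,p^{2}\) can be evaluated directly. The sketch below assumes the nominal beam momentum of 6.5 TeV quoted above; the function names are illustrative only.

```python
import math

P_BEAM_GEV = 6500.0  # nominal beam momentum quoted in the text (6.5 TeV)


def minus_t(theta_x_rad, theta_y_rad, p_gev=P_BEAM_GEV):
    """Return -t in GeV^2 from the two scattering-angle components in radians."""
    return (theta_x_rad ** 2 + theta_y_rad ** 2) * p_gev ** 2


def theta_for_minus_t(minus_t_gev2, p_gev=P_BEAM_GEV):
    """Return the total scattering angle in radians for a given -t in GeV^2."""
    return math.sqrt(minus_t_gev2) / p_gev


theta_low_edge = theta_for_minus_t(2.5e-4)  # lower edge of the measured range
```

The lower edge of the measured range, \(-t = 2.5 \cdot 10^{-4}~\text {GeV} ^2\), corresponds to a scattering angle of roughly 2.4 \(\upmu \)rad, which illustrates why a very small beam divergence is needed.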

3.2 Data taking

Since the high-\(\beta ^{\star } \) runs are very different from the standard LHC runs, a few test fills were performed to find acceptable beam parameter settings. The minimum accessible value \(t_{\text {min}}\) is influenced by three parameters: the \(\beta \)-function, the distance of the detectors from the beam trajectory, and the beam emittance. It is expressed in the following formula:
\[ |t_{\text {min}}| = p^{2}\, n^{2}\, \frac{\epsilon }{\beta ^{\star }}, \]

with the beam momentum p, the detector transverse position as a multiple n of the beam width \(\sigma = \sqrt{\epsilon \cdot \beta }\), the (normalized) emittance \(\epsilon \) (\(\epsilon _N\)), and the value of the \(\beta \)-function at the IP, \(\beta ^{\star } \).

To achieve a \(|t_{\text {min}}|\) value of a few times 10\(^{-4}~\text {GeV} ^2\) for the given beam momentum of \(6.5~\text {TeV} \) and \(\beta ^{\star } = 2.5\) km, the detectors need to be placed at a distance of \(3\sigma \) from the beam trajectory. The target value for the emittance was about 1 \(\upmu \)m.

To achieve acceptable conditions for recording physics data, several collimator settings were tested. The tight collimator positions induced shower particles by interactions with halo particles. The main challenge in the data taking was the handling of the rapid increase of background for elastic triggers.

The final procedure was to scrape the beam with the primary vertical collimators to \(2\sigma \) of the beam width and position the RPs at \(3\sigma \). The collimators were then retracted to \(2.5\sigma \) to reduce the impact of shower particles from their edges. In addition the secondary vertical and horizontal collimators were used to optimize the background conditions.

Due to LHC machine protection requirements, the total beam intensity in the high-\(\beta ^{\star } \) runs is limited to \(3 \cdot 10^{11}\) protons per beam. A filling scheme of five bunches with \(6 \cdot 10^{10}\) protons each was used in all fills. For background studies in the first fill, one pair of non-colliding bunches was used.

After the LHC was filled, the energy was ramped up to \(6.5~\text {TeV} \) and \(\beta ^{\star } \) was de-squeezed to 2.5 km. The next step was the beam-based alignment (BBA). In this procedure, the RP windows scrape the beam edge and the resulting signals in the beam loss monitors [12] are used to determine the beam trajectory. All RP and collimator positions are given in terms of the beam width \(\sigma \) relative to the measured beam trajectory.

When the BBA procedure was finished, the collimators were moved to their final positions and the RPs were set at the \(3\sigma \) positions, corresponding to a distance between the RP window facing the beam and the beam orbit of about 0.5 mm. The detectors themselves were about 0.3–0.4 mm further away from the beam orbit due to the RP window thickness and the gap towards the detector edge.

The evolution of the background fraction was monitored by the ratio of background to elastic trigger rates. If the background rate reached the level of the elastic rate the RPs were retracted slightly and a re-scraping to a beam width of \(2\sigma \) was performed. Depending on the beam intensity, this procedure was repeated after 30–90 min. The fill was dumped when the elastic rate fell below 1 Hz.

In four fills (5313, 5315, 5317, 5321), which each lasted between 10 and 24 h, a total integrated luminosity of about 340 \(\upmu \)b\(^{-1}\) was collected. The luminosity and the recorded number of elastic-scattering candidates for the individual fills and runs are given in Sect. 5.1.

The emittance was regularly measured by wire scans and a dedicated synchrotron light monitor. After injection, typical values of the horizontal and vertical emittances were below 1 \(\upmu \)m. After ramp-up and de-squeezing, these values grew to about 1.1 \(\upmu \)m for the vertical emittance and about 2.5 \(\upmu \)m for the horizontal emittance. The measured emittance and its time evolution are taken into account in the event simulation model described in Sect. 4.2.

A dedicated trigger menu was used to record the sample of elastic interactions, and also the various diffractive and background samples needed for systematic studies. The collection of elastic events is based on conditions which require in each spectrometer arm a trigger signal in at least one detector in the stations at opposite sides of the central ATLAS detector. For background studies, a similar trigger pattern was used but it combined all upper or lower detectors of all stations, which excludes elastic events by construction. The trigger efficiency was derived from a minimum-bias sample, which is based on a single trigger signal from any ALFA detector. For the selected data sample, the data acquisition dead time was below \(0.3\%\) and the trigger efficiency was above \(99.97\%\). An extensive software suite [13] is used in the reconstruction of data, in detector operations, and in the trigger and data acquisition systems of the experiment.

3.3 Track reconstruction and alignment

The tracks of the elastically scattered protons are reconstructed in the ALFA main detectors. The tracks are reconstructed individually in each ALFA detector. The reconstruction method takes advantage of the fact that the tracks left by the elastically scattered protons are almost parallel to the beam. Then, one can neglect the slope of the trajectory and focus on the determination of its position only. Because the layers are staggered, the position is constrained by the geometrical overlap of the fired fibres projected separately on the u and v directions. A track is required to consist of hits in at least three overlapping fibres in each direction (an elastically scattered proton typically fires 18–19 fibres in a detector).
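The overlap idea can be illustrated with an idealized one-dimensional model. The sketch below assumes ten layers of 0.5 mm fibres in a single projection, each layer shifted by a tenth of the fibre size, with exactly one fired fibre per layer and no noise or cross-talk. The function names and the layer geometry origin are hypothetical, and this is not the ALFA reconstruction code.

```python
import math

FIBRE_WIDTH_MM = 0.5
N_LAYERS = 10
STAGGER_MM = FIBRE_WIDTH_MM / N_LAYERS  # a tenth of the fibre size


def fibre_interval(layer, fibre):
    """Return the (low, high) edges in mm covered by one fibre of one layer."""
    low = layer * STAGGER_MM + fibre * FIBRE_WIDTH_MM
    return low, low + FIBRE_WIDTH_MM


def hit_fibre(layer, u_mm):
    """Index of the fibre in the given layer that a track at u_mm traverses."""
    return math.floor((u_mm - layer * STAGGER_MM) / FIBRE_WIDTH_MM)


def reconstruct(hits, min_layers=3):
    """Return (centre, width) of the common overlap of the fired fibres.

    hits maps layer index to fired fibre index. None is returned when fewer
    than min_layers layers fired or when the fibres have no common overlap.
    """
    if len(hits) < min_layers:
        return None
    intervals = [fibre_interval(layer, fibre) for layer, fibre in hits.items()]
    low = max(interval[0] for interval in intervals)
    high = min(interval[1] for interval in intervals)
    if high <= low:
        return None
    return 0.5 * (low + high), high - low


TRUE_U_MM = 7.23  # toy track position
toy_hits = {layer: hit_fibre(layer, TRUE_U_MM) for layer in range(N_LAYERS)}
centre_mm, width_mm = reconstruct(toy_hits)
```

In this ideal case the overlap region is one stagger step wide, so the reconstructed centre lies within half a step of the true position. The measured resolution quoted in Sect. 2 is larger than this ideal limit, as expected for a real detector.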

Reconstruction of the event kinematics requires knowledge of the transverse position of the tracks relative to the beam. A transformation from the detector-related u and v coordinates to the x and y coordinates relative to the beam requires a good understanding of the detector position: the rotation of the detector around the beam axis, the horizontal offset between the centre of the detector and the centre of the beam, and the vertical distance between the detector and the beam.

Information about the horizontal offset and the rotation angle is obtained from the analysis of distributions of elastically scattered protons (see Sect. 5.1 for details of the event selection). The (x, y) distribution of the positions of these protons at a given RP station has an elliptical shape centred around the beam and is elongated in the y direction. The two detectors of the station, upper and lower, measure fragments of this ellipse. For a correctly calculated horizontal offset, the average value of the x position, \(\left\langle x \right\rangle \), should be zero. For a correctly determined rotation of the detector, no correlation should be observed between x and y. The alignment procedures are based on measurements of \(\left\langle x \right\rangle \) and how \(\left\langle x \right\rangle \) depends on y. The resulting alignment corrections are applied iteratively until the detectors are fully aligned.
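The iterative logic described here can be sketched as follows, under simplifying assumptions: the misalignment is modelled as a rigid rotation plus a horizontal shift, the dependence of \(\left\langle x \right\rangle \) on y is taken from a straight-line least-squares fit, and the input is a symmetric toy grid rather than real proton positions. All names and numbers are illustrative and this is not the procedure used in the analysis.

```python
import math


def fit_line(points):
    """Least-squares fit of x = a + b * y; returns (a, b)."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in points)
    var = sum((y - mean_y) ** 2 for _, y in points)
    slope = cov / var
    return mean_x - slope * mean_y, slope


def misalign(points, phi, dx):
    """Toy detector misalignment: rotate by phi (rad), then shift x by dx."""
    c, s = math.cos(phi), math.sin(phi)
    return [(x * c + y * s + dx, -x * s + y * c) for x, y in points]


def align(points, n_iter=10):
    """Iteratively remove the x offset and the x-y correlation.

    Returns the accumulated offset, the accumulated rotation angle and the
    corrected points.
    """
    offset, angle = 0.0, 0.0
    pts = list(points)
    for _ in range(n_iter):
        a, b = fit_line(pts)
        delta = math.atan(b)
        c, s = math.cos(delta), math.sin(delta)
        # Undo the fitted shift first, then the fitted rotation
        pts = [((x - a) * c - y * s, (x - a) * s + y * c) for x, y in pts]
        offset += a
        angle += delta
    return offset, angle, pts


# Symmetric toy pattern in an upper detector (mm): <x> = 0, no x-y correlation
toy_grid = [(x, float(y)) for x in (-0.3, -0.1, 0.1, 0.3) for y in range(1, 11)]
measured = misalign(toy_grid, phi=0.005, dx=0.05)
found_offset, found_angle, corrected = align(measured)
```

A single pass already recovers most of the rotation and offset in this toy. The residual shrinks with each iteration, which mirrors the statement that the corrections are applied repeatedly until the detectors are aligned.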

The information about the vertical distance between each detector and the centre of the beam is obtained from several sources. First, the distance between the upper and lower detectors is measured using data collected by the ALFA overlap detectors, which are placed on the sides of the main detectors and extend below the beam for the upper RP and above the beam for the lower RP [5]. A simultaneous measurement of traversing particles by the upper and lower detectors allows the determination of their relative position in the vertical direction.

The measurement using the overlap detectors provides the vertical distance between the upper and lower detectors, i.e. the sum of their distances to the beam centre. The determination of the position of the beam between the detectors is again based on a basic property of the (x, y) distribution of elastically scattered protons, namely that it has an up–down mirror symmetry. Part of the alignment procedure is to search for a vertical beam offset that equalizes the y distributions (corrected for the reconstruction efficiency, see Sect. 5.3) in the upper and lower detectors.

The alignment analysis presented above was performed separately for different detectors and data-taking periods, resulting in a range of values and corresponding uncertainties for each parameter. Table 1 summarizes the obtained results.

The dominant systematic uncertainty for the rotation angle originates from ignoring the underlying fibre structure of the detectors. This structure can induce a bias in the reconstructed hit pattern at a scale comparable to the spatial resolution. The magnitude of this effect is estimated by varying the fiducial volume in which the alignment analysis is performed. The statistical uncertainty is at a similar level. The total uncertainty of the horizontal offset is dominated by the systematic component, evaluated by changing the method of extracting the centre of the distribution.

The uncertainty for the vertical distance is dominated by the systematic component originating from our imperfect knowledge of how the overlap detectors are positioned relative to the main detectors, from the choice of statistical method used to extract the distance value, and from the event selection. The dominant source of systematic uncertainty for the vertical offset is related to the choice of method for testing the compatibility of the distributions, and this uncertainty is similar in size to the statistical uncertainty.

The next step of the alignment analysis exploits the fact that the kinematics of an elastic-scattering event are fully described by only two parameters, meaning that a measurement of the proton position in one detector fully constrains the kinematics. This measurement can then be extrapolated to all other detectors of the same elastic arm. The properties of the LHC optics used for these measurements, especially its parallel-to-point focusing in the vertical plane, make this extrapolation very precise in the y coordinate. Comparing the extrapolated position with the measured one provides further constraints on the vertical alignment. The analysis showed a need for distance corrections at different stations of up to 55 \(\upmu \)m, which is larger than the estimated distance uncertainties, suggesting that they are underestimated. One possible explanation is a non-zero average angle of the particles traversing the overlap detectors, which would lead to a bias in the distance measurement.

The above steps of the alignment analysis are based on the same principles and techniques as used in the previous ALFA measurements [3, 4]. However, the final analysis presented in this paper is very sensitive to the distance uncertainties. Therefore, additional steps for vertical alignment were introduced to ensure sufficient precision. Once the relative positions of all detectors within each elastic arm are fixed using the precise extrapolations discussed above, the two remaining parameters are the global vertical distance between the two arms and the global vertical position of the beam between them.

The global vertical distance is found by performing the complete analysis presented in this paper (see Sect. 5) assuming different values of the global vertical distance. A fit to the differential elastic cross section is performed using only statistical uncertainties. The \(\chi ^2\) of the fit, considered as a function of the global vertical distance, has a clear minimum whose position determines the final vertical distance used in the analysis. This correction is found to be 86 \(\upmu \)m. It is added to the distance determined by averaging the distance measurements from individual stations. The uncertainty is evaluated to be 22 \(\upmu \)m, with the largest contribution originating from the variation of the luminosity within its uncertainty (see Sect. 5.6).
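Locating the minimum of such a scan can be illustrated with a parabola through the lowest scan point and its two neighbours. The sketch below is a generic interpolation under that assumption, not the procedure used in the analysis. The scan values are toy numbers generated from a parabola whose minimum is placed at 86 \(\upmu \)m to echo the correction quoted above, with an arbitrary width.

```python
def parabola_vertex(p1, p2, p3):
    """Abscissa of the vertex of the parabola through three (x, y) points."""
    (x1, y1), (x2, y2), (x3, y3) = p1, p2, p3
    num = (x2 - x1) ** 2 * (y2 - y3) - (x2 - x3) ** 2 * (y2 - y1)
    den = (x2 - x1) * (y2 - y3) - (x2 - x3) * (y2 - y1)
    return x2 - 0.5 * num / den


def scan_minimum(scan):
    """Interpolate the chi2 minimum from a list of (distance, chi2) pairs."""
    pts = sorted(scan)
    i_min = min(range(len(pts)), key=lambda k: pts[k][1])
    if i_min == 0 or i_min == len(pts) - 1:
        raise ValueError("minimum lies at the edge of the scanned range")
    return parabola_vertex(pts[i_min - 1], pts[i_min], pts[i_min + 1])


# Toy scan: hypothetical chi2 values versus assumed distance correction (um)
toy_scan = [(d, 50.0 + ((d - 86.0) / 20.0) ** 2) for d in (0.0, 50.0, 100.0, 150.0, 200.0)]
best_correction_um = scan_minimum(toy_scan)
```

The helper raises an error when the lowest point is at the edge of the scan, since the minimum is then not bracketed and the scan range would need to be extended.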

It is worth noting that the sensitivity of the \(\chi ^2\) value to distance originates from the very good acceptance of the ALFA detectors down to the region dominated by the Coulomb interaction, which is well understood. Performing the analysis with misaligned detectors affects the measured distribution, making it incompatible with our knowledge of the physics that governs the behaviour of the t-spectrum in this range, thus increasing the \(\chi ^2\).

As the final step of the alignment, the vertical position of the beam between the elastic arms is fine-tuned by equalizing the t-spectra measured in the two arms. The principle is similar to that for the measurement per station discussed above, but when performed at the arms level the resulting uncertainty is improved to 4–15 \(\upmu \)m, with corrections of the order of 12–27 \(\upmu \)m (depending on the data-taking period). The uncertainty is dominated by two systematic components of similar size related to the details of testing the compatibility of the distributions and to the uncertainty of the reconstruction efficiency in the two arms.

4 Simulation model for elastic scattering

4.1 Theoretical predictions

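Elastic scattering is related to the total cross section through the optical theorem (Eq. (1)) and the differential elastic cross section is obtained from the scattering amplitudes of the contributing diagrams:
\[ \frac{\text {d}\sigma }{\text {d}t} = \frac{1}{16\pi }\left| f_{\textrm{N}}(t) + f_{{\textrm{C}}}(t)\, \text {e}^{\text {i}\alpha \phi (t)}\right| ^{2} . \tag{7} \]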

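Here, \(f_{\textrm{N}}\) is the purely strong-interaction amplitude, \(f_{{\textrm{C}}}\) is the Coulomb amplitude, \(\alpha \) is the fine-structure constant, and a phase \(\phi \) is induced by long-range Coulomb interactions [14, 15]. In the simplest model elaborated in Ref. [15] the individual amplitudes are given by
\[ f_{{\textrm{C}}}(t) = -8\pi \alpha \hbar c\, \frac{G^{2}(t)}{|t|}, \qquad f_{\textrm{N}}(t) = (\rho + \text {i})\, \frac{\sigma _{\text {tot}}}{\hbar c}\, \text {e}^{-B|t|/2}, \tag{8} \]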

where G is the electric form factor of the proton and B is the nuclear slope. The value of \(f_{\textrm{N}}(0)\) follows from the optical theorem, while the t-dependence is the simplest parameterization, which is valid only at small |t|. A possible generalization of Eq. (8) that incorporates a t-dependent slope consists of the introduction of additional terms C proportional to \(t^{2}\) and D proportional to \(|t|^{3}\)
\[ f_{\textrm{N}}(t) = (\rho + \text {i})\, \frac{\sigma _{\text {tot}}}{\hbar c}\, \text {e}^{\left( -B|t| - Ct^{2} - D|t|^{3}\right) /2}, \tag{9} \]

parameterizing the curvature of the t-spectrum at large |t|. Their effect is most visible in between \(-t = 0.05~\text {GeV} ^2\) and \(-t = 0.2~\text {GeV} ^2\). At smaller |t|, the slope of the t-spectrum is essentially constant and well parameterized by \(\exp {(-B|t|/2)}\), and at very large |t| beyond \(0.2~\text {GeV} ^{2}\) the form of the spectrum changes when approaching the diffractive dip, which is a local minimum in the t-spectrum generated by the interference of diffractive amplitudes. It is located around \(0.5~\text {GeV} ^2\) in \(13~\text {TeV} \) pp collisions and followed by a local maximum, called the bump. At yet larger |t|-values the spectrum continues to fall steeply.

Depending on the number of terms included in the exponential function, three different models are considered for parameterization of the nuclear amplitude; they are referred to as the B-model with validity up to \(-t=0.04~\text {GeV} ^2\), the BC-model with validity up to \(-t=0.1~\text {GeV} ^2\) and the full BCD-model with validity up to \(-t=0.2~\text {GeV} ^2\). The validity ranges are approximate and were empirically determined by studying the quality of a fit to the data.

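The theoretical form of the t-dependence of the cross section is obtained by evaluating the square of the complex amplitudes, following Eqs. (7), (8), and (9):

\[
\frac{\textrm{d}\sigma }{\textrm{d}t} = \frac{4\pi \alpha ^{2}(\hbar c)^{2}}{|t|^{2}}\, G^{4}(t) - \sigma _{\text {tot}}\, \frac{\alpha G^{2}(t)}{|t|} \left[ \sin (\alpha \phi (t)) + \rho \cos (\alpha \phi (t)) \right] \textrm{e}^{(-B|t| - Ct^{2} - D|t|^{3})/2} + \sigma _{\text {tot}}^{2}\, \frac{1+\rho ^{2}}{16\pi (\hbar c)^{2}}\, \textrm{e}^{-B|t| - Ct^{2} - D|t|^{3}}, \tag{10}
\]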

where the first term corresponds to the Coulomb interaction, the second to the Coulomb–nuclear interference (CNI), and the last to the hadronic interaction. This parameterization is used to fit the differential elastic cross section to extract the physics parameters \(\sigma _{\text {tot}} \) and \(\rho \), and the terms B, C and D relevant to the nuclear slope, depending on the model. This simple parameterization of the differential elastic cross section has been criticized by the authors of Ref. [16]. The criticism is based upon the fact that the formula has only been derived for a constant B-slope and is thus in principle not valid when the curvature terms C and D are introduced. However, in Ref. [17] it is shown that the formula is still valid at a level of \(10^{-3}\) in \(\rho \) given this kind of t-dependence of the slope.
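The three-term structure described above can be made concrete with a short numerical sketch. It assumes the standard form of the Coulomb, interference and nuclear terms, the dipole form factor with \(\Lambda =0.71~\text {GeV} ^2\), and the simple Coulomb phase \(\phi (t) = -\ln (B|t|/2) - \gamma _{\text {E}}\) rather than the nominal phase of this analysis. The function names and the parameter values used in the usage note are illustrative placeholders, not results of the measurement.

```python
import math

ALPHA = 1.0 / 137.035999   # fine-structure constant
HBARC2 = 0.389379          # (hbar*c)^2 in GeV^2 mb
LAMBDA = 0.71              # dipole scale in GeV^2, as quoted in the text
EULER_GAMMA = 0.5772156649


def form_factor(abs_t):
    """Dipole electric form factor G(t) = (Lambda / (Lambda + |t|))^2."""
    return (LAMBDA / (LAMBDA + abs_t)) ** 2


def coulomb_phase(abs_t, slope_b):
    """Simple Coulomb phase, phi = -ln(B|t|/2) - gamma_E (assumption of this sketch)."""
    return -math.log(slope_b * abs_t / 2.0) - EULER_GAMMA


def dsigma_dt_terms(abs_t, sigma_tot, rho, slope_b, c=0.0, d=0.0):
    """Return the (Coulomb, interference, nuclear) terms in mb/GeV^2.

    abs_t in GeV^2, sigma_tot in mb, slope_b in GeV^-2, c in GeV^-4, d in GeV^-6.
    Setting c = d = 0 gives the B-model; d = 0 gives the BC-model.
    """
    g2 = form_factor(abs_t) ** 2                      # G^2(t)
    exponent = slope_b * abs_t + c * abs_t ** 2 + d * abs_t ** 3
    phase = ALPHA * coulomb_phase(abs_t, slope_b)     # alpha * phi(t)
    coulomb = 4.0 * math.pi * ALPHA ** 2 * HBARC2 * g2 ** 2 / abs_t ** 2
    interference = (-sigma_tot * ALPHA * g2 / abs_t
                    * (math.sin(phase) + rho * math.cos(phase))
                    * math.exp(-exponent / 2.0))
    nuclear = (sigma_tot ** 2 * (1.0 + rho ** 2) / (16.0 * math.pi * HBARC2)
               * math.exp(-exponent))
    return coulomb, interference, nuclear
```

For example, `sum(dsigma_dt_terms(1e-3, 100.0, 0.1, 21.0))` evaluates the B-model prediction at \(-t = 10^{-3}~\text {GeV} ^2\) for placeholder values of \(\sigma _{\text {tot}} \), \(\rho \) and B. With these placeholders the Coulomb term dominates at \(-t = 10^{-4}~\text {GeV} ^2\) and the nuclear term dominates at \(-t = 10^{-2}~\text {GeV} ^2\).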

The theoretical prediction given by Eq. (10) also depends on the Coulomb phase \(\phi \) and the form factor G. This analysis uses a conventional dipole parameterization of the proton electric form factor from Ref. [18]

\[
G(t) = \left( \frac{\Lambda }{\Lambda + |t|} \right) ^{2},
\]

where \(\Lambda =0.71~\text {GeV} ^2\). The uncertainty in the electric form factor is derived by comparing the simple dipole parameterization with more sophisticated forms [19], which better describe the high-precision low-energy electron–proton elastic-scattering data [20]. An expression for the Coulomb phase was initially derived in Ref. [15]

\[
\phi (t) = -\ln \frac{B|t|}{2} - \phi _{\text {C}}, \tag{11}
\]

where the model-dependent Coulomb phase shift \(\phi _{\text {C}}\) was taken to be \(\gamma _{\text {E}}=0.577\). Further corrections, primarily from the form factor, were calculated in Ref. [18] and the expression nominally used in this analysis is

Uncertainties in the Coulomb phase are estimated by replacing the parameterization in Eq. (12) by the simple form in Eq. (11). This change has only a minor impact on the cross-section prediction. Replacing the dipole by other forms also has a negligible impact on the determination of the physics parameters.

In the present theoretical framework, a constant nuclear phase is assumed between the real and imaginary parts of the nuclear amplitude. In Ref. [17], various alternative t-dependent nuclear phase models are discussed which would entail a t-dependence of the \(\rho \)-parameter. The models suggested in Ref. [17] are considered for systematic uncertainty purposes, excluding an extreme model featuring a peripheral phase. The model with the largest impact is a simple model in which the \(\rho \)-value vanishes at \(-t=0.1~\text {GeV} ^2\).

4.2 Simulation model

Monte Carlo (MC) simulated events are used to calculate acceptance and unfolding corrections. A fast simulation is used in which the detector resolution is parameterized and tuned to data and the elastically scattered protons are passed through the LHC lattice by means of the beam transport \(2\times 2\) matrix according to Eq. (3). The detector geometry is described by a set of simple requirements. The fast simulation offers convenient methods to tune the relevant detector and beam parameters, in terms of resolution and beam divergence, to measured control observables. The generation of elastic-scattering events is performed with a simple ‘toy’ MC simulation. For each event, a t-value is randomly drawn from a probability density function representing the theoretical model. The nominal model, named BCD, includes the small-|t| parameterization in Eq. (10) with three slope parameters B, C and D. The parameters of the event generation model are summarized in Table 2. The values of the slope parameters, and also \(\sigma _{\text {tot}} \) and \(\rho \), were iteratively adjusted in the analysis and are close to the final results. The validity of this model is limited to relatively small \(-t\) values, \(|t|< 0.2~\text {GeV} ^2\), which is of relevance for the physics parameter determination in this analysis.
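The event-generation step, in which a t-value is drawn from a probability density function, can be illustrated with a minimal sketch. As a simplification, it samples only a pure exponential \(\exp (-B|t|)\) truncated to a finite range, using analytic inversion of the cumulative distribution. The nominal BCD model with Coulomb and interference terms would need, for example, an accept-reject step on top of this. The function name, the seed and the slope value are illustrative assumptions.

```python
import math
import random


def sample_abs_t(rng, slope_b, t_min, t_max):
    """Draw |t| (GeV^2) from a pdf proportional to exp(-B|t|) on [t_min, t_max]."""
    e_min = math.exp(-slope_b * t_min)
    e_max = math.exp(-slope_b * t_max)
    u = rng.random()  # uniform in [0, 1)
    # Invert the truncated-exponential CDF analytically.
    return -math.log(e_min - u * (e_min - e_max)) / slope_b


rng = random.Random(12345)  # fixed seed so the toy sample is reproducible
toy_sample = [
    sample_abs_t(rng, slope_b=21.0, t_min=2.5e-4, t_max=0.2)  # placeholder slope
    for _ in range(20000)
]
```

Inverse-transform sampling is chosen here because the truncated exponential has a closed-form inverse, so every random number yields one accepted event.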

The acceptance of the present data set does not cover the dip, but a parameterization based on the model introduced by Ref. [21] to describe this region is used for \(|t|> 0.2~\text {GeV} ^2\).

The simulation model also includes the relevant LHC beam parameters that describe the width of the production vertex distribution, the intrinsic beam energy spread, and the beam divergence at the IP. The last is of particular importance, as the angular divergence contributes to the scattering angle resolution. The divergence is inferred from a combination of measurements of the beam emittance from wire scans performed by the LHC beam instrumentation, from the beam width measured by the ATLAS inner detector, and in the vertical plane directly from ALFA angular measurements. The resulting beam divergence is implemented per plane, per beam, and per run and, in addition, the time-dependent emittance growth during the runs is incorporated.

A fast parameterization of the detector response is used for the detector simulation, with the spatial resolution tuned to the measured resolution. The resolution is measured by extrapolating tracks reconstructed in the inner stations to the outer stations using beam optics matrix-element ratios and comparing predicted positions with measured positions. Thus, it is a convolution of the resolutions in the inner and outer stations. The fast simulation is tuned to reproduce this convolved resolution. A full Geant4 [22] simulation is used to set the resolution scale between detectors at the inner and outer stations, which cannot be determined from the data.

5 Data analysis

5.1 Event selection

All data used in this analysis were recorded in September 2016 with a beam optics of \(\beta ^{\star } =2.5\) km in four fills of the LHC resulting in seven ATLAS runs. An event preselection consisting of data quality, trigger and reconstruction requirements was applied, resulting in a sample of elastic-scattering candidates. Data quality requirements were applied to ensure that the ALFA detector was fully operational. Only data recorded outside of the scraping periods are taken into account. Furthermore, only periods of the data taking where the dead-time fraction was below \(5\%\) are used. The luminosity-weighted average dead-time fraction is below \(0.3\%\). These requirements eliminate less than 5\(\%\) of the data.

Events are required to pass the trigger conditions for elastic-scattering events, and have a reconstructed track in all four detectors of the arm which fired the trigger. Events with additional tracks in detectors of the other arm arise from the temporal overlap of halo protons with elastic-scattering protons and are retained. If halo and elastic-scattering protons overlap in the same detectors, which happens typically only on one side, a track-matching procedure [3] between the detectors on each side is applied to identify the elastic track.

Fiducial cuts to ensure good containment inside the detection area are applied to the vertical coordinate. Tracks must be at least 60 \(\upmu \)m away from the edge of the detector nearer the beam, where the full detection efficiency is reached. The cut at the detector edge determines the smallest accessible value of |t|. At large vertical distance, the vertical coordinate must be at least 1 mm away from the shadow of the beam screen, a protection element of the quadrupoles, in order to minimize the impact from showers generated in the beam screen.

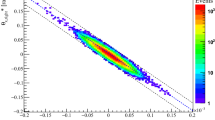

Further geometrical cuts on the left–right acollinearity are applied, exploiting the back-to-back topology of elastic-scattering events. Figure 3 shows the correlation between left-side (A-side) and right-side (C-side) positions measured in the vertical plane.

Fig. 3. The correlation of the y coordinate measured in the inner stations on the A-side and C-side. Elastic-scattering candidates after preselection and fiducial cuts but before acceptance and background rejection cuts are shown. Identified elastic events are required to lie between the red lines. Only data from one run are shown

Fig. 4. The correlation between the coordinate and the local angle in the horizontal plane. Elastic-scattering candidates after preselection and fiducial cuts but before acceptance and background rejection cuts are shown. Identified elastic events are required to lie inside an ellipse corresponding to a \(3.5\sigma \) selection, represented by a thin red line. The vertical band corresponds to badly reconstructed tracks, the narrow, almost horizontal band represents the main halo contribution, and the structure extending to the upper right quadrant contains off-momentum protons

The elastic-scattering candidates are observed in a narrow region along the diagonal, and events are selected in a band of 2 mm width, as indicated by the red lines in Fig. 3. In the horizontal plane the position difference between the left and right sides must be within \(3.5\sigma \) of its resolution determined from simulation. An efficient cut against non-elastic background is obtained from the correlation of the local angle between two stations and the position in the horizontal plane, as shown in Fig. 4, where elastic-scattering events appear inside a broad ellipse with positive slope, whereas background is concentrated in a narrow ellipse with negative slope or in the band in the top-right quadrant. A similar selection is also applied in the vertical plane, where elastic events are selected in a 40 \(\upmu \)rad wide band in the local angle. The selection requirements are summarized in Table 3, alongside the number of events that meet the requirements at each step of the event selection for the longest run. A total of 6.9 million events were selected. The selection efficiency relative to the preselection is about 94%. The fraction of elastic pile-up events, where two elastic events from the same bunch crossing are observed in two different arms, is about 0.2‰. The numbers of selected elastic-scattering candidates per run are summarized in Table 4.
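An elliptical \(3.5\sigma \) selection in the plane of horizontal position and local angle can be sketched as a cut on the Mahalanobis distance of a centred two-dimensional Gaussian. The widths and the correlation coefficient are hypothetical inputs, since the actual ellipse parameters are not given here, and the function name is an assumption of this sketch.

```python
def inside_ellipse(x, theta, sigma_x, sigma_theta, corr, n_sigma=3.5):
    """True if (x, theta) lies within the n_sigma contour of a centred 2D Gaussian.

    corr is the correlation coefficient between x and theta, with |corr| < 1.
    A positive corr gives an ellipse with positive slope.
    """
    zx = x / sigma_x
    zt = theta / sigma_theta
    distance_sq = (zx * zx - 2.0 * corr * zx * zt + zt * zt) / (1.0 - corr * corr)
    return distance_sq <= n_sigma * n_sigma
```

With a positive correlation, a point displaced along the major axis is accepted while a point at the same distance along the anti-correlated direction is rejected, which is how a positively sloped signal ellipse separates from a negatively sloped background population.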

5.2 Background determination

A small fraction of the background is expected to be inside the fiducial volume defined by the event selection cuts. The background contributions can be clearly observed e.g. in Fig. 4, where the elastic core populates the interior of the elliptical contour, whereas distinct patterns seen as narrow or wide bands constitute background from different sources. A fraction of the background extends into the selected area and thus constitutes an irreducible component that needs to be subtracted from the reconstructed t-spectrum. The fraction of background events is very small and its subtraction has little impact on the results.

Two types of background are considered: non-elastic physics background processes from central diffraction, also known as double-Pomeron exchange (DPE) \(pp\rightarrow pp+X\), and accidental coincidences of either halo protons on both sides or a halo proton on one side and a proton from single diffraction (SD) on the other side. Other combinations involving double diffraction or protons from non-diffractive processes in conjunction with a halo proton are not considered, given the overall small level of background. The strategy for the determination of the background is to use a data-driven template method for the halo+halo and halo+SD estimation and a MC simulation for DPE. The DPE simulation is based on the MBR model [23], which predicts a total DPE cross section of 0.82 mb at \(13~\text {TeV} \). The event generation for DPE is done with nominal MBR settings as implemented in Pythia 8.303 [24].

In the data-driven method used to calculate the contribution from accidental coincidences, events with tracks only on one side of the experiment are selected, and those with activity on the opposite side are vetoed. The veto suppresses contributions from elastic events and the sample composition is thus dominated by halo and SD events. Then a background template is constructed by randomly mixing uncorrelated single-sided events from the left side and right side, corresponding to the background from accidental coincidences. The absolute normalization of the templates is obtained by comparing the number of events in the data and the template in control regions, before applying the event selection cuts. The procedure is illustrated in Fig. 5, where the correlation between the horizontal track position and the local angle is shown in data, in DPE simulation and in the templates. The normalization region is depicted in Fig. 5a showing the correlation in data between the horizontal position and local angle in the horizontal plane. The events are counted outside of the shaded area in the upper right corner and the elliptical elastic signal region. The non-shaded normalization region is dominated by background from accidental coincidences of halo protons and free of elastic events. Simulated DPE events shown in Fig. 5b contribute very little to this area, but a large fraction of accidental coincidences from the event-mixing templates shown in Fig. 5c lie in the normalization region. The templates are scaled such that the number of events in this region equals the number of events in the data. The irreducible background for selected events is then calculated by applying the nominal event-selection cuts to the properly scaled template events.

Fig. 5. Correlation plots before cuts in the data (a), showing regions used to normalize the event-mixing templates for the background estimate of accidental coincidences between two halo protons or a halo proton and an SD proton. The normalization region is the area in the bottom and left part of the figure. The shaded region surrounded by the black lines and ellipse is excluded from the normalization. The templates in (b) and (c) have been normalized to show the expected number of background events present in the data sample

Also, the normalization for simulated DPE events is determined from the data by counting events in a region of the correlation between the vertical coordinate on the A-side and C-side (not shown). The selected normalization region is mostly populated by DPE events. About \(10\%\) of the data events in the normalization area originate from accidental coincidences, which are subtracted before calculating the DPE scaling factor.
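The two normalization steps described above reduce to simple ratios of event counts, sketched below. The function names are hypothetical, and the counts in the usage note are invented for illustration only.

```python
def template_scale(n_data_control, n_template_control):
    """Scale factor that matches the event-mixing template to data in the control region."""
    if n_template_control <= 0:
        raise ValueError("template has no events in the control region")
    return n_data_control / n_template_control


def dpe_scale(n_data_control, n_accidental_control, n_dpe_mc_control):
    """DPE scale factor after subtracting accidental coincidences from the data count."""
    if n_dpe_mc_control <= 0:
        raise ValueError("DPE simulation has no events in the control region")
    return (n_data_control - n_accidental_control) / n_dpe_mc_control


def background_in_signal(scale, n_passing_selection):
    """Expected irreducible background: scaled events that pass the nominal selection."""
    return scale * n_passing_selection
```

For instance, a template with 2000 events in a control region containing 500 data events receives a scale of 0.25, so 40 template events passing the nominal selection correspond to an expected background of 10 events.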

The resulting background estimate is illustrated in Fig. 6 for Arm 1, which shows that the overall level of background is very small and dominated by DPE, except at small |t| where the accidental coincidences prevail. In previous analyses at \(\beta ^{\star } =90\) m [3, 4] a procedure called the ‘anti-golden’ method was applied, in which events collected in all upper or all lower detectors were used to estimate the irreducible background in the sample of elastic-scattering candidates. This method is not used in the present analysis because the detectors are much closer to the beam and even small vertical beam offsets (up to \(80~\upmu \)m, see Sect. 3.3) introduce an asymmetry between the arms that is not reproduced by the anti-golden method.

The resulting background level is 0.75‰ on average with very small differences between the different runs. Systematic uncertainties are estimated by changing the normalization regions, the template composition, and the parameters of the DPE simulation. The nominal normalization region for accidental coincidences shown in Fig. 5 in the plane of x and \(\theta _x\) is changed to a region in the plane of x on the A-side and C-side, which exhibits a different type of correlation. The template composition is varied by imposing a veto on minimum-bias triggers from the central ATLAS detector, thereby depleting the SD content of the templates. The normalization regions for the DPE background are varied in a similar way. In addition, the DPE background shape is changed by varying the value of the Pomeron intercept in the simulation from 0.02 to 0.15. Varying this MBR parameter was found to have the largest impact on the DPE shape. The total background uncertainties are dominated by systematic uncertainties and range from \(10.4\%\) to \(14.8\%\).

5.3 Reconstruction efficiency

The reconstruction efficiency accounts for elastic events that cannot be fully reconstructed, and are therefore excluded from the analysis, because of the following effects. The development of a hadronic shower in the Roman Pot may lead to configurations where the proton track cannot be reconstructed in one or more of the four detectors. Also, an overlap with a halo proton or SD proton can lead to reconstruction failures, particularly if these background protons have initiated a shower either in the Roman Pot material or upstream as a result of a collimator scattering or beam–gas interaction. The large majority of failed reconstructions are thus related to events with a hit multiplicity that is too large, in which no meaningful tracks can be determined. The probability of reconstruction failing because of too few hits is, in contrast, very low, since for the regular reconstruction only three good layers out of ten in u and v are required and the single-layer efficiency is about \(90\%\) [5].

The number of elastically scattered protons that are lost is estimated by a data-driven tag-and-probe method. This method exploits the back-to-back topology of elastic events, allowing a proton to be tagged on one side of the spectrometer and the reconstruction to be probed on the other side. The probability that an elastic event is fully reconstructed is given by the reconstruction efficiency:

\[
\varepsilon _{\text {rec}} = \frac{N_{\text {reco}}}{N_{\text {reco}} + N_{\text {fail}}},
\]

where \(N_{\text {reco}}\) is the number of fully reconstructed elastic-scattering events, which have at least one reconstructed track in each of the four detectors of a spectrometer arm, and \(N_{\text {fail}}\) is the number of not fully reconstructed elastic-scattering events that have reconstructed tracks in fewer than four detectors. Events are grouped into several reconstruction cases, for which different selection criteria and corrections are applied, to determine if an event is from elastically scattered protons, but was not fully reconstructed because of inefficiencies.
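With \(N_{\text {reco}}\) and \(N_{\text {fail}}\) defined as above, the basic efficiency is the fraction of fully reconstructed events among all elastic events. The sketch below computes this ratio together with a binomial statistical uncertainty. It omits the background subtraction and phase-space corrections that enter the final calculation, and the function name and the counts in the usage note are illustrative assumptions.

```python
import math


def reconstruction_efficiency(n_reco, n_fail):
    """Return (efficiency, binomial statistical uncertainty) from event counts."""
    total = n_reco + n_fail
    if total <= 0:
        raise ValueError("no events to compute an efficiency from")
    efficiency = n_reco / total
    # Binomial uncertainty on a fraction measured from `total` trials.
    uncertainty = math.sqrt(efficiency * (1.0 - efficiency) / total)
    return efficiency, uncertainty
```

As an example with invented counts, 8500 fully reconstructed and 1500 failed events give an efficiency of 0.85 with a statistical uncertainty of about 0.0036.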

Both the fully reconstructed and failed events need to have an elastic trigger signal present and need to be inside the acceptance region. The efficiency is determined separately for the two spectrometer arms. Based on the number of detectors with at least one reconstructed track, the events are grouped into six reconstruction cases (topologies), named 4/4, 3/4, 2/4, (1 + 1)/4, 1/4 and 0/4. The first number in this notation indicates the number of detectors with at least one reconstructed track. In the 2/4 case, both detectors with tracks are on one side of the IP and in the (1 + 1)/4 case they are on different sides.

Elastic events for all cases are selected with the event selection described in Sect. 5.1, using the subset of cuts available to each particular topology. The tag-and-probe method relies on the very high efficiency of the trigger scintillator \((\varepsilon _{\text {trig}}>99.9\%)\) to tag the activity of events in the four detectors of an arm. The activity is quantified by the number of hits, which must be more than five. Then selection cuts are applied to detectors with reconstructed tracks to accept events as elastic or reject them as background. Not all cuts can be applied to every case; for example, left–right correlation cuts cannot be applied in the 2/4 case, where tracks are reconstructed on only one side. This results in an overestimation of the elastic event yield and a reduced background rejection efficiency, which is accounted for by a phase-space correction derived from simulation. Because of the reduced set of cuts available for topologies with failed reconstructions, more background is present, particularly in the 2/4 topology.

The background strategy follows the method applied to ‘golden’ 4/4 events and described in Sect. 5.2: two background contributions from DPE events and accidental halo+SD events are considered, where both are normalized in control regions specific to the topology. The single-side templates for the accidental coincidences are extended to also include events where only one detector has a reconstructed track. For the cases where only two or fewer detectors have reconstructed tracks, particularly the 2/4 case, the background from accidental coincidences becomes important, with a similar contribution from the DPE background. For the cases with only one or no detector with reconstructed tracks, the tracking information is not sufficient for event classification, and the expected yields are calculated with a probabilistic method [3] based on cases with reconstructed tracks in two or more detectors. Taking into account background subtraction and phase-space corrections, the final reconstruction efficiency calculation can be cast in the following equation:

where \(B_{j/4}\) denotes the background, PS the phase-space correction and \(N_{\textrm{lower}}\) merges the background-subtracted topologies calculated by the probabilistic method. The t-spectra after background subtraction and with phase-space corrections are shown for different topology classes in Fig. 7. The shape of the spectra for topologies with failed reconstruction is in good agreement with the shape of 4/4 cases, except for the 2/4 case where at large |t| values the background, mostly from DPE, is slightly overestimated. This leads to a bias, which is taken into account in the systematic uncertainty (see Fig. 10).

Fig. 7. t-spectra for elastic-scattering candidates with reconstructed tracks in four detectors (4/4) and for events where in at least one detector the reconstruction failed. Events in the 2/4 topology have two detectors with reconstructed tracks on the same side, those in the (1 + 1)/4 have one detector with reconstructed tracks per side. Corrections for phase-space effects are applied and background is subtracted

The final reconstruction efficiency calculated per run and per arm according to Eq. (14) is shown in Fig. 8. The values are around \(85\%\), and are typically about 2\(\%\) higher for Arm 1 than for Arm 2 because of a slightly different material distribution [3]. The results are summarized in Table 4 for both arms. The systematic uncertainties range between \(0.4\%\) and \(0.9\%\) and are dominated by the composition of the templates for accidental coincidences and uncertainties in the background subtraction. The former are evaluated by applying different veto conditions and by increasing and decreasing the SD fraction in the template; the latter are calculated by varying the background normalization regions; and in both cases the procedure described in Sect. 5.2 is followed.

The reconstruction efficiency has a time dependence, both within a run and between runs. This time dependence is correlated with the number of halo protons overlapping in time with protons from elastic scattering. Every proton, be it from elastic scattering, an SD event or the beam halo, has a certain probability to develop a hadronic shower; thus in events with overlapping protons the shower probability is approximately twice as high as in events with only one proton per detector. The correlation between the reconstruction efficiency and the number of overlapping halo events is illustrated in Fig. 9, where \(\varepsilon _{\text {rec}}\) is plotted as a function of the fraction of multi-track events. Each point in Fig. 9 corresponds to a data-taking period between beam scrapings. Multi-track events are defined as events with a selected elastically scattered proton in each detector and an additional reconstructed track in any of the four detectors. Other sources, such as cross-talk, can also create additional tracks, but these are time-independent, whereas additional tracks from the halo exhibit a strong time dependence. Therefore, the time-variation of the multi-track fraction is dominated by the halo overlap, which induces the clear correlation with \(\varepsilon _{\text {rec}}\) shown in Fig. 9. The reconstruction efficiency is nominally assumed to be independent of the t-value because of the uniform material distribution across the detection surface. However, a possible t-dependent bias could be introduced through deficiencies in the background subtraction method for the 2/4 topology. A model of this possible t-dependence, shown in Fig. 10, is used to assess an additional systematic uncertainty.
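The factor of approximately two quoted above for overlapping protons follows from a short estimate. As a simplifying assumption of this sketch, each proton is taken to shower independently with the same small probability p, so that the probability of at least one shower with two protons in a detector is

\[
P_{\text {shower}}^{(2)} = 1 - (1-p)^{2} = 2p - p^{2} \approx 2p \quad (p \ll 1).
\]

The correction term \(p^{2}\) is why the doubling is only approximate.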

5.4 Acceptance and unfolding

The acceptance is defined as the ratio of events at particle level passing all geometrical and fiducial acceptance cuts defined in Sect. 5.1 to the total number of generated events, and is calculated as a function of t. The differential elastic cross section is corrected for acceptance losses. The calculation is carried out with the simulation model described in Sect. 4.2. The acceptance is shown in Fig. 11 for each arm. The shape of the acceptance curve can be understood from the contributions of the vertical and horizontal scattering angles to t (see Eq. (6)). The smallest accessible value of |t| is obtained at the detector edge and set by the vertical distance of the detector from the beam. Close to the edge, the acceptance is small because a fraction of the events are lost due to the beam divergence, i.e. events inside the acceptance on one side but outside on the other side. At small |t|, up to \(-t \sim 0.15~\text {GeV} ^2\), vertical and horizontal scattering angles contribute about equally to a given value of t. Larger |t|-values imply larger vertical scattering angles and larger values of |y|, and with increasing |y| the fraction of events lost in the gap between the main detectors decreases. The maximum acceptance is reached for events occurring at the largest possible values of |y| within the beam-screen cut. Beyond that point the acceptance decreases steadily because the events are required to have larger values of |x|, since these t-values are dominated by the horizontal scattering angle component. The difference between the two arms is mainly related to the vertical beam offset, which brings the detectors of Arm 2 closer to the beam, thereby increasing the acceptance. Meanwhile, events at large |y| are shadowed by the beam screen, decreasing the acceptance for Arm 2 at large |t|.
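The acceptance definition above, a ratio of accepted to generated events as a function of t, can be sketched as a per-bin ratio of two histograms filled from simulated events. The function name, the bin edges and the per-event pass flags are assumptions of this illustration. In the analysis the pass decision comes from the geometrical and fiducial cuts of Sect. 5.1.

```python
from bisect import bisect_right


def acceptance_per_bin(abs_t_values, passed_flags, bin_edges):
    """Per-bin acceptance: accepted / generated events, binned in particle-level |t|.

    bin_edges must be sorted in increasing order; events outside the range are ignored.
    """
    n_bins = len(bin_edges) - 1
    generated = [0] * n_bins
    accepted = [0] * n_bins
    for abs_t, passed in zip(abs_t_values, passed_flags):
        index = bisect_right(bin_edges, abs_t) - 1  # bin containing this event
        if 0 <= index < n_bins:
            generated[index] += 1
            if passed:
                accepted[index] += 1
    # Empty bins are assigned zero acceptance rather than dividing by zero.
    return [a / g if g > 0 else 0.0 for a, g in zip(accepted, generated)]
```

Dividing the background-subtracted, unfolded spectrum by this per-bin acceptance is what corrects the differential cross section for acceptance losses.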

Fig. 12. The relative resolution \({\textrm{RMS}}((t-t_{\textrm{rec}})/t)\), where \(t_{\textrm{rec}}\) is the reconstructed value of t, for different reconstruction methods. The resolution for local subtraction is not shown as it is practically the same as for the lattice method. The dotted line shows the resolution without detector resolution, accounting only for beam effects. It is the same for all methods

The measured t-spectrum is distorted by detector resolution and beam smearing effects, including angular divergence, vertex smearing and energy smearing. These effects change the shape of the reconstructed t-spectrum, particularly at small |t| as shown in Fig. 12, which also shows how detector resolution effects depend strongly on the reconstruction method. The magnitude of the matrix elements used in the t-reconstruction relative to the detector spatial and angular resolutions determines the performance of a method. While the subtraction method receives only small contributions from the detector resolution, which is good for the space coordinate measurement, all other methods suffer from a sizeable degradation once detector resolution is included. The degradation arises from the local angle being poorly measured, given that the 8 m distance between the two stations is too small to obtain good precision.

After background subtraction, the measured t-spectrum in each arm is corrected for migration effects using an iterative, dynamically stabilized unfolding method [25]. MC simulation is used to obtain the migration matrix used in the unfolding, shown in Fig. 13. Because of the superior resolution of the subtraction method, migration is almost entirely confined to one or two bins from the diagonal, whereas a few more non-diagonal elements are populated for the local angle method. For the subtraction method the impact on the t-spectrum is very small and confined to the first few bins, whereas for the local angle method the corrections are slightly larger and flat for \(-t>10^{-3}~\text {GeV} ^{2}\). Overall, the impact of the resolution on the reconstructed t-spectrum is negligible, except for very small |t| at the detector edge, where the beam divergence is important. The very first bin at smallest |t| is dominated by divergence effects. The events that contribute to this bin have a small vertical scattering angle and would, in the absence of divergence, be outside of the acceptance defined by the vertical cut close to the detector edge. The divergence-induced angular smearing folded with the steeply falling scattering angle distribution causes many more events to migrate into that bin than out of it.

The results are cross-checked using an unfolding method based on singular value decomposition [26]. The unfolding procedure is applied to the distribution obtained using all selected events, after background subtraction in each elastic arm. A data-driven closure test is used to evaluate any bias in the unfolded data spectrum shape due to mis-modelling of the reconstruction-level spectrum shape in the simulation. The simulation is reweighted at particle level, according to a polynomial function parameterizing the data/MC difference, such that the reconstruction-level spectrum in simulation matches the data. The modified reconstruction-level simulation is unfolded using the original migration matrix, and the result is compared with the modified particle-level spectrum. The resulting bias, which is very small, is taken as a systematic uncertainty.

The unfolding procedure introduces a statistical correlation between the bins of the t-spectrum, which is incorporated in a covariance matrix included when fitting the data.
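The idea of correcting bin migration iteratively with a migration matrix can be illustrated with a basic iterative (D'Agostini-style) unfolding. This is a swapped-in, simpler technique and not the dynamically stabilized method of Ref. [25] used in the analysis: it has no regularization or stabilization and does not propagate the bin-to-bin covariance. The function name and the toy matrix in the usage note are assumptions.

```python
def iterative_unfold(measured, response, n_iterations=50):
    """Basic iterative unfolding of a binned spectrum.

    response[j][i] is the probability that an event generated in bin i
    is reconstructed in bin j. Returns the estimated generated spectrum.
    """
    n_reco = len(response)
    n_true = len(response[0])
    # Probability that an event generated in bin i is reconstructed anywhere.
    efficiency = [sum(response[j][i] for j in range(n_reco)) for i in range(n_true)]
    estimate = [sum(measured) / n_true] * n_true  # flat starting spectrum
    for _ in range(n_iterations):
        # Fold the current estimate to predict the reconstructed spectrum.
        folded = [
            sum(response[j][i] * estimate[i] for i in range(n_true))
            for j in range(n_reco)
        ]
        # Reweight each generated bin by the data/prediction ratio it feeds into.
        estimate = [
            estimate[i] / efficiency[i]
            * sum(
                response[j][i] * measured[j] / folded[j]
                for j in range(n_reco)
                if folded[j] > 0
            )
            for i in range(n_true)
        ]
    return estimate
```

For a nearly diagonal toy matrix such as `[[0.9, 0.1, 0.0], [0.1, 0.8, 0.1], [0.0, 0.1, 0.9]]` and a measured spectrum obtained by folding a known generated spectrum, the estimate converges back to the generated spectrum within a few iterations. This mirrors the statement that migration has a small impact when it is confined to bins next to the diagonal.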

5.5 Beam optics

The reconstruction of the t-value requires knowledge of the elements of the transport matrix. The transport matrix can be calculated from the design of the 2.5 km beam optics, which consists of a sequence of beam elements, including the alignment parameters of the magnets, the magnet currents and the field calibrations. This initial set of matrix elements is referred to as the ‘design optics’. Small corrections, allowed within the range of the systematic uncertainties, need to be applied to the design optics for the measurement of the physics parameters, following a procedure developed in Refs. [3, 4]. Constraints on beam optics parameters are derived from the ALFA data, exploiting the fact that the reconstructed scattering angle must be the same for different reconstruction methods using different transport matrix elements. The beam optics parameters are determined from a global fit, using these constraints, with the design optics as a starting value. The tuned parameters are the field strengths of the quadrupoles, quantified by the k-values, located between the IP and ALFA, and named Q1–Q6 (see Fig. 2), in beam 1 and beam 2. For the 2.5 km beam optics, the following k-values are tuned to fulfil the ALFA constraints: a single k-value correction for Q1 and Q3, because they are connected to a common power line, and independent k-values for Q5 and Q6. In the fit, the k-values for beam 1 and beam 2 are treated as independent parameters, resulting in a total of six fitted parameters.

Reconstructed tracks from elastic-scattering events are used to derive two classes of data-driven constraints on the beam optics:

-

Correlations between A-side and C-side measurements of positions or angles, and between positions or angles measured in the inner and outer stations of ALFA on the same side. These are used to infer the ratio of matrix elements in the beam transport matrix. The resulting constraints are independent of any optics input.

-

Correlations between the reconstructed scattering angles. These are calculated using different methods to derive further constraints on matrix elements as scaling factors. These factors indicate the amount of scaling needed for a given matrix element ratio to equalize the measurements of the scattering angle. These constraints depend on the given optics model. The design beam optics with quadrupole currents measured during the run is used as a reference to calculate the constraints.

For each constraint, the bias induced by the measurement method because of resolution or acceptance effects is estimated by evaluating the constraint in simulation and comparing the value with that from the design optics. An additive correction is then applied to the constraint values obtained from data before comparison with beam optics calculations. These corrections are generally small and at the level of a few per mille.
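The additive correction described above can be sketched as follows. The sign convention (bias defined as simulation minus design optics, then subtracted from data) is an assumption, as are the function name `corrected_constraint` and the toy values.

```python
def corrected_constraint(data_value, simulated_value, design_value):
    """Remove the method bias from a constraint measured in data.

    The bias is estimated as the constraint evaluated in simulation minus
    the value expected from the design optics (assumed sign convention).
    """
    bias = simulated_value - design_value
    return data_value - bias


# Toy values: a bias of two per mille on a constraint close to unity.
corrected = corrected_constraint(data_value=1.012,
                                 simulated_value=1.002,
                                 design_value=1.000)
```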

Fig. 14 The difference in reconstructed scattering angle \(\Delta \theta ^{\star }_x\) between the subtraction and local angle methods as a function of the scattering angle using the subtraction method for the outer detectors. In each bin of the scattering angle the points show the mean value of \(\Delta \theta ^{\star }_x\) and the error bar represents the RMS. The red line represents a fit used to determine the value of the slope.

A stringent constraint from the second class is illustrated in Fig. 14. This example compares the scattering angle in the horizontal plane reconstructed with the subtraction method, Eq. (4), which is based on the position and \(M_{12,x}\), with that from the local angle method, Eq. (5), which is based on the local angle and \(M_{22,x}\). Figure 14 shows the difference in scattering angle between the two methods as a function of the scattering angle determined with the subtraction method. The slope is extracted using a linear fit in the central region (red line). This slope is used as the scaling factor for the matrix element ratio \(M_{12,x}/M_{22,x}\). Its value of about \(1\%\) indicates that the design optics ratio \(M_{12,x}/M_{22,x}\) needs to be increased by \(1\%\) to obtain the same scattering angle, on average, from both methods using data.
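The slope extraction can be sketched with an ordinary least-squares straight-line fit. This is an illustration under stated assumptions: the helper `fit_slope`, the toy angles and the built-in 1% slope are hypothetical, and any restriction to the central region is assumed to be applied to the inputs before the fit.

```python
def fit_slope(x, y):
    """Least-squares slope and intercept of y = slope * x + intercept."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Toy scattering angles from the subtraction method (arbitrary units).
theta_subtraction = [-40.0, -20.0, 0.0, 20.0, 40.0]

# Toy differences between the two methods, built with an assumed 1% slope.
assumed_slope = 0.01
delta_theta = [assumed_slope * theta for theta in theta_subtraction]

slope, intercept = fit_slope(theta_subtraction, delta_theta)

# Scaling factor to apply to the design matrix element ratio.
ratio_scale_factor = 1.0 + slope
```

In this toy case the fit recovers the slope that was put in, and the scale factor corresponds to a 1% increase of the ratio.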

A total of 21 constraints were determined from the ALFA data. A sub-set of 17 constraints with the best precision is normally included in the \(\chi ^2\) calculation of the fit; four constraints with larger experimental uncertainties and less constraining power are not included but are kept as a control. All ALFA constraints are treated as uncorrelated in the fit, but coherent changes in the constraints observed under experimental variations of the analysis are taken into account in the systematic uncertainty. In the minimization procedure, the beam optics calculation program MadX [27] is used to extract the optics parameters and to calculate the matrix element ratios for a given set of magnet strengths. The ALFA system provides precise constraints on the matrix element ratios, but cannot probe the deviations of single magnets. There are, therefore, several sets of optics parameters which minimize the \(\chi ^2\), arising from different combinations of magnet strengths. The chosen configuration, called the effective optics, is one solution among many. Different sets of quadrupoles are included in the fit, and a variation of the choice of set represents the main contribution to the systematic uncertainty. Figure 15 shows the pull of the ALFA constraints after the minimization, which resulted in magnet strength corrections ranging in magnitude from 0.1% to 0.6%, with rather large differences between beam 1 and beam 2, most notably for Q6 with a negative correction of \(-0.62\%\) in beam 1 and a positive correction of \(0.13\%\) in beam 2. The \(\chi ^{2}\) of the fit includes the systematic uncertainties of the constraints and is of good quality with \(\chi ^{2}/N_{\text {dof}}=0.45\). The resulting change in the transport matrix elements most important for t-reconstruction is up to 1%.
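For uncorrelated constraints, the pulls and the \(\chi ^2\) are related in a simple way, sketched below. The function `pulls_and_chi2` and the toy numbers are hypothetical. Counting the degrees of freedom as the number of constraints minus the number of fitted parameters is an assumption here, since the text does not state how \(N_{\text {dof}}\) is defined.

```python
def pulls_and_chi2(measured, predicted, uncertainties, n_fit_parameters):
    """Return per-constraint pulls, their chi-square and the assumed N_dof.

    Each pull is (measured - predicted) / uncertainty. With uncorrelated
    constraints the chi-square is the sum of the squared pulls.
    """
    pulls = [(m - p) / s
             for m, p, s in zip(measured, predicted, uncertainties)]
    chi2 = sum(pull ** 2 for pull in pulls)
    n_dof = len(pulls) - n_fit_parameters  # assumed definition
    return pulls, chi2, n_dof


# Toy example: four constraints on matrix element ratios, one free parameter.
measured = [1.010, 0.995, 1.002, 0.990]
predicted = [1.008, 0.997, 1.001, 0.993]
uncertainties = [0.002, 0.002, 0.001, 0.003]

pulls, chi2, n_dof = pulls_and_chi2(measured, predicted, uncertainties, 1)
```

Under the same counting assumption, 17 constraints and six fitted k-values would leave 11 degrees of freedom.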

Systematic uncertainties for the k-values and matrix elements include the coherent change of constraints under experimental variations. A total of 13 variations, including the nominal constraints obtained from the average of the arms and the constraints from individual arms, are taken into account and the standard deviation of the resulting distribution is assigned as an uncertainty. The dominant systematic uncertainty with the largest impact on the t-reconstruction is obtained when treating only the k-values of Q5 and Q6 as free parameters in the fit, which still results in an acceptable fit quality.
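Assigning the spread of a set of variations as an uncertainty can be sketched as follows. The 13 values are hypothetical k-value corrections in percent, and the use of the population standard deviation (rather than the sample one) is an assumption, since the text does not specify which is used.

```python
import statistics


def variation_spread(values):
    """Population standard deviation of a parameter over analysis variations."""
    return statistics.pstdev(values)


# Hypothetical k-value corrections (in percent) from 13 variations of the
# constraints: the nominal average of the arms, individual arms, and others.
variations = [-0.62, -0.60, -0.65, -0.58, -0.63, -0.61, -0.66,
              -0.59, -0.64, -0.62, -0.57, -0.67, -0.60]

k_value_uncertainty = variation_spread(variations)
```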

5.6 Luminosity

The general methods for luminosity determination in ATLAS are described in Ref. [6]. However, a dedicated measurement is required because the special \(\beta ^{\star } = 2.5\) km optics result in running conditions that differ from standard data taking, as shown in Table 5. The general ATLAS strategy for providing a reliable luminosity determination and properly assessing the systematic uncertainties is to compare the measurements of different detectors and algorithms. This section describes the luminosity determination for this run and its systematic uncertainty using LUCID (LUminosity measurement with a Cherenkov Integrating Detector) [28] as the baseline alongside approaches using the Beam Conditions Monitor (BCM) [29] and the Inner Detector (ID) [9] of ATLAS. Each detector and each algorithm is calibrated in special van der Meer (vdM) scans [30], except the track-counting algorithm, which is cross-calibrated to LUCID in parts of the vdM scan run with head-on collisions. Table 5 summarizes the differences between high-\(\beta ^{\star } \) runs, high-luminosity runs and vdM scans. These differences are due to the number of colliding bunches and the average number of interactions per bunch crossing (pile-up parameter \(\mu \)), leading to an instantaneous luminosity in the high-\(\beta ^{\star } \) regime which is up to seven orders of magnitude lower than in high-luminosity running, and three orders of magnitude lower than in the vdM scans.

The algorithms listed in Table 6 were used in the final analysis. They were chosen as having the sensitivity needed for high-\(\beta ^{\star } \) runs and at the same time exhibiting the most favourable background conditions. Both LUCID and the BCM have detectors on both the A-side and C-side of the ATLAS IP. The A-side detectors were not used in OR-mode due to high background on the A-side. The track-counting algorithm using ID data is independent of LUCID and the BCM. The track-counting algorithm obtains the per-bunch visible interaction value \(\mu _{\text {vis}}\) from the mean number of reconstructed tracks per bunch crossing averaged over a luminosity block, which corresponds to a period of about one minute with approximately constant luminosity. The track measurements used in this analysis are based on the TightModLumi working point [6]; they are available for only two of the ALFA runs (308979 and 309165).

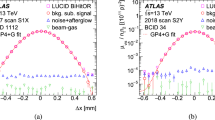

LUCID_EventORC_BI, being the most stable algorithm with favourable background conditions, was chosen as the reference algorithm and the other algorithms are used to evaluate the systematic uncertainties. The percentage differences with respect to the reference algorithm are shown in Fig. 16 for all runs as a function of the run number. This is a key plot in the context of evaluating the systematic uncertainties. The main sources of systematic uncertainty are the vdM calibration uncertainty, calibration transfer uncertainty, long-term stability uncertainty and background uncertainty.

vdM calibration uncertainty: This source of uncertainty is the same as for the standard luminosity analysis, and for 2016 it was evaluated to be 1.1% [6]. The central value of the calibration was updated for this analysis, applying the techniques described in Ref. [6].

Calibration transfer uncertainty: This arises because the vdM scans are performed at a luminosity \(10^3\) times higher than the luminosity during the ALFA data taking (see Table 5). Any non-linearity between the vdM-scan and ALFA-run luminosity regimes would appear as a systematic deviation between algorithms in the stability plot shown in Fig. 16. It is therefore included in the deviations of the various independent algorithms from the reference one.

Long-term stability uncertainty: The period with high-\(\beta ^{\star } \) runs was very short, lasting only a few days. Nevertheless, these runs were acquired four months after the vdM-scan session and about one month before a vdM-like scan, the so-called MD1814 scan. The stability of the detectors and algorithms from the time of the vdM-scan session was evaluated through the comparison between these two scans, and it is reflected in the stability plot of Fig. 16. Thus, no additional uncertainty is needed to account for the long-term stability.

Background uncertainty: The single-beam background to the luminosity signal, as estimated from unpaired bunches, appears negligible for all LUCID and BCM algorithms used in this analysis. There is no sign of collision background for the chosen algorithms. A more quantitative constraint on collision background can be inferred from the internal consistency, over the entire ALFA running period, of measurements reported by five independent luminosity algorithms with very different intrinsic background sensitivity. Therefore, the internal consistency of the measurements displayed in Fig. 16 implicitly sets an upper limit on the possible impact of collision backgrounds on the reported integrated luminosity.

The total systematic uncertainty of the luminosity measurement can be obtained from the absolute calibration uncertainty of the vdM scan and from the stability and consistency among the various algorithms. Figure 16 shows the deviation of the luminosity from the value given by the reference algorithm run by run. However, the run-integrated luminosity is most relevant for the determination of \(\sigma _{\text {tot}} \). The run-integrated luminosity measured by the various algorithms is compared with the reference LUCID_EventORC_BI value. This provides the uncertainty related to all listed effects: calibration transfer, long-term stability and background. The biggest deviation in the run-integrated luminosity is found to be \(-1.8\%\) for the BCM_T_EventAND algorithm. Unfortunately, the TightModLumi track-counting algorithm was only available in two runs. The TightModLumi run-integrated luminosity in these two runs deviates from the value given by the reference LUCID algorithm by \(1.85\%\). This number is therefore taken as the stability and consistency uncertainty.
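The comparison of run-integrated luminosities can be sketched as below. The run labels and per-run values are hypothetical and were chosen so that the toy deviation comes out at 1.85%. Restricting the comparison to the runs covered by both algorithms is an assumption about how an algorithm available in only some runs is treated.

```python
def integrated_deviation_percent(reference, other):
    """Percent deviation of `other` from `reference`, integrated over runs.

    Both arguments map a run label to the luminosity measured in that run.
    Only runs present in both dictionaries enter the sums (assumption).
    """
    common_runs = sorted(set(reference) & set(other))
    ref_total = sum(reference[run] for run in common_runs)
    other_total = sum(other[run] for run in common_runs)
    return 100.0 * (other_total - ref_total) / ref_total


# Hypothetical per-run luminosities in inverse microbarns.
reference_algorithm = {"run_a": 50.0, "run_b": 50.0, "run_c": 100.0}
track_counting = {"run_a": 51.0, "run_b": 50.85}  # available in two runs only

deviation = integrated_deviation_percent(reference_algorithm, track_counting)
```

Integrating before taking the ratio weights each run by its luminosity, which is the relevant quantity when the result is used to normalize a cross section.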

Adding in quadrature the uncertainty in the absolute calibration (1.1%) and that associated with the stability and consistency of the available independent luminosity measurements (1.85%), a total systematic uncertainty of 2.15% is obtained for the integrated luminosity delivered during the seven high-\(\beta ^{\star } \) runs.
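The quadrature sum quoted above can be checked numerically. In this short sketch the helper name `add_in_quadrature` is an assumption, and the absolute uncertainty uses the rounded integrated luminosity of \(340~\upmu {\text {b}}^{-1}\) quoted in the abstract.

```python
import math


def add_in_quadrature(*uncertainties):
    """Combine independent uncertainties as the root of the sum of squares."""
    return math.sqrt(sum(u ** 2 for u in uncertainties))


# Relative uncertainties in percent, as quoted in the text.
vdm_calibration = 1.1
stability_and_consistency = 1.85

total_percent = add_in_quadrature(vdm_calibration, stability_and_consistency)

# Absolute uncertainty for the rounded luminosity from the abstract (in ub^-1).
absolute_uncertainty = 340.0 * total_percent / 100.0
```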

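The final value of the integrated luminosity is therefore \(L_{\textrm{int}} \approx 340~\upmu {\text {b}}^{-1}\), with a total systematic uncertainty of 2.15%.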

6 Results

6.1 The differential elastic cross section

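Several corrections are applied to calculate the differential elastic cross section. The corrections are made individually per run and per arm before combining all runs and the two arms in the final differential elastic cross section. In a given bin \(t_i\), the cross section is calculated according to the following formula:

\[ \frac{{\textrm{d}}\sigma }{{\textrm{d}}t_i} = \frac{1}{\Delta t_i} \times \frac{{\mathcal {M}}^{-1}[N_i - B_i]}{A_i \times \epsilon _{{\textrm{rec}}} \times \epsilon _{\textrm{trig}} \times \epsilon _{\textrm{DAQ}} \times L_{\textrm{int}}} \]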

where \(\Delta t_i\) is the bin width, \({\mathcal {M}}^{-1}\) represents the unfolding procedure applied to the background-subtracted number of events \(N_i - B_i\), \(A_i\) is the acceptance, \(\epsilon _{{\textrm{rec}}}\) is the event reconstruction efficiency, \(\epsilon _{\textrm{trig}}\) is the trigger efficiency, \(\epsilon _{\textrm{DAQ}}\) is the dead-time correction and \(L_{\textrm{int}}\) is the integrated luminosity used for this analysis. The binning in t is chosen to be appropriate for the experimental resolution and statistical uncertainty. At small |t| the selected bin width is two times the resolution. At larger |t| the bin width is increased to compensate for the lower number of events from the exponentially falling distribution. The resulting differential elastic cross section obtained using the subtraction method is shown in Fig. 19 and numerical values with uncertainties are summarized in Tables 12 and 13 in the Appendix.
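Using the quantities defined above, the per-bin calculation can be sketched as follows. The sketch assumes the usual form in which the unfolded, background-subtracted yield is divided by the bin width, the acceptance, the three efficiencies and the integrated luminosity. The function name and toy numbers are hypothetical, and the unfolding step is represented only by its output.

```python
def differential_cross_section(unfolded_yield, bin_width, acceptance,
                               eff_rec, eff_trig, eff_daq, luminosity):
    """Differential cross section in one t-bin.

    unfolded_yield : unfolded, background-subtracted number of events
    bin_width      : width of the t-bin (GeV^2)
    acceptance     : geometrical acceptance in this bin
    eff_rec        : event reconstruction efficiency
    eff_trig       : trigger efficiency
    eff_daq        : dead-time correction
    luminosity     : integrated luminosity (result is in inverse units of it)
    """
    denominator = acceptance * eff_rec * eff_trig * eff_daq * luminosity
    return unfolded_yield / (bin_width * denominator)


# Toy bin: with the luminosity in inverse microbarns the result is in ub/GeV^2.
toy_value = differential_cross_section(unfolded_yield=1000.0,
                                       bin_width=0.001,
                                       acceptance=0.5,
                                       eff_rec=0.9,
                                       eff_trig=1.0,
                                       eff_daq=1.0,
                                       luminosity=340.0)
```

Because every correction enters multiplicatively in the denominator, a relative uncertainty in any one of them translates directly into the same relative uncertainty in the cross section for that bin.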

6.1.1 Experimental systematic uncertainties

Several variations of the analysis are performed under different experimental conditions in order to assess the systematic uncertainties. For certain sources, several variations potentially probing the same effect are considered. From these variations, the largest deviation from the nominal cross section is retained as the systematic uncertainty. A total of 20 sources of uncertainty are propagated to the differential elastic cross section as follows:

Relative systematic shifts of \({\textrm{d}}\sigma /{\textrm{d}}t\) as a function of t resulting from uncertainties in the (a) alignment and effective optics and (b) beam properties. For cases where two-sided uncertainties are available the larger one was retained and assigned as a symmetric uncertainty.

-

For the alignment, separate uncertainties are calculated for different components of the alignment procedure. The dominant uncertainty is related to the global correction to the distance parameter determined by the procedure described in Sect. 3.3, and the correction is varied by its total uncertainty. For the vertical beam offset, the uncertainties are assumed to be fully correlated between inner and outer stations but anti-correlated between the left and right sides, and thus systematic shifts preserve the internal consistency of the data. Uncertainties related to the horizontal offset and to the rotation are propagated directly to the differential cross section, where both the full-correlation and anti-correlation assumptions were tested. The resulting uncertainties are rather small and similar for the two correlation assumptions, and the larger one is retained as the nominal uncertainty.

-