Abstract

Large-scale detectors consisting of a liquid scintillator target surrounded by an array of photo-multiplier tubes (PMTs) are widely used in the modern neutrino experiments: Borexino, KamLAND, Daya Bay, Double Chooz, RENO, and the upcoming JUNO with its satellite detector TAO. Such apparatuses are able to measure neutrino energy which can be derived from the amount of light and its spatial and temporal distribution over PMT channels. However, achieving a fine energy resolution in large-scale detectors is challenging. In this work, we present machine learning methods for energy reconstruction in the JUNO detector, the most advanced of its type. We focus on positron events in the energy range of 0–10 MeV which corresponds to the main signal in JUNO – neutrinos originated from nuclear reactor cores and detected via the inverse beta decay channel. We consider the following models: Boosted Decision Trees and Fully Connected Deep Neural Network, trained on aggregated features, calculated using the information collected by PMTs. We describe the details of our feature engineering procedure and show that machine learning models can provide the energy resolution \(\sigma = 3\%\) at 1 MeV using subsets of engineered features. The dataset for model training and testing is generated by the Monte Carlo method with the official JUNO software.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

One of neutrino detection methods consists in observation of optical photons emitted in the target volume by all the particles produced as the result of the neutrino interaction. Although charged particles can produce Cherenkov light even in water, a certain class of detectors, e.g. Borexino [1], KamLAND [2], Daya Bay [3], Double Chooz [4], and RENO [5], used liquid scintillators to increase the light yield. Modern compositions of liquid scintillators provide \(O(10^4)\) photons per MeV of deposited energy, a significant part of which can be observed with photomultiplier tubes (PMTs) placed around the target. Since the amount of emitted photons is defined by the deposited energy, it can be easily estimated. Using extra information from the temporal distribution and spatial pattern of fired PMTs allows improving the energy measurement accuracy. However, future detectors with a large number of channels (PMTs) will make the task challenging. We consider novel approaches based on machine learning for the detector of the Jiangmen Underground Neutrino Observatory (JUNO) [6, 7], the largest of its type, currently under construction. These approaches can also be applied to other similar detectors.

JUNO is a multipurpose neutrino observatory located in South China. The primary aims of the JUNO experiment are to determine the neutrino mass ordering and to precisely measure neutrino oscillation parameters \(\sin ^2{\theta _{12}}\), \(\Delta m_{21}^2\), \(\Delta m^{2}_{31}\). The main source of neutrinos in JUNO will be the Yangjiang and Taishan nuclear power plants located about 52.5 km away from the detector. Concurrently, JUNO will be able to explore neutrinos from supernovae, atmospheric and solar neutrinos, geoneutrinos, as well as some rare processes, like the proton decay.

The key requirement for JUNO is to provide the energy resolution \(\sigma _{E}/E = 3\% / \sqrt{E(\text {MeV})}\). The detector construction illustrated in Fig. 1 is optimized to meet this requirement. The JUNO detector consists of the Central Detector (CD), a water Cherenkov detector, and the Top Tracker. The CD is an acrylic sphere 35.4 m in diameter filled with 20 kt of liquid scintillator. The CD is held by a stainless steel construction immersed in a water pool. It is equipped with a large number of PMTs of two types: 17612 large 20-inch tubes and 25600 small 3-inch tubes. The former provide 75.2% the sphere coverage, and the latter add extra 2.7% of coverage [7]. The water pool is also equipped with 2400 20-inch PMTs to detect Cherenkov light from muons. The Top Tracker is disposed at the top of the detector and used to detect muon tracks. The Calibration House integrates calibration systems.

JUNO will detect electron antineutrinos via the inverse beta decay (IBD) channel: \({\overline{\nu }}_{e} + p \rightarrow e^{+} + n\). The positron deposits its energy and annihilates into two 0.511 MeV gammas forming a so-called prompt signal. The energy is deposited shortly after the interaction and is the sum of the positron kinetic energy and the annihilation energy of two 0.511 MeV gammas: \(E_{\text {dep}} = E_{\text {kin}} + 1.022\) MeV. The neutron is captured in liquid scintillator by hydrogen or carbon nuclei after approximately 200 µs producing 2.22 MeV (99% cases) or 4.95 MeV (1% cases) deexcitation gammas for hydrogen and carbon, respectively. This is called a delayed signal. The time coincidence of prompt and delay signals makes it possible to separate IBD signals from backgrounds.Footnote 1 The information collected by PMTs will be used for energy and vertex reconstruction of neutrino interactions.

Machine Learning (ML) has experienced an extraordinary rise in recent years. High-energy physics (HEP), and neutrino physics in particular, have also proven to be remarkable domains for ML applications, especially supervised learning, due to the availability of a large amount of labeled data produced by simulation. There are many examples of using ML approaches in HEP: in neutrino experiments, in collider experiments, etc. [9,10,11]. We also recommend the Living Review of ML Techniques for HEP which tries to include all relevant papers [12].

The remaining part of the paper is organized as follows. Section 2 states the problem. In Sect. 3, we introduce the data used for this analysis. In Sect. 4, we present feature engineering and ML approaches used for this study and their application to solve the problem. The performance of the presented ML models is discussed in Sect. 5. We summarize the study in Sect. 6.

2 Problem statement

In this work, we continue studying ML techniques for energy reconstruction in the energy range of 0–10 MeV, covering the region of interest for IBD events from reactor electron antineutrinos (previous work published in [13, 14]). In general, the deposited energy of an event can be reconstructed from individual PMT signals (charge and time) or using aggregated information from the whole array of PMTs – “aggregated features”. In [13], we presented different approaches based on the information obtained PMT-wise as well as on several basic aggregated features. In the subsequent paper [14], we, using an optimal subset from a large set of newly engineered aggregated features, demonstrated that this approach can achieve the same performance as the one, based on the PMT-wise gained information. On the other hand, vertex reconstruction requires granular information both with traditional and ML algorithms, see [15, 16]. The actual research aims to further investigate the potential of the aggregated feature approach and to study two models: Boosted Decision Trees and Fully Connected Deep Neural Network for energy reconstruction in JUNO.

To evaluate the performance of the models, we use two metrics: resolution and bias. They are defined by a Gaussian fit of the distribution of difference between predicted and true energy deposition \((E_{\textrm{pred}} - E_{\textrm{dep}})\) (see Sect. 5). The resolution is defined as \(\sigma /E_{\textrm{dep}}\) and the bias as \(\mu /E_{\textrm{dep}}\), where \(\sigma \) and \(\mu \) are the standard deviation and the mean of the Gaussian fit, respectively.

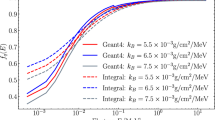

To resolve the primary goal of the JUNO experiment, the determination of the neutrino mass ordering at the level of 3 standard deviations, the energy resolution must be better that or equal to \(\sigma = 3\%\) at 1 MeV, and the uncertainty of energy nonlinearity should be less than 1% [6]. Following [6], we use a simple model to describe the energy resolution \(\sigma /E_{\textrm{dep}}\) as a function of energy:

with parameters a, b, and c interpreted as follows: a is mainly driven by statistics of the true accumulated charge on PMTs, b is related to spatial nonuniformity, and c is associated with the charge from dark noise (introduced in Sect. 3). We extract values of a, b and c by fitting the energy resolution curve obtained with our energy reconstruction models (see Sect. 5).

It is convenient to have only one parameter to estimate the entire resolution curve. The JUNO requirements for the appropriate determination of the neutrino mass ordering could be translated into the following requirement on the effective resolution \({\tilde{a}}\) as [17]:

reflecting that the effect of the b term is 1.6 larger and the effect of the c term is 1.6 smaller compared to the effect of the a term.

3 Data description

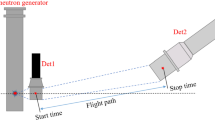

The analyzed data are generated by the full detector Monte Carlo method using the official JUNO software [18,19,20]. The detector simulation software is based on the Geant4 framework [21, 22], with the geometry defined according to the latest design [23], and is implemented as a standalone application.

The simulation begins with the injection of a positron with the kinetic energy in the range from 0 to 10 MeV. As the neutron, produced in a neutrino interaction, is going to be used only for the offline event selection, it was not simulated for this study. The positron interacts with liquid scintillator losing its energy. After it stops (or in-flight), the positron annihilates with an electron in the medium, producing a pair of 511 keV gammas. These two gammas are usually stopped by the target, producing secondary Compton electrons. Electromagnetic energy losses of charged particles (the primary positron and secondary electrons in our case) are accompanied by optical light emission. In general, about 90% of light is produced from the scintillation of the detector target medium, and the other \({\sim }\) 10% is produced from Cherenkov radiation.

Example of a positron event with the deposited energy of 6.165 MeV as seen by 20-inch PMTs. Only fired PMTs are shown. On the left, the color represents the accumulated charge: yellow points show the channels with more hits, red points show the channels with fewer hits. On the right side, the color indicates PMT activation time: the darker blue color shows earlier first hit arrivals. The primary vertex is shown by the gray sphere

The produced photons are transported through the materials until they are absorbed, leave the detector, or hit PMTs. We do not take into account any liquid flows or inhomogeneities: despite it may make an impact on the detector performance, it is hard to foresee a realistic scenario at the stage of the experiment preparation. We leave it for future studies when the detector will be constructed and we will have information on the liquid flows, temperature variations, etc. Roughly \(\sim \)30% of photons hitting the PMT photocathode lead to the release of photoelectrons which initiate an electric pulse. On average, all PMTs collect about 1500 photoelectrons per 1 MeV of the deposited energy at the center of the detector. PMTs also produce pulses spontaneously, the so-called dark current which constitutes the noise. JUNO will be equipped with two types of 20-inch PMTs: 25% produced by Hamamatsu and 75% by NNVT. The measured dark current rate for the former is 19.3 kHz; the latter has the dark rate of 49.3 kHz [24]. Different processes during photo-electron collection and further current amplification, as well as intrinsic effects of electronics, are also simulated. The introduced spread of hit times is 1.3 ns and 7.0 ns for Hamamatsu and NNVT, respectively [24]. Then the charge and time information is extracted from PMT pulse shapes and serves as an input for reconstruction algorithms. Then, using interaction kinematics, the antineutrino energy can be calculated assuming the following relation: \(E_{\tilde{\nu }_{e}} \approx E_{\textrm{dep}} + 0.8\) MeV.

However, if an event happens near the edge of the detector, less than a meter away, one or both photons can escape the CD without contributing to the light yield. The positron energy will be underestimated in such cases. There is also a strong radioactive background at the detector edge which also compromises the energy reconstruction in that area. To avoid these effects, we apply the \(R<\) 17.2 m volume cut, i.e. discard those events in the outermost 0.5 m layer of the detector.

Figure 2 illustrates one example event for the positron with a 6.165 MeV deposited energy, as seen by the 20-inch PMTs. The figure shows accumulated charges in PMT channels (left) and activation time, i.e. the first hit arrival times (right).

In this study, event energy reconstruction is based only on the information collected by the larger 20-inch PMTs. We do not include the smaller ones (3-inch) so far because their contribution to the light collection is negligible.

To train models and to evaluate their performance, we prepared two datasets:

-

1.

Training dataset consisting of 5 million events uniformly spread in the scintillator volume with isotropic angular distribution and with a flat kinetic energy spectrum ranging from 0 to 10 MeV, i.e. \(E_{\text {kin}} \in [0, 10]\) MeV.

-

2.

Testing dataset consisting of 14 subsets with discrete kinetic energies of 0 MeV, 0.1 MeV, 0.3 MeV, 0.6 MeV, 1 MeV, 2 MeV, ..., 10 MeV and with the same spatial and angular distribution as the training dataset. Each subset contains about 100 thousand events.

4 Machine learning approach

The event energy defines the total charge collected by all PMTs because the number of emitted photons is roughly proportional to the deposited energy. However, the total collected charge also depends on the event position. This nonuniformity is illustrated in Fig. 3 which shows the accumulated charge per 1 MeV of the deposited energy as a function of the radius cubed \(R^3\). The sharp decrease of the accumulated charge in the region of R \(\gtrsim \) 16 m (or \(R^3\) \(\gtrsim \) 4000 m\(^3\)) is caused by the effect of the total internal reflection due to which the photons with a large incident angle never escape the target.

In the case of JUNO, spherical symmetry is not exactly held because the supporting structures at the bottom hemisphere hinder the installation of some PMTs, which leads to additional dependence on the z-coordinate.

The pattern of signal distribution over PMTs carries the information on the event position and may be used for a precise reconstruction of energy taking into account nonuniformity. Two numbers from each of the 17612 20-inch PMTs of the JUNO Central Detector can be extracted: the total charge in the event window and the first hit time (FHT), resulting in 35224 channels (some of which may be empty).

The values of FHT are the result of the waveform reconstruction and the trigger algorithms embedded in the JUNO simulation software. The procedure is the same as it will be used for the real data and does not rely on the true simulation information in any sense. After an event starts, the main part of the charge is collected by PMTs in about 200 ns (depending on the distance from the center of the detector). In the remaining time of the signal window, there is a noticeable contribution from scattered and reflected photons as well as from dark noise.

In this study, we aggregate the information obtained channel-wise in a small set of features in order to reduce the number of channels. This set of features is used as an input to machine learning models to predict the deposited energy \(E_{\text {dep}}\).

In this section, we describe how we construct features for machine learning algorithms and consider two models: Boosted Decision Trees and Fully Connected Deep Neural Network. The models are trained and tested on exactly the same datasets, which enables a fair comparison given in Sect. 5.

4.1 Feature engineering

The following basic aggregated features are extracted for energy reconstruction:

-

1.

AccumCharge – total charge accumulated on all fired PMTs. In the first order, it is proportional to \(E_{\textrm{dep}}\) and, therefore, is expected to be a powerful feature.

-

2.

nPMTs – total number of fired PMTs. It is similar to AccumCharge but may bring some complementary information.

-

3.

Features aggregated from the charge and position of individual PMTs:

-

3.1

Coordinate components of the center of charge:

$$\begin{aligned} \begin{aligned} (x_{\textrm{cc}},\ y_{\textrm{cc}},\ z_{\textrm{cc}}) = \varvec{r}_{\textrm{cc}} = \frac{\sum _{i=1}^{N_{\textrm{PMTs}}} \varvec{r}_{\textrm{PMT}_i}\cdot n_{\mathrm{p.e.}, i}}{\sum _{i=1}^{N_{\textrm{PMTs}}}n_{\mathrm{p.e.}, i}}, \end{aligned} \end{aligned}$$(3)and its radial component:

$$\begin{aligned} R_{\textrm{cc}} = |\varvec{r}_{\textrm{cc}}| \end{aligned}$$(4)Coordinate components of the center of charge provide a rough approximation of the location of the energy deposition and help to correct the nonuniformity of the detector response. It is useful, for some ML models, to engineer new synthesized features from the existing ones [25, 26]. It is sometimes hard, in particular for Boosted Decision Trees, to learn nonlinear dependencies, and so we construct the following extra features:

$$\begin{aligned} \begin{aligned} \theta _{\textrm{cc}}&= \arctan {\frac{\sqrt{x_{\textrm{cc}}^2 + y_{\textrm{cc}}^2}}{z_{\textrm{cc}}}},\ \phi _{\textrm{cc}} = \arctan {\frac{y_{\textrm{cc}}}{x_{\textrm{cc}}}},\\ J_{\textrm{cc}}&= R_{\textrm{cc}}^2 \cdot \sin {\theta _{\textrm{cc}}},\ \rho _{\textrm{cc}} = \sqrt{x_{\textrm{cc}}^2 + y_{\textrm{cc}}^2},\\ \gamma _{z}^{\textrm{cc}}&= \frac{z_{\textrm{cc}}}{\sqrt{x_{\textrm{cc}}^2 + y_{\textrm{cc}}^2}},\ \gamma _{y}^{\textrm{cc}} = \frac{y_{\textrm{cc}}}{\sqrt{x_{\textrm{cc}}^2 + z_{\textrm{cc}}^2}},\\ \gamma _{x}^{\textrm{cc}}&= \frac{x_{\textrm{cc}}}{\sqrt{z_{\textrm{cc}}^2 + y_{\textrm{cc}}^2}}. \end{aligned} \end{aligned}$$(5) -

3.2

Shape of charge distribution over PMT channels. Figure 4 shows cumulative distribution functions (CDFs) and probability density functions (PDFs) for charge distribution on fired PMTs (nPE). The PDFs of nPE consist of two prominent peaks corresponding to one or two hits on a single PMT. The higher the energy, the more the second peak is populated. Moreover, for the events occurring near the edge there are PMTs accumulating a large amount of charge and forming a long tail of the distribution. Therefore, the shape of this distribution delivers information both on the intensity of light emission and its position. To characterize the CDF for charge, we use the following sets of percentiles as features:

$$\begin{aligned} \{ {\textrm{pe}}_{2\%}, {\textrm{pe}}_{5\%}, {\textrm{pe}}_{10\%}, {\textrm{pe}}_{15\%}, \ldots , {\textrm{pe}}_{90\%}, {\textrm{pe}}_{95\%} \} \end{aligned}$$as well as mean, standard deviation, skewness, and kurtosis:

$$\begin{aligned} \{ {\textrm{pe}}_{mean}, {\textrm{pe}}_{std}, {\textrm{pe}}_{skew}, {\textrm{pe}}_{kurtosis}\} \end{aligned}$$

-

3.1

-

4.

Features aggregated from first hit times and position of individual PMTs:

-

4.1

Coordinate components of the FHT center:

$$\begin{aligned} (x_{\textrm{cht}},\ y_{\textrm{cht}},\ z_{\textrm{cht}})= & {} \varvec{r}_{\textrm{cht}}\nonumber \\= & {} \frac{1}{\sum _{i=1}^{N_{\textrm{PMTs}}} \frac{1}{t_{\textrm{ht},i} + c}} \sum _{i=1}^{N_{\textrm{PMTs}}} \frac{\varvec{r}_{\textrm{PMT}_i}}{t_{\textrm{ht},i} + c},\nonumber \\ \end{aligned}$$(6)and its radial component:

$$\begin{aligned} R_{\textrm{cht}} = |\varvec{r}_{\textrm{cht}}| \end{aligned}$$(7)Here the constant c is required to avoid division by zero. The value of 50 ns was selected to make the center of FHT closer to the energy deposition vertex. These features give extra information on the location of the energy deposition. Likewise the center of charge, the center of FHT provides a rough estimation of the energy deposition center but with the use of time information. Figure 6 shows that \(R_{\textrm{cht}}\) and \(R_{\textrm{cc}}\) bring complementary information, especially at larger radii. We also synthesize new features from the components of the center of FHT as it was done for the center of charge (5):

$$\begin{aligned} \theta _{\textrm{cht}}&= \arctan {\frac{\sqrt{x_{\textrm{cht}}^2 + y_{\textrm{cht}}^2}}{z_{\textrm{cht}}}},\ \phi _{\textrm{cht}} = \arctan {\frac{y_{\textrm{cht}}}{x_{\textrm{cht}}}},\nonumber \\ J_{\textrm{cht}}&= R_{\textrm{cht}}^2 \cdot \sin {\theta _{\textrm{cht}}},\ \rho _{\textrm{cht}} = \sqrt{x_{\textrm{cht}}^2 + y_{\textrm{cht}}^2},\nonumber \\ \gamma _{z}^{\textrm{cht}}&= \frac{z_{\textrm{cht}}}{\sqrt{x_{\textrm{cht}}^2 + y_{\textrm{cht}}^2}},\ \gamma _{y}^{\textrm{cht}} = \frac{y_{\textrm{cht}}}{\sqrt{x_{\textrm{cht}}^2 + z_{\textrm{cht}}^2}},\nonumber \\ \gamma _{x}^{\textrm{cht}}&= \frac{x_{\textrm{cht}}}{\sqrt{z_{\textrm{cht}}^2 + y_{\textrm{cht}}^2}}. \end{aligned}$$(8) -

4.2

Shape of FHT distribution over PMT channels. The corresponding PDFs and CDFs are shown in Fig. 5. There is a clear dependence of the shape on both the energy and radial position. The depression of FHT PDFs at 16.9 m (yellow in the left panel of Fig. 5) is due to the total internal reflection of photons that have large incidence angles at the spherical surface of the detector. Near the edge, the events appear earlier in the readout window because of the lower time-of-flight correction. The FHT PDFs at the detector center (right panel of Fig. 5) have similar shapes only differing by the relative contribution from dark noise hits and from annihilation gammas. This contribution decreases with increasing energy. To characterize the CDF for FHT, we add the following percentile features as we did for charge:

$$\begin{aligned} \{ {\textrm{ht}}_{2\%}, {\textrm{ht}}_{5\%}, {\textrm{ht}}_{10\%}, {\textrm{ht}}_{15\%}, \ldots , {\textrm{ht}}_{90\%}, {\textrm{ht}}_{95\%} \} \end{aligned}$$as well as mean, standard deviation, skewness and kurtosis:

$$\begin{aligned} \{ \textrm{ht}_{\textrm{mean}}, \textrm{ht}_{\textrm{std}}, \textrm{ht}_{\textrm{skew}}, \textrm{ht}_{\textrm{kurtosis}}\} \end{aligned}$$Figure 7 shows the average PDF for FHT distribution for 161 events with \(E_{\textrm{dep}} = 2.022\) MeV and \(R = 16\) m. There is a noticeable variance in the first bins. Taking this into account, we also use the following differences between percentiles, since they are more robust to this variance:

$$\begin{aligned} \{ {\textrm{ht}}_{5\% - 2\%}, {\textrm{ht}}_{10\% - 5\%}, \ldots , {\textrm{ht}}_{95\% - 90\%} \} \end{aligned}$$

-

4.1

4.1.1 Feature summary

In total, we engineered 91 features. The full set of features is listed in Table 1.

4.2 Boosted decision trees

In terms of machine learning, the problem of energy reconstruction is a supervised regression problem. In general, algorithms for supervised problems learn the mapping of input features to a target output using a data sample with input-output pairs. Boosted Decision Trees (BDT) is one of such algorithms [27, 28].

BDT is a gradient boosting-based algorithm with Decision Tree (DT) [29] as a base model. DT is a simple, fast, and interpretable model. DT consists of a binary set of splitting rules based on values of different features of the object. A single DT is not a powerful algorithm. Therefore, an ensemble of DTs is commonly used in which DTs are trained sequentially: each subsequent DT is trained to correct errors of previous DTs in the ensemble.

In general, boosting is an algorithm of sequential combining of some basic models to create a stronger one. There are many different realisations of boosting algorithms. For example, AdaBoost [30], widely used in the past, is based on the weighting procedure: a weight is assigned to each event at each iteration. Events with the worse prediction get a larger weight, and the ones with the better prediction get a smaller weight. In contrast, gradient boosting defines a differentiable loss function and calculates the gradient between the true targets (\(E_{\textrm{dep}}\)) and the ones predicted by the previous ensemble. Then, at each iteration, the parameters of the next basic model are updated to fit the residual gradient from the previous ensemble.

Tree-based models, including BDT, are an efficient way to work with tabular data [31]. XGBRegressor from the XGBoost library [32] is adopted in this paper. XGBoost is a robust and widely-accepted framework for training and deployment of BDT.

4.2.1 Feature selection

Many of the 91 features described in Sect. 4.1 are highly correlated, and we want to keep only a subset of features which provide the same performance of the model as the full set. The feature selection procedure for the BDT model is described as follows:

-

1.

We train the BDT model on a dataset with 1 million events using a 5-fold cross-validation from the Scikit-learn library [33]. Figure 8 illustrates the cross-validation with the early stopping condition on an additional hold-out dataset. We evaluate the model performance with a mean absolute percentage error (MAPE), which is better suited for reconstruction at low energies. MAPE is a generalized metric that is calculated for true deposited energies and reconstructed deposited energies for, in case of training, validation dataset:

$$\begin{aligned} {\text {MAPE}} = \frac{100\%}{N} \sum _{i=1}^{N} \left| \frac{y_i - {\hat{y}}_i}{y_i} \right| , \end{aligned}$$(9)where N – number of events, \(y_i\) – true deposited energy, \({\hat{y}}_i\) – reconstructed deposited energy. As a result of the cross-validation procedure, we obtain the mean MAPE on the validation datasets and its standard deviation \(\varepsilon \).

-

2.

Then, we initialize an empty list and start filling it with features. At each step, we pick a feature from the full set and train the BDT model using the 5-fold cross-validation procedure with an early stopping.Then, we calculate the mean MAPE for each step and add to the list the feature that provides the stepwise best performance. We stop when the MAPE score differs from the mean MAPE score for the model trained on all features by less than the standard deviation \(\varepsilon \).

Figure 9 shows the results of the above-described feature selection procedure. The procedure results in the following set of features:

As expected, the most powerful feature is the total accumulated charge because the amount of emitted light is, to the first order, proportional to the deposited energy. However, the amount of detected photons is position-dependent, which can be corrected using the information provided by the coordinates of the center of charge and the center of FHT. Due to the axial symmetry of the detector, x and y components provide useful information only as a combination \(x^2+y^2\), so they do not appear in the list at all. Extra information is extracted from the width of the p.e. number distribution which is peaked around 1 for central events and is populated with large values for peripheral events. The shape of the time profile also helps, but its contribution is small because of a large event-to-event variation.

Figure 10 shows the dependence of effective resolution \({\tilde{a}}\) and standard deviation (RMSE) obtained on the validation dataset for reconstructed energy and true energy. Both quantities are obtained with sequentially selected features as an input of the BDT model. RMSE is defined as follows:

The dashed line illustrates \({\tilde{a}}\) for BDT trained on the full set of features, and the dark red area is its error. The decrease of RMSE is consistent and converged with the improvement of the effective energy resolution \({\tilde{a}}\). All models were trained with a maximal tree depth equal to 9 and a learning rate of 0.08, and with an early stopping condition with a patience of 5.

4.2.2 Hyperparameter optimization

We use the grid search approach for hyperparameter optimization for the BDT model. The space of hyperparameters is determined, and the metric is calculated for each possible combination of hyperparameters from this space. The node with the best metric is selected as the optimum.

For the grid search, we use the BDT model with a learning rate equal to 0.08 trained with a 5-fold cross-validation on the dataset with 1 million events. We optimize the maximal tree depth in the ensemble. The training of the model stops if the MAPE score on the hold-out validation dataset has not decreased during 5 successive iterations.

Figure 11 shows the results of hyperparameter optimization. The best maximum depth of the tree was found to be 10, and the corresponding number of trees is 583.

4.3 Fully connected deep neural network

Training a neural network means fitting, based on data, the best parameters for mapping input features to output value(s). A fully connected neural network consists of layers with sets of units called neurons. Each neuron in a layer is connected with each neuron in the next layer. If it has many layers, it is called a Fully Connected Deep Neural Network (FCDNN).

Each neuron computes a linear combination of its inputs with its own learnable weights. To enable a network to reproduce nonlinearities, it is required to pass a neuron output through some nonlinear function, the so-called activation function. There are several popular choices: sigmoid, hyperbolic tangent, Rectified Linear Unit (ReLU) and its modifications (ELU, Leaky ReLU, SELU, etc.), and others [34, 35].

Optimization of hyperparameters for FCDNN is performed using the BayesianOptimization tuner from the KerasTuner library for Python [36]. To train the model, we use TensorFlow [37]. The MAPE loss for reconstructed energy and true energy is used as a loss function. All input features were normalized with a standard score normalization. The training process is performed with an early stopping condition on the validation dataset with a patience of 25 and with the batch size 1024. Table 2 shows the search space and the selected hyperparameters.

We first select several subsets of features that are reasonable from our previous experience. Then, we pick the following subset of features that provides virtually the same quality of the FCDNN model as the full set of features:

Taking into account the axial symmetry, it is enough to have a pair of coordinates as features. We use \(\rho = \sqrt{x^2 + y^2}\) and \(R = \sqrt{x^2+y^2+z^2}\), however, the neural network can extract necessary information also from other combinations, e.g. \(\rho \) and z.

It is interesting to note that with those features selected for the BDT approach, the prediction quality of FCDNN is within \(2\sigma \) from the optimal quality corresponding to the feature list above.

Figure 12 describes the selected architecture of FCDNN and its main selected hyperparameters.

5 Results

As mentioned in Sect. 2, we use resolution and bias metrics to evaluate the performance of the models. These metrics are obtained from the Gaussian fit of the \(E_{\textrm{pred}} - E_{\textrm{dep}}\) distribution as its standard deviation (\(\sigma \)) and mean value (\(\mu \)). Figure 13a illustrates an example of the \(E_{\textrm{pred}} - E_{\textrm{dep}}\) distribution and its Gaussian fit with \(E_{\textrm{dep}} = 3.022\) MeV. Note that we exclude edge points with the 0 MeV and 10 MeV kinetic energy corresponding to 1.022 and 11.022 MeV of deposited energy. ML models learn that the energy of an event belongs to the energy range from the training dataset (\(E_{\textrm{kin}} \in 0{-}10\) MeV) and, therefore, the distributions at the edge points are truncated and exhibit an artificially increased resolution as shown in Fig. 13 b.

Figure 14 shows the energy reconstruction performance (resolution and bias) of the BDT and FCDNN models described in Sects. 4.2 and 4.3. Parameterization (1) with fitted a, b, and c values is also shown. The energy reconstruction bias for both models is very close to zero. The resolution performance for FCDNN is better in the lower energy region and converges with the BDT results at higher energies.

Table 3 lists parameters a, b, and c of Parameterization (1) of the energy resolution curves and the effective resolution \({\tilde{a}}\) for both BDT and FCDNN models. The value of \({\tilde{a}}\) is noticeably better for FCDNN, but for both models \({\tilde{a}}\) satisfies the requirement of JUNO: \({\tilde{a}} < 3\%\).

We also investigate how the resolution depends on different subdetector regions, see Fig. 15. We used our models, BDT and FCDNN, trained on a dataset with the standard fiducial volume cut of 17.2 m, i.e. in the 0–17.2 m range, but tested them on datasets with varied R ranges. The R ranges are selected to contain approximately the same number of events. The effective resolution in the R regions is correlated with the accumulated charge per MeV (compare to Fig. 3): less charge means a smaller \({\tilde{a}}\), and vice versa. Consequently, the performance of both models worsens for the events close to the edge of the detector.

Besides that, the outer region of the detector is more populated by background events originating from radioactive decays in materials. Therefore, one may consider reducing the fiducial volume to increase the quality of data. On the other hand, excluding outer events decreases statistics, which limits the experiment sensitivity to the neutrino oscillation pattern. Thus, the optimal strategy has to be found via a comprehensive sensitivity study.

Expected value of the effective resolution \({\tilde{a}}\) for equidistant subdetector regions. Dashed lines and filled areas represent the effective resolution \({\tilde{a}}\) and its standard deviation for the BDT and FCDNN models, respectively. Both models are trained on data with \(R_{\textrm{FV}}<\) 17.2

6 Conclusions

In this work, we present an application of machine learning techniques for precise energy reconstruction in the energy range of 0–10 MeV. We use two models: Boosted Decision Trees (BDT) and Fully Connected Deep Neural Network (FCDNN), trained using aggregated features extracted from Monte Carlo simulation data. We considered the case of the JUNO detector. However, the approaches are valid for other similar detectors with a large liquid scintillator target surrounded by an array of photo-sensors. Our dataset is generated with the official JUNO software for modeling particle interactions, light emission, transport, and collection, as well as realistic response of PMTs and the readout system.

We design a large set of features and select one feature subset for the BDT model and another feature subset for the FCDNN model. Both provide the same performances as the full set of features. The requirement on the effective resolution to determine the neutrino mass ordering, \({\tilde{a}} \le 3\%\), is achieved by both BDT and FCDNN. BDT is a fast and minimalistic model, making predictions 3–4 times faster than FCDNN. On the other hand, the latter provides a slightly better performance. This follows the trend observed in [31] where authors found that neural networks trained on large and detailed datasets outperform models based on decision trees. However, for smaller datasets, decision trees have an advantage. For the experiments like JUNO, one can generate enough large datasets, but it is also mandatory to use sparse calibration data. This situation makes the choice between the models not evident, and both approaches should be considered.

Data Availability Statement

This manuscript has no associated data or the data will not be deposited. [Authors’ comment: This study is based on MC data produced by the JUNO collaboration and is not publicly available according to the collaboration policy.]

Notes

However, after application of a set of selection cuts, the remaining fraction of background events is indistinguishable from the signal. For example, in case of the reactor neutrino analysis it amounts to about 17.8% of signal events [8].

References

X. Guo et al. [Borexino Collaboration], Science and technology of Borexino: a real-time detector for low energy solar neutrinos. Astropart. Phys. 16(3), 205–234 (2002). https://doi.org/10.1016/S0927-6505(01)00110-4

K. Eguchi et al. [KamLAND Collaboration], First results from KamLAND: evidence for reactor anti-neutrino disappearance. Phys. Rev. Lett. 90, 021802 (2003). https://doi.org/10.1103/PhysRevLett.90.021802

F.P. An et al. [Daya Bay Collaboration], Observation of electron-antineutrino disappearance at Daya Bay. Phys. Rev. Lett. 108, 171803 (2012). https://doi.org/10.1103/PhysRevLett.108.171803

Y. Abe et al. [Double Chooz Collaboration], Indication of reactor \({\bar{\nu }}_e\) disappearance in the double Chooz experiment. Phys. Rev. Lett. 108, 131801 (2012). https://doi.org/10.1103/PhysRevLett.108.131801

J.K. Ahn et al. [RENO Collaboration], Observation of reactor electron antineutrino disappearance in the RENO experiment. Phys. Rev. Lett. 108, 191802 (2012). https://doi.org/10.1103/PhysRevLett.108.191802

F. An et al. [JUNO Collaboration], Neutrino physics with JUNO. J. Phys. G 43(3), 030401 (2016). https://doi.org/10.1088/0954-3899/43/3/030401

A. Abusleme et al. [JUNO Collaboration], JUNO physics and detector. Prog. Part. Nucl. Phys. 123, 103927 (2022). https://doi.org/10.1016/j.ppnp.2021.103927

M. He et al. [JUNO Collaboration], Sub-percent precision measurement of neutrino oscillation parameters with JUNO. Chin. Phys. C. https://doi.org/10.1088/1674-1137/ac8bc9

D. Bourilkov, Machine and deep learning applications in particle physics. Int. J. Mod. Phys. A 34(35), 1930019 (2020). https://doi.org/10.1142/S0217751X19300199

M.D. Schwartz, Modern machine learning and particle physics. Harvard Data Sci. Rev. (2021). https://doi.org/10.1162/99608f92.beeb1183

D. Guest, K. Cranmer, D. Whiteson, Deep learning and its application to LHC physics. Annu. Rev. Nucl. Part. Sci. 68, 161–181 (2018). https://doi.org/10.1146/annurev-nucl-101917-021019

HEP ML Community. A living review of machine learning for particle physics. https://iml-wg.github.io/HEPML-LivingReview/

Z. Qian, V. Belavin, V. Bokov et al., Vertex and energy reconstruction in JUNO with machine learning methods. Nucl. Instrum. Meth. A 1010, 165527 (2021). https://doi.org/10.1016/j.nima.2021.165527

A. Gavrikov, F. Ratnikov, The use of boosted decision trees for energy reconstruction in JUNO experiment. EPJ Web Conf. 251, 03014 (2021). https://doi.org/10.1051/epjconf/202125103014

Z. Li, Y. Zhang, G. Cao et al., Event vertex and time reconstruction in large-volume liquid scintillator detectors. Nucl. Sci. Tech. 32, 49 (2021). https://doi.org/10.1007/s41365-021-00885-z

Z.Y. Li, Z. Qian, J.H. He et al., Improvement of machine learning-based vertex reconstruction for large liquid scintillator detectors with multiple types of PMTs. Nucl. Sci. Tech. 33, 93 (2022). https://doi.org/10.1007/s41365-022-01078-y

A. Abusleme et al. [JUNO Collaboration], Calibration strategy of the JUNO experiment. JHEP 03, 004 (2021). https://doi.org/10.1007/JHEP03(2021)004

X. Huang et al., Offline data processing software for the JUNO experiment, PoS ICHEP2016, 1051 (2017). https://doi.org/10.22323/1.282.1051

T. Lin et al., The application of SNiPER to the JUNO simulation. J. Phys. Conf. Ser. 898(4), 042029 (2017). https://doi.org/10.1088/1742-6596/898/4/042029

T. Lin et al., Parallelized JUNO simulation software based on SNiPER. J. Phys. Conf. Ser. 1085(3), 032048 (2018). https://doi.org/10.1088/1742-6596/1085/3/032048

S. Agostinelli et al. [GEANT4 Collaboration], GEANT4—a simulation toolkit. Nucl. Instrum. Meth. A 506, 250–303 (2003). https://doi.org/10.1016/S0168-9002(03)01368-8

J. Allison, J. Apostolakis, S.B. Lee et al., Recent developments in Geant4. J. Nucl. Instrum. Meth. A 835, 186–225 (2016). https://doi.org/10.1016/j.nima.2016.06.125

K. Li, Z. You, Y. Zhang et al., GDML based geometry management system for offline software in JUNO. Nucl. Instrum. Meth. A 908, 43–48 (2018). https://doi.org/10.1016/j.nima.2018.08.008

A. Abusleme, T. Adam, S. Ahmad et al. [JUNO Collaboration], Mass testing and characterization of 20-inch PMTs for JUNO. arXiv:2205.08629

A. Coates, A. Ng, H. Lee, An analysis of single-layer networks in unsupervised feature learning, Proceedings of the fourteenth international conference on artificial intelligence and statistics. PMLR 15, 215–223 (2011). http://proceedings.mlr.press/v15/coates11a

J. Heaton, An empirical analysis of feature engineering for predictive modeling, SoutheastCon, IEEE, 1–6 (2016). https://doi.org/10.1109/SECON.2016.7506650

J. Friedman, Stochastic gradient boosting. Comput. Stat. Data Anal. 38(4), 367–378 (2002). https://doi.org/10.1016/S0167-9473(01)00065-2

J. Friedman, Greedy function approximation: a gradient boosting machine. Ann. Stat. 29(5), 1189–1232 (2001). https://doi.org/10.1214/aos/1013203451

J. Quinlan, Simplifying decision trees. Int. J. Man-Mach. Stud. 27(3), 221–234 (1987). https://doi.org/10.1016/S0020-7373(87)80053-6

Y. Freund, R.E. Schapire, A decision-theoretic generalization of on-line learning and an application to boosting. J. Comput. Syst. Sci. 55(1), 119–139 (1997). https://doi.org/10.1006/jcss.1997.1504

V. Borisov et al., Deep neural networks and tabular data: a survey (2021). arXiv:2110.01889

T. Chen, C. Guestrin, Xgboost: a scalable tree boosting system. In: Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp. 785–794 (2016). https://doi.org/10.1145/2939672.2939785

F. Pedregosa et al., Scikit-learn: machine learning in Python. J. Mach. Learn. Res. 12, 2825–2830 (2011). https://doi.org/10.5555/1953048.2078195

A. Apicella et al., A survey on modern trainable activation functions. Neural Netw. 138, 14–32 (2021). https://doi.org/10.1016/j.neunet.2021.01.026

J. Lederer, Activation functions in artificial neural networks: a systematic overview (2021). arXiv:2101.09957

T. O’Malley et al., KerasTuner (2019). https://github.com/keras-team/keras-tuner/

A. Martin et al., TensorFlow: large-scale machine learning on heterogeneous systems (2015). https://www.tensorflow.org/

N. Vinod, G. Hinton, Rectified linear units improve restricted Boltzmann machines. In: Proceedings of the 27th International Conference on Machine Learning, pp. 807–814 (2010). https://doi.org/10.5555/3104322.3104425

D.A. Clevert, T. Unterthiner, S. Hochreiter, Fast and accurate deep network learning by exponential linear units (ELUs) (2015). arXiv:1511.07289

G. Klambauer, T. Unterthiner, A. Mayr, S. Hochreiter, Self-normalizing neural networks. In: Proceedings of the 31st International Conference on Neural Information Processing Systems, pp. 972–981 (2017). https://doi.org/10.5555/3294771.3294864

D. Kingma, J. Ba, Adam: a method for stochastic optimization, 3nd ICLR (2015). arXiv:1412.6980

S. Ruder, An overview of gradient descent optimization algorithms (2016). arXiv:1609.04747

Z. Li, S. Arora, An exponential learning rate schedule for deep learning (2019). arXiv:1910.07454

A. Baranov, N. Balashov, N. Kutovskiy, R. Semenov, JINR cloud infrastructure evolution. Phys. Part. Nucl. Lett. 13(5), 672–675 (2016). https://doi.org/10.1134/S1547477116050071

Acknowledgements

We are very thankful to the JUNO collaborators who contributed to the development and validation of the JUNO simulation software. We thank N. Kutovskiy and N. Balashov for providing an extensive IT support with computing resources of JINR cloud services [44], and X. Zhang for her work on producing MC samples. We are also grateful to Maxim Gonchar for fruitful discussions. Fedor Ratnikov is supported by the grant for research centers in the field of AI provided by the Analytical Center for the Government of the Russian Federation (ACRF) in accordance with the agreement on the provision of subsidies (identifier of the agreement 000000D730321P5Q0002) and the agreement with the HSE University 70-2021-00139. Yury Malyshkin is supported by the Russian Science Foundation under grant agreement 21-42-00023.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

Funded by SCOAP3. SCOAP3 supports the goals of the International Year of Basic Sciences for Sustainable Development.

About this article

Cite this article

Gavrikov, A., Malyshkin, Y. & Ratnikov, F. Energy reconstruction for large liquid scintillator detectors with machine learning techniques: aggregated features approach. Eur. Phys. J. C 82, 1021 (2022). https://doi.org/10.1140/epjc/s10052-022-11004-6

Received:

Accepted:

Published:

DOI: https://doi.org/10.1140/epjc/s10052-022-11004-6