Abstract

The reconstruction of event-level information, such as the direction or energy of a neutrino interacting in IceCube DeepCore, is a crucial ingredient to many physics analyses. Algorithms to extract this high level information from the detector’s raw data have been successfully developed and used for high energy events. In this work, we address unique challenges associated with the reconstruction of lower energy events in the range of a few to hundreds of GeV and present two separate, state-of-the-art algorithms. One algorithm focuses on the fast directional reconstruction of events based on unscattered light. The second algorithm is a likelihood-based multipurpose reconstruction offering superior resolutions, at the expense of larger computational cost.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

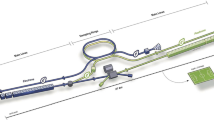

The IceCube Neutrino Observatory [1] at the South Pole registers Cherenkov light from particle interactions in the ice with its 5160 digital optical modules (DOMs). The modules are deployed at depths between 1450 and 2450 m, covering a volume of a cubic-kilometer of ice, and arranged in 86 vertical strings (see Fig. 1). Eight dedicated strings with denser spacing and photomultiplier tubes (PMTs) with higher quantum efficiency form, together with the 7 central IceCube strings, the DeepCore sub-array [2]. The module density in DeepCore is about five times greater than the rest of IceCube, which allows for a much lower energy detection threshold of a few GeVs. Most of the recorded events in IceCube are from atmospheric muons or random triggers caused by dark noise, while only a fraction are from atmospheric neutrinos and a few events can be attributed to astrophysical neutrinos. In all cases, the event information – after some basic processing – is represented as a series of pulses per optical module, with each pulse consisting of a time and a charge. Figure 2 shows event views for one high and two low energy events. In the process of event reconstruction, this raw pulse information is used to estimate properties of the interaction, such as interaction vertex, energy, and direction (angles).

Multiple algorithms to infer such quantities in IceCube (and its predecessor experiment, AMANDA [3]) have been successfully developed and used, including those reported in Refs. [4,5,6,7] and recently Refs. [8, 9]. These algorithms, however, are geared towards high energy interactions for neutrinos in the TeV–PeV energy range such as the one shown in Fig. 2a. For oscillation physics or dark matter searches, for instance, energies in the GeV range are of interest. Such events create much less light in the detector resulting in sparse pulses (see Fig. 2b, c), and hence involve different challenges for reconstruction than in the high energy regime. At the same time, a large number of events are collected at these low energies, with typical analysis samples easily exceeding a hundred thousand observed events, and simulated events used in analyses amounting up to \({\mathcal {O}}(10^8)\) events that require reconstruction.

In this article, we describe two distinct strategies addressing the challenges of extracting event-level reconstruction information from sparse data at high computational speeds:

-

Selecting events dominated by unscattered light results in a comparably small, but easy-to-reconstruct and robust set of events, and allows for fast turn-around physics analyses. The corresponding algorithm is discussed in Sect. 2, representing an enhancement of an existing direction reconstruction [10], and is limited to events passing a selection of direct light. This type of reconstruction was used, for instance, for the results of Ref. [11].

-

To explore a larger, and more inclusive amount of detector data, the second algorithm, discussed in Sect. 3, offers a multipurpose event reconstruction applicable to any event, and yields more comprehensive information at the expense of higher computational cost (around two orders of magnitude higher than the first algorithm, more details in Sect. 4). A predecessor to the algorithm discussed is described in Ref. [12]. This type of reconstruction was used, for example, for the results of Refs. [13,14,15,16].

Schematic view of the IceCube in-ice detector array. The DeepCore sub-array, located at the bottom center, features denser module spacing and allows for the detection of lower energy events compared to the rest of IceCube. Also shown are the depth-dependent effective scattering and absorption coefficients [17]. The zone labeled as “Dust layer” is of inferior optical quality with increased scattering and absorption. The DeepCore array is located below this in the clearest part of the ice

1.1 Key observables

We start by discussing important observable properties for neutrino events in IceCube DeepCore. For many physics analyses, reconstructed event-level information, such as estimates for an event’s deposited energy and point of interaction (vertex) in the ice, are crucial ingredients. Such information is often used in the event selection to remove background events (e.g. atmospheric muons or uncontained events), but it is also used directly in the analysis itself.

As an example, we can illustrate this for neutrino oscillation physics using the two-flavor vacuum oscillation probability for a neutrino produced in flavor eigenstate \(\alpha \) to be observed in a different eigenstate \(\beta \):

This probability depends on the neutrino energy, E, the length between production and interaction, L, the mixing angle, \(\theta \), and mass splitting, \(\Delta m^2\) (all in natural units). In order to resolve the oscillations and infer the parameters of interest, \(\theta \) and \(\Delta m^2\), precise estimates of the energy, E, the distance, L, and the flavors, \(\alpha \) and \(\beta \), are desired. For atmospheric neutrino oscillations, our estimate of the energy of an incoming neutrino is the deposited energy of the event. For the purposes discussed in this work, this “deposited” energy is defined as the difference in energy of the incoming and any outgoing neutrino in the interaction. In the case of charged-current (CC) tau neutrino interactions, \(\nu _\tau ^{\text {CC}}\) , half the energy of the tau is subtracted to correct for the invisible energy of the daughter neutrino in the tau decay. For atmospheric neutrinos, the propagation length can be calculated using the zenith direction, \(\vartheta \), for which an approximate relation is \(L \sim D \cos {\vartheta }\), with D being the Earth diameter. For atmospheric neutrinos, the initial flavor, \(\alpha \), at the production site is not known at the event level, and the expected fluxes of each flavor are modeled theoretically. This means for the oscillation analysis example, estimates of E, \(\vartheta \) and a handle on the interaction flavor \(\beta \) (see Sect. 1.2) are desired.

Example event displays: The size of spheres represent the amount of light observed in each DOM; larger spheres correspond to more light observed. The dotted black line represents the incoming neutrino, the black circle the cascade, and the solid black line the outgoing muon track. The size of the black round marker is proportional to the energy of the cascade at the vertex. The color represents the timing of the hit, where the earliest hits are colored in red and the latest hits in blue. Low energy events have significantly fewer hits [18]

1.2 Event Signatures

Detectable neutrino interactions in IceCube can be grouped into two general categories: those which only contain a so-called “cascade” signature, and those with an additional “track” (see Fig. 2b, c, respectively). We categorize any particle showers as cascades. These include hadronic showers caused by deep inelastic scattering, and electromagnetic showers induced by the electron from \(\nu _e^{\text {CC}}\) interactions. Relativistic, charged particles (\(\beta > 1/n\)) in such showers emit Cherenkov radiation that can be detected by IceCube DOMs, and for energies between 1–100 GeV, such showers extend approximately 4–6 m in length [19]. In contrast to hadrons and electrons, muons have the potential to travel a sizable distance in the ice while radiating Cherenkov light, leading to a track signature in the detector. In general \(\nu _\mu ^{\text {CC}}\) interactions will produce muons, and about 17% of tau leptons produced in \(\nu _\tau ^{\text {CC}}\) interactions decay to muons, while other interactions result only in cascades [20]. We are interested in distinguishing events containing tracks from pure cascade events in order to get a handle on the flavor of the neutrino that we observe.

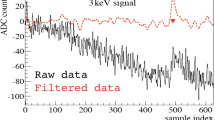

1.3 Raw data/pulses

Photo electrons created in the PMTs in IceCube’s DOMs generate a voltage at the PMT’s anode that, when exceeding a discriminator threshold, triggers a digitization process of the signal via analog to digital converters (ADCs). This digitization process involves multiple gain channels, and different digitizers operating at different sampling rates [21]. The resulting waveforms then undergo an unfolding procedure as described in Ref. [5] that extracts the time and charge of individual single photo electrons (SPEs) [22] from the raw data, resulting in a collection of pulses, \({t_i}\), with charges \({q_i}\). Multiple SPEs can be extracted into single pulses, and hence result in charges \({q_i}\) greater than one photoelectron (p.e.). Before applying reconstruction algorithms, these pulse series typically undergo a cleaning algorithm that reduces isolated pulses that are created by dark noise. A clustering approach requires all pulses to be causally connected within a distance of at most 150 m and a time difference of at most 1 \(\upmu \)s to at least one other pulse in the cluster. The resulting series of pulses per event serve as the input data to the reconstruction algorithms described next in this article. For a typical oscillation event sample, as used in Sect. 4, and after the cleaning described above is applied, the average events contain a total of 17 pulses, distributed over 14 DOMs and 6 strings. The mean total charge per event is 27 p.e., the median is 16.4 p.e., and the majority of pulses are stemming from SPEs.

2 santa: avoiding scattered light

The Single-string Antares-inspired Analysis (santa) [23] is an algorithm for the reconstruction of track directions. It is an improved and adapted version of a similar approach originally used at the ANTARES neutrino telescope [10], and developed with the intention of building a fast reconstruction method well suited for online monitoring. A discussion of the working principles behind the algorithm and the improvements made with respect to the original implementation is the scope of this section.

The reconstruction algorithm is preceded by a cleaning routine which removes pulses produced by light that has undergone significant scattering as it traveled through the ice, leaving only minimally scattered photons in the event. This hit selection routine is described in Sect. 2.1. With the scattering removed, we calculate the expected observation time for the Cherenkov photons geometrically as discussed in Sect. 2.3. Finally, we run a \(\chi ^2\)-fit with respect to the observed and predicted observation time with an additional regularization term involving the observed charge as described in Sect. 2.4.

2.1 Hit selection

The first step of the santa reconstruction is the selection of minimally scattered photons from all of the observed pulses by removing those pulses within the trigger window that are likely to have undergone a significant amount of scattering.

We combine the pulse series recorded by each activated DOM to a hit with the time of the first pulse and the total charge of all pulses. All subsequent cleaning and reconstruction steps are applied to these combined hits. To remove scattered light, we make use of the fact that the largest possible delay between hits that are produced by unscattered light on two different DOMs, i and j, on the same string, is the time it takes for a directly up- or down-going light front to travel from one DOM to the other, \(\tau _{ij}=|\Delta z_{ij}|/c_{\mathrm {ice}}\), where \(|\Delta z_{ij}|\) is the distance between DOMs i and j and \(c_{\mathrm {ice}}\) is the speed of light in ice. If the time delay between two hits, \(\Delta t_{ij}\), is larger than the maximum delay, \(\tau _{ij}\), then we know that the light must have undergone some amount of scattering. As a starting point for the hit selection, we choose the hit with the highest charge on each string, \(i=0\), and first remove any earlier hit, j, where \(-\Delta t_{0j} > \tau _{0j}\). From there, the algorithm iterates through every hit, i, and removes any other hit, j, where \(\Delta t_{ij} > \tau _{ij}\). If fewer than 3 hits remain on a string, the entire string is removed from the event. If less than 5 hits remain in the event, it cannot be reconstructed. This is a simplified version of the cleaning procedure described in Ref. [23] and leaves more scattered light in the events. This is compensated for by the addition of the robust loss function (Sect. 2.4.2). In this configuration, we can reconstruct about 10% more \(\nu _\mu ^{\text {CC}}\) events than with the original implementation from Ref. [23] at a similar resolution. In the example event fits in Figs. 3 and 4, the hits that are removed by the hit selection are crossed out.

2.2 Single-string vs. multi-string

After the hit-cleaning procedure, passing events fall into two basic categories that are reconstructed differently. The first category is multi-string events that contain observed charges in modules on two or more strings of the detector. Since a string is removed entirely from an event if it has less than three hits left after hit cleaning, a multi-string event contains at least six modules with recorded charges: three on one string and three on another string. In these events, we reconstruct both the zenith and the azimuth angles of the direction of a track. The second category is single-string events that contain only one string in which modules have observed charges. Since all modules on a single string share approximately the same x and y coordinates, the azimuth angle of a track cannot be reconstructed. Example events for single-string and multi-string event fits are shown in Figs. 3 and 4, respectively.

2.3 Geometry of tracks in IceCube

To perform the \(\chi ^{2}\)-fit on the observed hit times for a track hypothesis, we first need to derive the expected photon arrival time for an optical module at position \(\mathbf {r}=(x,y,z)\) given the parameters of the hypothesis.

We characterize a track by a normalized direction vector \(\mathbf {u}=(u_{x},u_{y},u_{z})\), an anchor point \(\mathbf {q}=(q_{x},q_{y},q_{z})\) and a time \(t_{0}\) at which the particle passes through \(\mathbf {q}\). In this simplified hypothesis, tracks are modeled as being infinite in both directions; there are no parameters to fix the start and end position and the velocity is fixed to the vacuum speed of light, c. Since the reconstruction ignores DOMs that have not recorded any pulses, the fact that the true track length is finite only makes a negligible difference. Without scattering, all Cherenkov photons lie on a cone with an opening angle \(\theta _{c}\) (see Fig. 5) whose tip is at the position of the particle at the time \(\mathbf {p}(t)\). The opening angle satisfies \(\cos (\theta _c)=1/n_{\mathrm {ph}}\), where \(n_{\mathrm {ph}}\) is the phase index of refraction of the ice.

We solve the geometric equations analogously to Ref. [23] assuming that a photon emitted by the moving particle travels in a straight line at a velocity of c divided by the group index of refraction \(n_{\mathrm {gr}}\), which gives the geometric time, \(t_{\mathrm {geom}}\), as a function of the track parameters

where the distance traveled by the photon \(d_\gamma \) is

The group and phase indices of refraction depend on the wavelength, but for this reconstruction we use as value for the wavelength \(\lambda =400\,\mathrm {nm}\),Footnote 1 where \(n_{\mathrm {gr}}=1.356\) and \(n_{\mathrm {ph}}=1.319\) from Ref. [24].

Detailed geometry of a light cone created by a track. \(\mathbf {q}\) is the position of the anchor point and \(\mathbf {r}\) is the position of the optical module. \(\mathbf {p}(t_{\mathrm {em}})\) and \(\mathbf {p}(t_{\mathrm {geom}})\) are the positions of the muon at the time the photon is emitted and when it is geometrically expected to arrive, respectively

2.4 Fitting procedure

2.4.1 Loss function

For a given set of parameters \(\mathbf {\theta }=(\mathbf {u},\mathbf {q},t_0)\), we minimize a modified chi-square loss function given by

where \(\bar{q}\) is the average of \(\tilde{q}_i\), and \(r^2_i\) is the chi-square residual for each observed hit, i, between the observed time, \(t_{\mathrm {obs}, i}\) and the geometric arrival time, \(t_{\mathrm {geom},i}(\mathbf {\theta })\),

The uncertainty on the pulse time measurement is approximately \(\sigma _{t}=3\,\mathrm {ns}\), corresponding to the readout rate of the modules [21].

The second term in Eq. 4 is a regularization term that multiplies the distance traveled by a photon to the optical module that recorded it, \(d_{\gamma ,i}\), by the measured charge, \(\tilde{q}_i\), to penalize solutions where a large charge is observed far away from the hypothesized track position. Because the modules are most sensitive on the side facing towards the bedrock, we correct the observed total charge in each DOM, \(q_i\), for the sensitivity with

where \(\vartheta _i\) is the angle between the direction of the photon and a vector pointing up to the surface of the ice. The parameter \(d_{0}\) determines the relative contribution of the regularization term. Its value has been optimized for best average performance of the reconstruction and is fixed to \(7\,\mathrm {m}\).

2.4.2 Robust loss functions

After the hit selection described in Sect. 2.1, a small number of hits from photons that have undergone significant amounts of scattering will remain that could strongly bias the fit result. We improve the robustness of the regression against such outliers by wrapping the squared residuals for each pulse in Eq. 4, \(r^{2}_i\), with the Cauchy robust loss function

It reproduces the original \(r^{2}\) residual for small values of r, but grows more slowly than \(r^2\) for large values of r, so that outliers are effectively given less weight.

Additionally, we choose the point of a “soft cut-off”, denoted herein as C, at which the residual diverges from the regular \(r_{i}^{2}\) in units of standard deviations by setting

Figure 6 shows the Cauchy loss function for different values of C. The choice of the value of C is a trade-off. If it is too large, then the fit can be strongly influenced by single outliers. If it is too small, then the fit ignores too many hits and falls into degenerate solutions. We found the optimal value to be \(C=3\). At this setting, the fit can effectively ignore hits that are far away from the Cherenkov cone.

As a further constraint on the regression, we apply the robust loss function from Eq. 7 only to pulses where the observed time is later than the expected photon time, since we expect that scattering would only cause photons to arrive too late, never too early.

The performance of the santa algorithm will be presented, together with the next algorithm discussed, in Sect. 4.

3 retro: embracing scattered light

Light from neutrino interactions has a high chance of undergoing (multiple) scattering before being detected in IceCube. The effective scattering coefficient for 400 nm light (peak acceptance of optical modules) in the region of DeepCore is about \(b_e \approx 0.03\)–\(0.04\,m^{-1}\) [25], meaning an effective scattering length of 25–33 m. This length is smaller than the inter-string spacing in IceCube of \(\sim 125\) m, and DeepCore of \(\sim 45\) m. Consequently the geometrically propagated distance is enlarged, the time of arrival of photons is delayed with respect to the geometrical propagation time (Eq. 2), and the photon direction will be diffused. Thus, we developed the likelihood-based reconstruction method retro, which can handle scattered light and hence applies to all events.

3.1 Likelihood

In order to correctly model scattered photons in the event reconstruction, we derive the distribution \(P(t|\mathbf {\theta })\), that describes the number of photons expected in a DOM as a function of arrival time t, given the neutrino event parameters \(\mathbf {\theta }\). We will refer to \(P(t|\mathbf {\theta })\) as the unnormalized probability density, in accordance with the language of Barlow [26]. These distributions encode the propagation of light through the inhomogeneous South Pole ice, and are not analytically available. Sections 3.1.1 and 3.1.2 describe how these distributions can be approximated using retro—the reverse table reconstruction.

Given the distributions, we can write down a likelihood featuring all registered photons at times \(t_i\), based on an extended likelihood [26]:

where \(P(t_i|\mathbf {\theta }) = \lambda (t_i|\mathbf {\theta }) + n\) is the time dependent, unnormalized probability of registering photons. The term \(\lambda (t_i|\mathbf {\theta })\) is the contribution from the actual neutrino event and will be introduced later, while the term n represents a noise rate (see Ref. [1]), \(\Lambda (\mathbf {\theta }) = \int _{TW}{\lambda (t|\mathbf {\theta })dt}\) is the total number of expected photons from the neutrino event over the trigger window (TW) and N is the time-integrated noise rate. We can omit the last term N, as it is constant under variations of \(\mathbf {\theta }\). Since our reconstruction is based on a collection of pulses with times \({t_i}\) and charges \({q_i}\), as described in Sect. 1.3, we adapt the above expression to a charge weighted one: \(\log {{\mathcal {L}}} = \sum _i q_i \cdot \log {P(t_i)} - \Lambda \). This log-likelihood is computed for all of the 5160 DOMs in IceCube and summed up.

Expected photon time distribution in several DOMs along string 86, for a straight up-going muon with an energy 20 GeV, starting at \((0, 0, -350)\) m in IceCube coordinates. The dashed lines show the average from \(10^7\) repeated MC simulations, while the solid lines show the expected distributions based on the tables and event model discussed here

In the simulations used by IceCube, events are generated via Monte Carlo (MC) methods and the resulting photons are propagated via ray tracing [27]. This means that a simulated event corresponds to one particular random variate of the underlying probability density. Through repeated simulations, however, one can approximate the time distributions P. An example of such distributions is shown in Fig. 7 for one particular choice of event parameters \(\mathbf {\theta }\). Such repeated simulations [28] allow for the reconstruction of single events including the full level of detail available in IceCube simulation. The main challenges for this approach are the large number of simulations necessary for creating the expected distributions and the associated computational cost, as well as the large number of events that need to be reconstructed. To address those, we will follow an alternative path to arrive at approximate distributions by splitting up the process into a parameterized event model (Sect. 3.1.1) and a tabulated detector response (Sect. 3.1.2) discussed next.

3.1.1 Parameterized event model

The parameterized event model describes how much Cherenkov light is emitted in the ice at different locations and times, given a set of event parameters:

This light emission is translated into a set of discrete Cherenkov-light emitters in the ice, which are then used together with the detector response to evaluate the likelihood function. Our parameterization depends on the following eight parameters (see also Fig. 8):

-

\(V_\text {xyzt}\): the neutrino’s interaction point in (x, y, z) in the IceCube coordinate system and interaction time with respect to the trigger window.

-

\(\Psi \): the zenith and azimuth angles \((\vartheta , \varphi )\) of the neutrino’s direction of travel.

-

\(E_\text {trck}\): the energy carried by the outgoing muon in \(\nu _\mu ^{\text {CC}}\) interactions, otherwise zero.

-

\(E_\text {cscd}\): all deposited energy in the interaction excluding \(E_\text {trck}\).

The track part of the hypothesis is modeled as a series of colinear, constant luminosity Cherenkov emitters (2450 photons/m, [29]) placed every \(\ell _\Delta = 3~\text {ns} \cdot c \approx 0.9\) m along the trajectory. Assuming constant energy loss, the track energy and length are related as \(E_\text {trck}/ \text {GeV} \approx 11/50 \cdot \ell _\text {trck}/ \text {m} \) [29]. The cascade part of the hypothesis is modeled as a single point emitting Cherenkov light source located at the vertex and co-directional with the muon track.Footnote 2 Its light production is parameterized by 5.21 m/GeV muon track equivalent (\(\sim 12{,}800\) photons per GeV) [29].

Sketch of the eight-dimensional event model for a neutrino \(\nu \) incoming at angle \(\Psi =(\vartheta , \varphi )\) and interacting at vertex position and time \(V_{xyzt}\). The cascade part is modeled as a single Cherenkov emitter (gray color) placed at the vertex in the same direction as the incident neutrino, and scaled by the expected number of photons per energy \(E_\text {cscd}\). The track part consists of n (here \(n=8\)) emitters (blue color) placed at distances \(\ell _\Delta \) along the track to reach the full length of \(\ell _\text {trck}\)

These assumptions allow us to divide up the likelihood function into separate terms describing the cascade part with linear energy scaling, and the track part with a sum of discrete emitters every \(\mathbf {\Delta }\) of length \(\ell _\Delta \) along a line:

where the terms \(\lambda _\gamma (t)\) describe the charge vs. time distributions for individual Cherenkov photons, and will be discussed in the next section.

This event model is chosen to obtain sufficient reconstruction accuracy while being fast enough to evaluate to make the analysis computationally feasible. Currently, the model does not account for subdominant stochastic losses along the muon track (which are generally small for our energies of interest [30]), the possibility that the cascade’s axis and track are not collinear, and longitudinal and off-axis shower development. To partially correct for these shortcomings, the reconstructed energies undergo a post-reconstruction correction, described in Sect. 3.3. More complicated and differently parameterized event models, such as a more realistically longitudinally elongated cascade profile or additionally the kinematic angle between the cascade and the track direction, have been studied, but for the events of interest, no significant gain in reconstruction accuracy was found, while the computational load increased. These assumptions only hold for the low energy events targeted here, with energies in the range of around 10–100 GeV.

3.1.2 Detector response

The detector response characterizes the term \(\lambda _\gamma ( t|(x,y,z,t)_\gamma ,\Psi _\gamma )\), that expresses the probability of registering a photon emitted from a Cherenkov emitter at \((x,y,z,t)_\gamma \) in the direction \(\Psi _\gamma \). These \(\lambda \) terms encode the scattering and absorption in the ice, and are therefore location and orientation dependent. We are approximating the term using high statistics MC simulation to generate large lookup tables. The tables are generated using the CLSim softwareFootnote 3 provided with the Spice Lea ice model [17] to simulate the propagation of photons in the South Pole ice.

Other approaches based on tables, e.g. Refs. [12, 31], (referred to as “standard” approach in this paragraph) use Cherenkov-spectrum light sources placed at different points throughout the ice as if these are due to actual physical particles. Our tables, in contrast, treat PMTs as if they emit light. Assuming that absorption and scattering are time-symmetric processes, we reverse the role of the emitter and the receiver here (hence the name “retro”). This difference is also illustrated in Fig. 9, showing an example for the calculation of the photons expected in a DOM for two Cherenkov light sources at different positions and orientations. In the standard approach, two separate emitters would be simulated to represent both source hypotheses. In the retro reverse procedure, a single table with photons emitted from the DOM is needed to account for the different hypotheses. Producing tables in each manner captures different symmetries and samples the space differently. In retro, for instance, the origin of the table is fixed at the DOM position – a position that is also fixed in the actual detector. In standard table, however, the origin is fixed at the source position, which is a variable of the reconstruction. Another difference is due to the IceCube DOMs not having isotropic photon detection efficiency. They are mostly sensitive to photons coming in from below, i.e. towards the face of the PMT, and have almost no sensitivity to photons from the opposite side, i.e. the base of the PMT. In retro tables, photons are emitted according to this detection efficiency, meaning that only photons that can be observed are simulated. In contrast, standard tables generate typically similar amounts of photons for any emitter orientation, but emitters that point towards the PMT face will generate more PMT hits, while for emitters facing the other way many photons will never enter the detector acceptance (see Fig. 9). retro tables assume rotational symmetry about the DOM’s axis of symmetry, i.e. the ice is assumed to only change properties as a function of depth – an assumption that is only approximately true as the South Pole ice features an optical anisotropy [17], and the ice layers exhibit a slight tilt [17].

We do not keep tables for each individual DOM, but merge similar ones (i.e. DOMs in regions of similar ice properties) together resulting in a total of 140 separate tables to represent the 5160 DOMs. This grouping is achieved via a k-means clustering algorithm [32, 33] and the resulting clusters are shown in Fig. 10 for the IceCube and DeepCore volumes. The resulting clusters reflect the structure of the South Pole ice properties, grouping DOMs in the depth-dependent and tilted ice layers together.

The grouping of DOMs into 140 separate ice property clusters via k-means, 80 for the larger IceCube volume (left), and 60 for the DeepCore volume (right). The plot shows the convex hull from DOM positions (black dots) in common clusters, and the varying colors are visually separating the clusters. At first order grouping happens in z-layers, while tilt behavior can be also be seen along the direction of the ice gradient of 225\(^{\circ }\) SW

The photon tables are generated according to the binning specified in Table 1, where r is the distance from the center of the DOM to the photon’s location, \(\Delta t\) is the time of propagation in the ice minus \(r/c'\), i.e. the time difference of the actual photon path minus the direct line of sight without scattering. For the vector connecting the position of a photon back to the DOM it was emitted from, the quantity \(\cos {\vartheta }\) is the cosine of the zenith angle of that vector. These first three binning dimensions determine the relative location in time and space of the photons. The remaining two dimensions encode the orientation – i.e. direction of flight – of the photons as follows: the quantity \(\cos {\vartheta _\gamma }\) is the cosine of the photon’s orientation zenith angle, and \(\Delta \varphi _\gamma \) is the difference between the photon’s orientation azimuth angle and the azimuth angle of the connecting vector mentioned just above. Because we assume azimuthal symmetry for the propagation of light, we can take the absolute value \(|\Delta \varphi _\gamma |\). Photons are emitted from each DOM according to the average DOM zenith acceptance distribution [14] and wavelength sensitivity distribution, where the latter is weighted by the Cherenkov spectrum. Simulated photons are traced through the ice while being tabulated, i.e. accounting for which table bin volume (\(V_{bin}\)) they traversed (\(N_{tab}\)), normalized by the reference volume \(V_{ref}\) that is the DOM surface area multiplied by the simulation step size. Each DOM table is produced by simulating \(N_{gen}=10^{10}\) photons. In order to relate a number of emitted source photons (\(N_{src}\)) anywhere within the ice to the expected number in a DOM (\(N_{exp}\)), we use this table by applying correct normalization and looking up the number of tabulated photons (\(N_{tab}\)) in the bin where the source lies with respect to the DOM:

Since the photons of interest (originating from neutrino events) are emitted from Cherenkov radiation, the generated tables are convolved with the angular emission profile (see Fig. 5), such that the new directional angles in the table correspond to that of the charge generating the Cherenkov light cone (see also Fig. 11).

3.1.3 Table compression

A raw table, based on the above specified binning in five dimensions, contains \(5.12\cdot 10^8\) bins, and we generate 140 different tables. In single precision floating points, the required space amounts to about 0.29 TB of memory – a value exceeding the available RAM in typical compute nodes available to us. For this reason, we compress the raw tables with a custom procedure that is described below down to about 315 MB, achieving a compression factor of three orders of magnitude.

Since the directional distributions of photons follow the scattering behaviour in the ice, many of these distributions look similar. The full set of 140 tables can be split up into \((140 \times 80 \times 100 \times 40)\) bins of magnitude, and corresponding directionality distributions of \((40 \times 40)\) bins. We now replace the total 44.8 million directionality distributions with a collection of 4000 templates that are representive of the original distributions. This means that instead of the full table, we only store the magnitude (= number of photons) and template index.

To generate a representative template library, we first linearly transform the distributions (i.e. the bin values of the distributions represented as 160-dimensional vectors) using a principal component analysis (PCA), and perform a k-means clustering based on the first 80 components to group similar distributions together, resulting in 4000 clusters. Then we average the original distributions of the members of each cluster into a template, resulting in 4000 template distributions. In the final step, the original tables are then replaced by finding the best matching template for each bin, i.e. the statistically most compatible template determined via the Pearson \(\chi ^2\)-distance.

Figure 11 shows a few example directionality distributions, their template substitutes, and the difference between the two. The fidelity of the compression is high and does not introduce unwanted artifacts. In the figure, larger deviations can be observed in the region \(|\Delta \varphi | > \pi /2\), where photons are back scattered and hence very low photon statistics are present. In this region, the templates offer smoothed-out distributions due to the averaging.

Example retro table slices showing the photon directions for \(\Delta t\) bins (0.4, 1.6), (3.6, 6.4), and (102, 116) ns from top to bottom, at r-bin (7, 8) m and \(\cos {\vartheta }\)-bin (0.25, 0.3). The left column shows the photon direction distribution from emitted photons, the second column has the Cherenkov-cone folding applied, the third column shows the template substitute, and the last column shows the error introduced by the template compression. The distributions in the first three columns are normalized, and darker areas indicate higher photon density (linear scale). The first row contains almost exclusively direct (unscattered) photons, and hence the left most plot in that row has almost all photons in the bin of direct line of sight, and the Cherenkov table contains a sharp ring. The subsequent rows are at later times and contain more and more scattered light resulting in diffusion. The last column showing the percentage difference between compression and original exhibits the largest deviations in the right half, where light is back-scattered and statistics are very low

3.1.4 Likelihood landscape and comparison to simulation

Our parameterized event model and the compressed tables for the detector response – including all the approximations discussed – offer a sufficiently precise description of the reconstruction likelihood, as illustrated in Fig. 7. The expected number of photons vs. time, given a set of event parameters, is approximated well over several orders of magnitude, compared with the expectation through repeated simulation. The expected photon counts span over five orders of magnitude and the time range displayed is one microsecond. A description accurate to the level of \({\mathcal {O}}(10\%)\) is achieved, and the overall shape of the curves with rising edge and diffusion tail are matched well. The repeated simulations shown in Fig. 7 include the full complexity of event modeling available in IceCube simulation, and our simplified, parameterized model provides an overall reasonable approximation of these distributions.

Our reconstruction likelihood \({\mathcal {L}}(x|\mathbf {\theta })\) (Eq. 11) is, in general, not differentiable, as it depends on discrete table entries, and those are in addition susceptible to statistical fluctuations. The likelihood exhibits a multimodal structure and is highly non-Gaussian. Figure 12 shows a few example slices through the likelihood of a typical event in two dimensions to illustrate its structure.

Slices through the likelihood landscape, with the remaining six event parameters set to truth in each panel. The white star indicates the maximum likelihood point within the slice. The true values (shown as black crosshairs) for the depicted \(\nu _\mu ^{\text {CC}}\) example event are \(V_{xyzt}\) = (8.0 m, 47.2 m, -346.9 m, 9660 ns), \((\varphi , \vartheta )\) = (1.65, 1.77) rad, and deposited energies (\(E_\text {cscd}\), \(E_\text {trck}\)) = (14.3, 4.2) GeV

3.2 Optimization

Finding the maximum likelihood estimators \(\hat{\mathbf {\theta }}\) for our reconstruction parameters requires a maximization of the likelihood function (Eq. 9). We split the model parameter space up into the two energy terms (\(E_\text {trck}\) and \(E_\text {cscd}\)) that are maximized separately (see Sect. 3.2.1) for any choice of the remaining six parameters that are maximized in the external loop described in Sect. 3.2.2.

3.2.1 Energy optimization

Since the cascade energy \(E_\text {cscd}\) in our likelihood is realized via a simple scaling factor (see Eq. 11), the change and the derivative of the likelihood with respect to this parameter are known. To exploit this fact, we maximize the likelihood, given the remaining 7 parameters, via a binary search for the root of this derivative \(\partial {\mathcal {L}} / \partial E_\text {cscd}\). This provides the optimal cascade energy configuration as a function of the other 7 parameters.

For the track energy parameter \(E_\text {trck}\), the same does not apply, as the likelihood is a sum of independent track energy contributions summed along the extension of the length of the track (see also Eq. 11). For higher energies, more elements are added to extend the track, while the previous track segments remain unchanged. Based on this, we maximize the likelihood as a function of the track length, starting at \(\ell _\text {trck}\)=0 (i.e. \(E_\text {trck}\)=0), and adding increments, one at a time, to find the optimal solution. The cascade energy is re-optimized for every track configuration. This procedure is computationally advantageous, since the likelihood of all previous track segments that have already been computed can be reused and only the new increments need to be computed and added.

The likelihood difference between the \(E_\text {trck}\)=0 and optimal \(E_\text {trck}\) is also stored as a useful variable to distinguish track from cascade events (as used in Sect. 3.4).

3.2.2 Vertex and direction optimization

For the optimization of the remaining 6 parameters, we use a custom, derivative free, global optimization algorithm. It is based on the controlled random search with local mutation (crs2) algorithm described in [34], and extended to correctly treat angles (azimuth and zenith). An open-source implementation is providedFootnote 4 and more details can be found in Appendix A. The customized treatment of spherical quantities (azimuth and zenith angles) is crucial for convergence and thus the performance of our reconstruction.

The two energy parameters are a nested optimization inside this outer 6-d optimization for vertex and direction, i.e. for any point in the 6-d vertex-direction space, the two energies are always optimized internally.

Seed points The optimization is started by choosing 160 quasi-random [35] seed points that are distributed according to the existing online reconstruction “spefit” (see for example Ref. [4] for details) with a spread of the points following the seed reconstruction’s resolution. The resolution of the spefit seed reconstruction is also shown in Sect. 4.1.1.

Convergence After each internal iteration of the algorithm, we evaluate four stopping criteria. If any of these criteria is met, the minimization is terminated. The following criteria are classified to have converged successfully:

-

1.

Number of consecutive iterations without finding an improved point \(\ge 1000\), or

-

2.

Standard deviation of the log-likelihood values of all 160 current minimizer points \(\le 0.5\), or

-

3.

Standard deviation of the vertex position \(V_\text {xyzt}\) of all 160 current minimizer points \(\le (x, y = 5\,\text {m}, z = 4\,\text {m}, t = 20\,\text {ns})\)

Termination by the last criterion indicates the minimization was unsuccessful:

-

4.

Number of iterations \(\ge 10{,}000\).

For a typical selection of IceCube DeepCore events for atmospheric neutrino oscillation studies (as used in Sect. 4), we observe the optimization to terminate on average after \(\langle n \rangle = 2229\) iterations of the optimization routine, while 99% of events finish with less than 5305 iterations, and for all events the optimization converged successfully. With 11.3% of cases stopped via condition 1, 39.1% via condition 2 and 49.7% of the cases terminating via condition 3.

3.3 Energy corrections

3.3.1 Cascade energy conversion

Reconstructed energy before and after the bias correction is applied. This bias correction is applied to make the reconstructed energy a better estimator of true deposited energy. The shaded region shows the 68% containment regions, and the solid line shows the median. The dashed, black line is the 1:1 line where reconstructed energy exactly matches the true deposited energy. The correction pulls the median to more closely follow the 1:1 line

retro provides two energy estimates, \(E_\text {cscd}\) and \(E_\text {trck}\), as described in Sect. 3.1.1. We reconstruct the energy as visible, electromagnetic-equivalent energy. While topological differences in the experimental signatures of hadronic and electromagnetic cascades are small, the true energy needs to be rescaled accounting for the proper hadronic photon yield.

To account for this, a correction factor F is applied to convert the reconstructed EM cascade energy to a more accurate cascade energy estimate. As a proxy for the light yield, the cumulative Cherenkov track length T is used, which is found by summing all charged shower products with energies above the Cherenkov threshold. F is defined to be the ratio of Cherenkov track lengths for hadronic and electromagnetic cascades for a given primary energy:

We use the functional form for F described in [36] and [37]:

where \(f_0\) is the relative Cherenkov activity of the pure hadronic portion of the cascade and \(F_{EM}\) is the fraction of the total energy in the pure electromagnetic portion of the cascade. The following expression is used for \(F_{EM}\) originating from [38]:

where \(E_0\) and m are model parameters which depend on the primary hadron and the detector material. The following parameter values are used: \(E_0=0.188\) GeV, \(m=0.163\), \(f_0=0.310\) [37].

3.3.2 Track energy conversion

The second energy estimate from retro, \(E_\text {trck}\), is directly related to the optimal track length found during the reconstruction process. During the reconstruction, a constant energy loss of 0.22 GeV/m is assumed, as mentioned in Sect. 3.1.1. However, this is not a perfect assumption and we get an improved estimate of the real track energy by using more realistic energy losses which are dependent on the primary muon energy. The energy of the track (i.e. the original muon) is recalculated by using an interpolation of the muon ranges and muon corresponding primary energies found in Table II-28 from [39].

3.3.3 Bias correction

With the above corrections applied, the resulting mean reconstructed cascade energies and track lengths still do not perfectly match their true parameter values. A linear scaling factor is applied to make the reconstructed parameters better estimators of the true deposited energies. A scaling factor of 1.7 for all cascade energies after being converted from EM to hadronic, and a scaling factor of 1.45 for all track lengths before being converted to track energy are used here. The total reconstructed energy is then the sum of these resulting two quantities. Figure 13 shows the total reconstructed energy versus the true deposited energy with and without these correction factors applied.

3.4 Particle identification (PID)

To distinguish between track-like and cascade-like events in DeepCore, the likelihood ratio \(\mathcal {L}(\hat{E}_\text {trck}) / \mathcal {L}(E_\text {trck}=0)\) alone would make the most powerful classifier (“Neyman–Pearson” lemma) given a perfect likelihood description. However, with the approximations in the likelihood discussed earlier, we find that a multivariate classifier with additional inputs to the likelihood ratio leads to an improved classification. Here we use a boosted decision tree (BDT) based on the the XGBoost algorithm [40]. The variables used as input features are the following reconstructed quantities determined by retro:

-

\(\mathcal {L}(\hat{E}_\text {trck}) / \mathcal {L}(E_\text {trck}=0)\): Likelihood ratio between the full reconstruction and a cascade-only hypothesis in which \(E_\text {trck}\)=0, as mentioned in Sect. 3.2.1.

-

\(\ell _\text {trck}\): The reconstructed track length is a straightforward measure of how track-like an event is. A long reconstructed track indicates the presence of a muon track in the event.

-

\(E_\text {cscd}\): retro’s estimate of how much light is in the cascade portion of the event (as opposed to the track component).

-

Zenith angle: The ability to identify tracks is zenith-dependent due to the geometry of the detector, because the spacing between adjacent DOMs in the vertical direction is smaller than in the \(x-y\) plane. Therefore tracks that are nearly vertical are easier to identify than horizontal ones.

-

Zenith Spread: This quantity is calculated from the distribution of points traversed by the minimizer before convergence. Tracks are typically associated with better pointing resolution due to their longer lever arm, and we expect events with a smaller zenith spread to be more track-like.

The classifier is trained on a selection of simulated \(\nu _\mu ^{\text {CC}}\) and \(\nu _e^{\text {CC}}\) with reconstructed energy between 5 and 500 GeV. For training, events are weighted with an unoscillated spectrum according to the HKKM flux [41]. Sample balancing is performed for the relative contributions between classes, with an overall equal weight for true tracks and cascades during training. The sample is divided and 50% of events are used for training, and 50% for testing the model.

The top panel shows the fraction of events classified correctly as a function of energy. The bottom panel shows the area under the curve (AUC) for the receiver operating characteristics (ROC) for each energy band. The classifier performance is better at higher energies, while towards the lowest energies the AUC converges to 0.5, i.e. zero classification performance

The output score of the BDT is a number between 0 and 1 indicating how track-like an event is, with 1 being the most track-like. Figure 14 shows the distribution of classifier scores for tracks and cascades for an example sample (see Fig. 20). There is a peak at 1 in the track distribution as we expect, and cascades appear mostly at lower scores. Many events in the confusion region around the middle tend to be low energy events, and therefore have very few hits in the detector and consequently not enough information for the BDT to distinguish between event types.

Using an example cut of 0.5 to evaluate the performance of the classifier as a function of energy, we find that at the lowest energies almost everything is classified as a cascade and at the highest energies, the vast majority of events are classified correctly (see Fig. 15).

4 Performance

Finally, we demonstrate how the two reconstructions presented in this paper perform compared to each other for a common set of events. We also show the retro performance on a larger event sample, which includes events that do not pass the santa selection criteria.

To illustrate the algorithm’s performance, we use a neutrino-only MC set simulated with primary neutrino energies between 1 GeV and 10 TeV, following an \(E^{-2}\) spectrum, and containing about 8.1 million events passing a selection process similar to what is used in Ref. [15]. In all following figures (except those showing reconstruction time) the events are weighted by the HKKM flux [41], oscillation probabilities calculated with the NuFIT 2.2 [42] best-fit values, and interaction and detection probabilities close to what is expected from experimental data. The true parameter distributions of this sample (with selection and weights applied) are shown in Fig. 20 in the appendix.

About 3.2 million events fulfill the criteria to be reconstructed with santa. In the following plots that compare retro and santa results (see Figs. 16, 17, and 18a), only those events are used. The plots showing retro alone (see Figs. 18b, and 19) use the full event sample.

4.1 Comparison between reconstructions

Since santa only estimates the particle direction, we compare the azimuth and zenith resolutions of the two reconstructions. In addition, we also compare the time needed to perform a fit.

4.1.1 Direction angles

Angular resolutions for single-string (SS) and multi-string (MS) events. The results of retro (blue) and santa (orange) are shown together with the spefit (black) reconstruction. Since santa has no sensitivity to azimuth for single string events the respective line is not shown. The lines in the first two plots of this figure are the result of a kernel density estimation (KDE) to the actual distributions. IQR is the 50% interquantile range, i.e. the difference between the 0.25 and the 0.75 quantile. The last plot shows the median angle between true and reconstructed direction (\(\Delta \Psi _{true}^{reco}\)) vs. the true track energy. Single string lines are just shown up to 100 GeV, because there are very few single string events above these energy

Figure 16 shows the angular resolutions of retro and santa together with results from the simple online reconstruction spefit [4]. The events are split by single string and multi-string events. The criterion if an event is classified as single or multi-string is based on santa, i.e. the distributions are based on identical sets of events. For single string events santa shows no sensitivity to azimuth and therefore the respective line is not included. For both types of events, retro gives undoubtedly the best resolutions. The azimuth resolutions strongly benefit if more than one string sees light, since this is a measurement within the horizontal plane. For spefit, the interquantile range decreases by about a factor of 3 and for retro by nearly a factor of 4 when comparing the resolutions for single and multi-string events. Similar to azimuth, the zenith resolution improves if multiple strings see light. For both types of events, retro shows again the best performance, followed by santa. The last panel in Fig. 16 shows the median pointing error, i.e. the angle between the true and reconstructed directions, as a function of the true track energy (cascades all have track energy 0). The angular resolution improves as expected with higher track energies (longer tracks), and again a similar picture is painted with retro outperforming the others. The best resolutions ultimately converge to a median of around 0.03 radians (1.72\(^{\circ }\)) for multi-string events with a track energy of a few hundred GeV. This resolution is also reached by spefit. The slight performance gain for zero track energy can be attributed to high energy cascades being present.

Figure 17 shows that santa exhibits a bias towards up-going angles, while retro has virtually no bias and stays much closer to the ideal 1:1 line. The bends in the figure towards the poles result from the fact that values of the cosine are physically bounded between \(-1\) and 1.

4.1.2 Reconstruction time

Low energy MC samples in IceCube contain up to \({\mathcal {O}}(10^{8}\)) events that need to be reconstructed. Therefore, the time needed to reconstruct an event is another important property of the reconstruction performance. Figure 18 shows the CPU time spent per event relative to the true (total) deposited energy for santa and relative to the reconstructed track energy for retro.Footnote 5 In this example, santa is around 200 times faster than retro, and it would typically take around 5 kCPUh to reconstruct \(10^8\) events, while around 1000 kCPUh are required to do the same with retro.

Reconstructions CPU time to estimate the event parameters. The median together with the 50 and 90 percent interquantile range (IQR and IQ90) are plotted for different energies. The overall mean time is also shown as an indication of how long it takes to reconstruct a certain number of events for the event distribution used here

4.2 Full retro performance

In contrast to santa, retro estimates all eight parameters used to define a neutrino interaction in IceCube DeepCore. Figure 19 shows the retro resolutions for all parameters. The resolutions of the vertex and the total energy are compared versus the true (total) deposited energy. The angular resolutions are compared versus the true track energy and for track and cascade energy their respective truth is used on the x-axis. We use a containment cutFootnote 6 to remove all events that are reconstructed outside the DeepCore instrumented detector volume.

retro resolutions vs. true (total) deposited energy for vertex position and total energy. For the angular resolutions, the true track energy is used instead of the true deposited energy. In addition to azimuth and zenith resolutions also the angle between reconstructed and true direction (\(\Delta \Psi ^{reco}_{true}\)) is shown. The single cascade and track energy resolutions are also shown, but in contrast to the other parameters vs. their respective truth. The median together with the 50 and 90 percent interquantile range (IQR and IQ90) are used to quantify the resolutions. A containment cut is used to remove all events that are reconstructed outside of DeepCore

For our test sample the x and y vertex coordinates can be resolved at a precision of about 15 m, while the resolution in z is about 8 m. The deteriorating resolution at high energies can be explained by partially contained events, which are high energy events outside of DeepCore that appear to be lower energy events inside or at the edge of the detector. The angular resolutions are virtually unbiased (except for the zenith resolution for short tracks) and improve with higher track energy. The relative energy resolutions are almost constant over the energy range targeted for neutrino oscillations. The bias in the energy resolution bands at very low (< 10 GeV) and very high (> 100 GeV) energies can be attributed to the event selection. The event sample used here is optimized for atmospheric neutrino oscillation physics with a targeted energy between 5 and 300 GeV, meaning that the selection process is designed to pass events that appear to be in this range. Light propagation in IceCube is a stochastic process. Therefore, in some low energy events enough DOMs see light to pass the criteria. On the other side, some high energy events deposit less light than expected and also appear in the sample. These, respectively, over- and under-fluctuated events cause the bending of the energy resolution curves in the last row of Fig. 19.

5 Conclusions and outlook

This article describes the state of the art in low energy reconstruction techniques as used in IceCube DeepCore for neutrino events in the GeV energy range. The algorithms must offer a high enough computational speed to be applied to up to \({\mathcal {O}}(10^8)\) events, and must be able to deal with very sparse data since in a typical GeV scale event around 99.7% of all IceCube DOMs see no light.

The santa algorithm offers a better zenith angle reconstruction (and for multi-string events also a better azimuth reconstruction) than previous methods for events that pass a selection of unscattered photon hits. The reconstruction is in comparison fast, allowing for short turn-around times in the development of analyses and is currently used for verification purposes.

The retro algorithm is a full likelihood reconstruction with an eight dimensional event model and detector response. It offers superior resolutions in all reconstructed dimensions, and is applicable to any event. This significant gain in reconstruction accuracy, however, comes at the price of larger computational load – about two orders of magnitude slower than santa. retro is serving as the baseline reconstruction for current low energy oscillations and beyond the standard model analyses such as light sterile neutrinos, non-standard interactions or dark matter searches in IceCube DeepCore.

True parameter distributions of the full test event sample after the selection process weighted by the atmospheric flux and oscillation probability. The position and angular distributions contain all events, the energy distributions are split up in a cascades only distribution (upper right) showing only the cascade energy and a track only distribution (lower right) showing cascade and track energy

At the same time, reconstruction algorithms remain an actively studied topic in IceCube. Especially novel techniques from machine learning are being tested as fast approximations of the detector response, surrogate likelihoods, or applied directly in the context of regression of reconstruction quantities and classification (see for example Refs. [43,44,45,46]).

Data Availability Statement

This manuscript has no associated data or the data will not be deposited. [Authors’ comment: All data points plotted can be provided upon request.]

Notes

\(400\;\mathrm {nm}\) is near the wavelength of the highest acceptance of the optical modules [1].

More detailed and realistic cascade light emission modeling have been studied, but did not significantly improve reconstruction performance.

The reason for choosing the reconstructed track energy instead of the true total energy for retro is that the number of calls to the retro photon tables depends on the reconstructed track energy (cf. Eq. 11).

\(-500<z_{reco}<-200\) and \(\rho _{36, reco}<300\), where \(\rho _{36}\) is the horizontal distance to string 36.

References

M.G. Aartsen et al., The IceCube neutrino observatory: instrumentation and online systems. JINST 12(03), P03012 (2017). https://doi.org/10.1088/1748-0221/12/03/P03012

R. Abbasi et al., The design and performance of IceCube DeepCore. Astropart. Phys. 35(10), 615 (2012)

E. Andres et al., Observation of high-energy neutrinos using Cherenkov detectors embedded deep in Antarctic ice. Nature 410, 441 (2001). https://doi.org/10.1038/35068509

J. Ahrens et al., Muon track reconstruction and data selection techniques in AMANDA. Nucl. Instrum. Methods A 524, 169 (2004). https://doi.org/10.1016/j.nima.2004.01.065

M.G. Aartsen et al., Energy reconstruction methods in the IceCube Neutrino Telescope. JINST 9, P03009 (2014). https://doi.org/10.1088/1748-0221/9/03/P03009

R. Abbasi, Y. Abdou, M. Ackermann, J. Adams, J. Aguilar, M. Ahlers, D. Altmann, K. Andeen, J. Auffenberg, X. Bai et al., An improved method for measuring muon energy using the truncated mean of de/dx. Nucl. Instum. Methods A 703, 190–198 (2013)

M.G. Aartsen et al., Improvement in fast particle track reconstruction with robust statistics. Nucl. Instrum. Methods A 736, 143 (2014). https://doi.org/10.1016/j.nima.2013.10.074

R. Abbasi et al., A convolutional neural network based cascade reconstruction for the IceCube Neutrino Observatory. JINST 16, P07041 (2021). https://doi.org/10.1088/1748-0221/16/07/P07041

R. Abbasi et al., A muon-track reconstruction exploiting stochastic losses for large-scale Cherenkov detectors. JINST 16(08), P08034 (2021). https://doi.org/10.1088/1748-0221/16/08/P08034

J.A. Aguilar et al., A fast algorithm for muon track reconstruction and its application to the ANTARES neutrino telescope. Astropart. Phys. 34, 652 (2011). https://doi.org/10.1016/j.astropartphys.2011.01.003

M.G. Aartsen et al., Determining neutrino oscillation parameters from atmospheric muon neutrino disappearance with three years of IceCube DeepCore data. Phys. Rev. D 91(7), 072004 (2015). https://doi.org/10.1103/PhysRevD.91.072004

M. Leuermann, Testing the neutrino mass ordering with icecube deepcore. Ph.D. thesis, RWTH Aachen University (2018). https://doi.org/10.18154/RWTH-2018-231554. https://www.rwth-aachen.de/global/show_document.asp?id=aaaaaaaaabebgkc

M.G. Aartsen et al., Measurement of atmospheric neutrino oscillations at 6–56 GeV with IceCube DeepCore. Phys. Rev. Lett. 120(7), 071801 (2018). https://doi.org/10.1103/PhysRevLett.120.071801

M.G. Aartsen et al., Measurement of atmospheric tau neutrino appearance with IceCube DeepCore. Phys. Rev. D 99(3), 032007 (2019). https://doi.org/10.1103/PhysRevD.99.032007

M.G. Aartsen et al., Development of an analysis to probe the neutrino mass ordering with atmospheric neutrinos using three years of IceCube DeepCore data. Eur. Phys. J. C 80(1), 9 (2020). https://doi.org/10.1140/epjc/s10052-019-7555-0

R. Abbasi et al., All-flavor constraints on nonstandard neutrino interactions and generalized matter potential with three years of IceCube DeepCore data. Phys. Rev. D 104(7), 072006 (2021). https://doi.org/10.1103/PhysRevD.104.072006

D. Chirkin, Evidence of optical anisotropy of the South Pole ice, in 33rd international cosmic ray conference (2013)

M.G. Aartsen et al., Multimessenger observations of a flaring blazar coincident with high-energy neutrino IceCube-170922A. Science 361(6398), eaat1378 (2018). https://doi.org/10.1126/science.aat1378

S.W. Li, J.F. Beacom, Spallation backgrounds in super-Kamiokande are made in muon-induced showers. Phys. Rev. D 91(10), 105005 (2015). https://doi.org/10.1103/PhysRevD.91.105005

P. Zyla, et al., Review of particle physics. PTEP 2020(8), 083C01 (2020). https://doi.org/10.1093/ptep/ptaa104

R. Abbasi et al., The IceCube data acquisition system: signal capture, digitization, and timestamping. Nucl. Instrum. Methods A 601, 294 (2009). https://doi.org/10.1016/j.nima.2009.01.001

M.G. Aartsen et al., In-situ calibration of the single-photoelectron charge response of the IceCube photomultiplier tubes. JINST 15(06), 06 (2020). https://doi.org/10.1088/1748-0221/15/06/P06032

J.P.Y. Garza, Measurement of neutrino oscillations in atmospheric neutrinos with the IceCube DeepCore detector. Ph.D. thesis, Humboldt-Universität zu Berlin, Mathematisch-Naturwissenschaftliche Fakultät I (2014). https://doi.org/10.18452/17016

P. Price, K. Woschnagg, Role of group and phase velocity in high-energy neutrino observatories. Astropart. Phys. 15(1), 97 (2001). https://doi.org/10.1016/S0927-6505(00)00142-0

M. Ackermann et al., Optical properties of deep glacial ice at the South Pole. J. Geophys. Res. 111(D13), D13203 (2006). https://doi.org/10.1029/2005JD006687

R.J. Barlow, Extended maximum likelihood. Nucl. Instrum. Methods A 297, 496 (1990). https://doi.org/10.1016/0168-9002(90)91334-8

D. Chirkin, Photon tracking with GPUs in IceCube. Nucl. Instrum. Methods A 725, 141 (2013). https://doi.org/10.1016/j.nima.2012.11.170

D. Chirkin, Event reconstruction in IceCube based on direct event re-simulation, in 33rd international cosmic ray conference (2013)

M.G. Aartsen et al., Measurement of South Pole ice transparency with the IceCube LED calibration system. Nucl. Instrum. Methods A 711, 73 (2013). https://doi.org/10.1016/j.nima.2013.01.054

D. Chirkin, W. Rhode, Muon Monte Carlo: a high-precision tool for muon propagation through matter (2004)

J. Lundberg, P. Miocinovic, T. Burgess, J. Adams, S. Hundertmark, P. Desiati, K. Woschnagg, P. Niessen, Light tracking for glaciers and oceans: scattering and absorption in heterogeneous media with photonics. Nucl. Instrum. Methods A 581, 619 (2007). https://doi.org/10.1016/j.nima.2007.07.143

J. MacQueen, et al., in Proceedings of the fifth Berkeley symposium on mathematical statistics and probability, vol. 1 (Oakland, CA, USA, 1967), vol. 1, pp. 281–297

S. Lloyd, Least squares quantization in pcm. IEEE Trans. Inf. Theory 28(2), 129 (1982)

P. Kaelo, M.M. Ali, Some variants of the controlled random search algorithm for global optimization. J. Optim. Theory Appl. 130(2), 253 (2006). https://doi.org/10.1007/s10957-006-9101-0

I.M. Sobol, Distribution of points in a cube and approximate evaluation of integrals. Zh. Vych. Mat. Mat. Fiz. 7, 784 (1967)

M. Kowalski, Search for neutrino-induced cascades with the AMANDA-II detector. Ph.D. thesis. Humboldt-Universität zu Berlin, Mathematisch-Naturwissenschaftliche Fakultät I (2004). https://doi.org/10.18452/15003

M.G. Dunkman, Measurement of atmospheric muon neutrino disappearance with icecube-deepcore. Ph.D. thesis. Pennsylvania State University (2015). https://etda.libraries.psu.edu/catalog/27226

T. Gabriel, D. Groom, P. Job, N. Mokhov, G. Stevenson, Energy dependence of hadronic activity. Nucl. Instrum. Methods A 338(2), 336 (1994). https://doi.org/10.1016/0168-9002(94)91317-X

D.E. Groom, N.V. Mokhov, S.I. Striganov, Muon stopping power and range tables 10 MeV–100 TeV. At. Data Nucl. Data Tables 78(2), 183 (2001). https://doi.org/10.1006/adnd.2001.0861

T. Chen, C. Guestrin, XGBoost: a scalable tree boosting system, in Proceedings of the 22nd ACM SIGKDD international conference on knowledge discovery and data mining (2016). https://doi.org/10.1145/2939672.2939785

M. Honda, M. Sajjad Athar, T. Kajita, K. Kasahara, S. Midorikawa, Atmospheric neutrino flux calculation using the NRLMSISE-00 atmospheric model. Phys. Rev. D 92(2), 023004 (2015). https://doi.org/10.1103/PhysRevD.92.023004

M.C. Gonzalez-Garcia, M. Maltoni, T. Schwetz, Updated fit to three neutrino mixing: status of leptonic CP violation. JHEP 11, 052 (2014). https://doi.org/10.1007/JHEP11(2014)052

R. Abbasi, et al., Combining maximum-likelihood with deep learning for event reconstruction in IceCube. PoS ICRC2021, 1065 (2021). https://doi.org/10.22323/1.395.1065

R. Abbasi, et al., Reconstructing neutrino energy using CNNs for GeV scale IceCube events. PoS ICRC2021, 1053 (2021). https://doi.org/10.22323/1.395.1053

R. Abbasi et al., Reconstruction of neutrino events in IceCube using graph neural networks. PoS ICRC2021, 1044 (2021). https://doi.org/10.22323/1.395.1044

R. Abbasi et al., Direction reconstruction using a CNN for GeV-scale neutrinos in IceCube. PoS ICRC2021, 1054 (2021). https://doi.org/10.22323/1.395.1054

Acknowledgements

USA – U.S. National Science Foundation-Office of Polar Programs, U.S. National Science Foundation-Physics Division, U.S. National Science Foundation-EPSCoR, Wisconsin Alumni Research Foundation, Center for High Throughput Computing (CHTC) at the University of Wisconsin–Madison, Open Science Grid (OSG), Extreme Science and Engineering Discovery Environment (XSEDE), Frontera computing project at the Texas Advanced Computing Center, U.S. Department of Energy-National Energy Research Scientific Computing Center, Particle astrophysics research computing center at the University of Maryland, Institute for Cyber-Enabled Research at Michigan State University, and Astroparticle physics computational facility at Marquette University; Belgium – Funds for Scientific Research (FRS-FNRS and FWO), FWO Odysseus and Big Science programmes, and Belgian Federal Science Policy Office (Belspo); Germany – Bundesministerium für Bildung und Forschung (BMBF), Deutsche Forschungsgemeinschaft (DFG), Helmholtz Alliance for Astroparticle Physics (HAP), Initiative and Networking Fund of the Helmholtz Association, Deutsches Elektronen Synchrotron (DESY), and High Performance Computing cluster of the RWTH Aachen; Sweden – Swedish Research Council, Swedish Polar Research Secretariat, Swedish National Infrastructure for Computing (SNIC), and Knut and Alice Wallenberg Foundation; Australia – Australian Research Council; Canada – Natural Sciences and Engineering Research Council of Canada, Calcul Québec, Compute Ontario, Canada Foundation for Innovation, WestGrid, and Compute Canada; Denmark – Villum Fonden and Carlsberg Foundation; New Zealand – Marsden Fund; Japan – Japan Society for Promotion of Science (JSPS) and Institute for Global Prominent Research (IGPR) of Chiba University; Korea – National Research Foundation of Korea (NRF); Switzerland – Swiss National Science Foundation (SNSF); United Kingdom – Department of Physics, University of Oxford.

Author information

Authors and Affiliations

Consortia

Corresponding author

Appendix A: Spherical CRS optimizer

Appendix A: Spherical CRS optimizer

The controlled random search with local mutation (crs2) algorithm described in [34] is a derivative free, global optimization strategy. We extend the existing crs2 algorithm into our custom variantFootnote 7 that is suited to correctly treat angles (azimuth and zenith).

The crs2 algorithm, which is a simplex based optimization adapted for multi-modal target functions, uses internally geometric point reflections and centroid calculations. In the standard implementation those operations are performed assuming Euclidean geometry, however this is not the case for angles. We therefore execute these calculations for the neutrino’s zenith and azimuth values on the sphere, resulting in a different (correct) behavior. The adapted algorithm is independent of the choice of the coordinates and has better convergence than the original implementation. The customization lies in the calculation of centroids and point reflection for the dimensions on the sphere:

Centroid calculation In order to calculate the centroid of n points given their spherical coordinates \((\vartheta _1, \varphi _1)\), \((\vartheta _2, \varphi _2)\), \(\dots (\vartheta _n, \varphi _n)\), we resort to their representation in Cartesian coordinates and calculate the centroid (element-wise average) in x, y and z, normalize to assure \(x^2 + y^2 + z^2 = 1\), and subsequently transform back to spherical coordinates to obtain the centroid in zenith and azimuth.

Also for the operations in the crs2 algorithm where midpoints of two points have to be determined, we use the same procedure, i.e. the centroid of two points.

Point reflection Calculating geometric point reflections, as used in crs2, is slightly more involved. To reflect a point p around the centroid \((\vartheta _c, \varphi _c)\) into a new point \({\overline{p}}\), we first transform the coordinates so that the centroid comes to lie at the North Pole of the unit sphere. This is achieved by a rotation \(R_z^T(\phi _c)\) about the z-axis such that the centroid point will be on the \(x-z\) plane. Then we rotate around the y axis by a rotation \(R_y^T(\vartheta _c)\), which brings the centroid to the desired location. In these coordinates, reflections about the centroid (now at the North Pole), are simply taking the negative values of the x and y dimensions of a point in its Cartesian representation. After this, we transform back into the original representation by inverse rotation in the correct order. The full operation is shown in Eq. A.1 for a point p in its Cartesian representation.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

Funded by SCOAP3. SCOAP3 supports the goals of the International Year of Basic Sciences for Sustainable Development.

About this article

Cite this article

Abbasi, R., Ackermann, M., Adams, J. et al. Low energy event reconstruction in IceCube DeepCore. Eur. Phys. J. C 82, 807 (2022). https://doi.org/10.1140/epjc/s10052-022-10721-2

Received:

Accepted:

Published:

DOI: https://doi.org/10.1140/epjc/s10052-022-10721-2