Abstract

We present an updated determination of nuclear parton distributions (nPDFs) from a global NLO QCD analysis of hard processes in fixed-target lepton-nucleus and proton-nucleus together with collider proton-nucleus experiments. In addition to neutral- and charged-current deep-inelastic and Drell–Yan measurements on nuclear targets, we consider the information provided by the production of electroweak gauge bosons, isolated photons, jet pairs, and charmed mesons in proton-lead collisions at the LHC across centre-of-mass energies of 5.02 TeV (Run I) and 8.16 TeV (Run II). For the first time in a global nPDF analysis, the constraints from these various processes are accounted for both in the nuclear PDFs and in the free-proton PDF baseline. The extensive dataset underlying the nNNPDF3.0 determination, combined with its model-independent parametrisation, reveals strong evidence for nuclear-induced modifications of the partonic structure of heavy nuclei, specifically for the small-x shadowing of gluons and sea quarks, as well as the large-x anti-shadowing of gluons. As a representative phenomenological application, we provide predictions for ultra-high-energy neutrino-nucleon cross-sections, relevant for data interpretation at neutrino observatories. Our results provide key input for ongoing and future experimental programs, from that of heavy-ion collisions in controlled collider environments to the study of high-energy astrophysical processes.


1 Introduction

The parton distribution functions (PDFs) of nuclei, known as nuclear PDFs (nPDFs) [1,2,3], are essential to a variety of experimental programs that collide nuclei (or nuclei with protons) at high energies [4]. At the Large Hadron Collider (LHC), nPDFs are required as a theoretical input to the heavy ion program that aims to disentangle cold from hot nuclear matter effects, making the detailed characterisation of the latter possible. Ongoing and upcoming heavy ion runs include high-luminosity proton–lead (pPb) and lead–lead (PbPb) collisions [5], dedicated proton–oxygen (pO) and oxygen–oxygen (OO) runs [6], and a fixed-target mode [7], where energetic proton beams collide with a nuclear gas target filling the detectors. A reliable determination of nPDFs is also critical in various astrophysical processes, for instance to make theoretical predictions of signal [8,9,10,11] and background [12,13,14] events in neutrino–nucleus scattering as measured at high-energy neutrino observatories such as IceCube [15] and KM3NeT [16]. In the longer term, nPDFs will be probed at the Electron Ion Collider (EIC) [17,18,19], by means of GeV-scale lepton scattering on light and heavy nuclei, and further tested at the proposed Forward Physics Facility (FPF) [20], by means of TeV-scale neutrino scattering on heavy nuclear targets.

In addition to their phenomenological role in the modelling of high-energy collisions involving nuclei, nPDFs are also a means towards an improved understanding of Quantum Chromodynamics (QCD) in the low-energy non-perturbative regime. Indeed, a global analysis of nPDFs provides a determination of the behaviour of nuclear modifications which are flavour, atomic mass number A, and x dependent. This information is critical to isolate the source of the different physical mechanisms that are responsible for the modification of nPDFs in comparison to their free-nucleon counterparts, such as (anti-)shadowing, the EMC effect, and Fermi motion. A detailed understanding of these effects is also necessary to provide a robust baseline in the search for more exotic forms of QCD matter, such as the gluon-dominated Color Glass Condensate [21], which is predicted to have an enhanced formation in heavy nuclei.

Knowledge of nPDFs also enters, albeit indirectly, the precise determination of free-proton PDFs. These are typically determined from global analyses that include, among others, measurements of neutrino–nucleus structure functions. These measurements uniquely constrain quark flavour separation at intermediate and large x. Uncertainties due to nPDFs may inflate the overall free-proton PDF uncertainty by up to a factor of two at large x when properly taken into account [22,23,24]. More precise nPDFs can therefore lead to more precise free-proton PDFs.

Taking into account these considerations, and building upon previous studies by some of us [25, 26], here we present an updated determination of nuclear PDFs from an extensive global dataset: nNNPDF3.0. This determination benefits from the inclusion of new data and improvements in the overall fitting methodology. Concerning experimental data, a broad range of measurements from pPb collisions at the LHC are considered. Notably, CMS dijet [27] and LHCb \(D^0\)-meson [28] production data are included in both the proton baseline and the nPDF determination, revealing gluon PDF shadowing at small x. We also explicitly remove all deuterium data (\(A= 2\)) from the proton baseline fit and include it in the nPDF fit. Concerning the methodology, we follow the NNPDF3.1 approach [29] (but with an extended dataset) for the proton baseline fit, while we supplement the nNNPDF2.0 methodology [26] for the nuclear fit with a number of technical improvements in our machine learning framework, in particular with automated hyperparameter optimisation [30].

The combination of these various developments leads to substantially improved nPDFs for all partons in a wide range of x values. In particular, we obtain strong evidence of nuclear shadowing for both the gluon and quarks in the region of small-x and large-A values, as well as of gluon anti-shadowing at large x and large A. As customary, the nNNPDF3.0 parton sets are made publicly available for all phenomenologically relevant values of A via the LHAPDF [31] interface.

The outline of this paper is the following. In Sect. 2 we provide details of the experimental measurements and theoretical computations used as input for the nNNPDF3.0 determination. The updates in fitting methodology are described in Sect. 3. Section 4 presents the main results of this work, namely the nNNPDF3.0 parton sets, their comparison with other determinations (EPPS16 [32] and nCTEQ15WZSIH [33]), and an assessment of the dependence of the extracted nuclear modifications with respect to flavour, A, and x. The stability of the nNNPDF3.0 fit is studied in Sect. 5, where variants based on different kinematic cuts, proton PDF baseline sets, and theoretical settings are presented. As a representative phenomenological application of nNNPDF3.0, in Sect. 6 we provide predictions for the ultra-high-energy neutrino-nucleon cross-section for several A values as relevant for (next-generation) large-volume neutrino detectors. We indicate which nPDF sets are made available via LHAPDF, summarise our findings, provide usage prescriptions for the nNNPDF3.0 sets, and discuss some avenues for possible future investigations in Sect. 7.

Four appendices complete the paper. Appendix A summarises the notation used through the paper; Appendix B clarifies the kinematic transformation required to analyse pPb measurements in different reference frames; Appendix C collects several data/theory comparisons for measurements included and not included in nNNPDF3.0; and Appendix D presents a validation of the reweighting method used to assess the impact of LHCb D-meson measurements.

2 Experimental data and theoretical calculations

In this section we discuss the experimental data and theoretical calculations that form the basis of the nNNPDF3.0 analysis. We present an overview of the nNNPDF3.0 dataset and general theoretical settings, and then focus on the new measurements that are added in comparison to nNNPDF2.0. For each of these, we describe their implementation and the calculation of the corresponding theoretical predictions.

2.1 Dataset overview

The nNNPDF3.0 dataset includes all the measurements that were part of nNNPDF2.0. These are the neutral-current (NC) isoscalar nuclear fixed-target deep-inelastic scattering (DIS) structure function ratios measured, for a range of nuclei, by the NMC [34,35,36,37], EMC [38,39,40,41], SLAC [42], BCDMS [43, 44] and FNAL [45, 46] experiments; the charged-current (CC) neutrino-nucleus DIS cross-sections measured, respectively on Pb and Fe targets, by the CHORUS [47] and NuTeV [48] experiments; and cross-sections, differential in rapidity, for inclusive gauge boson production in pPb collisions measured by the ATLAS [49] and CMS [50,51,52] experiments at the LHC. These datasets are discussed in [26], to which we refer the reader for further details.

In addition, two different groups of new measurements are incorporated into the nNNPDF3.0 dataset. The first group consists of DIS and fixed-target Drell–Yan (DY) measurements that involve deuterium targets, specifically the NMC [53] deuteron to proton DIS structure functions; the SLAC [54] and BCDMS [55] deuteron structure functions; and the E866 [56] fixed-target DY deuteron to proton cross-section ratio. This group also includes the fixed-target DY measurement performed on Cu by the E605 experiment [57]. In nNNPDF2.0 these datasets entered the determination of the free proton PDF used as baseline in the nuclear PDF fit. As will be discussed in Sect. 3, in nNNPDF3.0 we no longer include them in the proton PDF baseline but rather choose to include them directly in the nuclear fit. This approach is conceptually more consistent, as it completely removes any residual nuclear effects from the proton baseline. Information loss on quark flavour separation for proton PDFs due to the removal of these datasets is partially compensated by the availability of additional measurements from proton–proton (pp) collisions [24], specifically concerning new weak gauge boson production data.

The second group of new measurements entering nNNPDF3.0 consists of LHC pPb data. Specifically, we consider forward and backward rapidity fiducial cross-sections for the production of \(W^\pm \) bosons measured by ALICE [58] at \(\sqrt{s}=5.02\) TeV in the centre-of-mass (CoM) frame; forward and backward rapidity fiducial cross-sections for the production of Z bosons measured by ALICE and LHCb at 5.02 TeV [58, 59] and 8.16 TeV [60]; the differential cross-section for the production of Z bosons measured by CMS at 8.16 TeV [61]; the ratio of pPb to pp differential cross-sections for dijet production measured by CMS at 5.02 TeV [27]; the ratio of pPb to pp differential cross-sections for prompt photon production measured by ATLAS at 8.16 TeV [62]; and the ratio of pPb to pp differential cross-sections for prompt \(D^0\) production measured at forward rapidities by LHCb at 5.02 TeV [28]. The impact of the last measurement on nNNPDF3.0 is evaluated by means of Bayesian reweighting [63, 64], while that of all of the others by means of a fit, see Sect. 3. All these measurements are discussed in detail in Sect. 2.3 below.

The main features of the new datasets entering nNNPDF3.0 (in comparison to nNNPDF2.0) are summarised in Table 1. For each process we indicate the name of the datasets used throughout the paper, the corresponding reference, the number of data points after/before kinematic cuts (described below), the nuclear species involved, and the code used to compute theoretical predictions. The upper (lower) part of the table lists the datasets in the first (second) group discussed above.

The kinematic coverage in the \((x,Q^2)\) plane of the complete nNNPDF3.0 dataset is displayed in Fig. 1. For hadronic data, kinematic variables are determined using leading order (LO) kinematics. Whenever an observable is integrated over rapidity, the centre of the rapidity range is used to compute the values of x. Data points are classified by process. Data points that are new in nNNPDF3.0 (in comparison to nNNPDF2.0) are marked with a grey edge.
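As an illustration of the LO kinematics mentioned above, the momentum fractions probed in a 2→1 process at scale Q, rapidity y and CoM energy \(\sqrt{s}\) can be estimated as \(x_{1,2}=(Q/\sqrt{s})\,e^{\pm y}\). The following is a minimal sketch only: the function name, the 2→1 form and the example inputs are assumptions made here, and it is not necessarily the exact mapping used to produce Fig. 1.

```python
import math

def lo_momentum_fractions(q_gev, sqrt_s_gev, rapidity):
    """Return (x1, x2) for LO 2->1 kinematics: x_{1,2} = (Q/sqrt(s)) * exp(+-y).

    Illustrative assumption: the final state of scale Q carries rapidity y in
    the nucleon-nucleon CoM frame, with positive y along the direction of the
    hadron that carries x1.
    """
    sqrt_tau = q_gev / sqrt_s_gev
    x1 = sqrt_tau * math.exp(rapidity)
    x2 = sqrt_tau * math.exp(-rapidity)
    return x1, x2

# Hypothetical example: a scale of 2 GeV at y = 4 and sqrt(s) = 5.02 TeV
x1, x2 = lo_momentum_fractions(2.0, 5020.0, 4.0)
print(f"x1 = {x1:.3e}, x2 = {x2:.3e}")
```

For these hypothetical forward, low-scale inputs the smaller momentum fraction falls just below \(10^{-5}\), which is the kind of small-x reach associated with forward heavy-flavour production.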

As customary, kinematic cuts are applied to the DIS structure function measurements to remove data points that may be affected by large non-perturbative or higher-twist corrections, namely we require \(Q^2\ge 3.5\) \(\hbox {GeV}^2\) for the virtuality and \(W^2\ge 12.5\) \(\hbox {GeV}^2\) for the final-state invariant mass. Cuts are also applied to the FNAL E605 measurement to remove data points close to the production threshold that may be affected by large perturbative corrections. Namely we require \(\tau \le 0.08\) and \(|y/y_{\mathrm{max}}|\le 0.663\), where \(\tau =m^2/s\) and \(y_{\mathrm{max}}=-\frac{1}{2}\ln \tau \), with m and y the dilepton invariant mass and rapidity and \(\sqrt{s}\) the CoM energy of the collision. These cuts were determined in [65] and are also adopted in NNPDF4.0 [24]. Data points excluded by kinematic cuts are displayed in grey in Fig. 1.
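The cuts listed above can be expressed as simple predicates. The sketch below is illustrative: the function names, the nucleon mass value, the standard relation \(W^2=m_N^2+Q^2(1-x)/x\) and the example beam energy are assumptions introduced here, not taken from the analysis code.

```python
import math

M_NUCLEON = 0.938  # GeV, approximate nucleon mass (assumed value)

def passes_dis_cuts(x, q2, q2_min=3.5, w2_min=12.5):
    """DIS cuts: Q^2 >= 3.5 GeV^2 and W^2 >= 12.5 GeV^2.

    W^2 = m_N^2 + Q^2 (1 - x) / x is the usual final-state invariant mass
    squared (convention assumed here).
    """
    w2 = M_NUCLEON**2 + q2 * (1.0 - x) / x
    return q2 >= q2_min and w2 >= w2_min

def passes_e605_cuts(m, y, sqrt_s, tau_max=0.08, y_ratio_max=0.663):
    """Fixed-target DY cuts: tau <= 0.08 and |y / y_max| <= 0.663."""
    tau = m**2 / sqrt_s**2
    y_max = -0.5 * math.log(tau)
    return tau <= tau_max and abs(y / y_max) <= y_ratio_max

# Illustrative points (sqrt(s) = 38.8 GeV is an assumed example value)
print(passes_dis_cuts(x=0.1, q2=10.0))            # inside both DIS cuts
print(passes_dis_cuts(x=0.7, q2=20.0))            # fails the W^2 cut
print(passes_e605_cuts(m=8.0, y=0.2, sqrt_s=38.8))
print(passes_e605_cuts(m=12.0, y=0.2, sqrt_s=38.8))  # fails the tau cut
```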

The total number of data points considered after applying these kinematic cuts is \(n_{\mathrm{dat}}=2188\); in comparison, the nNNPDF2.0 analysis contained \(n_{\mathrm{dat}}=1467\) points. Of the new data points, 210 correspond to LHC measurements and the remainder to fixed-target data. The kinematic coverage of the nNNPDF3.0 dataset is significantly expanded in comparison to nNNPDF2.0, in particular at small x, where the LHCb \(D^0\)-meson data cover values down to \(x\simeq 10^{-5}\), and at high Q, where the ATLAS photon and CMS dijet data reach values close to \(Q\simeq 500\) GeV.

Fig. 1: The kinematic coverage in the \((x,Q^2)\) plane of the nNNPDF3.0 dataset. The evaluation of x and \(Q^2\) for the hadronic processes assumes LO kinematics. Data points are classified by process. Data points new in nNNPDF3.0 in comparison to nNNPDF2.0 are marked with a grey edge. Data points excluded by kinematic cuts are filled grey.

2.2 General theory settings

The settings of the theoretical calculations adopted to describe the nNNPDF3.0 dataset follow those of the previous nNNPDF2.0 analysis [26].

Theoretical predictions are computed to next-to-leading order (NLO) accuracy in the strong coupling \(\alpha _s(Q)\). The strong coupling and (nuclear) PDFs are defined in the \(\overline{\mathrm{MS}}\) scheme, whereas heavy-quark masses are defined in the on-shell scheme. The FONLL general-mass variable flavour number scheme [66] with \(n_{f}^{\mathrm{max}} = 5\) (where \(n_{f}^{\mathrm{max}}\) is the maximum number of active flavours) is used to evaluate DIS structure functions. For proton–nucleus collisions, instead, the zero-mass variable flavour number scheme is applied; the only exception is prompt D-meson production, which is discussed in Sect. 2.3.4. The charm- and bottom-quark PDFs are evaluated perturbatively by applying massive quark matching conditions. In the fit, the following input values are used: \(m_c=1.51\) GeV, \(m_b=4.92\) GeV, and \(\alpha _s(M_Z)=0.118\), respectively for the charm and bottom quark masses, and for the strong coupling at a scale equal to the Z-boson mass \(M_Z\).

Predictions are made at LO in the electromagnetic coupling, with the following input values for the on-shell gauge boson masses (widths): \(M_W=80.398\) GeV (\(\Gamma _W=2.141\) GeV) and \(M_Z=91.1876\) GeV (\(\Gamma _Z=2.4952\) GeV). The \(G_\mu \) scheme is used, with a value of the Fermi constant \(G_F=1.1663787\times 10^{-5}\) \(\hbox {GeV}^{-2}\).
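To make the \(G_\mu \) scheme concrete, the sketch below derives the weak mixing angle and the effective electromagnetic coupling from the input values quoted above, using the standard tree-level relations \(\sin ^2\theta _W = 1 - M_W^2/M_Z^2\) and \(\alpha _{G_\mu } = \sqrt{2}\,G_F M_W^2 \sin ^2\theta _W/\pi \). That the calculation uses exactly these relations is an assumption here; the variable names are illustrative.

```python
import math

# Input values quoted in the text
M_W = 80.398          # GeV
M_Z = 91.1876         # GeV
G_F = 1.1663787e-5    # GeV^-2

# Tree-level G_mu-scheme relations (standard; assumed to apply here)
sin2_theta_w = 1.0 - (M_W / M_Z) ** 2
alpha_gmu = math.sqrt(2.0) * G_F * M_W**2 * sin2_theta_w / math.pi

print(f"sin^2(theta_W) = {sin2_theta_w:.5f}")
print(f"1/alpha_Gmu    = {1.0 / alpha_gmu:.2f}")
```

With these inputs the effective coupling is close to 1/132, somewhat larger than the low-energy value of about 1/137.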

The fitting procedure relies on the pre-computation of fast-interpolation grids for both lepton–nucleus and proton–nucleus collisions. The FK table format, provided by APFELgrid [67], is used for all fitted data. The format combines PDF and \(\alpha _s\) evolution factors, computed with APFEL [68], with interpolated weight tables, whose generation is process specific. For each of the new LHC datasets included in nNNPDF3.0, this is detailed in the following. For the datasets already part of nNNPDF2.0, the set-up was detailed in [26].

2.3 New LHC measurements and corresponding theory settings

The new LHC measurements included in nNNPDF3.0 are discussed in the following: inclusive electroweak boson, prompt photon, dijet, and prompt \(D^0\)-meson production. For inclusive electroweak boson production we consider data for differential distributions obtained in pPb collisions. For all other processes, differential distributions measured in pPb collisions are always normalised to the corresponding distributions in pp collisions, measured at the same CoM energy. These ratios take the schematic form

where X represents an arbitrary differential variable. The same form applies to more (e.g. double) differential quantities. The general rationale for applying this approach is that the LO predictions for prompt photon, dijet, and prompt D-meson production are \({\mathcal {O}}(\alpha _s)\). As a consequence, the theoretical predictions for the absolute rates of these processes (at NLO QCD accuracy) are subject to uncertainties due to missing higher order effects which are typically in excess of the uncertainty related to nPDFs. At the level of the ratio, uncertainties related to the overall normalisation of the distributions (e.g. the value of the coupling) cancel, while sensitivity to the nuclear modification of nPDFs is retained. In Sect. 4 it will be shown for prompt D-meson production (which has the largest relative theory uncertainties of the considered processes) that such observables ensure that nPDF uncertainties dominate over those due to scale uncertainties. Notably, a shortcoming of this approach is that it becomes necessary to exclude the reference pp data from the proton baseline which enters the nPDF fit. The extension of the perturbative accuracy of the fit to NNLO QCD and/or including theoretical uncertainties (as in [69, 70]) would allow one to consider absolute distributions instead of ratios for these selected processes. As a note, these ratios are constructed in the pp CoM frame. For asymmetric collisions such as pPb, they do not coincide with ratios constructed in the laboratory frame. The role of this asymmetry for the interpretation of pPb observables is reviewed in Appendix B.
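For orientation, a schematic pPb-to-pp ratio of the kind described in this subsection can be written as below. The symbol names are assumptions chosen for illustration, and possible per-nucleon normalisation factors (such as 1/A) are deliberately omitted. The second line makes the cancellation argument explicit: a common multiplicative factor K affecting both distributions drops out of the ratio.

```latex
R^{\mathcal{O}}_{\mathrm{pPb}}(X) \;=\;
\frac{\mathrm{d}\sigma^{\mathcal{O}}_{\mathrm{pPb}}/\mathrm{d}X}
     {\mathrm{d}\sigma^{\mathcal{O}}_{\mathrm{pp}}/\mathrm{d}X},
\qquad
\frac{K\,\mathrm{d}\sigma^{\mathcal{O}}_{\mathrm{pPb}}/\mathrm{d}X}
     {K\,\mathrm{d}\sigma^{\mathcal{O}}_{\mathrm{pp}}/\mathrm{d}X}
\;=\; R^{\mathcal{O}}_{\mathrm{pPb}}(X).
```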

2.3.1 Inclusive electroweak boson production

The new datasets that we consider in this category are the following. First we include the ALICE measurements of the fiducial cross-section for W- and Z-boson production at \(\sqrt{s}=5.02\) TeV in the muonic decay channel [58]. The data points cover the backward (Pb–going) and forward (p–going) rapidity regions, with integrated luminosities of 5.81 and 5.03 \(\hbox {nb}^{-1}\), respectively. Then we also include the related LHCb [60] and ALICE [59] measurements of the fiducial cross-section for Z-boson production at \(\sqrt{s}=5.02\) TeV and \(\sqrt{s}=8.16\) TeV, respectively. Backward and forward rapidity measurements are considered in both cases. The integrated luminosities are, for ALICE, 8.40 and 12.7 \(\hbox {nb}^{-1}\) in the Pb–going and in the p–going directions; and for LHCb, 1.6 \(\hbox {nb}^{-1}\). Finally we include the CMS measurement of Z-boson production in the dimuon decay channel at \(\sqrt{s}=8.16\) TeV [61]. In this latter case, differential cross-sections are presented with respect to the rapidity and the invariant mass of the dimuon system, after being corrected for acceptance effects. The integrated luminosity is 173 \(\hbox {nb}^{-1}\).

As was already the case for nNNPDF2.0, theoretical predictions for electroweak gauge boson production are computed at NLO QCD accuracy with MCFM v6.8 [71,72,73]. The renormalisation and factorisation scales are set equal to the mass of the gauge boson, for total cross-sections, and to the central value of the corresponding invariant mass bin, in the case of the CMS differential cross-sections [61]. Note that the choice of the scale is partly restricted by the grid generation procedure – i.e. a fully dynamical event-by-event scale choice such as the invariant mass of the muon pair is not accessible. Experimental correlations are taken into account whenever available, namely for the measurements of [58, 59, 61], otherwise statistical and systematic uncertainties are added in quadrature.

2.3.2 Prompt photon production

In this category nNNPDF3.0 includes the ATLAS measurement at \(\sqrt{s}=8.16\) TeV [62]. Cross-sections for isolated prompt photon production are presented in three pseudo-rapidity \(\eta _{\gamma }\) bins and then differentially in the photon transverse energy \(E_T^\gamma \). As discussed above, we only consider the ratio of the differential pPb cross-section normalised with respect to the reference pp results in the fit. Notably, for prompt photon production this means that uncertainties due to the treatment of photon fragmentation and the choice of the value of the electromagnetic coupling are not important. For completeness, in Sect. 4 we also indicate how the fit quality is deteriorated when the absolute cross-section is considered (without accounting for theory uncertainties). The ranges of the three photon pseudo-rapidity bins in the CoM frame are \(-2.83<\eta _{\gamma }<-2.02\), \(-1.84<\eta _{\gamma }<0.91\) and \(1.09<\eta _{\gamma }<1.90\); the kinematic coverage in the photon transverse energy is, for each of these, \(20<E_T^\gamma <550\) GeV. The integrated luminosity of this measurement is 165 \(\hbox {nb}^{-1}\).

Theoretical predictions are computed at NLO QCD accuracy with MCFM (v6.8) following the calculational settings presented in [74]. The renormalisation and factorisation scales are set equal to the central value of the photon transverse energy \(E_T^\gamma \) for each bin. Experimental correlations between transverse momentum and rapidity bins are taken into account following the prescription provided in [62].

2.3.3 Dijet production

In this category we consider the CMS measurement at \(\sqrt{s}=5.02\) TeV [27]. The cross-section is presented double differentially in the dijet average transverse momentum \(p_{T,\mathrm{dijet}}^{\mathrm{ave}}\), where \(p_{T,\mathrm{dijet}}^{\mathrm{ave}} = \left( p_{T,1} + p_{T,2}\right) /2\), and the dijet pseudo-rapidity \(\eta _{\mathrm{dijet}}\). As in the case of prompt photon production, we consider only data for the ratio of cross-sections obtained in pPb with respect to that from pp collisions. Again, for completeness, in Sect. 4 we also show how the fit quality deteriorates (without accounting for theory uncertainties) when the absolute cross-section is considered. The kinematic coverage in pseudo-rapidity and average transverse momentum is, in the CoM frame, \(-2.456<\eta <2.535\) and \(55~\mathrm{GeV}<p_{\mathrm{T,dijet}}^{\mathrm{avg}}<400\) GeV. The integrated luminosity is 35 (27) \(\hbox {nb}^{-1}\) for the Pb–going (p–going) direction.

Theoretical predictions are computed at NLO QCD accuracy with NLOjet++ [75]. We have verified that the independent computation of [76] is reproduced. The renormalisation and factorisation scales are set equal to the dijet invariant mass \(m_{jj}\), as in the dedicated pp study of [77]. Since experimental correlations for the pPb to pp cross-section ratio are not provided, statistical and systematic uncertainties are added in quadrature.
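When no correlation information is available, adding statistical and systematic uncertainties in quadrature amounts to using a diagonal covariance matrix. The sketch below illustrates this; the function names and the toy inputs are hypothetical.

```python
import math

def total_uncorrelated_uncertainties(stat, sys):
    """Combine per-bin statistical and systematic uncertainties in quadrature."""
    if len(stat) != len(sys):
        raise ValueError("stat and sys must have the same length")
    return [math.hypot(s, t) for s, t in zip(stat, sys)]

def chi2_uncorrelated(data, theory, stat, sys):
    """chi^2 with a diagonal covariance matrix, cov_ii = stat_i^2 + sys_i^2."""
    sigma = total_uncorrelated_uncertainties(stat, sys)
    return sum(((d - t) / s) ** 2 for d, t, s in zip(data, theory, sigma))

# Toy example with two bins of a cross-section ratio
print(chi2_uncorrelated([1.02, 0.95], [1.00, 1.00], [0.03, 0.02], [0.04, 0.03]))
```

Treating correlated systematics as uncorrelated in this way generally changes the \(\chi ^2\) relative to a fit with the full covariance matrix, which is why correlations are used whenever they are provided.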

2.3.4 Prompt D-meson production

In this category we consider the LHCb measurements of prompt \(D^0\)-meson production in pPb collisions at \(\sqrt{s}=5.02\) TeV [28]. The data are available in both the forward and backward configurations, and are presented as absolute cross-sections differential in the transverse momentum \(p^{D^0}_{\mathrm{T}}\) and rapidity \(y^{D^0}\) of the \(D^0\)-mesons, namely

Following the discussion around Eq. (2.1), we only consider data for cross-section ratios (which are obtained with respect to reference pp data [78]). The double differential ratio for forward pPb measurements is

where both pPb and pp cross-sections are given in the pp CoM frame (and \(y^{D^0}\) is the \(D^0\)-meson rapidity in that frame). The corresponding observable can be constructed for backward Pbp collisions, and is denoted as \(R_{\mathrm{Pbp}}\).

An additional ratio \(R_{\mathrm{fb}}\) constructed from forward over backward cross-sections (defined in the pp CoM frame) can also be considered:

This ratio benefits from a cancellation of a number of experimental systematics, and, as motivated in [79], provides sensitivity to nuclear modifications while the theoretical uncertainty from all other sources is reduced to a sub-leading level.

The LHCb measurements cover the rapidity range of \(2.0< y^{D^0} < 4.5\) for pp collisions, and the effective range of \(1.5< y^{D^0} < 4.0\) in the forward pPb collisions. The data on the \(R_{\mathrm{pPb}}\) ratio Eq. (2.3) is presented in four rapidity bins in the region \(2.0< y^{D^0} < 4.0\), such that the coverage of the forward pPb and the baseline pp measurements overlap. For each of these bins in \(y^{D^0}\), the coverage in the \(D^0\)-meson transverse momentum is \(0< p^{D^0}_{\mathrm{T}} < 10\) GeV, adding up to a total of 37 data points. In contrast to pp measurements, for which different D meson species are detected, in the pPb case only \(D^0\) mesons are reconstructed. Because bin-by-bin correlations for systematic uncertainties are not available for \(R_{\mathrm{pPb}}\), we consider them as fully uncorrelated and add them in quadrature with the statistical uncertainties.
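The difference between the pp rapidity range and the effective forward pPb range quoted above can be understood from the boost of the nucleon-nucleon CoM frame relative to the laboratory frame. The sketch below is a simplified illustration: it assumes beams of equal magnetic rigidity, neglects masses, and takes proton beam energies of 4 TeV and 6.5 TeV as inputs; function names are hypothetical.

```python
import math

Z_PB, A_PB = 82, 208  # charge and mass number of lead

def rapidity_shift(z=Z_PB, a=A_PB):
    """Rapidity of the nucleon-nucleon CoM frame in the lab frame.

    With equal magnetic rigidity the energy per nucleon of the ion beam is
    (Z/A) times the proton energy, hence delta_y = 0.5 * ln(A/Z), directed
    along the proton beam.
    """
    return 0.5 * math.log(a / z)

def sqrt_s_nn(e_proton_tev, z=Z_PB, a=A_PB):
    """Nucleon-nucleon CoM energy in TeV, neglecting masses."""
    return 2.0 * e_proton_tev * math.sqrt(z / a)

dy = rapidity_shift()
print(f"delta_y = {dy:.3f}")
# Proton beam energies of 4 TeV and 6.5 TeV are assumed inputs.
print(f"sqrt(s_NN) = {sqrt_s_nn(4.0):.2f} TeV and {sqrt_s_nn(6.5):.2f} TeV")
# Lab acceptance 2.0 < y < 4.5 mapped to the CoM frame (forward configuration)
print(f"{2.0 - dy:.2f} < y* < {4.5 - dy:.2f}")
```

Under these assumptions the shift is slightly below half a unit of rapidity, which maps a laboratory acceptance of \(2.0<y<4.5\) onto approximately \(1.5<y<4.0\) in the CoM frame.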

Theoretical predictions for D-meson production in hadron-hadron collisions are computed at NLO QCD accuracy in a fixed-flavour number scheme with POWHEG [80,81,82] matched to Pythia8 [83]. The Monash 2013 Tune [84] is used throughout. Our computational set-up has been compared with that used in the calculations of charm production in pp collisions from [85], finding good agreement. Furthermore, theoretical predictions for \(R_{\mathrm{pPb}}\) and \(R_{\mathrm{Pbp}}\) have been benchmarked against the corresponding calculations used in the EPPS analysis [86] (where the same set-up was considered). It is relevant to mention that a comparison of how different theoretical approaches to describing \(D^0\)-meson production in pPb collisions impact the extraction of nPDFs has been carried out in [86]. This study demonstrated (see Fig. 15 of [86]) that, for suitably defined observables such as \(R_{\mathrm{fb}}(y^{D^0}, p^{D^0}_{\mathrm{T}})\), consistent results are obtained between our chosen set-up (POWHEG+Pythia8) and those in a general-mass variable flavour number scheme [87].

The above discussion summarises how the various nuclear cross-section ratios for \(D^0\)-meson production are accounted for. However, it is also necessary to constrain the overall normalisation of the nPDFs, and not just the size of the nuclear correction – i.e. the normalisation of the free nucleon PDFs must also be known. To achieve this, we include the constraints from D-meson production in pp collisions at 7 TeV [88] and 13 TeV [89] into the proton PDF baseline which is used as a boundary condition for nNNPDF3.0. This is done following the analysis of [85], which considers LHCb D-meson data at the level of normalised differential cross-sections according to

in terms of a reference rapidity bin \(y_{\mathrm{ref}}^D\). The main advantage of normalised observables such as Eq. (2.5) is that scale uncertainties cancel to good approximation (since these depend mildly on rapidity) while some sensitivity to the PDFs is retained (as the rate of the change of the PDFs in x is correlated with \(y^D\)). This has been motivated in [90] and subsequently in [12, 85]. As in [85] we consider pp measurements for the \(\{D^0, D^+, D_s^+\}\) final states, adding up to a total of 79 and 126 data points for \(N^{\mathrm{pp}}_7\) and \(N^{\mathrm{pp}}_{13}\) respectively. To avoid double counting, the available data on \(N^{\mathrm{pp}}_5\) is not included in the proton baseline, given that it will enter the nuclear PDF analysis through Eq. (2.3).
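The normalisation to a reference rapidity bin can be sketched as follows. This is an illustration of the idea only: the function name and data layout are hypothetical, and dropping the reference bin from the output is a design choice of this sketch.

```python
def normalise_to_reference(xsec, ref_index):
    """Normalise d^2sigma/dy/dpT to a reference rapidity bin, pT bin by pT bin.

    xsec[i][j] is the cross-section in rapidity bin i and pT bin j.
    Returns N[i][j] = xsec[i][j] / xsec[ref_index][j]; the reference bin itself
    (identically 1) is dropped, since it carries no information.
    """
    ref = xsec[ref_index]
    return [
        [value / ref_value for value, ref_value in zip(row, ref)]
        for i, row in enumerate(xsec)
        if i != ref_index
    ]

# Toy cross-sections: 3 rapidity bins x 2 pT bins
toy = [[10.0, 4.0], [8.0, 2.0], [5.0, 1.0]]
print(normalise_to_reference(toy, ref_index=1))
```

Any factor that multiplies all rapidity bins equally within a given \(p_{\mathrm{T}}\) bin cancels in this construction, which is the mechanism behind the reduced scale dependence described above.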

Finally, we note that, in contrast to all other scattering processes considered in this work, there is currently no public interface for the computation of fast interpolation tables for prompt D-meson production in pp or pPb collisions. This complicates the inclusion of this data in the nNNPDF3.0 global analysis, which is realised by a multi-stage Bayesian reweighting procedure as detailed in Sect. 3.4 and summarised in Fig. 4.
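A single reweighting step can be sketched as below, using the weight formula and the Shannon-entropy estimate of the effective number of replicas that are standard in the NNPDF reweighting literature. This is a minimal illustration, not the multi-stage procedure of Sect. 3.4; function names are hypothetical, and each replica's \(\chi ^2\) to the new data is assumed to be strictly positive.

```python
import math

def reweighting_weights(chi2, n_dat):
    """Weights w_k proportional to (chi2_k)^((n_dat-1)/2) * exp(-chi2_k/2).

    chi2[k] is the unnormalised chi^2 of replica k to the n_dat new points.
    Weights are normalised so that they sum to the number of replicas.
    """
    log_w = [0.5 * (n_dat - 1) * math.log(c) - 0.5 * c for c in chi2]
    shift = max(log_w)  # subtract the maximum for numerical stability
    w = [math.exp(lw - shift) for lw in log_w]
    norm = len(w) / sum(w)
    return [wk * norm for wk in w]

def effective_replicas(weights):
    """Shannon-entropy estimate N_eff = exp((1/N) * sum_k w_k * ln(N / w_k))."""
    n = len(weights)
    return math.exp(sum(w * math.log(n / w) for w in weights if w > 0.0) / n)

def reweighted_mean(values, weights):
    """Weighted replica average of an observable."""
    return sum(w * v for w, v in zip(weights, values)) / len(values)

# Toy example: three prior replicas confronted with 37 new data points
weights = reweighting_weights([30.0, 40.0, 200.0], n_dat=37)
print(weights, effective_replicas(weights))
```

A small effective number of replicas relative to the prior signals that the new data are very constraining, in which case a larger prior ensemble or a dedicated fit is preferable.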

3 Analysis methodology

In this section we describe the fitting methodology that is adopted in nNNPDF3.0. We start with an overview of the methodological aspects shared with nNNPDF2.0. We then discuss the improvements in the free-proton baseline PDF used as the \(A=1\) boundary condition for the nuclear fit, and compare this baseline to the one used in nNNPDF2.0. We proceed by describing hyperoptimisation, the procedure adopted to automatically select the optimal set of model hyperparameters such as the neural network architecture and the minimiser learning rates. Finally we outline the strategy, based on Bayesian reweighting, used in order to include the LHCb measurements of D-meson production in a consistent manner both in the free-proton baseline PDFs and in the nPDFs.

3.1 Methodology overview

The fitting methodology adopted in nNNPDF3.0 closely follows the one used in nNNPDF2.0. Here we summarise the methodological aspects common to the two determinations. Additional methodological improvements will be discussed in Sects. 3.2 and 3.3.

3.1.1 Parametrisation

As in nNNPDF2.0, we parametrise six independent nPDF combinations in the evolution basis. These are:

where \(f^{(p/A)}(x,Q_0)\), with \(f=\Sigma ,T_3,T_8,V,V_3,g\), denotes the nPDF of a proton bound in a nucleus with atomic mass number A, see Appendix A for the conventions adopted. The parametrisation scale is \(Q_0=1\) GeV, as in the free-proton baseline PDF set. The normalisation coefficients \(B_V\), \(B_{V_3}\) and \(B_g\) enforce the momentum and valence sum rules, while the preprocessing exponents \(\alpha _f\) and \(\beta _f\) are required to control the small- and large-x behaviour of the nPDFs, see Sect. 3.1.2. In Eq. (3.1), \(\mathrm{NN}_f(x,A)\) represents the value of the neuron in the output layer of the neural network associated with each independent nPDF. The input layer contains three neurons that take as input the values of the momentum fraction x, \(\ln (1/x)\), and the atomic mass number A, respectively. The rest of the neural network architecture is determined through the hyperoptimisation procedure described in Sect. 3.3. This is in contrast to nNNPDF2.0, where the optimal numbers of hidden layers and neurons were determined by trial and error. The corresponding distributions for bound neutrons are obtained from those of bound protons assuming isospin symmetry. Under the isospin transformation, all quark and gluon combinations in Eq. (3.1) are left invariant, except for:

While the nNNPDF3.0 fits presented in this work are carried out in the basis specified by Eq. (3.1), any other basis obtained as a linear combination of Eq. (3.1) could be used. In principle, the choice of any basis should lead to comparable nPDFs, within statistical fluctuations, as demonstrated explicitly in the proton case [24]. In the following, we will display the nNNPDF3.0 results in the flavour basis, given by

see Sect. 3.1 of [24] for the explicit relationship with the evolution basis of Eq. (3.1).
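For reference, a functional form consistent with the description of Eq. (3.1) in this subsection is sketched below. It is inferred from the surrounding text (one output neuron per distribution, preprocessing exponents \(\alpha _f\) and \(\beta _f\), and normalisation coefficients only for \(V\), \(V_3\) and \(g\)) and should be read as an assumption rather than a verbatim reproduction. The isospin relations in the last line additionally assume that \(T_3\) and \(V_3\) are the combinations that are odd under the exchange of up and down quarks.

```latex
\begin{aligned}
x\Sigma^{(p/A)}(x,Q_0) &= x^{\alpha_\Sigma}(1-x)^{\beta_\Sigma}\,\mathrm{NN}_\Sigma(x,A),\\
xT_3^{(p/A)}(x,Q_0) &= x^{\alpha_{T_3}}(1-x)^{\beta_{T_3}}\,\mathrm{NN}_{T_3}(x,A),\\
xT_8^{(p/A)}(x,Q_0) &= x^{\alpha_{T_8}}(1-x)^{\beta_{T_8}}\,\mathrm{NN}_{T_8}(x,A),\\
xV^{(p/A)}(x,Q_0) &= B_V\,x^{\alpha_V}(1-x)^{\beta_V}\,\mathrm{NN}_V(x,A),\\
xV_3^{(p/A)}(x,Q_0) &= B_{V_3}\,x^{\alpha_{V_3}}(1-x)^{\beta_{V_3}}\,\mathrm{NN}_{V_3}(x,A),\\
xg^{(p/A)}(x,Q_0) &= B_g\,x^{\alpha_g}(1-x)^{\beta_g}\,\mathrm{NN}_g(x,A),\\[4pt]
T_3^{(n/A)}(x,Q_0) &= -\,T_3^{(p/A)}(x,Q_0),\qquad
V_3^{(n/A)}(x,Q_0) = -\,V_3^{(p/A)}(x,Q_0).
\end{aligned}
```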

3.1.2 Sum rules and preprocessing

Momentum and valence sum rules are enforced by requiring that the normalisation coefficients \(B_g\), \(B_V\), and \(B_{V_3}\) in Eq. (3.1) take the values:

These coefficients must be determined for each value of A; perturbative evolution ensures that, once enforced at the initial parametrisation scale \(Q_0\), momentum and valence sum rules are not violated for any \(Q > Q_0\).
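The determination of the normalisation coefficients can be illustrated numerically. The sketch below assumes the standard sum rules, \(\int _0^1 dx\, x(\Sigma +g)=1\), \(\int _0^1 dx\, V=3\) and \(\int _0^1 dx\, V_3=1\), and uses toy input shapes; the function names, the integration scheme and the lower cut-off in x are choices made here for illustration.

```python
import math

def integrate_log(func, x_min=1e-9, n=20000):
    """Midpoint rule for the integral of func(x) over [x_min, 1] in t = ln(x)."""
    t_min = math.log(x_min)
    h = -t_min / n
    total = 0.0
    for i in range(n):
        x = math.exp(t_min + (i + 0.5) * h)
        total += func(x) * x  # dx = x dt
    return total * h

def sum_rule_coefficients(x_sigma, x_g, x_v, x_v3):
    """Normalisations from the momentum and valence sum rules.

    Arguments are callables returning the *unnormalised* x*f(x) at Q0:
      B_g  = (1 - int x*Sigma) / int x*g
      B_V  = 3 / int V,   B_V3 = 1 / int V_3   (with V = (x*V)/x)
    """
    b_g = (1.0 - integrate_log(x_sigma)) / integrate_log(x_g)
    b_v = 3.0 / integrate_log(lambda x: x_v(x) / x)
    b_v3 = 1.0 / integrate_log(lambda x: x_v3(x) / x)
    return b_g, b_v, b_v3

# Toy shapes x^alpha (1-x)^beta standing in for the network output at fixed A
b_g, b_v, b_v3 = sum_rule_coefficients(
    lambda x: x**0.2 * (1 - x) ** 3,
    lambda x: x**0.1 * (1 - x) ** 5,
    lambda x: x**0.6 * (1 - x) ** 3,
    lambda x: x**0.7 * (1 - x) ** 3,
)
print(b_g, b_v, b_v3)
```

In an actual fit these integrals would be re-evaluated for every value of A and every time the network parameters change, since the unnormalised shapes depend on both.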

The preprocessing exponents \(\alpha _f\) and \(\beta _f\) in Eq. (3.1) facilitate the training process and are fitted simultaneously with the network parameters. The exponents \(\alpha _V\) and \(\alpha _{V_3}\) are restricted to lie in the range [0, 5] during the fit to ensure integrability of the valence distributions. The other \(\alpha _f\) exponents are restricted to the range \([-1,5]\), consistently with the momentum sum rule requirements, while the exponents \(\beta _f\) lie in the range [1, 10]. While we do not explicitly impose integrability of \(xT_3\) and \(xT_8\), consistently with the NNPDF3.1-like proton baseline, the fitted nPDFs turn out to satisfy these integrability constraints anyway. It is worth pointing out that a strategy to avoid preprocessing within the NNPDF methodology has been recently presented in [91]. This strategy, which leads to consistent results in the case of free-proton PDFs, may be applied to nPDFs in the future.

3.1.3 The figure of merit

The best-fit values of the parameters defining the nPDF parametrisation in Eq. (3.1) are determined by minimising a suitable figure of merit. As in nNNPDF2.0, this figure of merit is an extended version of the \(\chi ^2\), defined as

The first term, \(\chi _{t_0}^2\), is the contribution from experimental data

This is derived by maximising the likelihood of observing the data \(D_i\) given a set of theory predictions \(T_i\). The covariance matrix is constructed according to the \(t_0\) prescription [92], see Eq. (9) in [93] (see footnote 1).

The second term, \(\kappa _{\mathrm{pos}}^2\), ensures the positivity of physical cross-sections and is defined as

where l runs over the \(n_{\mathrm{pos}}\) positivity observables \({\mathcal {F}}^{(l)}\) (defined in Table 3.1 of [26]), each of which contains \(n_{\mathrm{dat}}^{(l)}\) kinematic points that are computed for all \(n_A\) nuclei for which there are experimental data in the fit. The Lagrange multiplier is fixed by trial and error to \(\lambda _{\mathrm{pos}} = 1000\).
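A penalty of this kind can be sketched as follows. The sketch assumes that the term takes the common form \(\lambda _{\mathrm{pos}}\sum \max (0,-{\mathcal {F}})\), summed over observables, nuclei and kinematic points, so that only negative predictions contribute. The observable names and numerical values are hypothetical.

```python
def positivity_penalty(observables, lambda_pos=1000.0):
    """Return lambda_pos times the sum of max(0, -F) over all predictions.

    `observables` maps an observable name to a dict {A: [F at each kinematic point]}.
    Positive predictions contribute nothing; negative ones are penalised linearly.
    """
    penalty = 0.0
    for per_nucleus in observables.values():
        for values in per_nucleus.values():
            penalty += sum(max(0.0, -f) for f in values)
    return lambda_pos * penalty

# Hypothetical predictions for two positivity observables and two nuclei.
toy = {
    "F2_u": {56: [0.12, 0.03, 0.001], 208: [0.10, -0.002, 0.004]},
    "DY_ud": {56: [0.5, 0.2], 208: [0.4, -0.001]},
}
print(positivity_penalty(toy))
```

With a large multiplier, even slightly negative cross-sections dominate the figure of merit, which is what drives the minimiser back towards physical solutions.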

The third term, \(\kappa _{\mathrm{BC}}^2\), ensures that, when taking the \(A \rightarrow 1\) limit, the nNNPDF3.0 predictions reduce to those of a fixed free-proton baseline in terms of both central values and uncertainties. The choice of this baseline set differs from nNNPDF2.0 and is further discussed in Sect. 3.2. The \(\kappa _{\mathrm{BC}}^2\) term is defined as

where f runs over the six independent nPDFs in the evolution basis, and j runs over \(n_x\) points in x as discussed in Sect. 3.2. The value of the Lagrange multiplier is fixed to \(\lambda _{\mathrm{BC}}=100\). In Eq. (3.8), for each replica in the nNNPDF3.0 ensemble, we use a different random replica from the free-proton baseline set. This way the free-proton PDF uncertainty is propagated into the nPDF fit via the \(A=1\) boundary condition.
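The boundary-condition term can be illustrated in the same spirit. The sketch below assumes a quadratic penalty, \(\lambda _{\mathrm{BC}}\sum _f\sum _j\big (f^{(p/A)}(x_j,Q_0,A=1)-f^{(p)}(x_j,Q_0)\big )^2\), and pairs each nPDF replica with a randomly drawn free-proton replica, as described above. The data structures and function names are hypothetical.

```python
import random

FLAVOURS = ["Sigma", "T3", "T8", "V", "V3", "g"]

def boundary_penalty(npdf_at_a1, proton_replica, lambda_bc=100.0):
    """lambda_bc times the squared difference summed over flavours and x points."""
    total = 0.0
    for f in FLAVOURS:
        for q_nuc, q_prot in zip(npdf_at_a1[f], proton_replica[f]):
            total += (q_nuc - q_prot) ** 2
    return lambda_bc * total

def pick_baseline_replica(baseline_replicas, rng):
    """Draw the free-proton replica paired with one nPDF replica."""
    return rng.choice(baseline_replicas)

# Toy baseline ensemble: 10 replicas, 5 points in x per flavour (made-up numbers).
rng = random.Random(1)
baseline = [
    {f: [rng.uniform(0.1, 1.0) for _ in range(5)] for f in FLAVOURS}
    for _ in range(10)
]
target = pick_baseline_replica(baseline, rng)

# An nPDF replica that matches its target exactly at A=1 incurs no penalty.
exact = {f: list(values) for f, values in target.items()}
# Shifting a single gluon point by 0.1 is penalised quadratically.
shifted = {f: list(values) for f, values in target.items()}
shifted["g"][0] += 0.1
print(boundary_penalty(exact, target), boundary_penalty(shifted, target))
```

Because each nPDF replica sees a different baseline replica, the spread of the baseline ensemble is inherited by the nPDF ensemble at \(A=1\).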

In summary, in the present analysis the best-fit nPDFs are determined by maximising the agreement between the theory predictions and the corresponding Monte Carlo replica of the experimental data, subject to the physical constraints of cross-section positivity and the \(A=1\) boundary condition, together with methodological requirements such as cross-validation to prevent overlearning.

3.2 The free-proton boundary condition

The neural network parametrisation of the nPDFs described by Eq. (3.1) is valid from \(A=1\) (free proton) up to \(A=208\) (lead). This implies that the nNNPDF3.0 determination also contains a determination of the free-proton PDFs (as \(A\rightarrow 1\)). However, as discussed above, the PDFs in this \(A=1\) limit are fixed by means of the Lagrange multiplier defined in Eq. (3.8). The central values and uncertainties of nNNPDF3.0 for \(A=1\) hence reproduce those of some external free-proton baseline PDFs, which effectively act as a boundary condition to the nPDFs. This is a unique feature of the nNNPDF methodology. We note that moderate deviations from this boundary condition may appear as a consequence of the positivity constraints enforced through Eq. (3.7). Distortions may also arise when nPDF replicas are reweighted with new data, as we will discuss in Sect. 3.4.

In nNNPDF3.0, the free-proton baseline PDFs are chosen to be a variant of the NNPDF3.1 NLO parton set [29] where the charm-quark PDF is evaluated perturbatively by applying massive quark matching conditions. This baseline differs from that used in nNNPDF2.0 in the following respects.

- The free-proton baseline PDFs include all the datasets incorporated in the more recent NNPDF4.0 NLO analysis [24], except those involving nuclear targets (these are instead part of nNNPDF3.0), see in particular Tables 2.1–2.5 and Appendix B in [24]. The extended NNPDF4.0 dataset provides improved constraints on the free-proton baseline PDFs in a wide range of x for all quarks and the gluon. Note that, while our free-proton baseline PDFs are close to NNPDF4.0 insofar as the dataset is concerned, they are based on the NNPDF3.1 fitting methodology. The reason is that the NNPDF4.0 methodology incorporates several modifications (see Sect. 3 in [24] for a discussion) that are not part of the nNNPDF3.0 methodology, among them a strict requirement of PDF positivity. Those modifications do not alter the compatibility between PDF determinations obtained with the NNPDF3.1 or NNPDF4.0 methodologies; however, they lead to generally smaller uncertainties in the latter case, see Sect. 8 in [24]. One could therefore expect a reduction of nPDF uncertainties for low-A nuclei, and results consistent with those presented in the following, if the proton-only fit determined with the NNPDF4.0 methodology were used as a boundary condition.

- When implementing the free-proton boundary condition via Eq. (3.8), in nNNPDF3.0 we use a grid with \(n_x=100\) points, half of which are distributed logarithmically between \(x_{\mathrm{min}}=10^{-6}\) and \(x_{\mathrm{mid}}=0.1\) and the remaining half are linearly distributed between \(x_{\mathrm{mid}}=0.1\) and \(x_{\mathrm{max}}=0.7\). This is different from nNNPDF2.0, where the grid had 60 points, 10 of which were logarithmically spaced between \(x_{\mathrm{min}}=10^{-3}\) and \(x_{\mathrm{mid}}=0.1\) and the remaining 50 were linearly spaced between \(x_{\mathrm{mid}}=0.1\) and \(x_{\mathrm{max}}=0.7\). This extension in the x range is necessary to account for the kinematic coverage of the LHCb D-meson production measurements in pp and pPb collisions, see also Fig. 1.
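The two grids can be generated with a few lines of code. This is a minimal sketch with a hypothetical helper `boundary_grid`; the text does not specify how the point at \(x_{\mathrm{mid}}\) is shared between the two halves, so here it is assumed to belong to the linear half only.

```python
import math

def boundary_grid(n_log=50, n_lin=50, x_min=1e-6, x_mid=0.1, x_max=0.7):
    """Grid in x: log-spaced below x_mid, linearly spaced from x_mid to x_max."""
    # Logarithmic half: n_log points from x_min up to, but excluding, x_mid.
    log_step = (math.log10(x_mid) - math.log10(x_min)) / n_log
    log_part = [10 ** (math.log10(x_min) + i * log_step) for i in range(n_log)]
    # Linear half: n_lin points from x_mid to x_max, both included.
    lin_step = (x_max - x_mid) / (n_lin - 1)
    lin_part = [x_mid + i * lin_step for i in range(n_lin)]
    return log_part + lin_part

grid_30 = boundary_grid()                              # nNNPDF3.0-like settings
grid_20 = boundary_grid(n_log=10, n_lin=50, x_min=1e-3)  # nNNPDF2.0-like settings
print(len(grid_30), len(grid_20))
```

The extra three decades at small x in the first grid are what allow the boundary condition to be enforced in the region probed by the LHCb D-meson data.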

Furthermore, we produce two variants of these free-proton baseline PDFs, respectively with and without the LHCb D-meson production data in pp collisions at 7 and 13 TeV, see Sect. 2.3.4. The two variants are used consistently with variants of the nPDF fit in which LHCb \(D^0\)-meson production data in pPb collisions are included or not. These two variants are compared (and normalised) to the free-proton baseline PDFs used in nNNPDF2.0 in Fig. 2. We show the PDFs at \(Q=10\) GeV in the same extended range of x for which the boundary condition Eq. (3.8) is enforced, that is between \(x=10^{-6}\) and \(x=0.7\).

Comparison between the free-proton baseline PDFs used in nNNPDF2.0 and in nNNPDF3.0, without and with the LHCb D-meson data. These PDFs are used to enforce the \(A=1\) boundary condition in the corresponding nPDF fits by means of Eq. (3.8). Results are normalised to the nNNPDF2.0 free-proton baseline PDFs and are shown at \(Q=10\) GeV in the extended range of x for which the boundary condition is enforced

Differences between the three free-proton baseline PDFs displayed in Fig. 2 arise from differences in the fitted dataset: the nNNPDF3.0 baselines do not include nuclear data that were instead part of the nNNPDF2.0 baseline; and conversely the nNNPDF3.0 baselines benefit from the extended NNPDF4.0 dataset, which was not part of the nNNPDF2.0 baseline. The effects of these differences are: an increase of the up and down quark and anti-quark central values in the region  ; a slight increase of the PDF uncertainties in the same region, in particular for the down quark and antiquark PDFs and for the total strangeness; and a suppression of the gluon central value for  followed by an enhancement at larger values of x, accompanied by an uncertainty reduction in the same region.

The datasets responsible for each of the effects observed in the nNNPDF3.0 baseline PDFs have been identified in the NNPDF4.0 analysis [24] and in related studies [22, 23, 77, 94]. The enhancement of the central values of the up quark and antiquark PDFs in the region  is a consequence of the new measurements of inclusive and associated DY production from ATLAS, CMS, and LHCb. The increase of uncertainties for the down quark and antiquark PDFs and for the total strangeness is due to the removal of the deuteron DIS and DY cross-sections. This piece of information is however not lost, since it is subsequently included in the nuclear fit. The variation of the central value and the reduction of the uncertainty of the gluon PDF are a consequence of the new ATLAS and CMS dijet cross-sections at 7 TeV.


On the other hand, a comparison between the two nNNPDF3.0 free-proton baseline PDFs, with and without the pp 7 and 13 TeV LHCb D-meson production data, reveals that central values remain mostly unchanged. This indicates that the data are described reasonably well even when they are not included in the fit. Uncertainties are unaffected for  , while they are reduced at smaller values of x, in particular for the sea quark and gluon PDFs.


It should be finally noted that several datasets and processes constrain both the free-proton baseline PDFs and the nPDFs to a similar degree of precision. There is therefore some interplay between the two. The free-proton baseline PDFs will directly constrain the low-A nPDFs, and indirectly the nPDFs corresponding to higher values of A.

Graphical representation of a hyperparameter scan for representative hyperparameters produced with 1000 trial searches using the TPE algorithm. The values of the hyperparameter are represented on the x-axis while the hyperopt loss function is on the y-axis. In addition to the outcome of individual trials we display a reconstruction of the probability distribution by means of the KDE method. The red dots indicate the values of the hyperparameters used in the nNNPDF2.0 analysis

3.3 Hyperparameter optimisation

A common challenge in training neural-network based models is the choice of the hyperparameters of the model itself. These include, for instance, the architecture and activation functions of the neural network, the optimisation algorithm and learning rates. The choice of hyperparameters affects the performance of the model and of its training. Hyperparameters can be tuned by trial and error; however, this is computationally inefficient and may leave relevant regions of the hyperparameter space unexplored. A heuristic approach designed to address this problem more effectively is a hyperparameter scan, or hyperparameter optimisation (hyperoptimisation for short). It consists in finding the best combination of hyperparameters through an iterative search of the hyperparameter space following a specific optimisation algorithm. In the context of NNPDF fits, this approach has been proposed in [30] and has been used in recent free-proton PDF fits [24].

Hyperoptimisation is realised as follows. First a figure of merit (also known as loss function) to minimise and a search domain in the hyperparameter space are defined. Here we define the loss function as the average of the training and validation \(\chi ^2\),

see [24] for alternative choices. We carry out the hyperparameter scan for various subsets of Monte Carlo data replicas and check that the results converge to a unique combination of hyperparameters. During the initialisation process, the loss function is evaluated for a few random sets of hyperparameters. Based on the results from these searches, the optimisation algorithm constructs models in which the hyperparameters that reduce the loss function are selected. The models are then updated during the trials based on historical observations and subsequently define new sets of hyperparameters to test. In this analysis, we implement the tree-structured Parzen Estimator (TPE), which builds on kernel density estimation (KDE) [95, 96], as the optimisation algorithm for hyperparameter tuning. The TPE selects the most promising sets of hyperparameters at which to evaluate the loss function by constructing a probabilistic model based on previous trials, and has been shown to significantly outperform random and grid searches.
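The structure of such a scan can be sketched in a few lines. The example below implements the loss as the average of the training and validation \(\chi ^2\), and then, for simplicity, explores the search domain with a plain random search rather than with the TPE algorithm used in this analysis. The search domain, the function `toy_fit` and all numerical values are hypothetical placeholders for an actual fit.

```python
import random

def hyperopt_loss(chi2_train, chi2_valid):
    """Average of the training and validation chi2."""
    return 0.5 * (chi2_train + chi2_valid)

# Hypothetical search domain, for illustration only.
SEARCH_SPACE = {
    "n_hidden_nodes": [10, 25, 50],
    "activation": ["sigmoid", "tanh", "relu"],
    "learning_rate": [1e-4, 1e-3, 1e-2],
}

def toy_fit(params, rng):
    """Stand-in for a real fit: returns made-up (chi2_train, chi2_valid)."""
    base = 1.0 + abs(params["n_hidden_nodes"] - 25) / 100.0
    return base + rng.uniform(0.0, 0.05), base + rng.uniform(0.05, 0.10)

def random_search(n_trials, seed=0):
    """Random search over SEARCH_SPACE (a simple substitute for TPE)."""
    rng = random.Random(seed)
    trials = []
    for _ in range(n_trials):
        params = {name: rng.choice(options) for name, options in SEARCH_SPACE.items()}
        loss = hyperopt_loss(*toy_fit(params, rng))
        trials.append((loss, params))
    best = min(trials, key=lambda trial: trial[0])
    return best, trials

best, history = random_search(50)
print(best)
```

A TPE-based scan would replace the uniform draws in `random_search` with proposals from a density model built on the trial history, while the loss and the bookkeeping would remain the same.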

Figure 3 is a graphical representation of a hyperparameter scan obtained with 1000 trial searches using the TPE algorithm. We show the results for the architecture, weight initialisation method, activation function, and learning rates of the optimiser. The values of the hyperparameters are represented on the x-axis while the loss function, Eq. (3.9), is reported on the y-axis. In addition to the outcome of individual trials, we also display a reconstruction of the probability distribution by means of the KDE method. The red dots indicate the values of the best hyperparameters used in nNNPDF2.0, which were determined by means of a trial and error selection procedure.

Parameters that exhibit denser tails in the KDE distributions of Fig. 3 are considered better choices as they yield more stable trainings. For instance, one observes that a network with one single hidden layer and 25 nodes significantly outperforms a network with two hidden layers. Indeed, the chosen architecture leads not only to the smallest value of the loss function but also to a more stable behaviour. Similar patterns are observed for the activation function and learning rate. On the other hand, no clear preference is seen for the initialisation of the weights. Although the Glorot Uniform initialisation leads to the smallest value of the objective function, the Glorot Normal one appears to yield slightly better stability, with more gray points concentrated at the tail and hence a fatter distribution.

Following this hyperparameter optimisation process, we have determined the baseline hyperparameters to be used in the nNNPDF3.0 analysis. We list them in Table 2. For reference, we also show the hyperparameters that were chosen in nNNPDF2.0 by means of a trial and error selection procedure. Remarkably, the automatically-selected optimal hyperparameters in nNNPDF3.0 coincide in many cases with those selected by trial and error in nNNPDF2.0, in particular for the neural network architecture, the initialisation of the weights, and the optimiser. In terms of the initialisation of the weights, the Glorot Normal and Glorot Uniform strategies exhibit similar training behaviours. The main differences between the hyperparameters of the nNNPDF2.0 and nNNPDF3.0 methodologies hence lie in the activation function and the learning rate, where for the latter it is found that faster convergence is achieved with a larger step size. We recall that situations where the model could converge to a suboptimal solution are avoided thanks to the Adaptive Moment Estimation (Adam) optimiser, which dynamically adjusts the learning rate.

All in all, the differences between the previous and new model hyperparameters listed in Table 2 are found to be rather moderate, confirming the general validity of the choices that were adopted in the nNNPDF2.0 analysis.

3.4 The LHCb D-meson data and PDF reweighting

As mentioned previously, the constraints on nNNPDF3.0 from the datasets described in Sect. 2 are accounted for by means of the experimental data contribution, \(\chi ^2_{t_0}\), to the cost function in Eq. (3.5) used for the neural network training. The only exceptions are the LHCb measurements of D-meson production discussed in Sect. 2.3.4. The impact of these measurements is instead determined by means of Bayesian reweighting [63, 64]. The reason is that interpolation tables, which combine PDF and \(\alpha _s\) evolution factors with weight tables for the hadronic matrix elements (see Sect. 2.2), need to be pre-computed to allow for a fast determination of theoretical predictions. The efficient computation of these theoretical predictions is critical for the fit, as they must be evaluated a large number of times, \({\mathcal {O}}(10^5)\), as part of the minimisation procedure. However, no interface is currently publicly available to generate interpolation tables in the format required to include LHCb D-meson data in the free-proton and nuclear nNNPDF3.0 fits. Bayesian reweighting then provides a suitable alternative to account for the impact of these measurements in the nPDF determination, since the corresponding theory predictions must be evaluated only once for each of the prior replicas.

Our strategy therefore combines fitting and reweighting procedures, as illustrated in the right branch of the flowchart in Fig. 4. We describe the various steps of this strategy in turn.

Schematic representation of the fitting strategy used to construct the nNNPDF3.0 determination. The starting point is a dedicated proton global fit based on the NNPDF3.1 methodology but with the NNPDF4.0 proton-only dataset. This proton PDF fit is then reweighted with the LHCb D meson production data in pp collisions, which upon unweighting results in the proton PDF baseline to be used for nNNPDF3.0 (via the \(A=1\) boundary condition). Then, to assemble nNNPDF3.0, we start from the nNNPDF2.0 dataset, augment it with the NNPDF4.0 deuteron data and the new pPb LHC cross-sections, and produce a global nPDF fit with the hyperoptimised methodology. Finally this is reweighted by the LHCb \(D^0\) meson production measurements in pPb collisions, and upon unweighting we obtain the final nNNPDF3.0 fits

- The first step concerns the free-proton PDF baseline. A variant of the NNPDF3.1 NLO fit is constructed as described in Sect. 3.2, which is then reweighted with the LHCb D-meson measurements of \(N^{\mathrm{pp}}_7\) and \(N^{\mathrm{pp}}_{13}\), see Eq. (2.5) and the discussion in Sect. 2.3.4. The NNPDF3.1 fit variant is composed of \(N_{\mathrm{rep}}=500\) replicas; after reweighting one ends up with \(N_{\mathrm{eff}}=250\) effective replicas. Table 3 collects the values of \(\chi ^2/n_{\mathrm{dat}}\) for the LHCb D-meson measurements of \(N^{\mathrm{pp}}_7\) and \(N^{\mathrm{pp}}_{13}\) before and after reweighting. We observe that the dataset is relatively well described already before reweighting; the improvement after reweighting is therefore noticeable, although not dramatic. As shown in Fig. 2, the impact of the LHCb \(N^{\mathrm{pp}}_7\) and \(N^{\mathrm{pp}}_{13}\) data on the free-proton baseline PDFs consists of a reduction of uncertainties in the small-x region; central values are left mostly unaffected.

- The second step consists in producing the nNNPDF3.0 prior fit. This is based on the dataset described in Sect. 2 (except LHCb pPb \(D^0\)-meson data) and makes use of the free-proton PDF set determined at the end of the previous step. The nNNPDF3.0 prior fit is made of \(N_{\mathrm{rep}}=4000\) replicas. Such a large number of replicas ensures sufficiently high statistics for the subsequent reweighting of this nNNPDF3.0 prior fit with the LHCb D-meson pPb data.

- The third step is the reweighting of the nNNPDF3.0 prior fit with the LHCb measurements of \(D^0\)-meson production in pPb collisions. As discussed in Sect. 2.3.4, we use the ratio of pPb to pp spectra in the forward region, Eq. (2.3), by default. The stability of our results when the forward-to-backward ratio measurements, Eq. (2.4), are used instead is quantified in Sect. 5. After reweighting, we end up with \(N_{\mathrm{eff}}=200\) (500) effective replicas when reweighting with \(R_{\mathrm{pPb}}\) (\(R_{\mathrm{fb}}\)). A satisfactory description of the LHCb \(D^0\)-meson data in pPb collisions is achieved, as will be discussed in Sect. 4.2. Our final nNNPDF3.0 set is constructed after unweighting [64] and contains \(N_{\mathrm{rep}}=200\) replicas. This is released in the usual LHAPDF format for the relevant values of A, listed in Sect. 7.1.
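The reweighting and unweighting operations used in these steps can be sketched as follows. The sketch assumes the standard NNPDF prescription of [63, 64], in which the weight of replica k is proportional to \((\chi ^2_k)^{(n_{\mathrm{dat}}-1)/2}\,e^{-\chi ^2_k/2}\), with \(\chi ^2_k\) the unnormalised \(\chi ^2\) of that replica to the new data, and the effective number of replicas is given by the Shannon entropy of the weights. Function names and the toy \(\chi ^2\) values are hypothetical.

```python
import math

def reweight(chi2, n_dat):
    """Weights proportional to chi2^((n_dat-1)/2) * exp(-chi2/2).

    `chi2` holds the unnormalised chi2 of each prior replica to the new data.
    The weights are normalised so that they sum to the number of replicas.
    """
    log_w = [0.5 * (n_dat - 1) * math.log(c) - 0.5 * c for c in chi2]
    shift = max(log_w)  # subtract the maximum to avoid overflow in exp
    w = [math.exp(lw - shift) for lw in log_w]
    norm = len(w) / sum(w)
    return [wk * norm for wk in w]

def effective_replicas(weights):
    """Shannon-entropy estimate N_eff = exp((1/N) * sum_k w_k ln(N / w_k))."""
    n = len(weights)
    entropy = sum(wk * math.log(n / wk) for wk in weights if wk > 0.0)
    return math.exp(entropy / n)

def unweight(weights, n_new):
    """Number of copies of each prior replica in an unweighted set of n_new replicas.

    Replica k is copied once for every j = 1..n_new such that j / n_new falls
    between the cumulative probabilities P_(k-1) and P_k.
    """
    n = len(weights)
    copies, cumulative, taken = [], 0.0, 0
    for wk in weights:
        cumulative += wk / n
        upto = math.floor(cumulative * n_new + 1e-9)
        copies.append(upto - taken)
        taken = upto
    return copies

# Toy example: 8 prior replicas and 10 new data points (made-up chi2 values).
chi2_toy = [8.0, 9.5, 12.0, 15.0, 20.0, 30.0, 45.0, 60.0]
weights = reweight(chi2_toy, n_dat=10)
n_eff = effective_replicas(weights)
copies = unweight(weights, n_new=4)
print(n_eff, copies)
```

Replicas that describe the new data poorly receive negligible weight, so \(N_{\mathrm{eff}}\) is always smaller than the size of the prior; this is why the prior ensemble must be considerably larger than the final unweighted set.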

In addition to this baseline nNNPDF3.0 fit, as indicated on the left branch of the flowchart in Fig. 4, we also produce and release a variant without any LHCb D-meson data, neither in the free-proton baseline nor in the nuclear fit. For completeness, we list here the specific procedure adopted to construct this variant.

- The first step concerns again the free-proton PDF baseline. This is the variant of the NNPDF3.1 NLO fit constructed as described in Sect. 3.2 and made of \(N_{\mathrm{rep}}=1500\) replicas.

- The second step consists in producing the variant of the nNNPDF3.0 fit from the datasets described in Sect. 2, except the LHCb D-meson cross-sections. We produce two ensembles with \(N_{\mathrm{rep}}=250\) and \(N_{\mathrm{rep}}=1000\) replicas, which we also release in the usual LHAPDF format for the relevant values of A. In Sects. 4.1 and 4.2 we will compare the nNNPDF3.0 baseline fit and the variant without any LHCb D-meson data.

We have explicitly verified the validity of the reweighting procedure when applied to nPDFs (see Appendix D for more details), by comparing the outcomes obtained when a subset of the CMS dijet measurements from pPb collisions is included either by a fit or by reweighting. We have confirmed that the two methodologies lead to compatible results, in particular that nPDF central values are similarly shifted and uncertainties are similarly reduced. Nevertheless, we remark that the reweighting procedure has some inherent limitations as compared to a fit. First of all, reweighting is only expected to reproduce the outcome of a fit provided that statistics, i.e. the number of effective replicas, is sufficiently high. Second, the results obtained by means of reweighting may differ from those obtained after a fit in those cases where the figure of merit used to compute the weights is different from that used for fit minimisation. This is the case, for instance, if the \(\chi ^2\) function used for reweighting does not account for the cross-section positivity and the \(A=1\) free-proton boundary condition constraints that enter Eq. (3.5).

4 Results

In this section we present the main results of this work, namely the nNNPDF3.0 global analysis of nuclear PDFs. First of all, we discuss the key features of the variant of nNNPDF3.0 without the LHCb D-meson data, and compare it with the nNNPDF2.0 reference. Second, we describe the outcome of the reweighting of a nNNPDF3.0 prior set with the LHCb D-meson data, which defines the nNNPDF3.0 default determination, and the resulting constraints on the nuclear modification factors. Third, we study the goodness-of-fit to the new datasets incorporated in the present analysis and carry out representative comparisons with experimental data. Fourth, we study the A-dependence of our results and assess the local statistical significance of nuclear modifications. Finally, we compare the nNNPDF3.0 determination with two other global analyses of nuclear PDFs, EPPS16 and nCTEQ15WZ+SIH.

The stability of nNNPDF3.0 with respect to methodological and dataset variations is then studied in Sect. 5, while its implications for the ultra high-energy neutrino-nucleus interaction cross-sections are quantified in Sect. 6. Furthermore, representative comparisons between the predictions from nNNPDF3.0 and experimental data from pPb collisions can be found in Appendix C.

4.1 The nNNPDF3.0 (no LHCb D) fit

We present first the main features of the nNNPDF3.0 variant that excludes the LHCb D meson data (from both pp and pPb collisions), following the strategy indicated in Sect. 3. In the following, this variant is denoted as nNNPDF3.0 (no LHCb D). This fit differs from nNNPDF2.0 due to three main factors: i) the significant number of new datasets involving D, Cu, and Pb targets, ii) the improved treatment of the \(A=1\) free-proton PDF boundary condition, and iii) the automated optimisation of the model hyperparameters.

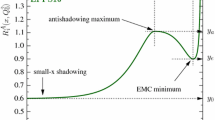

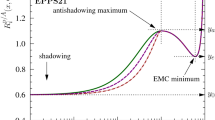

The comparison between the nNNPDF2.0 and nNNPDF3.0 (no LHCb D) fits is presented in Fig. 5 at the level of lead PDFs and in Fig. 6 at the level of nuclear modification ratios, defined as

where \(f^{(N/A)}\), \(f^{(p)}\), and \(f^{(n)}\) indicate the PDFs of the average nucleon N bound in a nucleus with Z protons and \(A-Z\) neutrons, the free-proton, and the free-neutron PDFs respectively, see Appendix A for an overview of the conventions and notation used throughout this work.
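For reference, the nuclear modification ratio is conventionally defined as the bound-nucleon PDF divided by the isospin-weighted average of the free-nucleon PDFs. Written out under that assumption, with the symbols introduced above, it reads:

```latex
R_f^{(A)}(x,Q^2) \equiv
\frac{f^{(N/A)}(x,Q^2)}
     {\dfrac{Z}{A}\,f^{(p)}(x,Q^2) + \dfrac{A-Z}{A}\,f^{(n)}(x,Q^2)}\,,
```

so that \(R_f^{(A)}=1\) in the absence of nuclear modifications, values below unity correspond to suppression (shadowing at small x), and values above unity to enhancement (anti-shadowing).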

In both cases, the results display the 68% CL uncertainties and are evaluated at \(Q=10\) GeV.

Same as Fig. 5 now in terms of the nuclear modification ratios \(R_f^{(A)}(x,Q^2)\)

Comparison between the LHCb data on \(D^0\)-meson production from pPb collisions in the forward region and the corresponding theoretical predictions based on the nNNPDF3.0 prior set described in Sect. 3.4. The ratio between \(D^0\)-meson spectra in pPb and pp collisions, \(R_{\mathrm{pPb}}\) in Eq. (2.3), is presented in four bins in \(D^0\)-meson rapidity \(y^{D^0}\) as a function of the transverse momentum \(p_T^{D^0}\). We display separately the PDF and scale uncertainty bands, and the bottom panels show the ratios to the central value of the theory prediction based on the prior

First of all, the two determinations are found to be consistent within uncertainties for all the nPDF flavours in the full range of x for which experimental data is available. The qualitative behaviour of the nuclear modification ratios defined in Eq. (4.1) is similar in the two determinations, with the strength of the small-x shadowing being reduced (increased) in the quark (gluon) nPDFs in nNNPDF3.0 (no LHCb D) as compared to the nNNPDF2.0 analysis. From Fig. 6, one also observes how the large-x behaviour of the nuclear modification factors for the quark PDFs is similar in the two fits, while for the gluon one finds an increase in the strength of anti-shadowing peaking at \(x\simeq 0.2\). These differences in \(R_g^{(A)}\) reported between nNNPDF3.0 (no LHCb D) and nNNPDF2.0 can be traced back to the constraints provided by the CMS dijet cross-sections in pPb, which will be further discussed in Sect. 5.3. One also finds that uncertainties in the small-x region,  , where neither of the two fits includes direct constraints, are increased in nNNPDF3.0. This is a consequence of the improved implementation of the \(A=1\) proton PDF boundary condition discussed in Sect. 3.2, as will be further studied in Sect. 5.1.


Furthermore, in the region where the bulk of experimental data on nuclear targets lies,  , the uncertainties on the quark nPDFs of lead are also basically unchanged between the two analyses. The impact of the new LHC W and Z production measurements in nNNPDF3.0 is mostly visible for the up and down anti-quark PDFs, both in terms of a shift in the central values and of a moderate reduction of the nPDF uncertainties. The increase in the central value of the total strangeness is related to the inclusion of deuteron and copper cross-sections in nNNPDF3.0 together with the improved \(A=1\) boundary condition, as will be demonstrated in Sect. 5.1.


4.2 The nNNPDF3.0 determination

The nNNPDF3.0 (no LHCb D) fit presented in the previous section is the starting point to quantify the constraints on the proton and nuclear PDFs provided by LHCb D-meson production data by means of Bayesian reweighting. As discussed in Sect. 3.4, the first step is to produce the nNNPDF3.0 prior fit, which coincides with nNNPDF3.0 (no LHCb D) with the only difference being that the proton PDF boundary condition now accounts for the constraints provided by the LHCb D-meson data in pp collisions at 7 and 13 TeV. The differences and similarities between the proton PDF boundary conditions used for the nNNPDF3.0 and nNNPDF3.0 (no LHCb D) fits and their nNNPDF2.0 counterpart were studied in Fig. 2. Subsequently, the LHCb data for \(R_{\mathrm{pPb}}\) in the forward region is added to this prior nPDF set using reweighting.

Figure 7 displays the comparison between the LHCb data for \(R_{\mathrm{pPb}}\), Eq. (2.3), for \(D^0\)-meson production in pPb collisions (relative to that in pp collisions) in the forward region, and the corresponding theoretical predictions based on this nNNPDF3.0 prior set. The LHCb measurements are presented in four bins in \(D^0\)-meson rapidity \(y^{D^0}\) as a function of the transverse momentum \(p_T^{D^0}\), and we display separately the PDF and scale uncertainty bands, and the bottom panels show the ratios to the central value of the theory prediction.

Same as Fig. 7, now comparing the theory predictions based on the nNNPDF3.0 prior fit with the backward rapidity bins of the LHCb measurement of \(D^0\)-meson production in Pbp collisions. While the predictions are consistent with the LHCb data, uncertainties due to MHOs are now the dominant source of theory error (as opposed to the pPb forward data), hence this dataset is not amenable to inclusion in nNNPDF3.0

From Fig. 7 one can observe how PDF uncertainties of the prior (that does not yet contain \(R_{\mathrm{pPb}}\) \(D^0\)-meson data) are very large, and completely dominate over the uncertainties due to missing higher orders (MHOs), for the whole kinematic range for which the LHCb measurements are available. The uncertainties due to MHOs (or scale uncertainties) are evaluated here by independently varying the factorisation and renormalisation scales around the nominal scale \(\mu = E_{T}^c\) with the constraint \(1/2 \le \mu _F/\mu _R \le 2\), and correlating those scale choices between numerator and denominator of the ratio observable defined in Eq. (2.3). Furthermore, these PDF uncertainties are also much larger than the experimental errors, especially for the bins in the low \(p_T^{D^0}\) region which dominate the sensitivity to the small-x nPDFs of lead. Within these large PDF uncertainties, the predictions based on the nNNPDF3.0 prior fit agree well with the LHCb measurements. This feature makes the LHCb forward \(R_{\mathrm{pPb}}\) data amenable to inclusion in a nPDF analysis, as opposed to the situation with the corresponding measurements in the backward region, shown in Fig. 8, where uncertainties due to MHOs are larger than both PDF and experimental uncertainties. Because of this, the LHCb backward \(R_{\mathrm{Pbp}}\) data are not further considered in the nNNPDF3.0 analysis. Considering the low \(p_T^{D^0}\) region of the \(R_{\mathrm{pPb}}\) measurements, one finds that the LHCb data prefer a smaller central value than the central prediction for the nNNPDF3.0 prior fit, indicating that a stronger shadowing at small x is being favoured. Indeed, as we show next, once the LHCb D-meson constraints are included via reweighting, the significance of small-x shadowing in nNNPDF3.0 markedly increases.
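The correlated scale variation described above can be illustrated with a short sketch. It assumes that each scale is varied by the conventional factors of 1/2 and 2 around the nominal value, and that the MHO uncertainty is taken as the envelope of the resulting ratios; neither choice is stated explicitly in the text. The toy cross-sections are hypothetical, and the ratio is written up to the overall normalisation of Eq. (2.3).

```python
def scale_variations(factors=(0.5, 1.0, 2.0)):
    """Pairs (k_F, k_R), with mu_F = k_F * mu and mu_R = k_R * mu,
    that satisfy 1/2 <= mu_F / mu_R <= 2."""
    return [(kf, kr) for kf in factors for kr in factors if 0.5 <= kf / kr <= 2.0]

def ratio_envelope(sigma_ppb, sigma_pp):
    """Envelope of the pPb/pp ratio over the allowed scale choices.

    Both arguments map (k_F, k_R) to a cross-section at those scales. Using
    the same key in numerator and denominator correlates the scale choices.
    """
    ratios = [sigma_ppb[k] / sigma_pp[k] for k in scale_variations()]
    return min(ratios), max(ratios)

# Made-up cross-sections with a strong scale dependence that largely
# cancels in the ratio.
points = scale_variations()
sigma_pp = {(kf, kr): 1.0 + 0.3 * (kf - 1.0) - 0.2 * (kr - 1.0) for kf, kr in points}
sigma_ppb = {k: 0.8 * sigma_pp[k] * (1.0 + 0.02 * (k[0] - k[1])) for k in points}

low, high = ratio_envelope(sigma_ppb, sigma_pp)
central = sigma_ppb[(1.0, 1.0)] / sigma_pp[(1.0, 1.0)]
print(len(points), low, central, high)
```

In this toy setup the individual cross-sections vary by tens of percent while the ratio moves by about a percent, which mimics the cancellation that makes ratio observables attractive.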

The comparison between the nPDFs of lead nuclei at \(Q=10\) GeV for the nNNPDF3.0 prior fit and the corresponding reweighted results, normalised to the central value of the former, is shown in Fig. 9. This comparison quantifies the impact of the LHCb D-meson production measurements when added to the nNNPDF3.0 prior fit. The reweighted nPDFs of lead nuclei are consistent with those of the prior, and display a clear uncertainty reduction for  (for the gluon) and  (for the sea quarks). For instance, in the case of the gluon the nPDF uncertainties are reduced by around a factor three for \(x\simeq 10^{-4}\), highlighting the constraining power of these LHCb measurements. In terms of the central values, that of the gluon is mostly left unchanged as compared to the prior, while for the sea quarks one gets a suppression of up to a few percent. We note that the reweighting procedure also affects the proton baseline, given that weights are applied to each replica including the full A-dependence of the parametrisation.

The same comparison as in Fig. 9 is displayed in Fig. 10, now in terms of the nuclear modification ratios \(R_f^{(A)}(x,Q)\). As discussed in Sect. 3.4, in the present analysis we consider in a coherent manner the constraints of the LHCb D-meson data on both the proton and nuclear PDFs while keeping track of their correlations, and hence the impact on the ratios \(R_f^{(A)}\) is in general expected to be more marked than that on the lead PDFs alone. Indeed, considering first the nuclear modification ratio for the gluon, we find that the LHCb \(D^0\)-meson measurements in pPb collisions bring in an enhanced shadowing at small x together with an associated reduction of the PDF uncertainties in this region by up to a factor of five. Hence the LHCb data constrain \(R_g\) more than they do the absolute lead PDFs in Fig. 9, demonstrating the importance of accounting for the correlations between proton and lead PDFs. In the case of the sea quark PDFs, the enhanced shadowing at small x and the corresponding uncertainty reduction are qualitatively similar to those observed at the lead PDF level. The preference of the LHCb D-meson production measurements for a strong small-x shadowing of the quark and gluon PDFs of lead is in agreement with related studies of the same process in the literature [86, 97, 98].

Same as Fig. 9, now presented in terms of the nuclear modification ratios \(R_f^{(A)}(x,Q)\)

Whenever the nuclear ratios deviate from unity, \(R^{(A)}_f(x,Q)\ne 1\), the fit results favour non-zero nuclear modifications of the free-proton PDFs. However, such non-zero nuclear modifications will not be significant unless the associated nPDF uncertainties are small enough. In order to quantify the local statistical significance of the nuclear modifications, it is useful to evaluate the pull on \(R^{(A)}_f(x,Q)\) defined as

\[ P\left[ R^{(A)}_f\right] (x,Q) \equiv \frac{R^{(A)}_f(x,Q)-1}{\delta R^{(A)}_f(x,Q)} \, , \qquad (4.2) \]

where \(\delta R^{(A)}_f(x,Q)\) indicates the 68% CL uncertainties associated to the nuclear modification ratio for the f-th flavour. Values of these pulls such that \(|P|\lesssim 1\) indicate consistency with no nuclear modifications at the 68% CL, while \(|P|\gtrsim 3\) corresponds to a local statistical significance of nuclear modifications at the \(3\sigma \) level, the usually adopted threshold for evidence, in units of the nPDF uncertainty.
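As an illustration of how such a pull could be evaluated from a Monte Carlo replica set, the sketch below assumes that the pull takes the form \((R-1)/\delta R\), that the central value is the replica mean, and that the 68% CL uncertainty can be approximated by the replica standard deviation. The function name `pull` and its input are hypothetical; the actual analysis may instead use percentile-based intervals.

```python
import statistics


def pull(ratio_replicas):
    """Pull of a nuclear modification ratio at a fixed (x, Q).

    ratio_replicas: list with one value of R_f^(A)(x, Q) per replica.
    Assumption: delta R is approximated by the sample standard deviation,
    which coincides with the 68% CL interval only for Gaussian replicas.
    """
    central = statistics.mean(ratio_replicas)
    delta = statistics.stdev(ratio_replicas)
    return (central - 1.0) / delta
```

A negative pull signals suppression with respect to the free-proton baseline (shadowing or the EMC effect, depending on x), while a positive one signals enhancement (anti-shadowing).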

These pulls are displayed in Fig. 11 for both nNNPDF3.0 and the prior fit at \(Q=10\) GeV, where dotted horizontal lines indicate the thresholds for which nuclear modifications differ from zero at the \(3\sigma \) (\(5\sigma \)) level. In the case of the quarks, the LHCb D-meson data enhance the pulls in the region \(x\simeq 10^{-3}\), leading to strong evidence for small-x shadowing in the quark sector. At larger values of x, the pull for anti-shadowing lies between the \(1\sigma \) and \(2\sigma \) levels for the up and down quarks and the down antiquark, while for \({\bar{u}}\) it is absent. The significance of the EMC effect remains at the \(1\sigma \) level for the up and down quarks. Considering next the pull on the gluon modification ratio, we observe how the LHCb D-meson measurements markedly increase both the significance and the extension of shadowing in the small-x region. Once the LHCb constraints are accounted for, one finds that nNNPDF3.0 favours a marked and statistically significant shadowing of the small-x gluon nPDF of lead in the region \( x \le 10^{-2}\). To the best of our knowledge, this is the first time that such a strong significance for gluon shadowing in heavy nuclei has been reported.

4.3 Fit quality and comparison with data

We now turn to the fit quality in the nNNPDF3.0 analysis, for the variants both with and without the LHCb D-meson data, and present representative comparisons between NLO QCD predictions and the corresponding experimental data. Table 4 reports the values of the \(\chi ^2\) per data point for the DIS datasets that enter nNNPDF3.0. For each dataset we indicate its name, reference, the nuclear species involved, the number of data points, and the values of \(\chi ^2/n_{\mathrm{dat}}\) obtained both with nNNPDF3.0 (no LHCb D) and with nNNPDF3.0. Datasets labelled with (*) are new in nNNPDF3.0 as compared to its predecessor nNNPDF2.0. Table 5 displays the same information as Table 4, now for the fixed-target and LHC DY production datasets, the CMS dijet cross-sections, and the ATLAS direct photon production measurements. The last row of the table indicates the values corresponding to the global dataset. Values indicated within brackets ( ) correspond to datasets that are not part of the nNNPDF3.0 baseline, and whose \(\chi ^2\) values are reported only for comparison purposes. Finally, in Table 6 we report the details of the reweighting and unweighting procedures, including the number of effective replicas \(N_{\text {eff}}\), the number of replicas in the unweighted set \(N_{\mathrm{unweight}}\), the number of data points included, and the values of the \(\chi ^2\) before and after reweighting.
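To make the reweighting quantities of Table 6 more concrete, the following sketch computes replica weights and the effective number of replicas. It assumes the Bayesian reweighting formula commonly used in NNPDF studies, \(w_k \propto (\chi ^2_k)^{(n-1)/2} e^{-\chi ^2_k/2}\) with weights normalised to sum to the number of replicas, and the Shannon-entropy definition of \(N_{\text {eff}}\). The function names `reweight` and `n_eff` are hypothetical, and the exact prescription adopted here is the one described in Sect. 3.4.

```python
import math


def reweight(chi2, n_dat):
    """Replica weights from the chi^2 of each replica to the new data.

    chi2: list of total (not per-point) chi^2 values, all strictly positive.
    n_dat: number of new data points.
    Assumed form: w_k ~ chi2_k^((n_dat - 1) / 2) * exp(-chi2_k / 2),
    normalised so that the weights sum to the number of replicas.
    """
    # Work with logarithms and subtract the maximum to avoid overflow/underflow.
    logw = [0.5 * (n_dat - 1) * math.log(c) - 0.5 * c for c in chi2]
    shift = max(logw)
    w = [math.exp(lw - shift) for lw in logw]
    norm = len(w) / sum(w)
    return [norm * wk for wk in w]


def n_eff(weights):
    """Effective number of replicas from the Shannon entropy of the weights."""
    n = len(weights)
    entropy = sum(w * math.log(n / w) for w in weights if w > 0.0)
    return math.exp(entropy / n)
```

If all replicas describe the new data equally well, the weights are uniform and the effective number of replicas equals the size of the prior set; the more discriminating the data, the smaller this number becomes.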

Values indicated within brackets correspond to datasets that are not part of the nNNPDF3.0 baseline, and are reported only for comparison purposes. See Table 6 for the corresponding \(\chi ^2\) values for the LHCb \(D^0\)-meson forward data

Comparison between the NLO QCD theory predictions based on the nNNPDF3.0 and nNNPDF3.0 (no LHCb D) fits with the corresponding experimental data for the first two \(p_{T,\mathrm{dijet}}^{\mathrm{avg}}\) bins of the CMS \(\sqrt{s}=5.02\) TeV dijet production measurement (upper panels) and for the two dimuon invariant mass bins from the CMS \(\sqrt{s}=8.16\) TeV Z production measurement (bottom panels)

Several interesting observations can be derived from the results presented in Tables 4 and 5. First of all, the global fit \(\chi ^2/n_{\mathrm{dat}}\) values are satisfactory, with \(\chi ^2/n_{\mathrm{dat}}\simeq 1.10\) for both nNNPDF3.0 variants. In fact, the values obtained for the nNNPDF3.0 fits with and without the constraints from the LHCb D-meson data are very similar in all cases. This is explained by the fact that, as discussed above, the LHCb D-meson data constraints are restricted to the small-x region, where there is little overlap with other datasets entering the nuclear fit, as also highlighted by the kinematic plot of Fig. 1. Given that one is combining \(n_{\mathrm{dat}}=2151\) data points from 54 datasets corresponding to 7 different processes, such a satisfactory fit quality is a reassuring, non-trivial consistency test of the reliability of the QCD factorisation framework when applied to nuclear collisions. Likewise, a very good description of the nuclear DIS structure function data is achieved, with \(\chi ^2/n_{\mathrm{dat}}\simeq 1.02\) (1.04) for 1784 data points for nNNPDF3.0 (its variant without LHCb D data). This fit quality is similar to that reported for nNNPDF2.0; see [25, 26] for a discussion of the somewhat higher \(\chi ^2\) values obtained for a few of the DIS datasets.

The dependence on the atomic mass number A of the pulls defined in Eq. (4.2) in nNNPDF3.0 for a range of nuclei from deuterium (\(A=2\)) up to lead (\(A=208\)). Recall from Eq. (4.2) that nuclear modifications associated to the different numbers of protons and neutrons have already been accounted for

Concerning the new LHC gauge boson production datasets from pPb collisions added to nNNPDF3.0, a satisfactory fit quality is obtained for all of them except for the ALICE \(W^+\) at 5.02 TeV (2 points) and CMS Z at 8.16 TeV (36 points) measurements. The data versus theory comparisons reported in Fig. 12 (see also Appendix C) for the CMS Z dataset show that, in the low dimuon invariant mass bin with \(15\le M_{\mu {{\bar{\mu }}}} \le 60\) GeV, the NLO QCD theory predictions undershoot the data by around 10% to 20%. This shift should be reduced by the addition of the NNLO QCD corrections [123], which may be relatively large in this region. We have verified that the nNNPDF3.0 fit results are unaffected if this low invariant mass bin is removed. For the on-peak invariant mass bin, with \(60\le M_{\mu {{\bar{\mu }}}} \le 120\) GeV and for which the NNLO QCD corrections are relatively small, a good description of the experimental data is obtained except for the left-most rapidity bin, where the cross-section is very small.

Concerning the CMS measurements of dijet production at 5.02 TeV and the ATLAS measurements of isolated photon production at 8.16 TeV, in both cases presented as ratios between the pPb and pp spectra, one finds a fit quality of \(\chi ^2/n_{\mathrm{dat}}\simeq 1.8\) and 1.0 respectively. In the case of the CMS dijets, inspection of the comparison between the fit results and data in the bottom panels of Fig. 12 (see Appendix C for the rest of the bins) reveals that in general one has a good agreement, with the exception of one or two bins in the forward (proton-going) direction. In particular, the data in the most forward bin systematically undershoot the NLO QCD theory prediction. We have verified that if the two most forward rapidity bins are removed in each \(p_{T,\mathrm{dijet}}^{\mathrm{avg}}\) bin, the fit quality improves to \(\chi ^2/n_{\mathrm{dat}}\simeq 1.3\) without any noticeable impact on the fit results. Hence, it is neither justified nor necessary to apply ad-hoc kinematic cuts in the dijet rapidity, and one can conclude that a satisfactory description of this dataset is obtained. In the case of the ATLAS isolated photon measurements, good agreement between data and theory is obtained for the whole range of \(E_T^\gamma \) and \(\eta ^\gamma \) covered by the data.

As indicated by Table 5, when the theory predictions based on nNNPDF3.0 are compared to the absolute pPb spectra for dijet production and isolated photon production, much worse \(\chi ^2/n_{\mathrm{dat}}\) values are found, namely 13.6 and 3.3 respectively. As discussed in Sect. 2.3, the prediction of the absolute cross-section rates at NLO QCD accuracy suffers from large uncertainties due to MHO effects. Dedicated studies of these two processes at NNLO QCD accuracy (such as those in [74, 77]) will be required to determine whether these data can be reliably included in an nPDF fit.

4.4 A-dependence of nuclear modifications