Abstract

We present the prospects of detecting quantum entanglement and the violation of Bell inequalities in \(t\bar{t}\) events at the LHC. We introduce a single set of observables suitable for both measurements, and then perform the corresponding analyses using simulated events in the dilepton final state, reconstructing up to the unfolded level. We find that entanglement can be established at better than \(5 \sigma \) both at threshold and at high \(p_T\) already in the LHC Run 2 dataset. On the other hand, only very high-\(p_T\) events are sensitive to a violation of Bell inequalities, making it significantly harder to observe experimentally. By employing a sensitive and robust observable, two different unfolding methods and independent statistical approaches, we conclude that, at variance with previous estimates, testing Bell inequalities will be challenging even in the high-luminosity LHC run.



1 Introduction

Quantum Mechanics (QM) predicts that when an entangled pair of particles is created, the two–particle wavefunction retains a non–separable character even after the particles are set apart. In particular, correlations between measurement outcomes arise even when the observations are space-like separated. Were QM the emergent description of an underlying classical theory, the causal structure imposed by relativity would be violated. Alternatively, the issue can be resolved by postulating that QM is incomplete and that additional hidden degrees of freedom exist. Ultimately, whether or not reality is described by QM is a matter of experiment. In 1964, Bell proved [1] that classical theories obey correlation limits, i.e., Bell Inequalities (BIs), that QM can violate. In the last decades, several experiments have been performed and all results so far agree with QM predictions. Recently, prospects of using hadron colliders to test BIs at unprecedented energy scales, of the order of a TeV, have emerged [2,3,4,5].

In this article, we study the prospects of experimentally observing entanglement as well as the violation of BIs using spin correlations in \(t {\bar{t}}\) pairs produced in proton–proton collisions at the LHC, as first suggested in [2] and [3], respectively. We improve on previous studies in several aspects. First, we introduce a single set of observables that allows one to measure entanglement as well as to assess BIs violation. Second, we perform an event simulation up to (fast) detector level, fully reconstruct the final states and then determine the quantum observables after unfolding detector effects. We show that our approach is robust under improved physics simulations, such as off-shell and higher-order QCD effects, as well as under different choices in the reconstruction procedure. Finally, we carefully examine the stability of the results against the unfolding procedure. Our analysis confirms the expectations of Ref. [2] on measuring entanglement at the LHC already with Run 2 data, while we find it very challenging to convincingly demonstrate BIs violation even in the high-luminosity phase of the LHC.

The paper is organised as follows. We briefly present the general framework of bipartite spin systems in Sect. 2 and the basic physics features of top quark pair production in Sect. 3. In Sects. 4 and 5 we introduce the quantum observables and, in the case of BIs violation, compare their sensitivity with other proposals. In Sect. 6 we present the details of the data simulation and analysis. Our final results are presented in Sect. 7, while a discussion of the possible loopholes of the Bell experiment is given in Sect. 8. We draw our conclusions in Sect. 9.

2 Entanglement and Bell inequalities

The state of a system composed of two subsystems A and B is separable if its density matrix \(\rho \) can be written as:

\[ \rho = \sum_k p_k \, \rho_A^k \otimes \rho_B^k , \tag{1} \]

where \(\rho _A^k\) and \(\rho _B^k\) are quantum states for A and B, and the coefficients \(p_k\) are non-negative and add to one. The density matrix in Eq. (1) describes a system that is in the product state \(\rho _A^k \otimes \rho _B^k\) with classical probability \(p_k\). The state is entangled when it is not separable. Entanglement is a property of a system described in the framework of QM. A different question is whether measurements on a given system can violate BIs. These can be conveniently phrased in terms of the Clauser, Horne, Shimony, and Holt (CHSH) inequality [6], which states that measurements \(a, a'\) on subsystem A and \(b, b'\) on subsystem B, with outcomes of absolute value \(\le 1\), must classically satisfy:

\[ \left| \langle a b \rangle - \langle a b' \rangle + \langle a' b \rangle + \langle a' b' \rangle \right| \le 2 . \tag{2} \]

A particularly simple example is provided by two particles with non-zero spin. In this case, the measurements entering the CHSH inequality are their spin projections along four axes, \({\hat{a}}, {\hat{a}}'\) for particle A, and \({\hat{b}} , {\hat{b}}'\) for particle B. This corresponds to the situation which we will be considering in the following.

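In QM, entanglement is a necessary condition for violating BIs. However, it is important to stress that in general, i.e., for mixed states, the converse is not true:

\[ \text{violation of BIs} \;\Rightarrow\; \text{entanglement}, \qquad \text{entanglement} \;\not\Rightarrow\; \text{violation of BIs} . \tag{3} \]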

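A well-known example of states which can be entangled and yet not violate BIs is provided by the so-called bipartite Werner states [7]. Let us consider a system of two spin-\(\frac{1}{2}\) particles, whose state is described by the simple density matrix:

\[ \rho = \frac{1}{4} \Big( \mathbb{1} \otimes \mathbb{1} + \sum_{i=x,y,z} C_{ii} \, \sigma^i \otimes \sigma^i \Big) , \tag{4} \]

where \(\sigma^i\) are the Pauli matrices.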

This state already gives a very good approximation of the pattern of spin correlations in a \(t{\bar{t}}\) system at the LHC and displays the features that will be important later.

The specific case \(C_{xx}=C_{yy}=C_{zz}=-\eta \) with \(0< \eta <1\) corresponds to the singlet Werner state, while \(C_{xx}=C_{yy}=-C_{zz}=\eta \) and its cyclic permutations correspond to a triplet of Werner states (with fidelity \(F=\frac{3\eta +1}{4}\)). It is known that for Werner states, \(\eta >1/3\) implies entanglement, while the CHSH inequality is violated when \(\eta >\sqrt{2}/2\) (Footnote 1).
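The two Werner thresholds can be checked directly. The derivation below is a sketch for the singlet case of Eq. (4), \(C_{xx}=C_{yy}=C_{zz}=-\eta\); it uses the partial-transpose criterion of [8] (see Sect. 4), the fact that \((\sigma^y)^T = -\sigma^y\) while \(\sigma^x, \sigma^z\) are symmetric, and the maximal CHSH value \(2\sqrt{\lambda+\lambda'}\) of [9] (see Sect. 5), with \(\lambda, \lambda'\) the two largest eigenvalues of \(C^T C\).

```latex
\begin{aligned}
\rho_W &= \tfrac{1}{4}\Big(\mathbb{1}\otimes\mathbb{1} - \eta \sum_{i=x,y,z} \sigma^i \otimes \sigma^i\Big)
        = \eta \, |\Psi^-\rangle\langle\Psi^-| + (1-\eta)\, \tfrac{1}{4}\,\mathbb{1}\otimes\mathbb{1}, \\
(\mathbb{1}\otimes T)\,\rho_W &= \tfrac{1}{4}\Big(\mathbb{1}\otimes\mathbb{1}
        - \eta \left(\sigma^x\otimes\sigma^x - \sigma^y\otimes\sigma^y + \sigma^z\otimes\sigma^z\right)\Big), \\
\text{eigenvalues:}\quad & \tfrac{1-3\eta}{4}\ (\text{once}), \qquad \tfrac{1+\eta}{4}\ (\text{three times}), \\
\text{entangled} &\iff \tfrac{1-3\eta}{4} < 0 \iff \eta > \tfrac{1}{3}, \\
C^T C = \eta^2\,\mathbb{1} &\;\Rightarrow\; \lambda = \lambda' = \eta^2, \qquad
2\sqrt{\lambda+\lambda'} = 2\sqrt{2}\,\eta > 2 \iff \eta > \tfrac{\sqrt{2}}{2}.
\end{aligned}
```

The eigenvalues follow because the four Bell states simultaneously diagonalise every \(\sigma^i\otimes\sigma^i\): the operator in brackets has eigenvalue 3 on \(|\Phi^+\rangle\) and \(-1\) on the other three Bell states.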

More generally, one can identify the regions where the state (4) is entangled using a criterion such as the one proposed in [8]. Independently, one can test whether directions \({\hat{a}}, {\hat{a}}', {\hat{b}}, {\hat{b}}'\) exist such that the CHSH inequality, Eq. (2), is violated, using the theorem proven in [9]. The corresponding regions are depicted in Fig. 1. Entanglement is present in the four light-colored small tetrahedra inscribed in a large tetrahedron, while the CHSH inequality is violated only in the dark-colored sub-regions of the small tetrahedra. The vertices of the large tetrahedron correspond to the pure singlet and triplet Bell states, which we also use to label the corresponding small tetrahedra. The closer the \(C_{ii}\) are to a vertex of the large tetrahedron, the stronger the spin correlations.

Fig. 1 Regions of the \(\{ C_{11}, C_{22}, C_{33} \}\) parameter space where the density matrix \(\rho \) in Eq. (4) is entangled and where the CHSH inequality is violated, divided into triplet and singlet regions. Regions where \(\rho \) is not positive semidefinite are not shown.
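The classification behind Fig. 1 can be reproduced numerically. The Python sketch below (assuming NumPy; the function names are illustrative, not the authors' code) builds \(\rho\) from a correlation matrix C as in Eqs. (4) and (6), and checks (i) that \(\rho\) is a valid state, (ii) entanglement via a negative eigenvalue of the partial transpose [8], and (iii) CHSH violation via the maximal value \(2\sqrt{\lambda+\lambda'}\) of [9].

```python
import numpy as np

# Pauli matrices sigma^x, sigma^y, sigma^z
PAULI = (
    np.array([[0, 1], [1, 0]], dtype=complex),
    np.array([[0, -1j], [1j, 0]], dtype=complex),
    np.array([[1, 0], [0, -1]], dtype=complex),
)


def density_matrix(C):
    """rho = (1/4) (1 x 1 + sum_ij C_ij sigma^i x sigma^j), cf. Eqs. (4) and (6)."""
    rho = np.eye(4, dtype=complex)
    for i in range(3):
        for j in range(3):
            rho += C[i, j] * np.kron(PAULI[i], PAULI[j])
    return rho / 4


def partial_transpose_second(rho):
    """Transpose the second (anti-top) factor of a two-qubit density matrix."""
    r = rho.reshape(2, 2, 2, 2)               # indices (a, b, a', b')
    return r.transpose(0, 3, 2, 1).reshape(4, 4)  # swap b <-> b'


def classify(C, tol=1e-12):
    """Return (is_physical, is_entangled, violates_chsh) for a 3x3 correlation matrix."""
    C = np.asarray(C, dtype=float)
    rho = density_matrix(C)
    physical = np.linalg.eigvalsh(rho).min() >= -tol
    entangled = np.linalg.eigvalsh(partial_transpose_second(rho)).min() < -tol
    lam = np.sort(np.linalg.eigvalsh(C.T @ C))[::-1]  # eigenvalues of C^T C, descending
    chsh_max = 2 * np.sqrt(lam[0] + lam[1])            # maximal CHSH value [9]
    return bool(physical), bool(entangled), bool(chsh_max > 2 + tol)
```

For example, the singlet Werner state with \(\eta = 0.5\), `classify(np.diag([-0.5, -0.5, -0.5]))`, is physical and entangled but does not violate CHSH, in line with the thresholds \(1/3 < \eta < \sqrt{2}/2\).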

3 Top quark pairs at the LHC

Top quarks are unique candidates for high-energy Bell tests. This follows from three concurring facts. First, since \(m_t \, \Lambda ^{-2}_{\text {\tiny QCD}} \gg \Gamma _t^{-1}\), top quarks decay through the weak interaction before their spin is randomised by chromomagnetic radiation. Second, the leading top quark production mechanism at hadron colliders yields \(t {\bar{t}}\) pairs in which the top quarks are not polarised, yet their spins are highly (and non-trivially) correlated in different areas of phase space. Third, thanks to the left-handed nature of the weak interactions, the charged lepton emerging from the two-step decay \(t \rightarrow W b\) and \(W \rightarrow \ell \nu \) turns out to be \(100\%\) correlated with the spin of the mother top quark, i.e., the top quark differential width is given by:

\[ \frac{1}{\Gamma} \frac{d\Gamma}{d\cos\varphi} = \frac{1}{2} \left( 1 + \alpha \cos\varphi \right) , \tag{5} \]

with the spin analyzing power \(\alpha \) attaining the largest possible values, i.e., \(\pm 1\). Here \(\varphi \) denotes the angle between the top spin and the direction of the emitted lepton in the top quark rest frame, see Fig. 2. As a result, the lepton can be considered a proxy for the spin of the corresponding top quark, and the correlations between the leptons a proxy for those between the top quark spins.
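The decay distribution in Eq. (5) can be sampled exactly by inverting its cumulative distribution \(F(c) = (c+1)/2 + \alpha (c^2-1)/4\), with \(c = \cos\varphi\). The NumPy sketch below does this and can be used to check that \(\langle \cos\varphi \rangle = \alpha/3\); the function name is hypothetical.

```python
import numpy as np


def sample_cos_phi(alpha, n, rng):
    """Draw cos(phi) from (1 + alpha*cos(phi))/2 on [-1, 1] by CDF inversion."""
    u = rng.uniform(size=n)
    if alpha == 0:
        return 2 * u - 1  # flat distribution
    # Solve (c + 1)/2 + alpha*(c**2 - 1)/4 = u for c; the '+' root maps [0, 1] onto [-1, 1].
    return (-1 + np.sqrt((1 - alpha) ** 2 + 4 * alpha * u)) / alpha


rng = np.random.default_rng(1)
cos_phi = sample_cos_phi(1.0, 200_000, rng)  # alpha = +1, e.g. the antilepton from a top
mean_cos_phi = cos_phi.mean()                # expected to be close to alpha/3
```

The factor \(1/3\) per decay is the origin of the dilution of the lepton correlations: for two independent decays with \(\alpha \bar\alpha = -1\), one gets \(\langle \cos\theta_i \cos\bar\theta_j \rangle = -C_{ij}/9\), which is the relation exploited in Eq. (8).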

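Assuming no net polarisation is present (Footnote 2), the density matrix for the spin of a \(t {\bar{t}}\) pair can be written as:

\[ \rho = \frac{1}{4} \Big( \mathbb{1} \otimes \mathbb{1} + \sum_{i,j} C_{ij} \, \sigma^i \otimes \sigma^j \Big) , \tag{6} \]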

where the first factor in the tensor product refers to the top and the second to the anti-top quark. The \(C_{ij}\) matrix encodes the spin correlations and is measurable. Note that Eq. (6), which will be used in the following, is more general than the simple density matrix in Eq. (4) considered in Sect. 2, since C is allowed to have off-diagonal entries. However, since in practice \(C_{ij} \approx C_{ji}\), the C matrix can be made (almost) diagonal with an appropriate choice of basis, thus reducing the \(t {\bar{t}}\) system to Eq. (4). The normalised differential cross section for \(p p \rightarrow t {\bar{t}} \rightarrow \ell ^+ \ell ^- b {\bar{b}} \nu {\bar{\nu }}\) can be expressed as [12]:

\[ \frac{1}{\sigma} \frac{d\sigma}{d x_{ij}} = \frac{1}{2} \left( 1 - C_{ij} \, x_{ij} \right) \ln \left( \frac{1}{|x_{ij}|} \right) , \tag{7} \]

where \(x_{ij} \equiv \cos \theta _{i} \cos {\bar{\theta }}_{j}\), \(\theta _i\) is the angle between the antilepton momentum and the i-th axis in its parent top rest frame, and \({\bar{\theta }}_j\) is the angle between the lepton momentum and the j-th axis in its parent anti-top rest frame. In particular, Eq. (7) implies:

\[ C_{ij} = -9 \, \langle x_{ij} \rangle , \tag{8} \]

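a relation that allows a direct measurement of the C matrix. Spins are measured along the axes of a suitable reference frame. An advantageous choice is the helicity basis \(\lbrace {\hat{k}}, {\hat{r}}, {\hat{n}} \rbrace \), with \({\hat{k}}\) the top quark direction in the \(t {\bar{t}}\) center-of-mass frame and

\[ {\hat{r}} = \frac{1}{\sin\theta} \left( {\hat{p}} - \cos\theta \, {\hat{k}} \right), \qquad {\hat{n}} = \frac{1}{\sin\theta} \left( {\hat{p}} \times {\hat{k}} \right), \tag{9} \]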

where \({\hat{p}}\) is the beam axis and \(\theta \) is the top scattering angle in the center-of-mass frame, see also Fig. 3. The helicity basis is defined in terms of the top quark and also applies to the antitop (Footnote 3). The relevant reference frames are reached in a two-step process: a \({\hat{z}}\) boost from the laboratory to the \(t {\bar{t}}\) center-of-mass frame, followed by a \({\hat{k}}\) boost to each top's rest frame.
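The estimator in Eq. (8) can be illustrated with a toy Monte Carlo. The sketch below assumes the unpolarised double-differential form \(\frac{1}{4}(1 - C \cos\theta_i \cos\bar\theta_j)\), whose projection onto \(x_{ij}\) gives Eq. (7), samples it by accept-reject, and then applies \(C = -9\langle x \rangle\) with a purely statistical error. No detector effects, reconstruction or unfolding are included, and the helper names are hypothetical.

```python
import numpy as np


def sample_spin_angles(C, n, rng):
    """Toy: draw (cos theta_i, cos theta_bar_j) from (1/4)(1 - C*x), x = cos theta_i * cos theta_bar_j."""
    c1 = rng.uniform(-1, 1, size=n)
    c2 = rng.uniform(-1, 1, size=n)
    accept_prob = (1 - C * c1 * c2) / (1 + abs(C))  # rescaled so the maximum is 1
    keep = rng.uniform(size=n) < accept_prob
    return c1[keep], c2[keep]


def estimate_C(c1, c2):
    """Eq. (8): C = -9 <x>, with the statistical error of the mean."""
    x = c1 * c2
    C_hat = -9 * x.mean()
    err = 9 * x.std(ddof=1) / np.sqrt(x.size)
    return C_hat, err


rng = np.random.default_rng(7)
cos1, cos2 = sample_spin_angles(0.5, 300_000, rng)  # true C = 0.5 (illustrative)
C_hat, C_err = estimate_C(cos1, cos2)
```

The accept-reject step is used only for simplicity; in the analysis the \(x_{ij}\) distributions come from reconstructed events and are unfolded before taking the mean.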

The amount and type of spin correlations strongly depend on the production mechanism as well as on the phase space region (energy and angle) of the top quarks. Two complementary regimes are important: threshold, i.e., when the top quarks are slow in the \(t {\bar{t}}\) center-of-mass frame, and the regime where they are ultra-relativistic. At threshold, gluon fusion \(gg \rightarrow t {\bar{t}}\) leads to an entangled spin-0 state, while \(q {\bar{q}} \rightarrow t {\bar{t}}\) leads to a spin-1 state. The latter is subdominant at the LHC and acts as an irreducible background [2].

4 Observation of entanglement

It can be shown [8] that the \(t {\bar{t}}\) spin density matrix in Eq. (6) is separable (that is, not entangled) if and only if its partial transpose \((\mathbb {1} \otimes T) \, \rho \), obtained by acting with the identity on the first factor of the tensor product and transposing the second, is positive semidefinite. As shown in [2], this implies that

\[ \Delta \equiv -C_{nn} + \left| C_{kk} + C_{rr} \right| > 1 \tag{10} \]

is a sufficient condition for the presence of entanglement. It generalises the Werner condition \(\eta >1/3\) to the case where the \(C_{ii}\)’s are not equal. The inequality in Eq. (10) does not depend on the basis, but we will use the helicity basis in Eq. (9) in the following.

At the \(t {\bar{t}}\) production threshold, \(C_{kk} + C_{rr} < 0\), so the inequality of Eq. (10) reads:

\[ \Delta = -C_{kk} - C_{rr} - C_{nn} > 1 . \tag{11} \]

The second regime corresponds to high transverse momentum top quarks, i.e., when the system is characterised by \(m_{t {\bar{t}}} \gg m_t\) and a center-of-mass scattering angle \(\theta \sim \frac{\pi }{2}\). In this case, an entangled spin-1 state is produced as a consequence of angular momentum conservation, regardless of the production channel. Since in this region \(C_{kk} + C_{rr} > 0\), the inequality in Eq. (10) becomes:

\[ \Delta = C_{kk} + C_{rr} - C_{nn} > 1 . \tag{12} \]
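Given measured diagonal entries and their covariance, the entanglement marker of Eq. (10) and a naive significance can be computed as in the sketch below. It assumes Gaussian, statistics-only uncertainties and linear error propagation with the gradient \((s, s, -1)\), \(s = \mathrm{sign}(C_{kk}+C_{rr})\); the covariance would have to include the correlations introduced by unfolding (Sect. 6). Function names and the numerical inputs are illustrative only.

```python
import numpy as np


def entanglement_marker(C_kk, C_rr, C_nn):
    """Delta = -C_nn + |C_kk + C_rr| (Eq. (10)); Delta > 1 is sufficient for entanglement.

    It reduces to -(C_kk + C_rr + C_nn) at threshold (Eq. (11)) and to
    C_kk + C_rr - C_nn at high pT (Eq. (12))."""
    return -C_nn + abs(C_kk + C_rr)


def marker_uncertainty(C_kk, C_rr, cov):
    """Linear propagation; cov is the 3x3 covariance matrix of (C_kk, C_rr, C_nn)."""
    s = np.sign(C_kk + C_rr)
    grad = np.array([s, s, -1.0])
    return float(np.sqrt(grad @ np.asarray(cov, dtype=float) @ grad))


def naive_significance(delta, sigma_delta):
    """Standard deviations by which Delta exceeds the separable bound Delta = 1."""
    return (delta - 1.0) / sigma_delta
```

For a singlet-like input \(C_{kk}=C_{rr}=C_{nn}=-0.5\), `entanglement_marker` returns \(1.5 = 3\eta\), recovering the Werner condition \(\eta > 1/3\).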

5 Observation of a violation of the BIs

Spin correlations at threshold are strong enough to show entanglement, but not strong enough to allow the observation of a violation of the BIs. In addition, as we will discuss in Sect. 8, top quarks at threshold move slowly and their decays are usually not causally disconnected. A violation of the BIs can, however, be expected at large \(m_{t {\bar{t}}}\) and \(\theta \simeq \pi /2\), where mass effects are subdominant. Several strategies exist to observe a violation of BIs experimentally; we present them in the following.

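The CHSH inequality, Eq. (2), can be written in terms of the \(C_{ij}\) matrix as:

\[ \left| {\hat{a}} \cdot C \left( {\hat{b}} - {\hat{b}}' \right) + {\hat{a}}' \cdot C \left( {\hat{b}} + {\hat{b}}' \right) \right| \le 2 , \tag{13} \]

where \({\hat{a}} \cdot C \, {\hat{b}} \equiv \sum_{i,j} {\hat{a}}_i \, C_{ij} \, {\hat{b}}_j\).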

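As shown in Refs. [9, 13], the maximal value predicted by QM for the left-hand side of the CHSH inequality of Eq. (2) is:

\[ \max_{{\hat{a}}, {\hat{a}}', {\hat{b}}, {\hat{b}}'} \left| {\hat{a}} \cdot C \left( {\hat{b}} - {\hat{b}}' \right) + {\hat{a}}' \cdot C \left( {\hat{b}} + {\hat{b}}' \right) \right| = 2 \sqrt{\lambda + \lambda'} , \tag{14} \]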

where \(\lambda \) and \(\lambda '\) are the two largest eigenvalues of \(C^T C\). The maximal value is obtained for the following choice of directions:

where d and \(d'\) are eigenvectors of \(C^T C\) corresponding to the eigenvalues \(\lambda , \lambda '\), and \(\tan \varphi = \sqrt{\frac{\lambda '}{\lambda }}\).
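The maximisation can be made explicit numerically. The sketch below uses one standard construction that attains \(2\sqrt{\lambda+\lambda'}\): \({\hat{b}}, {\hat{b}}' = \cos\varphi\, d \pm \sin\varphi\, d'\), \({\hat{a}}' \propto C d\) and \({\hat{a}} \propto C d'\). It may differ in labelling or signs from Eqs. (15) and (16), it assumes \(\lambda' > 0\), and the helper names are hypothetical.

```python
import numpy as np


def chsh_value(C, a, a2, b, b2):
    """Left-hand side of the CHSH inequality: |a.C(b - b') + a'.C(b + b')|."""
    return abs(a @ C @ (b - b2) + a2 @ C @ (b + b2))


def optimal_axes(C):
    """One set of unit vectors attaining 2*sqrt(lambda + lambda') (requires lambda' > 0)."""
    C = np.asarray(C, dtype=float)
    lam, vecs = np.linalg.eigh(C.T @ C)         # eigenvalues in ascending order
    lam1, lam2 = lam[2], lam[1]                 # lambda, lambda'
    d, d2 = vecs[:, 2], vecs[:, 1]              # corresponding orthonormal eigenvectors
    phi = np.arctan2(np.sqrt(lam2), np.sqrt(lam1))  # tan(phi) = sqrt(lambda'/lambda)
    b = np.cos(phi) * d + np.sin(phi) * d2
    b2 = np.cos(phi) * d - np.sin(phi) * d2
    a2 = C @ d / np.linalg.norm(C @ d)          # pairs with b + b' = 2 cos(phi) d
    a = C @ d2 / np.linalg.norm(C @ d2)         # pairs with b - b' = 2 sin(phi) d'
    return a, a2, b, b2, lam1 + lam2


C_example = np.diag([0.3, 0.8, -0.7])           # illustrative helicity-basis matrix
a, a2, b, b2, lam_sum = optimal_axes(C_example)
best = chsh_value(C_example, a, a2, b, b2)      # equals 2*sqrt(lam_sum)
```

With this choice the two terms give \(2\cos\varphi\sqrt{\lambda} + 2\sin\varphi\sqrt{\lambda'}\), which is maximal for \(\tan\varphi = \sqrt{\lambda'/\lambda}\).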

Following Ref. [2] and Eqs. (15) and (16), it can be shown that in the limit \(m_{t {\bar{t}}} \gg m_t\) and \(\theta = \frac{\pi }{2}\), the axes (in the helicity basis):

correspond to the optimal choice (up to NLO QCD corrections). Adopting the axes in Eq. (17), the CHSH inequality in Eq. (2) can then be cast in a particularly simple form:

\[ \left| C_{rr} - C_{nn} \right| \le \sqrt{2} , \tag{18} \]

which, once again, generalises the CHSH condition derived for Werner states. Note that the \({\hat{k}}\) axis does not appear in Eq. (18), consistent with the physical argument that in a Bell experiment the spin (helicity) of a massless particle is measured in the plane perpendicular to its motion. A spin correlation experiment in this regime is equivalent to the usual quantum optics experiment with entangled photons, except that it takes place at energies about \(10^{12}\) times larger.

In general, the optimal choice of directions \({\hat{a}}, {\hat{a}}', {\hat{b}}, {\hat{b}}'\) using Eqs. (15) and (16) can be evaluated for each point in phase space, in terms of \(m_{t {\bar{t}}}\) and \(\theta \). In this case, for each \(t {\bar{t}}\) event, one can determine the optimal choice of axes and evaluate Eq. (13). This strategy should, in principle, maximise the effects of spin correlations. However, it also enhances the effects of systematic uncertainties in the event reconstruction, i.e., in the assignment of \(m_{t {\bar{t}}}\) and \(\theta \).

As suggested in [3], following from the result in Eq. (14), one can use:

\[ \lambda + \lambda' \le 1 \tag{19} \]

as the CHSH inequality. This strategy entails a rather serious bias. Since the same data are used both to find the axes maximizing Eq. (2) and to evaluate Eq. (2) itself, this method automatically selects upward statistical fluctuations. In other words, random fluctuations are more likely to drive the eigenvalues of \(C^T C\) upwards than downwards, and furthermore, when selecting the two largest eigenvalues \(\lambda \) and \(\lambda '\), one is more likely to pick those that fluctuated up rather than those that fluctuated down. As a result, the estimated value of \(\lambda + \lambda '\) is on average larger than the true value. The size of the bias in \(\lambda + \lambda '\) is notoriously difficult to evaluate [14]. To avoid having to correct for the bias, one can use the observable \(\lambda + \lambda '\) in a hypothesis test as follows. First, the entries \(C_{ij}\) are reconstructed from (simulated) data, producing the matrix C. Then, the entries of C are smeared randomly many times according to their uncertainties, and the resulting distribution of \(\lambda + \lambda '\) is constructed. The same procedure is applied to the “classical” correlation matrix

which can be considered as the worst–case scenario to reject, as it imitates the spin correlations we want to observe but has \(\lambda + \lambda ' = 1\). Then, the probability that upon smearing of \(C_{\text {classical}}\) one finds:

is the significance for rejecting the “classical” hypothesis, given the data.
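The smearing procedure and the upward bias it exposes can be sketched in a few lines. The NumPy code below makes several assumptions not taken from the text: each entry of C is smeared with an independent Gaussian (correlations from unfolding are ignored), the matrix `C_CLASSICAL` is only an illustrative stand-in with \(\lambda+\lambda'=1\) and is not the matrix of Eq. (20), and the p-value is taken as the fraction of smeared classical toys with \(\lambda+\lambda'\) at least as large as the observed value.

```python
import numpy as np


def lambda_sum(C):
    """Sum of the two largest eigenvalues of C^T C (single 3x3 matrix or a stack of them)."""
    C = np.asarray(C, dtype=float)
    ev = np.linalg.eigvalsh(np.swapaxes(C, -1, -2) @ C)  # ascending order
    return ev[..., -1] + ev[..., -2]


def smeared_lambda_sums(C, sigma, n_toys, rng):
    """Smear every entry of C with independent Gaussians of width sigma (scalar or 3x3 array)."""
    noise = rng.normal(0.0, sigma, size=(n_toys, 3, 3))
    return lambda_sum(np.asarray(C, dtype=float) + noise)


def p_value_classical(observed, C_classical, sigma, n_toys, rng):
    """Fraction of smeared 'classical' toys with lambda + lambda' >= observed."""
    toys = smeared_lambda_sums(C_classical, sigma, n_toys, rng)
    return np.mean(toys >= observed)


# Hypothetical classical stand-in: lambda + lambda' = 1/2 + 1/2 = 1 exactly
C_CLASSICAL = np.diag([0.0, 1 / np.sqrt(2), -1 / np.sqrt(2)])

rng = np.random.default_rng(11)
toys = smeared_lambda_sums(C_CLASSICAL, 0.1, 5000, rng)
bias = toys.mean() - 1.0  # positive: smearing alone pushes lambda + lambda' above 1
```

The positive `bias` illustrates why \(\lambda+\lambda' > 1\) cannot be read off directly from a noisy measurement, and why comparing against smeared classical toys is used instead.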

We conclude this section with an observation regarding Eq. (19). Assuming that the \(C_{ij}\) matrix is approximately diagonal and that \(|C_{kk}| < |C_{rr}|\), \(|C_{kk}| < |C_{nn}|\), which is satisfied in the signal region, one obtains from Eq. (19):

\[ C_{rr}^2 + C_{nn}^2 \le 1 . \tag{22} \]

Inequality (22) identifies a disk and looks different from Eq. (18), which is linear. However, in the region where the experimental measurement most likely lies, \(C_{rr}\) and \(C_{nn}\) are constrained to \(0.7 \lesssim C_{rr} \lesssim 0.9\) and \(-0.8 \lesssim C_{nn} \lesssim -0.6\), where the two conditions are essentially equivalent, see Fig. 4. This shows that Eq. (18) is as sensitive an indicator of the violation of BIs as the reference-frame independent bound provided by Eq. (19).
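The near-equivalence of the linear and disk criteria can be checked on a grid covering the region quoted above. Since \(C_{rr}^2 + C_{nn}^2 \ge (C_{rr} - C_{nn})^2/2\), a violation of Eq. (18) always implies a violation of Eq. (22), so the two can only disagree in a thin sliver near the boundary. The NumPy sketch below quantifies the agreement; the grid resolution is an arbitrary choice.

```python
import numpy as np


def violates_linear(C_rr, C_nn):
    """Violation of Eq. (18): |C_rr - C_nn| > sqrt(2)."""
    return np.abs(C_rr - C_nn) > np.sqrt(2)


def violates_disk(C_rr, C_nn):
    """Violation of Eq. (22): C_rr^2 + C_nn^2 > 1."""
    return C_rr**2 + C_nn**2 > 1


# Grid over 0.7 <= C_rr <= 0.9 and -0.8 <= C_nn <= -0.6
rr, nn = np.meshgrid(np.linspace(0.7, 0.9, 201), np.linspace(-0.8, -0.6, 201))
lin, disk = violates_linear(rr, nn), violates_disk(rr, nn)
agreement = np.mean(lin == disk)  # fraction of grid points where both criteria agree
```

In this box the agreement fraction comes out well above 95%, with the linear criterion being the marginally more conservative of the two.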

6 Simulation and analysis

We generate events corresponding to proton–proton collisions at \(\sqrt{s} = 13 \, \text {TeV}\) resulting in an \(\ell ^- \, \ell ^+ \, \nu \, {\bar{\nu }} \, b \, {\bar{b}}\) final state. Leptons \(\ell \) include only electrons and muons, in both same- and different-flavor combinations. Non–resonant, single resonant and double resonant top quark diagrams are summed, as well as diagrams without top quarks that lead to the same final state of two bottom quarks, two charged leptons, and two neutrinos. Events are generated using MadGraph5_aMC@NLO [15] at Leading Order (LO) within the Standard Model, which amounts to summing about 5000 diagrams. No kinematic cuts are imposed except for a lower limit of \(5 \, \text {GeV}\) on the invariant mass of same-flavor lepton pairs, to keep the \(\gamma ^* \rightarrow \ell ^+ \ell ^-\) splitting infrared safe, and a lower limit of \(10 \, \text {GeV}\) on the charged-lepton \(p_T\). The rate is normalised to an estimate of the experimental cross section of \(p p \rightarrow \ell ^- \, \ell ^+ \, \nu \, {\bar{\nu }} \, b \, {\bar{b}}\) obtained from recent measurements [16]. Hard processes are showered and hadronised using Pythia 8 [17]. Events are then passed to the Delphes framework [18] for a fast simulation of trigger, detector and reconstruction, using the ATLAS detector setup. In our simplified analysis, we only consider irreducible backgrounds, consisting of contributions to the \(\ell ^- \, \ell ^+ \, \nu \, {\bar{\nu }} \, b \, {\bar{b}}\) final state without an intermediate \(t {\bar{t}}\) pair, or with an intermediate \(t {\bar{t}}\) pair that does not decay into prompt light leptons. These contributions become negligible when the kinematics of two on-shell top quarks is correctly reconstructed. Further sources of background include \(t {\bar{t}} \, V\) events, diboson events, and misidentification of leptons. These backgrounds are known to amount to a few percent of the total [19] and are neglected.
The same-flavor channels also receive contamination from \(Z + \text {jets}\) events, whose number after cuts is at the percent level [20], comparable with the other backgrounds already quoted. At the selection level, we require the presence of at least two jets with \(p_T > 25 \, \text {GeV}\) and \(|\eta | < 2.5\), at least one of which has to be b–tagged. If only one jet is b–tagged, we take the second b jet to be the non-b–tagged jet with the largest \(p_T\). We require exactly two leptons of opposite charge, both with \(p_T > 25 \, \text {GeV}\) and \(|\eta | < 2.5\). Both leptons must pass an isolation requirement. In the \(e^+ e^-\) and \(\mu ^+ \mu ^-\) channels, Z + jets processes are suppressed by requiring \(p^{\text {miss}}_{T} > 40 \,\text {GeV}\) and \(20 \, \text {GeV}< m_{\ell ^+ \ell ^-} < 76 \, \text {GeV}\) or \(m_{\ell ^+ \ell ^-} > 106 \, \text {GeV}\). Before reconstructing the neutrinos, the measured momenta of the b quarks and the missing transverse momentum are smeared according to the simulated distribution of reconstructed values around the true values. Neutrinos are then reconstructed by solving for the kinematics of \(t {\bar{t}} \rightarrow \ell ^- \, \ell ^+ \, \nu \, {\bar{\nu }} \, b \, {\bar{b}}\). Each solution is assigned a weight proportional to the likelihood of producing a neutrino with the given reconstructed energy in \(p p \rightarrow t {\bar{t}} \rightarrow \ell ^- \, \ell ^+ \, \nu \, {\bar{\nu }} \, b \, {\bar{b}}\) events at \(\sqrt{s} = 13 \, \text {TeV}\) in the SM. If multiple solutions exist for the kinematics, the solution yielding the smallest \(m_{t{\bar{t}}}\) is kept. The smearing of the b-quark momenta and \(p^{\text {miss}}\) is repeated 100 times. There is a twofold ambiguity in assigning the b and \({\bar{b}}\) quarks to the two jets, so the reconstruction is performed twice for each event. The assignment yielding the largest weight is chosen, and the final \(p_\nu \) and \(p_{{\bar{\nu }}}\) are calculated as a weighted average.
Further details on the performance of our event reconstruction algorithm can be found in [21]. The reconstructed distributions of \(\cos \theta _{i} \cos {\bar{\theta }}_{j}\) appearing in Eq. (7) are unfolded using the iterative Bayesian method [22] implemented in the RooUnfold framework [23], and the final statistical uncertainty on the \(C_{ij}\) matrix is computed taking into account the bin-to-bin correlations introduced by the unfolding process.
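The object-level selection described above can be summarised as a plain-Python sketch. Several details are assumptions not fixed by the text: isolation is treated as a precomputed boolean, "exactly two leptons" is interpreted as exactly two leptons passing the \(p_T\), \(\eta\) and isolation requirements, and events with more than two b tags keep the two highest-\(p_T\) tagged jets. All class and function names are hypothetical, and neutrino reconstruction is not covered.

```python
import math
from dataclasses import dataclass


@dataclass
class Lepton:
    pt: float       # GeV
    eta: float
    phi: float
    charge: int     # +1 or -1
    flavor: str     # "e" or "mu"
    isolated: bool


@dataclass
class Jet:
    pt: float       # GeV
    eta: float
    btag: bool


def dilepton_mass(l1, l2):
    """Invariant mass of two massless leptons: m^2 = 2 pT1 pT2 (cosh(d_eta) - cos(d_phi))."""
    return math.sqrt(2 * l1.pt * l2.pt * (math.cosh(l1.eta - l2.eta) - math.cos(l1.phi - l2.phi)))


def select_b_jets(jets):
    """Two b-jet candidates, or None; with a single b tag, the leading untagged jet is used."""
    good = sorted((j for j in jets if j.pt > 25 and abs(j.eta) < 2.5),
                  key=lambda j: j.pt, reverse=True)
    tagged = [j for j in good if j.btag]
    untagged = [j for j in good if not j.btag]
    if len(good) < 2 or not tagged:
        return None
    if len(tagged) >= 2:
        return tagged[0], tagged[1]
    return tagged[0], untagged[0]


def passes_dilepton_selection(leptons, jets, met):
    """met: magnitude of the missing transverse momentum in GeV."""
    if select_b_jets(jets) is None:
        return False
    good = [l for l in leptons if l.pt > 25 and abs(l.eta) < 2.5 and l.isolated]
    if len(good) != 2 or good[0].charge * good[1].charge >= 0:
        return False  # need exactly two opposite-charge leptons
    l1, l2 = good
    if l1.flavor == l2.flavor:  # ee or mumu: suppress Z + jets
        mll = dilepton_mass(l1, l2)
        if met <= 40 or not (20 < mll < 76 or mll > 106):
            return False
    return True
```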

As a validation of our unfolding procedure, we have repeated the unfolding varying the number of iterations (from 3 to 6) and the number of bins (from 6 to 12), and the results are found to be stable. The unfolding performance worsens when the number of iterations is increased above \(\sim 10\), as expected, since the iterative Bayesian method [22] reproduces the simple inversion of the response matrix without regularisation for \(n_{\text {iterations}} \rightarrow \infty \). As a further check, we have unfolded our reconstructed distributions with the Singular Value Decomposition technique proposed in [24], also implemented in RooUnfold, for various numbers of bins (from 6 to 12) and various choices of the regularisation parameter k (from 3 to 5). Results are consistent with the iterative method and stable under changes of parameters. When run over \(35.9 \, \text {fb}^{-1}\) of simulated luminosity with the same kinematic cuts as in [20], our analysis produces statistical uncertainties at the unfolded level that are compatible with those found by the CMS Collaboration.
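For readers unfamiliar with the iterative Bayesian method [22], the NumPy sketch below shows its core update. It is an independent, minimal illustration and not the RooUnfold API used in the analysis; it omits the uncertainty propagation needed for the \(C_{ij}\) covariance. The iteration count acts as the regularisation: few iterations stay close to the prior, while many iterations approach the unregularised inversion of the response matrix.

```python
import numpy as np


def iterative_bayes_unfold(data, response, n_iter=4, prior=None):
    """Sketch of iterative Bayesian (D'Agostini) unfolding, without uncertainty propagation.

    data:     reconstructed counts per reco bin, shape (n_reco,)
    response: response[j, i] = P(reco bin j | true bin i), shape (n_reco, n_true)
    """
    d = np.asarray(data, dtype=float)
    R = np.asarray(response, dtype=float)
    n_true = R.shape[1]
    eff = R.sum(axis=0)                                 # reconstruction efficiency per true bin
    safe_eff = np.where(eff > 0, eff, 1.0)
    p = np.full(n_true, 1.0 / n_true) if prior is None else np.asarray(prior, float) / np.sum(prior)
    unfolded = p * d.sum()
    for _ in range(n_iter):
        folded = R @ p                                  # expected reco shape under the current prior
        safe_folded = np.where(folded > 0, folded, 1.0)
        posterior = R * p[np.newaxis, :] / safe_folded[:, np.newaxis]  # P(true i | reco j)
        unfolded = (posterior * d[:, np.newaxis]).sum(axis=0) / safe_eff
        p = unfolded / unfolded.sum()                   # updated prior for the next iteration
    return unfolded


R_toy = np.array([[0.8, 0.1, 0.0], [0.2, 0.8, 0.2], [0.0, 0.1, 0.8]])  # illustrative migrations
truth_toy = np.array([100.0, 200.0, 300.0])
unfolded_toy = iterative_bayes_unfold(R_toy @ truth_toy, R_toy, n_iter=4)
```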

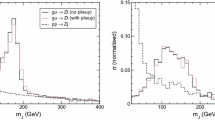

In order to verify the robustness of our observable definition and reconstruction method against higher-order QCD effects, we have generated \(250 \, \text {fb}^{-1}\) of \(p p \rightarrow t {\bar{t}}\) events at \(\sqrt{s} = 13 \, \text {TeV}\) at Next-to-Leading Order (NLO) in QCD with MadGraph5_aMC@NLO. Since NLO QCD corrections to the \(C_{ij}\) matrix are known to be small [10], top spin correlations and finite width effects have been taken into account using MadSpin [25]. The test statistics, Eqs. (11), (12), and (18), are then re-evaluated using the event reconstruction algorithm cited above. Deviations in our region of interest are seen at the percent level, meaning the algorithm is well–behaved under the introduction of NLO QCD corrections, and missing higher-order terms in our LO analysis are sub-leading with respect to statistical uncertainty in realistic LHC scenarios.

7 Results

As a first step, we consider the observation of entanglement. The two signal regions of interest are i) at threshold and ii) at large \(p_T\). We consider three different selections, characterised by different trade-offs between keeping the largest possible statistics and maximising the correlations. The three selections are shown explicitly in Fig. 5, with the “strong” selection completely contained in the “intermediate” selection, which in turn is contained in the “weak” selection. Results are collected in Table 1, together with an estimate of the cross section included in each selection. For the LHC Run 2 luminosity of \(139 \, \text {fb}^{-1}\), the expected statistical significance for the detection of entanglement is of order \(5\sigma \) or more in both signal regions.

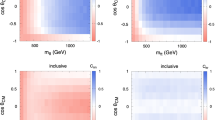

The strategy to observe a violation of BIs is the same as the one employed for entanglement. In this case, however, we only consider one signal region, corresponding to events with \(m_{t{\bar{t}}}\) of order TeV and \(\theta \) close to \(\frac{\pi }{2}\), and move directly to simulating experiments using \(350 \, \text {fb}^{-1}\) of luminosity. We consider three selections, shown explicitly in Fig. 6, with the same “strong”/“intermediate”/“weak” hierarchy as before. Assuming an average detector efficiency of \(12\%\) in successfully reconstructing parton–level \(t {\bar{t}}\) events, consistent with the results of our simulations, our three different selections should yield approximately \(10^4\), \(5 \cdot 10^3\), and \(3 \cdot 10^3\) events respectively at the end of Run 3 of the LHC, and a factor of \(\sim 10\) more after the High–Luminosity Run.

Table 2 collects results for the fixed choice of axes of Eq. (17). We find that the improvement given by the event-by-event optimization of the axes is not enough to overcome the increase in systematic uncertainty noted in Sect. 5, and the overall performance of that method is worse than just using fixed axes. Finally, Table 3 shows results for the hypothesis test using \(\lambda + \lambda '\). Figure 8 shows the distributions of \(\lambda +\lambda '\) and \((\lambda +\lambda ')_{\text {classical}}\) used for the hypothesis test with the weak selection cuts. We find that the combined LHC Run 2 + Run 3 statistics are not sufficient for a conclusive measurement. To provide an estimate for the upcoming High–Luminosity Run (HL-LHC), we estimate statistical uncertainties by running all our analyses on \(3 \, \text {ab}^{-1}\) of simulated luminosity. Results are shown in Fig. 7. The statistical significance for a violation of the CHSH inequality in Eq. (18) becomes of order \(2 \,\sigma \), regardless of the specific strategy or observable used.
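A rough way to relate the two luminosity scenarios is the naive scaling of a statistically limited significance, \(Z \propto \sqrt{L}\). The sketch below assumes exactly that: unchanged selections and efficiencies, and no systematic uncertainties. It is a back-of-the-envelope tool, not a result of the analysis. For instance, scaling a \(\sim 2\sigma\) estimate at \(3000 \, \text{fb}^{-1}\) down to \(350 \, \text{fb}^{-1}\) gives about \(0.7\sigma\), in line with the statement that Run 2 + Run 3 statistics are not sufficient; likewise, the luminosity ratio \(3000/350 \approx 8.6\) matches the quoted factor of \(\sim 10\) in event counts.

```python
import math


def scale_significance(z_ref, lumi_ref, lumi_new):
    """Naive rescaling for a statistics-dominated measurement: Z grows like sqrt(L)."""
    return z_ref * math.sqrt(lumi_new / lumi_ref)


# Luminosities in fb^-1: HL-LHC (3000) versus Run 2 + Run 3 (350)
z_run3 = scale_significance(2.0, 3000.0, 350.0)  # roughly 0.68 sigma
```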

8 Loopholes

When performing a Bell experiment, the possible existence of loopholes has to be assessed. First, Bell experiments require an outside intervention to choose freely, i.e., unknown to the system itself, what to measure. This can be achieved, for example, by mechanisms that randomly choose the orientations of the measurement axes. In the case of a \(t {\bar{t}}\) system, no outside intervention is possible. However, one can argue that a random choice of axes is realised by the directions of the final-state leptons in the top quark decays. Second, the quantum measurements on the spin state of the \(t {\bar{t}}\) pair, which take place when the top quarks decay leptonically, happen at very short distances. This is in contrast with the typical Bell experiment setup, which features macroscopic distances and always involves causally disconnected measurements. In the case of the \(t {\bar{t}}\) system, the causal independence of the decays can only be established at the statistical level. In Fig. 9 we plot a Monte Carlo evaluation of the probability of space-like separated decays as a function of the pair invariant mass \(m_{t {\bar{t}}}\). Close to threshold, most of the top pairs decay within each other’s light cone, while more than \(90\%\) of \(t {\bar{t}}\) pairs decay at space-like separation for \(m_{t {\bar{t}}} > 800 \, \text {GeV}\). Third, even assuming that b–tagging and lepton identification were perfect, one can only observe \(p p \rightarrow \ell ^- \, \ell ^+ \, b \, {\bar{b}} \, + E^{\text {miss}}\); top quarks might not even be present in a given event. However, within our simulations, we have verified that after reconstruction the large majority of the selected events can be attributed to \(t {\bar{t}}\) pair production. Fourth, only a small fraction of events is usable for the analysis, and one has to assume that the recorded events provide an unbiased representation of the bulk.
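A simple toy model reproduces the qualitative behaviour of the space-like separation probability. The sketch below assumes both tops are produced at the same point, back-to-back in the \(t {\bar{t}}\) rest frame with velocity \(\beta = \sqrt{1 - 4m_t^2/m_{t\bar t}^2}\), with independent exponential proper lifetimes, and \(m_t = 172.5\) GeV (an assumed value). Since space-like separation is frame independent and \(\gamma\) cancels, the condition reduces to \(\beta(\tau_1+\tau_2) > |\tau_1-\tau_2|\); for this toy one can show the probability equals \(\beta\) exactly. It is not the Monte Carlo used for Fig. 9.

```python
import numpy as np

M_TOP = 172.5  # GeV, assumed top mass


def beta_top(m_tt, m_t=M_TOP):
    """Top velocity in the ttbar rest frame (requires m_tt > 2 m_t)."""
    return np.sqrt(1 - 4 * m_t**2 / m_tt**2)


def p_spacelike_mc(m_tt, n, rng, m_t=M_TOP):
    """Toy fraction of ttbar pairs whose two decays are space-like separated."""
    beta = beta_top(m_tt, m_t)
    tau1 = rng.exponential(size=n)  # proper lifetimes in units of 1/Gamma_t
    tau2 = rng.exponential(size=n)
    # Rest frame: t_k = gamma*tau_k, z_1 = +beta*gamma*tau_1, z_2 = -beta*gamma*tau_2.
    # Space-like iff |dz| > |dt|, i.e. beta*(tau1 + tau2) > |tau1 - tau2| (gamma cancels).
    return np.mean(beta * (tau1 + tau2) > np.abs(tau1 - tau2))


rng = np.random.default_rng(5)
p_800 = p_spacelike_mc(800.0, 200_000, rng)  # close to beta_top(800) ~ 0.90
```

In this toy, the probability at \(m_{t {\bar{t}}} = 800\) GeV is about 0.90 and falls towards zero at threshold, consistent with the trend described above.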

Fig. 7 Representation of the results of Table 2 in the \(C_{rr} - C_{nn}\) plane. Shaded band: region where the CHSH inequality is violated. Ellipses: estimated \(1 \sigma \) contours for \(C_{rr}\) and \(C_{nn}\) after the HL-LHC Run for the three selections. Stronger cuts move the central value further into the non–classical region, yet widen the uncertainties. Different entries of the C matrix are expected [20] to have statistical uncertainties at the unfolded level that differ by up to a factor of \(\sim 2\).

9 Conclusions

We have presented new, detailed studies of the detection of entanglement and the violation of Bell inequalities using spin-correlation observables in top quark pairs at the LHC. The main motivation for such a measurement is the possibility of performing a first TeV–scale Bell experiment, opening new prospects for high–energy precision tests of QM and of the SM. We have identified a single set of observables that are sensitive either to the presence of entanglement or to a violation of a CHSH inequality. Our results indicate that the detection of entanglement will be straightforward, in agreement with Ref. [2], and, barring unexpected effects from systematic uncertainties, the LHC Run 2 dataset should be enough to reach a \(5\sigma \) statistical significance.

On the other hand, assessing the violation of Bell inequalities is much more challenging: sufficiently strong correlations are found only for top quarks at very high \(p_T\), thereby drastically reducing the available statistics. Considering only dileptonic final states and ignoring possibly relevant systematic uncertainties, whose evaluation is beyond the scope of this study, we find the statistical significance for a violation to be of order \(2 \sigma \) at the end of the High–Luminosity Run. The need to compare with theoretical expectations at the unfolded level significantly degrades the sensitivity with respect to a naive estimate based on the sheer event count, consistent with the current sensitivity of spin-correlation measurements at the LHC.

The analysis strategy presented here is robust and can be directly implemented by the experimental collaborations. As a cross-check, we also directly employed the test statistics proposed in Ref. [3] and obtained results which are consistent with our own method.

Besides the obvious benefits of an increased collider energy and luminosity, further studies to improve the prospects could be envisaged. For example, one could investigate whether the limited statistics could be improved by including final states where only one top quark decays leptonically.

Data Availability Statement

This manuscript has no associated data or the data will not be deposited. [Authors’ comment: The datasets generated for the study, together with the code used for the analysis, are available from the corresponding author on request.]

Notes

1. Werner states are usually expressed as mixtures of Bell states, \(\vert \Phi ^\pm \rangle \) and \(\vert \Psi ^\pm \rangle \). In particular, singlet and triplet Werner states correspond to one-parameter families of states that, in the high-fidelity limit \(F \rightarrow 1\), match the pure singlet \(\vert \Psi ^- \rangle \) or (one of the three) triplet states \(\vert \Psi ^+ \rangle \), \(\vert \Phi ^- \rangle \), \(\vert \Phi ^+ \rangle \), respectively.

2. Strong \(t{\bar{t}}\) production does not lead to polarised top quarks, as parity is conserved in QCD [10]. EW effects (and possibly also absorptive parts from loops), on the other hand, can give rise to a net top quark polarisation. However, they have been estimated to be very small [11] and are therefore neglected here.

3. We follow the sign convention of [2].

References

J.S. Bell, On the Einstein Podolsky Rosen paradox. Phys. Phys. Fizika 1(3), 195–200 (1964). https://doi.org/10.1103/PhysicsPhysiqueFizika.1.195

Y. Afik, J.R.M. de Nova. Quantum information and entanglement with top quarks at the LHC. Eur. Phys. J. Plus, 136 (2021). https://doi.org/10.1140/epjp/s13360-021-01902-1. arXiv:2003.02280

M. Fabbrichesi, R. Floreanini, G. Panizzo. Testing Bell inequalities at the LHC with top-quark pairs. Phys. Rev. Lett. 127 (2021). https://doi.org/10.1103/PhysRevLett.127.161801. arXiv:2102.11883

Y. Takubo et al. On the feasibility of Bell inequality violation at ATLAS with flavor entanglement of \(B^0\)–\({\bar{B}}^0\) pairs (2021). arXiv:2106.07399

A. Barr. Testing Bell inequalities in Higgs boson decays (2021). arXiv:2106.01377

J.F. Clauser, M.A. Horne, A. Shimony, R.A. Holt. Proposed experiment to test local hidden-variable theories. Phys. Rev. Lett. 23(15), 880–884 (1969). https://doi.org/10.1103/PhysRevLett.23.880

R.F. Werner. Quantum states with Einstein-Podolsky-Rosen correlations admitting a hidden-variable model. Phys. Rev. A 40, 4277 (1989). https://doi.org/10.1103/PhysRevA.40.4277

A. Peres. Separability criterion for density matrices. Phys. Rev. Lett. 77 (1996). https://doi.org/10.1103/PhysRevLett.77.1413. arXiv:quant-ph/9604005

R. Horodecki, P. Horodecki, M. Horodecki. Violating Bell inequality by mixed spin-\(\frac{1}{2}\) states: necessary and sufficient condition. Phys. Lett. A 200 (1995). https://doi.org/10.1016/0375-9601(95)00214-N

W. Bernreuther, A. Brandenburg, Z. G. Si, P. Uwer. Top quark spin correlations at hadron colliders: predictions at next-to-leading order QCD. Phys. Rev. Lett. 87, 242002 (2001). https://doi.org/10.1103/PhysRevLett.87.242002. arXiv:hep-ph/0107086

R. Frederix, I. Tsinikos, T. Vitos. Probing the spin correlations of \(t{\bar{t}}\) production at NLO QCD+EW. Eur. Phys. J. C 81 (2021). https://doi.org/10.1140/epjc/s10052-021-09612-9. arXiv:2105.11478

W. Bernreuther, A. Brandenburg, Z.G. Si, P. Uwer. Top quark pair production and decay at hadron colliders. Nucl. Phys. B 690, 81–137 (2004). https://doi.org/10.1016/j.nuclphysb.2004.04.019. arXiv:hep-ph/0403035

S. Chen, Y. Nakaguchi, S. Komamiya. Testing Bell’s inequality using charmonium decays. Prog. Theor. Exp. Phys. 2013(6) (2013). https://doi.org/10.1093/ptep/ptt032. arXiv:1302.6438

Z. Füredi, J. Komlós. The eigenvalues of random symmetric matrices. Combinatorica 1 (1981)

J. Alwall et al. The automated computation of tree-level and next-to-leading order differential cross sections, and their matching to parton shower simulations. J. High Energy Phys. 07, 079 (2014). https://doi.org/10.1007/JHEP07(2014)079. arXiv:1405.0301

Particle Data Group. Review of particle physics. Prog. Theor. Exp. Phys. 2020(8), 083C01 (2020)

T. Sjöstrand et al. An introduction to PYTHIA 8.2. Comput. Phys. Commun. 191 (2015). https://doi.org/10.1016/j.cpc.2015.01.024. arXiv:1410.3012

DELPHES Collaboration. DELPHES 3: a modular framework for fast simulation of a generic collider experiment. J. High Energy Phys. 02, 057 (2014). https://doi.org/10.1007/JHEP02(2014)057. arXiv:1307.6346

ATLAS Collaboration. Probing the quantum interference between singly and doubly resonant top-quark production in \(pp\) collisions at \(\sqrt{s} = 13\) TeV with the ATLAS detector. Phys. Rev. Lett. 121 (2018). https://doi.org/10.1103/PhysRevLett.121.152002. arXiv:1806.04667

CMS Collaboration. Measurement of the top quark polarization and \(t {\bar{t}}\) spin correlations using dilepton final states in proton-proton collisions at \(\sqrt{s}\) = 13 TeV. Phys. Rev. D 100, 072002 (2019). https://doi.org/10.1103/PhysRevD.100.072002. arXiv:1907.03729

C. Severi. Bell inequalities with top quark pairs with the ATLAS detector at the LHC (2021). http://amslaurea.unibo.it/23535/

G. D’Agostini. Improved iterative Bayesian unfolding (2010). arXiv:1010.0632

T. Adye et al. RooUnfold GitLab repository (2021). https://gitlab.cern.ch/RooUnfold/RooUnfold

A. Hoecker, V. Kartvelishvili. SVD approach to data unfolding. Nucl. Instrum. Methods Phys. Res. A 372 (1996). https://doi.org/10.1016/0168-9002(95)01478-0. arXiv:hep-ph/9509307

P. Artoisenet et al. Automatic spin-entangled decays of heavy resonances in Monte Carlo simulations. J. High Energy Phys. 03, 015 (2013). https://doi.org/10.1007/JHEP03(2013)015. arXiv:1212.3460

Acknowledgements

We are thankful to Eleni Vryonidou and Marco Zaro for comments on the manuscript and to Alan Barr, Stefano Forte, Rikkert Frederix and Marino Romano for discussions. C. S. is supported by the European Union’s Horizon 2020 research and innovation programme under the EFT4NP project (grant agreement no. 949451). F. M. has received funding from the European Union’s Horizon 2020 research and innovation programme as part of the Marie Skłodowska-Curie Innovative Training Network MCnetITN3 (grant agreement no. 722104) and from the F.R.S.-FNRS under the ‘Excellence of Science’ EOS be.h project n. 30820817.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

Funded by SCOAP3

About this article

Cite this article

Severi, C., Boschi, C.D.E., Maltoni, F. et al. Quantum tops at the LHC: from entanglement to Bell inequalities. Eur. Phys. J. C 82, 285 (2022). https://doi.org/10.1140/epjc/s10052-022-10245-9


DOI: https://doi.org/10.1140/epjc/s10052-022-10245-9