Abstract

We show how to deal with uncertainties on the Standard Model predictions in an agnostic new physics search strategy that exploits artificial neural networks. Our approach builds directly on the specific Maximum Likelihood ratio treatment of uncertainties as nuisance parameters for hypothesis testing that is routinely employed in high-energy physics. After presenting the conceptual foundations of our method, we first illustrate all aspects of its implementation and extensively study its performance on a one-dimensional toy problem. We then show how to implement it in a multivariate setup by studying the impact of two typical sources of experimental uncertainties in two-body final states at the LHC.



1 Introduction

Experimental results in the last several decades consolidated our knowledge of fundamental physics as described by “standard” theoretical models such as the Standard Model (SM) of particle physics or the \(\Lambda \text{ CDM }\) model of cosmology. On the other hand, we lack an understanding of the microscopic origin of several ingredients of these models, such as the Dark Matter and Dark Energy densities in \(\Lambda \text{ CDM }\), and the electroweak scale and the structure of the Yukawa couplings in the SM. These considerations, as well as the theoretical incompleteness of our current theory of gravity, guarantee the existence of new fundamental laws waiting to be discovered, but do not sharply outline a path towards their actual experimental discovery.

One can take the incompleteness of the standard models as guidance to formulate putative “new physics” models or scenarios that complete the standard models in one or several aspects. Then one can organize the exploration of new fundamental laws as the search for the experimental manifestations of such models. We call these searches “model-dependent” as they target the signal expected in one specific model and have poor or no sensitivity to unexpected signals. The problem with this strategy is that each new physics model only offers one possible solution to the problems of the standard models. Even searching for all of them experimentally, we are not guaranteed to achieve a discovery, as the actual solution might be one that we have not yet hypothesized. This possibility should be taken seriously also in light of the lack of discovery so far in the vast program of model-dependent searches carried out at past and ongoing experiments.

The development of “model-independent” strategies to search for new physics emerges in this context as a priority of fundamental physics. We dub model-independent those strategies that aim at assessing the compatibility of data with the predictions of a Reference theoretical Model, to be interpreted as one of the “standard” models previously discussed, rather than at probing the signatures of a specific alternative model, as in traditional model-dependent searches. It should be noted on the one hand that testing one Reference hypothesis with no assumption on the set of allowed alternative hypotheses is an ill-defined statistical concept. On the other hand, it is often trivial in practice to tell the level of compatibility of the Reference Model with the data of an experiment whose outcome consists of a single or a few measurements. The statistical distribution of the measurements is known and can be compared with the one predicted by the Reference Model. Combining a limited number of measurements does not spoil the sensitivity even if the departure from the Reference Model is present in one single measurement. However the problem becomes practically and conceptually non-trivial in modern fundamental physics experiments where the data are extremely rich and the number of possible measurements is essentially infinite. In model-dependent strategies one restricts the set of measurements to those where the specific new physics model is expected to contribute significantly, and/or one exploits the correlation between the outcome of different measurements predicted by the new physics model. Obviously this is not an option in the model-independent case.

We consider here the model-independent method that we proposed and developed in Refs. [1, 2] for data analysis at particle colliders such as the Large Hadron Collider (LHC). In this case the data \({\mathcal {D}}=\{x_1,\ldots ,x_{{{\mathcal {N}}_{\mathcal {D}}}}\}\) consist of \({{\mathcal {N}}_{\mathcal {D}}}\) independent and identically-distributed measurements of a vector of features x. The physical knowledge of the Reference Model (the SM) can be used to produce a synthetic set of Reference data \({\mathcal {R}}=\{x_1,\ldots ,x_{\mathrm{{N}}_{\mathcal {R}}}\}\), whose elements follow the probability distribution of x in the Reference hypothesis “\(\mathrm{{R}}\)”. In general, \({\mathcal {R}}\) could be a weighted event sample. The Reference Model can also predict the total number of events \(\mathrm{{N}}(\mathrm{{R}})\) expected in the experiment, around which the number of observations \({{\mathcal {N}}_{\mathcal {D}}}\) is Poisson-distributed. Model-independent search strategies aim at exploiting these elements for a test of compatibility between the hypothesis \(\mathrm{{R}}\) and the data.Footnote 1 In order to be useful, the test should be capable of detecting “generic” departures of the data distribution from the Reference expectation. Moreover, it should target “small” departures in the distribution. The significance of the discrepancy can be large, but the signal can be sizable (i.e., given by a number of events that is large relative to the Reference Model expectation) only in a small (low-probability) region of the features space, or its significance can emerge from correlated small differences in a large region. This is because previous experiments and theoretical considerations generically exclude new physics models that produce a radical deformation of the LHC data distribution, which would furthermore be easier to detect.

As said, the Reference sample \({\mathcal {R}}\) consists of synthetic instances of the variable x that follow the distribution predicted by the Reference Model. It plays conceptually the same role as the background dataset in regular model-dependent searches and it can be obtained either by a first-principle Monte Carlo simulation based on the fundamental physical laws of the Reference Model, or with data-driven methods. In the latter case, one could extrapolate the background from data measured in a control region, using transfer functions that are extracted from Monte Carlo simulations. In both cases, \({\mathcal {R}}\) results from a knowledge of the Reference Model that is unavoidably imperfect. Therefore it provides only an approximate representation of the data distribution in the Reference (or background) hypothesis. Uncertainties emerge from all the ingredients of the simulations such as the value of the Reference Model input parameters, of the parton distribution functions and of the detector response, as well as from the finite accuracy of the underlying theoretical calculations. The impact of all these uncertainties must be assessed and included if needed in any LHC analysis. In this paper we define a strategy to deal with them in our framework for model-independent new physics searches.Footnote 2

1.1 Overview of the methodology

In this work we develop a full treatment of systematic uncertainties within a model-independent search. Our treatment closely follows the canonical high-energy physics profile likelihood approach, reviewed in Ref. [13]. Each source of imperfection in the knowledge of the Reference Model is associated with a nuisance parameter \(\nu \). Its (true) value is unknown but statistically constrained by an “auxiliary” dataset \({\mathcal {A}}\), which produces a \(\nu \)-dependent multiplicative term in the likelihood, \(\mathcal {L}({\varvec{\nu }}|{\mathcal {A}})\). The Reference Model prediction for the distribution of the variable x depends on the nuisance parameters, which we collect in a vector \({\varvec{\nu }}\). The Reference Model is thus interpreted as a composite (parameter-dependent) statistical hypothesis \(\mathrm{{R}}_{\varvec{\nu }}\), to be identified with the null hypothesis \(H_0\) of the statistical test. The alternative hypothesis \(H_1\) is defined as a local (in the features space) rescaling of the Reference distribution by the exponential of a neural network function \(f(x;{\mathbf{{w}}})\). The \(H_1\) hypothesis is clearly also a composite one. We denote it as \(\mathrm{{H}}_{{\mathbf{{w}}},{\varvec{\nu }}}\), where \({\mathbf{{w}}}\) represents the trainable parameters of the neural network. Our strategy consists of performing a hypothesis test, based on the Maximum Likelihood log-ratio test statistic [14,15,16], between the \(\mathrm{{R}}_{\varvec{\nu }}\) and \(\mathrm{{H}}_{{\mathbf{{w}}},{\varvec{\nu }}}\) hypotheses. Namely, our test statistic t (see Eq. (8)) is twice the logarithm of the ratio between the likelihood of \(\mathrm{{H}}_{{\mathbf{{w}}},{\varvec{\nu }}}\) given the data (times the auxiliary likelihood \(\mathcal {L}({\varvec{\nu }}|{\mathcal {A}})\)), maximized over \({\mathbf{{w}}}\) and \({\varvec{\nu }}\), and the likelihood of \(\mathrm{{R}}_{\varvec{\nu }}\) (times \(\mathcal {L}({\varvec{\nu }}|{\mathcal {A}})\)) maximized over \({\varvec{\nu }}\).

The concept is literally the same as in Refs. [1, 2], with the difference that the Reference hypothesis is now composite rather than simple (i.e., \({\varvec{\nu }}\)-independent) and the \(H_1\) hypothesis also depends on the nuisances and not only on the neural network parameters \({\mathbf{{w}}}\). As in Refs. [1, 2], the choice of a neural network model for \(H_1\) is motivated by the quest for an unbiased flexible approximant that can adapt itself to generic departures of the data from the Reference distribution, in order to maximize the sensitivity of the hypothesis test to generic new physics.

The first goal of the present paper is to construct a practical algorithm that computes the Maximum Likelihood log-ratio test statistic as defined above, including the effect of nuisance parameters. The basic idea is to normalize the \(\mathrm{{H}}_{{\mathbf{{w}}},{\varvec{\nu }}}\) and \(\mathrm{{R}}_{\varvec{\nu }}\) likelihoods to the likelihood of the “central-value” Reference hypothesis \(\mathrm{{R}}_{\varvec{0}}\), namely the one where the nuisance parameters are set to their central value (\({\varvec{\nu }}=0\)) that maximizes the observed auxiliary likelihood. In this way we split the calculation of the test statistic t into the evaluation of two separate terms. One of them is merely the likelihood log-ratio between the nuisance-dependent \(\mathrm{{R}}_{\varvec{\nu }}\) likelihood maximized over \({\varvec{\nu }}\), and the likelihood of the central-value \(\mathrm{{R}}_{\varvec{0}}\) hypothesis. Maximizing the background-only likelihood as a function of the nuisance parameters is a necessary step of any LHC analysis. It serves in the first place to quantify the pull of the best-fit values of the nuisances, which maximize the complete likelihood (including the likelihood of the data of interest and of the auxiliary data, \({\mathcal {A}}\)), relative to their central-value estimates and uncertainties as obtained from the auxiliary likelihood alone. Therefore the determination of the first term in t does not pose any novel challenge, and could in principle be performed with the standard strategy of employing a binned approximation of the likelihood after modeling the dependence of the cross section in each bin on the nuisances. For the specific applications studied in this paper we have found it more effective, and easier, to employ an un-binned likelihood reconstructed by neural networks [17,18,19,20,21,22,23].

The other term required for the determination of the test statistic t involves the neural network and requires the maximization over the neural network parameters \({\mathbf{{w}}}\) (and over \({\varvec{\nu }}\)). It will be obtained by neural network training (with simultaneous minimization over \({\varvec{\nu }}\)), with a strategy that is a relatively straightforward generalization of the one we already employed [1, 2] in the absence of nuisance parameters. As in Refs. [1, 2], the training data are the observed dataset \({\mathcal {D}}\) and the Reference dataset \({\mathcal {R}}\). The Reference data are supposed to represent the distribution in the central-value hypothesis \(\mathrm{{R}}_{\varvec{0}}\), therefore they are obtained fixing each nuisance parameter to its central value. They do not contain any information on the variability of the Reference distribution due to the nuisances, which is taken into account by the first term of the test statistic. This avoids employing in the training Reference samples with multiple values of the nuisance parameters. The algorithm is thus not more computationally expensive than the one in the absence of nuisances.

As for any other frequentist hypothesis test, the practical feasibility of our strategy is linked to the validity of asymptotic formulae for the distribution of the test statistic t in the null hypothesis \(\mathrm{{R}}_{\varvec{\nu }}\), \(P(t|H_0)=P(t|\mathrm{{R}}_{\varvec{\nu }})\). In particular, the asymptotic formulae are needed to ensure the independence of \(P(t|\mathrm{{R}}_{\varvec{\nu }})\) from the nuisance parameters \({\varvec{\nu }}\) [13, 24]. The Wilks–Wald Theorem [15, 16] predicts a \(\chi ^2\) distribution for t in the asymptotic (infinite sample) limit, but it gives no quantitative information on how “large” the dataset should be in order for \(P(t|\mathrm{{R}}_{\varvec{\nu }})\) to be close to a \(\chi ^2\). Furthermore, there is obviously no universal lower threshold on the data statistics above which the asymptotic result starts applying. The threshold depends on the problem and, crucially, on the complexity of the statistical model that is being considered. For instance, if a simple one-parameter linear model were used for the numerator hypothesis instead of a neural network, a statistics of a few data events might suffice to reach the asymptotic limit accurately. Larger and larger datasets will be needed if the expressivity of the model is increased using neural networks of increasing complexity. One can of course also adopt the opposite viewpoint, which is more convenient in our case where the statistics of the data is fixed, and consider the upper threshold on the model complexity below which the asymptotic limit is reached and the distribution of t follows the \(\chi ^2\) distribution.
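The role of the sample size can be illustrated on the simplest nested test, which is not the neural-network test of this paper: a single Poisson count with a simple null hypothesis of mean \(\lambda_0\) against an alternative in which the mean floats freely. The log-likelihood ratio then has the closed form \(t=2[n\log(n/\lambda_0)-(n-\lambda_0)]\), and the Wilks theorem predicts a \(\chi^2\) distribution with one degree of freedom (mean 1, about 5% of toys above 3.84) only when \(\lambda_0\) is large. The sketch below, with hypothetical function names and toy settings, estimates these quantities over Poisson toys.

```python
import numpy as np

def llr_counting(n, lam0):
    """t = 2 log[L(lam = n) / L(lam0)] for a single Poisson count n (free mean vs fixed lam0)."""
    n = np.asarray(n, dtype=float)
    # n * log(n / lam0) tends to 0 for n = 0: guard the log argument and mask that case
    term = np.where(n > 0, n * np.log(np.where(n > 0, n, 1.0) / lam0), 0.0)
    return 2.0 * (term - (n - lam0))

def toy_t_distribution(lam0, n_toys=200_000, seed=0):
    """Values of t over Poisson toys generated in the null hypothesis."""
    rng = np.random.default_rng(seed)
    counts = rng.poisson(lam0, size=n_toys)
    return llr_counting(counts, lam0)

for lam0 in (1.0, 5.0, 100.0):
    t = toy_t_distribution(lam0)
    # a chi^2 with 1 dof has mean 1 and P(t > 3.84) close to 0.05
    print(f"lam0={lam0:6.1f}  mean(t)={t.mean():.3f}  P(t>3.84)={np.mean(t > 3.84):.3f}")
```

For small \(\lambda_0\) the toy distribution departs visibly from \(\chi^2_1\), a miniature version of the low-statistics regime in which the asymptotic formulae cannot be trusted.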

We need the asymptotic formula to hold in order to eliminate or mitigate the dependence of \(P(t|\mathrm{{R}}_{\varvec{\nu }})\) on \({\varvec{\nu }}\). On the other hand, we would like our model to be as complex and expressive as possible in order to be sensitive to the largest possible variety of putative new physics effects. Therefore the optimal complexity for the neural network model is right at the threshold of losing the \(\chi ^2\) compatibility. In Ref. [2] we already advocated this \(\chi ^2\) compatibility criterion for the selection of the neural network model, with the motivation that a t distribution not following the asymptotic formula signals that t is sensitive to low-statistics regions of the dataset, a fact which in turn can be interpreted as “overfitting” in our context. This heuristic motivation remains, but it is now accompanied by the stronger technical argument associated with the feasibility of the hypothesis test including nuisance parameters.

1.2 Structure of the paper

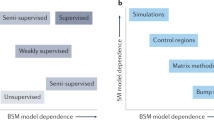

The rest of the paper is organized as follows. In Sect. 2 we describe the statistical foundations of our method. Namely, we show how to turn the mathematical definition of the Maximum Likelihood ratio test statistic into a practical algorithm for its evaluation along the lines described above. The implementation of the algorithm in all its aspects, including the selection of the neural network hyperparameters by the \(\chi ^2\) compatibility criterion, is described in Sect. 3 for an illustrative univariate problem. In that section we obtain a first validation of our method by studying how it reacts to toy datasets generated with values of the nuisance parameters that differ from the central values employed for the Reference training set. We will see that the term in t coming from the neural network is then typically large: its distribution over the toys shifts to the right and is strongly distorted with respect to the one obtained when the toy data are instead generated with central-value nuisances. The other term in t, associated with the \(\mathrm{{R}}_{\varvec{\nu }}/\mathrm{{R}}_{\varvec{0}}\) likelihood ratio as previously described, produces a non-trivial cancellation in the total value of t for each individual toy. A \(\chi ^2\) distribution is eventually recovered for the total t distribution, compatibly with the Wilks–Wald Theorem, regardless of the value of \({\varvec{\nu }}\) used in the generation of the toy data. Similar tests are performed in Sect. 4 in a slightly more realistic problem with five features (kinematical variables) that represent a dataset one might encounter in the study of the production of two particles at the LHC. Two common sources of uncertainties are included, and their impact on the sensitivity of our strategy to benchmark putative signals is quantified. We report our Conclusions in Sect. 5. Appendix A provides an overview of model-independent strategies in connection and comparison with ours.

2 Foundations

2.1 Hypothesis testing

As explained in Sect. 1, our method consists of a hypothesis test between a null hypothesis \(H_0=\mathrm{{R}}_{\varvec{\nu }}\) and an alternative \(H_1=\mathrm{{H}}_{{\mathbf{{w}}},{\varvec{\nu }}}\). We now characterize the two hypotheses in turn, starting from the null \(\mathrm{{R}}_{\varvec{\nu }}\) Reference (i.e., the SM) hypothesis. The data collected in the region of interest for the analysis are denoted as \({\mathcal {D}}=\{x_1,\ldots ,x_{{{\mathcal {N}}_{\mathcal {D}}}}\}\) and consist of \({{\mathcal {N}}_{\mathcal {D}}}\) instances of a multi-dimensional variable x. For instance, the region of interest for the analysis could be defined as the subset of the entire experimental dataset where a given experimental signature (e.g., two high-\(p_{\mathrm{T}}\) muons reconstructed within a certain detector acceptance) has been observed. The features x would then consist of the reconstructed momenta of these particles. The region of interest might be further restricted by selection cuts that define the region X of the phase space (\(x\in X\)) to which the particle momenta belong. Each instance of x in \({\mathcal {D}}\) is drawn from a probability distribution that we denote as \(P(x\,|\mathrm{{R}}_{\varvec{\nu }})\) in the Reference hypothesis \(\mathrm{{R}}_{\varvec{\nu }}\). The total number of instances of x, \({{\mathcal {N}}_{\mathcal {D}}}\), is Poisson-distributed with a mean \(\mathrm{{N}}(\mathrm{{R}}_{\varvec{\nu }})\) that equals the total cross section in the region X times the integrated luminosity. The likelihood of the \(\mathrm{{R}}_{\varvec{\nu }}\) hypothesis, given the observation of the dataset \({\mathcal {D}}\), is thus provided by the extended likelihood

\[\mathcal{L}(\mathrm{R}_{\varvec{\nu}}|\mathcal{D})=\frac{\mathrm{N}(\mathrm{R}_{\varvec{\nu}})^{\mathcal{N}_{\mathcal{D}}}}{\mathcal{N}_{\mathcal{D}}!}\,e^{-\mathrm{N}(\mathrm{R}_{\varvec{\nu}})}\prod_{x\in\mathcal{D}}P(x|\mathrm{R}_{\varvec{\nu}})=\frac{e^{-\mathrm{N}(\mathrm{R}_{\varvec{\nu}})}}{\mathcal{N}_{\mathcal{D}}!}\prod_{x\in\mathcal{D}}n(x|\mathrm{R}_{\varvec{\nu}})\,.\tag{1}\]

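In the previous equation we defined, for brevity,

\[n(x|\mathrm{R}_{\varvec{\nu}})\equiv\mathrm{N}(\mathrm{R}_{\varvec{\nu}})\,P(x|\mathrm{R}_{\varvec{\nu}})\,.\tag{2}\]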

In any hypothesis H, we will refer to n(x|H) as the “distribution” of the variable x.

The Reference hypothesis distribution for x depends on a set of nuisance parameters \({\varvec{\nu }}\). They model all the imperfections in the knowledge of the Reference Model, ranging from theoretical uncertainties like those in the determination of the parton distribution functions, to the calibration of the detector response. The nuisance parameters are (often, see below) statistically constrained by “auxiliary” measurements performed using data sets independent of \({\mathcal {D}}\), which we collectively denote as \({\mathcal {A}}\). The \(\mathrm{{R}}_{\varvec{\nu }}\) hypothesis provides a \({\varvec{\nu }}\)-dependent prediction also for the statistical distribution of the auxiliary measurements. The total likelihood of \(\mathrm{{R}}_{\varvec{\nu }}\), given the observation of both the data of interest and of the auxiliary data, thus reads

\[\mathcal{L}(\mathrm{R}_{\varvec{\nu}}|\mathcal{D},\mathcal{A})=\mathcal{L}(\mathrm{R}_{\varvec{\nu}}|\mathcal{D})\,\mathcal{L}(\varvec{\nu}|\mathcal{A})\,,\tag{3}\]

where we denoted, for brevity, \(\mathcal {L}(\mathrm{{R}}_{\varvec{\nu }}|{\mathcal {A}})\) as \(\mathcal {L}({\varvec{\nu }}|{\mathcal {A}})\).
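A minimal numerical sketch of the extended likelihood and of the total likelihood including the auxiliary term. It assumes, purely for illustration, a one-dimensional exponential feature whose scale depends on a single nuisance parameter, a \(\nu\)-independent total rate \(\mathrm{N}(\mathrm{R}_{\nu})\), and a unit-width Gaussian auxiliary likelihood centred at \(\nu=0\); the shape, the parametrization and the function names are hypothetical.

```python
import math
import numpy as np

def log_extended_likelihood(x, n_of_x, n_total):
    """log L(R|D) = -N(R) - log(N_D!) + sum over x in D of log n(x|R)."""
    x = np.asarray(x, dtype=float)
    return -n_total - math.lgamma(len(x) + 1) + float(np.sum(np.log(n_of_x(x))))

def log_total_likelihood(x, nu, n0=1000.0, scale0=1.0, sigma_scale=0.1):
    """log L(R_nu|D,A) = log L(R_nu|D) + log L(nu|A) for a hypothetical toy:
    P(x|R_nu) exponential with scale scale0 * exp(sigma_scale * nu), N(R_nu) = n0,
    and a unit-width Gaussian auxiliary likelihood centred at nu = 0."""
    scale = scale0 * math.exp(sigma_scale * nu)

    def n_of_x(xx):
        # n(x|R_nu) = N(R_nu) * P(x|R_nu)
        return n0 * np.exp(-xx / scale) / scale

    log_aux = -0.5 * nu**2  # Gaussian auxiliary term, up to a nu-independent constant
    return log_extended_likelihood(x, n_of_x, n0) + log_aux

rng = np.random.default_rng(1)
data = rng.exponential(scale=1.0, size=rng.poisson(1000.0))  # toy data drawn from R_0
for nu in (-1.0, 0.0, 1.0):
    print(nu, log_total_likelihood(data, nu))
```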

We now turn to the alternative hypothesis \(H_1=\mathrm{{H}}_{{\mathbf{{w}}},{\varvec{\nu }}}\). This hypothesis should include potential departures in the distribution of the variable x from the Reference (i.e., SM) expectation. As anticipated in Sect. 1, we parametrize these departures as a local rescaling of the Reference distribution by the exponential of a single-output neural network. Following the approach of Refs. [1, 2], we postulate

\[n(x|\mathrm{H}_{\mathbf{w},\varvec{\nu}})=n(x|\mathrm{R}_{\varvec{\nu}})\,e^{f(x;\mathbf{w})}\,,\tag{4}\]

where f is the neural network and \({\mathbf{{w}}}\) denotes its trainable parameters. The neural network architecture and hyper-parameters are problem-dependent. The general criteria for their optimization are discussed in Sect. 2.5 and illustrated in Sects. 3.1 and 4.1 in greater detail.

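We further postulate that new physics is absent in the auxiliary data, namely that the distribution of the auxiliary data in the \(\mathrm{{H}}_{{\mathbf{{w}}},{\varvec{\nu }}}\) hypothesis is the same as in the \(\mathrm{{R}}_{\varvec{\nu }}\) hypothesis:

\[\mathcal{L}(\mathrm{H}_{\mathbf{w},\varvec{\nu}}|\mathcal{A})=\mathcal{L}(\mathrm{R}_{\varvec{\nu}}|\mathcal{A})=\mathcal{L}(\varvec{\nu}|\mathcal{A})\,.\tag{5}\]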

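Therefore the total likelihood of \(\mathrm{{H}}_{{\mathbf{{w}}},{\varvec{\nu }}}\) is

\[\mathcal{L}(\mathrm{H}_{\mathbf{w},\varvec{\nu}}|\mathcal{D},\mathcal{A})=\mathcal{L}(\mathrm{H}_{\mathbf{w},\varvec{\nu}}|\mathcal{D})\,\mathcal{L}(\varvec{\nu}|\mathcal{A})\,,\tag{6}\]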

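where \(\mathcal {L}(\mathrm{{H}}_{{\mathbf{{w}}},{\varvec{\nu }}}|{\mathcal {D}})\) is the extended likelihood

\[\mathcal{L}(\mathrm{H}_{\mathbf{w},\varvec{\nu}}|\mathcal{D})=\frac{e^{-\mathrm{N}(\mathrm{H}_{\mathbf{w},\varvec{\nu}})}}{\mathcal{N}_{\mathcal{D}}!}\prod_{x\in\mathcal{D}}n(x|\mathrm{H}_{\mathbf{w},\varvec{\nu}})\,,\tag{7}\]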

with \(n(x|\mathrm{{H}}_{{\mathbf{{w}}},{\varvec{\nu }}})\) as in Eq. (4). The total number of expected events \(\mathrm{{N}}(\mathrm{{H}}_{{\mathbf{{w}}},{\varvec{\nu }}})\) is the integral of \(n(x|\mathrm{{H}}_{{\mathbf{{w}}},{\varvec{\nu }}})\) over the features space. A discussion of the implications of postulating the absence of new physics in the auxiliary data as in Eq. (5), and of related aspects, is postponed to Sect. 2.6.
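With the rescaling ansatz for \(n(x|\mathrm{H}_{\mathbf{w},\varvec{\nu}})\), the factors \(n(x|\mathrm{R}_{\varvec{\nu}})\) and \(\mathcal{N}_{\mathcal{D}}!\) cancel in the ratio of the two extended likelihoods, leaving \(\log[\mathcal{L}(\mathrm{H}_{\mathbf{w},\varvec{\nu}}|\mathcal{D})/\mathcal{L}(\mathrm{R}_{\varvec{\nu}}|\mathcal{D})]=-[\mathrm{N}(\mathrm{H}_{\mathbf{w},\varvec{\nu}})-\mathrm{N}(\mathrm{R}_{\varvec{\nu}})]+\sum_{x\in\mathcal{D}}f(x;\mathbf{w})\). If a Reference sample for \(\mathrm{R}_{\varvec{\nu}}\) is weighted so that its weights sum to \(\mathrm{N}(\mathrm{R}_{\varvec{\nu}})\), the integral \(\mathrm{N}(\mathrm{H}_{\mathbf{w},\varvec{\nu}})\) can be estimated by reweighting each Reference event by \(e^{f}\); the same structure underlies the training loss of Refs. [1, 2]. The sketch below assumes exactly this, with a hypothetical function standing in for the trained network.

```python
import numpy as np

def log_ratio_h_over_r(f, data, ref, ref_weights):
    """log[L(H|D) / L(R|D)] = -[N(H) - N(R)] + sum over x in D of f(x).

    N(H) - N(R) is estimated on a weighted Reference sample (weights summing to N(R))
    as sum_e w_e * (exp(f(x_e)) - 1)."""
    delta_n = np.sum(ref_weights * np.expm1(f(ref)))
    return -delta_n + np.sum(f(data))

def f_toy(x):
    # hypothetical stand-in for the network output f(x; w)
    return 0.2 * (x - 0.5)

rng = np.random.default_rng(2)
ref = rng.uniform(0.0, 1.0, 10_000)                 # toy Reference sample
ref_weights = np.full(ref.size, 500.0 / ref.size)   # weights summing to N(R) = 500
data = rng.uniform(0.0, 1.0, rng.poisson(500.0))    # toy "observed" data
print(log_ratio_h_over_r(f_toy, data, ref, ref_weights))
```

For a constant \(f=c\) the expression is maximized at \(e^{c}=\mathcal{N}_{\mathcal{D}}/\mathrm{N}(\mathrm{R}_{\varvec{\nu}})\): the alternative hypothesis then simply absorbs a normalization mismatch.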

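The test statistic variable we aim at computing and employing for the hypothesis test is the Maximum Likelihood log ratio [13, 14, 24]

\[t(\mathcal{D},\mathcal{A})=2\log\frac{\max_{\mathbf{w},\varvec{\nu}}\mathcal{L}(\mathrm{H}_{\mathbf{w},\varvec{\nu}}|\mathcal{D},\mathcal{A})}{\max_{\varvec{\nu}}\mathcal{L}(\mathrm{R}_{\varvec{\nu}}|\mathcal{D},\mathcal{A})}\,.\tag{8}\]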

Notice that this definition of the test statistic, and in turn its properties [15, 16], assumes that the composite hypothesis in the denominator (\(H_0\)) is contained in the numerator hypothesis (\(H_1\)). This holds in our case since the neural network function in Eq. (4) is equal to zero when all its weights and biases \({\mathbf{{w}}}\) vanish. Therefore \((\mathrm{{H}}_{{\mathbf{{w}}},{\varvec{\nu }}})|_{{\mathbf{{w}}}=0}=\mathrm{{R}}_{\varvec{\nu }}\). Also notice that the test statistic variable t depends on all the data that are employed in the analysis. In particular it depends on the auxiliary data \({\mathcal {A}}\) as well as on the data of interest \({\mathcal {D}}\). We now address the problem of evaluating t, once the data are made available either from the actual experiment or artificially by generating toy datasets.

2.2 The central-value reference hypothesis

In order to proceed, we consider the special point in the space of nuisance parameters that corresponds to their central-value determination as obtained from the auxiliary data alone. If we call \({\mathcal {A}}_0\) the observed auxiliary dataset, namely the one that is observed in the actual experiment, the central values of the nuisance parameters are those maximizing the auxiliary likelihood function \(\mathcal {L}({\varvec{\nu }}|{\mathcal {A}}_0)\). It is always possible to choose the coordinates in the nuisance parameters space such that the central values of all the parameters sit at \(\nu =0\). So we have, by definition,

\[\max_{\varvec{\nu}}\mathcal{L}(\varvec{\nu}|\mathcal{A}_0)=\mathcal{L}(\varvec{0}|\mathcal{A}_0)\,.\tag{9}\]

We stress again that \({\mathcal {A}}_0\) represents one single outcome of the auxiliary measurements (the one observed in the actual experiment), unlike \({\mathcal {A}}\) (and \({\mathcal {D}}\)), which describe all the possible experimental outcomes. Therefore \({\mathcal {A}}_0\), and in turn the central value of the nuisance parameters that we have set to \({\varvec{\nu }}={{\varvec{0}}}\), is not a random variable: it does not fluctuate when we generate toy experiments, unlike \({\mathcal {A}}\) and \({\mathcal {D}}\).

The central-value Reference hypothesis \(\mathrm{{R}}_{\varvec{0}}\) predicts a distribution for the variable x, \(n(x|\mathrm{{R}}_{\varvec{0}})\), that can be regarded as the “best guess” we can make for the actual SM distribution of x before analyzing the dataset of interest \({\mathcal {D}}\). Correspondingly, \({\varvec{\nu }}={{\varvec{0}}}\) is the best prior guess for the value of the nuisances. The likelihood of \(\mathrm{{R}}_{\varvec{0}}\), given by

\[\mathcal{L}(\mathrm{R}_{\varvec{0}}|\mathcal{D},\mathcal{A})=\mathcal{L}(\mathrm{R}_{\varvec{0}}|\mathcal{D})\,\mathcal{L}(\varvec{0}|\mathcal{A})\,,\tag{10}\]

is thus conveniently used to “normalize” the likelihoods in the numerator and denominator of Eq. (8). Namely, we multiply and divide the argument of the log by \(\mathcal {L}(\mathrm{{R}}_{\varvec{0}}|{\mathcal {D}},{\mathcal {A}})\) and we obtain

\[t(\mathcal{D},\mathcal{A})=\tau(\mathcal{D},\mathcal{A})-\Delta(\mathcal{D},\mathcal{A})\,,\tag{11}\]

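where \(\tau \) involves the maximization over the neural network parameters \({\mathbf{{w}}}\) and over \({\varvec{\nu }}\)

\[\tau(\mathcal{D},\mathcal{A})=2\max_{\mathbf{w},\varvec{\nu}}\log\frac{\mathcal{L}(\mathrm{H}_{\mathbf{w},\varvec{\nu}}|\mathcal{D},\mathcal{A})}{\mathcal{L}(\mathrm{R}_{\varvec{0}}|\mathcal{D},\mathcal{A})}\,,\tag{12}\]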

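while the “correction” term \(\Delta \) does not contain the neural network and involves exclusively the Reference hypothesis

\[\Delta(\mathcal{D},\mathcal{A})=2\max_{\varvec{\nu}}\log\frac{\mathcal{L}(\mathrm{R}_{\varvec{\nu}}|\mathcal{D},\mathcal{A})}{\mathcal{L}(\mathrm{R}_{\varvec{0}}|\mathcal{D},\mathcal{A})}\,.\tag{13}\]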

Both \(\tau \) and \(\Delta \) are non-negative, since the maximizations include the central-value point \({\mathbf{{w}}}=0\), \({\varvec{\nu }}={{\varvec{0}}}\), where both likelihood ratios equal one. Since they enter t with opposite signs, the test statistic t emerges from a cancellation between these two terms. The cancellation becomes more severe the further the value of \({\varvec{\nu }}\) favored by the data lies from the central value. In Sect. 2.5 we will describe the nature and the origin of this cancellation in connection with the asymptotic formulae for the distribution of t. Below we outline our strategy for computing \(\tau \) and \(\Delta \), starting from the latter term.
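The decomposition of t into \(\tau\) and \(\Delta\), and the cancellation between them, can be seen in a toy that is deliberately much simpler than the actual method: two Poisson bins, one nuisance parameter that shifts the bin contents in opposite directions, a unit-width Gaussian auxiliary likelihood centred at the toy auxiliary outcome a, and an alternative hypothesis in which a single free weight w rescales the first bin by \(e^{w}\) (a one-parameter stand-in for \(e^{f(x;\mathbf{w})}\)). All numbers and names are hypothetical. The maximizations are performed on grids that contain \(w=0\) and \(\nu=0\) exactly, so that \(\tau\ge 0\), \(\Delta\ge 0\) and \(t\ge 0\) hold by construction.

```python
import numpy as np

B = np.array([100.0, 100.0])       # central-value expected counts per bin (hypothetical)
S = np.array([0.10, -0.10])        # log-response of each bin to the nuisance (hypothetical)
NU = np.arange(-500, 501) / 100.0  # nu grid, contains nu = 0 exactly
W = np.arange(-200, 201) / 200.0   # grid for the single "network" weight, contains w = 0

def log_lik(counts, a, nu, w):
    """log L(H_{w,nu}|D,A) up to constants; w = 0 gives log L(R_nu|D,A).
    The auxiliary likelihood is a unit-width Gaussian in nu centred at the toy outcome a."""
    n0, n1 = counts
    log_mu0 = np.log(B[0]) + nu * S[0] + w   # H rescales bin 0 by exp(w)
    log_mu1 = np.log(B[1]) + nu * S[1]
    poisson = n0 * log_mu0 - np.exp(log_mu0) + n1 * log_mu1 - np.exp(log_mu1)
    return poisson - 0.5 * (nu - a) ** 2

def tau_delta_t(counts, a):
    ref0 = log_lik(counts, a, 0.0, 0.0)                          # central-value R_0
    best_h = log_lik(counts, a, NU[:, None], W[None, :]).max()   # max over (nu, w)
    best_r = log_lik(counts, a, NU, 0.0).max()                   # max over nu only
    tau = 2.0 * (best_h - ref0)
    delta = 2.0 * (best_r - ref0)
    t = 2.0 * (best_h - best_r)
    return tau, delta, t

# Toy data close to the expectation at nu = 2, with auxiliary outcome a = 2
print(tau_delta_t(counts=(122, 82), a=2.0))
```

For data generated with a shifted nuisance, \(\tau\) and \(\Delta\) are both sizable while their difference t stays small, which is the per-toy cancellation described above.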

2.3 Learning the effect of nuisance parameters

The correction term \(\Delta \) in Eq. (13) is the log-ratio between the likelihood of the Reference hypothesis evaluated with best-fit values of the nuisance parameters, and the one with central-value nuisance parameters. This object is of interest for any statistical analysis to be performed on the dataset \({\mathcal {D}}\), as it provides a first indication of the data compatibility with the Reference hypothesis. In particular a sizable departure of the best-fit nuisance parameters from the central values should be monitored as an indication of a mis-modeling of the Reference hypothesis or possibly of a new physics effect.

In order to introduce our strategy for the evaluation of \(\Delta \), it is convenient to first recall the standard approach, employed in most LHC analyses, based on a binned Poisson likelihood approximation of \(\mathcal {L}(\mathrm{{R}}_{\varvec{\nu }}|{\mathcal {D}})\). In this approach, the dataset gets binned and the observed count in each bin is compared with the corresponding \({\varvec{\nu }}\)-dependent cross section prediction. The predictions are obtained by computing each cross section for multiple values of the nuisance parameters and interpolating with a polynomial (or with the exponential of a polynomial, to enforce cross section positivity) around the central value \({\varvec{\nu }}=0\). A simple polynomial is sufficient to model the dependence of the cross section on the nuisances if their effect is small. The polynomial interpolation produces analytic expressions for the cross sections as a function of \({\varvec{\nu }}\), which are fed into the Poisson likelihood. Clearly, if the analytic dependence of the cross section on one or more nuisance parameters is known, then the polynomial approximation is not needed and the exact form can be used. The maximization over \({\varvec{\nu }}\) in Eq. (13) is then performed with standard computer packages.
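A minimal sketch of this binned procedure under hypothetical inputs: three bins whose expected counts have been "simulated" at five values of a single nuisance parameter (here generated from a known smooth response, purely for illustration), an exponential-of-quadratic interpolation obtained by fitting the logarithm of the expected counts in each bin with `numpy.polyfit`, and a unit-width Gaussian auxiliary constraint centred at \(\nu=0\). The maximization over \(\nu\) uses `scipy.optimize.minimize_scalar` on a bounded interval.

```python
import numpy as np
from scipy.optimize import minimize_scalar

NU_SIM = np.array([-2.0, -1.0, 0.0, 1.0, 2.0])   # nuisance values of the "simulations"
SIGMA0 = np.array([500.0, 200.0, 50.0])           # central-value expected counts per bin
SLOPE = np.array([0.05, 0.10, 0.20])              # hypothetical log-response per unit nu
# SIM[i, b] = expected counts in bin b for nu = NU_SIM[i]
SIM = SIGMA0 * np.exp(np.outer(NU_SIM, SLOPE) + 0.01 * np.outer(NU_SIM**2, SLOPE))

# Exponential-of-polynomial interpolation: quadratic fit of log(counts) in each bin
COEFFS = [np.polyfit(NU_SIM, np.log(SIM[:, b]), deg=2) for b in range(SIM.shape[1])]

def expected_counts(nu):
    return np.array([np.exp(np.polyval(c, nu)) for c in COEFFS])

def neg_log_lik(nu, counts):
    mu = expected_counts(nu)
    # binned Poisson term (constants dropped) plus unit-width Gaussian auxiliary constraint
    return -(np.sum(counts * np.log(mu) - mu) - 0.5 * nu**2)

def best_fit_nu(counts):
    res = minimize_scalar(neg_log_lik, bounds=(-5.0, 5.0), args=(counts,), method="bounded")
    return res.x

asimov = expected_counts(1.0)   # expected counts at nu = 1, used as data
nu_hat = best_fit_nu(asimov)
delta = 2.0 * (neg_log_lik(0.0, asimov) - neg_log_lik(nu_hat, asimov))
print(nu_hat, delta)
```

The best-fit value obtained from data generated at \(\nu=1\) is pulled somewhat toward the central value by the auxiliary constraint.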

In principle we could proceed to the evaluation of \(\Delta \) exactly as described above. However, we found it simpler and more effective to employ an un-binned \(\mathcal {L}(\mathrm{{R}}_{\varvec{\nu }}|{\mathcal {D}})\) likelihood, obtained by reconstructing the ratio between the \(n(x|\mathrm{{R}}_{\varvec{\nu }})\) and \(n(x|\mathrm{{R}}_{\varvec{0}})\) distributions locally in the feature space. This is achieved by a rather straightforward adaptation of likelihood-reconstruction techniques based on neural networks developed in the literature [17,18,19,20,21,22,23]. In particular, our implementation (briefly summarized below) closely follows Refs. [21,22,23], to which we refer the reader for a more in-depth exposition. As for the regular binned approach, the basic idea is to employ a polynomial approximation for the dependence of the distribution on the nuisances. The polynomial coefficients, functions of the input x, are expressed as suitably trained neural networks. For instance, in the case of a single nuisance parameter \(\nu \) we would write

\[r(x;\nu)\equiv\frac{n(x|\mathrm{R}_{\nu})}{n(x|\mathrm{R}_{0})}=\exp\left[\nu\,\delta_1(x)+\nu^2\,\delta_2(x)+\ldots\right],\tag{14}\]

with the Taylor series expansion in the exponent truncated at some finite order. Clearly the truncation is justified only if the effect of the nuisance is a relatively small correction to the central-value distribution. More precisely, nuisance effects must be small when \(\nu \) is in a “plausibility” range around 0, compatibly with the shape of the auxiliary likelihood \(\mathcal {L}({\varvec{\nu }}|{\mathcal {A}})\). For instance, if the auxiliary likelihood is Gaussian with standard deviation \(\sigma _\nu \), we should worry about the validity of the approximation in Eq. (14) only for \(\nu \) within a few \(\sigma _\nu \) of zero. Larger values are not relevant for the maximization in Eq. (13) because they are suppressed by \(\mathcal {L}({\varvec{\nu }}|{\mathcal {A}})\). Notice that in Eq. (14) we might have opted for a polynomial approximation of the ratio r rather than of its logarithm. However, the latter choice guarantees the positivity of r even when the numerical minimization algorithm is led to explore regions where \(\nu \) is large. Furthermore, working with \(\log \,r(x;{\varvec{\nu }})\) is more convenient for our purposes, as we will see shortly. The polynomial expansion in Eq. (14) can be straightforwardly generalized to deal with several nuisance parameters, including if needed mixed quadratic terms to capture the correlated effects of two different parameters.
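The exponential-of-polynomial form of Eq. (14) is exact in some simple cases, which makes them useful for validating a reconstruction. For instance, if a nuisance parameter shifts the mean of a unit-width Gaussian feature without changing the total rate, \(n(x|\mathrm{R}_\nu)\propto e^{-(x-\nu)^2/2}\), then \(\log r(x;\nu)=\nu x-\nu^2/2\), i.e., \(\delta_1(x)=x\) and \(\delta_2(x)=-1/2\) with no higher orders. The sketch below checks this numerically; the Gaussian toy and the function names are illustrative assumptions.

```python
import numpy as np

def n_gauss(x, nu, n_total=1000.0):
    """n(x|R_nu) for a hypothetical toy: n_total events, unit-width Gaussian with mean nu."""
    return n_total * np.exp(-0.5 * (x - nu) ** 2) / np.sqrt(2.0 * np.pi)

def log_r_exact(x, nu):
    """log of the distribution ratio r(x; nu) = n(x|R_nu) / n(x|R_0)."""
    return np.log(n_gauss(x, nu) / n_gauss(x, 0.0))

def log_r_quadratic(x, nu, delta1, delta2):
    """Exponent of Eq. (14) truncated at second order: nu*delta_1(x) + nu^2*delta_2(x)."""
    return nu * delta1(x) + nu**2 * delta2(x)

x = np.linspace(-4.0, 4.0, 81)
for nu in (-1.0, 0.3, 2.0):
    approx = log_r_quadratic(x, nu, lambda z: z, lambda z: -0.5 * np.ones_like(z))
    print(nu, np.max(np.abs(log_r_exact(x, nu) - approx)))   # agreement up to rounding
```

Because r is written as an exponential, it stays positive for any value of the exponent, which is the positivity argument given above.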

Approximations \({\widehat{\delta }}(x)\) of the \(\delta (x)\) coefficient functions are obtained as follows. Consider a continuous-output classifier \(c(x;{\varvec{\nu }})\in (0,1)\) defined as

\[c(x;\varvec{\nu})=\frac{1}{1+\widehat{r}(x;\varvec{\nu})}\,,\tag{15}\]

where \({\widehat{r}}\) has the same dependence on the nuisance parameter as the true distribution ratio r. For instance, in the case of a single nuisance parameter, and truncating Eq. (14) at the quadratic order, we have

\[\widehat{r}(x;\nu)=\exp\left[\nu\,\widehat{\delta}_1(x)+\nu^2\,\widehat{\delta}_2(x)\right],\tag{16}\]

where \({\widehat{\delta }}_{1,2}(x)\) represents two suitably trained single-output neural network models.Footnote 3

The training is performed on a set of data samples \(\mathrm{{S}}_0({\varvec{\nu }}_i)\) that follow the distribution of x in the \(\mathrm{{R}}_{\varvec{\nu }}\) hypothesis at different points \({\varvec{\nu }}={\varvec{\nu }}_i\ne {{\varvec{0}}}\) in the nuisance parameters space. Two distinct \({\varvec{\nu }}_i\) points are sufficient to learn the two coefficient functions associated with a single nuisance parameter at the quadratic order. Employing more points is possible and typically beneficial for the accuracy of the coefficient functions reconstruction. Data samples produced in the central-value Reference hypothesis \({\varvec{\nu }}={{\varvec{0}}}\) are also employed, one for each \(\mathrm{{S}}_0({\varvec{\nu }}_i)\) sample. These central-value Reference samples are denoted as \(\mathrm{{S}}_1({\varvec{\nu }}_i)\), even though they all follow the \(\mathrm{{R}}_{\varvec{0}}\) hypothesis. Each event “e” in the samples has a weight \(w_{\mathrm{{e}}}\), normalized such that the sum of the weights in each sample equals the total number of expected events in the corresponding hypothesis (i.e., \(\mathrm{{N}}({\mathrm{{R}}_{{\varvec{\nu }}_i}})\) for \(\mathrm{{S}}_0({\varvec{\nu }}_i)\) and \(\mathrm{{N}}(\mathrm{{R}}_{\varvec{0}})\) for \(\mathrm{{S}}_1({\varvec{\nu }}_i)\)). The loss function is

\[L[\widehat{\delta}]=\sum_i\Big[\sum_{\mathrm{e}\in\mathrm{S}_0(\varvec{\nu}_i)}w_{\mathrm{e}}\,c(x_{\mathrm{e}};\varvec{\nu}_i)^2+\sum_{\mathrm{e}\in\mathrm{S}_1(\varvec{\nu}_i)}w_{\mathrm{e}}\,\big(1-c(x_{\mathrm{e}};\varvec{\nu}_i)\big)^2\Big]\,.\tag{17}\]

It is not difficult to show [21] that the \({\widehat{\delta }}\) networks trained with the loss in Eq. (17) converge to the corresponding coefficient functions \(\delta \) in the limit of large samples, provided of course that the true distribution ratio has the form of Eq. (14).
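The next block is a hedged sketch of the reconstruction step on the one-dimensional toy of Sect. 3, with only the scale nuisance active. It rests on several assumptions that are not part of the text:

- The Reference distribution is a unit-rate exponential. Then \(\log r=-\nu +x(1-e^{-\nu })\), whose quadratic expansion gives \(\delta _1=x-1\) and \(\delta _2=-x/2\).
- The classifier output is \(c=1/(1+{\widehat{r}})\).
- The two neural networks are replaced by functions linear in x, \({\widehat{\delta }}_k=a_k+b_k x\), which suffice here.
- In place of the loss of Eq. (17), we swap in a weighted binary cross-entropy minimized by Newton iterations. This is a different loss whose pointwise minimizer also yields \(c=1/(1+r)\).

The training points \(\nu _i=\pm 0.3\) and the sample sizes are illustrative choices.

```python
import numpy as np

rng = np.random.default_rng(1)
N_R0 = 2000.0            # expected events in R_0 (value used in Sect. 3)
n_mc = 200_000           # events per training sample (assumption)
nu_points = (-0.3, 0.3)  # two nu_i != 0 points for a single nuisance (assumption)

feats, labels, weights = [], [], []
for nu_i in nu_points:
    # S0(nu_i): scale nuisance only, x = e^{nu_i} * x_true, unit-rate exponential assumed
    x0 = np.exp(nu_i) * rng.exponential(1.0, n_mc)
    # S1(nu_i): central-value Reference sample paired with S0(nu_i)
    x1 = rng.exponential(1.0, n_mc)
    for x, label in ((x0, 1.0), (x1, 0.0)):
        # log r_hat = nu (a1 + b1 x) + nu^2 (a2 + b2 x) is linear in theta = (a1, b1, a2, b2)
        ones = np.ones_like(x)
        feats.append(np.column_stack([nu_i * ones, nu_i * x, nu_i**2 * ones, nu_i**2 * x]))
        labels.append(np.full(n_mc, label))
        # weights sum to the expected yield; the scale nuisance leaves N(R_nu) = N(R_0)
        weights.append(np.full(n_mc, N_R0 / n_mc))

X, y, w = np.vstack(feats), np.concatenate(labels), np.concatenate(weights)

# Weighted cross-entropy with p = sigmoid(log r_hat) = 1 - c: minimized pointwise at
# r_hat = n(x|R_nu) / n(x|R_0).  Newton (IRLS) iterations on the convex problem.
theta = np.zeros(4)
for _ in range(25):
    p = 1.0 / (1.0 + np.exp(-X @ theta))
    grad = X.T @ (w * (p - y))
    hess = (X * (w * p * (1.0 - p))[:, None]).T @ X
    theta -= np.linalg.solve(hess, grad)

a1, b1, a2, b2 = theta
print(f"delta1_hat(x) = {a1:.3f} + {b1:.3f} x   (expansion: -1 + x)")
print(f"delta2_hat(x) = {a2:.3f} + {b2:.3f} x   (expansion: -x/2)")
```

With finite \(\nu _i\) the fitted slopes absorb part of the higher-order terms (asymptotically \(b_1\simeq 1.015\), \(b_2\simeq -0.504\) for \(\nu _i=\pm 0.3\)). This mirrors how the truncated expansion is only as good as the range of \(\nu \) where it is trained.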

The basic strategy outlined above can be improved and refined in several aspects [22, 23], whose detailed description falls however outside the scope of the present paper. For our purposes it is sufficient to know that the coefficient functions in Eq. (14) can be rather easily and accurately reconstructed. As such, the dependence on \({\varvec{\nu }}\) of the distribution ratio \(r(x;{\varvec{\nu }})\) is known analytically at each point x of the features space. This solves our problem of evaluating the correction term \(\Delta \) in Eq. (13), because \(\Delta \) is

Because we adopted the exponential parametrization of r in Eq. (14), the first term in the curly brackets is a polynomial in \({\varvec{\nu }}\). The constant term of the polynomial vanishes. The higher-degree coefficients are the sums over \(x\in {\mathcal {D}}\) of the corresponding \(\delta (x)\) coefficient functions, approximated with the reconstructed \({\widehat{\delta }}(x)\) provided by the trained neural networks. The second term, \(\mathrm{{N}}(\mathrm{{R}}_{\varvec{\nu }})\), is proportional to the total cross section in the \(\mathrm{{R}}_{\varvec{\nu }}\) hypothesis. It can be approximated with a polynomial, or with the exponential of a polynomial, as in regular binned likelihood analyses. Finally, \(\mathrm{{N}}(\mathrm{{R}}_{\varvec{0}})\) is a constant, and the log ratio between the \({\varvec{\nu }}\) and the \({\mathbf{0}}\) auxiliary likelihoods is also known in analytical form. In most cases the auxiliary likelihood is Gaussian and \(\log [\mathcal {L}({\varvec{\nu }}|{\mathcal {A}})/\mathcal {L}({{\varvec{0}}}|{\mathcal {A}})]\) is merely a quadratic polynomial. In summary, all the terms in the curly brackets of Eq. (18) are known analytically. The maximization required to evaluate \(\Delta \) is thus a straightforward task for standard numerical optimization packages.
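To make this concrete, the sketch below evaluates \(\Delta \) for the one-dimensional toy of Sect. 3. It assumes that Eq. (18) has the extended-likelihood form \(\Delta =2\max _{\varvec{\nu }}\{\sum _{x\in {\mathcal {D}}}\log r(x;{\varvec{\nu }})-[\mathrm{{N}}(\mathrm{{R}}_{\varvec{\nu }})-\mathrm{{N}}(\mathrm{{R}}_{\varvec{0}})]+\log [\mathcal {L}({\varvec{\nu }}|{\mathcal {A}})/\mathcal {L}({{\varvec{0}}}|{\mathcal {A}})]\}\), matching the terms listed above. It also assumes the following toy inputs:

- A unit-rate exponential Reference. Then \(\log r=\nu _{{\textsc {n}}}\) exactly for the normalization, and the quadratic truncation for the scale gives \(\delta _1=x-1\), \(\delta _2=-x/2\).
- \(\mathrm{{N}}(\mathrm{{R}}_{\varvec{\nu }})=e^{\nu _{{\textsc {n}}}}\mathrm{{N}}(\mathrm{{R}}_{\varvec{0}})\).
- Independent Gaussian auxiliary likelihoods.

The maximization then splits into a one-dimensional root search for \(\nu _{{\textsc {n}}}\) and a closed-form maximum for \(\nu _{{\textsc {s}}}\). `delta_toy` is a hypothetical helper name.

```python
import math
import numpy as np


def delta_toy(x, n0, nu_hat, sigma):
    """Delta for the toy; returns (Delta, (nu_n, nu_s) at the maximum)."""
    n_d, s = len(x), float(np.sum(x))
    nh_n, nh_s = nu_hat
    sg_n, sg_s = sigma

    # Normalization: g(v) = n_d v - n0 (e^v - 1) - (v - nh_n)^2/(2 sg_n^2) + nh_n^2/(2 sg_n^2).
    # g'(v) is strictly decreasing, so its single root is found by bisection.
    def dg(v):
        return n_d - n0 * math.exp(v) - (v - nh_n) / sg_n**2

    lo, hi = -5.0, 5.0
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        lo, hi = (mid, hi) if dg(mid) > 0 else (lo, mid)
    v_n = 0.5 * (lo + hi)
    g_n = (n_d * v_n - n0 * math.expm1(v_n)
           - (v_n - nh_n)**2 / (2 * sg_n**2) + nh_n**2 / (2 * sg_n**2))

    # Scale: sum_x log r = v (s - n_d) - v^2 s / 2 at quadratic order, a concave
    # quadratic in v, so the maximum is analytic.
    v_s = (s - n_d + nh_s / sg_s**2) / (s + 1.0 / sg_s**2)
    g_s = (v_s * (s - n_d) - 0.5 * v_s**2 * s
           - (v_s - nh_s)**2 / (2 * sg_s**2) + nh_s**2 / (2 * sg_s**2))
    return 2.0 * (g_n + g_s), (v_n, v_s)


rng = np.random.default_rng(0)
n0 = 2000.0
data = rng.exponential(1.0, rng.poisson(n0))   # one toy dataset drawn from R_0
Delta, nu_best = delta_toy(data, n0, nu_hat=(0.0, 0.0), sigma=(0.1, 0.05))
print(Delta, nu_best)
```

Since \({\varvec{\nu }}={{\varvec{0}}}\) makes the bracket vanish, \(\Delta \ge 0\) by construction.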

2.4 Maximum likelihood from minimal loss

We now turn to the evaluation of the \(\tau \) term defined in Eq. (12). This term involves the \(\mathrm{{H}}_{{\mathbf{{w}}},{\varvec{\nu }}}\) hypothesis, which allows for possible non-SM effects (i.e., departures from the Reference Model) in the distribution of x. Non-SM effects are parametrized by the neural network \(f(x;{\mathbf{{w}}})\) as in Eq. (4). The calculation of \(\tau \) involves the maximization over the neural network weights and biases, \({\mathbf{{w}}}\), and over the nuisance parameters \({\varvec{\nu }}\). The maximization will be performed by running a training algorithm, treating both \({\mathbf{{w}}}\) and \({\varvec{\nu }}\) as trainable parameters. The algorithm will exploit the knowledge of the \(\delta \) coefficient functions provided by the \({\widehat{\delta }}\) neural networks, as explained in the previous section. However, the latter networks are pre-trained. Therefore their parameters are not trainable during the evaluation of \(\tau \), even though they do appear in the loss function, as we will see shortly.

In order to turn the evaluation of \(\tau \) into a training problem, the first step is to combine Eq. (4) with the definition of r in Eq. (14), obtaining

We then rewrite \(\tau \) in the form

The first, third and fourth terms in the curly brackets are easily available. The first one depends on the neural network \(f(x;{\mathbf{{w}}})\), as well as on the coefficient functions \(\delta \) (approximated by the neural networks \({\widehat{\delta }}\)) through \(r(x;{\varvec{\nu }})\) in Eq. (14). The second term is the total number of events in the \(\mathrm{{H}}_{{\mathbf{{w}}},{\varvec{\nu }}}\) hypothesis, given by

Clearly \(\mathrm{{N}}(\mathrm{{H}}_{{\mathbf{{w}}},{\varvec{\nu }}})\) is not readily available, because \(n(x|\mathrm{{R}}_{\varvec{0}})\) is not known in closed form and, even if it were, computing the integral as a function of \({\mathbf{{w}}}\) and \({\varvec{\nu }}\) would be numerically infeasible.

Evaluating \(\mathrm{{N}}(\mathrm{{H}}_{{\mathbf{{w}}},{\varvec{\nu }}})\) requires us to employ a Reference data set \({\mathcal {R}}=\{x_1,\ldots ,x_{\mathrm{{N}}_{\mathcal {R}}}\}\). As described in Sect. 1, \({\mathcal {R}}\) consists of synthetic instances of the variable x that follow the Reference Model distribution. The \({\mathcal {R}}\) set plus the data \({\mathcal {D}}\) constitute the sample that we will employ for training the neural network \(f(x;{\mathbf{{w}}})\). Notice that the \({\mathcal {R}}\) dataset follows, by construction, the central-value distribution \(n(x|\mathrm{{R}}_{\varvec{0}})\). It might result from a first-principle Monte Carlo simulation, or have a data-driven origin. In both cases it might take the form of a weighted event sample. Footnote 4 We choose the normalization of the weights such that

If the \({\mathcal {R}}\) sample is “unweighted”, all the weights are equal to \(w_{\mathrm{{e}}}=\mathrm{{N}}(\mathrm{{R}}_{\varvec{0}})/\mathrm{{N}}_{\mathcal {R}}\), with \(\mathrm{{N}}_{\mathcal {R}}\) the Reference sample size. Conceptually, the Reference sample plays the same role as the central-value Reference Model prediction in regular model-dependent LHC searches. Its composition and origin are the same as those of the samples \(\mathrm{{S}}_1({\varvec{\nu }}_i)\) employed to learn the effect of nuisance parameters with the strategy outlined in the previous section.

With the normalization (22), the weighted sum of a function of x over the Reference sample approximates the integral of the function with integration measure \(n(x|\mathrm{{R}}_{\varvec{0}})dx\). Therefore

where the accuracy of the approximation improves as the square root of the Reference sample size. In what follows we assume an infinitely abundant Reference sample and turn the approximate equality above into a strict equality. Clearly, in so doing we are ignoring the uncertainties associated with the finite statistics of \({\mathcal {R}}\). This is justified if \(\mathrm{{N}}_{\mathcal {R}}\gg \mathrm{{N}}(\mathrm{{R}}_{\varvec{0}})\sim {{\mathcal {N}}_{\mathcal {D}}}\), because in this case the statistical variability of \(\tau \) is expected to be dominated by the statistical fluctuations of the data sample \({\mathcal {D}}\). All the results of the present paper are compatible with this expectation for \(\mathrm{{N}}_{\mathcal {R}}\) a few times larger than \({{\mathcal {N}}_{\mathcal {D}}}\).
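The following numpy sketch checks this approximation numerically. It assumes, as the combination of Eq. (4) with Eq. (14) suggests, that \(n(x|\mathrm{{H}}_{{\mathbf{{w}}},{\varvec{\nu }}})=n(x|\mathrm{{R}}_{\varvec{0}})\,r(x;{\varvec{\nu }})\,e^{f(x;{\mathbf{{w}}})}\). Under that assumption, \(\mathrm{{N}}(\mathrm{{H}}_{{\mathbf{{w}}},{\varvec{\nu }}})\) is estimated by the weighted sum \(\sum _{{\mathrm{{e}}}\in {\mathcal {R}}}w_{\mathrm{{e}}}\,r(x_{\mathrm{{e}}};{\varvec{\nu }})\,e^{f(x_{\mathrm{{e}}};{\mathbf{{w}}})}\). The example is chosen so that the integral is known exactly:

- a unit-rate exponential Reference;
- a normalization-only nuisance, \(r=e^{\nu _{{\textsc {n}}}}\);
- a hypothetical linear stand-in \(f(x)=a\,x\) for the neural network.

```python
import numpy as np

rng = np.random.default_rng(2)
n0, n_ref = 2000.0, 200_000
x_ref = rng.exponential(1.0, n_ref)      # unweighted Reference sample from R_0 (unit rate assumed)
w_ref = np.full(n_ref, n0 / n_ref)       # weights sum to N(R_0)

a, nu_n = 0.3, 0.1                       # hypothetical f(x) = a x and normalization nuisance
f_ref = a * x_ref
r_ref = np.full(n_ref, np.exp(nu_n))     # normalization-only nuisance: r = e^{nu_n}

n_h_mc = np.sum(w_ref * r_ref * np.exp(f_ref))   # weighted sum over the Reference sample
n_h_exact = n0 * np.exp(nu_n) / (1.0 - a)         # integral of n0 e^{-x} e^{nu_n} e^{a x} over [0, inf)
print(n_h_mc, n_h_exact, n_h_mc / n_h_exact - 1.0)
```

With \(2\times 10^5\) Reference events the relative error is at the \(10^{-3}\) level for this choice of f. It would grow for functions f that weight the sparsely populated tail more heavily.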

By combining Eqs. (20), (22) and (23), and by factoring out a minus sign to turn the maximization into a minimization, we express

where L has the form of a loss function for a supervised training between the \({\mathcal {D}}\) and \({\mathcal {R}}\) samples

The loss depends on the neural network function \(f(\cdot ;{\mathbf{{w}}})\) and in particular on its trainable parameters \({\mathbf{{w}}}\). It also depends on the nuisance parameters \({\varvec{\nu }}\), through the ratio r and through the auxiliary likelihood ratio term. The minimization over the nuisances is required by Eq. (24); therefore the nuisances should be treated as trainable parameters on the same footing as the neural network parameters \({\mathbf{{w}}}\). This is relatively straightforward to implement in standard deep learning packages, provided the loss depends on \({\varvec{\nu }}\) through analytically differentiable functions. This is the case for \(r(x;{\varvec{\nu }})\), and typically also for the auxiliary likelihood ratio. The loss also depends on the reconstructed coefficient functions \({\widehat{\delta }}\). However, this dependence is purely parametric: the parameters of the \({\widehat{\delta }}\) networks are fixed at their optimal values, determined in a previous training as described in Sect. 2.3. After training, \(\tau \) is obtained, according to Eq. (24), as minus two times the minimal loss.
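As a sketch of the joint minimization, the block below assumes that the loss has the form \(L=\sum _{{\mathrm{{e}}}\in {\mathcal {R}}}w_{\mathrm{{e}}}[r\,e^{f}-1]-\sum _{x\in {\mathcal {D}}}[f+\log r]-\log [\mathcal {L}({\varvec{\nu }}|{\mathcal {A}})/\mathcal {L}({{\varvec{0}}}|{\mathcal {A}})]\). This is what the terms of Eq. (20) give after Eqs. (22) and (23) are used. The sketch also makes the following substitutions:

- The neural network is replaced by a hypothetical two-parameter linear function \(f(x)=w_0+w_1x\).
- Only a normalization nuisance is kept, \(\log r=\nu \), with a Gaussian auxiliary likelihood.
- A stochastic-gradient training is swapped for Newton iterations. This is possible only because this stand-in loss is convex in \((w_0,w_1,\nu )\).

As in the text, \(\nu \) is optimized jointly with the "network" parameters.

```python
import numpy as np


def loss(theta, x_ref, w_ref, x_data, nu_hat, sigma):
    """L = sum_R w_e [r e^f - 1] - sum_D [f + log r] - log[L(nu|A)/L(0|A)] for the stand-in model."""
    w0, w1, nu = theta
    ref_term = np.sum(w_ref * (np.exp(nu + w0 + w1 * x_ref) - 1.0))
    data_term = np.sum(w0 + w1 * x_data + nu)
    log_aux = -(nu - nu_hat)**2 / (2 * sigma**2) + nu_hat**2 / (2 * sigma**2)
    return ref_term - data_term - log_aux


def minimize_loss(x_ref, w_ref, x_data, nu_hat, sigma, n_iter=30):
    """Newton iterations over theta = (w0, w1, nu); the stand-in loss is convex."""
    theta = np.zeros(3)
    phi_ref = np.column_stack([np.ones_like(x_ref), x_ref, np.ones_like(x_ref)])
    phi_data_sum = np.array([len(x_data), np.sum(x_data), len(x_data)])
    for _ in range(n_iter):
        e = w_ref * np.exp(phi_ref @ theta)          # w_e r(x_e) e^{f(x_e)}
        grad = phi_ref.T @ e - phi_data_sum
        grad[2] += (theta[2] - nu_hat) / sigma**2    # auxiliary-likelihood gradient
        hess = (phi_ref * e[:, None]).T @ phi_ref
        hess[2, 2] += 1.0 / sigma**2
        theta -= np.linalg.solve(hess, grad)
    return theta


rng = np.random.default_rng(3)
n0, n_ref = 2000.0, 200_000
x_ref = rng.exponential(1.0, n_ref)              # Reference sample (unit-rate exponential assumed)
w_ref = np.full(n_ref, n0 / n_ref)
x_data = rng.exponential(1.0, rng.poisson(n0))   # toy data drawn from R_0
nu_hat, sigma = 0.0, 0.1

theta_best = minimize_loss(x_ref, w_ref, x_data, nu_hat, sigma)
tau = -2.0 * loss(theta_best, x_ref, w_ref, x_data, nu_hat, sigma)
print(theta_best, tau)
```

Because the loss vanishes at \({\mathbf{{w}}}=0,\nu =0\), the minimum is non-positive and \(\tau \ge 0\). A realistic implementation would instead declare \(\nu \) as an additional trainable variable next to the network weights in a deep learning package.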

Our strategy to evaluate \(\tau \) is a relatively straightforward extension of the one developed in Refs. [1, 2]. In the absence of nuisance parameters, namely in the limit where \(r(x;{\varvec{\nu }})\) is independent of \({\varvec{\nu }}\) and identically equal to one, the loss in Eq. (25) reduces to the one of Refs. [1, 2], plus the auxiliary log likelihood ratio that carries all the dependence on \({\varvec{\nu }}\) and can be minimized independently. The latter term however cancels in the test statistic t when subtracting the correction term \(\Delta \) (18) and the results of Refs. [1, 2] are recovered in the absence of nuisances, as it should be.

2.5 Asymptotic formulae

We now discuss the actual feasibility of a frequentist hypothesis test based on our variable t (8). The generic problem with frequentist tests stems from the determination of the distribution of the t variable in the null hypothesis, \(P(t|H_0)\), out of which the p-value of the observed data is extracted. If the null hypothesis is a simple one, this distribution can be obtained rigorously by running toy experiments, with a procedure that is computationally demanding but feasible, especially if one does not aim to probe the extreme tail of the t distribution. If instead the null hypothesis \(H_0=\mathrm{{R}}_{\varvec{\nu }}\) is composite, as in this case due to the nuisances, and if \(P(t|\mathrm{{R}}_{\varvec{\nu }})\) (and in turn the p-value) depends on the value of \({\varvec{\nu }}\), the problem becomes extremely hard, as one should in principle run toy experiments and compute \(P(t|\mathrm{{R}}_{\varvec{\nu }})\) for each value of \({\varvec{\nu }}\). Indeed, in frequentist statistics there is no notion of probability for the parameters. Consequently each value of \({\varvec{\nu }}\) defines an equally “likely” hypothesis in the set of null hypotheses \(\mathrm{{R}}_{\varvec{\nu }}\). We can thus quantify the level of incompatibility of the data with the null hypothesis only by defining the p-value as the maximum p-value obtainable by a scan over the \({\varvec{\nu }}\) parameters in their entire allowed range. Since this is not feasible, the only option is to employ a suitably designed test statistic such that \(P(t|\mathrm{{R}}_{\varvec{\nu }})\) is independent of \({\varvec{\nu }}\) to a good approximation.

The considerations above are deeply rooted in the standard treatment of nuisance parameters. They actually constitute the very reason for the choice, in LHC analyses [24], of a specific Maximum Likelihood ratio test statistic, whose distribution is in fact independent of \({\varvec{\nu }}\) in the asymptotic limit where the number of observations is large. Specifically, \(P(t|\mathrm{{R}}_{\varvec{\nu }})\) approaches a \(\chi ^2\) distribution with a number of degrees of freedom equal to the number of free parameters in the “numerator” hypothesis \(\mathrm{{H}}_{{\mathbf{{w}}},{\varvec{\nu }}}\), minus the number of parameters of the “denominator” hypothesis \(\mathrm{{R}}_{\varvec{\nu }}\), owing to the Wilks–Wald Theorem [15, 16]. In a regular model-dependent search [24], the number of degrees of freedom of the \(\chi ^2\) equals the number of free parameters of the new physics model that is being searched for (i.e., the so-called “parameters of interest”). The exact same asymptotic result applies in our case because our test statistic is also defined and rigorously computed as a Maximum Likelihood ratio. Its distribution in the null hypothesis will thus be independent of \({\varvec{\nu }}\) and approach a \(\chi ^2\) distribution. The number of degrees of freedom is given in this case by the number of trainable parameters \({\mathbf{{w}}}\) of the neural network.
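In the asymptotic regime, the p-value of an observed t is simply the \(\chi ^2\) survival function, with as many degrees of freedom as trainable network parameters. The stdlib-only sketch below computes it for integer degrees of freedom, using the standard closed forms in terms of `math.erfc` and finite sums, which keep small tail probabilities accurate. It also converts the p-value to a one-sided Gaussian significance Z. Both helper names are hypothetical.

```python
import math
from statistics import NormalDist


def chi2_sf(t, k):
    """P(chi2_k > t) for integer k >= 1, from the closed forms of the chi2 tail."""
    if t <= 0.0:
        return 1.0
    if k % 2 == 0:
        # even k: e^{-t/2} * sum_{i=0}^{k/2-1} (t/2)^i / i!
        term, total = 1.0, 1.0
        for i in range(1, k // 2):
            term *= (t / 2) / i
            total += term
        return math.exp(-t / 2) * total
    # odd k: erfc(sqrt(t/2)) + sqrt(2t/pi) e^{-t/2} * sum_{i=1}^{(k-1)/2} t^{i-1} / (1*3*...*(2i-1))
    term, total = 1.0, 0.0
    for i in range(1, (k - 1) // 2 + 1):
        if i > 1:
            term *= t / (2 * i - 1)
        total += term
    return math.erfc(math.sqrt(t / 2)) + math.sqrt(2 * t / math.pi) * math.exp(-t / 2) * total


def asymptotic_p_and_z(t_obs, dof):
    """Asymptotic p-value and one-sided significance Z = Phi^{-1}(1 - p)."""
    p = chi2_sf(t_obs, dof)
    if p >= 1.0:
        return p, -math.inf
    if p <= 0.0:
        return p, math.inf
    return p, -NormalDist().inv_cdf(p)


# Example: 13 degrees of freedom, as for the (1, 4, 1) network of Sect. 3.1.
print(asymptotic_p_and_z(22.362, 13))   # t near the 95th percentile of chi2_13
```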

As already stressed in Sect. 1, however, asymptotic formulae such as the Wilks–Wald Theorem only hold in the limit of an infinitely large data set and therefore they offer no guarantee that \(P(t|\mathrm{{R}}_{\varvec{\nu }})\) will resemble a \(\chi ^2\) (and be independent of \({\varvec{\nu }}\)) in concrete analyses where the dataset has a finite size. At fixed dataset size, whether this is the case or not depends on the complexity (or expressivity) of the parameter-dependent hypothesis that is being compared with the data. When fitted by the likelihood maximization, an extremely flexible hypothesis will adapt its free parameters to reproduce (overfit) the observed data points individually. Therefore the value of t that results from the maximization can be driven by low-statistics portions of the dataset and thus violate the asymptotic condition even if the total size of the dataset is large. The expressivity of our hypothesis is driven by the architecture (number of neurons and layers) of the neural network \(f(x;{\mathbf{{w}}})\), and by the other hyper-parameters (a weight clipping, in our implementation) that regularize the network preventing it from developing overly sharp features. We can thus enforce the validity of the asymptotic formula, i.e. ensure that \(P(t|\mathrm{{R}}_{\varvec{\nu }})\) is close to a \(\chi ^2\) and independent of \({\varvec{\nu }}\), by properly selecting the neural network hyper-parameters.

For the selection of the hyper-parameters according to the \(\chi ^2\) compatibility criterion we proceed as in Ref. [2], where this criterion had already been introduced on a more heuristic basis, unrelated to nuisance parameters. We generate toy datasets following the central-value hypothesis \(\mathrm{{R}}_{\varvec{0}}\), we compute t and we compare its empirical distribution with a \(\chi ^2\) with as many degrees of freedom as the number of parameters of the neural network. We select the largest neural network architecture and the maximal weight clipping for which a good level of compatibility is found. Notice that whether or not a given neural network model is sufficiently “simple” to respect the asymptotic formula is conceptually unrelated to the presence of nuisance parameters. Furthermore, our goal is to show that the presence of nuisances does not affect the distribution of t. Therefore when we enforce the \(\chi ^2\) compatibility, with the strategy outlined above, we compute t as if nuisance parameters were absent. After the model is selected, based on the Wilks–Wald Theorem we expect that the distribution of t will be a \(\chi ^2\) with the same number of degrees of freedom even in the presence of nuisance parameters. This can be verified by recomputing the distribution of t, including this time the effect of nuisances, on the \(\mathrm{{R}}_{\varvec{0}}\) toys and on new toy samples generated according to \(\mathrm{{R}}_{\varvec{\nu }}\) with different values \({\varvec{\nu }}\ne {\mathbf {0}}\) of the nuisance parameters. Explicit implementations of this procedure, and confirmations of the validity of the asymptotic formulae, will be described in Sects. 3 and 4.

The Wilks–Wald Theorem also enables us to develop a qualitative understanding of the interplay between the \(\tau \) and \(\Delta \) terms in the determination of t (Eq. (11)). Both \(\tau \) (Eq. (12)) and \(\Delta \) (Eq. (13)) are Maximum Likelihood log-ratios, with the simple hypothesis \(\mathrm{{R}}_{\varvec{0}}\) playing the role of the denominator hypothesis. Therefore \(\tau \) and \(\Delta \) are also distributed as a \(\chi ^2_d\) with d degrees of freedom, if the data follow the \(\mathrm{{R}}_{\varvec{0}}\) hypothesis itself. In the case of \(\tau \), d is the number of neural network parameters plus the number of nuisance parameters. The number of degrees of freedom of \(\Delta \) is instead given by the number of nuisance parameters. The test statistic t, whose value emerges from a cancellation between \(\tau \) and \(\Delta \), has d equal to the number of neural network parameters, as previously discussed. The cancellation is not severe in this case, because the number of nuisance parameters is typically smaller than the number of neural network parameters. Namely, the values of \(\tau \) and \(\Delta \) for each individual toy will not be, on average, much larger than \(t=\tau -\Delta \). Suppose instead that the data follow \(\mathrm{{R}}_{\varvec{\nu }}\) with some \({\varvec{\nu }}\ne {{\varvec{0}}}\). This hypothesis belongs to the numerator (composite) hypothesis in the definitions of \(\tau \) and \(\Delta \). The Wilks–Wald Theorem predicts in this case non-central \(\chi ^2\) distributions [15], with increasingly large non-centrality parameters as we increase the distance between \({\varvec{\nu }}\) and \({{\varvec{0}}}\). Therefore when we compute \(P(t|\mathrm{{R}}_{\varvec{\nu }})\) with larger and larger \({\varvec{\nu }}\), the \(\tau \) and \(\Delta \) distributions shift more and more to the right and their typical value over the toys becomes large. 
The typical value of t is instead given by the number of neural network parameters, because t follows a central \(\chi ^2\) distribution independently of \({\varvec{\nu }}\). A sharp correlation between \(\tau \) and \(\Delta \) must thus produce a delicate cancellation on toys generated with very large values of the nuisance parameters. This cancellation amplifies the uncertainties in the calculation of \(\tau \) and \(\Delta \) that emerge (dominantly) from the imperfect modeling of the \(\delta (x)\) coefficient functions. Obtaining a \(\chi ^2\) for the distribution of t is thus increasingly demanding at large \({\varvec{\nu }}\), as we will see more quantitatively in Sects. 3 and 4.

2.6 New physics in auxiliary measurements or in control regions

The step we took in Eq. (5) of postulating the absence of new physics in the auxiliary data deserves further comments. In regular model-dependent searches for new physics the alternative hypothesis \(H_1\) is a physical model that accounts for new phenomena in addition to the SM ones. One can thus assess whether or not these new phenomena can manifest themselves in the auxiliary data. If they do not, Eq. (5) is justified. The situation is different in model-independent searches. On one hand, there is no way to tell if Eq. (5) holds because the new physics model is not given. On the other hand, in our framework we are always free to postulate Eq. (5). While in a model-dependent search Eq. (5) could be wrong, in our case it is a restriction on the set of new physics models that we are testing.

Still, it is interesting to discuss how the model-independent strategy that we are constructing would react to the presence of new physics effects in the auxiliary data. New (or mis-modeled) effects in auxiliary data could in general reduce the sensitivity of the test to new physics; however, it is not obvious that this reduction will be significant. Consider the extreme case in which new physics is absent from the dataset of interest, and is present only in the auxiliary measurements. The new physics effects make the true auxiliary likelihood function different from the postulated one, \(\mathcal {L}({\varvec{\nu }}|{\mathcal {A}})\). Therefore, in the likelihood maximization, the \(\mathcal {L}({\varvec{\nu }}|{\mathcal {A}})\) term will push \({\varvec{\nu }}\) to values that are different from the true values of the nuisance parameters. This will occur both in the maximization of the \(\mathcal {L}(\mathrm{{R}}_{\varvec{\nu }}|{\mathcal {D}},{\mathcal {A}})\) and of the \(\mathcal {L}(\mathrm{{H}}_{{\mathbf{{w}}},{\varvec{\nu }}}|{\mathcal {D}},{\mathcal {A}})\) likelihoods. For these incorrect values of the nuisance parameters, \(n(x|\mathrm{{R}}_{\varvec{\nu }})\) does not provide a good description of the distribution of the data of interest \({\mathcal {D}}\). Therefore the maximal likelihood of \(\mathrm{{R}}_{\varvec{\nu }}\) will be small, due to the mismatch between the data and the Reference distribution estimated from the “signal-polluted” auxiliary dataset. The \(\mathrm{{H}}_{{\mathbf{{w}}},{\varvec{\nu }}}\) hypothesis instead possesses enough flexibility to adapt \(n(x|\mathrm{{H}}_{{\mathbf{{w}}},{\varvec{\nu }}})\) according to the data of interest, thanks to the flexibility of the neural network (4). 
The likelihood of \(\mathrm{{H}}_{{\mathbf{{w}}},{\varvec{\nu }}}\) will thus possess a high maximum, in the configuration where \({\varvec{\nu }}\) maximizes the auxiliary likelihood and the neural network accounts for the discrepancy between the x distribution at that value of \({\varvec{\nu }}\) and the true x distribution at the true value of the nuisance parameters. This can enable our test to reveal a tension of the data with the Reference Model even in this limiting configuration, as we will see happening in Sect. 3.5 in a simple setup. New physics effects in the auxiliary data might thus not spoil the potential to achieve a discovery. On the other hand, they would complicate its interpretation.

Similar considerations hold for possible new physics contaminations in the Reference dataset \({\mathcal {R}}\) employed for training. These contaminations emerge if \({\mathcal {R}}\) has a data-driven origin, and if new physics affects the distribution of the data in the control region. Since the control region data are transferred to the region of interest by assuming the validity of the Reference Model, the net effect is a mismatch between the true distribution of x in the (central-value) Reference Model and the actual distribution of the instances of x in the Reference sample. As for auxiliary measurements, new physics in control regions does not necessarily spoil the sensitivity to new physics. Indeed our test is sensitive to generic departures of the observed data distribution with respect to the distribution of the Reference dataset. Departures which are due to a mis-modeling of the Reference induced by new physics in the control region, rather than to new physics in the data of interest, could still be seen. Our strategy would instead completely lose sensitivity if new physics affected the control region and the data of interest in exactly the same way, because in this case there would be strictly no difference between the distribution of the data and the one of the Reference dataset.

3 Step-by-step implementation

The present section describes the detailed implementation of our strategy and its validation in a simple case study that will serve as an explanatory example throughout the presentation of the algorithm. In particular, we consider a one-dimensional feature \(x\in [0,\infty )\) with exponentially falling distribution in the Reference hypothesis. We assume that our knowledge of the Reference hypothesis is not perfect and that our lack of knowledge is described by a two-dimensional nuisance parameters vector \({\varvec{\nu }}=(\nu _{{\textsc {n}}},\nu _{{\textsc {s}}})\). The two parameters account, respectively, for the imperfect knowledge of the normalization of the distribution (i.e., of the total number of expected events \(\mathrm{{N}}(\mathrm{{R}}_{\varvec{\nu }})\equiv e^{\nu _{{\textsc {n}}}}\mathrm{{N}}(\mathrm{{R}}_{\varvec{0}})\)) and of a multiplicative “scale” factor (defined by \(x=x_{\mathrm{{meas.}}}=e^{\nu _{{\textsc {s}}}}x_{\mathrm{{true}}}\)) in the measurement of x. The Reference Model distribution of x reads

with the total number of expected events in the central-value hypothesis, \(\mathrm{{N}}(\mathrm{{R}}_{\varvec{0}})\), fixed at \(\mathrm{{N}}(\mathrm{{R}}_{\varvec{0}})=2\,000\). As discussed in Sect. 2.2, the central-value Reference hypothesis \(\mathrm{{R}}_{\varvec{0}}\) is defined to be at the point \((\nu _{{\textsc {n}}},\nu _{{\textsc {s}}})=(0,0)\) in the nuisance parameter space. We have parametrized the normalization, \(e^{\nu _{{\textsc {n}}}}\), and the scale factor, \(e^{\nu _{{\textsc {s}}}}\), so that they are positive in the entire real plane spanned by \((\nu _{{\textsc {n}}},\nu _{{\textsc {s}}})\).
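The rate of the exponential in Eq. (26) is not restated in this section. Assuming unit rate, \(n(x|\mathrm{{R}}_{\varvec{\nu }})=\mathrm{{N}}(\mathrm{{R}}_{\varvec{0}})\,e^{\nu _{{\textsc {n}}}}e^{-\nu _{{\textsc {s}}}}\exp (-x\,e^{-\nu _{{\textsc {s}}}})\) is consistent with the normalization and scale definitions above. It gives \(\log r=\nu _{{\textsc {n}}}-\nu _{{\textsc {s}}}+x(1-e^{-\nu _{{\textsc {s}}}})\). The expansion in the form of Eq. (14) is therefore exact and linear in \(\nu _{{\textsc {n}}}\). At quadratic order in \(\nu _{{\textsc {s}}}\) it has \(\delta _1(x)=x-1\) and \(\delta _2(x)=-x/2\). The minimal numpy sketch below compares the exact and truncated forms for a few illustrative values of \(\nu _{{\textsc {s}}}\). The function names are hypothetical.

```python
import numpy as np


def log_r_toy_exact(x, nu_n, nu_s):
    """log n(x|R_nu)/n(x|R_0) for a unit-rate exponential (assumption)."""
    return nu_n - nu_s + x * (1.0 - np.exp(-nu_s))


def log_r_toy_quadratic(x, nu_n, nu_s):
    """Quadratic truncation: delta = 1 for nu_n; delta_1 = x - 1, delta_2 = -x/2 for nu_s."""
    return nu_n + nu_s * (x - 1.0) - 0.5 * nu_s**2 * x


x = np.linspace(0.0, 10.0, 11)
for nu_s in (-0.1, -0.05, 0.05, 0.1):   # illustrative values of the scale nuisance
    err = np.max(np.abs(log_r_toy_exact(x, 0.0, nu_s) - log_r_toy_quadratic(x, 0.0, nu_s)))
    print(f"nu_s = {nu_s:+.2f}: max |error| on [0, 10] = {err:.1e}")   # ~ x |nu_s|^3 / 6
```

The truncation error grows like \(x|\nu _{{\textsc {s}}}|^3/6\). This is why the approximation matters most in the tail of the distribution and for nuisance values several standard deviations away from zero.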

We suppose that the normalization and the scale nuisances are measured independently using an auxiliary set of data \({\mathcal {A}}\). The estimators of the central values of the measurements are denoted as \({\widehat{\nu }}_{{\textsc {n}}}={\widehat{\nu }}_{{\textsc {n}}}({\mathcal {A}})\) and \({\widehat{\nu }}_{{\textsc {s}}}={\widehat{\nu }}_{{\textsc {s}}}({\mathcal {A}})\). We assume that these estimators are unbiased and Gaussian-distributed with standard deviations \(\sigma _{{\textsc {n}}}\) and \(\sigma _{{\textsc {s}}}\). The auxiliary likelihood log-ratio thus reads

It should be noted that \({\widehat{\nu }}_{{\textsc {n}}}\) and \({\widehat{\nu }}_{{\textsc {s}}}\) are statistical variables, owing to their dependence on the auxiliary data \({\mathcal {A}}\). Therefore we must let them fluctuate when generating the simulated experiments (toys) that we employ to validate the algorithm. Namely, denoting as \(\varvec{\nu }^*=(\nu _{{\textsc {n}}}^*,\nu _{{\textsc {s}}}^*)\) the true value of the nuisance parameter vector, the estimators \({\widehat{\nu }}_{{\textsc {n}}}\) and \({\widehat{\nu }}_{{\textsc {s}}}\) are thrown from Gaussian distributions with standard deviations \(\sigma _{{\textsc {n}}}\) and \(\sigma _{{\textsc {s}}}\), centered at \(\nu _{{\textsc {n}}}^*\) and \(\nu _{{\textsc {s}}}^*\), respectively. This mimics the statistical fluctuations of the auxiliary data \({\mathcal {A}}\), out of which the estimators \({\widehat{\nu }}_{{\textsc {n,s}}}\) are derived in the actual experiment. The true value of the nuisance parameters \(\varvec{\nu }^*\) is unknown, and the validation of the method consists in verifying that the distribution of the test statistic is independent of \(\varvec{\nu }^*\). We will verify this on toy datasets generated with \(\nu _{{\textsc {n,s}}}^*\) at the central value (\(\nu _{{\textsc {n,s}}}^*=0\)), and at plus and minus one standard deviation (\(\nu _{{\textsc {n,s}}}^*=\pm \sigma _{{\textsc {n,s}}}\)).
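The toy generation just described can be sketched as follows. The sketch assumes a unit-rate exponential for \(x_{\mathrm{{true}}}\), and the values of \(\sigma _{{\textsc {n,s}}}\) are hypothetical. For independent, unbiased Gaussian estimators, the auxiliary log-ratio is \(\sum _{k}[-(\nu _k-{\widehat{\nu }}_k)^2+{\widehat{\nu }}_k^2]/(2\sigma _k^2)\), which the helper `log_aux_ratio` (a hypothetical name, like `generate_toy`) implements.

```python
import numpy as np


def generate_toy(rng, n0, nu_true, sigma):
    """One pseudo-experiment: data drawn from R_{nu*} plus fluctuated auxiliary estimators."""
    nu_n, nu_s = nu_true
    n_events = rng.poisson(n0 * np.exp(nu_n))            # N(R_nu) = e^{nu_n} N(R_0)
    x = np.exp(nu_s) * rng.exponential(1.0, n_events)   # x_meas = e^{nu_s} x_true (unit rate assumed)
    nu_hat = rng.normal(loc=nu_true, scale=sigma)       # unbiased Gaussian estimators centered at nu*
    return x, nu_hat


def log_aux_ratio(nu, nu_hat, sigma):
    """log[L(nu|A)/L(0|A)] for independent Gaussian estimators of each nuisance."""
    nu, nu_hat, sigma = (np.asarray(v, dtype=float) for v in (nu, nu_hat, sigma))
    return float(np.sum(-(nu - nu_hat)**2 / (2 * sigma**2) + nu_hat**2 / (2 * sigma**2)))


rng = np.random.default_rng(4)
sigma = np.array([0.1, 0.05])    # hypothetical sigma_n, sigma_s
for nu_true in ((0.0, 0.0), (sigma[0], sigma[1]), (-sigma[0], -sigma[1])):
    x, nu_hat = generate_toy(rng, 2000.0, np.array(nu_true), sigma)
    print(nu_true, len(x), nu_hat.round(3), log_aux_ratio(nu_hat, nu_hat, sigma))
```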

The rest of this section is structured as follows. In Sect. 3.1 we describe the selection of the neural network model and regularization parameters based on the \(\chi ^2\) compatibility criterion introduced in Sect. 2.5 (and in Ref. [2]). Next, in Sect. 3.2, we illustrate the reconstruction of the coefficient functions that model the dependence of the Reference Model distribution on the nuisance parameters, following Sect. 2.3. In Sect. 3.3 we present our implementation of the calculation of the test statistic as in Sect. 2.4. In Sect. 3.4 we validate our strategy by verifying the asymptotic formulae of Sect. 2.5 and in turn the independence of the distribution of the test statistic from the true value of the nuisance parameters. Finally, in Sect. 3.5 we study the sensitivity to putative “new physics” signals that distort the distribution of x relative to the Reference Model expectation in Eq. (26). It should be emphasized that this latter study has a merely illustrative purpose. All the steps that are needed to set up our strategy, from the model selection to the evaluation of the distribution of the test statistic, are performed based exclusively on knowledge of the Reference Model and not on putative new physics signals, as appropriate for a model-independent search strategy.

While presented in the context of a simple univariate problem that is rather far from a realistic LHC data analysis problem, the technical implementation of all the steps described in the present section is straightforwardly applicable to more complex situations. The application to a more realistic problem will be discussed in Sect. 4.

3.1 Model selection

The first step towards the implementation of our strategy is to select the hyper-parameters of the neural network model “\(f(x;{\mathbf{{w}}})\)”, which we employ to parametrize possible new physics (or Beyond the SM, BSM) effects as in Eq. (4). We restrict our attention to fully-connected feedforward neural networks, with an upper bound on the absolute value of each weight and bias. The upper limit is set by a weight clipping regularization parameter that needs to be selected. The other hyper-parameters are the number of hidden layers and of neurons per layer that define the neural network architecture.

According to the general principles outlined in Sect. 2.5, the model selection results from two competing principles. The first one is that the model should have the highest complexity that can be handled by the available computational resources in a reasonable amount of time. This maximizes the model’s capability to fit complex departures from the Reference Model expectation, making it sensitive to the largest possible variety of putative new physics signals. On the other hand, the model should be simple enough for the distribution of the associated test statistic to be in the asymptotic regime, given the finite amount of training data. This condition is enforced by monitoring the compatibility with the \(\chi ^2\) asymptotic formula for the test statistic distribution.

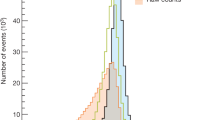

Fig. 1 Empirical distributions of \({\overline{t}}\) after \(300\,000\) training epochs for different values of the weight clipping parameter, compared with the \(\chi ^2_{13}\) distribution expected in the asymptotic limit for the (1, 4, 1) network. The evolution during training of the \({\overline{t}}\) distribution percentiles, compared with the \(\chi ^2_{13}\) expectation, is also shown. Only 100 toy datasets are employed to produce the results shown in the figure, except for weight clipping equal to 9, where all 400 toys are used.

As explained in Sect. 2.5, the \(\chi ^2\) compatibility condition that underlies the selection of the neural network hyperparameters will be enforced in the limit where the nuisance parameters do not affect the distribution of the variable x or, equivalently, in the limit where the auxiliary measurements of the nuisance parameters are infinitely accurate (i.e., \(\sigma _{{\textsc {n,s}}}\rightarrow 0\)). It is easy to see from the results of Sect. 2, or from Refs. [1, 2], that the test statistic in this limit becomes

The minimization is performed by training the network f with the loss function

The asymptotic distribution of \(\overline{t}\) is a \(\chi ^2\) with a number of degrees of freedom which is equal to the number of trainable parameters of the neural network. The \(\chi ^2\) compatibility of a given neural network model will be monitored by generating toy instances of the dataset \({\mathcal {D}}\) in the \(\mathrm{{R}}_{\varvec{0}}\) hypothesis, running the training algorithm on each of them, computing the empirical probability distribution of \(\overline{t}\) and comparing it with the \(\chi ^2\).

We first discuss how to select the weight clipping regularization parameter for a given architecture of the neural network. We consider for illustration, in the simple univariate example at hand, a network with four nodes in the hidden layer (and one-dimensional input and output). We refer to this architecture as (1, 4, 1), for brevity. This network has a total of 13 trainable parameters, therefore the target \(\overline{t}\) distribution is a \(\chi ^2_{13}\) with 13 degrees of freedom. We generate a Reference sample \({\mathcal {R}}\), with \({\mathrm{{N}}_{\mathcal {R}}}=200\,000=100\,\mathrm{{N}}(\mathrm{{R}}_{\varvec{0}})\) entries, following the \(\mathrm{{R}}_{\varvec{0}}\) distribution of the variable x as given by Eq. (26) for \(\nu _{{\textsc {n,s}}}=0\). The sample is unweighted, therefore the weights in the sample are all equal and \(w_{\mathrm{{e}}}=\mathrm{{N}}(\mathrm{{R}}_{\varvec{0}})/{\mathrm{{N}}_{\mathcal {R}}}=0.01\). We also generate 400 toy instances of the dataset \({\mathcal {D}}\) in the same hypothesis. The number of instances of x in \({\mathcal {D}}\), \({{\mathcal {N}}_{\mathcal {D}}}\), is thrown from a Poisson distribution with mean \(\mathrm{{N}}(\mathrm{{R}}_{\varvec{0}})=2\,000\) in accordance with the \(\mathrm{{R}}_{\varvec{0}}\) expectation. For different values of the weight clipping parameter, ranging from 1 to 100, we train the neural network with the loss in Eq. (29) and we compute \(\overline{t}({\mathcal {D}})\) on the toy datasets using Eq. (28). The empirical \(P(\overline{t}|\mathrm{{R}}_{\varvec{0}})\) distributions obtained in this way after \(300\,000\) training epochs, and some of their percentiles as a function of the number of epochs, are reported in Fig. 1.
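The numpy sketch below covers three ingredients of this setup: the parameter count of a fully-connected architecture (13 for (1, 4, 1)), weight clipping applied to every weight and bias, and the Reference and toy samples with \(w_{\mathrm{{e}}}=0.01\). It also evaluates a nuisance-free loss, which we assume has the form \(\sum _{{\mathrm{{e}}}\in {\mathcal {R}}}w_{\mathrm{{e}}}(e^{f}-1)-\sum _{x\in {\mathcal {D}}}f\), obtained by setting \(r=1\) and dropping the auxiliary term in the loss of Sect. 2.4. The sigmoid hidden activation, the Gaussian initialization and all function names are assumptions. The training loop itself (many epochs, with clipping re-applied after each update) is not shown.

```python
import numpy as np


def n_trainable(arch):
    """Weights plus biases of a fully-connected network, e.g. arch = (1, 4, 1) -> 13."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(arch[:-1], arch[1:]))


def init_params(arch, clip, rng):
    """Random parameters, clipped to [-clip, clip] (weight clipping on weights and biases)."""
    params = []
    for n_in, n_out in zip(arch[:-1], arch[1:]):
        W = rng.normal(0.0, 1.0, (n_in, n_out))
        b = np.zeros(n_out)
        params.append((np.clip(W, -clip, clip), np.clip(b, -clip, clip)))
    return params


def forward(params, x):
    """f(x; w) with sigmoid hidden layers (an assumption) and a linear output."""
    h = x.reshape(-1, 1)
    for i, (W, b) in enumerate(params):
        h = h @ W + b
        if i < len(params) - 1:
            h = 1.0 / (1.0 + np.exp(-h))
    return h.ravel()


def tbar_loss(f_ref, w_ref, f_data):
    """Nuisance-free loss sum_R w_e (e^f - 1) - sum_D f; t_bar is -2 times its minimum."""
    return np.sum(w_ref * np.expm1(f_ref)) - np.sum(f_data)


rng = np.random.default_rng(6)
n0, n_ref = 2000.0, 200_000
x_ref = rng.exponential(1.0, n_ref)             # unit-rate exponential assumed for R_0
w_ref = np.full(n_ref, n0 / n_ref)              # = 0.01, as in the text
x_data = rng.exponential(1.0, rng.poisson(n0))  # one toy dataset
params = init_params((1, 4, 1), clip=9.0, rng=rng)
print(n_trainable((1, 4, 1)), tbar_loss(forward(params, x_ref), w_ref, forward(params, x_data)))
```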

We see that for large values of the weight clipping parameter the distribution sits slightly to the right of the target \(\chi ^2\) with 13 degrees of freedom. Furthermore, the training is not stable, and significant changes in the \(\overline{t}\) percentiles (especially the \(95\%\) one) occur even after \(150\,000\) epochs. Very small values of the weight clipping make the distribution stable with training, but shift it below the \(\chi ^2_{13}\) expectation. Good compatibility is instead obtained for intermediate values of the weight clipping parameter: a weight clipping equal to 9 reproduces the \(\chi ^2_{13}\) distribution quite accurately.

The strategy to find the value of the weight clipping parameter that best satisfies the \(\chi ^2\) compatibility criterion can be refined and optimized. We can start from a small and a large value of the weight clipping, for which we expect the distribution of \({\overline{t}}\) to undershoot and overshoot the \(\chi ^2\) expectation, respectively, and compute \({\overline{t}}\) by running the training algorithm on a limited number n of toy datasets. The average of \({\overline{t}}\) over the n toys will be below (above) the mean of the target \(\chi ^2\) distribution (i.e., 13, in the case at hand) for the small (large) value of the weight clipping. We thus obtain a window of values where the optimal weight clipping sits, which can be further narrowed by applying a standard root-finding algorithm to the difference between the average \({\overline{t}}\) and the expected mean. Clearly the average \({\overline{t}}\) is affected by a relatively large statistical error if n is small. Therefore, after a few iterations of the root-finding algorithm, the average \({\overline{t}}\) at the trial point becomes compatible with the expected mean within this error, preventing us from further restricting the weight clipping compatibility window.
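The window-narrowing step just described can be sketched as a bisection on the difference between the average \({\overline{t}}\) and the \(\chi ^2\) mean, which stops once that difference is no longer statistically significant. In the sketch below, which is ours and not the authors' code, the stopping rule assumes that the average of n draws from a \(\chi ^2\) with k degrees of freedom has standard error \(\sqrt{2k/n}\). The callable `mean_t_bar`, standing in for "generate n toys, train, and average \({\overline{t}}\)", is hypothetical, and `mock_mean_t_bar` is a smooth placeholder used purely for illustration, not a result.

```python
import math

def bisect_weight_clipping(mean_t_bar, dof, low, high, n_toys, n_sigma=2.0, max_iter=30):
    """Shrink a [low, high] weight-clipping window by bisection on mean(t_bar) - dof.

    mean_t_bar(wc) is a hypothetical callable returning the average of t_bar over
    n_toys trainings at weight clipping wc. Bisection stops once the midpoint
    average is within n_sigma standard errors (sqrt(2*dof/n_toys)) of dof."""
    sigma_mean = math.sqrt(2.0 * dof / n_toys)
    if not mean_t_bar(low) < dof < mean_t_bar(high):
        raise ValueError("window does not bracket the target mean")
    for _ in range(max_iter):
        mid = 0.5 * (low + high)
        diff = mean_t_bar(mid) - dof
        if abs(diff) < n_sigma * sigma_mean:
            break  # statistically indistinguishable: cannot narrow further
        if diff < 0:
            low = mid   # t_bar undershoots: optimum lies at larger clipping
        else:
            high = mid  # t_bar overshoots: optimum lies at smaller clipping
    return low, high

# Placeholder for the expensive step (toy generation + training): a smooth,
# noiseless function crossing 13 at wc = 9, used purely for illustration.
def mock_mean_t_bar(wc):
    return 13.0 * (wc / 9.0) ** 0.1

window = bisect_weight_clipping(mock_mean_t_bar, dof=13, low=1.0, high=100.0, n_toys=20)
```

With only 20 toys the standard error on the average is about 1.1, so the bisection halts early and returns a still fairly wide window; this is exactly the limitation that motivates the shape-sensitive test discussed next.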

Rather than looking at the compatibility of the average, a more powerful compatibility test should be employed at this stage in order to pick the optimal weight clipping value inside the window. This test should also be sensitive to the entire shape of the distribution and not only to its central value. One can consider for instance a Kolmogorov–Smirnov (KS) test and maximize, in the window, the p-value for the compatibility of the empirical \({\overline{t}}\) distribution with the target \(\chi ^2\).Footnote 5
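As a self-contained illustration of the KS check mentioned above, the sketch below compares a set of \({\overline{t}}\) values with a \(\chi ^2_k\) distribution using only the Python standard library. In practice a library routine such as SciPy's `kstest` would be the natural choice; here the \(\chi ^2\) CDF is computed from the power series of the regularized lower incomplete gamma function, and the p-value uses the asymptotic Kolmogorov distribution with a standard small-sample correction. The function names are hypothetical, and the `toys` list of genuine \(\chi ^2_{13}\) draws merely stands in for \({\overline{t}}\) values from actual trainings.

```python
import math
import random

def chi2_cdf(x, k):
    """chi^2_k CDF, i.e. regularized lower incomplete gamma P(k/2, x/2), by power series."""
    if x <= 0.0:
        return 0.0
    a, z = 0.5 * k, 0.5 * x
    term = total = 1.0 / a
    n = 1
    while term > 1e-15 * total:
        term *= z / (a + n)
        total += term
        n += 1
    return math.exp(a * math.log(z) - z - math.lgamma(a)) * total

def ks_pvalue_chi2(samples, k):
    """One-sample KS test of samples against chi^2_k; returns (D, asymptotic p-value)."""
    xs = sorted(samples)
    n = len(xs)
    d = 0.0
    for i, x in enumerate(xs):
        f = chi2_cdf(x, k)
        d = max(d, (i + 1) / n - f, f - i / n)  # largest gap between empirical and target CDF
    lam = (math.sqrt(n) + 0.12 + 0.11 / math.sqrt(n)) * d  # small-sample corrected statistic
    if lam < 0.2:
        return d, 1.0  # Kolmogorov survival function is 1 to high precision here
    p = sum(2.0 * (-1) ** (j - 1) * math.exp(-2.0 * j * j * lam * lam) for j in range(1, 101))
    return d, min(1.0, max(0.0, p))

rng = random.Random(1)
# chi^2_13 draws (Gamma with shape 13/2, scale 2) standing in for t_bar values.
toys = [rng.gammavariate(6.5, 2.0) for _ in range(400)]
d, p = ks_pvalue_chi2(toys, 13)
```

Scanning a grid of weight clipping values inside the window and keeping the one with the largest p-value then implements the selection described in the text.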

It is advantageous to implement the strategy described above with a rather small number n of toy datasets, because training can become computationally demanding in realistic applications of our strategy. On the other hand, if n is small the KS compatibility test has limited power, leaving room for considerable departures of the true distribution of \({\overline{t}}\) from the target \(\chi ^2\), even at the weight clipping value that has been selected as “optimal”. A more accurate determination of the optimal weight clipping can however be obtained by increasing n and repeating the previous optimization step. At this stage one can restrict to the much narrower window obtained at the end of the previous step, and start from the previous determination of the optimal weight clipping in order to speed up convergence. The entire procedure can be repeated with an even larger n, until a given compatibility goal is achieved. For instance, one might require a KS p-value larger than some threshold at the optimal weight clipping point, with a relatively large number n (say, 400) of toy experiments.
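The iterative refinement described above can be organized as a multi-stage grid search in which every stage uses more toys and a window shrunk around the previous optimum. The sketch below is one possible arrangement, not the authors' procedure. `pvalue_at(wc, n)` is a hypothetical callable that would train on n toy datasets at weight clipping wc and return the KS p-value, and the schedule (50, 100, 200, 400), the 7-point grid and the 0.05 threshold are illustrative choices. `mock_pvalue` is an invented noiseless placeholder peaked at 9; real p-values fluctuate from toy to toy.

```python
import math

def refine_weight_clipping(pvalue_at, low, high, n_schedule=(50, 100, 200, 400),
                           n_grid=7, p_threshold=0.05):
    """Multi-stage grid search maximizing a KS p-value over the weight clipping.

    pvalue_at(wc, n) is a hypothetical callable: train on n toy datasets at
    weight clipping wc and return the KS p-value against the target chi^2.
    Returns (best weight clipping, its p-value, whether the goal is met)."""
    best_p, best_wc = -1.0, None
    for n in n_schedule:
        step = (high - low) / (n_grid - 1)
        grid = [low + i * step for i in range(n_grid)]
        best_p, best_wc = max((pvalue_at(wc, n), wc) for wc in grid)
        # Keep only the neighbourhood of the current optimum for the next stage.
        low, high = max(low, best_wc - step), min(high, best_wc + step)
    return best_wc, best_p, best_p >= p_threshold

# Illustrative placeholder: sharper peak for larger n, mimicking a more powerful test.
def mock_pvalue(wc, n):
    return math.exp(-n * (wc - 9.0) ** 2 / 2000.0)

result = refine_weight_clipping(mock_pvalue, 1.0, 100.0)
```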

The results reported in Table 1 illustrate the weight clipping optimization strategy described above for the (1, 4, 1) network in the univariate problem under consideration. In fact, a systematic optimization strategy is not needed for the simple problem at hand, because training is fast enough to test many points in the weight clipping parameter space with a large number of toys. Furthermore, as shown by Fig. 1, the departures of the empirical \(\overline{t}\) distribution from the \(\chi ^2\) are rather mild over a fairly wide range of weight clipping values. We will instead need the optimization strategy for the more realistic five-feature problem of Sect. 4, where training takes longer and the distribution is more sensitive to the weight clipping parameter.

Up to now we have considered a single architecture, and found a choice of the weight clipping parameter that ensures a good level of \(\chi ^2\) compatibility. According to general principles of model selection, we should now move to more complex architectures, with more neurons and/or hidden layers, aiming at selecting the most complex network that respects the asymptotic formula and that can be practically handled with the available computational resources. We saw in Ref. [2] that computational considerations play an important role in the selection; however, the univariate problem at hand is not demanding enough to illustrate this aspect. Indeed, we have found \(\chi ^2\)-compatible networks with up to one hundred neurons, which are clearly overkill for the univariate problem. Therefore, we do not describe the process of architecture optimization in the univariate example and postpone the discussion to a more realistic context in Sect. 4. The (1, 4, 1) network, with weight clipping equal to 9, will be employed in the rest of the present section.
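The model-selection principle stated above, namely choosing the most complex network that still passes the \(\chi ^2\) check and fits the computational budget, reduces to a simple filter-and-maximize step once each candidate has been tuned. The sketch assumes, hypothetically, that every candidate comes as a tuple (architecture, KS p-value at its optimal weight clipping, training time), and that complexity is measured by the number of trainable parameters. Function names, the threshold and the time unit are illustrative assumptions.

```python
def count_trainable_params(architecture):
    """Weights plus biases of a fully-connected network, e.g. (1, 4, 1) -> 13."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(architecture[:-1], architecture[1:]))

def select_architecture(candidates, p_threshold=0.05, max_train_hours=None):
    """Return the largest architecture passing the chi^2 check and the time budget.

    candidates: iterable of (architecture, ks_pvalue, train_hours) tuples, with
    ks_pvalue evaluated at that architecture's optimal weight clipping."""
    passing = [c for c in candidates
               if c[1] >= p_threshold
               and (max_train_hours is None or c[2] <= max_train_hours)]
    if not passing:
        return None  # no candidate is chi^2-compatible within the budget
    return max(passing, key=lambda c: count_trainable_params(c[0]))[0]
```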

3.2 Learning nuisances

We now turn to the problem of learning the effect of the nuisance parameters on the distribution of the variable x, following the methodology described in Sect. 2.3. In the simple univariate problem at hand, we have access to the distribution in closed form (Eq. (26)), and in turn to the exact analytic expression for the log distribution ratio

The dependence on the normalization nuisance \({\nu _{{\textsc {n}}}}\) is trivial and it can be incorporated analytically, both in the univariate problem and in realistic analyses. The dependence on the scale nuisance \({\nu _{{\textsc {s}}}}\) is more complex, and not analytically available in realistic problems. We thus approximate it by a Taylor series as in Eq. (14). Namely we define

Truncations of the \({\nu _{{\textsc {s}}}}\) series at the first and at the second order will be considered in what follows.

We model each \({\widehat{\delta }}_a(x)\) coefficient function (with a ranging from 1 to the desired order of the series truncation in Eq. (31)) with fully-connected (1, 4, 1) neural networks with ReLU activation functions, trained with the loss function in Eq. (17). The training samples \({\mathrm{{S}}_1}({\varvec{\nu }}_i)\) and \({\mathrm{{S}}_0}({\varvec{\nu }}_i)\) contain \(20\,000\) events each. The events in \({\mathrm{{S}}_1}({\varvec{\nu }}_i)\) are drawn from the probability distribution of x in the \(\mathrm{{R}}_{\varvec{0}}\) hypothesis, while those in \({\mathrm{{S}}_0}({\varvec{\nu }}_i)\) are drawn from the \(\mathrm{{R}}_{\varvec{\nu }}\) hypothesis at selected points \({\varvec{\nu }}_i=(0,\nu _{{\textsc {s}},i})\) in the nuisance parameters space. The choice of the \(\nu _{{\textsc {s}},i}\) values used for training has a considerable impact on the quality of the reconstruction of the \({\widehat{\delta }}_a(x)\) functions. They should be chosen so as to expose the dependence of the distribution ratio on each monomial of the expansion. For instance, with the quadratic approximation one would employ a relatively small value of \(\nu _{{\textsc {s}}}\), for which the linear term dominates, in order to learn \({\widehat{\delta }}_1(x)\), and a relatively large one for the reconstruction of \({\widehat{\delta }}_2(x)\). At least one additional value of \(\nu _{{\textsc {s}}}\) would be needed to go to cubic order. This value would be taken even larger, namely in the regime where the quadratic approximation starts becoming insufficient and the cubic term in the distribution ratio plays a role. Employing a redundant set of \(\nu _{{\textsc {s}},i}\)’s (for instance, 4 points rather than 2 at the quadratic order) is beneficial. In general it is convenient to pick the \(\nu _{{\textsc {s}},i}\)’s in pairs of opposite sign, symmetric around the origin.
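One way to see why pairs of opposite sign are convenient, under our own simplifying assumption that at the training points (\(\nu _{{\textsc {n}}}=0\)) the truncated series reads \(\log r=\nu _{{\textsc {s}}}\,\delta _1(x)+\nu _{{\textsc {s}}}^2\,\delta _2(x)\) with no \(\nu \)-independent term, is that the half-difference and half-sum of the log-ratios at \(\pm \nu _{{\textsc {s}}}\) isolate the odd and even parts of the expansion. The hypothetical helper below does this at a fixed x. The usage lines, with invented coefficients, also show that an unmodeled cubic term biases the \(\delta _1\) estimate by \(\nu _{{\textsc {s}}}^2\delta _3\), which is consistent with using a small \(\nu _{{\textsc {s}}}\) for the linear term.

```python
def taylor_coeffs_from_pair(log_ratio_plus, log_ratio_minus, nu):
    """Estimate (delta_1, delta_2) at fixed x from log-ratios at +nu and -nu.

    Assumes log r = nu*delta_1 + nu**2*delta_2; any higher-order terms leak
    into the estimates (odd ones into delta_1, even ones into delta_2)."""
    delta_1 = (log_ratio_plus - log_ratio_minus) / (2.0 * nu)       # odd part
    delta_2 = (log_ratio_plus + log_ratio_minus) / (2.0 * nu ** 2)  # even part
    return delta_1, delta_2

# Invented coefficients, with a cubic term the quadratic model ignores.
def log_ratio_with_cubic(nu):
    return 0.7 * nu - 0.2 * nu ** 2 + 0.5 * nu ** 3

# delta_1 estimate equals 0.7 + 0.5 * nu**2: about 0.70125 at nu = 0.05, 0.745 at nu = 0.3.
d1_small, _ = taylor_coeffs_from_pair(log_ratio_with_cubic(0.05), log_ratio_with_cubic(-0.05), 0.05)
d1_large, _ = taylor_coeffs_from_pair(log_ratio_with_cubic(0.3), log_ratio_with_cubic(-0.3), 0.3)
```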

Fig. 2 The dependence on \(\nu _{{\textsc {s}}}\) of \(\log {N_{\mathrm{{b}}}(\nu _{{\textsc {s}}})}/{N_{\mathrm{{b}}}(0)}\) in selected bins. The dots represent the true value of the log-ratio. The linear, quadratic and quartic fits are performed using a subset of the true-value points, as explained in the main text.

Fig. 3 The reconstructed distribution log-ratio (empty dots) for different values of \(\nu _{{\textsc {s}}}\), compared with the exact log-ratio and with the fourth-order binned approximation described in the main text. The two panels correspond to truncations of the series in Eq. (31) at the linear and at the quadratic order.

The set of \(\nu _{{\textsc {s}},i}\)’s that duly captures all the terms in the Taylor expansion can be determined by inspecting the dependence on \(\nu _{{\textsc {s}}}\) of the distribution integrated in bins, and identifying the points on the \(\nu _{{\textsc {s}}}\) axis where a change of regime (say, from linear to quadratic) is observed. This is illustrated in Fig. 2, where we plot the dependence on \(\nu _{{\textsc {s}}}\) of \(\log {N_{\mathrm{{b}}}(\nu _{{\textsc {s}}})}/{N_{\mathrm{{b}}}(0)}\), with \(N_{\mathrm{{b}}}\) the integral of the distribution in selected bins of the variable x. The points represent the true value of the log-ratio as obtained from the distribution in Eq. (26). The dot-dashed, dashed and continuous lines are fits to these points with polynomials of order 1, 2 and 4, respectively. More precisely, the first-order polynomial fit only employs the points in the interval \(\nu _{{\textsc {s}}}\in [-0.1,0.1]\), the second-order one employs the range \(\nu _{{\textsc {s}}}\in [-0.3,0.3]\), while the fourth-order polynomial fit is performed on all the points. Consistently with Eq. (30), we see that the behavior is almost exactly linear when x is very small. For larger x, instead, considerable departures from linearity are present when \(\nu _{{\textsc {s}}}\) is as large as 0.3 in absolute value. Based on these plots, for training the linear order we selected the set of values \(\nu _{{\textsc {s}},i}\in \{\pm 0.05,\pm 0.1\}\), for which the linear approximation is valid.Footnote 6 The set \(\nu _{{\textsc {s}},i}\in \{\pm 0.05,\,\pm 0.3\}\) was instead employed for the quadratic-order approximation. The figure also suggests that the quadratic truncation in Eq. (31) should be sufficient to model the dependence of \(\log {r(x;{\varvec{\nu }})}\) on \(\nu _{{\textsc {s}}}\) in the entire phase space of x, at least if we limit ourselves to the range \(\nu _{{\textsc {s}}}\in [-0.6,0.6]\).
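The restricted-range polynomial fits described above can be sketched as an ordinary least-squares fit applied only to the points with \(|\nu _{{\textsc {s}}}|\) below a chosen cut. The standard-library sketch below is ours: it solves the normal equations by Gaussian elimination, which is adequate for low orders on \(|\nu _{{\textsc {s}}}|\le 0.6\), although a library routine such as NumPy's `polyfit` would normally be preferred. The helper names are hypothetical, and the inputs are assumed to be the binned values of \(\log {N_{\mathrm{{b}}}(\nu _{{\textsc {s}}})}/{N_{\mathrm{{b}}}(0)}\) at a grid of \(\nu _{{\textsc {s}}}\) points. A change of regime shows up as a growing mismatch between a low-order fit and the points outside its fitting window.

```python
def polyfit(xs, ys, order):
    """Least-squares polynomial fit via normal equations; returns [c0, c1, ..., c_order]."""
    m = order + 1
    a = [[sum(x ** (i + j) for x in xs) for j in range(m)] for i in range(m)]
    b = [sum(y * x ** i for x, y in zip(xs, ys)) for i in range(m)]
    for col in range(m):  # Gaussian elimination with partial pivoting
        piv = max(range(col, m), key=lambda r: abs(a[r][col]))
        a[col], a[piv] = a[piv], a[col]
        b[col], b[piv] = b[piv], b[col]
        for r in range(col + 1, m):
            f = a[r][col] / a[col][col]
            for c in range(col, m):
                a[r][c] -= f * a[col][c]
            b[r] -= f * b[col]
    coef = [0.0] * m
    for i in reversed(range(m)):  # back substitution
        coef[i] = (b[i] - sum(a[i][j] * coef[j] for j in range(i + 1, m))) / a[i][i]
    return coef

def fit_in_window(nus, log_ratios, order, nu_max):
    """Fit only the points with |nu_s| <= nu_max, as in the restricted-range fits."""
    pts = [(nu, y) for nu, y in zip(nus, log_ratios) if abs(nu) <= nu_max + 1e-12]
    return polyfit([p[0] for p in pts], [p[1] for p in pts], order)
```

For one bin, the three fits of the text would correspond to `fit_in_window(nus, y, 1, 0.1)`, `fit_in_window(nus, y, 2, 0.3)` and `fit_in_window(nus, y, 4, 0.6)`.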

The quality of the reconstruction of the log-ratio is displayed in Fig. 3 for the two polynomial orders (linear and quadratic) that we have considered for the truncation of the series in Eq. (31). The exact analytic log-ratio in Eq. (30) is represented as dashed lines, to be compared with the reconstructed ratio reported as empty dots. The different colors correspond to different values of \(\nu _{{\textsc {s}}}\). As expected, the first-order truncation is accurate only if \(\nu _{{\textsc {s}}}\) is small. The accuracy improves with the quadratic truncation, for which the reconstructed log-ratio is essentially identical to the exact one. It should be kept in mind that, as explained in Sect. 2.3, the \(\nu _{{\textsc {s}}}\) range where an accurate reconstruction is needed depends on the allowed range of variability of \(\nu _{{\textsc {s}}}\), namely on its standard deviation \(\sigma _{{\textsc {s}}}\). From the figure we see that the linear modeling is adequate only if \(\sigma _{{\textsc {s}}}\) is below about 0.3, while with the quadratic one \(\sigma _{{\textsc {s}}}\) could be as large as 0.6.Footnote 7 The figure also reports the binned prediction for the log-ratio, as obtained from the quartic fit to \(\log {N_{\mathrm{{b}}}(\nu _{{\textsc {s}}})}/{N_{\mathrm{{b}}}(0)}\) previously described and displayed in Fig. 2. In realistic examples where the analytic log-ratio is not available, the binned prediction can be employed to monitor the quality of the reconstruction provided by the \({\widehat{\delta }}_a(x)\) networks. A more stringent test of the accuracy of the distribution log-ratio approximation, connected with the final validation of our strategy and its robustness to nuisances, will be discussed in Sect. 3.4.

3.3 Computing the test statistic

We finally have at our disposal all the ingredients to compute the test statistic \(t({\mathcal {D}},{\mathcal {A}})\). It consists of the \(\tau \) term minus the correction \(\Delta \). We now illustrate the evaluation of the two terms in turn, as implemented with the TensorFlow [25] package. The implementation is schematically represented in Fig. 4, and the corresponding code is available at [26].