Abstract

The search for feebly-interacting new-physics particles in the MeV-GeV mass range often involves high-intensity beams dumped into thick heavy targets. The challenge of evaluating the expected backgrounds for these searches from first principles is limited by the CPU time needed to generate the shower induced by the primary beam. We present a Monte Carlo biasing method allowing a three orders of magnitude increase in the efficiency for the simulation of the muon production in a 400 GeV/c proton beam-dump setup. At the same time, this biasing method is maintaining nearly every feature of a simulation from first principles.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Realising more clearly the possibility that new particles beyond the Standard Model (SM) could be found at low mass and feeble couplings rather than at the highest achievable energies, has attracted the attention of many new experimental proposals. It has also lead to efforts to extend existing experiments in such a direction. The fact that many particle interactions are needed to observe possibly associated physics events puts such experiments under the umbrella of the “intensity frontier”. In the ‘PBC frame-work’ at CERN [1], initiatives in this context are, among else, the SHiP experiment [2], the NA62 [3] beam dump operation (NA62-BD), NA64 [5] and FASER [4]. Other initiatives have started outside CERN, too, such as SeaQuest at FNAL [6]. While differing vastly in the details of their implementation, these experiments have in common that an intense particle beam is shot to interact with a ‘heavy’ target material. Rarely, such an interaction could create a low-mass (typically \(<1\) GeV) exotic particle, very feebly coupled to SM particles, whose implications (decay or missing energy) are investigated after layers (typically many tens of meters) of ‘shielding’. This shielding is supposed to absorb most of the products from known physics processes, while letting the feebly interacting new-physics particles which are searched for, pass. The scarceness of the sought-after new-physics processes poses a challenge not only to the detection but also to the simulation of the experiment. Typically, on the order of \(10^{18}\) or more primary particles are made to interact for proton-dump-experiments. Thus, in principle, Standard Model Processes that could constitute a background to a new-physics search have to be understood and simulated at that level, which seems an unfeasible challenge.

The concrete problem we tackled is the simulation of the muon production after a high-energy proton beam is absorbed into a thick target. The generated muons can induce a variety of background to the searches for visible decays of feebly-interacting particles. If a thick absorber made of a high-Z material is considered, the yield can be as low as \(5\times 10^{-4}\) single muons above 10 GeV per proton, making a brute-force simulation quite inefficient CPU-wise. In the context of NA62-BD, a past approach to tackle this problem has been performed scoring the muons at the downstream face of the absorber and parametrizing the distributions obtained in terms of momentum, position, and direction [7]. The parametrization was used as a particle gun for further simulation of the downstream beam line and experimental apparatus. In the context of the SHiP project, a more sophisticated approach using generative adversarial neural networks has been developed to define the particle gun [8]. Both methods allow a reduction of CPU time budget per muon of five to six orders of magnitude. From the reliability point of view, both approaches critically depend on the statistics of the sample used to define or train the parametrization. The method here described constitute a decisive progress. Using a biasing simulation technique, it allows a dramatic boost of the statistical power with respect to a simulation from first principles with virtually no loss of information. The simulation from first principles, without any biasing applied, is called analogue in this document.

The method described can be directly adapted to the simulation of high-intensity neutrino beams, if the appropriate parent particles are considered. The flux simulations at both the near and far detectors would benefit from the dramatic gain in CPU time efficiency, obtained while maintaining the principles of the hadronic shower model used. Moreover, the same biasing concepts can be applied to correctly evaluate the emission of exotic particles from every stage of the shower initiated by the beam particle.

2 Generalities about biasing

The efficiency of a Monte Carlo (MC) simulation can be defined as

where \(\sigma \) and T refer to the variance of the yield of interesting events (containing at least 1 muon) per event and the CPU time needed to simulate 1 full event, respectively. Note that this quantity is independent on the number of events simulated. The variance reduction (biasing) method described in this note has the remarkable feature of increasing the muon yield (thus lowering \(\sigma \)) by orders of magnitude, while only marginally increasing the CPU time T. As with any biasing technique, special care is required in the implementation such that the physics simulated under biasing replicates as much as possible the analogue Monte Carlo (MC). Since the dominant muon sources are produced in all stages of the hadronic shower development, the biasing method must preserve the shape and composition of the analogue shower, i.e. there can be no modification to the number or kinematics of the particles created in an analogue event.

In the next section we give a heuristic description of the biasing method. Then, we illustrate a concrete implementation of this method for a simple geometry, employing GEANT4 [9] as simulation toolkit, since it allows for straightforward usage of user-defined biasing schemes.

3 Setup and algorithm description

3.1 GEANT4 configuration

The geometry setup used to test the biasing algorithm is a simplification of the NA62 experiment absorber [3] (TAX) consisting of 2 blocks of copper followed by 6 blocks of iron. Each block has dimensions 0.78, 1.2, and 0.415 m in the x, y, and z directions, respectively, with the z-axis oriented along the beam-line. The primary particles are protons of \(400~\mathrm {GeV/c}\) momentum moving along the z-axis, with a pencil beam profile, and the impact point is at (0, 0) in the (x, y) plane. The overall TAX thickness corresponds to approximately 19 proton interaction lengths.

To the purpose of comparing biased with analogue muons, we have defined a scoring plane at the downstream face of the absorber. We used the FTFP_BERT physics list and turned on the simulation of short-lived particles.

3.2 Algorithm

Conceptually, the biasing scheme turns out to be straight-forward:

-

S.1

The event begins by shooting the primary proton, which at some point interacts inelastically and produces secondary particles of interest (mesons or photons)

-

S.2

When the first interesting particle reaches the end of its first step, we add an identical particle to the stack of secondaries. Here the original is marked as ”analogue” (a) and the clone is marked as ”biased” (b). The kinematics and starting point of (b) must be the same as those of (a).

-

S.3

The simulation of the original particle continues until it is destroyed or it leaves the world volume. Any time (a) creates other interesting particles, S.2 is applied to them.

-

S.4

The simulation of the biased particle starts. At each step the cross-sections of processes that would kill (b) without producing muons are set to 0. At the same time, the interaction lengths of processes leading to muons in the final state are set to

$$\begin{aligned} \lambda _{b}= \lambda _{a}\left[ 1-\exp (-l/\lambda _{a})\right] \end{aligned}$$(2)where \(\lambda _a\) is the analogue interaction length of the process and l is the distance between the current position of the particle and the projection along its current momentum on the plane at which the muons must be scored. Only this step modifies the weights (probabilities) carried by the biased particles. These weights are automatically computed, stored and propagated to daughters by GEANT4.

-

S.5

Whenever (b) produces another interesting particle, the secondary particle is marked as analogue, but further cloning is prevented.

When the simulation is done, there will be a mixture of biased and analogue muons in the output, but all of the biased ones will have weights strictly lower than 1. This criterion can be used to discard the analogue component, which would otherwise spoil the statistical power of the biased sample.

Having set the informal description of the algorithm, we now turn to the concrete implementation in GEANT4. We refer to the classes of the GEANT4 biasing framework [10].

We see that flagging the original particles and their clones is essential for the scheme to work. One can achieve this by deriving from the pure virtual class G4VUserTrackInformation to store the flag and possibly other pieces information (e.g. the PDG encoding of the mother particle of each track).

The cloning of each track is a ”non-physics” biasing operation for which a G4VBiasingOperation class is needed such that GEANT4 can handle everything swiftly. For our purposes, this class needs to implement the pure virtual methods DistanceToApplyOperation (used to decide whether or not a given particle must be cloned) and GenerateBiasingFinalState (used for the actual cloning operation). The former should always return DBL_MAX, but set the G4ForceCondition to true only for the first step of each interesting track. For the remaining steps, the condition is set to false. In the GenerateBiasingFinalState we create a new G4Track by copying the one of the incoming particle. We then assign as current momentum and position of this clone, the momentum and position of the original track at its creation, which can be significantly different from the ones at the end of the current step. An object of type G4VParticleChange is then created, using only the clone.

The next step is to ensure that all the cross-sections are handled as in point (S.4) above. GEANT4 has a built-in operation, G4BOptnChangeCrossSection, which allows the user to modify any cross-section by any factor at each step during the simulation of a particle.

The biasing interface of GEANT4 additionally requires a G4VBiasingOperator class to handle all these operations. ProposeOccurenceBiasingOperation acts only on the clones by making null all cross-sections of processes not leading to muons. The other mandatory method ProposeNonPhysicsBiasingOperation, on the other hand, acts only on original tracks through the cloning operation defined above.

Figure 1 illustrates the steps above applied to a \(K^{+}\).

Example of the biasing algorithm showing operations applied on analogue (a) and biased (b) particles. Particles to be cloned at the end of their first step are represented by blue rectangles. Solid black rectangles indicate particles undergoing analogue physics processes, but no cloning. Dashed rectangles are used for particles with modified cross-sections along their path. Particles to be kept at the end of the simulation are shown in green rectangles. The evolution of the weights (w) is also shown

Before showing the comparison of this method with the analogue simulation, we note a few aspects that users of this method should be aware of.

Step S.5 of the algorithm essentially removes muons of very small weights, that would be in any case discarded in a real application. Equation 2 is technically incorrect for charged particles as it assumes the analogue interaction length to be constant along the step. However, for above-GeV energies this limitation becomes irrelevant, as we will show in the next section.

One of the caveats of this biasing scheme is that its results are reliable only in the approximation that at most 1 muon/event reaches the scoring plane in the analogue setup. While this is not necessarily the case, we will show that for the simplistic geometry in our simulation, the analogue and biased samples agree very well.

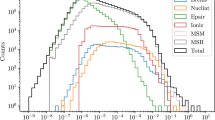

Finally, it is worth drawing some attention to the resulting weights. The weight distribution has an average of \(\simeq 3\times 10^{-4}\) and an RMS of \(\simeq 2\times 10^{-3}\). The transformations in S.4 produce weights of very small values (less than \(10^{-20}\)), in particular for muons generated by photon conversion. At the same time, about \(1\%\) of the weights are above 0.01, with a tail extending up to 1. When using the MC sample for background generation, extremely low weights induce an efficiency loss, while the muons with very large weight might affect the fluctuations. Possible solutions to these issues depend on the wanted application. One option would be to define a weight window, so that muons above a maximum threshold are split and muons below a minimum threshold undergo a Russian Roulette (see [10] for details). The choice of the weight window, the split multiplicity, and the Russian Roulette survival weight would be highly dependent on the application and would have to be optimised by the users.

The weight issues can be mitigated by choosing a constant enhancement factor for the processes leading to muons in the final state. Instead of setting the cross-sections to zero for other processes, one simply kills the clones and their daughters if the end process does not generate muons. The enhancement factor should depend on the mother particle species, not to alter the muon composition. With such an approach, the low weights are completely eliminated. Still one might impose some weight window if large fluctuations are seen to be induced by the few events in the high-weight tail. Again, all the involved parameters would have to be chosen by users and tuned according to the application. We stress that there is a natural trade-off between simulation efficiency and statistical power when one deals with biased MC samples. The algorithm proposed in this paper serves the primary purpose to allow the exploration of the full phase space of muons coming from proton interactions in thick absorbers. Reducing statistical fluctuations is left to the users.

4 Results

Using the setup described in the previous section we have obtained \(3.7\times 10^{-3}~\mu ^+\)/POT in the analogue sample and \(15~\mu ^+\)/POT in the biased sample, in the entire momentum spectrum. As opposed to the increase in statistics by more than three orders of magnitude, the CPU time overhead per event introduced by the biasing is roughly equivalent to the CPU time needed for an analogue event. On a CentOS 7 machine with Intel Xeon Gold 6230R, simulating \(10^4\) events requires about 350 s in the analogue setup and 720 s in the biased one. The hadronic shower is simulated faster in volumes which are somewhat homogeneous (such as the absorber in our simulation) and this implies that adding supplementary particles, though not undergoing heavy processes, increases the processing time significantly. The net increase in statistics one can achieve using our biasing scheme is a factor around 2000, while for more complex geometries, this gain is expected to increase somewhat.

z-coordinate of muon emission (top) and z-component of muon momentum at the scoring plane (bottom) in the analogue (symbols, blue) and biased (continuous, red) samples with positive particles plotted on the left column and negative ones on the right column. Ratio plots, together with the p value from the \(\chi ^{2}\) test for the analogue and biased distributions, are also shown

Figure 2 shows an excellent agreement between analogue and biased simulations in both the z-component of the muon momentum and the z-coordinate of the muon origin. The analogue sample starts to be poorly populated for all practical purposes at \(P_{z}\simeq 150~\mathrm {GeV/c}\), while this not the case for the biased sample even at momenta around \(300~\mathrm {GeV/c}\). The ratio plots show remarkable stability over the domain in which the analogue sample is adequately populated. We have also performed the \(\chi ^2\) test between the analogue and biased distributions. The ranges considered for the test are \(Z_{\mathrm {init}}<3000\mathrm {mm}\) and \(P_{z} < 200 \mathrm {GeV/c}\) respectively. The resulting p values assure that the samples are equivalent to a very large extent. In Fig. 3, the same distributions are split by mother particle. One can see that the agreement still holds nicely, even for sub-leading sources (\(K_{L}^{0}\)s and \(\gamma \)s).

Same as in Fig. 2, but with contributions split by mother particle

Standard deviation of the \(\mu ^+\) position x, y (left panels) and muon direction angle \(x^\prime ,y^\prime \) (right panels) at the downstream face of the absorber as a function of the \(\mu ^+\) momentum. Distributions for x and \(x^\prime \) (y and \(y^\prime \)) are shown in the top (bottom) panels. The analogue (biased) distribution is shown by the blue filled (red open) dots. The ratios between the analogue and biased distributions are also shown

Same as in Fig. 4, but for negative muons

In order to ensure the compatibility between the two samples, besides the momentum and vertex we must also check the agreement in terms of position and direction of the muons scored at the downstream face of the absorber. To this purpose, we stored the 2D distributions of \(P_{x}/P_{z}\equiv x^\prime \) vs x and, similarly, for \(y^\prime \) vs y in \(P_{z}\) bins of \(5~\mathrm {GeV/c}\) width. From these histograms, we then extracted the standard deviations \(\sigma _{x,x^\prime ,y,y^\prime }\) together with their errors.

Figures 4 and 5 show these quantities as function of \(P_{z}\) for positive and negative muons. The analogue distributions end at \(\simeq 200~\mathrm {GeV/c}\) due to insufficient statistics (less than 100 entries in each \(P_{z}\) bin). These last two plots ensure that the analogue and biased samples are compatible.

In summary, in this paper we have outlined a method to achieve an \({\mathcal {O}}(10^3)\) fold improvement in the statistical power for the production of muon halos in beam-dump experiments. The method has been validated using a simplified mock-up version of the NA62 beam dump. We expect that this method can be adapted in a straightforward fashion to a detailed description of the NA62 experiment, as well as to experiments with similar setup. The method can be directly applied to the simulation of neutrino beams, as well. Our results constitute a significant step forward in the aim to determine the expected background to searches for new-physics feebly-interacting particles from first principles.

Data Availability Statement

This manuscript has no associated data or the data will not be deposited. [Authors’ comment: The paper is a proof of concept for an algorithm that improves the statistical power of muon halos simulation for beam-dump experiment. The simulation has to be run for each use case.]

References

J. Jaeckel, M. Lamont, C. Vallée, Nat. Phys. 16(4), 393–401 (2020). https://doi.org/10.1038/s41567-020-0838-4

M. Anelli et al. [SHiP], arXiv:1504.04956 [physics.ins-det]

E. Cortina Gil et al. [NA62], JINST 12(05), P05025 (2017). https://doi.org/10.1088/1748-0221/12/05/P05025. arXiv:1703.08501 [physics.ins-det]

J.L. Feng, I. Galon, F. Kling , S. Trojanowski, Phys. Rev. D 97(3), 035001 (2018). https://doi.org/10.1103/PhysRevD.97.035001. arXiv:1708.09389 [hep-ph]

D. Banerjee et al. [NA64], Phys. Rev. Lett. 125(8), 081801 (2020). https://doi.org/10.1103/PhysRevLett.125.081801. arXiv:2005.02710 [hep-ex]

C.A. Aidala et al., SeaQuest. Nucl. Instrum. Methods A 930, 49–63 (2019). https://doi.org/10.1016/j.nima.2019.03.039. arXiv:1706.09990 [physics.ins-det]

M.S. Rosenthal et al., Muon Background Studies for Beam Dump Operation of the K12 Beam Line at CERN, in Proc. ICAP’18, Key West, FL, USA, Oct. 2018, pp. 93–98. https://doi.org/10.18429/JACoW-ICAP2018-SUPAG05. http://jacow.org/icap2018/papers/supag05.pdf

C. Ahdida et al. [SHiP], JINST 14, P11028 (2019). https://doi.org/10.1088/1748-0221/14/11/P11028. arXiv:1909.04451 [physics.ins-det]

J. Allison et al., Nucl. Instrum. Methods A 835, 186–225 (2016). https://doi.org/10.1016/j.nima.2016.06.125

Geant4 Collaboration, Geant4 Book For Application Developers, Version 10.6v2. https://geant4-userdoc.web.cern.ch/UsersGuides/ForApplicationDeveloper/BackupVersions/V10.6c/html/index.html

Acknowledgements

SG acknowledges the support of the Institute of Atomic Physics through the CERN-RO Project No. 10/10.03.2020. BD and EM acknowledge support through the European Research Council under grant ERC-2018-StG-802836 (AxScale project). We also would like thank Simone Schuchmann for useful discussions on the presented work.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

Funded by SCOAP3

About this article

Cite this article

Ghinescu, S., Döbrich, B., Minucci, E. et al. A biased MC for muon production for beam-dump experiments. Eur. Phys. J. C 81, 767 (2021). https://doi.org/10.1140/epjc/s10052-021-09541-7

Received:

Accepted:

Published:

DOI: https://doi.org/10.1140/epjc/s10052-021-09541-7