Abstract

We introduce the DNNLikelihood, a novel framework to easily encode, through deep neural networks (DNN), the full experimental information contained in complicated likelihood functions (LFs). We show how to efficiently parametrise the LF, treated as a multivariate function of parameters of interest and nuisance parameters with high dimensionality, as an interpolating function in the form of a DNN predictor. We do not use any Gaussian approximation or dimensionality reduction, such as marginalisation or profiling over nuisance parameters, so that the full experimental information is retained. The procedure applies to both binned and unbinned LFs, and allows for an efficient distribution to multiple software platforms, e.g. through the framework-independent ONNX model format. The distributed DNNLikelihood can serve several purposes, such as re-sampling through Markov Chain Monte Carlo techniques, possibly with custom priors, combination with other LFs when the correlations among parameters are known, and re-interpretation within different statistical approaches, i.e. Bayesian vs frequentist. We discuss the accuracy of our proposal and its relations with other approximation techniques and likelihood distribution frameworks. As an example, we apply our procedure to a pseudo-experiment corresponding to a realistic LHC search for new physics already considered in the literature.

1 Introduction

The Likelihood Function (LF) is the fundamental ingredient of any statistical inference. It encodes the full information on experimental measurements and allows for their interpretation from both a frequentist (e.g. Maximum Likelihood Estimation (MLE)) and a Bayesian (e.g. Maximum a Posteriori (MAP)) perspective.Footnote 1

On top of providing a description of the combined conditional probability distribution of data given a model (or vice versa, when the prior is known, of a model given data), and therefore of the relevant statistical uncertainties, the LF may also encode, through the so-called nuisance parameters, the full knowledge of systematic uncertainties and additional constraints (for instance coming from the measurement of fundamental input parameters by other experiments) affecting a given measurement or observation, as for instance discussed in Ref. [3].

Current experimental and phenomenological results in fundamental physics and astrophysics typically involve complicated fits with several parameters of interest and hundreds of nuisance parameters. Unfortunately, providing all the information encoded in the LF in a practical and reusable way is generally considered a hard task. Therefore, experimental analyses usually deliver only a small fraction of the full information contained in the LF, typically in the form of confidence intervals obtained by profiling the LF over the nuisance parameters (frequentist approach), or in terms of probability intervals obtained by marginalising over nuisance parameters (Bayesian approach), depending on the statistical method used in the analysis. This way of presenting results is very practical, since it can be encoded graphically into simple plots and/or simple tables of expectation values and correlation matrices among observables, effectively making use of the Gaussian approximation, or of refinements of it aimed at taking into account asymmetric intervals or one-sided constraints. However, such ‘partial’ information can hardly be used to reinterpret a result within a different physics scenario, to combine it with other results, or to project its sensitivity to the future. These tasks, especially outside experimental collaborations, are usually done in a naïve fashion, trying to reconstruct an approximate likelihood for the quantities of interest, employing a Gaussian approximation, assuming either full correlation or no correlation among parameters, and with little or no control over the effect of systematic uncertainties. Such control over systematic uncertainties would be particularly useful to project the sensitivity of current analyses to future experiments, an exercise that is especially relevant in the context of future collider studies [4,5,6]. One could for instance ask how a certain experimental result would change if a given systematic uncertainty (theoretical or experimental) were reduced by some amount. For the reasons given above, this kind of question usually cannot be addressed using only public results. These and other limitations could of course be overcome if the full LF were available as a function of the observables and of the elementary nuisance parameters, allowing for:

1. the combination of the LF with other LFs involving (a subset of) the same observables and/or nuisance parameters;
2. the reinterpretation of the analysis under different theoretical assumptions (up to issues with unfolding);
3. the reuse of the LF in a different statistical framework;
4. the study of the dependence of the result on the prior knowledge of the observables and/or nuisance parameters.

Considerable effort has been devoted in recent years to improving the distribution of information on experimental LFs, usually in the form of binned histograms in mutually exclusive categories or, even better, including information on the covariance matrix between them. An example is given by the Higgs Simplified Template Cross Sections [7]. Since it provides only central values and uncertainties, this approach makes intrinsic use of the Gaussian approximation to the LF without preserving the original information on the nuisance parameters of each given analysis. A further step has been taken in Refs. [8,9,10], where a simplified parameterisation in terms of a set of “effective” nuisance parameters was proposed, with the aim of capturing the main features of the true distribution of the observables, up to the third moment.Footnote 2 This is a very practical and effective solution, sufficiently accurate for many use cases. On the other hand, its underlying approximations fall short whenever the dependence of the LF on the original nuisance parameters is needed, and for highly non-Gaussian (e.g. multi-modal) LFs.

Recently, the ATLAS collaboration has taken a major step forward, releasing the full experimental LF of an analysis [12] on HEPData [13] through the HistFactory framework [14], with the format presented in Ref. [15]. Before this, the release of the full experimental likelihood had been advocated several times as a fundamental step forward for the HEP community (see e.g. the panel discussion in Ref. [16]), but it had not been followed by a concrete commitment. The lack of a concrete effort in this direction was usually attributed to technical difficulties, and indeed these difficulties were our main motivation when we initiated the work presented in this paper.

In this work, we propose to present the full LF, as used by experimental collaborations to produce the results of their analyses, in the form of a suitably trained Deep Neural Network (DNN), which is able to reproduce the original LF as a function of physical and nuisance parameters with the accuracy required to allow for the four aforementioned tasks.

The DNNLikelihood approach offers at least two remarkable practical advantages. First, it does not make underlying assumptions on the structure of the LF (e.g. binned vs unbinned), extending to use cases that might be problematic for currently available alternative solutions. For instance, there are extremely relevant analyses that are carried out using an unbinned LF, notably some Higgs studies in the four-lepton golden decay mode [17] and the majority of the analyses carried out at B-physics experiments. Second, the use of a DNN does not impose on the user any specific software choice. Neural Networks are extremely portable across multiple software environments (e.g. C++, Python, Matlab, R, or Mathematica) through the ONNX format [18]. This aspect could be important whenever different experiments make different choices in terms of how to distribute the likelihood.

In this respect, we believe that the use of the DNNLikelihood could be relevant even in a future scenario in which every major experiment has followed the remarkable example set by ATLAS. For instance, it could be useful to overcome technical difficulties with specific classes of analyses. Or, it could be seen as a further step to take in order to import a distributed likelihood into a different software environment. In addition, the DNNLikelihood could be used in other contexts, e.g. to distribute the outcome of phenomenological analyses involving multi-dimensional fits such as the Unitarity Triangle Analysis [19,20,21,22,23], the fit of electroweak precision data and Higgs signal strengths [24,25,26,27,28], etc.

There are two main challenges associated with our proposed strategy: on the one hand, in order to design a supervised learning technique, an accurate sampling of the LF is needed for the training of the DNNLikelihood. On the other hand, a (complicated) interpolation problem should be solved with an accuracy that ensures a real preservation of all the required information on the original probability distribution.

The first problem, i.e. the LF sampling, is generally easy to solve when the LF is a relatively simple function that can be quickly evaluated at each point in the parameter space. In this case, Markov Chain Monte Carlo (MCMC) techniques [29] are usually sufficient to obtain dense enough samplings of the function, even in very high dimensionality, in a reasonable time. However, the problem may quickly become intractable with these techniques when the LF is more complicated and takes much longer to evaluate. This is typically the case when the sampling workflow requires the simulation of a data sample, including computationally costly corrections (e.g. radiative corrections) and/or a simulation of the detector response, e.g. through Geant4 [30]. In these cases, evaluating the LF at a point of the parameter space may require \(\mathcal {O}\)(minutes) to go through the full chain of generation, simulation, reconstruction, event selection, and likelihood evaluation, making the LF sampling with standard MCMC techniques impractical. To overcome this difficulty, several ideas inspired by Bayesian optimisation and Gaussian processes, known as Active Learning, have recently been proposed (see, for instance, Refs. [31, 32] and references therein). These techniques, though less robust than MCMC ones, allow for a very “query efficient” sampling, i.e. a sampling that requires the smallest possible number of evaluations of the full LF. Active Learning applies machine learning techniques to design the proposal function of the sampling points and can be shown to be much more query efficient than standard MCMC techniques. Another possibility would be to employ deep learning and MCMC techniques together in a way similar to Active Learning, but inheriting some of the nice properties of MCMC. We defer a discussion of this new idea to a forthcoming publication [33], while in this work we focus on the second of the aforementioned tasks: we assume that an accurate sampling of the LF is available and design a technique to encode it and distribute it through DNNs.

A Jupyter notebook, the Python source files, and the results presented in this paper are available on GitHub, while datasets and trained networks are stored on Zenodo [34]. A dedicated Python package that allows one to sample LFs and to build, optimize, train, and store the corresponding DNNLikelihoods is in preparation. It will allow users not only to construct and distribute DNNLikelihoods, but also to use them for inference in both the Bayesian and frequentist frameworks.

This paper is organized as follows. In Sect. 2 we discuss the issue of interpolating the LF from a Bayesian and frequentist perspective and set up the procedure for producing suitable training datasets. In Sect. 3 we describe a benchmark example, consisting of the realistic LHC-like New Physics (NP) search proposed in Ref. [9]. In Sect. 4 we show our proposal at work for this benchmark example, whose LF depends on one physical parameter, the signal strength \(\mu \), and 94 nuisance parameters. Finally, in Sect. 5 we conclude and discuss some interesting ideas for future studies.

2 Interpolation of the Likelihood function

The problem of fitting high-dimensional multivariate functions is a classic interpolation problem, and it is nowadays widely recognised that DNNs provide a very effective solution to it. Nevertheless, the choice of the loss function to minimise and of the metrics used to quantify the performance, i.e. the “distance” between the fitted and the true function, depends crucially on the nature of the function and its properties. The LF is a special function, since it represents a probability distribution. As such, it corresponds to the integration measure over the probability of a given set of random variables. There are two interesting regions of the LF. From a frequentist perspective, knowledge of the profiled maxima, that is maxima of the distribution where some parameters are held fixed, and of the global maximum is needed. This requires a good knowledge of the LF in regions of the parameter space with high probability (large likelihood) and, especially in high dimensionality, very low probability mass (very small prior volume). These regions are therefore very hard to populate via sampling techniques [35] and give tiny contributions to the LF integral, the latter being increasingly dominated by the “tails” of the multidimensional distribution as the number of dimensions grows. From a Bayesian perspective, the expectation values of observables or parameters, which can be computed through integrals over the probability measure, are instead of interest. In this case one needs to accurately know regions of very small probability, which however correspond to large prior volumes and could give large contributions to the integrals.
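The concentration of probability mass away from the maximum can be checked numerically. The sketch below is illustrative and not part of the original analysis: it uses an n-dimensional standard normal as a stand-in for a high-dimensional LF (the function name and default values are our own choices) and estimates how often a draw from the distribution lands within 0.5 units of log-density from the maximum, together with the average drop of the log-density. For a standard normal, \(|\textit{\textbf{x}}|^{2}\) follows a \(\chi ^{2}\) distribution with n degrees of freedom, so the average drop is n/2.

```python
import numpy as np


def fraction_near_maximum(n_dim, delta_logl=0.5, n_samples=20_000, seed=0):
    """Sample an n_dim-dimensional standard normal and return
    (fraction of draws within `delta_logl` of the maximum log-density,
     mean drop of the log-density below its maximum)."""
    rng = np.random.default_rng(seed)
    x = rng.standard_normal((n_samples, n_dim))
    # For N(0, I): log P_max - log P(x) = |x|^2 / 2, distributed as chi2(n_dim) / 2.
    drop = 0.5 * np.sum(x**2, axis=1)
    return float(np.mean(drop <= delta_logl)), float(np.mean(drop))


for n in (1, 5, 20, 95):
    frac, mean_drop = fraction_near_maximum(n)
    print(f"n = {n:2d}: fraction within 0.5 of the maximum = {frac:.4f}, "
          f"mean drop = {mean_drop:.1f}")
```

For n = 95, the dimensionality of the benchmark LF discussed in Sect. 3, essentially no draw falls within 0.5 units of the maximum, while the mean drop is close to 47.5: an unbiased sample barely probes the region on which frequentist inference relies.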

Let us now discuss which “distance” is appropriate to minimise in order to achieve both of the aforementioned goals, i.e. to know the function equally well in the tails and close to the profiled maxima corresponding to the interesting region of the parameters of interest. Starting from the view of the LF as a probability measure (Bayesian perspective), the quantity that one is interested in minimising is the difference between the expectation values of observables computed using the true probability distribution and the fitted one.

For instance, in a Bayesian analysis one may be interested in using the probability density \(\mathcal {P} = \mathcal {L}\times \Pi \), where \(\mathcal {L}\) denotes the likelihood and \(\Pi \) the prior, to estimate expectation values as

\[ \mathbb {E}[f] = \int f(\textit{\textbf{x}})\,\hbox {d}\mathcal {P}(\textit{\textbf{x}}), \tag{1} \]

where the probability measure is \(\hbox {d}\mathcal {P}(\textit{\textbf{x}}) = \mathcal {P}(\textit{\textbf{x}})\hbox {d}\textit{\textbf{x}}\), and we collectively denote by the n-dimensional vector \(\textit{\textbf{x}}\) the parameters on which f and \(\mathcal {P}\) depend, treating the nuisance parameters and the parameters of interest on the same footing. Let us now assume that the solution to our interpolation problem provides a predicted pdf \(\mathcal {P}_{\mathrm {P}}(\textit{\textbf{x}})\), leading to an estimated expectation value

\[ \mathbb {E}_{\mathrm {P}}[f] = \int f(\textit{\textbf{x}})\,\hbox {d}\mathcal {P}_{\mathrm {P}}(\textit{\textbf{x}}). \tag{2} \]

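This can be rewritten, by defining the ratio \(r(\textit{\textbf{x}}) \equiv \mathcal {P}_{\mathrm {P}}(\textit{\textbf{x}})/\mathcal {P}(\textit{\textbf{x}}) = \mathcal {L}_{\mathrm {P}}(\textit{\textbf{x}})/\mathcal {L}(\textit{\textbf{x}})\), as

\[ \mathbb {E}_{\mathrm {P}}[f] = \int f(\textit{\textbf{x}})\,r(\textit{\textbf{x}})\,\hbox {d}\mathcal {P}(\textit{\textbf{x}}), \tag{3} \]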

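so that the absolute error in the evaluation of the expectation value is given by

\[ \left| \mathbb {E}_{\mathrm {P}}[f]-\mathbb {E}[f]\right| = \left| \int f(\textit{\textbf{x}})\,\big (r(\textit{\textbf{x}})-1\big )\,\hbox {d}\mathcal {P}(\textit{\textbf{x}})\right| . \tag{4} \]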

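For a finite sample of points \(\textit{\textbf{x}}_{i}\), with \(i=1,\ldots ,N\), the integrals are replaced by sums and Eq. (4) becomes

\[ \left| \mathbb {E}_{\mathrm {P}}[f]-\mathbb {E}[f]\right| \approx \frac{1}{N}\left| \sum _{\textit{\textbf{x}}_{i}|_{\mathcal {U}(\textit{\textbf{x}})}} f(\textit{\textbf{x}}_{i})\,\big (r(\textit{\textbf{x}}_{i})-1\big )\,\mathcal {F}(\textit{\textbf{x}}_{i})\right| . \tag{5} \]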

Here, the probability density function \(\mathcal {P}(\textit{\textbf{x}})\) has been replaced with \(\mathcal {F}(\textit{\textbf{x}}_{i})\), the frequencies with which each of the \(\textit{\textbf{x}}_{i}\) occurs, normalised such that \(\sum _{\textit{\textbf{x}}_{i}|_{\mathcal {U}(\textit{\textbf{x}})}}\mathcal {F}(\textit{\textbf{x}}_{i})=N\); the notation \(\textit{\textbf{x}}_{i}|_{\mathcal {U}(\textit{\textbf{x}})}\) indicates that the \(\textit{\textbf{x}}_{i}\) are drawn from a uniform distribution, and the 1/N factor ensures the proper normalisation of probabilities. This sum is very inefficient to calculate when the probability distribution \(\mathcal {P}(\textit{\textbf{x}})\) varies rapidly in the parameter space, i.e. deviates strongly from a uniform distribution, since most of the \(\textit{\textbf{x}}_{i}\) points drawn from the uniform distribution will correspond to very small probabilities, giving negligible contributions to the sum. An example is given by multivariate normal distributions, where the tails become increasingly relevant as the dimensionality grows (see “Appendix A”). A more efficient way of computing the sum is to sample the \(\textit{\textbf{x}}_{i}\) points directly from the probability distribution \(\mathcal {P}(\textit{\textbf{x}})\), so that Eq. (5) can be rewritten as

\[ \left| \mathbb {E}_{\mathrm {P}}[f]-\mathbb {E}[f]\right| \approx \frac{1}{N}\left| \sum _{\textit{\textbf{x}}_{i}|_{\mathcal {P}(\textit{\textbf{x}})}} f(\textit{\textbf{x}}_{i})\,\big (r(\textit{\textbf{x}}_{i})-1\big )\right| . \tag{6} \]

This expression clarifies the aforementioned importance of being able to sample points from the probability distribution \(\mathcal {P}\) to efficiently discretize the integrals and compute expectation values. The minimum of this function for any \(f(\textit{\textbf{x}})\) is attained at \(r(\textit{\textbf{x}}_{i})=1\), which, in turn, implies \(\mathcal {L}(\textit{\textbf{x}}_{i})=\mathcal {L}_{\mathrm {P}}(\textit{\textbf{x}}_{i})\). This suggests that the performance of the interpolated likelihood could be estimated with any metric whose absolute value is minimal at \(r(\textit{\textbf{x}}_{i})=1\). The simplest such metric is the mean percentage error (MPE)

\[ \text {MPE}_{\mathcal {L}} = \frac{100}{N}\sum _{i=1}^{N}\frac{\mathcal {L}(\textit{\textbf{x}}_{i})-\mathcal {L}_{\mathrm {P}}(\textit{\textbf{x}}_{i})}{\mathcal {L}(\textit{\textbf{x}}_{i})} = \frac{100}{N}\sum _{i=1}^{N}\big (1-r(\textit{\textbf{x}}_{i})\big ). \tag{7} \]

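Technically, formulating the interpolation problem on the LF itself introduces the difficulty of having to fit the function over several orders of magnitude, which leads to numerical instabilities. For this reason it is much more convenient to formulate the problem using the natural logarithm of the LF, the so-called log-likelihood \(\log \mathcal {L}\). Let us see how the error on the log-likelihood propagates to the actual likelihood. Consider the mean error (ME) on the log-likelihood

\[ \text {ME}_{\log \mathcal {L}} = \frac{1}{N}\sum _{i=1}^{N}\big (\log \mathcal {L}(\textit{\textbf{x}}_{i})-\log \mathcal {L}_{\mathrm {P}}(\textit{\textbf{x}}_{i})\big ) = -\frac{1}{N}\sum _{i=1}^{N}\log r(\textit{\textbf{x}}_{i}). \tag{8} \]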

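The last logarithm can be expanded for \(r(\textit{\textbf{x}}_{i}) \sim 1\) to give

\[ \text {ME}_{\log \mathcal {L}} = \frac{1}{N}\sum _{i=1}^{N}\big (1-r(\textit{\textbf{x}}_{i})\big ) + \mathcal {O}\big ((r-1)^{2}\big ) = \frac{\text {MPE}_{\mathcal {L}}}{100} + \mathcal {O}\big ((r-1)^{2}\big ). \tag{9} \]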

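It is interesting to notice that \(\text {ME}_{\log \mathcal {L}}\) defined in Eq. (8) corresponds to the Kullback–Leibler divergence [36], or relative entropy, between \(\mathcal {P}\) and \(\mathcal {P}_{\mathrm {P}}\):

\[ D_{\mathrm {KL}} = \int \hbox {d}\mathcal {P}(\textit{\textbf{x}})\,\log \frac{\mathcal {P}(\textit{\textbf{x}})}{\mathcal {P}_{\mathrm {P}}(\textit{\textbf{x}})} = -\int \hbox {d}\mathcal {P}(\textit{\textbf{x}})\,\log r(\textit{\textbf{x}}) \approx -\frac{1}{N}\sum _{\textit{\textbf{x}}_{i}|_{\mathcal {P}(\textit{\textbf{x}})}}\log r(\textit{\textbf{x}}_{i}) = \text {ME}_{\log \mathcal {L}}. \tag{10} \]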

While Eq. (10) confirms that small values of \(D_{\mathrm {KL}}=\text {ME}_{\log \mathcal {L}}\sim \text {MPE}_{\mathcal {L}}\) correspond to good performance of the interpolation, \(D_{\mathrm {KL}}\), as well as ME and MPE, do not satisfy the triangle inequality and therefore cannot be directly optimised for the purpose of training and evaluating a DNN. Equation (8) suggests, however, that the mean absolute error (MAE) or the mean square error (MSE) on \(\log \mathcal {L}\) should be suitable losses for the DNN training: we explicitly checked that this is indeed the case, with MSE performing slightly better for well-known reasons.
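The metrics above can be computed directly from the true and predicted log-likelihoods evaluated at points sampled from \(\mathcal {P}\). The following is a minimal sketch, assuming arrays `logl_true` and `logl_pred` (hypothetical names), that \(r = \mathcal {L}_{\mathrm {P}}/\mathcal {L}\) as in the text, and that both densities are normalised, so that the ME on \(\log \mathcal {L}\) is a sample estimate of \(D_{\mathrm {KL}}\). It returns the MPE on \(\mathcal {L}\), the ME on \(\log \mathcal {L}\), and the MAE and MSE on \(\log \mathcal {L}\) that serve as candidate training losses.

```python
import numpy as np


def likelihood_metrics(logl_true, logl_pred):
    """Interpolation metrics from log L and log L_P evaluated at points x_i
    sampled from P, with r_i = L_P(x_i) / L(x_i)."""
    logl_true = np.asarray(logl_true, dtype=float)
    logl_pred = np.asarray(logl_pred, dtype=float)
    diff = logl_true - logl_pred        # log L - log L_P = -log r
    r = np.exp(-diff)
    return {
        "MPE_L": float(100.0 * np.mean(1.0 - r)),   # mean percentage error on L
        "ME_logL": float(np.mean(diff)),            # sample estimate of D_KL(P || P_P)
        "MAE_logL": float(np.mean(np.abs(diff))),   # candidate training loss
        "MSE_logL": float(np.mean(diff ** 2)),      # candidate training loss
    }
```

With small random errors on \(\log \mathcal {L}_{\mathrm {P}}\), the ME on \(\log \mathcal {L}\) and one hundredth of the MPE agree up to second-order terms in \(r-1\), as expected from the expansion of the logarithm.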

Finally, in the frequentist approach, the LF can be treated just as any function in a regression (or interpolation) problem, and, as we will see, the MSE provides a good choice for the loss function.

2.1 Evaluation metrics

We have argued above that the MAE or MSE on \(\log \mathcal {L}(\textit{\textbf{x}}_{i})\) are the most suitable loss functions to train our DNN for interpolating the LF on the sample \(\textit{\textbf{x}}_{i}\). We are then left with the question of how to measure the performance of our interpolation from the statistical point of view. In addition to \(D_{\mathrm {KL}}\), several quantities can be computed to quantify the performance of the predictor. First of all, we perform a Kolmogorov–Smirnov (K-S) two-sample test [37, 38] on all the marginalised one-dimensional distributions obtained using \(\mathcal {P}\) and \(\mathcal {P}_{P}\). Under the hypothesis that both distributions are drawn from the same pdf, the p value should be uniformly distributed in the interval [0, 1]. Therefore, the median of the distribution of p values of the one-dimensional K-S tests is a representative single number that allows one to evaluate the performance of the model. We also compute the error on the width of the Highest Posterior Density Intervals (HPDI) \(\mathrm {PI}^{i}\) of the marginalised one-dimensional distribution of the i-th parameter, \(E_{\mathrm {PI}}^{i} =\left| \mathrm {PI}^{i} - \mathrm {PI}^{i}_{P} \right| \), as well as the relative error on the median of each marginalised distribution. From a frequentist point of view, we are interested in reproducing as precisely as possible the test statistics used in classical inference. In this case we evaluate the model by looking at the mean error on the test statistic \(t_{\mu }\), that is, the likelihood ratio profiled over nuisance parameters.
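A possible implementation of the Bayesian metrics is sketched below using NumPy only. Assumptions: samples from \(\mathcal {P}\) and \(\mathcal {P}_{\mathrm {P}}\) are stored as arrays of shape (number of samples, number of parameters); the K-S p value uses the asymptotic Kolmogorov distribution with the effective-size correction popularised by Numerical Recipes rather than an exact computation; and the HPDI is computed as the shortest interval containing the requested probability, which coincides with the HPDI only for unimodal marginals. All function names are hypothetical. In practice, `scipy.stats.ks_2samp` could replace the hand-written test.

```python
import numpy as np


def ks_2samp_asymptotic(a, b):
    """Two-sample K-S statistic D and its asymptotic p value."""
    a = np.sort(np.asarray(a, dtype=float))
    b = np.sort(np.asarray(b, dtype=float))
    grid = np.concatenate([a, b])
    cdf_a = np.searchsorted(a, grid, side="right") / a.size
    cdf_b = np.searchsorted(b, grid, side="right") / b.size
    d = float(np.max(np.abs(cdf_a - cdf_b)))
    n_eff = a.size * b.size / (a.size + b.size)
    lam = (np.sqrt(n_eff) + 0.12 + 0.11 / np.sqrt(n_eff)) * d
    if lam < 0.2:  # Kolmogorov survival function equals 1 to ~1e-12 here
        return d, 1.0
    k = np.arange(1, 101)
    p = 2.0 * np.sum((-1.0) ** (k - 1) * np.exp(-2.0 * (k * lam) ** 2))
    return d, float(np.clip(p, 0.0, 1.0))


def hpdi_width(samples, prob=0.68):
    """Width of the shortest interval containing a fraction `prob` of the samples."""
    x = np.sort(np.asarray(samples, dtype=float))
    n_in = int(np.ceil(prob * x.size))
    widths = x[n_in - 1:] - x[: x.size - n_in + 1]
    return float(np.min(widths))


def marginal_report(samples_true, samples_pred, prob=0.68):
    """Median K-S p value over the 1D marginals and per-parameter HPDI width errors."""
    p_values, width_errors = [], []
    for j in range(samples_true.shape[1]):
        t, p = samples_true[:, j], samples_pred[:, j]
        p_values.append(ks_2samp_asymptotic(t, p)[1])
        width_errors.append(abs(hpdi_width(t, prob) - hpdi_width(p, prob)))
    return float(np.median(p_values)), np.array(width_errors)
```

Comparing the median p value across models mirrors the model-selection criterion described below for Bayesian inference.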

To simplify the presentation of the results, we choose the best models according to the median K-S p value for Bayesian inference, and according to the mean error on the \(t_{\mu }\) test statistic for frequentist inference. These quantities are compared for all the different models on an identical test set, statistically independent of both the training and validation sets used for the hyperparameter optimisation.

2.2 Learning from imbalanced data

The loss functions discussed above are all averages over all samples and, as such, lead to better learning in regions that are well represented in the training set and to poorer learning in regions that are under-represented. On the other hand, an unbiased sampling of the LF populates regions of large probability mass much more densely than regions of large LF. Especially in high dimensionality, it is prohibitive, in terms of the required number of samples, to obtain an unbiased sampling of the LF, i.e. one converging to the underlying probability distribution, that still covers the large-LF region with enough statistics. In this respect, learning a multi-dimensional LF raises the issue of learning from highly imbalanced data. This issue has been extensively studied in the ML literature for classification problems, but has received much less attention in the context of regression [39,40,41].

There are two main approaches in the case of regression, both of which assign different weights to different examples. In the first approach, the training set is modified by oversampling and/or undersampling different regions (possibly adding noise) to counteract a low/high population of examples, while in the second approach the loss function is modified to give more (less) weight to regions with fewer (more) examples. When the theoretical distribution underlying the target variable is (at least approximately) known, as in our case, either procedure can be applied by assigning weights proportional to the inverse frequency of each example in the population. This approach, applied for instance by adding weights to a linear loss function, would effectively weigh each example equally, which may not be exactly what we need. Moreover, in large dimensionality, the interesting region close to the maximum would be completely absent from the sampling, making any reweighting irrelevant. In this paper we therefore apply an approach belonging to the first class mentioned above, which consists of sampling the LF in the regions of interest and constructing a training sample that effectively gives more weight to the most interesting regions. As we clarify in Sect. 3, this procedure consists of building three samples: an unbiased sample, a biased sample and a mixed one. Training data will be extracted from the latter. Let us briefly describe the three:Footnote 3
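For comparison, the inverse-frequency reweighting mentioned above, which is not the approach adopted here, can be sketched in a few lines. This is an illustration under our own assumptions: frequencies are estimated with a histogram of the target variable (e.g. \(\log \mathcal {L}\)) with an arbitrary number of bins, and the weights enter a linear (absolute-error) loss. The function names are hypothetical. With these weights every bin of the target variable contributes the same total weight to the loss.

```python
import numpy as np


def inverse_frequency_weights(y, n_bins=50):
    """Weights proportional to 1 / (number of examples in the target-value bin),
    rescaled to have mean 1."""
    y = np.asarray(y, dtype=float)
    counts, edges = np.histogram(y, bins=n_bins)
    # Bin index of each example, consistent with np.histogram's binning.
    idx = np.clip(np.digitize(y, edges[1:-1]), 0, n_bins - 1)
    w = 1.0 / counts[idx]
    return w / w.mean()


def weighted_mae(y_true, y_pred, w):
    """Linear (absolute-error) loss with per-example weights."""
    y_true, y_pred, w = (np.asarray(v, dtype=float) for v in (y_true, y_pred, w))
    return float(np.mean(w * np.abs(y_true - y_pred)))
```

As argued in the text, such weights cannot help when the region near the maximum is simply absent from the sample, which is why enriching the sample itself is preferred.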

Unbiased sample: A sample that has converged as accurately as possible to the true probability distribution. Notice that this sample is the only one that allows for posterior inference in a Bayesian perspective, but it would generally be inadequate for frequentist inference [35].

Biased sample: A sample concentrated around the region of maximum likelihood. It is obtained by biasing the sampler towards the region of large LF, only allowing for small moves around the maximum. Tuning this sample, targeted to a frequentist MLE perspective, raises the issue of coverage, which we discuss in Sect. 3. One has to keep in mind that the region of the LF that needs to be well known, i.e. the region around the maximum, is related to the coverage of the frequentist analysis being carried out. More explicitly, the distribution of the test statistic provides a map between \(\Delta \log \mathcal {L}\) values and confidence levels, which tells, a priori, which range of \(\Delta \log \mathcal {L}\) from the maximum is needed for frequentist inference at a given confidence level. For instance, in the asymptotic limit of Wilks’ theorem [42] the relation is determined by a \(\chi ^{2}\) distribution. In general, the relation is unknown until the distribution of the test statistic is known, so that the biased sample \(S_{2}\) cannot be tuned exactly. However, unless very large deviations from Wilks’ theorem are expected, covering a \(\Delta \log \mathcal {L}\) range two or three times wider than the one necessary for inference at the desired confidence level in the asymptotic approach should be sufficient.
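The asymptotic map between \(\Delta \log \mathcal {L}\) and confidence level can be made explicit for a single parameter of interest such as \(\mu \). In the Wilks limit, \(-2\Delta \log \mathcal {L}\) follows a \(\chi ^{2}\) distribution with one degree of freedom, whose quantile is the square of a standard-normal quantile, so Python's standard library suffices. The sketch below is ours and assumes exactly one parameter of interest; `safety_factor` is a hypothetical knob implementing the factor of two or three suggested in the text.

```python
from statistics import NormalDist


def delta_logl_threshold(cl, safety_factor=1.0):
    """-Delta log L from the maximum needed for a confidence level `cl`,
    assuming Wilks' theorem with one parameter of interest:
    -2 Delta log L ~ chi2(1)."""
    # chi2(1) quantile at `cl` = (standard-normal quantile at (1 + cl) / 2)^2
    z = NormalDist().inv_cdf(0.5 * (1.0 + cl))
    return safety_factor * 0.5 * z * z


for cl in (0.6827, 0.95):
    base = delta_logl_threshold(cl)
    print(f"CL = {cl}: -Delta log L = {base:.3f}, "
          f"x2 to x3 range: {2 * base:.2f} to {3 * base:.2f}")
```

For a 95% CL this gives \(-\Delta \log \mathcal {L}\simeq 1.92\), so under the rule of thumb above the biased sample would cover roughly 3.8 to 5.8 units below the maximum.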

Mixed sample: This sample is built by enriching the unbiased sample with points from the biased one in the region of large values of the LF. This is a tuning procedure: depending on the number of available samples and the statistics needed for training the DNN, this sample needs to be constructed so as to best reproduce the results of the given analysis in both the Bayesian and frequentist inference frameworks.
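The construction of the mixed sample can be illustrated with simple array operations. In the minimal sketch below, which is our own and not the exact procedure of the paper, `build_mixed_sample` is a hypothetical helper: it keeps the whole unbiased sample, adds the biased points lying within `delta_logl_max` of the highest log-likelihood found, optionally subsamples them to `n_biased` points, and shuffles the result. The choice of `delta_logl_max` and `n_biased` is precisely the analysis-dependent tuning step described in the text.

```python
import numpy as np


def build_mixed_sample(x_unbiased, logl_unbiased, x_biased, logl_biased,
                       delta_logl_max, n_biased=None, seed=0):
    """Hypothetical helper: enrich an unbiased sample with biased-sample points
    within `delta_logl_max` of the maximum log-likelihood found."""
    rng = np.random.default_rng(seed)
    logl_max = max(logl_unbiased.max(), logl_biased.max())
    keep = np.flatnonzero(logl_max - logl_biased <= delta_logl_max)
    if n_biased is not None and n_biased < keep.size:
        keep = rng.choice(keep, size=n_biased, replace=False)
    x_mixed = np.concatenate([x_unbiased, x_biased[keep]])
    logl_mixed = np.concatenate([logl_unbiased, logl_biased[keep]])
    perm = rng.permutation(logl_mixed.size)  # shuffle before train/validation split
    return x_mixed[perm], logl_mixed[perm]
```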

Some considerations are in order. The unbiased sample is enough if one wants to produce a DNNLikelihood to be used only for Bayesian inference. As we show later, this does not require a complicated tuning of hyperparameters (at least in the example we consider) and reaches very good performance, evaluated with the metrics discussed above, already with relatively small statistics in the training sample (considering the high dimensionality). The situation becomes somewhat more complicated when one also wants to make frequentist inference with the same DNNLikelihood. In this case the mixed sample (and therefore the biased one) is needed, and more tuning of the network as well as more samples in the training set are required. For the example presented in this paper it was rather simple to obtain the required precision. However, for more complicated cases, we believe that ensemble learning techniques could be relevant to obtain stable and accurate results. We made some attempts to implement stacking of several identical models trained on randomly selected subsets of the data and observed promising improvements. Nevertheless, a careful comparison of different ensemble techniques and their performance is beyond the scope of this paper, and we therefore do not consider ensemble learning in the present analysis.

The final issue we have to address when training with the mixed sample, which is biased by construction, is to ensure that the DNNLikelihood can still produce accurate enough Bayesian posterior estimates. This is actually guaranteed by the fact that a regression (or interpolation) problem, contrary to a classification one, is insensitive to the distribution of the target variable, since the output is not conditioned on that probability distribution. This, as can be clearly seen from the results presented in Sect. 3, is a crucial ingredient for our procedure to be useful, and leads to the main result of our approach: a DNNLikelihood trained with the mixed sample can be used to perform a new MCMC that converges to the underlying distribution, forgetting the biased nature of the training set.

In the next section we give a thorough example of the procedure discussed here in the case of a prototype LHC-like search for NP corresponding to a 95-dimensional LF.

3 A realistic LHC-like NP search

In this section we introduce the prototype LHC-like NP search presented in Ref. [9], which we take as a representative example to illustrate how to train the DNNLikelihood. We refer the reader to Ref. [9] for a detailed discussion of this setup and repeat here only the information that is strictly necessary to follow our analysis.

The toy experiment consists of a typical “shape analysis” of a given distribution, aimed at extracting information on a possible NP signal over the standard model (SM) background. The measurement is divided into three different event categories, containing 30 bins each. The signal is characterized by a single “signal-strength” parameter \(\mu \) and the uncertainty on the signal is neglected.Footnote 4 All uncertainties affecting the background are parametrised in terms of nuisance parameters, which may be divided into three categories:

1. Fully uncorrelated uncertainties in each bin: They correspond to a nuisance parameter for each bin \(\delta _{\text {MC},i}\), with uncorrelated priors, parametrising the uncertainty due to the limited Monte Carlo statistics, or statistics in a control region, used to estimate the number of background events in each bin.
2. Fully correlated uncertainties in each bin: They correspond to a single nuisance parameter for each source of uncertainty affecting all bins in the distribution in a correlated way. In this toy experiment, such sources of uncertainty are the modeling of the Initial State Radiation and the Jet Energy Scale, parametrised respectively by the nuisance parameters \(\delta _{\mathrm {ISR}}\) and \(\delta _{\mathrm {JES}}\).
3. Uncertainties on the overall normalisation (correlated among event categories): They correspond to the previous two nuisance parameters \(\delta _{\mathrm {ISR}}\) and \(\delta _{\mathrm {JES}}\), which, on top of affecting the shape, also affect the overall normalisation in the different categories, plus two typical experimental uncertainties that only affect the normalisation, given by a veto efficiency and a scale-factor appearing in the simulation, parametrised respectively by \(\delta _{\mathrm {LV}}\) and \(\delta _{\mathrm {RC}}\).

In summary, the LF depends on one physical parameter \(\mu \) and 94 nuisance parameters, that we collectively indicate with the vector \(\varvec{\delta }\), whose components are defined by \(\delta _{i}=\delta _{\text {MC},i}\) for \(i=1,\ldots ,90\), \(\delta _{91}=\delta _{\mathrm {ISR}}\), \(\delta _{92}=\delta _{\mathrm {JES}}\), \(\delta _{93}=\delta _{\mathrm {LV}}\), \(\delta _{94}=\delta _{\mathrm {RC}}\).

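The full model likelihood can be written asFootnote 5

\[ \mathcal {L}(\mu ,\varvec{\delta }) = \prod _{I=1}^{90} \Pr \big (n_{I}^{\text {obs}}\,\big |\,n_{I}(\mu ,\varvec{\delta })\big )\,\pi (\varvec{\delta }), \tag{11} \]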

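where \(n_{I}^{\text {obs}}\) is the observed number of events in the LHC-like search discussed in Ref. [9] and the product runs over all bins I. The number of expected events in each bin is given by \(n_{I}(\mu ,\varvec{\delta })=n_{s,I}(\mu )+n_{b,I}(\varvec{\delta })\), and the probability distributions are given by Poisson distributions in each bin

\[ \Pr \big (n_{I}^{\text {obs}}\,\big |\,n_{I}(\mu ,\varvec{\delta })\big ) = \frac{n_{I}(\mu ,\varvec{\delta })^{\,n_{I}^{\text {obs}}}\,e^{-n_{I}(\mu ,\varvec{\delta })}}{n_{I}^{\text {obs}}!}. \tag{12} \]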

In this toy LF, the number of background events in each bin \(n_{b,I}(\varvec{\delta })\) is known analytically as a function of the nuisance parameters, through various numerical parameters that interpolate the effect of systematic uncertainties. The parametrisation of \(n_{b,I}(\varvec{\delta })\) is such that the nuisance parameters \(\varvec{\delta }\) are normally distributed with zero mean vector and identity covariance matrix

\[ \pi (\varvec{\delta }) = \frac{e^{-\frac{1}{2}\varvec{\delta }^{T}\varvec{\delta }}}{(2\pi )^{94/2}}. \tag{13} \]

Moreover, because of the interpolations involved in the parametrisation of the nuisance parameters, the \(\varvec{\delta }\)s are only allowed to take values in the range \([-5,5]\), in order to ensure positive probabilities.
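The structure of the LF described above can be sketched as a short Python function returning \(\log \mathcal {L}(\mu ,\varvec{\delta })\): Poisson terms summed over bins plus the log of the standard-normal \(\pi (\varvec{\delta })\), with the \([-5,5]\) restriction on the nuisance parameters. This is a minimal sketch under stated assumptions, not the code behind Ref. [9]: the signal yield is assumed to be linear in \(\mu \), \(n_{s,I}(\mu )=\mu \, n_{s,I}\), and the background model in the usage lines is a hypothetical one-parameter example.

```python
import numpy as np
from scipy.special import gammaln


def log_likelihood(mu, delta, n_obs, n_sig, n_bkg_fn):
    """log L(mu, delta): binned Poisson terms plus a standard-normal constraint on delta.

    Assumptions for illustration: the signal yield is linear in mu,
    n_s,I(mu) = mu * n_sig[I], and n_bkg_fn(delta) returns the background yields n_b,I(delta).
    """
    delta = np.asarray(delta, dtype=float)
    if np.any(np.abs(delta) > 5.0):  # nuisance parameters restricted to [-5, 5]
        return -np.inf
    n_exp = mu * n_sig + n_bkg_fn(delta)  # n_I = n_s,I(mu) + n_b,I(delta)
    if np.any(n_exp <= 0.0):
        return -np.inf
    # Sum over bins of log Poisson(n_obs | n_exp), using log(n!) = gammaln(n + 1).
    log_poisson = np.sum(n_obs * np.log(n_exp) - n_exp - gammaln(n_obs + 1.0))
    # Log-density of a multivariate normal with zero mean and identity covariance.
    log_pi = -0.5 * delta @ delta - 0.5 * delta.size * np.log(2.0 * np.pi)
    return log_poisson + log_pi


# Usage with two bins and one hypothetical nuisance parameter scaling the background by 10% per unit.
n_obs = np.array([3, 5])
n_sig = np.array([1.0, 2.0])
n_bkg = lambda d: np.array([2.0, 4.0]) * (1.0 + 0.1 * d[0])
print(log_likelihood(0.5, [0.3], n_obs, n_sig, n_bkg))
```

In the real toy LF, `delta` has 94 components and the background function encodes the interpolated systematic effects; only the overall structure is meant to carry over.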

In our approach, we are interested in setting up a supervised learning problem to learn the LF as a function of the parameters. Independently of the statistical perspective, i.e. whether the parameters are treated as random variables or just as variables, we need to choose the points at which the LF is evaluated. For the nuisance parameters, the function \(\pi (\varvec{\delta })\) already tells us how to choose these points, since it implicitly treats the nuisance parameters as random variables distributed according to this probability distribution. For the model parameters, in this case only \(\mu \), we have to decide how to generate points, independently of the stochastic nature of the parameter itself. In this toy example, since we expect \(\mu \) to be relatively “small” and most probably positive, we generate \(\mu \) values according to a uniform probability distribution in the interval \([-1,5]\). This can be regarded as the prior on the stochastic variable \(\mu \) in a Bayesian perspective, while it is just a scan of the parameter space of \(\mu \) in the frequentist one.Footnote 6 Notice that we allow for small negative values of \(\mu \).Footnote 7 Whenever the NP contribution comes from the on-shell production of some new physics, negative values of \(\mu \) are not consistent. However, in an Effective Field Theory (EFT) perspective, the “signal” may come from the interference of the SM background with higher-dimensional operators. This interference can be negative, depending on the sign of the corresponding Wilson coefficient, which motivates our choice to allow for negative values of \(\mu \) in our scan.

Fig. 1: Evolution of the chains in an emcee3 sampling of the LF in Eq. (11) with \(10^{3}\) walkers and \(10^{6}\) steps using the StretchMove algorithm with \(a=1.3\). The plots show the explored values of the parameter \(\mu \) (left) and of minus the log-likelihood \(-\log \mathcal {L}\) (right) versus the number of steps for a random subset of \(10^{2}\) of the \(10^{3}\) chains. The parameter \(\mu \) was initialised from a uniform distribution in the interval \([-1,5]\). For visualisation purposes, values in the plots are computed only for numbers of steps included in the set \(\{a\times 10^{b}\}\) with \(a\in [1,9]\) and \(b\in [0,6]\).

3.1 Sampling the full likelihood

To obtain the three samples discussed in Sect. 2.2 from the full model LF in Eq. (11) we used the emcee3 Python package [44], which implements the Affine Invariant (AI) MCMC Ensemble Sampler [45]. We proceeded as follows:

- 1.

Unbiased sample \(S_{1}\)

In the first sampling, the proposals have been generated using the default StretchMove algorithm implemented in emcee3, which updates all parameters (95 in our case) simultaneously. The default value \(a=2\) of the only free parameter of this algorithm delivered a somewhat too low acceptance fraction, \(\varepsilon \approx 0.12\). We have therefore set \(a=1.3\), which delivers a better acceptance fraction of about 0.36. WalkersFootnote 8 have been initialised randomly according to the prior distribution of the parameters. The algorithm efficiently achieves convergence to the true target distribution but, given the large dimensionality, rarely explores regions with large values of the LF.
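The StretchMove update can be illustrated with a stand-alone NumPy sketch of the affine-invariant stretch move of Ref. [45]. This is our own simplified re-implementation, not emcee3's code, and the function name and signature are hypothetical: the ensemble is split into two halves updated in turn, each walker \(X_{k}\) is moved along the line through a randomly chosen walker \(X_{j}\) of the other half, \(Y=X_{j}+z(X_{k}-X_{j})\), with \(z\) drawn from \(g(z)\propto 1/\sqrt{z}\) on \([1/a,a]\), and the move is accepted with probability \(\min (1,z^{d-1}p(Y)/p(X_{k}))\).

```python
import numpy as np


def stretch_move_sampler(log_prob, p0, n_steps, a=2.0, rng=None):
    """Affine-invariant ensemble sampler using the stretch move (illustrative sketch).

    log_prob: vectorised function mapping an (n, ndim) array to n log-densities.
    p0: (n_walkers, ndim) initial positions (n_walkers even and >= 4).
    Returns the chain, shape (n_steps, n_walkers, ndim), and per-walker acceptance fractions.
    """
    rng = np.random.default_rng(rng)
    pos = np.array(p0, dtype=float)
    n_walkers, ndim = pos.shape
    lp = log_prob(pos)
    halves = np.array_split(np.arange(n_walkers), 2)
    chain = np.empty((n_steps, n_walkers, ndim))
    n_accepted = np.zeros(n_walkers)
    for step in range(n_steps):
        for s in (0, 1):
            active, other = halves[s], halves[1 - s]
            # Stretch factor z ~ g(z) ∝ 1/sqrt(z) on [1/a, a], by inverse-CDF sampling.
            z = ((a - 1.0) * rng.random(len(active)) + 1.0) ** 2 / a
            partners = pos[rng.choice(other, size=len(active))]
            proposal = partners + z[:, None] * (pos[active] - partners)
            lp_new = log_prob(proposal)
            # Acceptance probability min(1, z^(ndim-1) p(Y) / p(X)).
            log_ratio = (ndim - 1) * np.log(z) + lp_new - lp[active]
            accept = np.log(rng.random(len(active))) < log_ratio
            moved = active[accept]
            pos[moved] = proposal[accept]
            lp[moved] = lp_new[accept]
            n_accepted[moved] += 1
        chain[step] = pos
    return chain, n_accepted / n_steps


# Usage on a toy 3D standard normal target (vectorised log-density).
log_gauss = lambda x: -0.5 * np.sum(x**2, axis=1)
p0 = np.random.default_rng(0).normal(size=(40, 3))
for a in (2.0, 1.3):
    _, acc = stretch_move_sampler(log_gauss, p0, 500, a=a, rng=1)
    print(f"a = {a}: mean acceptance fraction {acc.mean():.2f}")
```

Smaller values of \(a\) shrink the proposed stretches, which raises the acceptance fraction, in line with the change from \(a=2\) to \(a=1.3\) described above.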

In Fig. 1 we show the evolution of the walkers for the parameter \(\mu \) (left) together with the corresponding values of \(-\log \mathcal {L}\) (right) for an illustrative set of 100 walkers. These plots suggest that reasonable convergence is already reached after roughly \(10^{3}\) steps, which also gives an empirical estimate of the autocorrelation of the samples within each walker.

Notice that, in the case of ensemble sampling algorithms, the Gelman, Rubin and Brooks statistic, usually denoted as \(\hat{R}_{c}\) [46, 47], is not guaranteed to be a robust tool to assess convergence, because each walker is updated based on the state of the other walkers at the previous step (i.e. there is a correlation among nearby steps of different walkers). This can be explained as follows. Schematically, the Gelman, Rubin and Brooks statistic compares a good estimate of the variance of the samples, obtained from the variance across independent chains, with an approximate variance obtained from the samples in a single chain, which in general have some autocorrelation. Their ratio estimates the effect of neglecting such autocorrelation, and when it approaches one this effect becomes negligible. As mentioned above, in the case of ensemble sampling there is some correlation among subsequent steps of different walkers, which means that the variance computed across walkers is not a good estimate of the true variance of the samples either. Nevertheless, since the state of a walker is updated from the state of all other walkers, and not just one, the correlation of the updated walker with each of the other walkers decreases as the number of walkers increases. This implies that, in the limit of a large number of walkers, the effect of correlation among walkers is much smaller than the effect of autocorrelation within each single walker, so that the Gelman, Rubin and Brooks statistic should still be a good metric to monitor convergence. Let us finally stress that the correlation among nearby steps of different walkers does not invalidate ensemble MCMC sampling. Indeed, as explained in Ref. [45], since the target distribution of the sampler is the direct product of the target probability distributions of the individual walkers, once the sampler has converged to its target distribution each walker provides an independent representation of the target probability distribution.

To check our expectation about the performance of the Gelman, Rubin and Brooks statistic in monitoring convergence in the limit of a large number of walkers, we proceeded as suggested in Ref. [48]: to reduce the correlation among walkers, one can run a number of independent samplers, extract a few walkers from each run, and compute \(\hat{R}_{c}\) for this set. Considering the aforementioned empirical estimate of the number of steps needed for convergence, i.e. a few \(10^{3}\), we have run 50 independent samplers for a larger number of steps (\(3\times 10^{4}\)), randomly extracted 4 chains from each, joined them together, and computed \(\hat{R}_{c}\) for this set. This is shown in the upper-left plot of Fig. 2. With the requirement \(\hat{R}_{c}<1.2\) [47] we see that the chains have already converged after around \(5\times 10^{3}\) steps, which is roughly what we estimated empirically from the evolution of the chains in Fig. 1. An even more robust requirement for convergence is given by \(\hat{R}_{c}<1.1\), together with a stabilised evolution of both variances \(\hat{V}\) and W [47]. In the center and right plots of Fig. 2 we show this evolution, from which we see that convergence has robustly occurred after \((2-3)\times 10^{4}\) steps. We have then compared this result with the one obtained by performing the same analysis using 200 walkers from a single sampler. The result is shown in the lower panels of Fig. 2. As can be seen by comparing the upper and lower plots, the correlation among walkers plays only a marginal role in assessing convergence, as expected from the discussion above.
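The variances entering the Gelman, Rubin and Brooks diagnostic can be computed per parameter as in the following sketch (hypothetical function names). It returns the plain ratio \(\hat{V}/W\), without the degrees-of-freedom correction that distinguishes \(\hat{R}_{c}\) [47], and mimics the procedure of Ref. [48] by pooling a few randomly chosen walkers from each independent run.

```python
import numpy as np


def gelman_rubin(chains):
    """chains: (m, n) array with m chains of n samples of one parameter.

    Returns (R, V_hat, W) with R = V_hat / W (no degrees-of-freedom correction).
    """
    chains = np.asarray(chains, dtype=float)
    m, n = chains.shape
    W = chains.var(axis=1, ddof=1).mean()            # mean within-chain variance
    B = n * chains.mean(axis=1).var(ddof=1)          # between-chain variance
    V_hat = (n - 1) / n * W + (m + 1) / (m * n) * B  # pooled variance estimate
    return V_hat / W, V_hat, W


def pool_walkers(runs, n_per_run=4, rng=None):
    """runs: list of (n_steps, n_walkers) chains of one parameter, one per independent sampler.

    Randomly picks n_per_run walkers from each run and returns an (m, n_steps) array.
    """
    rng = np.random.default_rng(rng)
    picked = []
    for chain in runs:
        cols = rng.choice(chain.shape[1], size=n_per_run, replace=False)
        picked.append(chain[:, cols].T)
    return np.concatenate(picked, axis=0)


# Usage: 50 hypothetical independent runs (20 walkers each here, to keep the example small).
rng = np.random.default_rng(0)
runs = [rng.normal(size=(1000, 20)) for _ in range(50)]
R, V_hat, W = gelman_rubin(pool_walkers(runs, 4, rng=1))
print(R, np.sqrt(V_hat), np.sqrt(W))
```

Evaluating these quantities on growing prefixes of the chains gives curves like those of Fig. 2.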

An alternative and rather general way to diagnose MCMC sampling is given by the autocorrelation of the chains, and in particular by the Integrated Autocorrelation Time (IAT). This quantity represents the average number of steps between two independent samples in the chain. For unimodal distributions, one can generally assume that after a few IATs the chain has forgotten its starting point and generates samples distributed according to the underlying target distribution. Multimodal distributions pose additional difficulties, which are however shared by most MCMC convergence diagnostics. We do not enter into such a discussion here, and refer the reader to the overview presented in Ref. [51]. An exact calculation of the IAT for long chains is computationally prohibitive, but there are several algorithms to construct estimators of this quantity. The emcee3 package comes with tools that implement some of these algorithms, which we have used to study our sampling [49, 50]. To obtain a reasonable estimate of the IAT \(\tau \), one needs enough samples; a reasonable empirical requirement, which also works well in our case, is at least \(50 \tau \) samples [49, 50]. An illustration of this, for the parameter \(\mu \), is given in the left panel of Fig. 3, where we show, for a sampler with \(10^{3}\) chains and \(10^{6}\) steps, the IAT estimated after different numbers of steps with two different algorithms, “G&W 2010” and “DFM 2017” (see Refs. [49, 50] for details). The plot shows that the estimate becomes flat, and therefore converges to the correct value of the IAT, roughly when the estimate curves cross the empirical value of \(50\tau \) (this is an order-of-magnitude estimate and, obviously, the larger the number of steps, the better the estimate of \(\tau \)).
The best estimate that we get for this sampling for the parameter \(\mu \) is obtained with \(10^{6}\) steps using the “DFM 2017” method and gives \({\overline{\tau }}\approx 1366\), confirming the order of magnitude estimate extracted empirically from Fig. 1. In the right panel of Fig. 3 we show the resulting one-dimensional (1D) marginal posterior distribution of the parameter \(\mu \) obtained from the corresponding run. Finally, we have checked that the analogues of Figs. 1 and 3 are quantitatively similar for all other parameters.
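The two IAT estimators can be sketched following the approach described by the emcee3 authors [49, 50]: \(\tau (M)=1+2\sum _{k=1}^{M}\rho _{k}\) is computed from the normalised autocorrelation function \(\rho _{k}\) and truncated at the smallest window \(M\ge c\,\tau (M)\), with \(c=5\). “DFM 2017” averages \(\rho _{k}\) over walkers, while “G&W 2010” uses the autocorrelation of the walker-averaged chain. This is a sketch written for illustration, not the emcee3 implementation, and the function names are hypothetical. It is checked on AR(1) chains \(x_{t}=\phi x_{t-1}+\epsilon _{t}\), whose exact IAT is \((1+\phi )/(1-\phi )=19\) for \(\phi =0.9\).

```python
import numpy as np


def autocorr_1d(x):
    """Normalised autocorrelation function of a 1D series, computed with FFTs."""
    x = np.asarray(x, dtype=float)
    n = len(x)
    f = np.fft.rfft(x - x.mean(), n=2 * n)  # zero padding avoids circular wrap-around
    acf = np.fft.irfft(f * np.conjugate(f), n=2 * n)[:n]
    return acf / acf[0]


def auto_window(taus, c=5.0):
    """Smallest window M with M >= c * tau(M) (automatic windowing); last index if none."""
    too_small = np.arange(len(taus)) < c * taus
    return int(np.argmin(too_small)) if not np.all(too_small) else len(taus) - 1


def iat_dfm2017(chains, c=5.0):
    """chains: (n_walkers, n_steps). Average the ACF over walkers, then apply the window."""
    rho = np.mean([autocorr_1d(w) for w in chains], axis=0)
    taus = 2.0 * np.cumsum(rho) - 1.0  # tau(M) = 1 + 2 * sum_{k=1}^{M} rho_k
    return taus[auto_window(taus, c)]


def iat_gw2010(chains, c=5.0):
    """ACF of the walker-averaged chain, then the same windowing."""
    rho = autocorr_1d(np.mean(chains, axis=0))
    taus = 2.0 * np.cumsum(rho) - 1.0
    return taus[auto_window(taus, c)]


# Usage on AR(1) chains, whose exact IAT is (1 + phi) / (1 - phi).
rng = np.random.default_rng(0)
phi, n_walkers, n_steps = 0.9, 20, 20_000
x = np.empty((n_walkers, n_steps))
x[:, 0] = rng.normal(scale=1.0 / np.sqrt(1.0 - phi**2), size=n_walkers)  # stationary start
noise = rng.normal(size=(n_walkers, n_steps))
for t in range(1, n_steps):
    x[:, t] = phi * x[:, t - 1] + noise[:, t]
tau = iat_dfm2017(x)
print(tau, iat_gw2010(x), "reliable:", n_steps > 50 * tau)
```

The last printed flag implements the empirical “at least \(50\tau \) samples” requirement discussed above.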

As mentioned above, the IAT gives an estimate of the number of steps between independent samples (it roughly corresponds to the period, measured in number of steps, of the oscillation of the chain over the whole range of the parameter). Therefore, in order to obtain a truly unbiased set of independent samples, one has to “thin” the chain with a step size of roughly \({\overline{\tau }}\). This greatly decreases the statistics available from the MCMC run. Conceptually, there is nothing wrong with having correlated samples, provided they are distributed according to the target distribution. However, even though keeping them would increase the available statistics, it would generally affect the estimate of the uncertainties in the Bayesian inference [52, 53]. We defer a careful study of the issue of thinning to a forthcoming publication [33], and here we limit ourselves to describing the procedure we followed to obtain a sufficiently rich sample.

We have run emcee3 11 times, each time with \(10^{3}\) walkers and \(10^{6}+5\times 10^{3}\) steps. From each run we have discarded a pre-run (burn-in) of \(5\times 10^{3}\) steps, which is a few times \({\overline{\tau }}\), and thinned the chain with a step size of \(10^{3}\), i.e. roughly \({\overline{\tau }}\).Footnote 9 Thinning has been performed by taking a sample from each walker at the same step every \(10^{3}\) steps. Each run then delivered \(10^{6}\) roughly independent samples (\(10^{3}\) retained steps for each of the \(10^{3}\) walkers). With parallelisation, the sampler generates and stores about 22 steps per second.Footnote 10 The final sample obtained after all runs consists of \(1.1\times 10^{7}\) samples. We stored \(10^{6}\) of them as the test set to evaluate our DNN models, while the remaining \(10^{7}\) are used to randomly draw the different training and validation sets used in the following.
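The burn-in removal, the thinning, and the separation of a test set can be sketched as follows (hypothetical helper names). As described above, thinning keeps the state of all walkers at the same step every `thin` steps; with \(10^{6}+5\times 10^{3}\) steps, `burn_in=5000` and `thin=1000`, this keeps \(10^{3}\) steps per walker, i.e. \(10^{6}\) samples for \(10^{3}\) walkers.

```python
import numpy as np


def burn_and_thin(chain, burn_in, thin):
    """chain: (n_steps, n_walkers, ndim) array from an ensemble sampler.

    Drops the first burn_in steps, keeps all walkers at the same step every `thin` steps,
    and flattens the result to (n_samples, ndim).
    """
    kept = chain[burn_in::thin]
    return kept.reshape(-1, chain.shape[-1])


def split_off_test(samples, n_test, rng=None):
    """Shuffle and split off n_test samples as a test set; returns (train_pool, test)."""
    rng = np.random.default_rng(rng)
    perm = rng.permutation(len(samples))
    return samples[perm[n_test:]], samples[perm[:n_test]]


# Usage with a small hypothetical chain: 1050 steps, 10 walkers, 2 parameters.
chain = np.random.default_rng(0).normal(size=(1050, 10, 2))
samples = burn_and_thin(chain, burn_in=50, thin=10)
train_pool, test = split_off_test(samples, n_test=100, rng=1)
print(samples.shape, train_pool.shape, test.shape)
```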

- 2.

Biased sample \(S_{2}\)

The second sampling has been used to enrich the training and test sets with points corresponding to large values of the LF, i.e. points close to the maximum for each fixed value of \(\mu \).

In this case we initialised 200 walkers at maxima of the LF profiled over the nuisance parameters, computed for random values of \(\mu \) drawn from a uniform probability distribution in the interval \([-1,1]\).Footnote 11 Moreover, the proposals have been generated using a Gaussian random move of a single parameter at a time, with variance \(5\times 10^{-4}\) (small moves). In this way, the sampler starts by exploring the region of parameters around the profiled maxima, and then slowly moves towards the tails. Once the chains have moved far enough from the profiled maxima, they no longer explore that region. Therefore, in this case we neither discard a pre-run nor check convergence, which implies that this sampling has a strong bias (by construction, since we force the sampler to explore only a particular region).
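The local exploration used for \(S_{2}\) can be sketched as a random-walk Metropolis update of one randomly chosen parameter per walker, with Gaussian steps of variance \(5\times 10^{-4}\). This mirrors the behaviour described above but is a hypothetical stand-alone implementation, not emcee3's GaussianMove; as in the text, no burn-in is discarded, and the chains are intentionally biased towards their starting points.

```python
import numpy as np


def single_coordinate_gaussian_moves(log_prob, p0, n_steps, variance=5e-4, rng=None):
    """Metropolis sampler in which each walker moves one randomly chosen parameter per step.

    log_prob: vectorised function mapping an (n, ndim) array to n log-densities.
    Returns the chain (n_steps, n_walkers, ndim), the log-densities (n_steps, n_walkers)
    and the per-walker acceptance fractions. No burn-in is discarded.
    """
    rng = np.random.default_rng(rng)
    pos = np.array(p0, dtype=float)
    n_walkers, ndim = pos.shape
    lp = log_prob(pos)
    rows = np.arange(n_walkers)
    chain = np.empty((n_steps, n_walkers, ndim))
    log_probs = np.empty((n_steps, n_walkers))
    n_accepted = np.zeros(n_walkers)
    for step in range(n_steps):
        proposal = pos.copy()
        coords = rng.integers(ndim, size=n_walkers)  # one parameter per walker
        proposal[rows, coords] += rng.normal(scale=np.sqrt(variance), size=n_walkers)
        lp_new = log_prob(proposal)
        # Symmetric proposal: Metropolis acceptance probability min(1, p(Y) / p(X)).
        accept = np.log(rng.random(n_walkers)) < lp_new - lp
        pos[accept] = proposal[accept]
        lp[accept] = lp_new[accept]
        n_accepted += accept
        chain[step], log_probs[step] = pos, lp
    return chain, log_probs, n_accepted / n_steps


# Usage: walkers started at the mode of a toy 3D standard normal stay close to it.
log_gauss = lambda x: -0.5 * np.sum(x**2, axis=1)
chain, log_probs, acc = single_coordinate_gaussian_moves(log_gauss, np.zeros((20, 3)), 200, rng=0)
print(acc.mean(), np.abs(chain[-1]).max())
```

Storing `log_probs` alongside the chain gives the LF values needed later to select and weight points.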

In Fig. 4 we show the evolution of the chains for the parameter \(\mu \) (left panel) together with the corresponding values of \(-\log \mathcal {L}\) (right panel) for an illustrative (random) set of 100 chains. Comparing Fig. 4 with Fig. 1, we see that the moves of each chain are now much smaller, and that the sampler generates many points all around the profiled maxima at which the chains are initialised.

In order to ensure a sufficiently rich sampling close to the profiled maxima of the LF, we have made \(10^{5}\) iterations for each walker. Since the moves are much smaller than in the previous case (and only one parameter is updated at a time), the acceptance fraction is very large in this case, \(\varepsilon \approx 1\). We therefore obtained a sampling of \(1.1\times 10^{7}\) points by randomly picking points among the \(10^{5}\cdot 200\cdot \varepsilon \) samples. As for \(S_{1}\), two samples of \(10^{6}\) and \(10^{7}\) points have been stored separately: the first serves to build the test set, while the second is used to construct the training and validation sets. As mentioned before, this is a biased sample, and therefore it should only be used to enrich the training sample in order to properly learn the LF close to the maximum (and to check the results of the frequentist analysis); it cannot be used to make any posterior inference. Thanks to the high efficiency, this sample took less than one hour to generate.

- 3.

Mixed sample \(S_{3}\)

The mixed sample \(S_{3}\) is built from \(S_{1}\) and \(S_{2}\) in order to properly populate both the large probability mass region and the large log-likelihood region. Moreover, we do not want a strong discontinuity for intermediate values of the LF, which could become relevant, for instance, when combining with another analysis that prefers slightly different values of the parameters. For this reason, we have ensured that intermediate values of the LF are also represented, albeit with a smaller effective weight, and that the density of examples does not vary by more than a factor of 100 over the whole region \(-\log \mathcal {L}\in [285,350]\). Finally, to ensure sufficient statistics close to the maxima, we have further enriched the sample above \(\log \mathcal {L}\approx -290\) (covering the region \(\Delta \log \mathcal {L}\lesssim 5\)).

\(S_{3}\) has been obtained by taking all samples of \(S_{2}\) with \(\log \mathcal {L}> -290\) (around \(10\%\) of all samples in \(S_{2}\)), randomly selected samples from \(S_{1}\) amounting to \(70\%\) of the total, and the remaining fraction, around \(20\%\), from samples of \(S_{2}\) with \(\log \mathcal {L}< -290\). With this procedure we obtained a total of \(10^{7}\) (\(10^{6}\)) training (test) samples. We have checked that the results do not depend strongly on the assumptions made to build \(S_{3}\), provided enough examples are present in all the relevant regions of the training sample.
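The composition of \(S_{3}\) can be sketched as follows, given arrays of samples and log-likelihood values for \(S_{1}\) and \(S_{2}\). The function name, the default fractions (taken from the text) and the handling of rounding are illustrative assumptions, not the authors' code; the toy inputs in the usage lines are hypothetical.

```python
import numpy as np


def build_mixed_sample(x1, logl1, x2, logl2, n_total, threshold=-290.0, frac_s1=0.7, rng=None):
    """Mix an unbiased sample (x1, logl1) and a biased one (x2, logl2) into n_total points.

    - all points of the biased sample with log L > threshold are kept;
    - a random fraction frac_s1 of n_total is drawn from the unbiased sample;
    - the remainder is drawn from the biased sample with log L <= threshold.
    """
    rng = np.random.default_rng(rng)
    high = np.flatnonzero(logl2 > threshold)
    low = np.flatnonzero(logl2 <= threshold)
    n_s1 = int(round(frac_s1 * n_total))
    n_low = n_total - n_s1 - len(high)
    if n_low < 0 or n_low > len(low) or n_s1 > len(x1):
        raise ValueError("not enough samples for the requested composition")
    i1 = rng.choice(len(x1), size=n_s1, replace=False)
    i_low = rng.choice(low, size=n_low, replace=False)
    x = np.concatenate([x2[high], x1[i1], x2[i_low]])
    logl = np.concatenate([logl2[high], logl1[i1], logl2[i_low]])
    perm = rng.permutation(len(x))  # shuffle so that the three components are mixed
    return x[perm], logl[perm]


# Usage with hypothetical toy inputs (about 11% of the biased sample lies above the threshold).
rng = np.random.default_rng(0)
x1, logl1 = rng.normal(size=(5000, 2)), rng.uniform(-350, -285, 5000)
x2, logl2 = rng.normal(size=(5000, 2)), rng.uniform(-330, -285, 5000)
x3, logl3 = build_mixed_sample(x1, logl1, x2, logl2, 5000, rng=1)
print(x3.shape, np.mean(logl3 > -290))
```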

Fig. 2: Gelman, Rubin and Brooks \(\hat{R}_{c}\), \(\sqrt{\hat{V}}\), and \(\sqrt{W}\) parameters. Upper panels: computed from an ensemble of 200 walkers obtained by joining together 4 chains randomly extracted from each of 50 samplers with 200 walkers each. Lower panels: same plots obtained using 200 walkers from a single sampler. The different lines in the plots correspond to the 95 different parameters of the LF.

Fig. 3: Estimate of the autocorrelation time \(\tau _{\mu }\) (obtained using both the original method proposed in Ref. [45] and the alternative one discussed by the emcee3 authors [49, 50]) as a function of the number of samples (left) and normalised histogram (right) for the parameter \(\mu \). The region to the right of the line \(\tau =S/50\) in the left plot is where the considered \(\tau _{\mu }\) estimates are expected to become reliable.

Fig. 4: Evolution of the chains in an emcee3 sampling of the LF in Eq. (11) with 200 walkers and \(10^{5}\) steps using the GaussianMove algorithm, which updates one parameter at a time, with variance \(5\times 10^{-4}\). The plots show the explored values of the parameter \(\mu \) (left) and of minus the log-likelihood \(-\log \mathcal {L}\) (right) versus the number of steps for a random subset of 100 of the 200 chains. For visualisation purposes, values in the plots are computed only for numbers of steps included in the set \(\{a\times 10^{b}\}\) with \(a\in [1,9]\) and \(b\in [0,6]\).

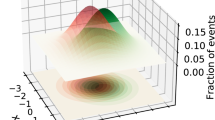

The distributions of the LF values in the three samples are shown in Fig. 5 (for the \(10^{7}\) points in the training/validation set).

We have used the three samples as follows: examples drawn from \(S_{3}\) were used to train the full DNNLikelihood, while results have been checked against \(S_{1}\) for Bayesian posterior estimates and against \(S_{2}\) (together with results obtained from a numerical maximisation of the analytical LF) for frequentist inference. Moreover, we also present a “Bayesian only” version of the DNNLikelihood, trained using only points from \(S_{1}\).

3.2 Bayesian inference

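In the Bayesian approach one is interested in marginal distributions, which are used to compute marginal posterior probabilities and credibility intervals. For instance, in the case at hand, one may be interested in two-dimensional (2D) marginal probability distributions in the parameter space \((\mu ,\varvec{\delta })\), such as

$$\begin{aligned} p(\mu ,\delta _{i})\propto \pi (\mu )\int \prod _{j\ne i}d\delta _{j}\;\mathcal {L}(\mu ,\varvec{\delta })\,, \end{aligned}$$(14)

normalised to unit integral, where \(\pi (\mu )\) denotes the prior on \(\mu \),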

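or in 1D HPDI corresponding to probabilities \(1-\alpha \), such as

$$\begin{aligned} 1-\alpha =\int _{p(\mu )\ge p_{\alpha }}d\mu \;p(\mu )\,,\qquad p(\mu )\propto \pi (\mu )\int d^{94}\delta \;\mathcal {L}(\mu ,\varvec{\delta })\,, \end{aligned}$$(15)

where \(p(\mu )\) is normalised to unit integral, \(\pi (\mu )\) is the prior on \(\mu \), and the HPDI is the region \(\{\mu :\,p(\mu )\ge p_{\alpha }\}\), with the density threshold \(p_{\alpha }\) fixed by the required probability.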

All these integrals can be discretised and computed by simply summing over quantities evaluated on a proper unbiased sampling of the LF.

Fig. 6: 1D and 2D posterior marginal probability distributions for a subset of parameters from the unbiased sample \(S_{1}\). This gives a graphical representation of the sampling obtained through MCMC. The green (darker) and red (lighter) points and curves correspond to the training set (\(10^{7}\) points) and test set (\(10^{6}\) points) of \(S_{1}\), respectively. Histograms are made with 50 bins and normalised to unit integral. The dotted, dot-dashed, and dashed lines represent the \(68.27\%,95.45\%,99.73\%\) 1D and 2D HPDI. For graphical purposes, only the scattered points outside the outermost contour are shown. The difference between the green (darker) and red (lighter) lines gives an idea of the uncertainty on the HPDI due to finite sampling. The \(68.27\%\) HPDIs of the parameters in the two samples are reported above the 1D plots.

This can be efficiently done with MCMC techniques, such as the one described in Sect. 3.1. For instance, using the sample \(S_{1}\) we can directly compute HPDIs for the parameters. Figure 6 shows the 1D and 2D posterior marginal probability distributions of the subset of parameters \((\mu , \delta _{50},\delta _{91},\delta _{92},\delta _{93},\delta _{94})\) obtained with the training set (\(10^{7}\) points, green (darker)) and test set (\(10^{6}\) points, red (lighter)) of \(S_{1}\). Figure 6 also shows the 1D and 2D \(68.27\%,95.45\%,99.73\%\) HPDIs. All the HPDIs, including those shown in Fig. 6, have been computed by binning the distribution with 60 bins, estimating the interval, and increasing the number of bins by 60 until the interval splits due to statistical fluctuations.
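The binned HPDI procedure described above can be sketched in one dimension as follows: bins are ranked by content, the fullest ones are accumulated until the requested probability is reached, contiguous selected bins are merged into intervals, and the number of bins is increased in steps of 60 as long as the interval does not split. The function names, the cap on the number of bins and the precise stopping rule (returning the last unsplit result) are our assumptions.

```python
import numpy as np


def hpdi_1d(samples, prob, n_bins=60):
    """Highest posterior density interval(s) of a 1D sample from a histogram with n_bins bins."""
    counts, edges = np.histogram(samples, bins=n_bins)
    order = np.argsort(counts)[::-1]  # bins by decreasing content
    cum = np.cumsum(counts[order]) / counts.sum()
    n_keep = min(np.searchsorted(cum, prob) + 1, n_bins)  # fewest bins reaching prob
    keep = np.zeros(n_bins, dtype=bool)
    keep[order[:n_keep]] = True
    # Merge contiguous selected bins into intervals.
    intervals, start = [], None
    for i, selected in enumerate(keep):
        if selected and start is None:
            start = edges[i]
        elif not selected and start is not None:
            intervals.append((start, edges[i]))
            start = None
    if start is not None:
        intervals.append((start, edges[-1]))
    return intervals


def hpdi_refined(samples, prob, step=60, max_bins=3000):
    """Increase the number of bins by `step` until the HPDI splits; return the last unsplit result."""
    best = hpdi_1d(samples, prob, step)
    for n_bins in range(2 * step, max_bins + 1, step):
        candidate = hpdi_1d(samples, prob, n_bins)
        if len(candidate) > len(best):
            break
        best = candidate
    return best


# Usage on a standard normal sample: the 68.27% HPDI should be close to [-1, 1].
s = np.random.default_rng(0).normal(size=200_000)
print(hpdi_1d(s, 0.6827), hpdi_refined(s, 0.6827))
```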

The results for \(\mu \) with the assumptions \(\mu >-1\) and \(\mu >0\), estimated from the training set, which has the largest statistics, are given in Table 1.

Figure 6 shows that the 1D and 2D marginal probability distributions are extremely accurate up to the \(99.73\%\) HPDI, with only tiny differences in the highest probability interval for some parameters, due to the lower statistics of the test set. Notice that, by construction, there are no points in the samples with \(\mu <-1\). However, the credibility contours in the marginal probability distributions, which are constructed by interpolation, may show intervals that extend slightly below \(\mu =-1\). This is just an artifact of the interpolation and has no physical implications.

Considering that the sample sizes used to train the DNN range from \(10^{5}\) to \(5\times 10^{5}\), we do not consider probability intervals higher than \(99.73\%\). Obviously, if one is interested in covering higher HPDIs, larger training sample sizes need to be considered (for instance, to cover a Gaussian \(5\sigma \) interval, that corresponds to a probability \(1{-}5.7\times 10^{-7}\), even only on the 1D marginal distributions, a sample with \(\gg 10^{7}\) points would be necessary). We will not consider this case in the present paper.
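The probabilities quoted in this section are those of a Gaussian within \(\pm k\sigma \), i.e. \(1-\mathrm {erfc}(k/\sqrt{2})\). A quick check with Python's standard library also shows why \(5\sigma \) intervals require samples much larger than \(10^{7}\) points: for a Gaussian 1D marginal, a sample of \(10^{7}\) points is expected to contain only about 6 points beyond \(5\sigma \), far too few to locate the boundary of the interval.

```python
import math

# Probability inside +-k sigma for a Gaussian: 1 - erfc(k / sqrt(2)); tail outside: erfc(k / sqrt(2)).
for k in (1, 2, 3, 5):
    tail = math.erfc(k / math.sqrt(2))
    print(f"{k} sigma: inside {1 - tail:.6f}, outside {tail:.3e}")

# Expected number of points beyond 5 sigma in a sample of 10^7 points drawn from the same Gaussian.
n_outside = 1e7 * math.erfc(5 / math.sqrt(2))
print(f"expected points beyond 5 sigma in 1e7 samples: {n_outside:.1f}")
```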

3.3 Frequentist inference

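In frequentist inference one usually constructs a test statistic \(\lambda (\mu ,\varvec{\delta })\) based on the LF ratio

$$\begin{aligned} \lambda (\mu ,\varvec{\delta })=\frac{\mathcal {L}(\mu ,\varvec{\delta })}{\mathcal {L}(\hat{\mu },\hat{\varvec{\delta }})}\,, \end{aligned}$$(16)

where \(\hat{\mu }\) and \(\hat{\varvec{\delta }}\) are the values of the parameters that maximise the LF.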

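Since one would like the test statistic to be independent of the nuisance parameters, it is common to use instead the profiled likelihood, obtained by replacing the LF at each value of \(\mu \) with its maximum value over the nuisance parameters for that value of \(\mu \). One can then construct a test statistic \(t_{\mu }\) based on the profiled (log-)likelihood ratio, given by

$$\begin{aligned} t_{\mu }=-2\log \frac{\mathcal {L}_{\text {prof}}(\mu )}{\mathcal {L}(\hat{\mu },\hat{\varvec{\delta }})}=-2\log \frac{\mathcal {L}\left( \mu ,\hat{\hat{\varvec{\delta }}}(\mu )\right) }{\mathcal {L}(\hat{\mu },\hat{\varvec{\delta }})}\,, \end{aligned}$$(17)

where \(\hat{\hat{\varvec{\delta }}}(\mu )\) are the values of the nuisance parameters that maximise the LF at fixed \(\mu \), and \(\hat{\mu }\), \(\hat{\varvec{\delta }}\) are the values that maximise it globally.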

Whenever suitable general conditions are satisfied, and in the limit of a large data sample, Wilks’ theorem ensures that the distribution of this test statistic approaches a \(\chi ^{2}\) distribution that is independent of the nuisance parameters \(\varvec{\delta }\) and has a number of degrees of freedom equal to \(\dim \mathcal {L}-\dim \mathcal {L}_{\text {prof}}\) [54]. In our case \(t_{\mu }\) can be computed by numerical maximisation of the analytic LF, but it can also be computed from \(S_{2}\) (and from \(S_{3}\), which is identical in the large likelihood region), which was constructed with the purpose of describing the LF as precisely as possible close to the profiled maxima. In order to compute \(t_{\mu }\) from the sampling, we consider small bins around the given \(\mu \) value and take the point in the bin with the maximum LF value. This procedure gives an estimate of \(t_{\mu }\) that depends on the statistics in the bins and on the bin size. In Fig. 7 we show the result for \(t_{\mu }\) obtained with both approaches, for different sample sizes drawn from \(S_{2}\).
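Computing \(t_{\mu }\) by numerical maximisation can be sketched with `scipy.optimize.minimize`: the log-likelihood is maximised over \(\varvec{\delta }\) at fixed \(\mu \) (with the nuisance parameters bounded to \([-5,5]\)) and over all parameters for the global maximum. The function names are hypothetical, and the two-parameter toy log-likelihood in the usage lines is an assumption chosen because its profile is known analytically, \(t_{\mu }=(\mu -0.3)^{2}/0.26\), which makes the sketch easy to check.

```python
import numpy as np
from scipy.optimize import minimize


def profile_max(log_lik, mu, delta0, bound=5.0):
    """Maximum of log_lik(mu, delta) over delta at fixed mu, with |delta_i| <= bound."""
    res = minimize(lambda d: -log_lik(mu, d), np.asarray(delta0, dtype=float),
                   method="L-BFGS-B", bounds=[(-bound, bound)] * len(delta0))
    return -res.fun


def global_max(log_lik, x0, mu_bounds=(-1.0, 5.0), bound=5.0):
    """Maximum of log_lik over (mu, delta); x0 = [mu, *delta] is the starting point."""
    bounds = [mu_bounds] + [(-bound, bound)] * (len(x0) - 1)
    res = minimize(lambda x: -log_lik(x[0], x[1:]), np.asarray(x0, dtype=float),
                   method="L-BFGS-B", bounds=bounds)
    return -res.fun


def t_mu(log_lik, mu_values, delta0, x0):
    """t_mu = -2 [max_delta log L(mu, delta) - max_{mu, delta} log L] on a grid of mu values."""
    log_l_max = global_max(log_lik, x0)
    return np.array([-2.0 * (profile_max(log_lik, m, delta0) - log_l_max) for m in mu_values])


# Hypothetical toy LF with one nuisance parameter correlated with mu.
toy = lambda mu, d: -0.5 * ((mu - 0.3 - 0.5 * d[0]) / 0.1) ** 2 - 0.5 * d[0] ** 2
print(t_mu(toy, [0.3, 0.8], delta0=[0.0], x0=[0.0, 0.0]))
```

For the 95-dimensional LF of Eq. (11) the same structure applies, with `delta0` of length 94; starting points and optimiser settings then matter more than in this toy.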

Fig. 7: Comparison of the \(t_{\mu }\) test statistic computed using numerical maximisation of Eq. (17) and using samples of different sizes from \(S_{2}\). For the latter, we show the results obtained by searching for the maximum in bins in \(\mu \) of size 0.01, 0.02, 0.05, and 0.1 around each value of \(\mu \) (between 0 and 1 in steps of 0.1).

The three samples from \(S_{2}\) used for the maximisation, with sizes \(10^{5}\), \(10^{6}\), and \(10^{7}\) (the full training set of \(S_{2}\)), contain around \(5\times 10^{4}\), \(5\times 10^{5}\), and \(5\times 10^{6}\) points respectively in the region \(\mu \in [0,1]\), which results in increasing statistics in each bin and a more precise and stable prediction for \(t_{\mu }\). As can be seen, \(10^{5}\) points, about half of which lie in the range \(\mu \in [0,1]\), are already sufficient, with a small bin size of 0.02, to reproduce the \(t_{\mu }\) curve with great accuracy. As expected, larger bin sizes result in overestimated profiled maxima, leading to an underestimate of \(t_{\mu }\).
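The sample-based estimate of \(t_{\mu }\) (largest \(\log \mathcal {L}\) in a small \(\mu \) bin around each value) can be sketched as follows; the function name and the use of the overall sample maximum as the global maximum are assumptions. The usage lines, with a hypothetical quadratic profiled log-likelihood, illustrate why larger bins underestimate \(t_{\mu }\): the bin maximum is reached at the bin edge closest to \(\hat{\mu }\), so the profiled maximum is overestimated.

```python
import numpy as np


def t_mu_from_samples(mu_samples, logl_samples, mu_values, bin_size):
    """Estimate t_mu from a sampling: in each bin |mu - mu0| < bin_size / 2 the largest log L
    is taken as the profiled maximum; the global maximum is the largest log L overall."""
    mu_samples = np.asarray(mu_samples)
    logl_samples = np.asarray(logl_samples)
    logl_max = logl_samples.max()
    t = []
    for mu0 in mu_values:
        in_bin = np.abs(mu_samples - mu0) < bin_size / 2
        t.append(-2.0 * (logl_samples[in_bin].max() - logl_max) if in_bin.any() else np.nan)
    return np.array(t)


# Hypothetical profiled log L, quadratic in mu with maximum at mu = 0.2 (exact t at mu = 0.6 is 4).
mu_s = np.linspace(0.0, 1.0, 10001)
logl_s = -0.5 * ((mu_s - 0.2) / 0.2) ** 2
for b in (0.002, 0.02, 0.1):
    print(b, t_mu_from_samples(mu_s, logl_s, [0.6], b))
```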

Under the assumptions of Wilks’ theorem, \(t_{\mu }\) should be distributed as a \(\chi ^{2}_{1}\) (1 d.o.f.) distribution, from which we can determine CL upper limits. The \(68.27\%\) (\(95.45\%\)) CL upper limit (under Wilks’ hypotheses) is given by \(t_{\mu }= 1\) (4), corresponding to \(\mu < 0.37\) (0.74). These upper limits are compatible with the ones found in Ref. [9], and are considerably smaller than the corresponding upper limits of the HPDI obtained with the Bayesian analysis in Sect. 3.2 (see Table 1). Even though, as is well known, frequentist and Bayesian inference answer different questions, and therefore do not have to agree with each other, we already know from the analysis of Ref. [9] that deviations from Gaussianity are not very large for the analysis under consideration, so that, with a flat prior on \(\mu \) such as the one we consider, one could expect similar results from the two approaches. This may suggest that the result obtained using the asymptotic approximation for \(t_{\mu }\) underestimates the upper limit (undercoverage). In the search under consideration, this may be due to the large number of bins in which the observed number of events is below 5 or even 3 (see Figure 2 of Ref. [9]). Indeed, the true distribution of \(t_{\mu }\) is expected to depart from a \(\chi ^{2}_{1}\) distribution when the hypotheses of Wilks’ theorem are violated. The study of the distribution of \(t_{\mu }\) is related to the problem of coverage of frequentist confidence intervals, and requires performing pseudo-experiments and making further assumptions on the treatment of nuisance parameters. We present results on the distribution of \(t_{\mu }\) obtained through pseudo-experiments in “Appendix B”. The important conclusion is that, when using the distribution of \(t_{\mu }\) generated with pseudo-experiments, CL upper limits become more conservative by up to about \(70\%\), depending on the approach used to treat nuisance parameters.
This shows that, in this case, the upper limits computed through asymptotic statistics undercover the actual upper bounds on \(\mu \).
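Under Wilks’ hypotheses, the thresholds \(t_{\mu }=1\) and 4 are the \(68.27\%\) and \(95.45\%\) quantiles of a \(\chi ^{2}_{1}\) distribution. A minimal sketch of extracting the corresponding upper limit from a tabulated \(t_{\mu }\) curve by linear interpolation is given below; it assumes that \(t_{\mu }\) increases monotonically above its minimum on the grid, and the function name and the quadratic curve in the usage lines are hypothetical.

```python
import numpy as np
from scipy.stats import chi2


def upper_limit(mu_grid, t_values, cl):
    """Smallest mu above the minimum of t_mu where t_mu crosses the chi2 (1 d.o.f.) quantile at cl."""
    threshold = chi2.ppf(cl, df=1)
    mu_grid, t_values = np.asarray(mu_grid), np.asarray(t_values)
    i_min = np.argmin(t_values)
    mu_r, t_r = mu_grid[i_min:], t_values[i_min:]
    above = np.flatnonzero(t_r >= threshold)
    if above.size == 0:
        return np.nan  # the curve never crosses the threshold on this grid
    j = above[0]
    if j == 0:
        return mu_r[0]
    # Linear interpolation between the last point below and the first point above threshold.
    return np.interp(threshold, t_r[j - 1:j + 1], mu_r[j - 1:j + 1])


# Usage with a hypothetical quadratic t_mu curve, minimal at mu = 0.2.
mu_grid = np.linspace(0.0, 1.0, 11)
t_grid = ((mu_grid - 0.2) / 0.2) ** 2
print(upper_limit(mu_grid, t_grid, 0.6827), upper_limit(mu_grid, t_grid, 0.9545))
```

With a \(t_{\mu }\) distribution obtained from pseudo-experiments, the same interpolation applies with the threshold replaced by the corresponding empirical quantile.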

4 The DNNLikelihood

The sampling of the full likelihood discussed above has been used to train a DNN regressor constructed from multiple fully connected layers, i.e. a multilayer perceptron (MLP). The regressor has been trained to predict values of the LF given a vector of inputs made of the physical and nuisance parameters. In order to introduce the main ingredients of our regression procedure and DNN training, we first show how models trained using only points from \(S_{1}\) give reliable and robust results in the case of the Bayesian approach. Then we discuss training with samples from \(S_{3}\) to allow for maximum likelihood based inference. Finally, once a satisfactory final model is obtained, we show again its performance for posterior Bayesian estimates.

4.1 Model architecture and optimisation

We used Keras [55] with the TensorFlow [56] backend, through their Python implementation, to train an MLP, and considered the following hyperparameters to be optimised, whose values define what we call a model or a DNNLikelihood.

Size of training sample

In order to assess the performance of the DNNLikelihood as a function of the training set size, we considered three different values: \(10^{5}\), \(2\times 10^{5}\) and \(5\times 10^{5}\). For each model training, the training set (together with a half-sized validation set) has been randomly drawn from \(S_{1}\), which ensures the absence of correlation between the models due to the training data: thanks to the large size of \(S_{1}\) (\(10^{7}\) samples), all the training sets can be considered roughly independent. In order to allow for a consistent comparison, all models trained with the same amount of training data have been tested on a sample drawn from the test set of \(S_{1}\), with half the size of the training set. In general, and in particular in our interpolation problem, increasing the size of the training set reduces the generalisation error and therefore allows one to obtain the desired performance on the test set.
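Drawing the datasets for one model training can be sketched with index sampling (hypothetical function name): a training set and a half-sized validation set, without overlap, from the \(10^{7}\)-point training/validation pool of \(S_{1}\), plus a half-sized test set from its separate test pool. Different models simply use different random draws; the expected overlap between two training sets is a fraction \(n_{\text {train}}/10^{7}\) of their points, i.e. at most \(5\%\) for \(n_{\text {train}}=5\times 10^{5}\).

```python
import numpy as np


def draw_datasets(n_train, train_pool_size, test_pool_size, rng=None):
    """Indices for a training set of n_train points and a half-sized validation set, drawn
    without overlap from the training/validation pool, plus a half-sized test set drawn
    from the separate test pool."""
    rng = np.random.default_rng(rng)
    n_half = n_train // 2
    idx = rng.choice(train_pool_size, size=n_train + n_half, replace=False)
    test_idx = rng.choice(test_pool_size, size=n_half, replace=False)
    return idx[:n_train], idx[n_train:], test_idx


# Usage with the pool sizes of S1 (10^7 training/validation points, 10^6 test points).
train_idx, val_idx, test_idx = draw_datasets(100_000, 10**7, 10**6, rng=0)
print(len(train_idx), len(val_idx), len(test_idx))
```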

Loss function

In Sect. 2 we have argued that both MAE and MSE are suitable loss functions to learn the log-likelihood function. In our optimisation procedure we tried both, always finding (slightly) better results for the MSE. We therefore choose the MSE as our loss function in all results presented here.

Number of hidden layers

From a preliminary optimisation we concluded that networks with more than one Hidden Layer (HL) (deep networks) always perform better than networks with a single HL (shallow networks). However, in the case under consideration, deeper networks do not seem to perform much better than 2HL networks, even though they are typically much slower to train and to make predictions. Therefore, after this preliminary assessment, we focused on 2HL architectures.

Activation function on hidden layers

We compared the ReLU [57], ELU [58], and SELU [59] activation functions, and the latter performed best in our problem. In order to correctly implement the SELU activation in Keras, we initialised all weights using the Keras “lecun_normal” initialiser [59, 60].

Number of nodes on hidden layers

We considered architectures with the same number of nodes on the two hidden layers. The number of trainable parameters (weights and biases) in the case of n fully connected HLs with the same number of nodes \( d_{\text {HL}}\), followed by a single output node, is given by

$$\begin{aligned} d_{\text {HL}}\left( d_{\text {input}}+(n-1)d_{\text {HL}}+(n+1)\right) +1\,, \end{aligned}$$(18)

where \(d_{\text {input}}\) is the dimension of the input layer, i.e. the number of independent variables, 95 in our case. DNNs trained with stochastic gradient methods tend to have small generalisation errors even when the number of parameters is larger than the training sample size [61]. Overfitting is not an issue in our interpolation problem [62]. In our case we considered HLs with no fewer than 500 nodes, which should ensure sufficient bandwidth throughout the network and sufficient model capacity. In particular, we compared results obtained with 500, 1000, 2000, and 5000 nodes on each HL, corresponding to 299001, 1098001, 4196001, and 25490001 trainable parameters.
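Eq. (18) can be cross-checked against a layer-by-layer count, in which each dense layer contributes \((\text {fan-in}+1)\times \text {fan-out}\) parameters (weights plus biases). The sketch below (hypothetical function names) reproduces the four numbers quoted above for \(d_{\text {input}}=95\) and two HLs.

```python
def n_trainable(d_input, d_hl, n_hl):
    """Trainable parameters of an MLP with n_hl hidden layers of d_hl nodes and one output node (Eq. (18))."""
    return d_hl * (d_input + (n_hl - 1) * d_hl + (n_hl + 1)) + 1


def n_trainable_layerwise(d_input, d_hl, n_hl):
    """Same count layer by layer: (fan_in + 1) * fan_out for each dense layer (weights + biases)."""
    sizes = [d_input] + [d_hl] * n_hl + [1]
    return sum((fan_in + 1) * fan_out for fan_in, fan_out in zip(sizes[:-1], sizes[1:]))


for d in (500, 1000, 2000, 5000):
    print(d, n_trainable(95, d, 2), n_trainable_layerwise(95, d, 2))
```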

Batch size

When using a stochastic gradient optimisation technique, of which Adam is an example, the minibatch size is a hyperparameter. For the training to be stochastic, the batch size should be much smaller than the training set size, so that each minibatch can be considered roughly independent. Large batch sizes lead to more accurate weight updates and, due to the parallel capabilities of GPUs, to faster training. However, smaller batch sizes usually help regularise the training and avoid overfitting. After a preliminary optimisation, performed by varying the batch size from 256 to 4096, we concluded that the best performance was obtained by keeping the number of batches roughly fixed to 200 when changing the training set size. In particular, choosing batch sizes among powers of two, we have used 512, 1024 and 2048 for training set sizes of \(10^{5}\), \(2\times 10^{5}\) and \(5\times 10^{5}\), respectively. Notice that increasing the batch size when enlarging the training set also allowed us to keep the initial learning rate (LR) fixed [63].Footnote 12 Similar results could be obtained by keeping a fixed batch size of 512 and reducing the initial learning rate when enlarging the training set.
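The rule of keeping about 200 batches per epoch and choosing a power of two reproduces the batch sizes quoted above. In the sketch below (hypothetical function name), rounding \(\log _{2}(N_{\text {train}}/200)\) to the nearest integer is our assumption about how the power of two was picked.

```python
import math


def batch_size(n_train, n_batches=200):
    """Power of two closest, in log2, to n_train / n_batches."""
    return 2 ** round(math.log2(n_train / n_batches))


for n in (100_000, 200_000, 500_000):
    print(n, batch_size(n))
```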

Optimiser

We used the Adam optimiser with default parameters, and in particular with learning rate \(\epsilon =0.001\). We reduced the learning rate by a factor 0.2 after every 40 epochs without an improvement of the validation loss larger than an absolute amount (min_delta in Keras) \(1/N_{\text {points}}\), with \(N_{\text {points}}\) the training set size. Indeed, since the Keras min_delta parameter is absolute and not relative to the value of the loss function, we needed to reduce it when reaching smaller losses (better models). We found that \(1/N_{\text {points}}\) corresponded roughly to one to a few per mille of the best validation loss obtained for all the different training set sizes. This value turned out to give the best results with a reasonably low number of epochs (fast enough training). Finally, we performed early stopping [64, 65] using the same min_delta parameter and a patience of 50 epochs without improvement of the validation loss, restoring the weights corresponding to the epoch with the minimum validation loss. This ensured that training did not go on for too long without substantially improving the result. We also tested the recently proposed AdaBound optimiser [66] without seeing, in our case, large differences.
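The architecture and training settings listed above can be collected in a short Keras sketch. It is written against the current `tf.keras` API rather than the Keras version used for this work, and the function names are hypothetical; it builds two SELU hidden layers with “lecun_normal” initialisation, a linear output, MSE loss, Adam with learning rate \(10^{-3}\), and the learning-rate reduction and early-stopping callbacks with an absolute min_delta of \(1/N_{\text {points}}\).

```python
from tensorflow import keras


def build_dnn_likelihood(d_input=95, d_hl=500, n_hl=2, learning_rate=1e-3):
    """MLP regressor: n_hl SELU hidden layers (lecun_normal init) and one linear output node."""
    model = keras.Sequential([keras.Input(shape=(d_input,))])
    for _ in range(n_hl):
        model.add(keras.layers.Dense(d_hl, activation="selu", kernel_initializer="lecun_normal"))
    model.add(keras.layers.Dense(1))  # linear output: the (scaled) log-likelihood
    model.compile(optimizer=keras.optimizers.Adam(learning_rate=learning_rate), loss="mse")
    return model


def training_callbacks(n_points):
    """LR reduction (factor 0.2, patience 40) and early stopping (patience 50), min_delta = 1 / n_points."""
    min_delta = 1.0 / n_points
    return [
        keras.callbacks.ReduceLROnPlateau(monitor="val_loss", factor=0.2, patience=40,
                                          min_delta=min_delta),
        keras.callbacks.EarlyStopping(monitor="val_loss", min_delta=min_delta, patience=50,
                                      restore_best_weights=True),
    ]


model = build_dnn_likelihood(d_hl=500)
print(model.count_params())  # compare with Eq. (18) for d_HL = 500
```

Training would then call `model.fit` on the scaled targets with validation data, the batch size discussed above, and `callbacks=training_callbacks(len(x_train))`.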

Notice that the process of choosing and optimising a model depends on the LF under consideration (dimensions, number of modes, etc.) and this procedure should be repeated for different LFs. However, good initial points for the optimisation could be chosen using experience from previously constructed DNNLikelihoods.

As discussed in Sect. 2, there are several metrics that we can use to evaluate our model. Based on the results obtained by re-sampling the DNNLikelihood with emcee3, we observe a strong correlation between the quality of the re-sampled probability distribution (i.e. of the final Bayesian inference results) and the median p value of the K-S tests on the 1D marginal posterior distributions. We therefore present results focusing on this evaluation metric. When dealing with the Full DNNLikelihood trained with the biased sampling \(S_{3}\), we also take into account the mean relative error on the predicted \(t_{\mu }\) test statistic when choosing the best models.
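A minimal stdlib sketch of this ranking metric: for each coordinate, a two-sample K-S statistic between two samples is converted into a p value using the asymptotic Kolmogorov distribution (with a commonly used effective-sample-size correction), and the median is taken over coordinates. All function names are hypothetical; in practice `scipy.stats.ks_2samp` would be the usual choice, and it may compute exact rather than asymptotic p values for small samples.

```python
import math
import statistics


def ks_statistic(a, b):
    """Two-sample K-S statistic: maximum distance between empirical CDFs."""
    a, b = sorted(a), sorted(b)
    i = j = 0
    d = 0.0
    while i < len(a) and j < len(b):
        x = min(a[i], b[j])
        while i < len(a) and a[i] <= x:  # step both CDFs past ties at x
            i += 1
        while j < len(b) and b[j] <= x:
            j += 1
        d = max(d, abs(i / len(a) - j / len(b)))
    return d


def ks_pvalue(d, na, nb):
    """Asymptotic p value from the Kolmogorov distribution."""
    sq = math.sqrt(na * nb / (na + nb))
    lam = (sq + 0.12 + 0.11 / sq) * d
    if lam < 0.2:  # series converges slowly here and Q(lam) is ~1
        return 1.0
    q = 2.0 * sum((-1) ** (k - 1) * math.exp(-2.0 * k * k * lam * lam)
                  for k in range(1, 101))
    return min(max(q, 0.0), 1.0)


def median_ks_pvalue(points_a, points_b):
    """Median over coordinates of the 1D K-S p values between two samples."""
    pvalues = []
    for k in range(len(points_a[0])):
        xa = [p[k] for p in points_a]
        xb = [p[k] for p in points_b]
        pvalues.append(ks_pvalue(ks_statistic(xa, xb), len(xa), len(xb)))
    return statistics.median(pvalues)
```
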

4.2 The Bayesian DNNLikelihood

From a Bayesian perspective, the aim of the DNNLikelihood is to generate, through a DNN interpolation of the full LF, a sampling analogous to \(S_{1}\), from which Bayesian posterior density distributions as close as possible to those obtained using the true LF (i.e. the \(S_{1}\) sampling) can be produced. Moreover, regardless of how expensive the original LF is to evaluate, the DNNLikelihood is extremely fast to compute, allowing for very fast sampling.Footnote 13 The emcee3 MCMC package supports a vectorised log-probability function, which allowed us to exploit parallel GPU predictions and made sampling the DNNLikelihood roughly as fast as sampling the original analytic LF.
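Vectorisation pays off because an ensemble sampler based on the affine-invariant stretch move updates half of the walkers at a time, so all their proposals can be passed to the log-probability in a single batched call (in emcee this is enabled with the `vectorize=True` argument of `EnsembleSampler`). The stdlib-only sketch below re-implements the Goodman and Weare stretch move to make this explicit. It is an illustration under our own assumptions, not emcee's code; `log_prob_batch` is a hypothetical function, for instance a wrapper around a DNN predict call, that maps a list of points to a list of log-probabilities.

```python
import math
import random


def stretch_move_sampler(log_prob_batch, walkers, n_steps, a=2.0, seed=0):
    """Affine-invariant ensemble sampler (stretch move, two half-ensembles).

    log_prob_batch is called once per half-ensemble per step.
    """
    rng = random.Random(seed)
    n, ndim = len(walkers), len(walkers[0])
    pos = [list(w) for w in walkers]
    lp = list(log_prob_batch(pos))  # one batched call for the initial ensemble
    halves = (list(range(0, n // 2)), list(range(n // 2, n)))
    chain = []
    for _ in range(n_steps):
        for active, comp in (halves, halves[::-1]):
            proposals, zs = [], []
            for k in active:
                # z drawn from g(z) proportional to 1/sqrt(z) on [1/a, a]
                z = ((a - 1.0) * rng.random() + 1.0) ** 2 / a
                other = pos[rng.choice(comp)]
                proposals.append([o + z * (x - o) for x, o in zip(pos[k], other)])
                zs.append(z)
            new_lp = log_prob_batch(proposals)  # single vectorised evaluation
            for z, k, prop, new in zip(zs, active, proposals, new_lp):
                log_accept = (ndim - 1) * math.log(z) + new - lp[k]
                if math.log(1.0 - rng.random()) < log_accept:
                    pos[k], lp[k] = prop, new
        chain.append([list(p) for p in pos])
    return chain
```
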

We start by considering training on samples drawn from the unbiased \(S_{1}\). The independent variables all vary in a reasonably small interval around zero and do not need any preprocessing. However, the \(\log \mathcal {L}\) values in \(S_{1}\) span a range between around \(-380\) and \(-285\), which is both too wide and too far from zero for optimal training. For this reason we pre-processed the \(\log \mathcal {L}\) values by scaling them to zero mean and unit variance. When predicting values of \(\log \mathcal {L}\), we then applied the inverse transformation to the DNN output.
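The standardisation and its inverse amount to an affine map fitted on the training targets. A minimal sketch follows; the class name `Standardizer` is hypothetical, and it uses the population standard deviation, as scikit-learn's `StandardScaler` does.

```python
import statistics


class Standardizer:
    """Hypothetical helper: scale targets to zero mean and unit variance."""

    def fit(self, values):
        self.mean = statistics.fmean(values)
        self.std = statistics.pstdev(values)  # population std (ddof = 0)
        return self

    def transform(self, values):
        return [(v - self.mean) / self.std for v in values]

    def inverse_transform(self, values):
        # applied to the DNN output to recover log L
        return [v * self.std + self.mean for v in values]
```

The scaler is fitted on the training \(\log \mathcal {L}\) values only, and the same `mean` and `std` are reused at prediction time.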

We rank the models trained during our optimisation procedure by the median p value of the 1D K-S tests on all coordinates between the test set and the prediction performed on the validation set. The best models are those with the highest median p value. In Table 2 we show results for the best model we obtained for each training sample size. All metrics shown in the table are evaluated on \(\log \mathcal {L}\). Results have been obtained by training 5 identical models for each choice of architecture (two HLs with 500, 1000, 2000 or 5000 nodes each) and hyperparameters (batch size, learning rate, patience), and taking the best one. We call these three best models \(B_{1}{-}B_{3}\) (B stands for Bayesian). All three models have two HLs with \(5\times 10^{3}\) nodes each, and are therefore the largest we consider in terms of number of parameters. However, the gap with smaller models is in some cases extremely small, with some of the smaller models in an ensemble of 5 performing better than some of the larger ones. This suggests that results are not very sensitive to model size, which makes the DNNLikelihood fairly robust.
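To make "number of parameters" concrete: a fully connected network with input dimension \(d\), two HLs of \(N\) nodes and a single output has \((d+1)N + (N+1)N + (N+1)\) trainable weights and biases, which is how Keras counts parameters for Dense layers. In the sketch below, the function name and the input dimension \(d=95\) are purely illustrative assumptions.

```python
def dense_param_count(n_inputs, hidden=(5000, 5000), n_outputs=1):
    """Weights plus biases of a fully connected network (hypothetical helper)."""
    sizes = [n_inputs, *hidden, n_outputs]
    return sum((fan_in + 1) * fan_out
               for fan_in, fan_out in zip(sizes[:-1], sizes[1:]))


# Illustrative input dimension, assumed for this example only.
for nodes in (500, 1000, 2000, 5000):
    print(nodes, dense_param_count(95, (nodes, nodes)))
```

The count is dominated by the \(N^{2}\) term linking the two HLs, so a tenfold increase in width costs roughly a hundredfold increase in parameters.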

Figure 8 shows the learning curves obtained for the values of the hyperparameters shown in the legends. Early stopping is usually triggered after a few hundred epochs (ranging from around 200 to 500, with the best models around 200–300), with values of the validation loss (MSE) in the interval \(\approx [0.003, 0.01]\). Values of the validation ME, which, as explained in Sect. 2, corresponds to the K-L divergence for the LF, lie in the range \(\approx [1, 5] \times 10^{-3}\). Together with median p values of the 1D K-S tests in the range \(0.2{-}0.4\), these values indicate very accurate models. Training times are not prohibitive, ranging from less than one hour to a few hours for the models we considered on an Nvidia Tesla V100 GPU with 32GB of RAM. Prediction times, using the same batch sizes used during training, are in the ballpark of \(10{-}15\,\upmu \text {s}/\text {point}\), allowing for very fast sampling and inference using the DNNLikelihood. Finally, as shown in Table 2, all models generalise very well when going from the evaluation to the test set, with the generalization error decreasing with the sample size, as expected.
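The link between ME and the K-L divergence can be checked numerically under a simplifying assumption: if ME is the mean of \(\log P - \log Q\) (up to sign convention) over points drawn from the true, normalised distribution \(P\), it is a Monte Carlo estimate of \(D_{\mathrm{KL}}(P\Vert Q)\). The toy example below uses two unit-width Gaussians shifted by 0.5, for which \(D_{\mathrm{KL}} = 0.5^{2}/2 = 0.125\) exactly. The distributions and function names are our own assumptions, not the paper's setup.

```python
import math
import random


def gauss_logpdf(x, mean, sigma):
    return -0.5 * ((x - mean) / sigma) ** 2 - math.log(sigma * math.sqrt(2 * math.pi))


def mean_error_kl(n=200_000, shift=0.5, seed=0):
    """Mean of log P - log Q over samples from P = N(0, 1), with Q = N(shift, 1)."""
    rng = random.Random(seed)
    xs = [rng.gauss(0.0, 1.0) for _ in range(n)]
    diffs = [gauss_logpdf(x, 0.0, 1.0) - gauss_logpdf(x, shift, 1.0) for x in xs]
    return sum(diffs) / n
```
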

In order to obtain a fully quantitative assessment of the performance of the Bayesian DNNLikelihood, we compared the results of a Bayesian analysis performed using the test set of \(S_{1}\) with those obtained with each of the models \(B_{1}{-}B_{3}\). This was done in two ways. First, since the model is usually a very good fit of the LF, we reweighted each point in \(S_{1}\) by the ratio of the DNNLikelihood to the original likelihood (reweighting). This procedure is so fast that it can be carried out for each trained model during the optimisation procedure, giving better insight into the choice of hyperparameters. Second, once the best model had been chosen, we checked the result of reweighting by directly sampling the DNNLikelihoods with emcee3.Footnote 14 We present results obtained by sampling the DNNLikelihoods in the form of 1D and 2D marginal posterior density plots for a chosen subset of parameters \((\mu , \delta _{50},\delta _{91},\delta _{92},\delta _{93},\delta _{94})\).
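Reweighting is ordinary importance weighting: points drawn from the true LF receive weights \(w_i \propto \mathcal {L}_{\mathrm{DNN}}(x_i)/\mathcal {L}(x_i)\), computed in log space for numerical stability, and posterior summaries such as an upper bound on \(\mu \) can then be read off as weighted quantiles. The sketch below is a minimal illustration; the function names are hypothetical.

```python
import math


def reweight(log_l_true, log_l_dnn):
    """Normalised weights w_i proportional to L_DNN(x_i) / L_true(x_i)."""
    log_w = [d - t for t, d in zip(log_l_true, log_l_dnn)]
    shift = max(log_w)  # subtract the maximum to avoid overflow in exp
    w = [math.exp(x - shift) for x in log_w]
    total = sum(w)
    return [x / total for x in w]


def weighted_quantile(values, weights, q):
    """Smallest value whose cumulative normalised weight reaches q."""
    cum = 0.0
    for v, w in sorted(zip(values, weights)):
        cum += w
        if cum >= q:
            return v
    return max(values)
```

Since no new likelihood evaluations of the sample are needed beyond one DNN prediction per point, this check costs essentially one batched forward pass per trained model.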

1D and 2D posterior marginal probability distributions for a subset of parameters from the unbiased \(S_{1}\). The green (darker) distributions represent the test set of \(S_{1}\), while the red (lighter) distributions are obtained by sampling the DNNLikelihood \(B_{1}\). Histograms are made with 50 bins and normalised to unit integral. The dotted, dot-dashed, and dashed lines represent the \(68.27\%,95.45\%,99.73\%\) 1D and 2D HPDI. For graphical purposes only the scattered points outside the outermost contour are shown. Numbers for the \(68.27\%\) HPDI for the parameters in the two samples are reported above the 1D plots

Same as Fig. 9 but for the DNNLikelihood \(B_{2}\)

Same as Fig. 9 but for the DNNLikelihood \(B_{3}\)

We have sampled the LF using the DNNLikelihoods \(B_{1}{-}B_{3}\) with the same procedure used for \(S_{1}\).Footnote 15 Starting from model \(B_{1}\) (Fig. 9), Bayesian inference is accurately reproduced for the \(68.27\%\) HPDI and well reproduced for the \(95.45\%\) HPDI, with some small deviations arising in the 2D marginal distributions, while large deviations, especially in the 2D marginal distributions, start to arise for the \(99.73\%\) HPDI. This is expected and reasonable, since model \(B_{1}\) has been trained with only \(10^{5}\) points, which are not enough to accurately interpolate in the tails, so that the region corresponding to HPDI larger than \(\sim 95\%\) is described by the DNNLikelihood through extrapolation. Nevertheless, we want to stress that, considering the very small training set and the large dimensionality of the LF, model \(B_{1}\) already works surprisingly well. This is a common feature of the DNNLikelihood which, as anticipated, predicts posterior probabilities extremely well without requiring a very large training sample or extensive tuning of the DNN hyperparameters. When going to models \(B_{2}\) and \(B_{3}\) (Figs. 10 and 11), predictions become increasingly reliable, improving as expected with the number of training points. Therefore, at least part of the deviations observed in the DNNLikelihood prediction must be attributed to the finite size of the training set, and are expected to disappear when further increasing the number of points. Considering the relatively small training and prediction times shown in Table 2, it should be possible, once the desired level of accuracy has been chosen, to enlarge the training and test sets enough to reach that precision. For the purpose of this work, we consider the results obtained with models \(B_{1}{-}B_{3}\) already satisfactory, and do not go beyond \(5 \times 10^{5}\) training samples.

To allow for a fully quantitative comparison, in Table 3 we summarize the Bayesian 1D HPDI for the parameter \(\mu \) obtained with the DNNLikelihoods \(B_{1}{-}B_{3}\), using both reweighting and re-sampling (we report only upper bounds, under the hypothesis \(\mu >0\)). Taking into account the uncertainty arising from our algorithm to compute HPDI (finite binning) and from statistical fluctuations in the tails of the distributions for large probability intervals, we find that the results of Table 3 are in good agreement with those in Table 1. This shows that the Bayesian DNNLikelihood is accurate even with a rather small training sample of \(10^{5}\) points, and that its accuracy quickly improves as the training sample size increases.
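A histogram-based HPDI, of the kind whose finite binning contributes the uncertainty mentioned above, can be sketched as follows: bins are added in order of decreasing content until they hold at least the requested probability, and adjacent selected bins are merged into intervals. This is a minimal illustration under our own assumptions (equal-width bins spanning the sample range, a hypothetical function name), not necessarily the algorithm used for Table 3.

```python
def binned_hpdi(samples, level, n_bins=50):
    """Approximate 1D HPDI from a histogram; returns a list of (low, high).

    Assumes max(samples) > min(samples).
    """
    lo, hi = min(samples), max(samples)
    width = (hi - lo) / n_bins
    counts = [0] * n_bins
    for x in samples:
        counts[min(int((x - lo) / width), n_bins - 1)] += 1
    # Highest-count bins first; sorted() is stable, so ties keep bin order.
    order = sorted(range(n_bins), key=lambda k: counts[k], reverse=True)
    selected, acc = [], 0
    for k in order:
        selected.append(k)
        acc += counts[k]
        if acc >= level * len(samples):
            break
    selected.sort()
    # Merge runs of adjacent bins into intervals.
    intervals = []
    start = prev = selected[0]
    for k in selected[1:]:
        if k != prev + 1:
            intervals.append((lo + start * width, lo + (prev + 1) * width))
            start = k
        prev = k
    intervals.append((lo + start * width, lo + (prev + 1) * width))
    return intervals
```

Interval endpoints are only resolved to one bin width, and the enclosed probability can overshoot the requested level by up to the content of the last bin added.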

4.3 Frequentist extension and the full DNNLikelihood

We have trained the same model architectures considered for the Bayesian DNNLikelihood using the \(S_{3}\) sample. In Table 4 we show results for the best models we obtained for each training sample size. Results have been obtained by training 5 identical models for each architecture and hyperparameter choice, as in Sect. 4.2, and taking the best one. We call these models \(F_{1}{-}F_{3}\) (F may stand for both Full and Frequentist, bearing in mind that these models also allow for Bayesian inference). As anticipated in the previous section, the performance gap between models with different numbers of parameters is very small, and models with fewer parameters often outperform models with more parameters, at least in the hyperparameter space we considered. This is mainly due to our choice of keeping the initial learning rate fixed for all architectures, which resulted in a slightly too large learning rate for the bigger models and, consequently, in a less stable training phase.

This can be seen in Fig. 12, where we show the learning curves obtained for the values of the hyperparameters shown in the legends. The training curves for models \(F_{1}\)-\(F_{3}\) are less regular than those of models \(B_{1}\)-\(B_{3}\). Nevertheless, as the learning rate is reduced, they quickly reach a good validation loss. As in the case of the Bayesian DNNLikelihood, no strong fine-tuning is needed for the DNNLikelihood to perform extremely well already with a moderately sized training set, so we chose the best models without pushing the optimisation further. The differences between the values of the metrics in Table 4 and those in Table 2 are due to the new region of LF values, covered by \(S_{3}\), that the DNN has to learn.

Comparison of the \(t_{\mu }\) test statistic computed using numerical maximisation of the analytic likelihood and of the DNNLikelihoods \(F_{1}{-}F_{3}\). Each of the \(t_{\mu }\) predictions from the DNNLikelihoods corresponds to the best out of five trained models. The left and right plots show, respectively, the \(t_{\mu }\) test statistic and the absolute error computed as \(|t_{\mu }^\mathrm{{DNN}}-t_{\mu }^\mathrm{{exact}}|\). Horizontal lines in the right plot represent the mean absolute error

Before repeating the Bayesian analysis presented for the Bayesian DNNLikelihood, we present results of frequentist inference using the Full DNNLikelihood. We used the models \(F_{1}{-}F_{3}\) to evaluate the test statistic \(t_{\mu }\). The left panel of Fig. 13 shows \(t_{\mu }\) obtained using the Full DNNLikelihoods, while the right panel shows the absolute error with respect to the numerical maximisation of the analytic LF, defined as \(\Delta t_{\mu }\left( \mu \right) =|t_{\mu }^\mathrm{{DNN}}-t_{\mu }^\mathrm{{exact}}|\). Apart from some visible deviations in the predictions of model \(F_{1}\), the Full DNNLikelihood reproduces the test statistic very well, allowing for robust frequentist inference already with relatively small training sets of a few hundred thousand points. As expected, the larger the training set, the smaller the error, with an average absolute error on \(t_{\mu }\) (dashed lines in the right panel of Fig. 13) as low as a few times \(10^{-2}\) for our models. We found that ensemble learning can help reduce the differences further (by keeping statistical fluctuations in the training set and in the DNN weights under control). However, in the particular case under consideration, results are already satisfactory when taking the best out of five identical models trained on random subsets of the training set. For this reason we do not expand on ensemble learning in this work, and only regard it as a tool to improve performance in cases where the LF is extremely complicated or has very high dimensionality.
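Computing \(t_{\mu }\) by numerical maximisation can be sketched as follows, assuming the standard profile-likelihood-ratio definition \(t_{\mu }=-2\log [\mathcal {L}(\mu ,\hat{\hat{\theta }}_{\mu })/\mathcal {L}(\hat{\mu },\hat{\theta })]\), i.e. the conditional maximum over nuisance parameters at fixed \(\mu \) compared with the global maximum. The toy LF with a single Gaussian-constrained nuisance parameter and the golden-section optimiser are our own illustrative choices. For this toy LF, \(t_{\mu }=(\mu -1)^{2}/(1+\sigma ^{2})\) analytically, which provides a check. The same `t_mu` function could be called with a DNN predictor in place of `toy_log_l`.

```python
import math


def golden_max(f, a, b, tol=1e-10):
    """Golden-section search for the maximum of a unimodal f on [a, b]."""
    inv_phi = (math.sqrt(5.0) - 1.0) / 2.0
    while b - a > tol:
        c = b - inv_phi * (b - a)
        d = a + inv_phi * (b - a)
        if f(c) > f(d):
            b = d  # maximum lies in [a, d]
        else:
            a = c  # maximum lies in [c, b]
    x = 0.5 * (a + b)
    return x, f(x)


def toy_log_l(mu, theta, sigma=1.0):
    """Hypothetical toy LF: one measurement plus a unit Gaussian constraint on theta."""
    return -0.5 * ((mu + theta - 1.0) / sigma) ** 2 - 0.5 * theta ** 2


def t_mu(log_l, mu, bounds=(-10.0, 10.0)):
    """Profile-likelihood-ratio test statistic for a 1-parameter nuisance."""
    def profiled(m):  # conditional maximum over theta at fixed mu
        return golden_max(lambda th: log_l(m, th), *bounds)[1]
    _, global_max = golden_max(profiled, *bounds)  # maximum over mu and theta
    return -2.0 * (profiled(mu) - global_max)
```
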

1D and 2D posterior marginal probability distributions for a subset of parameters from the unbiased \(S_{1}\). The green (darker) distributions represent the test set of \(S_{1}\), while the red (lighter) distributions are obtained by sampling the DNNLikelihood \(F_{1}\). Histograms are made with 50 bins and normalised to unit integral. The dotted, dot-dashed, and dashed lines represent the \(68.27\%,95.45\%,99.73\%\) 1D and 2D HPDI. For graphical purposes only the scattered points outside the outermost contour are shown. Numbers for the \(68.27\%\) HPDI for the parameters in the two samples are reported above the 1D plots

Same as Fig. 14 but for the DNNLikelihood \(F_{2}\)

Same as Fig. 14 but for the DNNLikelihood \(F_{3}\)