Abstract

One of the most criticized features of Bayesian statistics is the fact that credible intervals, especially when open likelihoods are involved, may strongly depend on the prior shape and range. Many analyses involving open likelihoods are affected by the eternal dilemma of choosing between linear and logarithmic priors, and in the latter case the situation is worsened by the dependence on the prior range under consideration. In this letter, we revive a simple method to obtain constraints that depend on neither the prior shape nor the prior range and, using the tools of Bayesian model comparison, extend it to overcome the possible dependence of the bounds on the choice of free parameters in the numerical analysis. An application to the case of cosmological bounds on the sum of the neutrino masses is discussed as an example.


1 Introduction

Physics experiments often try to measure unknown quantities: the mass of some particle, a new coupling constant, the scale of new physics. Most of the time, the absolute scale of such quantities is completely unknown, and the analysis of experimental data requires scanning a very wide range of values for the parameter under consideration, only to end up with a lower or upper bound when the data are compatible with the null hypothesis.

In the context of Bayesian statistics, performing this kind of analysis requires a careful discussion of the choice of priors, which may be logarithmic when many orders of magnitude are involved. A robust analysis usually shows what happens when more than one type of prior is considered, but the calculation of credible intervals always also requires a precise definition of the prior range. Especially in the case of logarithmic priors, choosing the range can be difficult even when physical boundaries exist (e.g. a mass or coupling must be positive), because a logarithmic prior cannot extend down to zero and a lower cutoff must be chosen by hand. As a consequence, the selected range for the parameter influences the available prior volume and, in turn, the bound itself.

Let us consider for example the case of neutrino masses and their cosmological constraints. Current data are sensitive essentially only to the sum of the neutrino masses and not to the individual mass eigenstates (see e.g. [1, 2]). There are therefore good reasons to describe the physics by means of \({\varSigma }m_\nu \) and to consider a linear prior on it, as the parameter range is limited from below by oscillation experiments [3,4,5] and from above by KATRIN [6]. Even given these considerations, however, one can decide to perform the analysis with the lower limit \({\varSigma }m_\nu >0\) [7] instead of enforcing the oscillation-driven one, \({\varSigma }m_\nu \gtrsim 60\,(100)~ \hbox {meV}\) for normal (inverted) ordering of the neutrino masses (see e.g. [8, 9]): the resulting upper bounds differ in the various cases.

In order to overcome these problems, in this letter we revisit a simple way [10,11,12] to use Bayesian model comparison techniques to obtain prior-independent constraints, which make it easier to compare the constraining power of different experimental results, not only in cosmology but in Bayesian analyses in general. Furthermore, we extend the known method to address the problems related to possible degeneracies among multiple free parameters and to the choice of parameterization when performing the numerical analyses.

2 Prior-free Bayesian constraints

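The foundation of Bayesian statistics is Bayes' theorem:

\[ p(\theta |d,{\mathcal {M}}_{i}) = \frac{\pi (\theta |{\mathcal {M}}_{i})\,{\mathcal {L}}_{{\mathcal {M}}_{i}}(\theta )}{Z_i}, \tag{1} \]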

where \(\pi (\theta |{\mathcal {M}}_{i})\) and \(p(\theta |d,{\mathcal {M}}_{i})\) are the prior and posterior probabilities for the parameters \(\theta \) given a model \({\mathcal {M}}_{i}\), \({\mathcal {L}}_{{\mathcal {M}}_{i}}(\theta )\) is the likelihood function, depending on the parameters \(\theta \), given the data d and the model \({\mathcal {M}}_{i}\), and

\[ Z_i = \int _{{\varOmega }_\theta } d\theta \, \pi (\theta |{\mathcal {M}}_{i})\,{\mathcal {L}}_{{\mathcal {M}}_{i}}(\theta ) \tag{2} \]

is the Bayesian evidence of \({\mathcal {M}}_{i}\) [13], the integral of prior times likelihood over the entire parameter space \({\varOmega }_\theta \).

While Bayes' theorem gives the posterior probability as a function of all the model parameters \(\theta \), when presenting results we are typically interested in the marginalized posterior probability as a function of one (or two) of them, which we generically denote by x. The marginalization is performed over the remaining parameters, denoted by \(\psi \):

\[ p(x|d,{\mathcal {M}}_{i}) = \int _{{\varOmega }_\psi } d\psi \, p(x,\psi |d,{\mathcal {M}}_{i}) = \frac{1}{Z_i}\int _{{\varOmega }_\psi } d\psi \, \pi (x,\psi |{\mathcal {M}}_{i})\,{\mathcal {L}}_{{\mathcal {M}}_{i}}(x,\psi ). \tag{3} \]

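Let us now assume that the prior is separable, so that we can write \(\pi (\theta |{\mathcal {M}}_{i})=\pi (x|{\mathcal {M}}_{i})\cdot \pi (\psi |{\mathcal {M}}_{i})\). Under this hypothesis, Eq. (3) can be written as:

\[ p(x|d,{\mathcal {M}}_{i}) = \frac{\pi (x|{\mathcal {M}}_{i})}{Z_i}\int _{{\varOmega }_\psi } d\psi \, \pi (\psi |{\mathcal {M}}_{i})\,{\mathcal {L}}_{{\mathcal {M}}_{i}}(x,\psi ). \tag{4} \]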

Let us consider the marginalized posterior as written in Eq. (4). The prior on x appears explicitly only outside the integral, and therefore we can obtain a prior-independent quantity (see Footnote 1) simply by dividing the posterior by the prior. The right-hand side of Eq. (4), however, still depends explicitly on the value of x through the likelihood inside the integral. We note that this integral resembles the definition of the Bayesian evidence in Eq. (2), no longer for the model \({\mathcal {M}}_{i}\), but for a sub-case of \({\mathcal {M}}_{i}\) in which x is a fixed parameter. Let us label this model \({\mathcal {M}}_{i}^{x}\) and define its Bayesian evidence:

\[ Z_i^x \equiv \int _{{\varOmega }_\psi } d\psi \, \pi (\psi |{\mathcal {M}}_{i})\,{\mathcal {L}}_{{\mathcal {M}}_{i}}(x,\psi ), \tag{5} \]

which is independent of the prior \(\pi (x)\) but still depends on the parameter value x, now fixed. Note that Eq. (4) can be rewritten in the following form:

\[ p(x|d,{\mathcal {M}}_{i}) = \pi (x|{\mathcal {M}}_{i})\,\frac{Z_i^x}{Z_i}. \tag{6} \]

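Now, let us consider two models \({\mathcal {M}}_{i}^{x_1}\) and \({\mathcal {M}}_{i}^{x_2}\). Since \(Z_i\) is independent of x, we can use Eq. (6) to obtain

\[ Z_i = \frac{\pi (x_1|{\mathcal {M}}_{i})\,Z_i^{x_1}}{p(x_1|d,{\mathcal {M}}_{i})} = \frac{\pi (x_2|{\mathcal {M}}_{i})\,Z_i^{x_2}}{p(x_2|d,{\mathcal {M}}_{i})}, \tag{7} \]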

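which can be rewritten as

\[ \frac{Z_i^{x_1}}{Z_i^{x_2}} = \frac{p(x_1|d,{\mathcal {M}}_{i})/\pi (x_1|{\mathcal {M}}_{i})}{p(x_2|d,{\mathcal {M}}_{i})/\pi (x_2|{\mathcal {M}}_{i})}. \tag{8} \]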

The left-hand side of this equation is a ratio of the Bayesian evidences of the models \({\mathcal {M}}_{i}^{x_1}\) and \({\mathcal {M}}_{i}^{x_2}\), and is therefore a Bayes factor. For reasons that will become clear later, let us rename \(x_1\rightarrow x\) and \(x_2\rightarrow x_0\) and define this ratio as \({\mathcal {R}}(x,x_0|d)\), which was named “relative belief updating ratio” or “shape distortion function” in the past [10,11,12]:

\[ {\mathcal {R}}(x,x_0|d) \equiv \frac{Z_i^{x}}{Z_i^{x_0}} = \frac{p(x|d,{\mathcal {M}}_{i})/\pi (x|{\mathcal {M}}_{i})}{p(x_0|d,{\mathcal {M}}_{i})/\pi (x_0|{\mathcal {M}}_{i})}. \tag{9} \]

Although this function has already been employed in the past, see e.g. [14,15,16,17], its use has somewhat faded into obscurity. Here we review its properties and discuss them in detail.

Let us recall that \(Z_i^x\) is independent of \(\pi (x)\), see Eq. (5): this means that \({\mathcal {R}}(x,x_0|d)\) is also independent of \(\pi (x)\). This quantity therefore provides a prior-independent way to compare the results for two values of a parameter x. In practice, \({\mathcal {R}}\) is particularly useful when dealing with open likelihoods, i.e. when data only constrain the value of a parameter from above or from below. In such cases, the likelihood becomes insensitive to variations of the parameter below (or above) a certain threshold. Consider for example the absolute scale of neutrino masses, on which data (either cosmological or from laboratory experiments) only put an upper limit: the data are insensitive to the value of x when x goes towards 0, so we can take \(x_0=0\) as a reference value. Regardless of the prior, when x is sufficiently close to \(x_0\) the likelihoods at x and \(x_0\) are essentially the same at all points of the parameter space \({\varOmega }_\psi \), so \(Z_i^{x}\simeq Z_i^{x_0}\) and \({\mathcal {R}}(x,x_0)\rightarrow 1\). Likewise, when x is sufficiently far from \(x_0\), the data penalize its value (\(Z_i^{x}\ll Z_i^{x_0}\)) and \({\mathcal {R}}(x,x_0)\rightarrow 0\). In between, the function \({\mathcal {R}}\) indicates how much x is favored or disfavored with respect to \(x_0\) at each point, in the same way as a Bayes factor indicates how much a model is preferred with respect to another one.
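The prior independence stated above can be checked numerically. The following Python sketch uses only the standard library and a hypothetical toy likelihood \({\mathcal {L}}(x,\psi )\), not taken from any real analysis, which is flat near \(x=0\) and suppressed at large x. It computes \({\mathcal {R}}(x,0)\) once directly as \(Z^x/Z^{x_0}\) from Eq. (5), and once as posterior over prior, Eq. (9), for two different priors on x: a flat one and a hypothetical exponentially decreasing one. The grids, ranges and priors are illustrative assumptions.

```python
import math

# Hypothetical grids: x in [0, 5] (x0 = 0 is the first point), psi in [-10, 10].
DX, DPSI = 0.01, 0.02
X_GRID = [DX * i for i in range(501)]
PSI_GRID = [-10.0 + DPSI * j for j in range(1001)]
PRIOR_PSI = 1.0 / 20.0  # flat, normalized prior on psi over [-10, 10]


def likelihood(x, psi):
    """Hypothetical toy likelihood, open in x: flat near x = 0, suppressed for
    large x; the preferred psi value shifts with x."""
    return math.exp(-0.5 * x ** 2 - 0.5 * (psi - 0.5 * x) ** 2)


def evidence_fixed_x(x):
    """Eq. (5): Z^x = integral of prior(psi) * L(x, psi) over psi (Riemann sum)."""
    return sum(PRIOR_PSI * likelihood(x, psi) * DPSI for psi in PSI_GRID)


def r_from_posterior(z_x, prior_x):
    """Eq. (9): posterior for the given prior on x, divided by that prior,
    normalized so that R(x0, x0) = 1."""
    weighted = [prior_x(x) * z for x, z in zip(X_GRID, z_x)]  # pi(x) * Z^x
    z_total = sum(weighted) * DX      # normalization; equals Z for a normalized prior
    posterior = [w / z_total for w in weighted]               # Eq. (6)
    ratio = [p / prior_x(x) for p, x in zip(posterior, X_GRID)]
    return [r / ratio[0] for r in ratio]


z_x = [evidence_fixed_x(x) for x in X_GRID]
r_direct = [z / z_x[0] for z in z_x]                    # Z^x / Z^{x0}
r_flat = r_from_posterior(z_x, lambda x: 0.2)           # flat prior on [0, 5]
r_expo = r_from_posterior(z_x, lambda x: math.exp(-x))  # hypothetical unnormalized prior

for i in (0, 100, 200, 300):
    print(f"x = {X_GRID[i]:.1f}: direct {r_direct[i]:.4f}, "
          f"flat prior {r_flat[i]:.4f}, exp prior {r_expo[i]:.4f}")
```

Because the integral over \(\psi \) of this toy likelihood does not depend on where the Gaussian in \(\psi \) is centered (as long as it stays well inside the grid), the expected result is \({\mathcal {R}}(x,0)=e^{-x^2/2}\) for every prior on x, up to grid effects.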

While \({\mathcal {R}}\) describes the general behavior of the posterior as a function of x, any probabilistic limit one can compute will always depend on the prior shape and range, which are unavoidable ingredients of Bayesian statistics. Describing the results through the \({\mathcal {R}}\) function, however, allows one to use the data to define a region above which the parameter values are disfavored, regardless of the prior assumptions, and makes it easier to compare two experimental results. A good standard could be to provide a (non-probabilistic) “sensitivity bound”, defined as the value of x at which \({\mathcal {R}}\) drops below some level, for example \(|\ln {\mathcal {R}}|=1\), 3 or 5 in accordance with Jeffreys’ scale (see e.g. [2, 13]). Taking \(x_0=0\) as above, we could say, for example, that we consider as “moderately (strongly) disfavored” the region \(x>x_s\) for which \(\ln {\mathcal {R}}<s\), with \(s=-\,3\) (or \(-5\)), and then use the values \(x_s\) to compare how strongly different data combinations d constrain the parameter x. This does not represent an upper bound at a given confidence level, since it is not a probabilistic bound, but rather a hedge “which separates the region in which we are, and where we see nothing, from the region we cannot see” [11].
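Extracting the sensitivity bound \(x_s\) from a tabulated \(\ln {\mathcal {R}}\) amounts to locating where it crosses the level s. A minimal Python sketch, assuming \(\ln {\mathcal {R}}\) is given on a grid that starts at \(x_0\) and increases; the function name `sensitivity_bound` is hypothetical, and linear interpolation between grid points is one simple choice among many. Since \({\mathcal {R}}\) need not be monotonic, the sketch returns the first crossing when moving away from \(x_0\).

```python
import math


def sensitivity_bound(xs, ln_r, s):
    """Return the first x (scanning away from x0 = xs[0]) at which ln R drops
    below the level s, linearly interpolating ln R between grid points.
    Returns None if ln R never drops below s on the grid."""
    if ln_r[0] < s:
        raise ValueError("ln R is already below s at the reference point x0")
    for i in range(len(xs) - 1):
        if ln_r[i + 1] < s <= ln_r[i]:
            frac = (s - ln_r[i]) / (ln_r[i + 1] - ln_r[i])
            return xs[i] + frac * (xs[i + 1] - xs[i])
    return None


# Usage with a hypothetical R(x, 0) = exp(-x**2 / (2 * 0.1**2)), x in eV.
grid = [0.001 * i for i in range(1001)]
ln_r = [-(x ** 2) / (2 * 0.1 ** 2) for x in grid]
for s in (-1, -3, -5):
    print(f"s = {s}: x_s = {sensitivity_bound(grid, ln_r, s):.4f} eV")
```

For this hypothetical Gaussian-shaped \({\mathcal {R}}\) the exact answer is \(x_s=0.1\sqrt{-2s}\), which the interpolated values reproduce closely on this fine grid.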

From the computational point of view, it is not necessary to perform the integrals in the definition of \(Z_i^x\) in order to compute \({\mathcal {R}}\). One can directly use the right-hand side of Eq. (9), i.e. numerically compute \(p(x|d,{\mathcal {M}}_{i})\) with a specific prior assumption, then divide by \(\pi (x|{\mathcal {M}}_{i})\) and normalize so that \({\mathcal {R}}(x_0,x_0|d)=1\). Notice also that, once \({\mathcal {R}}\) is known, anyone can obtain credible intervals with any prior of choice: since Eq. (9) implies \(p(x|d,{\mathcal {M}}_{i})\propto \pi (x|{\mathcal {M}}_{i})\,{\mathcal {R}}(x,x_0|d)\), the posterior is obtained by multiplying \({\mathcal {R}}\) by the new prior and normalizing to a total probability of 1 within the prior range.
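The reverse step, from \({\mathcal {R}}\) to a posterior and a credible bound for an arbitrary prior, can be sketched as follows in Python. The tabulated \({\mathcal {R}}\), the grid and both priors (linear, and logarithmic with a hypothetical lower cutoff at \(10^{-3}\)) are illustrative assumptions; integrals use the trapezoid rule on the grid.

```python
import math


def trapezoid(xs, ys):
    """Trapezoid-rule integral of ys over the (possibly non-uniform) grid xs."""
    return sum(0.5 * (ys[i] + ys[i + 1]) * (xs[i + 1] - xs[i]) for i in range(len(xs) - 1))


def posterior_from_r(xs, r, prior):
    """p(x|d) proportional to prior(x) * R(x, x0|d), normalized over the prior range xs."""
    unnorm = [prior(x) * rx for x, rx in zip(xs, r)]
    norm = trapezoid(xs, unnorm)
    return [u / norm for u in unnorm]


def upper_bound(xs, post, level=0.95):
    """First grid point at which the cumulative posterior probability reaches `level`."""
    cum = 0.0
    for i in range(len(xs) - 1):
        cum += 0.5 * (post[i] + post[i + 1]) * (xs[i + 1] - xs[i])
        if cum >= level:
            return xs[i + 1]
    return xs[-1]


# Hypothetical R(x, 0) tabulated on a log-spaced grid from 1e-3 to 1.
xs = [10 ** (-3 + 3 * i / 1999) for i in range(2000)]
r = [math.exp(-(x ** 2) / (2 * 0.1 ** 2)) for x in xs]

bound_lin = upper_bound(xs, posterior_from_r(xs, r, lambda x: 1.0))      # linear prior
bound_log = upper_bound(xs, posterior_from_r(xs, r, lambda x: 1.0 / x))  # log prior
print(f"95% upper bound, linear prior: {bound_lin:.3f}; log prior: {bound_log:.3f}")
```

With these assumptions the logarithmic prior yields a noticeably tighter bound, and moving its lower cutoff would change it further: this is exactly the prior dependence of probabilistic limits that \({\mathcal {R}}\) itself avoids.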

A few final comments: in most cases, obtaining limits with the \({\mathcal {R}}\) function is nearly equivalent to using a likelihood ratio test. The difference is that, while the likelihood ratio test only takes into account the likelihood values at the best-fit points for fixed \(x_0\) and x, the \({\mathcal {R}}\) method weighs the information of the entire posterior distribution and takes into account the prior-weighted mean likelihood over \({\varOmega }_\psi \). This means that in cases with multiple posterior peaks or complex posterior distributions, the limits obtained using the \({\mathcal {R}}\) function can be more conservative than those obtained with the likelihood ratio test. As an example, we provide in the lower panel of Fig. 1 a comparison of the likelihood ratio and of the \(-2\ln {\mathcal {R}}\) functions when the following likelihood is considered:

The dependence of the likelihood on x and \(\theta \) is shown in the upper panel of Fig. 1. In this case, the \({\mathcal {R}}\) function takes into account the existence of a second peak in the posterior. The function and coefficients in Eq. (10) were chosen to show that, when cutting at 1 (corresponding to the \(1\sigma \) limit, in a frequentist sense, for the likelihood ratio test), the likelihood ratio and the \({\mathcal {R}}\) methods give the same result, whereas the cut at 4 (corresponding to a \(2\sigma \) significance for the likelihood ratio test) leads to different results, because the likelihood ratio only takes into account the likelihood values at the best fit, while the \({\mathcal {R}}\) method is also affected by the second peak of the posterior. For the same reason, \(-2\ln {\mathcal {R}}\) shows a local minimum at \(x=6\).
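The following Python sketch does not implement Eq. (10); it uses a different, hypothetical likelihood with a similar qualitative feature: a narrow main peak at \(x=0\) plus a low but broad secondary peak in \(\theta \) at \(x=6\). It compares \(-2\ln \) of the profile likelihood ratio (maximum over \(\theta \)) with \(-2\ln {\mathcal {R}}\) computed with a flat prior on \(\theta \), for which the prior density and the grid spacing cancel in the ratio of \(\theta \)-integrated likelihoods.

```python
import math

# Theta grid for a flat prior on theta in [-60, 60]; wide enough for the broad peak.
THETAS = [-60.0 + 0.05 * j for j in range(2401)]


def likelihood(x, theta):
    """Hypothetical two-peak likelihood (not Eq. (10)): a narrow main peak at
    (x, theta) = (0, 0) and a low (amplitude 0.01) but broad (width 10 in theta)
    secondary peak at x = 6."""
    main = math.exp(-0.5 * x ** 2 - 0.5 * theta ** 2)
    second = 0.01 * math.exp(-0.5 * (x - 6.0) ** 2 - 0.5 * (theta / 10.0) ** 2)
    return main + second


def minus2ln_profile_ratio(x, x0=0.0):
    """-2 ln of the likelihood ratio, maximizing over theta at fixed x and x0."""
    best_x = max(likelihood(x, t) for t in THETAS)
    best_x0 = max(likelihood(x0, t) for t in THETAS)
    return -2.0 * math.log(best_x / best_x0)


def minus2ln_r(x, x0=0.0):
    """-2 ln R(x, x0): ratio of theta-integrated likelihoods, i.e. of Z^x and Z^x0
    for a flat prior on theta (constant factors cancel in the ratio)."""
    z_x = sum(likelihood(x, t) for t in THETAS)
    z_x0 = sum(likelihood(x0, t) for t in THETAS)
    return -2.0 * math.log(z_x / z_x0)


for x in (1.0, 2.0, 4.0, 5.0, 6.0, 7.0):
    print(f"x = {x}: profile {minus2ln_profile_ratio(x):6.2f}   R {minus2ln_r(x):6.2f}")
```

With these hypothetical numbers the two curves agree closely for small x, while around \(x=6\) the broad secondary peak makes \(-2\ln {\mathcal {R}}\) markedly smaller than the profile ratio (about 4.6 versus 9.2 at \(x=6\)), i.e. the \({\mathcal {R}}\)-based limit is more conservative there.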

Fig. 1 Upper panel: Dependence of the likelihood in Eq. (10) on the parameters x and \(\theta \). Lower panel: Comparison of the results obtained with the likelihood ratio and the \({\mathcal {R}}\) methods when the likelihood in Eq. (10) is considered. The horizontal lines show the levels 0, 1 and 4, respectively

Another advantage is computational. In cosmological analyses it is typically difficult to locate the maximum of the likelihood, because of the number of dimensions, the numerical noise and the computational cost of each likelihood evaluation. An example showing the technical difficulties of this kind of analysis can be found in [18]. Similar difficulties can emerge in other contexts. Even if the best-fit point is not known with sufficient precision, however, the \({\mathcal {R}}\) function allows one to obtain a prior-independent bound from a Markov Chain Monte Carlo or similar method.

3 A simple example with Planck 2018 chains

To demonstrate a simple example with recent cosmological data, we show in Fig. 2 the function \({\mathcal {R}}({\varSigma }m_\nu ,\,0)\) for a few cases, obtained from the publicly available Planck 2018 (P18) chains (see Footnote 2) with four different data sets and considering the \({\varLambda }\)CDM+\({\varSigma }m_\nu \) model. The datasets include the full Planck 2018 CMB temperature and polarization data [19], the Planck 2018 lensing measurements [20], and BAO information from the SDSS BOSS DR12 [21,22,23,24], the 6dF [25] and the SDSS DR7 MGS [26] surveys.

The calculation of \({\mathcal {R}}\) is easy in this case. The Planck collaboration considered a flat prior on \({\varSigma }m_\nu \) (see Footnote 3), so we simply have to obtain the posterior \(p({\varSigma }m_\nu |d,{\varLambda }{\hbox {CDM}}+{\varSigma }m_\nu )\) by standard means and use it to compute \({\mathcal {R}}\) according to Eq. (9) (see Footnote 4). Since the lower limit adopted by Planck is \({\varSigma }m_\nu =0\), we can compute \({\mathcal {R}}\) for any positive value of \({\varSigma }m_\nu \), as long as we do not exceed the upper bound of the prior. To better show the results, we use a logarithmic scale and an appropriate parameter range for the plot in Fig. 2.
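A minimal Python sketch of this post-processing, assuming the chain has already been loaded into two plain lists holding the sampled \({\varSigma }m_\nu \) values and the corresponding sample weights (the loading step, file names and column layout are not shown and are left as assumptions). With a flat prior, posterior over prior is proportional to the posterior itself, so \({\mathcal {R}}\) is estimated by a weighted histogram normalized to its first bin, which stands in for \(x_0=0\). Bin width and sampling noise limit the accuracy, especially in the tail, where a smoothed density estimate is a common alternative.

```python
import math
import random


def r_from_samples(samples, weights, x_max, n_bins=20):
    """Estimate R(x, 0) from weighted MCMC samples obtained with a FLAT prior on x >= 0.

    With a flat prior, posterior / prior is proportional to the posterior, so R is the
    weighted histogram normalized to its first bin (a proxy for x0 = 0).
    For a non-flat sampling prior, divide each bin by the prior at the bin center first.
    Returns (bin_centers, R_values)."""
    width = x_max / n_bins
    counts = [0.0] * n_bins
    for x, w in zip(samples, weights):
        if 0.0 <= x < x_max:
            counts[min(int(x / width), n_bins - 1)] += w
    if counts[0] <= 0.0:
        raise ValueError("empty first bin: cannot normalize at x0")
    centers = [(i + 0.5) * width for i in range(n_bins)]
    return centers, [c / counts[0] for c in counts]


# Usage with a synthetic stand-in for a chain: unit-weight samples whose flat-prior
# posterior is a half-Gaussian of width 0.1 eV, so the true R is exp(-x^2 / 0.02).
rng = random.Random(1)
samples = [abs(rng.gauss(0.0, 0.1)) for _ in range(200_000)]
weights = [1.0] * len(samples)
centers, r = r_from_samples(samples, weights, x_max=0.4)
for c, rv in zip(centers[::4], r[::4]):
    true_r = math.exp(-c ** 2 / 0.02)
    print(f"x = {c:.2f} eV: R estimate {rv:.3f}, true {true_r:.3f}")
```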

Fig. 2 The \({\mathcal {R}}({\varSigma }m_\nu ,\,0)\) function in Eq. (9) obtained from the Planck 2018 chains [7] for different data combinations, considering the \({\varLambda }\)CDM+\({\varSigma }m_\nu \) model. The horizontal lines show the levels \(\ln {\mathcal {R}}=0\), \(-\,1\), \(-\,3\), \(-\,5\), respectively

From the figure, we can notice that the data are completely insensitive to the value of \({\varSigma }m_\nu \) when it falls below \(\simeq 0.01~ \hbox {eV}\): in this region, there is no change between prior and posterior distributions, and \({\mathcal {R}}\rightarrow 1\) as expected. On the other hand, \({\varSigma }m_\nu \gtrsim 0.4~ \hbox {eV}\) is disfavored by the data for all the data combinations shown here, as \({\mathcal {R}}\rightarrow 0\). As also expected, the exact shape of \({\mathcal {R}}\) between 0.01 and 0.4 eV depends on the inclusion of the BAO constraints and only slightly on the lensing dataset. Indeed, whether the cut is placed at \({\mathcal {R}}=e^{-3}\) or \({\mathcal {R}}=e^{-5}\), the value of the sensitivity bound depends essentially only on the inclusion of the BAO data. A comparison of the CMB dataset without (P18) or with (P18+BAO) the BAO constraints can therefore be summarized by two numbers, considering for example \(s=-5\):

4 The case of multiple models

In the previous sections we discussed the single-model case, which was already known in the literature. The situation is slightly different when several models are considered, for example if one wants to study and take into account several extensions of the same minimal scenario, as in Ref. [27]. It is not difficult to rewrite the definition of \({\mathcal {R}}\) to deal with multiple models, if we assume that the prior for the parameter x under consideration is the same in all of them, i.e. that \(\pi (x)\equiv \pi (x|{\mathcal {M}}_{i})\) does not depend on \({\mathcal {M}}_{i}\).

Let us now recall the method proposed in [27]. The model-marginalized posterior distribution of the parameter x is obtained as

\[ p(x|d) = \sum _i p(x|d,{\mathcal {M}}_{i})\,p({\mathcal {M}}_{i}|d), \tag{13} \]

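where \(p({\mathcal {M}}_{i}|d)\) is the posterior probability of the model \({\mathcal {M}}_{i}\), which can be computed using [28]

\[ p({\mathcal {M}}_{i}|d) = \frac{\pi ({\mathcal {M}}_{i})\,Z_i}{\sum _j \pi ({\mathcal {M}}_{j})\,Z_j}, \tag{14} \]

with \(\pi ({\mathcal {M}}_{i})\) the prior probability of the model \({\mathcal {M}}_{i}\).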

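In both cases the sum runs over all the studied models. Going back to Eq. (13) and using Eqs. (4) (see Footnote 5) and (14), we obtain the fully (prior- and model-) marginalized posterior probability of x:

\[ p(x|d) = \frac{\sum _i \pi ({\mathcal {M}}_{i})\,\pi (x|{\mathcal {M}}_{i})\,Z_i^x}{\sum _j \pi ({\mathcal {M}}_{j})\,Z_j}. \tag{15} \]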

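Remembering that we assumed \(\pi (x)\equiv \pi (x|{\mathcal {M}}_{i})\) to be independent of \({\mathcal {M}}_{i}\), the ratio between the marginalized posterior and the prior probability for the parameter x is:

\[ \frac{p(x|d)}{\pi (x)} = \frac{\sum _i \pi ({\mathcal {M}}_{i})\,Z_i^x}{\sum _j \pi ({\mathcal {M}}_{j})\,Z_j}. \tag{16} \]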

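If we use this result to define \({\mathcal {R}}\) again as in Eq. (9), we have:

\[ {\mathcal {R}}(x,x_0|d) \equiv \frac{p(x|d)/\pi (x)}{p(x_0|d)/\pi (x_0)} = \frac{\sum _i \pi ({\mathcal {M}}_{i})\,Z_i^x}{\sum _i \pi ({\mathcal {M}}_{i})\,Z_i^{x_0}}, \tag{17} \]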

which has exactly the same meaning as before, apart from the fact that in this case \({\mathcal {R}}\) has been marginalized over several models.

From the computational point of view, obtaining \({\mathcal {R}}\) in the model-marginalized case is as simple as when only one model is considered. One just has to select a prior \(\pi (x)\) and a sufficiently broad range, obtain the marginalized posterior probability as in [27], then divide it by the considered prior and normalize so that \({\mathcal {R}}(x_0,x_0|d)=1\).
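A minimal Python sketch of this recipe, following Eqs. (13), (14) and (17), assuming each model's posterior for x has already been tabulated on a common grid with the same prior \(\pi (x)\), and that each model's log-evidence \(\ln Z_i\) and prior probability \(\pi ({\mathcal {M}}_{i})\) are known. The function names and all numbers in the usage example are hypothetical. The log-evidences are shifted by their maximum before exponentiating, to avoid overflow.

```python
import math


def model_posteriors(log_z, model_priors):
    """Eq. (14): p(M_i|d) from log-evidences ln Z_i and prior probabilities pi(M_i)."""
    top = max(log_z)
    w = [p * math.exp(lz - top) for lz, p in zip(log_z, model_priors)]
    total = sum(w)
    return [wi / total for wi in w]


def marginalized_r(posteriors_x, log_z, model_priors, prior_x, i0=0):
    """Eq. (13), then Eq. (17): model-averaged posterior on the grid, divided by the
    common prior pi(x) and normalized to 1 at the reference grid index i0 (x0)."""
    pm = model_posteriors(log_z, model_priors)
    p_x = [sum(pm[k] * posteriors_x[k][j] for k in range(len(pm)))
           for j in range(len(prior_x))]
    ratio = [p / pr for p, pr in zip(p_x, prior_x)]
    return [rv / ratio[i0] for rv in ratio]


# Hypothetical usage: two models on a grid x in [0, 1] with a common flat prior.
xs = [0.01 * j for j in range(101)]
prior = [1.0] * len(xs)


def tabulated_posterior(sigma):
    """Hypothetical per-model posterior p(x|d, M_k) proportional to exp(-x^2 / (2 sigma^2))."""
    vals = [math.exp(-x * x / (2 * sigma ** 2)) for x in xs]
    norm = sum(vals) * 0.01
    return [v / norm for v in vals]


posts = [tabulated_posterior(0.1), tabulated_posterior(0.3)]
r_marg = marginalized_r(posts, log_z=[-10.0, -11.0], model_priors=[0.5, 0.5], prior_x=prior)
print([round(v, 3) for v in r_marg[::20]])
```

Since Eq. (17) can be written as a weighted average of the single-model \({\mathcal {R}}_i\) with positive weights proportional to \(\pi ({\mathcal {M}}_{i})\,Z_i^{x_0}\), the model-marginalized curve always lies between the single-model ones.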

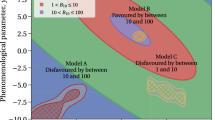

Fig. 3 The \({\mathcal {R}}({\varSigma }m_\nu ,\,0)\) function in Eq. (9) obtained considering different models (dashed) together with the model-marginalized one from Eq. (17) (solid), using the full dataset adopted in Ref. [27] (see text for details). The horizontal lines show the levels \(\ln {\mathcal {R}}=0\), \(-\,1\), \(-\,3\), \(-\,5\), respectively

As an example, we show in Fig. 3 the \({\mathcal {R}}\) function obtained from the very same posteriors studied in Ref. [27]. These cases are computed considering the full Planck 2015 (P15) CMB data [29, 30], together with the lensing likelihood [31] and the BAO observations from the SDSS BOSS DR11 [32], the 6dF [25] and the SDSS DR7 MGS [26] surveys. The considered models are the same extensions of the \({\varLambda }\)CDM+\({\varSigma }m_\nu \) case adopted by the Planck collaboration for the 2015 public release, but with a prior \({\varSigma }m_\nu >60~ \hbox {meV}\). Also in this case we can see that the \({\mathcal {R}}\) function is very close to one below 0.1 eV and always goes to zero above \(\sim 0.7~ \hbox {eV}\). In between, the various models (dashed lines) have different constraining powers, whose weighted average is represented by the solid line. The model-marginalized, prior-independent result corresponds to

5 Discussion and conclusions

In this letter we discussed a possible way to present prior-independent results in the context of Bayesian analysis, generalizing a previously known method [10,11,12] to deal with multiple models and also extending the work presented in Ref. [27]. The method uses Bayesian model comparison techniques to compare the constraining power of the data at different values of the considered parameter, and is particularly useful when open likelihoods are involved in the analysis. While the method can be similar to a likelihood ratio test, it takes into account not only the information contained in the best-fit point, i.e. the maximum of the likelihood, but also the information of the full posterior, so that for multimodal or otherwise complex posterior distributions more conservative limits can be obtained. Furthermore, the discussed method can be much less expensive than the likelihood ratio test from the computational point of view.

We applied these simple formulas to the case of neutrino mass constraints from cosmology, discussing both the case of several datasets analyzed with a single cosmological model and the case of a single dataset analyzed with multiple models. In the latter case, Bayesian model comparison also allows one to combine the constraints from the different models into a prior-independent and model-marginalized bound. A more extended application of this method is left for future work.

Data Availability Statement

This manuscript has no associated data or the data will not be deposited. [Authors’ comment: The publication does not include any data: the figures were obtained by post-processing data obtained in previous publications.]

Notes

1. This is not exactly true, in the sense that the prior also enters the calculation of the Bayesian evidence, see Eq. (2). The shape of the posterior, in any case, is not affected by this contribution, which only enters as a normalization constant.

2. The chains are available through the Planck Legacy Archive, http://pla.esac.esa.int/pla/. Note that a simple post-processing of the available chains is sufficient to produce Fig. 2.

3. Note that, although the considered prior is linear, the calculations through the CAMB code enforce a non-trivial distortion of the prior, which comes from the numerical requirements of the code: some combinations of parameter values may create numerical instabilities, divergences or simply unphysical values for some cosmological quantities. These problematic points are therefore excluded from the cosmological calculation “a priori”, in the sense that, even if they are formally included in the prior, their likelihood cannot be computed in practice. In the region below 1 eV, however, the prior on \({\varSigma }m_\nu \) is substantially unchanged by this effect.

4. Note that this is essentially what most authors in cosmology already show, since the results for 1-dimensional marginalized posteriors are often presented in plots of \(p_{\pi }(x|d)/p^{\mathrm{max}}_{\pi }\), where \(\pi (x)\) is a linear prior on the quantity x and therefore does not affect the conversion between posterior and \({\mathcal {R}}\) according to Eq. (9). Apart from the normalization constant, \(p_{\pi }(x|d)/p^{\mathrm{max}}_{\pi }\) may be interpreted either as an unnormalized posterior probability, which can be employed to compute bounds as if a linear prior on x had been considered, or as a shape distortion function, which is not suitable for computing limits unless some prior is assumed first.

5. The marginalization over the parameters \(\psi \) is not necessarily the same in all the models. Since we assume nothing about \(\psi \), these parameters need not be shared among the various models, and their number can vary. In any case, the marginalization works inside each model independently, using for each \({\mathcal {M}}_{i}\) the appropriate parameter space and priors: the differences remain hidden in the definition of \(Z_i^x\).

References

[1] M. Lattanzi, M. Gerbino, Front. Phys. 5, 70 (2018). https://doi.org/10.3389/fphy.2017.00070

[2] P.F. De Salas, S. Gariazzo, O. Mena, C.A. Ternes, M. Tórtola, Front. Astron. Space Sci. 5, 36 (2018). https://doi.org/10.3389/fspas.2018.00036

[3] F. Capozzi, E. Lisi, A. Marrone, A. Palazzo, Prog. Part. Nucl. Phys. 102, 48 (2018). https://doi.org/10.1016/j.ppnp.2018.05.005

[4] P.F. de Salas, D.V. Forero, C.A. Ternes, M. Tórtola, J.W.F. Valle, Phys. Lett. B 782, 633 (2018). https://doi.org/10.1016/j.physletb.2018.06.019

[5] I. Esteban, M. Gonzalez-Garcia, A. Hernandez-Cabezudo, M. Maltoni, T. Schwetz, JHEP 1901, 106 (2019). https://doi.org/10.1007/JHEP01(2019)106

[6] M. Aker et al., Phys. Rev. Lett. 123, 221802 (2019). https://doi.org/10.1103/PhysRevLett.123.221802

[7] N. Aghanim et al., arXiv:1807.06209 (2018)

[8] S. Wang, Y.F. Wang, D.M. Xia, Chin. Phys. C 42, 065103 (2018). https://doi.org/10.1088/1674-1137/42/6/065103

[9] S. Wang, Y.F. Wang, D.M. Xia, X. Zhang, Phys. Rev. D 94, 083519 (2016). https://doi.org/10.1103/PhysRevD.94.083519

[10] P. Astone, G. D’Agostini, arXiv:hep-ex/9909047 (1999)

[11] G. D’Agostini, in Workshop on Confidence Limits, CERN, Geneva, Switzerland, 17–18 Jan 2000: Proceedings (2000), pp. 3–27

[12] G. D’Agostini, Bayesian Reasoning in Data Analysis (World Scientific, Singapore, 2003). https://doi.org/10.1142/5262

[13] R. Trotta, Contemp. Phys. 49, 71 (2008). https://doi.org/10.1080/00107510802066753

[14] G. D’Agostini, G. Degrassi, Eur. Phys. J. C 10, 663 (1999). https://doi.org/10.1007/s100529900171

[15] K. Eitel, New J. Phys. 2, 1 (2000). https://doi.org/10.1088/1367-2630/2/1/301

[16] P. Abreu et al., Eur. Phys. J. C 11, 383 (1999). https://doi.org/10.1007/s100529900190

[17] J. Breitweg et al., Eur. Phys. J. C 14, 239 (2000)

[18] P.A.R. Ade et al., Astron. Astrophys. 566, A54 (2014). https://doi.org/10.1051/0004-6361/201323003

[19] N. Aghanim et al., arXiv:1907.12875 (2019)

[20] N. Aghanim et al., arXiv:1807.06210 (2018)

[21] S. Alam et al., Mon. Not. R. Astron. Soc. 470, 2617 (2017). https://doi.org/10.1093/mnras/stx721

[22] F. Beutler et al., Mon. Not. R. Astron. Soc. 464, 3409 (2017). https://doi.org/10.1093/mnras/stw2373

[23] A.J. Ross et al., Mon. Not. R. Astron. Soc. 464, 1168 (2017). https://doi.org/10.1093/mnras/stw2372

[24] M. Vargas-Magaña et al., Mon. Not. R. Astron. Soc. 477, 1153 (2018). https://doi.org/10.1093/mnras/sty571

[25] F. Beutler, C. Blake, M. Colless, D.H. Jones, L. Staveley-Smith, L. Campbell, Q. Parker, W. Saunders, F. Watson, Mon. Not. R. Astron. Soc. 416, 3017 (2011). https://doi.org/10.1111/j.1365-2966.2011.19250.x

[26] A.J. Ross, L. Samushia, C. Howlett, W.J. Percival, A. Burden, M. Manera, Mon. Not. R. Astron. Soc. 449, 835 (2015). https://doi.org/10.1093/mnras/stv154

[27] S. Gariazzo, O. Mena, Phys. Rev. D 99, 021301 (2019). https://doi.org/10.1103/PhysRevD.99.021301

[28] W.J. Handley, M.P. Hobson, A.N. Lasenby, Mon. Not. R. Astron. Soc. 453, 4384 (2015). https://doi.org/10.1093/mnras/stv1911

[29] R. Adam et al., Astron. Astrophys. 594, A1 (2016). https://doi.org/10.1051/0004-6361/201527101

[30] P.A.R. Ade et al., Astron. Astrophys. 594, A13 (2016). https://doi.org/10.1051/0004-6361/201525830

[31] P.A.R. Ade et al., Astron. Astrophys. 594, A15 (2016). https://doi.org/10.1051/0004-6361/201525941

[32] L. Anderson et al., Mon. Not. R. Astron. Soc. 441, 24 (2014). https://doi.org/10.1093/mnras/stu523

Acknowledgements

The author receives support from the European Union’s Horizon 2020 research and innovation programme under the Marie Skłodowska-Curie individual grant agreement no. 796941.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

Funded by SCOAP3

About this article

Cite this article

Gariazzo, S. Constraining power of open likelihoods, made prior-independent. Eur. Phys. J. C 80, 552 (2020). https://doi.org/10.1140/epjc/s10052-020-8126-0
