Abstract

The ‘optimal’ factorization scale \(\mu_0\) is calculated for open heavy quark production. We find that the optimal value is \(\mu_F=\mu_0\simeq 0.85\sqrt{p_T^2+m_Q^2}\), a choice which allows us to resum the double-logarithmic \((\alpha_s\ln\mu_F^2\ln(1/x))^n\) corrections (enhanced at LHC energies by large values of \(\ln(1/x)\)) and to move them into the incoming parton distributions, PDF\((x,\mu_0^2)\). Besides this result for the single inclusive cross section (corresponding to an observed heavy quark of transverse momentum \(p_T\)), we also determine the scale for processes where the acoplanarity can be measured, that is, events where the azimuthal angle between the quark and the antiquark can be determined experimentally. Moreover, we discuss the important role played by the \(2\rightarrow 2\) subprocesses (\(gg\rightarrow Q\bar{Q}\)) at NLO and higher orders. In summary, we achieve better stability of the QCD calculations, so that the data on \(c\bar{c}\) and \(b\bar{b}\) production can be used to further constrain the gluon distribution in the small-x, relatively low-scale domain, where the uncertainties of the global analyses are at present large.


1 Introduction

The present global PDF analyses (e.g. NNPDF3.0 [1], MMHT2014 [2], CT14 [3]) find a large uncertainty in the low x behaviour of the gluon distribution, owing to a lack of appropriate very low x data, particularly at low scales. However, measurements of open charm and open beauty production in the forward direction have recently been presented by the LHCb collaboration [4,5,6,7]; moreover, the ATLAS collaboration has measured open charm production in the central rapidity region [8]. These data sample the gluon distribution at rather low x, namely in the domain \(10^{-5}\lesssim x\lesssim 10^{-4}\). A discussion of the data in terms of existing global PDFs has been presented in [9, 10], and they have been incorporated in a fit with the HERA deep inelastic data in [11].

Ideally, we would like data in which both the heavy quark and the heavy antiquark are measured since, when only one quark (one heavy hadron) is observed, the value of x that is probed is smeared out over an order of magnitude by the unknown momentum of the unobserved quark in the \(Q\bar{Q}\) pair [9, 12], where \(Q\equiv c,b\); see e.g. Fig. 1 in [9]. Nevertheless, even measurements of the inclusive cross section of one heavy quark can be used to check and further constrain the existing PDFs.
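To make the smearing concrete, the sketch below uses standard LO \(2\rightarrow 2\) kinematics, in which both heavy quarks have the same transverse mass \(m_T\) and the parton momentum fractions are \(x_{1,2}=(m_T/\sqrt{s})(e^{\pm y_Q}+e^{\pm y_{\bar{Q}}})\). It is our own illustration, not a calculation taken from [9]; the charm mass, \(p_T\), collider energy and rapidities are assumed inputs chosen only for illustration.

```python
import math


def lo_momentum_fractions(m_t, y_q, y_qbar, sqrt_s):
    """LO gg -> Q Qbar: parton momentum fractions (x1, x2) when both heavy
    quarks have the same transverse mass m_t (true at LO, where they are
    back-to-back in the transverse plane)."""
    scale = m_t / sqrt_s
    x1 = scale * (math.exp(y_q) + math.exp(y_qbar))
    x2 = scale * (math.exp(-y_q) + math.exp(-y_qbar))
    return x1, x2


# Illustrative charm kinematics (assumed): m_c = 1.3 GeV, p_T = 3 GeV,
# sqrt(s) = 13 TeV, observed quark at rapidity 3.
m_t = math.hypot(1.3, 3.0)  # m_T = sqrt(m_Q^2 + p_T^2)
for y_unobserved in (1.0, 2.0, 3.0, 4.0, 5.0):
    x1, x2 = lo_momentum_fractions(m_t, 3.0, y_unobserved, 13000.0)
    print(f"y_Qbar = {y_unobserved:.1f}: x1 = {x1:.2e}, x2 = {x2:.2e}")
```

For these inputs the smaller momentum fraction \(x_2\) moves from about \(1\times 10^{-4}\) (unobserved antiquark at \(y=1\)) to about \(1.4\times 10^{-5}\) (at \(y=5\)), a spread of roughly a factor of 7 for a single measured quark.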

Another problem, which was emphasized in [10], is that the QCD prediction at NLO depends strongly on the factorization scale, \(\mu_F\), assumed in the calculation. We expect that the major source of this strong \(\mu_F\) dependence is that, in the DGLAP evolution of low x PDFs, the probability of emitting a new gluon is strongly enhanced by the large value of \(\ln(1/x)\). Indeed, the mean number of gluons in the interval \(\Delta\ln\mu_F^2\) is [13]

\[ \langle n\rangle \simeq \frac{\alpha_s N_C}{\pi}\,\ln(1/x)\,\Delta\ln\mu_F^2, \qquad N_C=3, \tag{1} \]

leading to a value of \(\langle n \rangle \) up to about 8, for the case \(\ln (1/x)\sim 8\) with the usual \(\mu _F\) scale variation interval from \(\mu _F/2\) to \(2\mu _F\). In contrast, the NLO coefficient function allows for the emission of only one gluon. Therefore we cannot expect compensation between the contributions coming from the PDF and the coefficient function as we vary the scale \(\mu _F\). It was shown in [14,15,16] that this strong double-logarithmic part of the scale dependence can be successfully resummed by choosing an appropriate scale, \(\mu _0\), in the PDF convoluted with the LO hard matrix element, which in our case is \(\mathcal{M}(gg\rightarrow Q{\bar{Q}})\).
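As a rough check of the estimate above, the snippet below evaluates the double-logarithmic expression \(\langle n\rangle\simeq(\alpha_s N_C/\pi)\ln(1/x)\,\Delta\ln\mu_F^2\), which we take to be the standard leading DL form. The coupling \(\alpha_s=0.35\) is an assumed representative low-scale value, not a number taken from the text, and \(\Delta\ln\mu_F^2=\ln 16\) corresponds to varying \(\mu_F\) from \(\mu_F/2\) to \(2\mu_F\).

```python
import math

N_C = 3  # number of colours


def mean_gluon_number(alpha_s, ln_inv_x, delta_ln_mu2):
    """Double-log estimate <n> ~ (alpha_s N_C / pi) ln(1/x) Delta ln mu_F^2."""
    return alpha_s * N_C / math.pi * ln_inv_x * delta_ln_mu2


# Varying mu_F from mu_F/2 to 2 mu_F spans Delta ln mu_F^2 = ln(4^2) = ln 16.
delta = math.log((2.0 / 0.5) ** 2)
n = mean_gluon_number(alpha_s=0.35, ln_inv_x=8.0, delta_ln_mu2=delta)
print(f"<n> ~ {n:.1f}")
```

With these inputs \(\langle n\rangle\approx 7.4\); a slightly larger coupling gives the value of about 8 quoted above.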

The outline of the paper is as follows. In Sect. 2 we recall the method of performing the resummation to determine the optimal scale \(\mu_0\). In Sect. 3 we justify choosing the renormalization scale equal to the factorization scale. Then in Sect. 4.1 we use the procedure discussed in Sect. 2 to resum the \(\ln(1/x)\) terms and so determine the optimum factorization scale, \(\mu_0\). Unfortunately, for heavy \(Q\bar{Q}\) production (unlike the Drell–Yan process) a large sensitivity to the choice of scale remains. In Sect. 4.2 we identify the source of the problem as the important \(2\rightarrow 2\) (that is, \(gg\rightarrow Q\bar{Q}\)) diagrams at NLO and higher orders. We argue that it is possible to resum these diagrams as well, and we then find that the scale sensitivity is reduced. It would be advantageous if both heavy mesons (arising from Q and \(\bar{Q}\)) could be measured experimentally but, at present, the statistics are limited. A possibility to circumvent this problem is discussed in Sect. 5. In Sect. 6 we return to open single inclusive \(c\bar{c}\) and \(b\bar{b}\) production, compare the QCD predictions obtained with the optimal scale with LHC data, and make an observation about the gluon PDF at low x. In Sect. 7 we present our conclusions.

2 Way to choose the optimum factorization scale

Here we recall the procedure proposed in [14,15,16], which reduces the sensitivity to the choice of factorization scale by resumming the enhanced double-logarithmic contributions, using knowledge of the NLO contribution. The cross section for open heavy quark production at LO + NLO at factorization scale \(\mu_f\) may be expressed in the form (Footnote 1)

\[ \sigma^{(0)+(1)}(\mu_f) = \mathrm{PDF}(\mu_f)\otimes\left(C^{(0)}+\alpha_s C^{(1)}(\mu_f)\right)\otimes\mathrm{PDF}(\mu_f), \tag{2} \]

where the coefficient function \(C^{(0)}\) does not depend on the factorization scale, while the \(\mu _f\) dependence of the NLO coefficient function arises since we have to subtract from the NLO diagrams the part already generated by LO evolution.

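We are free to evaluate the LO contribution at a different scale \(\mu_F\), since the resulting effect can be compensated by changes in the NLO coefficient function, which then also becomes dependent on \(\mu_F\). In this way Eq. (2) becomes

\[ \sigma^{(0)+(1)}(\mu_f) = \mathrm{PDF}(\mu_F)\otimes C^{(0)}\otimes\mathrm{PDF}(\mu_F) + \mathrm{PDF}(\mu_f)\otimes\alpha_s C^{(1)}_{\mathrm{rem}}(\mu_F)\otimes\mathrm{PDF}(\mu_f). \tag{3} \]
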
Here the first \(\alpha_s\) correction, \(C^{(1)}_{\mathrm{rem}}(\mu_F)\equiv C^{(1)}(\mu_f=\mu_F)\), is now calculated at the scale \(\mu_F\) used for the LO term, and not at the scale \(\mu_f\) corresponding to the cross section on the left-hand side of the formula. Since it is the correction which remains after the factorization scale in the LO part is fixed, we denote it \(C_{\mathrm{rem}}^{(1)}(\mu_F)\). Note that although the first and second terms on the right-hand side each depend on \(\mu_F\), their sum does not (up to \(\mathcal{O}(\alpha_s^4)\) corrections), and it is equal to the full LO+NLO cross section calculated at the factorization scale \(\mu_f\).

Originally, the NLO coefficient functions \(C^{(1)}\) are calculated from Feynman diagrams which are independent of the factorization scale. How, then, does the \(\mu_F\) dependence of \(C^{(1)}_{\mathrm{rem}}\) in (3) arise? It occurs because we must subtract from \(C^{(1)}\) the \(\alpha_s\) term which was already included in the LO contribution. Since the LO contribution was calculated up to some scale \(\mu_F\), the value of \(C^{(1)}\) after the subtraction depends on the value of \(\mu_F\) chosen for the LO component. Changing the scale of the LO contribution from \(\mu_f\) to \(\mu_F\) therefore also means changing the factorization scale which enters the coefficient function \(C^{(1)}\) from \(\mu_f\) to \(\mu_F\). The effect of this scale change is driven by LO DGLAP evolution, and is given by

\[ \alpha_s C^{(1)}_{\mathrm{rem}}(\mu_F) = \alpha_s C^{(1)}(\mu_f) - \frac{\alpha_s}{2\pi}\ln\left(\frac{\mu_F^2}{\mu_f^2}\right)\left(P_{\mathrm{left}}\otimes C^{(0)} + C^{(0)}\otimes P_{\mathrm{right}}\right), \tag{4} \]

where \(P_\mathrm{left}\) and \(P_\mathrm{right}\) denote DGLAP splitting functions acting on the PDFs to the left and right, respectively. That is, by choosing to evaluate \(\sigma^{(0)}\) at scale \(\mu_F\) we have moved the part of the NLO (i.e. \(\alpha_s\)) corrections given by the last term of (4) from the NLO to the LO part of the cross section. In this way \(C^{(1)}\) becomes the remaining \(\mu_F\)-dependent coefficient function \(C^{(1)}_{\mathrm{rem}}(\mu_F)\) of (3). The idea is to choose a scale \(\mu_F=\mu_0\) such that the remaining NLO term does not contain the double-logarithmic \((\alpha_s\ln\mu_F^2\ln(1/x))^n\) contributions. It is impossible to nullify the whole NLO contribution, since the function \(C^{(1)}(\mu)\) also depends on other variables; in particular, it depends on the squared invariant mass, \(\hat{s}\), of the system produced by the hard matrix element. We can, however, choose a value of \(\mu\) which makes \(C^{(1)}(\mu,\hat{s})=0\) in the limit \(\hat{s}\gg m_Q^2\). Recall that the \(\ln(1/x)\) factor arises at NLO from the convolution of the large-\(\hat{s}\) asymptotics of the hard subprocess cross section with incoming low-x parton distributions satisfying

\[ xg(x)\sim\mathrm{const}, \qquad xq(x)\sim\mathrm{const}. \tag{5} \]

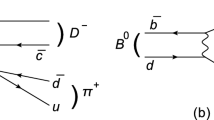

Heavy quark production at (a) LO via the \(gg\rightarrow Q{\bar{Q}}\) subprocess, and (b) via the \(gq\rightarrow Q{\bar{Q}}q\) subprocess. The diagrams with s-channel gluons \((g^*\rightarrow Q{\bar{Q}})\) are not shown for simplicity. Moreover, only the PDF below the hard matrix element, \(\mathcal{M}\), is shown. Note that the double-logarithmic (DL) integral in the NLO matrix element is exactly the same as in the first gluon cell below the LO matrix element. In both cases the ultraviolet convergence is provided by the \(k_t\) dependence of the respective matrix element. Therefore it is possible to move the large DL contribution from the coefficient function \(C^{(1)}\) of the NLO term, diagram (b), to the PDF term in the LO diagram (a) by choosing an appropriate value of \(\mu _F\), and in this way to resum all the higher-order DL contributions in the PDFs\((\mu _F)\) of the LO diagram (a)

At NLO the change from \(\mu_f\) to \(\mu_F\) is immaterial: Eq. (3) is an identity (it only changes the higher-order terms). However, in this way we simultaneously resum all the higher-order double-logarithmic contributions in the PDFs\((\mu_F)\) of the LO part. As a result we are able to suppress the scale dependence caused by large values of \(\ln(1/x)\).
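The statement that Eq. (3) only reshuffles higher-order terms can be checked in a toy, single-channel model. The Python sketch below is purely illustrative: the ‘PDF’ is a scalar \(f(\mu)=f_0(\mu^2/Q_0^2)^{\alpha_s P/2\pi}\), which solves \(\mathrm{d}f/\mathrm{d}\ln\mu^2=(\alpha_s/2\pi)Pf\) at fixed coupling, and all numerical values (`P`, `C0`, `C1`, `F0`, `Q0`) are hypothetical placeholders. The remaining coefficient is built as \(C^{(1)}_{\mathrm{rem}}(\mu_F)=C^{(1)}(\mu_f)-\frac{1}{2\pi}\ln(\mu_F^2/\mu_f^2)\,(P\,C^{(0)}+C^{(0)}P)\), the scalar analogue of the subtraction described in this section.

```python
import math

# Toy single-channel model; every number here is a hypothetical placeholder.
P = 2.0            # scalar stand-in for the splitting-function convolution
C0, C1 = 1.0, 0.5  # LO coefficient and NLO coefficient at the scale mu_f
F0, Q0 = 1.0, 1.0  # 'PDF' normalisation and reference scale (GeV)


def pdf(mu, alpha_s):
    """Solves d f / d ln mu^2 = (alpha_s / 2 pi) P f at fixed coupling."""
    return F0 * (mu**2 / Q0**2) ** (alpha_s * P / (2 * math.pi))


def sigma_eq2(mu_f, alpha_s):
    """LO + NLO with both 'PDFs' at mu_f (structure of Eq. (2))."""
    return pdf(mu_f, alpha_s) ** 2 * (C0 + alpha_s * C1)


def sigma_eq3(mu_f, mu_F, alpha_s):
    """LO at mu_F plus the remaining NLO term at mu_f (structure of Eq. (3))."""
    log_ratio = math.log(mu_F**2 / mu_f**2)
    # Remove the O(alpha_s) piece already generated by evolving the LO term
    # from mu_f to mu_F; P acts on the left and on the right, hence 2 * P.
    c1_rem = C1 - log_ratio / (2 * math.pi) * 2 * P * C0
    return pdf(mu_F, alpha_s) ** 2 * C0 + pdf(mu_f, alpha_s) ** 2 * alpha_s * c1_rem


for a_s in (0.2, 0.1):
    diff = sigma_eq3(1.0, 2.0, a_s) - sigma_eq2(1.0, a_s)
    print(f"alpha_s = {a_s}: Eq.(3) - Eq.(2) = {diff:.3e}")
```

The difference between the two forms drops by roughly a factor of four when \(\alpha_s\) is halved: it is \(\mathcal{O}(\alpha_s^2)\) relative to LO, i.e. beyond NLO accuracy, while the resummed PDFs\((\mu_F)\) carry the higher-order terms.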

Thus the choice \(\mu=\mu_0\), which nullifies \(C^{(1)}\) at large \(\hat{s}\gg m_Q^2\), removes the double-logarithmic (DL) \(\alpha_s\ln\mu_F^2\ln(1/x)\) contribution from the NLO correction by resumming the series of double-logarithmic terms in the PDFs, which are then convoluted with the LO coefficient functions. To find the appropriate value of \(\mu_0\) we must choose the NLO subprocess driven by the same ladder-type diagrams (in the axial gauge) as those that describe LO DGLAP evolution. The appropriate subprocess is gluon–light quark fusion, \(gq\rightarrow Q\bar{Q}q\). In the high-energy limit, where the subprocess energy satisfies \(\hat{s}(gq)\gg m_Q^2\), the cross section of this subprocess contains double-logarithmic terms \(\ln(\mu_F^2/\mu_0^2)\ln(\hat{s}/m_Q^2)\). The subprocess \(gq\rightarrow Q\bar{Q}q\) is sketched in Fig. 1b, which shows pictorially how the enhanced double-logarithmic terms are transferred to the PDFs in the LO term.
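Operationally, finding \(\mu_0\) is a one-dimensional root search: evaluate the large-\(\hat{s}\) limit of the NLO coefficient at a trial scale and adjust the scale until it vanishes. The sketch below does this by bisection in \(\ln\mu\). The function `toy_coefficient` is a hypothetical stand-in with the double-log structure \(\ln(\mu^2/\mu_0^2)\ln(\hat{s}/m_Q^2)\), with its zero planted at \(0.85\,m_T\) only so that the solver can be checked; in the actual calculation this input would come from the \(gq\rightarrow Q\bar{Q}q\) matrix element.

```python
import math


def find_optimal_scale(c_asymptotic, mu_lo, mu_hi, tol=1e-10, max_iter=200):
    """Bisection in ln(mu) for the scale mu_0 at which c_asymptotic(mu) = 0.

    c_asymptotic(mu) should return the large-s_hat limit of the NLO
    coefficient evaluated with factorization scale mu.
    """
    f_lo, f_hi = c_asymptotic(mu_lo), c_asymptotic(mu_hi)
    if f_lo * f_hi > 0:
        raise ValueError("root is not bracketed by [mu_lo, mu_hi]")
    lo, hi = math.log(mu_lo), math.log(mu_hi)
    for _ in range(max_iter):
        mid = 0.5 * (lo + hi)
        f_mid = c_asymptotic(math.exp(mid))
        if f_lo * f_mid <= 0:   # sign change in [lo, mid]
            hi = mid
        else:                   # sign change in [mid, hi]
            lo, f_lo = mid, f_mid
        if hi - lo < tol:
            break
    return math.exp(0.5 * (lo + hi))


M_T = 5.0  # GeV, arbitrary


def toy_coefficient(mu, ln_s_over_m2=6.0):
    """Hypothetical DL-shaped coefficient, zero at mu = 0.85 * M_T by construction."""
    return math.log(mu**2 / (0.85 * M_T) ** 2) * ln_s_over_m2


mu0 = find_optimal_scale(toy_coefficient, 0.1 * M_T, 10.0 * M_T)
print(f"mu_0 / m_T = {mu0 / M_T:.3f}")
```

Bisecting in \(\ln\mu\) rather than \(\mu\) matches the logarithmic way in which the scale enters the coefficient.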

2.1 Extension to higher orders

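We note that, in general, this decomposition can be continued to higher orders. For example, if the NNLO contribution is known, then we will have three scales, \(\mu_f\), \(\mu_F=\mu_0\) and \(\mu_1\):

\[ \sigma(\mu_f) = \mathrm{PDF}(\mu_F)\otimes C^{(0)}\otimes\mathrm{PDF}(\mu_F) + \mathrm{PDF}(\mu_1)\otimes\alpha_s C^{(1)}_{\mathrm{rem}}(\mu_F)\otimes\mathrm{PDF}(\mu_1) + \mathrm{PDF}(\mu_f)\otimes\alpha_s^2 C^{(2)}_{\mathrm{rem}}(\mu_F,\mu_1)\otimes\mathrm{PDF}(\mu_f), \tag{6} \]
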
where the scale \(\mu _1\) is chosen to nullify the final term in the small x limit.

In fact, in Sect. 4.2 we will use this equation to include the important \(2\rightarrow 2\) (that is, \(gg\rightarrow Q\bar{Q}\)) subprocess at NLO and higher orders. We will give reasons why the scale choice \(\mu_1=\mu_0\) should be a good approximation for the resummation of these higher-order \(2\rightarrow 2\) contributions.

2.2 Comparison with \(k_t\) factorization

The approach we have introduced is based on collinear factorization. However, it is actually close in spirit to the \(k_t\)-factorization method, in which the value of the factorization scale is driven by the structure of the integral over \(k_t\); see Fig. 2. In the \(k_t\)-factorization approach this \(k_t\) integral is written explicitly, while the parton distribution unintegrated over \(k_t\) is generated by the last step of the DGLAP evolution, similar to the prescription proposed in Refs. [17, 18]. Then, using the known NLO result, we account for the exact \(k_t\) integration in the last cell adjacent to the LO hard matrix element. This hard matrix element, \(\mathcal{M}\), provides the convergence of the integral at large \(k_t\). In this way it puts an effective upper limit on the \(k_t\) integral, which plays the role of an appropriate factorization scale.

Fig. 2 The diagram for \(AA^*\), where A is the amplitude for the subprocess \(gq\rightarrow Q\bar{Q}q\) shown in Fig. 1b. In the \(k_t\)-factorization approach \(k_t\) is integrated over, and the effective upper limit of the convergent integral essentially plays the role of the appropriate factorization scale.

3 The renormalization scale \(\mu _R\)

Besides the factorization scale, the QCD prediction truncated at NLO depends strongly on the renormalization scale \(\mu_R\), since the LO term is already proportional to \(\alpha_s^2(\mu_R)\). Let us discuss the possible choice of \(\mu_R\). First, it is reasonable to have \(\mu_R\gtrsim\mu_F\), since we expect all the contributions with virtualities less than \(\mu_F\) to be included in the PDFs, while those with virtualities larger than \(\mu_F\) are assigned to the hard matrix element. This is in line with the fact that the scale of the QCD coupling increases monotonically during the DGLAP evolution, so the coupling responsible for heavy quark production should have a scale \(\mu_R\) equal to, or larger than, that in the evolution.

Another argument is based on the BLM prescription [19], which says that all the contributions proportional to \(\beta_0=11-\frac{2}{3}n_f\) should be absorbed into \(\alpha_s\) by choosing an appropriate scale \(\mu_R\). A good way to trace the \(\beta_0\) contribution is to calculate the LO term generated by a new type of light quark, so \(n_f\rightarrow n_f+1\). Note that the new quark-loop insertion appears twice in the calculation. The part with scales \(\mu<\mu_F\) is generated by the virtual (\(\propto\delta(1-z)\)) component of the LO splitting during DGLAP evolution, while the part with \(\mu>\mu_R\) accounts for the running \(\alpha_s\) behaviour obtained after the regularization of the ultraviolet divergence. To avoid both missing part of this contribution and double counting it, we take the renormalization scale \(\mu_R=\mu_F\). This argument was made in more detail in [20] for the QED case.

Of course, these are only arguments for expecting \(\mu_R=\mu_F\), not a proof. Formally we can only say that we expect \(\mu_R\) to be of the order of \(\mu_F\), so there could be a further uncertainty in the predictions due to the possibility that \(\mu_R\neq\mu_F\). Nevertheless, based on these arguments, below we study the factorization scale dependence using the renormalization scale \(\mu_R=\mu_0\).

We emphasize (see also [10]) that the renormalization scale dependence affects only the normalization of the cross section, not its energy behaviour. It cancels in the ratio of the cross sections measured at LHC energies of 7 (or 8 or 5) TeV to that at 13 TeV, or in the ratio of the cross sections obtained at different rapidities. Thus these ratios probe the low x dependence of the gluons at scale \(\mu_F=\mu_0\) essentially without any uncertainty due to possible variations of the \(\mu_R\) scale.
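The sketch below illustrates both points with one-loop running in the normalization used in this section, \(\alpha_s(\mu)=\alpha_s(M_Z)/[1+\alpha_s(M_Z)\beta_0\ln(\mu^2/M_Z^2)/4\pi]\) with \(\beta_0=11-\frac{2}{3}n_f\). The reference value \(\alpha_s(M_Z)=0.118\), the fixed \(n_f=5\) (no flavour thresholds) and the energy-dependent factors are assumptions for illustration. The toy cross section builds in the assumption stated above, namely that \(\mu_R\) enters only through the overall \(\alpha_s^2(\mu_R)\) normalization.

```python
import math

ALPHA_S_MZ, M_Z = 0.118, 91.1876  # assumed reference values (GeV for M_Z)


def alpha_s_one_loop(mu, n_f=5):
    """One-loop running with beta_0 = 11 - 2 n_f / 3 (fixed n_f, no thresholds)."""
    beta0 = 11.0 - 2.0 * n_f / 3.0
    return ALPHA_S_MZ / (1.0 + ALPHA_S_MZ * beta0 / (4 * math.pi) * math.log(mu**2 / M_Z**2))


def toy_lo_cross_section(shape_factor, mu_r):
    """Toy LO: alpha_s^2(mu_R) times a mu_R-independent kinematic/PDF factor."""
    return alpha_s_one_loop(mu_r) ** 2 * shape_factor


mu0 = 0.85 * math.hypot(1.3, 3.0)  # optimal scale for charm at p_T = 3 GeV
shape_7tev, shape_13tev = 0.6, 1.0  # hypothetical energy-dependent factors
for mu_r in (0.5 * mu0, mu0, 2.0 * mu0):
    s7 = toy_lo_cross_section(shape_7tev, mu_r)
    s13 = toy_lo_cross_section(shape_13tev, mu_r)
    print(f"mu_R = {mu_r:.2f} GeV: sigma_13 = {s13:.4f}, ratio 7/13 = {s7 / s13:.3f}")
```

Varying \(\mu_R\) between \(\mu_0/2\) and \(2\mu_0\) changes the toy LO normalization by more than a factor of two, while the 7 TeV/13 TeV ratio stays fixed.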

4 Sensitivity of predictions to the factorization scale

Here we implement the proposals of Eqs. (3) and (6) in an attempt to reduce the factorization scale dependence of the QCD predictions for \(Q\bar{Q}\) production in high-energy \(pp\) collisions.

4.1 The optimum scale to resum \(\ln(1/x)\) terms

We use the formulae from Appendix B of [21] to calculate the \(gq\rightarrow Q\bar{Q}q\) matrix element in the high-energy limit, in order to find a scale

\[ \mu_F = \mu_0 = F\sqrt{p_T^2+m_Q^2} = F\,m_T \tag{7} \]

that nullifies the double-logarithmic NLO contribution; that is, to find a scale \(\mu_0\) at which the DGLAP-induced contribution \((P_\mathrm{left}\otimes C^{(0)}+C^{(0)}\otimes P_\mathrm{right})\) replaces the NLO correction calculated explicitly. Note, however, that in calculating the NLO contribution of the diagram in Fig. 1b, we have integrated over the momenta of the other particles; in particular, over the transverse momentum, \(-k_t\), of the light quark. Since we are going to consider the upper (heavy quark) box in Fig. 1b as the ‘hard’ subprocess, and would like to keep the DGLAP \(k_t\) ordering, we impose an additional cut \(|k_t|<\min\{m_{TQ},m_{T\bar{Q}}\}\); otherwise the lower part of the diagram (which may be either \(qQ\) or \(q\bar{Q}\) scattering) could have \(k_t>m_T\), and would then have to be treated as the hard subprocess. Here \(m_T=\sqrt{m_Q^2+p_T^2}\).
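The \(k_t\)-ordering condition can be stated as a simple predicate on each NLO configuration. The helper below is hypothetical (in the calculation itself the cut is applied inside the phase-space integration); it takes the magnitude of the light-quark transverse momentum and the heavy-quark transverse momenta as inputs.

```python
import math


def transverse_mass(m_q, p_t):
    """m_T = sqrt(m_Q^2 + p_T^2)."""
    return math.hypot(m_q, p_t)


def passes_kt_ordering_cut(k_t, m_q, p_t_quark, p_t_antiquark):
    """Hypothetical helper: keep a gq -> Q Qbar q configuration only if the
    light quark's transverse momentum magnitude is below min(m_TQ, m_TQbar),
    so that the Q Qbar box remains the hard subprocess."""
    m_t_min = min(transverse_mass(m_q, p_t_quark),
                  transverse_mass(m_q, p_t_antiquark))
    return abs(k_t) < m_t_min


# Charm example: m_T of the softer heavy quark is sqrt(1.3^2 + 1.0^2) ~ 1.64 GeV.
print(passes_kt_ordering_cut(1.0, 1.3, 3.0, 1.0))  # True
print(passes_kt_ordering_cut(2.0, 1.3, 3.0, 1.0))  # False
```

Using the smaller of the two transverse masses is the conservative choice: the light quark must be softer than either heavy quark for the heavy-quark box to count as the hardest part of the ladder.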

The values that we find for the ‘optimal’ scale \(\mu_0\) are presented in Fig. 3 as a function of the ratio \(p_T/m_Q\), where \(p_T\) is the transverse momentum of the observed heavy quark. It turns out that the values of the optimal scale are close to the conventionally used value \(\mu_F^2=m_T^2\equiv p_T^2+m_Q^2\), that is, \(F=1\). However, we now have a physics justification for the scale choice shown in Fig. 3, which to a good approximation is \(\mu_0\simeq 0.85m_T\), that is, \(F\simeq 0.85\).

Now that we have the value of \(\mu_0\), we can study the dependence of the QCD predictions for \(c\bar{c}\) and \(b\bar{b}\) production on the factorization scale \(\mu_f\). The results are shown in Fig. 4 for a Q quark of pseudorapidity \(\eta=3\), typical of the LHCb experiment. The curves for the first two procedures mentioned in the caption of Fig. 4 (Footnote 2) are obtained by varying \(\mu_f\) in the range \((m_T/2, 2m_T)\); for procedure (ii) we use (3) with \(\mu_F\) (and \(\mu_R\)) set equal to \(\mu_0\). We use the CT14 [3] PDFs as an example of a recent set of partons with no negative gluon distributions, and take the corresponding heavy quark masses: \(m_c=1.3\) GeV and \(m_b=4.75\) GeV. Subroutines from the MCFM [22] and FONLL [23] programmes were used for the computations.

For simplicity we take \(F=0.85\), that is, \(\mu_0=0.85m_T\), and make predictions for three values of the factorization scale, \(\mu_f=(0.5,\ 1,\ 2)m_T\). The results are shown in Fig. 4 by the dashed red curves. We then repeat the cross section prediction using (2) with the conventional choice \(\mu_f=(0.5,\ 1,\ 2)m_T\), which gives the blue curves. Not surprisingly, since the optimum scale is close to the conventional choice \(m_T\), the scale uncertainties are comparable.
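For orientation, the scale values entering these predictions follow directly from \(m_T\). The sketch below tabulates \(\mu_0=0.85\,m_T\) and the residual variation \(\mu_f=(0.5,1,2)m_T\) for the charm and beauty masses quoted above; the \(p_T\) values are arbitrary examples.

```python
import math

MASSES = {"c": 1.3, "b": 4.75}  # GeV, the values used with CT14 in the text
F_OPT = 0.85                     # mu_0 ~= 0.85 m_T


def scale_choices(flavour, p_t):
    """Return m_T, the optimal scale mu_0 and the residual mu_f variation grid."""
    m_t = math.hypot(MASSES[flavour], p_t)
    return {
        "m_T": m_t,
        "mu_0": F_OPT * m_t,
        "mu_f": [k * m_t for k in (0.5, 1.0, 2.0)],
    }


for flavour in ("c", "b"):
    for p_t in (2.0, 5.0, 10.0):
        s = scale_choices(flavour, p_t)
        mu_f = ", ".join(f"{v:.2f}" for v in s["mu_f"])
        print(f"{flavour}: p_T = {p_t:4.1f} GeV  m_T = {s['m_T']:.2f}  "
              f"mu_0 = {s['mu_0']:.2f}  mu_f = ({mu_f}) GeV")
```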

Fig. 4 The scale dependence of the predictions for the cross section, \(m_T^4\,\mathrm{d}\sigma/\mathrm{d}\eta\,\mathrm{d}p_T^2\), for \(c\bar{c}\) and \(b\bar{b}\) production, using the NLO CT14 parton set. The plot shows the scale variation \(\mu_f=(2,1,0.5)m_T\) for three different procedures: (i) the conventional prediction (blue curves); (ii) resumming the \(\ln(1/x)\) contributions with \(\mu_F=\mu_0\simeq 0.85m_T\) in (3) (dashed red curves); (iii) in addition, resumming the \(2\rightarrow 2\) contributions with \(\mu_1=\mu_0\) in Eqs. (6, 8) (dotted red curves).

Unfortunately, the predicted cross section still depends rather strongly on the choice of \(\mu_f\). This is caused by the relatively low mass contribution coming mainly from the \(2\rightarrow 2\) (\(gg\rightarrow Q\bar{Q}\)) component of \(C^{(1)}\). This component does not contain a \(\ln(1/x)\) enhancement but, at our low scales, it is numerically large: it gives a contribution up to twice as large as the LO one. Moreover, being convoluted with low-x PDFs, which depend strongly on \(\mu_f\), it produces a large scale uncertainty.

4.2 The optimum scale to resum the higher-order \(2\rightarrow 2\) diagrams

In order to reduce the scale dependence it turns out to be important to fix the scale of the \(2\rightarrow 2\) NLO contribution, that is, to know the value of \(\mu_1\) in the \(2\rightarrow 2\) part of the second term on the right-hand side of Eq. (6). Strictly speaking, to do this we need the NNLO expression. At the moment there exists only a numerical NNLO result, for top-quark pair production; see [24] and references therein. Nevertheless, we can make some use of these calculations. As was demonstrated in [25] (see, for example, Fig. 6 of [25]), the corrections to the \(2\rightarrow 2\) NLO contributions are mainly of Sudakov origin (Footnote 3); these are ‘soft’ corrections corresponding to a relatively small momentum transferred through an additional gluon. Such corrections do not essentially change the original kinematics of the \(2\rightarrow 2\) subprocesses, nor the dependence of the corresponding ‘hard’ matrix element on the virtuality of the incoming parton.

Thus it seems reasonable to convolute these terms with the same PDFs as those used for the LO evaluation. This will provide the correct resummation of the higher-order DL terms, \((\alpha_s\ln\mu_F^2\ln(1/x))^n\) (with \(n=2,3,\ldots\)), inside the incoming parton distributions. Referring to (6), this means that the \(2\rightarrow 2\) part of the NLO coefficient function \(C^{(1)}\) should be convoluted with partons taken at the scale \(\mu_1=\mu_0\). In other words, we write the cross section as

\[ \sigma(\mu_f) = \mathrm{PDF}(\mu_F)\otimes\left(C^{(0)}+\alpha_s C^{(1)}_{(2\rightarrow 2)}(\mu_F)\right)\otimes\mathrm{PDF}(\mu_F) + \mathrm{PDF}(\mu_f)\otimes\alpha_s C^{(1)}_{(2\rightarrow 3)}(\mu_F)\otimes\mathrm{PDF}(\mu_f), \tag{8} \]

with \(\mu_F=\mu_0\) and \(\alpha_s(\mu_R)=\alpha_s(\mu_0)\), where we have divided the \(C^{(1)}\) correction into two terms, \(C^{(1)}=C^{(1)}_{(2\rightarrow 2)}+C^{(1)}_{(2\rightarrow 3)}\), with only the second term convoluted with PDFs at the residual factorization scale \(\mu_f\). The corresponding results, calculated from (8), are shown in Fig. 4 by the dotted red curves. The remaining \(\mu_f\) dependence is much reduced. We regard this observation as a strong argument in favour of using open charm or beauty data to constrain the low x partons at the scale \(\mu_F=\mu_0\simeq 0.85m_T\).
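Why moving the \(2\rightarrow 2\) term to the scale \(\mu_0\) helps can be seen in a toy model. In the sketch below the low-x ‘PDF’ is a steep power \(f(\mu)\propto\mu^{2\gamma}\) with a hypothetical \(\gamma=0.15\), the \(2\rightarrow 2\) NLO term is set to twice LO (the upper end of the size quoted in Sect. 4.1) and the \(2\rightarrow 3\) term to a placeholder value. Only the mechanism is meant to be illustrated, not the size of the reduction seen in Fig. 4.

```python
GAMMA = 0.15        # hypothetical effective low-x 'anomalous dimension' of the toy PDF
C0 = 1.0            # LO coefficient
NLO_22 = 2.0 * C0   # alpha_s C^(1)_(2->2): up to about twice LO
NLO_23 = 0.5 * C0   # alpha_s C^(1)_(2->3): hypothetical placeholder


def pdf(mu, q0=1.0):
    """Toy steeply rising 'PDF' at fixed small x."""
    return (mu / q0) ** (2 * GAMMA)


def sigma_eq3(mu_f, mu0):
    """Whole NLO term convoluted with 'PDFs' at the residual scale mu_f."""
    return pdf(mu0) ** 2 * C0 + pdf(mu_f) ** 2 * (NLO_22 + NLO_23)


def sigma_eq8(mu_f, mu0):
    """2->2 part moved to the LO scale mu_0; only the 2->3 part left at mu_f."""
    return pdf(mu0) ** 2 * (C0 + NLO_22) + pdf(mu_f) ** 2 * NLO_23


def relative_spread(sigma, m_t=3.0):
    """(max - min) / central value over mu_f = (0.5, 1, 2) m_T, with mu_0 = 0.85 m_T."""
    mu0 = 0.85 * m_t
    values = [sigma(k * m_t, mu0) for k in (0.5, 1.0, 2.0)]
    return (max(values) - min(values)) / values[1]


print(f"spread, all NLO at mu_f:   {relative_spread(sigma_eq3):.2f}")
print(f"spread, 2->2 part at mu_0: {relative_spread(sigma_eq8):.2f}")
```

For these inputs the relative spread drops from about 0.63 to about 0.13, simply because a smaller share of the cross section is convoluted with PDFs at the residual scale.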

Since the major contribution to \(c\bar{c}\) and \(b\bar{b}\) production comes from gluon–gluon fusion (see Fig. 5), including these data in global parton analyses will allow a better study of the low-x behaviour of the gluon, and hence should strongly reduce the present uncertainty in this region.

5 Azimuthal cut to reduce optimal scale for \(b{\bar{b}}\) events

In the case of open \(b\bar{b}\) production the optimal scale is rather large; typically \(\mu_0^2>30\) GeV\(^2\). On the other hand, the main uncertainties in the gluon PDF are observed at much lower scales, \(\sim\)2–4 GeV\(^2\). One way to reduce the scale at which the process probes the partons is to observe both heavy quarks (i.e. both the quark and the antiquark), and then to select the events where the transverse momentum of the pair is small. This proposal was discussed in [12] (and in [14, 15] for Drell–Yan pair production). Unfortunately, the transverse momenta of B mesons can only be measured for a few particular decay modes, and the product of the branching ratios for the two B mesons is small, so the available statistics are insufficient.

Another idea was proposed by Alexey Dzyuba (Footnote 4). As a rule, the B-meson decay vertex can be observed experimentally, so it is possible to measure the azimuthal angle, \(\phi\), between the two heavy mesons; that is, we may select \(B\bar{B}\) events with good coplanarity. In such events the transverse momenta of the incoming partons must be small, otherwise the coplanarity would be destroyed. In other words, for events with a small \(\Delta\phi=\pi-\phi\) we deal with lower scale partons. For example, in Table 1 we show the optimal scale \(\mu_0(\Delta\phi)\) calculated for events with \(\Delta\phi<\phi_0\), corresponding to the LHCb rapidity interval \(2<y<4.5\). As expected, for low \(\phi_0\) we have \(\mu_0\propto\phi_0\). For instance, for \(b\bar{b}\) production with \(\Delta\phi<10^\circ\) one can probe gluons at a rather low scale, namely \(\mu\simeq 1.5\) GeV.
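The coplanarity cut acts as a cut on the transverse momentum of the heavy-quark pair, which for a \(2\rightarrow 2\) hard process balances the transverse momenta of the incoming partons. For two quarks with equal \(|p_T|\) the pair momentum is \(q_T=2p_T\sin(\Delta\phi/2)\approx p_T\,\Delta\phi\), linear in \(\Delta\phi\) at small angles, which mirrors the \(\mu_0\propto\phi_0\) behaviour quoted above. The sketch below assumes equal transverse momenta and ignores the B-meson decay kinematics; the value \(p_T=5\) GeV is hypothetical.

```python
import math


def pair_transverse_momentum(p_t1, p_t2, delta_phi):
    """|p_T1 + p_T2| for two transverse momenta with azimuthal separation
    phi = pi - delta_phi (delta_phi = 0 means exactly back-to-back)."""
    phi = math.pi - delta_phi
    qx = p_t1 + p_t2 * math.cos(phi)
    qy = p_t2 * math.sin(phi)
    return math.hypot(qx, qy)


p_t = 5.0  # GeV; hypothetical, equal for the b and bbar
for deg in (5, 10, 20, 40):
    dphi = math.radians(deg)
    q_t = pair_transverse_momentum(p_t, p_t, dphi)
    print(f"dphi = {deg:2d} deg: q_T = {q_t:.2f} GeV "
          f"(small-angle estimate p_T * dphi = {p_t * dphi:.2f} GeV)")
```

Unequal transverse momenta only increase \(q_T\) at fixed \(\Delta\phi\), so the equal-\(p_T\) case gives the smallest pair momentum compatible with a given acoplanarity.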

Fig. 6 The QCD predictions for the cross section for heavy meson (\(D^+\), \(B^+\)) production compared with LHCb data [5, 7], as a function of the \(p_T\) of the heavy meson. In the upper plot the blue (red) curves and data points, taken at \(\sqrt{s}=13\) TeV, correspond to the \(D^+\) rapidity bin \(2<y<2.5\) (\(4<y<4.5\)); the lower plot corresponds to \(B^+\) rapidity in the interval \(4<y<4.5\) at a collider energy of \(\sqrt{s}=7\) TeV. CT14 NLO PDFs [3] are used. The optimal factorization scale is taken to be \(\mu_F=\mu_0=\mu_1=0.85m_T\), and the renormalization scale is \(\mu_R=\mu_F\); see Sect. 3.

6 Comparison with \(c{\bar{c}}\) and \(b{\bar{b}}\) data

Now that we have the optimal factorization scale, \(\mu_0\simeq 0.85m_T\), we can make an exploratory comparison with the existing LHC data for open single inclusive heavy-flavour production. To compare with the data we use subroutines from the MCFM and FONLL programmes [22, 23]. The QCD description of the present data in the low-x, low-\(\mu\) domain is shown in Fig. 6. As an example, we consider just the \(D^+\) (\(B^+\)) meson cross sections, using the quark-to-meson transition probabilities \(P(c\rightarrow D^+)=0.25\) (see, for example, [26, p. 208]) and \(P(b\rightarrow B^+)=0.4\) (see [27] and [28, p. 63]). We account for the fact that the D (B) meson momentum is less than that of the parent quark by assuming \(p_D\sim 0.75p_c\) and \(p_B\sim 0.9p_b\) (see [28, 29]). That is, we calculate the meson cross sections as

\[ \frac{\mathrm{d}\sigma(D^+)}{\mathrm{d}p_{T,D}} = 0.25\,\frac{\mathrm{d}\sigma(c)}{\mathrm{d}p_{T,c}}\bigg|_{p_{T,c}=p_{T,D}/0.75}\,\frac{1}{0.75}, \tag{9} \]

\[ \frac{\mathrm{d}\sigma(B^+)}{\mathrm{d}p_{T,B}} = 0.4\,\frac{\mathrm{d}\sigma(b)}{\mathrm{d}p_{T,b}}\bigg|_{p_{T,b}=p_{T,B}/0.9}\,\frac{1}{0.9}, \tag{10} \]

where the last factor (1/0.75 or 1/0.9) accounts for the ratio of the \(\mathrm{d}p_{T,D(B)}\) and \(\mathrm{d}p_{T,c(b)}\) intervals.
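The quark-to-meson conversion described above amounts to a rescaling of the argument plus a Jacobian. A minimal sketch, assuming a fixed momentum fraction \(z\) (0.75 for \(c\rightarrow D^+\), 0.9 for \(b\rightarrow B^+\)) and a transition probability (0.25 or 0.4); the quark spectrum used in the usage lines is a hypothetical shape, not a QCD prediction.

```python
def meson_spectrum(quark_spectrum, p_t_meson, fraction, z):
    """Meson dsigma/dp_T from the quark dsigma/dp_T, assuming p_meson = z * p_quark.

    The quark spectrum is evaluated at p_T,quark = p_T,meson / z and multiplied
    by the transition probability and by the Jacobian 1/z = dp_T,quark / dp_T,meson.
    """
    return fraction * quark_spectrum(p_t_meson / z) / z


def toy_charm_spectrum(p_t):
    """Hypothetical falling quark spectrum (arbitrary units), for illustration only."""
    return p_t / (1.3**2 + p_t**2) ** 3


for p_t in (2.0, 4.0, 8.0):
    d_plus = meson_spectrum(toy_charm_spectrum, p_t, fraction=0.25, z=0.75)
    print(f"p_T,D = {p_t:.0f} GeV: dsigma(D+)/dp_T = {d_plus:.3e} (arb. units)")
```

Because of the \(1/z\) factor, integrating the meson spectrum over \(p_T\) returns the transition probability times the integrated quark cross section, so the momentum shift redistributes events in \(p_T\) without changing their number.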

It is seen that the QCD predictions obtained using the ‘optimal’ factorization scale and the central values of the CT14 NLO partons underestimate the LHCb \(c\bar{c}\) data. Note, however, that there are large uncertainties in the low-x behaviour of the gluon distributions obtained from the global parton analyses. This uncertainty may be reduced for the NLO partons (Footnote 5) by including the open charm/beauty data in the global analysis and using the ‘optimal’ scale to calculate the corresponding cross sections. Of course, there are also uncertainties due to higher-order \(\alpha_s\) contributions not included in the calculations. When the NNLO formulae become available it will be possible to extend our procedure and to include open charm data in NNLO global parton analyses.

7 Conclusion

We have calculated the ‘optimal’ factorization scale, \(\mu_0\), which allows us to resum the higher-order \(\alpha_s\) corrections enhanced at high energies by the large \(\ln(1/x)\) factor; that is, to resum the double-logarithmic \((\alpha_s\ln\mu_F^2\ln(1/x))^n\) terms and to move them into the incoming parton distributions. The result is given in Fig. 3. It is essentially

\[ \mu_0 \simeq 0.85\sqrt{p_T^2+m_Q^2} \tag{11} \]

for single open inclusive heavy quark production, where \(p_T\) is the transverse momentum of the observed heavy quark.

We also considered the case in which the azimuthal angle, \(\phi\), between the heavy quark and the antiquark can be measured. We showed that by selecting events with small \(\Delta\phi=\pi-\phi\) we are able to probe smaller factorization scales \(\mu_0\). This is an advantage for \(b\bar{b}\) production: compare the results of Table 1 with Eq. (11). The disadvantage is that the rate is smaller for such events, even though we do not require the transverse momenta of both heavy quarks to be measured.

The choice \(\mu _F=\mu _0\) reduces the uncertainty of the perturbative QCD calculations. It will allow LHC data on \(c{\bar{c}}\) and \(b{\bar{b}}\) production to be included in global parton analyses to constrain the behaviour of the gluon distribution in the region of very small x and low scale, equal to \(\mu _0\), where the uncertainties of the present global parton analyses are especially large.

Notes

Footnote 1: For ease of understanding we omit the parton labels \(a=g,q\) on the quantities in (2) and the following equations. The matrix form of the equations is implied.

Footnote 2: The third procedure is the subject of Sect. 4.2.

Footnote 3: Besides this there are, of course, the renormalization-group corrections, which account for the possible variation of the value of \(\mu_R\).

Footnote 4: We thank Alexey Dzyuba of the Petersburg Nuclear Physics Institute for this idea.

Footnote 5: Formally, at the NLO level we do not account for the NNLO corrections.

References

R.D. Ball et al. (NNPDF Collaboration), JHEP 1504, 040 (2015)

L.A. Harland-Lang, A.D. Martin, P. Motylinski, R.S. Thorne, Eur. Phys. J. C 75(5), 204 (2015)

S. Dulat, T.J. Hou, J. Gao, M. Guzzi, J. Huston, P. Nadolsky, J. Pumplin, C. Schmidt, D. Stump, C.P. Yuan, Phys. Rev. D 93, 033006 (2016). arXiv:1506.07443

R. Aaij et al. (LHCb Collaboration), Nucl. Phys. B 871, 1 (2013)

R. Aaij et al. (LHCb Collaboration), JHEP 1603, 159 (2016)

R. Aaij et al. (LHCb Collaboration), arXiv:1610.02230 [hep-ex]

R. Aaij et al. (LHCb Collaboration), JHEP 1308, 117 (2013)

G. Aad et al. (ATLAS Collaboration), Nucl. Phys. B 907, 717 (2016). arXiv:1512.02913

R. Gauld, J. Rojo, L. Rottoli, J. Talbert, JHEP 1511, 009 (2015). arXiv:1506.08025

M. Cacciari, M.L. Mangano, P. Nason, Eur. Phys. J. C 75, 610 (2015)

O. Zenaiev et al., Eur. Phys. J. C 75, 396 (2015)

E.G. de Oliveira, A.D. Martin, M.G. Ryskin, Eur. Phys. J. C 71, 1727 (2011)

Y.L. Dokshitzer, D. Diakonov, S. Troian, Phys. Rep. 58, 269 (1980)

E.G. de Oliveira, A.D. Martin, M.G. Ryskin, Eur. Phys. J. C 73, 2361 (2013)

E.G. de Oliveira, A.D. Martin, M.G. Ryskin, Eur. Phys. J. C 72, 2069 (2012)

S.P. Jones, A.D. Martin, M.G. Ryskin, T. Teubner, J. Phys. G 43, 035002 (2016). arXiv:1507.06942

M.A. Kimber, A.D. Martin, M.G. Ryskin, Phys. Rev. D 63, 114027 (2001)

A.D. Martin, M.G. Ryskin, G. Watt, Eur. Phys. J. C 66, 163 (2010)

S.J. Brodsky, G.P. Lepage, P.B. Mackenzie, Phys. Rev. D 28, 228 (1983)

L.A. Harland-Lang, M.G. Ryskin, V.A. Khoze, Phys. Lett. B 761, 20 (2016). arXiv:1605.04935

S. Catani, M. Ciafaloni, F. Hautmann, Nucl. Phys. B 366, 135 (1991)

P. Nason, S. Dawson, R.K. Ellis, Nucl. Phys. B 327, 49 (1989)

M. Cacciari, M. Greco, P. Nason, JHEP 9805, 007 (1998)

M. Czakon, P. Fiedler, D. Heymes, A. Mitov, JHEP 1605, 034 (2016). arXiv:1601.05375

C. Muselli, M. Bonvini, S. Forte, S. Marzani, G. Ridolfi, JHEP 1508, 076 (2015)

PDG, Reviews of particle properties. Phys. Lett. B 667, 1 (2008)

LHCb Collaboration, R. Aaij et al., Phys. Rev. D 85, 032008 (2012)

PDG, Reviews of particle properties. Chin. Phys. C 38, 090001 (2014)

M. Cacciari, S. Frixione, N. Houdeau, M.L. Mangano, P. Nason, G. Ridolfi, JHEP 1210, 137 (2012). arXiv:1205.6344

Acknowledgements

We thank Keith Ellis for valuable discussions. MGR and EGdO thank the IPPP at the University of Durham for hospitality. This work was supported by the RSCF Grant 14-22-00281 for MGR and by Capes and CNPq (Brazil) for EGdO.


Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

Funded by SCOAP3.

About this article

Cite this article

Oliveira, E.G.d., Martin, A.D. & Ryskin, M.G. Scale dependence of open \(c{\bar{c}}\) and \(b{\bar{b}}\) production in the low x region. Eur. Phys. J. C 77, 182 (2017). https://doi.org/10.1140/epjc/s10052-017-4750-8


DOI: https://doi.org/10.1140/epjc/s10052-017-4750-8