Abstract

We present the results of the first IceCube search for dark matter annihilation in the center of the Earth. Weakly interacting massive particles (WIMPs), candidates for dark matter, can scatter off nuclei inside the Earth and lose enough energy to fall below its escape velocity. Over time the captured WIMPs accumulate and may eventually self-annihilate. Among the annihilation products, only neutrinos can escape from the center of the Earth. Large-scale neutrino telescopes, such as the cubic-kilometer IceCube Neutrino Observatory located at the South Pole, can be used to search for such neutrino fluxes. Data from 327 days of detector livetime during 2011/2012 were analyzed. No excess beyond the expected background from atmospheric neutrinos was detected. The derived upper limits on the annihilation rate of WIMPs in the Earth and the resulting muon flux are an order of magnitude stronger than the limits of the last analysis performed with data from IceCube's predecessor AMANDA. The limits can be translated into limits on the spin-independent WIMP–nucleon cross section. For a WIMP mass of 50 GeV, this analysis yields the most restrictive limits achieved with IceCube data.

1 Introduction

A large number of observations, such as galaxy rotation curves and the temperature anisotropies of the cosmic microwave background, suggest the existence of an unknown component of matter [1], commonly referred to as dark matter. Despite extensive experimental efforts, however, no constituents of dark matter have been discovered yet. A frequently considered dark matter candidate is a Weakly Interacting Massive Particle (WIMP) [2]. Different strategies are pursued to search for these particles: dark matter particles could be produced at colliders [3]; direct detection experiments search for nuclear recoils in a massive target [4,5,6,7]; and indirect detection experiments search for secondary particles produced by self-annihilating dark matter [8,9,10,11,12].

Gamma-ray telescopes provide very strong constraints on the thermally averaged annihilation cross section from observations of satellite dwarf spheroidal galaxies [13]. However, neutrinos are the only messenger particles that can probe dark matter in nearby massive baryonic bodies such as the Sun or the Earth. Dark matter particles from the Galactic halo can become bound in the gravitational potential of the Solar system as it moves through the Galaxy [14]. These WIMPs may then scatter weakly off nuclei in the celestial bodies and lose energy. Over time, this leads to an accumulation of dark matter in the centers of these bodies. The accumulated dark matter may then self-annihilate at a rate proportional to the square of its density, generating a flux of neutrinos with a spectrum that depends on the annihilation channel and the WIMP mass. The annihilation would also contribute to the energy deposited in the Earth; comparing the expected energy deposition with the measured heat flow allows one to exclude strongly interacting dark matter [15].

The expected neutrino event rates and energies depend on the specific nature of dark matter, its local density and velocity distribution, and the chemical composition of the Earth. Different scenarios yield neutrino-induced muon fluxes between \(10^{-8}\) and \(10^5\) per km\(^2\) per year for WIMPs with masses in the GeV–TeV range [16]. This large spread in the predicted flux leaves considerable discovery potential for dark matter searches. The AMANDA [17, 18] and Super-K [19] collaborations have already ruled out muon fluxes above \({\sim }10^3\) per km\(^2\) per year for masses above about 100 GeV. The ANTARES collaboration has recently presented the results of a similar search using 5 years of data [20]. The possibility of probing even smaller fluxes with the much larger IceCube Neutrino Observatory motivates a continued search for neutrinos from WIMP annihilations in the center of the Earth. This search is sensitive to the spin-independent WIMP–nucleon cross section and complements IceCube searches for dark matter in the Sun [21], in the Galactic center [22] and halo [23], and in dwarf spheroidal galaxies [24].

2 The IceCube Neutrino Telescope

The IceCube telescope, situated at the geographic South Pole, is designed to detect the Cherenkov radiation produced by high energy neutrino-induced charged leptons traveling through the detector volume. By recording the number of Cherenkov photons and their arrival times, the direction and energy of the charged lepton, and consequently that of the parent neutrino, can be reconstructed.

IceCube consists of approximately 1 km\(^3\) of ice instrumented with 5160 digital optical modules (DOMs) [25] on 86 strings, deployed at depths between 1450 and 2450 m [26]. Each DOM contains a 25.3 cm diameter Hamamatsu R7081-02 photomultiplier tube [27] connected to a waveform-recording data acquisition circuit. The inner strings at the center of IceCube form DeepCore [28], a more densely instrumented sub-array equipped with higher quantum efficiency DOMs.

The thick ice overburden shields the detector against down-going, cosmic-ray-induced muons with surface energies \(\lesssim \) 500 GeV; nevertheless, most analyses focus on up-going neutrinos, using the entire Earth as a filter. In addition, low energy analyses use DeepCore as the fiducial volume and the surrounding IceCube strings as an active veto to reduce the background of penetrating muons. The search for WIMP annihilation signatures at the center of the Earth takes advantage of both background rejection techniques, as the expected signal is vertically up-going and of low energy.

3 Neutrinos from dark matter annihilations in the center of the Earth

WIMPs annihilating in the center of the Earth will produce a unique signature in IceCube: vertically up-going muons. The number of detected neutrino-induced muons depends on the WIMP annihilation rate \(\Gamma _A\). If the capture rate C is constant in time t, \(\Gamma _A\) is given by [16]

\[ \Gamma _A = \frac{C}{2} \tanh ^2\left( \frac{t}{\tau }\right) . \]

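The equilibrium time \(\tau = (C\,C_A)^{-1/2}\) is the timescale on which capture and annihilation come into equilibrium; for \(t \gg \tau \) the annihilation rate saturates at \(\Gamma _A = C/2\), since each annihilation removes two WIMPs. \(C_A\) is a constant that depends on the velocity-averaged annihilation cross section and on the spatial distribution of the WIMP number density in the Earth. For the Earth, the equilibrium time is of the order of \(10^{11}\) years if the spin-independent WIMP–nucleon cross section is \(\sigma _{\chi -N}^\text {SI} \sim 10^{-43}\, \mathrm{cm}^2\) [29]. The age of the Solar system is \(t_\circ \approx 4.5\times 10^{9}\) years, so \(t_\circ /\tau \ll 1\). The annihilation rate is therefore far from saturation and grows quadratically in time, \(\Gamma _A \approx \frac{1}{2} C^2 C_A t_\circ ^2\). We thus expect \(\Gamma _A \propto C^2\): the higher the capture rate, the higher the annihilation rate and thus the neutrino-induced muon flux.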
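A quick numerical check of the \(t_\circ \ll \tau \) argument follows. It evaluates \(\Gamma _A(t) = \frac{C}{2}\tanh ^2(t/\tau )\) with \(\tau = (C\,C_A)^{-1/2}\) and compares the result with the leading-order expansion \(\frac{1}{2}C^2 C_A t^2\). The values of C and \(C_A\) in the usage lines are arbitrary placeholders. They were chosen only so that \(\tau = 10^{11}\) yr, the order of magnitude quoted above, and are not inputs or results of this analysis.

```python
import math


def annihilation_rate(capture_rate, c_a, t):
    """Gamma_A(t) = (C/2) * tanh^2(t / tau) with tau = 1 / sqrt(C * C_A).

    Units only need to be consistent (here: years and 1/yr).
    """
    tau = 1.0 / math.sqrt(capture_rate * c_a)
    return 0.5 * capture_rate * math.tanh(t / tau) ** 2


def annihilation_rate_small_t(capture_rate, c_a, t):
    """Leading term for t << tau: tanh(x) ~ x gives Gamma_A ~ C^2 * C_A * t^2 / 2."""
    return 0.5 * capture_rate ** 2 * c_a * t ** 2


if __name__ == "__main__":
    # Placeholder inputs: C * C_A = 1e-22 yr^-2, so tau = 1e11 yr.
    C, C_A = 1.0e6, 1.0e-28
    t_solar = 4.5e9  # age of the Solar system in years

    full = annihilation_rate(C, C_A, t_solar)
    approx = annihilation_rate_small_t(C, C_A, t_solar)
    print(f"full / approx = {full / approx:.5f}")  # close to 1 since t/tau ~ 0.045

    # Doubling the capture rate roughly quadruples Gamma_A (Gamma_A ~ C^2).
    ratio = annihilation_rate(2 * C, C_A, t_solar) / full
    print(f"Gamma_A(2C) / Gamma_A(C) = {ratio:.3f}")
```

The full expression stays within a fraction of a percent of the quadratic approximation for these placeholder values. For \(t \gg \tau \) the same function approaches the equilibrium value C/2.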

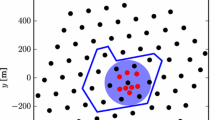

Rate at which dark matter particles are captured to the interior of the Earth [32] for a scattering cross section of \(\sigma _\mathrm{SI} = 10^{-44}\) cm\(^2\). The peaks correspond to resonant capture on the most abundant elements in the Earth [33]: \(^{56}\)Fe, \(^{16}\)O, \(^{28}\)Si and \(^{24}\)Mg and their isotopes

The rate at which WIMPs are captured in the Earth depends on three quantities. The first is their mass, which is unknown. The second is their velocity distribution in the halo, which cannot be measured and therefore has to be estimated from simulations. The third is their local density, which can be estimated from observations. The exact value of the local dark matter density is still under debate [30], with estimates ranging from \({\sim } 0.2\) to \({\sim }0.5~\mathrm{GeV}/\mathrm {cm}^3\). To allow comparison with the results of other experiments, we adopt 0.3 GeV/cm\(^3\) as suggested in [31] for the results presented in this paper. If the WIMP mass is close to the mass of one of the nuclear species in the Earth, the capture rate increases considerably, as shown in Fig. 1.
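The resonance can be motivated with a simplified kinematic argument, which is not the full capture calculation of [32]. In elastic scattering, the largest fraction of its kinetic energy that a WIMP of mass \(m_\chi \) can transfer to a nucleus of mass \(m_N\) is \(4 m_\chi m_N/(m_\chi + m_N)^2\). This fraction reaches 1 when the two masses are equal. The sketch approximates nuclear masses as the mass number A times the atomic mass unit, an assumption that ignores binding energies.

```python
AMU_GEV = 0.931494  # atomic mass unit in GeV; nuclear mass approximated as A * u

# Most abundant nuclei named in the caption of Fig. 1 (mass numbers only).
NUCLEI = {"O-16": 16, "Mg-24": 24, "Si-28": 28, "Fe-56": 56}


def max_energy_fraction(m_chi, m_nucleus):
    """Maximum fractional energy loss in elastic WIMP-nucleus scattering."""
    return 4.0 * m_chi * m_nucleus / (m_chi + m_nucleus) ** 2


def best_matched_nucleus(m_chi):
    """Nucleus from NUCLEI that allows the largest fractional energy loss."""
    return max(NUCLEI, key=lambda name: max_energy_fraction(m_chi, NUCLEI[name] * AMU_GEV))


if __name__ == "__main__":
    for m in (15.0, 50.0, 1000.0):
        name = best_matched_nucleus(m)
        frac = max_energy_fraction(m, NUCLEI[name] * AMU_GEV)
        print(f"m_chi = {m:6.0f} GeV: best match {name}, max energy fraction {frac:.3f}")
```

For a 50 GeV WIMP the best match is iron, with a maximum energy fraction close to 1. For a 1 TeV WIMP even iron allows only a small fraction of the kinetic energy to be lost in a single scatter.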

The capture rate could be higher if the WIMP velocity distribution relative to the Earth were shifted to lower velocities, because only dark matter with low velocities can be captured by the Earth. The velocity distribution of dark matter in the halo is uncertain, as it is very sensitive to theoretical assumptions. The simplest halo model is the Standard Halo Model (SHM), a smooth, spherically symmetric density component with a non-rotating Gaussian velocity distribution [34]. Galaxy formation simulations indicate, however, that additional macrostructural components, such as a dark disk [35,36,37], are required. Such components would affect the velocity distribution, especially at low velocities, and consequently the capture rate in the Earth.
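An isotropic Gaussian velocity distribution, as in the SHM, corresponds to a Maxwellian speed distribution \(f(v) \propto v^2 e^{-v^2/v_0^2}\) in the halo rest frame. Its cumulative fraction below a speed v has the closed form \(\mathrm{erf}(x) - (2x/\sqrt{\pi })\,e^{-x^2}\) with \(x = v/v_0\). The sketch below makes the following assumptions:

- It uses the commonly adopted value \(v_0 = 220\) km/s, which is not quoted in this paper.
- It ignores the motion of the Sun and Earth relative to the halo.
- It ignores the truncation at the Galactic escape speed.

A real capture calculation must include both of the effects ignored above. The sketch only illustrates how small the low-velocity tail is on which Earth capture depends.

```python
import math

V0_KM_S = 220.0  # assumed SHM most-probable speed; not a value quoted in the text


def shm_speed_pdf(v, v0=V0_KM_S):
    """Maxwellian speed distribution in the halo rest frame, normalised to 1 on [0, inf)."""
    return 4.0 / (math.sqrt(math.pi) * v0 ** 3) * v ** 2 * math.exp(-((v / v0) ** 2))


def fraction_below(v, v0=V0_KM_S):
    """Fraction of halo WIMPs with speed below v: erf(x) - 2x/sqrt(pi) * exp(-x^2), x = v/v0."""
    x = v / v0
    return math.erf(x) - 2.0 * x / math.sqrt(math.pi) * math.exp(-x * x)


if __name__ == "__main__":
    for v in (15.0, 50.0, 220.0):
        print(f"fraction of halo WIMPs below {v:5.0f} km/s: {fraction_below(v):.2e}")
```

Because the fraction scales roughly as \(v^3\) at low speeds, any extra low-velocity component, such as a co-rotating dark disk, can change Earth capture far more than it changes the bulk of the distribution.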

The signal simulations that are used in the analysis are performed using WimpSim [38], which describes the capture and annihilation of WIMPs inside the Earth, collects all neutrinos that emerge and lets these propagate through the Earth to the detector. The code includes neutrino interactions and neutrino oscillations in a complete three-flavor treatment. Eleven benchmark masses between 10 GeV and 10 TeV were simulated for different annihilation channels: the annihilation into \(b\bar{b}\) leads to a soft neutrino energy spectrum, while a hard channel is defined by the annihilation into \(W^+W^-\) for WIMP masses larger than the rest mass of the W bosons and annihilation into \(\tau ^+\tau ^-\) for lower WIMP masses.

4 Background

As signal neutrinos originate near the center of the Earth, they induce a vertically up-going signal in the detector. This is, however, a special direction in the geometry of IceCube, as the strings are also vertical. In other point-source searches, a signal-free control region with the same detector acceptance can be defined by shifting the azimuth. This is not possible for an Earth WIMP analysis, since the azimuth is undefined for a source at the nadir. Consequently, a reliable background estimate can only be derived from simulation.

Two types of background have to be taken into account: the first type consists of atmospheric muons produced by cosmic rays in the atmosphere above the detector. Although these particles enter the detector from above, a small fraction will be reconstructed incorrectly as up-going. The cosmic ray interactions in the atmosphere that produce these particles are simulated by CORSIKA [39].

The second type of background consists of atmospheric neutrinos. This irreducible background arrives from all directions and is simulated with GENIE [40] for neutrino energies below 190 GeV and with NuGen [41] for higher energies.

5 Event selection

This analysis used data taken in the first year of the fully deployed detector (May 2011 to May 2012), with a livetime of 327 days. During the optimization of the event selection, only 10% of the complete dataset was used to check the agreement with the simulations. This subsample is too small to reveal a potential signal, which preserves statistical blindness.

To be sensitive to a wide range of WIMP masses, the analysis is split into two parts that are optimized separately. The high energy event selection aims for optimal sensitivity to 1 TeV WIMPs in the \(\chi \chi \rightarrow W^+W^-\) channel. The low energy event selection is optimized for 50 GeV WIMPs annihilating into tau leptons. Because the capture rate peaks near this mass (see Fig. 1), so do the annihilation rate and the expected neutrino rate. As the neutrinos from 50 GeV WIMPs have energies below 50 GeV, DeepCore is crucial for this part of the analysis. Both samples are analyzed for both the hard and the soft channel.

The data are dominated by atmospheric muons (kHz rate), which can be reduced by selection cuts, as explained below. These cuts lower the data rate by six orders of magnitude, to a level at which the data consist mainly of atmospheric neutrino events (mHz rate). Atmospheric neutrino events with the same direction and energy as signal events are indistinguishable from them. Therefore, a statistical analysis is performed on the final neutrino sample to look for an excess from the center of the Earth (\(\mathrm{zenith} =180^\circ \)).

The first set of selection criteria, based on initial track reconstructions [42], is applied to the whole dataset, i.e. before it is split into low and high energy samples. This reduces the data rate to a few Hz. More precise (and more time-consuming) reconstructions can then be used to calculate the energy on which the split is based. These initial cuts consist of a selection of online filters that tag up-going events, followed by cuts on the location of the interaction vertex and on the direction of the charged lepton. These variables are not correlated with the neutrino energy and therefore have similar efficiencies for different WIMP masses.

The variables used for cuts at this level are the reconstructed zenith angle, the reconstructed interaction vertex, and the average temporal development of hits in the vertical (z) direction. The zenith angle cut is kept loose to retain a sufficiently large control region in which the agreement between data and background simulation can be tested. An event is removed if its reconstructed direction points more than 60\(^\circ \) away from the center of the Earth (i.e. the zenith is required to be larger than 120\(^\circ \)). In this way, the agreement between data and background simulation can be tested in a signal-free zenith region between 120\(^\circ \) and 150\(^\circ \) (see the zenith distribution in Fig. 5). The remaining cut values are chosen by scanning over combinations of cut values. The combination selected is the one that brings the background down to the Hz level while removing as little signal as possible.
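The cut optimization described above can be sketched as a brute-force scan. This minimal version makes three assumptions:

- Every cut is a lower threshold (keep events with value ≥ threshold).
- Background simulation events carry per-event rate weights in Hz.
- The best combination is the one with the highest signal efficiency whose background rate stays below a target.

The variable names in the usage lines are hypothetical toy inputs, not the analysis variables.

```python
import itertools

import numpy as np


def scan_cuts(sig, bkg, bkg_rate_weights, grids, max_bkg_rate):
    """Brute-force scan over combinations of lower-threshold cuts.

    sig, bkg: dict mapping variable name -> per-event values (np.ndarray)
    bkg_rate_weights: per-event background rates in Hz
    grids: dict mapping variable name -> candidate thresholds (keep value >= threshold)
    Returns (signal_efficiency, background_rate, cuts) of the best allowed
    combination, or None if no combination meets max_bkg_rate.
    """
    names = list(grids)
    n_sig = len(sig[names[0]])
    best = None
    for thresholds in itertools.product(*(grids[n] for n in names)):
        keep_s = np.ones(n_sig, dtype=bool)
        keep_b = np.ones(len(bkg_rate_weights), dtype=bool)
        for name, thr in zip(names, thresholds):
            keep_s &= sig[name] >= thr
            keep_b &= bkg[name] >= thr
        rate = float(bkg_rate_weights[keep_b].sum())
        eff = float(keep_s.mean())
        if rate <= max_bkg_rate and (best is None or eff > best[0]):
            best = (eff, rate, dict(zip(names, thresholds)))
    return best


if __name__ == "__main__":
    # Toy inputs with hypothetical variable names "a" and "b".
    sig = {"a": np.array([5.0, 6.0, 7.0, 8.0]), "b": np.array([1.0, 2.0, 3.0, 4.0])}
    bkg = {"a": np.array([1.0, 2.0, 6.0, 7.0]), "b": np.array([0.0, 5.0, 0.0, 1.0])}
    print(scan_cuts(sig, bkg, np.ones(4), {"a": [0.0, 3.0, 6.5], "b": [0.0, 0.5, 1.5]}, 1.0))
```

The number of combinations grows multiplicatively with the grid sizes. The scan is therefore only practical for a few variables with coarse grids, which fits its use here after the rate has already been reduced by the online filters.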

After this first cut level, the data rate is reduced to \(\sim \)3 Hz, while 30–60% of the signal (depending on WIMP mass and channel) is retained. The data are still dominated by atmospheric muons at this level. With the rate now sufficiently low, additional reconstructions can be applied to the data [43].

The distributions of the reconstructed energies for 50 GeV and 1 TeV WIMP signal events are shown in Fig. 2. The peak at \(\sim \)750 GeV is an artifact of the energy reconstruction algorithm used in this analysis. If the track is not contained in the detector, its length cannot be reconstructed and is set to a default value of 2 km. The track length is used to estimate the energy of the produced muon, while the energy of the hadronic cascade is reconstructed separately and can exceed the muon energy. Events showing this artifact are generally bright, so classifying them into the high energy sample is desired. The reconstructed energy is used only to split the data. A division at 100 GeV, shown as a vertical line in Fig. 2, separates the dataset into statistically independent low and high energy samples, which are optimized and analyzed separately.

Fig. 3 BDT score distributions at the pre-BDT cut level for the low energy analysis (left) and for the high energy analysis using the Pull-Validation method (right). Signal distributions are scaled up for visibility. Signal and backgrounds are compared to experimental data from 10% of the first year of IC86 data. For the atmospheric neutrinos, all flavors are taken into account. The sum of all simulated backgrounds is shown in gray. The vertical lines indicate the final cut value used in each analysis; events with scores to the right of the line are retained

Both analyses use Boosted Decision Trees (BDTs) to classify background and signal events. After an analysis-specific training [44], this machine learning technique separates signal from background by assigning each event a score between −1 (background-like) and \(+\)1 (signal-like). To train a reliable BDT, the simulation must reproduce the experimental data accurately, so a set of pre-BDT cuts is applied first. Two of these cuts improve the agreement between data and simulation. The first requires a minimum number of hits within a time window of −15 to 125 ns relative to the expected photon arrival time at each DOM. The second is a cut on the zenith angle from a more accurate reconstruction based on causally connected hits. Comparing the times and distances of the first hits reduces the number of events containing noise hits. The last cut variable at this step is the sum of the signs of the differences between the z-coordinates of temporally successive hits, which further reduces the number of misreconstructed events. After these cuts, the experimental data rate is of the order of 100 mHz, and the data are still dominated by atmospheric muons. The BDTs are then trained on variables that show good agreement between data and simulation and have low mutual correlation.
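Two of the pre-BDT variables are simple enough to sketch directly. The functions below make the following assumptions:

- Each hit has a time residual (hit time minus the expected photon arrival time) and a z-coordinate.
- Hits are counted in the −15 to 125 ns residual window, with both boundaries treated as inclusive.
- The signs of the z-differences between time-ordered hits are summed. Since the IceCube z-axis points up, up-going tracks tend to give positive sums.

Any normalization or additional hit cleaning used in the actual analysis is not reproduced here.

```python
import numpy as np


def count_hits_in_window(time_residuals_ns, t_min=-15.0, t_max=125.0):
    """Number of hits with t_hit - t_expected inside [t_min, t_max] (inclusive)."""
    r = np.asarray(time_residuals_ns, dtype=float)
    return int(np.count_nonzero((r >= t_min) & (r <= t_max)))


def z_sign_sum(hit_times, hit_z):
    """Sum of sign(z_{i+1} - z_i) over hits ordered in time.

    With z pointing upward, light moving up through the detector gives
    positive contributions and down-going light negative ones.
    """
    order = np.argsort(np.asarray(hit_times, dtype=float), kind="stable")
    z = np.asarray(hit_z, dtype=float)[order]
    return int(np.sign(np.diff(z)).sum())


if __name__ == "__main__":
    print(count_hits_in_window([-20.0, -15.0, 0.0, 124.0, 126.0]))
    # Hits listed out of time order; they are sorted by time before differencing.
    print(z_sign_sum([3.0, 0.0, 2.0, 1.0], [-200.0, -400.0, -350.0, -300.0]))
```

The sign sum is deliberately insensitive to the size of each step in z. A few scattered or noise hits therefore change it by at most one unit each, while a genuinely down-going muon drives it clearly negative.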

In the low energy optimization, the BDT is trained on simulated 50 GeV WIMP events as the signal sample and on experimental data as the background sample. The opening angle between the neutrino and its daughter lepton decreases with increasing neutrino energy. As a result, WIMP-induced muons in the high energy analysis are narrowly concentrated around vertical zenith angles, whereas in the low energy analysis they are spread over a wider range of zenith angles. Consequently, a high energy BDT trained on simulated 1 TeV WIMP events would select strictly vertical events. This would make a comparison between data and simulation in a signal-free region more difficult. Instead, the high energy analysis uses an isotropic muon neutrino simulation, weighted to the energy spectrum of 1 TeV signal neutrinos, to train the BDT.

Coincident events, in which an atmospheric neutrino and an atmospheric muon are recorded together, can affect the data rate. Their influence is larger at low energies, as the atmospheric neutrino flux decreases steeply with increasing energy. In the low energy analysis, this effect cannot be neglected. Only a limited number of simulated coincident events was available. To account for this effect, individual correction factors are therefore applied to the components of the atmospheric background simulation. These correction factors are obtained by scaling the BDT score distributions of the simulated background to the experimental data. Only events with a reconstructed zenith below 132\(^\circ \) are used to determine the correction factors. With this choice, the background cannot be incorrectly adjusted to a signal that might be present in the experimental data, since 95% of WIMP-induced events have a larger zenith.
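One way to obtain such correction factors is a least-squares fit of per-component scale factors to the data BDT-score histogram in the control region. The text does not specify the fit procedure. The Poisson-weighted least-squares fit below, its binning and the component names are therefore assumptions; only the zenith < 132° restriction comes from the analysis description.

```python
import numpy as np


def correction_factors(data_scores, data_zenith, components, bins, zenith_max=132.0):
    """Scale factors for simulated background components, fitted in a control region.

    components: dict name -> (bdt_scores, zenith_deg, weights) for each simulated
    background (e.g. atmospheric muons, atmospheric neutrinos). Only events with
    reconstructed zenith < zenith_max enter the fit, so a signal near 180 degrees
    cannot pull the normalisation.
    """
    in_cr = data_zenith < zenith_max
    data_hist, _ = np.histogram(data_scores[in_cr], bins=bins)
    names = list(components)
    columns = []
    for name in names:
        scores, zenith, weights = components[name]
        m = zenith < zenith_max
        h, _ = np.histogram(scores[m], bins=bins, weights=weights[m])
        columns.append(h)
    design = np.column_stack(columns).astype(float)
    # Approximate Poisson errors on the data; empty bins get unit error.
    sigma = np.sqrt(np.maximum(data_hist, 1.0))
    coef, *_ = np.linalg.lstsq(design / sigma[:, None], data_hist / sigma, rcond=None)
    return dict(zip(names, coef))


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    mu_s, mu_z = rng.uniform(-0.95, -0.05, 500), rng.uniform(90, 130, 500)
    nu_s, nu_z = rng.uniform(0.05, 0.95, 400), rng.uniform(90, 130, 400)
    # Toy "data": twice the muon simulation plus the neutrino simulation,
    # plus signal-like events at 175 deg that must not affect the fit.
    data_s = np.concatenate([mu_s, mu_s, nu_s, rng.uniform(0.5, 0.95, 50)])
    data_z = np.concatenate([mu_z, mu_z, nu_z, np.full(50, 175.0)])
    comps = {"atm_mu": (mu_s, mu_z, np.ones(500)), "atm_nu": (nu_s, nu_z, 2.0 * np.ones(400))}
    print(correction_factors(data_s, data_z, comps, np.linspace(-1.0, 1.0, 11)))
```

In the toy example the fitted factors recover the injected scalings (2 for muons, 0.5 for the doubly weighted neutrino sample). The signal-like events at 175° lie outside the control region and are ignored.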

The distributions of the BDT scores for the low energy and high energy analyses are shown in Fig. 3. Cuts on the BDT score are chosen such that the sensitivities of the analyses are optimal. The sensitivities are calculated with a likelihood ratio hypothesis test based on the values of the reconstructed zenith, using the Feldman–Cousins unified approach [45]. The required probability densities for signal and background are both calculated from simulations, as this analysis cannot make use of an off-source region. The background sample that is left after the cut on the BDT score mainly consists of atmospheric neutrinos and only has a small number of atmospheric muon events.

Two different smoothing methods are used to deal with limited simulation statistics. The high energy analysis uses Pull-Validation [46], a method that makes better use of limited statistics. A large number of BDTs (200 in the present analysis) are trained on small subsets that are randomly resampled from the complete dataset. The variation of the BDT output between the trainings can be interpreted as a probability density function (PDF) for each event. This PDF is used to calculate a weight for each event instead of making a binary cut decision. With this method, not only the BDT score distribution is smoothed (Fig. 3, right), but also all distributions made after the cut on the BDT score. In particular, the reconstructed zenith distribution used in the likelihood calculation is smooth, because events that a single BDT would remove can now be kept, albeit with a smaller weight.
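One natural reading of the Pull-Validation weight is the fraction of resampled BDTs in which an event passes the score cut. That reading gives the short sketch below. The array layout (one row per BDT training) is an assumption, and the resampling and training steps themselves are not shown.

```python
import numpy as np


def pull_validation_weights(scores, cut):
    """Per-event weight = fraction of resampled BDTs whose score exceeds the cut.

    scores: array of shape (n_bdts, n_events), one row per BDT training.
    """
    scores = np.asarray(scores, dtype=float)
    return (scores > cut).mean(axis=0)


def weighted_histogram(values, physics_weights, pv_weights, bins):
    """Histogram after the BDT cut, using physics weight times Pull-Validation weight."""
    w = np.asarray(physics_weights, dtype=float) * pv_weights
    hist, _ = np.histogram(values, bins=bins, weights=w)
    return hist


if __name__ == "__main__":
    # Four hypothetical BDT trainings (rows) evaluated on three events (columns).
    scores = [[0.10, 0.5, -0.2], [0.30, 0.6, 0.0], [0.25, 0.4, 0.3], [0.15, 0.7, -0.1]]
    w = pull_validation_weights(scores, cut=0.2)
    print(w)
    print(weighted_histogram([170.0, 175.0, 130.0], [1.0, 1.0, 1.0], w, bins=[120, 150, 180]))
```

An event that sits near the cut value contributes a fractional weight instead of being kept or dropped outright. This is what smooths the zenith distribution when simulation statistics are low.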

The low energy analysis addresses the limited statistics of the atmospheric muon background simulation differently. In this part of the analysis, only a single BDT is trained (Fig. 3, left). After the cut on the BDT score, the reconstructed zenith distribution is smoothed using a Kernel Density Estimator (KDE) [47, 48] with a Gaussian kernel and an optimal bandwidth [49].
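A weighted Gaussian KDE of the reconstructed zenith distribution can be sketched in a few lines of NumPy. The optimal-bandwidth method of [49] is not reproduced here. As a stand-in, the sketch uses the normal-reference rule of thumb \(h = 1.06\,\sigma \,n_\mathrm{eff}^{-1/5}\), with an effective sample size that accounts for event weights. Boundary effects at zenith = 180° are ignored.

```python
import numpy as np


def rule_of_thumb_bandwidth(x, w):
    """Normal-reference bandwidth 1.06 * sigma * n_eff^(-1/5) for weighted samples."""
    x = np.asarray(x, dtype=float)
    w = np.asarray(w, dtype=float)
    mean = np.average(x, weights=w)
    std = np.sqrt(np.average((x - mean) ** 2, weights=w))
    n_eff = w.sum() ** 2 / (w ** 2).sum()
    return 1.06 * std * n_eff ** (-0.2)


def weighted_gaussian_kde(x, w, grid, bandwidth=None):
    """Density estimate on `grid` from samples x with weights w (Gaussian kernel)."""
    x = np.asarray(x, dtype=float)
    w = np.asarray(w, dtype=float)
    grid = np.asarray(grid, dtype=float)
    h = rule_of_thumb_bandwidth(x, w) if bandwidth is None else bandwidth
    u = (grid[:, None] - x[None, :]) / h
    kernel = np.exp(-0.5 * u ** 2) / np.sqrt(2.0 * np.pi)
    return (kernel * w[None, :]).sum(axis=1) / (w.sum() * h)


if __name__ == "__main__":
    rng = np.random.default_rng(2)
    zenith = rng.uniform(120.0, 180.0, 1000)  # toy reconstructed zeniths in degrees
    weights = rng.uniform(0.5, 1.5, 1000)     # toy per-event weights
    grid = np.linspace(120.0, 180.0, 7)
    print(weighted_gaussian_kde(zenith, weights, grid))
```

A production version would typically reflect the kernel at the physical boundary of 180°. Without reflection, a Gaussian kernel leaks probability beyond the boundary and underestimates the density right at the Earth-center direction.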

The event rates at different cut levels are summarized in Table 1.

6 Shape analysis

After the event selection, the data rate is reduced to 0.28 mHz for the low energy selection and 0.56 mHz for the high energy selection. Misreconstructed atmospheric muons are almost completely removed, and the remaining sample consists mainly of atmospheric neutrinos. To test the dataset for an additional neutrino signal from the center of the Earth, we use a likelihood test that has been applied in several previous IceCube analyses (e.g. [21, 22]). The test is based on the background (\(f_\mathrm{bg}\)) and signal (\(f_\mathrm{s}\)) distributions of the space angle \(\Psi \) between the reconstructed muon track and the direction of the Earth center. For a vertically up-going source, \(\Psi = 180^\circ \) minus the reconstructed zenith angle. The probability to observe a value \(\Psi \) for a single event is

\[ f(\Psi \mid \mu ) = \frac{\mu }{n_\mathrm{obs}}\, f_\mathrm{s}(\Psi ) + \left( 1 - \frac{\mu }{n_\mathrm{obs}}\right) f_\mathrm{bg}(\Psi ). \]

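Here, \(\mu \) is the number of signal events in a set of \(n_\mathrm{obs}\) observed events. The likelihood of observing these events at space angles \(\Psi _i\) is the product of the single-event probabilities \(f(\Psi _i \mid \mu )\):

\[ \mathcal {L}(\mu ) = \prod _{i=1}^{n_\mathrm{obs}} f(\Psi _i \mid \mu ). \]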

Following the procedure in [45], the ranking parameter

\[ \mathcal {R}(\mu ) = \frac{\mathcal {L}(\mu )}{\mathcal {L}(\hat{\mu })} \]

is used as the test statistic for the hypothesis test, where \(\hat{\mu }\) is the value of \(\mu \) that best fits the observation. For each signal strength, a critical ranking \(\mathcal {R}^{90}\) is defined such that 90% of pseudo-experiments with that signal strength have a ranking larger than \(\mathcal {R}^{90}\). It is determined from \(10^4\) pseudo-experiments for each injected signal strength. The sensitivity is defined as the expected upper limit in the absence of a signal. It is determined from \(10^4\) pseudo-experiments with no signal injected.
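The likelihood construction can be made concrete with toy inputs. The sketch takes \(f(\Psi \mid \mu ) = \frac{\mu }{n_\mathrm{obs}} f_\mathrm{s} + (1 - \frac{\mu }{n_\mathrm{obs}}) f_\mathrm{bg}\), \(\mathcal {L}(\mu ) = \prod _i f(\Psi _i \mid \mu )\) and \(\mathcal {R}(\mu ) = \mathcal {L}(\mu )/\mathcal {L}(\hat{\mu })\). It then computes \(\ln \mathcal {R}\), derives the critical ranking \(\mathcal {R}^{90}\) from pseudo-experiments, and accepts every \(\mu \) whose observed ranking is at least \(\mathcal {R}^{90}\). The largest accepted \(\mu \) is taken as the upper limit. The following elements are illustrative assumptions, not the analysis inputs:

- the toy PDFs (isotropic background and an exponential signal within \(\Psi < 60^\circ \));
- a fixed \(n_\mathrm{obs}\) with binomially drawn signal counts;
- the grid search for \(\hat{\mu }\);
- the small numbers of pseudo-experiments.

```python
import numpy as np

PSI_MAX = np.radians(60.0)   # toy analysis region Psi < 60 deg (assumption)
SIG_SCALE = np.radians(5.0)  # angular scale of the toy signal PDF (assumption)
N_MU_GRID = 401              # grid points for the best-fit search


def f_bg(psi):
    """Toy background PDF in Psi: isotropic in solid angle, i.e. proportional to sin(Psi)."""
    return np.sin(psi) / (1.0 - np.cos(PSI_MAX))


def f_s(psi):
    """Toy signal PDF in Psi: truncated exponential peaked at Psi = 0."""
    return np.exp(-psi / SIG_SCALE) / (SIG_SCALE * (1.0 - np.exp(-PSI_MAX / SIG_SCALE)))


def sample(n_sig, n_bg, rng):
    """Draw Psi values from the toy PDFs by inverting their CDFs."""
    u_s, u_b = rng.random(n_sig), rng.random(n_bg)
    psi_s = -SIG_SCALE * np.log(1.0 - u_s * (1.0 - np.exp(-PSI_MAX / SIG_SCALE)))
    psi_b = np.arccos(1.0 - u_b * (1.0 - np.cos(PSI_MAX)))
    return np.concatenate([psi_s, psi_b])


def log_l(mu, psi):
    """ln L(mu) = sum_i ln[(mu/n) f_s(Psi_i) + (1 - mu/n) f_bg(Psi_i)]; mu may be an array."""
    n = len(psi)
    mu = np.atleast_1d(np.asarray(mu, dtype=float))[:, None]
    return np.log(mu / n * f_s(psi) + (1.0 - mu / n) * f_bg(psi)).sum(axis=1)


def log_ranking(mu, psi):
    """ln R(mu) = ln L(mu) - ln L(mu_hat), with mu_hat searched on [0, n_obs]."""
    ll_mu = log_l(mu, psi)[0]
    ll_hat = max(log_l(np.linspace(0.0, len(psi), N_MU_GRID), psi).max(), ll_mu)
    return ll_mu - ll_hat


def critical_log_ranking(mu, n_obs, n_toys, rng, cl=0.9):
    """ln R^90(mu): a fraction cl of pseudo-experiments with true mu rank higher.

    Assumption: n_obs is fixed and the signal count is Binomial(n_obs, mu/n_obs).
    """
    vals = []
    for _ in range(n_toys):
        n_sig = rng.binomial(n_obs, mu / n_obs)
        vals.append(log_ranking(mu, sample(n_sig, n_obs - n_sig, rng)))
    return np.quantile(vals, 1.0 - cl)


def upper_limit(psi_obs, mu_values, n_toys, rng):
    """Largest mu in the Feldman-Cousins acceptance ln R_obs(mu) >= ln R^90(mu)."""
    n_obs = len(psi_obs)
    accepted = [mu for mu in mu_values
                if log_ranking(mu, psi_obs) >= critical_log_ranking(mu, n_obs, n_toys, rng)]
    return float(max(accepted)) if accepted else 0.0


def sensitivity(n_obs, mu_values, n_toys, n_trials, rng):
    """Mean upper limit over background-only pseudo-experiments."""
    return float(np.mean([upper_limit(sample(0, n_obs, rng), mu_values, n_toys, rng)
                          for _ in range(n_trials)]))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    mus = np.linspace(0.0, 30.0, 7)
    print("toy sensitivity:", sensitivity(100, mus, 50, 3, rng))
```

Working in \(\ln \mathcal {R}\) avoids numerical underflow of the product of many small PDF values. The analysis itself uses \(10^4\) pseudo-experiments per signal strength, whereas this toy uses only a few dozen to stay fast.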

7 Systematic uncertainties

Due to the lack of a control region, the background estimation has to be derived from simulation. Therefore, systematic uncertainties of the simulated datasets were carefully studied. The effects of the uncertainties were quantified by varying the respective input parameters in the simulations.

Fig. 5 Reconstructed zenith distributions of 1 year of IC86 data compared with the simulated background distributions. For the atmospheric neutrinos, all flavors are taken into account. In the low energy analysis (left) the distributions were smoothed with a KDE, and in the high energy analysis (right) the Pull-Validation method was used. Signal distributions are scaled up for visibility. The gray areas indicate the total predicted background distributions with \(1\sigma \) uncertainties, including statistical and systematic uncertainties

Several detector-related uncertainties have to be considered. The efficiency of the DOMs in detecting Cherenkov photons is not exactly known. To estimate the effect of this uncertainty, three simulated datasets with 90, 100 and 110% of the nominal efficiency were investigated. With these datasets, the sensitivity varies by ±10% for both event selections. Taking anisotropic scattering in the South Pole ice into account [50] has an effect of −10% in both the high and the low energy selection. The reduced scattering length of photons in the refrozen ice of the drill holes leads to an uncertainty of −10% in both selections. Furthermore, the uncertainty on the scattering and absorption lengths changes the result by ±10% for the low energy and ±5% for the high energy selection.

Besides the detector-related uncertainties, the uncertainties on the models of the background physics are taken into account. The uncertainty of the atmospheric flux can change the rates by ±30%, as determined e.g. in [51]. At low energies, uncertainties on the neutrino oscillation parameters are significant. This effect was studied in a previous analysis [21] and changes the event rates by ±6%. The uncertainty of the neutrino–nucleon cross section was studied in the same analysis. It depends on the neutrino energy and is conservatively estimated as ±6% for the low and ±3% for the high energy sample. Finally, coincidences of atmospheric neutrinos and atmospheric muons have a large impact on the low energy analysis. Coincident events were not simulated in the baseline datasets; a comparison with a test simulation that includes them shows an effect of −30% on the final event rates.

Adding these uncertainties in quadrature gives a total of \(+\)34%/−48% for the low energy analysis and \(+\)32%/−35% for the high energy analysis. They are included in the limit calculation through a semi-Bayesian extension of the Feldman–Cousins approach [52]. In practice, the expectation value of each pseudo-experiment is randomly varied according to a Gaussian whose width equals the corresponding uncertainty. As an illustration, the effect of this procedure for different uncertainties is shown in Fig. 4.
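The quoted totals can be reproduced by summing the individual contributions in quadrature, separately for upward and downward shifts. The text does not state explicitly which contributions enter the high energy total. Including the DOM efficiency, anisotropy, hole ice, scattering/absorption, atmospheric flux and cross-section terms, but not the oscillation and coincidence terms, reproduces \(+\)32%/−35%, so that grouping is an assumption of this sketch. The smearing function treats the asymmetric uncertainty as a split Gaussian, which is also an assumption about how the asymmetry is handled.

```python
import math

import numpy as np

# (upward, downward) shifts in percent, given as magnitudes, as listed in the text.
LOW_ENERGY = {
    "dom_efficiency": (10, 10), "ice_anisotropy": (0, 10), "hole_ice": (0, 10),
    "scattering_absorption": (10, 10), "atmospheric_flux": (30, 30),
    "oscillations": (6, 6), "nu_cross_section": (6, 6), "coincidences": (0, 30),
}
# Assumed grouping: oscillation and coincidence terms omitted (reproduces +32%/-35%).
HIGH_ENERGY = {
    "dom_efficiency": (10, 10), "ice_anisotropy": (0, 10), "hole_ice": (0, 10),
    "scattering_absorption": (5, 5), "atmospheric_flux": (30, 30),
    "nu_cross_section": (3, 3),
}


def quadrature_total(contributions):
    """Return (up, down) totals in percent, summing each side in quadrature."""
    up = math.sqrt(sum(u ** 2 for u, _ in contributions.values()))
    down = math.sqrt(sum(d ** 2 for _, d in contributions.values()))
    return up, down


def smeared_expectation(expected, up_pct, down_pct, rng):
    """Draw a varied expectation value for one pseudo-experiment (split Gaussian)."""
    z = rng.standard_normal()
    width = up_pct if z >= 0 else down_pct
    return max(0.0, expected * (1.0 + z * width / 100.0))


if __name__ == "__main__":
    for label, table in (("low energy", LOW_ENERGY), ("high energy", HIGH_ENERGY)):
        up, down = quadrature_total(table)
        print(f"{label}: +{up:.0f}% / -{down:.0f}%")
```

Run as a script, this prints `low energy: +34% / -48%` and `high energy: +32% / -35%`, matching the totals quoted above.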

8 Results

As mentioned in Sect. 5, only 10% of the data were used for quality checks during the optimization of the analysis chain. Half of this subsample was used to train the BDTs and therefore these events could not be used for the later analysis. After the selection criteria were completely finalized, the zenith distributions of the remaining 95% of the dataset were examined (Fig. 5). No statistically significant excess above the expected atmospheric background was found from the direction of the center of the Earth.

Using the method described in Sect. 6, upper limits at the 90% confidence level on the volumetric flux

\[ \Gamma _{\nu \rightarrow \mu } = \frac{\mu _\mathrm{s}}{V_\mathrm{eff}\, t_\mathrm{live}} \]

were calculated from the high and the low energy samples for WIMP masses between 10 GeV and 10 TeV in the hard and the soft channel. Here \(\mu _\mathrm{s}\) denotes the upper limit on the number of signal neutrinos, \(t_\mathrm{live}\) the livetime and \(V_\mathrm{eff}\) the effective volume of the detector. Using the package WimpSim [38], the volumetric flux was converted into the WIMP annihilation rate inside the Earth \(\Gamma _A\) and the resulting muon flux \(\Phi _\mu \). The 90% C.L. limits obtained are shown in Fig. 6 and listed in Table 2. For each mass and channel, the most restrictive limit is shown.
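The conversion from an upper limit on signal events to a volumetric flux, \(\Gamma _{\nu \rightarrow \mu } = \mu _\mathrm{s}/(V_\mathrm{eff}\, t_\mathrm{live})\), is simple bookkeeping. The sketch assumes that \(V_\mathrm{eff}\) is given in km\(^3\) and expresses the livetime in years, using 365.25 days per year. The effective volume depends on the WIMP mass and channel and must come from simulation, so the function takes it as an input rather than assuming a value.

```python
DAYS_PER_YEAR = 365.25
LIVETIME_DAYS = 327.0  # detector livetime quoted in the text; pass the analysed sample's livetime


def volumetric_flux(mu_s, v_eff_km3, livetime_days=LIVETIME_DAYS):
    """Gamma_{nu->mu} = mu_s / (V_eff * t_live) in km^-3 yr^-1.

    mu_s: upper limit on the number of signal events
    v_eff_km3: effective volume in km^3 for the WIMP mass and channel considered
    """
    if v_eff_km3 <= 0:
        raise ValueError("effective volume must be positive")
    livetime_yr = livetime_days / DAYS_PER_YEAR
    return mu_s / (v_eff_km3 * livetime_yr)
```

Because \(V_\mathrm{eff}\) enters in the denominator, the small effective volume at low WIMP masses directly weakens the flux limit for a given \(\mu _\mathrm{s}\).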

Fig. 6 Top: individual upper limits at 90% confidence level (solid lines) on the muon flux \(\Phi _\mu \) for the low and high energy analyses. Systematic uncertainties are included. For the soft channel, \(\chi \chi \rightarrow b\bar{b}\) is assumed with 100% branching ratio. For the hard channel, the annihilation \(\chi \chi \rightarrow \tau ^{+}\tau ^{-}\) is assumed for masses \(\le \) 50 GeV and \(\chi \chi \rightarrow W^{+}W^{-}\) for higher masses. A flux with mixed branching ratios will lie between these extremes. The dashed lines and the bands indicate the corresponding sensitivities with \(1\sigma \) uncertainty. Bottom: combined best upper limits (solid line) and sensitivities (dashed line) with \(1\sigma \) uncertainty (green band) on the annihilation rate in the Earth \(\Gamma _A\) for 1 year of IC86 data as a function of the WIMP mass. For each WIMP mass, the sample (high energy or low energy) that yields the best sensitivity is used. Systematic uncertainties are included. The dotted line shows the previous upper limit on the annihilation rate, which was calculated with AMANDA data [17, 18]

Fig. 7 Upper limits at 90% confidence level on \(\sigma _{\chi -N}^\mathrm{SI}\) as a function of the annihilation cross section for 50 GeV WIMPs annihilating into \(\tau ^+\tau ^-\) and for 1 TeV WIMPs annihilating into \(W^+W^-\). Systematic uncertainties are included. For comparison, the limits of LUX [5] are shown as dashed lines. The red vertical line indicates the thermal annihilation cross section. Also indicated are IceCube limits on the annihilation cross section for the respective models [22]

Fig. 8 Upper limits at 90% confidence level on \(\sigma _{\chi -N}^\mathrm{SI}\) as a function of the WIMP mass, assuming a WIMP annihilation cross section of \(\langle \sigma _A v \rangle = 3\times 10^{-26}\,\mathrm{cm}^{3}\,\mathrm{s}^{-1}\). Annihilation into \(W^+W^-\) is assumed for WIMP masses above the rest mass of the W bosons, and annihilation into \(\tau ^+\tau ^-\) for lower masses. Systematic uncertainties are included. The result is compared to the limits set by SuperCDMSlite [6], LUX [5], Super-K [19] and a Solar WIMP analysis of IceCube in the 79-string configuration [21]. The displayed limits assume a local dark matter density of \(\rho _\chi =0.3\) GeV cm\(^{-3}\). A larger density, as suggested e.g. by [56], would scale all limits linearly (inversely proportional to \(\rho _\chi \))

Furthermore, limits on the spin-independent WIMP–nucleon cross section \(\sigma _{\chi -N}^\mathrm{SI}\) can be derived. In contrast to dark matter accumulated in the Sun, the annihilation rate in the Earth is not directly linked to \(\sigma _{\chi -N}^\mathrm{SI}\). Since no equilibrium between WIMP capture and annihilation can be assumed, the annihilation rate depends on both \(\sigma _{\chi -N}^\mathrm{SI}\) and the annihilation cross section \(\langle \sigma _A v \rangle \). Fig. 7 shows the limits in the \(\sigma _{\chi -N}^\text {SI}\)–\(\langle \sigma _A v \rangle \) plane for two WIMP masses. A typical choice is the natural scale \(\langle \sigma _A v \rangle = 3\times 10^{-26}\,\mathrm{cm}^{3}\,\mathrm{s}^{-1}\), for which the WIMP is a thermal relic [53]. Assuming this annihilation cross section, the limits in Fig. 7 yield upper limits on the spin-independent WIMP–nucleon scattering cross section as a function of the WIMP mass. The limits in Table 2 correspond to the investigated benchmark masses. Fig. 8 also includes interpolated results between them, which show the effect of resonant capture on the most abundant elements in the Earth.
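The shape of these limits can be anticipated from the rate formula \(\Gamma _A = \frac{C}{2}\tanh ^2(t_\circ /\tau )\) with \(\tau = (C\,C_A)^{-1/2}\) [16]. As a simplified derivation, assume that at fixed WIMP mass and local density \(C = \kappa \,\sigma _{\chi -N}^\mathrm{SI}\) and \(C_A = \alpha \,\langle \sigma _A v \rangle \). Here \(\kappa \) and \(\alpha \) are shorthand constants introduced only for this sketch; they absorb the astrophysical and Earth-model inputs. Solving for the cross section at the limit \(\Gamma _A^\mathrm{lim}\) gives, in the two regimes:

```latex
\begin{align*}
t_\circ \ll \tau:\quad
  \Gamma_A &\simeq \tfrac{1}{2}\, C^2 C_A\, t_\circ^2
            = \tfrac{1}{2}\, \kappa^2 \alpha\, \big(\sigma^\mathrm{SI}_{\chi-N}\big)^2
              \langle \sigma_A v \rangle\, t_\circ^2
  &&\Rightarrow\quad
  \sigma^\mathrm{SI}_{\chi-N}\big|_\mathrm{lim}
  = \frac{1}{\kappa\, t_\circ}
    \sqrt{\frac{2\,\Gamma_A^\mathrm{lim}}{\alpha\,\langle \sigma_A v \rangle}}
  \;\propto\; \langle \sigma_A v \rangle^{-1/2},\\
t_\circ \gg \tau:\quad
  \Gamma_A &\simeq \tfrac{1}{2}\, C = \tfrac{1}{2}\, \kappa\, \sigma^\mathrm{SI}_{\chi-N}
  &&\Rightarrow\quad
  \sigma^\mathrm{SI}_{\chi-N}\big|_\mathrm{lim} = \frac{2\,\Gamma_A^\mathrm{lim}}{\kappa}.
\end{align*}
```

Under these assumptions, the limit on \(\sigma _{\chi -N}^\mathrm{SI}\) would tighten as \(\langle \sigma _A v \rangle ^{-1/2}\) while capture and annihilation are far from equilibrium. It would become independent of \(\langle \sigma _A v \rangle \) once equilibrium is reached, as is the case for the Sun.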

We note that Solar WIMP, Earth WIMP, and direct searches have very different dependences on astrophysical uncertainties. A change in the WIMP velocity distribution has minor effects on Solar WIMP bounds [54, 55], while Earth WIMPs and direct searches are far more susceptible to it. In particular the existence of a dark disk could enhance Earth WIMP rates by several orders of magnitude [16] while leaving direct bounds largely unchanged. The limits presented here assume a standard halo and are conservative with respect to the existence of a dark disk.

9 Summary

Using 1 year of data taken with the completed detector, we performed the first IceCube search for neutrinos produced by WIMP dark matter annihilations in the center of the Earth. No evidence for a signal was found, and 90% C.L. upper limits were set on the annihilation rate and the resulting muon flux as a function of the WIMP mass. Assuming the natural scale for the velocity-averaged annihilation cross section, upper limits on the spin-independent WIMP–nucleon scattering cross section were derived. The limits on the annihilation rate are up to a factor of 10 more restrictive than previous limits. Among indirect WIMP searches with neutrinos, this analysis is highly complementary to Solar searches. In particular, at small WIMP masses around the iron resonance at 50 GeV, its sensitivity exceeds that of the IceCube Solar WIMP searches. The corresponding limits on the spin-independent cross section in this mass range are the best set by IceCube at the time of writing. Future analyses combining several years of data will further improve the sensitivity.

References

G. Bertone, D. Hooper, J. Silk, Phys. Rep. 405, 279 (2005)

G. Steigman, M.S. Turner, Nucl. Phys. B 253, 375 (1985)

D. Abercrombie et al., Nucl. Part. Phys. Proc. 273–275, 503–508 (2016)

E. Aprile et al., XENON100 Collaboration, Phys. Rev. Lett. 109, 181301 (2012)

D.S. Akerib et al., LUX Collaboration, Phys. Rev. Lett. 112, 091303 (2014)

R. Agnese et al., SuperCDMS Collaboration, Phys. Rev. Lett. 112, 041302 (2014)

G. Angloher et al., CRESST Collaboration, Eur. Phys. J. C 76, 25 (2016)

M. Ackermann et al., Fermi-LAT Collaboration, JCAP 1509, 008 (2015)

A. Abramowski et al., H.E.S.S. Collaboration, Phys. Rev. D 90, 112012 (2014)

J. Aleksić et al., MAGIC Collaboration, JCAP 1402, 008 (2014)

M. Boezio et al., PAMELA Collaboration, New J. Phys. 11, 105023 (2009)

M. Aguilar et al., AMS Collaboration, Phys. Rev. Lett. 110, 141102 (2013)

M.L. Ahnen et al., JCAP 1602, 039 (2016)

A. Gould, Astrophys. J. 328, 919 (1988)

K. Freese, Phys. Lett. B 167, 295 (1986)

W.H. Press, D.N. Spergel, Astrophys. J. 296, 679 (1985)

T.K. Gaisser, G. Steigman, S. Tilav, Phys. Rev. D 34, 2206 (1986)

G.D. Mack, J.F. Beacom, G. Bertone, Phys. Rev. D 76, 043523 (2007)

T. Bruch et al., Phys. Lett. B 674, 250 (2009)

A. Achterberg et al., AMANDA Collaboration, Astropart. Phys. 26, 129 (2006)

A. Davour, Ph.D. thesis, Universiteit Uppsala (2007)

S. Desai et al., Super-Kamiokande Collaboration, Phys. Rev. D 70, 083523 (2004)

S. Adrián-Martínez et al., ANTARES Collaboration, Proceedings of ICRC2015, PoS 1110 (2015). arXiv:1510.04508

M.G. Aartsen et al., IceCube Collaboration, Phys. Rev. Lett. 110, 131302 (2013)

M.G. Aartsen et al., IceCube Collaboration, Eur. Phys. J. C 75, 492 (2015)

M.G. Aartsen et al., IceCube Collaboration, Eur. Phys. J. C 75, 20 (2015)

M.G. Aartsen et al., IceCube Collaboration, Phys. Rev. D 88, 122001 (2013)

R. Abbasi et al., IceCube Collaboration, Nucl. Instrum. Methods A 601, 294 (2009)

A. Achterberg et al., IceCube Collaboration, Astropart. Phys. 26, 155 (2006)

R. Abbasi et al., IceCube Collaboration, Nucl. Instrum. Methods A 618, 139 (2010)

R. Abbasi et al., IceCube Collaboration, Astropart. Phys. 35, 615 (2012)

G. Jungman, M. Kamionkowski, K. Griest, Phys. Rep. 267, 195 (1996)

J.I. Read, J. Phys. G 41, 063101 (2014)

K.A. Olive et al., Particle Data Group, Chin. Phys. C 38, 090001 (2014)

S. Sivertsson, J. Edsjö, Phys. Rev. D 85, 123514 (2012)

W.F. McDonough, S. Sun, Chem. Geol. 120, 223 (1995)

K. Freese, J. Frieman, A. Gould, Phys. Rev. D 37, 3388 (1988)

G. Lake, Astron. J. 98, 1554 (1989)

J. Read, G. Lake, O. Agertz, V. Debattista, MNRAS 389, 1041 (2008)

J. Read, L. Mayer, A. Brooks, F. Governato, G. Lake, MNRAS 397, 44 (2009)

M. Blennow, J. Edsjö, T. Ohlsson, JCAP 0801, 021 (2008)

D. Heck et al., FZKA Rep. 6019, 1–90 (1998)

C. Andreopoulos et al., Nucl. Instrum. Methods A 614, 87 (2010)

A. Gazizov, M. Kowalski, Comput. Phys. Commun. 172, 203 (2005)

J. Ahrens et al., AMANDA Collaboration, Nucl. Instrum. Methods A 524, 169–194 (2004)

M.G. Aartsen et al., IceCube Collaboration, Phys. Rev. D 91, 072004 (2015)

A. Hoecker et al., PoS ACAT 040, 1–134 (2007)

G.J. Feldman, R.D. Cousins, Phys. Rev. D 57, 3873 (1998)

M.G. Aartsen et al., IceCube Collaboration, Proceedings of ICRC2015, PoS 1211 (2015). arXiv:1510.05226

M. Rosenblatt, Ann. Math. Stat. 27, 832 (1956)

E. Parzen, Ann. Math. Stat. 33, 1065 (1962)

B.W. Silverman, Density Estimation for Statistics and Data Analysis (Chapman & Hall, London, 1986)

M.G. Aartsen et al., IceCube Collaboration, Proceedings of ICRC2013, 0580 (2013). arXiv:1309.7010

M.G. Aartsen et al., IceCube Collaboration, Phys. Rev. D 88, 112008 (2013)

J. Conrad, O. Botner, A. Hallgren, C. Pérez de los Heros, Phys. Rev. D 67, 012002 (2003)

G. Steigman, B. Dasgupta, J.F. Beacom, Phys. Rev. D 86, 023506 (2012)

M. Danninger, C. Rott, Phys. Dark Univ. 5–6, 35–44 (2014)

K. Choi, C. Rott, Y. Itow, JCAP 1405, 049 (2014)

F. Nesti, P. Salucci, JCAP 1307, 016 (2013)

Acknowledgements

We acknowledge the support from the following agencies: U.S. National Science Foundation-Office of Polar Programs, U.S. National Science Foundation-Physics Division, University of Wisconsin Alumni Research Foundation, the Grid Laboratory Of Wisconsin (GLOW) grid infrastructure at the University of Wisconsin-Madison, the Open Science Grid (OSG) grid infrastructure; U.S. Department of Energy, and National Energy Research Scientific Computing Center, the Louisiana Optical Network Initiative (LONI) grid computing resources; Natural Sciences and Engineering Research Council of Canada, WestGrid and Compute/Calcul Canada; Swedish Research Council, Swedish Polar Research Secretariat, Swedish National Infrastructure for Computing (SNIC), and Knut and Alice Wallenberg Foundation, Sweden; German Ministry for Education and Research (BMBF), Deutsche Forschungsgemeinschaft (DFG), Helmholtz Alliance for Astroparticle Physics (HAP), Research Department of Plasmas with Complex Interactions (Bochum), Germany; Fund for Scientific Research (FNRS-FWO), FWO Odysseus programme, Flanders Institute to encourage scientific and technological research in industry (IWT), Belgian Federal Science Policy Office (Belspo); University of Oxford, United Kingdom; Marsden Fund, New Zealand; Australian Research Council; Japan Society for Promotion of Science (JSPS); the Swiss National Science Foundation (SNSF), Switzerland; National Research Foundation of Korea (NRF); Villum Fonden, Danish National Research Foundation (DNRF), Denmark.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

Funded by SCOAP3

About this article

Cite this article

Aartsen, M.G., Abraham, K., Ackermann, M. et al. First search for dark matter annihilations in the Earth with the IceCube detector. Eur. Phys. J. C 77, 82 (2017). https://doi.org/10.1140/epjc/s10052-016-4582-y

DOI: https://doi.org/10.1140/epjc/s10052-016-4582-y