Abstract

When averages of different experimental determinations of the same quantity are computed, each with statistical and systematic error components, the statistical and systematic components of the combined error are frequently quoted explicitly. These are important pieces of information, since statistical errors scale differently, and often more favorably, with the sample size than most systematic or theoretical errors. In this communication we describe a transparent procedure by which the statistical and systematic error components of the combination uncertainty can be obtained. We develop a general method and derive a general formula for the case of Gaussian errors with or without correlations. The method can easily be applied to other error distributions as well. For the case of two measurements, we also define disparity and misalignment angles, and discuss their relation to the combination weight factors.

1 Introduction

Error propagation and the averaging of results of individual measurements (at least in the context of strictly Gaussian errors, possibly with statistical or systematic correlations) are straightforward; they are covered in many textbooks, and there seems to be no open issue, because all that is required is multivariate analysis applied to normal distributions. It is therefore all the more surprising that, to the best of the author's knowledge, no explicit analytical expression is available for computing the statistical and systematic components of the uncertainty of the average, given a set of measurements of some quantity with individual, generally correlated, errors of statistical and systematic nature.

That is to say, while in the Gaussian context it is clear how to obtain the average including its uncertainty, and that the total error ought to be the quadratic sum of the statistical and systematic (and perhaps other, such as theoretical) error components, formulae for these individual components and their derivation have not received much attention. But the proper disclosure of the statistical (random) error component compared to the systematic uncertainty can be important. For example, in the design of future experimental facilities it is crucial to know how much precision (say, over the world average) can be gained by simply generating larger data samples, in contrast to possible technological or scientific breakthroughs. There are also more involved cases [3] of error propagation where knowledge of the individual error components of intermediate averages is helpful, if not crucial. The systematic error is, of course, more troublesome, as it cannot be reduced as straightforwardly by increasing the sample size N (see Notes).

In the next section, we review the standard procedure to average a number of experimental determinations of some observable quantity. We also mention an approximate method to obtain the statistical and systematic errors of an average of similar experiments, where the ratios of systematic to statistical components are comparable, or where the statistical errors dominate.

Then we turn to the main point, the exact determination of the individual error components of an average. We show that in the absence of correlations the squares of the individual statistical errors (or of any other type of uncertainty) are weighted by the inverse fourth powers of the total errors.

Next, we turn to correlations, starting with the simplest case of two measurements, for which we introduce the concepts of disparity and misalignment angles. Finally, we present exact relations for the case of more than two measurements, and address some problems that arise when new measurements are added to an existing average iteratively.

2 Simplified procedures

Suppose one is given a set of measurements of some quantity v, with central values \(v_i\), statistical (random) errors \(r_i\) and total systematic errors \(s_i\). For simplicity, we assume that the \(r_i\) and \(s_i\) are Gaussian distributed (the generalization to other error distributions is straightforward), in which case the total errors of the individual measurements are given by

\[ t_i^2 = r_i^2 + s_i^2 . \tag{1} \]

If we furthermore temporarily assume that the measurements are uncorrelated, then the central value \(\bar{v}\) of their combination is given by the precision weighted average,

\[ \bar{v} = \bar{t}^2 \sum_i \frac{v_i}{t_i^2} , \tag{2} \]

with total error

\[ \frac{1}{\bar{t}^2} = \sum_i \frac{1}{t_i^2} . \tag{3} \]

Similarly, the statistical component \(\bar{r}\) of \(\bar{t}\) is often approximated by

\[ \frac{1}{\bar{r}^2} = \sum_i \frac{1}{r_i^2} . \tag{4} \]

The systematic component \(\bar{s}\) of \(\bar{t}\) is then obtained from

\[ \bar{s}^2 = \bar{t}^2 - \bar{r}^2 . \tag{5} \]

For example, two measurements with

would result in

Notice that, while the individual errors in Eq. (6) are symmetric under the simultaneous exchange of the statistical and systematic errors (recall that all \(r_i\) and \(s_i\) are assumed Gaussian) and of the labels of the two measurements, the result (7) does not exhibit the corresponding symmetry, which would imply \(\bar{r} = \bar{s}\) exactly. The exact procedure introduced in the next section indeed yields \(\bar{r} = \bar{s}\) in this example.

Now consider the case where one of the systematic errors, say \(s_1\), grows and eventually \(s_1 \rightarrow \infty \). Then the weight of the first measurement approaches zero, and \(\bar{t} \rightarrow t_2\), as expected. One would also expect that \(\bar{r} \rightarrow r_2\) and \(\bar{s} \rightarrow s_2\); instead, \(\bar{r} < r_2\) remains constant and \(\bar{s} \rightarrow \sqrt{1924} \approx 44 > s_2\). Thus, one would face the unreasonable result that averaging with an irrelevant constraint (one with infinite uncertainty) decreases the statistical and increases the systematic error component, leaving only the total error invariant. In other words, if a set of measurements contains one with negligible statistical error, then the average would also have vanishing statistical error, regardless of how unimportant that one measurement is compared to the others. Clearly, Eq. (4) is then unsuitable even as an approximation.
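To make the failure of the approximate prescription concrete, the following Python sketch evaluates \(1/\bar{t}^2 = \sum_i 1/t_i^2\), the approximation \(1/\bar{r}^2 = \sum_i 1/r_i^2\), and \(\bar{s}^2 = \bar{t}^2 - \bar{r}^2\). The inputs \(r_1 = s_2 = 30\), \(r_2 = s_1 = 40\) are hypothetical: they are chosen only because they have the exchange symmetry discussed above and reproduce the limit \(\sqrt{1924}\) quoted in the text, and they are not necessarily the numbers of Eq. (6).

```python
import math

def approximate_split(r, s):
    """Approximate statistical/systematic split of an uncorrelated average.

    r, s: per-measurement statistical and systematic errors.
    Returns (t_bar, r_bar, s_bar) following Eqs. (1), (3), (4) and (5).
    """
    t2 = [ri**2 + si**2 for ri, si in zip(r, s)]       # Eq. (1)
    t_bar2 = 1.0 / sum(1.0 / x for x in t2)            # Eq. (3)
    r_bar2 = 1.0 / sum(1.0 / ri**2 for ri in r)        # Eq. (4), the approximation
    s_bar2 = t_bar2 - r_bar2                           # Eq. (5); >= 0 since t_i >= r_i
    return math.sqrt(t_bar2), math.sqrt(r_bar2), math.sqrt(s_bar2)

# Hypothetical errors with r_1 = s_2 and s_1 = r_2 (symmetric under the exchange).
print(approximate_split([30.0, 40.0], [40.0, 30.0]))   # r_bar != s_bar

# Let s_1 grow: t_bar -> t_2 = 50, but r_bar stays at 24 and s_bar -> sqrt(1924).
for s1 in (1e2, 1e4, 1e6):
    print(s1, approximate_split([30.0, 40.0], [s1, 30.0]))
```

The printout shows that \(\bar{r}\) never moves as the first measurement becomes irrelevant, which is the pathology described above.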

One can easily extend these considerations to the case where the individual measurements have a common contribution c entering the systematic error. The precision weighted average (2) and total error (3) are then to be replaced by

\[ \bar{v} = \bar{u}^2 \sum_i \frac{v_i}{u_i^2} , \qquad \bar{t}^2 = \bar{u}^2 + c^2 , \tag{8} \]

where the uncorrelated error components are given by

\[ u_i^2 = t_i^2 - c^2 , \qquad \frac{1}{\bar{u}^2} = \sum_i \frac{1}{u_i^2} , \tag{9} \]

and where \(t_i^2 \ge 0\) requires \(c^2 \ge - u_i^2\) for all i.

The general case of correlated errors will be dealt with later, but we note that the case of two measurements with Pearson's correlation coefficient \(\rho \) can always be brought to this form with \(c^2\) given by

\[ c^2 = \rho\, t_1 t_2 . \tag{10} \]

A proper (normalizable) probability distribution requires \(|\rho | \le 1\), so that from Eq. (10),

\[ -t_1 t_2 \le c^2 \le t_1 t_2 , \tag{11} \]

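guaranteeing that \(\bar{t}\) is real. On the other hand, \(u_1\) or \(u_2\), as well as \(\bar{u}\), in Eq. (9) may become imaginary, namely if

\[ c^2 > t_2^2 \quad \text{or} \quad c^2 > t_1^2 \tag{12} \]

(the first condition makes \(u_2\) imaginary, the second \(u_1\)),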

in which case the first or second measurement, respectively, contributes with negative weight, and \(\bar{v}\) no longer lies between \(v_1\) and \(v_2\). In this situation, one may instead (but equivalently) regard the measurement with negative weight as a measurement of some nuisance parameter related to c. Replacing the inequalities (12) by equalities gives rise to an infinite weight (one of the \(u_i = 0\)) as well as \(\bar{u} = 0\) and \(\bar{t} = c\).
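A minimal sketch of the common-contribution average, assuming the form described above: with \(u_i^2 = t_i^2 - c^2\) and \(1/\bar{u}^2 = \sum_i 1/u_i^2\), the weights are \(\bar{u}^2/u_i^2\) and \(\bar{t}^2 = \bar{u}^2 + c^2\). The function name and the numbers are illustrative only. With \(c^2 = \rho t_1 t_2\) chosen so that \(c^2 > t_2^2\), the first measurement acquires a negative weight and the average leaves the interval between \(v_1\) and \(v_2\).

```python
import math

def common_c_average(v, t, c2):
    """Average of measurements sharing a common covariance c2 = c^2.

    u_i^2 = t_i^2 - c^2 may be negative (imaginary u_i); the weights
    u_bar^2 / u_i^2 always sum to one, but one of them can be negative.
    Returns (v_bar, t_bar, weights).
    """
    u2 = [ti**2 - c2 for ti in t]                    # uncorrelated parts
    u_bar2 = 1.0 / sum(1.0 / x for x in u2)          # 1/u_bar^2 = sum 1/u_i^2
    weights = [u_bar2 / x for x in u2]
    v_bar = sum(w * vi for w, vi in zip(weights, v))
    t_bar = math.sqrt(u_bar2 + c2)                   # t_bar^2 = u_bar^2 + c^2
    return v_bar, t_bar, weights

# Hypothetical pair with rho = 0.8: c^2 = rho * t1 * t2 = 1.6 exceeds t_2^2 = 1.
v_bar, t_bar, w = common_c_average([10.0, 11.0], [2.0, 1.0], 0.8 * 2.0 * 1.0)
print(w, v_bar, t_bar)   # first weight negative, v_bar outside [10, 11]
```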

As a concrete example, each of the four experimental collaborations at the Z boson factory LEP 1 [4–7] has measured the quantity \({\mathcal A}_\tau \) (related to the polarization of final-state \(\tau \) leptons produced in Z decays), with the results shown in Table 1. A number of uncertainties affected the four measurements in a similar way, leading to relatively weak correlations [8]. While the correlation matrix is not exactly of the form (8), (9), it is well approximated by it when c is taken as the average of the square roots of the off-diagonal entries of the covariance matrix, \(c \approx 0.0016\).

The values in the last line of Table 1 are \(\bar{v}\), \(\bar{r}\), \(\bar{s}\), \(\bar{t}\) and \(\bar{u}\) as calculated from Eqs. (4), (5), (8) and (9). Here \(\bar{v}\), \(\bar{r}\) and \(\bar{s}\) agree with Table 4.3, and \(\bar{t}\) agrees with Eq. (4.9), of the LEP combination in Ref. [8].

Table 2 shows a more recent example, the determination of the weak mixing angle by the ATLAS Collaboration [9], which is based on purely central (CC) electron events, events with a forward electron (CF), and muon pairs. Here the average of the off-diagonal entries of the covariance matrix amounts to \(c \approx 0.0010\). In this example the dominant uncertainty comes from common systematics, namely from the imperfectly known parton distribution functions, which affect the three channels in very similar ways.

We will return to these examples after deriving exact alternatives to formula (4).

3 Derivatively weighted errors

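Our starting point is the basic property of a statistical error to scale as \(N^{-1/2}\) with the sample size. To implement this, we rewrite Eq. (1) with the sample size \(N_i\) of measurement i made explicit,

\[ t_i^2 = r_i^2(N_i) + s_i^2 , \qquad r_i^2(N_i) \propto N_i^{-1} . \tag{13} \]

Thus, the statistical error satisfies the relation,

\[ r_i^2 = - N_i \frac{\partial t_i^2}{\partial N_i} . \tag{14} \]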

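In the absence of correlations we can use Eq. (3) and demand, analogously,

\[ \bar{r}^2 = \sum_i r_i^2 \frac{\partial \bar{t}^2}{\partial t_i^2} = \bar{t}^4 \sum_i \frac{r_i^2}{t_i^4} . \tag{15} \]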

Notice that Eq. (4) can be recovered from Eq. (15) upon substituting \(t_i \rightarrow r_i\) and \(\bar{t} \rightarrow \bar{r}\). Eq. (15) means that the square of the relative statistical error of the combination, \(\bar{x}\), is given by the precision weighted average

\[ \bar{x}^2 = \bar{t}^2 \sum_i \frac{x_i^2}{t_i^2} , \tag{16} \]

where

\[ x_i \equiv \frac{r_i}{t_i} , \qquad \bar{x} \equiv \frac{\bar{r}}{\bar{t}} . \tag{17} \]

Furthermore, giving the systematic components a similar treatment, we find

\[ \bar{s}^2 = \bar{t}^4 \sum_i \frac{s_i^2}{t_i^4} , \tag{18} \]

so that the expected symmetry between the two types of uncertainty becomes manifest, and moreover, Eq. (5) now follows directly from Eqs. (15) and (18), rather than being enforced. The central result is that for uncorrelated errors, the squares of the statistical and systematic components (or those of any other type) of an average are the corresponding individual squares weighted by the inverse of the fourth power of the individual total errors, or equivalently, weighted by the square of the individual precisions \(t_i^{-2}\).
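The derivatively weighted split for uncorrelated errors, \(\bar{r}^2 = \bar{t}^4 \sum_i r_i^2/t_i^4\) and \(\bar{s}^2 = \bar{t}^4 \sum_i s_i^2/t_i^4\), fits in a few lines of Python. The second function is a numerical check of the underlying idea, under the assumption that measurement i has sample size \(N_i\) with \(r_i^2 \propto 1/N_i\): it rescales each \(N_i\) by \(1+\epsilon\) (so \(r_i^2 \to r_i^2/(1+\epsilon)\), \(s_i\) fixed) and forms \(-\sum_i N_i\,\partial \bar{t}^2/\partial N_i\) by forward differences. Function names and the hypothetical inputs \(r_1 = s_2 = 30\), \(r_2 = s_1 = 40\) are illustrative.

```python
import math

def exact_split(r, s):
    """Derivatively weighted split of an uncorrelated average.

    r_bar^2 = t_bar^4 * sum r_i^2 / t_i^4, s_bar^2 = t_bar^4 * sum s_i^2 / t_i^4.
    Returns (t_bar, r_bar, s_bar).
    """
    t2 = [ri**2 + si**2 for ri, si in zip(r, s)]
    t_bar2 = 1.0 / sum(1.0 / x for x in t2)
    r_bar2 = t_bar2**2 * sum(ri**2 / x**2 for ri, x in zip(r, t2))
    s_bar2 = t_bar2**2 * sum(si**2 / x**2 for si, x in zip(s, t2))
    return math.sqrt(t_bar2), math.sqrt(r_bar2), math.sqrt(s_bar2)

def r_bar2_from_scaling(r, s, eps=1e-6):
    """Finite-difference check of r_bar^2 = -sum_i N_i d(t_bar^2)/dN_i.

    Increasing N_i by a factor (1 + eps) rescales r_i^2 by 1/(1 + eps),
    while the systematic errors s_i stay fixed.
    """
    def t_bar2(rr):
        return 1.0 / sum(1.0 / (ri**2 + si**2) for ri, si in zip(rr, s))

    base = t_bar2(r)
    total = 0.0
    for i in range(len(r)):
        rr = list(r)
        rr[i] = r[i] / math.sqrt(1.0 + eps)
        total -= (t_bar2(rr) - base) / eps   # approximates -N_i dt_bar^2/dN_i
    return total

print(exact_split([30.0, 40.0], [40.0, 30.0]))    # r_bar = s_bar for this symmetric input
print(exact_split([30.0, 40.0], [1e6, 30.0]))     # approaches (t_2, r_2, s_2) = (50, 40, 30)
print(r_bar2_from_scaling([30.0, 40.0], [40.0, 30.0]))
```

Unlike the approximate prescription, this split restores the exchange symmetry and reduces to the surviving measurement when the other one becomes irrelevant.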

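Returning to the case where the only source of correlation is a common contribution \(c \ne 0\) equally affecting all measurements, we find from Eq. (8),

\[ \bar{r}^2 = \bar{u}^4 \sum_i \frac{r_i^2}{u_i^4} , \qquad \bar{s}^2 = \bar{u}^4 \sum_i \frac{\tilde{s}_i^2}{u_i^4} + c^2 , \tag{19} \]

where

\[ \tilde{s}_i^2 \equiv s_i^2 - c^2 = u_i^2 - r_i^2 \tag{20} \]

is the part of the systematic error not shared with the other measurements.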

Applied to the case of \({\mathcal A}_\tau \) measurements we now find

which agree with the approximate numbers in Table 1 within round-off precision, though not exactly.
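For a common systematic contribution c, applying the derivative weighting at fixed \(c^2\) gives, in our own restatement, \(\bar{r}^2 = \bar{u}^4 \sum_i r_i^2/u_i^4\) and \(\bar{s}^2 = \bar{u}^4 \sum_i (s_i^2 - c^2)/u_i^4 + c^2\), with \(u_i^2 = t_i^2 - c^2\) and \(1/\bar{u}^2 = \sum_i 1/u_i^2\). The sketch below returns the squares; the inputs in the checks are hypothetical, and the sum rule \(\bar{r}^2 + \bar{s}^2 = \bar{t}^2 = \bar{u}^2 + c^2\) holds by construction.

```python
def common_c_split(r, s, c2):
    """Squared stat/syst components when the only correlation is a common c^2.

    Assumes c^2 is part of every systematic error, s_i^2 = (s_i^2 - c^2) + c^2.
    Returns (t_bar^2, r_bar^2, s_bar^2).
    """
    u2 = [ri**2 + si**2 - c2 for ri, si in zip(r, s)]   # u_i^2 = t_i^2 - c^2
    u_bar2 = 1.0 / sum(1.0 / x for x in u2)
    r_bar2 = u_bar2**2 * sum(ri**2 / x**2 for ri, x in zip(r, u2))
    s_bar2 = u_bar2**2 * sum((si**2 - c2) / x**2 for si, x in zip(s, u2)) + c2
    t_bar2 = u_bar2 + c2
    return t_bar2, r_bar2, s_bar2

# Hypothetical three measurements sharing c^2 = 2.
print(common_c_split([3.0, 4.0, 5.0], [4.0, 3.0, 2.0], 2.0))
```

The systematic part equals the full quadratic form \(\sum_{ij} \omega_i \omega_j S_{ij}\) with weights \(\omega_i = \bar{u}^2/u_i^2\) and systematic covariance \(S_{ii} = s_i^2\), \(S_{i\ne j} = c^2\), which the checks confirm.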

4 Bivariate error distributions

As a preparation for the most general case of N measurements with arbitrary correlation coefficients, we first discuss in some detail the case \(N = 2\). Recall that the covariance matrix in this case reads

\[ T = \begin{pmatrix} t_1^2 & \rho\, t_1 t_2 \\ \rho\, t_1 t_2 & t_2^2 \end{pmatrix} . \tag{22} \]

The precision weighted average is given by the expression

\[ \bar{v} = \omega\, v_1 + (1 - \omega)\, v_2 , \tag{23} \]

where

\[ \omega = \frac{t_2^2 - \rho\, t_1 t_2}{t_1^2 + t_2^2 - 2\rho\, t_1 t_2} , \tag{24} \]

obtained by maximizing the likelihood of a bivariate Gaussian distribution,

\[ \mathcal{L}(v) = \frac{1}{2\pi \sqrt{\det T}} \exp\left[ -\frac{\chi^2(v)}{2} \right] , \tag{25} \]

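where

\[ \chi^2(v) = \begin{pmatrix} v_1 - v & v_2 - v \end{pmatrix} T^{-1} \begin{pmatrix} v_1 - v \\ v_2 - v \end{pmatrix} . \tag{26} \]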

The one standard deviation total error \(\bar{t}\) is defined by

\[ \chi^2(\bar{v} \pm \bar{t}) = \chi^2(\bar{v}) + 1 , \tag{27} \]

which results in

\[ \bar{t}^2 = \frac{(1 - \rho^2)\, t_1^2 t_2^2}{t_1^2 + t_2^2 - 2\rho\, t_1 t_2} , \tag{28} \]

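or conversely,

\[ \rho = \frac{\bar{t}^2 \mp \sqrt{(t_1^2 - \bar{t}^2)(t_2^2 - \bar{t}^2)}}{t_1 t_2} , \tag{29} \]

where the upper sign applies if both weights are non-negative, \(\rho \le \min(t_1,t_2)/\max(t_1,t_2)\), and the lower sign otherwise.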

Equation (29) is useful in practice if one needs to recover the correlation between two measurements from their individual uncertainties and the error of their combination.
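The bivariate relations translate directly into code. This sketch computes \(\omega = (t_2^2 - \rho t_1 t_2)/(t_1^2 + t_2^2 - 2\rho t_1 t_2)\), the average, and \(\bar{t}^2 = (1-\rho^2) t_1^2 t_2^2/(t_1^2 + t_2^2 - 2\rho t_1 t_2)\), and then inverts the last relation for \(\rho\). Because \(\bar{t}^2\), as a function of \(\rho\), rises to a maximum at \(\rho = t_\text{min}/t_\text{max}\) and falls again, the inversion has two roots; the smaller one corresponds to non-negative weights. Function names and numbers are illustrative.

```python
import math

def bivariate_average(v1, v2, t1, t2, rho):
    """Weight, average and total error for two measurements with correlation rho."""
    d = t1**2 + t2**2 - 2.0 * rho * t1 * t2
    omega = (t2**2 - rho * t1 * t2) / d                  # weight of measurement 1
    v_bar = omega * v1 + (1.0 - omega) * v2
    t_bar = math.sqrt((1.0 - rho**2) * t1**2 * t2**2 / d)
    return v_bar, t_bar, omega

def rho_from_combination(t1, t2, t_bar):
    """Solve the t_bar relation for rho; returns (smaller root, larger root).

    The smaller root is the one with non-negative weights, i.e.
    rho <= min(t1, t2) / max(t1, t2).
    """
    root = math.sqrt(max(0.0, (t1**2 - t_bar**2) * (t2**2 - t_bar**2)))
    return (t_bar**2 - root) / (t1 * t2), (t_bar**2 + root) / (t1 * t2)

# Hypothetical example: recover rho = 0.3 from t1 = 2, t2 = 1 and the combined error.
v_bar, t_bar, omega = bivariate_average(10.0, 11.0, 2.0, 1.0, 0.3)
print(rho_from_combination(2.0, 1.0, t_bar))
```

Both roots reproduce the same \(\bar{t}\); the larger one implies a negative weight for the less precise measurement, so external information is needed to decide between them.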

We now turn to the generalization of Eq. (15) in the presence of a systematic correlation. When applying our method of derivatively weighted errors to Eq. (28), it is important to keep \(c^2 = \rho t_1 t_2\) fixed (this would be different in the presence of a statistical correlation). Doing this, we obtain

\[ \bar{r}^2 = \omega^2 r_1^2 + (1 - \omega)^2 r_2^2 . \tag{30} \]

For the systematic component we find

\[ \bar{s}^2 = \omega^2 s_1^2 + (1 - \omega)^2 s_2^2 + 2\omega(1 - \omega)\, c^2 , \tag{31} \]

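and we also note that

\[ \bar{r}^2 + \bar{s}^2 = \omega^2 t_1^2 + (1 - \omega)^2 t_2^2 + 2\omega(1 - \omega)\, c^2 = \bar{t}^2 . \tag{32} \]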

More generally, one can compute the error contribution \(\bar{q}\) of any individual source of uncertainty q to the total error as

\[ \bar{q}^2 = \omega^2 q_1^2 + (1 - \omega)^2 q_2^2 + 2\omega(1 - \omega)\, c_q^2 , \tag{33} \]

where \(c_q^2\) is the contribution of q to \(c^2\), with the constraint

\[ \sum_q c_q^2 = c^2 . \tag{34} \]

If the two uncertainties \(q_i\) are fully correlated or anti-correlated between the two measurements, then

\[ c_q^2 = \pm\, q_1 q_2 , \tag{35} \]

where the minus sign corresponds to anti-correlation.
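A sketch of the per-source breakdown for two measurements, \(\bar{q}^2 = \omega^2 q_1^2 + (1-\omega)^2 q_2^2 + 2\omega(1-\omega) c_q^2\), where each source supplies its contribution \(c_q^2\) to the covariance (\(q_1 q_2\) if fully correlated, \(-q_1 q_2\) if fully anti-correlated, 0 if uncorrelated). The dictionary layout and the numbers are hypothetical. The squares of all contributions add up to \(\bar{t}^2\), and a fully correlated source contributes \(|\omega q_1 + (1-\omega) q_2|\).

```python
import math

def bivariate_breakdown(t1, t2, sources):
    """Error contribution of each source to the average of two measurements.

    sources maps a name to (q1, q2, cq2): the source's errors on the two
    measurements and its contribution cq2 to the covariance c^2
    (q1*q2 if fully correlated, -q1*q2 if fully anti-correlated, 0 if uncorrelated).
    Assumes t_i^2 = sum over sources of q_i^2 and c^2 = sum of cq2.
    Returns (omega, {name: q_bar}).
    """
    c2 = sum(cq2 for _, _, cq2 in sources.values())
    omega = (t2**2 - c2) / (t1**2 + t2**2 - 2.0 * c2)
    contributions = {}
    for name, (q1, q2, cq2) in sources.items():
        q_bar2 = (omega**2 * q1**2 + (1.0 - omega)**2 * q2**2
                  + 2.0 * omega * (1.0 - omega) * cq2)
        contributions[name] = math.sqrt(q_bar2)
    return omega, contributions

# Hypothetical inputs: uncorrelated statistics, one fully correlated and one
# uncorrelated systematic source.
sources = {"stat": (3.0, 4.0, 0.0),
           "sys_corr": (2.0, 1.5, 2.0 * 1.5),
           "sys_unc": (1.0, 2.0, 0.0)}
t1 = math.sqrt(sum(q1**2 for q1, _, _ in sources.values()))
t2 = math.sqrt(sum(q2**2 for _, q2, _ in sources.values()))
omega, contributions = bivariate_breakdown(t1, t2, sources)
print(omega, contributions)
```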

The formalism is now general enough to allow for statistical correlations as well. As we will illustrate later, knowing all the \(\bar{q}\) is particularly useful if one wishes to add measurements to a combination successively, one by one, rather than having to deal with a multi-dimensional covariance matrix. This situation frequently arises in historical contexts, when new measurements add information to a set of older ones rather than superseding them. But there is a problematic issue with this, which apparently is not widely appreciated.

5 Disparity and misalignment angles

Continuing with the case of two measurements, we can relate \(\rho \) to the rotation angle necessary to diagonalize the matrix T. If we define an angle \(\beta \) quantifying the disparity of the total errors of two measurements through

then the diagonal form of T is \(R T R^T\) with

and

where

The angle \(\alpha \) may be interpreted as a measure of the misalignment of the two measurements with respect to the primary observable of interest v. Uncorrelated measurements of v are aligned (\(\rho = \alpha = 0\)), while the case \(|\rho | \gg |\tan \beta |\) reflects a high degree of misalignment. Indeed, in the extreme case where \(\beta = 0\) (\(|\alpha | = 90^\circ \)), two correlated measurements (\(\rho \ne 0\)) of the same quantity v are equivalent to two uncorrelated measurements, only one of which has any sensitivity to v at all. Reaching the decorrelated configuration involves subtle cancellations between correlations and anti-correlations of the statistical and systematic error components of the original measurements.
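As a numerical illustration, using our own parametrization rather than the angles \(\beta\) and \(\alpha\) defined above, the sketch below diagonalizes T by a rotation angle \(\theta\) with \(\tan 2\theta = 2\rho t_1 t_2/(t_1^2 - t_2^2)\) and reports how strongly each rotated combination depends on v. Assuming, as the name suggests, that zero disparity corresponds to equal total errors \(t_1 = t_2\), it reproduces the extreme case just described: the rotated combinations are uncorrelated and one of them has no sensitivity to v.

```python
import math

def rotate_to_uncorrelated(t1, t2, rho):
    """Rotate two correlated measurements x1, x2 into uncorrelated combinations.

    T = [[t1^2, c], [c, t2^2]] with c = rho*t1*t2. The rotation
    y1 = cos(th) x1 + sin(th) x2,  y2 = -sin(th) x1 + cos(th) x2
    is diagonal for tan(2 th) = 2c / (t1^2 - t2^2). If <x1> = <x2> = v,
    then <y1> = (cos th + sin th) v and <y2> = (cos th - sin th) v.
    Returns (th, (var_y1, var_y2), (sens_y1, sens_y2), cov_y1y2).
    """
    c = rho * t1 * t2
    th = 0.5 * math.atan2(2.0 * c, t1**2 - t2**2)
    ct, st = math.cos(th), math.sin(th)
    var1 = ct**2 * t1**2 + 2.0 * ct * st * c + st**2 * t2**2
    var2 = st**2 * t1**2 - 2.0 * ct * st * c + ct**2 * t2**2
    cov = ct * st * (t2**2 - t1**2) + (ct**2 - st**2) * c   # vanishes by construction
    return th, (var1, var2), (ct + st, ct - st), cov

# Hypothetical equal errors with rho = 0.5: th = 45 degrees, y2 carries no information on v.
print(rotate_to_uncorrelated(1.0, 1.0, 0.5))
```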

We can now express the weight factor \(\omega \) in terms of the disparity and misalignment angles \(\beta \) and \(\alpha \),

In the case \(\rho = \alpha = 0\) this reduces to

and Eq. (23) now reads

One can write equations of the form (41) and (42) for \(\rho \ne 0\), as well, with shifted angles \(\bar{\beta }\) related to \(\beta \) by

However, this ceases to work in the presence of a negative weight (\(\omega < 0\)), in which case one would need to replace the trigonometric functions by hyperbolic ones.

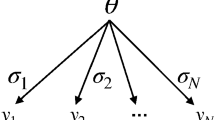

6 Multivariate error distributions

To treat cases of more than two measurements with generic correlations, one can choose one of two strategies. Either one effectively reduces the procedure to cases of just two measurements (in general at the price of some precision loss) by iteratively including additional measurements, or one deals with a multi-dimensional covariance matrix.

We first discuss the latter approach, starting with the trivariate case where

The average can be written as

with

The total error is given by

and for its statistical and systematic components we find (in the absence of statistical correlations),

respectively. The generalization of Eq. (33) is now also straightforward; e.g., in the case of 100% correlation among the three measurements we have,

Analogous expressions hold for cases with \(N > 3\) measurements. For example, the covariance matrix for the case of \(N=4\) reads

All that remains to be computed are the weight factors \(\omega _i\). We found a convenient expression for them, e.g.,

Thus, the \(\omega _i\) can be obtained by computing the determinant of a matrix which is constructed by subtracting the ith column from each of the other columns (or the ith row from each of the other rows) and then removing the ith row and column. The reader is now equipped to handle cases of any N exactly.
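The determinant recipe can be checked numerically. The sketch below builds, for each i, the matrix with entries \(V_{jk} - V_{ik}\) (row i subtracted from the other rows, then row and column i removed), takes its determinant, and normalizes so that \(\sum_i \omega_i = 1\). The normalization is our assumption about how the recipe is completed; it agrees with the generalized least-squares weights \(\omega \propto V^{-1}\mathbf{1}\). For a single error source with covariance matrix Q (V being the sum over sources), the helper `breakdown` returns \(\omega^T Q \omega\), our compact restatement of the derivatively weighted contributions. Pure Python keeps the example dependency-free; the matrices are hypothetical.

```python
def det(m):
    """Determinant by Gaussian elimination with partial pivoting (small matrices)."""
    a = [row[:] for row in m]
    n = len(a)
    result = 1.0
    for k in range(n):
        p = max(range(k, n), key=lambda i: abs(a[i][k]))
        if a[p][k] == 0.0:
            return 0.0
        if p != k:
            a[k], a[p] = a[p], a[k]
            result = -result
        result *= a[k][k]
        for i in range(k + 1, n):
            f = a[i][k] / a[k][k]
            for j in range(k, n):
                a[i][j] -= f * a[k][j]
    return result

def weights_from_minors(V):
    """omega_i: subtract row i from the other rows, drop row and column i,
    take the determinant, then normalize so that the weights sum to one."""
    n = len(V)
    dets = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        sub = [[V[j][k] - V[i][k] for k in others] for j in others]
        dets.append(det(sub))
    total = sum(dets)
    return [d / total for d in dets]

def breakdown(omega, Q):
    """Squared contribution omega^T Q omega of a source with covariance matrix Q."""
    n = len(omega)
    return sum(omega[i] * omega[j] * Q[i][j] for i in range(n) for j in range(n))

# Hypothetical three-channel example: diagonal statistical part, correlated systematics.
R = [[1.0, 0.0, 0.0], [0.0, 4.0, 0.0], [0.0, 0.0, 2.25]]
S = [[1.0, 0.6, 0.3], [0.6, 1.0, 0.5], [0.3, 0.5, 1.0]]
V = [[R[i][j] + S[i][j] for j in range(3)] for i in range(3)]
w = weights_from_minors(V)
print(w, breakdown(w, R) ** 0.5, breakdown(w, S) ** 0.5, breakdown(w, V) ** 0.5)
```

For \(N = 2\) the recipe reduces to \(\omega_1 \propto t_2^2 - \rho t_1 t_2\), the numerator of the bivariate weight.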

The alternative strategy to compute averages is to add more measurements iteratively. We illustrate this using the example of the ATLAS results on the weak mixing angle (see Table 2). Besides the statistical error (there were no statistical correlations), there were seven sources of systematic uncertainty, six of which were correlated between at least two channels. The breakdown of these uncertainties as quoted by the ATLAS Collaboration [9] is shown in Table 3.

Good agreement is observed with Ref. [9], where the small differences are consistent with precision loss due to rounding. Indeed, the fact that there are round-off issues can already be seen from Ref. [9], where the quoted total error of the CF electron channel is smaller than the sum (in quadrature) of the statistical, PDF, and other systematic errors. A similar issue can be observed regarding the quoted combined systematic error which is larger than the sum in quadrature of its components.

However, there are small differences between the results from the exact procedure using Eqs. (50) and (51) and the iterative strategy using Eqs. (30) and (33). The reason can be traced to the asymmetric way in which the error due to higher orders enters the two electron channels. This induces subtle dependences of all sources of uncertainty (even those that were initially uncorrelated) on the correlated ones. It even affects the uncertainty induced by the finite muon energy resolution, which does not enter the electron channels at all. To account for this, one can introduce additional contributions \(\Delta c_q^2\) to the off-diagonal entry of the bivariate covariance matrix of the all-electron result and the muon channel. These \(\Delta c_q^2\) can be chosen to enforce the exact result, but it is impossible to compute them beforehand. In fact, they depend on the new measurement to be added (here the muon channel) and not just on the initial measurements (here the two electron channels). Moreover, the \(\Delta c_q^2\) necessary to enforce the correct average central value \(\bar{v}\) differs strongly from the \(\Delta c_q^2\) necessary to enforce the total error \(\bar{t}\). This observation reflects the fact that the combination principle can be violated [12]; we state this principle as the requirement that the combination of a number of measurements must not depend on the order in which they are added to the average.

Thus, the iterative procedure generally suffers from a loss of precision. In this example the procedure nevertheless gives an excellent approximation, because the uncertainty from higher order corrections (the origin of the asymmetric uncertainty) is itself very small. But there are cases in which the iterative procedure does not provide even a crude approximation, and where one should, if possible, use the exact method based on the full covariance matrix. Unfortunately, its construction is not always possible, e.g., due to incomplete documentation of past results. Recent discussions of related aspects of this conundrum can be found in Refs. [13, 14].
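The order dependence can be illustrated with three hypothetical measurements. The sketch compares the full-covariance average (weights \(\propto V^{-1}\mathbf{1}\), obtained here by Cramer's rule) with a two-step combination that first averages measurements 1 and 2 and then adds measurement 3, propagating the covariance between the partial average and measurement 3 linearly. This is a simplified stand-in for the source-by-source bookkeeping described above, not the procedure behind Table 3. When measurement 3 is equally correlated with measurements 1 and 2, the two results coincide; otherwise the iterative variance is larger.

```python
def det3(m):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def variance(w, V):
    return sum(w[i] * w[j] * V[i][j] for i in range(3) for j in range(3))

def exact_blue3(V):
    """Full-covariance weights omega ∝ V^{-1} 1; the factor 1/det(V) cancels."""
    x = [det3([[1.0 if k == i else V[j][k] for k in range(3)] for j in range(3)])
         for i in range(3)]
    w = [xi / sum(x) for xi in x]
    return w, variance(w, V)

def pair_weight(a, b, cab):
    """Bivariate weight of the first input for variances a, b and covariance cab."""
    return (b - cab) / (a + b - 2.0 * cab)

def iterative_blue3(V):
    """Combine measurements 1 and 2 first, then add measurement 3."""
    w12 = pair_weight(V[0][0], V[1][1], V[0][1])
    var12 = (w12**2 * V[0][0] + (1 - w12)**2 * V[1][1]
             + 2 * w12 * (1 - w12) * V[0][1])
    cov12_3 = w12 * V[0][2] + (1 - w12) * V[1][2]    # linear error propagation
    lam = pair_weight(var12, V[2][2], cov12_3)
    w = [lam * w12, lam * (1 - w12), 1 - lam]
    return w, variance(w, V)

# Hypothetical covariances: measurement 3 equally vs. unequally correlated with 1 and 2.
V_sym = [[1.0, 0.3, 0.2], [0.3, 2.0, 0.2], [0.2, 0.2, 1.5]]
V_asym = [[1.0, 0.3, 0.6], [0.3, 2.0, 0.0], [0.6, 0.0, 1.5]]
for V in (V_sym, V_asym):
    print(exact_blue3(V)[1], iterative_blue3(V)[1])
```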

7 Summary and conclusions

In summary, we have developed a formalism (derivatively weighted errors) to derive formulas for random errors or for any error type of uncorrelated Gaussian nature. We introduced what we call disparity and misalignment angles to describe the case of two measurements, and found their relation to the statistical weight factors. For cases of more than two measurements with known covariances, we derived explicit formulas in a form which (as far as we are aware) has not appeared before.

It is remarkable that even in the context of purely Gaussian errors and perfectly known correlations there are intractable problems at the most fundamental statistical level. Specifically, they may arise even when a number of observations of the same quantity are combined, the error sources are recorded, and the assumptions regarding their correlations are spelled out carefully. In statistical terms, one can conclude that such a combination, despite all its recorded details, is not a sufficient statistic of the available information. The inclusion of further observations of the same quantity is then in general ambiguous.

On the other hand, there is no ambiguity in the absence of correlations or when any correlation is common to the set of observations to be combined. The fact that the ambiguities disappear in certain limits then reopens the possibility of useful approximations. For example, if an iterative procedure has to be chosen, one should first combine measurements where the dominant correlation is given approximately by a common contribution. Similarly, measurements with small or no correlation with the other ones are ideally kept for last.

Notes

Simply collecting more data often helps to reduce even the systematic error component, because some error sources that are nominally classified as systematic may themselves be traceable to statistical measurements. Moreover, with growing statistics one may restrict oneself to cleaner data by imposing stronger selection criteria (cuts). Nevertheless, the \(N^{-1/2}\) scaling of the statistical component is usually out of reach.

References

[1] A. Gelman, J.B. Carlin, H.S. Stern, D.B. Rubin, Bayesian Data Analysis (Chapman & Hall, 1995)

[2] L. Lista, Lect. Notes Phys. 909 (2016)

[3] J. Erler, P. Masjuan, H. Spiesberger (2015, in preparation)

[4] ALEPH Collaboration, A. Heister et al., Eur. Phys. J. C 20, 401 (2001). arXiv:hep-ex/0104038

[5] DELPHI Collaboration, P. Abreu et al., Eur. Phys. J. C 14, 585 (2000)

[6] L3 Collaboration, M. Acciarri et al., Phys. Lett. B 429, 387 (1998)

[7] OPAL Collaboration, G. Abbiendi et al., Eur. Phys. J. C 21, 1 (2001). arXiv:hep-ex/0103045

[8] ALEPH, DELPHI, L3, OPAL and SLD Collaborations, LEP Electroweak Working Group and SLD Electroweak and Heavy Flavour Groups, S. Schael et al., Phys. Rep. 427, 257 (2006). arXiv:hep-ex/0509008

[9] ATLAS Collaboration, G. Aad et al., arXiv:1503.03709 [hep-ex]

[10] L. Lyons, D. Gibaut, P. Clifford, Nucl. Instrum. Methods A 270, 110 (1988)

[11] A. Valassi, Nucl. Instrum. Methods A 500, 391 (2003)

[12] L. Lyons, A.J. Martin, D.H. Saxon, Phys. Rev. D 41, 982 (1990)

[13] R. Nisius, Eur. Phys. J. C 74(8), 3004 (2014). arXiv:1402.4016 [physics.data-an]

[14] L. Lista, Nucl. Instrum. Methods A 764, 82 (2014). arXiv:1405.3425 [physics.data-an]

Acknowledgments

This work received support from PAPIIT (DGAPA–UNAM) project IN106913 and from CONACyT (México) project 151234.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

Funded by SCOAP3

About this article

Cite this article

Erler, J. On the combination procedure of correlated errors. Eur. Phys. J. C 75, 453 (2015). https://doi.org/10.1140/epjc/s10052-015-3688-y

DOI: https://doi.org/10.1140/epjc/s10052-015-3688-y