Abstract

Recent applications of machine-learned normalizing flows to sampling in lattice field theory suggest that such methods may be able to mitigate critical slowing down and topological freezing. However, these demonstrations have been at the scale of toy models, and it remains to be determined whether they can be applied to state-of-the-art lattice quantum chromodynamics calculations. Assessing the viability of sampling algorithms for lattice field theory at scale has traditionally been accomplished using simple cost scaling laws, but as we discuss in this work, their utility is limited for flow-based approaches. We conclude that flow-based approaches to sampling are better thought of as a broad family of algorithms with different scaling properties, and that scalability must be assessed experimentally.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Lattice quantum field theory (LQFT) is the only known systematically improvable approach to calculating physical observables in quantum field theories that exhibit non-perturbative dynamics. LQFT has been applied to first-principles studies of quantum chromodynamics (QCD) at low energy scales [1,2,3,4,5,6,7], to test proposed models for physics beyond the Standard Model [8,9,10,11], and to investigate various condensed matter systems [12, 13]. In this framework, discretized path integrals are evaluated numerically using stochastic Monte Carlo estimators,

where \(\phi _i\) are samples of the lattice field degrees of freedom drawn from the probability distribution defined by the Euclidean lattice action S,

The partition function Z is typically unknown, but this is not an obstacle to sampling with Markov chain Monte Carlo (MCMC) algorithms. At present, Hybrid/Hamiltonian Monte Carlo (HMC) [14,15,16] is the state-of-the-art MCMC algorithm for generating QCD field configurations, but its computational cost grows rapidly as the continuum limit is approached [17,18,19].

Recent work has explored whether improved configuration generation algorithms can be achieved using machine learning (ML) [20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62]. For example, promising proof-of-principle results using normalizing flows [63,64,65] have been obtained in a range of theories with different properties including gauge symmetries [27,28,29,30,31,32,33,34,35], fermionic degrees of freedom [32,33,34,35,36], and the existence of distinct topological sectors or multiple modes of the probability distribution [23,24,25,26,27, 29,30,31,32,33,34,35]. This includes a first demonstration for lattice QCD [35].

These results raise the question of whether a flow-based approach can be applied to lattice QCD calculations at state-of-the-art scale. The practical question is whether flow models can provide more cost-effective sampling than HMC for QCD at parameters and volumes of interest. Discussions of this question often conflate two separate concerns,

-

Scalability: whether an approach can be practically applied to some target theory, for which the ultimate question is the cost to generate N samples at a given set of parameters;

-

Cost scaling: how the cost of an approach changes as certain parameters are varied – in this case, the parameters of the target lattice QCD theory, including the parameters of the action and the lattice geometry.

When we ask whether an approach to gauge field sampling for QCD is “scalable,” we are asking not about its precise scaling properties, but whether it will work for QCD on state-of-the-art volumes at state-of-the-art parameters, and whether it can be used to push the state of the art further. For the sampling of gauge field configurations using HMC, cost scaling relations often directly and usefully predict scalability. However, the range of applicability, and hence utility, of any practical cost-scaling relations for flow-based algorithms is much more limited. The rest of this manuscript is devoted to elucidating this statement.

To that end, Sect. 2 first reviews HMC and flow-based sampling methods for lattice field theory, establishing definitions and notation for the rest of the discussion. Section 3 then compares flow-based approaches with HMC, emphasizing counterintuitive differences to the more familiar HMC paradigm. Section 4 presents numerical illustrations of different aspects of the scaling of flow-based approaches, demonstrating the limitations of scaling laws in assessing scalability. Finally, Sect. 5 speculates on potential paradigms for flow-based sampling at scale and discusses the outlook for these methods.

2 Preliminaries

2.1 HMC

In the HMC approach, gauge field configurations are generated in a Markov chain, where approximate molecular dynamics evolution in the fictitious “Monte Carlo time” direction is used to propose updates to the chain. Each step of the HMC algorithm proceeds by drawing fictitious momentum variables conjugate to each lattice field degree of freedom, integrating the Hamiltonian equations of motion, then accepting or rejecting the resulting new field configuration with a Metropolis test to correct for integrator errors and guarantee exactness of sampling [14,15,16]. For gauge theories with fermion content, such as QCD, the gauge fields are evolved in a fixed background of auxiliary “pseudofermion” fields, which encode fermionic effects stochastically [66, 67]. In this case, each integrator step requires solving large sparse systems of linear equations using implicit methods like conjugate gradient. For QCD, these solves typically dominate the computational cost. See e.g. Refs. [68,69,70] for more detailed reviews.

2.2 Normalizing flows and flow-based sampling

Normalizing flows are a framework for building exact, numerically tractable, machine-learned maps between probability distributions. In this construction, a diffeomorphic flow function f is applied to transform samples z drawn from a base distribution r to obtain samples \(\phi = f(z)\) distributed according to a model distribution q. The flow f is parametrized by neural networks and can be optimized to some objective by the minimization of a “loss function”. Conservation of probability gives the density of transformed samples,

Various frameworks have been developed to construct expressive, trainable flow transformations for which this expression can be evaluated tractably [27, 28, 34, 36, 64, 71,72,73,74,75,76,77].

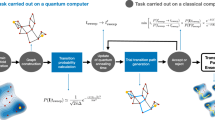

Flow transformations are a general tool which can be applied as components of different sampling approaches (and in non-sampling applications). In particular, in the “direct sampling” approach, which is the primary concern of this work, flows relate a simple, easy-to-sample base distribution to a learned approximation \(q \approx p\) of the target lattice field distribution, \({p=\exp [-S]/Z}\). Evaluating the reweighting factors \(w(\phi )=p(\phi )/q(\phi )\) between the model q and target p provides sufficient information to compute expectations under p. This may be accomplished e.g. by computing reweighted expectations as \(\langle {\mathcal {O}}\rangle _p = \langle {w \mathcal {O}}\rangle _q\). Alternatively, samples from p can be generated by using flow model samples as proposals for the Independence Metropolis algorithm [78,79,80] with acceptance probability \({p_\text {acc}(\phi \rightarrow \phi ') = \min [1, w(\phi ')/w(\phi )]}\). Powerfully, this approach allows composition with other MCMC algorithms with complementary properties [25]. As discussed further below, these straightforward applications are only a few examples of how flow-based sampling algorithms may be constructed.

Even within a particular sampling framework, there is no unique way to construct a flow model. Each concrete realization – a model architecture – is defined by many different choices. From high- to low-level, these are broadly:

-

domain of the variables z, \(\phi \) (e.g. \(\mathbb {R}\), \(\textrm{SU}(N)\), multiple fields);

-

the choice of base distribution r(z) (e.g. Gaussian, Haar uniform, free theory);

-

strategy for constructing flow functions (e.g. convex potential flows [74,75,76], neural ODEs [77], or coupling layers [64, 71] constructed using affine transformations [64], non-compact projections [73], neural splines [72], or gauge-equivariant transformations [27, 28, 34]);

-

structure of neural networks parametrizing the flow transformation (e.g. fully connected vs. convolutional networks, choice of activation functions);

-

and various hyperparameters (e.g. number of coupling layers, neural network depths and widths).

While flow-based sampling approaches in principle guarantee unbiased results by construction even for models with q arbitrarily different from p, performant sampling requires training the models. Just as for model architectures, many choices define a particular training scheme, including:

-

scheme for initializing model parameters (e.g. random distribution, retraining);

-

approach to training data (e.g. self-training, training on existing configurations);

-

choice of loss function to minimize (e.g. KL divergence [81], Stein discrepancies [82, 83], or gradient-based divergences like score matching or Fisher divergence [84, 85]);

-

optimization algorithm (e.g. SGD, Adam [86], second-order optimizers);

-

all hyperparameters of training (e.g. optimizer parameters, batch size);

-

and the schedule for training, which may vary any or all of these choices over time.

Besides direct sampling, other approaches to sampling lattice field distributions have been explored which use normalizing flows for different statistical modeling tasks. For example, Ref. [33] explored the use of flows which model a localized patch of a lattice field, conditioned on its environment. References [29, 31] employed flows to generalize the proposal distribution in HMC. Reference [36] used flows to model conditional distributions for Gibbs sampling. Stochastic normalizing flows [87, 88] and related approaches [44, 89, 90] use flows to relate a sequence of distributions interpolating between the base and target distributions. Reparametrization-based methods apply flows to the target distribution to obtain a “latent-space” distribution which may be more efficiently sampled with HMC [29, 30, 40, 91,92,93,94,95,96,97]. Flows play a different role in each case, requiring different architectures and training schemes.

2.3 Cost decomposition

For a generic sampling algorithm, the cost to generate a dataset equivalent to \(N_\text {indep}\) independent samples from a target distribution can be decomposed as

where \(C_\text {setup}\) is any up-front cost that must be paid before beginning data generation (e.g. the cost of training for flows, or of equilibration or decorrelating a forked stream for HMC), and \(C_\text {samp}\) is the cost of sampling thereafter. Generically, sampling costs may be further decomposed as

where \(\Delta C_\text {samp}\) is the cost to generate a single configuration (e.g. the cost of a single HMC trajectory, or of drawing a sample from a flow model) and \(N_\text {raw}\) is the total number of configurations output by the procedure. The effective sample size per configuration \({\text {ESS} \equiv N_\text {indep} / N_\text {raw} \in [0,1]}\) quantifies the loss of statistical power due to e.g. MCMC autocorrelations or the increase in variance due to reweighting. For HMC, \(\text {ESS} = 1 / 2 \tau _\text {int}\), where \(\tau _\text {int}\) is the integrated autocorrelation time. For flow-based direct sampling, the reweighting-inspired metric \(\text {ESS} = 1/\langle {w^2}\rangle _q\) [98, 99] is commonly employed (see Appendix A for further discussion). Note however that no single scalar metric may fully quantify the performance of any sampling algorithm, as the true ESS is always observable-dependent: for HMC, observables are sensitive to different autocorrelation times, whereas for flows, each observable has different correlations with the reweighting factors. Both \(C_\text {setup}\) and \(\Delta C_\text {samp}\) carry units of compute time (e.g. GPU hours), and depend strongly on the precise details of the algorithm implementation and hardware.

3 Costs and cost scaling: HMC versus flow-based approaches

In this section, we first analyze the role and limitations of cost scaling relations in the familiar context of HMC for QCD. Subsequently, we discuss different general aspects of flow-based approaches and their cost scaling properties, emphasizing differences with HMC. Features better demonstrated with numerical examples are deferred to Sect. 4.

3.1 Expectations for scaling laws from HMC

The cost to sample QCD field configurations using HMC is often parametrized as a function of the physical parameters of the target theory as [69, 100, 101]

where a is the lattice spacing, L/a is the extent of the lattice in units of sites, and \(M_\pi \) is the pion mass. The exponents \(z_L\), \(z_M\), and \(z_a\) define the cost scaling relation. A key aspect of the difficulty in assessing scalability is that their values depend on many factors, in particular both algorithmic choices and the targeted regime of physical parameters, i.e.,

-

1.

Scaling laws depend on how algorithm parameters are varied, and

-

2.

Scaling laws have limited regimes of applicability.

Naturally, not only the parameters of an algorithm are relevant, but also the structure of the algorithm itself, with the important consequence that

-

3.

Algorithmic developments can improve scaling properties.

Each of these key aspects are elaborated on below.

1. Scaling laws depend on how algorithm parameters are varied. Defining and quantifying a scaling relation requires choosing some scheme to vary algorithm parameters as the parameters of the target theory are varied. Different choices will yield different scaling relations. For example, discussions of HMC scaling typically quote a value of \(z_L = 5\) for the exponent of L/a in Eq. (6). Part of this, \((L/a)^4\), is due to simple operation counting on a four-dimensional lattice. However, the remaining factor of L/a follows from choosing a particular scheme to vary the algorithm parameters with the volume, specifically increasing the number of integrator steps per trajectory to keep the acceptance rate fixed [102]. A different choice would yield a different scaling relation: for example, keeping the step size fixed will result in a rapid decline of the acceptance rate, inducing long autocorrelation times and poorer scaling.

2. Scaling laws have limited regimes of applicability. Although universal behavior may be expected in some regimes of target physical parameters, in practice a wide range of values of the exponent of the lattice spacing \(z_a\) have been quoted in the literature [69, 103]. For example, many early studies [100, 104,105,106] of HMC cost scaling found \(z_a\) near its conjectured lower bound of 2 [107]. However, approaching the continuum limit, increasingly large potential barriers prevent HMC from tunneling between disjoint topological sectors. This effect, known as “topological freezing”, induces very long autocorrelation times and thus large \(z_a\). In fact, the a-dependence may instead be consistent with exponential [19, 108]. This large variation demonstrates the importance of assessing the limitations of any scaling law: extrapolating using an incorrect value of \(z_a\) for the parameter regime under consideration will not be predictive.

3. Algorithmic developments can improve scaling properties. Historically, new algorithmic developments have led to qualitative improvements in the capabilities and reach of HMC. For example, the scaling relation Eq. (6) was infamously used to diagnose the “Berlin wall”: at the time (ca. 2001), large measured values of \(z_M\) implied that calculations at physical pion masses would be practically impossible with near-future hardware [100, 101]. However, Hasenbusch preconditioning [109] was developed soon afterwards, reducing \(z_M\) and opening access to the physical pion mass regime. The development of multigrid preconditioners has provided additional improvements [110, 111].

3.2 Costs and cost scaling for flow-based approaches

History has demonstrated that algorithmic advances can redefine the limits of LQFT methods and enable new physics results. To that end, flow-based approaches provide an unprecedented new space of algorithms to explore. However, their costs and cost scaling properties can be counterintuitive from the HMC perspective. To understand these methods and their scaling properties, several key features of flow models must be appreciated, in particular that:

-

1.

Model weights are algorithm parameters;

-

2.

Flow evaluation costs do not typically vary with physical parameters, but model quality does.

In addition, the practical requirement for model training introduces conceptual complications. Specifically:

-

3.

Training and sampling are different dynamical processes, but cannot be considered independently;

-

4.

Training costs may be significant.

Finally, certain low-level computational concerns must be considered:

-

5.

Operation counting can predict raw computational costs; and

-

6.

Flow-based approaches may parallelize more effectively than serial samplers.

Each of these points is discussed below, emphasizing the conceptual differences between flow-based sampling approaches and the HMC paradigm.

1. Model weights are algorithm parameters. A fixed flow model architecture represents a parametric family of probability distributions. Within this family, a set of values for the neural network parameters \(\theta \) (“model weights”) specifies a particular model distribution, \(q_\theta \). The ESS to sample some target theory p will vary as a function of every model weight; their number thus defines the dimension of the space of algorithms to be explored. Relaxing the restriction to fixed architectures presents even further possibilities.

Conceptually, this is a drastically different situation from HMC, where the space of algorithm parameters is tractable to explore; flow models may have potentially billions of parameters. Of course, in practice, hand-tuning is impossible and model weights must instead be set implicitly by training e.g. with stochastic gradient descent methods. The practical analog to the set of HMC’s algorithm parameters are thus the set of all of the choices involved in defining a training scheme and architecture described in Sect. 2.2. Different scaling behavior results from how these choices are varied with target parameters. Note that although training schemes are often described in terms of relatively few hyperparameters, this apparent reduction in complexity is artificial. As discussed further below, the need for training only adds complexity, rather than providing any simplification.

2. Flow evaluation costs do not typically vary with physical parameters, but model quality does. For HMC, the cost to generate each sample, \(\Delta C_\text {samp}\), varies strongly with the parameters of the target theory due to the changing problem difficulty for the linear solvers. In contrast, applying a flow transformation typically involves only explicit algebraic operations. In this case, \(\Delta C_\text {samp}\) is independent of the precise values of the weights of the model, and thus the target parameters they implicitly encode. It follows that, for a fixed architecture, the dominant cost scaling with target parameters is due to the variation of the ESS (note that this may not hold if \(\Delta C_\text {samp}\) is dominated by linear solves to evaluate p, or e.g. for hybrid algorithms incorporating HMC updates). This severely limits the applicability of empirically measured cost scaling relations: as explored below, model quality is an unpredictable consequence of the complicated interplay between architecture, training scheme, and target theory.

3. Training and sampling are different dynamical processes, but cannot be considered independently. For HMC, the same process – evolution by Hamiltonian dynamics – is used for both setup and sampling. However, the same is not true for flows. Training is a search process in the high-dimensional space of model parameters, typically involving stochastic gradient descent, with dynamics arising from all the choices described in Sect. 2.2. Sampling dynamics depend on the interaction of fine-grained details of model quality with the role played by the model in the sampling algorithm (giving rise to e.g. the distribution of rejection run lengths in direct sampling with Metropolis). Unlike for HMC sampling, there is no reason to expect any common parametrization to apply to both cost components.

However, this does not mean that scaling behaviors of training and sampling costs can be studied in isolation. For flows, sampling efficiency is a function of expenditure on training, i.e., \(\text {ESS} = \text {ESS}(C_\text {train})\). Among other consequences, this implies that the scaling behavior of sampling and training costs are not defined without precisely specifying the stopping condition for training. Different choices of stopping condition result in qualitatively different scaling relations. For example, if training always uses a fixed amount of computation, then \(C_\text {train}\) scales trivially with the target parameters by construction, and all cost scaling is pushed on to \(C_\text {samp}\), as explored in Sect. 4.2. Instead, one may choose to train until some target ESS is achieved. In this case, the ESS scales trivially while \(C_\text {train}\) alone varies, as explored in Sect. 4.1. As demonstrated in Sects. 4.1 and 4.2, even the precise choice of fixed \(C_\text {train}\) or target ESS can strongly affect scaling properties. Other choices of stopping condition between these extremes are possible, each inducing different scaling properties. This sensitivity implies that scaling behaviors for flows are highly non-generic, thus the generalizability of empirically assessed scaling relations is severely limited.

4. Training costs may be significant. Most existing applications of flows to sampling in LQFT have employed training methods which apply the flow (or its inverse) to batches of field samples. For such a training approach, training costs can be decomposed similarly to the decomposition of sampling costs in Eq. (5):

where \(N_\text {train}\) is the total number of samples flowed during training and \(\Delta C_\text {train}\) is the combined cost of flowing a single sample and then backpropagating gradients. Typically, \(\Delta C_\text {train}\) is a few times larger than \(\Delta C_\text {samp}\). Training from a random initialization often involves \(\sim \) 1000s of optimizer steps using batches of \(\sim \) 100 s to 1000s of configurations, meaning \(N_\text {train}\) can be much larger than typical QCD ensemble sizes. This potentially large scale for training costs is an important factor in determining what will be computationally viable for QCD. However, efficient parallelization (as discussed below) may help mitigate this cost in practice. Moreover, importantly, as explored in Sect. 4, these costs are highly non-generic and can be optimized significantly.

5. Operation counting can predict raw computational costs. In some cases, simple operation counting can yield relations describing the dependence of \(\Delta C_\text {samp}\) and \(\Delta C_\text {train}\) (\(\Delta C\), collectively) on model hyperparameter choices and certain target theory parameters, such as the number of lattice sites \(\Omega \). For example, for flow models built from composed sub-transformations (e.g., coupling layers), the cost of applying a flow is linear in the number of sub-transformations. Similarly, for flow transformations parametrized by neural networks which interconnect all lattice sites, operation counting predicts that \(\Delta C \propto \Omega ^2\). More favorably, transformations parametrized by convolutions over the lattice geometry may have \({\Delta C \propto \Omega }\). (Note that these relations are often broken by e.g. cache effects or adaptive algorithm swapping, and may not apply for implementations on hardware such as GPUs and TPUs if their parallelism is not fully utilized.) Operation counting thus provides a useful but limited tool for assessing scaling properties and scalability: if the architecture itself is varied with the target, operation counting can predict scaling of \(\Delta C\), but cannot account for the (equally important) effect of varying model quality. Real-world resource constraints may induce further complications, e.g. if limited memory necessitates a trade-off between model size and the batch size for training.

6. Flow-based approaches may parallelize more effectively than serial samplers. Sampling from a flow model is embarrassingly parallelizable, and thus flow-based sampling admits new strategies for parallelism unavailable in the context of serial MCMC algorithms like HMC. For example, multiple compute nodes might independently and locally generate and store configurations \(\phi _i\), and pass only \(q(\phi _i)\) and \(p(\phi _i)\) to a central coordinator which updates the state of a global, distributed Markov chain with Independence Metropolis. Potential new strategies include not only the running of multiple independent samplers (without incurring additional setup costs), but also new schemes for problem division such as pipeline parallelism. Separately, the high arithmetic density of neural network operations suggests that flow-based sampling may not be communications-bound. Taken together, these properties suggest potential for flow-based methods to make more efficient use of computational resources than serial sampling algorithms, given real-world walltime and hardware constraints.

4 Limitations of scaling relations for flow-based approaches

The previous section discussed properties of flow-based sampling approaches for LQFT that are apparent from the definition of the approach. However, research and experimentation in this area has identified other properties of flows relevant to assessing scalability. This section presents simple numerical demonstrations of these features and discusses their implications for the utility of scaling relations. Note that these examples are applications to two-dimensional scalar field theory and the two-flavor Schwinger model, which are physically very different from QCD. However, the conclusions drawn from these experiments are not specific to the target theory, as made clear in the discussion. Further details of all numerical experiments are provided in Appendix A.

4.1 Training costs

As discussed in Sect. 3.2, the scale of training costs of flow models can be significant, requiring the flow to be applied to many more configurations than typical ensemble sizes. However, as demonstrated here, these costs are highly non-generic, and may be optimized significantly. Furthermore, their scaling behavior depends on the precise details of the training protocol. Specifically, we demonstrate that:

-

1.

Training costs may be optimized by orders of magnitude,

-

2.

Transfer learning can mitigate training costs, and

-

3.

Training cost scaling depends on training protocol.

The necessary conclusion is that the scaling of training costs is entirely specific to the approach employed.

Model quality as a function of training cost for two approaches to training, learning rate (LR) scheduling and batch size (BS) scheduling, for a flow model targeting real scalar field theory for \(m^2=-1\) and \(\lambda =1\) on a \(10 \times 10\) lattice. Each training scheme is applied to the same architecture. Cost is measured in units of the number of samples generated for training, \(N_\text {train}\). Dashed black lines indicate potential target ESSes of 0.2 and 0.6, as referenced in the main text. The batch size for “Schedule LR” is 16,384 throughout, while for “Schedule BS” it begins at 128 and doubles every 5000 steps until it reaches 16384. For “Schedule LR”, the learning rate is halved every 5000 steps. The ESS is measured using the training batch size every step, and smoothed over a rolling window of width 250 steps. Results vary by \(\sim 5\%\) under repeated experiments with different pseudorandom seeds. Further details are provided in Appendix A

1. Training costs may be optimized by orders of magnitude. Periodically reducing the optimization step-size (i.e., the learning rate) – a standard ML technique – can produce models of better quality at lower computational cost than training with a fixed learning rate. An alternative is to periodically increase the batch size during training [112]. As demonstrated in Fig. 1, in some cases this simple change can produce models of equivalent quality for drastically reduced cost as compared with those produced using learning rate scheduling. The precise improvement factor depends on the criterion used to stop training. For example, in this demonstration, batch size scheduling is \(\sim 110\) times less expensive than learning rate scheduling for training to a target ESS of 0.2, while for a target ESS of 0.6 the improvement is only a factor of \(\sim 12\). Note that the intent of this demonstration is not to emphasize the utility of this batch size reduction method, but rather to show the degree to which training costs may vary with approach. The practical consequence for assessing scalability is that training costs, and their scaling, are extremely sensitive to even minor perturbations in training protocol.

2. Transfer learning can mitigate training costs. Flow models can be retrained between theories with the same lattice geometry but different action parameters. Figure 2 illustrates the utility of this technique – “parameter transfer” – in training models for three different sets of Schwinger model target parameters. The large cost of training a model from a random initialization must always be paid once. However, as demonstrated, models targeting further parameters may be retrained from this initial model at significantly reduced cost compared with from-scratch training. Sequentially retraining along a trajectory through parameter space provides further improvement [25], at the cost of serializing training for different parameters.

Certain flow architectures, such as those parametrized by convolutional neural networks, additionally permit “volume transfer”: a model trained to generate configurations with one geometry may be used to generate configurations with another [28]. This makes it possible to perform the bulk of training for smaller volumes than the final target, and enables methods that are intractable for larger volumes, e.g., training with on-the-fly HMC generation [25] or using exact fermion determinants [32, 36]. In practice, we often find that retraining on the larger volume is unnecessary. For models trained on smaller volumes and applied to larger ones with no retraining, there is a generic cost scaling relationship with changing volume, detailed in Sect. 4.2.

The consequence of these observations is that, for the purposes of assessing scalability, the cost scaling properties of training from a random initialization are irrelevant if transfer learning is employed. Instead, the relevant scaling properties are those of the cost of retraining each new model, which will depend intimately on the set of physical parameters that are of interest in a particular study, and the order in which models are retrained between them.

3. Training cost scaling depends on training protocol. Training costs and their scaling properties are determined by various choices made in training. As discussed in Sect. 3.2, this includes the stopping condition for training, without which cost scaling behavior is not well-defined. For example, training for a fixed number of iterations implies a fixed training cost but varying model quality. Alternatively, training to a target ESS results in fixed sampling costs while the training costs vary with the target theory. The scaling of those training costs with the parameters of the target theory depends sensitively on the precise choice of target ESS, as illustrated in Fig. 3. As already discussed in Sect. 3.2, this demonstration that scaling relations are highly specific to the training approach implies that, even if scaling behaviour can be determined empirically for some particular approach, it is unlikely to be generic across different training approaches, let alone across different theories or flow model

Illustration of the reduction of training costs that can be obtained using transfer learning to train models for the Schwinger model with \(\beta =2.0\) and lattice geometry \(L\times T=8\times 8\) for three different \(\kappa \) targets. The ESS is measured on 6144 samples each epoch. Training curves are sequentialized to illustrate the total cost of training models for \(\kappa =0, 0.25, 0.263\) in order. The vertical lines indicate the overall cost in each case: blue for training each model from a random initialization, orange for retraining from an initial \(\kappa =0\) model, and green for sequentially retraining from \(\kappa =0 \rightarrow 0.25 \rightarrow 0.263\). Training is stopped when the target ESS is achieved in average over 100 epochs. Horizontal dotted lines mark the target \(\text {ESS} = 0.9, 0.7, 0.45\) for \(\kappa =0, 0.25, 0.263\), respectively. Further details are provided in Appendix A

4.2 Sampling costs

In this section, we demonstrate various limitations of scaling laws in describing sampling costs in flow-based approaches. In particular, as model quality determines sampling efficiency, the scaling of model quality with the parameters of the target theory is considered as a proxy for the scaling of sampling costs. We provide arguments for and numerical illustrations of several key properties. First, defining the maximum achievable model quality is a subtle question, since

-

1.

Achievable model quality is not a function of architecture alone.

Moreover, the scaling of model quality with parameters of the theory is complex, since

-

2.

Model quality scaling depends on training protocol, and

-

3.

Different architectures scale differently.

One scaling, however, can be derived generically:

-

4.

For fixed models, quality scales exponentially in volume.

Nevertheless, assessing scalability – i.e., utility at scale – from scaling laws is difficult, since

-

5.

Best scaling does not imply best performance, and

-

6.

Architecture dependence implies theory dependence.

Finally, we discuss the most relevant concern for scalability at present:

-

7.

Improving performance requires physics-informed algorithm design.

While the provided numerical demonstrations exclusively use the direct sampling approach, the conclusions apply more generally to sampling algorithms including flow models or other machine-learned components.

1. Achievable model quality is not a function of architecture alone. It is typical in gradient-descent-based optimization that after a period of rapid initial learning, optimization will enter a regime where model quality plateaus or improves only very slowly with further training. It is tempting to interpret the resulting model as fully saturated in quality, i.e. independent of training scheme and a function of architecture and target theory alone. However, this is not the case. As shown in Fig. 4, changing only the precise choice of optimization algorithm or initial distribution of model weights can result in significantly different final ESS. Further, training dynamics may be sensitive even to the pseudorandom seed used to generate the initial model parameters and training data. The appearance of saturation may also be misleading, as demonstrated by the “second-wind” training dynamics in one of the examples in Fig. 4, where an apparent plateau is broken by a second period of rapid learning with no associated change in training protocol. Although a finite flow has finite expressivity, the results of any particular training procedure can only bound the capabilities of an architecture. Practically, this implies that there is no notion of a “fully trained” model, and furthermore that model quality scaling relations cannot be quantified for an architecture class independent of training effects.

2. Model quality scaling depends on training protocol. The scaling behavior of model quality is necessarily determined by the choice of training scheme, which governs how model weights vary with the parameters of the target theory. As for training costs, this includes the stopping condition for training. Figure 5 demonstrates how different stopping criteria – specifically, different choices of fixed expenditure on training – induce different functional forms in model quality as a function of target theory parameters when retraining between target parameters. In this figure, models for all parameters are directly retrained from a single model; sequentially retraining instead would result in different functional forms again. The practical consequences are that cost scaling laws are not unique even for a particular architecture and training method.

3. Different architectures scale differently. Figure 6 provides a simple demonstration of how the model quality of even very similar architectures may exhibit different scaling behaviors with the parameters of the target theory. In this example, larger models (with more free parameters) exhibit better overall ESS and better scaling properties towards criticality – although the changing trade-off between ESS and \(\Delta C_\text {samp}\) results in crossovers of the sampling performance, as discussed further below. The smooth dependence of ESS (and consequently performance) on target parameters implies useful extrapolation can be possible over a small range of target parameters for a fixed architecture, although we emphasize that these functional forms are necessarily specific to how the models are trained.

4. For fixed models, quality scales exponentially in volume. As demonstrated throughout this work, generic cost scaling laws for flow models are difficult to obtain due to the complexity of the method. However, a particular restriction allows one to be derived analytically. Specifically, a straightforward argument implies that the quality of a fixed model constructed from a localFootnote 1 flow transformation degrades exponentially under volume transfer: if both p and q are defined for arbitrary volumes V and may be characterized by correlation lengths \(\xi _p\) and \(\xi _q\), then when \(L \gg \xi _p, \xi _q\) the integral defining the ESS factorizes over decorrelated subvolumes and scales as

Figure 7 demonstrates the onset of this effect in the large-volume regime for the Schwinger model, but we observe it to hold for other target theories as well. This effect was first described in Ref. [33].

It is important to emphasize the limited applicability of this scaling relation. Specifically, it applies only when the physical extent \(L/\xi _p\) is large, compared with the extent in lattice sites L/a. Thus, while this relation may present an obstacle to flow-based sampling in the thermodynamic limit \(L/\xi _p \rightarrow \infty \), it does not obstruct sampling in the continuum limit \(\xi _p/a \rightarrow \infty \) with \(L/\xi _p\) fixed. Away from the thermodynamic limit, the breakdown of this relation can have counterintuitive effects: as shown in Fig. 7, we have observed better-than-exponential scaling in the Schwinger model when \(L/\xi _p\) is small. The exponential scaling relation furthermore does not apply between theories in different dimensions or with different numbers of internal degrees of freedom. Most importantly, however, it does not relate different approaches or otherwise constrain what overall model quality (and thus quality of volume scaling properties) is achievable. Finally, the argument for this scaling relation holds only for fixed models transferred without retraining.

This effect does not present any principled obstacle to flow-based sampling at state-of-the-art QCD parameters where typically \(L/\xi _p = M_\pi L \sim 4\)–10. In practice, we are not interested in the thermodynamic limit, only control over finite-volume effects. However, it does emphasize the importance of developing high-quality models to reach volumes of interest. Sampling approaches which require modeling only subvolumes [33] may control the effect directly.

5. Best scaling does not imply best performance. Of course, scaling of model quality is not the sole factor determining sampling efficiency. In fact, in the demonstration of Fig. 6, an architecture with one of the least favorable scaling behaviors provides the best performance in our implementation. For this small model, the lower ESS is more than compensated for by its low cost of evaluation, \(\Delta C_\text {samp}\). This emphasizes the important point that, ultimately, only sampling performance at parameters of interest matters; scaling laws are only important insofar as they can be used to predict the performance of an algorithm at one target set of parameters from its performance elsewhere.

6. Architecture dependence implies theory dependence. As demonstrated in Fig. 6, even different choices of hyperparameters within an architecture class can lead to significantly different scaling of model quality with the parameters of the target theory. As discussed in Sect. 2.2, much more significant differences in architecture are possible, from which we should expect an even greater variety in scaling behaviors. Importantly, treating different physical theories requires structurally different architectures. For example, \(\textrm{SU}(N)\) variables cannot be flowed using the same transformations applicable to real scalar fields. Further theory-specific engineering is necessary to encode symmetries and other physical features. Given the dissimilarity between architectures required for different theories, it should not be assumed that features of model quality obtained for one theory apply for any other theory of interest. This is further complicated by the interaction of model quality with different varieties of critical behavior. We conclude that studying the scaling of flow model sampling for toy models such as \(\phi ^4\)-theory or even the Schwinger model provides little information about scaling properties for any flow-based approach to sampling gauge field configurations for QCD.

7. Improving performance requires physics-informed algorithm design. As seen in Figs. 1, 2, and 4, training with a fixed protocol eventually enters a slowly improving regime, after which point the expense of additional training will not reliably provide a practical increase in sampling efficiency. Similarly, as illustrated in Fig. 8, increasing the model size within fixed architecture classes often provides diminishing improvements in the model quality achieved by a fixed training protocol. Although further training or increase in model size may provide sudden improvements (e.g. second-wind training dynamics as in Fig. 4), these saturation-like behaviors present a practical obstacle to increasing sampling efficiency using “brute force” application of additional computational power.

Demonstration in real scalar field theory, with \(m^2 = -2\) on a \(10 \times 10\) lattice, of the differences in training cost scaling behavior in \(1/\lambda \) due to different choices of the stopping condition for training. Each model is retrained from \(\lambda = 4\) to the target \(\lambda \), halting training on the first step where the target ESS is achieved. The lack of smoothness is an inherent complication of quantifying scaling laws when using stopping conditions based on model quality, here due to the stochastic evaluation of the ESS well as the inherent noise of training. Further details are provided in Appendix A

Example training curves demonstrating sensitivity of training dynamics and final model quality to the choice of optimization algorithm (top panel) and the distribution used to randomly initialize the model weights (bottom panel), for real scalar field theory on a \(10 \times 10\) lattice with \(m^2=-2\) and \(\lambda =1\) (top), and \(m^2=-4\) and \(\lambda =1.5\) (bottom). Other than the optimizer or weights initialization, architectures and training protocols are the same for each curve. The legend for the top panel refers to the Adam optimizer of Ref. [86], the Adadelta optimizer of Ref. [113], and stochastic gradient descent (SGD). Except for the learning rate, optimizer hyperparameters are the Pytorch 1.10 defaults. In the legend for the bottom panel, Kaiming refers to the initialization procedure of Ref. [114], the Pytorch 1.10 default, with “width” an overall rescaling of the distribution. Xavier refers to the procedure of Ref. [115], with “gain” a parameter of the method. The two red lines indicate examples for two different pseudorandom seeds; all other curves vary only at the \(\sim \) 5–10% level for different seeds. The learning rate is decayed by a factor of 2 every 20,000 steps. The ESS is smoothed using a rolling window of width 250 steps. Further details are provided in Appendix A

Demonstration in the context of real scalar field theory of how model quality as a function of \(\lambda \) depends on the stopping condition used for training, for \(m^2 = -2\) on a \(10 \times 10\) lattice. Models are retrained directly from \(\lambda = 1\) to the target \(\lambda \), halting training after a fixed number of steps. Further details are provided in Appendix A

Scaling behavior of model quality with respect to theory parameters, for different model architectures applied to real scalar field theory with \(m^2=-4\) on a \(16 \times 16\) lattice. Each line denotes the ESS (top panel) and sampling performance (bottom panel) of a different affine coupling architecture, all trained using the same protocol. Performance is measured in effective samples per second, quantified for each model as its ESS divided by the computational cost of generating a configuration in units of RTX2080 Ti GPU-seconds. Note that these timings are specific to our implementation. Models are trained as described in Appendix A. In the legend, K denotes the convolutional kernel size and \(n_L\) denotes the number of coupling layers. Further details are provided in Appendix A

Volume scaling of the ESS for models trained to sample the Schwinger model at \(\beta =2\) and three different values of the fermion mass, including the pure-gauge limit, \(\kappa =0\). The top and bottom panels illustrate the volume dependence of the ESS and pseudoscalar mass \(a M_\pi \), respectively. For each target \(\kappa \), models are trained from scratch at \(L=8\) and volume transferred without retraining. In the top panel, the dotted lines are extrapolations backwards from the largest volume \(L=24\), where the ESS takes values \(\approx \) 0.58, 0.44, and 0.24, using Eq. (8). Deviations of the markers below these lines correspond to better-than-exponential scaling at smaller volumes, where the lower panel shows significant finite-size effects in \(a M_\pi \). The ESS is evaluated on \(10^4\) samples at each volume. Error bars are smaller than the markers. Further details are provided in Appendix A

Dependence of ESS on number of coupling layers \(n_L\) for two different architectures, with all other architecture and training hyperparameters fixed, for real scalar field theory on a \(16 \times 16\) lattice with \(m^2=-4\) and \(\lambda =1.25\). The architectures differ by only the base distribution: independent normal on each site (“Normal”), and the free-field distribution with a learned pole mass (“Free field”; as described in Appendix A). Each model is trained from a random initialization for 150 k steps, which is sufficient to reach a slowly improving regime in all cases. Training uses batch size 1024, and the learning rate is decayed by a factor 2 every 10,000 steps. Uncertainties for each point include the spread between two repeated experiments with different pseudorandom seeds. Further details are provided in Appendix A

Instead, improving sampler efficiency requires more qualitative algorithmic improvements. Among the infinite possible variations of model architecture, it is natural to expect that choices which incorporate a priori physics understanding will lead to better model quality. This is illustrated in Fig. 8, which compares two closely related architectures for scalar field theory. Here it is clearly visible that the physics-informed modification is far more successful at improving model quality than simply increasing the model size. Along the same lines, developing efficient flow-based sampling algorithms for QCD will require developing and testing new architectures, training schemes, and sampling approaches which incorporate a priori physics knowledge.

5 Outlook

For flow-based approaches to the sampling of lattice field configurations, even small differences in the ML approach – architecture, training scheme, and how they are varied with the parameters of the target theory – result in not only different overall costs, but also different cost scalings with the parameters of the target theory. In effect, each ML approach defines a different sampling algorithm with different cost scaling properties. Further, because theory-specific modeling is required, scaling properties assessed in one theory should not be expected to generalize to others. Taken together, this implies a very different paradigm for assessing algorithm scalability than has applied for QCD algorithms thus far, where scaling properties assessed in toy theories often translate directly to QCD. For flow-based methods, assessing scalability will require direct, experimental investigation of applications to QCD itself, which has only just begun [35].

It is not yet clear what at-scale applications of flow-based sampling methods to QCD will look like, but we can speculate, and some key aspects are already clear. For example, transfer learning and retraining will play a central role in mitigating training costs. Thus, architectures well-suited for transfer learning across wide ranges of parameters and volumes will be important in order to exploit this technique. Exponential volume scaling suggests that high-quality models will be necessary to achieve efficient sampling at scale. Coupled with the large overall scale of training costs, this suggests a paradigm of use very different from HMC. As seen for large ML models in industry applications, such as GPT [116] and MT-NLG [117], the typical paradigm is that significant computational resources and human time are invested over years in exploration and training. The resulting models can then be shared as a community resource, amortizing the bulk of training and development costs across the community as a whole.

Direct sampling with flow models has significant natural advantages over serial algorithms such as HMC, but requires high-quality models for efficient sampling. However, there are many other sampling approaches where flows may be employed. “Hybrid” approaches involving both flow components and more traditional sampling algorithms can exploit lower-quality models while leveraging the decades of engineering invested in algorithms like HMC. It is likely that the first at-scale application will involve such a hybrid approach. Over the longer term, improving flow model technology will enable increasingly efficient sampling approaches.

While the growing body of work on flow-based sampling methods continues to provide promising early results, we have only just begun to explore the space of what is possible. The broader field of machine learning is advancing rapidly, and experience dictates that we cannot anticipate what capabilities will be enabled by new developments. Creativity guided by physical intuition remains our most effective means of making progress.

Data availability

This manuscript has no associated data or the data will not be deposited. [Authors’ comment: No associated data.]

Notes

I.e., for which variables are transformed conditioned only on information in a local neighborhood, such as for flows parametrized by convolutional neural networks.

References

C. Morningstar, The Monte Carlo method in quantum field theory (2007). arXiv:hep-lat/0702020

C. Lehner et al., Opportunities for lattice QCD in quark and lepton flavor physics. Eur. Phys. J. A 55(11), 195 (2019). https://doi.org/10.1140/epja/i2019-12891-2. arXiv:1904.09479 [hep-lat]

A.S. Kronfeld, D.G. Richards, W. Detmold, R. Gupta, H.W. Lin, K.F. Liu, A.S. Meyer, R. Sufian, S. Syritsyn, Lattice QCD and neutrino-nucleus scattering. Eur. Phys. J. A 55(11), 196 (2019). https://doi.org/10.1140/epja/i2019-12916-x. arXiv:1904.09931 [hep-lat]

V. Cirigliano, Z. Davoudi, T. Bhattacharya, T. Izubuchi, P.E. Shanahan, S. Syritsyn, M.L. Wagman, The role of lattice QCD in searches for violations of fundamental symmetries and signals for new physics. Eur. Phys. J. A 55(11), 197 (2019). https://doi.org/10.1140/epja/i2019-12889-8. arXiv:1904.09704 [hep-lat]

W. Detmold, R.G. Edwards, J.J. Dudek, M. Engelhardt, H.W. Lin, S. Meinel, K. Orginos, P. Shanahan, Hadrons and nuclei. Eur. Phys. J. A 55(11), 193 (2019). https://doi.org/10.1140/epja/i2019-12902-4. arXiv:1904.09512 [hep-lat]

A. Bazavov, F. Karsch, S. Mukherjee, P. Petreczky, Hot-dense lattice QCD: USQCD whitepaper 2018. Eur. Phys. J. A 55(11), 194 (2019). https://doi.org/10.1140/epja/i2019-12922-0. arXiv:1904.09951 [hep-lat]

B. Joó, C. Jung, N.H. Christ, W. Detmold, R. Edwards, M. Savage, P. Shanahan, Status and future perspectives for lattice gauge theory calculations to the exascale and beyond. Eur. Phys. J. A 55(11), 199 (2019). https://doi.org/10.1140/epja/i2019-12919-7. arXiv:1904.09725 [hep-lat]

R.C. Brower, A. Hasenfratz, E.T. Neil, S. Catterall, G. Fleming, J. Giedt, E. Rinaldi, D. Schaich, E. Weinberg, O. Witzel, Lattice gauge theory for physics beyond the standard model. Eur. Phys. J. A 55(11), 198 (2019). https://doi.org/10.1140/epja/i2019-12901-5. arXiv:1904.09964 [hep-lat]

T. DeGrand, Lattice tests of beyond Standard Model dynamics. Rev. Mod. Phys. 88, 015,001 (2016). https://doi.org/10.1103/RevModPhys.88.015001. arXiv:1510.05018 [hep-ph]

B. Svetitsky, Looking behind the Standard Model with lattice gauge theory. EPJ Web Conf. 175, 01,017 (2018). https://doi.org/10.1051/epjconf/201817501017. arXiv:1708.04840 [hep-lat]

G.D. Kribs, E.T. Neil, Review of strongly-coupled composite dark matter models and lattice simulations. Int. J. Mod. Phys. A 31(22), 1643,004 (2016). https://doi.org/10.1142/S0217751X16430041. arXiv:1604.04627 [hep-ph]

I. Ichinose, T. Matsui, Lattice gauge theory for condensed matter physics: ferromagnetic superconductivity as its example. Mod. Phys. Lett. B 28, 1430,012 (2014). https://doi.org/10.1142/S0217984914300129. arXiv:1408.0089 [cond-mat.str-el]

M. Mathur, T.P. Sreeraj, Lattice gauge theories and spin models. Phys. Rev. D 94, 085,029 (2016). https://doi.org/10.1103/PhysRevD.94.085029. arXiv:1604.00315 [hep-lat]

S. Duane, A.D. Kennedy, B.J. Pendleton, D. Roweth, Hybrid Monte Carlo. Phys. Lett. B 195, 216–222 (1987). https://doi.org/10.1016/0370-2693(87)91197-X

R.M. Neal, Probabilistic inference using Markov chain Monte Carlo methods (Department of Computer Science, University of Toronto Toronto, ON, Canada, 1993), chap. 5

R.M. Neal, Bayesian Learning for Neural Networks. Lecture Notes in Statistics, vol. 118 (1996)

U. Wolff, Critical slowing down. Nucl. Phys. Proc. Suppl. 17, 93–102 (1990). https://doi.org/10.1016/0920-5632(90)90224-I

S. Schaefer, R. Sommer, F. Virotta, Investigating the critical slowing down of QCD simulations. PoS LAT2009, 032 (2009). https://doi.org/10.22323/1.091.0032. arXiv:0910.1465 [hep-lat]

S. Schaefer, R. Sommer, F. Virotta, Critical slowing down and error analysis in lattice QCD simulations. Nucl. Phys. B 845, 93–119 (2011). https://doi.org/10.1016/j.nuclphysb.2010.11.020. arXiv:1009.5228 [hep-lat]

S.H. Li, L. Wang, Neural network renormalization group. Phys. Rev. Lett. 121, 260–601 (2018). https://doi.org/10.1103/PhysRevLett.121.260601

M.S. Albergo, G. Kanwar, P.E. Shanahan, Flow-based generative models for Markov chain Monte Carlo in lattice field theory. Phys. Rev. D 100(3), 034–515 (2019). https://doi.org/10.1103/PhysRevD.100.034515. arXiv:1904.12072 [hep-lat]

M.S. Albergo, D. Boyda, D.C. Hackett, G. Kanwar, K. Cranmer, S. Racanière, D.J. Rezende, P.E. Shanahan, Introduction to normalizing flows for lattice field theory (2021). arXiv:2101.08176 [hep-lat]

K.A. Nicoli, S. Nakajima, N. Strodthoff, W. Samek, K.R. Müller, P. Kessel, Asymptotically unbiased estimation of physical observables with neural samplers. Phys. Rev. E 101(2), 023–304 (2020). https://doi.org/10.1103/PhysRevE.101.023304. arXiv:1910.13496 [cond-mat.stat-mech]

K.A. Nicoli, C.J. Anders, L. Funcke, T. Hartung, K. Jansen, P. Kessel, S. Nakajima, P. Stornati, On estimation of thermodynamic observables in lattice field theories with deep generative models (2020). arXiv:2007.07115 [hep-lat]

D.C. Hackett, C.C. Hsieh, M.S. Albergo, D. Boyda, J.W. Chen, K.F. Chen, K. Cranmer, G. Kanwar, P.E. Shanahan, Flow-based sampling for multimodal distributions in lattice field theory (2021). arXiv:2107.00734 [hep-lat]

L. Del Debbio, J.M. Rossney, M. Wilson, Efficient modelling of trivializing maps for lattice \(\phi ^4\) theory using normalizing flows: a first look at scalability (2021). arXiv:2105.12481 [hep-lat]

G. Kanwar, M.S. Albergo, D. Boyda, K. Cranmer, D.C. Hackett, S. Racanière, D.J. Rezende, P.E. Shanahan, Equivariant flow-based sampling for lattice gauge theory. Phys. Rev. Lett. 125(12), 121–601 (2020). https://doi.org/10.1103/PhysRevLett.125.121601. arXiv:2003.06413 [hep-lat]

D. Boyda, G. Kanwar, S. Racanière, D.J. Rezende, M.S. Albergo, K. Cranmer, D.C. Hackett, P.E. Shanahan, Sampling using \(SU(N)\) gauge equivariant flows. Phys. Rev. D 103(7), 074–504 (2021). https://doi.org/10.1103/PhysRevD.103.074504. arXiv:2008.05456 [hep-lat]

S. Foreman, X.Y. Jin, J.C. Osborn, Deep learning Hamiltonian Monte Carlo (2021). arXiv:2105.03418 [hep-lat]

S. Foreman, T. Izubuchi, L. Jin, X.Y. Jin, J.C. Osborn, A. Tomiya, HMC with normalizing flows (2021). arXiv:2112.01586 [cs.LG]

S. Foreman, X.Y. Jin, J.C. Osborn, LeapfrogLayers: a trainable framework for effective topological sampling. PoS LATTICE2021, 508 (2022).https://doi.org/10.22323/1.396.0508. arXiv:2112.01582 [hep-lat]

M.S. Albergo, D. Boyda, K. Cranmer, D.C. Hackett, G. Kanwar, S. Racanière, D.J. Rezende, F. Romero-López, P.E. Shanahan, J.M. Urban, Flow-based sampling in the lattice Schwinger model at criticality. Phys. Rev. D 106(1), 014–514 (2022). https://doi.org/10.1103/PhysRevD.106.014514. arXiv:2202.11712 [hep-lat]

J. Finkenrath, Tackling critical slowing down using global correction steps with equivariant flows: the case of the Schwinger model (2022). arXiv:2201.02216 [hep-lat]

R. Abbott, M.S. Albergo, D. Boyda, K. Cranmer, D.C. Hackett, G. Kanwar, S. Racanière, D.J. Rezende, F. Romero-López, P.E. Shanahan, B. Tian, J.M. Urban, Gauge-equivariant flow models for sampling in lattice field theories with pseudofermions. Phys. Rev. D 106(7), 074–506 (2022). https://doi.org/10.1103/PhysRevD.106.074506. arXiv:2207.08945 [hep-lat]

R. Abbott, M.S. Albergo, A. Botev, D. Boyda, K. Cranmer, D.C. Hackett, G. Kanwar, A.G. Matthews, S. Racanière, A. Razavi, D.J. Rezende, F. Romero-López, P.E. Shanahan, J.M. Urban, Sampling QCD field configurations with gauge-equivariant flow models (2022). arXiv:2208.03832 [hep-lat]

M.S. Albergo, G. Kanwar, S. Racanière, D.J. Rezende, J.M. Urban, D. Boyda, K. Cranmer, D.C. Hackett, P.E. Shanahan, Flow-based sampling for fermionic lattice field theories (2021). arXiv:2106.05934 [hep-lat]

M. Gabrié, G.M. Rotskoff, E. Vanden-Eijnden, Adaptive Monte Carlo augmented with normalizing flows (2021). arXiv:2105.12603 [physics.data-an]

P. de Haan, C. Rainone, M.C.N. Cheng, R. Bondesan, Scaling up machine learning for quantum field theory with equivariant continuous flows (2021). arXiv:2110.02673 [cs.LG]

S. Lawrence, Y. Yamauchi, Normalizing flows and the real-time sign problem. Phys. Rev. D 103(11), 114–509 (2021). https://doi.org/10.1103/PhysRevD.103.114509. arXiv:2101.05755 [hep-lat]

X.Y. Jin, Neural network field transformation and its application in HMC (2022). arXiv:2201.01862 [hep-lat]

J.M. Pawlowski, J.M. Urban, Flow-based density of states for complex actions (2022). arXiv:2203.01243 [hep-lat]

M. Gerdes, P. de Haan, C. Rainone, R. Bondesan, M.C.N. Cheng, learning lattice quantum field theories with equivariant continuous flows (2022). arXiv:2207.00283 [hep-lat]

A. Singha, D. Chakrabarti, V. Arora, Conditional normalizing flow for Monte Carlo sampling in lattice scalar field theory (2022). arXiv:2207.00980 [hep-lat]

A. Matthews, M. Arbel, D.J. Rezende, A. Doucet, Continual repeated annealed flow transport Monte Carlo, 162, 15,196–15,219 (2022). https://proceedings.mlr.press/v162/matthews22a.html

M. Caselle, E. Cellini, A. Nada, M. Panero, Stochastic normalizing flows as non-equilibrium transformations (2022). arXiv:2201.08862 [hep-lat]

M. Caselle, E. Cellini, A. Nada, M. Panero, Stochastic normalizing flows for lattice field theory (2022). arXiv:2210.03139 [hep-lat]

L. Wang, Exploring cluster Monte Carlo updates with Boltzmann machines. Phys. Rev. E 96, 051–301 (2017). https://doi.org/10.1103/PhysRevE.96.051301

L. Huang, L. Wang, Accelerated Monte Carlo simulations with restricted Boltzmann machines. Phys. Rev. B 95(3) (2017). https://doi.org/10.1103/physrevb.95.035105

J. Song, S. Zhao, S. Ermon, A-nice-mc: adversarial training for mcmc (2018). arXiv:1706.07561 [stat.ML]

A. Tanaka, A. Tomiya, Towards reduction of autocorrelation in HMC by machine learning (2017). arXiv:1712.03893 [hep-lat]

D. Levy, M.D. Hoffman, J. Sohl-Dickstein, Generalizing Hamiltonian Monte Carlo with neural networks (2018). arXiv:1711.09268 [stat.ML]

J.M. Pawlowski, J.M. Urban, Reducing autocorrelation times in lattice simulations with generative adversarial networks. Mach. Learn. Sci. Tech. 1, 045,011 (2020). https://doi.org/10.1088/2632-2153/abae73. arXiv:1811.03533 [hep-lat]

G. Cossu, L. Del Debbio, T. Giani, A. Khamseh, M. Wilson, Machine learning determination of dynamical parameters: the Ising model case. Phys. Rev. B 100(6), 064–304 (2019). https://doi.org/10.1103/PhysRevB.100.064304. arXiv:1810.11503 [physics.comp-ph]

D. Wu, L. Wang, P. Zhang, Solving statistical mechanics using variational autoregressive networks. Phys. Rev. Lett. 122, 080–602 (2019). https://doi.org/10.1103/PhysRevLett.122.080602

D. Bachtis, G. Aarts, B. Lucini, Extending machine learning classification capabilities with histogram reweighting. Phys. Rev. E 102(3), 033–303 (2020). https://doi.org/10.1103/PhysRevE.102.033303. arXiv:2004.14341 [cond-mat.stat-mech]

Y. Nagai, A. Tanaka, A. Tomiya, Self-learning Monte-Carlo for non-abelian gauge theory with dynamical fermions (2020). arXiv:2010.11900 [hep-lat]

A. Tomiya, Y. Nagai, Gauge covariant neural network for 4 dimensional non-abelian gauge theory (2021). arXiv:2103.11965 [hep-lat]

D. Bachtis, G. Aarts, F. Di Renzo, B. Lucini, Inverse renormalization group in quantum field theory. Phys. Rev. Lett. (2021). arXiv:2107.00466 [hep-lat]

D. Wu, R. Rossi, G. Carleo, Unbiased Monte Carlo cluster updates with autoregressive neural networks (2021). arXiv:2105.05650 [cond-mat.stat-mech]

A. Tomiya, S. Terasaki, GomalizingFlow.jl: a Julia package for Flow-based sampling algorithm for lattice field theory (2022). arXiv:2208.08903 [hep-lat]

B. Máté, F. Fleuret, Deformation theory of Boltzmann distributions (2022). arXiv:2210.13772 [hep-lat]

S. Chen, O. Savchuk, S. Zheng, B. Chen, H. Stoecker, L. Wang, K. Zhou, Fourier-flow model generating Feynman paths (2022). arXiv:2211.03470 [hep-lat]

D.J. Rezende, S. Mohamed, Variational inference with normalizing flows (2016). arXiv:1505.05770 [stat.ML]

L. Dinh, J. Sohl-Dickstein, S. Bengio, Density estimation using Real NVP (2017). arXiv:1605.08803 [cs.LG]

G. Papamakarios, E. Nalisnick, D.J. Rezende, S. Mohamed, B. Lakshminarayanan, Normalizing flows for probabilistic modeling and inference (2019). arXiv:1912.02762 [stat.ML]

D.H. Weingarten, D.N. Petcher, Monte Carlo integration for lattice gauge theories with fermions. Phys. Lett. B 99, 333–338 (1981). https://doi.org/10.1016/0370-2693(81)90112-X

F. Fucito, E. Marinari, G. Parisi, C. Rebbi, A proposal for Monte Carlo simulations of fermionic systems. Nucl. Phys. B 180, 369 (1981). https://doi.org/10.1016/0550-3213(81)90055-9

R.M. Neal et al., MCMC using Hamiltonian dynamics. Handbook of Markov chain Monte Carlo vol. 2(11), p. 2 (2011)

C. Gattringer, C.B. Lang, Quantum chromodynamics on the lattice, vol. 788 (Springer, Berlin, 2010). https://doi.org/10.1007/978-3-642-01850-3

T. DeGrand, C.E. Detar, Lattice methods for quantum chromodynamics (2006)

L. Dinh, D. Krueger, Y. Bengio, Nice: non-linear independent components estimation (2014). arXiv:1410.8516

C. Durkan, A. Bekasov, I. Murray, G. Papamakarios, Neural spline flows. Advances in neural information processing systems, vol. 32 (2019)

D.J. Rezende, G. Papamakarios, S. Racanière, M.S. Albergo, G. Kanwar, P.E. Shanahan, K. Cranmer, Normalizing flows on Tori and spheres (2020). arXiv:2002.02428 [stat.ML]

L. Zhang, W. E, L. Wang, Monge–Ampère flow for generative modeling (2018). arXiv:1809.10188 [cs.LG]

C.W. Huang, R.T. Chen, C. Tsirigotis, A. Courville, Convex potential flows: universal probability distributions with optimal transport and convex optimization (2020). arXiv:2012.05942 [cs.LG]

B. Amos, L. Xu, J.Z. Kolter, Input convex neural networks 70, 146–155 (2017). arXiv:1609.07152 [cs.LG]

R.T. Chen, Y. Rubanova, J. Bettencourt, D.K. Duvenaud, Neural ordinary differential equations. Advances in neural information processing systems, vol. 31 (2018)

N. Metropolis, A.W. Rosenbluth, M.N. Rosenbluth, A.H. Teller, E. Teller, Equation of state calculations by fast computing machines. J. Chem. Phys. 21, 1087–1092 (1953). https://doi.org/10.1063/1.1699114

W.K. Hastings, Monte Carlo sampling methods using Markov chains and their applications. Biometrika 57, 97–109 (1970). https://doi.org/10.1093/biomet/57.1.97

L. Tierney, Markov chains for exploring posterior distributions. Ann. Stat. 1701–1728 (1994)

S. Kullback, R.A. Leibler, On information and sufficiency. Ann. Math. Stat. 22(1), 79–86 (1951). https://doi.org/10.1214/aoms/1177729694

Q. Liu, J. Lee, M. Jordan, A kernelized Stein discrepancy for goodness-of-fit tests, pp. 276–284 (2016)

J. Gorham, L. Mackey, Measuring sample quality with kernels, pp. 1292–1301 (2017)

A. Hyvärinen, P. Dayan, Estimation of non-normalized statistical models by score matching. J Mach Learn Res 6(4) (2005)

O. Johnson, Information theory and the central limit theorem (World Scientific, Singapore, 2004)

D.P. Kingma, J. Ba, Adam: a method for stochastic optimization (2017). arXiv:1412.6980 [cs.LG]

H. Wu, J. Köhler, F. Noé, Stochastic normalizing flows. Adv. Neural. Inf. Process. Syst. 33, 5933–5944 (2020). arXiv:2002.06707 [stat.ML]

D. Nielsen, P. Jaini, E. Hoogeboom, O. Winther, M. Welling, SurVAE flows: surjections to bridge the gap between VAEs and flows. Adv. Neural. Inf. Process. Syst. 33, 12685–12696 (2020)

M. Dibak, L. Klein, F. Noé, Temperature-steerable flows (2020). arXiv:2012.00429

M. Arbel, A. Matthews, A. Doucet, Annealed flow transport Monte Carlo. In: International Conference on Machine Learning, pp. 318–330 (2021)

M. Lüscher, Trivializing maps, the Wilson flow and the HMC algorithm. Commun. Math. Phys. 293, 899–919 (2010). https://doi.org/10.1007/s00220-009-0953-7. arXiv:0907.5491 [hep-lat]

G.P. Engel, S. Schaefer, Testing trivializing maps in the Hybrid Monte Carlo algorithm. Comput. Phys. Commun. 182, 2107–2114 (2011). https://doi.org/10.1016/j.cpc.2011.05.004. arXiv:1102.1852 [hep-lat]

D. Albandea, L. Del Debbio, P. Hernández, R. Kenway, J. Marsh Rossney, A. Ramos, Learning trivializing flows. Eur. Phys. J. C 83(7), 676 (2023). https://doi.org/10.1140/epjc/s10052-023-11838-8. arXiv:2302.08408 [hep-lat]

M.D. Parno, Y.M. Marzouk, Transport map accelerated Markov Chain Monte Carlo. SIAM/ASA J. Uncertain. Quantif. 6(2), 645–682 (2018). https://doi.org/10.1137/17M1134640

S.H. Li, L. Wang, Neural network renormalization group. Phys. Rev. Lett. 121, 260–601 (2018). https://doi.org/10.1103/PhysRevLett.121.260601. arxiv:1802.02840 [cond-mat.stat-mech]

M. Hoffman, P. Sountsov, J.V. Dillon, I. Langmore, D. Tran, S. Vasudevan, NeuTra-lizing bad geometry in Hamiltonian Monte Carlo using neural transport (2019)

L. Grenioux, A. Oliviero Durmus, E. Moulines, M. Gabrié, in Proceedings of the 40th International Conference on Machine Learning, Proceedings of Machine Learning Research, vol. 202, ed. by A. Krause, E. Brunskill, K. Cho, B. Engelhardt, S. Sabato, J. Scarlett (PMLR, 2023), pp. 11698–11733. https://proceedings.mlr.press/v202/grenioux23a.html

A. Doucet, N. De Freitas, N.J. Gordon et al., Sequential Monte Carlo methods in practice, vol. 1 (Springer, New York, 2001)

J.S. Liu, J.S. Liu, Monte Carlo strategies in scientific computing, vol. 10 (Springer, New York, 2001)

A. Ukawa, Computational cost of full QCD simulations experienced by CP-PACS and JLQCD Collaborations. Nucl. Phys. B Proc. Suppl. 106, 195–196 (2002). https://doi.org/10.1016/S0920-5632(01)01662-0

L. Del Debbio, Recent progress in simulations of gauge theories on the lattice. J. Phys. Conf. Ser. 640(1), 012,049 (2015). https://doi.org/10.1088/1742-6596/640/1/012049

A. Beskos, N. Pillai, G. Roberts, J.M. Sanz-Serna, A. Stuart, Optimal tuning of the hybrid Monte Carlo algorithm. Bernoulli 19(5A), 1501–1534 (2013)

S. Schaefer, Status and challenges of simulations with dynamical fermions. PoS LATTICE2012, 001 (2012). https://doi.org/10.22323/1.164.0001. arXiv:1211.5069 [hep-lat]

M. Hasenbusch, Full QCD algorithms towards the chiral limit. Nucl. Phys. B Proc. Suppl. 129, 27–33 (2004). https://doi.org/10.1016/S0920-5632(03)02504-0. arXiv:hep-lat/0310029

F. Jegerlehner, R.D. Kenway, G. Martinelli, C. Michael, O. Pene, B. Petersson, R. Petronzio, C.T. Sachrajda, K. Schilling, Requirements for high performance computing for lattice QCD: Report of the ECFA working panel (2000). https://doi.org/10.5170/CERN-2000-002

T. Lippert, Cost of QCD simulations with n(f) = 2 dynamical Wilson fermions. Nucl. Phys. B Proc. Suppl. 106, 193–194 (2002). https://doi.org/10.1016/S0920-5632(01)01661-9. arXiv:hep-lat/0203009

M. Luscher, S. Schaefer, Non-renormalizability of the HMC algorithm. JHEP 04, 104 (2011). https://doi.org/10.1007/JHEP04(2011)104. arXiv:1103.1810 [hep-lat]

L. Del Debbio, G.M. Manca, E. Vicari, Critical slowing down of topological modes. Phys. Lett. B 594, 315–323 (2004). https://doi.org/10.1016/j.physletb.2004.05.038. arXiv:hep-lat/0403001

M. Hasenbusch, Speeding up the hybrid Monte Carlo algorithm for dynamical fermions. Phys. Lett. B 519, 177–182 (2001). https://doi.org/10.1016/S0370-2693(01)01102-9. arXiv:hep-lat/0107019

J. Brannick, R.C. Brower, M.A. Clark, J.C. Osborn, C. Rebbi, Adaptive multigrid algorithm for lattice QCD. Phys. Rev. Lett. 100, 041–601 (2008). https://doi.org/10.1103/PhysRevLett.100.041601. arXiv:0707.4018 [hep-lat]

R. Babich, J. Brannick, R.C. Brower, M.A. Clark, T.A. Manteuffel, S.F. McCormick, J.C. Osborn, C. Rebbi, Adaptive multigrid algorithm for the lattice Wilson–Dirac operator. Phys. Rev. Lett. 105, 201–602 (2010). https://doi.org/10.1103/PhysRevLett.105.201602. arXiv:1005.3043 [hep-lat]

S.L. Smith, P.J. Kindermans, C. Ying, Q.V. Le, Don’t decay the learning rate, increase the batch size (2017). arXiv:1711.00489

M.D. Zeiler, Adadelta: an adaptive learning rate method (2012). arXiv:1212.5701

K. He, X. Zhang, S. Ren, J. Sun, Delving deep into rectifiers: surpassing human-level performance on ImageNet classification (2015). arXiv:1502.01852 [cs.CV]

X. Glorot, Y. Bengio, Understanding the difficulty of training deep feedforward neural networks, pp. 249–256 (2010)

T. Brown, B. Mann, N. Ryder, M. Subbiah, J.D. Kaplan, P. Dhariwal, A. Neelakantan, P. Shyam, G. Sastry, A. Askell et al., Language models are few-shot learners. Adv. Neural. Inf. Process. Syst. 33, 1877–1901 (2020)

S. Smith, M. Patwary, B. Norick, P. LeGresley, S. Rajbhandari, J. Casper, Z. Liu, S. Prabhumoye, G. Zerveas, V. Korthikanti, et al., Using deepspeed and megatron to train megatron-turing nlg 530b, a large-scale generative language model (2022). arXiv:2201.11990

A. Reuther, J. Kepner, C. Byun, S. Samsi, W. Arcand, D. Bestor, B. Bergeron, V. Gadepally, M. Houle, M. Hubbell, et al., Interactive supercomputing on 40,000 cores for machine learning and data analysis, in 2018 IEEE High Performance extreme Computing Conference (HPEC), pp. 1–6 (2018). https://doi.org/10.1109/hpec.2018.8547629. arXiv:1807.07814 [cs.DC]

A. Paszke, et al., in Advances in Neural Information Processing Systems 32, ed. by H. Wallach, H. Larochelle, A. Beygelzimer, F. d’ Alché-Buc, E. Fox, R. Garnett (Curran Associates, Inc., 2019), pp. 8024–8035. http://papers.neurips.cc/paper/9015-pytorch-an-imperative-style-high-performance-deep-learning-library.pdf

J. Bradbury, R. Frostig, P. Hawkins, M.J. Johnson, C. Leary, D. Maclaurin, G. Necula, A. Paszke, J. VanderPlas, S. Wanderman-Milne, Q. Zhang, JAX: composable transformations of Python+NumPy programs (2018). http://github.com/google/jax

T. Hennigan, T. Cai, T. Norman, I. Babuschkin, Haiku: Sonnet for JAX (2020). http://github.com/deepmind/dm-haiku

A. Sergeev, M. Del Balso, Horovod: fast and easy distributed deep learning in TensorFlow (2018). arXiv:1802.05799 [cs.LG]

C.R. Harris, K.J. Millman, S.J. Van Der Walt, R. Gommers, P. Virtanen, D. Cournapeau, E. Wieser, J. Taylor, S. Berg, N.J. Smith et al., Array programming with numpy. Nature 585(7825), 357–362 (2020)

P. Virtanen, R. Gommers, T.E. Oliphant, M. Haberland, T. Reddy, D. Cournapeau, E. Burovski, P. Peterson, W. Weckesser, J. Bright et al., Scipy 1.0: fundamental algorithms for scientific computing in python. Nat. Methods 17(3), 261–272 (2020)

J.D. Hunter, Matplotlib: a 2d graphics environment. Comput. Sci. Eng. 9(3), 90–95 (2007). https://doi.org/10.1109/MCSE.2007.55

Acknowledgements

The authors thank Heiko Strathmann for detailed comments on a draft of this manuscript, and Gurtej Kanwar for useful discussions. RA, DCH, FRL, PES, and JMU are supported in part by the U.S. Department of Energy, Office of Science, Office of Nuclear Physics, under grant Contract Number DE-SC0011090. PES is additionally supported by the National Science Foundation under EAGER grant 2035015, by the U.S. DOE Early Career Award DE-SC0021006, by a NEC research award, and by the Carl G and Shirley Sontheimer Research Fund. KC and MSA are supported by the National Science Foundation under the award PHY-2141336. MSA thanks the Flatiron Institute for their hospitality. DB is supported by the Argonne Leadership Computing Facility, which is a U.S. Department of Energy Office of Science User Facility operated under contract DE-AC02-06CH11357. This work is funded by the U.S. National Science Foundation under Cooperative Agreement PHY-2019786 (The NSF AI Institute for Artificial Intelligence and Fundamental Interactions, http://iaifi.org/). This work is associated with an ALCF Aurora Early Science Program project, and used resources of the Argonne Leadership Computing Facility, which is a DOE Office of Science User Facility supported under Contract DEAC02-06CH11357. The authors acknowledge the MIT SuperCloud and Lincoln Laboratory Supercomputing Center [118] for providing HPC resources that have contributed to the research results reported within this paper. Numerical experiments and data analysis used PyTorch [119], JAX [120], Haiku [121], Horovod [122], NumPy [123], and SciPy [124]. Figures were produced using matplotlib [125].

Funding

Open Access funding provided by the MIT Libraries.

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by Heng-Tong Ding.

Appendix A: Details of numerical examples

Appendix A: Details of numerical examples

Numerical illustrations of flow models trained to sample scalar field configurations are optimized to model the action

where \(d=2\). Architectures are stacks of \(n_L\) affine coupling transformations with checkerboard variable partitioning, parametrized by convolutional neural networks, as implemented in Ref. [22]. All architectures have \(n_L=24\) coupling layers, convolutional kernel size \(K=5\), and two layers of hidden channels of width 12 within each neural network, except for the model used to generate the results shown in Figs. 6 and 8 where \(n_L\) and K differ as noted. Reverse KL self-training protocols are also similar to Ref. [22], but with the addition of learning rate or batch size scheduling where indicated. Gradient clipping has also been applied, specifically rescaling all gradients by a common factor as necessary to prevent the norm over all gradients from exceeding 100. Training and ESS evaluation both use batch size 16,384, except as noted in Fig. 1. Results shown in Fig. 6 are initially trained for 150,000 steps at \(\lambda =1.25\), then for 500 steps at each subsequent \(\lambda \), one after the other. This is sufficient to reach a slowly improving regime for all \(\lambda \), and produces comparable final results as from-scratch training or starting instead from \(\lambda =4\). In all cases, from-scratch training begins with learning rate \(10^{-3}\). For the results shown in Figs. 3, 5, and 6, when retraining, the learning rate is set to \(10^{-4}\) and the optimizer state is never reset (i.e. it is carried over from training for the previous parameters). Throughout, optimizer hyperparameters are the Pytorch 1.10 defaults unless otherwise specified.