Abstract

We introduce eco4cast,¹ an open-source package that aims to reduce the carbon footprint of machine learning models via predictive cloud computing scheduling. The package integrates with machine learning models and employs a temporal convolutional neural network to forecast daily carbon dioxide emissions stemming from electricity generation. The model attains high predictive accuracy by accounting for weather conditions, which are known to correlate strongly with the carbon intensity of energy. The key capability of eco4cast is identifying periods of minimal carbon intensity. This allows the package to run cloud computing tasks only during these periods, significantly reducing their ecological impact. Our contribution combines sustainability with computational efficiency. The code and documentation of the package are hosted on GitHub under the Apache 2.0 license.

Notes

https://github.com/AIRI-Institute/eco4cast.

Funding

This work was supported by ongoing institutional funding. No additional grants to carry out or direct this particular research were obtained.

Ethics declarations

The authors of this work declare that they have no conflicts of interest.

Additional information

Publisher’s Note.

Pleiades Publishing remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

APPENDIX A

USAGE EXAMPLES

A.1. Integration and Forecasting with eco4cast and Google Cloud

The ImageNet dataset, commonly used for training classification models, comprises 1000 distinct classes. A single training epoch on this dataset takes 2 h on an Nvidia V100 GPU. Because a full training run therefore spans many hours, it can benefit from eco4cast’s interval-based training strategy.

The principal way to use the eco4cast package is in combination with Google Cloud infrastructure. Users can choose a virtual machine (VM) with a customizable hardware configuration: central processing unit (CPU) type, graphics processing unit (GPU) allocation, random-access memory (RAM) capacity, and hard drive specification. Moreover, eco4cast handles the relocation of the designated VM across Google Cloud zones, transferring all data and models from the initial VM to a VM created with identical specifications in the target zone.

To obtain CO2 emission predictions, users must first create an account on electricitymaps.com and acquire an API key. The key serves as an authorization credential that grants access to the most recent emission data for different geographic areas. Once the key is supplied to eco4cast, the CO2 prediction model can retrieve the data it needs to produce forecasts.
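To illustrate how an API key is typically attached to an emission-data request, the following minimal sketch prepares (but does not send) such a request. The base URL, the `carbon-intensity/latest` path, and the `auth-token` header name are assumptions based on the public Electricity Maps v3 API and should be checked against its current documentation; `build_intensity_request` is a hypothetical helper and is not part of eco4cast.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Assumed base URL of the public Electricity Maps v3 API (verify before use).
BASE_URL = "https://api.electricitymap.org/v3"


def build_intensity_request(zone: str, api_key: str) -> Request:
    """Prepare a request for the latest carbon intensity of one zone.

    Hypothetical helper for illustration; the request is only built, not sent.
    """
    query = urlencode({"zone": zone})
    return Request(
        f"{BASE_URL}/carbon-intensity/latest?{query}",
        headers={"auth-token": api_key},  # assumed header name for the API key
    )


request = build_intensity_request("DE", "YOUR_API_KEY")
print(request.full_url)
```

Passing the prepared request to `urllib.request.urlopen` would perform the actual call; this is left out here so that the sketch runs without network access or a valid key.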

Further details on setting up Google Cloud and electricitymaps accounts, along with the code examples described below, are available in our GitHub repository at github.com/AIRI-Institute/eco4cast/examples.

The project employing eco4cast is structured into two fundamental segments:

• execution of a Python script on the local (master) machine.

• execution of a Python script on the virtual machine (VM).

The first script, master_machine_main.py, resides on the user’s local machine. It contains the parameters required to create a Google Cloud virtual machine (VM), which are passed as input arguments to the Controller class.

The Controller class is the core class of the package and is responsible for the following tasks:

• forecasting CO2 emissions for the upcoming 24 h.

• generating training time intervals within permissible zones.

• relocating the VM if necessary.

• carrying out the training process in the current zone.

• repeating the sequence once the zone-based intervals have elapsed.
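The interval-generation step listed above can be sketched as follows. This is a simplified illustration under stated assumptions, not the algorithm implemented in eco4cast: for every forecast hour it selects the zone with the lowest predicted carbon intensity and merges consecutive hours in the same zone into one interval, ignoring relocation cost. The `Interval` class, the `greenest_schedule` function, and the forecast values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Interval:
    zone: str
    start_hour: int  # inclusive, counted in hours from now
    end_hour: int    # exclusive


def greenest_schedule(forecasts: dict[str, list[float]]) -> list[Interval]:
    """Pick the lowest-intensity zone for each hour and merge adjacent hours.

    `forecasts` maps a zone name to its hourly intensity forecast.
    """
    horizon = min(len(values) for values in forecasts.values())
    schedule: list[Interval] = []
    for hour in range(horizon):
        best_zone = min(forecasts, key=lambda zone: forecasts[zone][hour])
        if schedule and schedule[-1].zone == best_zone:
            schedule[-1].end_hour = hour + 1  # extend the current interval
        else:
            schedule.append(Interval(best_zone, hour, hour + 1))
    return schedule


# Hypothetical 4-hour forecasts for two areas (arbitrary intensity units).
example = {"DE": [400.0, 380.0, 390.0, 420.0], "FR": [60.0, 70.0, 500.0, 50.0]}
for interval in greenest_schedule(example):
    print(interval)
```

A practical scheduler would additionally weigh the time and energy spent on each VM relocation, so that very short intervals in another zone are not worth a move.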

The second script, vm_main.py, handles the entire training logic on the VM. It uses PyTorch as the neural network framework. The script first initializes the model and dataset and obtains the training intervals through command-line arguments. Training itself is driven by the IntervalTrainer class.

The training state, including the states of the Dataloaders, the current epoch, batch details, loss history, and other training-related information, can be saved to a file at any stage of the training process. This is achieved by combining Lightning Fabric with custom Dataloaders. As a result, training can be paused at any point during an epoch, and the VM can be relocated without losing any training information.
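The idea of pausing in the middle of an epoch can be illustrated with a minimal sketch that uses only the Python standard library. It is not the eco4cast implementation, which relies on Lightning Fabric and custom Dataloaders; `ResumableLoader`, `save_checkpoint`, and `load_checkpoint` are hypothetical names, and shuffling is omitted for brevity.

```python
import json
import tempfile
from pathlib import Path


class ResumableLoader:
    """Hypothetical loader yielding batches of sample indices in order."""

    def __init__(self, num_samples: int, batch_size: int):
        self.num_samples = num_samples
        self.batch_size = batch_size
        self.epoch = 0
        self.next_index = 0  # first sample of the next batch to yield

    def __iter__(self):
        while self.next_index < self.num_samples:
            start = self.next_index
            stop = min(start + self.batch_size, self.num_samples)
            self.next_index = stop  # advance first, so a saved state skips this batch
            yield list(range(start, stop))
        self.epoch += 1  # epoch finished: reset for the next one
        self.next_index = 0

    def state_dict(self) -> dict:
        return {"epoch": self.epoch, "next_index": self.next_index}

    def load_state_dict(self, state: dict) -> None:
        self.epoch = state["epoch"]
        self.next_index = state["next_index"]


def save_checkpoint(path: Path, loader: ResumableLoader, loss_history: list) -> None:
    state = {"loader": loader.state_dict(), "loss_history": loss_history}
    path.write_text(json.dumps(state))


def load_checkpoint(path: Path, loader: ResumableLoader) -> list:
    state = json.loads(path.read_text())
    loader.load_state_dict(state["loader"])
    return state["loss_history"]


# Pause after two batches, then resume with a freshly created loader.
with tempfile.TemporaryDirectory() as tmp:
    ckpt = Path(tmp) / "checkpoint.json"
    loader, losses = ResumableLoader(num_samples=10, batch_size=4), []
    for batch in loader:
        losses.append(float(len(batch)))  # stand-in for a real loss value
        if len(losses) == 2:
            save_checkpoint(ckpt, loader, losses)
            break
    resumed = ResumableLoader(num_samples=10, batch_size=4)
    losses = load_checkpoint(ckpt, resumed)
    remaining = list(resumed)  # only the batches that were not yet processed
```

A loader that shuffles its data would also need to store the random seed or generator state so that the resumed epoch visits the same remaining samples.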

Moreover, users can customize the training process through Lightning Fabric callbacks. This flexibility allows researchers and practitioners to adapt the training process to their specific requirements.

A.2. Example with Local Usage

All of the functionality described above can also be used on a local machine without any dependence on Google Cloud services. If your machine is located within one of the supported geographic zones, eco4cast can schedule model training on your local hardware during the time intervals with minimal emissions in that region.

The implementation uses the CO2 Predictor and Interval Generator classes to generate training intervals for your specific zone. The IntervalTrainer class then runs model training during periods of minimal emissions, thereby reducing the CO2 emissions of training.
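For a single zone, choosing training intervals reduces to selecting the hours with the lowest forecast intensity. The sketch below shows one simple way to do this, assuming the required number of training hours is known in advance; `lowest_emission_intervals` and the forecast values are hypothetical and do not reproduce the package's Interval Generator.

```python
def lowest_emission_intervals(
    forecast: list[float], hours_needed: int
) -> list[tuple[int, int]]:
    """Select the `hours_needed` lowest-intensity hours of a forecast.

    Adjacent selected hours are merged into (start, end) pairs, end exclusive.
    """
    by_intensity = sorted(range(len(forecast)), key=forecast.__getitem__)
    chosen = sorted(by_intensity[:hours_needed])
    intervals: list[tuple[int, int]] = []
    for hour in chosen:
        if intervals and intervals[-1][1] == hour:
            intervals[-1] = (intervals[-1][0], hour + 1)  # extend last interval
        else:
            intervals.append((hour, hour + 1))
    return intervals


# Hypothetical 8-hour forecast (arbitrary intensity units), 4 training hours.
forecast = [300.0, 120.0, 110.0, 250.0, 400.0, 90.0, 95.0, 310.0]
print(lowest_emission_intervals(forecast, hours_needed=4))  # [(1, 3), (5, 7)]
```

Training would then run only inside the returned intervals and stay paused otherwise.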

As in the example above, the IntervalTrainer can use the callback system and save model states in the middle of an epoch.

APPENDIX B

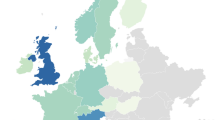

AREAS AND ZONES UNDER CONSIDERATION

B.1. Electricity Maps Areas

Currently supported Electricity Maps areas:

• BR-CS (Central Brazil)

• CA-ON (Canada–Ontario)

• CH (Switzerland)

• DE (Germany)

• PL (Poland)

• BE (Belgium)

• IT-NO (North Italy)

• CA-QC (Canada–Quebec)

• ES (Spain)

• GB (Great Britain)

• FI (Finland)

• FR (France)

• NL (Netherlands)

B.2. Google Cloud Zones

Each area or country contains multiple Google Cloud zones, which are distinguished by the letters “a,” “b,” and “c” at the end of the zone name.

In eco4cast, we use the correspondence between areas and zones given in Table 1.

Cite this article

Tiutiulnikov, M., Lazarev, V., Korovin, A. et al. eco4cast: Bridging Predictive Scheduling and Cloud Computing for Reduction of Carbon Emissions for ML Models Training. Dokl. Math. 108 (Suppl 2), S443–S455 (2023). https://doi.org/10.1134/S1064562423701223

DOI: https://doi.org/10.1134/S1064562423701223