Abstract

We consider the inverse problem of determining two unknown coefficients in a linear system of partial differential equations using additional information about one of the solution components. The problem is reduced to a nonlinear operator equation for one of the unknown coefficients. The successive approximation method and the Newton method are used to solve this operator equation numerically. Results of calculations illustrating the convergence of numerical methods for solving the inverse problem are presented.

1. INTRODUCTION

Consider the problem of determining functions \(u(x,t) \) and \(a(x,t) \) such that

This problem is a mathematical model of the sorption dynamics process [1, p. 174; 2, p. 6] under the assumption that the properties of the sorbent change over time.

The existence and uniqueness of the solution of the following inverse problem were studied in [3].

Assume that the functions \(\mu (t)\) and \(\psi (x) \) are given and the functions \(\gamma (t) \) and \(\varphi (t) \) are unknown. It is required to determine \(\gamma (t) \), \(\varphi (t)\), \(u(x,t) \), and \(a(x,t) \) from the following additional information about one of the components of the solution to problem (1.1)–(1.4):

We will assume that the given functions \(\mu (t) \), \(\psi (x)\), \(g(t) \), and \(p(t) \) satisfy the following conditions.

Conditions A.

One has \(\mu ,g,p\in C[0,T]\); \(\psi \in C[0,l] \); \(\mu (t)>0\), \(g(t)>0 \), and \(p(t)<0 \) for \(0\leq t\leq T \); \(\psi (x)\ge 0\) for \(0\leq x\leq l \); \(\psi (l)=0\); and \(\psi (x) \) does not vanish identically.

Let us define a solution of the inverse problem. Let \(t_0\in (0,T] \). We introduce the rectangle \(Q_{t_0}=\{(x,t):0\leq x\leq l,\ 0\leq t\leq t_0\}\).

Definition.

A quadruple of functions \((\gamma (t),\varphi (t), u(x,t),a(x,t)) \) is called a solution of the inverse problem for \(t\in [0,t_0]\) if \(\gamma ,\varphi \in C[0,t_0]\), \(u,u_x,a,a_t\in C(Q_{t_0}) \), \(\gamma (t)>0\) and \(\varphi (t)>0 \) for \(0\leq t\leq t_0 \), \(u(x,t)>0\) and \(a(x,t)\ge 0 \) for \((x,t)\in Q_{t_0} \), and \(\gamma (t) \), \(\varphi (t)\), \(u(x,t) \), and \(a(x,t) \) satisfy Eqs. (1.1) and (1.2) and conditions (1.3)–(1.6) in \(Q_{t_0}\).

We now recall some results from [3] that will be used below.

Given a function \(\gamma (t)\), consider the integral equation

for the function \(u(x,t) \), where

Under Conditions A, there exists a unique solution of the integral equation (1.7). To emphasize the dependence of this solution on the function \(\gamma (t)\), we denote it by \( u(x,t;\gamma )\).

Let us define the operator

where

It was shown in [3] that solving the inverse problem is reduced to solving the nonlinear operator equation

The present paper deals with numerical methods for solving Eq. (1.9) and the above-stated inverse problem. We use two iterative methods, the successive approximation method and the Newton method, to solve the nonlinear operator equation (1.9). Iterative methods for solving the operator equations to which inverse problems can be reduced have been studied in quite a few papers (see, e.g., [4–11]). Many of these methods are tailored to the specific features of the particular inverse problem under consideration.

Consider the successive approximation method for the operator equation (1.9).

Assume that the inequality

is satisfied. Define the positive constant

Let us introduce the set of functions

Consider the sequence of functions \(\gamma _n(t) \), \(n=0,1,2,\ldots \), recursively defined by the successive approximation method for solving Eq. (1.9),

The results in [3] imply the following assertion about the convergence of the successive approximation method.

Theorem 1.

Let the functions \(\mu (t) \), \(\psi (x)\), \(g(t) \), and \(p(t) \) satisfy conditions A and inequality (1.10). Then there exists a \( t_0\in (0,T]\) such that, for each function \(\gamma _0(t)\in \Gamma _0 \), the sequence of functions \(\gamma _n(t) \) belongs to the set \( \Gamma _0\) and converges uniformly as \(n\to \infty \) to a continuous function \(\bar {\gamma }(t) \) that is a solution of Eq. (1.9).
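A minimal Python sketch of the successive approximation method on a uniform grid is given below. Since the operator \(A\) from (1.8) depends on the solution of the integral equation (1.7), the sketch replaces it with a hypothetical toy operator \((A\gamma)(t)=f(t)+\lambda\int_0^t\sin\gamma(\tau)\,d\tau\), which is a contraction in the maximum norm when \(\lambda t_0<1\). The data \(f\), the constant \(\lambda\), the trapezoidal quadrature, and the stopping rule \(\max_i|\gamma_{n+1}(t_i)-\gamma_n(t_i)|\le\delta\) are illustrative assumptions; \(f\) is built from a chosen exact solution \(3+\sin(2\pi t)\), in the spirit of the synthetic-data experiments of Section 3.

```python
import math


def cumtrapz(values, h):
    """Cumulative trapezoidal rule: out[i] approximates the integral over [0, t_i]."""
    out = [0.0]
    for i in range(1, len(values)):
        out.append(out[-1] + 0.5 * h * (values[i - 1] + values[i]))
    return out


# Hypothetical toy setting (NOT the operator (1.8) of the paper).
t0, N, lam = 0.5, 200, 1.0
h = t0 / N
t = [i * h for i in range(N + 1)]
exact = [3.0 + math.sin(2.0 * math.pi * ti) for ti in t]
# Synthetic data: choose f so that `exact` is a fixed point of the discrete operator.
f = [g - lam * s for g, s in zip(exact, cumtrapz([math.sin(g) for g in exact], h))]


def A(gamma):
    """Toy operator (A gamma)(t) = f(t) + lam * int_0^t sin(gamma(tau)) dtau on the grid."""
    integral = cumtrapz([math.sin(g) for g in gamma], h)
    return [fi + lam * s for fi, s in zip(f, integral)]


def successive_approximations(operator, gamma0, delta, max_iter=100):
    """Iterate gamma_{n+1} = operator(gamma_n) until max_i |gamma_{n+1} - gamma_n| <= delta."""
    gamma = list(gamma0)
    for n in range(1, max_iter + 1):
        new = operator(gamma)
        step = max(abs(a - b) for a, b in zip(new, gamma))
        gamma = new
        if step <= delta:
            return gamma, n
    raise RuntimeError("no convergence within max_iter iterations")


# Constant initial approximation gamma_0(t) = 3, tolerance delta = 0.001.
approx, n_iter = successive_approximations(A, [3.0] * (N + 1), delta=1e-3)
error = max(abs(a - b) for a, b in zip(approx, exact))
```

Because the toy operator has Lipschitz constant at most \(\lambda t_0=0.5\) here, the final step bound \(\delta\) also controls the distance to the exact grid solution.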

2. NEWTON METHOD

Consider the application of the Newton method to the numerical solution of the inverse problem under study. As noted above, it suffices to apply the Newton method to the nonlinear operator equation (1.9).

To construct the Newton method, one needs the derivative of the operator defined by formula (1.8). First, let us study the differentiability of the solution of the integral equation (1.7) with respect to a parameter.

Consider functions \(\gamma (t)\) and \(\gamma _{\Delta }(t)\) and a number \(\varepsilon >0\) such that the functions \(\gamma (t) \) and \(\gamma (t)+\xi \gamma _{\Delta }(t)\) are positive and continuous on the interval \([0,t_0] \) for all \(\xi \in (-\varepsilon ,\varepsilon )\).

Lemma.

If conditions A are satisfied, then the solution \( u(x,t;\gamma +\xi \gamma _{\Delta }) \) of Eq. (1.7) has the partial derivative \(\dfrac {\partial u}{\partial \xi }(x,t;\gamma +\xi \gamma _{\Delta })\Big |_{\xi =0}\).

Proof. Consider the function

Since the functions \(u(x,t;\gamma +\xi \gamma _{\Delta })\) and \(u(x,t;\gamma ) \) are solutions of Eq. (1.7) for \(\gamma (t)+\xi \gamma _{\Delta }(t) \) and \(\gamma (t) \), respectively, it follows that \(v(x,t;\gamma ,\gamma _{\Delta },\xi )\) satisfies the equation

where

and \(F_2(x,t,s,\tau ;\gamma ,\gamma _{\Delta },\xi )= (\gamma (t)+\xi \gamma _{\Delta }(t)) H(x,s,t,\tau ;\gamma + \xi \gamma _{\Delta })R(\tau ;\gamma +\xi \gamma _{\Delta }).\) Passing to the limit as \(\xi \to 0 \), we obtain

where

and

Equation (2.1) is a Volterra integral equation of the second kind for the function \(v(x,t;\gamma ,\gamma _{\Delta },\xi )\). Solving it for \(v(x,t;\gamma ,\gamma _{\Delta },\xi )\), passing to the limit as \(\xi \to 0 \), and using formulas (2.2) and (2.3), we find that the derivative \({\partial u(x,t;\gamma +\xi \gamma _{\Delta })}/\partial \xi \) exists for \(\xi =0 \). Denote it by \(w(x,t;\gamma ,\gamma _{\Delta })\). Equation (2.1) implies that \(w(x,t;\gamma ,\gamma _{\Delta })\) is a solution of the integral equation

The proof of the lemma is complete.
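Equation (2.1) is a Volterra integral equation of the second kind. To illustrate why such equations are convenient to solve numerically, the following sketch treats a generic one-dimensional model equation \(v(t)=f(t)+\int_0^t K(t,\tau)v(\tau)\,d\tau\) (not the specific kernels of (2.1) or (2.4)) with the trapezoidal rule. Because the integral involves only values of \(v\) up to the current point, the unknowns can be computed one grid point at a time, each from a scalar linear equation. The function name `solve_volterra2` and its arguments are hypothetical.

```python
import math


def solve_volterra2(f, K, t0, N):
    """Trapezoidal-rule solver for v(t) = f(t) + int_0^t K(t, tau) v(tau) dtau on [0, t0].

    Returns the grid t_i = i * t0 / N and approximations v_i of v(t_i)."""
    h = t0 / N
    t = [i * h for i in range(N + 1)]
    v = [f(t[0])]  # the integral vanishes at t = 0
    for i in range(1, N + 1):
        # Quadrature terms with already known values: weight h/2 at tau_0, h at interior nodes.
        known = 0.5 * h * K(t[i], t[0]) * v[0]
        known += h * sum(K(t[i], t[j]) * v[j] for j in range(1, i))
        # The unknown v_i enters with weight h/2; solve the scalar linear equation for it.
        v.append((f(t[i]) + known) / (1.0 - 0.5 * h * K(t[i], t[i])))
    return t, v


# Model check: with K = 1 and f = 1 the exact solution is v(t) = exp(t).
t, v = solve_volterra2(lambda s: 1.0, lambda s, tau: 1.0, 1.0, 200)
```

The division requires \(1-hK(t_i,t_i)/2\neq 0\), which holds for a bounded kernel once the step \(h\) is small enough.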

Let us study the Gateaux differentiability of the operator \(A \) defined in (1.8). It follows from the results in [3] that there exists a \(t_0\in (0,T] \) such that \(A \) maps the set \(\Gamma _0 \) into itself. In what follows, we assume that the number \(t_0 \) satisfies this condition.

We introduce the set

Theorem 2.

If conditions A and inequality (1.10) are satisfied, then the operator \(A \) is Gateaux differentiable at each function \(\gamma \in \Gamma _{00} \).

Proof. Let \(\gamma (t) \) be an arbitrary function in \(\Gamma _{00} \), and let \(\gamma _{\Delta }(t) \) be a function and \(\xi \) a number such that the function \(\gamma (t)+\xi \gamma _{\Delta }(t)\) belongs to the set \(\Gamma _{00} \) as well. Let us show that the functions \(((A(\gamma +\xi \gamma _{\Delta }))(t)-(A\gamma )(t))/\xi \) converge uniformly on the interval \([0,t_0]\) as \(\xi \to 0 \).

Consider the functions

They converge uniformly on the interval \([0,t_0] \) to the function

as \(\xi \to 0 \).

It follows from the lemma that the functions

converge uniformly on the interval \([0,t_0] \) to the function

as \(\xi \to 0\). It follows from formulas (2.3), (2.5), and (2.6) that

where the function \(w(x,t;\gamma ,\gamma _{\Delta })\) is determined from Eq. (2.4). Thus, the operator \(A \) is Gateaux differentiable on the set \(\Gamma _{00} \), and its derivative \(A^{\prime}[\gamma ]\gamma _{\Delta } \) is determined by the right-hand side of Eq. (2.7). The proof of the theorem is complete.
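The Gateaux derivative in Theorem 2 is the uniform limit of the difference quotients \(((A(\gamma+\xi\gamma_{\Delta}))(t)-(A\gamma)(t))/\xi\). The sketch below checks this limit numerically for a hypothetical toy operator \((A\gamma)(t)=f(t)+\lambda\int_0^t\sin\gamma(\tau)\,d\tau\), whose derivative \(A'[\gamma]\gamma_{\Delta}=\lambda\int_0^t\cos\gamma(\tau)\,\gamma_{\Delta}(\tau)\,d\tau\) is known in closed form; it does not implement the derivative (2.7) of the operator (1.8). The grid, \(f\), \(\gamma\), and \(\gamma_{\Delta}\) are arbitrary choices made for illustration.

```python
import math


def cumtrapz(values, h):
    """Cumulative trapezoidal rule: out[i] approximates the integral over [0, t_i]."""
    out = [0.0]
    for i in range(1, len(values)):
        out.append(out[-1] + 0.5 * h * (values[i - 1] + values[i]))
    return out


# Hypothetical toy setting on a uniform grid over [0, t0].
t0, N, lam = 0.5, 100, 1.0
h = t0 / N
t = [i * h for i in range(N + 1)]
f = [3.0] * (N + 1)


def A(gamma):
    """Toy operator (A gamma)(t) = f(t) + lam * int_0^t sin(gamma(tau)) dtau."""
    integral = cumtrapz([math.sin(g) for g in gamma], h)
    return [fi + lam * s for fi, s in zip(f, integral)]


def A_prime(gamma, direction):
    """Closed-form Gateaux derivative: lam * int_0^t cos(gamma(tau)) direction(tau) dtau."""
    integral = cumtrapz([math.cos(g) * d for g, d in zip(gamma, direction)], h)
    return [lam * s for s in integral]


gamma = [3.0 + math.sin(2.0 * math.pi * ti) for ti in t]
gamma_delta = [math.cos(2.0 * math.pi * ti) for ti in t]
derivative = A_prime(gamma, gamma_delta)
base = A(gamma)


def quotient_error(xi):
    """Maximum deviation of the difference quotient from A'[gamma] gamma_delta on the grid."""
    shifted = A([g + xi * d for g, d in zip(gamma, gamma_delta)])
    quotient = [(s - b) / xi for s, b in zip(shifted, base)]
    return max(abs(q - d) for q, d in zip(quotient, derivative))


errors = [quotient_error(xi) for xi in (1e-1, 1e-2, 1e-3)]
```

For a smooth operator like this one, the maximum error of the difference quotient shrinks roughly in proportion to \(\xi\).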

The iterative process corresponding to the Newton method [12, p. 669] is defined as follows. An initial approximation \(\gamma _0(t) \) is specified, and the subsequent functions \(\gamma _{n+1}(t) \), \(n=0,1,\ldots \), are determined by the formula \(\gamma _{n+1}(t)=\gamma _n(t)+\gamma _{\Delta n}(t)\), where \(\gamma _{\Delta n}(t)\) is the solution of the linear integral equation
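A minimal sketch of the Newton iteration follows, under the assumption that Eq. (1.9) has the fixed-point form \(\gamma=A\gamma\) suggested by the successive approximation method (1.11). In that case each step solves \(\gamma_{\Delta n}-A'[\gamma_n]\gamma_{\Delta n}=A\gamma_n-\gamma_n\). The code uses a hypothetical toy operator \((A\gamma)(t)=f(t)+\lambda\int_0^t\sin\gamma(\tau)\,d\tau\) as a stand-in for (1.8); for this operator the linear equation for \(\gamma_{\Delta n}\) is a Volterra equation of the second kind, so with trapezoidal weights its matrix is lower triangular and forward substitution suffices. The data, grid, and stopping rule \(\max_i|\gamma_{\Delta n}(t_i)|\le\delta\) are illustrative assumptions.

```python
import math


def cumtrapz(values, h):
    """Cumulative trapezoidal rule: out[i] approximates the integral over [0, t_i]."""
    out = [0.0]
    for i in range(1, len(values)):
        out.append(out[-1] + 0.5 * h * (values[i - 1] + values[i]))
    return out


# Hypothetical toy setting with synthetic data built from a chosen exact solution.
t0, N, lam = 0.5, 200, 1.0
h = t0 / N
t = [i * h for i in range(N + 1)]
exact = [3.0 + math.sin(2.0 * math.pi * ti) for ti in t]
f = [g - lam * s for g, s in zip(exact, cumtrapz([math.sin(g) for g in exact], h))]


def A(gamma):
    """Toy operator (A gamma)(t) = f(t) + lam * int_0^t sin(gamma(tau)) dtau on the grid."""
    integral = cumtrapz([math.sin(g) for g in gamma], h)
    return [fi + lam * s for fi, s in zip(f, integral)]


def newton_correction(gamma):
    """Solve (I - A'[gamma]) d = A gamma - gamma, where
    (A'[gamma] d)(t) = lam * int_0^t cos(gamma(tau)) d(tau) dtau.
    With trapezoidal weights the system is lower triangular: use forward substitution."""
    r = [a - g for a, g in zip(A(gamma), gamma)]
    c = [math.cos(g) for g in gamma]
    d = [r[0]]  # at t = 0 the integral term vanishes
    known = 0.5 * h * c[0] * d[0]  # quadrature terms involving already computed d_j
    for i in range(1, len(gamma)):
        d_i = (r[i] + lam * known) / (1.0 - 0.5 * lam * h * c[i])
        d.append(d_i)
        known += h * c[i] * d_i  # t_i is an interior node for every later row
    return d


def newton(gamma0, delta, max_iter=50):
    """gamma_{n+1} = gamma_n + d_n, stopped when max_i |d_n| <= delta."""
    gamma = list(gamma0)
    for n in range(1, max_iter + 1):
        d = newton_correction(gamma)
        gamma = [g + di for g, di in zip(gamma, d)]
        if max(abs(di) for di in d) <= delta:
            return gamma, n
    raise RuntimeError("no convergence within max_iter iterations")


# Same constant initial approximation and tolerance as in the successive approximation sketch.
approx, n_iter = newton([3.0] * (N + 1), delta=1e-3)
error = max(abs(a - b) for a, b in zip(approx, exact))
```

For this toy operator the corrections decrease quadratically once the iterate is close to the solution, at the price of one linear solve per step.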

3. NUMERICAL EXPERIMENTS

Let us present the results of some numerical experiments in which the successive approximation method (1.11) and the Newton method (2.8) were used to solve the inverse problem under study.

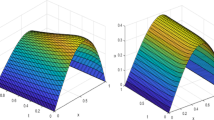

The general scheme of computational experiments was as follows. The functions \(\mu (t) \), \(\gamma (t) \), and \(\varphi (t) \) were specified on the interval \([0,T] \), and the function \(\psi (x) \) was defined on the interval \([0,l] \). With these functions, problem (1.1)–(1.4) was solved, and the functions \(g(t)=u(l,t)\) and \(p(t)=u_x(l,t) \) were determined. Then the operator equation (1.9) with the functions \(\mu (t) \), \(g(t) \), \(p(t) \), and \(\psi (x) \) was solved by the iterative methods (1.11) and (2.8), and the approximate function \(\tilde {\gamma }(t)\) was found. To determine the approximate function \(\tilde {\varphi }(t) \), we used the formula [3]

The same constant initial approximation \(\gamma _0(t)=\gamma _0 \) and the same stopping criterion

were used in the approximate solution of the operator equation (1.9) by both iterative methods.

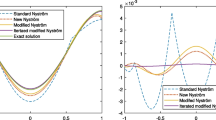

In the first computational experiment, \(T=0.5 \), \(l=1 \), and

Figure 1 shows the function \(\gamma (t)=3+\sin (2\pi t)\), the first iteration \(\gamma ^{I}_{1}(t)\) and the second iteration \(\gamma ^{I}_{2}(t)\) of the successive approximation method, and the first iteration \(\gamma ^{N}_{1}(t)\) and the second iteration \(\gamma ^{N}_{2}(t)\) of the Newton method. For \(\delta =0.001\), the successive approximation method stopped at the 9th iteration step, and the Newton method stopped at the 6th iteration step. At the scale of Fig. 1, the approximate solutions \(\tilde {\gamma }^{I}(t)=\gamma ^{I}_{9}(t)\) and \(\tilde {\gamma }^{N}(t)=\gamma ^{N}_{6}(t)\) thus obtained visually coincide with the exact solution \(\gamma (t)=3+\sin (2\pi t) \) and are therefore not shown.

Figure 2 shows the function \(\varphi (t)=0.5+0.1\cos (2\pi t)\) and the functions \(\varphi ^{I}_{1}(t)\), \(\varphi ^{I}_{2}(t) \), \(\varphi ^{N}_{1}(t) \), and \(\varphi ^{N}_{2}(t) \) obtained by the substitution of the functions \(\gamma ^{I}_{1}(t)\), \(\gamma ^{I}_{2}(t) \), \(\gamma ^{N}_{1}(t) \), and \(\gamma ^{N}_{2}(t) \), respectively, into formula (3.1). The approximate solutions \(\tilde {\varphi }^{I}(t)=\varphi ^{I}_{9}(t)\) and \(\tilde {\varphi }^{N}(t)=\varphi ^{N}_{6}(t)\) obtained in a similar manner visually coincide with the exact solution \(\varphi (t)=0.5+0.1\cos (2\pi t)\) and hence are not shown.

In the second computational experiment, \(T=0.5\), \(l=1 \), and

The parameter \(\delta \) was again set to \(0.001 \). As in Fig. 1, Fig. 3 shows the exact function \(\gamma (t)\) together with the functions obtained at the first two iterations of both methods. The stopping criterion was satisfied at the 7th step of the successive approximation method and at the 9th step of the Newton method. At the scale of Fig. 3, the approximate solutions \(\tilde {\gamma }^{I}(t)=\gamma ^{I}_{7}(t) \) and \(\tilde {\gamma }^{N}(t)=\gamma ^{N}_{9}(t)\) found at the final iterations of the two methods visually coincide with the exact solution.

Figure 4 shows the function \(\varphi (t)=2+0.5\sin (2\pi t)\) as well as the functions \(\varphi ^{I}_{1}(t)\), \(\varphi ^{I}_{2}(t) \), \(\varphi ^{N}_{1}(t) \), and \(\varphi ^{N}_{2}(t) \) corresponding to the first two iterations. The functions \(\tilde {\varphi }^{I}(t)=\varphi ^{I}_{7}(t)\) and \(\tilde {\varphi }^{N}(t)=\varphi ^{N}_{9}(t)\) found by formula (3.1) visually coincide in Fig. 4 with \(\varphi (t)=2+0.5\sin (2\pi t)\).

These examples, together with a number of other numerical calculations, allow us to conclude that both methods converge quite fast and that neither method has a significant advantage over the other in the rate of convergence: in the two experiments above, the successive approximation method required 9 and 7 iterations, and the Newton method required 6 and 9 iterations, respectively.

REFERENCES

[1] Tikhonov, A.N. and Samarskii, A.A., Uravneniya matematicheskoi fiziki (Equations of Mathematical Physics), Moscow: Izd. Mosk. Gos. Univ., 1999.

[2] Denisov, A.M. and Lukshin, A.V., Matematicheskie modeli neravnovesnoi dinamiki sorbtsii (Mathematical Models of Nonequilibrium Sorption Dynamics), Moscow: Izd. Mosk. Gos. Univ., 1989.

[3] Denisov, A.M., Existence and uniqueness of a solution of a system of nonlinear integral equations, Differ. Equations, 2020, vol. 56, no. 9, pp. 1140–1147.

[4] Bimuratov, S.Sh. and Kabanikhin, S.I., Solution of one-dimensional inverse problems of electrodynamics by the Newton–Kantorovich method, Comput. Math. Math. Phys., 1992, vol. 32, no. 12, pp. 1729–1743.

[5] Mönch, L., A Newton method for solving the inverse scattering problem for a sound-hard obstacle, Inverse Probl., 1996, vol. 12, no. 3, pp. 309–324.

[6] Kabanikhin, S.I., Scherzer, O., and Shishlenin, M.A., Iteration method for solving a two-dimensional inverse problem for hyperbolic equation, J. Inverse Ill-Posed Probl., 2003, vol. 11, no. 1, pp. 1–23.

[7] Samarskii, A.A. and Vabishchevich, P.N., Chislennye metody resheniya obratnykh zadach matematicheskoi fiziki (Numerical Methods for Solving Inverse Problems of Mathematical Physics), Moscow: Editorial URSS, 2004.

[8] Ma, Yan-Bo, Newton method for estimation of the Robin coefficient, J. Nonlin. Sci. Appl., 2015, vol. 8, no. 5, pp. 660–669.

[9] Denisov, A.M., Iterative method for solving an inverse coefficient problem for a hyperbolic equation, Differ. Equations, 2017, vol. 53, no. 7, pp. 943–949.

[10] Baev, A.V. and Gavrilov, S.V., An iterative way of solving the inverse scattering problem for an acoustic system of equations in an absorptive layered nonhomogeneous medium, Mosc. Univ. Comput. Math. Cybern., 2018, vol. 42, no. 2, pp. 55–62.

[11] Denisov, A.M., Iterative method for solving an inverse problem for a hyperbolic equation with a small parameter multiplying the highest derivative, Differ. Equations, 2019, vol. 55, no. 7, pp. 940–948.

[12] Kantorovich, L.V. and Akilov, G.P., Funktsional’nyi analiz (Functional Analysis), Moscow: Nauka, 1977.

Funding

This work was financially supported by the RF Ministry of Science and Higher Education via a program of the Moscow Center for Fundamental and Applied Mathematics under agreement no. 075-15-2019-1621.

Additional information

Translated by V. Potapchouck

Rights and permissions

Open Access. This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Gavrilov, S.V., Denisov, A.M. Numerical Solution Methods for a Nonlinear Operator Equation Arising in an Inverse Coefficient Problem. Diff Equat 57, 868–875 (2021). https://doi.org/10.1134/S0012266121070041

DOI: https://doi.org/10.1134/S0012266121070041