Abstract

This study examines consumers’ reactions to the communication styles of chatbots during failed service experiences. It explores whether the communication style adopted by a chatbot affects consumer satisfaction and behavioral intention, and how expectancy violations moderate these relationships in the service context. A pre-test examined the validity of the stimuli, in which chatbots were either task-oriented or social-oriented after consumers encountered a service failure. The main experiment was designed to manipulate the AI-based chatbot agent’s process and style of communication and to measure the role of expectancy violations. The results showed that interactions with chatbots using a social-oriented communication style enhance consumers’ interaction satisfaction and behavioral intention. Respondents perceived greater warmth when interacting with social-oriented chatbots than with task-oriented ones. Moreover, expectancy violation moderates the mediating role of warmth in the relationship between the chatbot’s communication style and interaction satisfaction, trust, and patronage intention. Setting chatbots’ communication styles to be social-oriented can help reduce negative emotions among consumers caused by service failure; specifically, the perception of warmth created by the social-oriented communication style can alleviate negative evaluations of service agents and companies, such as dissatisfaction and loss of interest. Therefore, in managerial practice, firms should choose a chatbot agent with a social-oriented communication style to recover the customer relationship after a service failure.

Introduction

In the service industry, human workers are increasingly being supported or even replaced by AI, thus changing the nature of service and the consumer experience (Ostrom et al., 2019). Such applications or agents, known as chatbots, are still far from perfect replacements for humans. Although people may not think there is anything wrong with algorithm-based chatbots, they may still attribute service failures to chatbots. Service failures often evoke negative emotions (e.g., anger, frustration, and helplessness) in consumers, thus leading to algorithmic aversion toward chatbots (Jones-Jang and Park, 2023). Such experiences cause consumers to feel dissatisfied when using services provided by robots (Tsai et al., 2021). However, there is little literature on how consumers respond to service failures caused by bots (Honig and Oron-Gilad, 2018). Companies typically react to this problem by transferring angry consumers to human employees for further assistance (Choi et al., 2021), thereby avoiding the more serious negative effects of double deviation; however, this option incurs additional costs. This raises the question of how the negative influence of chatbots after a service failure can be mitigated.

In recent studies on related topics, researchers have begun to pay increased attention to designing robot dialog to match human-like characteristics in a new attempt to improve the humanization of chatbots. For example, chatbots can be used as an additional communication channel to position the brand (Roy and Naidoo, 2021). Van Pinxteren et al. (2023) did more research on the effect of chatbot communication styles on the engagement of consumers. However, little research has examined how chatbot agents’ communication styles affect users’ reactions when they encounter service failures.

We suggest that increases in warmth and competence perception, as factors in the chatbot’s communication style, will benefit consumers’ experiences of service failure. Individuals generally use warmth and competence to attribute their minds to inanimate objects (Pitardi et al., 2021); i.e., the perception of warmth reflects social-emotional, and competence perception gives expression to functional (Wirtz et al., 2018). The chatbot is regarded as lacking a mind and does not generate ideas regarding social judgments (Pitardi et al., 2021). Communication style can serve as social clues that influence consumers’ perception of chatbots (Feine et al., 2019), which give chatbots a human-like interactive experience through mind perception (Breazeal, 2003b; Huang and Rust, 2018; Thomas et al., 2018), and affect consumers’ actual service evaluation (Webster and Sundaram, 2009). Therefore, this perception may be beneficial in interactive settings where the presence of chatbots could detract from the consumers’ experience.

The main cause of expectation violations is consumers’ falsely high expectations of chatbots. People believe that chatbots should perform precisely every time, and violations of such high expectations significantly lower users’ subsequent choices for those chatbots (Jones-Jang and Park, 2023). Most researchers consider the anthropomorphism of chatbots to be the primary influencing factor in expectancy violations. Anthropomorphism leads consumers to perceive another entity’s mental state (warmth and competence). It also influences one’s expectations regarding the agent’s abilities, including emotion recognition, planning, and communication (Waytz et al., 2010). The chatbot communication style, similar to human-like interaction, is also affected by expectation violations in service conversations (Chang and Kim, 2022; Rapp et al., 2021). The current study expands on these findings to propose that the degree of expectation violation induced by service failure should influence consumers’ psychological perception (warmth and competence) via chatbot communication styles (social and task).

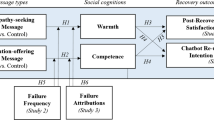

This study aims to investigate whether chatbot agents’ communication styles influence consumer satisfaction and behavioral intention by creating a service scenario and assessing how expectancy violation moderates these associations (see Fig. 1). This research potentially makes several contributions to the literature. First, the current study focuses on situations of service failure, whereas most of the existing literature focuses on the positive association between chatbots’ communication style and consumer experience. This study identifies a mechanism for mitigating negative evaluations of interactions, and for sustaining continued use of chatbots, from the perspective of the chatbot’s communication style. Second, based on the literature on consumers’ warmth and competence perceptions of chatbots, this study concentrates on how consumers perceive the chatbots’ communication style and investigates the effect on their interaction experience and subsequent reactions. Third, this study introduces expectancy violation and mind perception theory into the field of chatbots. The specific response of consumers caused by expectancy violation affects the efficacy of the technology. The chatbot’s communication style reduces consumers’ negative evaluations through mind perception, particularly in cases of high expectancy violation.

Theoretical framework and hypotheses

Related literature on human–computer interaction

A chatbot is an AI-driven conversational agent that allows users to search for information and converse through text (Lester et al., 2004). With the increasing complexity of AI interaction technology, distinguishing between human-computer and human-to-human interaction is becoming more challenging. Human-to-human interaction follows social norms, and similar conclusions can be drawn for human interactions with chatbots (Reeves and Nass, 1996). When a chatbot is socially competent, it may be considered an automated social presence (Van Doorn et al., 2017), in which individuals feel that they are engaging with someone else during human-computer contact. Therefore, human-computer interactions reflect interpersonal interactions to some extent. Researchers argue that the principles of interpersonal interaction theory can be extended to human-computer interactions (Westerman et al., 2020; Gambino et al., 2020).

To test the applicability of these theoretical ideas to a wider range of human-computer interaction contexts, researchers have explored how chatbots affect the human interaction experience in a variety of contexts (see Table 1). In positive service environments, giving chatbots human-like qualities can influence consumer attitudes and willingness to interact (Go and Sundar, 2019; Kim et al., 2019) and thereby optimize the customer experience (Roy and Naidoo, 2021). Ruan and Mezei (2022) proposed that when evaluating different attributes of a product, users report higher satisfaction with chatbots than with human frontline employees, particularly for experiential attributes. In service failure scenarios, most studies have shown that interacting with a chatbot causes people to make harsher evaluations of the service and even the company (Belanche et al., 2020; Jones-Jang and Park, 2023). This is because technology failures evoke negative emotions in consumers and generate more dissatisfaction with the service (Tuzovic and Kabadayi, 2021). Thus, individuals are likely to avoid using chatbots. However, Jones-Jang and Park (2023) found in their experiments on the perceived controllability of humans and chatbots that people have a more positive view of AI-driven bad results when AI is perceived to have less control than humans. Overall, the chatbot literature reviewed above indicates that understanding of responses to chatbot service failure remains limited.

Interaction with Chatbots

In the field of human-computer interaction, it is agreed that interactions result from the interplay between the user, the system, and the situation (Lallemand et al., 2015). Chatbots are based on AI and are designed for interaction, communication, and delivering chat functionalities to consumers. The literature on human-chatbot interactions suggests that human-human interactions show responses to social norms and that similar conclusions have been drawn in human-chatbot interactions (Nass et al., 1994; Reeves and Nass, 1996). According to the service robot acceptance model (SRAM) (Wirtz et al., 2018), functional, social, emotional, and associated elements frequently influence user adoption of bots. The chatbot’s appearance perception (i.e., anthropomorphism) can affect the user’s attitude and interaction intention (Go and Sundar, 2019; Kim et al., 2019). Breazeal (2003a) found that the social capabilities of chatbots (e.g., exhibiting socially appropriate actions and emotions) affect users’ interaction behavior; for example, when chatbots have social capabilities, users will be more willing to interact with them, thus having an improved chat experience (Huang and Rust, 2018). The automated social presence of robots makes consumers feel as though they are interacting with another social entity (Van Doorn et al., 2017). Thus, human-computer interaction reflects interpersonal interaction to some extent.

Consumers often encounter technology failures, i.e., service failures, in their interactions with chatbots. Such failures typically elicit negative feelings in consumers, such as anger and frustration. However, understanding of how individuals respond to service failures caused by chatbots is limited. Wang and Nakatsu (2013) found that irrelevant responses from chatbots may evoke negative user emotions (e.g., unhappiness and frustration). Ashktorab et al. (2019) highlighted the remedial measures to be taken when chatbots make a mistake: users prefer that the chatbot acknowledge the misunderstanding and proactively offer restorative solutions. Similarly, Sheehan et al. (2020) suggest that seeking clarification is an effective means of coping with chatbot communication errors and that its outcomes do not differ significantly from those of an error-free interaction. Therefore, this study adopts mind perception and expectancy violation theory to better understand consumers’ reactions to chatbot service failures.

Chatbots’ communication style

Chatbot communication style refers to the communication patterns that are specific to a chatbot, which are reflected in meaningful deployments of linguistic variation and are important social cues that influence consumer attitudes and behaviors (Feine et al., 2019). Based on the classification of communication style in interpersonal interaction and brand communication, the existing literature classifies the communication style of chatbots into social- and task-oriented types (Keeling et al., 2010; Chattaraman et al., 2019). The task-oriented communication style is more formal, involving purely on-task dialog (Keeling et al., 2010), and is highly goal-oriented and purposeful, comprising goal-setting, clarifying, and informing behaviors (Van Dolen et al., 2007). By contrast, the social-oriented conversational type includes educated and relational communication, such as regular greetings, small talk, emotional assistance, and positive sentiments (Van Pinxteren et al., 2023), with a personalized and socialized style, thus satisfying emotional needs and encouraging communication behaviors between partners that enhance intimacy (Van Dolen et al., 2007). In the context of anthropomorphizing chatbots, differences in conversation style will substantially affect users’ impressions of chatbot agents and their evaluation of the actual service experience. It is necessary to further study how to incorporate communication style into the design of chatbot agents (Thomas et al., 2018). Drawing on the existing literature, the present investigation fills a research gap by examining how consumers evaluate chatbot agents’ task-oriented and social-oriented communication styles in failure scenarios.

According to the designer’s specific needs for a situation, Amazon Alexa’s interactions are strictly task-oriented (Clark et al., 2019). This result is consistent with Lopatovska and Williams (2018), which found that users did not form relational behaviors with chatbots like those they formed with family members or friends. However, based on social cognitive theory, the message interactivity of chatbot agents can also promote a feeling of interacting with other people (i.e., social presence). Two interactants can recognize one another by exchanging messages back and forth, even in an online chat situation (Go and Sundar, 2019). This is attributed to the social presence of psychological factors featuring emotional closeness or social ties. Tsai et al. (2021) found that applying interpersonal social presence communication strategies to chatbots and having them show support for empathy and warmth can improve users’ prosocial interactions and perceived conversations, improving user interaction satisfaction and brand likability. Følstad et al. (2018) observed that a more human-like conversational style (e.g., appropriate humor) helps develop trust between users and chatbots. One possible reason is that social dialog may be more effective in building confidence than task-oriented dialog (Chattaraman et al., 2019). Users showed greater future intentions to use the robot when it engaged in a social-oriented conversation compared to when it did not (Iwamura et al., 2011). Consequently, this study anticipates that chatbots adopting a social (vs. task) -oriented communication style could enhance consumers’ interactive satisfaction, trust, and patronage intention. Based on this, we propose the following hypothesis:

H1: The social-oriented (vs. task-oriented) chatbots enhance consumers’ interactive satisfaction, trust, and patronage intention.

Dimensions of mind perception

The theory of mind perception suggests that thinking (agency) and feeling (experience) are the two dimensions of mental capacity that individuals attribute to human and non-human entities (Pitardi et al., 2021; Gray et al., 2007). These dimensions are integral to constructing social cognition, specifically warmth and competence. Warmth perceptions include reliability, friendliness, and kindness, whereas competence perceptions encompass capacity, cognitive ability, and skill (Cuddy et al., 2008). Van Doorn et al. (2017) suggested that these perceptions explain consumer reactions to technology in service interfaces. The service robot acceptance model (Wirtz et al., 2018) states that social, emotional, and relational aspects influence warmth, while functional factors determine competence.

The existing literature shows that, compared with human interactions, consumers remain skeptical of chatbots (Adam et al., 2021), have mixed satisfaction levels (Shumanov and Johnson, 2021), and show aversion when minor errors occur (i.e., algorithmic aversion; Jones-Jang and Park, 2023). Therefore, we highlight the social interaction characteristics of chatbots through communication style. Further, according to social cognitive theory, we believe that the communication style of chatbots will affect consumers’ service experience through consumers’ perception of competence and warmth, particularly in instances of service failure by a chatbot.

Research shows that personal competence is positively influenced by other people’s attitudes toward a given individual (Kim et al., 2019). Chatbots with higher competence can generate higher functional value, which is conducive to improving consumer attitudes (Chung et al., 2020). Følstad et al. (2018) identified that chatbots’ ability to articulate user requests and correctly provide useful responses is a key factor influencing user trust. However, the more social presence a user experiences while interacting with an online chat agent, the more emotional closeness and/or social connectedness the user feels. Enhancing this sense of connection will lead users to positively evaluate the agent, ultimately creating an intention to revisit the website in the future (Go and Sundar, 2019). Warmth perception is closely related to affective and interpersonal features (Yang et al., 2020), and socially oriented communication conveys feelings of affection and relational commitment (Williams and Spiro, 1985). We presume that when individuals interact with social-oriented chatbots, their interaction satisfaction, trust, and patronage intention increase through warmth perception, while competence perception mediates the effect of the task-oriented communication style on the dependent variables (interaction satisfaction, trust, and patronage intention). Therefore, this study derives the following hypotheses:

H2: a) The social-oriented chatbots make consumers feel a higher perception of warmth and thus have a positive impact on interaction satisfaction, trust, and patronage intention; and b) the task-oriented chatbots are positively associated with interaction satisfaction, trust, and patronage intention through competence perception.

Expectancy violations

When a product, brand, or company fails to meet consumer expectations, the resulting negative reaction is called expectancy violation (Cadotte et al., 1987; Sundar and Noseworthy, 2016). This occurs due to high pre-usage expectations and poor post-usage performance (Crolic et al., 2022). In human-computer interaction, expectancy violations caused by AI errors can adversely affect users. Jones-Jang and Park (2023) explained that initial positive perceptions of AI (automation bias) are replaced by acute disappointment when AI delivers unsatisfactory results (algorithmic aversion). People have unrealistic expectations about chatbot performance, leading to increased disappointment when encountering inadequate AI results (Alvarado-Valencia and Barrero, 2014). This falsely high expectation is the key driver of negative evaluations of chatbots. Crolic et al. (2022) regard the degree of anthropomorphism as the main factor influencing expectancy violation. People have higher expectations of the efficacy of highly anthropomorphic chatbots prior to an interaction, and the experience of service failure can lead to violations of consumer expectations and negatively impact interaction satisfaction, the overall evaluation of the company, and subsequent purchase intention. Combining language expectancy theory (Burgoon and Miller, 2018) with human-computer interaction, we presume that people will have certain expectations and preferences for the language use of chatbots, and specific preferences for competent and warm communication, depending on their relationships and situations with communication agents. If the communication style is considered the actual behavior of the chatbot, then the user’s response is the result of the interrelationship between the chatbot’s communication style and the user’s expectations (Chang and Kim, 2022; Rapp et al., 2021). This study predicts that service failure will lead to expectancy violations among consumers. Consumers with high expectancy violation will be more aware of the warmth conveyed by the social-oriented communication style, which alleviates the negative impact of service failure, while consumers with low expectancy violation will perceive more competence through the task-oriented communication style, which alleviates the negative consequences of service failure. Therefore, we propose the following hypotheses:

H3: a) The social- (vs. task-) oriented communication style will enhance the indirect effect on interaction satisfaction, trust, and patronage intention through warmth perception when the degree of expectancy violation is high; and b) when the degree of expectancy violation is low, the task-oriented communication style will enhance the indirect effect through competence perception.

Method

Stimulus materials

Previous literature has demonstrated the feasibility of manipulating the communication style of chatbots by using screenshots of conversations between customers and chatbots (Chung et al., 2020; Skjuve et al., 2022; Youn and Jin, 2021), so this study uses screenshots of conversations between a customer and a chatbot as the stimulus materials. Specifically, the research uses a virtual sports brand and designs two conversation screenshots (i.e., task- and social-oriented) with different chatbot communication styles. In the task-oriented dialog, the communication style is formal, agent interactions are limited to providing guidance and information to help users complete tasks, and the conversation is limited to a basic greeting (e.g., hello). In the social-oriented dialog, the communication style tends to be informal (i.e., interactive dialog forms), and the agent not only provides guidance and information but also maintains informal dialog through small talk, rhetorical inquiries, and endorsement (Chattaraman et al., 2019). Based on Van Dolen et al. (2007), Chattaraman et al. (2019), and Van Pinxteren et al. (2023), this study designed the task- and social-oriented conversation content between participants and chatbots in the service failure context (see Table 2). Furthermore, this study uses a robot avatar, rather than a human one, as the visual cue in the conversation (Go and Sundar, 2019).

Pre-tests

Before the formal study, the current work pre-tested the communication style and created two customer service conversations (task- and social-oriented communication styles). To ensure the validity of the dialog, 70 respondents were randomly assigned to read 1 of the 2 conversation screenshots, after which they rated the social and task orientation of the chatbot’s communication style in the screenshot (task condition: “This chatbot agent worked hard to provide information/was clearly goal oriented/was focused on product-related issues/wanted to make sure we made a decision about the product,” α = 0.762; social condition: “This chatbot agent was easy to talk with/genuinely liked to help customers/tried to establish a personal relationship/liked to talk and put people at ease,” Van Dolen et al. (2007); α = 0.838). Five-point Likert scales were used for responses (1 = “strongly disagree,” 5 = “strongly agree”). Our analysis confirmed that participants in the task condition rated the task orientation higher (Mtask = 3.693 vs. Msocial = 2.625; F (1, 67) = 107.20, p < 0.001); meanwhile, participants in the social condition rated the social orientation higher (Mtask = 2.671 vs. Msocial = 3.846; F (1, 67) = 98.957, p < 0.001).
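The α values reported for each scale are Cronbach's alpha coefficients. As a minimal sketch of how such a coefficient is computed from item-level responses, the snippet below uses only the Python standard library. The function name `cronbach_alpha` and the demo responses are hypothetical and are not the study's data.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    n_items = len(responses[0])
    if n_items < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    # Sample variance of each item across respondents.
    item_vars = [variance([row[i] for row in responses]) for i in range(n_items)]
    # Sample variance of each respondent's summed scale score.
    total_var = variance([sum(row) for row in responses])
    return (n_items / (n_items - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical five-point Likert answers from six respondents on four items.
demo = [
    [4, 4, 5, 4],
    [2, 3, 2, 2],
    [5, 4, 5, 5],
    [3, 3, 3, 2],
    [4, 5, 4, 4],
    [1, 2, 2, 1],
]
alpha = cronbach_alpha(demo)
print(round(alpha, 3))
```

Values closer to 1 indicate that the items vary together, which is what the reliabilities reported for the scales in this study summarize.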

Data, experiment design, procedure

We used a between-subjects design to test the hypotheses (see Fig. 2). In a scenario of service failure, we manipulated the communication style (task and social). One hundred forty-one participants (59.6% female, Mage = 31.28 years) were randomly assigned to one of two conditions. All panel participants were recruited from Sojump, the largest research platform in China.

All participants completed the same sequence of steps. First, participants assessed their interaction efficacy expectations for the chatbot’s future performance. Next, we asked participants to read a passage about a failure in online shopping. Based on Crolic et al. (2022), we constructed a virtual sports brand website and described how participants could buy a pair of shoes for an upcoming trip. The failure stimulus involved delayed delivery, quality problems with the goods, and complications with the subsequent return and replacement procedures. The scenarios of “product return and replacement” and “delivery delay” were used to reflect real failures that often occur in retail service environments. The service is considered to have failed because the chatbot agent could not understand the consumer’s needs or provide useful suggestions (Huang and Dootson, 2022). Therefore, the interaction outcome was intentionally made ambiguous, neither clearly successful nor unsuccessful. Subsequently, the realism of the interaction stimulus was verified (Bagozzi et al., 2016). After the stimulus, participants were informed that they would next interact with a chatbot agent. Those in the task condition viewed a screenshot of a conversation with a task-oriented chatbot, while those in the social condition viewed a screenshot of a conversation with a social-oriented chatbot. Finally, participants completed a questionnaire. In the last part of the survey, they answered demographic questions.

Measures

All measurement items were evaluated on a five-point Likert scale, with 1 representing “strongly disagree” and 5 representing “strongly agree.” Participants evaluated the chatbot’s communication style after completing the interaction in both social and task conditions. The social- and task-oriented measurement items aligned with the pre-test results. Definitions of all constructs are provided in Table 3.

Perceived warmth and competence

Based on Judd et al. (2005), four items were adopted for measuring perceived warmth (caring, friendly, warm, and sociable; α = 0.829) and four items for perceived competence (intelligent, energetic, organized, and motivated; α = 0.751).

Interaction satisfaction

Joosten et al.’s (2016) scales of satisfaction with the service process were used to evaluate participants’ interaction satisfaction (“I am satisfied/ contented/ happy about the interactions with the chatbot agency” and “The way the chatbot agency treated me during the interactions suited me well,” “I agree with the way the chatbot agency treated me during the interaction”; α = 0.913).

Trust

Following Bhattacherjee (2002) and Mozafari et al. (2021), the degree of trust was evaluated with a seven-item scale along three dimensions: ability, benevolence, and overall trust (“The chatbot agency has the necessary skills to deliver the service/has access to the information needed to handle my service request adequately/is fair in its conduct of my service request/has high integrity/ is receptive to my service request /makes efforts to address my service request/is trustworthy”; α = 0.887).

Patronage intention

Patronage intention was measured with three repurchase-expectation items from Keeling et al. (2010) (“I am likely to buy it again/to recommend it to friends/to revisit”; α = 0.865).

Expectancy violations

Following Crolic et al. (2022), we measured pre-interaction efficacy expectations (“I expect the chatbot agency to: do something for me/take action/be proactive in resolving my issues/ say things to calm me down”; α = 0.712) and post-interaction assessments (“I felt the chatbot agency: did a lot for me/ took action/ was proactive in resolving my issues/ said things to calm me down”; α = 0.798). Expectancy violations were determined by subtracting post-interaction evaluations from pre-interaction expectations, where higher values indicate greater violations.
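The difference score described above can be sketched as follows. This assumes each scale is scored as the mean of its four items, which the text does not state explicitly. The function name `expectancy_violation` and the example responses are hypothetical.

```python
from statistics import mean


def expectancy_violation(pre_items, post_items):
    """Mean pre-interaction expectation minus mean post-interaction evaluation.

    Assumption: each scale score is the mean of its items.
    Higher values indicate a greater expectancy violation.
    """
    return mean(pre_items) - mean(post_items)


# Hypothetical respondent with four five-point items per scale.
pre = [5, 4, 5, 4]    # expected efficacy before the interaction
post = [2, 3, 2, 3]   # perceived efficacy after the interaction
score = expectancy_violation(pre, post)
print(score)
```

A positive score means the chatbot fell short of what the respondent expected. A score of zero or below means expectations were met or exceeded.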

The realism of the scenario

To assess ecological validity, this study evaluated the realism of the scenario using three items as described by Bagozzi et al. (2016): “The scenario is realistic,” “The scenario is believable,” and “It was easy for me to put myself in the situation of the customer.” (α = 0.717).

Results

Using SPSS PROCESS model 4, this study examined the relationship between communication style and interaction satisfaction, trust, and patronage intention, as well as the mediating roles of competence and warmth perception (H1 and H2). The current study also tested the moderated mediation by expectancy violations based on 5000 bootstrapped samples (H3; Hayes, 2017, model 7).

Manipulation check

An independent-samples t-test indicated that respondents who viewed the social-oriented conversation screenshots rated the chatbot higher on social orientation (Mtask = 2.993, Msocial = 4.044, t(139) = −9.267, p < 0.001), while participants who viewed the task-oriented screenshots rated it higher on task orientation (Mtask = 3.914, Msocial = 3.514, t(139) = 3.171, p = 0.002). The manipulation of communication style was therefore successful, and the perceived realism of the scenario did not differ significantly between conditions (Mtask = 3.841, Msocial = 4.018, t(139) = −1.464, p = 0.198).
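For readers who want to see what the manipulation check computes, here is a minimal sketch of an equal-variance (pooled) independent-samples t statistic using only the Python standard library. The ratings are hypothetical, and whether the authors assumed equal variances is not stated in the text. The degrees of freedom are n1 + n2 − 2, which gives 139 for 141 participants.

```python
from math import sqrt
from statistics import mean, variance


def pooled_t(group_a, group_b):
    """Student's t statistic (equal variances assumed) and its degrees of freedom."""
    n_a, n_b = len(group_a), len(group_b)
    df = n_a + n_b - 2
    # Pooled variance weights each group's sample variance by its own df.
    pooled_var = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / df
    std_err = sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean(group_a) - mean(group_b)) / std_err, df


# Hypothetical social-orientation ratings in each condition.
task_ratings = [3, 3, 2, 4, 3]
social_ratings = [4, 5, 4, 4, 3]
t_stat, df = pooled_t(task_ratings, social_ratings)
print(round(t_stat, 3), df)
```

The sign follows the order of the arguments, so a negative value here means the social condition scored higher. With exactly two groups, the F statistic of a one-way ANOVA equals the square of this t statistic.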

Hypothesis testing

This study adopted the communication style (social style and task style) of the chatbot as the independent variable and participants’ interaction satisfaction, trust, and patronage intention as the dependent variables. A one-way ANOVA verified that the main effects of communication style on interaction satisfaction (Mtask = 2.881, SD = 0.998; Msocial = 3.802, SD = 0.735; F (1,139) = 39.548, p < 0.001), trust (Mtask = 3.269, SD = 0.868; Msocial = 4.037, SD = 0.494; F (1,139) = 42.768, p < 0.001), and patronage intention (Mtask = 2.836, SD = 1.042; Msocial = 3.820, SD = 0.796; F (1,139) = 40.139, p < 0.001) are all significant (see Fig. 3). In other words, the participants’ interaction satisfaction, trust, and patronage intention in the social condition are all higher than those in the task condition, supporting H1.

Mediation effect

Warmth and competence perceptions are the mediators, communication style is the independent variable (task-oriented = 0; social-oriented = 1), and interaction satisfaction, trust, and patronage intention are the dependent variables. The results (see Table 4) show that warmth mediates the impact of communication style on interaction satisfaction (b = 0.448, 95%CI = [0.225, 0.709]), trust (b = 0.336, 95%CI = [0.160, 0.562]), and patronage intention (b = 0.358, 95%CI = [0.161, 0.600]); none of these intervals includes zero. Specifically, adopting the social-oriented communication style makes the chatbot seem warmer (β = 0.683, p < 0.001), and warmth in turn improves consumers’ interaction satisfaction (β = 0.438, p < 0.001), trust (β = 0.414, p < 0.001), and patronage intention (β = 0.579, p < 0.001). However, the confidence intervals for the mediation effect of competence perception on the relationship between communication style and interaction satisfaction (b = 0.037, 95%CI = [−0.025, 0.126]), trust (b = 0.018, 95%CI = [−0.017, 0.097]), and patronage intention (b = 0.048, 95%CI = [−0.029, 0.178]) all include zero. Therefore, the mediation of warmth perception was supported (H2a), while the mediation of competence perception was not supported (H2b).
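The logic of a bootstrapped indirect effect can be illustrated with a short, self-contained sketch. It is not the PROCESS macro and does not reproduce the reported estimates. It fits the two regressions of a simple mediation model (M on X, then Y on X and M), multiplies the a and b paths, and builds a percentile confidence interval by resampling cases. The data are simulated, and the helper names `ols`, `indirect_effect`, and `bootstrap_ci` are hypothetical.

```python
import random


def ols(columns, y):
    """Least-squares coefficients [intercept, b1, b2, ...] via the normal equations."""
    n = len(y)
    X = [[1.0] + [col[i] for col in columns] for i in range(n)]
    k = len(X[0])
    # Augmented matrix [X'X | X'y].
    A = [[sum(X[r][i] * X[r][j] for r in range(n)) for j in range(k)]
         + [sum(X[r][i] * y[r] for r in range(n))] for i in range(k)]
    # Gauss-Jordan elimination with partial pivoting.
    for c in range(k):
        p = max(range(c, k), key=lambda r: abs(A[r][c]))
        A[c], A[p] = A[p], A[c]
        for r in range(k):
            if r != c:
                f = A[r][c] / A[c][c]
                A[r] = [v - f * w for v, w in zip(A[r], A[c])]
    return [A[i][k] / A[i][i] for i in range(k)]


def indirect_effect(x, m, y):
    a = ols([x], m)[1]       # path a: X -> M
    b = ols([x, m], y)[2]    # path b: M -> Y, controlling for X
    return a * b


def bootstrap_ci(x, m, y, n_boot=5000, seed=1):
    """95% percentile bootstrap interval for the indirect effect."""
    rng = random.Random(seed)
    n = len(x)
    estimates = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # resample cases with replacement
        estimates.append(indirect_effect([x[i] for i in idx],
                                         [m[i] for i in idx],
                                         [y[i] for i in idx]))
    estimates.sort()
    return estimates[int(0.025 * n_boot)], estimates[int(0.975 * n_boot) - 1]


# Simulated data: x is the condition (0 = task, 1 = social), m a warmth-like mediator.
rng = random.Random(0)
x = [i % 2 for i in range(100)]
m = [3.0 + 1.0 * xi + rng.gauss(0, 0.5) for xi in x]
y = [1.0 + 0.8 * mi + rng.gauss(0, 0.5) for mi in m]
point = indirect_effect(x, m, y)
low, high = bootstrap_ci(x, m, y, n_boot=1000)  # 1000 draws to keep the demo fast
print(round(point, 3), round(low, 3), round(high, 3))
```

Mediation is inferred when the interval excludes zero, which is the criterion applied to the warmth and competence paths above. The demo uses 1000 resamples for speed, whereas the study reports 5000.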

Moderated mediation

We tested the moderating effect of expectancy violation on the indirect effects of communication style on interaction satisfaction, trust, and patronage intention through a moderated mediation analysis (see Table 5). The mediators are warmth and competence perceptions, and expectancy violation is the moderator. Communication style is the independent variable, while interaction satisfaction, trust, and patronage intention are the dependent variables. The results show that expectancy violation moderated the mediation of warmth perception between the communication style of chatbots and interaction satisfaction (b = 1.677, 95% CI = [1.126, 2.798], excludes zero), trust (b = 1.265, 95% CI = [0.730, 2.230], excludes zero), and patronage intention (index of moderated mediation = 1.352, 95% CI = [0.776, 2.343], excludes zero). However, the mediation of perceived competence between communication style and interaction satisfaction (b = 0.107, 95% CI = [−0.016, 0.440], includes zero), trust (b = 0.051, 95% CI = [−0.162, 0.259], includes zero), and patronage intention (b = 0.139, 95% CI = [−0.018, 0.591], includes zero) was not moderated by expectancy violation.
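
For readers unfamiliar with the index of moderated mediation, the sketch below shows how it relates to conditional indirect effects. It assumes that the moderator acts on the first stage of the mediation (the path from communication style to the mediator), as in Model 7 of Hayes (2017); this section does not state which model was estimated. The coefficients and function names are hypothetical and are not estimates from this study.

```python
def conditional_indirect_effect(a1, a3, b, w):
    """Indirect effect of X on Y through M at moderator value w,
    for M = a0 + a1*X + a2*W + a3*X*W and Y = c0 + c*X + b*M."""
    return (a1 + a3 * w) * b

def index_of_moderated_mediation(a3, b):
    """Change in the indirect effect per one-unit change in W."""
    return a3 * b

# Hypothetical coefficients (assumed for illustration only)
a1, a3, b = 0.2, 0.5, 0.4
low = conditional_indirect_effect(a1, a3, b, w=0)   # low expectancy violation
high = conditional_indirect_effect(a1, a3, b, w=1)  # high expectancy violation
index = index_of_moderated_mediation(a3, b)
print(f"low = {low:.2f}, high = {high:.2f}, index = {index:.2f}")
```

Under this assumption, the difference between the two conditional indirect effects equals the index multiplied by the difference in moderator values, so a confidence interval for the index that excludes zero indicates that the indirect effect depends on the moderator.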

Discussion

The results show that social-oriented chatbots can improve interaction satisfaction, trust, and patronage intention (H1). Compared with task-oriented chatbots, social-oriented chatbots improve warmth perception, which positively affects interaction satisfaction, trust, and patronage intention (H2a). However, the mediation effects of competence perception between communication style and interaction satisfaction, trust, and patronage intention are all non-significant (H2b). One explanation for these non-significant results is that the warmth dimension dominates the interaction between people and chatbots. Alternatively, the competence dimension may be more important in some specific contexts. For example, people tend to favor competence-related characteristics when making decisions about long-term goals.

Consistent with the prediction in H3a, when consumers’ expectations are violated to a higher degree, chatbots using a social-oriented (vs. task-oriented) communication style are more likely to produce higher interaction satisfaction, trust, and patronage intention. This is because the social-oriented communication style arouses consumers’ perception of warmth, thus improving their satisfaction, trust, and patronage intention. For consumers with low levels of expectancy violation, social-oriented communication does not increase warmth perception. The result for H3b is inconsistent with our prediction, possibly because of the helplessness or anger aroused by the service failure. Such emotions can be relieved by the warm signals conveyed by enthusiastic and passionate communication, whereas formal and mechanical communication makes it difficult to convey warmth-related signals. In particular, consumers who experience expectancy violations due to service failure find it difficult to pay attention to the signals related to mind perception conveyed by this communication.

General discussion, implications, limitations, and further research

General discussion

The interaction process with chatbots is an important driver of human-like characteristics. In real life, interactions between chatbots and consumers mainly involve human-like language. However, there is a need for further discussion as to which communication style chatbots should use or whether a specific communication style is effective for all consumers and all conditions. In this study, we aimed to investigate the impact of chatbot communication styles (task vs. social) on consumers’ interaction satisfaction and behavioral intentions. We focused on how consumers evaluate the warmth and competence of chatbot agents in the context of service failures.

The findings of this study show that attributing human communication to chatbots can lead people to show different mind perception tendencies toward chat agents. When a chatbot communicates in a warm and friendly way, participants evaluate it in a manner similar to interpersonal interaction. However, these evaluations depend on the extent of participants’ expectancy violations.

Firstly, a social-oriented communication style is more conducive to improving consumers’ interaction satisfaction, trust, and patronage intention. This is because chatbots can improve the consumption experience by conveying essential human qualities (Roy and Naidoo, 2021) and can alleviate the negative impact of service failure. In human-computer service interaction, consumers’ preexisting assumptions of homogeneity and stereotypes about chatbots are the main reasons chatbots are seen as lacking social and emotional value. A social-oriented communication style compensates for this shortcoming and meets consumers’ demand for social-emotional value, which is attributable to consumers’ warmth perception.

Secondly, warmth perception mediates the process by which the chatbot’s communication style affects consumers’ interaction satisfaction and behavioral intention. Interestingly, competence, the other dimension of mind perception, does not serve as a mediator explaining the influence of chatbots’ communication style on consumer behavior. This result is consistent with Chung et al. (2020). It also offers preliminary evidence that warmth dominates the interaction between people and chatbots in the context of service failures. However, the competence dimension is more important in certain situations and when dealing with a specific object. For example, when making decisions about long-term goals, people tend to favor competence-related characteristics (Roy and Naidoo, 2021). Moffett et al. (2021) illustrated in a study of corporate relations that the mediation effect of competence perception is insignificant, i.e., consumers often do not pay attention to the competence of enterprises in the initial stage of the relationship between consumers and enterprises. The stimulus in this study reflects this relationship.

Thirdly, this study found that consumers with high levels of expectancy violation are more likely to perceive warmth in a social-oriented communication style, which mitigates the negative impact of service failures. According to the “machine heuristic” concept, people’s expectations of AI agents can be met or violated depending on the situation, influencing their perceptions of these agents (Sundar, 2020). Social-oriented communication overturns the stereotype-based “machine heuristic” of AI. Particularly in a service failure, people can feel the emotion and contingency the chatbot conveys. When consumers’ expectations are violated to a higher degree, the interactive communication style is more likely to give them a perception of warmth from the chatbot agent, thus alleviating the resulting negative comments and dissatisfaction. By contrast, task-oriented chatbots may completely conform to the machine heuristic, and people may still consider such chatbot agents to be neutral, objective, unemotional, and mechanistic (Lew and Walther, 2023). Therefore, there will be little mind perception, and the degree of expectancy violation is less likely to be affected and thus to change people’s view of the chatbot.

Implications

In the field of chatbots, scholars advocate increasing users’ humanized perception of chatbots by studying more anthropomorphic design cues (Adam et al., 2021). First, this work enhances chatbot humanization by incorporating social interaction communication cues. The literature on chatbot anthropomorphism also provides insights into designing chatbot discourse and communication styles with human-like characteristics for future applications (Araujo, 2018; Thomas et al., 2018; Sundar et al., 2015). This study addresses a gap in human-computer interaction research on service failures by demonstrating that using a social communication style in chatbots makes them seem more human to consumers. This approach increases perceptions of warmth during service failures and reduces negative outcomes, such as consumer dissatisfaction and loss of interest in chatbot agents.

Second, while previous social cognitive literature has explored various moderators of warmth and competence preferences in different service environments (e.g., Roy and Naidoo, 2021; Pitardi et al., 2021), this study extends that research by demonstrating that the extent of expectation violation due to failure can further moderate perceived warmth and competence levels.

Third, the present study found that interaction style has implications for human-computer interaction design. The design of a chatbot’s communication style can be consistent with the overall positioning of the company. For example, service companies should bring more warmth to consumers, while consumers may consider technology-oriented companies to be more capable. Therefore, different styles of chatbots should be used for the specific images that different companies want to portray. However, after consumers have a failed shopping experience, the degree of their expectancy violation will determine the effectiveness of the chatbot style. Service failures trigger higher expectancy violations among consumers, so companies can focus on social orientation to enhance the warmth of interactions when deploying chatbot conversations; adopting chatbots with a social-oriented communication style could be an effective strategy. Matching the communication style to the expectancy violations caused by failure can thus generate a favorable evaluation and greater patronage intention. However, although chatbots with a social-oriented communication style can effectively alleviate consumers’ stressful encounters, they cannot completely solve consumers’ problems. Since current AI technology does not fully meet all needs, managers should assign human agents to handle complex emotional reactions. Consequently, managers should continue enhancing employee training and management while employing chatbots to support human agents, improving service quality.

Limitations and future research

By gaining a more detailed understanding of consumer behavior in the context of chatbot technology, this study offers new insights into using chatbots to handle service failures, thereby aiding retail and service companies in their marketing strategies. However, this study has limitations. First, it uses dialog screenshots as experimental stimuli, which, although validated by previous research (Chung et al., 2020; Youn and Jin, 2021), may not be as reliable as real-time chat. Future research should allow participants to interact with chatbots in real time within actual online service interfaces.

Second, this research manipulates chatbots’ communication styles as dichotomous variables. Research into chatbots’ communication styles indicates that the degree of social orientation is also likely to lead to inconsistent conclusions. Future research should explore how chatbots with varying degrees of social orientation affect consumers’ attitudes and behaviors.

Third, although this study discussed the impact of chatbot communication style on consumer satisfaction and behavior in the situation of chatbot service failure, we have only focused on the interaction initiated by consumers (i.e., consumer inquiries). Therefore, we can continue to explore the psychological and behavioral impact of the interaction initiated by chatbots on consumers in the future. For instance, “uninvited” interactions may threaten consumers’ perceived autonomy (Pizzi et al., 2021), and social-oriented communication styles may be seen as insincere, leading to feelings of disgust. Consequently, future research should focus on determining which type of chatbot is most suitable for specific interactions based on the context and characteristics involved.

Fourth, demographic variables are important factors influencing the adoption of chatbots. However, this study only asked the participants to report age and gender during the experiment. This limitation provides opportunities to investigate heterogeneity in chatbot adoption and in human-computer interaction more broadly.

Fifth, regarding sample size, this study collected a limited sample that, although it meets the minimum requirement for hypothesis testing, suggests that larger samples and multiple experiments would yield more robust and generalizable results. Another method to enhance the realism of interactions with chatbots is the Wizard-of-Oz (WoZ) experiment approach. Tsai et al. (2021) employed WoZ to simulate interactions between humans and chatbots, particularly investigating the role of affect in communications. In subsequent research, we will design multiple WoZ experiments to simulate real communications with chatbots more accurately.

Furthermore, this study manipulated consumers’ emotions in a specific service context (i.e., failed online shopping) to examine consumers’ reactions to the chatbot. However, research shows that the lack of emotion in chatbots is a potential factor that has a negative impact (Huang et al., 2019), and it is impossible to form independent opinions, which may be beneficial in a specific service context (i.e., neutral, low-emotional service scenarios). Future research can explore how chatbots with low or no emotions can improve consumers’ service experience in specific contexts. In conclusion, we should explore consumers’ potential benefits when interacting with service chatbots.

Data availability

To access raw data of the pretest and main experiment, please visit https://doi.org/10.7910/DVN/RVWOFC.

References

Adam M, Wessel M, Benlian A (2021) AI-based chatbots in customer service and their effects on user compliance. Electron Mark 31(2):427–445

Alvarado-Valencia JA, Barrero LH (2014) Reliance, trust and heuristics in judgmental forecasting. Comput Hum Behav 36:102–113

Araujo T (2018) Living up to the chatbot hype: the influence of anthropomorphic design cues and communicative agency framing on conversational agent and company perceptions. Comput Hum Behav 85:183–189

Ashktorab Z, Jain M, Liao QV, Weisz JD (2019) Resilient chatbots: repair strategy preferences for conversational breakdowns. In Proc. CHI conference on human factors in computing systems (pp 1–12)

Bagozzi RP, Belanche D, Casaló LV, Flavián C (2016) The role of anticipated emotions in purchase intentions. Psychol Mark 33(8):629–645

Belanche D, Casaló LV, Flavián C, Schepers J (2020) Robots or frontline employees? Exploring customers’ attributions of responsibility and stability after service failure or success. J Serv Manag 31(2):267–289

Bhattacherjee A (2002) Individual trust in online firms: scale development and initial test. J Manag Inf Syst 19(1):211–241

Breazeal C (2003a) Emotion and sociable humanoid robots. Int J Hum-Comput Stud 59(1–2):119–155

Breazeal C (2003b) Toward sociable robots. Robot Auton Syst 42(3–4):167–175

Burgoon M, Miller GR (2018) An expectancy interpretation of language and persuasion. In: Recent advances in language, communication, and social psychology (pp 199–229)

Cadotte ER, Woodruff RB, Jenkins RL (1987) Expectations and norms in models of consumer satisfaction. J Mark Res 24(3):305–314

Chang W, Kim KK (2022) Appropriate service robots in exchange and communal relationships. J Bus Res 141:462–474

Chattaraman V, Kwon WS, Gilbert JE, Ross K (2019) Should AI-based, conversational digital assistants employ social- or task-oriented interaction style? A task-competency and reciprocity perspective for older adults. Comput Hum Behav 90:315–330

Chen CF, Wang JP (2016) Customer participation, value co-creation and customer loyalty–a case of airline online check-in system. Comput Hum Behav 62:346–352

Chen N, Mohanty S, Jiao J, Fan X (2021) To err is human: tolerate humans instead of machines in service failure. J Retail Consum Serv 59:102363

Choi S, Mattila AS, Bolton LE (2021) To err is human (-oid): how do consumers react to robot service failure and recovery? J Serv Res 24(3):354–371

Chung M, Ko E, Joung H, Kim SJ (2020) Chatbot e-service and customer satisfaction regarding luxury brands. J Bus Res 117:587–595

Clark L, Pantidi N, Cooney O, Doyle P, Garaialde D, Edwards J, … & Cowan BR (2019) What makes a good conversation? Challenges in designing truly conversational agents. In Proc. CHI conference on human factors in computing systems (pp 1–12)

Crolic C, Thomaz F, Hadi R, Stephen AT (2022) Blame the bot: anthropomorphism and anger in customer–chatbot interactions. J Mark 86(1):132–148

Cuddy AJ, Fiske ST, Glick P (2008) Warmth and competence as universal dimensions of social perception: the stereotype content model and the BIAS map. Adv Exp Soc Psychol 40:61–149

Feine J, Gnewuch U, Morana S, Maedche A (2019) A taxonomy of social cues for conversational agents. Int J Hum Comput Stud 132:138–161

Følstad A, Nordheim CB, Bjørkli CA (2018) What makes users trust a chatbot for customer service? An exploratory interview study. Lect Notes Comput Sci 11193:194–208

Gambino A, Fox J, Ratan RA (2020) Building a stronger CASA: extending the computers are social actors paradigm. Hum Mach Commun 1:71–85

Go E, Sundar SS (2019) Humanising chatbots: the effects of visual, identity and conversational cues on humanness perceptions. Comput Hum Behav 97:304–316

Gray HM, Gray K, Wegner DM (2007) Dimensions of mind perception. Science 315(5812):619–619

Hayes AF (2017) Introduction to mediation, moderation, and conditional process analysis: a regression-based approach. Guilford publications

Honig S, Oron-Gilad T (2018) Understanding and resolving failures in human-robot interaction: literature review and model development. Front Psychol 9:861

Huang MH, Rust RT (2018) Artificial intelligence in service. J Serv Res 21(2):155–172

Huang MH, Rust R, Maksimovic V (2019) The feeling economy: managing in the next generation of artificial intelligence (AI). Calif Manag Rev 61(4):43–65

Huang YSS, Dootson P (2022) Chatbots and service failure: when does it lead to customer aggression. J Retail Consum Serv 68:103044

Iwamura Y, Shiomi M, Kanda T, Ishiguro H, Hagita N (2011) Do elderly people prefer a conversational humanoid as a shopping assistant partner in supermarkets? In Proc. 6th international conference on human-robot interaction (pp 449–456)

Jones-Jang SM, Park YJ (2023) How do people react to AI failure? Automation bias, algorithmic aversion, and perceived controllability. J Comput-Mediat Commun 28(1):zmac029

Joosten H, Bloemer J, Hillebrand B (2016) Is more customer control of services always better? J Serv Manag 27(2):218–246

Judd CM, James-Hawkins L, Yzerbyt V, Kashima Y (2005) Fundamental dimensions of social judgment: understanding the relations between judgments of competence and warmth. J Personal Soc Psychol 89(6):899–913

Keeling K, McGoldrick P, Beatty S (2010) Avatars as salespeople: communication style, trust, and intentions. J Bus Res 63(8):793–800

Kim J, Fiore AM, Lee HH (2007) Influences of online store perception, shopping enjoyment, and shopping involvement on consumer patronage behavior towards an online retailer. J Retail Consum Serv 14(2):95–107

Kim SY, Schmitt BH, Thalmann NM (2019) Eliza in the uncanny valley: Anthropomorphizing consumer robots increases their perceived warmth but decreases liking. Mark Lett 30:1–12

Lallemand C, Gronier G, Koenig V (2015) User experience: a concept without consensus? Exploring practitioners’ perspectives through an international survey. Comput Hum Behav 43:35–48

Lester J, Branting K, Mott B (2004) Conversational agents. The practical handbook of internet computing, 220–240

Lew Z, Walther JB (2023) Social scripts and expectancy violations: evaluating communication with human or AI Chatbot interactants. Media Psychol 26(1):1–16

Liew TW, Tan SM, Ismail H (2017) Exploring the effects of a non-interactive talking avatar on social presence, credibility, trust, and patronage intention in an e-commerce website. Hum Centr Comput Inf Sci 7:1–21

Lopatovska I, Williams H (2018) Personification of the Amazon Alexa: BFF or a mindless companion. In Proc. conference on human information interaction & retrieval (pp 265–268)

Lou C, Kang H, Tse CH (2022) Bots vs. humans: how schema congruity, contingency-based interactivity, and sympathy influence consumer perceptions and patronage intentions. Int J Advert 41(4):655–684

Moffett JW, Folse JAG, Palmatier RW (2021) A theory of multiformat communication: mechanisms, dynamics, and strategies. J Acad Mark Sci 49:441–461

Mozafari N, Weiger WH, Hammerschmidt M (2021) Trust me, I’m a bot–repercussions of chatbot disclosure in different service frontline settings. J Serv Manag 33(2):221–245

Nass C, Steuer J, Tauber ER (1994) Computers are social actors. In Proc SIGCHI conference on Human factors in computing systems (pp 72–78)

Ostrom AL, Fotheringham D, Bitner MJ (2019) Customer acceptance of AI in service encounters: understanding antecedents and consequences. Handb Serv Sci II:77–103

Pavone G, Meyer-Waarden L, Munzel A (2023) Rage against the machine: experimental insights into customers’ negative emotional responses, attributions of responsibility, and coping strategies in artificial intelligence–based service failures. J Interact Mark 58(1):52–71

Pitardi V, Wirtz J, Paluch S, Kunz WH (2021) Service robots, agency and embarrassing service encounters. J Serv Manag 33(2):389–414

Pizzi G, Scarpi D, Pantano E (2021) Artificial intelligence and the new forms of interaction: Who has the control when interacting with a chatbot? J Bus Res 129:878–890

Prentice C, Nguyen M (2020) Engaging and retaining customers with AI and employee service. J Retail Consum Serv 56:102186

Przegalinska A, Ciechanowski L, Stroz A, Gloor P, Mazurek G (2019) In bot we trust: a new methodology of chatbot performance measures. Bus Horiz 62(6):785–797

Rapp A, Curti L, Boldi A (2021) The human side of human-chatbot interaction: a systematic literature review of ten years of research on text-based chatbots. Int J Hum-Comput Stud 151:102630

Reeves B, Nass C (1996) The media equation: how people treat computers, television, and new media like real people and places. Cambridge University Press

Roy R, Naidoo V (2021) Enhancing chatbot effectiveness: the role of anthropomorphic conversational styles and time orientation. J Bus Res 126:23–34

Ruan Y, Mezei J (2022) When do AI chatbots lead to higher customer satisfaction than human frontline employees in online shopping assistance? Considering product attribute type. J Retail Consum Serv 68:103059

Sheehan B, Jin HS, Gottlieb U (2020) Customer service chatbots: anthropomorphism and adoption. J Bus Res 115:14–24

Shumanov M, Johnson L (2021) Making conversations with chatbots more personalized. Comput Hum Behav 117:106627

Skjuve M, Følstad A, Fostervold KI, Brandtzaeg PB (2022) A longitudinal study of human–chatbot relationships. Int J Hum Comput Stud 168:102903

Sundar A, Noseworthy TJ (2016) Too exciting to fail, too sincere to succeed: the effects of brand personality on sensory disconfirmation. J Consum Res 43(1):44–67

Sundar SS (2020) Rise of machine agency: a framework for studying the psychology of human-AI interaction (HAII). J Comput-Mediat Commun 25(1):74–88

Sundar SS, Go E, Kim HS, Zhang B (2015) Communicating art, virtually! Psychological effects of technological affordances in a virtual museum. Int J Hum Comput Interact 31(6):385–401

Thomas P, Czerwinski M, McDuff D, Craswell N, Mark G (2018) Style and alignment in information-seeking conversation. In Proc. conference on human information interaction & retrieval (pp 42–51)

Tsai WHS, Liu Y, Chuan CH (2021) How chatbots’ social presence communication enhances consumer engagement: the mediating role of parasocial interaction and dialogue. J Res Interact Mark 15(3):460–482

Tsai WHS, Lun D, Carcioppolo N, Chuan CH (2021) Human versus chatbot: understanding the role of emotion in health marketing communication for vaccines. Psychol Mark 38(12):2377–2392

Tuzovic S, Kabadayi S (2021) The influence of social distancing on employee well-being: a conceptual framework and research agenda. J Serv Manag 32(2):145–160

Van Dolen WM, Dabholkar PA, De Ruyter K (2007) Satisfaction with online commercial group chat: the influence of perceived technology attributes, chat group characteristics, and advisor communication style. J Retail 83(3):339–358

Van Doorn J, Mende M, Noble SM, Hulland J, Ostrom AL, Grewal D, Petersen JA (2017) Domo arigato Mr. Roboto: emergence of automated social presence in organizational frontlines and customers’ service experiences. J Serv Res 20(1):43–58

van Pinxteren MME, Pluymaekers M, Krispin A, Lemmink J (2023) Effects of communication style on relational outcomes in interactions between customers and embodied conversational agents. Psychol Mark 40(5):938–953

Wang X, Nakatsu R (2013) How do people talk with a virtual philosopher: log analysis of a real-world application. In Proc. 12th international conference on entertainment computing–ICEC 2013: ICEC 2013, São Paulo, Brazil, October 16–18, 2013. Proceedings 12 (pp 132–137). Springer Berlin Heidelberg

Waytz A, Epley N, Cacioppo JT (2010) Social cognition unbound: Insights into anthropomorphism and dehumanization. Curr Direct Psychol Sci 19(1):58–62

Westerman D, Edwards AP, Edwards C, Luo Z, Spence PR (2020) I-it, I-thou, I-robot: the perceived humanness of AI in human-machine communication. Commun Stud 71(3):393–408

Webster C, Sundaram DS (2009) Effect of service provider’s communication style on customer satisfaction in professional services setting: the moderating role of criticality and service nature. J Serv Mark 23(2):103–113

Williams KC, Spiro RL (1985) Communication style in the salesperson-customer dyad. J Mark Res 22(4):434–442

Wirtz J, Patterson PG, Kunz WH, Gruber T, Nhat LV, Paluch S, Martins A (2018) Brave new world: service robots in the frontline. J Serv Manag 29(5):907–931

Yang LW, Aggarwal P, McGill AL (2020) The 3C’s of anthropomorphism: connection, comprehension, and competition. Consum Psychol Rev 3(1):3–19

Youn S, Jin SV (2021) “In AI we trust?” The effects of parasocial interaction and technopian versus luddite ideological views on chatbot-based customer relationship management in the emerging “feeling economy”. Comput Hum Behav 119:106721

Author information

Contributions

Conceptualization, N.C. and J.Y.; methodology, N.C. and J.Z.; software, J.J.; validation, N.C. and J.Y.; formal analysis, N.C.; investigation, J.Y.; data curation, N.C.; writing—original draft preparation, N.C., G.S., and J.Y.; writing—review and editing, N.C., G.S., and J.Y.; supervision, J.Y.; project administration, J.Y. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethical approval

The Ethical Committee of Changzhou Vocational Institute of Mechatronic Technology (CZIMT-JY202308) reviewed and approved the experiment. All procedures implemented in the study adhered to the principles of the Declaration of Helsinki.

Informed consent

All participants voluntarily provided informed consent to take part in the experiment. Experiment information was provided via an online survey sheet. Before the experiment, all respondents provided the electronic version of informed consent by selecting the accept option.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Cai, N., Gao, S. & Yan, J. How the communication style of chatbots influences consumers’ satisfaction, trust, and engagement in the context of service failure. Humanit Soc Sci Commun 11, 687 (2024). https://doi.org/10.1057/s41599-024-03212-0
