Abstract

Recent decades have seen widespread efforts to improve the generation and use of evidence across a number of sectors. Such efforts can be seen to raise important questions about how we understand not only the quality of evidence, but also the quality of its use. To date, though, there has been wide-ranging debate about the former, but very little dialogue about the latter. This paper focuses on the question of how to conceptualise the quality of research evidence use. Drawing on a systematic review and narrative synthesis of 112 papers from health, social care, education and policy, it presents six initial principles for conceptualising quality use of research evidence. These concern taking account of: the role of practice-based expertise and evidence in context; the sector-specific conditions that support evidence use; how quality use develops and can be evaluated over time; the salient stages of the research use process; whether to focus on processes and/or outcomes of evidence use; and the scale or level of the use within a system. It is hoped that this paper will act as a stimulus for future conceptual and empirical work on this important, but under-researched, topic of quality of use.

Introduction

Over the past two decades, there have been widespread efforts to improve the generation and use of evidence across a number of sectors. Boaz and Nutley’s (2019) edited collection, for example, charts developments within the fields of health, social care, education, environmental and sustainability issues, and international development. The authors describe how there is now ‘a diverse landscape of initiatives to promote evidence use’ ranging from efforts to improve research generation and dissemination through to activities to build practitioners’ capacity to use research, foster collaborations between researchers and research users, and develop system-wide approaches to evidence use (Boaz and Nutley, 2019, p. 261).

These kinds of initiatives, which are all focused on improving evidence use in some way, can be seen to raise important questions about how we understand and conceptualise quality of evidence use. As we see it, improved evidence use will require clarity about not only what counts as quality evidence, but also what counts as quality use. To date, there has been wide-ranging debate about the former, but very little dialogue about the latter. There is a well-developed literature around understanding and appraising the quality of different kinds of evidence (e.g., Cook and Gorard, 2007; Nutley et al., 2013; Puttick, 2018), but little in the way of an equivalent for understanding and appraising the quality of different kinds of use.

We see this as problematic. Most fundamentally, it fails to challenge the tendency for efforts to improve evidence use to focus more on the communication and synthesis of research findings and less on supporting the uptake and application of such evidence for decision-making and implementation (e.g., Gough et al., 2018). This can lead to system-level developments focusing heavily on creating access to valid and reliable evidence but saying little about how to support intelligent use of that evidence. Similarly, it can give rise to evidence use guides that provide detailed advice on how to identify the best evidence, but little information about how to make the best use of that evidence.

Against this backdrop, this paper focuses on the question of how to conceptualise the quality of research evidence use. In other words, this paper is concerned with how to approach the task of articulating what it means to use research evidence well. It comes out of ongoing work in Australia, the Monash Q Project, that is focused on this issue of ‘quality use of research evidence’ within the field of education. This paper shares insights from the first phase of this project, which involved a cross-sector systematic review and narrative synthesis of 112 relevant publications from health, social care, policy and education. The aim was to explore if and how quality of research evidence use had been defined and described within each of these sectors, as part of developing a quality use framework for Australian educators.

Drawing on the cross-sector review, this paper shares insights that emerged from within and across the sectors in relation to conceptualising quality use of research evidence. Based on these insights, we then draw out a series of initial principles for making sense of quality of research evidence use. The ideas underlying these initial principles have prompted important discussions and decisions in constructing our own framework for quality use of research in education (Rickinson et al., 2020). Our purpose here is not to present that framework, but rather to share the insights that informed it in a way that could be helpful for others who are seeking to better understand evidence use in other sectors. We are conscious that ‘existing lessons about how to do and use research well are not shared’ effectively between disciplines and policy/practice domains (Oliver and Boaz, 2019, p. 1). It is hoped that this paper will act as a stimulus for future conceptual and empirical work on this important, but under-researched, topic of quality of use.

We begin by outlining the aims of the Q Project and explaining the methodology of the cross-sector review. Then, we highlight key insights from the health, social care, education and policy sectors, identifying themes within and across the sectors. Based on these themes, we then put forward six initial principles for conceptualising quality of evidence use. We conclude by summarising the paper’s key arguments in relation to improving the use of evidence in policy and practice.

Q Project review methods

The Q Project is a 5-year initiative to understand and improve the use of research evidence in Australian schools. A partnership between Monash University and the Paul Ramsay Foundation, it involves close collaboration with teachers, school leaders, policy makers, researchers, research brokers and other key stakeholders across Australia. The project has four main strands:

- Strand 1: Conceptualisation of quality use (2019–2020). Synthesising insights relating to high-quality evidence use in health, social care, policy and education in order to develop a quality use of research evidence framework for Australian educators.

- Strand 2: School-based investigation of quality use (2020–2022). Examining the research use practices in 100 schools across four states to generate practical examples and empirical insights into high-quality research evidence use in varied settings.

- Strand 3: Development of improvement interventions (2021–2023). Co-designing and trialling, with groups of educators, interventions to support high-quality research evidence use in practice.

- Strand 4: Engagement and communication campaign (2019–2023). Bringing together key players within Australian education to spark strategic dialogue and drive system-level change around research use in education.

This paper presents the analysis coming out of the cross-sector review within Strand 1. The review was informed by principles of systematic reviewing, following a transparent method with clearly defined and documented searches, inclusion and exclusion processes, and a quality appraisal process (Gough et al., 2017). The review was guided by the following question to elicit ideas across three practice-based sectors (i.e., health, social care, education): How has ‘quality of evidence use’ been described and conceptualised across sectors? The cross-sector scope was motivated by arguments within the evidence use field about the value of looking across, and learning from, different policy areas and disciplines (Davies et al., 2019), coupled with our impression that understanding the quality of evidence use had not been a point of focus within the field of education.

While we acknowledge the use of a broad range of evidence sources in practice, our project is concerned with research evidence, that is, evidence generated through systematic studies undertaken by universities or research organisations (Nelson et al., 2017). The review identified papers that were generally reflective of this type of evidence, though some also included broader sources. We therefore use the terms ‘research evidence’ and ‘quality use of research evidence’ throughout this paper, apart from where we are drawing on or describing ideas from prior work that used the more general terms of ‘evidence’ and ‘evidence use’.

The review process was developed in consultation with experts from a variety of fields, including systematic reviews, information science, evidence use, health, policy, social care and education. The design included a narrative synthesis of the included documents to accommodate the methodological diversity common in systematic reviews of social interventions (Gough et al., 2017; Popay et al., 2006).

Search strategy

The search methods included both database and informal searches (e.g., personal contacts, reference checks), with the latter included to ensure inclusion of quality sources that are often missed in traditional protocol-driven searches (Greenhalgh and Peacock, 2005). Based on the advice of sector experts, we selected databases specific to education (ERIC), health (Medline), and social care (Social Services Abstracts), along with the interdisciplinary PsycINFO database. Search terms were identified and tested through an initial review of the research and in consultation with the Monash University research librarians and database platform information scientists. We focused on works related to ‘evidence use and research use’ and ‘quality of use’, and included topics around evaluation, development and improvement of evidence use, at and across the individual, organisational and system levels. We adapted the search strings for each database using the following combinations of keywords:

- ‘evidence use’ OR ‘evidence based’ OR ‘evidence informed’ OR ‘use of evidence’;

- ‘research use’ OR ‘research engagement’ OR ‘research literacy’ OR ‘research utili*’ OR ‘use of research’ OR ‘research implementation’ OR ‘implementation of research’; and

- abilit* OR adapt* OR aptitude OR ‘best practice’ OR capabilit* OR competence OR ‘deep’ OR shallow OR effectiv* OR expertise* OR experience* OR quality OR innovat* OR intelligent OR ‘knowledge level’ OR ‘novice expert use’ OR novice OR expert OR professional OR skill* OR thoughtful OR wise.
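As a rough illustration of how keyword groups like those listed above could be assembled into a single Boolean search string, the following Python sketch may be helpful. It is not the search syntax actually used in the review. The function names `or_group` and `build_query` are hypothetical, and the way the groups are combined (either use-related group, AND the quality-related group) is an assumption, since the text does not state how the three groups were joined or how the strings were adapted for each database.

```python
# Illustrative sketch only: the review does not report exact database syntax.
# Keyword lists are copied from the text; everything else is an assumption.

EVIDENCE_USE = ["evidence use", "evidence based", "evidence informed", "use of evidence"]

RESEARCH_USE = [
    "research use", "research engagement", "research literacy", "research utili*",
    "use of research", "research implementation", "implementation of research",
]

QUALITY = [
    "abilit*", "adapt*", "aptitude", "best practice", "capabilit*", "competence",
    "deep", "shallow", "effectiv*", "expertise*", "experience*", "quality",
    "innovat*", "intelligent", "knowledge level", "novice expert use", "novice",
    "expert", "professional", "skill*", "thoughtful", "wise",
]


def or_group(terms):
    """Join terms with OR, wrapping multi-word phrases in double quotes."""
    quoted = [f'"{t}"' if " " in t else t for t in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_query(evidence_terms, research_terms, quality_terms):
    """Combine the three keyword groups into one Boolean string.

    ASSUMPTION: (evidence-use terms OR research-use terms) AND quality terms.
    Real databases differ in how they handle phrases and truncation, so the
    output would need adapting before use on any given platform.
    """
    use_block = "(" + or_group(evidence_terms) + " OR " + or_group(research_terms) + ")"
    return use_block + " AND " + or_group(quality_terms)


if __name__ == "__main__":
    print(build_query(EVIDENCE_USE, RESEARCH_USE, QUALITY))
```

Keeping each keyword group as a separate list makes it straightforward to test one group at a time, or to swap the combining operator if a database requires a different structure.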

We included research (conceptual or empirical) and professional (policy or practice) publications. Research publications included journal articles, research reports, research summaries, research syntheses, and research books and chapters. Professional publications included policy documents, practice guides, professional frameworks, and quality models/indicators. We included works published in English, with an emphasis on Australia, New Zealand, Canada, the USA and the UK. To ensure the identification of the largest number of papers in this emerging field, we did not specify date restrictions. We did not include publications focusing on topics such as the use of data (as opposed to the use of research), awareness of research (as opposed to use of research), and the quality of evidence (as opposed to quality of its use). We conducted the search between April and July 2019.

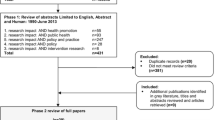

The search yielded 10,813 research and professional publications from the four databases. The titles and abstracts were exported from EndNote to Covidence for double screening, resulting in 268 included papers that were retrieved as full-text documents (Fig. 1).

The informal searches from the internet, personal contacts and reference checks generated 175 additional documents. Internet searches involved Google, Google Scholar, and targeted searches of relevant organisational websites. Personal contacts involved both personal and survey-type requests to key international researchers and brokers within education, health, and social care. Reference list checks were conducted by the research team based on key references identified in the initial set of included papers.

Synthesis methods

Preliminary analysis involved data extraction and appraisal of the initial set of included documents (i.e., 268 + 175). The papers were organised by sector and validated through a series of moderation processes by the four members of the research team. This approach was suitable for studies involving diverse implementation and mixed method approaches (Popay et al., 2006), given the challenges in achieving consensus for quality criteria (Dixon-Woods et al., 2005). The categories used to organise the data were descriptive (e.g., aim, methodology, findings, themes) (Gough et al., 2017). During this process, there was a large number of documents related to policy, resulting in its establishment as an additional sector. Given that policy emerged as an additional sector, its included papers were not representative of the sector per se, but of the general search strategy.

During the moderation processes, the research team ranked the papers according to relevance to quality evidence use. The decision to exclude papers at this point was based on discussions around their fitness for purpose. In other words, because there were few publications that explicitly focused on quality of use, many of our inclusion/exclusion decisions required careful consideration about whether a publication addressed issues of quality of use implicitly or indirectly. Disagreements were resolved through consensus. We chose to analyse and synthesise the included publications by these four sectors because we were interested in understanding if and how discussions and ideas relating to quality of use had played out within each of the sectors.

This process resulted in the selection of 112 publications for in-depth analysis and synthesis, including 30 from health, 29 from social care, 31 from education and 22 from policy (see Supplementary Information). These included papers were the basis of four narrative syntheses, drafted as text-based documents of 6000–12,000 words in length. As an additional moderation process, the non-education narratives underwent a review by sector experts in health, social care, and policy for their feedback. The feedback was supportive of the general conclusions offered by the respective syntheses, and additional seminal papers were suggested for possible inclusion and consideration. The narratives underwent additional analyses to inform the development of the quality use of research evidence framework for education. For the purposes of this paper, we draw on a thematic analysis of these four narratives to identify emerging insights around the conceptualisation of quality of research evidence use. In the following two sections, we outline issues and insights that emerged from within each of the sectors, and then consider insights and themes that cut across the sectors.
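The counts reported in this section can be cross-checked with a short tally. The Python sketch below uses only the figures stated in the text (10,813 database records, 268 full-text papers, 175 informal additions, and the four sector totals). The function name `screening_summary` and the dictionary keys are hypothetical and are introduced purely for illustration.

```python
def screening_summary():
    """Tally the screening figures reported in the text."""
    database_hits = 10_813           # records from the four databases
    full_text_from_databases = 268   # retained after title/abstract screening
    informal_additions = 175         # internet, personal contacts, reference checks
    final_by_sector = {
        "health": 30,
        "social care": 29,
        "education": 31,
        "policy": 22,
    }

    # Initial set of included documents before relevance ranking (268 + 175).
    initial_pool = full_text_from_databases + informal_additions
    # Publications selected for in-depth analysis and synthesis.
    final_total = sum(final_by_sector.values())

    return {
        "database_hits": database_hits,
        "initial_pool": initial_pool,
        "final_total": final_total,
        "final_by_sector": final_by_sector,
    }


if __name__ == "__main__":
    summary = screening_summary()
    print(summary["initial_pool"])  # 443
    print(summary["final_total"])   # 112
```

The sector totals sum to the 112 publications reported, and the initial pool of 443 documents matches the 268 + 175 noted under the synthesis methods.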

Findings: insights from within the sectors

Across all sectors, there were very few publications that discussed quality of use specifically and there was a lack of clear definitions and descriptions of quality use either as a concept or as a practice. Each of the four sector narratives, however, provided helpful insights into the nature and development of evidence use within that sector, which in turn had implications for how quality of research evidence use might be conceptualised (Table 1). Each of the sectors is discussed below in terms of ideas and approaches that had some connection to quality of use. It should be noted, though, that it is not possible within the context of this paper to provide a detailed account of the historical development of evidence use within each sector.

Health sector

The health synthesis consisted of 30 theoretical and empirical papers encompassing the health fields (e.g., medicine, nursing, psychology, public health, mental health, occupational therapy, health care policy), with a date range from 1996 to 2018. Most of the papers proposed frameworks and models of research use around some or all stages of the research use process (i.e., identifying the problem, accessing and interpreting the evidence, decision-making, implementation, outcomes, and evaluation). Some provided empirical support through case study comparisons, surveys and interviews. The remaining papers provided elaborations around the nature and characteristics of users and systems.

There was a range of terms relating to evidence use within the health sector literature, including evidence-based practice, evidence-based decision-making, knowledge-translation, knowledge to action, and research use and implementation (Adams and Titler, 2013). Quality evidence use was implied through the many frameworks, which oriented toward improved outcomes for patients. One of the most enduring conceptualisations of evidence use in the health sector was that put forward by Sackett and colleagues (1996). They described evidence-based medicine as the ‘conscientious, explicit, and judicious use of current best evidence in making decisions about the care of the individual patient. The practice… means integrating individual clinical expertise with the best available external clinical evidence from systematic research’ (p. 71). Papers across the health sector have emphasised this interplay of clinical judgement (Bannigan, 2007; Craik and Rappolt, 2006; Hogan and Logan, 2004) or tacit (i.e., practical, experiential) knowledge (Gabbay and LeMay, 2004; Ward et al., 2010) with the evidence.

Evidence use was largely conceptualised as a dynamic process, challenging traditional notions of deterministic and linear knowledge transfer processes (Adams and Titler, 2013; Chambers et al., 2013; Ward et al., 2010). The literature presented a number of frameworks accounting for the complex and interactive processes with the evidence, the context, and the actors at all stages of the evidence use cycle (e.g., Abell et al., 2015; Kitson et al., 1998; Greenhalgh et al., 2004). Building on the best practices across the health disciplines, two papers developed new interdisciplinary frameworks (Hogan and Logan, 2004; Satterfield et al., 2009). As a way to differentiate knowledge to action frameworks, Davies et al. (2011) distinguished those that implemented codified knowledge (e.g., clinical guidelines) from those that focused on learning how to change practice, calling for different implementation strategies.

The remaining papers elaborated on individual and organisational capacity to support research use across the health fields. Each paper involved different stages of the research use process, and focused on capacity in terms of: individual-level skills (Baker-Ericzén et al., 2015; Craik and Rappolt, 2006; Mallidou et al., 2018); decision-making processes (Alonso-Coello et al., 2016; Chalmers et al., 2018; Gabbay and LeMay, 2004; Meyers et al., 2012; Stetler, 2001), and organisational-level support (Brennan et al., 2017; Dobrow et al., 2006; Leeman et al., 2017).

The key recurring insight within the health sector literature was the way in which evidence use is a dynamic and complex undertaking at every stage in the process. As shown in Table 1, this observation has implications for how quality of research evidence use might be conceptualised. Given the complex interactions at each stage of the process, for example, any understanding of quality of use needs to be based on a clear determination of the scope of the research use process. Alongside this, there is a need to understand the interactions of the actors with the evidence in context, and the individual and organisational capacity to support research use.

Social care sector

The social care synthesis consisted of 29 publications with a date range from 2003 to 2019. Most involved discussions relating to decision-making and implementation frameworks, with some focusing on success factors and barriers. There were also papers about practice approaches, evidence-use culture, scale construction and ethics. Evidence use was described as knowledge sharing and exchange (Austin et al., 2012; Morton and Seditas, 2018), evidence-informed practice or social work (Austin et al., 2012; Graaf and Ratliff, 2018), evidence-based practice (Cunningham and Duffee, 2009), evidence-based interventions (Gambrill, 2018), and empirically supported treatments (Graaf and Ratliff, 2018), among others.

The literature presented a number of models and frameworks oriented towards two broad themes. The first involved models and frameworks that focused on the need to consider evidence use in relation to practitioner expertise, contextual factors and client needs (e.g., Anderson, 2011; Bridge et al., 2008; Rosen, 2003). The second involved frameworks that delineated between process-oriented and product- or outcomes-oriented evidence use approaches (e.g., Drisco and Grady, 2015; Ghate and Hood, 2019; Okpych and Yu, 2014). While it was implicit that evidence use results in more effective and efficient practice, there was a lack of consistency regarding what constitutes effective evidence use, as well as what capabilities were needed to connect evidence with treatment to effect (Cunningham and Duffee, 2009; Epstein, 2009). Neither a process focus nor a product focus was thought to be ideal by itself in social care contexts, and this acted as the rationale for the myriad frameworks, models, and perspectives proposed in the literature (Graaf and Ratliff, 2018; Keenan and Grady, 2014).

Similar to the health sector, the social care literature highlighted the need to understand the relationship of the evidence with practitioner expertise, the context, and the needs of the client/case, with tensions around the need to focus on process or product. Thus, in conceptualising quality use of research evidence, there is a need to understand the complex interplay of research evidence, practitioner expertise, context and client/case needs, as well as the kinds of practitioner capabilities that are needed to use research evidence effectively.

Education sector

The education synthesis consisted of 31 publications dating from 2009 to 2019. Most involved frameworks with a focus on processes and measurements enabling evidence use. The others oriented around the nature of evidence use, along with enablers and barriers. Similar to the other sectors, there was no clear conceptualisation of quality evidence use, with quality inferred through individual and organisational processes involving the effective (Brown et al., 2017; Godfrey, 2019; Nelson and O’Beirne, 2014), thoughtful (Park, 2018), productive (Earl and Timperley, 2009), meaningful (Farley-Ripple et al., 2018), or discerning (Evans et al., 2017) use of evidence.

Evidence use in this sector was largely described as evidence-informed practice, highlighting the need for evidence to be considered in relation to contextual and other practice-related factors, challenging the notion that practice should flow directly from research (Brown and Rogers, 2015). Further, evidence needs to be ‘contextualised and combined with practice-based knowledge (i.e., transformed) as part of a wider collaborative professional/social learning process’ by practitioners (Greany and Maxwell, 2017, p. 4). Coldwell et al. (2017) noted that teachers preferred the term evidence-informed teaching to emphasise teaching as a complex, situated professional practice, drawing on ‘a range of evidence and professional judgement, rather than being based on a particular form of evidence’ (p. 5).

The education literature indicated a number of enablers to support evidence use across the system. Two research reviews identified the role of leadership, professional development, attitudes, networks, standards and policies (Dyssegaard et al., 2017; Tripney et al., 2018). Practitioner-focused attributes included mindsets (e.g., Earl, 2015; Stoll et al., 2018a), research literacy (e.g., Evans et al., 2017; Nelson and O’Beirne, 2014; Park, 2018), critical thinking skills (e.g., Brown and Rogers, 2015; Earl and Timperley, 2009; Sharples, 2013), and collaboration (e.g., Bryk et al., 2011; Earl and Timperley, 2009; Greany and Maxwell, 2017). Organisational-focused attributes included leadership (e.g., Brown and Greany, 2018; Creaby et al., 2017; Coldwell et al., 2017), partnerships (e.g., Farley-Ripple et al., 2018; Nelson and Campbell, 2019), and professional learning (e.g., BERA, 2014; Mincu, 2013; Stoll et al., 2018b).

Similar to health and social care, the education literature emphasised the role of practitioner expertise in evidence use, along with key enablers at individual, organisational and system levels. In conceptualising quality use of research evidence, then, there is a need to understand the nature and role of practitioner knowledge and expertise in context. There is also a need to consider the enablers of research use within and across the education system.

Policy sector

As described earlier, policy emerged as an additional sector during the review process as it became clear that there were a number of publications about evidence use and its quality within policy-making contexts. The 22 included papers largely discussed evidence use in general, with some that focused on an evidence use governance framework, an evidence assessment framework and associated case studies, and standards of evidence and evidence use. Evidence use in policy-making contexts included a broad range of terms, such as research utilisation (Nutley et al., 2007), knowledge translation and uptake (Hawkins and Parkhurst, 2016), evidence-informed versus evidence-based policy-making (Hawkins and Parkhurst, 2016; Moore, 2006), knowledge co-production or transfer or exchange and mobilisation (Boaz and Nutley, 2019), knowledge-based policy or knowledge application (Nutley et al., 2010), and evidence dissemination (Moore, 2006), among others.

Overall, the literature acknowledged an increasing interest in and need for evidence to inform policy decisions. Yet, there were ‘crucial tensions’ in policy-making contexts between using the best evidence available and the ‘need for sufficient citizen engagement in or, at the very least, support for policies’ (Smith, 2017, p. 151). Much of the literature then discussed this tension and the challenges it represented, making reference to the political nature of policy-related decision-making and the need to situate evidence within complex contexts (Nutley et al., 2010; Nutley et al., 2007). It was generally recognised that nuanced approaches to evidence use were required to balance both the political situation and the issue (e.g., Parkhurst, 2017). There was also a need to consider the needs and aims of different stakeholders (e.g., Hawkins and Parkhurst, 2016), and the types and applicability of evidence to improve policy and decision-making (e.g., Breckon, 2016; Gluckman, 2011).

Quality evidence use was thus thought to be determined by the processes by which decisions were made and implemented (e.g., accountability, transparency), rather than by the outcomes produced from the policy (e.g., efficiency, effectiveness) (Boswell, 2014; Hawkins and Parkhurst, 2016; Parkhurst, 2017; Rutter and Gold, 2015). Rutter and Gold’s (2015) evidence assessment model linked the degree of transparency in decision-making to the likelihood of a given policy succeeding: ‘if it is not clear on what basis decisions have been made, it is impossible to judge the robustness of those decisions’ (p. 10). Hawkins and Parkhurst (2016) highlighted the need for accountability, transparency and contestability measures to maintain ‘democratic principles within processes of evidence utilisation’ (pp. 587–588).

Compared to the other sectors, the policy literature emphasised the complexity of policy contexts and the significance of the process (i.e., how decisions involving evidence are made). In conceptualising quality use of research evidence, then, it is important to consider the interaction of the policy situation, the policy issue, and the needs of different stakeholders, which together point to the importance of policy processes rather than policy outcomes in relation to evidence use.

Findings: cross-sector insights

As well as the above insights that arose within each of the four sectors, there were also important themes that cut across the sectors despite their distinctive evidence use perspectives and approaches. These cross-sector insights concerned the breadth of evidence, the role of practitioner expertise, approaches to evaluation and self-assessment and the value of systems perspectives (Table 2).

Breadth of evidence

Notwithstanding the distinctive development of evidence use approaches within the different sectors, there was a strong consensus across all sectors around the need to draw from a broad range of evidence to inform decisions. The health and social care literatures both highlighted challenges with ‘evidence hierarchies’ and called for a more inclusive view of practitioner-oriented evidence sources and research designs for ‘complex, frontline work’ (Epstein, 2009; Satterfield et al., 2009, p. 380). The health literature emphasised the role of a broad range of qualitative and patient-centred data, such as anecdotal information, diagnostic tests, and programme evaluations (e.g., Davies et al., 2011; Sackett et al., 1996; Stetler, 2001). Similarly, the social care literature called for the use of broad evidence sources (e.g., Austin et al., 2012; Graaf and Ratliff, 2018), such as client preferences, legal regulations and ethical guidelines (Gambrill, 2018; Schalock et al., 2011). Though the policy sector was largely concerned with the objectivity of the evidence (Langer et al., 2016; Levitt, 2013; Moore, 2006), diverse evidence sources were also noted such as programme evaluations, personal anecdotes, opinions and feedback (e.g., Breckon, 2016; Breckon, Hopkins, and Rickey, 2019). In the education sector, professional practice was seen to draw on ‘a range of evidence and professional judgement, rather than being based on a particular form of evidence’ (Brown et al., 2017; Coldwell et al., 2017, p. 12; Greany and Maxwell, 2017).

Practitioner expertise

Alongside breadth of evidence, the practice-based sectors of health, social care and education all emphasised the interaction of practitioner expertise with the evidence in context. In health, for example, the use of the term expertise referred to diverse processes such as: the confidence in skills to acquire, analyse and use research (Craik and Rappolt, 2006); a conscious critical thinking process (Stetler, 2001); and the explicit use of clinical judgement (Hogan and Logan, 2004) and tacit knowledge (Gabbay and LeMay, 2004; Ward et al., 2010). In social care, Epstein (2009) described expertise as the ability of practitioners to critically assess evidence types for value and case appropriateness. In education, the connection between research and data use was strongly linked with practical or tacit knowledge (BERA, 2014; Earl, 2015; Farley-Ripple et al., 2018; Greany and Maxwell, 2017). A strong theme was the need for practice-based evidence (e.g., professional judgement), research-based evidence (e.g., research studies), and data-based evidence (e.g., pupil-performance) to be used in combination (Nelson and Campbell, 2019).

As part of practitioner expertise, the practice-based sectors also identified a role for direct practitioner engagement in knowledge generation. In health, Chambers and Norton (2016) emphasised the need for practitioners’ ongoing adaptation of interventions to better suit the context, and for these changes to be shared in a research repository. In social care, Satterfield et al. (2009) highlighted the need for practitioners to use evidence ‘from practice-based research that examines practical problems found in social work practice’ (p. 378) and that data collection be undertaken by practitioners from multiple disciplines without formal academic credentials. The education sector has noted the long-standing tensions regarding the gap between research and practice, highlighting the need for more practitioner participation in knowledge generation processes (Brown and Greany, 2018; Farley-Ripple et al., 2017; Nelson and Campbell, 2019; Sharples et al., 2019).

Evaluation and self-assessment

All four sectors presented strategies to support the development and improvement of evidence use through a variety of evaluation and self-assessment processes. Baker-Ericzén and colleagues (2015) conceptualised a novice-to-expert model to support the development of clinical decision-making strategies in mental health. Brennan and colleagues (2017) designed and validated a self-report tool to monitor and provide feedback about individual capacity in research use for health policy makers. At the organisational level, there was a focus on building in evaluation and ongoing feedback mechanisms (Bannigan, 2007; Davies et al., 2011). Examples include evaluating implementation (Meyers et al., 2012) and continuous quality improvement over time (Chambers et al., 2013).

Along similar lines, the education sector featured evaluation strategies for individual and school-wide evidence use. These included individual measures of evidence use in practice based on level of expertise, from novice through to expert (e.g., Brown and Rogers, 2015), as well as continuous self-assessment of individual and school engagement in research use (e.g., Stoll et al., 2018a; 2018b). The notion of continuously improving school systems relied on ongoing evidence-informed reflective practices (e.g., Brown and Greany, 2018; Coldwell et al., 2017; EEF, 2019), as well as intentional links to whole-school improvement initiatives (e.g., Creaby et al., 2017; Sharples et al., 2019). Additionally, the policy sector focused on oversight of governance practices. Rutter and Gold (2015) developed a framework to assess the transparency of evidence use within different parts of a policy, while Hawkins and Parkhurst (2016) proposed a good governance approach to evidence use based on evidence appropriateness, accountability, transparency and contestability. As Parkhurst (2017, p. iii) explained, good governance of evidence is about ‘the use of rigorous, systematic and technically valid pieces of evidence within decision-making processes that are representative of, and accountable to, populations served’.

Systems perspectives

The value of systems perspectives was reflected in all four sectors. Many of the health frameworks featured complex and interactive processes involving the evidence, the context, and the actors at all stages of the evidence use cycle (e.g., Greenhalgh et al., 2004; Ward et al., 2010). Some extended this to accommodate ongoing evidence creation and adaptation within the research use process (e.g., Chambers and Norton, 2016; Graham et al., 2006). Bannigan (2007) called for systems perspectives to inform the future direction for health frameworks, particularly in relation to how different parts across the system are inter-related. The literature in the education sector also emphasised the need to focus on coordination across a ‘wide range of stakeholders—researchers, practitioners, policy makers and intermediaries’ for an ‘effective evidence ecosystem’ (e.g., Godfrey, 2019; Sharples, 2013, p. 24). This included embedding training in teacher education (e.g., Coldwell et al., 2017; Tripney et al., 2018); reflecting the role of evidence use in teacher certification (e.g., BERA, 2014; Tripney et al., 2018); and prioritising research use at the policy level (e.g., EEF, 2019; Park, 2018).

Along similar lines, the social care literature called for evidence use to be an inherent and continuously evolving practice across the system (Avby et al., 2014; Ghate and Hood, 2019). Ghate and Hood (2019) identified the need to understand social care from a ‘complex adaptive systems perspective’, which involved the interactions between people, technology and other factors in the environment (p. 101). In the policy literature, the complexity of temporal issues was highlighted, where evidence may be used at one point in time, but its quality and impact may not become apparent until some point in the future (Levitt, 2013). Conversely, the nature of politics is such that ‘policy makers react to major problems, formulate quick solutions to them, take decisions, implement these and then move on to the next set of problems’, so engagement with evidence may be limited by time and context (Moore, 2006, p. 9).

As indicated earlier in Table 2, these cross-sector insights can be seen to have implications for conceptualising quality research evidence use. The importance of drawing on a wide range of evidence makes clear the need to acknowledge such breadth and to determine which types of evidence are relevant in a given sector and context. The distinct nature of practice-based expertise and knowledge in context highlights not only the need to take account of such complexities, but also the need to specify and assess how research evidence use practices develop and improve over time at both the individual and organisational levels. Finally, the call for systems perspectives emphasises the need to consider the multilevel and interactive nature of research evidence use and how practices (and evidence) need to adapt to context over time. These implications helped us move towards some initial principles for conceptualising quality of research evidence use.

Towards principles for conceptualising quality use of research

The discussion so far has shown that understandings of how to define and frame quality of use are not well established in any of the four sectors examined. It has also become clear, however, that there are valuable insights and ideas within the health, social care, policy and education literatures that could inform and guide efforts to develop clearer specifications of what using research evidence well means and involves in different fields. With that in mind, we suggest that the cross-sector and within-sector insights emerging from our analysis of the literature can be combined into six initial principles for conceptualising quality of research evidence use.

These principles suggest that in conceptualising quality of research evidence use, there is a need to:

1. account for the role of practice-based expertise and evidence in context;

2. identify the sector-specific conditions that support evidence use;

3. consider how quality use develops, improves and can be evaluated over time;

4. determine the salient stages of the evidence use process;

5. consider whether to focus on processes and/or outcomes of evidence use; and

6. consider the scale or level of evidence use within a system.

The need to account for the role of practice-based expertise and evidence in context

This principle underlines the need for conceptions of quality to take careful account of the complexity of using evidence in contexts of professional practice. Central to this principle is the notion that evidence does not stand alone, in the sense that using it requires users who are able to exercise judgement based on experience and evidence, within a social context (Fazey et al., 2014). These interactions were particularly salient in the practice-based sectors, emphasising the social and practical role of such expertise. Ethnographic studies of medical practices identified the important role of more knowledgeable and experienced others in how evidence was taken up (Gabbay and le May, 2004). Similarly, within education, teachers have been shown to rely far more on their own or their colleagues’ tacit knowledge than on research evidence, when making decisions about teaching and learning (Levin, 2013; Walker et al., 2019).

Practitioner expertise is reflected in the more inclusive notion of ‘evidence-informed’ policy and practice, terminology that also recognises the role that other forms of knowledge have in understanding a socially complex world (Boaz et al., 2019). Expertise thus includes the ability to identify appropriate evidence from a broad range of sources, including practitioner-generated evidence. These ideas are consistent with the need to consider sector-specific differences in what constitutes quality evidence, the nature of the research processes, the policy and practice contexts and the resources available (Oliver and Boaz, 2019). The conceptualising of quality research evidence use thus requires an in-depth understanding of these highly nuanced interactions in a given practice.

The need to identify the sector-specific conditions that support evidence use

This principle reflects the idea that evidence use does not happen in a vacuum, but demands capacities and supports across individuals, organisations and systems. The literature in all four sectors demonstrated considerable consensus around key enablers for quality research evidence use. These ranged from individual attitudes and skills in using research and collaborating through to the organisational roles of leadership and the provision of resources, tools, and training (e.g., Austin et al., 2012; Mallidou et al., 2018; Tripney et al., 2018).

Importantly, these enablers were nuanced across the sectors. For example, the practice-based sectors called for direct participation by practitioners in research (e.g., Chambers and Norton, 2016; Nelson and Campbell, 2019). In contrast, insights gained from the policy sector concerned the need for policy makers to access research evidence and collaborate with researchers to support better decision-making (Smith, 2017). Further, certain enablers were linked with having particular benefits. For example, the education sector highlighted the central role of leadership in establishing a research-rich culture (e.g., Dyssegaard et al., 2017; Godfrey, 2019), developing teacher capacity, and ensuring supportive processes and resources (Brown and Greany, 2018; Coldwell et al., 2017).

Building on the notion that evidence use is a complex and situated process involving interconnected elements, these examples illustrate the need to identify and understand enablers in a given practice, and how they interact to support the conditions for quality research evidence use.

The need to consider how quality use develops, improves and can be evaluated over time

This principle acknowledges the dynamic nature of evidence use, and the need to take account of how quality can be developed, improved and evaluated over time. There were a number of improvement frameworks outlining sector-specific activities around evidence use, with their respective pathways toward improvement over time, consistent with the notion that research use is not a single event but a complex process that unfolds over time. Both the health and education sectors provided examples from cognitive-based developmental models involving novice to expert progressions, tailored to their respective contexts (Baker-Ericzén et al., 2015; Brown and Rogers, 2014; 2015).

Others drew from theories of change and developed progressions of context-appropriate activities. In the policy sector, for example, Rutter and Gold (2015) developed rich descriptions of key dimensions of transparent decision-making, evaluated over a three-point scale. In education, Stoll and colleagues (2018a; 2018b) developed an empirically informed self-assessment framework to assist teachers and schools with developing and evaluating key aspects of evidence-informed practice. Further, both the education and health literature emphasised the need for ongoing feedback to support continuous improvement at both the individual and organisational levels (e.g., Brown and Greany, 2018; Chambers et al., 2013; Sharples et al., 2019).

Taken together, these ideas all emphasise the importance of time as a dimension that is significant in thinking about the quality of research evidence use.

The need to determine the salient stages of the evidence use process

This principle takes account of the idea that there are different stages in using evidence, each with distinct purposes and processes. The cross-sector review highlighted a number of different stages in evidence use (e.g., identifying the problem, decision-making, implementation, evaluation). Several frameworks demonstrated the range of purposes and processes at different stages, impacting how quality research evidence use might be conceptualised.

In health, for example, there were certain frameworks that concentrated on decision-making while others focused specifically on the implementation stage. Satterfield and colleagues’ (2009) model for decision-making provided guidance for health practitioners to support high-quality care for patients, with consideration for the environment and organisational context that impacts on the uptake of a given intervention. In comparison, Kitson and colleagues’ (1998) multidimensional framework in health care considered evidence, context and facilitation as core elements for successful implementation, with specific indicators for each.

In education, Nelson and O’Beirne (2014) proposed a model for linking school-generated evidence processes with school improvement processes. Their model emphasised distinct processes related to a school enquiry cycle, including initial engagement with evidence to inform school improvement planning, implementation and evaluation activities. The evidence itself could be produced through a separate cycle and be integrated through school-level action research.

While not meant to be an exhaustive account, these examples serve to illustrate the point that using evidence can involve a number of different processes or stages, and the nature, sequence and significance of these stages can vary considerably between different contexts and sectors. Thus, in conceptualising quality research evidence use, there is a need to delineate the stages of the evidence use process that are pertinent and within scope.

The need to consider whether to focus on processes and/or outcomes of evidence use

This principle considers the need to determine whether the ‘quality of research evidence use’ is related to and reflected by the processes of the use and/or the outcomes of the use. In the policy sector, there was a clear emphasis on the processes of decision-making and implementation. Gluckman (2011, p. 7) pointed out that policy makers, advisors and decision-makers are accountable to an ‘increasingly informed, involved and vociferous society’, resulting in an emphasis on policy makers’ decision-making processes. These processes were tightly linked to the specific political environment, the policy issue in question, and the ways in which evidence is then identified, interpreted and used to inform those decisions (Hawkins and Parkhurst, 2016; Nutley et al., 2007; Parkhurst, 2017).

In contrast, the education sector indicated a greater desire to focus on outcomes, though there was a lack of evidence that strongly linked evidence-informed approaches to improved pupil or teaching outcomes (Coldwell et al., 2017; Greany and Maxwell, 2017; Nelson and O’Beirne, 2014). This was thought to be attributable both to evidence use being a growing field (Tripney et al., 2018) and to the research methods used to understand practice (Brown and Rogers, 2014; Levin, 2013). Coldwell et al. (2017) explained that ‘research is rarely “applied” in linear ways by teachers or schools’ resulting in ‘a tension between research that aims to demonstrate a causal link…but which inevitably simplifies the complexity of interpreting and applying evidence from one educational context to another, and research, which aims to find general approaches for improvement’ (pp. 22–23).

Given the challenges attributed to understanding evidence use in context, these examples demonstrate the need to consider how the focus on processes and/or outcomes would impact the conceptualisation of quality research evidence use.

The need to consider the scale or level of evidence use within a system

Any conceptualisation of quality of evidence use needs to engage with questions of scale and system influence. Engaging with questions of scale means thinking about how quality of research evidence use might need to look different, or have different emphases, at different levels of a system such as for individuals or teams or organisations or whole systems. Engaging with questions of system influence, meanwhile, is about thinking through how quality of research evidence use is enabled or eroded by the interplay of different influences across multiple levels of the system. Central to this principle is the notion that evidence generation and use needs to be understood as a complex process involving interconnected elements within an ‘evidence ecosystem’ (BERA, 2014; Boaz and Nutley, 2019; Sharples, 2013, p. 20).

As noted earlier, the importance of systems perspectives was a strong theme in the evidence use literature of all four sectors. This was reflected in frameworks and initiatives that encompassed different levels (e.g., individuals, groups, organisations), different actors (e.g., practitioners, policy makers, researchers) and different practices (e.g., evidence generation, synthesis, distribution). It was also evident in calls for evidence use improvement efforts to focus more deliberately on the interactions between the constituent parts, and to recognise the influence that wider political and societal systems can have on evidence ecosystems.

As with the preceding five principles, these two points come together to highlight an important complexity that is involved in making sense of quality of research evidence use—the need to consider issues of scale and system influence.

Conclusions

In his work on The Politics of Evidence, Parkhurst (2017, pp. 9–10) argues that improving the use of evidence in policy requires clarity about not only what constitutes ‘good evidence for policy’ but also what constitutes ‘good use of evidence’ from a policy perspective. We agree strongly with Parkhurst’s dual focus, but note that interest in the former has far outweighed debate about the latter.

Our purpose in this paper, then, has been to report the findings of a cross-sector review to understand if and how quality of evidence use has been defined and described within the health, social care, education and policy literature. While we found a lack of explicit articulations of quality of use across all sectors, there were important insights into the nature of evidence use within each sector, which we suggest can inform how quality of research evidence use might be conceptualised. More specifically, we have argued that efforts to make sense of quality of research evidence use need to engage with six important issues:

(1) the role of practice-based expertise and evidence in context;

(2) the sector-specific conditions that support evidence use;

(3) the development, improvement and evaluation of quality use over time;

(4) the stages of the evidence process that are within scope;

(5) the importance of evidence use processes and/or evidence use outcomes; and

(6) the scale or level of the evidence use within a wider system.

We have presented these issues as initial principles in order to reflect the emerging nature of the literature on quality of use and the early-stage nature of the principles that we have identified. While they have certainly proved helpful in our own process of developing a framework to define and elaborate the concept of ‘quality use of research evidence’ in education (Rickinson et al., 2020), these principles have not been operationalised or tested beyond our own work. It is hoped, though, that sharing them here will provide a stimulus for future conceptual and empirical work on this important, but under-researched, topic of quality of use. We see this as one possible focus for ‘new conversations’ about evidence use that take account of and build on the distinctive traditions and contemporary approaches of different disciplines and policy/practice domains (Oliver and Boaz, 2019, p. 1).

Data availability

Data sharing not applicable to this article as no datasets were generated or analysed during the current study.

References

Abell E, Cummings R, Duke A, Marshall J (2015) A framework for identifying implementation issues affecting extension human sciences programming. J Ext 53(5):1–15

Adams S, Titler M (2013) Implementing evidence-based practice. In: Foreman M, Mateo M (eds) Research for advanced practice nurses: from evidence to practice. Springer, pp. 321–350

Alonso-Coello P, Schünemann H, Moberg J, Brignardello-Petersen R, Akl E, Davoli M, Oxman A (2016) GRADE evidence to decision (etd) frameworks: a systematic and transparent approach to making well informed healthcare choices. BMJ 353:1–10. https://doi.org/10.1136/bmj.i2016

Anderson I (2011) Evidence, policy and guidance for practice: a critical reflection on the case of social housing landlords and antisocial behaviour in Scotland. Evid Policy 7(1):41–58. https://doi.org/10.1332/174426411X552990

Austin MJ, Dal Santo TS, Lee C (2012) Building organizational supports for research-minded practitioners. J Evid Base Soc Work 9(1-2):174–211. https://doi.org/10.1080/15433714.2012.636327

Avby G, Nilsen P, Dahlgren MA (2014) Ways of understanding evidence-based practice in social work: a qualitative study. Br J Soc Work 44:1366–1383. https://doi.org/10.1093/bsjw/bcs198

Baker-Ericzén M, Jenkins J, Park M, Garland M (2015) Clinical decision-making in community children’s mental health: using innovative methods to compare clinicians with and without training in evidence-based treatment. Child Youth Care Forum 44(1):133–157. https://doi.org/10.1007/s10566-014-9274-x

Brennan SE, Mckenzie JE, Turner T, Redman S, Makkar S, Williamson A, Haynes A, Green SE (2017) Development and validation of SEER (Seeking, Engaging with and Evaluating Research): a measure of policymakers’ capacity to engage with and use research. Health Res Policy Syst 15(1):1. https://doi.org/10.1186/s12961-016-0162-8

Bannigan K (2007) Making sense of research utilisation. In: Creek J, Lawson-Porter A (eds) Contemporary issues in occupational therapy: reasoning and reflection, John Wiley and Sons, pp. 189–215

Boaz A, Davies H, Fraser A, Nutley S (eds) (2019). What works now? Evidence-informed policy and practice. Policy Press

Boaz A, Nutley S (2019) Using evidence. In: Boaz A, Davies H, Fraser A and Nutley S (eds) What works now?: evidence-informed policy and practice. Policy Press, pp. 251–277

Boswell J (2014) ‘Hoisted with our own petard’: evidence and democratic deliberation on obesity. Pol Sci 47(4):345–365. https://doi.org/10.1007/s11077-014-9195-4

Breckon J (2016) Using research evidence: a practice guide. In: Alliance for Useful Evidence. https://media.nesta.org.uk/documents/Using_Research_Evidence_for_Success_-_A_Practice_Guide.pdf. Accessed 14 Aug 2020

Breckon J, Hopkins A, Rickey B (2019) Evidence vs democracy: how ‘mini-publics’ can traverse the gap between citizens, experts, and evidence. In: Alliance for useful evidence. https://www.alliance4usefulevidence.org/assets/2019/01/Evidence-vs-Democracy-publication.pdf. Accessed 14 Aug 2020

Bridge TJ, Massie EG, Mills CS (2008) Prioritizing cultural competence in the implementation of an evidence-based practice model. Child Youth Serv Rev 30:1111–1118. https://doi.org/10.1016/j.childyouth.2008.02.005

British Educational Research Association [BERA] (2014) Research and the teaching profession: building the capacity for a self-improving education system (final report). https://www.thersa.org/globalassets/pdfs/bera-rsa-research-teaching-profession-full-report-for-web-2.pdf. Accessed 14 Aug 2020

Brown C, Greany T (2018) The evidence-informed school system in England: where should school leaders be focusing their efforts? Leadership Pol School 17(1):115–137. https://doi.org/10.1080/15700763.2016.1270330

Brown C, Rogers S (2014) Measuring the effectiveness of knowledge creation as a means of facilitating evidence-informed practice in early years settings in one London borough. Lond Rev Educ 12(3):245–260. https://doi.org/10.18546/LRE.12.3.01

Brown C, Rogers S (2015) Knowledge creation as an approach to facilitating evidence informed practice: examining ways to measure the success of using this method with early years practitioners in Camden (London). J Educ Chang 16(1):79–99. https://doi.org/10.1007/s10833-014-9238-9

Brown C, Schildkamp K, Hubers MD (2017) Combining the best of two worlds: a conceptual proposal for evidence-informed school improvement. Educ Res 59(2):154–172. https://doi.org/10.1080/00131881.2017.1304327

Bryk AS, Gomez LM, Grunow A, Hallinan MT (2011) Getting ideas into action: building networked improvement communities in education. In: Hallinan M (ed) Frontiers in sociology of education, Springer, pp. 127–162. Accessed 14 Aug 2020

Chalmers I, Oxman A, Austvoll-Dahlgren A, Ryan-Vig S, Pannell S, Semakula D, Albarqouni L, Glasziou P, Mahtani K, Nunan D, Heneghan C, Badenoch D (2018) Key concepts for informed health choices: a framework for helping people learn how to assess treatment claims and make informed choices. BMJ Evid Base Med 23(1):29–33. https://doi.org/10.1136/ebmed-2017-110829

Chambers DA, Glasgow RE, Stange KC (2013) The dynamic sustainability framework: addressing the paradox of sustainment amid ongoing change. Implement Sci 8(1):117. https://doi.org/10.1186/1748-5908-8-117

Chambers DA, Norton WE (2016) The adaptome: advancing the science of intervention adaptation. Am J Prev Med 51(4 Suppl 2):S124–S131. https://doi.org/10.1016/j.amepre.2016.05.011

Coldwell M, Greany T, Higgins S, Brown C, Maxwell B, Stiell B, Stoll L, Willis B, Burns H (2017) Evidence-informed teaching: an evaluation of progress in England. https://www.gov.uk/government/publications/evidence-informed-teaching-evaluation-of-progress-in-england. Accessed 14 Aug 2020

Cook T, Gorard S (2007) What counts and what should count as evidence? In: Centre for Educational Research and Innovation (ed) Evidence in education: linking research and policy. OECD publishing, pp. 33–49. Accessed 14 Aug 2020

Craik J, Rappolt S (2006) Enhancing research utilization capacity through multifaceted professional development. Am J Occup Ther 60(2):155–164. https://doi.org/10.5014/ajot.60.2.155

Creaby C, Dann R, Morris A, Theobald K, Walker M, White B (2017) Leading research engagement in education–guidance for organisational change. http://www.cebenetwork.org/sites/cebenetwork.org/files/CEBE%20-%20Leading%20Research%20Engagement%20in%20Education%20-%20Apr%202017.pdf. Accessed 14 Aug 2020

Cunningham WMS, Duffee DE (2009) Styles of evidence-based practice in the child welfare system. J Evid Base Soc Work 6(2):176–197. https://doi.org/10.1080/15433710802686732

Davies H, Powell A, Smith S (2011) Supporting NHS Scotland in developing a new knowledge-to-action model. http://www.knowledge.scot.nhs.uk/media/CLT/ResourceUploads/4002569/K2A_Evidence.pdf. Accessed 14 Aug 2020

Davies H, Boaz A, Nutley S, Fraser A (2019) Conclusions: lessons from the past, prospects for the future. In: Boaz A, Davies H, Fraser A, Nutley S (eds) What works now? Evidence-informed policy and practice revisited. Policy Press, pp. 359–382

Dixon-Woods M, Agarwal S, Jones D, Young B, Sutton A (2005) Synthesising qualitative and quantitative evidence: a review of possible methods. J Health Serv Res Policy 10(1):45–53. https://doi.org/10.1177/135581960501000110

Dobrow MJ, Goel V, Lemieux-Charles L, Black NA (2006) The impact of context on evidence utilization: a framework for expert groups developing health policy recommendations. Soc Sci Med 63(7):1811–1824. https://doi.org/10.1016/j.socscimed.2006.04.020

Drisco JW, Grady MD (2015) Evidence-based practice in social work: a contemporary perspective. J Clin Soc Work 43:274–282. https://doi.org/10.1007/s10615-015-0548-z

Dyssegaard C, Egelund N, Sommersel H (2017) A systematic review of what enables or hinders the use of research-based knowledge in primary and lower secondary school. Danish Clearinghouse for Educational Research. https://www.videnomlaesning.dk/media/2176/what-enables-or-hinders-the-use-of-research-based-knowledge-in-primary-and-lower-secondary-school-a-systematic-review-and-state-of-the-field-analysis.pdf. Accessed 14 Aug 2020

Earl LM (2015) Reflections on the challenges of leading research and evidence use in schools. In: Brown CD (ed.) Leading the use of research and evidence in schools. Institute of Education Press, pp. 146–152

Earl LM, Timperley T (2009) Understanding how evidence and learning conversations work. In: Earl LM, Timperley T (eds) Professional learning conversations, Springer, pp. 1–12

Education Endowment Foundation (2019) The EEF guide to becoming an evidence-informed school governor and trustee. https://educationendowmentfoundation.org.uk/public/files/Publications/EEF_Guide_for_School_Governors_and_Trustees_2019_-_print_version.pdf. Accessed 14 Aug 2020

Epstein I (2009) Promoting harmony where there is commonly conflict: evidence-informed practice as an integrative strategy. Soc Work Health Care 48(3):216–231. https://doi.org/10.1080/00981380802589845

Evans C, Waring M, Christodoulou A (2017) Building teachers’ research literacy: integrating practice and research. Res Paper Educ 32(4):403–423. https://doi.org/10.1080/02671522.2017.1322357

Farley-Ripple E, Karpyn AE, McDonough K, Tilley K (2017) Defining how we get to research: a model framework for schools. In: Eryaman MY, Schneider B (eds) Evidence and public good in educational policy, research and practice, Springer, pp. 79–95

Farley-Ripple E, May H, Karpyn A, Tilley K, McDonough K (2018) Rethinking connections between research and practice in education: a conceptual framework. Educ Res 47(4):235–245. https://doi.org/10.3102/0013189X18761042

Fazey I, Bunse L, Msika J, Pinke M, Preedy K, Evely AC, Lambert E, Hastings E, Morris S, Reed MS (2014) Evaluating knowledge exchange in interdisciplinary and multi-stakeholder research. Glob Environ Change 25:204–220. https://doi.org/10.1016/j.gloenvcha.2013.12.012

Gabbay J, le May A (2004) Evidence based guidelines or collectively constructed ‘mindlines?’ Ethnographic study of knowledge management in primary care. BMJ 329(7473):1013–1016. https://doi.org/10.1136/bmj.329.7473.1013

Gambrill E (2018) Contributions of the process of evidence-based practice to implementation: educational opportunities. J Soc Work Educ 54(1):S113–S125. https://doi.org/10.1080/10437797.2018.1438941

Ghate D, Hood R (2019) Using evidence in social care. In: Boaz A, Davies H, Fraser A, Nutley S (eds), What works now? Evidence-informed policy and practice. Policy Press, pp. 89–109

Gluckman P (2011) Towards better use of evidence in policy formation: a discussion paper. Office of the Prime Minister’s Science Advisory Committee. https://www.pmcsa.org.nz/wp-content/uploads/11-06-01-IPANZ-IPS-Evidence-in-policy1.pdf. Accessed 14 Aug 2020

Godfrey D (2019) Moving forward–How to create and sustain an evidence-informed school eco-system. In: Godfrey D, Brown C (eds) An ecosystem for research-engaged schools. Routledge, pp. 202–219

Gough D, Maidment C, Sharples J (2018) UK what works centres: aims, methods and contexts. EPPI-Centre, Social Science Research Unit, UCL Institute of Education, University College London. https://discovery.ucl.ac.uk/id/eprint/10055465/1/UK%20what%20works%20centres%20study%20final%20report%20july%202018.pdf. Accessed 14 Aug 2020

Gough D, Oliver S, Thomas J (2017) An introduction to systematic reviews, 2nd edn, Sage

Graaf G, Ratliff GA (2018) Preparing social workers for evidence-informed community-based practice: an integrative framework. J Soc Work Educ 54(1):S5–S19. https://doi.org/10.1080/10437797.2018.1434437

Graham ID, Logan JB, Harrison ME, Straus S, Tetroe J, Caswell W, Robinson N (2006) Lost in knowledge translation: time for a map? J Contin Educ Health Prof 26(1):13–24. https://doi.org/10.1002/chp.47

Greany T, Maxwell B (2017) Evidence-informed innovation in schools: aligning collaborative research and development with high quality professional learning for teachers. Int J Innov Educ 4(2-3):147–170. https://doi.org/10.1504/IJIIE.2017.088095

Greenhalgh T, Robert G, Macfarlane F, Bate P, Kyriakidou O (2004) Diffusion of innovations in service organizations: systematic review and recommendations. Milbank Q 82(4):581–629. https://doi.org/10.1111/j.0887-378X.2004.00325

Greenhalgh T, Peacock R (2005) Effectiveness and efficiency of search methods in systematic reviews of complex evidence: audit of primary sources. BMJ 331:1064–1065. https://doi.org/10.1136/bmj.38636.593461.68

Hawkins B, Parkhurst J (2016) The ‘good governance’ of evidence in health policy. Evid Policy 12(4):575–592. https://doi.org/10.1332/174426415X14430058455412

Hogan DL, Logan J (2004) The Ottawa model of research use: a guide to clinical innovation in the NICU. Clin Nurse Spec 18(5):255–261. https://doi.org/10.1097/00002800-200409000-00010

Keenan EK, Grady MD (2014) From silos to scaffolding: engaging and effective social work practice. J Clin Soc Work 42:193–204. https://doi.org/10.1007/s10615-014-0490-5

Kitson A, Harvey G, McCormack B (1998) Enabling the implementation of evidence based practice: a conceptual framework. Qual Health Care 7(3):149–158. https://doi.org/10.1136/qshc.7.3.149

Langer L, Tripney J, Gough D (2016) The science of using science: researching the use of research evidence in decision-making. http://eppi.ioe.ac.uk/cms/Portals/0/PDF%20reviews%20and%20summaries/Science%202016%20Langer%20report.pdf?ver=2016-04-18-142701-867. Accessed 14 Aug 2020

Leeman J, Calancie L, Kegler M, Escoffery C, Herrmann A, Thatcher E, Fernandez M (2017) Developing theory to guide building practitioners’ capacity to implement evidence-based interventions. Health Educ Behav 44(1):59–69. https://doi.org/10.1177/1090198115610572

Levin B (2013) To know is not enough: research knowledge and its use. Rev Educ 1(1):2–31. https://doi.org/10.1002/rev3.3001

Levitt R (2013) The challenges of evidence: provocation paper for the alliance for useful evidence. NESTA. Alliance for Useful Evidence. https://www.alliance4usefulevidence.org/assets/The-Challenges-of-Evidence1.pdf. Accessed 14 Aug 2020

Mallidou A, Atherton P, Chan L, Frisch N, Glegg S, Scarrow G (2018) Core knowledge translation competencies: a scoping review. BMC Health Serv Res 18(1):1–15. https://doi.org/10.1186/s12913-018-3314-4

Meyers D, Durlak C, Wandersman J (2012) The quality implementation framework: a synthesis of critical steps in the implementation process. Am J Commun Psychol 50(3-4):462–480. https://doi.org/10.1007/s10464-012-9522-x

Mincu M (2013) BERA Inquiry paper 6. Teacher quality and school improvement: what is the role of research? https://doi.org/10.13140/RG.2.2.32009.24169. Accessed 14 Aug 2020

Moore P (2006) Iterative best evidence synthesis programme: Hei Kete Raukura. Evidence based policy project report, August 2006. Ministry of Education, Wellington, NZ

Morton S, Seditas K (2018) Evidence synthesis for knowledge exchange: balancing responsiveness and quality in providing evidence for policy and practice. Evid Policy 14(1):155–167. https://doi.org/10.1332/174426416X14779388510327

Nelson J, Campbell C (2019) Using evidence in education. In: Boaz A, Davies H, Fraser A, Nutley S (eds) What works now? Evidence-informed policy and practice revisited. Policy Press, pp. 131–149

Nelson J, Mehta P, Sharples J, Davey C (2017) Measuring teachers’ research engagement: findings from a pilot study. Education Endowment Foundation

Nelson J, O’Beirne C (2014) Using evidence in the classroom: what works and why? NFER. http://dera.ioe.ac.uk/id/eprint/27753. Accessed 14 Aug 2020

Nutley S, Morton S, Jung T, Boaz A (2010) Evidence and policy in six European countries: diverse approaches and common challenges. Evid Policy 6(2):131–144

Nutley S, Walter I, Davies HTO (2007) Using evidence: how research can inform public services. Policy Press

Nutley S, Powell A, Davies H (2013) What counts as good evidence? Alliance for Useful Evidence. NESTA. https://research-repository.st-andrews.ac.uk/bitstream/handle/10023/3518/What_Counts_as_Good_Evidence_published_version.pdf?sequence=1. Accessed 14 Aug 2020

Okpych NJ, Yu JL-H (2014) A historical analysis of evidence-based practice in social work: the unfinished journey toward an empirically grounded profession. Soc Serv Rev 88(1):3–58. https://doi.org/10.1086/674969

Oliver K, Boaz A (2019) Transforming evidence for policy and practice: creating space for new conversations. Palgrave Commun 5(1):1–10. https://doi.org/10.1057/s41599-019-0266-1

Park V (2018) Leading data conversation moves: toward data-informed leadership for equity and learning. Educ Admin Q 54(4):617–647. https://doi.org/10.1177/0013161X18769050

Parkhurst J (2017) The politics of evidence: from evidence-based policy to the good governance of evidence. Routledge

Popay P, Roberts H, Sowden A, Petticrew M, Arai L, Rodgers M, Britten N (2006) Guidance on the conduct of narrative synthesis in systematic reviews. Institute of Health Research. https://www.lancaster.ac.uk/media/lancaster-university/content-assets/documents/fhm/dhr/chir/NSsynthesisguidanceVersion1-April2006.pdf. Accessed 14 Aug 2020

Puttick R (2018) Mapping the standards of evidence used in UK social policy. https://media.nesta.org.uk/documents/Mapping_Standards_of_Evidence_A4UE_final.pdf. Accessed 14 Aug 2020

Rickinson M, Walsh L, Cirkony C, Salisbury M, Gleeson J (2020) Quality use of research evidence framework. https://www.monash.edu/education/research/projects/qproject/publications/quality-use-of-research-evidence-framework-qure-report. Accessed 14 Aug 2020

Rosen A (2003) Evidence-based social work practice: challenges and promise. Soc Work Res 27(4):197–208. https://doi.org/10.1093/swr/27.4.197

Rutter J, Gold J (2015) Show your workings: assessing how government uses evidence to make policy. Institute for Government. https://www.instituteforgovernment.org.uk/sites/default/files/publications/4545%20IFG%20-%20Showing%20your%20workings%20v7.pdf. Accessed 14 Aug 2020

Sackett D, Rosenberg W, Gray J, Haynes R, Richardson W (1996) Evidence based medicine: what it is and what it isn’t. BMJ 312(7023):71–72. https://doi.org/10.1136/bmj.312.7023.71

Satterfield J, Spring B, Brownson R, Mullen E, Newhouse R, Walker B, Whitlock E (2009) Toward a transdisciplinary model of evidence‐based practice. Milbank Q 87(2):368–390. https://doi.org/10.1111/j.1468-0009.2009.00561

Schalock RL, Verdugo MA, Gomez LE (2011) Evidence-based practices in the field of intellectual and developmental disabilities: an international consensus approach. Eval Program Plan 34:273–282. https://doi.org/10.1016/j.evalprogplan.2010.10.004

Sharples J (2013) Evidence for the frontline: a report for the alliance for useful evidence. https://www.alliance4usefulevidence.org/assets/EVIDENCE-FOR-THE-FRONTLINE-FINAL-5-June-2013.pdf. Accessed 14 Aug 2020

Sharples J, Albers B, Fraser S, Kime S (2019) Putting evidence to work: a school’s guide to implementation: guidance report. https://educationendowmentfoundation.org.uk/public/files/Publications/Implementation/EEF_Implementation_Guidance_Report_2019.pdf. Accessed 14 Aug 2020

Smith KE (2017) Beyond ‘evidence-based policy’ in a ‘post-truth’ world: the role of ideas in public health policy. In: Hudson J, Needham C, Heins E (eds) Social policy review 29. Analysis and debate in social policy. Policy Press, pp. 151–175

Stetler C (2001) Updating the Stetler Model of research utilization to facilitate evidence-based practice. Nurs Outlook 49(6):272–279

Stoll L, Greany T, Coldwell M, Higgins S, Brown C, Maxwell B, Stiell B, Willis B, Burns H (2018a) Evidence-informed teaching: self-assessment tool for teachers. https://iris.ucl.ac.uk/iris/publication/1533174/1. Accessed 14 Aug 2020

Stoll L, Greany T, Coldwell M, Higgins S, Brown C, Maxwell B, Stiell B, Willis B, Burns H (2018b) Evidence-informed teaching: self-assessment tool for schools. https://iris.ucl.ac.uk/iris/publication/1533172/1. Accessed 14 Aug 2020

Tripney J, Gough D, Sharples J, Lester S, Bristow D (2018) Promoting teacher engagement with research evidence. https://www.wcpp.org.uk/wp-content/uploads/2018/11/WCPP-Promoting-Teacher-Engagement-with-Research-Evidence-October-2018.pdf. Accessed 14 Aug 2020

Walker M, Nelson J, Bradshaw S, Brown C (2019) Teachers’ engagement with research: what do we know? A research briefing. Education Endowment Foundation. https://educationendowmentfoundation.org.uk/public/files/Evaluation/Teachers_engagement_with_research_Research_Brief_JK.pdf. Accessed 14 Aug 2020

Ward V, Smith S, Carruthers S, Hamer S, House A (2010) Knowledge brokering. Exploring the process of transferring knowledge into action (Final report). University of Leeds. https://www.researchgate.net/publication/262952769_Knowledge_Brokering_Exploring_the_process_of_transferring_knowledge_into_action. Accessed 14 Aug 2020

Acknowledgements

The work reported in this article is part of the Monash Q Project, which is a partnership funded by the Paul Ramsay Foundation.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Rickinson, M., Cirkony, C., Walsh, L. et al. Insights from a cross-sector review on how to conceptualise the quality of use of research evidence. Humanit Soc Sci Commun 8, 141 (2021). https://doi.org/10.1057/s41599-021-00821-x

DOI: https://doi.org/10.1057/s41599-021-00821-x
