Abstract

Observing stochastic trajectories with rare transitions between states, practically undetectable on time scales accessible to experiments, makes it impossible to directly quantify the entropy production and thus infer whether and how far systems are from equilibrium. To solve this issue for Markovian jump dynamics, we show a lower bound that outperforms any other estimation of entropy production (including Bayesian approaches) in regimes lacking data due to the strong irreversibility of state transitions. Moreover, in the limit of complete irreversibility, our effective version of the thermodynamic uncertainty relation sets a lower bound to entropy production that depends only on nondissipative aspects of the dynamics. Such an approach is also valuable when dealing with jump dynamics with a deterministic limit, such as irreversible chemical reactions.

Introduction

Energy transduction and information processing, the hallmarks of biological systems, can only happen in finite times at the cost of continuous dissipation. Quantifying time irreversibility and entropy production is thus a central challenge in experiments on mesoscopic systems out of equilibrium1,2, including living matter3,4,5,6,7,8,9,10 and technological devices11. Experimental efforts have been paralleled by the recent development of nonequilibrium statistical mechanics, focused on developing methods for estimating the entropy production rate σ in steady states10,12,13,14,15,16,17,18,19,20. Several approaches, for example, focus on improving estimates of σ in cases of incomplete information and coarse-graining21,22,23,24,25. Sometimes, they are based on lower bounds on σ (refs. 2,18,19), of which the thermodynamic uncertainty relation (TUR) is a prominent example17,26,27,28,29,30,31,32,33,34. Classical methods estimate σ by adding local contributions from non-zero fluxes between states35. However, recent approaches have also introduced methods exploiting the statistics of return times or waiting times36,37,38,39,40.

For high-dimensional diffusive systems or Markov jump processes on a large state space, where the experimental estimate of microscopic forces becomes difficult, the TUR is a valuable tool for approximately quantifying σ. Indeed, the TUR is a frugal inequality, being based only on the knowledge of the first two cumulants of any current J integrated over a time τ, i.e. its average 〈J〉 and its variance \({\rm{var}}(J)=\langle {J}^{2}\rangle -{\langle J\rangle }^{2}\),

\[\sigma \ge \frac{2{\langle J\rangle }^{2}}{\tau \,{\rm{var}}(J)}.\tag{1}\]
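As a minimal sketch of how the TUR is used in practice, the following Python function turns a sample of integrated currents into a lower bound on σ. It assumes the standard form of the TUR in units of kB = 1, σ ≥ 2〈J〉²/(τ var(J)); the function name `tur_bound` and the toy data are illustrative assumptions, not part of the original analysis.

```python
from statistics import mean, variance


def tur_bound(current_samples, tau):
    """Return the TUR lower bound 2<J>^2 / (tau var(J)) on sigma (k_B = 1).

    current_samples: values of one integrated current J, each measured over
    a time window of the same duration tau (illustrative input format).
    """
    avg = mean(current_samples)
    var = variance(current_samples)  # unbiased sample variance
    if var == 0:
        raise ValueError("the current does not fluctuate in this sample")
    return 2.0 * avg**2 / (tau * var)


# Toy data: net number of jumps counted in three windows of duration tau = 1.
samples = [1, 2, 3]
print(tur_bound(samples, tau=1.0))
```

Only the first two cumulants of the current enter, which is why the bound stays usable when the microscopic forces are not accessible.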

However, (1) might provide a loose bound on σ. For example, far from equilibrium, kinetic factors41 often constrain the right-hand side of (1) to values much smaller than σ.

Let us focus on a Markov jump process with transition rate wij from state i to state j, in a stationary regime with steady-state probability ρi. If the system is coupled to equilibrium reservoirs, then for each forward transition between two states i and j, denoted (i, j), the backward transition (j, i) is also possible. By defining fluxes ϕij = ρiwij, the mean entropy production rate is written42,43,44 as

\[\sigma =\sum _{i < j}({\phi }_{ij}-{\phi }_{ji})\ln \frac{{\phi }_{ij}}{{\phi }_{ji}}.\tag{2}\]

The system is in equilibrium only if all currents are zero, that is, ϕij = ϕji for every pair {i, j}. Experimentally, fluxes ϕij are estimated by counting the transitions between states i and j in a sufficiently long time interval t.
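To make the definitions concrete, here is a small Python sketch that computes the steady state, the fluxes ϕij = ρiwij, and the entropy production rate σ = Σ_{i<j}(ϕij − ϕji) ln(ϕij/ϕji) for a given rate matrix. The steady state is obtained by iterating a uniformized discrete-time chain, which is an implementation choice made here for simplicity; the three-state rates are hypothetical.

```python
import math


def steady_state(w, n_iter=20000):
    """Stationary distribution of a jump process with rates w[i][j] (i != j),
    found by iterating the uniformized discrete-time chain."""
    n = len(w)
    escape = [sum(w[i][j] for j in range(n) if j != i) for i in range(n)]
    dt = 0.5 / max(escape)  # every state keeps probability >= 1/2 per step
    rho = [1.0 / n] * n
    for _ in range(n_iter):
        new = [rho[i] * (1.0 - dt * escape[i]) for i in range(n)]
        for i in range(n):
            for j in range(n):
                if i != j:
                    new[j] += dt * rho[i] * w[i][j]
        rho = new
    return rho


def entropy_production_rate(w):
    """Sum over edges of (phi_ij - phi_ji) ln(phi_ij / phi_ji), k_B = 1."""
    n = len(w)
    rho = steady_state(w)
    sigma = 0.0
    for i in range(n):
        for j in range(i + 1, n):
            forward, backward = rho[i] * w[i][j], rho[j] * w[j][i]
            if forward == 0.0 and backward == 0.0:
                continue  # edge absent from the network
            if forward == 0.0 or backward == 0.0:
                return math.inf  # a strictly one-way edge makes sigma diverge
            sigma += (forward - backward) * math.log(forward / backward)
    return sigma


# Hypothetical three-state cycle: rate 2 in one direction, 1 in the other.
w_driven = [[0, 2, 1], [1, 0, 2], [2, 1, 0]]
print(entropy_production_rate(w_driven))
```

For symmetric rates all fluxes balance pairwise and the function returns zero, in line with the equilibrium condition ϕij = ϕji.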

The challenge we focus on is evaluating the entropy production rate with experimental data missing the observation of one or more backward transitions, since any null flux makes (2) inapplicable. This situation is encountered in a vast class of idealized mesoscopic systems such as totally asymmetric exclusion processes45, directed percolation46, spontaneous emission in a zero-temperature environment47, enzymatic reactions48, and perfect resetting49. A first solution to this problem involves replacing the flux ϕij of each unobserved transition by a fictitious value \({\phi }_{ij} \sim {t}^{-1}\) scaling with the observation time. Intuitively, this corresponds to assuming that no transition was observed during a time t because its flux was barely lower than \({t}^{-1}\). A Bayesian approach refines this simple argument, proposing the optimized assumption ϕij ≃ (ρj/ρi)/t for null fluxes in unidirectional transitions35. Hence, it allows us to estimate the entropy production directly from (2).

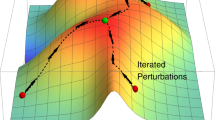

In this Letter, we put forward an additional estimate of the entropy production rate, not based on (2). Instead, we introduce a lower bound on σ (see (15) with (3) below) using the same quantities needed for the estimation of (2), i.e., single fluxes. Notwithstanding that, our inequality can be tight and give an estimate of σ that outperforms (2) (and any standard TUR) in regimes lacking data, in which transitions between states may appear experimentally as unidirectional in a trajectory of finite duration t. The efficacy of our approach derives from several optimizations related to (i) the short-time limit τ → 0 (refs. 18,19), (ii) the so-called hyperaccurate current32,33,50,51, and, most importantly, (iii) an uncertainty relation32,52 in which the specific presence of the inverse hyperbolic tangent boosts the lower limit imposed on σ by the TUR (1) (the hyperbolic tangent appears in several derivations of TURs29,30). In the extreme case in which all observed transitions appear unidirectional, the inequality simplifies, to leading order in κt ≫ 1, to

\[\sigma \ge \kappa \ln (\kappa t),\tag{3}\]

where the average jumping rate (or dynamical activity, or frenesy53) κ characterizes the degree of agitation of the system. Thus, far from equilibrium, the nondissipative quantity κ bounds the average amount of dissipation σ. Key to our approach is the assumption that the overall rate of all unobserved (reverse) transitions is of order \({t}^{-1}\). At the same time, we make no assumption for the specific reverse rate of each unidirectional transition. Equation (3) can also be used if only forward rates are known analytically and backward rates are small and unknown. The chemical reactions described at the end of the paper fall into this scenario.

Results and discussion

Empirical estimation

Equation (2) holds for Markov jump processes with transition rates satisfying the local detailed balance condition \({w}_{ij}/{w}_{ji}={e}^{{s}_{ij}}\), where sij = − sji is the entropy increase in the environment (in units of kB = 1, hereafter) when transition (i, j) takes place. For these processes, the experimental data we consider are time series of states (i(0), i(1), …, i(n)) and of the corresponding jumping times (t(1), t(2), …, t(n) < t). The total residence time \({t}_{i}^{R}\) that a trajectory spends in a state i gives the empirical steady-state distribution \({p}_{i}={t}_{i}^{R}/t\), which approaches the steady-state probability distribution ρi for long times t.

The estimation of σ is based on empirical measurements of fluxes ϕij, which we define starting from the number nij = nij(t) of observed transitions (i, j),

\[{\dot{n}}_{ij}\equiv \frac{{n}_{ij}}{t}.\tag{4}\]

If the observation time t is much larger than the largest time scale, i.e. \(t \, \gg \, {\tau }_{{\rm{sys}}}={({\min }_{i,j}{\phi }_{ij})}^{-1}\), the empirical flux \({\dot{n}}_{ij}\) converges to ϕij and the estimate of the entropy production rate simply becomes

\[{\sigma }_{{\rm{emp}}}=\sum _{i < j}({\dot{n}}_{ij}-{\dot{n}}_{ji})\ln \frac{{\dot{n}}_{ij}}{{\dot{n}}_{ji}}.\tag{5}\]
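The empirical quantities above can be extracted from a trajectory in a few lines. The sketch below is illustrative only: it assumes the data come as a list of visited states and a list of jumping times, and the function names `empirical_statistics` and `sigma_emp` are hypothetical. The plain estimate is taken to be the sum over edges of (ṅij − ṅji) ln(ṅij/ṅji), which diverges as soon as one edge is observed in a single direction.

```python
import math
from collections import Counter


def empirical_statistics(states, jump_times, t):
    """Empirical fluxes n_ij / t and occupation fractions p_i = t_i^R / t.

    states = (i(0), ..., i(n)), jump_times = (t(1), ..., t(n)), t(n) < t.
    """
    counts = Counter(zip(states[:-1], states[1:]))  # n_ij for observed jumps
    residence = Counter()
    times = [0.0] + list(jump_times) + [t]
    for state, t_in, t_out in zip(states, times[:-1], times[1:]):
        residence[state] += t_out - t_in
    p = {i: r / t for i, r in residence.items()}
    n_dot = {edge: n / t for edge, n in counts.items()}
    return n_dot, p


def sigma_emp(n_dot):
    """Plain empirical estimate; infinite if an edge is seen in one direction only."""
    sigma = 0.0
    for (i, j), forward in n_dot.items():
        backward = n_dot.get((j, i), 0.0)
        if backward == 0.0:
            return math.inf
        # each edge is visited from both directions, hence the factor 1/2
        sigma += 0.5 * (forward - backward) * math.log(forward / backward)
    return sigma


n_dot, p = empirical_statistics([0, 1, 0, 1], [1.0, 2.0, 3.0], t=4.0)
print(n_dot, p, sigma_emp(n_dot))
```

Transitions that never occur in either direction do not appear in the counter at all, so the corresponding edge is silently neglected.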

However, our focus is on systems in which t < τsys, so that some transitions \((i,j)\in {\mathcal{I}}\) are likely never observed (nij = 0) while their reverse ones (j, i) are (nji ≠ 0). In this case, the process appears absolutely irreversible54 and (2) is inapplicable: the estimate (5) would give an infinite entropy production. We assume that the network remains connected if one removes the transitions belonging to the set \({\mathcal{I}}\), so that the dynamics stays ergodic. Note that the case where both nij = 0 and nji = 0 poses no difficulties. Indeed, one neglects the edge {i, j}, at the possible price of underestimating σ. We leave this understood and deal with the residual cases in which only one of the two counts is null.

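If in a time t a transition from i to j is not observed, we conclude that the typical time scale of the transition is not shorter than t, that is \({\phi }_{ij}\lesssim {t}^{-1}\). A more quantitative argument35 suggests “curing” the numerical estimates of fluxes by introducing a similar minimal assumption,

\[{\dot{n}}_{ij}\simeq \frac{{p}_{j}}{{p}_{i}}\,\frac{1}{t}\quad {\rm{if}}\ {n}_{ij}=0\ {\rm{and}}\ {n}_{ji}\ne 0,\tag{6}\]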

and uses these regularized estimates of ϕ’s in (5).
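A possible implementation of this regularization is sketched below. It assumes the prescription quoted in the Introduction, i.e. an unobserved transition (i, j) whose reverse was observed receives the flux (pj/pi)/t; the function names and the numbers in the example are hypothetical.

```python
import math


def regularize_fluxes(n_dot, p, t):
    """Assign (p_j / p_i) / t to every unobserved transition (i, j) whose
    reverse (j, i) was observed; observed fluxes are left untouched."""
    cured = dict(n_dot)
    for (j, i), rate in n_dot.items():
        if rate > 0 and n_dot.get((i, j), 0.0) == 0.0:
            cured[(i, j)] = (p[j] / p[i]) / t
    return cured


def sigma_from_fluxes(flux):
    """Sum of (phi_ij - phi_ji) ln(phi_ij / phi_ji) over edges.

    Assumes both directions of every edge carry a positive flux; each edge
    is met twice in the loop, hence the factor 1/2."""
    return sum(0.5 * (f - flux[(j, i)]) * math.log(f / flux[(j, i)])
               for (i, j), f in flux.items())


# Hypothetical data: the jump 1 -> 0 was never seen during t = 10.
n_dot = {(0, 1): 0.4}
p = {0: 0.5, 1: 0.5}
cured = regularize_fluxes(n_dot, p, t=10.0)
print(cured, sigma_from_fluxes(cured))
```

The resulting estimate is finite by construction, but it grows only logarithmically with t as long as the reverse transition stays unobserved.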

Lower bounds to σ

To use TURs, we define currents by algebraically counting transitions between states. For example, for (i, j), an integrated current during a time τ is just the net count nij(τ) − nji(τ) during that period. By linearly combining single-transition currents via antisymmetric weights cij = − cji (stored in a matrix c), one may define a generic current \(J={J}_{c}={\sum }_{i < j}{c}_{ij}[{n}_{ij}(\tau )-{n}_{ji}(\tau )]\).

Among all possible currents, one can choose those giving the best lower bound to σ, for instance, by machine learning methods18,19,20. However, refs. 50,51 show that the TUR can be analytically optimized by choosing the hyperaccurate current \({J}^{{\rm{hyp}}}\). In the limit τ → 0, the coefficients defining \({J}^{{\rm{hyp}}}\) take the simple form (ref. 32)

\[{c}_{ij}^{{\rm{hyp}}}=\frac{{\phi }_{ij}-{\phi }_{ji}}{{\phi }_{ij}+{\phi }_{ji}}.\tag{7}\]

Moreover, the TUR (1) is tightest when J is integrated over an infinitesimal time τ → 0 (refs. 18,19) and can become an equality in the limit τ → 0 only for overdamped Langevin dynamics. However, for Markovian stationary processes Eq. (24) in ref. 32 states that

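where \({\mathcal{T}}\equiv M\tau\) is a time span collecting M short steps of duration τ. It is therefore useful to exploit the TUR in the short τ limit and define the short-time precision \({\mathfrak{p}}(J)\) as

\[{\mathfrak{p}}(J)\equiv \mathop{\lim }\limits_{\tau \to 0}\frac{{\langle J\rangle }^{2}}{\tau \,{\rm{var}}(J)}.\tag{9}\]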

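For Markov jump processes, for a generic J, we have

\[{\mathfrak{p}}(J)=\frac{{\left[{\sum }_{i < j}{c}_{ij}({\phi }_{ij}-{\phi }_{ji})\right]}^{2}}{{\sum }_{i < j}{c}_{ij}^{2}({\phi }_{ij}+{\phi }_{ji})},\tag{10}\]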

and the related TUR in the limit τ → 0 is

\[\sigma \ge 2{\mathfrak{p}}(J).\tag{11}\]

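Focusing on the hyperaccurate current, which is also characterized by \(\langle {J}^{{\rm{hyp}}}\rangle ={\rm{var}}({J}^{{\rm{hyp}}})\), the optimized TUR reduces to \(\sigma \ge {\sigma }_{{\rm{TUR}}}^{{\rm{hyp}}}=2{\mathfrak{p}}^{{\rm{hyp}}}\) (involving the so-called pseudo-entropy55) with

\[{\mathfrak{p}}^{{\rm{hyp}}}=\sum _{i < j}\frac{{({\phi }_{ij}-{\phi }_{ji})}^{2}}{{\phi }_{ij}+{\phi }_{ji}}.\tag{12}\]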
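The optimality of the hyperaccurate current can be checked numerically. The sketch below assumes the short-time precision of a current with weights cij is [Σ cij(ϕij − ϕji)]² / Σ cij²(ϕij + ϕji) and that the hyperaccurate weights are (ϕij − ϕji)/(ϕij + ϕji); the edge fluxes are made-up numbers and the function names are hypothetical.

```python
def precision(edge_fluxes, weights):
    """Short-time precision of the current with one weight c per edge.

    edge_fluxes: list of (phi_ij, phi_ji), one pair per edge i < j.
    """
    mean_rate = sum(c * (f - b) for c, (f, b) in zip(weights, edge_fluxes))
    var_rate = sum(c * c * (f + b) for c, (f, b) in zip(weights, edge_fluxes))
    return mean_rate**2 / var_rate


def hyperaccurate_weights(edge_fluxes):
    """Weights (phi_ij - phi_ji) / (phi_ij + phi_ji) of the hyperaccurate current."""
    return [(f - b) / (f + b) for f, b in edge_fluxes]


# Hypothetical fluxes on two edges.
fluxes = [(2.0, 1.0), (3.0, 1.0)]
p_hyp = precision(fluxes, hyperaccurate_weights(fluxes))
print("hyperaccurate:", p_hyp)
print("edge 1 only:  ", precision(fluxes, [1.0, 0.0]))
print("edge 2 only:  ", precision(fluxes, [0.0, 1.0]))
print("uniform:      ", precision(fluxes, [1.0, 1.0]))
```

With these weights the mean and variance rates coincide, so the precision equals the sum over edges of (ϕij − ϕji)²/(ϕij + ϕji), and no other choice of weights in the example exceeds it.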

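In the following, we will use an improvement of the TUR. We start from the implicit formula derived in ref. 52,

\[{\mathfrak{p}}^{{\rm{hyp}}}\le \frac{{\sigma }^{2}}{4\kappa \,f{(\sigma /2\kappa )}^{2}},\tag{13}\]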

which depends on the frenesy

\[\kappa =\sum _{i < j}({\phi }_{ij}+{\phi }_{ji})\tag{14}\]

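and the function f that is the inverse of \(x\tanh x\). We use the relation g(x) = x/f(x), with g the inverse function of \(x{\tanh }^{-1}x\) (ref. 56), to turn (13) into an explicit lower bound on the entropy production,

\[\sigma \ge {\sigma }_{\tanh }^{{\rm{hyp}}}=2\sqrt{{\mathfrak{p}}^{{\rm{hyp}}}\kappa }\,{\tanh }^{-1}\sqrt{\frac{{\mathfrak{p}}^{{\rm{hyp}}}}{\kappa }}.\tag{15}\]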

The inequality (15) reduces to the TUR close to equilibrium, where σ → 0, and to the kinetic uncertainty relation41 far from equilibrium, where \(\tanh (\sigma /2\sqrt{{\mathfrak{p}}^{{\rm{hyp}}}\kappa })\to 1\), namely \(\kappa \ge {\mathfrak{p}}^{{\rm{hyp}}}\).
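A short numerical check of these limits is given below. It assumes the explicit bound takes the form 2√(𝔭κ) tanh⁻¹√(𝔭/κ), as follows from the relation g(x) = x/f(x) stated above; the function name `sigma_tanh` is hypothetical.

```python
import math


def sigma_tanh(p_hyp, kappa):
    """Lower bound 2 sqrt(p kappa) atanh(sqrt(p / kappa)); needs 0 <= p < kappa."""
    if not 0.0 <= p_hyp < kappa:
        raise ValueError("the bound requires 0 <= p_hyp < kappa")
    x = math.sqrt(p_hyp / kappa)
    return 2.0 * math.sqrt(p_hyp * kappa) * math.atanh(x)


# Close to equilibrium (p << kappa) the bound approaches the TUR value 2 p.
print(sigma_tanh(1e-6, 1.0) / (2 * 1e-6))

# A single edge with fluxes 2 and 1: p = 1/3, kappa = 3, exact sigma = ln 2.
print(sigma_tanh(1.0 / 3.0, 3.0), math.log(2.0))

# Far from equilibrium (p close to kappa) the bound exceeds the TUR value.
print(sigma_tanh(0.9, 1.0), 2 * 0.9)
```

For a single edge the bound coincides with the exact entropy production rate, since in that case √(𝔭κ) = |ϕij − ϕji| and tanh⁻¹√(𝔭/κ) = ½ ln(ϕij/ϕji).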

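Empirically, for κ t ≫ 1, we can approximate the optimized precision (12) and the frenesy (14) with

\[{\mathfrak{p}}_{{\rm{emp}}}^{{\rm{hyp}}}=\sum _{i < j}\frac{{({\dot{n}}_{ij}-{\dot{n}}_{ji})}^{2}}{{\dot{n}}_{ij}+{\dot{n}}_{ji}},\tag{16}\]

\[{\kappa }_{{\rm{emp}}}=\sum _{i < j}({\dot{n}}_{ij}+{\dot{n}}_{ji}).\tag{17}\]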

Neither of them requires the regularization (6). However, the (positive) argument of the \({\tanh }^{-1}\) function in (15) needs to be strictly smaller than 1, i.e. \({\mathfrak{p}}_{{\rm{emp}}}^{{\rm{hyp}}} < {\kappa }_{{\rm{emp}}}\).

With \({{\mathfrak{p}}}_{{{{{\rm{emp}}}}}}^{{{{{\rm{hyp}}}}}}\) there arises a problem when one measures irreversible transitions in all edges, \(\min ({\dot{n}}_{ij},{\dot{n}}_{ji})=0\) for every i and j: in that case, one can see that \({{\mathfrak{p}}}_{{{{{\rm{emp}}}}}}^{{{{{\rm{hyp}}}}}}={\kappa }_{{{{{\rm{emp}}}}}}\). To fix the divergence of \({\tanh }^{-1}\) that it would lead to, we use an assumption milder than the requirement of reversibility for all transitions used for (6).

If \({\mathfrak{p}}_{{\rm{emp}}}^{{\rm{hyp}}}={\kappa }_{{\rm{emp}}}\), we assume that the observation time t is barely shorter than the typical time needed to have any reverse transition. By denoting a tiny rate of any unobserved transition \((i,j)\in {\mathcal{I}}\) as ϵij, the ratio of the precision of the hyperaccurate current over the frenesy becomes

\[\frac{{\mathfrak{p}}^{{\rm{hyp}}}}{\kappa }\simeq 1-\frac{4}{{\kappa }_{{\rm{emp}}}}\sum _{(i,j)\in {\mathcal{I}}}{\epsilon }_{ij}=1-\frac{4}{\kappa t},\tag{18}\]

where we have set \({\sum }_{(i,j)\in {{{{\mathcal{I}}}}}}{\epsilon }_{ij}=1/t\) according to our assumption and we replaced κemp with κ because they give the same bound to leading order in t.

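Hence, in an experiment measuring only irreversible transitions for t ≫ 1/κ, (15) with (18) still provides a lower bound on the entropy production rate:

\[\sigma \ge 2\kappa \sqrt{1-\frac{4}{\kappa t}}\,{\tanh }^{-1}\sqrt{1-\frac{4}{\kappa t}}.\tag{19}\]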

By Taylor expanding the argument of \({\tanh }^{-1}\) to the leading order, (19) simplifies to the lower bound anticipated in (3), which is based simply on evaluating the frenesy κ empirically with (17).
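The quality of this expansion can be inspected numerically. The sketch below rests on two assumptions made here: that the ratio of precision to frenesy is 1 − 4/(κt) when the unobserved rates sum to 1/t, and that the leading-order result is κ ln(κt). Both follow from expanding (ϕ − ϵ)²/(ϕ + ϵ) ≃ ϕ − 3ϵ on each edge, but they should be read as a reconstruction rather than as the authors' exact expressions; the function names are hypothetical.

```python
import math


def sigma_irreversible_full(kappa, t):
    """2 sqrt(p kappa) atanh(sqrt(p / kappa)) with p / kappa = 1 - 4 / (kappa t)."""
    ratio = 1.0 - 4.0 / (kappa * t)
    if ratio <= 0.0:
        raise ValueError("requires kappa * t > 4")
    x = math.sqrt(ratio)
    return 2.0 * kappa * x * math.atanh(x)


def sigma_irreversible_leading(kappa, t):
    """Leading-order form kappa ln(kappa t), valid for kappa t >> 1."""
    return kappa * math.log(kappa * t)


for t in (1e2, 1e3, 1e6):
    full = sigma_irreversible_full(1.0, t)
    lead = sigma_irreversible_leading(1.0, t)
    print(t, full, lead, full / lead)
```

The two expressions agree increasingly well as κt grows, which is the regime t ≫ 1/κ considered in the text.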

Examples

Let us illustrate the performance of the various estimators of the entropy production rate with the four-state nonequilibrium model sketched in Fig. 1. Some transitions (black arrows) have a constant rate wij = 1 (in dimensionless units). Other transition rates depend on a nonequilibrium strength α, with α = 0 representing equilibrium: \({w}_{ij}={e}^{-\alpha }\) (red arrows), and \({w}_{ij}={e}^{-3\alpha }\) (thin blue arrows). The latter class is the first to go undetected for sufficiently large α, which causes the empirical estimate σemp to start deviating from the theoretical value (α ≳ 1.7 in Fig. 1). However, σemp remains the best option for evaluating σ up to a value α ≈ 4, where the lower bound \({\sigma }_{\tanh }^{{\rm{hyp}}}\) takes over as the best σ estimator far from equilibrium. Interestingly, for α ≳ 4, the probability \({f}_{{\rm{irr}}}\) to measure a trajectory with only irreversible transitions (green curve in Fig. 1) is still small. Hence, it is the full scheme with (15), rather than the extreme case (3), that provides a good estimate of σ. For this setup, the TUR optimized with the hyperaccurate current is only useful close to equilibrium but is never the best option. For comparison with the hyperaccurate version, in Fig. 1, we also show the loose lower bound offered by the TUR for the current J defined on the single edge {i = 1, j = 2}.

Fig. 1: For the four-state model (inset and description in the subsection Examples), estimates of the entropy production rate and theoretical value σth (left axis), and the fraction of trajectories displaying only irreversible transitions (green curve, right axis) as a function of the nonequilibrium strength α. The sampling time is \(t={10}^{3}\), and bands show one standard deviation variability over trajectories. Highlighted regions: (i) the empirical estimate works well while lower bounds progressively depart from σth; (ii) σemp deviates from σth but remains the best estimator; (iii) the lower bound \({\sigma }_{\tanh }^{{\rm{hyp}}}\) is the best estimator.

To appreciate the influence of the trajectory duration on the estimators, in Fig. 2a, we plot the scaling with the sampling time t of σemp and \({\sigma }_{\tanh }^{{{{{\rm{hyp}}}}}}\). In this example, the farther one goes from equilibrium, the longer \({\sigma }_{\tanh }^{{{{{\rm{hyp}}}}}}\) remains the better estimator. This aspect can be crucial if, for experimental limitations, one is restricted to a finite t. It is also emphasized in Fig. 2b, where we plot the time at which σemp becomes larger than \({\sigma }_{\tanh }^{{{{{\rm{hyp}}}}}}\), as a function of the true dissipation rate σth. Again, it shows that in more dissipative regimes, one can rely on \({\sigma }_{\tanh }^{{{{{\rm{hyp}}}}}}\) if trajectories are too short to obtain a good estimate of σth with σemp.

Fig. 2: a For three values of the nonequilibrium parameter α (see legend) in the model of Fig. 1, scaling with the sampling time t of the estimators σemp and \({\sigma }_{\tanh }^{{\rm{hyp}}}\), and theoretical values (horizontal thick lines). Bands show one standard deviation variability over trajectories. b Time when σemp becomes larger than \({\sigma }_{\tanh }^{{\rm{hyp}}}\), as a function of σth.

The second example shows how our lower bound may scale favorably with the system size compared to the other σ estimators. We study a periodic ring of N states with local energy \({u}_{i}=-\cos (2\pi i/N)\) and transition rates \({w}_{i,i+1}=1\), \({w}_{i,i-1}=\exp [-\alpha /N+{u}_{i}-{u}_{i-1}]\) (temperature is T = 1). Figure 3 shows the ratios of \({\sigma }_{{\rm{TUR}}}^{{\rm{hyp}}}\), \({\sigma }_{\tanh }^{{\rm{hyp}}}\), and σemp over the theoretical value σth, as a function of the nonequilibrium force α, both for a ring with N = 10 and for a longer ring with N = 20 states. For each N, we see that \({\sigma }_{\tanh }^{{\rm{hyp}}}\) far from equilibrium is the best estimator of the entropy production rate. Furthermore, it also appears to be the estimator that scales best with increasing system size: the extent of its plateau at σ/σth ≃ 1 scales linearly with N (the inset of Fig. 3 shows the α* value where σ/σth drops below the arbitrary threshold 0.9, divided by N, as a function of N), while this is not the case for the empirical estimate σemp. These results suggest that \({\sigma }_{\tanh }^{{\rm{hyp}}}\) is a valuable resource in the continuum limit toward deterministic, macroscopic conditions. Next, we explore this possibility.
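The ring model is simple enough to evaluate its exact steady-state quantities directly. The sketch below uses the rates given in the text and computes σth together with the two lower bounds, taking them to be 2𝔭 and 2√(𝔭κ) tanh⁻¹√(𝔭/κ) with 𝔭 the hyperaccurate precision. It uses exact fluxes rather than sampled trajectories, so it illustrates the ordering of the bounds and not the finite-time effects shown in Fig. 3; the iteration scheme and function names are choices made here.

```python
import math


def ring_rates(n_states, alpha):
    """Backward rates w_{i,i-1}; forward rates w_{i,i+1} are all equal to 1."""
    u = [-math.cos(2.0 * math.pi * i / n_states) for i in range(n_states)]
    # u[i - 1] wraps around for i = 0, as required on a periodic ring
    return [math.exp(-alpha / n_states + u[i] - u[i - 1]) for i in range(n_states)]


def ring_steady_state(back, n_iter=20000):
    """Stationary distribution from iterating the uniformized chain."""
    n = len(back)
    dt = 0.5 / (1.0 + max(back))
    rho = [1.0 / n] * n
    for _ in range(n_iter):
        rho = [rho[i] * (1.0 - dt * (1.0 + back[i]))          # stay in i
               + dt * rho[i - 1]                               # jump i-1 -> i
               + dt * rho[(i + 1) % n] * back[(i + 1) % n]     # jump i+1 -> i
               for i in range(n)]
    return rho


def ring_estimators(n_states, alpha):
    """Return (sigma_th, TUR bound 2 p_hyp, tanh bound) from exact fluxes."""
    back = ring_rates(n_states, alpha)
    rho = ring_steady_state(back)
    sigma = p_hyp = kappa = 0.0
    for i in range(n_states):
        j = (i + 1) % n_states
        f, b = rho[i], rho[j] * back[j]  # phi_{i,i+1} and phi_{i+1,i}
        sigma += (f - b) * math.log(f / b)
        p_hyp += (f - b) ** 2 / (f + b)
        kappa += f + b
    s_tanh = 2.0 * math.sqrt(p_hyp * kappa) * math.atanh(math.sqrt(p_hyp / kappa))
    return sigma, 2.0 * p_hyp, s_tanh


for alpha in (2.0, 10.0, 30.0):
    print(alpha, ring_estimators(10, alpha))
```

At α = 0 all three quantities vanish, since the affinity of the single cycle equals α.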

Fig. 3: For the ring model described in the subsection Examples, we show various estimates of the entropy production rate divided by the theoretical value, for \(t={10}^{4}\), as a function of the nonequilibrium strength α/N, for ring lengths N = 10 and N = 20. The inset shows α*/N. For α → 0, the standard deviation of σ/σth (shaded bands) is amplified because also σth → 0.

Deterministic limit

Our approach extends to deterministic dynamics resulting from the macroscopic limit of underlying Markov jump processes for many interacting particles such as driven or active gases57,58, diffusive and reacting particles59,60, mean-field Ising and Potts models61,62, and charges in electronic circuits63. In these models, the state’s label i is a vector with entries that indicate the number of particles of a given type. The system becomes deterministic when the typical number of particles goes to infinity, controlled by a parameter, such as a system size V → ∞. In this limit, it is customary to introduce continuous states x = i/V (ref. 64), e.g., a vector of concentrations, such that wij = Vωr(x), where ± r labels the transitions to (or from) the states infinitesimally close to x. Such transition rate scaling is equivalent to measuring events in a macroscopic time \(\tilde{t}\equiv tV\). The probability pi ~ δ(x − x*) peaks around the most probable state 〈x〉 ≡ x*. The entropy production rate (5) becomes extensive in V (ref. 65), and its density takes the deterministic value

\[\tilde{\sigma }\equiv \mathop{\lim }\limits_{V\to \infty }\frac{\sigma }{V}=\sum _{r > 0}\left[{\omega }_{r}({x}^{* })-{\omega }_{-r}({x}^{* })\right]\ln \frac{{\omega }_{r}({x}^{* })}{{\omega }_{-r}({x}^{* })},\tag{20}\]

with ω±r(x*) the macroscopic fluxes.

We are interested in the case where backward transitions (r < 0) are practically not observable, i.e., the fluxes ω−∣r∣(x*) are negligibly small compared to the experimental errors. Since κ is also extensive in V, we define the frenesy density \(\tilde{\kappa }\equiv \kappa /V={\sum }_{r}{\omega }_{r}({x}^{* })\) and apply (3) as

\[\tilde{\sigma }\ge \tilde{\kappa }\ln (\tilde{\kappa }\,\tilde{t}).\tag{21}\]

The formula above holds for \(\tilde{t} \, \ll \, 1/\max ({\omega }_{-| r| }({x}^{* }))\), which is the typical time when backward fluxes become sizable.
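As an illustration of how the macroscopic bound could be evaluated, the sketch below computes mass-action fluxes and the quantity κ̃ ln(κ̃ t̃), which is the leading-order form assumed here for the bound. The frenesy density is approximated by the sum of forward fluxes only, consistent with negligible backward fluxes. The two reactions, the rate constants, and the concentrations are hypothetical and do not reproduce the network studied in the paper.

```python
import math


def mass_action_flux(rate_constant, concentrations, orders):
    """Rate constant times the product of reactant concentrations,
    each raised to its stoichiometric order."""
    flux = rate_constant
    for species, order in orders.items():
        flux *= concentrations[species] ** order
    return flux


def frenesy_bound(forward_fluxes, t_macro):
    """kappa~ ln(kappa~ t~), with kappa~ taken as the sum of forward fluxes."""
    kappa = sum(forward_fluxes)
    return kappa * math.log(kappa * t_macro)


# Hypothetical stationary concentrations and two illustrative reactions:
#   X -> Y      with rate constant 4 (unimolecular)
#   X + Y -> 2Y with rate constant 2 (bimolecular)
x_star = {"X": 0.5, "Y": 3.0}
fluxes = [
    mass_action_flux(4.0, x_star, {"X": 1}),
    mass_action_flux(2.0, x_star, {"X": 1, "Y": 1}),
]
print(fluxes, frenesy_bound(fluxes, t_macro=10.0))
```

The bound is meaningful only while t̃ stays well below the inverse of the largest backward flux, as stated above.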

We compare (20) and (21) for systems of chemical reactions with mass action kinetics, i.e., each flux ω±r(x*) is given by the product of reactant concentrations times the rate constant k±r (ref. 66). In particular, we take the following model of two chemical species, X and Y, with uni- and bimolecular reactions,

Figure 4a shows, for a specific set of rate constants, that the bound (21) outperforms the empirical estimation (20) for all times when the dynamics appears absolutely irreversible. This occurs for all randomly drawn values of the rate constants (Fig. 4b). Additionally, we plot the histogram of times \({\tilde{t}}_{q}\) at which (21) and (20) reach the fraction q < 1 of the theoretical entropy production rate, for q = 0.6 in Fig. 4c and q = 0.8 in Fig. 4d. Times resulting from (21) are significantly shorter than those from the empirical measure (20).

Fig. 4: a Estimates (20) and (21) normalized to the true σth as a function of macroscopic time \(\tilde{t}\) up to the inverse of the maximum flux, for the chemical reaction network (22) with forward rate constants k+r = {5, 2, 1, 0.2} and backward \({k}_{-r}={10}^{-5}\). b With 2000 random realizations of the chemical reaction network (uniformly sampled rate constants \({k}_{+r}\in [{10}^{-2},{10}^{2}]\), and \({k}_{-r}={10}^{-5}\)), estimate (20) vs. (21), both normalized to the true \({\tilde{\sigma }}_{{\rm{th}}}\), for a fixed time \(\tilde{t}=0.1/\max ({\omega }_{r}({x}^{* }))\). c Probability distribution of \({\tilde{t}}_{q}\) for q = 0.6, and d q = 0.8.

The application of (3) is possible either when the forward rates are analytically known (as in the example in Fig. 4) or when they can be experimentally reconstructed. Direct measurement of chemical fluxes is a challenging task. One case in which they are measurable with high precision is photochemical reactions in which the emission of photons at a specific known frequency signals the reaction events (see ref. 47 and references therein). In general, one can reliably measure only concentrations, from which one can extract reaction fluxes only in specific networks (and if the reaction constants are known). We note, however, that the same method exemplified with chemical reactions can be used, e.g., for electronic circuits, where counting electron fluxes between resistive elements is much easier.

Conclusion

In summary, the lower bound (15), turning into (3) for systems that appear absolutely irreversible, is more effective than the direct estimate (5) with (6) when data are lacking. Knowledge of the macroscopic fluxes is enough to apply our formula (3), which outperforms the direct estimation in strongly irreversible systems where all backward fluxes are undetectable. Thus, we provide an effective tool to estimate the dissipation in biological and artificial systems, whose performances are limited by energetic constraints7,67,68.

Methods

Dynamics of the stochastic model systems described in the subsection Examples and the deterministic chemical reaction network described in subsection Deterministic limit have been obtained by the standard Gillespie algorithm and numerical integration of the rate equations, respectively.
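For readers unfamiliar with it, a bare-bones version of the Gillespie algorithm for a Markov jump process is sketched below. It is a generic textbook implementation written for this illustration, not the authors' code; the rate dictionary format, the function name, and the two-state example are assumptions.

```python
import random


def gillespie(rates, start, t_max, seed=0):
    """Simulate a jump process with rates {i: {j: w_ij}} up to time t_max.

    Returns (states, jump_times): the visited states i(0), ..., i(n) and the
    jumping times t(1), ..., t(n), all smaller than t_max.
    """
    rng = random.Random(seed)
    states, jump_times = [start], []
    state, time = start, 0.0
    while True:
        targets = list(rates.get(state, {}).items())
        escape = sum(w for _, w in targets)
        if escape == 0:
            break  # absorbing state: nothing more can happen
        time += rng.expovariate(escape)  # exponential waiting time
        if time >= t_max:
            break
        pick = rng.random() * escape  # choose a target with probability w / escape
        for target, w in targets:
            pick -= w
            if pick < 0:
                break
        state = target
        states.append(state)
        jump_times.append(time)
    return states, jump_times


# Hypothetical two-state system with rates w_01 = 1 and w_10 = 2.
states, jump_times = gillespie({0: {1: 1.0}, 1: {0: 2.0}}, start=0, t_max=50.0, seed=1)
print(len(jump_times), states[:6])
```

The returned lists have the same format as the time series of states and jumping times described in the subsection Empirical estimation.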

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Code availability

The numerical codes that support the findings of this study are available from the corresponding author upon reasonable request.

References

Ciliberto, S. Experiments in stochastic thermodynamics: short history and perspectives. Phys. Rev. X 7, 021051 (2017).

Li, J., Horowitz, J. M., Gingrich, T. R. & Fakhri, N. Quantifying dissipation using fluctuating currents. Nat. Commun. 10, 1–9 (2019).

Martin, P., Hudspeth, A. & Jülicher, F. Comparison of a hair bundle’s spontaneous oscillations with its response to mechanical stimulation reveals the underlying active process. Proc. Natl Acad. Sci. 98, 14380–14385 (2001).

Battle, C. et al. Broken detailed balance at mesoscopic scales in active biological systems. Science 352, 604–607 (2016).

Turlier, H. et al. Equilibrium physics breakdown reveals the active nature of red blood cell flickering. Nat. Phys. 12, 513 (2016).

Dabelow, L., Bo, S. & Eichhorn, R. Irreversibility in active matter systems: fluctuation theorem and mutual information. Phys. Rev. X 9, 021009 (2019).

Yang, X. et al. Physical bioenergetics: energy fluxes, budgets, and constraints in cells. Proc. Natl Acad. Sci. 118, e2026786118 (2021).

Ro, S. et al. Model-free measurement of local entropy production and extractable work in active matter. Phys. Rev. Lett. 129, 220601 (2022).

Fodor, É., Jack, R. L. & Cates, M. E. Irreversibility and biased ensembles in active matter: Insights from stochastic thermodynamics. Annu. Rev. Condens. Matter Phys. 13, 215–238 (2022).

Di Terlizzi, I. et al. Variance sum rule for entropy production. Science 383, 971 (2024).

Gümüş, E. et al. Calorimetry of a phase slip in a Josephson junction. Nat. Phys. 19, 196–200 (2023).

Loos, S. A. M. & Klapp, S. H. Irreversibility, heat and information flows induced by non-reciprocal interactions. New J. Phys. 22, 123051 (2020).

Ehrich, J. Tightest bound on hidden entropy production from partially observed dynamics. J. Stat. Mech.: Theory Exp. 2021, 083214 (2021).

Roldán, É., Barral, J., Martin, P., Parrondo, J. M. & Jülicher, F. Quantifying entropy production in active fluctuations of the hair-cell bundle from time irreversibility and uncertainty relations. New J. Phys. 23, 083013 (2021).

Wachtel, A., Rao, R. & Esposito, M. Free-energy transduction in chemical reaction networks: from enzymes to metabolism. J. Chem. Phys. 157, 024109 (2022).

Cates, M. E., Fodor, É., Markovich, T., Nardini, C. & Tjhung, E. Stochastic hydrodynamics of complex fluids: discretisation and entropy production. Entropy 24, 254 (2022).

Dechant, A. & Sasa, S.-i. Improving thermodynamic bounds using correlations. Phys. Rev. X 11, 041061 (2021).

Manikandan, S. K., Gupta, D. & Krishnamurthy, S. Inferring entropy production from short experiments. Phys. Rev. Lett. 124, 120603 (2020).

Otsubo, S., Ito, S., Dechant, A. & Sagawa, T. Estimating entropy production by machine learning of short-time fluctuating currents. Phys. Rev. E 101, 062106 (2020).

Kim, D.-K., Bae, Y., Lee, S. & Jeong, H. Learning entropy production via neural networks. Phys. Rev. Lett. 125, 140604 (2020).

Bilotto, P., Caprini, L. & Vulpiani, A. Excess and loss of entropy production for different levels of coarse graining. Phys. Rev. E 104, 024140 (2021).

Dieball, C. & Godec, A. Mathematical, thermodynamical, and experimental necessity for coarse graining empirical densities and currents in continuous space. Phys. Rev. Lett. 129, 140601 (2022).

Ghosal, A. & Bisker, G. Entropy production rates for different notions of partial information. J. Phys. D: Appl. Phys. 56 (2023).

Nitzan, E., Ghosal, A. & Bisker, G. Universal bounds on entropy production inferred from observed statistics. Phys. Rev. Res. 5, 043251 (2023).

van der Meer, J., Degünther, J. & Seifert, U. Time-resolved statistics of snippets as general framework for model-free entropy estimators. Phys. Rev. Lett. 130, 257101 (2023).

Barato, A. C. & Seifert, U. Thermodynamic uncertainty relation for biomolecular processes. Phys. Rev. Lett. 114, 158101 (2015).

Macieszczak, K., Brandner, K. & Garrahan, J. P. Unified thermodynamic uncertainty relations in linear response. Phys. Rev. Lett. 121, 130601 (2018).

Gingrich, T. R., Rotskoff, G. M. & Horowitz, J. M. Inferring dissipation from current fluctuations. J. Phys. A: Math. Theor. 50, 184004 (2017).

Hasegawa, Y. & Van Vu, T. Fluctuation theorem uncertainty relation. Phys. Rev. Lett. 123, 110602 (2019).

Timpanaro, A. M., Guarnieri, G., Goold, J. & Landi, G. T. Thermodynamic uncertainty relations from exchange fluctuation theorems. Phys. Rev. Lett. 123, 090604 (2019).

Dechant, A. & Sasa, S.-i. Fluctuation–response inequality out of equilibrium. Proc. Natl Acad. Sci. 117, 6430–6436 (2020).

Falasco, G., Esposito, M. & Delvenne, J.-C. Unifying thermodynamic uncertainty relations. New J. Phys. 22, 053046 (2020).

Van Vu, T., Tuan Vo, V. & Hasegawa, Y. Entropy production estimation with optimal current. Phys. Rev. E 101, 042138 (2020).

Van Vu, T. & Saito, K. Thermodynamic unification of optimal transport: thermodynamic uncertainty relation, minimum dissipation, and thermodynamic speed limits. Phys. Rev. X 13, 011013 (2023).

Zeraati, S., Jafarpour, F. H. & Hinrichsen, H. Entropy production of nonequilibrium steady states with irreversible transitions. J. Stat. Mech.: Theory Exp. 2012, L12001 (2012).

Martínez, I. A., Bisker, G., Horowitz, J. M. & Parrondo, J. M. Inferring broken detailed balance in the absence of observable currents. Nat. Commun. 10, 1–10 (2019).

Skinner, D. J. & Dunkel, J. Improved bounds on entropy production in living systems. Proc. Natl Acad. Sci. 118, e2024300118 (2021).

Skinner, D. J. & Dunkel, J. Estimating entropy production from waiting time distributions. Phys. Rev. Lett. 127, 198101 (2021).

van der Meer, J., Ertel, B. & Seifert, U. Thermodynamic inference in partially accessible Markov networks: a unifying perspective from transition-based waiting time distributions. Phys. Rev. X 12, 031025 (2022).

Harunari, P. E., Dutta, A., Polettini, M. & Roldán, É. What to learn from few visible transitions’ statistics? Phys. Rev. X 12, 041026 (2022).

Di Terlizzi, I. & Baiesi, M. Kinetic uncertainty relation. J. Phys. A: Math. Theor. 52, 02LT03 (2019).

Maes, C. On the origin and the use of fluctuation relations for the entropy. Séminaire Poincaré 2, 29–62 (2003).

Seifert, U. Stochastic thermodynamics, fluctuation theorems and molecular machines. Rep. Prog. Phys. 75, 126001 (2012).

Peliti, L. & Pigolotti, S. Stochastic Thermodynamics: An Introduction (Princeton University Press, 2021).

Derrida, B. Non-equilibrium steady states: fluctuations and large deviations of the density and of the current. J. Stat. Mech. 2007, P07023 (2007).

Takeuchi, K. A., Kuroda, M., Chaté, H. & Sano, M. Directed percolation criticality in turbulent liquid crystals. Phys. Rev. Lett. 99, 234503 (2007).

Penocchio, E., Rao, R. & Esposito, M. Nonequilibrium thermodynamics of light-induced reactions. J. Chem. Phys. 155, 114101 (2021).

Reuveni, S., Urbakh, M. & Klafter, J. Role of substrate unbinding in Michaelis–Menten enzymatic reactions. Proc. Natl Acad. Sci. 111, 4391–4396 (2014).

Mori, F., Olsen, K. S. & Krishnamurthy, S. Entropy production of resetting processes. Phys. Rev. Res. 5, 023103 (2023).

Busiello, D. M. & Pigolotti, S. Hyperaccurate currents in stochastic thermodynamics. Phys. Rev. E 100, 060102 (2019).

Busiello, D. M. & Fiore, C. Hyperaccurate bounds in discrete-state Markovian systems. J. Phys. A: Math. Theor. 55, 485004 (2022).

Van Vu, T. et al. Unified thermodynamic—kinetic uncertainty relation. J. Phys. A 55, 405004 (2022).

Maes, C. Frenesy: time-symmetric dynamical activity in nonequilibria. Phys. Rep. 850, 1–33 (2020).

Murashita, Y., Funo, K. & Ueda, M. Nonequilibrium equalities in absolutely irreversible processes. Phys. Rev. E 90, 042110 (2014).

Shiraishi, N. Optimal Thermodynamic Uncertainty Relation in Markov Jump Processes. J. Stat. Phys. 185, 19 (2021).

Nishiyama, T. Thermodynamic-kinetic uncertainty relation: properties and an information-theoretic interpretation. Preprint at https://arxiv.org/abs/2207.08496 (2022).

Bertini, L., De Sole, A., Gabrielli, D., Jona-Lasinio, G. & Landim, C. Macroscopic fluctuation theory. Rev. Mod. Phys. 87, 593–636 (2015).

Solon, A. P. & Tailleur, J. Revisiting the flocking transition using active spins. Phys. Rev. Lett. 111, 078101 (2013).

Gaveau, B., Moreau, M. & Toth, J. Variational nonequilibrium thermodynamics of reaction-diffusion systems: I. The information potential. J. Chem. Phys. 111, 7736–7747 (1999).

Falasco, G., Rao, R. & Esposito, M. Information thermodynamics of Turing patterns. Phys. Rev. Lett. 121, 108301 (2018).

Meibohm, J. & Esposito, M. Finite-time dynamical phase transition in nonequilibrium relaxation. Phys. Rev. Lett. 128, 110603 (2022).

Herpich, T., Thingna, J. & Esposito, M. Collective power: minimal model for thermodynamics of nonequilibrium phase transitions. Phys. Rev. X 8, 031056 (2018).

Freitas, N., Delvenne, J.-C. & Esposito, M. Stochastic thermodynamics of nonlinear electronic circuits: a realistic framework for computing around kT. Phys. Rev. X 11, 031064 (2021).

Van Kampen, N. G. Stochastic Processes In Physics And Chemistry Vol. 1 (Elsevier, 1992).

Herpich, T., Cossetto, T., Falasco, G. & Esposito, M. Stochastic thermodynamics of all-to-all interacting many-body systems. New J. Phys. 22, 063005 (2020).

Rao, R. & Esposito, M. Nonequilibrium thermodynamics of chemical reaction networks: wisdom from stochastic thermodynamics. Phys. Rev. X 6, 041064 (2016).

Gao, C. Y. & Limmer, D. T. Principles of low dissipation computing from a stochastic circuit model. Phys. Rev. Res. 3, 033169 (2021).

Freitas, N., Proesmans, K. & Esposito, M. Reliability and entropy production in nonequilibrium electronic memories. Phys. Rev. E 105, 034107 (2022).

Acknowledgements

The authors thank the anonymous reviewers for their insightful comments. Funding from the research grant BAIE_BIRD2021_01 of Università di Padova is gratefully acknowledged. G.F. is funded by the European Union—NextGenerationEU—and by the program STARS@UNIPD with project “ThermoComplex”.

Author information

Contributions

M.B. and G.F. conceptualized the work, wrote the software for data analysis, took care of visualization, and wrote the original draft of the paper. M.B., T.N., and G.F. contributed to deriving and discussing the mathematical results and commented on the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Communications Physics thanks Tan Vu and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Baiesi, M., Nishiyama, T. & Falasco, G. Effective estimation of entropy production with lacking data. Commun Phys 7, 264 (2024). https://doi.org/10.1038/s42005-024-01742-2

DOI: https://doi.org/10.1038/s42005-024-01742-2
