Abstract

Measuring entropy production of a system directly from the experimental data is highly desirable since it gives a quantifiable measure of the time-irreversibility for non-equilibrium systems and can be used as a cost function to optimize the performance of the system. Although numerous methods are available to infer the entropy production of stationary systems, there are only a limited number of methods that have been proposed for time-dependent systems and, to the best of our knowledge, none of these methods have been applied to experimental systems. Herein, we develop a general non-invasive methodology to infer a lower bound on the mean total entropy production for arbitrary time-dependent continuous-state Markov systems in terms of the moments of the underlying state variables. The method gives quite accurate estimates for the entropy production, both for theoretical toy models and for experimental bit erasure, even with a very limited amount of experimental data.

Introduction

In the last two decades, the framework of stochastic thermodynamics has enabled us to give thermodynamic interpretation to mesoscopic systems that are driven arbitrarily far from equilibrium1. Using the assumption of local detailed balance and a time scale separation between the system and its environment, one can define thermodynamic quantities such as heat, work and entropy production for general Markov systems2,3. General results such as fluctuation theorems and thermodynamic uncertainty relations have been proposed and verified experimentally4,5,6,7,8,9,10,11,12,13,14. A fundamental quantity in stochastic thermodynamics is the entropy production1,2. Indeed, minimising entropy production is an important problem in several applications, such as heat engines15, information processing16 and biological systems17,18. Furthermore, it provides information and places bounds on the dynamics of the system in the form of the thermodynamic speed limits19,20,21,22,23,24,25,26,27 and the dissipation-time uncertainty relation28,29,30,31. Meanwhile measuring entropy production experimentally is difficult since one needs extensive knowledge about the details of the system, requiring a large amount of data.

Over the last couple of years, several methods have been developed to infer entropy production and other thermodynamic quantities in a model-free way or with limited information about the underlying system32,33. These techniques involve estimating entropy production using some experimentally accessible quantities. This includes methods based on thermodynamic bounds, such as the thermodynamic uncertainty relation34,35,36, inference schemes based on the measured waiting-time distribution37,38,39,40,41, and methods based on ignoring higher-order interactions42,43. However, most of these methods focus only on steady-state systems and do not work for time-dependent processes44,45,46,47,48,49,50,51,52,53,54,55,56. Nonetheless, time-dependent dynamics plays a crucial role in many systems. One can for example think about nano-electronic processes, such as bit erasure or AC-driven circuits, but also in biological systems, where oscillations in, e.g., metabolic rates or transcription factors, effectively lead to time-dependent driving57,58,59,60. There are few methods to infer entropy production for time-dependent systems11,12,61,62,63,64, and it is often challenging to apply them to experimental data. For instance, thermodynamic bound-based methods require the measurement of quantities such as response functions11,61 or time-inverted dynamics12, which require an invasive treatment of the system. Another interesting method combines machine learning with a variational approach63. This method does however require a large amount of experimental data and a high temporal resolution. This might also be the reason why, to the best of our knowledge, no experimental studies exist on the inference of entropy production under time-dependent driving.

This paper aims to address these issues by deriving a general lower bound on the mean total entropy production for continuous-state time-dependent Markov systems in terms of the time-dependent moments of the underlying state variables. The method gives an analytic lower bound for the entropy production if one only focuses on the first two moments and reduces to a numerical scheme if higher moments are taken into account. The scheme also gives the optimal force field that minimizes the entropy production for the given time-dependent moments. After presenting the theoretical calculations and applications to analytically solvable models, we will test our method in an experimental scenario involving the erasure of computational bits57. The method works quite well for all examples, even when only the first two moments are taken into account and with a very limited amount of data (< 100 trajectories).

Methods

Stochastic thermodynamics

We consider a d-dimensional stochastic system whose state at time t is denoted by a variable x(t) = {x1(t), x2(t), ..., xd(t)}. The system experiences a time-dependent force field \(\mathbf{F}\left(\mathbf{x}(t),t\right)\) and is in contact with a thermal reservoir at constant temperature T. The stochastic variable x(t) evolves according to an overdamped Langevin equation

Here, kB is the Boltzmann constant and ζ(t) represents Gaussian white noise with \(\left\langle \boldsymbol{\zeta}(t)\right\rangle =0\) and \(\left\langle \boldsymbol{\zeta}(t)\boldsymbol{\zeta}(t^{\prime})\right\rangle =2\mathbf{D}\,\delta (t-t^{\prime})\), with D being the diffusion matrix. One can also write the Fokker-Planck equation associated with the probability distribution \(P\left(\mathbf{x},t\right)\) as

with \({{{{{\bf{v}}}}}}\left({{{{{\bf{x}}}}}},t\right)\) being the probability flux given by

This Fokker-Planck equation arises in a broad class of physical systems like colloidal particles65, electronic circuits58 and spin systems66. Stochastic thermodynamics dictates that the mean total entropy produced when the system runs up to time tf is given by2

Although this formula, in principle, allows one to calculate the entropy production of any system described by a Fokker-Planck equation, one would need the force field and probability distribution at each point in space and time. This is generally not feasible in experimental set-ups.
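For orientation, the standard overdamped relations that match the noise statistics and definitions above can be written as follows. This is a sketch in one common convention (mobility \(\mathbf{D}/k_B T\), flux velocity \(\mathbf{v}\)); the prefactors are assumptions and should be checked against Eqs. (1)-(4).

\[
\dot{\mathbf{x}}(t) = \frac{\mathbf{D}}{k_B T}\,\mathbf{F}\left(\mathbf{x}(t),t\right) + \boldsymbol{\zeta}(t), \qquad
\partial_t P(\mathbf{x},t) = -\nabla\cdot\left[\mathbf{v}(\mathbf{x},t)\,P(\mathbf{x},t)\right],
\]

\[
\mathbf{v}(\mathbf{x},t) = \frac{\mathbf{D}}{k_B T}\left[\mathbf{F}(\mathbf{x},t) - k_B T\,\nabla \ln P(\mathbf{x},t)\right], \qquad
S_{\rm tot}(t_f) = k_B \int_0^{t_f} dt \int d\mathbf{x}\; \mathbf{v}^{T}\mathbf{D}^{-1}\mathbf{v}\; P(\mathbf{x},t).
\]

In one dimension the last expression reduces to \((k_B/D)\int_0^{t_f} dt \int dx\, v(x,t)^2 P(x,t)\), which makes explicit why both the force field and the full distribution would be needed to evaluate it directly.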

In this paper, our aim is to develop a technique that gives information about the total entropy production in terms of experimentally accessible quantities. Using methods from the optimal transport theory19,67,68,69, we will derive a non-trivial lower bound on Stot(tf) completely in terms of the moments of x(t). This means, we can infer an estimate for Stot(tf) simply by measuring the first few moments.

Bound for one-dimensional systems

Let us first look at the one-dimensional case (d = 1) and denote the n-th moment of x(t) by Xn(t) = 〈x(t)^n〉. Throughout this paper, we assume that these moments are finite for the entire duration of the protocol. We will first look at the case where one only has access to the first two moments X1(t) and X2(t), as the resulting estimate for the entropy production has a rather simple and elegant expression. Subsequently, we will turn to the more general case.

First two moments

Our central goal is to obtain a lower bound on Stot(tf), constrained on the known time-dependent moments. This can be done by minimizing the following action:

with respect to the driving protocol, F(x, t). We have introduced four Lagrange multipliers: ζ1(tf) and ζ2(tf), which fix the first two moments at the final time tf, and μ1(t) and μ2(t), which fix them at intermediate times. In the action, we have included the contribution of the moments at the final time tf separately, as dictated by Pontryagin's Maximum Principle70.

Minimizing the action with respect to F(x, t) seems very complicated, due to the non-trivial dependence of P(x, t) on F(x, t) in Eq. (2). To circumvent this problem, we use methods from the optimal transport theory and introduce a coordinate transformation y(x, t) as68

and overdot indicating the time derivative. Mathematically, this coordinate transformation is equivalent to changing from Eulerian to Lagrangian description in fluid mechanics. It has also been used to derive other thermodynamic bounds such as the thermodynamic speed limit19. Using this equivalence, one can show that71

for any test function g(x, t) and initial probability distribution P0(x) = P(x, 0). Plugging \(g(x,t)=\delta \left(x-y(x^{\prime} ,t)\right)\), we get

On the other hand, putting g(x, t) = v(x, t) in Eq. (8), we obtain

Similarly, the moments and their time-derivatives are given by

We can also write the force-field F(x, t) in terms of y(x, t) by using Eqs. (3) and (6). Hence we can calculate various quantities such as probability distribution, mean total dissipation, and force field utilizing y(x, t). In fact, it turns out that y(x, t) is uniquely determined for a given force-field. Using the relations in Eqs. (7) and (8), we can reformulate the optimisation of the action \({\mathbb{S}}(x,v,{t}_{f})\) in Eq. (5) with respect to F(x, t) as an optimisation problem in y(x, t). Meanwhile one can rewrite \({\mathbb{S}}(x,v,{t}_{f})\) as

For a small change y(x, t) + δy(x, t), the total change in action is

For the optimal protocol, this small change has to vanish. Vanishing of the first term on the right hand side for arbitrary δy(x, t) gives the Euler-Lagrange equation

Let us now analyse the other two terms. Recall that for all possible paths y(x, t), optimisation is being performed with the fixed initial condition y(x, 0) = x [see Eq. (6)]. Consequently, we have δy(x, 0) = 0 which ensures that the second term on the right hand side of Eq. (14) goes to zero. On the other hand, the value of y(x, tf) at the final time can be different for different paths which gives δy(x, tf) ≠ 0. For the second line to vanish, we must then have the pre-factor associated with δy(x, tf) equal to zero. Thus, we get two boundary conditions in time

Observe that the first equation clearly demonstrates the importance of incorporating the terms at the final time tf in the action described in Eq. (5). If these terms were omitted, we would obtain \(\dot{y}(x,{t}_{f})=0\), which according to Eq. (12) would imply that the time derivatives of the moments at the final time are zero. However, this is not true in general. To get a consistent boundary condition, it is necessary for ζ1(tf) and ζ2(tf) to be non-zero.

Proceeding to solve Eq. (15), one can verify that

where λ1(t) and λ2(t) are functions that are related to the Lagrange multipliers μ1(t) and μ2(t). To see this relation, one can take the time derivative in Eq. (18) and compare it with the Euler-Lagrange equation (15) to obtain

Let us now compute these λ(t)-functions. For this, we use the solution of \(\dot{y}(x,t)\) in \({\dot{X}}_{n}(t)\) in Eq. (12) for n = 1 and n = 2. This yields

One can then use Eq. (10) to show that Stot(tf) satisfies the bound

where A2(t) = X2(t) − X1(t)^2 is the variance of x(t) and \(S_{\rm tot}^{12}(t_f)\) is the bound on the mean total entropy produced with the first and second moments fixed. Eq. (21) is one of the central results of our paper. It gives a lower bound \(S_{\rm tot}^{12}(t_f)\) on the mean total entropy production completely in terms of the first moment and variance. Our bound also requires knowledge of the diffusion coefficient. For this, one can use the short-time mean squared displacement

as used recently in64 or use other inferring methods72,73. In this work, we do not delve into the estimation of diffusion coefficient and assume it to be given.
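A short-time mean-squared-displacement estimate of the diffusion coefficient can be sketched as below. This is a minimal illustration, assuming the estimator \(D \approx \langle [x(t+\Delta t)-x(t)]^2\rangle / (2\Delta t)\) for a small sampling interval Δt; the function name `estimate_diffusion` and the synthetic test data are hypothetical and not taken from the paper. A deterministic force contributes only at order Δt², so the estimate is meaningful only when Δt is short compared to the relaxation time scales.

```python
import numpy as np

def estimate_diffusion(trajs, dt):
    """Short-time MSD estimate D ~ <(dx)^2> / (2 dt).

    trajs: array of shape (R, N), R trajectories sampled every dt.
    (Hypothetical helper; assumes a single scalar diffusion coefficient.)
    """
    dx = np.diff(np.asarray(trajs, dtype=float), axis=1)  # one-step displacements
    return float(np.mean(dx**2) / (2.0 * dt))

# Synthetic check on free Brownian motion with a known diffusion coefficient.
rng = np.random.default_rng(0)
D_true, dt, R, N = 1.0, 1e-3, 200, 1000
steps = np.sqrt(2.0 * D_true * dt) * rng.standard_normal((R, N))
trajs = np.cumsum(steps, axis=1)
D_hat = estimate_diffusion(trajs, dt)
```

With a few hundred trajectories the statistical error of this estimator is well below that of the moment estimates, which is one reason it is reasonable to treat D as given.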

Furthermore, in our analysis, we have focused on minimizing the mean total entropy generated up to time tf with respect to the force field. However, an alternative approach involves minimizing the entropy production rate at each intermediate time, as opposed to the total entropy production, but, in Supplementary note 1, we show that this approach generally leads to sub-optimal protocols and therefore no longer gives a bound on the total entropy production.
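The two-moment bound can be evaluated directly from sampled moment curves. The sketch below assumes that the optimal map is affine, \(\dot{y} = \lambda_1(t) + \lambda_2(t)\,y\), so that \(\lambda_2 = \dot{A}_2/(2A_2)\), \(\lambda_1 = \dot{X}_1 - \lambda_2 X_1\), and the bound takes the form \(S_{\rm tot}^{12}(t_f) = (k_B/D)\int_0^{t_f} dt\,[\dot{X}_1^2 + \dot{A}_2^2/(4A_2)]\). This form is our reading of Eq. (21); the function names and the finite-difference derivatives are illustrative choices, not the authors' implementation.

```python
import numpy as np

def trapezoid(f, t):
    """Composite trapezoidal rule on a (possibly non-uniform) time grid."""
    f, t = np.asarray(f, dtype=float), np.asarray(t, dtype=float)
    return float(np.sum(0.5 * (f[1:] + f[:-1]) * np.diff(t)))

def entropy_bound_two_moments(t, X1, X2, D, kB=1.0):
    """Assumed form of Eq. (21): (kB/D) * int [X1dot^2 + A2dot^2 / (4 A2)] dt."""
    t = np.asarray(t, dtype=float)
    X1 = np.asarray(X1, dtype=float)
    A2 = np.asarray(X2, dtype=float) - X1**2        # variance
    if np.any(A2 <= 0.0):
        raise ValueError("variance must remain positive")
    X1dot = np.gradient(X1, t)                      # finite-difference derivatives
    A2dot = np.gradient(A2, t)
    return kB / D * trapezoid(X1dot**2 + A2dot**2 / (4.0 * A2), t)

# Check on Gaussian free diffusion, where the bound should equal the
# exact entropy change (1/2) ln[sigma_f^2 / sigma_0^2] (in units of kB).
D, sigma0_sq, tf = 1.0, 0.5, 2.0
t = np.linspace(0.0, tf, 2001)
X1 = np.zeros_like(t)
X2 = sigma0_sq + 2.0 * D * t
bound = entropy_bound_two_moments(t, X1, X2, D)
exact = 0.5 * np.log(1.0 + 2.0 * D * tf / sigma0_sq)
```

Finite differences amplify noise in measured moments, so for experimental data a smooth fit of the moments before differentiation is usually preferable.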

Optimal protocol with first two moments

Having obtained a lower bound for the entropy production, it is a natural question to ask for which protocols this bound is saturated. To answer that question, we first turn to the flux v(x, t) that gives rise to this optimal value. For this, we use Eqs. (6) and (18) and obtain

with λ(t)-functions given in Eq. (20). One can verify that this equation is always satisfied for Gaussian processes. This is shown in Supplementary note 2.

Next, we turn to the probability distribution associated with the optimal dissipation. For this, one needs the form of y(x, t) which follows from Eq. (18)

Plugging this in Eq. (9), we obtain the distribution as

which then gives the optimal force-field as

We see that the optimal protocol consists solely of a conservative force field with the associated energy landscape characterized by two components: the first two terms correspond to a time-dependent harmonic oscillator, whereas the last term has the same shape as the equilibrium landscape associated with the initial state. Furthermore, from Eq. (26), we also observe that this optimal protocol preserves the shape of the initial probability distribution. A similar result was also recently observed in the context of the precision-dissipation trade-off relation for driven stochastic systems74.

Fixing first m moments

So far, we have derived a bound on Stot(tf) based only on the knowledge of first two moments. We will now generalise this for arbitrary number of moments. Consider the general situation where the first m moments of the variable x(t) are given. Our goal is to optimise the action

with respect to v(x, t). Once again, μ(t)- functions are the Lagrange multipliers for m moments with contribution at the final time t = tf included separately. As done before in Eq. (6), we introduce the new coordinate y(x, t), and rewrite the action in terms of this map

For a small change y(x, t) + δy(x, t), the total change in action is

which vanishes for the optimal path. Following the same line of reasoning as before, we then obtain the Euler-Lagrange equation

with two boundary conditions

However, we still need to compute functions μi(t) and ζi(t) with i = 1, 2, . . , m in order to completely specify the boundary conditions. To calculate ζ-functions, we use Eq. (12) and obtain

On the other hand, for μ(t)-functions, we use the second derivative of moments and obtain

Both Eqs. (33) and (34) hold for all positive integer values of i ≤ m. Solving this gives all μ(t) and ζ(t) functions in terms of \({{{{{{\mathcal{B}}}}}}}_{i}\), i = 1, . . . , n and Xi, i = 1, . . . , m + n − 2.

Once Eq. (30) is solved, the bound on mean total entropy dissipated can then be written as

Unfortunately, we could not obtain an analytic expression for Eq. (30) due to the presence of higher-order moments and \({{{{{{\mathcal{B}}}}}}}_{i}\), which are not a priori known. Instead, we will focus on numerically integrating Eq. (30). One can use the value of y(x, t) to calculate the higher-order moments and therefore the μ(t)’s in Eq. (34), which in turn can be used to obtain y(x, t + Δt). Finally, one can make sure that the boundary condition, Eq. (31), is satisfied through a shooting method.

Bound in higher dimensions

Having developed a general methodology in one dimension, we now extend these ideas to higher dimensional systems with state variables x(t) = {x1(t), x2(t), ..., xd(t)}. Here again, our aim is to obtain a bound on Stot(tf) with information about moments, including mixed moments.

First two moments

As before, let us first introduce the notation

for first two (mixed) moments of xi(t) variables. Once again, this is a constrained optimisation problem and we introduce the following action functional

where functions μi(t), λij(t), αi(t) and βij(t) all stand for Lagrange multipliers associated with given constraints. Further carrying out this optimisation is challenging since distribution \(P\left({{{{{\bf{x}}}}}},t\right)\) depends non-trivially on the force-field \({{{{{\bf{F}}}}}}\left({{{{{\bf{x}}}}}},t\right)\). We, therefore, introduce a d-dimensional coordinate

and rewrite \({\mathbb{S}}({{{{{\bf{x}}}}}},{{{{{\bf{v}}}}}},{t}_{f})\) completely in terms of y(x, t). We then follow same steps as done before but in higher dimensional setting. Since this is a straightforward generalisation, we relegate this calculation to Supplementary note 3 and present only the final results here. For the optimal protocol, we find

with functions γij(t) related to the Lagrange multipliers μi(t), λij(t), see Eq. (S34) in the Supplementary note. Furthermore, in Supplementary note 3, we show that γij(t) functions are the solutions of a set of linear equations:

where γ(t) and A(t) are two matrices with elements γij(t) and \(A_{ij}(t)=X_{2}^{i,j}(t)-X_{1}^{i}(t)X_{1}^{j}(t)\) respectively. Notice that the elements of A(t) consist only of variances and covariances, which are known. Therefore, the matrix A(t) is fully known at all times.

To solve Eq. (42), we note that A(t) is a symmetric matrix and thus, there exists an orthogonal matrix O(t) such that

where Λ(t) is a diagonal matrix whose elements correspond to the (positive) eigenvalues of A(t). O(t) can be constructed using eigenvectors of A(t) and it satisfies the orthogonality condition O−1(t) = OT(t). Combining the transformation Eq. (43) with Eq. (42), we obtain

This relation enables us to write \({\dot{y}}_{i}\left({{{{{\bf{x}}}}}},t\right)\) in Eq. (41) as

Finally, plugging this in the formula

we obtain the bound in general d dimensions as

where the elements of \({{{{{\bf{{{{{{\mathcal{G}}}}}}}}}}}}(t)\) matrix are fully given in terms of A(t) in Eq. (44) which in turn depends on the variance and covariance of x(t). Eq. (47) represents a general bound on the mean entropy production in higher dimensions. For the two-dimensional case, we obtain an explicit expression (see Supplementary note 3)

The bound is equal to the sum of two one dimensional bounds in Eq. (21) corresponding to two coordinates x1(t) and x2(t) plus a part that arises due to the cross-correlation between them. In absence of this cross-correlation \(\left({A}_{12}(t)=0\right)\), Eq. (48) correctly reduces to the sum of two separate one dimensional bounds.
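A numerical version of the d-dimensional two-moment bound can be sketched as follows. The sketch rests on assumptions that are not spelled out in the text above: isotropic diffusion (a scalar D), a symmetric matrix γ(t) that solves \(\dot{\mathbf{A}} = \boldsymbol{\gamma}\mathbf{A} + \mathbf{A}\boldsymbol{\gamma}\), and a bound of the form \((k_B/D)\int dt\,[\,|\dot{\mathbf{X}}_1|^2 + \mathrm{Tr}(\boldsymbol{\gamma}\mathbf{A}\boldsymbol{\gamma})]\). In the eigenbasis of A(t) the linear equation is solved element-wise, mirroring the diagonalisation step described above. The function name is hypothetical.

```python
import numpy as np

def entropy_bound_two_moments_nd(t, mean, cov, D, kB=1.0):
    """Two-moment bound in d dimensions (assumed form, isotropic D).

    t: (N,) times; mean: (N, d) first moments; cov: (N, d, d) covariance A(t).
    """
    t = np.asarray(t, dtype=float)
    mean = np.asarray(mean, dtype=float)
    cov = np.asarray(cov, dtype=float)
    mean_dot = np.gradient(mean, t, axis=0)
    cov_dot = np.gradient(cov, t, axis=0)
    integrand = np.sum(mean_dot**2, axis=1)
    for k in range(t.size):
        lam, O = np.linalg.eigh(cov[k])              # A = O diag(lam) O^T
        if lam.min() <= 0.0:
            raise ValueError("covariance must be positive definite")
        M = O.T @ cov_dot[k] @ O                     # Adot in the eigenbasis
        G = M / (lam[:, None] + lam[None, :])        # gamma in the eigenbasis
        integrand[k] += np.sum(G**2 * lam[None, :])  # Tr(gamma A gamma)
    return kB / D * float(np.sum(0.5 * (integrand[1:] + integrand[:-1]) * np.diff(t)))

# Uncorrelated case: the result should be the sum of two 1D bounds,
# and it should not change under a fixed rotation of the coordinates.
D = 1.0
t = np.linspace(0.0, 2.0, 2001)
mean = np.zeros((t.size, 2))
cov = np.zeros((t.size, 2, 2))
cov[:, 0, 0] = 0.5 + 2.0 * D * t
cov[:, 1, 1] = 1.0 + 2.0 * D * t
bound_diag = entropy_bound_two_moments_nd(t, mean, cov, D)
c, s = np.cos(0.3), np.sin(0.3)
Rm = np.array([[c, -s], [s, c]])
bound_rot = entropy_bound_two_moments_nd(t, mean, Rm @ cov @ Rm.T, D)
expected = 0.5 * np.log(9.0) + 0.5 * np.log(5.0)
```

The rotated case has a non-zero covariance A12(t) in the new coordinates, yet gives the same value, which illustrates how the cross-correlation term compensates for the change in the two one-dimensional contributions.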

Optimal protocols with first two moments

Let us now look at the flux and the distribution associated with this optimal dissipation. For this, we first rewrite Eq. (41) in vectorial form as

and solve it to obtain the solution \({{{{{\bf{y}}}}}}\left({{{{{\bf{x}}}}}},t\right)\) as

Here Ω(t) stands for the time-ordered exponential and is given by

with γ(t) defined in Eq. (44) and \({{{{{\mathcal{T}}}}}}\) corresponding to the time-ordering operator. Finally, substituting the solution \({{{{{\bf{y}}}}}}\left({{{{{\bf{x}}}}}},t\right)\) from Eq. (50) in Eq. (40) gives the optimal flux, whereas using Eq. (S26) in the Supplementary note, one obtains the optimal distribution

Now the optimal force-field follows straightforwardly from Eq. (3) as

To sum up, we have derived a lower bound to the total dissipation Stot(tf) in general d dimensions just from the information about first two moments. Together with this, we also calculated the force-field that gives rise to this optimal value. In what follows, we consider the most general case where we know the first-m (mixed) moments of the state variable x(t) = {x1(t), x2(t), . . . , xd(t)}.

Fixing first m moments in general d dimensions

Let us define a general mixed moment as

where \(i_{j}\in \mathbb{Z}^{+}\) and i1 ≥ i2 ≥ i3 ≥ ... ≥ im with 1 ≤ il ≤ d for all l. For m = 1 and m = 2, Eq. (55) reduces to the first two moments in Eq. (37). Here, we are interested in the general case for which we consider the following action

In line with the previous sections, we again recast this action in terms of \(\mathbf{y}\left(\mathbf{x},t\right)\) defined in Eq. (40) as

Optimising this action in the same way as before, we obtain the Euler-Lagrange equation

along with the boundary conditions

The Lagrange multipliers \({\mu }_{{i}_{1},{i}_{2},...,{i}_{l}}(t)\) and \({\zeta }_{{i}_{1},{i}_{2},...,{i}_{l}}(t)\) can be computed using time-derivatives of the (mixed) moments \({X}_{l}^{{i}_{1},{i}_{2},..,{i}_{l}}(t)\) as

Once again, for m > 2, we find dependence of the right hand side of Eqs. (61) and (62) on (mixed) moments with order higher than m. As discussed before, one can tackle this by utilizing the solution \({y}_{i}\left({{{{{\bf{x}}}}}},t\right)\) recursively to obtain these higher moments [as discussed after Eq. (35)].

Solving Eq. (58) numerically yields the optimal map \(\mathbf{y}_{*}\left(\mathbf{x},t\right)\). Inserting this map in Eq. (46), we obtain a lower bound on Stot(tf). The associated optimal flux and optimal distribution can also be calculated as before.

Results

Theoretical examples

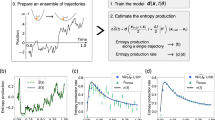

We now test our framework on two analytically solvable toy models. The first one consists of a one dimensional free diffusion model with initial position drawn from the distribution \({P}_{0}({x}_{0}) \sim \exp (-{x}_{0}^{4})\). This example will demonstrate how our method becomes more accurate when we incorporate knowledge of higher moments. The second example involves two-dimensional diffusion in a moving trap, serving as an illustration of our method’s applicability in higher dimensions.

Free diffusion

We first consider a freely diffusing particle in one dimension

This example serves two purposes: First, we can obtain the exact expression of Stot(tf) which enables us to rigorously compare the bounds derived above with their exact counterpart. Second, we discuss the intricacies that arise when we fix more than first two moments. To begin with, the position distribution P(x, tf) is given by

Since no external force acts on the system, the total entropy produced will be equal to the total entropy change of the system. Therefore, the mean Stot(tf) reads

We emphasize that Eqs. (65) and (66) are exact results and do not involve any approximation.

Let us now analyse how our bound compares with this exact value. The first four moments of the position are given by

Combining this with Eq. (36), shows that the dissipation bound for the first two moments reads

As illustrated in Fig. 1a, this bound \({S}_{{{{{{\rm{tot}}}}}}}^{12}({t}_{f})\), although not exact, is still quite close to the exact value in Eq. (66). This is somewhat surprising as one can show that the force field that saturates the bound is given by

which is significantly different from the force field associated with free relaxation (F(x, t) = 0). The error is shown in Fig. 1b (discussed later).
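As a worked check, and assuming the two-moment bound has the form \(S_{\rm tot}^{12}(t_f) = (k_B/D)\int_0^{t_f} dt\,[\dot{X}_1^2 + \dot{A}_2^2/(4A_2)]\), the free-diffusion case can be evaluated in closed form. By symmetry X1(t) = 0, and the variance grows linearly as A2(t) = A2(0) + 2Dt, where \(A_2(0) = \Gamma(3/4)/\Gamma(1/4) \approx 0.338\) for \(P_0(x_0) \sim \exp(-x_0^4)\). This gives

\[
S_{\rm tot}^{12}(t_f) = \frac{k_B}{D}\int_0^{t_f} dt\,\frac{(2D)^2}{4\left[A_2(0)+2Dt\right]} = \frac{k_B}{2}\,\ln\left[1+\frac{2Dt_f}{A_2(0)}\right].
\]

The logarithmic growth at large tf matches the growth of the entropy of a spreading Gaussian, in line with the bound becoming tight once the distribution is close to Gaussian.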

a Estimation of the mean total entropy produced Stot(tf) for model (64) by using the first two moments (in orange) and first four moments (in blue). The grey curve is the exact result whose expression is given in Eq. (66). b The relative error in the estimated values compared to the exact value as a function of tf [see Eq. (74)]. Orange symbols represent the error for the first two moments while blue ones correspond to the first four moments. c Probability distribution of the total entropy produced stot(tf) for the optimal protocol obtained by fixing the first two moments (in orange) and first four moments (in blue) up to time tf = 1. Here also, the grey symbols correspond to the simulation of Eq. (64). For all panels, we have used D = 1 and kB = 1.

Going beyond mean, we have also plotted the full probability distribution of total dissipation in Fig. 1c. To do this, we evolve the particle with the optimal force-field in Eq. (71) starting from the position x0 drawn from the distribution P0(x0) in Eq. (64). For different trajectories thus obtained, we measure the total entropy produced and construct the probability distribution out of them. Figure 1c shows the result. Here, we see deviation from the exact result indicating that fixing first two moments only does not give a very good approximation of dissipation beyond its average value. To improve this, we instead fix the first four moments of position and carry out this analysis.

One can wonder whether it is possible to get an even better estimate by taking into account the first four moments. To check this, we will now proceed by solving the Euler-Lagrange equation (30) numerically for m = 4. Since this is a second-order differential equation, we need two boundary conditions in time. One of them is Eq. (32), which gives y(x, 0) = x. However, the other one in Eq. (31) gives a condition at the final time tf, which is difficult to implement numerically. For this, we use the following trick. We expand the initial \(\dot{y}(x,0)\) as

where we typically choose s = 9. The coefficients θi are free to vary but must give the correct time derivative of first four moments in Eqs. (67)-(69). We then assign a cost function as

Observe that the cost function vanishes if the boundary condition (31) is satisfied. Starting from y(x, 0) = x and \(\dot{y}(x,0)\) in Eq. (72), we solve the Euler-Lagrange equation (30) numerically and measure the cost function at the final time. We then perform a multi-dimensional gradient descent in θ-parameters to minimise \({{{{{\mathcal{C}}}}}}({t}_{f})\). Eventually, we obtain the numerical form of the optimal map y*(x, t).

Using this map, we then obtain a refined bound on the dissipation, \({S}_{{{{{{\rm{tot}}}}}}}^{1234}({t}_{f})\). This is illustrated in Fig. 1(a). As seen, this bound is better than \({S}_{{{{{{\rm{tot}}}}}}}^{12}({t}_{f})\) and in fact, matches very well with the exact one. To exemplify the tightness of these bounds, we have plotted, in Fig. 1(b), the relative error

as a function of tf. This clearly shows that the estimate with four moments is tighter than the one with two moments. We also observe that the bound becomes tighter as we increase the final time tf. With larger tf, the final distribution P(x, tf) in Eq. (65) approaches a Gaussian form. For instance, X2(t) ≃ 2Dt and X4(t) ≃ 12D^2t^2 at large times, so that X4(t) ≃ 3X2(t)^2 as expected for a Gaussian. Therefore, for large tf, our method becomes exact. Furthermore, we can also obtain the associated optimal protocols by numerically inverting Eqs. (6) and (9). With these protocols, we then obtain the distribution of total entropy produced, which is shown in Fig. 1(c). Compared to the two-moment case, the estimated distribution is now closer to the exact one.

In this example, we were able to calculate the moments analytically and obtain the exact lower bound either analytically (for two moments) or numerically (otherwise). However, in many cases, it is not possible to calculate the moments and one needs to rely on the available trajectories to obtain them. An example of this is presented below. Now if the number of trajectories (denoted by R) is large, then one still has an exact numerical estimate of the moments and hence the exact lower bound. However, with small R, the moments deviate from their actual values and this affects the accuracy of our estimates. Moreover, the effect of noise will be greater on higher moments compared to the lower moments. This means that estimate with higher moments is more likely to be inaccurate than with lower moments for small trajectory number. On the other hand, when R is significantly large, the accuracy of estimate with higher moments is always high. In Supplementary note 4, we have demonstrated the effect of noise arising due to the finite number of trajectories on the lower bound. Similarly, while acquiring the trajectory data experimentally, there are intrinsic errors that occur due to the data measurement. Due to this, the observed position is different from the actual position and one incurs some error during the measurement process75. In Supplementary note 5, we have discussed the impact of such measurement errors on our lower bound.
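The different sensitivity of low and high moments to a finite number of trajectories can be illustrated with a small sketch. It estimates the moments at a single time from R samples together with their standard errors; the helper name `empirical_moments` and the Gaussian test data are hypothetical and only serve to show the trend, not to reproduce the analysis of Supplementary note 4.

```python
import numpy as np

def empirical_moments(samples, orders=(1, 2, 3, 4)):
    """Return {n: (estimate, standard_error)} for <x^n> from R samples at one time."""
    x = np.asarray(samples, dtype=float)
    R = x.size
    out = {}
    for n in orders:
        p = x**n
        out[n] = (float(p.mean()), float(p.std(ddof=1) / np.sqrt(R)))
    return out

# Synthetic positions at a fixed time (standard normal, purely illustrative).
rng = np.random.default_rng(1)
x = rng.standard_normal(5000)
m = empirical_moments(x)
rel_err_2 = m[2][1] / m[2][0]   # relative standard error of the second moment
rel_err_4 = m[4][1] / m[4][0]   # relative standard error of the fourth moment
```

For Gaussian data the relative standard error of the fourth moment is roughly twice that of the second moment at equal R, which is consistent with the statement that higher-moment estimates degrade first when trajectories are scarce.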

Two dimensional Brownian motion

The previous example illustrated our method in a one-dimensional setting. In this section, we look at a two-dimensional system with position \({{{{{\bf{x}}}}}}(t)=\left({x}_{1}(t),{x}_{2}(t)\right)\). The system undergoes motion in presence of a moving two-dimensional harmonic oscillator \(U({x}_{1},{x}_{2},t)=K\left({x}_{1}-vt\right)\left({x}_{2}-vt\right)\) and its dynamics is governed by

We also assume that the initial position is drawn from Gaussian distribution as

For this example, our aim is to compare the bound derived in Eq. (48) with the exact expression. Using the Langevin equation, we obtain the first two cumulants and covariance to be

Plugging them in Eq. (48) gives

In fact, one can carry out exact calculations for this model. To see this, let us introduce the change of variable

and rewrite the Langevin equations as

where \({\eta }_{{R}_{1}}(t)\) and \({\eta }_{{R}_{2}}(t)\) are two independent Gaussian white noises with zero mean and delta correlation. Clearly these two equations are independent of each other which means that we can treat R1(t) and R2(t) as two independent one dimensional Brownian motions. Also, observe that both of them are Gaussian processes. Following our analysis in Supplementary note 2, we can now express average dissipation completely in terms of first two cumulants. The first two cumulants for our model are

Finally inserting them in Eq. (21), we obtain

which matches with the estimated entropy production in Eq. (81) exactly. In other words, our method becomes exact in this case.

Bit erasure

Motivated by the very good agreement between the bound and the actual entropy production in simple theoretical models, we will now turn to experimental data of a more complicated system, namely bit erasure76. The state of a bit can often be described by a one-dimensional Fokker-Planck equation, with a double-well potential energy landscape. This landscape can for example be produced by an optical tweezer77, a feedback trap57, or a magnetic field78,79. Initially, the colloidal particle can either be in the left well (state 0) or in the right well (state 1) of the double-well potential. By modulating this potential, one can ensure that the particle always ends up in the right well (state 1). During this process, the expected amount of work put into the system is always bounded from below by Landauer's limit, \(\langle W\rangle \ge k_{B}T\ln 2\)57. Over the last few years, Landauer's principle has also been tested in a more complex situation where the symmetry between the two states is broken80,81. To add an extra layer of complexity, we consider here the scenario where this symmetry is broken by deploying an asymmetric double-well potential and we will use the data from80 to test our bound. The system satisfies the Fokker-Planck equation

where U(x, t) is the time-dependent asymmetric double-well potential given by

Notice that initially, the potential U(x, t) has its minima located at −xm and +ηxm, where η ≥ 1 is the asymmetry factor. For η = 1, the potential is completely symmetric initially. On the other hand, for η ≠ 1, the two wells are asymmetric and the system is out of equilibrium initially. Furthermore, the functions m(t), g(t) and f(t) represent the experimental protocols to modulate U(x, t) during the erasure operation. They are defined in Fig. 2a as well as in Supplementary note 6. The experimental protocol first symmetrizes U(x, t) by changing the function m(t), while the other two functions g(t) and f(t) are kept fixed. Then, g(t) and f(t) are changed, during which the barrier is lowered, the potential is tilted and again brought back to its symmetric form at time t = 3tf/4. By this time, the particle is always in the right well. Finally, the right well is expanded to its original size with minimum located at +ηxm at the end of the cycle time tf. The mathematical forms of these protocol functions are also given in supplementary table (I). The time-modulation of the potential is shown in Fig. 2b and the experimental trajectories thus obtained are shown in Fig. 2c.

a Plot of the control functions g(t), f(t) and m(t), which are modulated experimentally to carry out the erasure operation. b Time modulation of the potential U(x, t) in Eq. (91) at different times. c Experimental trajectories obtained by applying the protocol. The grey trajectory starts in the left well and ends up in the right well at the end of the cycle. The red trajectory starts in the right well and also ends up in the right well.

Here, we are interested in estimating the mean entropy produced during the erasure operation by using the bound in Eq. (21). For this, we take 20–50 trajectories for each cycle time tf and measure the first two moments. The precise values of the cycle times and the number of trajectories considered are given in supplementary table (II). We fit the measured moments using the basis of Chebyshev polynomials as

where we typically choose s = 20. For our analysis, we have rescaled position and time as x → x/xm and t → t/tm, with \({t}_{m}={x}_{m}^{2}/D\) being the diffusive time scale. The resulting experimental plots are shown in Fig. 3. In the experiment, Eb/kBT = 13, A = 0.2, η = 3 and tm = 2.52 ms. With the forms of X1(t) and X2(t) in Eqs. (93) and (94), we use the formula in Eq. (21) to estimate the mean entropy produced as a function of the cycle time.
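The fitting and integration steps described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the code used for the paper: the function names are hypothetical, units are chosen such that kB = 1, and the integrand \(({\dot{X}}_{1}^{2}+{\dot{A}}_{2}^{2}/4{A}_{2})/D\) with A2 = X2 − X1² is the Gaussian optimal-transport form, which we assume here as a stand-in for Eq. (21).

```python
import numpy as np
from numpy.polynomial import Chebyshev


def ensemble_moments(trajs):
    """First two moments X1(t), X2(t) from an array of shape (n_traj, n_times)."""
    trajs = np.asarray(trajs, dtype=float)
    return trajs.mean(axis=0), (trajs**2).mean(axis=0)


def two_moment_bound(t, x1, x2, D=1.0, deg=20):
    """Integrate (1/D) * [X1'^2 + A2'^2 / (4 A2)] over t, with A2 = X2 - X1^2.

    ASSUMPTION: this integrand is the Gaussian optimal-transport form,
    taken here as a stand-in for Eq. (21); units with k_B = 1.
    """
    t = np.asarray(t, dtype=float)
    fit1 = Chebyshev.fit(t, x1, deg)      # smooth X1(t) with a Chebyshev series
    fit2 = Chebyshev.fit(t, x2, deg)      # smooth X2(t) with a Chebyshev series
    X1, dX1 = fit1(t), fit1.deriv()(t)    # fitted moment and its time derivative
    X2, dX2 = fit2(t), fit2.deriv()(t)
    A2 = X2 - X1**2                       # variance
    dA2 = dX2 - 2.0 * X1 * dX1            # time derivative of the variance
    rate = (dX1**2 + dA2**2 / (4.0 * A2)) / D
    # Trapezoidal rule written out explicitly to avoid NumPy-version differences.
    return float(np.sum(0.5 * (rate[1:] + rate[:-1]) * np.diff(t)))


# Sanity check with the exact moments of free diffusion (D = 1, initial variance 1):
# X1 = 0 and X2 = 1 + 2t, for which the integral equals 0.5 * ln(1 + 2 t_f).
t = np.linspace(0.0, 1.0, 401)
estimate = two_moment_bound(t, np.zeros_like(t), 1.0 + 2.0 * t)
print(estimate, 0.5 * np.log(3.0))
```

In practice, the moments returned by `ensemble_moments` would be passed to `two_moment_bound` after rescaling position and time as described above. Fitting X1 and X2 separately and forming A2 from the fits mirrors the procedure in the text; with noisy data and few trajectories, a lower polynomial degree may be preferable.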

Figure 4 illustrates the primary outcome of this section. The estimated mean entropy production Stot is plotted for different dimensionless cycle times tf. The experimental results agree very closely with the simulation results, despite the very small amount of data and the non-trivial dynamics of the system. This shows that our method is well suited to studying the entropy production of general time-dependent experimental systems.

Estimated mean total entropy produced Stot as a function of the rescaled cycle time tf/tm. The estimates are compared with the exact values of Stot obtained by simulating the Langevin equation with the potential U(x, t) given in Eq. (91). The error bars are calculated from the least-squares fits to the moments X1(t) and X2(t). The error calculation is given in Eq. (95).
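The simulated reference values in Fig. 4 come from integrating the Langevin equation. A minimal Euler-Maruyama sketch of such a simulation is given below. It is an illustration only: the function name `simulate_overdamped` and the user-supplied callable `force(x, t)` are hypothetical, energies are assumed to be measured in units of kBT so that the mobility equals D, and a harmonic trap is used in the demonstration in place of the experimental potential of Eq. (91).

```python
import numpy as np


def simulate_overdamped(force, x0, D, dt, n_steps, rng):
    """Euler-Maruyama integration of dx = D * force(x, t) dt + sqrt(2 D dt) * xi.

    ASSUMPTION: energies are in units of k_B T, so the mobility equals D.
    `force(x, t)` is a hypothetical user-supplied callable returning -dU/dx.
    Returns an array of shape (n_traj, n_steps + 1).
    """
    x = np.atleast_1d(np.array(x0, dtype=float))
    out = np.empty((x.size, n_steps + 1))
    out[:, 0] = x
    for k in range(n_steps):
        noise = rng.standard_normal(x.size)   # independent Gaussian kicks
        x = x + D * force(x, k * dt) * dt + np.sqrt(2.0 * D * dt) * noise
        out[:, k + 1] = x
    return out


# Demonstration with a harmonic trap U = x^2 / 2 (not the experimental potential):
# the variance should relax towards D / k = 1.
rng = np.random.default_rng(0)
traj = simulate_overdamped(lambda x, t: -x, np.zeros(4000),
                           D=1.0, dt=5e-3, n_steps=1000, rng=rng)
print(traj[:, -1].mean(), traj[:, -1].var())
```

To mimic the erasure experiment, one would replace the lambda with the force derived from the time-dependent double-well potential and draw the initial positions from the appropriate initial distribution.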

Recall that in estimating the entropy production, we fitted the measured moments using the basis of Chebyshev polynomials in Eqs. (93) and (94). Owing to the small number of trajectories, the measured moments are never smooth. Therefore, the fitting procedure invariably incurs some error ΔA2(t) = A2(t)∣fit − A2(t)∣data, and similarly for the other quantities. This, in turn, gives rise to an error in the inferred value of \({S}_{{{{{{\rm{tot}}}}}}}^{12}({t}_{f})\). We calculate this error as

Using the values of \({{\Delta }}{\dot{A}}_{2}(t)\), ΔA2(t) and \({{\Delta }}{\dot{X}}_{1}(t)\), we calculate \({{\Delta }}{S}_{{{{{{\rm{tot}}}}}}}^{12}({t}_{f})\) via Eq. (95) and take \(\pm {{\Delta }}{S}_{{{{{{\rm{tot}}}}}}}^{12}({t}_{f})\) as the error in the inferred entropy production. This error is displayed in Fig. 4.
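To illustrate how the fitting errors enter, one can linearise the bound in the three deviations. The expression below is a sketch under an assumption: it takes the two-moment bound to have the form \({S}_{{\rm{tot}}}^{12}({t}_{f})=\frac{1}{D}\int_{0}^{{t}_{f}}dt\,[{\dot{X}}_{1}^{2}+{\dot{A}}_{2}^{2}/4{A}_{2}]\) and keeps only first-order terms, so it may differ in detail from Eq. (95).

```latex
\Delta S_{\rm tot}^{12}(t_f) \simeq \frac{1}{D}\int_{0}^{t_f} dt\,
\left[\, 2\,\dot{X}_1(t)\,\Delta\dot{X}_1(t)
      + \frac{\dot{A}_2(t)}{2A_2(t)}\,\Delta\dot{A}_2(t)
      - \frac{\dot{A}_2(t)^{2}}{4A_2(t)^{2}}\,\Delta A_2(t) \right]
```

Each term is the partial derivative of the assumed integrand with respect to \({\dot{X}}_{1}\), \({\dot{A}}_{2}\) and A2, multiplied by the corresponding fitting error.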

Conclusion

In this paper, we have constructed a general framework to derive a lower bound on the mean value of the total entropy production for arbitrary time-dependent systems described by an overdamped Langevin equation. The bound thus obtained is given in terms of experimentally accessible quantities, namely the (mixed) moments of the observable. If one only includes the first two moments, one gets a simple analytical expression for the lower bound, whereas including higher moments leads to a numerical scheme. We tested our results both on two analytically solvable toy models and on experimental data for bit erasure, taken from ref. 80. The lower bound is close to the real entropy production, even if one only takes into account the first two moments. This makes the method well suited to inferring the entropy production and opens up the possibility of inferring entropy production in more complicated processes in future research. One can, for example, think about biological systems, where upstream processes such as glycolytic oscillations59 or oscillatory dynamics in transcription factors60 can effectively lead to time-dependent thermodynamic descriptions. Throughout our paper, we have assumed that the diffusion coefficient is constant, but the results can be extended to spatially dependent diffusion coefficients, as discussed in Supplementary note 7.

Compared to some previous works, our work offers several advantages in inferring entropy production. First and foremost, our method manages to infer entropy production from a very limited amount of data. For all the examples discussed in the paper, we obtain a good estimate with fewer than 1000 trajectories. For the bit erasure experiment, we actually used fewer than 100 trajectories [see Supplementary note 6]. For the theoretical example of free diffusion, as demonstrated in Supplementary note 4, the error is approximately 10% with ~700 trajectories. Other proposed methods in the literature typically need at least of the order of \(10^{4}\) trajectories63 or require data from different measurement set-ups11,12,61. This has allowed us, for the first time, to apply an inference method directly to a time-dependent experimental system and to show an excellent agreement between the inferred value and the simulation result. Furthermore, one can easily use smoothing methods to calculate the derivatives of the moments. We therefore anticipate our method to perform well when time-dependent protocols do not change rapidly and the temporal variation in the moments is not drastic.

Having said this, our method also raises some open questions that require further investigation. For example, while we have developed our method for continuous overdamped systems, extending these ideas to underdamped or discrete-state systems is an interesting future direction. In particular, ref. 82 recently studied the connection between the entropy production in systems governed by the Fokker-Planck equation and that in systems governed by discrete master equations. Exploring the applicability of our method in such a context would be interesting. Moreover, our method gives a tight bound only for time-dependent systems with conservative forces. For non-conservative forces, our method works in principle, but we anticipate that it gives a loose bound. To achieve a tighter bound, it might be possible to decompose the entropy production into excess and housekeeping parts, arising from time-dependent driving and non-conservative forcing, respectively83,84,85,86. Our method might give an estimate of the excess part, while there are proposed methods for the housekeeping part in the steady state34,35,36,37,38,39,40,41,44,45,46,47,48,49. Combining our method with these methods to infer the entropy production even in the presence of non-conservative force fields remains a promising future direction. In some cases, the housekeeping entropy can also be related to an effective system with time-dependent periodic driving87, a scenario where our method is applicable. Hence, for these cases, it might be possible to use our method even in the non-equilibrium steady state. Finally, it would be interesting to compare our method with the methodology from refs. 42,43 for inferring entropy production in high-dimensional systems, where it would be impossible to sample the entire phase space. We end by stressing that, although we view thermodynamic inference as the main application of our results, the thermodynamic bounds in Eqs. (21) and (47) are also interesting in their own right and might lead to a broad range of applications, similar to the thermodynamic speed limit and the thermodynamic uncertainty relations6,22.

Data availability

Data available on request from the authors.

Code availability

Computer codes used in this paper are available on request from the authors.

References

Seifert, U. Stochastic thermodynamics, fluctuation theorems and molecular machines. Rep. Prog. Phys. 75, 126001 (2012).

Peliti, L. & Pigolotti, S. Stochastic Thermodynamics: An Introduction (Princeton University Press, 2021).

Sekimoto, K. Langevin Equation and Thermodynamics. Prog. Theor. Phys. Suppl. 130, 17–27 (1998).

Seifert, U. Entropy production along a stochastic trajectory and an integral fluctuation theorem. Phys. Rev. Lett. 95, 040602 (2005).

Hayashi, K., Ueno, H., Iino, R. & Noji, H. Fluctuation theorem applied to F1-ATPase. Phys. Rev. Lett. 104, 218103 (2010).

Barato, A. C. & Seifert, U. Thermodynamic uncertainty relation for biomolecular processes. Phys. Rev. Lett. 114, 158101 (2015).

Gingrich, T. R., Horowitz, J. M., Perunov, N. & England, J. L. Dissipation bounds all steady-state current fluctuations. Phys. Rev. Lett. 116, 120601 (2016).

Proesmans, K. & Van den Broeck, C. Discrete-time thermodynamic uncertainty relation. Europhys. Lett. 119, 20001 (2017).

Hasegawa, Y. & Van Vu, T. Fluctuation theorem uncertainty relation. Phys. Rev. Lett. 123, 110602 (2019).

Timpanaro, A. M., Guarnieri, G., Goold, J. & Landi, G. T. Thermodynamic uncertainty relations from exchange fluctuation theorems. Phys. Rev. Lett. 123, 090604 (2019).

Koyuk, T. & Seifert, U. Operationally accessible bounds on fluctuations and entropy production in periodically driven systems. Phys. Rev. Lett. 122, 230601 (2019).

Proesmans, K. & Horowitz, J. M. Hysteretic thermodynamic uncertainty relation for systems with broken time-reversal symmetry. J. Stat. Mech.: Theory Exp. 2019, 054005 (2019).

Harunari, P. E., Fiore, C. E. & Proesmans, K. Exact statistics and thermodynamic uncertainty relations for a periodically driven electron pump. J. Phys. A: Math. Theor. 53, 374001 (2020).

Pal, S., Saryal, S., Segal, D., Mahesh, T. S. & Agarwalla, B. K. Experimental study of the thermodynamic uncertainty relation. Phys. Rev. Res. 2, 022044 (2020).

Pietzonka, P. & Seifert, U. Universal trade-off between power, efficiency, and constancy in steady-state heat engines. Phys. Rev. Lett. 120, 190602 (2018).

Proesmans, K., Ehrich, J. & Bechhoefer, J. Finite-time Landauer principle. Phys. Rev. Lett. 125, 100602 (2020).

Ilker, E. et al. Shortcuts in stochastic systems and control of biophysical processes. Phys. Rev. X 12, 021048 (2022).

Murugan, A., Huse, D. A. & Leibler, S. Speed, dissipation, and error in kinetic proofreading. Proc. Natl Acad. Sci. 109, 12034–12039 (2012).

Aurell, E., Mejía-Monasterio, C. & Muratore-Ginanneschi, P. Optimal protocols and optimal transport in stochastic thermodynamics. Phys. Rev. Lett. 106, 250601 (2011).

Aurell, E., Gawȩdzki, K., Mejía-Monasterio, C., Mohayaee, R. & Muratore-Ginanneschi, P. Refined second law of thermodynamics for fast random processes. J. Stat. Phys. 147, 487–505 (2012).

Sivak, D. A. & Crooks, G. E. Thermodynamic metrics and optimal paths. Phys. Rev. Lett. 108, 190602 (2012).

Shiraishi, N., Funo, K. & Saito, K. Speed limit for classical stochastic processes. Phys. Rev. Lett. 121, 070601 (2018).

Proesmans, K., Ehrich, J. & Bechhoefer, J. Optimal finite-time bit erasure under full control. Phys. Rev. E 102, 032105 (2020).

Ito, S. & Dechant, A. Stochastic time evolution, information geometry, and the Cramér-Rao bound. Phys. Rev. X 10, 021056 (2020).

Zhen, Y.-Z., Egloff, D., Modi, K. & Dahlsten, O. Universal bound on energy cost of bit reset in finite time. Phys. Rev. Lett. 127, 190602 (2021).

Van Vu, T. & Saito, K. Finite-time quantum Landauer principle and quantum coherence. Phys. Rev. Lett. 128, 010602 (2022).

Dechant, A. Minimum entropy production, detailed balance and Wasserstein distance for continuous-time Markov processes. J. Phys. A: Math. Theor. 55, 094001 (2022).

Falasco, G. & Esposito, M. Dissipation-time uncertainty relation. Phys. Rev. Lett. 125, 120604 (2020).

Neri, I. Universal tradeoff relation between speed, uncertainty, and dissipation in nonequilibrium stationary states. SciPost Phys. 12, 139 (2022).

Kuznets-Speck, B. & Limmer, D. T. Dissipation bounds the amplification of transition rates far from equilibrium. Proc. Natl Acad. Sci. 118, e2020863118 (2021).

Yan, L.-L. et al. Experimental verification of dissipation-time uncertainty relation. Phys. Rev. Lett. 128, 050603 (2022).

Seifert, U. From stochastic thermodynamics to thermodynamic inference. Annu. Rev. Condens. Matter Phys. 10, 171–192 (2019).

Roldán, E. Thermodynamic probes of life. Science 383, 952–953 (2024).

Gingrich, T. R., Rotskoff, G. M. & Horowitz, J. M. Inferring dissipation from current fluctuations. J. Phys. A: Math. Theor. 50, 184004 (2017).

Li, J., Horowitz, J. M., Gingrich, T. R. & Fakhri, N. Quantifying dissipation using fluctuating currents. Nat. Commun. 10, 1666 (2019).

Manikandan, S. K., Gupta, D. & Krishnamurthy, S. Inferring entropy production from short experiments. Phys. Rev. Lett. 124, 120603 (2020).

Martínez, I. A., Bisker, G., Horowitz, J. M. & Parrondo, J. M. R. Inferring broken detailed balance in the absence of observable currents. Nat. Commun. 10, 3542 (2019).

Skinner, D. J. & Dunkel, J. Estimating entropy production from waiting time distributions. Phys. Rev. Lett. 127, 198101 (2021).

Harunari, P. E., Dutta, A., Polettini, M. & Roldán, E. What to learn from a few visible transitions’ statistics? Phys. Rev. X 12, 041026 (2022).

van der Meer, J., Ertel, B. & Seifert, U. Thermodynamic inference in partially accessible Markov networks: A unifying perspective from transition-based waiting time distributions. Phys. Rev. X 12, 031025 (2022).

Pietzonka, P. & Coghi, F. Thermodynamic cost for precision of general counting observables. Phys. Rev. E 109, 064128 (2024).

Lynn, C. W., Holmes, C. M., Bialek, W. & Schwab, D. J. Decomposing the local arrow of time in interacting systems. Phys. Rev. Lett. 129, 118101 (2022).

Lynn, C. W., Holmes, C. M., Bialek, W. & Schwab, D. J. Emergence of local irreversibility in complex interacting systems. Phys. Rev. E 106, 034102 (2022).

Roldán, E. & Parrondo, J. M. R. Estimating dissipation from single stationary trajectories. Phys. Rev. Lett. 105, 150607 (2010).

Lander, B., Mehl, J., Blickle, V., Bechinger, C. & Seifert, U. Noninvasive measurement of dissipation in colloidal systems. Phys. Rev. E 86, 030401 (2012).

Otsubo, S., Ito, S., Dechant, A. & Sagawa, T. Estimating entropy production by machine learning of short-time fluctuating currents. Phys. Rev. E 101, 062106 (2020).

Van Vu, T., Vo, V. T. & Hasegawa, Y. Entropy production estimation with optimal current. Phys. Rev. E 101, 042138 (2020).

Kim, D.-K., Bae, Y., Lee, S. & Jeong, H. Learning entropy production via neural networks. Phys. Rev. Lett. 125, 140604 (2020).

Di Terlizzi, I. et al. Variance sum rule for entropy production. Science 383, 971–976 (2024).

Dechant, A. Thermodynamic constraints on the power spectral density in and out of equilibrium. arXiv preprint 2306.00417 (2023).

Busiello, D. M. & Pigolotti, S. Hyperaccurate currents in stochastic thermodynamics. Phys. Rev. E 100, 060102 (2019).

Ghosal, A. & Bisker, G. Entropy production rates for different notions of partial information. J. Phys. D: Appl. Phys. 56, 254001 (2023).

Nitzan, E., Ghosal, A. & Bisker, G. Universal bounds on entropy production inferred from observed statistics. Phys. Rev. Res. 5, 043251 (2023).

Skinner, D. J. & Dunkel, J. Improved bounds on entropy production in living systems. Proc. Natl Acad. Sci. 118, e2024300118 (2021).

Baiesi, M., Falasco, G. & Nishiyama, T. Effective estimation of entropy production with lacking data. arXiv preprint 2305.04657 (2023).

Meyberg, E., Degünther, J. & Seifert, U. Entropy production from waiting-time distributions for overdamped Langevin dynamics. J. Phys. A: Math. Theor. 57, 25LT01 (2024).

Jun, Y., Gavrilov, M. C. V. & Bechhoefer, J. High-precision test of Landauer’s principle in a feedback trap. Phys. Rev. Lett. 113, 190601 (2014).

Freitas, N., Delvenne, J.-C. & Esposito, M. Stochastic and quantum thermodynamics of driven RLC networks. Phys. Rev. X 10, 031005 (2020).

Chandra, F. A., Buzi, G. & Doyle, J. C. Glycolytic oscillations and limits on robust efficiency. Science 333, 187–192 (2011).

Heltberg, M. L., Krishna, S. & Jensen, M. H. On chaotic dynamics in transcription factors and the associated effects in differential gene regulation. Nat. Commun. 10, 71 (2019).

Koyuk, T. & Seifert, U. Thermodynamic uncertainty relation for time-dependent driving. Phys. Rev. Lett. 125, 260604 (2020).

Dechant, A. & Sakurai, Y. Thermodynamic interpretation of Wasserstein distance. arXiv preprint 1912.08405 (2019).

Otsubo, S., Manikandan, S. K., Sagawa, T. & Krishnamurthy, S. Estimating time-dependent entropy production from non-equilibrium trajectories. Commun. Phys. 5, 11 (2022).

Lee, S. et al. Multidimensional entropic bound: Estimator of entropy production for Langevin dynamics with an arbitrary time-dependent protocol. Phys. Rev. Res. 5, 013194 (2023).

Blickle, V., Speck, T., Helden, L., Seifert, U. & Bechinger, C. Thermodynamics of a colloidal particle in a time-dependent nonharmonic potential. Phys. Rev. Lett. 96, 070603 (2006).

Garanin, D. A. Fokker-Planck and Landau-Lifshitz-Bloch equations for classical ferromagnets. Phys. Rev. B 55, 3050–3057 (1997).

Villani, C. Topics in Optimal Transportation (American Mathematical Society, 2003).

Benamou, J.-D. & Brenier, Y. A computational fluid mechanics solution to the Monge-Kantorovich mass transfer problem. Numerische Mathematik 84, 375 (2000).

Van Vu, T. & Saito, K. Thermodynamic unification of optimal transport: Thermodynamic uncertainty relation, minimum dissipation, and thermodynamic speed limits. Phys. Rev. X 13, 011013 (2023).

Kopp, R. E. Pontryagin maximum principle. Math. Sci. Eng. 5, 255 (1962).

Batchelor, G. K. An Introduction to Fluid Dynamics (Cambridge University Press, 2000).

Boyer, D., Dean, D. S., Mejía-Monasterio, C. & Oshanin, G. Optimal estimates of the diffusion coefficient of a single Brownian trajectory. Phys. Rev. E 85, 031136 (2012).

Lindwall, G. & Gerlee, P. Fast and precise inference on diffusivity in interacting particle systems. J. Math. Biol. 86, 64 (2023).

Proesmans, K. Precision-dissipation trade-off for driven stochastic systems. Commun. Phys. 6, 226 (2023).

Thapa, S., Lomholt, M. A., Krog, J., Cherstvy, A. G. & Metzler, R. Bayesian analysis of single-particle tracking data using the nested-sampling algorithm: maximum-likelihood model selection applied to stochastic-diffusivity data. Phys. Chem. Chem. Phys. 20, 29018–29037 (2018).

Landauer, R. Irreversibility and heat generation in the computing process. IBM J. Res. Dev. 5, 183 (1961).

Bérut, A. et al. Experimental verification of Landauer’s principle linking information and thermodynamics. Nature 483, 187 (2012).

Hong, J., Lambson, B., Dhuey, S. & Bokor, J. Experimental test of Landauer’s principle in single-bit operations on nanomagnetic memory bits. Sci. Adv. 2, e1501492 (2016).

Martini, L. et al. Experimental and theoretical analysis of Landauer erasure in nano-magnetic switches of different sizes. Nano Energy 19, 108–116 (2016).

Gavrilov, M. & Bechhoefer, J. Erasure without work in an asymmetric double-well potential. Phys. Rev. Lett. 117, 200601 (2016).

Gavrilov, M., Chétrite, R. & Bechhoefer, J. Direct measurement of weakly nonequilibrium system entropy is consistent with Gibbs-Shannon form. Proc. Natl Acad. Sci. 114, 11097–11102 (2017).

Busiello, D. M., Hidalgo, J. & Maritan, A. Entropy production for coarse-grained dynamics. N. J. Phys. 21, 073004 (2019).

Hatano, T. & Sasa, S.-i. Steady-state thermodynamics of Langevin systems. Phys. Rev. Lett. 86, 3463–3466 (2001).

Maes, C. & Netočný, K. A nonequilibrium extension of the Clausius heat theorem. J. Stat. Phys. 154, 188 (2014).

Dechant, A., Sasa, S.-i. & Ito, S. Geometric decomposition of entropy production in out-of-equilibrium systems. Phys. Rev. Res. 4, L012034 (2022).

Dechant, A., Sasa, S.-i. & Ito, S. Geometric decomposition of entropy production into excess, housekeeping, and coupling parts. Phys. Rev. E 106, 024125 (2022).

Busiello, D. M., Jarzynski, C. & Raz, O. Similarities and differences between non-equilibrium steady states and time-periodic driving in diffusive systems. N. J. Phys. 20, 093015 (2018).

Acknowledgements

We would like to thank Prithviraj Basak and John Bechhoefer for fruitful discussions on the paper and for providing the data used in our study. We also thank Jonas Berx for carefully reading the manuscript. This project has received funding from the European Union’s Horizon 2020 research and innovation program under the Marie Sklodowska-Curie grant agreement No. 847523 ‘INTERACTIONS’ and grant agreement No. 101064626 ‘TSBC’ and from the Novo Nordisk Foundation (grant No. NNF18SA0035142 and NNF21OC0071284).

Author information

Contributions

K.P. designed research; P.S. and K.P. did the analytical calculations; P.S. implemented numerical codes and simulations. Both authors checked the results and wrote the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Communications Physics thanks the anonymous reviewers for their contribution to the peer review of this work. A peer review file is available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Singh, P., Proesmans, K. Inferring entropy production from time-dependent moments. Commun Phys 7, 231 (2024). https://doi.org/10.1038/s42005-024-01725-3
