Abstract

Adaptive networks with time-varying connectivity, often called plasticity, provide a fundamental paradigm to model complex dynamical systems. In these systems, different groups of elements frequently exhibit different yet synchronized dynamics within each group. Here we propose a framework to study patterns of synchronous solutions in a large class of plastic networks and derive a general approach to analyze the stability of these solutions. This approach decouples the role of the network topology from that of the dynamics, thus leading to a dimensionality reduction of the stability problem and allowing us to investigate how adaptation affects the emergence of multi-stable patterns of synchronized activity. To illustrate its potential, we apply our method to three networks of oscillators, with distinct topology, dynamics, and adaptation rules. Our working framework encompasses a large class of heterogeneous multi-layer dynamical networks, connected (even with delays) via different plastic links, and can have a broad impact on the analysis of complex plastic networks.

Introduction

Many complex networks formed of interacting dynamical units can give rise to cluster synchronization (CS), where different groups of elements exhibit different yet synchronized dynamics within each group1. For example, CS is a common phenomenon in teams of interacting robots performing synchronous coordinated tasks2 and in biological and ecological systems3,4. Furthermore, CS is responsible for traffic jams of vehicles5 and can be employed for cooperative rendezvous and cooperative target classification in networks of unmanned aerospace vehicles6. In neural dynamics, synchronization of brain areas is believed to provide a cognition mechanism7,8, functional assemblies of brain neurons display distinct interdependent synchronous oscillations9, and central pattern generators exploit synchronous clusters of neurons to generate gaits in animal locomotion10. Therefore, the study of CS is relevant to analyzing and controlling both natural (ecological, social, immune, neural, and cellular)11 and artificial12 systems.

A second common feature of natural and artificial complex systems consisting of interconnected dynamical units is adaptivity (or plasticity), i.e., the presence of temporally evolving connectivity structures, which coevolve with the network nodes13,14. A series of recent studies focused on the relevance of adaptation for the dynamics of complex systems15,16,17,18,19,20,21,22. Some papers23,24 investigated the role that adaptation (plasticity) plays in promoting full synchronization in complex networks. Furthermore, a Master Stability Function (MSF) approach to study the stability of fully synchronized regimes in plastic networks has been presented in refs. 25,26.

Although CS and adaptation commonly occur together in natural and artificial systems, CS in adaptive networks has remained relatively unexplored20,21, possibly because in most cases the synchronization is not exact, but only approximate. Previous work has shown that even a non-evolving complex network can support several coexisting patterns of synchronized clusters (CS patterns)27; therefore, understanding the effects of plasticity and the mechanisms ruling the emergence of CS in adaptive networks is a relevant issue. In particular, these mechanisms may combine with a learning strategy to encode specific patterns of synchronized activity in the network28,29.

In this paper, we fill this gap by proposing a method to analyze CS and multi-stability in multi-layer adaptive systems with delays, based on a dimensionality reduction provided by the Master Stability Function (MSF) approach30. We remark that even if our focus is on exact synchronization, the results of our analysis are robust to heterogeneity, at least to a certain extent, meaning that the network nodes that belong to the same cluster remain coherent when their homogeneity is broken. Some of the fundamental questions of broad scientific interest31,32,33 that we address are (i) which CS patterns can exist in networks with adaptation; (ii) which of these patterns attract the dynamics in the presence of adaptation; (iii) whether we can perform the linear stability analysis in a reduced space to overcome the large dimensionality of the stability problem. We will show that: (i) the possible CS patterns (both stable and unstable) are determined by the network topology within the broad class of adaptation rules we consider; (ii) the possibly multiple stable CS patterns (adaptive networks are characterized by a strong presence of multi-stability) are determined also by the network dynamics; (iii) the number of variables needed to perform the stability analysis can be reduced significantly, even by orders of magnitude. As stated above, the developed theory can be applied to many fields.

Here, we focus on three case studies to illustrate the potentialities of the method and investigate the presence of multi-stability for different adaptation rules. The first case study is about encoding and storing visual memory items in a network of neural mass models interacting via Hebbian plasticity. The second case study is about a (two-layer) network of coupled phase oscillators, which provides a model for tumor disease and sepsis. The third case study is about a random network of chaotic oscillators without a direct physical meaning, but with some features captured by our analysis framework and complementary to those of the first two case studies. In all cases, the proposed MSF-based method allows for accurate stability analysis of the possible CS solutions. This extensive numerical analysis is possible thanks to the MSF dimensionality reduction.

A relevant feature of the considered adaptive networks is the emergence of multi-stability, represented by different CS patterns with different collective dynamics, i.e., by coexisting clusters that differ in composition and coherent dynamics, ranging from stationary to oscillatory. Our analysis can characterize the stability of different CS solutions and, as such, provides a flexible tool for understanding the complexity of synchronization scenarios in adaptive networks.

Results

We study the dynamics of a general adaptive multi-layer network, which encompasses a wide variety of single-node dynamical models, different connection types, and plasticity rules. The dynamical evolution of this adaptive network composed of N coupled nodes can be described by the following general set of delay differential equations (i, j = 1, …, N):

where xi is the m-dimensional state vector of the node i, with individual dynamics described by the vector field Fi ∈ Rm, i = 1, …, N, and S is an m-dimensional function (e.g., a saturation function) describing the coupling of the node with the rest of the network. This network contains L different types of connections, each corresponding to a different layer, indexed by ℓ. For each layer (ℓ = 1, 2, …, L), \({A}^{\ell }=\{{a}_{ij}^{\ell }={a}_{ji}^{\ell }\}\) is the symmetric adjacency matrix that describes the connectivity (\({a}_{ij}^{\ell }\in \{0,1\}\)), σℓ is the overall coupling strength, and gℓ is the coupling function. The adaptive nature of the coupling is taken into account by the N-dimensional matrix \({B}^{\ell }=\{{b}_{ij}^{\ell }(t)\}\) (asymmetric, in general), whose entries evolve dynamically according to the adaptive rule Eq. (1b). The parameter ϵℓ > 0 represents the inverse of the adaptation time scale. The evolution of the adaptive coupling terms \({b}_{ij}^{\ell }(t)\) is controlled by a nonlinear function Hℓ, which depends on the state of the nodes i and j. This adaptation rule can cover a wide class of plasticity functions, including Hebbian plasticity34, Wang-Rinzel plasticity35, as well as other adaptive schemes26,36,37.

This paper deals with the emergence of CS in the general system (1). We consider a partition of the set \({{{{{{{\mathcal{V}}}}}}}}\) of the network nodes into clusters \({{{{{{{{\mathcal{C}}}}}}}}}_{1},{{{{{{{{\mathcal{C}}}}}}}}}_{2},\ldots ,{{{{{{{{\mathcal{C}}}}}}}}}_{Q}\), \({\cup }_{p = 1}^{Q}{{{{{{{{\mathcal{C}}}}}}}}}_{p}={{{{{{{\mathcal{V}}}}}}}}\), \({{{{{{{{\mathcal{C}}}}}}}}}_{p}\cap {{{{{{{{\mathcal{C}}}}}}}}}_{q}={{\emptyset}}\) for p ≠ q. In particular, we are interested in the existence and stability of CS patterns, where all the elements within each cluster have an identical time evolution, i.e., xi(t) = xj(t) if i and j belong to the same cluster. A first challenge is to identify possible patterns of cluster synchronization for the general system of equations (1). In the Supplementary Note 1, we show that any equitable cluster partition for the adjacency matrix A corresponds to a flow-invariant cluster synchronous solution for the system (1). As the considered network model can have different types of connections, a valid solution presenting CS with the minimum number Q of equitable clusters can be found by using a variation38,39 of Hasler and Belykh’s coloring algorithm40 (for more details see Supplementary Note 1).
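The search for an equitable partition with the minimum number of clusters can be illustrated with plain color refinement. The sketch below is not the variation of Hasler and Belykh's algorithm cited above; it is a generic refinement loop written under simplifying assumptions (binary adjacency matrices, one per layer), and the name `coarsest_equitable_partition` and its arguments are hypothetical. Starting from an optional initial coloring (e.g., one color per node type), it keeps splitting colors until all nodes of the same color see the same number of neighbors of each color in every layer.

```python
import numpy as np

def coarsest_equitable_partition(adjs, initial=None):
    """Return a list of cluster labels (one per node).

    adjs    : list of N x N binary adjacency matrices, one per layer
    initial : optional list of starting labels (defaults to one cluster)
    """
    n = adjs[0].shape[0]
    colors = list(initial) if initial is not None else [0] * n
    while True:
        ncol = max(colors) + 1
        signatures = []
        for i in range(n):
            # A node's signature: its own color, then, for each layer,
            # how many of its neighbors carry each color.
            sig = [colors[i]]
            for A in adjs:
                counts = [0] * ncol
                for j in range(n):
                    if A[i, j]:
                        counts[colors[j]] += 1
                sig.extend(counts)
            signatures.append(tuple(sig))
        # Relabel nodes by signature, in order of first appearance.
        mapping = {}
        new_colors = []
        for sig in signatures:
            if sig not in mapping:
                mapping[sig] = len(mapping)
            new_colors.append(mapping[sig])
        # The new partition always refines the old one, so an unchanged
        # number of colors means the partition is already equitable.
        if len(mapping) == ncol:
            return new_colors
        colors = new_colors

# Example: in a 3-node path the two end nodes form one cluster.
path = np.array([[0, 1, 0], [1, 0, 1], [0, 1, 0]])
print(coarsest_equitable_partition([path]))
```

For a fully connected graph, any initial coloring passed through `initial` is returned unchanged, since every node of a given color sees the same neighbor counts.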

A second challenge concerns the stability of CS solutions, which depends on the complete network dynamical evolution, governed by Eq. (1). Therefore, once the Q equitable clusters are found, the CS analysis is applied to a coarse-grained dynamical model (called quotient network) whose Q nodes correspond to the possible equitable clusters. In particular, we analyze the stability of the CS patterns by linearizing Eq. (1) about a state corresponding to synchronization among all the nodes within each cluster39. By denoting the cluster synchronization state as xi(t) = sp(t), where the node i belongs to the cluster \({{{{{{{{\mathcal{C}}}}}}}}}_{p}\), we can write the quotient network dynamics,

where fp is the vector field of each node belonging to the cluster \(\mathcal{C}_{p}\) (i.e., fp = Fi for any i ∈ Cp), \(k_{pq}^{\ell}\) represents the weight of the connection of type ℓ between the clusters \(\mathcal{C}_{p}\) and \(\mathcal{C}_{q}\), and the Q-dimensional matrix \(R^{\ell}=\{r_{pq}^{\ell}\}\) is the quotient matrix of the ℓ-th type, such that \(r_{pq}^{\ell}=\sum_{j\in \mathcal{C}_{q}}a_{ij}^{\ell}\) (\(i\in \mathcal{C}_{p},\quad p,q=1,2,\ldots ,Q\)). Let np be the number of nodes in the cluster \(\mathcal{C}_{p}\), so that \(\sum\nolimits_{p = 1}^{Q}n_{p}=N\). In the following, we will tacitly assume that p depends on i and q depends on j, i.e., p = p(i) and q = q(j).
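The definition of the quotient matrix can be made concrete with a few lines of NumPy. This is a minimal sketch for a single layer; the function name `quotient_matrix` is hypothetical, and the explicit equitability check is an addition made here for safety rather than part of the method described in the text.

```python
import numpy as np

def quotient_matrix(A, labels):
    """Quotient matrix R with r_pq = sum over j in C_q of a_ij, for i in C_p.

    A      : N x N adjacency matrix of one layer
    labels : cluster index (0..Q-1) of each node
    """
    A = np.asarray(A)
    labels = np.asarray(labels)
    Q = labels.max() + 1
    # N x Q indicator matrix: column q marks the nodes of cluster q.
    P = np.eye(Q, dtype=int)[labels]
    # counts[i, q] = number of links from node i into cluster q.
    counts = A @ P
    R = np.zeros((Q, Q), dtype=counts.dtype)
    for p in range(Q):
        rows = counts[labels == p]
        # In an equitable partition all nodes of C_p share the same row.
        if not (rows == rows[0]).all():
            raise ValueError("partition is not equitable")
        R[p] = rows[0]
    return R

# Example: 3-node path with clusters {0, 2} and {1}.
path = np.array([[0, 1, 0], [1, 0, 1], [0, 1, 0]])
R = quotient_matrix(path, [0, 1, 0])
print(R)
```

Note that R is generally not symmetric even when A is: here each end node has one link into the central cluster, whereas the central node has two links into the cluster of end nodes.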

To analyze the local stability of the synchronous state, we introduce infinitesimal variations about sp(t): wi(t) = xi(t) − sp(t) and \(y_{ij}^{\ell}(t)=b_{ij}^{\ell}(t)-k_{pq}^{\ell}(t)\), where \(i\in \mathcal{C}_{p}\) and \(j\in \mathcal{C}_{q}\). It is important to emphasize that the stability problem involves N variables wi(t) and up to N² variables \(y_{ij}^{\ell}(t)\) for each ℓ, which quickly makes the analysis infeasible as N grows. This motivated us to develop a technique to reduce the dimensionality of the stability problem, which we present in the following.

First, we find the variational equations in vector form (see Supplementary Note 2), in terms of two vectors (W(t) and \(\boldsymbol{y}_{q}^{\ell}(t)\)) and of the N × N diagonal matrix Ep, which is the cluster indicator matrix: Ep has entries Ep,ii = 1, if node \(i\in \mathcal{C}_{p}\), 0 otherwise, i.e., this matrix identifies all the nodes that belong to cluster \(\mathcal{C}_{p}\). Next, we find a coordinate transformation that separates the perturbation modes in the stability analysis as much as possible, thus reducing the stability problem into sub-problems of the lowest dimension. To this end, we compute the transformation (based on a simultaneous block diagonalization, SBD) introduced in refs. 41,42,43, which has been previously applied to analyze the stability of complete synchronization44 and CS45 in non-adaptive networks. The canonical transformation matrix46 (see Supplementary Note 3) \(T=\left(\begin{array}{c}T_{\parallel}\\ T_{\perp}\end{array}\right)\) is the orthogonal matrix that simultaneously block-diagonalizes the matrices A1, A2,…, AL, E1, E2,…, EQ into M diagonal blocks, each one of size dα (α = 1, …, M). The Q × N sub-matrix T∥ is associated with longitudinal perturbations to the synchronization manifold, which therefore characterize the nature of the synchronized dynamics (e.g., periodic, quasi-periodic, chaotic) but not the stability of the CS. This is taken into account by transverse perturbations associated with the (N − Q) × N sub-matrix T⊥ (ref. 47).
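The split of T into parallel and transverse parts can be illustrated with a simple construction. The sketch below is not the SBD algorithm of the cited references: it only builds an orthogonal matrix whose first Q rows are the normalized cluster indicator vectors and whose remaining N − Q rows span, cluster by cluster, the directions orthogonal to them. The function name `cluster_transform` is hypothetical. By construction this matrix diagonalizes every Ep; for the special case of an all-to-all adjacency matrix it also makes the transverse block diagonal, consistent with the scalar blocks expected for that topology.

```python
import numpy as np

def cluster_transform(labels):
    """Return (T_par, T_perp) for a given cluster assignment.

    T_par  : Q x N, rows are normalized cluster indicator vectors
    T_perp : (N - Q) x N, orthonormal rows, each supported on one
             cluster and summing to zero
    """
    labels = np.asarray(labels)
    n = labels.size
    q = labels.max() + 1
    par_rows, perp_rows = [], []
    for p in range(q):
        idx = np.flatnonzero(labels == p)
        u = np.ones((1, idx.size)) / np.sqrt(idx.size)
        # Full SVD of the 1 x n_p row u: the first right-singular vector
        # is +/- u, the remaining ones form its orthonormal complement.
        _, _, vt = np.linalg.svd(u)
        row = np.zeros(n)
        row[idx] = u[0]
        par_rows.append(row)
        for v in vt[1:]:
            row = np.zeros(n)
            row[idx] = v
            perp_rows.append(row)
    T_par = np.array(par_rows)
    T_perp = np.array(perp_rows).reshape(-1, n)
    return T_par, T_perp

# Example: N = 5 all-to-all network with clusters of sizes 3 and 2.
labels = [0, 0, 0, 1, 1]
T_par, T_perp = cluster_transform(labels)
T = np.vstack([T_par, T_perp])
A = np.ones((5, 5)) - np.eye(5)
transverse_block = T_perp @ A @ T_perp.T
print(np.round(transverse_block, 12))
```

For a generic topology the transverse block obtained in this way is not block diagonal, which is precisely why a dedicated SBD procedure is needed.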

To analyze the CS stability by following the MSF approach30, we now consider the transformed coordinates η(t) = (T ⊗ Im)W(t) (where ⊗ denotes the Kronecker product) and \(\boldsymbol{\xi}_{q}^{\ell}(t)=T\boldsymbol{y}_{q}^{\ell}(t)\). In particular, we focus on the perturbations along the transverse manifold, η⊥(t) = (T⊥ ⊗ Im)W(t) and \(\boldsymbol{\xi}_{q\perp}^{\ell}(t)=T_{\perp}\boldsymbol{y}_{q}^{\ell}(t)\).

By using the matrix T (see details in the Supplementary Note 2), for the transverse perturbations we finally obtain,

where the terms ρ1, …, ρ4 are the time-varying matrices defined in Methods. The set \(\left\{{{{{{{{{\boldsymbol{s}}}}}}}}}_{p}(\cdot )\right\}\) collects all the synchronous solutions (delayed or not) corresponding to the Q clusters.

Through Eq. (3b), each entry of the vector \({{{{{{{{\boldsymbol{\xi }}}}}}}}}_{q\perp }^{\ell }(t)\) corresponds to one and only one entry of the vector η⊥, q = 1, …, Q. Moreover, through Eq. (3a), the entries of the vector η⊥ are organized into M groups, each one independent of the others, and in general with a different number of elements. M depends on the network topology and the analyzed cluster pattern and ranges between 1 (each component of η⊥ depends on the other components) and N − Q (all the entries of the perturbation vector η⊥,i are independent of each other). The larger M, the higher the degree of decoupling and therefore the dimensional reduction. If we call D the sum of the distinct dα values, the number of equations to analyze is Nred = (m + LQ)(Q + D).

We have therefore decoupled the stability problem into independent lower-dimensional equations. We remark that the time evolution of each entry of the vector \({{{{{{{{\boldsymbol{\xi }}}}}}}}}_{q\perp }^{\ell }(t)\) is decoupled from all other entries. Some of these entries are multiplied by zero coefficients on the right-hand side of Eq. (3a) and so can be safely removed from the analysis. According to the MSF approach, the stability of the cluster solution can be studied in terms of the Transverse Lyapunov Exponents (TLEs)48,49.

In summary, the dimensional reduction provided by the MSF approach can be quantified as follows. The original system (1) consists of Norig = mN + LN² equations, the same number as the variational system, i.e., Eqs. (2) and (3). However, Eqs. (3) have M diagonal blocks, corresponding to M independent sets of equations, which allows the study of the stability of clusters in terms of a set of decoupled equations39,50,51. All the blocks of size 1 can be parameterized according to the MSF approach so that only one equation must be simulated. For example, if the network has an all-to-all topology we have the maximum reduction since all blocks are scalar, i.e., M = N − Q, D = 1, and the number of equations to analyze is \(N_{red}=(m+LQ)(Q+1)\), where we recall that m is the dimension of the state vector of the ith oscillator, Q is the minimum number of equitable clusters, and L is the number of different types of connections.

Applications of the methodology to three case studies

To validate the introduced methodology and illustrate its potential, we apply it to three case studies that differ in their adaptation rules and in other topological and dynamical features.

The first example refers to neural systems and to the possibility of storing stimulation patterns in memory, where the memory items are represented by synchronized clusters displaying population bursts in the β-γ range52,53,54,55. The corresponding network has the following characteristics: all-to-all initial topology, uniform delay, homogeneous nodes, Hebbian adaptation rule, and a single layer.

The second example models the emergence of a disease state in a volume element of tissue represented as a two-layer network of phase oscillators, whose interactions are slower than the oscillators’ dynamics and are modeled through an adaptive process. The healthy state corresponds to synchronized dynamics, and the presence of several frequency clusters denotes a pathological regime56,57. The corresponding network contains two layers with a multiplex initial topology, no delay, heterogeneous nodes, and an adaptation rule that depends on a parameter related to age.

The third case study is a small (N = 10) synthetic network with Erdős-Rényi initial topology, no delay, homogeneous nodes, Hebbian adaptation rule, and a single layer.

The theoretical approach previously described allows us (i) to analyze the stability of a given CS solution through system (3) and (ii) to study the behavior of the corresponding quotient network through system (2), at a reduced computational cost.

Population coding: a plastic network of neural masses

We consider a network of N identical neural mass models58, arranged in a single layer and interacting via plastic gap junctions, with homogeneous synaptic transmission delay τ. In particular, each network node corresponds to a Wilson-Cowan model, which describes the dynamics of a neural mass made up of two coupled populations of neurons, one excitatory and one inhibitory59. The network plasticity obeys the Hebbian rule60 and involves only excitatory-excitatory connections. The model parameters (see Methods) are set to values that correspond to the emergence of a stationary regime for the dynamics of an isolated node, i.e., the excitatory and inhibitory population firing rates (represented by the state variables E and I) approach a constant value61. Our goal is to study the emergence of firing rate oscillations in biologically relevant frequency ranges induced by the stimulation of a sub-group of neural populations, as in the case of visual and auditory stimuli62,63. Furthermore, oscillatory behavior (population bursts) promoted by the plastic properties of the network can provide a means to store memory items, somewhat in analogy to the mechanisms reported for working memory64.

In particular, we consider a network with a fixed, fully connected, initial topology. Since the all-to-all topology is compatible with any coloring of the network, i.e., with any CS pattern38,40, the network per se admits any clustering configuration. We now aim to check if the network can encode and decode simple visual memory patterns. This, in turn, provides a unique test-bed to investigate which patterns are selected by the adaptation rule.

As a first test (see Fig. 1a) we stimulated N1 nodes of the network with a current pulse with normalized height 0.2 and 100 ms duration, mimicking an external stimulation. The other N2 nodes (with N1 + N2 = N) are not stimulated. We want to study the network capability of encoding/decoding information through coexisting CS solutions. To this end, we start with the simplest case of a network with Q = 2 clusters (where C1 contains the N1 stimulated nodes and C2 contains the N2 non-stimulated nodes) and study whether the nodes of the network reach a stable solution. Notice that the analysis depends only on the ratio N1/N; therefore, it is valid for any value of N, owing to the assumption of fully connected initial topology. We analyze the stability of the cluster pattern that emerges at steady state (i.e., after the effects of the initial pulse are over), under the assumption that the stimulus has determined the onset of two clusters in the network. Once the clustering has been induced and the corresponding quotient network found, we can analyze the stability of the CS solution. The TLEs computed through Eq. (3b) are always negative (see Supplementary Note 6). Therefore, the emerging cluster solution is always stable and the nodes within each cluster are phase- and frequency-synchronized. As the nodes within each cluster are synchronized, the frequency of the collective oscillations can be computed by analyzing the behavior of the Q = 2 nodes of the quotient network, which is much simpler than the original network.

Fig. 1. a The network is split into two clusters by stimulating N1 nodes with a current pulse with normalized height 0.2 and 100 ms duration, and not stimulating the remaining N2 nodes. b Depending on the values of N1 and the synaptic coupling strength σ, the stimulated cluster can produce stable brain oscillations (blue region) or present persistent activity corresponding to a constant population firing (green and dark blue regions). The dashed black lines mark the edges between the three regions and have been computed semi-analytically (see Supplementary Note 5). c–e Time evolution of the state variables E1 (solid blue) and E2 (dashed red) for the set of parameters corresponding to dots in panel (b). f Frequency of the collective oscillations at the three values of σ marked by colored vertical bars in (b). Each curve in (f) has the same coloring as the corresponding bar. By varying N1, the network can produce oscillations in the β-γ range.

Figure 1b shows the color-coded asymptotic behavior of the network, for different values of the synaptic coupling strength σ and of the number N1 of stimulated nodes. In the dark blue region, both populations have the same low persistent activity, corresponding to a constant population firing (i.e., not corresponding to brain oscillations), as shown in Fig. 1c. In the blue region, the stimulated population produces stable brain oscillations, as shown in Fig. 1e, whereas the non-stimulated population remains in a low persistent regime. In the green region, the stimulated (non-stimulated) populations present high (low) persistent activity as shown in Fig. 1d, but again not corresponding to brain oscillations. The edges between these regions (dashed lines) have been computed semi-analytically (see Supplementary Note 5).

The oscillations in the light blue region are in the β-γ range, as illustrated in Fig. 1f, which shows the frequency of the collective oscillations at the three values of σ marked by colored vertical bars in Fig. 1b. Each curve in Fig. 1f has the same coloring as the corresponding bar. The curves are plotted versus the number N1 of stimulated nodes. We notice that the higher the synaptic coupling σ, the lower the number N1 of nodes that must be stimulated to observe brain oscillations. Moreover, the obtained range of frequencies is the same for any considered value of σ.

We remark that, in the absence of adaptation, this network (with the same parameters) would not be able to generate persistent brain oscillations (see Supplementary Note 6).

We have obtained proof-of-concept evidence that the considered network can code the visual stimulus into brain oscillations whose frequency depends on the global coupling σ and the stimulus itself. Next, we will check if the network can code the stimulus into CS solutions corresponding to Q = 3 clusters.

As a second test (shown in Fig. 2), we stimulated two groups of nodes for 100 ms with stimulations of different intensity: namely, N1 (N2) nodes with a current pulse of higher (lower) normalized height. The other N3 nodes (with N1 + N2 + N3 = N) are not stimulated, as shown in Fig. 2a. Accordingly, to analyze the network behavior we split the nodes into Q = 3 clusters: C1 and C2 containing active nodes, whereas nodes in C3 are in a low persistent regime. As in the first test, the nodes within each cluster are always frequency- and phase-synchronized since the TLEs are always negative. Therefore, the activity of the whole network can be studied by analyzing the quotient network. We observe that stable oscillations in the quotient network can emerge for a suitable combination of N1 and N2 nodes. In these cases, the activity of the two stimulated clusters is phase-locked with a constant phase lag Δ (see Methods) and generates oscillations again in the range β-γ.

Fig. 2. a After setting σ = 20, the network is split into 3 clusters by stimulating two groups of nodes for 100 ms with stimulations of different intensities (N1 nodes with a current pulse of normalized height 0.25 and N2 with a smaller pulse of height 0.15). The other N3 nodes are not stimulated. b, c Color-coded frequency (b) and phase lag Δ (c) of the nodes in the clusters C1 and C2 for different values of N1 and N2. In the white regions, there are no asymptotic oscillations. d, e Time plots of the state variables E1 (blue) and E2 (orange) for the two sets of parameters corresponding to the red and black dots in panels (b, c).

Figure 2b and c show, for different values of N1 and N2, the color-coded frequency f of the nodes in the clusters C1 and C2 and the phase lag Δ between the oscillations of nodes in C1 and in C2, respectively. In the white regions, there are no oscillations. Moreover, the behavior along the thick diagonal corresponds to the case N3 = 0, i.e., to the case with only two populations, both stimulated. In the colored region, the studied oscillatory solutions are stable since all the TLEs are negative. Figure 2d and e show the time plots of the state variables E1 (blue) and E2 (orange) for the two sets of parameters corresponding to the red (Fig. 2d) and black (Fig. 2e) dots in Fig. 2b, c.

The diagram in Fig. 2b is symmetric under the exchange of N1 and N2, owing to the homogeneity of the original network, and γ oscillations emerge whenever N1 ≫ N2 (or, equivalently, N2 ≫ N1). By contrast, lower frequencies in the β range are observed when the two stimulated clusters have comparable sizes.

Furthermore, we observe that the phase lag between the two clusters lies in the interval [π/2, 3π/2]. We remark that the phase lag is π whenever N1 = N2 and deviates from π as ∣N1 − N2∣ increases. Notice that, due to the symmetry of the network, exchanging the two clusters reflects the phase lag about π, i.e., Δ(N1, N2) = 2π − Δ(N2, N1).
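As a quick consistency check of the two statements above, setting N1 = N2 = n in the symmetry relation gives

\[
\Delta(n,n) = 2\pi - \Delta(n,n) \;\;\Longrightarrow\;\; 2\,\Delta(n,n) = 2\pi \;\;\Longrightarrow\;\; \Delta(n,n) = \pi ,
\]

so the anti-phase configuration for equal cluster sizes follows directly from the exchange symmetry, without any further assumption on the dynamics.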

This second test proves that the network can code two different stimuli into persistent brain oscillations with different frequency f and phase lag Δ. Therefore, we conjecture that larger numbers of stimuli can be coded by the network by generating different cluster solutions with larger numbers of clusters. Moreover, each CS solution is characterized by a specific frequency f and phase lag Δ, which can be used to decode the stimulus. To check the validity of this conjecture and thus verify that the plasticity rule allows storing multiple memory items in the network, we performed a third test.

The memory items we consider are black and white images (size 200 × 200) of polygons inscribed in the same circle with different numbers of edges, as shown in Fig. 3a. Each polygon corresponds to a different item to memorize and a different network stimulation pattern. Each pixel of the image is uniquely associated with a network node (i.e., we considered a network with N = 40000 nodes), and we stimulate the N1 (N2) nodes corresponding to black (white) pixels with a higher (lower) current pulse for 100 ms. The TLEs are always negative (see Supplementary Note 6), so we can analyze the network behavior by using the quotient network. In this case, we observe the emergence of phase-locked oscillations in the β-γ range with a constant phase lag Δ between the two population clusters. Indeed, the situation is analogous to the one reported in the previous experiment for N3 = 0. Figure 3b shows Δ for different values of N1. In the interval N1/N ∈ [0.4, 0.6] we observe an almost linear dependence of the phase lag on the fraction of nodes in the cluster C1. This dependence can be employed to encode the number of edges of each polygon, as shown in Fig. 3b, where the red circles and the number next to each of them show the phase coding of the number of edges. Figure 3c and d show the time plots of the state variables E1 (blue) and E2 (orange) for the two sets of parameters corresponding to polygons with 8 (Fig. 3c) and 4 (Fig. 3d) edges.

Fig. 3. a The network is composed of N = 40000 nodes, each one uniquely associated with a pixel of black and white images (size 200 × 200) representing regular polygons. The network is split into 2 clusters, both active, by stimulating the N1 nodes corresponding to black pixels with a current pulse with height 0.25 and width 100 ms, and the N2 nodes corresponding to white pixels with a current pulse with height 0.15 and width 100 ms. b Asymptotic phase lag between the oscillations emerging in the two stimulated clusters, for different values of N1. The red dots show the phase coding of the number of polygon edges. c, d Time plots of the state variables E1 (blue) and E2 (orange) for the two sets of parameters corresponding to polygons with 8 (c) and 4 (d) edges.

The results of this third test provide an example of population coding, where the relevant quantity is the phase lag Δ between collective oscillations, somewhat resembling the frequency-band coupling observed in the monkey visual cortex63.

All the results reported here are obtained with the methodology previously introduced. In particular, for the third experiment, the number of equations that must be simulated is reduced from Norig ≈ 1.6 ⋅ 10⁹ to Nred = 12, as m = 2, L = 1, Q = 2, and D = 1. The diagonal structure of the terms ρ1, ρ2, ρ3 and ρ4 in Eq. (3b) (see Supplementary Note 7) implies that (i) the stability of each cluster is independent of the stability of any other clusters (i.e., the clusters are not one-way dependent and not even intertwined39) and (ii) the dimension of the space we have to explore to study the stability of the synchronous solution can be strongly reduced. For this example, we notice that the term ρ2 in the variational Eq. (3a) is zero. However, the same equations are influenced by the quotient network state sp, whose behavior is affected by σ, as shown in Eq. (2).
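The two counts quoted above follow directly from the formulas Norig = mN + LN² and Nred = (m + LQ)(Q + D) given earlier. The short sketch below reproduces the arithmetic for the third experiment; the function names `n_orig` and `n_red` are hypothetical helpers introduced only for this illustration.

```python
def n_orig(m, N, L):
    """Equations in the full system: m per node plus N*N couplings per layer."""
    return m * N + L * N**2

def n_red(m, L, Q, D):
    """Equations left after the MSF-based reduction."""
    return (m + L * Q) * (Q + D)

# Third experiment: 200 x 200 pixels, Wilson-Cowan nodes (m = 2),
# one layer, two clusters, all transverse blocks scalar (D = 1).
full = n_orig(m=2, N=200 * 200, L=1)
reduced = n_red(m=2, L=1, Q=2, D=1)
print(full, reduced)
```

The full count is dominated by the LN² adaptive coupling variables, which is why the reduction spans about eight orders of magnitude in this case.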

Propagation of tumor disease: a two-layer adaptive network model

As a second example, we analyze an adaptive two-layer network of phase oscillators with multiplex topology as a model for the emergence of pathological states induced by tumors or infections57. In this simplified scheme, the healthy state corresponds to a fully frequency-synchronized regime of the network. By contrast, pathological nodes have a metabolism that is faster than healthy nodes, and the pathological state is characterized by the presence of multifrequency clusters: one with a normal metabolism, containing healthy nodes, and one or more with a faster metabolism, containing pathological nodes. The presence of multifrequency clusters leads to a decreased overall synchronization of the network. In particular, the two-layer (L = 2) heterogeneous network is composed of N nodes (of two kinds) and represents a volume element of tissue consisting of parenchyma (organ tissue), basal membrane, and stroma (immune layer). This network mimics the functional interactions between parenchyma (N cells in the first layer) and stroma (N cells in the immune layer), which lead to the propagation of tumor disease. The communication through cytokines (which mediate the interaction between the cells) is modeled by adaptive connections, as their timescale is slow compared to the cell metabolism65,66.

Each node i of the network models a cell of both the organ tissue and the immune layer; therefore, it is described by a 2-dimensional state vector \({{{{{{{{\boldsymbol{x}}}}}}}}}_{i}={[{\phi }_{i}^{(1)},{\phi }_{i}^{(2)}]}^{T}\) (see Methods), where \({\phi }_{i}^{(1)}\) and \({\phi }_{i}^{(2)}\) are phase variables related to the metabolic activity of the i-th cell of the organ tissue (parenchyma) and of the immune layer, respectively. The model equations are detailed in Methods.

Each parenchyma cell in isolation can be of two kinds, namely healthy or pathological, depending on the value of the natural binary frequency \({\omega }_{i}^{(1)}\), which represents the metabolism velocity of an isolated cell. Accordingly, the network is non-homogeneous, because the nodes are of two different kinds. A higher (lower) natural frequency \({\omega }_{i}^{(1)}\) is associated with pathological (healthy) cells, since a higher velocity corresponds to a faster metabolism in the cell, usually associated with a pathological state. However, once connected within the network, the state of a cell, either healthy or pathological, is influenced by its interactions with the other cells. Indeed, the state of the cell is related to the rate \({\dot{\phi }}_{i}^{(1)}\) of cytokine production, which depends on both the natural frequency \({\omega }_{i}^{(1)}\) and the adaptive coupling terms \({b}_{ij}^{\ell }\). Once coupled, healthy and tumor cells differ by their metabolic activity, i.e., tumor cells are less energy-efficient and thus have a faster cellular metabolism, corresponding to a higher rate \({\dot{\phi }}_{i}^{(1)}\) of cytokine production.

As the adaptive interaction mediated by cytokines can involve any pair of cells57, the cells of the organ tissue are initially linked through a fully connected topology (\({a}_{ij}^{\ell }=1\) in Eq. (1)), where the strength of the connections depends on the adaptation variables \({b}_{ij}^{\ell }\).

The health state of the complete network depends on the rate values \(\{{\dot{\phi }}_{i}^{(1)}\}\)57 and it is monitored through the parameter \({\bar{\nu }}^{(1)}\), which provides an average measure (over different trials) of the standard deviation of the angular frequencies \({\dot{\phi }}_{i}^{(1)}\) (see Methods). A zero value of \({\bar{\nu }}^{(1)}\) means that all the nodes are locked in frequency; in this case, the network is healthy. On the contrary, whenever \({\bar{\nu }}^{(1)}\) is non-zero, frequency clusters appear in the network, meaning that some nodes evolve with a higher frequency, corresponding to a pathological state. An equivalent parameter \({\bar{\nu }}^{(2)}\) measures the standard deviation of the angular frequencies in the second layer, but its value is not related to the onset of a pathological condition because in tumor disease mutated cells are almost always located in the parenchymal layer57.

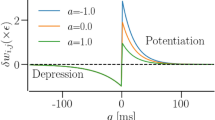

The parameter β governs the plasticity rule of the cytokines and it can be associated with different adaptation rules. For instance, for β = π/2, a symmetric rule is obtained where the coupling increases between any two oscillators with close-by phases67; on the contrary, if β = 0, the coupling will be strengthened if the oscillators have a phase shift of π/2. β mimics a systemic sum parameter which may account for different influences such as physiological changes due to age in the extracellular matrix, inflammation, systemic and local inflammatory baseline, etc. For tumor disease, it can include the malignancy grade of tumor cells. For the sake of brevity, we call this parameter the age parameter.

Using the MSF approach, we investigate how a small fraction r < 0.1 of initially pathological nodes and the age parameter β influence the onset of tumor disease in a network composed of N = 200 nodes. In particular, we analyzed the network behavior for a grid of 11 × 11 values of the parameters r ∈ [0, 0.1] and β ∈ [0.40π, 0.55π].

Due to the multiplex topology of the network, many different cluster patterns are admitted (see Supplementary Note 8). Nodes within a cluster are phase-synchronized (and therefore frequency-synchronized) and represent a group of cells in the tissue that produce cytokine with the same metabolism velocity. Depending on the evolution of the quotient network, clusters can evolve either with the same frequency (which means that all the cells in the tissue produce cytokine at the same rate and therefore the tissue is healthy) or with different frequencies (which means that some cells in the tissue produce cytokine with a faster metabolism and therefore the tissue is pathological). Therefore, to investigate if a stable cluster pattern is healthy/pathological, we evaluate if the parameter \({\bar{\nu }}^{(1)}\) in the quotient network is close to zero. In this paper, we perform two tests. The first test is to analyze the presence of multi-stability by varying β and r. In the second test, we investigate how different cluster patterns influence the onset of the pathological state.

In the first test, we focus on the simplest pattern shown in Figs. 3 and 4 of ref. 57, where the nodes of the network split into two groups of phase-synchronized nodes. To analyze the stability of this pattern for different values of β and r, we initially divide the network into two phase clusters, one with r ⋅ 200 pathological nodes and the other one with (1 − r) ⋅ 200 healthy nodes. All the nodes within a cluster have the same initial phase, i.e., \({{{{{{{{\boldsymbol{s}}}}}}}}}_{i}={[{\phi }_{i}^{(1)},{\phi }_{i}^{(2)}]}^{T}={{{{{{{{\boldsymbol{s}}}}}}}}}_{j}\) if i, j ∈ Cp, where si is the state whose stability we are analyzing. The initial phases of each cluster are randomly selected from a uniform distribution in the range [0, 2π], whereas the initial coupling weights \(\{{b}_{p,q}^{1}\}\) and \(\{{b}_{p,q}^{2}\}\) between clusters are randomly selected from uniform distributions defined in the ranges [0, 2] and [ − 1, 1], respectively. In Supplementary Note 9, we show that this 2-cluster solution synchronized in phase (and thus in frequency) exists and is stable for any considered value of β and r. Moreover, the parameter \({\bar{\nu }}^{(1)}\) is always close to zero (see Supplementary Note 9). Therefore, we found that a stable healthy state is possible in the whole considered range of the parameters. This result can hardly be obtained via the analysis performed in ref. 57, which was based on extensive network simulations with different random initial conditions, and therefore led to a focus only on the pattern with the largest basin of attraction for each (β,r) pair. Our analysis suggests that different healthy and pathological states should coexist in the whole bi-dimensional parameter plane (β,r). Therefore, a deeper analysis of the multiple stable cluster patterns admitted by the network is required.

As a second test, we extended the result obtained in ref. 57 by analyzing the network multi-stability, to see (i) how the fragmentation of the network in multiple clusters affects the overall health of the network, in terms of the parameter \({\bar{\nu }}^{(1)}\), and (ii) how many healthy and pathological states can coexist. To this aim, we initially divided the (1 − r) ⋅ 200 healthy nodes into Na phase clusters, each one containing (1 − r) ⋅ 200/Na nodes. The analyzed network structure is shown in Fig. 4a: 200 ⋅ r nodes (corresponding to the red node of the quotient network in Fig. 4a) are set as pathological nodes (\({\omega }_{i}^{(1)}\) = 1), whereas 200 ⋅ (1 − r) nodes (corresponding to the green nodes of the quotient network in Fig. 4a) are set as healthy nodes (\({\omega }_{i}^{(1)}\) = 0) and split into Na phase clusters. Each cluster corresponds to a different initial phase, selected randomly, as in the first test. To study the effect of clustering on the network evolution, we considered three values of Na, namely 6, 10, and 15, meaning that we have initially 6 (or 10 or 15) clusters of healthy nodes, and we analyzed whether or not these clusters and the pathological nodes synchronize in frequency, thus yielding a healthy state.
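The initial partition described above can be sketched in a few lines of Python. This is only an illustration: the function name `partition_nodes` and the labeling convention are hypothetical, and the way the remainder is spread when (1 − r) ⋅ 200 is not a multiple of Na (as happens for r = 0.07 with Na = 10 or 15) is an assumption, since the text does not specify it.

```python
def partition_nodes(n_nodes, r, n_healthy_clusters):
    """Return one cluster label per node.

    Label 0 marks the pathological cluster; labels 1..Na mark the healthy
    phase clusters. Assumption: when the healthy nodes cannot be split
    evenly, the first clusters receive one extra node each.
    """
    n_pathological = round(r * n_nodes)
    n_healthy = n_nodes - n_pathological
    labels = [0] * n_pathological
    base, extra = divmod(n_healthy, n_healthy_clusters)
    for c in range(n_healthy_clusters):
        size = base + (1 if c < extra else 0)
        labels.extend([c + 1] * size)
    return labels


# Example: N = 200, r = 0.07, Na = 6 gives 14 pathological nodes
# and 6 healthy clusters of 31 nodes each (Q = Na + 1 = 7 clusters).
labels = partition_nodes(200, 0.07, 6)
```

With this labeling, the quotient network has one node per distinct label, which is the reduced system on which the stability analysis is carried out.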

The volume element of tissue is modeled as a 2-layer heterogeneous network composed of N = 200 nodes with adaptive connections. The network is split into Na + 1 clusters (a) by properly selecting the initial conditions: 200 ⋅ r nodes are set as pathological (red circle), 200 ⋅ (1 − r) as healthy (green circles); healthy nodes are split into Na phase clusters. (b) Two-dimensional phase diagram in the plane (β, r) displaying the boundary between healthy (leftmost areas) and pathological states (rightmost areas) for different values of Na. (c) Average parameter \({\bar{\nu }}^{(\ell )}\) vs β for r = 0.07 and the three values of Na examined in panel (b). The behavior of the network for r = 0.07 and β = 0.55π is reported in (d1-d6) for Na = 6 and in (e1-e6) for Na = 15: raster plots showing the time evolution of the network (d1, d2, e1, e2), phase velocity of the nodes (d3, d4, e3, e4), and snapshots of the entries of the matrix \({B}^{\ell }\) (d5, d6, e5, e6). The panels (d1, d3, d5) and (e1, e3, e5) are related to the parenchyma layer. The panels (d2, d4, d6) and (e2, e4, e6) are related to the immune layer.

For each parameter set, we analyze the quotient network and compute the average indicator \({\bar{\nu }}^{(1)}\) (see Methods) to discriminate between pathological (\({\bar{\nu }}^{(1)} > 0\)) and healthy (\({\bar{\nu }}^{(1)}=0\)) conditions. We take an average over \({{{{{{{\mathcal{N}}}}}}}}\) trials with different random selections of the initial conditions for each cluster to make this indicator robust. The results are shown in Fig. 4b. For each value of Na, a colored line marks the boundary between healthy (leftmost areas) and pathological (rightmost areas) states of the network. This implies that, for a given parameter set (r and β fixed), we can reach a healthy or pathological state depending on the initial conditions, i.e., on Na.

The variability of tumor disease results from the initial genetic state of the tumor cells, their subsequent mutations, epithelial-mesenchymal transitions, and interactions with the innate immune system68. Therefore, starting from different initial conditions allows monitoring of different possible evolutions. The model predicts that the tissue can fall into a pathological state with a high number of tumor cells when the fraction r of initially pathological cells or the age of the patient (β) increases. Moreover, the number Na of healthy clusters influences the onset of the tumor; the higher Na, the wider the parameter region that corresponds to the pathological state. Therefore, in the presence of smaller groups of initially healthy nodes synchronized at different phases, the network will become pathological more easily. By contrast, a single larger phase cluster of initially healthy nodes is more robust to the propagation of the tumor.

Figure 4c1 and c2 show \({\bar{\nu }}^{(\ell )}\) versus β for r = 0.07 and different values of Na. As already shown in Fig. 4b, when Na increases, the pathological condition (\({\bar{\nu }}^{(1)} > 0\)) is obtained in a wider range of β. At the same time, the value of the indicator \({\bar{\nu }}^{(2)}\) concerning the immune layer seems not to be influenced by Na and reveals that this layer is usually not frequency-locked for β > 0.438π. Moreover, for the same set of parameters (β,r) the network exhibits different behaviors, depending on the chosen value of Na, i.e., on the initial conditions, thus clearly showing multi-stability. Once again, this shows that knowledge of β and r is not sufficient to determine the onset of a pathological state and that the initial conditions of the network should also be taken into account.

The network dynamics is more deeply analyzed in some specific cases, by considering r = 0.07 and β = 0.55π for Na = 6 (Fig. 4d) and Na = 15 (Fig. 4e). In particular, the raster plots (see Methods) reported in the leftmost panels show the time evolution of the network. Notice that Na = 6 corresponds to green nodes starting from 6 sets of different initial conditions (Fig. 4d1 and d2), whereas Na = 15 corresponds to green nodes starting from 15 different sets of close initial conditions (Fig. 4e1 and e2), as better detailed in Methods. In Fig. 4d3 and d4, this leads to synchronization between green and red nodes and therefore to a healthy state. Indeed, although the pathological nodes in the parenchymal layer have different natural velocity \({\omega }_{i}^{(1)}\), they synchronize their metabolic rate \({\dot{\phi }}_{i}^{(1)}\) with the healthy nodes due to the coupling. The same information can be extracted from Fig. 4d3, which shows \({\dot{\phi }}_{i}^{(l)}\) in the parenchymal layer. We remark that the metabolic rates in the parenchymal and immune layers are different due to the different coupling terms at regime, as pointed out in Fig. 4d5 and d6, which show the coupling matrix B1 and B2 once the asymptotic state is reached.

When the number of different sets of close initial conditions increases, multi-frequency clusters appear and the network falls into a pathological state (Fig. 4e1 and e2), where the pathological (red) nodes in the parenchyma layer evolve with a higher metabolic rate (Fig. 4e3). A corresponding cluster of lower frequencies is observed in the immune layer (Fig. 4e4). This indicates an essential change in the dynamical state of the immune layer, where all nodes have a velocity close to their velocity when isolated \({\omega }_{i}^{(2)}=0\) (Fig. 4e4). Figure 4e5 and e6 confirm that the asymptotic coupling is weaker than in Fig. 4d5 and d6.

To check the robustness to heterogeneity (which is a common feature in natural networks) of the results obtained in this case study, we selected one parameter of each node randomly (similarly to what was done in refs. 69,70), thus considering a fully heterogeneous network and preventing the possibility of perfect CS. In particular, the natural frequency of the isolated nodes in the first layer is selected as \({\omega }_{i}^{(1)}=| {{{{{{{\mathcal{Z}}}}}}}}(0,\Sigma )|\) for healthy nodes and \({\omega }_{i}^{(1)}=1+{{{{{{{\mathcal{Z}}}}}}}}(0,\Sigma )\) for pathological nodes, where \({{{{{{{\mathcal{Z}}}}}}}}(0,\Sigma )\) is a random variable with mean 0 and standard deviation Σ. Therefore, each node is associated with a different value of the parameter \({\omega }_{i}^{(1)}\) and no exact CS is admitted by the network for any Σ ≠ 0. This implies that to analyze the network we have to simulate its whole dynamics and we cannot employ the quotient network. The obtained results for \(\Sigma \in \left\{1{0}^{-3},1{0}^{-2},1{0}^{-1}\right\}\) are compared to the case Σ = 0 (solid curves in Fig. 5). For each value of Σ, the network behavior is analyzed for \({{{{{{{\mathcal{N}}}}}}}}=30\) different realizations of \({{{{{{{\mathcal{Z}}}}}}}}(0,\Sigma )\). The mean parameter \({\bar{\nu }}^{(1)}\) averaged across the \({{{{{{{\mathcal{N}}}}}}}}\) trials is shown in Fig. 5 for Na = 6 (Fig. 5a), Na = 10 (Fig. 5b), Na = 15 (Fig. 5c).
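As an illustration of this randomization, the following Python sketch draws the natural frequencies of the first layer. The text only states that the random variable has mean 0 and standard deviation Σ; the choice of a Gaussian distribution here is an assumption, and the function name `sample_natural_frequencies` is hypothetical.

```python
import random


def sample_natural_frequencies(is_pathological, sigma, rng):
    """Draw one natural frequency per first-layer node.

    Healthy nodes get |Z(0, sigma)| and pathological nodes get
    1 + Z(0, sigma). Assumption: Z is Gaussian; the source specifies
    only its mean (0) and standard deviation (sigma).
    """
    freqs = []
    for sick in is_pathological:
        z = rng.gauss(0.0, sigma)
        freqs.append(1.0 + z if sick else abs(z))
    return freqs


# Example: 14 pathological and 186 healthy nodes (r = 0.07, N = 200).
rng = random.Random(0)
flags = [True] * 14 + [False] * 186
omega = sample_natural_frequencies(flags, 1e-2, rng)
```

For Σ = 0 the sketch returns exactly the two values 1 and 0 of the homogeneous case, so the same routine covers the benchmark.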

Average parameter \({\bar{\nu }}^{(1)}\) vs β for a heterogeneous network with r = 0.07, Na = 6 (a), 10 (b), and 15 (c) and natural frequency of the isolated nodes in the first layer set to \({\omega }_{i}^{(1)}=| {{{{{{{\mathcal{Z}}}}}}}}(0,\Sigma )|\) for healthy nodes and \({\omega }_{i}^{(1)}=1+{{{{{{{\mathcal{Z}}}}}}}}(0,\Sigma )\) for pathological nodes. Solid lines (orange in (a), yellow in (b), purple in (c)): benchmark solution, obtained with our method for Σ = 0. Crosses: Σ = \(1{0}^{-3}\). Squares: Σ = \(1{0}^{-2}\). Triangles: Σ = \(1{0}^{-1}\).

We remark that the specific value of \({\bar{\nu }}^{(1)}\) is of limited relevance; what matters is the threshold value of β above which \({\bar{\nu }}^{(1)}\ne 0\), corresponding to the onset of a pathological state. With this caveat in mind, for all the considered values of Na, the results obtained with the proposed method remain valid for Σ ≤ \(1{0}^{-2}\). The results shown in Fig. 4b and c have been obtained through the analysis method introduced in this paper, which reduced the computation times by two orders of magnitude with respect to the simulations of the whole network required to obtain Fig. 5. Indeed, the number of equations that must be simulated is reduced from Norig = 80400 to Nred = 128 for Na = 6, as m = 2, L = 2, Q = 7, and D = 1. Moreover, the reduction of the dimensionality of the state space obtained by using the quotient network enables an accurate analysis of the multi-stable solutions displayed by the model.
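The equation counts quoted for the case studies can be reproduced with the short Python sketch below. The closed-form expressions are not stated in this section: they are assumptions, chosen because they match every count reported here (Norig = 80400 and 130, Nred = 12, 128 and 36), and may not coincide with the authors' own bookkeeping. The function names are hypothetical.

```python
def n_equations_full(n_nodes, m, n_layers):
    """Assumed count for the whole network: m state variables per node
    plus one adaptive weight per ordered node pair and per layer."""
    return n_nodes * m + n_layers * n_nodes ** 2


def n_equations_reduced(m, n_layers, n_clusters, d):
    """Assumed count for the reduced problem, fitted to the reported
    values: (Q + D) * (m + L * Q), with Q clusters and D as in the text."""
    return (n_clusters + d) * (m + n_layers * n_clusters)


# Tumor model: N = 200, m = 2, L = 2, Q = 7, D = 1.
print(n_equations_full(200, 2, 2), n_equations_reduced(2, 2, 7, 1))
# Lorenz network: N = 10, m = 3, L = 1, Q = 3, D = 3.
print(n_equations_full(10, 3, 1), n_equations_reduced(3, 1, 3, 3))
```

Under these assumed formulas, the full count grows quadratically with the number of nodes N, whereas the reduced count depends only on the number of clusters Q and on D, which is consistent with the reported savings in computation time.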

Network of Lorenz systems with non-global coupling

To further investigate the potentiality of the method we analyze a synthetic network with non-global coupling. In particular, we consider a small symmetric network with one layer, no delay, N = 10 nodes, whose initial topology is of Erdős-Rényi kind (i.e., dense, but not global), with an edge removal probability of 0.1. This is a single-layer network, therefore we omit the index ℓ. Each oscillator is a Lorenz system, with coupling in the first variable (see Methods). When isolated, each node evolves toward a chaotic attractor. The adaptation law is the classical Hebb’s rule. By using the coloring method proposed in refs. 38,39, we can split the network into Q = 3 clusters: nodes from 1 to 6 belong to cluster \({{{{{{{{\mathcal{C}}}}}}}}}_{1}\) (see Fig. 6a, dark blue nodes), nodes 7 and 8 belong to cluster \({{{{{{{{\mathcal{C}}}}}}}}}_{2}\) (light blue nodes), and the other (yellow) nodes belong to cluster \({{{{{{{{\mathcal{C}}}}}}}}}_{3}\). The second and third clusters are intertwined because their perturbations are associated with the same block of matrix \({\hat{B}}_{\perp }\) (see Supplementary Note 10) and therefore share the same TLEs.

Structure and analyzed clustering pattern (a). Transverse Lyapunov Exponents (TLEs) for cluster \({{{{{{{{\mathcal{C}}}}}}}}}_{1}\) (b) and \({{{{{{{{\mathcal{C}}}}}}}}}_{2}-{{{{{{{{\mathcal{C}}}}}}}}}_{3}\) (c). Synchronization errors between the nodes of cluster \({{{{{{{{\mathcal{C}}}}}}}}}_{1}\) (d), \({{{{{{{{\mathcal{C}}}}}}}}}_{2}\) (e), \({{{{{{{{\mathcal{C}}}}}}}}}_{3}\) (f). The blue areas in (b, c) correspond to a stable clustering pattern (a) and to a low synchronization error (purple regions) in (d-f).

Figure 6b and c show the TLEs for a regular grid of 100 × 100 values of σ and ϵ for cluster \({{{{{{{{\mathcal{C}}}}}}}}}_{1}\) and \({{{{{{{{\mathcal{C}}}}}}}}}_{2}\) (the same result holds for cluster \({{{{{{{{\mathcal{C}}}}}}}}}_{3}\), due to the intertwining mentioned above), respectively.

TLEs lower than zero correspond to parameter sets where all the nodes within the cluster \({{{{{{{{\mathcal{C}}}}}}}}}_{i}\) are synchronized. A similar analysis can be carried out by simulating the whole network and by computing the synchronization error E (see Methods) between the nodes of each cluster. Figure 6d-f shows the corresponding results. As expected, the two methods are in excellent agreement, but the proposed MSF-based method reduces the computational time by one order of magnitude. Indeed, the entries of the matrices ρ1, …, ρ4 are organized into M = 6 diagonal blocks (see Supplementary Note 10) and the number of equations that must be simulated is reduced from Norig = 130 to Nred = 36, as m = 3, L = 1, Q = 3, and D = 3.

Discussion

As stated in the Introduction, a general methodology to treat CS in adaptive networks has not been developed yet. In this article, we have proposed a method to analyze the multi-stability of CS patterns in multi-layer adaptive networks of heterogeneous oscillators with delays. This method exploits an implementation of the MSF approach, which can considerably reduce the dimensionality of the stability analysis. This reduction lowers the computational effort required for the numerical analysis, thus making it suitable for studying multiple coexisting stable solutions. We remark that in the case of complete synchronization (only one cluster), our method can be reduced to the one developed in ref. 26.

Thanks to this approach, we have been able to extend the study of cluster synchronization27,39,45,71,72,73 to the case of networks with adaptive connections of different types, heterogeneous nodes, and node-to-node communication delays. We were also able to show which CS patterns were selected by the chosen adaptation rule. Our results can find application in neural dynamics and machine learning since CS plays a key role in fundamental neural processes, such as coordination and cognition, and plasticity can provide a mechanism to embed in the network a learning strategy to encode specific patterns28,29,74.

As a proof of concept, we applied our method to three networks of oscillators with different dynamical evolution and adaptation rules. In these examples, we identified multi-stable CS patterns displaying different (i.e., stationary or oscillatory) coherent dynamical regimes. The analysis of oscillatory patterns of synchronized clusters can be relevant in biology, where periodic fluctuations play key roles in many processes, including the cell cycle, circadian regulation, metabolism, embryo development, neuronal activity, and cardiac rhythms75. In many biological systems, other key features are adaptation and multi-stability. Therefore, the proposed method is suited to analyze the behavior of these systems, at least in conditions of small heterogeneity, as in the second case study. The proposed approach has two main limitations: the required symmetries of both the coupling and its strength, which can be hard to fulfill in some adaptive systems, and the fact that, if the number of different oscillators is high (i.e., we consider many different fi’s), the advantages of the dimensional reduction are less evident. Despite this, even by reasoning on simplified models we can gain insight into the behavior of real networks with a higher degree of heterogeneity. An a posteriori robustness analysis can verify whether a simplified model captures the main synchronization features of a less idealized network.

In the first considered example (a plastic single-layer network of neural masses), we used the proposed method to analyze the network encoding/decoding capabilities, and we report the possibility of employing phase differences among population oscillations in the β-γ bands to store visual information. The frequency bands at which we observe the oscillations are usually evoked by visual stimulation: β-bursting is believed to be correlated to visual attention76, whereas γ-rhythms are at the basis of visual coding77. Furthermore, phase coupling and coding play a fundamental role in brain activity63,78. Recently, evidence of θ-phase dependent neuronal coding during sequence learning of pictures has been reported also in the human temporal lobe79.

In the second example (a two-layer adaptive network model for the propagation of tumor disease), we used our method to investigate the role of multi-stability at the onset of tumor disease. From the analysis of this model, it emerges that the parameter β in the model (related to the age) is fundamental in controlling the onset of the pathology. The percentage of pathological states increases with β, and therefore with age80,81. Furthermore, the initial number of clusters present in the healthy state has a strong influence on the state of the model: the more clusters are initially present, the more probable it is that the disease will develop. Therefore, a healthy state displaying a high degree of phase and frequency synchronization is more resilient to tumor development. An a posteriori robustness analysis shows that even a simplified model with two different kinds of nodes (one with ωi = 1 and one with ωi = 0) can capture the main synchronization features of a less idealized network with a much higher degree of heterogeneity.

In the third example, the main distinctive feature is the non-all-to-all initial topology.

In all cases, our method allowed us to obtain valuable dimensional reductions and consequent numerical advantages. In general, the extent of the dimensional reduction depends on the network features (initial topology, number of different types of nodes, etc.). Nonetheless, the analysis method is general and can be applied to many examples in various fields. Even if the method is focused on exact CS and has a local validity due to the linearization at the basis of the MSF approach, it can be a useful tool to get a first idea of the possible stable solutions admitted by an adaptive network that can be described by the formalism of Eq. (1). If necessary, the robustness of the analysis against the high heterogeneity that characterizes many of these systems can be checked a posteriori through direct simulations, as we did in the second example.

Methods

Variational equations for the transverse perturbations

As detailed in the Supplementary Note 2, for the transverse perturbations we obtain (for q = 1, …, Q),

where the block-diagonal matrices marked with ⊥ are minors of the complete matrices, In is the identity matrix of size n, \(\hat{B}=TB{T}^{-1}\) and \(\hat{A}=TA{T}^{-1}\) contain only the blocks related to the transverse perturbations. In the above expression,

and

where Df is the m × m Jacobian of the nodes’ vector field and the m-dimensional matrix DS1 (DS2) is the derivative of S with respect to its first (second) argument.

Plastic network of Wilson-Cowan neural masses

This is a single-layer network, therefore we omit the index ℓ. Each node is a neural mass model of the type introduced by Wilson and Cowan82,83, each composed of an excitatory and an inhibitory population. The dynamics of each population is described in terms of the variable Ei (Ii) representing the firing rate of the i-th excitatory (inhibitory) population. The two populations within each node are cross-coupled via a sigmoid function mimicking an effective synaptic coupling with coupling strengths ωξχ with ξ, χ ∈ [E, I], where E (I) denotes the excitatory (inhibitory) population. The parameter τE (τI) is the refractory period of the excitatory (inhibitory) population after a trigger and θE (θI) determines the firing rate of the excitatory (inhibitory) population when isolated. Furthermore, all the neural mass models (nodes) are globally coupled (aij = 1) via excitatory population activities through Hebbian-like adaptive connections (see Eq. (8) below). The strength of the global recurrent coupling is controlled by the parameter σ. The dynamical evolution of the i-th neural mass obeys the following equations:

where the sigmoid functions ΓI and ΓE in Eq. (7) have the following expressions

and range between 0 and 1. The parameters entering Eqs. (7) and (8) are fixed as follows: \(\hat{\tau }=8\) ms, γE = 1.3, γI = 2, θE = 2.2, θI = 3.7, wEE = 16, wEI = 12, wIE = 15, wII = 3 and ϵ = 0.01 Hz. The transmission delay is set to τ = 10 ms. The time constants τE and τI and parameter θE are chosen so that (i) the isolated node is quiescent and (ii) the brain waves that the network can generate are in the β − γ range.

Multi-layer adaptive network for tumor disease propagation

We model the organic tissue as a two-layer adaptive network. The network layer of parenchymal cells (super-script (1)) is composed of N phase oscillators \({\phi }_{i}^{(1)}\), i = 1, …, N, of two kinds, whereas the network layer of immune cells (super-script (2)) is composed of N adaptively coupled homogeneous phase oscillators \({\phi }_{i}^{(2)}\). The communication through cytokines that mediate the interaction between the parenchymal cells is modeled by the coupling weights \({b}_{ij}^{1}\), and that between the immune cells by the coupling weights \({b}_{ij}^{2}\)57. (To employ our formalism, we have substituted the coupling weights \({k}_{ij}^{1}\) defined in ref. 57 with \({b}_{ij}^{1}=1+{k}_{ij}^{1}\).)

To tailor the model to the formalism of Eq. (1), the network is modeled as a N-node network, where the i-th node contains the i-th oscillator of both the parenchymal and the immune layer. The state equations of the network are:

where γ = 0.3, α = 0.28π, ϵ = 0.3, ω(2) = 0. The other parameters are specified in the Results.

To quantitatively characterize the collective dynamics of the network we use the indicator

where \(\langle {\dot{\phi }}_{j}^{(1)}\rangle =\frac{1}{T}\int\nolimits_{t}^{t+T}{\dot{\phi }}_{j}^{(1)}({t}^{{\prime} })d{t}^{{\prime} }\) and \({\bar{\omega }}^{(\ell )}=\frac{1}{N}{\sum }_{j}\langle {\dot{\phi }}_{j}^{(\ell )}\rangle \) are the mean phase velocity and the corresponding spatial (population) average for the oscillators in the first layer. In practice, the parameter ν(ℓ) measures the standard deviation of the average phase velocities. A finite value of ν(ℓ) indicates the formation of multifrequency clusters in layer ℓ, while a zero value of ν(ℓ) is associated with a fully synchronized state. The network splitting into clusters with different frequencies in layer ℓ = 1 is taken as an indication of a pathological state, whereas the fully synchronous situation of the same layer denotes a healthy state. Indeed, (i) tumor cells are less energy-efficient and thus have a faster cellular metabolism (i.e., multifrequency clusters appear in pathological conditions) and (ii) in tumor disease mutant cells are almost always parenchymal cells, i.e., only ν(1) determines the onset of the pathology.
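A minimal Python sketch of how such an indicator could be evaluated from simulated phases is given below. It assumes unwrapped phase samples on a uniform time grid and a population standard deviation (normalization 1/N); both choices, as well as the function names, are assumptions rather than details taken from the text.

```python
import math


def mean_phase_velocities(phases, dt):
    """Time-averaged phase velocity of each oscillator.

    phases[j] holds the unwrapped phase samples of oscillator j, taken
    every dt time units. The time average of the phase derivative over
    the window equals the total phase advance divided by the window length.
    """
    window = dt * (len(phases[0]) - 1)
    return [(traj[-1] - traj[0]) / window for traj in phases]


def nu_indicator(mean_velocities):
    """Standard deviation of the mean phase velocities across the
    population (assumption: 1/N normalization)."""
    n = len(mean_velocities)
    omega_bar = sum(mean_velocities) / n
    return math.sqrt(sum((w - omega_bar) ** 2 for w in mean_velocities) / n)


# Two frequency clusters (velocities 0 and 2) give a non-zero indicator;
# a frequency-locked population gives zero.
print(nu_indicator([0.0, 0.0, 2.0, 2.0]))
print(nu_indicator([1.0, 1.0, 1.0]))
```

When only the quotient network is simulated, each cluster velocity would have to be weighted by the number of nodes in that cluster before taking the standard deviation; the sketch above works on per-node values.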

The parameter ν(ℓ) can be computed efficiently by simulating only the quotient network, as \({{{{{{{{\boldsymbol{x}}}}}}}}}_{i}={[{\phi }_{i}^{(1)},{\phi }_{i}^{(2)}]}^{T}={{{{{{{{\boldsymbol{s}}}}}}}}}_{p}\) if node \(i\in {{{{{{{{\mathcal{C}}}}}}}}}_{p}\).

To analyze multi-stability, we perform \({{{{{{{\mathcal{N}}}}}}}}=100\) simulations of the quotient network, starting from different random initial conditions and we compute the parameter \({\bar{\nu }}^{(\ell )}\) as the average value of ν(ℓ) across the \({{{{{{{\mathcal{N}}}}}}}}\) trials. As for parameter ν(1), a positive value of \({\bar{\nu }}^{(1)}\) indicates a pathological state.

Phase lag computation

The definition of the phase lag Δ assumes that isolated or coupled nodes (neural masses) maintain relatively close temporal characteristics and each one evolves on a structurally stable periodic orbit in the state space of the corresponding model. The phase variable, defined modulo 1, indicates the position of the node j on its periodic orbit. Consequently, the phase lag in a network of two neural masses can be described by Δ, which is the difference between the corresponding phase variables. The time evolution of Δ, being quite complex due to nonlinear interactions, can be determined through numerical simulations. Following ref. 84, we first compute all the crossing times ti(k) (indexed by k) at which the variable Ei passes a threshold Eth = 0.2. The phase lag Δ measures the normalized delay between the crossing times of nodes i and j corresponding to the k-th event: \(\Delta (k)=2\pi \frac{{t}_{j}(k)-{t}_{i}(k)}{{t}_{i}(k)-{t}_{i}(k-1)}\,{\rm{mod}}\,2\pi \). Δ is the asymptotic value of Δ(k).
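The procedure can be sketched in Python as follows. The sketch assumes that only upward threshold crossings are counted, that crossing times are refined by linear interpolation between samples, and that the k-th events of the two nodes are paired by index; none of these details is specified in the text, and the function names are hypothetical.

```python
import math


def upward_crossings(times, values, threshold):
    """Times at which the signal crosses the threshold from below,
    located by linear interpolation between consecutive samples."""
    crossings = []
    for k in range(1, len(values)):
        v0, v1 = values[k - 1], values[k]
        if v0 < threshold <= v1:
            frac = (threshold - v0) / (v1 - v0)
            crossings.append(times[k - 1] + frac * (times[k] - times[k - 1]))
    return crossings


def phase_lags(t_i, t_j):
    """Normalized delay between the k-th crossings of nodes i and j,
    expressed as an angle in [0, 2*pi)."""
    lags = []
    for k in range(1, min(len(t_i), len(t_j))):
        period = t_i[k] - t_i[k - 1]
        lags.append((2 * math.pi * (t_j[k] - t_i[k]) / period) % (2 * math.pi))
    return lags


# Node j fires a quarter of a period after node i: every lag equals pi/2.
lags = phase_lags([0.0, 1.0, 2.0, 3.0], [0.25, 1.25, 2.25, 3.25])
```

The asymptotic phase lag would then be read off from the last entries of the returned list, once they have settled to a constant value.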

Raster plots

The raster plots in Fig. 4 were obtained as follows. We consider a specific clusterization with Q = Na + 1 clusters. We randomly select the initial condition sq(0) of the q-th cluster Cq. All the nodes within this cluster are set with close initial conditions, i.e., xi(0) = sq(0) + ζ if i ∈ Cq, where ζ is a normally distributed random variable with mean 0 and variance \(1{0}^{-2}\). Then, we simulate the network for 660 units of time. We compute the time instants \({t}_{i}^{(j)},i=1,\ldots ,N\) when the i-th phase \({\phi }_{i}^{(\ell )}\) crosses π, where ℓ denotes the layer. All these time instants are marked in the raster plots with green (red) dots for healthy (pathological) nodes.
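The two ingredients of this procedure (perturbed initial conditions and detection of the raster events) can be sketched in Python as below. The sketch assumes that ζ is drawn independently for each node and each state component, that phases are stored unwrapped and increase in time, and that an event is assigned to the first sample after the crossing; these are assumptions, and the function names are hypothetical.

```python
import math
import random


def perturbed_initial_conditions(cluster_states, labels, variance, rng):
    """Initial state of each node: the state of its cluster plus zero-mean
    Gaussian noise with the given variance (independent per component)."""
    std = math.sqrt(variance)
    return [[s + rng.gauss(0.0, std) for s in cluster_states[q]] for q in labels]


def pi_crossing_times(times, phase):
    """Sample times at which an increasing, unwrapped phase passes
    pi modulo 2*pi (one raster event per rotation)."""
    events = []
    for k in range(1, len(phase)):
        before = math.floor((phase[k - 1] - math.pi) / (2 * math.pi))
        after = math.floor((phase[k] - math.pi) / (2 * math.pi))
        if after > before:
            events.append(times[k])
    return events


# Two clusters with 2-dimensional states; nodes 0 and 1 belong to cluster 0.
rng = random.Random(1)
x0 = perturbed_initial_conditions([[0.0, 1.0], [2.0, 3.0]], [0, 0, 1], 1e-2, rng)
events = pi_crossing_times([0, 1, 2, 3, 4, 5], [0.0, 2.0, 4.0, 6.0, 8.0, 10.0])
```

Plotting each event time against the node index, colored by node type, gives a raster of the kind described above.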

Synthetic network with non-global coupling

This is a single-layer network, therefore we omit the index ℓ. Each node is described by a Lorenz system coupled through Hebbian-like adaptive connections on the x variable:

where \(\tilde{\sigma }=10\), \(\tilde{\rho }=28\) and \(\tilde{\beta }=8/3\).
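For reference, the dynamics of a single uncoupled node with these parameter values can be sketched as below. This is the standard Lorenz vector field only, under the assumption that \(\tilde{\sigma}\), \(\tilde{\rho}\) and \(\tilde{\beta}\) play their usual roles; the Hebbian-like coupling and adaptation terms are deliberately left out, and the function name `lorenz_rhs` is hypothetical.

```python
import numpy as np

SIGMA, RHO, BETA = 10.0, 28.0, 8.0 / 3.0   # parameter values quoted in the text

def lorenz_rhs(state, sigma=SIGMA, rho=RHO, beta=BETA):
    """Right-hand side of the standard, uncoupled Lorenz system."""
    x, y, z = state
    return np.array([
        sigma * (y - x),        # dx/dt
        x * (rho - z) - y,      # dy/dt
        x * y - beta * z,       # dz/dt
    ])

# Sanity check: the nontrivial equilibrium (c, c, rho - 1),
# with c = sqrt(beta * (rho - 1)), makes the vector field vanish.
c = np.sqrt(BETA * (RHO - 1.0))
residual = lorenz_rhs(np.array([c, c, RHO - 1.0]))
```

Any coupling term would be added to this vector field on top of the isolated dynamics shown here.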

The synchronization error among the Ni nodes in cluster i is computed as:

where \(\bar{\boldsymbol{x}}_{i}\) is the mean state among the nodes in cluster i, i.e., \(\bar{\boldsymbol{x}}_{i} = \frac{1}{N_i}\sum_{j \in C_i} \boldsymbol{x}_{j}\).
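A minimal sketch of this computation at a single time instant, assuming the error is the root-mean-square distance of the nodes of a cluster from their mean state (the exact normalization is an assumption here, and the function names are hypothetical):

```python
import numpy as np

def cluster_mean(states):
    """Mean state of a cluster; `states` has shape (N_i, n)."""
    return np.mean(np.asarray(states, dtype=float), axis=0)

def rms_sync_error(states):
    """Root-mean-square Euclidean distance of the nodes from the cluster mean.

    Assumption: this RMS form stands in for the synchronization error;
    it is zero exactly when all nodes of the cluster share the same state.
    """
    states = np.asarray(states, dtype=float)
    deviation = states - cluster_mean(states)
    return np.sqrt(np.mean(np.sum(deviation ** 2, axis=1)))

# Usage: two Lorenz-like states that differ only in the x variable.
example = np.array([[0.0, 0.0, 0.0],
                    [2.0, 0.0, 0.0]])
error = rms_sync_error(example)
```

Applied to each sample of a simulated trajectory, a measure of this kind decays to zero when the cluster synchronizes.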

Data availability

All data generated or analyzed during this study are included in this published article (and its supplementary information files).

Code availability

The source code for the numerical simulations presented in the paper will be made available upon request.

References

Manrubia, S. C., Mikhailov, A. S. & Zanette, D. Emergence of dynamical order: synchronization phenomena in complex systems, vol. 2 (World Scientific, 2004).

Hwang, K.-S., Tan, S.-W. & Chen, C.-C. Cooperative strategy based on adaptive Q-learning for robot soccer systems. IEEE Trans. Fuzzy Syst. 12, 569–576 (2004).

Blasius, B., Huppert, A. & Stone, L. Complex dynamics and phase synchronization in spatially extended ecological systems. Nature 399, 354–359 (1999).

Passino, K. Biomimicry of bacterial foraging for distributed optimization and control. IEEE Control Syst. Mag. 22, 52–67 (2002).

Orosz, G., Wilson, R. E., Szalai, R. & Stépán, G. Exciting traffic jams: Nonlinear phenomena behind traffic jam formation on highways. Phys. Rev. E 80, 046205 (2009).

Chandler, P., Pachter, M. & Rasmussen, S. UAV cooperative control. In Proceedings of the 2001 American Control Conference (Cat. No.01CH37148), vol. 1, 50–55 (2001).

Ward, L. M. Synchronous neural oscillations and cognitive processes. Trends Cogn. Sci. 7, 553–559 (2003).

Guevara Erra, R., Perez Velazquez, J. L. & Rosenblum, M. Neural synchronization from the perspective of non-linear dynamics. Front. Comput. Neurosci. 11, 98 (2017).

Schnitzler, A. & Gross, J. Normal and pathological oscillatory communication in the brain. Nat. Rev. Neurosci. 6, 285–296 (2005).

Ijspeert, A. J. Central pattern generators for locomotion control in animals and robots: a review. Neural Netw. 21, 642–653 (2008).

Kaneko, K. Relevance of dynamic clustering to biological networks. Phys. D: Nonlinear Phenom. 75, 55–73 (1994).

Buono, P.-L. et al. Symmetry-breaking bifurcations and patterns of oscillations in rings of crystal oscillators. SIAM J. Appl. Dynamical Syst. 17, 1310–1352 (2018).

Gross, T. & Blasius, B. Adaptive coevolutionary networks: a review. J. R. Soc. Interface 5, 259–271 (2008).

Gross, T. & Sayama, H. Adaptive networks (Springer, 2009).

Berner, R. Patterns of synchrony in complex networks of adaptively coupled oscillators (Springer Nature, 2021).

Berner, R., Yanchuk, S. & Schöll, E. What adaptive neuronal networks teach us about power grids. Phys. Rev. E 103, 042315 (2021).

Berner, R. & Yanchuk, S. Synchronization in networks with heterogeneous adaptation rules and applications to distance-dependent synaptic plasticity. Front. Appl. Math. Stat. 7, 714978 (2021).

Thiele, M., Berner, R., Tass, P. A., Schöll, E. & Yanchuk, S. Asymmetric adaptivity induces recurrent synchronization in complex networks. Chaos: Interdiscip. J. Nonlinear Sci. 33, 023123 (2023).

Fialkowski, J. et al. Heterogeneous nucleation in finite-size adaptive dynamical networks. Phys. Rev. Lett. 130, 067402 (2023).

Berner, R., Gross, T., Kuehn, C., Kurths, J. & Yanchuk, S. Adaptive dynamical networks. Phys. Rep. 1031, 1–59 (2023).

Sawicki, J. et al. Perspectives on adaptive dynamical systems. Chaos: Interdiscip. J. Nonlinear Sci. 33, 071501 (2023).

Johnson-Groh, M. A look at adaptive systems from biology to machine learning. Scilight 2023, 301105 (2023).

Zhou, C. & Kurths, J. Dynamical weights and enhanced synchronization in adaptive complex networks. Phys. Rev. Lett. 96, 164102 (2006).

Sorrentino, F. & Ott, E. Adaptive synchronization of dynamics on evolving complex networks. Phys. Rev. Lett. 100, 114101 (2008).

Sorrentino, F., Barlev, G., Cohen, A. B. & Ott, E. The stability of adaptive synchronization of chaotic systems. Chaos: Interdiscip. J. Nonlinear Sci. 20, 013103 (2010).

Berner, R., Vock, S., Schöll, E. & Yanchuk, S. Desynchronization transitions in adaptive networks. Phys. Rev. Lett. 126, 028301 (2021).

Sorrentino, F., Pecora, L. M., Hagerstrom, A. M., Murphy, T. E. & Roy, R. Complete characterization of the stability of cluster synchronization in complex dynamical networks. Sci. Adv. 2, e1501737 (2016).

Palm, G. Neural associative memories and sparse coding. Neural Netw. 37, 165–171 (2013).

Yoshioka, M. Linear stability analysis of retrieval state in associative memory neural networks of spiking neurons. Phys. Rev. E 66, 061913 (2002).

Pecora, L. M. & Carroll, T. L. Master stability functions for synchronized coupled systems. Phys. Rev. Lett. 80, 2109 (1998).

Aoki, T. & Aoyagi, T. Self-organized network of phase oscillators coupled by activity-dependent interactions. Phys. Rev. E 84, 066109 (2011).

Berner, R., Schöll, E. & Yanchuk, S. Multiclusters in networks of adaptively coupled phase oscillators. SIAM J. Appl. Dynamical Syst. 18, 2227–2266 (2019).

Feketa, P., Schaum, A. & Meurer, T. Synchronization and multicluster capabilities of oscillatory networks with adaptive coupling. IEEE Trans. Autom. Control 66, 3084–3096 (2021).

Gerstner, W. & Kistler, W. M. Mathematical formulations of Hebbian learning. Biol. Cybern. 87, 404–415 (2002).

Wang, X.-J. & Rinzel, J. Alternating and synchronous rhythms in reciprocally inhibitory model neurons. Neural Comput. 4, 84–97 (1992).

Chakravartula, S., Indic, P., Sundaram, B. & Killingback, T. Emergence of local synchronization in neuronal networks with adaptive couplings. PLoS ONE 12, e0178975 (2017).

Yuan, W.-J. & Zhou, C. Interplay between structure and dynamics in adaptive complex networks: Emergence and amplification of modularity by adaptive dynamics. Phys. Rev. E 84, 016116 (2011).

Lodi, M., Della Rossa, F., Sorrentino, F. & Storace, M. An algorithm for finding equitable clusters in multi-layer networks. In 2020 IEEE International Symposium on Circuits and Systems (ISCAS), 1–5 (IEEE, 2020).

Lodi, M., Sorrentino, F. & Storace, M. One-way dependent clusters and stability of cluster synchronization in directed networks. Nat. Commun. 12, 4073 (2021).

Belykh, I. & Hasler, M. Mesoscale and clusters of synchrony in networks of bursting neurons. Chaos: Interdiscip. J. Nonlinear Sci. 21, 016106 (2011).

Uhlig, F. Simultaneous block diagonalization of two real symmetric matrices. Linear Algebra Its Appl. 7, 281–289 (1973).

Maehara, T. & Murota, K. A numerical algorithm for block-diagonal decomposition of matrix *-algebras with general irreducible components. Jpn. J. Ind. Appl. Math. 27, 263–293 (2010).

Murota, K., Kanno, Y., Kojima, M. & Kojima, S. A numerical algorithm for block-diagonal decomposition of matrix *-algebras with application to semidefinite programming. Jpn. J. Ind. Appl. Math. 27, 125–160 (2010).

Irving, D. & Sorrentino, F. Synchronization of dynamical hypernetworks: Dimensionality reduction through simultaneous block-diagonalization of matrices. Phys. Rev. E 86, 056102 (2012).

Zhang, Y. & Motter, A. E. Symmetry-independent stability analysis of synchronization patterns. SIAM Rev. 62, 817–836 (2020).

Panahi, S., Klickstein, I. & Sorrentino, F. Cluster synchronization of networks via a canonical transformation for simultaneous block diagonalization of matrices. Chaos: Interdiscip. J. Nonlinear Sci. 31, 111102 (2021).

Pikovsky, A. S. & Grassberger, P. Symmetry breaking bifurcation for coupled chaotic attractors. J. Phys. A: Math. Gen. 24, 4587 (1991).

Heagy, J., Carroll, T. & Pecora, L. Desynchronization by periodic orbits. Phys. Rev. E 52, R1253 (1995).

Pikovsky, A. & Politi, A. Lyapunov exponents: a tool to explore complex dynamics (Cambridge University Press, 2016).

Pecora, L. M., Sorrentino, F., Hagerstrom, A. M., Murphy, T. E. & Roy, R. Cluster synchronization and isolated desynchronization in complex networks with symmetries. Nat. Commun. 5, 4079 (2014).

Lodi, M., Sorrentino, F. & Storace, M. Forget partitions? Not yet ... In 2022 IEEE International Symposium on Circuits and Systems (ISCAS), 1531–1535 (IEEE, 2022).

Düzel, E., Penny, W. D. & Burgess, N. Brain oscillations and memory. Curr. Opin. Neurobiol. 20, 143–149 (2010).

Martin, C. & Ravel, N. Beta and gamma oscillatory activities associated with olfactory memory tasks: different rhythms for different functional networks? Front. Behav. Neurosci. 8, 218 (2014).

Lundqvist, M. et al. Gamma and beta bursts underlie working memory. Neuron 90, 152–164 (2016).

Hanslmayr, S., Axmacher, N. & Inman, C. S. Modulating human memory via entrainment of brain oscillations. Trends Neurosci. 42, 485–499 (2019).

Weinberg, R. The biology of cancer, 2nd edn (Garland Publishing, 2014).

Sawicki, J., Berner, R., Löser, T. & Schöll, E. Modeling tumor disease and sepsis by networks of adaptively coupled phase oscillators. Front. Netw. Physiol. 1, 730385 (2022).

Moran, R., Pinotsis, D. A. & Friston, K. Neural masses and fields in dynamic causal modeling. Front. Comput. Neurosci. 7, 57 (2013).

Wilson, H. R. & Cowan, J. D. A mathematical theory of the functional dynamics of cortical and thalamic nervous tissue. Kybernetik 13, 55–80 (1973).

Hebb, D. O. The organization of behavior: A neuropsychological theory (Psychology Press, 2005).

Maruyama, Y., Kakimoto, Y. & Araki, O. Analysis of chaotic oscillations induced in two coupled Wilson–Cowan models. Biol. Cybern. 108, 355–363 (2014).

Haenschel, C., Baldeweg, T., Croft, R. J., Whittington, M. & Gruzelier, J. Gamma and beta frequency oscillations in response to novel auditory stimuli: a comparison of human electroencephalogram (EEG) data with in vitro models. Proc. Natl Acad. Sci. 97, 7645–7650 (2000).

Whittingstall, K. & Logothetis, N. K. Frequency-band coupling in surface EEG reflects spiking activity in monkey visual cortex. Neuron 64, 281–289 (2009).

Mongillo, G., Barak, O. & Tsodyks, M. Synaptic theory of working memory. Science 319, 1543–1546 (2008).

Altan-Bonnet, G. & Mukherjee, R. Cytokine-mediated communication: a quantitative appraisal of immune complexity. Nat. Rev. Immunol. 19, 205–217 (2019).

Morán, G. A. G., Parra-Medina, R., Cardona, A. G., Quintero-Ronderos, P. & Rodríguez, É. G. Cytokines, chemokines and growth factors. In Autoimmunity: From Bench to Bedside [Internet] (El Rosario University Press, 2013).

Aoki, T. & Aoyagi, T. Co-evolution of phases and connection strengths in a network of phase oscillators. Phys. Rev. Lett. 102, 034101 (2009).

Longo, D. L. Harrison’s Hematology and Oncology (McGraw-Hill Education, 2017).

Wang, D. & Terman, D. Locally excitatory globally inhibitory oscillator networks. IEEE Trans. Neural Netw. 6, 283–286 (1995).

Baruzzi, V., Lodi, M., Sorrentino, F. & Storace, M. Bridging functional and anatomical neural connectivity through cluster synchronization. Sci. Rep. 13, 22430 (2023).

Belykh, V. N., Osipov, G. V., Petrov, V. S., Suykens, J. A. & Vandewalle, J. Cluster synchronization in oscillatory networks. Chaos: Interdiscip. J. Nonlinear Sci. 18, 037106 (2008).

Cho, Y. S., Nishikawa, T. & Motter, A. E. Stable chimeras and independently synchronizable clusters. Phys. Rev. Lett. 119, 084101 (2017).

Della Rossa, F. et al. Symmetries and cluster synchronization in multilayer networks. Nat. Commun. 11, 1–17 (2020).

Fellous, J.-M. & Linster, C. Computational models of neuromodulation. Neural Comput. 10, 771–805 (1998).

Winfree, A. T. The geometry of biological time, vol. 12 (Springer Science & Business Media, 2001).

Wróbel, A. et al. Beta activity: a carrier for visual attention. Acta Neurobiol. Exp. 60, 247–260 (2000).

Murty, D. V., Shirhatti, V., Ravishankar, P. & Ray, S. Large visual stimuli induce two distinct gamma oscillations in primate visual cortex. J. Neurosci. 38, 2730–2744 (2018).

Moser, E. I., Kropff, E. & Moser, M.-B. Place cells, grid cells, and the brain’s spatial representation system. Annu. Rev. Neurosci. 31, 69–89 (2008).

Reddy, L. et al. Theta-phase dependent neuronal coding during sequence learning in human single neurons. Nat. Commun. 12, 4839 (2021).

Flanders, W. D., Lally, C. A., Zhu, B.-P., Henley, S. J. & Thun, M. J. Lung cancer mortality in relation to age, duration of smoking, and daily cigarette consumption: results from Cancer Prevention Study II. Cancer Res. 63, 6556–6562 (2003).

Yang, M. et al. Global trends and age-specific incidence and mortality of cervical cancer from 1990 to 2019: an international comparative study based on the global burden of disease. BMJ Open 12, e055470 (2022).

Wilson, H. R. & Cowan, J. D. Excitatory and inhibitory interactions in localized populations of model neurons. Biophysical J. 12, 1–24 (1972).

Chow, C. C. & Karimipanah, Y. Before and beyond the Wilson–Cowan equations. J. Neurophysiol. 123, 1645–1656 (2020).

Jalil, S., Allen, D., Youker, J. & Shilnikov, A. Toward robust phase-locking in Melibe swim central pattern generator models. Chaos: Interdiscip. J. Nonlinear Sci. 23, 046105 (2013).

Acknowledgements

The authors would like to express their sincere appreciation to Eckehard Schöll and Rico Berner for reading the first version of this manuscript and providing constructive comments. AT received financial support from the Labex MME-DII (Grant No ANR-11-LBX-0023-01), the ANR Project ERMUNDY (Grant No ANR-18-CE37-0014), and CY Generations (Grant No ANR-21-EXES-0008), all part of the French programme “Investissements d’Avenir”. ML has been supported by INFN, Sezione di Firenze for his visit to Sesto Fiorentino (Italy).

Author information

Contributions

F.S., M.S., and A.T. designed and supervised the research; M.L., S.P., F.S., and M.S. developed the theory; M.L. and S.P. applied the theory to the case studies; M.L., F.S., M.S., and A.T. analyzed the data and interpreted the results; M.S. wrote and revised the manuscript; M.L., F.S., and A.T. contributed to writing and revised the manuscript; S.P. revised the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Communications Physics thanks the anonymous reviewers for their contribution to the peer review of this work.
