Abstract

Diabetic eye disease (DED) is a leading cause of blindness worldwide. Annual DED testing is recommended for adults with diabetes, but adherence to this guideline has historically been low. In 2020, Johns Hopkins Medicine (JHM) began deploying autonomous AI for DED testing. In this study, we aimed to determine whether autonomous AI implementation was associated with increased adherence to annual DED testing, and how this differed across patient populations. JHM primary care sites were categorized as “non-AI” (no autonomous AI deployment) or “AI-switched” (autonomous AI deployment by 2021). We conducted a propensity score weighting analysis to compare change in adherence rates from 2019 to 2021 between non-AI and AI-switched sites. Our study included all adult patients with diabetes (>17,000) managed within JHM and has three major findings. First, AI-switched sites experienced a 7.6 percentage point greater increase in DED testing than non-AI sites from 2019 to 2021 (p < 0.001). Second, the adherence rate for Black/African Americans increased by 12.2 percentage points within AI-switched sites but decreased by 0.6 percentage points within non-AI sites (p < 0.001), suggesting that autonomous AI deployment improved access to retinal evaluation for historically disadvantaged populations. Third, autonomous AI is associated with improved health equity; for example, the adherence rate gap between Asian Americans and Black/African Americans shrank from 15.6 percentage points in 2019 to 3.5 percentage points in 2021. In summary, our results from real-world deployment in a large integrated healthcare system suggest that autonomous AI is associated with improvement in overall DED testing adherence, patient access, and health equity.

Introduction

Diabetic eye disease (DED) affects a third of people with diabetes mellitus (DM) and is a leading cause of blindness and visual impairment in working-aged adults in the developed world1. Since patients with DED often have no symptoms in the early stages of disease, current guidelines from the American Academy of Ophthalmology and American Diabetes Association recommend that patients with diabetes receive an annual eye examination2,3. These annual screenings allow for early diagnosis and treatment that can help prevent severe vision loss4. As such, annual DED testing is included as a Healthcare Effectiveness Data and Information Set (HEDIS) measure.

Unfortunately, adherence to these annual DED testing guidelines has historically been low. A previous study in the United States found that only 50–60% of Medicare beneficiaries with DM received annual eye exams5. This adherence rate is even lower (30–40%) in smaller health systems and low-income metropolitan patient populations3,6. Previous qualitative investigations have identified misinformation about the importance of regular testing, logistic challenges with scheduling appointments, and anxiety as key barriers to annual eye exams7. In 2018, the FDA De Novo authorized the use of an autonomous artificial intelligence (AI) system (LumineticsCore® formerly known as IDx-DR, Digital Diagnostics, Coralville, IA) to diagnose DED. This system can autonomously analyze images of the retina at the point of care, such as at primary care clinics, with a sensitivity of 87.2% and specificity of 90.7% in a pivotal clinical trial against a prognostic standard, i.e. patient outcome8. Subsequent studies have further validated the accuracy, sensitivity, and specificity of this autonomous AI technology, using ophthalmologists’ reads as the reference standard9,10,11.

In 2020, Johns Hopkins Medicine, an integrated healthcare system with over 30 primary care sites, began deploying autonomous AI for DED testing in some of its primary care clinics. This study aimed to determine whether implementation of this technology increased the rate of care gap closure in different patient populations and whether its implementation was associated with higher overall adherence rates.

Results

Patient demographics

A total of 17,674 patients with diabetes were managed at Johns Hopkins Medicine in 2019. Most patients were female (53.0%) and under 65 years old (69.0%). The two most highly represented racial groups were White (45.2%) and Black or African American (40.6%), and the two most common insurance coverages were commercial/other (48.7%) and Medicare (30.0%). Nearly all patients resided in an urban setting.

A total of 17,590 patients with diabetes were managed at Johns Hopkins Medicine in 2021. Again, most patients were female (51.1%) and under 65 years old (71.1%). The two most highly represented racial groups were again White (47.9%) and Black or African American (37.1%), and most patients had either commercial/other insurance (53.1%) or Medicare (28.1%). A detailed breakdown of the population demographics is available in Table 1. Overall, the patient demographics between AI-switched sites and non-AI sites were similar. Patients at non-AI sites had a higher inflation-adjusted mean income ($90,200 in 2019 and $98,000 in 2021) than patients at AI-switched sites ($63,400 in 2019 and $63,400 in 2021). Additionally, more patients at non-AI sites than at AI-switched sites were covered under military insurance (13.0% vs. 6.2%).

Propensity score weighting analysis

In our propensity score weighting analysis, we assigned inverse probability weights to patients based on social determinants of health to ensure comparability across the different types of sites and years. The analysis showed that DED testing adherence among AI-switched sites increased by 7.4 percentage points (95% CI: [5.2, 9.5], p < 0.001) from 2019 to 2021. In contrast, the adherence rate among non-AI sites decreased by 0.3 percentage points (95% CI: [−1.5, 0.9], p = 0.653), a change that was not statistically significant. The change in adherence rate at AI-switched sites was 7.6 percentage points (95% CI: [5.2, 10.1], p < 0.001) higher than that at non-AI sites, indicating a significantly greater increase in DED testing between 2019 and 2021 at AI-switched sites than at non-AI sites.
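The contrast between the two site types can be illustrated with a minimal arithmetic sketch. It uses only the rounded point estimates quoted in this section; the function name is hypothetical, and this is not the weighted model used in the study. Subtracting the rounded estimates gives 7.7, while the model-based estimate reported in the text is 7.6 percentage points; the small difference presumably reflects rounding of the inputs.

```python
def difference_in_differences(change_treated: float, change_control: float) -> float:
    """Return the treated-group change minus the control-group change."""
    return change_treated - change_control


# Weighted changes in adherence from 2019 to 2021, in percentage points (from the text).
change_ai_switched = 7.4
change_non_ai = -0.3

did = difference_in_differences(change_ai_switched, change_non_ai)
print(f"Difference-in-differences from rounded inputs: {did:.1f} percentage points")
```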

Subgroup changes in DED adherence rate from 2019 to 2021

In 2019, the overall adherence rate across all sites was 42.2%. The baseline adherence rate was 46.1% at AI-switched sites and 40.4% at non-AI sites. In 2021, the overall adherence rate across all sites increased to 44.8%. Of note, the adherence rate increased to 54.5% at AI-switched sites and remained stable at 40.3% at non-AI sites. A detailed breakdown in adherence rate for each patient population is shown in Table 2.

We examined the change in DED testing adherence rate across 8 demographic and social determinants of health categories: gender, age, race, ethnicity, language preference, insurance coverage, geography, and national ADI quartile (Table 2). The largest adherence gaps at AI-switched sites in 2019 had decreased substantially by 2021, after AI implementation. For the race category, the largest initial gap in 2019 was between American Indian or Alaska Native patients and Native Hawaiian or Other Pacific Islander patients. This gap shrank from 37.3 percentage points in 2019 to 20.5 percentage points in 2021. For the insurance category, the largest initial gap in 2019 was between patients with military insurance and those covered by Medicaid. This gap shrank from 32.9 percentage points in 2019 to 21.1 percentage points in 2021. For the ADI category, the largest initial gap in 2019 was between the 1st and 2nd ADI quartiles. This gap went from 8.8 percentage points in 2019 to −2.5 percentage points in 2021, meaning that its direction reversed. From 2019 to 2021, among the AI-switched sites, the largest improvements in adherence rate within the categories of race, insurance coverage, and ADI quartile were +19.0 percentage points in Native Hawaiian or Other Pacific Islander patients, +12.2 percentage points in Black or African American patients, +13.7 percentage points in Medicaid-insured patients, and +11.7 percentage points in the 2nd ADI quartile.

Discussion

In this study, we examined the change in annual DED testing adherence rate before and after implementation of autonomous AI technology at primary care clinics at Johns Hopkins Medicine. From 2019 to 2021, that is, coming out of the COVID-19 pandemic, which caused widespread adherence issues, we observed a substantial increase in adherence rate among AI-switched sites, while the adherence rate among non-AI sites remained unchanged. This improvement in AI-switched sites over non-AI sites, 7.6 percentage points, remained statistically significant after adjustment by propensity score weighting methods. Among the AI-switched sites, the patient populations that experienced substantial improvement in adherence rate included Black or African American patients, patients with Medicaid insurance coverage, and patients with high ADI scores. Therefore, our data suggest that deployment of autonomous AI improved access to retinal evaluation and health equity in these historically disadvantaged patient groups. Our additional observations are as follows.

First, the overall adherence rate across all sites was 42.2% in 2019, which was lower than the nationwide average of 58.3%12, but higher than the 34% seen in other low-income metropolitan populations in the United States3. The overall adherence rate in 2021 increased slightly to 44.8%. However, the adherence rate among AI-switched sites substantially increased to 54.5%, much closer to the nationwide average. By extrapolation of our data, large scale deployment of this technology across the entire health system could substantially increase overall adherence rate, which in turn could improve HEDIS metrics, Centers for Medicare and Medicaid Services (CMS) Merit-based Incentive Payment System (MIPS) rating, and payer reimbursement.

Second, among the AI-switched sites, there were outsize increases in adherence rates in the Black or African American (+12%) and Native Hawaiian or Other Pacific Islander (+19%) patient populations from 2019 to 2021. In contrast, over the same time period, the adherence rates for these two patient groups decreased by 0.6 and 1 percentage points, respectively, among non-AI sites. These data suggest that the deployment of autonomous AI improved access to DED testing, particularly for historically disadvantaged populations. Prior studies have found that the African American population uses eye care services at much lower rates than White patients, even though Black patients with type 2 diabetes are significantly more likely to develop retinopathy than White patients13,14,15,16. A previous focus group study found that for many African American patients, transportation, lack of free time, and the inconvenience of eye exams were the main barriers to annual DED screening16. In fact, minority populations overall have consistently lower unadjusted eye examination rates than White populations17.

Third, our data also suggest that autonomous AI was associated with improved health equity and smaller care gaps between patient groups. Among AI-switched sites in 2019, before AI deployment, a large adherence rate gap existed between Asian Americans and Black/African Americans (61.1% vs. 45.5%). This 15.6 percentage point gap shrank to 3.5 percentage points by 2021. Similarly, in 2019 a large adherence gap existed at these sites between patients with military insurance and patients with Medicaid insurance (63.1% vs. 30.2%). This 32.9 percentage point gap shrank to 21.1 percentage points by 2021. Lastly, in 2019 an adherence rate gap of 8.4 percentage points existed at these sites between the most socioeconomically advantaged patients (ADI 1st quartile) and the most socioeconomically disadvantaged patients (ADI 4th quartile). By 2021, the adherence rate gap between patients from all 4 quartiles had closed.
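As a quick check of the arithmetic behind these gaps, the following sketch recomputes them from the 2019 adherence rates quoted above. The dictionary keys and the helper name are illustrative choices, not identifiers from the study, and the 2021 gaps are taken directly from the text rather than recomputed.

```python
# Adherence rates (%) at AI-switched sites in 2019, as quoted in the text.
rates_2019 = {
    "Asian": 61.1,
    "Black or African American": 45.5,
    "Military insurance": 63.1,
    "Medicaid": 30.2,
}


def gap_pp(rates: dict, group_a: str, group_b: str) -> float:
    """Gap between two groups in percentage points, rounded to one decimal."""
    return round(rates[group_a] - rates[group_b], 1)


race_gap_2019 = gap_pp(rates_2019, "Asian", "Black or African American")
insurance_gap_2019 = gap_pp(rates_2019, "Military insurance", "Medicaid")

# Gaps in 2021 (percentage points), as reported in the text.
race_gap_2021 = 3.5
insurance_gap_2021 = 21.1

race_gap_reduction = round(race_gap_2019 - race_gap_2021, 1)
insurance_gap_reduction = round(insurance_gap_2019 - insurance_gap_2021, 1)

print(race_gap_2019, insurance_gap_2019)
print(race_gap_reduction, insurance_gap_reduction)
```

Expressing the gaps in percentage points, rather than as relative percentages, keeps them directly comparable with the adherence rates themselves.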

Fourth, the improvement in adherence rate after autonomous AI deployment was heterogeneous across patient subgroups. Though autonomous AI improved access to retinal evaluation for the most disadvantaged patient groups and reduced care gaps, such improvement was not universal. For example, among the AI-switched sites, multiple patient subgroups experienced little improvement in adherence rate from 2019 to 2021: Asian patients (+0.4%), American Indian or Alaska Native patients (+2.3%), non-English speaking patients (+2.8%), and patients with military insurance coverage (+2.8%). While it is beyond the scope of the current study to evaluate the cause of this treatment heterogeneity, a relative lack of trust in healthcare AI or patient concerns about data usage could be a limiting factor in adoption18,19,20. Patient trust in autonomous AI technologies will affect adoption of AI into our healthcare system, and this is an important topic that warrants more thorough investigation.

Our study is limited by its retrospective nature and the fact that nearly all patients included in our study live in a metropolitan area, so our observations may not generalize to patient populations living in micropolitan, small town, and rural residences. However, our data demonstrated that deployment of autonomous AI for DED testing in the primary care setting is highly associated with improvement in adherence rate, patient access and health equity. We employed propensity score weighting methods to address the inherent limitations of an observational study and are reassured that our analyses showed a significant impact of autonomous AI on DED testing adherence rates. Future studies in the form of a prospective randomized clinical trial, similar to a recent study that investigated the role of autonomous AI for DED testing in youth with diabetes21, could help delineate whether there is a causal relationship between autonomous AI and improvement in population-level metrics. Additionally, qualitative surveys that evaluate patient views toward the use of AI technology could help identify targets of patient education that can help address the treatment heterogeneity observed in our study. The actual follow-up rate with ophthalmology for patients who test positive for more than mild diabetic retinopathy per autonomous AI and the associated impact on healthcare cost should be evaluated and quantified as well.

Methods

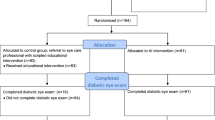

This was a retrospective study approved by the Institutional Review Board of the Johns Hopkins School of Medicine. All research adhered to the tenets of the Declaration of Helsinki. Our study included all patients with diabetes mellitus who were managed at primary care sites of Johns Hopkins Medicine in the calendar years 2019 (pre-AI deployment) and 2021 (post-AI deployment). Subject demographic information that was retrieved from the electronic health records system included gender, age, race, ethnicity, preferred language, insurance status, ZIP code of residence, national area deprivation index (ADI), and inflation-adjusted median household income in the past 12 months.

Additionally, from the ZIP code data, each patient’s residence was identified as metropolitan, micropolitan, small town, or rural based on the 2010 Rural-Urban Commuting Area Codes22. The national ADI score is a 100-point scale that measures socioeconomic disadvantage based on data like a geographic region’s housing quality, education, income, and employment23. A higher national ADI score correlates with increased overall socioeconomic disadvantage.
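The quartile grouping of the ADI can be sketched as below. This is a hypothetical illustration: the text does not state how the quartile boundaries were set, so the sketch assumes integer national ADI scores from 1 to 100 and equal-width cuts (1 to 25, 26 to 50, 51 to 75, 76 to 100). The function name is likewise an assumption.

```python
def adi_quartile(national_adi: int) -> int:
    """Map a national ADI score (assumed 1-100) to a quartile from 1 to 4.

    Quartile 1 is the least disadvantaged, quartile 4 the most disadvantaged.
    Equal-width cut points are an assumption made for illustration.
    """
    if not 1 <= national_adi <= 100:
        raise ValueError("national ADI score must be between 1 and 100")
    return (national_adi - 1) // 25 + 1


for score in (1, 25, 26, 50, 51, 75, 76, 100):
    print(score, adi_quartile(score))
```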

Each patient’s clinic site was categorized as either an “AI-switched” or a “non-AI” site. An “AI-switched” site is defined as a site that did not have autonomous AI DED testing (LumineticsCore® formerly known as IDx-DR, Digital Diagnostics, Coralville, IA) in 2019, but had it by 2021. The same autonomous AI system was deployed across all AI-switched sites. A “non-AI” site is defined as a site that never had autonomous AI deployment and where patients are referred to eyecare for DED testing.

Statistical analyses

Patient demographic characteristics were described. Categorical and binary variables were reported as numbers and percentages. These variables were compared using chi-squared tests (Table 1).

The primary outcome measure was adherence to annual DED testing in a given calendar year. Adherence was defined as receiving either an autonomous AI exam or a dilated eye exam with an ophthalmologist. To compare the primary outcome between AI-switched sites and non-AI sites, we used propensity score weighting methods to ensure comparability across sites and years. The inverse-probability-weighted regression adjustment model was used to estimate the average treatment effect (ATE) in four groups (non-AI sites in 2019, AI-switched sites in 2019, non-AI sites in 2021, and AI-switched sites in 2021). These four groups were matched on all the relevant covariates (age, gender, race, ethnicity, preferred language, insurance, ADI quartile, family income, and geographical region), and an adjusted Poisson regression with these covariates was used as the outcome model to estimate the difference in adherence rates. Covariate balance was checked after the inverse probability weighting to ensure appropriate balance on all covariates among the four groups. Robust standard error estimates clustered on participants were used to account for patients who were seen in both 2019 and 2021. The difference-in-difference was also estimated from this model.
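The weighting idea can be illustrated with a deliberately simplified sketch. It covers only the inverse probability weighting step, using a single categorical covariate and within-stratum treatment frequencies as propensity scores; it omits the regression adjustment, the Poisson outcome model, and the clustered standard errors described above. The records are synthetic toy values, not study data, and all names are hypothetical.

```python
from collections import defaultdict

# Synthetic toy records: (covariate stratum, treated flag, adherent flag). NOT study data.
records = [
    ("A", 1, 1), ("A", 1, 1), ("A", 1, 0), ("A", 0, 0),
    ("B", 1, 1), ("B", 0, 1), ("B", 0, 0), ("B", 0, 0),
]


def propensity_by_stratum(rows):
    """Estimate P(treated | stratum) as the treated fraction within each stratum."""
    counts = defaultdict(lambda: [0, 0])  # stratum -> [n_treated, n_total]
    for stratum, treated, _ in rows:
        counts[stratum][0] += treated
        counts[stratum][1] += 1
    return {s: n_treated / n_total for s, (n_treated, n_total) in counts.items()}


def ipw_ate(rows):
    """Weighted difference in mean outcome between treated and control rows.

    Assumes every stratum contains both treated and control rows (positivity),
    so that no propensity score is exactly 0 or 1.
    """
    propensity = propensity_by_stratum(rows)
    sums = {1: [0.0, 0.0], 0: [0.0, 0.0]}  # arm -> [weighted outcome sum, weight sum]
    for stratum, treated, outcome in rows:
        p = propensity[stratum]
        weight = 1.0 / p if treated else 1.0 / (1.0 - p)
        sums[treated][0] += weight * outcome
        sums[treated][1] += weight
    mean_treated = sums[1][0] / sums[1][1]
    mean_control = sums[0][0] / sums[0][1]
    return mean_treated - mean_control


naive = (
    sum(y for _, t, y in records if t) / sum(1 for _, t, _ in records if t)
    - sum(y for _, t, y in records if not t) / sum(1 for _, t, _ in records if not t)
)
ate = ipw_ate(records)
print(f"Naive difference: {naive:.3f}")
print(f"IPW estimate:     {ate:.3f}")
```

In this toy example the treated rows are concentrated in stratum A, so the unweighted comparison mixes the treatment effect with the difference between strata. Reweighting each row by the inverse of its probability of being in its observed arm makes both arms resemble the full sample before the means are compared.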

The changes in adherence rates among various patient subgroups were calculated using Poisson regression models with Generalized Estimating Equation framework and are reported as percentages with 95% confidence intervals (Table 2).

Statistical analysis was performed using R Statistical Software Version 4.3.1 (R Foundation for Statistical Computing, Vienna, Austria), and the propensity score weighting methods were carried out in Stata Version 17.0. The level of statistical significance was set at p < 0.05.

Reporting summary

Further information on research design is available in the Nature Research Reporting Summary linked to this article.

Data availability

The original data will be available upon official written request to the corresponding author.

Code availability

The code used for data analysis will be available upon official written request to the corresponding author.

References

1. Cheung, N., Mitchell, P. & Wong, T. Y. Diabetic retinopathy. Lancet 376, 124–136 (2010).

2. Lin, K., Hsih, W., Lin, Y., Wen, C. & Chang, T. Update in the epidemiology, risk factors, screening, and treatment of diabetic retinopathy. J. Diabetes Investig. 12, 1322–1325 (2021).

3. Kuo, J. et al. Factors associated with adherence to screening guidelines for diabetic retinopathy among low-income metropolitan patients. Mo Med. 117, 258–264 (2020).

4. Bragge, P. Screening for Presence or Absence of Diabetic Retinopathy: A Meta-analysis. Arch. Ophthalmol. 129, 435–444 (2011).

5. Lee, P. P., Feldman, Z. W., Ostermann, J., Brown, D. S. & Sloan, F. A. Longitudinal rates of annual eye examinations of persons with diabetes and chronic eye diseases. Ophthalmology 110, 1952–1959 (2003).

6. Lock, L. J. et al. Analysis of Health System Size and Variability in Diabetic Eye Disease Screening in Wisconsin. JAMA Netw. Open 5, e2143937 (2022).

7. Kashim, R., Newton, P. & Ojo, O. Diabetic Retinopathy Screening: A Systematic Review on Patients’ Non-Attendance. Int. J. Environ. Res. Public. Health 15, 157 (2018).

8. Abràmoff, M. D., Lavin, P. T., Birch, M., Shah, N. & Folk, J. C. Pivotal trial of an autonomous AI-based diagnostic system for detection of diabetic retinopathy in primary care offices. Npj Digit. Med. 1, 39 (2018).

9. Grzybowski, A. et al. Diagnostic accuracy of Automated Diabetic Retinopathy Image Assessment Softwares: IDx-DR and MediosAI. Ophthalmic Res. https://doi.org/10.1159/000534098 (2023).

10. Shah, A. et al. Validation of Automated Screening for Referable Diabetic Retinopathy With an Autonomous Diagnostic Artificial Intelligence System in a Spanish Population. J. Diabetes Sci. Technol. 15, 655–663 (2021).

11. van der Heijden, A. A. et al. Validation of automated screening for referable diabetic retinopathy with the IDx‐DR device in the Hoorn Diabetes Care System. Acta Ophthalmol. (Copenh.) 96, 63–68 (2018).

12. U.S. Department of Health and Human Services. Healthy People 2030. https://health.gov/healthypeople/objectives-and-data/browse-objectives/diabetes/increase-proportion-adults-diabetes-who-have-yearly-eye-exam-d-04/data (2020).

13. Sloan, F. A., Brown, D. S., Carlisle, E. S., Picone, G. A. & Lee, P. P. Monitoring Visual Status: Why Patients Do or Do Not Comply with Practice Guidelines. Health Serv. Res. 39, 1429–1448 (2004).

14. Harris, E. L., Sherman, S. H. & Georgopoulos, A. Black-white differences in risk of developing retinopathy among individuals with type 2 diabetes. Diabetes Care 22, 779–783 (1999).

15. Leske, M. C. et al. Diabetic retinopathy in a black population. Ophthalmology 106, 1893–1899 (1999).

16. Fathy, C., Patel, S., Sternberg, P. & Kohanim, S. Disparities in Adherence to Screening Guidelines for Diabetic Retinopathy in the United States: a Comprehensive Review and Guide for Future Directions. Semin. Ophthalmol. 31, 364–377 (2016).

17. Shi, Q., Zhao, Y., Fonseca, V., Krousel-Wood, M. & Shi, L. Racial Disparity of Eye Examinations Among the U.S. Working-Age Population With Diabetes: 2002–2009. Diabetes Care 37, 1321–1328 (2014).

18. Richardson, J. P. et al. Patient apprehensions about the use of artificial intelligence in healthcare. Npj Digit. Med. 4, 140 (2021).

19. York, T., Jenney, H. & Jones, G. Clinician and computer: a study on patient perceptions of artificial intelligence in skeletal radiography. BMJ Health Care Inform. 27, e100233 (2020).

20. Abràmoff, M. D. et al. Considerations for addressing bias in artificial intelligence for health equity. Npj Digit. Med. 6, 170 (2023).

21. Wolf, R. M. et al. Autonomous artificial intelligence increases screening and follow-up for diabetic retinopathy in youth: the ACCESS randomized control trial. Nat. Commun. 15, 421 (2024).

22. Rural-Urban Commuting Area Codes. USDA Economic Research Service https://www.ers.usda.gov/data-products/rural-urban-commuting-area-codes.aspx (2010).

23. Hu, J., Kind, A. J. H. & Nerenz, D. Area Deprivation Index Predicts Readmission Risk at an Urban Teaching Hospital. Am. J. Med. Qual. 33, 493–501 (2018).

Acknowledgements

We thank Christopher Lo (Johns Hopkins University Department of Biostatistics) for additional assistance with our statistical analyses. This study was partially funded by the Research to Prevent Blindness Career Advancement Award that supports TYAL. The University of Wisconsin receives unrestricted funding from Research to Prevent Blindness. RC is funded by the NIH K23 award 5K23EY030911. RMW receives research support from Novo Nordisk and Boehringer Ingelheim, outside of the submitted work. The funder played no role in study design, data collection, analysis and interpretation of data, or the writing of this manuscript.

Author information

Contributions

Conceptualization: TYAL and JJH. Data acquisition: JJH. Data analysis: JJH, YD, ML, and JW. Original draft: JJH and TYAL. Draft revisions: RC, RMW, MDA, YD, ML, and JW.

Ethics declarations

Competing interests

The Authors declare the following Competing Non-Financial and Financial Interests. MDA: Director, Consultant of Digital Diagnostics Inc., Coralville, Iowa, USA; patents and patent applications assigned to the University of Iowa and Digital Diagnostics that are relevant to the subject matter of this manuscript; Exec Director, Healthcare AI Coalition Washington DC; member, American Academy of Ophthalmology (AAO) AI Committee; member, AI Workgroup Digital Medicine Payment Advisory Group (DMPAG); Treasurer, Collaborative Community for Ophthalmic Imaging (CCOI), Washington DC; Chair, Foundational Principles of AI CCOI Workgroup. The remaining Authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Huang, J.J., Channa, R., Wolf, R.M. et al. Autonomous artificial intelligence for diabetic eye disease increases access and health equity in underserved populations. npj Digit. Med. 7, 196 (2024). https://doi.org/10.1038/s41746-024-01197-3
