Abstract

This study investigates olfaction, a complex and not well-understood sensory modality. Two theories have so far been proposed for the chemical mechanism behind smell: the vibrational theory and the docking theory. The vibrational theory has been gaining acceptance lately but needs more extensive validation. To fill this gap, we use data-driven classification, clustering, and Explainable AI techniques to systematically analyze, for the first time, a large dataset of vibrational spectra (VS) of 3018 molecules obtained from atomistic simulation. The study utilizes image representations of VS generated with Gramian Angular Fields and Markov Transition Fields, allowing computer vision techniques to be applied for better feature extraction and improved odor classification. Furthermore, we fuse PCA-reduced fingerprint features with image features, which yields an additional improvement in classification results. We apply two clustering methods, agglomerative hierarchical clustering (AHC) and k-means, to dimensionality-reduced (UMAP, MDS, t-SNE, and PCA) VS and image features, which sheds further light on the connections between molecular structure, VS, and odor. Additionally, we contrast our method with an earlier work that employed traditional machine learning on fingerprint features for the same dataset, and demonstrate that even with a representative subset of 3018 molecules, our deep learning model outperforms previous results. This comprehensive and systematic analysis highlights the potential of deep learning in furthering the field of olfactory research while confirming the vibrational theory of olfaction.

Introduction

Scientifically, the sense of smell is intriguing due to its complexity, close connection to memory and emotions, and the ongoing discourse surrounding its underlying mechanisms. Within the olfactory epithelium, spanning a 3.7 cm zone in the upper nasal passages, olfactory receptor neurons interact with volatile substances, giving rise to the perception of odors1. As established through the Nobel Prize-winning work of Linda Buck and Richard Axel, olfactory receptors (ORs) belong to the G-Protein Coupled Receptor class2. The molecular characteristics of odorants determine their affinity for multiple ORs and vice versa. An action potential is generated by structural and electrochemical changes initiated by the binding of odorant and receptor, transmitting olfactory information to the brain. The present-day investigations are focused on understanding the mechanism that underlies the binding and triggering described, which is still not clearly understood3.

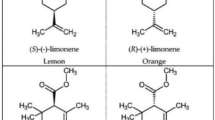

Two theories have so far been proposed for the chemical mechanism behind smell: the vibrational theory and the docking theory. According to Dyson4, who first proposed the vibrational theory, olfactory receptors detect the localized vibrations of odorant molecules, much like a chemical spectroscope. The later and more widely accepted Shape Theory, also called docking or lock-and-key, posits that the binding of an odorant to a receptor induces a conformational shift that turns the receptor from an inactive to an active state5. Reviving the Vibration Theory, Turin proposed Inelastic Electron Tunneling Spectroscopy (IETS) as a means of detecting the vibrational energy of an odorant6. Consequently, olfaction is now recognized as an exemplary system within the emerging domain of quantum biology7. Despite significant disagreement around the Vibration Theory8,9, some research indicates that molecular vibrations may contribute to the sense of smell10,11,12. The swipe card mechanism also suggests that molecular vibration energy is important in addition to docking13.

Our investigation delves into the vibrational theory, proposing a strong correlation between a molecule’s olfactory characteristics and its vibrational frequency in the infrared spectrum. The field of predicting molecular properties is changing due to the rise of data-driven and machine-learning methodologies14,15,16,17,18,19, which provide significant improvements over conventional statistical methods. This paper explores this new wave of approaches, concentrating on the prediction of molecule odor from vibrational frequencies. The emphasis lies on multi-label classification, employing advanced deep-learning methodologies.

This paper’s key contributions are: (1) a large and novel dataset of VS; (2) a systematic analysis of VS using data-driven clustering, deep learning classification methods, and Explainable AI, which reveals a relationship between VS, odor, and shape; and (3) unimodal and multimodal deep learning models for improved odor classification.

This paper is structured as follows. The first section details the datasets (odor and VS) and explains the featurization of the datasets into fingerprints, Gaussian-smoothed spectra and image representations; it then describes the models and methods for classification (cost-sensitive deep learning models), dimensionality reduction (UMAP, MDS, t-SNE, PCA), clustering (AHC, k-means) and saliency analysis (permutation analysis, GradCAM++). The following section details the results of classification and clustering, followed by an analysis of the constituent molecules within each cluster and a discussion of the important features obtained by saliency analysis. Finally, conclusions are presented in the last section.

Materials and methods

This section first describes the datasets and their featurization, and then the classification, clustering, and saliency analysis methods.

Dataset

The characterization of odorants involves the consideration of various qualitative descriptors, including hedonic attributes. Of the various odorant datasets with their odor labels available, we used a combined dataset from the training materials provided by Firmenich during the “Learning to Smell Challenge” with the Leffingwell PMP 200120. This combined dataset has 7,374 molecules with significant structural diversity and 109 distinct odor classes. We also used VS of odorant molecules, as receptors detect vibrational energy reflected in VS by the mechanism of IETS, as mentioned previously.

Integrated dataset (IGD)

Saini et al. compiled a dataset by combining two distinct sets of expertly labeled odor datasets20. These sets originated from the training materials provided by Firmenich during the “Learning to Smell Challenge” and Leffingwell PMP 2001. The resulting dataset, after merging and preprocessing, comprised 7374 molecules distributed across 109 unique odor classes, each with different sample counts, as illustrated in Fig. 1. The complete list of all 7374 odorant molecules can be found in Supplementary file 1.

Subset of integrated dataset (Subset-IGD)

The CAS Registry number or International Chemical Identifier (InChI) code was generated for all IGD molecules from their SMILES strings21,22. The conversion from SMILES to CAS or InChI was done using the NistChemPy Python library23. Subsequently, the Vibrational Spectra (VS) corresponding to the obtained CAS and InChI identifiers were acquired from the Chemistry Webbook hosted by the National Institute of Standards and Technology (NIST)24. Vibrational frequencies and intensities of all these molecules were determined at the B3LYP/6-31G* level of theory, a type of density functional theory (DFT), after optimizing the molecular structures for minimum energy. The Gaussian 09 suite25 was used for the DFT calculations. Although IGD had 7374 molecules, the molecules without VS were eliminated, leaving 3018 molecules in Subset-IGD with 109 unique odors. Figure 1 shows the percentage of samples associated with each odor class in IGD and Subset-IGD, visually indicating that Subset-IGD is a representative sub-dataset of IGD. This is confirmed by the Kolmogorov-Smirnov (K-S) test26, which yielded a test statistic (D) of 0.0734 and a p-value of 0.93. All 3018 odorant molecules are listed in Supplementary file 1.
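The two-sample K-S statistic D is the largest gap between the empirical cumulative distribution functions of the two samples. The following is a minimal NumPy sketch of that statistic only; the function name and the percentage values are hypothetical and are not the study's data, and the p-value computation is omitted.

```python
import numpy as np

def ks_statistic(sample_a, sample_b):
    """Two-sample Kolmogorov-Smirnov statistic D = max |F_a(x) - F_b(x)|."""
    a = np.sort(np.asarray(sample_a, dtype=float))
    b = np.sort(np.asarray(sample_b, dtype=float))
    # Evaluate both empirical CDFs at every observed value.
    grid = np.concatenate([a, b])
    cdf_a = np.searchsorted(a, grid, side="right") / a.size
    cdf_b = np.searchsorted(b, grid, side="right") / b.size
    return float(np.max(np.abs(cdf_a - cdf_b)))

# Hypothetical per-class sample percentages (illustrative values only).
full_pct = np.array([11.1, 8.0, 6.5, 4.2, 3.9, 2.0, 1.1, 0.5])
subset_pct = np.array([11.8, 7.6, 6.9, 4.0, 3.5, 2.2, 0.9, 0.6])
d_stat = ks_statistic(full_pct, subset_pct)
```

A small D together with a large p-value, as reported above, gives no evidence that the two distributions differ. In practice `scipy.stats.ks_2samp` returns both the statistic and the p-value.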

Featurising molecules

We featurized molecules and their VS to obtain meaningful numerical representations for training our classification models. Molecules were featurized using traditional Daylight fingerprints, as these gave the best results on this dataset in previous studies. We also experimented with the Morgan fingerprint, also known as the extended-connectivity fingerprint (ECFP4), with a bit length of 2048 and a fragment radius of 2 (diameter 4)27. RDKit was used to generate these features, but they performed poorly compared to Daylight fingerprints28; classification results for the Morgan fingerprint are detailed in supplementary file 5. Gaussian smoothing was performed on VS, followed by downsampling to obtain features. Smoothing broadens peaks in VS, and downsampling reduces the input dimension to the model, improving model performance29. Three-channel images of VS were generated using the GASF, GADF and MTF transformations; this allows the utilisation of advanced pattern recognition techniques, improving classification accuracy30. Hence, we carried out further analysis with three types of features: Daylight fingerprint features (DFF), Gaussian-smoothed dimensionally reduced features (GS_VS), and the image representation of VS with ResNet50 features (VS_IMG).

Gaussian smoothed dimensionally reduced features for VS (GS_VS)

For each spectrum, a set of wavenumbers (vwns) associated with a specific molecule is projected onto a linear bounded frequency scale (BFS), usually ranging from 1 to 4000 cm−1. This range is chosen to encompass all essential vibrational normal modes for subsequent analysis. Then, Gaussian smoothing is applied to the spectra. The BFS is normalized and sampled at fixed increments of L cm−1 for dimensionality reduction. The resulting spectra are utilized as features for the deep learning model, referred to henceforth as GS_VS29. The equation for a Gaussian kernel is:
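A standard normalized form of the Gaussian kernel is given below, where \(x\) is the wavenumber offset from a peak position and \(\sigma\) is the kernel width. The normalization constant and the intensity-weighted sum over peaks \((\nu_k, I_k)\) in the second line are assumptions made for illustration.

```latex
G(x) = \frac{1}{\sigma\sqrt{2\pi}} \exp\!\left(-\frac{x^{2}}{2\sigma^{2}}\right),
\qquad
S(\nu) = \sum_{k} I_k \, G(\nu - \nu_k)
```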

A sigma value of 10 cm−1 and L set to 5 cm−1 were employed, resulting in 800 (4000/L) descriptor variables. The smoothing procedure applies a smearing function that broadens the vibrational peaks, which allows compounds with slightly different frequencies to be compared. Figure 2 shows an overview of this process. Turner et al. introduced the EVA descriptor for spectra, which has been validated for use in QSAR studies31. The proposed GS_VS performs as well as the EVA descriptor, but when 3-channel images are generated from the EVA descriptor, the model’s performance on them is significantly reduced. A possible reason for this poor performance is that the intensity of peaks is not considered when generating the EVA descriptor. Hence, we use GS_VS for our research.
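The smoothing and sampling steps can be sketched in NumPy as follows. This is an illustrative sketch, not the code used in this study: the function name, the intensity weighting of each peak, the max-normalization, and the example peak list are assumptions.

```python
import numpy as np

def gs_vs(wavenumbers, intensities, sigma=10.0, step=5.0, v_max=4000.0):
    """Gaussian-smooth a stick spectrum and sample it every `step` cm^-1."""
    # Sampling grid 5, 10, ..., 4000 cm^-1 -> 4000 / 5 = 800 descriptor variables.
    grid = np.arange(step, v_max + step, step)
    wn = np.asarray(wavenumbers, dtype=float)[:, None]
    inten = np.asarray(intensities, dtype=float)[:, None]
    # One Gaussian per normal mode, weighted by its intensity (assumption).
    kernel = np.exp(-((grid[None, :] - wn) ** 2) / (2.0 * sigma**2))
    spectrum = (inten * kernel).sum(axis=0)
    # Scale to a maximum of 1 (one possible normalization, assumed here).
    peak = spectrum.max()
    return spectrum / peak if peak > 0 else spectrum

# Hypothetical normal modes (cm^-1) and intensities, for illustration only.
features = gs_vs([1005.0, 1670.0, 3000.0], [10.0, 50.0, 20.0])
```

With this grid, the descriptor at 1-based position i corresponds to the wavenumber 5·i cm−1.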

Fingerprint-based features (DFF)

To generate features for our deep learning model, we used a traditional featurization technique: Daylight fingerprinting, as implemented in RDKit. These Daylight fingerprint features (DFF) were generated from the SMILES representation of the molecules. The 1024-bit binary fingerprints capture the presence or absence of specific chemical substructures within a molecule.

Overview of feature generation from VS for the molecule 2-methyl-phenol (shown in inset). (a) VS projected onto a BFS; (b) spectra obtained after Gaussian smoothing; (c) normalised spectra with the BFS sampled at 5 cm−1. The spectra obtained in (c) are further processed to generate image features using GAF and MTF: (d1) polar coordinate mapping; (d2) Markov transition matrix; (e1) Gramian angular summation field (GASF), a 224 × 224 × 1 image; (e2) Gramian angular difference field (GADF), a 224 × 224 × 1 image; (e3) Markov transition field (MTF), a 224 × 224 × 1 image; (f) GASF, GADF and MTF are overlayed to obtain a 224 × 224 × 3 image for each VS; (g) a pre-trained ResNet50 model is used to obtain the flattened feature VS_IMG.

Image representation of VS and ResNet50 features (VS_IMG)

The obtained GS_VS is encoded into images using two frameworks, the Markov Transition Field (MTF) and the Gramian Angular Field (GAF)30. These methods were designed to transform time series into images; here the sampled wavenumber axis plays the role of the time axis, and the resulting images support the classification process. Three images obtained from GS_VS, a Gramian angular summation field (GASF), a Gramian angular difference field (GADF), and a Markov transition field (MTF), each of size 224 × 224 × 1, are overlayed to obtain a 224 × 224 × 3 image for each VS. We use a ResNet50 network pre-trained on ImageNet to obtain the flattened features (VS_IMG) for these 3-channel images32. This was implemented in Python with the keras.applications.resnet50 module and Keras models. Figure 2 shows an overview of the process of converting GS_VS to VS_IMG.

Gramian angular field (GAF)

To create a 2-D image, the first step is to transform the normalized time series data into polar coordinates. While mapping to the polar coordinate system, the normalized time series value is represented as the angular cosine, and the time stamps are illustrated as the radius in the polar coordinate system. For a time series \(Y = \{y_1, y_2, \ldots , y_n\}\), the angle (\( \theta _i \)) and radius (\( r_i \)) are computed using equations 2 and 3:
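In the standard GAF formulation, which is assumed here, the polar encoding takes the following form, with the series first rescaled so that every \(\overline{Y}_i\) lies in \([-1, 1]\):

```latex
\theta_i = \arccos\left(\overline{Y}_i\right), \quad -1 \le \overline{Y}_i \le 1,
\qquad
r_i = \frac{t_i}{N}
```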

Here \(\overline{Y}_{\text {i}}\) is the normalized time series, \( t_i \) is the time stamp, and \( N \) is a constant factor that divides the polar coordinate’s time span into equal portions. When transitioning a time series from the Cartesian coordinate system to the polar coordinate system, the GAF transformation is bijective, generating a unique value in the polar system and a distinct inverse value. Unlike the Cartesian coordinate system, the absolute temporal relationship is preserved within the polar coordinate system. Consequently, a Gramian matrix is constructed, where each matrix element corresponds to the trigonometric function of the sum or difference across different time intervals. By employing the following equations, GASF and GADF can be derived:
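In the standard formulation, \(GASF_{ij} = \cos(\theta_i + \theta_j)\) and \(GADF_{ij} = \sin(\theta_i - \theta_j)\). A minimal NumPy sketch under that assumption is given below; the function name, the rescaling to \([-1, 1]\), and the example values are illustrative, and the resampling of the series to 224 points is not shown.

```python
import numpy as np

def gramian_angular_fields(series):
    """Return (GASF, GADF) matrices for a 1-D series."""
    y = np.asarray(series, dtype=float)
    span = y.max() - y.min()
    if span == 0:
        raise ValueError("series must not be constant")
    # Rescale to [-1, 1] so that arccos is defined (assumed normalization).
    y_scaled = np.clip(2.0 * (y - y.min()) / span - 1.0, -1.0, 1.0)
    theta = np.arccos(y_scaled)
    gasf = np.cos(theta[:, None] + theta[None, :])  # summation field
    gadf = np.sin(theta[:, None] - theta[None, :])  # difference field
    return gasf, gadf

# Hypothetical four-point series, for illustration only.
gasf, gadf = gramian_angular_fields([0.0, 0.5, 1.0, 0.25])
```

The GASF is symmetric and the GADF is antisymmetric with a zero diagonal, which is why the two carry complementary information when stacked as image channels.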

Markov transition field (MTF)

Dividing a data series \(Y = \{y_1, y_2, \ldots , y_n\}\) evenly into Q bins, each \(y_i\) is associated with a bin \(q_j\) (\(j \in [1, Q]\)). Hence, by using a first-order Markov chain, a Markov matrix Z of size \(Q \times Q\) can be built, with elements: \(z_{\text {ij}}=p\{ y_{\text {t}}\in q_{\text {j}}|y_{\text {t-1}} \in q_{\text {i}}\}\)

The likelihood of a point y, presently situated in bin \(q_i\), transitioning to bin \(q_j\) at the subsequent time step is represented by \(z_{ij}\). Evidently, \(z_{ij}\) fulfils the relation: \(\sum _{j=1}^{Q} z_{ij} = 1\). Due to the memorylessness property of the Markov chain, the elements of the matrix Z are independent of where in the series a transition occurs, leading to a notable loss of pertinent data. To address this constraint, the matrix Z is expanded to the Markov transition field N by introducing a time axis. The data points at time stamps i and j are associated with their respective bins, \(q_i\) and \(q_j\), following the division of the dataset into Q bins along the time axis. The transition probability from \(q_i\) to \(q_j\) is denoted by \(N_{ij}\) and expressed as follows:
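In the standard construction, which is assumed here, \(N_{ij}\) is the entry of Z indexed by the bins that contain \(y_i\) and \(y_j\). The NumPy sketch below illustrates this; the function name, the use of quantile bins, the bin count, and the example series are assumptions for illustration.

```python
import numpy as np

def markov_transition_field(series, n_bins=8):
    """Return (Z, N): the Q x Q Markov matrix and the n x n transition field."""
    y = np.asarray(series, dtype=float)
    # Quantile bin edges, so each bin holds roughly the same number of points.
    edges = np.quantile(y, np.linspace(0.0, 1.0, n_bins + 1)[1:-1])
    bins = np.digitize(y, edges)  # bin index in 0 .. n_bins - 1 for each point
    # Count first-order transitions between consecutive points.
    counts = np.zeros((n_bins, n_bins))
    for src, dst in zip(bins[:-1], bins[1:]):
        counts[src, dst] += 1
    # Row-normalize so that each row with outgoing transitions sums to 1.
    row_sums = counts.sum(axis=1, keepdims=True)
    z = np.divide(counts, row_sums, out=np.zeros_like(counts), where=row_sums > 0)
    # Spread the transition probabilities along the time axis.
    field = z[bins[:, None], bins[None, :]]
    return z, field

# Hypothetical series, for illustration only.
z, mtf = markov_transition_field(np.sin(np.linspace(0.0, 6.0, 50)), n_bins=4)
```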

Data analysis

To analyze VS systematically, we performed both clustering and classification, and we used saliency analysis to explain the model predictions.

Classification models

The histogram plot in Fig. 1 indicates that fruity is the most frequent label and fennel/cedar the least frequent in our dataset. The distribution is clearly highly skewed, with heavy class imbalance.

To ensure a general representation of data in both the training and test datasets, we opted for iterative stratified sampling instead of random sampling for the dataset split. This method was executed using the iterative_train_test_split function available in the scikit-multilearn library33. Random sampling unintentionally led to the omission of minority odor descriptors, worsening the pre-existing label imbalance. Fig. 3a and b illustrate the data split into test and training sets using random and iterative sampling, respectively. The line charts present a comparative analysis between random and stratified splits, where non-overlap of the orange and blue lines indicates a disparity in the relative occurrence of a specific label between the training and test sets. The findings highlight that stratified sampling yields a more representative split into test and training sets.

Given the considerable class imbalance in our dataset, we employed a cost-sensitive multilayer perceptron (CSMLP) for this study. The conventional multilayer perceptron (MLP) trained through the backpropagation of error algorithm assumes equal misclassification costs (false negative and false positive). However, recognizing that a false negative is more detrimental or costly than a false positive34, we introduced the CSMLP to address this issue. In the proposed CSMLP, we adapted the MLP’s loss function to accommodate class imbalance. To enhance the penalty for mistakes on minority class samples, we utilized a combination of weighted binary cross entropy (WBCEL) and focal loss. In WBCEL, assigned weights are proportional to the \(\delta \) power of the inverse of class frequencies35,36,37, thereby imposing a higher penalty for errors on the minority class. Focal loss assigns a weight to each sample based on its difficulty, which is measured in terms of the loss incurred by the CSMLP on that particular sample38. Samples with higher losses are regarded as more challenging. The equations for Focal Loss (FL), Weighted Binary Cross-Entropy Loss (WBCEL), and Combined Loss (CL) are as follows:

\(\varvec{\lambda }\) is the hyper-parameter that controls the importance of each loss.

\(\varvec{\gamma }\) is the focusing parameter in FL, and \(\delta \) is used for smoothing the effect of the class weights.

C is the number of classes and N is the number of samples in a batch. \({\textbf {y}}_{{{\textbf {true,ij}}}}\) is the true label for the jth class of the ith example.

\({\textbf {y}}_{{{\textbf {pred,ij}}}}\) is the predicted probability for the jth class of the ith example. \(\varvec{\varepsilon }\) is a small constant to avoid numerical instability.

\({\textbf {w}}_{{\textbf {pos,j}}}\) = (Number of -ve labels for class j)/(Total number of samples in dataset)

\({\textbf {w}}_{{\textbf {neg,j}}}\) = (Number of +ve labels for class j)/(Total number of samples in dataset)
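A minimal NumPy sketch of one way to combine the two losses with the symbols defined above. The convex combination \(\lambda \cdot FL + (1-\lambda ) \cdot WBCEL\), the use of \(\delta \) as an exponent on the class weights, and the averaging over all N × C entries are assumptions made for illustration and may differ from the loss used in this study; the function names and toy labels are hypothetical.

```python
import numpy as np

def class_weights(labels, delta=1.0):
    """w_pos,j = (negatives / total)^delta, w_neg,j = (positives / total)^delta."""
    pos_frac = labels.sum(axis=0) / labels.shape[0]
    return (1.0 - pos_frac) ** delta, pos_frac ** delta

def combined_loss(y_true, y_pred, w_pos, w_neg, lam=0.5, gamma=2.0, eps=1e-7):
    """Assumed form: lam * focal loss + (1 - lam) * weighted binary cross-entropy."""
    p = np.clip(y_pred, eps, 1.0 - eps)  # eps avoids log(0)
    # Weighted BCE: errors on rare positive labels are penalized more heavily.
    wbce = -(w_pos * y_true * np.log(p) + w_neg * (1.0 - y_true) * np.log(1.0 - p))
    # Focal loss: (1 - p_t)^gamma down-weights easy, well-classified entries.
    p_t = np.where(y_true == 1, p, 1.0 - p)
    focal = -((1.0 - p_t) ** gamma) * np.log(p_t)
    return float(lam * focal.mean() + (1.0 - lam) * wbce.mean())

# Toy multi-label batch: 4 samples, 2 classes (illustrative values only).
y = np.array([[1, 0], [0, 0], [0, 1], [0, 0]], dtype=float)
w_pos, w_neg = class_weights(y)
good = np.where(y == 1, 0.9, 0.1)  # confident and correct predictions
bad = 1.0 - good                   # confident and wrong predictions
loss_good = combined_loss(y, good, w_pos, w_neg)
loss_bad = combined_loss(y, bad, w_pos, w_neg)
```

With a positive label frequency of 0.25 per class, a missed positive is weighted 0.75 and a false positive 0.25, which reflects the higher cost assigned to false negatives.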

We first trained unimodal baseline models on PCA-reduced VS_IMG, DFF of Subset-IGD and IGD, and GS_VS features alone. We then developed a multimodal fusion model that learns jointly from PCA-reduced VS_IMG and DFF. Figure 4 shows an overview of the fusion model.

All these models are CSMLPs with fully connected layers. We used ReLU as the activation function and a sigmoid at the final layer. Models were trained with Adam39 (Keras optimizer), and dropout was used as a regularizer to prevent overfitting. Along with CSMLP, we tried random resampling techniques: Multi-Label Random Under-Sampling (ML-RUS) and Multi-Label Random Over-Sampling (ML-ROS). However, we did not observe any significant improvement in results. The detailed architecture of all models is given in supplementary file 2 and the results of resampling in supplementary file 5.

Fusion model

The fusion model learns from PCA-reduced VS_IMG concatenated with PCA-reduced DFF to produce a final prediction. Figure 4 shows an overview of the fusion model40. We generated 6 different sets of PCA-reduced DFF, with retained variance ranging from 70 to 95%, and when these were concatenated with PCA-reduced VS_IMG, the F1 score increased with the retained variance. Figure 5a shows the F1 score for different values of variance.
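The feature-level fusion step can be sketched as follows: each modality is reduced with PCA to the number of components needed to reach a target explained variance, and the reduced features are concatenated. This is an illustrative NumPy sketch with random placeholder features; the function name and array sizes are hypothetical.

```python
import numpy as np

def pca_reduce(features, variance=0.95):
    """Project onto the fewest principal components reaching `variance`."""
    x = features - features.mean(axis=0)  # center each feature
    _, s, vt = np.linalg.svd(x, full_matrices=False)
    explained = s**2 / np.sum(s**2)
    # Smallest k whose cumulative explained variance is >= the target.
    k = int(np.searchsorted(np.cumsum(explained), variance)) + 1
    k = min(k, len(s))
    return x @ vt[:k].T

rng = np.random.default_rng(0)
img_feats = rng.normal(size=(20, 50))                      # stand-in for VS_IMG features
dff = rng.integers(0, 2, size=(20, 64)).astype(float)      # stand-in for fingerprint bits
fused = np.hstack([pca_reduce(img_feats, 0.95), pca_reduce(dff, 0.90)])
```

In scikit-learn, `PCA(n_components=0.95)` selects the number of components by explained variance in the same way. To avoid information leaking from the test set, the projection would normally be fitted on the training split only.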

Diagram of fusion architecture that learns jointly from VS_IMG and DFF. (a) VS_IMG a 224X224X3 image obtained by overlaying GASF-GADF-MTF (b,c) ResNet50 pre-trained model is then used to extract features from VS_IMG (d) these flattened features are then dimensionally reduced to 3036 using PCA (e) DFF for same molecule obtained from RDKit (f) DFF are dimensionally reduced using PCA (g) PCA reduced features from (d), (f) are concatenated and later classified using CSMLP.

Dimensionality reduction and clustering

Features obtained from GS_VS and VS_IMG are high dimensional; reducing the dimensionality to 2D helps visualise compounds in a 2D space and observe their distribution. PCA, t-SNE, UMAP and MDS were used for dimensionality reduction, and clustering was then performed using AHC and k-means.

Dimensionality reduction

Four dimensionality reduction techniques were applied to GS_VS and VS_IMG: PCA, t-SNE, UMAP and MDS. All were applied using Python 3.7.6 packages. Principal Component Analysis (PCA) is a multivariate analysis technique designed to extract essential information through an orthogonal transformation41. This transformation generates uncorrelated variables known as principal components, which are new linearly independent variables capturing the key features of the original data. Multidimensional Scaling (MDS) functions as a technique for network localization, revealing the relationships, whether similarity or dissimilarity, among pairs of objects within a given dataset. This information is then translated into distances between points within a two-dimensional space42. The t-SNE method, an evolution of Stochastic Neighbor Embedding (SNE), gauges the similarities between pairs of high-dimensional data objects and between their counterparts in the two-dimensional embedding. Using gradient descent, t-SNE minimizes the Kullback-Leibler divergence between the two sets of similarities to generate the embedding43. We followed the protocol suggested by Arora et al. for better t-SNE visualization44. UMAP, a recent dimension reduction technique, accurately captures non-linear structures in large datasets45. Based on algebraic topology and Riemannian geometry, UMAP constructs a manifold. Our visualization process entails a delicate balance between local and global information, a balance contingent on the careful selection of values for two key parameters: the “minimum distance” and the “number of neighbors”. The “number of neighbors” parameter plays a pivotal role in balancing local and global structural aspects, while the “minimum distance” parameter governs how tightly points are grouped within the low-dimensional representation. Following extensive testing and evaluation of various values, we settled on fixed values of 15 for the number of neighbors and 0.1 for the minimum distance.

Clustering

Agglomerative Hierarchical Clustering (AHC) and k-means were employed on the reduced dimensions derived from the four preceding techniques. The objective was to categorize similar odor molecules into distinct groups. Previous studies have undertaken clustering analyses based on fingerprint features of odor molecules46, yet none have specifically addressed the clustering of large VS datasets. The clustering process was executed using Python packages on the two-dimensional data to tackle challenges associated with high-dimensional clustering47. For AHC, the Euclidean distance matrix (2x2) for each molecule was computed, employing the “ward.D2” aggregative criterion. This criterion aims to minimize the inertia within classes while maximizing the inertia between classes. The clustering process involved successively grouping the two closest classes until a complete clustering tree was obtained. While hierarchical clustering is known for its simplicity and versatility in handling various similarity or distance measures, the irreversible merging of clusters restricts the ability to correct erroneous decisions48,49. For k-means clustering, various numbers of centroids, corresponding to the desired cluster count, were tried. Points were assigned to their nearest cluster during each iteration, and the algorithm iteratively refined cluster centroids until no further changes occurred48,50. It is worth noting that the k-means clustering algorithm is sensitive to outliers and may be less effective when dealing with clusters that deviate from hyper-spherical shapes.

To ascertain the most suitable number of clusters, we constructed an “elbow” curve illustrating intra-cluster variability against the number of clusters for each dimensional reduction technique. Following the establishment of clusters using either k-means or AHC, we examined the distribution of odor notes within these clusters. Additionally, we explored the chemical groups or functions of molecules present in different clusters.
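The elbow procedure can be sketched with a plain Lloyd-style k-means in NumPy. The synthetic 2D points below stand in for the dimensionality-reduced coordinates; the function name, the initialization, and the data are assumptions for illustration.

```python
import numpy as np

def kmeans_inertia(points, k, n_iter=100, seed=0):
    """Run Lloyd's k-means and return (labels, within-cluster sum of squares)."""
    rng = np.random.default_rng(seed)
    # Initialize centroids with k distinct data points.
    centroids = points[rng.choice(len(points), size=k, replace=False)]
    for _ in range(n_iter):
        dists = np.linalg.norm(points[:, None, :] - centroids[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        # Move each centroid to the mean of its assigned points.
        new_centroids = np.array([
            points[labels == c].mean(axis=0) if np.any(labels == c) else centroids[c]
            for c in range(k)
        ])
        if np.allclose(new_centroids, centroids):
            break
        centroids = new_centroids
    # Final assignment and intra-cluster variability (inertia).
    dists = np.linalg.norm(points[:, None, :] - centroids[None, :, :], axis=2)
    labels = dists.argmin(axis=1)
    inertia = float((dists[np.arange(len(points)), labels] ** 2).sum())
    return labels, inertia

# Three synthetic 2D blobs (illustrative data only).
rng = np.random.default_rng(42)
centers = np.array([[0.0, 0.0], [5.0, 5.0], [0.0, 5.0]])
points = np.vstack([c + 0.3 * rng.normal(size=(30, 2)) for c in centers])
inertias = [kmeans_inertia(points, k)[1] for k in range(1, 7)]
```

Plotting `inertias` against k gives the elbow curve; the number of clusters is read off where the curve stops dropping sharply.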

Feature Importance analysis

The explainability of the classification model is very important for developing vibration-based biomimetic sensors. We used two techniques for feature importance analysis: permutation feature importance (PFI) and GradCAM++51,52. Neither method requires model retraining. PFI is model-agnostic and can also capture variable interactions. GradCAM++ provides visual explanations of CNN model predictions, with better object localization than earlier gradient-based methods.

Permutation feature importance (PFI)

For the model trained on GS_VS features, we performed feature importance analysis using PFI. Let f be the unimodal model trained and tested on GS_VS, with error measure L(y, f). The importance of a feature \(X_{j}\) is then the extent to which it affects L[y, f(X)], on its own and through its interactions with the other features. Permutation importance was first introduced by Breiman (2001)53; the present study uses the model-agnostic version later proposed by Fisher, Rudin, and Dominici (2018)51. Let \(e_{orig}=L(y, f(X))\) be the error with the original data and \(e_{perm}=L(y, f(X_{j,perm}))\) be the error with the jth feature permuted; the feature importance \(FI_j\) is then given by: \(FI_j = e_{\text {orig}} - e_{\text {perm}}\)
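A minimal NumPy sketch of the permutation procedure, following the sign convention stated above. The function name, the toy model, the mean squared error, and the averaging over repeated permutations are assumptions for illustration; the study's model and error measure are not reproduced here.

```python
import numpy as np

def permutation_importance(predict, X, y, loss, n_repeats=5, seed=0):
    """FI_j = e_orig - e_perm, with e_perm averaged over n_repeats shuffles."""
    rng = np.random.default_rng(seed)
    e_orig = loss(y, predict(X))
    importances = np.zeros(X.shape[1])
    for j in range(X.shape[1]):
        e_perm = 0.0
        for _ in range(n_repeats):
            X_perm = X.copy()
            # Shuffling column j breaks its link with y and with other features.
            X_perm[:, j] = rng.permutation(X_perm[:, j])
            e_perm += loss(y, predict(X_perm))
        importances[j] = e_orig - e_perm / n_repeats
    return importances

# Toy setting: the target depends on feature 0 only (illustrative data).
rng = np.random.default_rng(1)
X = rng.normal(size=(200, 3))
y = 3.0 * X[:, 0]

def predict(data):
    return 3.0 * data[:, 0]

def mse(target, pred):
    return float(np.mean((target - pred) ** 2))

fi = permutation_importance(predict, X, y, mse)
```

Under this sign convention with an error measure, an influential feature receives a negative value, because permuting it increases the error; the magnitude of \(FI_j\) ranks the features.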

GradCAM++

For the model trained on VS_IMG, we conducted saliency analysis employing GradCAM++. GradCAM++52 computes the second-order derivative of the predicted probability for the smell category concerning the final convolutional layer:

where \(\phi _i\) and \(\phi _j\) represent the activation maps of the ith and jth feature maps, and \(w_i\) and \(w_j\) denote the weights associated with the ith and jth feature maps. Additionally, \(\alpha _{ij}^c\) represents the weight derived from the global average pooling of gradients across the spatial dimensions.

Subsequently, the gradient-weighted class activation maps undergo global average pooling, given by equation 8, where Z represents the number of spatial locations in the feature map. Finally, the heatmap is generated, as given in equation 9:

The resultant heatmap in GradCAM++ signifies the regions in VS_IMG that held the utmost significance for the smell category prediction. A higher value at a specific location indicates the increased importance of that location in the classification process.

Results and discussion

In this section, we discuss results of classification, clustering and saliency analysis.

Classification results

We trained five different classification models as described earlier; four of these were unimodal models trained on GS_VS, PCA-reduced VS_IMG, and DFF for Subset-IGD and IGD, and the last one was a multimodal model trained on PCA-reduced VS_IMG and DFF. Model performance was evaluated on micro-averaged scores. Numerous experiments were undertaken, adjusting hyperparameters to refine the model and achieve optimal results. All five deep models underwent end-to-end training for 100 epochs, with model weights restored from the epoch with the highest F1 score. Overall, for DFF of IGD and Subset-IGD, CSMLP significantly outperformed all the models utilized by Saini et al.20. A possible reason for this improvement is the large number of free parameters of deep models. These parameters give them the flexibility to fit highly complex data that traditional machine learning models are too simple to fit. The model trained on VS_IMG outperformed the model trained on GS_VS; this result highlights the potential of deep learning in olfaction, which can be exploited further. The fusion model outperformed the unimodal model trained on VS_IMG. This model was trained on PCA-reduced VS_IMG and DFF, indicating that the two feature types complement each other. The findings can be interpreted in two ways: firstly, as an affirmation of the swipe card mechanism for olfaction proposed by Brookes et al.13, indicating potential involvement of vibrational energy in addition to docking; and secondly, as an indication that vibrational spectra, when augmented with physicochemical features, offer enhanced attributes for QSAR studies. It is noteworthy that complex activation mechanisms, extending beyond docking, are observed in biological processes such as cancer immunology54. Additionally, in QSAR studies of biochemical molecules, for example in drug design, it is common practice to employ vibrational spectra as a proxy for structure29.

We concatenated PCA-reduced DFF, with the retained variance of the reduced features varying from 70% to 95%, with the PCA-reduced VS_IMG; Fig. 5a shows the results for the different combinations. Table 1 shows the results of all the classification models.

(a) Change in precision, recall and F1 score with respect to the % variance of the PCA-reduced DFF features. (b) Relevance produced by the PFI method; the contour map represents the feature importance produced by the interpretability method, overlayed on the spectrum of 2-Cyclopenten-1-one, 2-hydroxy-5-ethyl-3-methyl (shown in inset). Red indicates important features, and importance decreases from red to blue. The intensities at wavenumbers 1005-1670 and 2985-3215 cm−1 (ranges 201-334 and 597-643 in dimensionally reduced GS_VS) contribute most significantly.

Clustering results

Both VS_IMG and GS_VS were subjected to dimensionality reduction, yielding two-dimensional data through four distinct reduction techniques. Following this, the two clustering methods were employed on these 2D coordinates to group molecules based on their structural similarity. To identify the most suitable number of clusters, we generated an “elbow” curve depicting intra-cluster variability in relation to the number of clusters; details of the elbow curve can be found in Supplementary file 2. The positioning of olfactory compounds in the two-dimensional space, as defined by the computations from all techniques, is illustrated in Fig. 6 for GS_VS and Fig. 7 for VS_IMG.

Our investigation into cluster composition adopted two approaches: first, analyzing the frequencies of odor notes linked with the molecules, and second, determining the count of molecules featuring specific odor notes. Given the considerable variation in odor occurrences, spanning from 1 to 821, a direct comparison of occurrence numbers would not be reliable, particularly for less frequent odor notes.

As an illustration, employing the UMAP-kmeans methodology resulted in 1151 molecules being assigned to cluster C1. Among these, the odor note “green” occurred 500 times in the entire dataset, and within cluster C1, it appeared 201 times. Hence, approximately 40.2% of molecules associated with the “green” odor were consolidated in C1, forming 17.46% of the total molecules in this cluster.
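The two percentages in this example follow directly from the counts quoted above; the variable names below are illustrative.

```python
# Counts quoted in the text for the UMAP k-means cluster C1.
cluster_size = 1151        # molecules assigned to C1
green_total = 500          # occurrences of "green" in the whole dataset
green_in_cluster = 201     # occurrences of "green" within C1

# Share of all "green" molecules that fall in C1.
share_of_note = 100 * green_in_cluster / green_total
# Share of C1 molecules that carry the "green" note.
share_of_cluster = 100 * green_in_cluster / cluster_size
```

The first ratio normalizes by how common the odor note is, which is what makes rare and frequent notes comparable across clusters.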

In assessing the efficacy of the employed techniques for distinguishing between odors, we calculated the silhouette score for each clustering technique across various features and dimensionality reduction methods55. As depicted in Fig. 8a, it is evident that UMAP exhibited the most pronounced discriminative capability.
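For reference, the silhouette of a point is \((b - a) / \max(a, b)\), where a is its mean distance to the other members of its own cluster and b is its mean distance to the nearest other cluster; the score is the average over all points. The NumPy sketch below illustrates this on four hypothetical 2D points; the function name and data are assumptions, and at least two clusters are required.

```python
import numpy as np

def mean_silhouette(points, labels):
    """Average silhouette coefficient using Euclidean distances."""
    points = np.asarray(points, dtype=float)
    labels = np.asarray(labels)
    dists = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=2)
    clusters = np.unique(labels)
    scores = np.zeros(len(points))
    for i in range(len(points)):
        same = labels == labels[i]
        n_same = same.sum()
        if n_same == 1:
            continue  # a singleton cluster is scored 0 by convention
        # a: mean distance to the other members of the same cluster.
        a = dists[i, same].sum() / (n_same - 1)
        # b: smallest mean distance to the members of any other cluster.
        b = min(dists[i, labels == c].mean() for c in clusters if c != labels[i])
        scores[i] = (b - a) / max(a, b)
    return float(scores.mean())

# Two tight, well-separated pairs of points (illustrative data only).
pts = np.array([[0.0, 0.0], [0.0, 1.0], [10.0, 0.0], [10.0, 1.0]])
score_good = mean_silhouette(pts, [0, 0, 1, 1])  # labels match the geometry
score_bad = mean_silhouette(pts, [0, 1, 0, 1])   # labels cut across the pairs
```

Values close to 1 indicate compact, well-separated clusters, while values near or below 0 indicate overlapping or misassigned clusters. `sklearn.metrics.silhouette_score` provides the same measure.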

(a) Silhouette score for different dimensionality reduction techniques and clustering techniques for GS_VS and VS_IMG. (b) Histogram illustrating the distribution of chemical functional groups across clusters. (A) Alcohol(Al_coo) (B) Alcohol(Al_oh) (C) Alcohol(Al_oh_notert) (D) Aromatic Amine(Ar_n) (E) Aromatic Hydroxyl(Ar_oh) (F) Carboxylic Acid(Coo) (G) Carboxylic Acid(Coo2) (H) Carbonyl O (C_o) (I) Carbonyl O excluding Cooh (C_o_nocoo) (J) Tertiary amines (Nh0) (K) Thiol(Sh) (L) Aldehyde (M) Allylic_oxid (N) Aryl_methyl (O) Benzene (P) Bicyclic (Q) Ester (R) Ether (S) Furan (T) Ketone (U) Ketone_topliss (V) Methoxy (W) Para_hydroxylation (X) Phenol (Y) Phenol_noorthohbond (Z) Unbrch_alkane.

For clarity, we present only the UMAP results for GS_VS here; the clustering results from the other methods and from the VS_IMG features are detailed in Supplementary File 3.

The cluster dC1 (UMAP k-means) was mainly constituted of ‘coffee’, ‘camphor’, ‘sulfuric’, ‘vegetable’, ‘earthy’, ‘meat’, ‘nut’, ‘oily’, and ‘wood’, each with a %ON greater than 50%. In dC2, the ‘vanilla’, ‘spicy’, and ‘sweet’ odors were especially prevalent, and in dC3, ‘sour’, ‘wine’, and ‘apple’ were predominant. On the other hand, cluster hC1 mainly contained molecules with the odors ‘vegetable’, ‘sulfuric’, ‘earthy’, ‘meat’, and ‘nut’, while hC2 contained ‘coconut’ and ‘sour’. In hC3, the odors ‘apple’, ‘balsamic’, ‘fruity’, ‘spicy’, ‘phenolic’, and ‘herbal’ were prominent; they constituted more than 58% of the molecules, although this cluster contained molecules of all 109 odors.

Chemical structures and functions of odorants

Using RDKit, we checked the presence or absence of 85 structures and functions with diverse characteristics within the molecules of the clusters. The complete list of these 85 structures and functions can be found in Supplementary File 4. We focused on chemical functional groups present in at least 5% of the molecules within any of the three clusters. For cluster d in Fig. 6, we obtained 26 such functional groups. Here, we limit the discussion to cluster d; the analysis for the remaining clusters can be found in Supplementary File 4. As shown in Fig. 8b, carbonyl compounds were notably prevalent in cluster dC2. Moreover, the dC3 cluster had a significant presence of odorant molecules with carbonyl groups. Of these clusters, dC3 displayed a notably higher percentage (92.5%) of carbonyl compounds, primarily as the ester functional group. In cluster dC2, molecules with benzene rings and ethers were prevalent, constituting 43% and 58% of the molecules, respectively. In contrast, molecules belonging to other clusters exhibited a scarcity of these chemical groups. Molecules with ether groups were particularly frequent in cluster dC3, comprising 45% of its composition.
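The 5% filtering step can be sketched as below. The sketch assumes the presence or absence flags have already been computed for every molecule (for example with RDKit) and only illustrates the per-cluster percentage and threshold logic; the function name, group names, cluster labels, and toy data are all hypothetical.

```python
from collections import defaultdict

def prevalent_groups(presence, clusters, threshold=5.0):
    """Keep functional groups present in at least `threshold` percent of the
    molecules of at least one cluster.

    presence: one dict per molecule, mapping group name -> True/False
    clusters: cluster label of each molecule, in the same order
    Returns {group: {cluster: percent of that cluster's molecules}}.
    """
    sizes = defaultdict(int)
    counts = defaultdict(lambda: defaultdict(int))
    for flags, label in zip(presence, clusters):
        sizes[label] += 1
        for group, present in flags.items():
            if present:
                counts[group][label] += 1
    table = {}
    for group, per_cluster in counts.items():
        pct = {c: 100.0 * per_cluster.get(c, 0) / sizes[c] for c in sizes}
        if max(pct.values()) >= threshold:
            table[group] = pct
    return table

# Hypothetical toy data: 40 molecules in each of two clusters
clusters = ["dC1"] * 40 + ["dC2"] * 40
presence = [
    {
        "ester": 40 <= i < 70,   # 30 of 40 molecules in dC2
        "benzene": i % 4 == 0,   # 10 of 40 molecules in each cluster
        "thiol": i == 0,         # 1 of 40 molecules in dC1 only
    }
    for i in range(80)
]

table = prevalent_groups(presence, clusters)
for group, pct in table.items():
    print(group, pct)
```

Here the thiol group is dropped because it reaches only 2.5% in its best cluster, while ester and benzene pass the threshold.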

Feature importance analysis

Permutation feature importance (PFI)

Figure 5b illustrates the features identified as important through PFI analysis, superimposed on the spectrum of 2-Cyclopenten-1-one, 2-hydroxy-5-ethyl-3-methyl. The figure highlights that the regions of interest for the model are precisely those carrying the most significant physical information. Specifically, intensity values in the wavenumber ranges 1005–1670 and 2985–3215 cm−1 play a pivotal role in the model's discriminative ability.
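The idea behind PFI can be sketched as follows: shuffle one feature column at a time and record how much the model's score drops. This is a minimal illustration, not the study's pipeline; the toy classifier, the use of accuracy as the score, and the number of repeats are assumptions, and in the setting above each column would correspond to the intensity at one wavenumber bin.

```python
import numpy as np

def permutation_importance(predict, X, y, n_repeats=10, seed=0):
    """Mean drop in accuracy when each feature column is shuffled in turn."""
    rng = np.random.default_rng(seed)
    baseline = np.mean(predict(X) == y)
    importances = np.zeros(X.shape[1])
    for j in range(X.shape[1]):
        drops = []
        for _ in range(n_repeats):
            X_perm = X.copy()
            # Break the link between feature j and the target
            X_perm[:, j] = rng.permutation(X_perm[:, j])
            drops.append(baseline - np.mean(predict(X_perm) == y))
        importances[j] = np.mean(drops)
    return importances

def toy_predict(X):
    """Hypothetical classifier that looks only at feature 2."""
    return (X[:, 2] > 0).astype(int)

# Hypothetical data: 500 samples, 4 features, label driven by feature 2
rng = np.random.default_rng(1)
X = rng.normal(size=(500, 4))
y = (X[:, 2] > 0).astype(int)

importances = permutation_importance(toy_predict, X, y)
print(np.round(importances, 3))
```

Only the feature the classifier actually uses shows a non-zero drop, which is how PFI singles out informative spectral regions.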

GradCAM++

To understand what the model has learned from the spectra, GradCAM++ was employed to generate saliency maps, revealing the most crucial regions for classification. For the 821 molecules exhibiting the most frequent smell, fruity, Fig. 9 displays the average (a) GASF, (b) GADF, (c) MTF, and (d) saliency map, each of size 224 × 224. Additionally, the contour map in (e) overlays the feature importance on the average vibrational spectrum (VS) of these 821 molecules, with importance decreasing from red to blue. Saliency maps were averaged over the molecules to smooth out subtle differences between individual spectra and to identify common, significant patterns of interest56. In Fig. 9d, the red regions represent the most crucial areas. In Fig. 9e, the bands at 1000–1500 cm−1 and 3000–3700 cm−1 stand out as the most important, while regions of the VS with no peaks or with less frequent peaks are shaded in blue. The saliency analysis shows that the model focuses on the most physically informative regions of the VS. This aligns with the intuition that humans may take a similar approach when comparing spectra. Supplementary File 2 provides saliency maps for the three other most frequent smell categories, namely ‘sweet’, ‘green’, and ‘floral’.

Diagram of the saliency analysis of the model trained on VS_IMG. (a), (b), (c), and (d) show the average GASF, GADF, MTF, and saliency map of 821 molecules with the “fruity” odor, each of size 224 × 224. (e) shows the significant signal locations overlaid on the average VS of the 821 molecules. In (a)–(e), red signifies higher values or importance.
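For readers unfamiliar with the GASF and GADF images referred to above, the sketch below shows how a one-dimensional series is turned into the two Gramian Angular Fields as defined by Wang and Oates: the series is rescaled to [−1, 1], each value is mapped to an angle φ = arccos(x), and the images are cos(φ_i + φ_j) and sin(φ_i − φ_j). The synthetic two-peak spectrum and the choice of 224 sample points are assumptions made for illustration, not the preprocessing used in the study.

```python
import numpy as np

def gramian_angular_fields(series):
    """Return (GASF, GADF) images for a 1D series."""
    x = np.asarray(series, dtype=float)
    span = x.max() - x.min()
    if span == 0:
        raise ValueError("series must not be constant")
    # Rescale to [-1, 1] so that arccos is defined everywhere
    x = np.clip(2.0 * (x - x.min()) / span - 1.0, -1.0, 1.0)
    phi = np.arccos(x)
    gasf = np.cos(phi[:, None] + phi[None, :])  # summation field
    gadf = np.sin(phi[:, None] - phi[None, :])  # difference field
    return gasf, gadf

# Hypothetical spectrum: two Gaussian bands sampled at 224 wavenumbers
wavenumbers = np.linspace(400.0, 4000.0, 224)
spectrum = (
    np.exp(-((wavenumbers - 1700.0) / 40.0) ** 2)
    + 0.6 * np.exp(-((wavenumbers - 2950.0) / 60.0) ** 2)
)

gasf, gadf = gramian_angular_fields(spectrum)
print(gasf.shape, gadf.shape)
```

The GASF is symmetric and the GADF is antisymmetric with a zero diagonal, so the two images encode complementary views of the same spectrum.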

Conclusion

We compiled a novel and extensive dataset of VS for odorant molecules; prior studies have used very few molecules to study the relation between VS and odor57. We systematically analyzed the spectra using deep learning-based classification techniques and performed clustering to understand the relation between VS, structure, and complex odor perception. We used GASF-GADF-MTF to transform VS into images, which further improved the classification results. Furthermore, we fused VS with traditional DFF features to improve the classification results. A significant limitation of our study is that it is currently confined to the VS of only 3018 molecules. A more expansive dataset could offer enhanced insights into the relationship between VS and odor. The outcomes of this research support the proposition that vibration plays a significant role in olfaction. Moreover, they suggest that the capabilities of biological olfaction might be replicable through vibration-based sensing and identification. The results of the saliency analysis identify the specific regions on which the model concentrates during decision-making, highlighting the potential of VS in facilitating automated odor classification and artificial odor design for applications such as perfumes and cosmetics. This study also underscores the capacity of deep learning to advance the field of odor classification.

Data availability

The Vibrational Spectra (VS) used in this work were acquired from the Chemistry Webbook hosted by the National Institute of Standards and Technology (NIST)24. A list of molecules of IGD and Subset-IGD (Supplementary File 1), along with implementation details of classification models (Supplementary File 2), are provided as supporting information.

References

Sela, L. & Sobel, N. Human olfaction: A constant state of change-blindness. Exp. Brain Res. 205, 13–29. https://doi.org/10.1007/s00221-010-2348-6 (2010).

Buck, L. & Axel, R. A novel multigene family may encode odorant receptors: A molecular basis for odor recognition. Cell 65, 175–187. https://doi.org/10.1016/0092-8674(91)90418-x (1991).

Brookes, J. C. Olfaction: The physics of how smell works? Contemp. Phys. 52, 385–402. https://doi.org/10.1080/00107514.2011.597565 (2011).

Malcolm Dyson, G. The scientific basis of odour. J. Soc. Chem. Ind. 57, 647–651. https://doi.org/10.1002/jctb.5000572802 (1938).

Amoore, J. E., Johnston, J. W. & Rubin, M. The stereochemical theory of odor. Sci. Am. 210, 42–49. https://doi.org/10.1038/scientificamerican0264-42 (1964).

Turin, L. A spectroscopic mechanism for primary olfactory reception. Chem. Sens. 21, 773–791. https://doi.org/10.1093/chemse/21.6.773 (1996).

Ball, P. Physics of life: The dawn of quantum biology. Nature 474, 272–274. https://doi.org/10.1038/474272a (2011).

Keller, A. & Vosshall, L. B. A psychophysical test of the vibration theory of olfaction. Nat. Neurosci. 7, 337–338. https://doi.org/10.1038/nn1215 (2004).

Block, E., Jang, S., Matsunami, H., Batista, V. S. & Zhuang, H. Reply to turin et al.: Vibrational theory of olfaction is implausible. Proc. Natl. Acad. Sci. 112, https://doi.org/10.1073/pnas.1508443112 (2015).

Franco, M. I., Turin, L., Mershin, A. & Skoulakis, E. M. C. Molecular vibration-sensing component in drosophila melanogaster olfaction. Proc. Natl. Acad. Sci. 108, 3797–3802. https://doi.org/10.1073/pnas.1012293108 (2011).

Haffenden, L., Yaylayan, V. & Fortin, J. Investigation of vibrational theory of olfaction with variously labelled benzaldehydes. Food Chem. 73, 67–72. https://doi.org/10.1016/s0308-8146(00)00287-9 (2001).

Hara, J. Olfactory discrimination between glycine and deuterated glycine by fish. Experientia 33, 618–619. https://doi.org/10.1007/bf01946534 (1977).

Brookes, J. C., Horsfield, A. P. & Stoneham, A. M. The swipe card model of odorant recognition. Sensors (Basel) 12, 15709–15749 (2012).

Keller, A. et al. Predicting human olfactory perception from chemical features of odor molecules. Science 355, 820–826. https://doi.org/10.1126/science.aal2014 (2017).

Shang, L., Liu, C., Tomiura, Y. & Hayashi, K. Machine-learning-based olfactometer: Prediction of odor perception from physicochemical features of odorant molecules. Anal. Chem. 89, 11999–12005. https://doi.org/10.1021/acs.analchem.7b02389 (2017) (PMID: 29027463).

Wakayama, H., Sakasai, M., Yoshikawa, K. & Inoue, M. Method for predicting odor intensity of perfumery raw materials using dose-response curve database. Ind. Eng. Chem. Res. 58, 15036–15044. https://doi.org/10.1021/acs.iecr.9b01225 (2019).

Sharma, A., Kumar, R., Ranjta, S. & Varadwaj, P. K. Smiles to smell: Decoding the structure-odor relationship of chemical compounds using the deep neural network approach. J. Chem. Inf. Model. 61, 676–688. https://doi.org/10.1021/acs.jcim.0c01288 (2021).

Muthyala, R. S., Butani, D., Nelson, M. & Tran, K. Testing the vibrational theory of olfaction: A bio-organic chemistry laboratory experiment using hooke’s law and chirality. J. Chem. Educ. 94, 1352–1356. https://doi.org/10.1021/acs.jchemed.6b00991 (2017).

Putin, E. et al. Deep biomarkers of human aging: Application of deep neural networks to biomarker development. Aging 8, 1021–1033. https://doi.org/10.18632/aging.100968 (2016).

Saini, K. & Ramanathan, V. Predicting odor from molecular structure: a multi-label classification approach. Sci. Rep. 12. https://doi.org/10.1038/s41598-022-18086-y (2022).

Heller, S. R., McNaught, A., Pletnev, I., Stein, S. & Tchekhovskoi, D. Inchi, the iupac international chemical identifier. J. Cheminf. 7, https://doi.org/10.1186/s13321-015-0068-4 (2015).

Weininger, D. Smiles, a chemical language and information system. 1. introduction to methodology and encoding rules. J. Chem. Inf. Comput. Sci. 28, 31–36. https://doi.org/10.1021/ci00057a005 (1988).

NistChemPy: Python API for NIST Chemistry WebBook. https://pypi.org/project/NistChemPy/ (2023). Accessed 15 December 2023.

Nist chemistry webbook. https://webbook.nist.gov/chemistry/cas-ser/. Accessed November 2023.

Frisch, M. J. et al. Gaussian 09.01. Gaussian Inc. Wallingford CT 2009.

Massey, F. J. The kolmogorov-smirnov test for goodness of fit. J. Am. Stat. Assoc. 46, 68–78. https://doi.org/10.1080/01621459.1951.10500769 (1951).

Zhong, S. & Guan, X. Count-based morgan fingerprint: A more efficient and interpretable molecular representation in developing machine learning-based predictive regression models for water contaminants’ activities and properties. Environ. Sci. Technol. 57, 18193–18202. https://doi.org/10.1021/acs.est.3c02198 (2023).

Landrum, G. Rdkit: Open-source cheminformatics. http://www.rdkit.org (2018). Accessed: 2024-07-31.

Turner, D. The eva spectral descriptor. Eur. J. Med. Chem. 35, 367–375. https://doi.org/10.1016/s0223-5234(00)00141-0 (2000).

Wang, Z. & Oates, T. Imaging time-series to improve classification and imputation, https://doi.org/10.48550/ARXIV.1506.00327 (2015).

Turner, D. B., Willett, P., Ferguson, A. M. & Heritage, T. W. J. Comput.-Aided Mol. Des. 13, 271–296. https://doi.org/10.1023/a:1008012732081 (1999).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition, https://doi.org/10.48550/ARXIV.1512.03385 (2015).

Szymański, P. & Kajdanowicz, T. A scikit-based Python environment for performing multi-label classification. ArXiv e-prints (2017). 1702.01460.

Ling, C. X. & Sheng, V. S. Cost-Sensitive Learning, 231–235 (Springer, 2011).

Wang, Y.-X., Ramanan, D. & Hebert, M. Learning to model the tail. In Guyon, I. et al. (eds.) Advances in Neural Information Processing Systems, vol. 30 (Curran Associates, Inc., 2017).

Mikolov, T., Sutskever, I., Chen, K., Corrado, G. & Dean, J. Distributed representations of words and phrases and their compositionality, https://doi.org/10.48550/ARXIV.1310.4546 (2013).

Huang, C., Li, Y., Loy, C. C. & Tang, X. Learning deep representation for imbalanced classification. In 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 5375–5384. https://doi.org/10.1109/CVPR.2016.580 (2016).

Lin, T.-Y., Goyal, P., Girshick, R., He, K. & Dollar, P. Focal loss for dense object detection. IEEE Trans. Pattern Anal. Mach. Intell. 42, 318–327. https://doi.org/10.1109/tpami.2018.2858826 (2020).

Keras Developers. Keras documentation - optimizers (2023). Accessed 7 December 2023.

Ameta, D., Gupta, V., Sathian, R. P., Behera, L. & Sandhan, T. Statistical and deep convolutional feature fusion for emotion detection from audio signal. In 2023 International Conference on Bio Signals, Images, and Instrumentation (ICBSII), 1–7. https://doi.org/10.1109/ICBSII58188.2023.10181060 (2023).

Abdi, H. & Williams, L. J. Principal component analysis. WIREs Comput. Stat. 2, 433–459. https://doi.org/10.1002/wics.101 (2010).

Saeed, N., Nam, H., Al-Naffouri, T. Y. & Alouini, M.-S. A state-of-the-art survey on multidimensional scaling-based localization techniques. IEEE Commun. Surv. Tutor. 21, 3565–3583. https://doi.org/10.1109/comst.2019.2921972 (2019).

Arora, S., Hu, W. & Kothari, P. K. An analysis of the t-sne algorithm for data visualization. In Bubeck, S., Perchet, V. & Rigollet, P. (eds.) Proceedings of the 31st Conference On Learning Theory, vol. 75 of Proceedings of Machine Learning Research, 1455–1462 (PMLR, 2018).

Kobak, D. & Berens, P. The art of using t-SNE for single-cell transcriptomics. Nat. Commun. 10, 5416 (2019).

McInnes, L., Healy, J., Saul, N. & Großberger, L. Umap: Uniform manifold approximation and projection. J. Open Source Softw. 3, 861. https://doi.org/10.21105/joss.00861 (2018).

Rugard, M., Jaylet, T., Taboureau, O., Tromelin, A. & Audouze, K. Smell compounds classification using umap to increase knowledge of odors and molecular structures linkages. PLOS ONE 16, e0252486. https://doi.org/10.1371/journal.pone.0252486 (2021).

Oskolkov, N. tSNE vs. UMAP: Global Structure (2020). Medium. Accessed 17 March 2024.

B, K. A comparative study on k-means clustering and agglomerative hierarchical clustering. Int. J. Emerg. Trends Eng. Res. 8, 1600–1604. https://doi.org/10.30534/ijeter/2020/20852020 (2020).

Abbas, O. A. Comparisons between data clustering algorithms. Int. Arab J. Inf. Technol. 5, 320–325 (2008).

Ordonez, C. Clustering binary data streams with k-means. 12, https://doi.org/10.1145/882085.882087 (2003).

Fisher, A., Rudin, C. & Dominici, F. All models are wrong, but many are useful: Learning a variable’s importance by studying an entire class of prediction models simultaneously. J. Mach. Learn. Res. 20, 1–81 (2019).

Chattopadhay, A., Sarkar, A., Howlader, P. & Balasubramanian, V. N. Grad-cam++: Generalized gradient-based visual explanations for deep convolutional networks. In 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), 839–847, https://doi.org/10.1109/WACV.2018.00097 (2018).

Breiman, L. Random forests. Mach. Learn. 45, 5–32 (2001).

Wade, N. Meet jim allison, the texan who just won a nobel cancer breakthrough. Wired.

Rousseeuw, P. J. Silhouettes: A graphical aid to the interpretation and validation of cluster analysis. J. Comput. Appl. Math. 20, 53–65. https://doi.org/10.1016/0377-0427(87)90125-7 (1987).

Jiang, S. et al. Using atr-ftir spectra and convolutional neural networks for characterizing mixed plastic waste. Comput. Chem. Eng. 155, 107547. https://doi.org/10.1016/j.compchemeng.2021.107547 (2021).

Pandey, N., Pal, D., Saha, D. & Ganguly, S. Vibration-based biomimetic odor classification. Sci. Rep. 11, https://doi.org/10.1038/s41598-021-90592-x (2021).

Acknowledgements

DA acknowledges financial support by ISS Delhi under IKS Centre project(2-28/IKS Center-2/2022-23/54) funded by IKS Division, Ministry of Education at AICTE. DA also acknowledges support from IKSMHA Center IIT Mandi where part of this work was completed. Authors are thankful to Rishav Mishra and Surendra Kumar Dhaka from IIIT Una for their help in data collection and analysis.

Author information

Contributions

D.A. : Conceptualization, Data collection, Analysis, and paper writing. L.B.: Supervision, Interpretation, Review and editing. A.C.: Supervision, Interpretation, Review and editing. T.S. : Conceptualization, Supervision, Interpretation, Review and editing.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ameta, D., Behera, L., Chakraborty, A. et al. Predicting odor from vibrational spectra: a data-driven approach. Sci Rep 14, 20321 (2024). https://doi.org/10.1038/s41598-024-70696-w

DOI: https://doi.org/10.1038/s41598-024-70696-w
