Abstract

Climate change affects plant growth, food production, ecosystems, sustainable socio-economic development, and human health. Several artificial intelligence models are proposed to simulate climate parameters of Jinan City, China, including an artificial neural network (ANN), a recurrent NN (RNN), a long short-term memory neural network (LSTM), a deep convolutional NN (CNN), and a hybrid CNN-LSTM. These models are used to forecast six climatic factors one month ahead. The climate data used in this study span 72 years (1 January 1951–31 December 2022) and include monthly average atmospheric temperature, extreme minimum atmospheric temperature, extreme maximum atmospheric temperature, precipitation, average relative humidity, and sunlight hours. The values of the preceding 12 months are used as input signals to the models. The efficiency of the proposed models is examined using three evaluation criteria: mean absolute error (MAE), root mean square error (RMSE), and correlation coefficient (R). The modeling results indicate that the proposed hybrid CNN-LSTM model achieves higher accuracy than the other models and substantially reduces the one-month-ahead forecasting error. For instance, the RMSE values of the ANN, RNN, LSTM, CNN, and CNN-LSTM models for monthly average atmospheric temperature in the forecasting stage are 2.0669, 1.4416, 1.3482, 0.8015, and 0.6292 °C, respectively. These results show the potential of CNN-LSTM models to improve climate forecasting. Climate prediction can contribute to meteorological disaster prevention and reduction, as well as to flood control and drought resistance.

Similar content being viewed by others

Explore related subjects

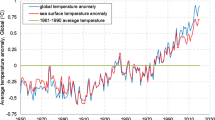

Climate change affects soil development, plant growth, food security, water safety, air quality, traffic safety, tourism, agricultural production, forest fires, floods, ecosystems, renewable energy sources, energy management, sustainable socio-economic development, and human health1,2,3,4,5,6,7,8,9,10,11. In particular, extreme rainfall and temperature events often lead to serious disasters such as droughts, mudslides, and floods12. Climate change is having adverse effects on crop productivity13,14. It is also a main driving factor for the annual growth of trees: the increment of tree growth decreases as the daily maximum temperature rises but increases with relative humidity15. The global average annual air temperature in 2023 was 1.45 ± 0.12 °C higher than pre-industrial levels (1850–1900), and the 2014–2023 average was 1.20 ± 0.12 °C above the same baseline (World Meteorological Organization). The Paris Agreement aims to hold the rise in global average temperature well below 2 °C above pre-industrial levels and to pursue efforts to limit it to 1.5 °C. If humans significantly reduce greenhouse gas emissions and accelerate carbon neutrality, we still have the potential to avoid the worst climate disasters. Meanwhile, robust long-term climate predictions support the formulation of climate management policies. Compared with seasonal forecasting, skillful prediction of monthly averages is of significant social importance, because the predictive information can be incorporated into time-sensitive decisions such as agricultural, urban, energy, and reservoir management practices16. However, current climate model forecasts are not yet satisfactory17. Therefore, accurate climate prediction is an urgent scientific issue that needs to be addressed.
The subseasonal time scale, ranging from approximately two weeks to three months, is often described as a “predictability desert” because of a lack of predictive skill.

Artificial intelligence (AI) models have been widely applied in multidisciplinary fields18,19,20,21,22,23,24,25,26,27,28,29,30,31. As research has deepened, AI models have become able to exploit the temporal context of time series and have been introduced into climate modeling32,33,34,35,36. Machine learning (ML) models provide a new perspective on climate forecasting37,38,39,40. An artificial neural network (ANN) and a recurrent NN (RNN) have been adapted to predict outdoor air temperature in four European cities. ANNs have been used to estimate precipitation in the Brazilian Legal Amazon41. A comparison of measured and predicted air temperature confirmed the accurate training of the neural networks42. ANNs have nonlinear generalization and predictive ability43. A deep learning (DL) algorithm has been employed to predict the El Niño–Southern Oscillation (ENSO); at a six-month lead, its performance is significantly better than that of the multi-model forecasts44. DL NN models have also been used for statistical downscaling45. Long short-term memory (LSTM) networks can learn nonlinear relationships with long-term dependencies, which may be difficult for traditional ML and NN models to capture46,47,48,49. A convolutional LSTM has been developed for precipitation nowcasting50. The correlation skill of a convolutional neural network (CNN) model for the Niño3.4 index is higher than that of current dynamical forecast systems51. CNNs can forecast future storm surges more accurately52, and they have the potential to extract spatial information from high-resolution predictions. CNNs have been successfully applied to predict precipitation53 and tropical cyclone wind radii54. The multi-task learning model MTL-NET, which contains four convolutional layers, two max-pooling layers, and an LSTM layer, has been used to forecast the Indian Ocean Dipole (IOD); it can forecast the IOD seven months ahead, outperforming most climate dynamical models55.
ConvLSTM and U-Net models have been used to forecast marine heatwaves (MHWs) in the South China Sea (SCS) region, and this forecasting system gives better predictions of MHWs56. The Precipitation Nowcasting Network via Hypergraph Neural Networks (PN-HGNN) was first adopted for precipitation forecasting57. The probabilistic method DEUCE integrates Bayesian neural networks and U-Net to predict precipitation58. Climate-invariant machine learning has been proposed for climate prediction59. The latent deep operator network (L-DeepONet) has been used to forecast weather and climate60. GCN-GRU (graph convolutional network and gated recurrent unit) has been used to forecast visibility61. Nevertheless, establishing robust AI models for climate prediction remains a challenging task, because climate data are still not clearly understood.

In summary, artificial intelligence can be used to predict climate variables, and its predictive performance has been evaluated. The LSTM model, as an AI method, has made notable progress in predicting climate factors. CNN is one of the most representative network structures in DL; compared with other DL approaches, it has stronger feature extraction and information mining capabilities. Combining CNN and LSTM can extract spatiotemporal features from input data more effectively, and previous experimental results show that combined CNN-LSTM models achieve better prediction accuracy than standalone LSTM and CNN models62,63,64. Although various ANN, RNN, LSTM, and CNN methods have been employed to predict climate factors, the relative performance of these AI models (ANN, RNN, LSTM, CNN, and CNN-LSTM) in predicting multiple climatic elements in Jinan City has not been established. This study conducts in-depth exploration and experiments to find the best climate simulation model. Using a set of climate datasets, we demonstrate that the CNN-LSTM hybrid model is an effective climate prediction system that can, in principle, be applied to various climate prediction problems.

In this study, CNN-LSTM is applied to predict climate time series. The proposed CNN-LSTM hybrid scheme is tested in Jinan City to predict monthly climate factors. The following research questions are addressed: (1) How skillful is the CNN-LSTM hybrid method compared with traditional ANN methods? (2) What hyperparameters are suitable for the CNN-LSTM hybrid model? (3) Is the CNN-LSTM hybrid solution of practical significance in East China? By answering these questions, we aim to develop more proficient climate forecasting, introduce AI methods into climate forecasting on a broader scale, and inspire future creative work.

Study area and data

Study area

Jinan is located in central Shandong Province, East China, on the southeastern edge of the North China Plain (Fig. 1). It lies in the continental monsoon climate zone. Jinan has excellent agricultural resources and a long tradition of vegetable cultivation, earning it the reputation of “China’s high-quality vegetable basket”. In 2022, its total agricultural output value was 52.6 billion yuan, and its total grain output was 2.962 million tons. Jinan has completed afforestation of 773.3 hectares and forest tending of 5333.3 hectares, and its tree and shrub resources comprise more than 300 types from over 60 families.

Location map of the study area. The map was generated using ArcGIS Pro 2.5, URL: https://www.esriuk.com.

Data and data standardization

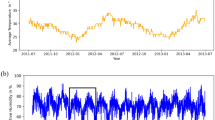

The monthly climatological data during 1951–2022 in Jinan city are obtained from the meteorological platform (http://www.nmic.cn/). The monthly climatological data include average atmospheric temperature (AT), extreme minimum atmospheric temperature, extreme maximum atmospheric temperature, precipitation (P), average relative humidity (RH), and sunlight hours (SH).

The climatological datasets are partitioned chronologically into three subsets: calibration (training, 80%, January 1951 to October 2008), verification (10%, November 2008 to November 2015), and testing (predicting, 10%, December 2015 to December 2022). The three subsets are then standardized to ensure uniformity among the climatological data, which helps to improve the prediction accuracy of the AI models. In the training phase, the optimal parameter values of the models are determined. In the prediction phase, the standardized data are fed to the trained networks to forecast the climatic elements.
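As an arithmetic check on this partition, the Python sketch below counts the months in each period from the stated boundaries (the helper names are illustrative, not from the original study). It suggests that the 80%/10%/10% proportions are approximate, with 694, 85, and 85 of the 864 months, and that each subset is a contiguous block in time.

```python
def month_index(year: int, month: int, start_year: int = 1951) -> int:
    """0-based position of (year, month) in a monthly series starting January start_year."""
    return (year - start_year) * 12 + (month - 1)


def split_sizes():
    """Count months in each period using the boundaries given in the text."""
    n_total = month_index(2022, 12) + 1       # Jan 1951 - Dec 2022
    train_end = month_index(2008, 10) + 1     # training ends Oct 2008
    val_end = month_index(2015, 11) + 1       # verification ends Nov 2015
    sizes = {
        "train": train_end,
        "verification": val_end - train_end,
        "test": n_total - val_end,            # Dec 2015 - Dec 2022
    }
    return n_total, sizes


n_total, sizes = split_sizes()
for name, size in sizes.items():
    print(f"{name}: {size} months ({100 * size / n_total:.1f}%)")
```

Running it prints about 80.3%, 9.8%, and 9.8%. Splitting by date rather than by shuffling keeps the test period strictly after the training period.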

To speed up the training of the AI models and improve prediction accuracy, the climate data are normalized so that their values lie between 0 and 1 (min–max normalization)65. The formula is as follows:

\[ l_m = \frac{L_m - L_{\min}}{L_{\max} - L_{\min}} \qquad (1) \]

where \(l_m\) is the normalized climate value, \(L_m\) is the m-th value of the original climate series, and \(L_{\max}\) and \(L_{\min}\) are the maximum and minimum values of the climate series, respectively.
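The min–max normalization can be sketched in Python with NumPy as follows. The text does not state whether \(L_{\max}\) and \(L_{\min}\) are taken from the whole series or from each subset; this sketch assumes, as one common choice, that they are computed on the training block only and reused for the other blocks, so test values may fall slightly outside [0, 1]. The function names and sample temperatures are hypothetical.

```python
import numpy as np


def fit_min_max(series: np.ndarray) -> tuple[float, float]:
    """Return (L_min, L_max) of a 1-D climate series."""
    return float(np.min(series)), float(np.max(series))


def normalize(series, l_min, l_max):
    """Min-max normalization: l_m = (L_m - L_min) / (L_max - L_min)."""
    return (np.asarray(series, dtype=float) - l_min) / (l_max - l_min)


def denormalize(scaled, l_min, l_max):
    """Invert the normalization to return values in physical units (e.g. deg C)."""
    return np.asarray(scaled, dtype=float) * (l_max - l_min) + l_min


# Hypothetical monthly temperatures (deg C), for illustration only.
train = np.array([-1.5, 2.0, 8.7, 15.9, 21.8, 26.4])
test = np.array([3.1, 27.5])

l_min, l_max = fit_min_max(train)              # statistics from the training block only (assumption)
train_scaled = normalize(train, l_min, l_max)
test_scaled = normalize(test, l_min, l_max)    # 27.5 exceeds the training maximum, so it maps above 1
print(train_scaled.min(), train_scaled.max())
print(np.allclose(denormalize(test_scaled, l_min, l_max), test))
```

The inverse transform matters in practice: errors in physical units (°C, mm, %, h) are typically obtained only after network outputs are mapped back with a function such as `denormalize`.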

Methodology

Artificial neural network (ANN)

A three-layer back-propagation (BP) ANN consists of an input layer, a hidden layer, and an output layer (Fig. 2). The artificial neurons (nodes) in each layer of the BP ANN are fully connected to the nodes in the adjacent layers66.

The output of each node is computed as

\[ Y_i = f\left(\sum_{j=1}^{a} W_{ij} X_j + b_t\right) \qquad (2) \]

where \(Y_i\) is the output of the ith node, \(f\) is the activation (transfer) function, \(a\) is the number of nodes in the preceding layer, \(W_{ij}\) is the connection weight, \(X_j\) is the input from the jth neuron, and \(b_t\) is the node threshold (bias).

The error is calculated from the forecasted and expected outputs. The mean square error (MSE) of the ANN is

\[ \mathrm{MSE} = \frac{1}{m} \sum_{g=1}^{m} \left(D_g - K_g\right)^2 \qquad (3) \]

where m is the number of training samples, and \(D_g\) and \(K_g\) are the expected and actual outputs of the gth sample, respectively.

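Four activation (transfer) functions are typically used: logsig (sigmoid), Eq. (4); purelin (linear), Eq. (5); tansig (tanh), Eq. (6); and the rectified linear unit (ReLU, also called the positive linear transfer function, poslin), Eq. (7):

\[ \mathrm{logsig}(x) = \frac{1}{1 + e^{-x}} \qquad (4) \]

\[ \mathrm{purelin}(x) = x \qquad (5) \]

\[ \mathrm{tansig}(x) = \frac{2}{1 + e^{-2x}} - 1 = \tanh(x) \qquad (6) \]

\[ \mathrm{poslin}(x) = \max(0, x) \qquad (7) \]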
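The four transfer functions can be written directly in NumPy. The sketch below uses the names from the text (which match MATLAB's Deep Learning Toolbox transfer functions) as ordinary Python function names; it is an illustration, not a library API, and it checks numerically that tansig coincides with tanh.

```python
import numpy as np


def logsig(x):
    """Log-sigmoid: 1 / (1 + exp(-x)), output in (0, 1)."""
    return 1.0 / (1.0 + np.exp(-x))


def purelin(x):
    """Linear transfer function: f(x) = x."""
    return x


def tansig(x):
    """Hyperbolic tangent sigmoid: 2 / (1 + exp(-2x)) - 1, identical to tanh(x)."""
    return 2.0 / (1.0 + np.exp(-2.0 * x)) - 1.0


def poslin(x):
    """Positive linear transfer function (ReLU): max(0, x)."""
    return np.maximum(0.0, x)


x = np.linspace(-3.0, 3.0, 7)
print(np.allclose(tansig(x), np.tanh(x)))  # True
print(logsig(np.array([0.0])))             # [0.5]
print(poslin(x))
```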

After model training and validation, the final ANN settings are as follows: 12 input variables, 1 output variable, and 5 hidden-layer neurons; logsig and purelin activation functions; the trainbr training algorithm; a learning rate of 0.001; 100 epochs; and a performance goal of \(10^{-5}\).
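A minimal sketch of the 12-5-1 network's forward pass and MSE criterion is shown below. It assumes logsig in the hidden layer and purelin in the output layer (the text lists both functions without assigning them to layers), uses random placeholder weights, and does not reproduce the Bayesian-regularization training performed by trainbr.

```python
import numpy as np

rng = np.random.default_rng(0)

# Layer sizes from the text: 12 lagged inputs, 5 hidden neurons, 1 output.
N_IN, N_HIDDEN, N_OUT = 12, 5, 1

# Random placeholder weights and thresholds (the study trains these with trainbr).
W1 = rng.normal(scale=0.1, size=(N_HIDDEN, N_IN))
b1 = np.zeros(N_HIDDEN)
W2 = rng.normal(scale=0.1, size=(N_OUT, N_HIDDEN))
b2 = np.zeros(N_OUT)


def logsig(x):
    return 1.0 / (1.0 + np.exp(-x))


def forward(X):
    """X has shape (n_samples, 12); returns predictions of shape (n_samples, 1)."""
    hidden = logsig(X @ W1.T + b1)   # hidden layer: logsig (assumed)
    return hidden @ W2.T + b2        # output layer: purelin, i.e. identity (assumed)


def mse(expected, actual):
    """Mean square error over the m samples."""
    return float(np.mean((expected - actual) ** 2))


X = rng.random((4, N_IN))            # 4 hypothetical normalized input windows
y = rng.random((4, N_OUT))           # matching hypothetical targets
y_hat = forward(X)
print(y_hat.shape, mse(y, y_hat))
```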

Recurrent neural network (RNN)

The lack of memory is the main drawback of feedforward ANNs. To overcome this limitation, recurrent neural networks (RNNs) integrate feedback connections into their structure67. RNNs use an internal state to handle sequential data such as climate time series.

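Information is passed from one time step to the next: the previous hidden state \(s_{n-1}\) and the current input \(x_n\) give the current hidden state \(s_n\) (Eq. (8)), and the current output \(y_n\) is obtained from \(s_n\) (Eq. (9))68:

\[ s_n = f\left(A x_n + B s_{n-1} + b_s\right) \qquad (8) \]

\[ y_n = g\left(W s_n + b_0\right) \qquad (9) \]

where A, B, and W are the weight matrices, \(b_s\) and \(b_0\) are the biases, and f and g are the hidden-layer and output-layer activation functions.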

The RNN parameters are set similarly: 12 input variables, 1 output variable, and 8 hidden-layer neurons. The activation functions are tansig for the hidden layer and purelin for the output layer. The training function is trainlm, the learning function is learngdm, and the performance function is MSE with a goal of \(10^{-5}\).
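The recurrence of Eqs. (8) and (9) can be sketched as an Elman-style loop with 8 tanh hidden units and a linear output. Two assumptions are made: the 12 lagged values are fed one per time step, and the weight names A (input to hidden), B (hidden to hidden), and W (hidden to output) are a hypothetical assignment, since the text does not say which matrix plays which role. The weights are random placeholders.

```python
import numpy as np

rng = np.random.default_rng(1)
N_HIDDEN = 8  # hidden neurons, as stated in the text

# Hypothetical roles: A input-to-hidden, B hidden-to-hidden, W hidden-to-output.
A = rng.normal(scale=0.1, size=(N_HIDDEN, 1))
B = rng.normal(scale=0.1, size=(N_HIDDEN, N_HIDDEN))
W = rng.normal(scale=0.1, size=(1, N_HIDDEN))
b_s = np.zeros(N_HIDDEN)
b_0 = np.zeros(1)


def rnn_forecast(window):
    """Run the recurrence over a 12-month window and return the one-month-ahead output."""
    s = np.zeros(N_HIDDEN)                                   # initial hidden state
    for x_n in window:
        s = np.tanh(A @ np.array([x_n]) + B @ s + b_s)       # hidden update (tansig)
    return float((W @ s + b_0)[0])                           # linear (purelin) output


window = rng.random(12)  # 12 hypothetical normalized monthly values
print(rnn_forecast(window))
```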

Long short-term memory neural network (LSTM)

LSTM is one of the most popular methods in climate prediction and was designed to address the long-term dependency problem of RNNs. Moreover, LSTM can capture a wider range of nonlinear features in climate data through three gates, and it can be trained on climate data to forecast climate change. The input gate determines how much new input is stored in the memory cell; the forget gate controls how much information from the previous memory cell is discarded; and the output gate determines how much of the memory cell is passed to the output. These gates work together to regulate the flow of climate data and help the LSTM capture and process long-term dependencies. By filtering the data through these gates, the LSTM processes both short-term memory (\(s_{n-1}\)) and long-term memory (\(D_{n-1}\)), so it can retain information on diverse timescales, making it suitable for climate forecasting69.

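Figure 3 illustrates the structure of the LSTM cell (unit). The inputs of the cell are the climate input \(k_n\), the hidden state of the previous cell \(s_{n-1}\), and the cell state of the previous cell \(D_{n-1}\). The outputs of the cell are the hidden state \(s_n\) and the cell state \(D_n\), which are regulated by the forget gate \(f_n\), the input gate \(j_n\), and the output gate \(o_n\)70. The gating process and the outputs of the cell are calculated as follows:

\[ f_n = \sigma\left(W_f [s_{n-1}, k_n] + b_f\right) \]

\[ j_n = \sigma\left(W_j [s_{n-1}, k_n] + b_j\right) \]

\[ \tilde{D}_n = \tanh\left(W_D [s_{n-1}, k_n] + b_D\right) \]

\[ D_n = f_n \odot D_{n-1} + j_n \odot \tilde{D}_n \]

\[ o_n = \sigma\left(W_o [s_{n-1}, k_n] + b_o\right) \]

\[ s_n = o_n \odot \tanh(D_n) \]

where W and b denote the weight matrices and bias vectors, \(\sigma\) is the sigmoid function, \(\tilde{D}_n\) is the candidate cell state, \([\cdot, \cdot]\) denotes concatenation, and \(\odot\) denotes element-wise multiplication.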

The LSTM hyperparameters are set as follows: an input size of 12, 1 output, and 100 hidden units; tanh and sigmoid activation functions; the Adam optimizer with a learning rate of 0.001; and a goal of \(10^{-5}\).
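One step of a standard LSTM cell, written with the symbols used in the text (\(k_n\), \(s_{n-1}\), \(D_{n-1}\), \(f_n\), \(j_n\), \(o_n\)), might look as follows. This is a sketch of the textbook LSTM formulation, not the implementation used in the study; the stacked weight layout, the random weights, the one-value-per-step input (the stated input size of 12 could also mean a single 12-feature step), and the linear read-out `w_out` are all assumptions.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def lstm_step(k_n, s_prev, D_prev, W, b):
    """One LSTM step.

    k_n: input at step n; s_prev: previous hidden state; D_prev: previous cell state.
    W: stacked weights of shape (4*H, H + input_dim); b: stacked biases of shape (4*H,).
    """
    H = s_prev.shape[0]
    z = W @ np.concatenate([s_prev, k_n]) + b
    f_n = sigmoid(z[0:H])              # forget gate
    j_n = sigmoid(z[H:2 * H])          # input gate
    o_n = sigmoid(z[2 * H:3 * H])      # output gate
    D_tilde = np.tanh(z[3 * H:])       # candidate cell state
    D_n = f_n * D_prev + j_n * D_tilde
    s_n = o_n * np.tanh(D_n)
    return s_n, D_n


H, INPUT_DIM = 100, 1                  # 100 hidden units as in the text; 1 value per step (assumed)
rng = np.random.default_rng(2)
W = rng.normal(scale=0.05, size=(4 * H, H + INPUT_DIM))
b = np.zeros(4 * H)

s, D = np.zeros(H), np.zeros(H)
for value in rng.random(12):           # 12 hypothetical normalized lagged values
    s, D = lstm_step(np.array([value]), s, D, W, b)

w_out = rng.normal(scale=0.05, size=H)  # hypothetical linear read-out for the next month
print(float(w_out @ s))
```

Because \(o_n \in (0, 1)\) and \(\tanh(D_n) \in (-1, 1)\), every component of the hidden state stays strictly between −1 and 1.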

Convolutional neural network (CNN)

A CNN performs convolutional computations and has a deep structure (Fig. 4). Compared with ANNs, the advantage of a CNN is that increasing its depth improves its ability to extract input features. The CNN operates as follows: (a) after the data are input into the CNN, a large number of features are extracted, and the number of weights is reduced, through several convolution and pooling layers; (b) a fully connected layer transforms the features into regression outputs; and (c) a weight-sharing mechanism assigns the same weights to different neurons, which reduces the number of parameters62. ReLU is used as the activation function. Normalization techniques are used to avoid overfitting. The Adam optimizer is used with a learning rate of 0.001 and a goal of \(10^{-5}\). The model is trained for 200 epochs with a batch size of 20.
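The weight-sharing idea in step (c) can be illustrated with a 1-D convolution along the 12-month axis: one 3 × 1 kernel (three weights plus a bias) is slid over every position, followed by ReLU and 2 × 1 max pooling with stride 1. The kernel values and input are hypothetical, and the convolution is computed as cross-correlation without padding, as deep-learning libraries usually do.

```python
import numpy as np


def conv1d_valid(x, kernel, bias=0.0):
    """1-D convolution (cross-correlation), stride 1, no padding.

    The same kernel weights are reused at every position: this is weight sharing.
    """
    k = len(kernel)
    return np.array([x[i:i + k] @ kernel for i in range(len(x) - k + 1)]) + bias


def relu(x):
    return np.maximum(0.0, x)


def max_pool1d(x, size=2, stride=1):
    return np.array([x[i:i + size].max() for i in range(0, len(x) - size + 1, stride)])


x = np.arange(12, dtype=float) / 11.0     # 12 hypothetical normalized lagged values
kernel = np.array([0.2, 0.5, 0.3])        # one 3 x 1 kernel: 3 shared weights
feature_map = max_pool1d(relu(conv1d_valid(x, kernel)))
print(feature_map.shape)                  # (9,)
```

A fully connected layer mapping the 12 inputs to the same 10 convolution outputs would need 120 weights, whereas the convolution reuses the same 3, which is the parameter reduction described above.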

CNN-LSTM

Figure 5 describes the climate simulation process using CNN-LSTM. The CNN learns the features of climate data through weight sharing and local receptive fields. The LSTM extracts the output features of each time step through its gate mechanism. The time-distributed fully connected layer obtains the outputs of all time steps, not only that of the last one. On this basis, we propose a regression system for climate modeling that combines CNN and LSTM networks.

The proposed CNN-LSTM model is fed the climate data directly as a 3-D array of shape 12 × 1 × 1. The first convolutional layer uses 3 × 1 kernels with a stride of 1 to extract abstract features of the climate data. The second layer is a max-pooling layer with a 2 × 1 window and a stride of 1. The third and fourth layers are batch normalization and ReLU, respectively. Convolutional layer 2 has 32 kernels of size 3 × 1 and is followed by a max-pooling layer of size 2 × 1 with a stride of 1 to reduce the size of the feature matrix. One LSTM layer with 100 neurons is then used, and a fully connected layer maps the LSTM outputs to features. Finally, a regression layer predicts the climatic element. The model is trained for 200 epochs with a batch size of 20 using the Adam optimizer with a learning rate of 0.005.
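Assuming no zero padding (the text does not specify the padding), the length of the 12-month axis after each layer of the CNN-LSTM stack follows the usual formula \(\lfloor (n + 2p - k)/s \rfloor + 1\). The short sketch below applies it layer by layer; the layer list mirrors the description above, and `conv_out_len` is a hypothetical helper.

```python
def conv_out_len(length: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Output length of a convolution or pooling window along one axis."""
    return (length + 2 * padding - kernel) // stride + 1


# Layers along the 12-month axis (no padding assumed).
layers = [
    ("conv 3x1, stride 1", 3, 1),
    ("max-pool 2x1, stride 1", 2, 1),
    ("conv 3x1 (32 kernels), stride 1", 3, 1),
    ("max-pool 2x1, stride 1", 2, 1),
]

length = 12
for name, kernel, stride in layers:
    length = conv_out_len(length, kernel, stride)
    print(f"{name:32s} -> length {length}")
# Batch normalization and ReLU do not change the length.
```

Under this assumption the LSTM layer receives a 6-step sequence with 32 channels (12 → 10 → 9 → 7 → 6). If the two convolutions instead preserved the length ("same" padding) while the pooling layers did not, the LSTM would see 10 steps.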

Experiment

Cross-validation

Fivefold cross-validation is used to reduce overfitting. The climate data used for training and validation are divided into five parts, and the process is repeated five times: in the first fold, one part (20%) is used for validation and the remaining 80% for training; in the second fold, a different 20% is used for validation; and so on. Because each fold trains on a different subset of the data, the models do not lean toward any single training set, which helps prevent overfitting71.
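Fivefold splitting of the sample indices can be sketched as below. The text does not say whether the folds are shuffled; this sketch assumes contiguous, unshuffled folds, and the sample count of 100 is hypothetical.

```python
import numpy as np


def kfold_indices(n_samples: int, n_folds: int = 5):
    """Yield (train_idx, val_idx) for contiguous, unshuffled folds."""
    folds = np.array_split(np.arange(n_samples), n_folds)
    for k in range(n_folds):
        val_idx = folds[k]
        train_idx = np.concatenate([folds[i] for i in range(n_folds) if i != k])
        yield train_idx, val_idx


n = 100  # hypothetical number of samples
for k, (train_idx, val_idx) in enumerate(kfold_indices(n), start=1):
    print(f"fold {k}: train={len(train_idx)}, validation={len(val_idx)}")
```

Each fold leaves out a different 20% for validation, and every sample is used for validation exactly once.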

Evaluation criteria

The performance of the models is evaluated by comparing the observed and simulated climate values using three metrics: the Pearson correlation coefficient (R), the root mean square error (RMSE), and the mean absolute error (MAE), which are used to compare the ANN, RNN, LSTM, CNN, and CNN-LSTM models72. They are calculated as follows:

\[ R = \frac{\sum_{m=1}^{I} (L_m - \overline{L})(O_m - \overline{O})}{\sqrt{\sum_{m=1}^{I} (L_m - \overline{L})^2 \sum_{m=1}^{I} (O_m - \overline{O})^2}} \]

\[ \mathrm{RMSE} = \sqrt{\frac{1}{I} \sum_{m=1}^{I} (L_m - O_m)^2} \]

\[ \mathrm{MAE} = \frac{1}{I} \sum_{m=1}^{I} \left|L_m - O_m\right| \]

where \(L_m\) is the observed climate value, \(O_m\) is the predicted climate value, I is the length of the climate series, and \(\overline{L}\) and \(\overline{O}\) are the averages of the observed and forecasted climate values, respectively.
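The three criteria translate directly into NumPy. The sketch below follows the symbols defined above (\(L_m\) observed, \(O_m\) predicted, I values); the two short arrays are made up so the results can be checked by hand.

```python
import numpy as np


def pearson_r(observed, predicted):
    """Pearson correlation coefficient R."""
    obs, pred = np.asarray(observed, float), np.asarray(predicted, float)
    lo, po = obs - obs.mean(), pred - pred.mean()
    return float(np.sum(lo * po) / np.sqrt(np.sum(lo ** 2) * np.sum(po ** 2)))


def rmse(observed, predicted):
    """Root mean square error."""
    diff = np.asarray(observed, float) - np.asarray(predicted, float)
    return float(np.sqrt(np.mean(diff ** 2)))


def mae(observed, predicted):
    """Mean absolute error."""
    diff = np.asarray(observed, float) - np.asarray(predicted, float)
    return float(np.mean(np.abs(diff)))


L = np.array([1.0, 2.0, 3.0, 4.0])   # hypothetical observed values
O = np.array([1.5, 1.5, 3.5, 3.5])   # hypothetical predictions
print(pearson_r(L, O), rmse(L, O), mae(L, O))
```

For these arrays every absolute error is 0.5, so RMSE and MAE are both 0.5, and R = 2/√5 ≈ 0.894. In general RMSE ≥ MAE, with equality only when all absolute errors are equal, which makes the pair a quick sanity check on reported results.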

Simulating monthly average atmospheric temperature

The models have an input–output structure in which the output is the climatic element in month t + 1 and the inputs are the climatic element in month t and the preceding months. Periodicity analysis indicates that the cycle of the climate factors is 11; in addition, there are 12 months in a year. Therefore, 12 input variables are used. The models simulate and forecast the climate one time step (one month) ahead: the data from the past 12 months are the input of each of the five models, and the data for the next month are the output.
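Building the 12-month input windows and one-month-ahead targets can be sketched as follows; `make_lagged_pairs` is a hypothetical helper, and the toy series simply counts months.

```python
import numpy as np


def make_lagged_pairs(series, n_lags: int = 12):
    """Build (inputs, target) pairs: the values of months t-11..t predict month t+1."""
    series = np.asarray(series, dtype=float)
    X = np.stack([series[i:i + n_lags] for i in range(len(series) - n_lags)])
    y = series[n_lags:]
    return X, y


monthly = np.arange(1, 25, dtype=float)  # 24 hypothetical monthly values
X, y = make_lagged_pairs(monthly)
print(X.shape, y.shape)                  # (12, 12) (12,)
print(X[0], y[0])                        # months 1-12 -> month 13
```

Applied to the full 864-month record, this yields 852 input–target pairs, because the first 12 months can only serve as inputs.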

Table 1 shows the simulation results of the different ANN training algorithms. Their training performances differ markedly. Trainbr has the highest R (0.9894), the lowest RMSE (1.8199 °C), and the lowest MAE (1.4787 °C). The trainbr algorithm also performs well in the validation stage, with R, RMSE, and MAE of 0.9870, 1.9923 °C, and 1.6352 °C, respectively. In the prediction phase, the R, RMSE, and MAE of trainbr are 0.9907, 2.0669 °C, and 1.8042 °C, respectively, indicating good generalization ability.

Table 2 shows the simulation results of different combinations of transfer functions (tansig (T), purelin (PU), logsig (L), and poslin (PO)). The logsig-purelin combination has the lowest training RMSE and MAE and the highest R, indicating a successful combination. This combination also performs well in the cross-validation stage and generalizes well in the prediction stage.

Table 3 lists the R, RMSE, and MAE achieved by the AI models (ANN, RNN, LSTM, CNN) and the proposed CNN-LSTM model in the training, verification, and predicting stages. In the predicting stage, the proposed CNN-LSTM approach predicts monthly average atmospheric temperature with an R of 0.9981 and the lowest errors (RMSE of 0.6292 °C and MAE of 0.5048 °C), which indicates that it is more effective than the other AI models for this task. Because a lower prediction error corresponds to higher prediction accuracy, the hybrid CNN-LSTM model performs better than the other four models in simulating average atmospheric temperature one month ahead.
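To quantify how much the hybrid model reduces the error, the relative RMSE reduction of CNN-LSTM with respect to each other model can be computed from the forecasting-stage RMSE values for monthly average atmospheric temperature given in the abstract. This is simple arithmetic on reported numbers, not an additional result.

```python
# Forecasting-stage RMSE (deg C) for monthly average atmospheric temperature.
rmse = {"ANN": 2.0669, "RNN": 1.4416, "LSTM": 1.3482, "CNN": 0.8015}
rmse_cnn_lstm = 0.6292

for model, value in rmse.items():
    reduction = 100.0 * (value - rmse_cnn_lstm) / value
    print(f"CNN-LSTM vs {model}: RMSE reduced by {reduction:.1f}%")
```

This gives reductions of about 69.6% (ANN), 56.4% (RNN), 53.3% (LSTM), and 21.5% (CNN).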

Figure 6 shows the monthly average atmospheric temperature predicted by the different models. The values predicted by the hybrid CNN-LSTM model are very close to the observed values. All five models efficiently capture the trends and peaks of monthly average atmospheric temperature; however, the CNN-LSTM model performs best.

Comparison of observed and simulated monthly extreme minimum atmospheric temperature

Table 4 compares the observed and simulated monthly extreme minimum atmospheric temperature for the best ANN, RNN, LSTM, CNN, and CNN-LSTM models one month ahead in the three periods. According to R, RMSE, and MAE, all five models perform well in the calibration (training), verification, and predicting periods, and the CNN-LSTM model gives the most accurate one-step-ahead forecasts of monthly extreme minimum atmospheric temperature. When the CNN-LSTM model is trained with 80% of the data, its R, RMSE, and MAE are 0.9971, 0.8350 °C, and 0.6583 °C, respectively. The CNN-LSTM also forecasts the monthly extreme minimum atmospheric temperature well, with an R of 0.9970, an RMSE of 0.8287 °C, and an MAE of 0.5979 °C.

Figure 7 shows the one-step-ahead forecasts of extreme minimum atmospheric temperature by the five AI models in the prediction period. All five models capture the temporal variations and peaks of monthly extreme minimum atmospheric temperature; however, the CNN-LSTM model performs best.

Comparison of observed and simulated monthly extreme maximum atmospheric temperature

Table 5 shows the R, RMSE, and MAE of the five models for one-step-ahead forecasts in the training, verification, and testing periods. The CNN-LSTM model has the smallest errors and the highest correlation in simulating monthly extreme maximum atmospheric temperature in the calibration, verification, and predicting periods. In the predicting stage, its R, RMSE, and MAE for one-step-ahead forecasts are 0.9987, 0.4932 °C, and 0.3931 °C, respectively. The one-month-ahead forecasts of the ANN, RNN, LSTM, and CNN models are also acceptable, but the CNN-LSTM model performs better: for instance, the RMSE values of the best ANN, RNN, LSTM, and CNN models in the predicting period are 2.2345 °C, 2.2979 °C, 2.1666 °C, and 0.8212 °C, respectively.

Figure 8 compares the observed extreme maximum atmospheric temperature with the one-step-ahead forecasts of the ANN, RNN, LSTM, CNN, and CNN-LSTM models in the predicting stage at the monthly time scale. The CNN-LSTM model forecasts the extreme maximum temperature peaks with good accuracy, and in general its one-step forecasts are better than those of the ANN, RNN, LSTM, and CNN models.

Comparison of observed and simulated monthly precipitation

Table 6 lists the R, MAE, and RMSE of the five models. The errors (MAE and RMSE) of the CNN-LSTM model are smaller than those of the other four models (ANN, RNN, LSTM, and CNN). In the predicting period, the RMSE and MAE of the CNN-LSTM model are 8.1762 mm and 6.7051 mm, respectively, and its R value of 0.9962 is higher than those of the other models. From ANN to CNN-LSTM, RMSE and MAE decrease and R increases.

Figure 9 shows the precipitation predicted by the five models together with the observed precipitation. The overall performance of the CNN-LSTM model is clearly superior to that of the ANN, RNN, LSTM, and CNN models, and the CNN and CNN-LSTM forecasts follow the observed trends more closely than those of the ANN, RNN, and LSTM models. Moreover, Fig. 9 indicates that the ANN model performs worse than the RNN and LSTM models: it cannot efficiently capture the trends and peaks of monthly precipitation and correlates poorly with the observations. The ANN simulation therefore does not meet the climate simulation objective, whereas the CNN and CNN-LSTM simulations do.

Comparison of observed and simulated monthly average relative humidity

Table 7 lists the R, RMSE, and MAE of the five models. The CNN-LSTM model, with the smallest RMSE and MAE and the highest R, is selected as the best model in both the verification and predicting stages. In the predicting stage, its RMSE, MAE, and R are 0.6615%, 0.5093%, and 0.9984, respectively.

Figure 10 shows the superior performance of the CNN-LSTM over the ANN, RNN, LSTM, and CNN models in forecasting monthly average relative humidity. The CNN-LSTM hybrid model has been able to simulate and predict relative humidity peaks well.

Comparison of observed and simulated monthly sunlight hours

Table 8 shows the R, RMSE, and MAE values of the ANN, RNN, LSTM, CNN, and CNN-LSTM models in the training, verification, and predicting stages. In the predicting stage, the optimized CNN-LSTM model has the best assessment criteria, with R = 0.9984, RMSE = 2.9058 h, and MAE = 2.3794 h.

Figure 11 compares the observed monthly sunlight (sunshine) hours with the one-month-ahead predictions of the ANN, RNN, LSTM, CNN, and CNN-LSTM models during the predicting period. The values forecasted by the CNN-LSTM model lie closer to the observed values than those of the ANN, RNN, LSTM, and CNN models, which indicates better performance of the CNN-LSTM model.

Comparison with other literatures

Tables 3, 4, 5, 6, 7 and 8 show that the CNN-LSTM model improves on the CNN and LSTM models, with smaller RMSE and MAE and larger R in the training, verification, and testing stages. Overall, model performance can therefore be ranked from high to low as CNN-LSTM > CNN > LSTM > RNN > ANN. The generalization ability of the five models is consistent with this ranking of fitting performance. The CNN-LSTM model offers the flexibility to capture the inherent features of climate data.

In addition to the error metrics described above, we calculated the average running time of each model over 100 random runs; the results are recorded in Table 9. Among all the models, CNN-LSTM is the most computationally expensive and ANN is the least. However, CNN-LSTM offsets this extra cost with higher climate prediction accuracy.

Different AI models have been applied to climate prediction in different regions. For a feed-forward neural network (FNN) predicting monthly minimum atmospheric temperature (Tmin) in Türkiye, the RMSE and R2 values in the testing process were about 1.09 °C and 0.98, respectively; for monthly maximum air temperature (Tmax), R2 was 0.9928 and RMSE was 0.9136 °C. The FNN was more successful than the Elman neural network (ENN) in modeling Tmin, Tmax, and monthly mean air temperature (T)73. A diurnal regressive NN algorithm has been presented to predict urban air temperature in the U.S., with a reported bias of 0.8 K, an RMSE of 2.6 K, and an R2 of 0.8 for this model74. The GATG model for atmospheric temperature forecasting integrates a graph attention network (GAT) module and a gated recurrent unit (GRU) cell; for a 96-h prediction length in China, its RMSE, MAE, and correlation coefficient (CORR) are about 3.161, 2.849, and 0.831, respectively75. The CEEMD-BiLSTM model, built from complementary ensemble empirical mode decomposition (CEEMD) and a bidirectional LSTM (BiLSTM), has been used to predict monthly temperature in Zhengzhou City; its average relative error of about 0.22% indicates good prediction quality76. The CEEMDAN–BO–BiLSTM model has an MAE of 1.17 and an RMSE of 1.43 for monthly mean air temperature in Jinan City77. The prediction accuracy of our model is comparable to that of these models.

Precipitation (P) series are random and nonlinear, which makes accurate prediction of P very difficult. To improve P forecast accuracy, P data have been decomposed into subcomponents using different decomposition approaches (wavelet transform (WT), time-varying filtering (TVF-EMD), and CEEMD), and the ENN has then been used to construct WT-ENN, TVF-EMD-ENN, and CEEMD-ENN models to forecast the subcomponents. Applied to P series in twelve regions of China, TVF-EMD-ENN gave better predictions than WT-ENN and CEEMD-ENN78. A hybrid monthly P prediction model (ABR-LSO) has been built by integrating the AdaBoostRegressor (ABR) with the Lion Swarm Optimization (LSO) algorithm; its RMSE and MAE in Idukki, India, are 52.39 and 39.89, respectively79. A multiscale LSTM model with an attention mechanism (MLSTM-AM) has been employed to improve the accuracy of monthly precipitation prediction; its average Nash–Sutcliffe efficiency (NSE) and relative absolute error (RAE) in the YRB are 0.66 and 0.35, and it performs better than LSTM, MLSTM, and multiple linear regression (MLR)80. Our model has higher prediction accuracy than these models.

Two ANN models, radial basis function (RBF) and multilayer perceptron (MLP), have been employed to forecast hourly and monthly meteorological variables in Ongole and Hyderabad, India. The RBF and MLP achieved about 91–96% accuracy in forecasting air temperature (AT), soil temperature (ST), and relative humidity (RH), and their predictions correlated strongly with the recorded data, with correlations between about 0.61 and 0.94 for these models81.

Different machine learning algorithms have been used to predict AT and RH in Terengganu state, Malaysia. The multilayer perceptron (MLP-NN) performed well in forecasting daily RH and AT, with R of about 0.633 and 0.713, respectively, and it can also forecast monthly AT, with R of about 0.846. For monthly RH, the radial basis function network (RBF-NN) was more efficient than the other models, with R of about 0.711. These results indicate great potential for predicting AT and RH with acceptable accuracy using either ANN architecture82. ANNs have been used to predict monthly relative humidity (RH) in Sivas Province, Turkey, with R, MAE, and RMSE values ranging between about 0.97–0.98, 1.92–2.59, and 2.47–3.39, respectively; the results show that the ANN provides satisfactory predictions of monthly RH83. Seasonal autoregressive integrated moving average (SARIMA) and ANN models have been used to forecast monthly RH in Delhi, India. The SARIMA model predicted RH with an RMSE of about 6.0 and an MAE of about 4.6, whereas the ANN model achieved an RMSE of about 4.7 and an MAE of about 3.4, so the ANN is more reliable than SARIMA for forecasting RH84. LSTM and an adaptive neuro-fuzzy inference system (ANFIS) have been used to forecast RH one day ahead in Turkey. In the testing period in Erzurum province, the MAE, R, and RMSE of the LSTM were 5.76%, 0.892, and 7.51%, respectively, and those of the ANFIS were 5.95%, 0.887, and 7.67%, respectively. Both ANFIS and LSTM performed satisfactorily in RH prediction85. Our model has lower prediction errors than these models.

ANNs have been used to estimate sunshine duration (SD) in Iran; comparing measured and estimated SD gave an average coefficient of determination (R²) of 0.81, a mean bias error (MBE) of 0.1%, and an RMSE of 6.3%86. Three ANN models, namely the general regression NN (GRNN), MLP, and RBF, have been used to estimate SD in Turkey. For MLP, GRNN, and RBF, respectively, the average estimated SD was about 6.795 h, 6.772 h, and 6.672 h; the MBE was 0.072 h, 0.049 h, and −0.054 h; the mean absolute percentage error (MAPE) was 9.36%, 9.88%, and 10.75%; the RMSE was 0.626, 0.797, and 1.128; the percentage root mean square error (%RMSE) was 9.30%, 11.75%, and 16.78%; and R was 0.956, 0.953, and 0.890. These statistical indicators show that the MLP and GRNN models produce better results than the RBF87. Our model has higher prediction accuracy than these models.
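The studies above report accuracy with several verification statistics (MBE, MAE, RMSE, MAPE, %RMSE, and R). As a rough, hedged illustration of how these quantities relate, the sketch below computes them for paired observed and predicted series. It assumes common textbook definitions: MBE as the mean of (predicted − observed), %RMSE as RMSE divided by the observed mean, and R as the Pearson correlation coefficient. The cited papers may normalize some of these differently, and the function name `evaluation_metrics` is hypothetical.

```python
import math


def evaluation_metrics(observed, predicted):
    """Compute common forecast-verification metrics for paired series.

    Assumes equal-length, non-empty lists; MAPE additionally assumes that
    no observed value is zero.
    """
    n = len(observed)
    if n == 0 or n != len(predicted):
        raise ValueError("series must be non-empty and of equal length")
    errors = [p - o for o, p in zip(observed, predicted)]
    mean_obs = sum(observed) / n
    mean_pred = sum(predicted) / n

    mbe = sum(errors) / n                               # mean bias error: sign shows over/under-estimation
    mae = sum(abs(e) for e in errors) / n               # mean absolute error
    rmse = math.sqrt(sum(e * e for e in errors) / n)    # root mean square error
    mape = 100 * sum(abs(e / o) for e, o in zip(errors, observed)) / n  # mean absolute percentage error
    pct_rmse = 100 * rmse / mean_obs                    # RMSE as a percentage of the observed mean

    # Pearson correlation coefficient R between observed and predicted values
    cov = sum((o - mean_obs) * (p - mean_pred) for o, p in zip(observed, predicted))
    var_o = sum((o - mean_obs) ** 2 for o in observed)
    var_p = sum((p - mean_pred) ** 2 for p in predicted)
    r = cov / math.sqrt(var_o * var_p)

    return {"MBE": mbe, "MAE": mae, "RMSE": rmse,
            "MAPE": mape, "%RMSE": pct_rmse, "R": r}
```

For example, `evaluation_metrics([2.0, 4.0, 6.0, 8.0], [2.5, 3.5, 6.5, 7.5])` gives MBE = 0, MAE = RMSE = 0.5, and %RMSE = 10: the errors of ±0.5 cancel in the bias but not in the absolute or squared terms, which is why MBE alone can hide sizeable errors.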

In this article, we propose a more effective method for predicting the monthly climate of Jinan city, based on the fusion of CNN and LSTM models. To further evaluate the predictive effectiveness of the CNN-LSTM model, its prediction results are compared with those of the other four models (ANN, RNN, LSTM, and CNN). The performance comparison of the five models shows that the CNN-LSTM handles nonlinear and non-stationary climate signals better than the others. In processing climate time series, the LSTM is suited to forecasting nonlinear climate data and, when combined with the CNN, further improves its forecast accuracy. In addition, the LSTM can capture dependencies between earlier and later values in a climate series, which benefits the prediction of non-smooth, nonlinear climate time series. By combining the advantages of these two models, the CNN-LSTM performs best among the five models, with better predictive performance and higher accuracy, indicating that it is effective and feasible for forecasting nonlinear, non-stationary climate data. By proposing, testing, and evaluating this method, we aim to provide decision support for future rational planning, especially flood control and disaster reduction.
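The fusion described above can be pictured as a convolutional feature extractor feeding a recurrent layer. The NumPy sketch below shows one forward pass under assumed settings: a 12-month window of a single variable, one Conv1D layer (3-month kernel, 8 filters, ReLU), non-overlapping max pooling, a single LSTM layer with 16 hidden units, and a linear output for the next month. These layer sizes, the gate ordering, and all function names (`conv1d_relu`, `max_pool1d`, `lstm_last_hidden`, `cnn_lstm_forward`, `init_params`) are illustrative assumptions rather than the configuration used in this study, and the weights are random and untrained.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def conv1d_relu(x, kernel, bias):
    """Valid 1-D convolution over time followed by ReLU.

    x: (T, C_in); kernel: (K, C_in, C_out); bias: (C_out,) -> (T - K + 1, C_out)
    """
    k = kernel.shape[0]
    steps = x.shape[0] - k + 1
    out = np.empty((steps, kernel.shape[2]))
    for t in range(steps):
        # contract the (K, C_in) window against the kernel's first two axes
        out[t] = np.tensordot(x[t:t + k], kernel, axes=([0, 1], [0, 1])) + bias
    return np.maximum(out, 0.0)


def max_pool1d(x, size=2):
    """Non-overlapping max pooling along time; drops a trailing partial window."""
    steps = x.shape[0] // size
    return x[:steps * size].reshape(steps, size, x.shape[1]).max(axis=1)


def lstm_last_hidden(seq, W, U, b):
    """Run one LSTM layer over seq (T, D) and return the final hidden state.

    W: (D, 4H), U: (H, 4H), b: (4H,); assumed gate order: input, forget, cell, output.
    """
    hidden = U.shape[0]
    h = np.zeros(hidden)
    c = np.zeros(hidden)
    for x_t in seq:
        z = x_t @ W + h @ U + b
        i = sigmoid(z[:hidden])                   # input gate
        f = sigmoid(z[hidden:2 * hidden])         # forget gate
        g = np.tanh(z[2 * hidden:3 * hidden])     # candidate cell values
        o = sigmoid(z[3 * hidden:])               # output gate
        c = f * c + i * g                         # update the cell (long-term) state
        h = o * np.tanh(c)                        # expose a gated view of the cell state
    return h


def cnn_lstm_forward(window, params):
    """Predict the next month's value from a (12, C_in) window of lagged inputs."""
    feats = conv1d_relu(window, params["conv_k"], params["conv_b"])  # local patterns
    feats = max_pool1d(feats, size=2)                                 # shorter sequence
    h = lstm_last_hidden(feats, params["W"], params["U"], params["b"])  # temporal dependencies
    return float(h @ params["dense_w"] + params["dense_b"])           # scalar forecast


def init_params(c_in=1, filters=8, kernel=3, hidden=16, seed=0):
    """Random, untrained weights with illustrative sizes only."""
    rng = np.random.default_rng(seed)
    return {
        "conv_k": rng.normal(0, 0.1, (kernel, c_in, filters)),
        "conv_b": np.zeros(filters),
        "W": rng.normal(0, 0.1, (filters, 4 * hidden)),
        "U": rng.normal(0, 0.1, (hidden, 4 * hidden)),
        "b": np.zeros(4 * hidden),
        "dense_w": rng.normal(0, 0.1, hidden),
        "dense_b": 0.0,
    }
```

In practice such a model would be built and trained (for example by backpropagation through time) with a deep learning framework; the explicit loops here only make the data flow visible. The convolution summarizes short local patterns across adjacent months, and the LSTM carries that information forward through its gated cell state.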

Conclusion

(1) This study investigates the potential of artificial intelligence models for predicting monthly climate factors in Jinan city over 1951–2022. A novel hybrid model, CNN-LSTM, is presented to predict climatic elements accurately from historical data, and its effectiveness is validated with climate data from Jinan city. Its performance is assessed with three evaluation indicators, namely MAE, R, and RMSE.

(2) The CNN-LSTM is compared with the ANN, RNN, LSTM, and CNN models using the same monthly climate data, with the lag times of the input variables derived from the cycle of the monthly climate data. The CNN-LSTM model outperforms the other artificial intelligence models, with the lowest RMSE and MAE values and the highest R value, and its predictions are superior in both generalization ability and precision. This indicates the effectiveness of the proposed CNN-LSTM model over existing artificial intelligence models.

(3) Extreme climate is of great importance in climate change monitoring, and the CNN-LSTM model also outperforms the other four models in predicting extreme climate. The results show that extreme air temperature is predicted more accurately than precipitation. The CNN model comes close to the CNN-LSTM model on some individual metrics, but the error statistics show that the CNN-LSTM performs more stably. The CNN-LSTM can reasonably predict the magnitude and monthly variations of climate elements, capture the peaks in the climate data, and meet the evaluation criteria for model performance, making it a promising approach for climate simulation.
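Conclusion (2) notes that the input lags follow the cycle of the monthly data, and the study uses 12-month delayed inputs to forecast one month ahead. A minimal sketch of how such supervised samples could be built from a monthly series is shown below, together with a chronological train/test split. The 80/20 split ratio and the function names `make_lagged_samples` and `chronological_split` are assumptions for illustration; the study's actual split is not stated in this section.

```python
def make_lagged_samples(series, lags=12, horizon=1):
    """Turn a monthly series into (input window, target) pairs.

    Each input holds `lags` consecutive months, and the target is the value
    `horizon` months after the last month in the window.
    """
    if lags < 1 or horizon < 1:
        raise ValueError("lags and horizon must be positive")
    samples = []
    for start in range(len(series) - lags - horizon + 1):
        window = series[start:start + lags]
        target = series[start + lags + horizon - 1]
        samples.append((window, target))
    return samples


def chronological_split(samples, train_fraction=0.8):
    """Split without shuffling so that all test samples follow the training ones.

    The default 80/20 ratio is an illustrative assumption.
    """
    cut = int(len(samples) * train_fraction)
    return samples[:cut], samples[cut:]
```

With 72 years of records (864 months), a 12-month window and a one-month horizon yield 852 samples. Splitting chronologically rather than randomly keeps every test target later than every training target, which mimics real forecasting.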

Limitations and suggestions

(1) The monthly climate values simulated by the hybrid CNN-LSTM model agree closely with the observed values, but some differences remain, and reducing them requires more accurate multi-time-scale climate data. This study considered only historical monthly climate data as model inputs; building an hourly model would therefore require higher-resolution data. Using accurate multi-time-scale climate data could improve climate prediction.

(2) In future work, the prediction accuracy of the CNN-LSTM will also be compared with that of current physics-based dynamical models. The models can be further extended by applying decomposition techniques (WT, TVF-EMD, and CEEMD), bidirectional LSTM (BiLSTM), transfer learning, and bidirectional GRU (BiGRU), and by using the residual components of climate data as model inputs. Climate modeling performance may be further improved by exploring multiple time scales, spatial features, and other factors such as solar radiation, greenhouse temperature, terrain, vegetation, and the El Niño–Southern Oscillation. This strategy can be applied to other prediction tasks to support short-, medium-, and long-term operational planning, and robust versions of these models could be used to forecast climatic elements at multiple time scales. For simulating and predicting different climate extremes, we will integrate multiple data sources, such as remote sensing and ground observations, for spatiotemporal multi-scale climate modeling. We will also integrate physical mechanisms into the machine learning models to improve their interpretability and generalization ability, providing a new technological approach that combines traditional physical models with advanced artificial intelligence algorithms.
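Among the proposed extensions, decomposition techniques split a climate series into smoother and more rapidly varying components that can be modeled separately. As a hedged illustration of the wavelet transform (WT) idea only, the sketch below performs one level of the orthonormal Haar discrete wavelet transform and its inverse in plain Python. The choice of the Haar wavelet, a single decomposition level, and the names `haar_dwt` and `haar_idwt` are assumptions; TVF-EMD and CEEMD are data-adaptive methods that work differently and are not shown.

```python
import math


def haar_dwt(series):
    """One level of the orthonormal Haar discrete wavelet transform.

    Returns (approximation, detail) lists, each half the input length.
    Assumes an even-length series.
    """
    if len(series) % 2:
        raise ValueError("series length must be even")
    s = 1 / math.sqrt(2)
    # pairwise scaled sums capture the smooth part, scaled differences the fluctuations
    approx = [(series[i] + series[i + 1]) * s for i in range(0, len(series), 2)]
    detail = [(series[i] - series[i + 1]) * s for i in range(0, len(series), 2)]
    return approx, detail


def haar_idwt(approx, detail):
    """Invert haar_dwt exactly (up to floating-point rounding)."""
    s = 1 / math.sqrt(2)
    out = []
    for a, d in zip(approx, detail):
        out.extend([(a + d) * s, (a - d) * s])
    return out
```

Because the transform is exactly invertible, forecasts made for the approximation and detail components could in principle be recombined with `haar_idwt`. When used for forecasting, the decomposition should be computed only from data available at the forecast time; otherwise information from future months leaks into the inputs.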

Data availability

Data and materials are available from the corresponding author upon request.

References

Guo, Q., He, Z. & Wang, Z. Change in air quality during 2014–2021 in Jinan City in China and its influencing factors. Toxics 11, 210 (2023).

Guo, Q., He, Z. & Wang, Z. Long-term projection of future climate change over the twenty-first century in the Sahara region in Africa under four Shared Socio-Economic Pathways scenarios. Environ. Sci. Pollut. Res. 30, 22319–22329. https://doi.org/10.1007/s11356-022-23813-z (2023).

Guo, Q. et al. Changes in air quality from the COVID to the post-COVID era in the Beijing-Tianjin-Tangshan region in China. Aerosol Air Qual. Res. 21, 210270. https://doi.org/10.4209/aaqr.210270 (2021).

Zhao, R. et al. Assessing resilience of sustainability to climate change in China’s cities. Sci. Total Environ. 898, 165568. https://doi.org/10.1016/j.scitotenv.2023.165568 (2023).

Zheng, Y. et al. Assessing the impacts of climate variables on long-term air quality trends in Peninsular Malaysia. Sci. Total Environ. 901, 166430. https://doi.org/10.1016/j.scitotenv.2023.166430 (2023).

Zhou, S., Yu, B. & Zhang, Y. Global concurrent climate extremes exacerbated by anthropogenic climate change. Sci. Adv. 9, eabo1638. https://doi.org/10.1126/sciadv.abo1638 (2023).

Zurek, M., Hebinck, A. & Selomane, O. Climate change and the urgency to transform food systems. Science 376, 1416–1421. https://doi.org/10.1126/science.abo2364 (2022).

Klisz, M. et al. Local site conditions reduce interspecific differences in climate sensitivity between native and non-native pines. Agricult. For. Meteorol. 341, 109694. https://doi.org/10.1016/j.agrformet.2023.109694 (2023).

Li, X. et al. Attribution of runoff and hydrological drought changes in an ecologically vulnerable basin in semi-arid regions of China. Hydrol. Process. https://doi.org/10.1002/hyp.15003 (2023).

Xue, B. et al. Divergent hydrological responses to forest expansion in dry and wet basins of China: Implications for future afforestation planning. Water Resour. Res. 58, e2021WR031856. https://doi.org/10.1029/2021WR031856 (2022).

Guo, Q., He, Z. & Wang, Z. The characteristics of air quality changes in Hohhot City in China and their relationship with meteorological and socio-economic factors. Aerosol Air Qual. Res. 24, 230274. https://doi.org/10.4209/aaqr.230274 (2024).

Wang, Y., Hu, K., Huang, G. & Tao, W. Asymmetric impacts of El Niño and La Niña on the Pacific-North American teleconnection pattern: The role of subtropical jet stream. Environ. Res. Lett. 16, 114040. https://doi.org/10.1088/1748-9326/ac31ed (2021).

Abbas, G. et al. Modeling the potential impact of climate change on maize-maize cropping system in semi-arid environment and designing of adaptation options. Agricult. For. Meteorol. 341, 109674. https://doi.org/10.1016/j.agrformet.2023.109674 (2023).

Mangani, R., Gunn, K. M. & Creux, N. M. Projecting the effect of climate change on planting date and cultivar choice for South African dryland maize production. Agricult. For. Meteorol. 341, 109695. https://doi.org/10.1016/j.agrformet.2023.109695 (2023).

Liang, R., Sun, Y., Qiu, S., Wang, B. & Xie, Y. Relative effects of climate, stand environment and tree characteristics on annual tree growth in subtropical Cunninghamia lanceolata forests. Agricult. For. Meteorol. 342, 109711. https://doi.org/10.1016/j.agrformet.2023.109711 (2023).

Kumar, A., Chen, M. & Wang, W. An analysis of prediction skill of monthly mean climate variability. Clim. Dyn. 37, 1119–1131. https://doi.org/10.1007/s00382-010-0901-4 (2011).

Chen, Y. et al. Improving the heavy rainfall forecasting using a weighted deep learning model. Front. Environ. Sci. https://doi.org/10.3389/fenvs.2023.1116672 (2023).

Guo, Q., He, Z. & Wang, Z. Predicting of daily PM2.5 concentration employing wavelet artificial neural networks based on meteorological elements in Shanghai, China. Toxics 11, 51 (2023).

He, Z., Guo, Q., Wang, Z. & Li, X. Prediction of monthly PM2.5 concentration in Liaocheng in China employing artificial neural network. Atmosphere 13, 1221 (2022).

Guo, Q. & He, Z. Prediction of the confirmed cases and deaths of global COVID-19 using artificial intelligence. Environ. Sci. Pollut. Res. 28, 11672–11682. https://doi.org/10.1007/s11356-020-11930-6 (2021).

Guo, Q., He, Z. & Wang, Z. Simulating daily PM2.5 concentrations using wavelet analysis and artificial neural network with remote sensing and surface observation data. Chemosphere 340, 139886. https://doi.org/10.1016/j.chemosphere.2023.139886 (2023).

Guo, Q., He, Z. & Wang, Z. Prediction of hourly PM2.5 and PM10 concentrations in Chongqing City in China based on artificial neural network. Aerosol Air Qual. Res. 23, 220448. https://doi.org/10.4209/aaqr.220448 (2023).

Fang, S. et al. MS-Net: Multi-source spatio-temporal network for traffic flow prediction. IEEE Trans. Intell. Transp. Syst. 23, 7142–7155. https://doi.org/10.1109/TITS.2021.3067024 (2022).

Rajasundrapandiyanleebanon, T., Kumaresan, K., Murugan, S., Subathra, M. S. P. & Sivakumar, M. Solar energy forecasting using machine learning and deep learning techniques. Arch. Comput. Methods Eng. 30, 3059–3079. https://doi.org/10.1007/s11831-023-09893-1 (2023).

Han, Y. et al. Novel economy and carbon emissions prediction model of different countries or regions in the world for energy optimization using improved residual neural network. Sci. Total Environ. 860, 160410. https://doi.org/10.1016/j.scitotenv.2022.160410 (2023).

Wang, H. et al. Scientific discovery in the age of artificial intelligence. Nature 620, 47–60. https://doi.org/10.1038/s41586-023-06221-2 (2023).

Nathvani, R. et al. Beyond here and now: Evaluating pollution estimation across space and time from street view images with deep learning. Sci. Total Environ. https://doi.org/10.1016/j.scitotenv.2023.166168 (2023).

Faraji, M., Nadi, S., Ghaffarpasand, O., Homayoni, S. & Downey, K. An integrated 3D CNN-GRU deep learning method for short-term prediction of PM2.5 concentration in urban environment. Sci. Total Environ. 834, 155324. https://doi.org/10.1016/j.scitotenv.2022.155324 (2022).

Hu, T. et al. Crop yield prediction via explainable AI and interpretable machine learning: Dangers of black box models for evaluating climate change impacts on crop yield. Agricult. For. Meteorol. 336, 109458. https://doi.org/10.1016/j.agrformet.2023.109458 (2023).

Priyatikanto, R., Lu, Y., Dash, J. & Sheffield, J. Improving generalisability and transferability of machine-learning-based maize yield prediction model through domain adaptation. Agricult. For. Meteorol. 341, 109652. https://doi.org/10.1016/j.agrformet.2023.109652 (2023).

von Bloh, M. et al. Machine learning for soybean yield forecasting in Brazil. Agricult. For. Meteorol. 341, 109670. https://doi.org/10.1016/j.agrformet.2023.109670 (2023).

Liu, N. et al. Meshless surface wind speed field reconstruction based on machine learning. Adv. Atmos. Sci. 39, 1721–1733. https://doi.org/10.1007/s00376-022-1343-8 (2022).

Li, Y. et al. Convective storm VIL and lightning nowcasting using satellite and weather radar measurements based on multi-task learning models. Adv. Atmos. Sci. 40, 887–899. https://doi.org/10.1007/s00376-022-2082-6 (2023).

Yang, D. et al. Predictor selection for CNN-based statistical downscaling of monthly precipitation. Adv. Atmos. Sci. 40, 1117–1131. https://doi.org/10.1007/s00376-022-2119-x (2023).

Wang, T. & Huang, P. Superiority of a convolutional neural network model over dynamical models in predicting Central Pacific ENSO. Adv. Atmos. Sci. 40, 1–14. https://doi.org/10.1007/s00376-023-3001-1 (2023).

Zou, H., Wu, S. & Tian, M. Radar quantitative precipitation estimation based on the gated recurrent unit neural network and echo-top data. Adv. Atmos. Sci. 40, 1043–1057. https://doi.org/10.1007/s00376-022-2127-x (2023).

Bi, K. et al. Accurate medium-range global weather forecasting with 3D neural networks. Nature 619, 533–538. https://doi.org/10.1038/s41586-023-06185-3 (2023).

Zhang, Y. et al. Skilful nowcasting of extreme precipitation with NowcastNet. Nature 619, 526–532. https://doi.org/10.1038/s41586-023-06184-4 (2023).

Ham, Y.-G. et al. Anthropogenic fingerprints in daily precipitation revealed by deep learning. Nature https://doi.org/10.1038/s41586-023-06474-x (2023).

Shamekh, S., Lamb, K. D., Huang, Y. & Gentine, P. Implicit learning of convective organization explains precipitation stochasticity. Proc. Natl. Acad. Sci. 120, e2216158120. https://doi.org/10.1073/pnas.2216158120 (2023).

Pinheiro Gomes, E., Progênio, M. F. & da Silva Holanda, P. Modeling with artificial neural networks to estimate daily precipitation in the Brazilian Legal Amazon. Clim. Dyn. https://doi.org/10.1007/s00382-024-07200-7 (2024).

Papantoniou, S. & Kolokotsa, D.-D. Prediction of outdoor air temperature using neural networks: Application in 4 European cities. Energy Build. 114, 72–79. https://doi.org/10.1016/j.enbuild.2015.06.054 (2016).

Roebber, P. Toward an adaptive artificial neural network-based postprocessor. Mon. Weather Rev. https://doi.org/10.1175/MWR-D-21-0089.1 (2021).

Chen, Y. et al. Prediction of ENSO using multivariable deep learning. Atmos. Ocean. Sci. Lett. 16, 100350. https://doi.org/10.1016/j.aosl.2023.100350 (2023).

Baño-Medina, J., Manzanas, R. & Gutiérrez, J. M. Configuration and intercomparison of deep learning neural models for statistical downscaling. Geosci. Model Dev. 13, 2109–2124. https://doi.org/10.5194/gmd-13-2109-2020 (2020).

Zhong, H. et al. Prediction of instantaneous yield of bio-oil in fluidized biomass pyrolysis using long short-term memory network based on computational fluid dynamics data. J. Clean. Prod. 391, 136192. https://doi.org/10.1016/j.jclepro.2023.136192 (2023).

Jiang, N., Yu, X. & Alam, M. A hybrid carbon price prediction model based-combinational estimation strategies of quantile regression and long short-term memory. J. Clean. Prod. https://doi.org/10.1016/j.jclepro.2023.139508 (2023).

Guo, Y. et al. Stabilization temperature prediction in carbon fiber production using empirical mode decomposition and long short-term memory network. J. Clean. Prod. https://doi.org/10.1016/j.jclepro.2023.139345 (2023).

Yang, C.-H., Chen, P.-H., Wu, C.-H., Yang, C.-S. & Chuang, L.-Y. Deep learning-based air pollution analysis on carbon monoxide in Taiwan. Ecol. Inform. https://doi.org/10.1016/j.ecoinf.2024.102477 (2024).

Yang, X. et al. A spatio-temporal graph-guided convolutional LSTM for tropical cyclones precipitation nowcasting. Appl. Soft Comput. 124, 109003. https://doi.org/10.1016/j.asoc.2022.109003 (2022).

Ham, Y.-G., Kim, J.-H. & Luo, J.-J. Deep learning for multi-year ENSO forecasts. Nature 573, 568–572. https://doi.org/10.1038/s41586-019-1559-7 (2019).

Xie, W., Xu, G., Zhang, H. & Dong, C. Developing a deep learning-based storm surge forecasting model. Ocean Model. 182, 102179. https://doi.org/10.1016/j.ocemod.2023.102179 (2023).

Hu, W. et al. Deep learning forecast uncertainty for precipitation over the Western United States. Mon. Weather Rev. 151, 1367–1385. https://doi.org/10.1175/MWR-D-22-0268.1 (2023).

Wang, C. & Li, X. A deep learning model for estimating tropical cyclone wind radius from geostationary satellite infrared imagery. Mon. Weather Rev. 151, 403–417. https://doi.org/10.1175/MWR-D-22-0166.1 (2023).

Ling, F. et al. Multi-task machine learning improves multi-seasonal prediction of the Indian Ocean Dipole. Nat. Commun. 13, 7681. https://doi.org/10.1038/s41467-022-35412-0 (2022).

Sun, W. et al. Artificial intelligence forecasting of marine heatwaves in the South China Sea using a combined U-Net and ConvLSTM system. Remote Sens. 15, 4068 (2023).

Sun, X. et al. PN-HGNN: Precipitation nowcasting network via hypergraph neural networks. IEEE Trans. Geosci. Remote Sens. 62, 1–12. https://doi.org/10.1109/TGRS.2024.3407157 (2024).

Harnist, B., Pulkkinen, S. & Mäkinen, T. DEUCE v1.0: A neural network for probabilistic precipitation nowcasting with aleatoric and epistemic uncertainties. Geosci. Model Dev. 17, 3839–3866. https://doi.org/10.5194/gmd-17-3839-2024 (2024).

Beucler, T. et al. Climate-invariant machine learning. Sci. Adv. 10, eadj7250. https://doi.org/10.1126/sciadv.adj7250 (2024).

Kontolati, K., Goswami, S., Em Karniadakis, G. & Shields, M. D. Learning nonlinear operators in latent spaces for real-time predictions of complex dynamics in physical systems. Nat. Commun. 15, 5101. https://doi.org/10.1038/s41467-024-49411-w (2024).

Chen, H. et al. Visibility forecast in Jiangsu province based on the GCN-GRU model. Sci. Rep. 14, 12599. https://doi.org/10.1038/s41598-024-61572-8 (2024).

Pan, S. et al. Oil well production prediction based on CNN-LSTM model with self-attention mechanism. Energy 284, 128701. https://doi.org/10.1016/j.energy.2023.128701 (2023).

Dehghani, A. et al. Comparative evaluation of LSTM, CNN, and ConvLSTM for hourly short-term streamflow forecasting using deep learning approaches. Ecol. Inform. 75, 102119. https://doi.org/10.1016/j.ecoinf.2023.102119 (2023).

Boulila, W., Ghandorh, H., Khan, M. A., Ahmed, F. & Ahmad, J. A novel CNN-LSTM-based approach to predict urban expansion. Ecol. Inform. 64, 101325. https://doi.org/10.1016/j.ecoinf.2021.101325 (2021).

Lima, F. T. & Souza, V. M. A. A large comparison of normalization methods on time series. Big Data Res. 34, 100407. https://doi.org/10.1016/j.bdr.2023.100407 (2023).

Liu, K. et al. New methods based on a genetic algorithm back propagation (GABP) neural network and general regression neural network (GRNN) for predicting the occurrence of trihalomethanes in tap water. Sci. Total Environ. 870, 161976. https://doi.org/10.1016/j.scitotenv.2023.161976 (2023).

Khaldi, R., El Afia, A., Chiheb, R. & Tabik, S. What is the best RNN-cell structure to forecast each time series behavior?. Expert Syst. Appl. 215, 119140. https://doi.org/10.1016/j.eswa.2022.119140 (2023).

Chandrasekar, A., Zhang, S. & Mhaskar, P. A hybrid Hubspace-RNN based approach for modelling of non-linear batch processes. Chem. Eng. Sci. 281, 119118. https://doi.org/10.1016/j.ces.2023.119118 (2023).

Al Mehedi, M. A. et al. Predicting the performance of green stormwater infrastructure using multivariate long short-term memory (LSTM) neural network. J. Hydrol. 625, 130076. https://doi.org/10.1016/j.jhydrol.2023.130076 (2023).

Ma, J., Ding, Y., Cheng, J. C. P., Jiang, F. & Wan, Z. A temporal-spatial interpolation and extrapolation method based on geographic Long Short-Term Memory neural network for PM2.5. J. Clean. Prod. 237, 117729. https://doi.org/10.1016/j.jclepro.2019.117729 (2019).

Sejuti, Z. A. & Islam, M. S. A hybrid CNN–KNN approach for identification of COVID-19 with 5-fold cross validation. Sensors Int. 4, 100229. https://doi.org/10.1016/j.sintl.2023.100229 (2023).

Guo, Q. et al. Air pollution forecasting using artificial and wavelet neural networks with meteorological conditions. Aerosol Air Qual. Res. 20, 1429–1439. https://doi.org/10.4209/aaqr.2020.03.0097 (2020).

Bilgili, M., Ozbek, A., Yildirim, A. & Simsek, E. Artificial neural network approach for monthly air temperature estimations and maps. J. Atmos. Solar-Terr. Phys. 242, 106000. https://doi.org/10.1016/j.jastp.2022.106000 (2023).

Hrisko, J., Ramamurthy, P., Yu, Y., Yu, P. & Melecio-Vázquez, D. Urban air temperature model using GOES-16 LST and a diurnal regressive neural network algorithm. Remote Sens. Environ. 237, 111495. https://doi.org/10.1016/j.rse.2019.111495 (2020).

Yu, X., Shi, S. & Xu, L. A spatial–temporal graph attention network approach for air temperature forecasting. Appl. Soft Comput. 113, 107888. https://doi.org/10.1016/j.asoc.2021.107888 (2021).

Zhang, X., Xiao, Y., Zhu, G. & Shi, J. A coupled CEEMD-BiLSTM model for regional monthly temperature prediction. Environ. Monitor. Assess. 195, 379. https://doi.org/10.1007/s10661-023-10977-5 (2023).

Zhang, X., Ren, H., Liu, J., Zhang, Y. & Cheng, W. A monthly temperature prediction based on the CEEMDAN–BO–BiLSTM coupled model. Sci. Rep. https://doi.org/10.1038/s41598-024-51524-7 (2024).

Song, C., Chen, X., Wu, P. & Jin, H. Combining time varying filtering based empirical mode decomposition and machine learning to predict precipitation from nonlinear series. J. Hydrol. 603, 126914. https://doi.org/10.1016/j.jhydrol.2021.126914 (2021).

Priestly, S. E., Raimond, K., Cohen, Y., Brema, J. & Hemanth, D. J. Evaluation of a novel hybrid lion swarm optimization—AdaBoostRegressor model for forecasting monthly precipitation. Sustain. Comput. Inform. Syst. 39, 100884. https://doi.org/10.1016/j.suscom.2023.100884 (2023).

Tao, L., He, X., Li, J. & Yang, D. A multiscale long short-term memory model with attention mechanism for improving monthly precipitation prediction. J. Hydrol. 602, 126815. https://doi.org/10.1016/j.jhydrol.2021.126815 (2021).

Rajendra, P., Murthy, K. V. N., Subbarao, A. & Boadh, R. Use of ANN models in the prediction of meteorological data. Model. Earth Syst. Environ. 5, 1051–1058. https://doi.org/10.1007/s40808-019-00590-2 (2019).

Hanoon, M. S. et al. Developing machine learning algorithms for meteorological temperature and humidity forecasting at Terengganu state in Malaysia. Sci. Rep. 11, 18935. https://doi.org/10.1038/s41598-021-96872-w (2021).

Gurlek, C. Artificial neural networks approach for forecasting of monthly relative humidity in Sivas, Turkey. J. Mech. Sci. Technol. 37, 4391–4400. https://doi.org/10.1007/s12206-023-0753-6 (2023).

Shad, M., Sharma, Y. D. & Singh, A. Forecasting of monthly relative humidity in Delhi, India, using SARIMA and ANN models. Model. Earth Syst. Environ. 8, 4843–4851. https://doi.org/10.1007/s40808-022-01385-8 (2022).

Ozbek, A., Ünal, Ş. & Bilgili, M. Daily average relative humidity forecasting with LSTM neural network and ANFIS approaches. Theor. Appl. Climatol. 150, 697–714. https://doi.org/10.1007/s00704-022-04181-7 (2022).

Rahimikhoob, A. Estimating sunshine duration from other climatic data by artificial neural network for ET0 estimation in an arid environment. Theor. Appl. Climatol. 118, 1–8. https://doi.org/10.1007/s00704-013-1047-1 (2014).

Kandirmaz, H. M., Kaba, K. & Avci, M. Estimation of monthly sunshine duration in Turkey using artificial neural networks. Int. J. Photoenergy 2014, 680596. https://doi.org/10.1155/2014/680596 (2014).

Acknowledgements

This research was supported by Shandong Provincial Natural Science Foundation (Grant No. ZR2023MD075), LAC/CMA (Grant No. 2023B02), State Key Laboratory of Loess and Quaternary Geology Foundation (Grant No. SKLLQG2211), Shandong Province Higher Educational Humanities and Social Science Program (Grant No. J18RA196), the National Natural Science Foundation of China (Grant No. 41572150), and the Junior Faculty Support Program for Scientific and Technological Innovations in Shandong Provincial Higher Education Institutions (Grant No. 2021KJ085).

Author information


Contributions

Q.G. provided the data. Q.G. and Z.H. conceived the experiments. Q.G. and Z.H. conducted the experiments. Q.G., Z.H. and Z.W. analyzed the results. All authors reviewed the manuscript.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Guo, Q., He, Z. & Wang, Z. Monthly climate prediction using deep convolutional neural network and long short-term memory. Sci Rep 14, 17748 (2024). https://doi.org/10.1038/s41598-024-68906-6


DOI: https://doi.org/10.1038/s41598-024-68906-6

- Springer Nature Limited