Abstract

Recent research shows that emotional facial expressions impact behavioral responses only when their valence is relevant to the task. Under such conditions, threatening faces delay attentional disengagement, resulting in slower reaction times and increased omission errors compared to happy faces. To investigate the neural underpinnings of this phenomenon, we used functional magnetic resonance imaging to record the brain activity of 23 healthy participants while they completed two versions of the go/no-go task. In the emotion task (ET), participants responded to emotional expressions (fearful or happy faces) and refrained from responding to neutral faces. In the gender task (GT), the same images were displayed, but participants had to respond based on the posers’ gender. Our results confirmed previous behavioral findings and revealed a network of brain regions (including the angular gyrus, the ventral precuneus, the left posterior cingulate cortex, the right anterior superior frontal gyrus, and two face-responsive regions) displaying distinct activation patterns for the same facial emotional expressions in the ET compared to the GT. We propose that this network integrates internal representations of task rules with sensory characteristics of facial expressions to evaluate emotional stimuli and exert top-down control, guiding goal-directed actions according to the context.


Introduction

Items laden with affective significance are crucial in decision-making because they affect cognitive control and behavioral performance1. According to a classical theoretical framework, the motivational model2, emotional stimuli, especially threatening ones, capture selective attention, receive prioritized processing, and elicit behaviors independently of the subject's current goals3,4. However, the empirical evidence is inconsistent with this hypothesis. Recent behavioral evidence indicates that emotional facial and body expressions modulate arm or leg movements only when they are task-relevant5,6,7,8,9. In all these studies, healthy participants performed two versions of a Go/No-go task in counterbalanced order. In one version, the emotion task (ET), emotions are task-relevant: participants respond according to whether the face displays an emotion, i.e., they respond to emotional expressions and refrain from moving for neutral expressions. In the other version, the gender task (GT), emotions are task-irrelevant: the same pictures are shown, but participants respond according to the posers' gender, moving when they see a male face and refraining from moving for female faces, or vice versa. This design makes it possible to compare how the task-relevance of emotional expressions affects action readiness for identical stimuli and movements in two different contexts, without asking participants for explicit perceptual judgments. Results showed that when threatening expressions are task-relevant, i.e., in the ET, they elicit longer reaction times (RTs) and lower accuracy than happy faces. Because facial images were always presented within the participants’ focus of attention5,6,7,8,9, these effects likely arise because threatening expressions delay attentional disengagement, prompting the individual's instinct to monitor the source of potential threat in more depth10.
By contrast, all differences between emotional expressions disappear when they are task-irrelevant, i.e., in the GT. Notably, in all the above-cited studies, the effects could be ascribed only to the stimuli's valence, as other key confounding factors, such as arousal and perceptual complexity, were carefully controlled. Although this evidence is robust, it provides no insight into the neural substrates of the task-relevance phenomenon, because all of these studies were purely behavioral.

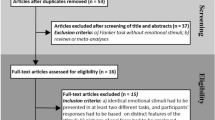

Some attempts to study the task-relevance of emotional facial expressions have used another emotional version of the Go/No-go task while recording brain activity via functional magnetic resonance imaging (fMRI)11,12. In this design, participants had to move in response to one emotional facial expression (e.g., angry) and refrain from moving in response to another (e.g., happy) in one block of trials, and vice versa in another block. The effects of these emotions on behavior and neural activity were then compared across blocks. However, this design required participants to execute distinct responses depending on stimulus valence. For instance, in one block, participants had to move when presented with sad faces and refrain from moving for happy faces; the opposite instructions applied to the next block. Consequently, within a given block, different emotional faces were associated with different motor responses. This makes it difficult to compare the impact of emotional expressions on the same movement, since such a comparison is possible only across blocks, and it risks conflating the modulation of action readiness with the task instructions, which complicates the interpretation of the results. Ishai et al.13 provided more reliable evidence. They gave a working memory task to 14 healthy participants, who first had to memorize a target face (neutral or fearful) and then detect it among distractors by pressing a key. In this way, the target face (e.g., the fearful face) became the behaviorally relevant stimulus, while the other (e.g., the neutral face) served as the distractor. Using event-related fMRI, Ishai et al.13 compared the activation elicited by neutral and fearful faces when they were task-relevant and when they were task-irrelevant.
They found that within the face-responsive regions, (i) task-relevant faces evoked stronger responses than task-irrelevant faces and showed greater adaptation after repetition; (ii) task-relevant fearful faces induced more adaptation than task-relevant neutral ones; and (iii) task-irrelevant faces, regardless of their repetition or valence, did not show neural adaptation. These results align with those of Gur et al.14, who showed 12 healthy participants happy, sad, angry, fearful, disgusted, and neutral facial expressions and, in different blocks, asked them to indicate either whether the displayed face was positive or negative or whether the poser was older or younger than 30. Gur et al.14 found that the activity of the amygdala and hippocampus was modulated by the relevance of the face's emotional content, as these regions were more responsive when participants had to discriminate the valence of facial expressions than when they had to determine the posers’ age. Finally, Everaert et al.15 also found that the task-relevance of emotional expressions affects brain activity. They gave two versions of a modified oddball paradigm to two different groups of participants, showing happy and sad faces of young and old people in both versions. One group (n = 13) was instructed to respond to a specific emotion (e.g., happiness), and the other (n = 14) to a specific age (e.g., old). The authors recorded participants’ event-related potentials while they performed the tasks. In the emotion task, the unattended (oddball) face, i.e., the sad one in the above example, elicited a greater P3a (a marker of attentional processing) regardless of the posers’ age, whereas no such change occurred in the age task.
Thus, they concluded that the valence of emotional stimuli modulates brain activity only when participants attend to the emotional content, not when their attention is directed toward non-emotional stimulus properties. Overall, these findings suggest that emotional expressions do not belong to a privileged stimulus category with prioritized processing. However, those studies had significant limitations: (i) unlike5,6,7,8,9, they did not consider stimulus arousal, even though this dimension has been shown to affect behavioral responses16; and (ii) their samples were small. These limitations cast doubt on the interpretation of the results and call for further studies. Therefore, to investigate the neural underpinnings of the task-relevance of facial emotions, we administered the task developed by Mirabella9 to a relatively large group of healthy participants while recording their fMRI activity. This design permits within-subject comparisons of the activity elicited by emotional stimuli depending on whether their valence is task-relevant, while controlling for arousal. We hypothesized that if participants react differently to the same stimuli depending on the experimental context, there should be a network of brain regions whose activation differs when the valence of the stimuli is task-relevant versus task-irrelevant. These regions could form the basis for understanding the neural underpinnings of the task-relevance of emotional expressions.

Materials and methods

In this section, we report how we determined our sample size, all data exclusions, all inclusion/exclusion criteria, whether inclusion/exclusion criteria were established before data analysis, all manipulations, and all measures in the study. This study was not preregistered.

Participants

Based on previous behavioral studies8,9, we determined the sample size required to achieve a power of 0.90 for a two-factor repeated-measures analysis of variance (ANOVA) using G*Power 3.1 software17. Input parameters were α = 0.05, effect size f = 0.45, and a correlation among repeated measures of r = 0.5. The required sample size was 12 participants. However, since we aimed to gain insight into the neural substrates of task relevance, we enrolled a larger sample of 23 university students (8 males; mean age ± SD 23 ± 3.1 years, range 19–31). Participants were recruited via advertisements posted in university buildings over about nine months and received course credit for participating. All participants were Caucasian and right-handed, as assessed with the Edinburgh Handedness Inventory18, and had normal or corrected-to-normal vision. They were naïve about the purpose of the study, and none had a history of neurological or psychiatric disorders. All volunteers gave written informed consent to participate. The study followed the principles of the Declaration of Helsinki and was approved by the Research Ethics Committee of Fondazione Santa Lucia in Rome (CE/Prog. 687).
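A minimal sketch of this kind of power calculation is given below, using only the Python standard library. It is not the authors' G*Power session: it assumes G*Power's noncentrality convention for within-subject effects with sphericity assumed, λ = f²·N·m/(1 − ρ), with df1 = m − 1 and df2 = (N − 1)(m − 1) for a single group. Because the text does not state how the 2 × 2 design was entered, the number of measurements m is left as a parameter, so the returned N depends on that choice and need not equal 12. All function names are hypothetical.

```python
import math

# Assumed convention (G*Power, within factors, one group, epsilon = 1):
# lambda = f**2 * N * m / (1 - rho); df1 = m - 1; df2 = (N - 1) * (m - 1).


def _betacf(a, b, x, max_iter=500, eps=3e-14, tiny=1e-300):
    """Continued fraction for the incomplete beta function (modified Lentz)."""
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        aa = m * (b - m) * x / ((qam + m2) * (a + m2))  # even step
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        h *= d * c
        aa = -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))  # odd step
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h


def betainc(a, b, x):
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    log_front = (math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                 + a * math.log(x) + b * math.log1p(-x))
    if x < (a + 1.0) / (a + b + 2.0):
        return math.exp(log_front) * _betacf(a, b, x) / a
    return 1.0 - math.exp(log_front) * _betacf(b, a, 1.0 - x) / b


def ncf_cdf(f, df1, df2, lam):
    """CDF of the (non)central F distribution as a Poisson mixture of beta CDFs."""
    if f <= 0.0:
        return 0.0
    x = df1 * f / (df1 * f + df2)
    if lam == 0.0:
        return betainc(df1 / 2.0, df2 / 2.0, x)
    half = lam / 2.0
    j_max = int(half + 10.0 * math.sqrt(half) + 50)
    total = 0.0
    for j in range(j_max + 1):
        weight = math.exp(-half + j * math.log(half) - math.lgamma(j + 1))
        total += weight * betainc(df1 / 2.0 + j, df2 / 2.0, x)
    return total


def f_crit(df1, df2, alpha):
    """Upper-alpha critical value of the central F distribution, by bisection."""
    lo, hi = 0.0, 1.0
    while ncf_cdf(hi, df1, df2, 0.0) < 1.0 - alpha:
        hi *= 2.0
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if ncf_cdf(mid, df1, df2, 0.0) < 1.0 - alpha:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def rm_within_power(n, f, m, rho, alpha=0.05):
    """Power for a within-subject effect with m measurements and n participants."""
    df1, df2 = m - 1, (n - 1) * (m - 1)
    lam = f ** 2 * n * m / (1.0 - rho)
    return 1.0 - ncf_cdf(f_crit(df1, df2, alpha), df1, df2, lam)


def required_n(f, m, rho, alpha=0.05, target=0.90, n_max=500):
    """Smallest sample size whose power reaches the target."""
    for n in range(2, n_max + 1):
        if rm_within_power(n, f, m, rho, alpha) >= target:
            return n
    raise ValueError("target power not reached within n_max participants")
```

With the reported inputs, a call such as `required_n(0.45, m=4, rho=0.5)` treats the 2 × 2 design as four repeated measurements; trying other values of m shows how sensitive the answer is to that unreported choice.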

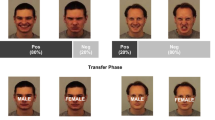

Stimuli

Stimuli were taken from the Pictures of Facial Affect database19 and consisted of 12 grayscale pictures of four actors (two female and two male), each displaying three facial expressions: fear, happiness, and neutral. They were the same stimuli used by Mirabella9. After the experimental session, participants filled in two questionnaires rating (i) the arousal (on an 8-point Likert scale, where 0 meant ‘not arousing’ and 7 meant ‘highest arousing’) and (ii) the valence (on a 15-point scale, where −7 meant ‘very negative,’ 0 meant ‘neutral,’ and +7 meant ‘very positive’) of each facial expression. As previously observed9, fearful and happy expressions had the same arousal but different valence (see Table 1). A one-way repeated-measures ANOVA on arousal ratings with Emotion as a factor (levels: fear, happiness, and neutral) showed a main effect [F(1.88, 41.40) = 76.6, ηp² = 0.63, p < 0.001]. Bonferroni-corrected post hoc tests showed that fearful and happy faces did not differ (p = 0.67), while both were more arousing than neutral faces (both p < 0.001). The same analysis on valence ratings showed a main effect of Emotion [F(1.45, 32.01) = 835.7, ηp² = 0.96, p < 0.001]. Post hoc tests revealed that the three facial expressions differed as expected: participants assigned negative values to fearful faces, positive values to happy faces, and values close to zero to neutral faces (ps < 0.001).
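With n = 23 participants and k = 3 expressions, the uncorrected degrees of freedom are 2 and 44, so the fractional values F(1.88, 41.40) are consistent with a sphericity correction with ε ≈ 0.94 (and F(1.45, 32.01) with ε ≈ 0.73). The text does not name the correction; the sketch below assumes Greenhouse–Geisser, estimating ε from the double-centred covariance matrix of the conditions. The function name and input layout (one row of ratings per participant) are illustrative, and p-values are omitted.

```python
def rm_anova_oneway(data):
    """One-way repeated-measures ANOVA with a Greenhouse-Geisser epsilon.

    data: one row per participant, one value per condition
          (e.g., [fear, happy, neutral] ratings).
    Returns F, uncorrected and epsilon-corrected dfs, epsilon, and partial eta squared.
    """
    n, k = len(data), len(data[0])
    grand = sum(sum(row) for row in data) / (n * k)
    cond_means = [sum(row[j] for row in data) / n for j in range(k)]
    subj_means = [sum(row) / k for row in data]

    # Partition the sums of squares; subject variability is removed from the error term
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_error = ss_total - ss_cond - ss_subj

    df1, df2 = k - 1, (k - 1) * (n - 1)
    f_value = (ss_cond / df1) / (ss_error / df2)
    eta_p2 = ss_cond / (ss_cond + ss_error)

    # Sample covariance matrix of the k conditions
    cov = [[sum((row[i] - cond_means[i]) * (row[j] - cond_means[j]) for row in data) / (n - 1)
            for j in range(k)] for i in range(k)]
    # Double-centre it: subtract row and column means, add back the grand mean
    row_m = [sum(r) / k for r in cov]
    col_m = [sum(cov[i][j] for i in range(k)) / k for j in range(k)]
    all_m = sum(row_m) / k
    dc = [[cov[i][j] - row_m[i] - col_m[j] + all_m for j in range(k)] for i in range(k)]
    # Greenhouse-Geisser epsilon: (tr S)^2 / ((k - 1) * tr(S S))
    trace = sum(dc[i][i] for i in range(k))
    trace_sq = sum(dc[i][j] ** 2 for i in range(k) for j in range(k))
    eps = trace ** 2 / ((k - 1) * trace_sq)

    return {"F": f_value, "df": (df1, df2), "eps": eps,
            "df_gg": (eps * df1, eps * df2), "eta_p2": eta_p2}
```

Epsilon is bounded between 1/(k − 1) and 1, and multiplying both degrees of freedom by it leaves F unchanged while making the test more conservative.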

Experimental paradigm

Participants performed two Go/No-go tasks inside the scanner in a single acquisition session. Stimulus presentation and behavioral responses were controlled by custom software implemented in MATLAB (MathWorks, Inc., Natick, Massachusetts, USA). Stimuli were projected onto an MRI-compatible screen close to the participants’ heads in the scanner bore and viewed through a mirror mounted on the head coil.

Participants responded with their right hand by pressing the keys of a fiber-optic response box positioned on their abdomen. The sequence of events in the two Go/No-go tasks was the same except for the instructions on how to respond (Fig. 1). At the beginning of each trial, a white cross appeared at the center of the screen, together with a red circle at the bottom left. Participants were instructed to fixate the cross and press the left button with their right index finger within 700 ms. Pressing the left key triggered the appearance of a second red circle at the bottom right of the screen. Participants had to hold the left key for a variable period of 400–700 ms (the variable interval made the timing of the go-signal less predictable). Then, the left circle disappeared, and simultaneously one of the face pictures appeared in the middle of the screen (go-signal). If participants did not press the left button within the time limit, or released it too early, the trial was aborted and counted as an error. These error trials accounted for 3% of all trials in the ET and 3.2% in the GT.
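The start-of-trial rules can be expressed as a small validity check. This is a hypothetical sketch, not the authors' MATLAB code: the function and argument names are invented, and drawing the 400–700 ms hold uniformly is an assumption, since the text gives only the range.

```python
import random

START_PRESS_LIMIT_MS = 700            # time allowed to press the left key
HOLD_MIN_MS, HOLD_MAX_MS = 400, 700   # variable hold before the go-signal


def sample_hold_ms(rng):
    """Draw the hold period before the go-signal.

    Assumption: uniform sampling; the text reports only the 400-700 ms range.
    """
    return rng.uniform(HOLD_MIN_MS, HOLD_MAX_MS)


def start_phase_ok(press_latency_ms, release_latency_ms, hold_ms):
    """Return True if the trial may proceed to the face stimulus (go-signal).

    press_latency_ms: time from trial onset to the left-key press (None = no press).
    release_latency_ms: time from that press to a release (None = key still held).
    hold_ms: the hold period sampled for this trial.
    """
    if press_latency_ms is None or press_latency_ms > START_PRESS_LIMIT_MS:
        return False  # no press within the limit: trial aborted, counted as an error
    if release_latency_ms is not None and release_latency_ms < hold_ms:
        return False  # released before the go-signal: trial aborted, counted as an error
    return True


rng = random.Random(1)
hold = sample_hold_ms(rng)
print(start_phase_ok(320, None, hold))  # pressed in time and still holding
```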

(A) Emotion task. Each trial started with the presentation of a white cross, which participants had to fixate, together with a red circle at the bottom left. Participants had to press the key just below the red circle. Then, a red circle appeared on the right side, and after a variable delay, the left circle disappeared and a picture of a facial expression was shown in the middle of the screen. If an emotional expression (happy or fearful) was displayed, participants had to move their index finger from the left to the right button as quickly as possible (Go trials). If the facial expression was neutral, participants had to refrain from moving until the picture disappeared (No-go trials). Visual feedback was provided. All stimuli were projected against a black background of uniform luminance. (B) Gender task. The sequence of events was the same as in (A) until the display of the facial picture. In the male version, participants were instructed to move their index finger from the left to the right button as quickly as possible (Go trials) when a male face was presented and to refrain from moving when a female face was shown (No-go trials), irrespective of the emotional expression. The reverse applied in the female version.

In the ET (Fig. 1A), participants were instructed to move their index finger from the left to the right button as quickly as possible whenever an emotional facial expression (happy or fearful) was displayed (Go trials, 67% of trials in a block). Conversely, they had to refrain from moving whenever a neutral expression appeared, keeping the finger on the left key until the picture disappeared (No-go trials, 33% of trials in a block). In the GT, participants were instructed to move or refrain from moving according to the gender of the actors’ faces. In half of the trials, they had to move when female faces were presented (67% of trials in a block) and refrain from moving for male faces (33% of trials in a block), irrespective of the actors’ expressions; in the other half, the instructions were reversed. Therefore, in the GT, both Go and No-go trials included fearful, happy, and neutral faces in equal proportions.
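The response rules of the two tasks reduce to a single decision function. The sketch below is illustrative only; the function name and string labels are hypothetical. It makes explicit that the same face can be a Go stimulus in one task and a No-go stimulus in the other, and that expression is ignored in the GT.

```python
def should_move(task, expression, poser_gender):
    """Return True for a Go trial, False for a No-go trial.

    task: "emotion", or "female" / "male" for the two gender-task versions
    expression: "fear", "happy" or "neutral"
    poser_gender: "female" or "male"
    """
    if task == "emotion":
        return expression in ("fear", "happy")  # any emotional face means Go
    if task in ("female", "male"):
        return poser_gender == task  # expression is irrelevant in the gender task
    raise ValueError(f"unknown task: {task}")


# A neutral female face: No-go in the ET, Go in the female version of the GT
print(should_move("emotion", "neutral", "female"), should_move("female", "neutral", "female"))
```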

In Go trials, participants had 500 ms to release the left key and 750 ms to press the right key. If these time limits were exceeded, the trial was aborted and counted as an omission error. In No-go trials, the images remained on the screen for 1500 ms. Visual feedback lasting 500 ms signaled successful and unsuccessful trials. The inter-trial interval varied randomly between 1500 and 6000 ms, following a truncated gamma distribution; during this period, participants returned their hands to the starting position.
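A hypothetical sketch of the trial-outcome rules and of a truncated-gamma inter-trial interval follows. Two points are assumptions not settled by the text: the 750 ms movement limit is counted here from the key release, and the gamma shape and scale are invented, because only the 1500–6000 ms bounds are reported. Truncation is implemented by rejection sampling.

```python
import random

RELEASE_LIMIT_MS = 500   # Go: time to release the left key after face onset
MOVE_LIMIT_MS = 750      # Go: time to reach the right key (assumed: counted from release)
NOGO_DURATION_MS = 1500  # No-go: face duration; the finger must stay on the left key


def classify_trial(is_go, release_ms, arrival_ms):
    """Classify a trial; times are measured from face onset (None = did not happen)."""
    if is_go:
        if release_ms is None or release_ms > RELEASE_LIMIT_MS:
            return "omission_error"
        if arrival_ms is None or arrival_ms - release_ms > MOVE_LIMIT_MS:
            return "omission_error"
        return "correct_go"  # the reaction time would be release_ms under this sketch
    if release_ms is not None and release_ms <= NOGO_DURATION_MS:
        return "commission_error"
    return "correct_nogo"


def sample_iti_ms(rng, shape=2.0, scale=1000.0, low=1500.0, high=6000.0):
    """Inter-trial interval from a gamma distribution truncated to [low, high].

    shape and scale are hypothetical; the text reports only the bounds.
    """
    while True:
        x = rng.gammavariate(shape, scale)  # mean = shape * scale
        if low <= x <= high:  # rejection sampling implements the truncation
            return x
```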

Each session consisted of six runs. Each run included 60 trials: 24 trials of the ET, 18 trials of the GT-female version, and 18 trials of the GT-male version. In each trial, a cue appearing at the center of the screen (the word “emotion,” “male,” or “female”) informed participants about the response they had to provide. The order of the trial blocks within each run was randomized. Before entering the scanner, all participants performed one run to familiarize themselves with the tasks.
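The trial counts above can be turned into a run generator under a few assumptions that the text does not state: one block per task version, Go trials making up exactly two thirds of each block, expressions split evenly, and trials shuffled within blocks as well as blocks within the run. Under these assumptions, each run contains 8 fear-Go and 8 happy-Go trials in the ET and the same numbers in the GT (4 per gender version), so the Go conditions compared in the analyses would be balanced across tasks. Names are hypothetical.

```python
import random
from collections import Counter

EXPRESSIONS = ("fear", "happy", "neutral")


def build_run(rng):
    """Assemble one 60-trial run as (task, expression, is_go) tuples.

    Assumptions (not stated in the text): one block per task version, Go trials
    are exactly two thirds of each block, expressions are split evenly, and
    trials are shuffled within blocks as well as blocks within the run.
    """
    blocks = {
        # Emotion task, 24 trials: 16 Go (8 fear, 8 happy) and 8 No-go (neutral)
        "emotion": [("emotion", "fear", True)] * 8
        + [("emotion", "happy", True)] * 8
        + [("emotion", "neutral", False)] * 8,
    }
    for version in ("female", "male"):
        # Gender task, 18 trials: 12 Go faces of the cued gender and 6 No-go faces
        # of the other gender, each split evenly across the three expressions
        block = []
        for expr in EXPRESSIONS:
            block += [(version, expr, True)] * 4
            block += [(version, expr, False)] * 2
        blocks[version] = block
    order = list(blocks)
    rng.shuffle(order)  # block order randomized within the run
    run = []
    for name in order:
        trials = list(blocks[name])
        rng.shuffle(trials)
        run.extend(trials)
    return run


def count_cells(trials):
    """Number of trials per (task, expression, is_go) cell."""
    return Counter(trials)
```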

Image acquisition

All MRI scans were acquired on a 3 T Philips Achieva scanner at the Santa Lucia Foundation in Rome (Italy). Functional T2*-weighted images were collected with a gradient-echo EPI sequence using blood-oxygenation-level-dependent (BOLD) contrast20. For the main experiment, 38 contiguous 2.5 mm slices (0 mm gap) were acquired parallel to the anterior–posterior commissure line in interleaved order, with a 64 × 64 image matrix, an in-plane resolution of 2.5 × 2.5 mm², echo time (TE) = 30 ms, flip angle = 77°, and repetition time (TR) = 2 s. Coverage extended from the superior convexity and included the whole cerebral cortex, excluding only the ventral portion of the cerebellum. Each participant completed six 336 s task scans and two 352 s resting-state scans, whose results are not reported in this study. Structural images were collected using a sagittal magnetization-prepared rapid acquisition gradient echo (MPRAGE) T1-weighted sequence (TR = 12.66 ms, TE = 5.78 ms, flip angle = 8°, 512 × 512 matrix, 0.5 × 0.5 mm² in-plane resolution, 342 contiguous 1 mm thick sagittal slices).
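The acquisition parameters imply a few derived quantities, computed below as simple arithmetic. The volume counts assume that the reported scan durations cover all acquired volumes; the text does not mention discarded dummy scans.

```python
TR_S = 2.0           # repetition time (s)
TASK_RUN_S = 336     # duration of each task scan (s)
N_TASK_RUNS = 6
REST_RUN_S = 352     # duration of each resting-state scan (s), not analyzed here
N_SLICES = 38
SLICE_MM = 2.5
GAP_MM = 0.0
MATRIX = 64
INPLANE_MM = 2.5

volumes_per_task_run = int(TASK_RUN_S / TR_S)            # 168
total_task_volumes = volumes_per_task_run * N_TASK_RUNS  # 1008
volumes_per_rest_run = int(REST_RUN_S / TR_S)            # 176
slab_thickness_mm = N_SLICES * (SLICE_MM + GAP_MM)       # 95.0 mm of coverage
in_plane_fov_mm = MATRIX * INPLANE_MM                    # 160.0 mm field of view
voxel_volume_mm3 = INPLANE_MM * INPLANE_MM * SLICE_MM    # 15.625 mm^3 per voxel
```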

Image preprocessing

MRI data were preprocessed using the SPM12 software package (Wellcome Centre for Human Neuroimaging, University College London). T1-weighted images were segmented into grey matter, white matter, and cerebrospinal fluid using a priori tissue probability maps and normalized to Montreal Neurological Institute (MNI) space21. Functional preprocessing consisted of the following steps. First, because each slice was acquired at a different time, functional images were corrected for these timing differences using the central slice of each volume as the reference. Second, we corrected for head motion by realigning images to the first volume of each session, estimating six motion parameters (three translations and three rotations). Third, functional images were coregistered to the structural, skull-stripped anatomical images. Fourth, images were normalized to MNI space21 (using the coregistered structural images to estimate the spatial warping parameters) and smoothed with a 6 mm full-width at half-maximum isotropic Gaussian kernel.
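The slice-timing step can be illustrated for a single voxel. This is a simplified sketch, not SPM12's implementation: SPM12 shifts each time series with a Fourier-based (sinc) interpolation, whereas linear interpolation is used here for brevity. The exact interleaved order of the scanner is not reported, so the even-then-odd ordering below is an assumption, as is the choice of 0-based slice 19 as the "central" slice of 38.

```python
import math


def interleaved_order(n_slices):
    """Assumed interleaved order: even 0-based indices first, then odd, both ascending."""
    return list(range(0, n_slices, 2)) + list(range(1, n_slices, 2))


def slice_times(n_slices, tr):
    """Acquisition time (s) of each 0-based slice within one TR, evenly spaced."""
    dt = tr / n_slices
    times = [0.0] * n_slices
    for position, s in enumerate(interleaved_order(n_slices)):
        times[s] = position * dt
    return times


def shift_to_reference(series, slice_time, ref_time, tr):
    """Resample one voxel's time series to the reference slice's acquisition time.

    Linear interpolation for brevity (SPM12 uses a Fourier-based phase shift);
    samples beyond the ends are clamped to the first or last value.
    """
    shift = (ref_time - slice_time) / tr  # fraction of a TR
    n = len(series)
    out = []
    for i in range(n):
        pos = i + shift  # position of the reference time on this slice's sample grid
        lo = math.floor(pos)
        frac = pos - lo
        a = series[min(max(lo, 0), n - 1)]
        b = series[min(max(lo + 1, 0), n - 1)]
        out.append((1.0 - frac) * a + frac * b)
    return out


times = slice_times(38, 2.0)
ref_time = times[38 // 2]  # assumption: 0-based slice 19 taken as the central slice
```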

Data analysis

For behavioral performance, we analyzed RTs and the rate of omission errors (i.e., trials in which participants did not move although a go-signal was shown) with two separate two-way repeated-measures ANOVAs [within-subject factors: Emotion (fear and happiness) and Task (ET and GT)]. We did not analyze No-go trials because we focused on how the behavioral parameters of responses in Go trials were modulated by emotional stimuli in the two task contexts. The normality assumption was assessed using the Shapiro–Wilk test. Bonferroni corrections were applied to all multiple comparisons. Effect sizes were quantified by partial eta-squared (ηp²) for the ANOVAs and by Cohen’s d for t-tests. We assessed the strength of evidence for the null hypotheses by computing Bayes factors (BF10), using a Cauchy prior on effect size with scale r = 0.707 (√2/2)22. BF10 values < 0.33 and < 0.1 provide moderate and strong support for the null hypothesis, respectively, whereas BF10 values > 3 and > 10 provide moderate and strong support for the alternative hypothesis.
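A Bayes factor of this kind can be sketched with the JZS (Jeffreys–Zellner–Siow) Bayes factor for a one-sample or paired t-test described by Rouder and colleagues, in which a Cauchy prior of scale r is placed on the standardized effect size. The value 0.707 in the text is read here as that scale r. Whether the authors used this exact formulation is not stated, so the function, its name, and the numerical integration settings are assumptions; the integral over g is evaluated on a log scale with the trapezoidal rule.

```python
import math


def jzs_bf10(t, n, r=0.707, steps=4000, log_g_min=-15.0, log_g_max=15.0):
    """JZS Bayes factor BF10 for a one-sample or paired t-test.

    t: t statistic; n: number of participants (pairs); r: Cauchy prior scale.
    """
    nu = n - 1
    # Likelihood under H0, up to a constant shared with H1
    null_lik = (1.0 + t * t / nu) ** (-(nu + 1) / 2.0)

    def integrand(log_g):
        g = math.exp(log_g)
        a = 1.0 + n * r * r * g
        lik = a ** -0.5 * (1.0 + t * t / (a * nu)) ** (-(nu + 1) / 2.0)
        # Inverse-gamma(1/2, 1/2) prior on g; the trailing factor g is the
        # Jacobian of the substitution g = exp(log_g)
        prior = (2.0 * math.pi) ** -0.5 * g ** -1.5 * math.exp(-1.0 / (2.0 * g))
        return lik * prior * g

    h = (log_g_max - log_g_min) / steps
    total = 0.5 * (integrand(log_g_min) + integrand(log_g_max))
    total += sum(integrand(log_g_min + i * h) for i in range(1, steps))
    return total * h / null_lik
```

For example, `jzs_bf10(-2.65, 23)` evaluates the Bayes factor for a paired t(22) = −2.65; values above 3 would count as moderate evidence for the alternative under the thresholds given above.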

For brain activity, the functional MR time series of each participant were first analyzed individually. The effects of the experimental paradigm were estimated voxel by voxel using the general linear model (GLM) implemented in SPM12. Each experimental trial was modeled by two neural events: the button press at the start of the trial and the onset of the face stimulus. These events were modeled using the canonical hemodynamic response function. At the first level, BOLD responses were modeled by separate regressors depending on task (ET, GT), emotion (happy, fear, neutral), and response (Go, No-go). As a result, the GLM included nine task-related regressors: emotional-happy-go, emotional-fear-go, emotional-neutral-no-go, gender-happy-go, gender-fear-go, gender-neutral-go, gender-happy-no-go, gender-fear-no-go, and gender-neutral-no-go. Omission errors in Go trials and commission errors in No-go trials were modeled as separate regressors. Each condition was estimated relative to the baseline, i.e., the fixation period. At the group level, we performed a random-effects analysis on Go trials, selecting the happy-go and fear-go conditions from each task (i.e., emotional-happy-go, emotional-fear-go, gender-happy-go, and gender-fear-go). Brain regions recruited by emotional stimuli in the two tasks were identified via a two-way repeated-measures ANOVA with Task (ET and GT) and Emotion (fear and happiness) as factors.
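The construction of first-level regressors can be sketched as follows: unit events at each onset are convolved with a double-gamma HRF built from SPM's default canonical parameters (shape 6 for the response, 16 for the undershoot, undershoot ratio 1/6, 32 s length) and sampled once per TR, giving one column per condition. This is an illustrative approximation, not SPM12 itself. The condition names reuse the regressor labels above, while the onset format, the 16-fold oversampling, the sampling bin, and the function names are assumptions; the trial-start and error regressors are omitted for brevity.

```python
import math

# Condition names mirroring the nine task-related regressors listed above
CONDITIONS = [
    "emotional-happy-go", "emotional-fear-go", "emotional-neutral-no-go",
    "gender-happy-go", "gender-fear-go", "gender-neutral-go",
    "gender-happy-no-go", "gender-fear-no-go", "gender-neutral-no-go",
]


def gamma_pdf(t, shape, scale=1.0):
    """Gamma probability density, evaluated on the log scale for stability."""
    if t <= 0.0:
        return 0.0
    return math.exp((shape - 1.0) * math.log(t) - t / scale
                    - math.lgamma(shape) - shape * math.log(scale))


def canonical_hrf(dt, length_s=32.0):
    """Double-gamma HRF with SPM's default shapes, normalized to unit sum."""
    n = int(length_s / dt) + 1
    h = [gamma_pdf(i * dt, 6.0) - gamma_pdf(i * dt, 16.0) / 6.0 for i in range(n)]
    total = sum(h)
    return [v / total for v in h]


def event_regressor(onsets_s, n_scans, tr, oversample=16):
    """Place unit sticks at the onsets, convolve with the HRF, sample once per TR."""
    dt = tr / oversample
    n_fine = n_scans * oversample
    sticks = [0.0] * n_fine
    for onset in onsets_s:
        idx = int(round(onset / dt))
        if 0 <= idx < n_fine:
            sticks[idx] += 1.0
    hrf = canonical_hrf(dt)
    conv = [0.0] * n_fine
    for i, s in enumerate(sticks):
        if s == 0.0:
            continue
        for k, h in enumerate(hrf):
            if i + k >= n_fine:
                break
            conv[i + k] += s * h
    # Assumption: sample at the first microtime bin of each scan
    return [conv[j * oversample] for j in range(n_scans)]


def design_matrix(onsets_by_condition, n_scans, tr):
    """Rows are scans; one column per condition followed by a constant column."""
    columns = [event_regressor(onsets_by_condition.get(c, []), n_scans, tr)
               for c in CONDITIONS]
    return [[col[j] for col in columns] + [1.0] for j in range(n_scans)]
```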

The statistical parametric map of the F-statistic for the Task × Emotion interaction was thresholded at p < 0.05, corrected for multiple comparisons at the cluster level using a topological false discovery rate procedure based on random field theory23, after applying a cluster-forming threshold of p < 0.001 (uncorrected) at the voxel level. To determine the functional profile of each cluster showing a significant interaction, we computed the average activation for each participant and condition by averaging the estimated percent BOLD signal changes from the individual analyses across all voxels in the cluster. The box plots in Fig. 3 illustrate the distribution of these values across participants. These values were entered into a two-way repeated-measures ANOVA at the group level, with Task and Emotion as factors. The results of this analysis are shown in Table 4.
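For a 2 × 2 within-subject design, the Task × Emotion interaction on the cluster-averaged values reduces to a one-sample t-test on each participant's difference of differences, with F(1, n − 1) = t². The sketch below shows the per-cluster averaging step and this equivalence; the data layout and function names are hypothetical, and it is not the authors' analysis code.

```python
import math


def roi_average(voxel_psc):
    """Mean percent signal change across all voxels of a cluster (one subject, one condition)."""
    return sum(voxel_psc) / len(voxel_psc)


def interaction_test(psc):
    """2 x 2 within-subject Task x Emotion interaction on ROI-averaged values.

    psc: one dict per participant with keys
         ("ET", "fear"), ("ET", "happy"), ("GT", "fear"), ("GT", "happy").
    """
    # Each participant's interaction contrast: (fear - happy in ET) - (fear - happy in GT)
    diffs = [(p[("ET", "fear")] - p[("ET", "happy")])
             - (p[("GT", "fear")] - p[("GT", "happy")]) for p in psc]
    n = len(diffs)
    mean = sum(diffs) / n
    sd = math.sqrt(sum((d - mean) ** 2 for d in diffs) / (n - 1))
    t = mean / (sd / math.sqrt(n))
    f_value = t * t  # interaction F(1, n - 1) equals the squared one-sample t
    df_error = n - 1
    eta_p2 = f_value / (f_value + df_error)
    return {"t": t, "F": f_value, "df": (1, df_error), "eta_p2": eta_p2}
```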

Ethics statement

This study, involving human participants, was reviewed and approved by the Research Ethics Committee of Fondazione Santa Lucia in Rome (CE/Prog. 687). Informed consent was obtained from all participants.

Results

Behavioral performance

Behavioral results were in line with those of Mirabella9: the valence of emotional stimuli affected motor responses only when it was task-relevant. First, a two-way ANOVA on mean RTs of Go trials revealed main effects of Emotion and Task and an interaction between these two factors (Table 2, Fig. 2A). The main effect of Emotion arose because participants reacted more slowly to fearful than to happy faces (M ± SD, 361.6 ± 32.8 vs. 350.9 ± 32.1 ms). The main effect of Task arose because participants had shorter RTs in the GT than in the ET (346.1 ± 28.7 vs. 366.4 ± 33.5 ms). The interaction qualified both main effects. During the ET, fearful faces increased RTs relative to happy faces (375.3 ± 30.8 vs. 357.6 ± 34.6 ms), but this effect disappeared in the GT (347.9 ± 29.3 vs. 344.3 ± 28.7 ms). Furthermore, in the ET, RTs for both fearful and happy faces were longer than RTs for the same expressions in the GT. Second, a two-way ANOVA on the mean percentage of omission errors revealed main effects of Emotion and Task and an interaction between these two factors (Table 2, Fig. 2B). The main effect of Emotion arose because participants made more omission errors in response to fearful than to happy faces (15.7 ± 11.5 vs. 9.7 ± 8.9%). The main effect of Task arose because participants made more omission errors in the ET than in the GT (14.4 ± 11.1 vs. 11 ± 10%). Again, the interaction qualified the main effects. In the ET, fearful faces increased the mean rate of omission errors relative to happy faces (19 ± 11.7 vs. 9.8 ± 8.4%), whereas in the GT there was no difference between fearful and happy expressions (12.4 ± 10.5 vs. 9.6 ± 9.6%).
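Because every participant contributes to all four cells, the reported marginal means are averages of the reported cell means, and the interaction can be summarized as the difference between the fear-minus-happy effects in the two tasks. The short check below uses only the cell means reported above (standard deviations cannot be recovered from them); the function name is hypothetical.

```python
# Reported Go-trial cell means: (task, emotion) -> value
RT_MS = {("ET", "fear"): 375.3, ("ET", "happy"): 357.6,
         ("GT", "fear"): 347.9, ("GT", "happy"): 344.3}
OMISSION_PCT = {("ET", "fear"): 19.0, ("ET", "happy"): 9.8,
                ("GT", "fear"): 12.4, ("GT", "happy"): 9.6}


def summarize(cells):
    """Marginal means, fear-minus-happy simple effects, and the interaction contrast."""
    emotion = {e: (cells[("ET", e)] + cells[("GT", e)]) / 2 for e in ("fear", "happy")}
    task = {t: (cells[(t, "fear")] + cells[(t, "happy")]) / 2 for t in ("ET", "GT")}
    simple = {t: cells[(t, "fear")] - cells[(t, "happy")] for t in ("ET", "GT")}
    return {"emotion": emotion, "task": task, "simple": simple,
            "interaction": simple["ET"] - simple["GT"]}


rt = summarize(RT_MS)             # fear - happy: about 17.7 ms (ET) vs. 3.6 ms (GT)
errors = summarize(OMISSION_PCT)  # fear - happy: about 9.2 (ET) vs. 2.8 (GT) points
```

The marginal means agree with the values reported in the text to within rounding, and the interaction contrasts are about 14.1 ms for RTs and 6.4 percentage points for omission errors.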

(A) Effect of emotional facial expressions on reaction times (RTs) and (B) on the rate of omission errors in the emotion task (left) and the gender task (right). Participants were slower and made more omission errors when the Go-signal was a fearful rather than a happy face, but only in the emotion task.

Neural substrates of the emotional faces’ task-relevance phenomenon

The main aim of the present work was to assess how the task-relevance of emotional expressions affects brain activation in two different task contexts. To this end, we ran a two-way repeated-measures ANOVA on percent BOLD signal changes, analogous to the one used for the behavioral analysis, with Task (ET vs. GT) and Emotion (happy vs. fear) as factors. Both the behavioral and the fMRI analyses included only Go trials, so the comparison is balanced with respect to the presence of motor responses.

The voxel-wise statistical map of the Task × Emotion interaction revealed a bilateral set of regions responding differently to happy and fearful faces depending on whether emotion was task-relevant. The activation profiles of these regions are shown in Fig. 3, and their anatomical and statistical details are provided in Tables 3 and 4. The largest cluster was in the bilateral precuneus/posterior cingulate cortex, extending posteriorly into the parieto-occipital fissure. Another bilateral region showing a significant interaction was in the angular gyrus. Smaller unilateral regions were found in the right inferior occipital gyrus, the left fusiform gyrus, and the anterior part of the right superior frontal gyrus.

Lateral (top) and medial (bottom) surface views of the cerebral hemispheres, with superimposed regions showing a significant Task × Emotion interaction. Box plots show the average percent blood-oxygenation-level-dependent signal change for fearful and happy faces in the emotion and gender tasks for each region. In all box plots, the box boundary closest to zero indicates the first quartile, the thick black line within the box marks the median, and the box boundary farthest from zero indicates the third quartile. Whiskers extend up to 1.5 times the interquartile range below the first quartile and above the third quartile. Filled circles represent outliers. All statistics are reported in Table 4.

In three brain regions, the left and right ventral precuneus (vPCu), the left posterior cingulate cortex (l-Pos-CC), and the right anterior superior frontal gyrus (r-ant-SFG), neural activity was significantly modulated by emotional faces in both tasks but in opposite directions: fearful faces elicited more activity than happy faces in the ET, whereas the opposite was true in the GT (Fig. 3). In three other areas, the left and right angular gyri (AG) and the left fusiform gyrus (l-FG), activity differed significantly between happy and fearful faces during the ET but not during the GT. Finally, in the right inferior occipital gyrus (r-IOG), a significant difference between the two emotional expressions was found only in the GT. However, the difference approached significance in the ET as well [t(22) = −2.65, p = 0.059, d = −0.53], and the Bayes factor (BF10 = 3.6) provided moderate support for the alternative hypothesis. Overall, the activation pattern of this network across the two emotional expressions always differed between the ET and the GT, suggesting that the same emotional facial expressions were processed differently in the two tasks.

Discussion

In this study, we investigated the neural basis of the task relevance of facial emotional expressions to unveil the neurophysiological mechanisms underlying the modulation of behavioral reactions to such affective stimuli. As in our previous studies, we found that behavioral responses to emotional stimuli are modulated only when these stimuli are task-relevant5,6,7,8,9,24,25. Consistently, we observed distinct activation patterns within a network of brain regions in response to the same emotional faces in the ET and the GT. The areas we identified have been implicated in a wide variety of tasks and functions, and several belong to the default mode network, i.e., the AG, vPCu, l-Pos-CC, and r-ant-SFG26,27. Although we cannot exclude that emotional stimuli rapidly and automatically trigger changes in brain activity28 that facilitate their detection, such changes do not necessarily lead to a behavioral reaction. Such a reaction occurs only when the emotional stimulus is relevant to a person’s goals. In daily life, we are exposed to countless emotional stimuli, many of which have no direct relevance to our goals. If we responded automatically to every one of them, we would struggle to implement adaptive behavioral strategies. Instead, we react selectively to emotionally salient stimuli, i.e., those signaling tangible risks or rewards in the current context. Moreover, the processing of emotional stimuli is modulated by cognitive control through a process known as emotion regulation (ER), which allows up- and down-regulation of positive and negative emotions so that emotional responses can be adjusted to the demands of the current context29,30.

Below, we outline the possible function of each identified region in encoding the task relevance of emotional stimuli in the two Go/No-go tasks. We then offer a speculative account of how the network might operate.

The role of face-responsive regions

In line with Ishai et al.13, we found that the task relevance of emotional stimuli modulated extrastriate face-responsive regions, namely the r-IOG and the l-FG. In the l-FG, happy faces triggered more activity than fearful faces in the ET, whereas in the GT activity levels were similar for both expressions. In contrast, in the r-IOG, fearful faces elicited greater activity than happy faces in the GT, whereas no significant difference was observed in the ET. Both the IOG and the FG are core components of the face-processing network31, and several studies have shown that their activation is not purely stimulus-driven but depends heavily on task demands32,33,34. Notably, Wiese et al.32 compared IOG and FG activation in participants performing either a gender or an age categorization task on the same set of unfamiliar faces and found enhanced activation in the r-FG and l-IOG during the former task. They interpreted the stronger activation during gender categorization as reflecting more effortful and less automatic processing than age categorization. In line with this research, we propose that the different response patterns observed in the r-IOG and l-FG may indicate distinct sensory processing when the goal is to identify an individual’s gender rather than their emotional expression. Consistent with this hypothesis, several studies indicate that the FG is involved in the specific representation of facial emotional displays35,36.

Activation within the AG

Previous research found increased activation of the AG (and PCu) when participants were asked to evaluate their internal emotional state after viewing an image, compared with trials in which they were asked to assess non-affective perceptual features of the same image37. Although our design is different, we also found that the AG (and the PCu, see below) were involved. However, we did not observe a general increase in the fMRI signal in the ET compared with the GT. Instead, the activity triggered by the two facial emotions differed only in the ET, with the BOLD response to fearful faces being higher than that to happy faces. Recent research suggests that the AG may serve a domain-general function, i.e., facilitating the real-time storage of multisensory, spatiotemporally extended representations, thereby supporting the processing of episodic and semantic memories38. The precise role of the AG in episodic memory remains unclear. However, it has been suggested that the AG may allocate attention to relevant aspects of internally generated mnemonic representations or dynamically retrieve memory information in a format accessible to the decision-making processes that guide behavioral responses38,39,40. We hypothesize that, in the ET, the AG might act as a buffer that holds the instructions for directing attention and responding to emotional expressions. Notably, Regenbogen et al.41 showed that input from the FG to the AG is enhanced when emotional faces are shown to healthy people, suggesting that this pathway could represent a privileged route for processing such affective stimuli.

The role of the v-PCu and the l-Pos-CC

It has been suggested that the PCu plays a crucial role in goal-directed tasks by integrating external information with internal representations, thereby retrieving context-dependent memory traces and driving attention to the most relevant features of stimuli42,43. In other words, the PCu would allow stored representations to be accessed for the online processing of the external environment. Notably, the PCu has two main functional subdivisions. The dorsal portion is more involved in cognitive control and the processing of external information, whereas the v-PCu is more engaged in internal processing, e.g., in elaborating episodic memories or the subjective values of stimuli43. The dorsal portion of the Pos-CC lies close to the v-PCu, and some authors consider these two regions to be a single functional unit44. In these regions, the activity for happy and fearful expressions differed significantly in both tasks. Therefore, we hypothesize that the v-PCu and the l-Pos-CC support the extraction of salient facial features based on the instructions given to participants in each task.

The role of the r-ant-SFG

This area is involved in a well-recognized ER strategy, namely reappraisal45. Reappraisal is the process of intentionally changing the emotional impact of valenced stimuli to make reactions appropriate to a given situation46. This strategy is highly effective because it acts early in emotional processing29. Patients with lesions of the r-ant-SFG show impaired reappraisal of emotions45, and this region is also abnormally activated in people with fearless-dominance psychopathic personality traits47. In these individuals, enhanced activity of this region was associated with heightened distractibility by positively valenced words during an emotional Stroop task. The available evidence indicates that the r-ant-SFG may perform computations associated with assessing emotional stimuli within specific contexts. Therefore, in our scenario, the r-ant-SFG could appraise the fearful and happy faces differently in the ET and the GT, assigning them the appropriate significance based on the task instructions.

Network functioning

Here, we propose a possible, albeit speculative, account of how the identified network might operate. Face-responsive regions would process the sensory features of emotional faces and send this information in parallel to the v-PCu/l-Pos-CC and the r-ant-SFG. The v-PCu/l-Pos-CC would integrate sensory signals with the memory of task rules, directing attention to the facial features relevant for performing the task accurately. The AG would perform similar computations, but only during the ET, which requires more processing because it is more difficult than the GT. The activity of all these regions would be modulated by the r-ant-SFG based on its evaluation of the task relevance of the emotional content of facial images. Future functional and effective connectivity studies are required to elucidate the directional relationships and causal influences among these brain areas and thus validate the proposed framework.

Limitations

The main limitation of this study is the relatively small sample size, which may have limited our ability to detect the involvement of smaller brain regions, such as the amygdala and hippocampus. Previous research using a region-of-interest approach has implicated these structures in the task relevance of emotional faces14. Nevertheless, unlike Gur et al.14, we adopted a whole-brain approach, which allowed us to detect a broader cortical network without relying on a priori assumptions.

Conclusions

For the first time, we have provided evidence of the neurophysiological mechanisms by which the valence of emotional faces influences motor control. We identified a network of brain areas that respond differently to the valence of identical emotional facial expressions depending on their task relevance, without requiring explicit perceptual judgments from participants. Unlike previous studies14,15, we did not ask participants to categorize emotions or other features of the images; instead, we implicitly directed them to use visual information for action. This is significant because visual signals used to guide motor actions are known to be processed differently from those leading to perception48. Thus, our study is the first to demonstrate the neural basis of how valence affects behavioral reactions in different contexts.

Data availability

The datasets presented in this study can be found in online repositories. The name of the repository and the accession number can be found at: https://osf.io/xd4vs/.

References

Pessoa, L. How do emotion and motivation direct executive control? Trends Cogn. Sci. 13, 160–166. https://doi.org/10.1016/j.tics.2009.01.006 (2009).

Bradley, M. M., Codispoti, M., Cuthbert, B. N. & Lang, P. J. Emotion and motivation I: Defensive and appetitive reactions in picture processing. Emotion 1, 276–298 (2001).

Lang, P. J. & Bradley, M. M. Emotion and the motivational brain. Biol. Psychol. 84, 437–450. https://doi.org/10.1016/j.biopsycho.2009.10.007 (2010).

Vuilleumier, P. How brains beware: Neural mechanisms of emotional attention. Trends Cogn. Sci. 9, 585–594. https://doi.org/10.1016/j.tics.2005.10.011 (2005).

Montalti, M. & Mirabella, G. Investigating the impact of surgical masks on behavioral reactions to facial emotions in the COVID-19 era. Front. Psychol. 15, 1359075. https://doi.org/10.3389/fpsyg.2024.1359075 (2024).

Montalti, M. & Mirabella, G. Unveiling the influence of task-relevance of emotional faces on behavioral reactions in a multi-face context using a novel flanker-Go/No-go task. Sci. Rep. 13, 20183. https://doi.org/10.1038/s41598-023-47385-1 (2023).

Mirabella, G., Grassi, M., Mezzarobba, S. & Bernardis, P. Angry and happy expressions affect forward gait initiation only when task relevant. Emotion 23, 387–399. https://doi.org/10.1037/emo0001112 (2023).

Mancini, C., Falciati, L., Maioli, C. & Mirabella, G. Threatening facial expressions impact goal-directed actions only if task-relevant. Brain Sci. 10, 794. https://doi.org/10.3390/brainsci10110794 (2020).

Mirabella, G. The weight of emotions in decision-making: How fearful and happy facial stimuli modulate action readiness of goal-directed actions. Front. Psychol. 9, 1334. https://doi.org/10.3389/fpsyg.2018.01334 (2018).

Belopolsky, A. V., Devue, C. & Theeuwes, J. Angry faces hold the eyes. Vis. Cogn. 19, 27–36. https://doi.org/10.1080/13506285.2010.536186 (2011).

Tottenham, N., Hare, T. A. & Casey, B. J. Behavioral assessment of emotion discrimination, emotion regulation, and cognitive control in childhood, adolescence, and adulthood. Front. Psychol. 2, 39. https://doi.org/10.3389/fpsyg.2011.00039 (2011).

Hare, T. A., Tottenham, N., Davidson, M. C., Glover, G. H. & Casey, B. J. Contributions of amygdala and striatal activity in emotion regulation. Biol. Psychiatr. 57, 624–632. https://doi.org/10.1016/j.biopsych.2004.12.038 (2005).

Ishai, A., Pessoa, L., Bikle, P. C. & Ungerleider, L. G. Repetition suppression of faces is modulated by emotion. Proc. Natl. Acad. Sci. USA 101, 9827–9832. https://doi.org/10.1073/pnas.0403559101 (2004).

Gur, R. C. et al. Brain activation during facial emotion processing. Neuroimage 16, 651–662. https://doi.org/10.1006/nimg.2002.1097 (2002).

Everaert, T., Spruyt, A., Rossi, V., Pourtois, G. & De Houwer, J. Feature-specific attention allocation overrules the orienting response to emotional stimuli. Soc. Cogn. Affect. Neurosci. 9, 1351–1359. https://doi.org/10.1093/scan/nst121 (2014).

Lundqvist, D., Juth, P. & Öhman, A. Using facial emotional stimuli in visual search experiments: The arousal factor explains contradictory results. Cogn. Emot. 28, 1012–1029. https://doi.org/10.1080/02699931.2013.867479 (2014).

Faul, F., Erdfelder, E., Buchner, A. & Lang, A. G. Statistical power analyses using G*Power 3.1: Tests for correlation and regression analyses. Behav. Res. Methods 41, 1149–1160. https://doi.org/10.3758/BRM.41.4.1149 (2009).

Oldfield, R. C. The assessment and analysis of handedness: The Edinburgh inventory. Neuropsychologia 9, 97–113 (1971).

Ekman, P. & Friesen, W. V. Pictures of facial affect (Consulting Psychologists Press, 1976).

Kwong, K. K. et al. Dynamic magnetic resonance imaging of human brain activity during primary sensory stimulation. Proc. Natl. Acad. Sci. USA 89, 5675–5679. https://doi.org/10.1073/pnas.89.12.5675 (1992).

Mazziotta, J. C., Toga, A. W., Evans, A., Fox, P. & Lancaster, J. A probabilistic atlas of the human brain: Theory and rationale for its development. The International Consortium for Brain Mapping (ICBM). Neuroimage 2, 89–101. https://doi.org/10.1006/nimg.1995.1012 (1995).

Morey, R. D. & Rouder, J. N. BayesFactor: Computation of Bayes factors for common designs. R package version 0.9.12-4.2. https://CRAN.R-project.org/package=BayesFactor (2018).

Chumbley, J. R. & Friston, K. J. False discovery rate revisited: FDR and topological inference using Gaussian random fields. Neuroimage 44, 62–70. https://doi.org/10.1016/j.neuroimage.2008.05.021 (2009).

Mancini, C., Falciati, L., Maioli, C. & Mirabella, G. Happy facial expressions impair inhibitory control with respect to fearful facial expressions but only when task-relevant. Emotion 22, 142–152. https://doi.org/10.1037/emo0001058 (2022).

Calbi, M. et al. Emotional body postures affect inhibitory control only when task-relevant. Front. Psychol. 13, 1035328. https://doi.org/10.3389/fpsyg.2022.1035328 (2022).

Gordon, E. M. et al. Default-mode network streams for coupling to language and control systems. Proc. Natl. Acad. Sci. USA 117, 17308–17319. https://doi.org/10.1073/pnas.2005238117 (2020).

Uddin, L. Q., Kelly, A. M., Biswal, B. B., Castellanos, F. X. & Milham, M. P. Functional connectivity of default mode network components: Correlation, anticorrelation, and causality. Hum. Brain Mapp. 30, 625–637. https://doi.org/10.1002/hbm.20531 (2009).

Carretié, L., Yadav, R. K. & Méndez-Bértolo, C. The missing link in early emotional processing. Emot. Rev. 13, 225–244. https://doi.org/10.1177/17540739211022821 (2021).

Gross, J. J. Emotion regulation: Affective, cognitive, and social consequences. Psychophysiology 39, 281–291. https://doi.org/10.1017/s0048577201393198 (2002).

McRae, K. & Gross, J. J. Emotion regulation. Emotion 20, 1–9. https://doi.org/10.1037/emo0000703 (2020).

Kanwisher, N. & Yovel, G. The fusiform face area: a cortical region specialized for the perception of faces. Philos. Trans. R. Soc. Lond. B Biol. Sci. 361, 2109–2128. https://doi.org/10.1098/rstb.2006.1934 (2006).

Wiese, H., Kloth, N., Güllmar, D., Reichenbach, J. R. & Schweinberger, S. R. Perceiving age and gender in unfamiliar faces: An fMRI study on face categorization. Brain Cogn. 78, 163–168. https://doi.org/10.1016/j.bandc.2011.10.012 (2012).

Wiese, H., Schweinberger, S. R. & Neumann, M. F. Perceiving age and gender in unfamiliar faces: Brain potential evidence for implicit and explicit person categorization. Psychophysiology 45, 957–969. https://doi.org/10.1111/j.1469-8986.2008.00707.x (2008).

Cohen Kadosh, K., Henson, R. N., Cohen Kadosh, R., Johnson, M. H. & Dick, F. Task-dependent activation of face-sensitive cortex: an fMRI adaptation study. J. Cogn. Neurosci. 22, 903–917. https://doi.org/10.1162/jocn.2009.21224 (2010).

Ganel, T., Valyear, K. F., Goshen-Gottstein, Y. & Goodale, M. A. The involvement of the “fusiform face area” in processing facial expression. Neuropsychologia 43, 1645–1654. https://doi.org/10.1016/j.neuropsychologia.2005.01.012 (2005).

Vuilleumier, P., Armony, J. L., Driver, J. & Dolan, R. J. Distinct spatial frequency sensitivities for processing faces and emotional expressions. Nat. Neurosci. 6, 624–631. https://doi.org/10.1038/nn1057 (2003).

Satpute, A. B., Shu, J., Weber, J., Roy, M. & Ochsner, K. N. The functional neural architecture of self-reports of affective experience. Biol. Psychiatr. 73, 631–638. https://doi.org/10.1016/j.biopsych.2012.10.001 (2013).

Humphreys, G. F., Lambon Ralph, M. A. & Simons, J. S. A unifying account of angular gyrus contributions to episodic and semantic cognition. Trends Neurosci. 44, 452–463. https://doi.org/10.1016/j.tins.2021.01.006 (2021).

Wagner, A. D., Shannon, B. J., Kahn, I. & Buckner, R. L. Parietal lobe contributions to episodic memory retrieval. Trends Cogn. Sci. 9, 445–453. https://doi.org/10.1016/j.tics.2005.07.001 (2005).

Wheeler, M. E. & Buckner, R. L. Functional dissociation among components of remembering: Control, perceived oldness, and content. J. Neurosci. 23, 3869–3880. https://doi.org/10.1523/jneurosci.23-09-03869.2003 (2003).

Regenbogen, C., Habel, U. & Kellermann, T. Connecting multimodality in human communication. Front. Hum. Neurosci. 7, 754. https://doi.org/10.3389/fnhum.2013.00754 (2013).

Isenburg, K., Morin, T. M., Rosen, M. L., Somers, D. C. & Stern, C. E. Functional network reconfiguration supporting memory-guided attention. Cereb. Cortex https://doi.org/10.1093/cercor/bhad073 (2023).

Lyu, D., Pappas, I., Menon, D. K. & Stamatakis, E. A. A precuneal causal loop mediates external and internal information integration in the human brain. J. Neurosci. 41, 9944–9956. https://doi.org/10.1523/jneurosci.0647-21.2021 (2021).

Cavanna, A. E. & Trimble, M. R. The precuneus: a review of its functional anatomy and behavioural correlates. Brain 129, 564–583. https://doi.org/10.1093/brain/awl004 (2006).

Falquez, R. et al. Detaching from the negative by reappraisal: The role of right superior frontal gyrus (BA9/32). Front. Behav. Neurosci. 8, 165. https://doi.org/10.3389/fnbeh.2014.00165 (2014).

Uusberg, A., Ford, B., Uusberg, H. & Gross, J. J. Reappraising reappraisal: An expanded view. Cogn. Emot. https://doi.org/10.1080/02699931.2023.2208340 (2023).

Sadeh, N. et al. Emotion disrupts neural activity during selective attention in psychopathy. Soc. Cogn. Affect. Neurosci. 8, 235–246. https://doi.org/10.1093/scan/nsr092 (2013).

Goodale, M. A. How (and why) the visual control of action differs from visual perception. Proc. Biol. Sci. 281, 20140337. https://doi.org/10.1098/rspb.2014.0337 (2014).

Acknowledgements

The authors thank Jill Waring for revising the text and providing useful comments.

Funding

This research was supported by (i) the Department of Clinical and Experimental Sciences of the University of Brescia under the Project “Departments of Excellence 2023–2027” (IN2DEPT Innovative and Integrative Department Platforms), and (ii) PRIN PNRR P2022KAZ45, both awarded to G.M. by the Italian Ministry of University and Research (MIUR).

Author information

Contributions

GM conceived the presented idea; GG planned the experiment; MT and GS carried out the experiment; GM analyzed behavioral data; GG and MT analyzed fMRI data; GM wrote the first draft of the manuscript; and GG, MT, and GS provided critical feedback on the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Mirabella, G., Tullo, M.G., Sberna, G. et al. Context matters: task relevance shapes neural responses to emotional facial expressions. Sci Rep 14, 17859 (2024). https://doi.org/10.1038/s41598-024-68803-y

DOI: https://doi.org/10.1038/s41598-024-68803-y

- Springer Nature Limited