Abstract

Breast cancer (BC) significantly contributes to cancer-related mortality in women, underscoring the criticality of early detection for optimal patient outcomes. Mammography is a key tool for identifying and diagnosing breast abnormalities; however, accurately distinguishing malignant mass lesions remains challenging. To address this issue, we propose a novel deep learning approach for BC screening utilizing mammography images. Our proposed model comprises three distinct stages: data collection from established benchmark sources, image segmentation employing an Atrous Convolution-based Attentive and Adaptive Trans-Res-UNet (ACA-ATRUNet) architecture, and BC identification via an Atrous Convolution-based Attentive and Adaptive Multi-scale DenseNet (ACA-AMDN) model. The hyperparameters within the ACA-ATRUNet and ACA-AMDN models are optimized using the Modified Mussel Length-based Eurasian Oystercatcher Optimization (MML-EOO) algorithm. The performance is evaluated using a variety of metrics, and a comparative analysis against conventional methods is presented. Our experimental results reveal that the proposed BC detection framework attains superior precision rates in early disease detection, demonstrating its potential to enhance mammography-based screening methodologies.

Introduction

Breast cancer (BC) is the most prevalent malignancy in women and the second leading cause of cancer-related mortality among women1. Roughly one in every 36 female deaths, or around 3%, is attributable to BC. Early BC identification is therefore crucial for improving patient survival2. Because of the high lethality and cost of cancer-related treatment, researchers continue to introduce increasingly accurate BC diagnostic models into practice3,4. Radiologists use mammography as an efficient imaging method to screen for and detect BC. Mammography is the primary clinical test for BC and usually detects 80–90% of BC cases in women with no symptoms5. It also helps identify early signs of breast cancer, such as breast lumps and calcifications, which is crucial for timely diagnosis6.

Early detection of BC through mammography can greatly reduce the cost of treatment. Although mammography is advantageous, screening for BC with it is time-consuming and requires expert knowledge for accurate detection. The decisions made by different radiologists also vary. Computer Assisted Diagnosis (CAD) software was created to help radiologists improve prediction accuracy when screening mammograms7. However, CAD software carries a substantial risk of producing false positive and false negative outcomes8. It is therefore crucial to improve doctors' detection effectiveness by combining CAD systems with deep learning approaches. Because of their diverse size, shape, and position, BC cells are difficult to detect and localize automatically in mammography images9. Several methods have been developed for segmenting mammography images, but because each approach has limitations, segmentation remains a challenging task10. Recognizing signs of BC in mammography images is also a significant challenge. Feature extraction is an essential stage in the analysis of mammography images. Traditional approaches use handcrafted features to describe the image content11, whereas neural networks were developed as a replacement that learns the best features automatically. Incorrect interpretation of these images can result in a dangerously inaccurate diagnosis12, for example a false negative in which an early-stage BC is mistaken for a normal case. One traditional method for reducing such errors is feature selection (FS), which eliminates duplicate features and chooses a group of distinctive features. Without FS, duplicate features can prevent distinctive features from receiving the weight they deserve in the classification process13.

To aid in decision-making, experts have used a variety of machine learning14 approaches in medical image interpretation over the last few years. However, performance suffers in both efficacy and precision because traditional machine learning pipelines depend on complicated separate stages such as preprocessing, segmentation, and feature extraction15,16. Newly developed deep learning techniques address these problems and can represent features well enough to perform object localization and image classification17. Medical professionals can use their expertise to connect dataset features to facts, which is difficult for machine learning techniques to accomplish. In contrast to conventional techniques that rely on manual methods, deep learning eliminates this problem by making processing and feature engineering part of the learning itself18. The CNN is the most widely used deep learning method for image processing, and its design can be adapted specifically to the 2D structure of the input image. However, a CNN-based approach achieved a precision of only 88%, which must be raised further to surpass state-of-the-art methods19. Hence, this paper develops and implements a novel deep learning approach for early BC screening and detection.

The main contributions of this work are listed below.

- Proposed a two-step BC detection method that improves efficiency and precision in mammogram segmentation and diagnosis.

- Developed the MML-EOO module to optimize feature extraction in the Trans-Res-UNet and Multi-scale DenseNet, enhancing segmentation.

- Utilized heuristic-assisted Trans-Res-UNet and Multi-scale DenseNet for intelligent BC detection.

- Implemented the Modified Mussel Length-based Eurasian Oystercatcher Optimization algorithm for fine-tuning the deep learning models.

- Used the proposed MML-EOO module to address the limitation of feature region size selection.

The remaining sections of this paper are organized as follows. Section "Motivation" presents the literature review of existing works. Section "Methods" develops the intelligent BC detection model with heuristic-assisted Trans-Res-UNet and Multi-scale DenseNet using mammogram images, details the Atrous Convolution-based Attentive and Adaptive BC segmentation model, and presents the architecture of the Atrous Convolution-based Attentive and Adaptive BC detection model. Section "Results and discussion" gives the experimental outputs and discusses the generated results. Section "Conclusion" summarizes the developed deep learning-based BC detection framework.

Motivation

Literature review

Das et al. (2021) proposed a stacked ensemble model for breast cancer (BC) classification that combined breast histopathology images and gene expression data20. The model incorporated the Convex Hull Algorithm and t-Distributed Stochastic Neighbor embedding to transform the 1D gene expression data into images. The dataset and its decomposed forms were utilized to enhance performance. Three convolutional neural networks (CNNs) served as the foundational classifiers in the first stage, with the data decomposed using Variational Mode Decomposition and Empirical Wavelet Transform. The results of the first stage were used to train the second-stage classifier. The model employed gene expression data from Mendeley to create 2D datasets. Training and validation were conducted using synthetic and photographic datasets of breast histopathology. The proposed method demonstrated improved performance, highlighting its effectiveness in BC classification.

In 2021, Saber et al.21 proposed a Transfer Learning (TL) method using ResNet50, Inception V3, VGG-16, ResNet, and VGG-19 networks for feature extraction from the MIAS dataset to aid in the automatic identification and classification of breast cancer (BC) susceptible areas. TL of the VGG16 model demonstrated effective categorization of mammogram breast images for BC diagnosis. In 2022, Jiang et al.22 introduced the Probabilistic Anchor Assignment (PAA) technique to accurately identify and classify mammograms as malignant or benign, improving prognosis ability. The proposed framework included a single-stage PAA-based detector to identify abnormal tumor areas and a two-branch ROI detector for tumor categorization, incorporating a Threshold-adaptive Post-processing Algorithm for complex breast data. The model was trained and evaluated using publicly available mammogram databases, demonstrating enhanced classification accuracy compared to existing techniques.

In 2022, Kavitha et al.23 presented a novel computerized mammogram-based breast cancer (BC) diagnosis framework. The framework employed median filtering for preprocessing to eliminate irrelevant data in mammographic images. BC segmentation was achieved using the Optimal Kapur's Multilevel Thresholding with Shell Game Optimization (OKMT-SGO) method. The proposed model incorporated a Backpropagation Neural Network (BPNN) classifier and a CapsNet feature extractor for BC identification. Evaluation using benchmark DDSM and Mini-MIAS datasets showcased the superior performance of the proposed method in diagnostic accuracy. In 2022, Kumari and Jagadesh24 employed feature selection techniques to enhance classifier performance. Intensity, texture, and shape-based features were extracted from preprocessed medical images. The selected features were used with the XGBoost classifier and compared to other classifiers. The MIAS database was utilized for experimentation and evaluation. Results showed that the proposed XGBoost framework outperformed other feature selection techniques in categorising MIAS mammography images as abnormal or normal, demonstrating its superior performance.

In 2021, Patil and Biradar25 proposed an improved hybrid classification model for mammogram-based breast cancer (BC) detection. The approach involved image preprocessing, feature extraction, segmentation26,27, and identification stages. A median filter was used for noise removal, and the Firefly updated Chicken-based Optimization (FC-CSO) algorithm was employed for tumor segmentation. Features were extracted and fed into a Recurrent Neural Network (RNN) and a Convolutional Neural Network (CNN) for classification28. The combination of the two models achieved superior accuracy compared to traditional classifiers.

In 2022, Pramanik et al.29 proposed a breast mass categorization system using mammograms. The VGG16 architecture with an attention mechanism extracted deep features from mammography images. The Social Ski-Driver (SSD) technique and Adaptive Beta Hill Climbing search strategy were employed to obtain optimal features. The K-Nearest Neighbors (KNN) classifier utilized these features for data classification. The suggested model demonstrated successful recognition and discrimination between healthy and cancerous breasts. Remarkably, the framework achieved higher precision by utilizing only 25% of the attention-aided VGG16 model's features on a publicly available dataset. In 2020, Zheng et al.30 introduced the DL-Assisted Efficient AdaBoost Algorithm (DLA-EABA) for breast cancer (BC) identification. The study investigated the characterization of breast masses using transfer learning from diverse imaging modalities, such as mammography, MRI, digital breast tomosynthesis, and ultrasound. The deep learning model incorporated LSTM layers, convolutional layers, fully connected layers, max-pooling layers, activation layers, and error estimation for classification. The fusion of machine learning approaches with feature selection and extraction methods was examined, and the model's performance was evaluated against existing segmentation methods and conventional classifiers.

Problem statement and objectives

Breast cancer screening through mammography is crucial in early detection, offering the potential for more successful treatment outcomes. However, challenges arise in accurately interpreting mammograms, particularly in women with dense breasts, leading to increased rates of false predictions. Furthermore, normal mammogram results do not guarantee the absence of breast cancer, underscoring the limitations of relying solely on this screening method. In addition, false diagnoses can subject women to unnecessary radiation exposure. The complexity of handling the large volume of mammography images and the variability in prediction outcomes among radiologists further highlight the limitations of traditional breast cancer detection approaches. To address these challenges and improve the accuracy of breast cancer prediction while minimizing errors, we propose adopting deep learning techniques for breast cancer detection from mammography images. This novel approach aims to enhance the precision of predictions and mitigate the generation of false errors, offering a more efficient and reliable method for breast cancer detection.

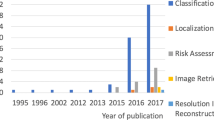

Table 1 presents a comprehensive overview of current breast cancer (BC) detection techniques, along with their respective merits and demerits20,21,22,23,24,25,29,30. CNN and Empirical Wavelet Transform (EWT) demonstrate improved accuracy, recall, and precision detection rates but introduce hardware and time complexity. VGG achieves higher accuracy, AUC, and sensitivity, enhancing system robustness primarily for prognosis objectives. PAA extracts peripheral regions to identify diseases but incurs computational burdens that degrade system robustness. BPNN offers discriminative features for detection but lacks parameter tuning for further enhancement. XGBoost selects notable features for increased detection accuracy but does not support large-scale dimensional datasets. CNN extracts boundary-level regions for accurate results, yet blur or noise in images degrades system robustness and can lead to misdiagnosis. KNN acquires deep and optimal features for cancer region detection but suffers from high time complexity and premature convergence. CNN-LSTM obtains desirable early-stage disease detection but is susceptible to overfitting. These challenges underscore the need to develop and implement an accurate BC detection approach using deep learning techniques.

Methods

Intelligent breast cancer detection model with heuristic-assisted Trans-Res-U-Net and multi-scale DenseNet using mammogram images

Proposed model and description

Traditional breast cancer detection methods like mammography, MRI, and CAD tools frequently fail to diagnose early stages, especially in women with dense breast tissues or surgical histories, leading to misdiagnoses and unnecessary treatments. These techniques, while common, often result in inaccurate predictions and can expose healthy individuals to harmful radiation. Despite the development of advanced imaging technologies such as PET and Molecular Breast Imaging, their high costs limit accessibility primarily to high-risk patients. To improve accuracy and reduce costs, a new deep learning-based framework for breast cancer detection has been introduced.

In the developed deep learning-based BC detection framework, mammogram images are first obtained from standardized mammogram image data sources. These raw images are provided to the developed ACA-ATRUNet model for segmentation, which precedes classification in order to improve the accuracy of the subsequent detection step. The segmented images are then given to the ACA-AMDN model, which classifies the BC images. An enhanced metaheuristic optimization algorithm, MML-EOO, is suggested to reduce processing complexity and computational time. MML-EOO optimizes six hyperparameters: the hidden neurons, epochs, and steps per epoch in the ACA-ATRUNet, and the hidden neurons, epochs, and batch size in the ACA-AMDN. These optimized parameters help speed up the entire detection process. The final BC detection output is obtained from the ACA-AMDN classifier.
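The six tuned hyperparameters can be viewed as one candidate solution that the optimizer moves around. The sketch below is illustrative only: it assumes each candidate is encoded as six values in [0, 1] and decoded into the ranges stated in the Methods. The names `Candidate`, `decode`, and the [0, 1] encoding are hypothetical and not taken from the authors' implementation.

```python
from dataclasses import dataclass

# Tuning ranges as stated in the Methods section.
SEG_HIDDEN = (5, 255)      # hidden neurons, ACA-ATRUNet
SEG_EPOCHS = (5, 50)       # epochs, ACA-ATRUNet
SEG_STEPS = (300, 1000)    # steps per epoch, ACA-ATRUNet
CLS_HIDDEN = (5, 255)      # hidden neurons, ACA-AMDN
CLS_EPOCHS = (5, 50)       # epochs, ACA-AMDN
CLS_BATCH = (2, 4, 8, 16, 32, 64)  # batch size choices, ACA-AMDN


@dataclass(frozen=True)
class Candidate:
    """One hyperparameter setting for both networks (hypothetical container)."""
    seg_hidden: int
    seg_epochs: int
    seg_steps: int
    cls_hidden: int
    cls_epochs: int
    cls_batch: int


def _clip01(u):
    """Clamp a value to the unit interval."""
    return min(max(u, 0.0), 1.0)


def _scale(u, bounds):
    """Map u in [0, 1] to an integer inside the inclusive bounds."""
    lo, hi = bounds
    return lo + round(_clip01(u) * (hi - lo))


def decode(position):
    """Decode a 6-dimensional position in [0, 1]^6 into a Candidate."""
    if len(position) != 6:
        raise ValueError("expected six values, one per hyperparameter")
    # The batch size is categorical, so the unit interval is split into bins.
    idx = min(int(_clip01(position[5]) * len(CLS_BATCH)), len(CLS_BATCH) - 1)
    return Candidate(
        _scale(position[0], SEG_HIDDEN),
        _scale(position[1], SEG_EPOCHS),
        _scale(position[2], SEG_STEPS),
        _scale(position[3], CLS_HIDDEN),
        _scale(position[4], CLS_EPOCHS),
        CLS_BATCH[idx],
    )
```

Keeping the optimizer in a unit hypercube and decoding at evaluation time is one common design choice; it lets continuous position updates coexist with integer and categorical settings.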

Atrous convolution-based attentive and adaptive breast cancer segmentation model using mammogram images

ACA-ATRUNet-based breast cancer segmentation

The raw mammogram images \(B{C}_{fs}^{img}\) are given to the ACA-ATRUNet for image segmentation, where \(img\) symbolizes images and \(fs\) implies feature selection. ACA-ATRUNet is developed by replacing the normal convolutional layers in the Trans-Res-UNet with atrous convolutional layers and adding an attention mechanism. The process is repeated several times (multi-scale) before producing the final segmented BC image output \(S{I}_{ad}^{TRU}\). With the aid of the MML-EOO algorithm, the hyperparameters of the ACA-ATRUNet, namely the epochs, steps per epoch, and hidden neurons, are adjusted to increase the precision of the segmented BC images. This optimization is done to maximize the Dice coefficient and the accuracy between the mask images and the segmented BC images. The main idea behind this parameter optimization is mathematically represented in Eq. (1).

The term \(S1\) in Eq. (1) denotes the objective function of the developed ACA-ATRUNet, \(h{i}_{lm}^{TRU}\) denotes the optimally adjusted number of hidden neurons, \(e{h}_{kl}^{TRU}\) denotes the optimally adjusted number of epochs, \(s{e}_{jk}^{TRU}\) denotes the optimally adjusted number of steps per epoch, \(Dice\) signifies the Dice coefficient between the mask image and the segmented BC image, and \(Arcy\) represents the accuracy. The steps per epoch are tuned in the range \(\left[300, 1000\right]\), the hidden neurons are tuned in the range \(\left[5, 255\right]\), and the epochs are tuned in the range \(\left[5, 50\right]\). These parameters are tuned to maximize the Dice coefficient and accuracy. The Dice coefficient measures the overlap between the mask image and the segmented image and is given by Eq. (2).
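In its usual set-overlap form, the Dice coefficient can be written as below. The notation reuses the symbols from the text; that Eq. (2) takes exactly this standard form is an assumption.

```latex
\[
Dice\left(MI_{ma}^{am}, SI_{ad}^{TRU}\right)
  = \frac{2\left|MI_{ma}^{am} \cap SI_{ad}^{TRU}\right|}
         {\left|MI_{ma}^{am}\right| + \left|SI_{ad}^{TRU}\right|}
\]
```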

The term \(M{I}_{ma}^{am}\) in Eq. (2) denotes the mask images and \(S{I}_{ad}^{TRU}\) represents the segmented BC image. The accuracy \(Arcy\) is evaluated using Eq. (3).

In Eq. (3), the term \(VW\) represents the true negatives, \(TU\) the true positives, \(VX\) the false negatives, and \(TV\) the false positives. The pictorial representation of the implemented ACA-ATRUNet-based BC mammogram image segmentation is provided in Fig. 1.
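The two segmentation scores can be computed directly from a pair of binary masks. The following is a minimal sketch, assuming the mask and the segmented image are flattened to equal-length sequences of 0 and 1, and using the standard definitions of the Dice coefficient and accuracy. The function names are illustrative.

```python
def confusion_counts(mask, pred):
    """Return (TU, TV, VX, VW) = (TP, FP, FN, TN) for two flat 0/1 sequences."""
    if len(mask) != len(pred):
        raise ValueError("mask and prediction must have the same length")
    tu = sum(1 for m, p in zip(mask, pred) if m == 1 and p == 1)
    tv = sum(1 for m, p in zip(mask, pred) if m == 0 and p == 1)
    vx = sum(1 for m, p in zip(mask, pred) if m == 1 and p == 0)
    vw = sum(1 for m, p in zip(mask, pred) if m == 0 and p == 0)
    return tu, tv, vx, vw


def dice(mask, pred):
    """Dice coefficient: 2|A ∩ B| / (|A| + |B|), written with pixel counts."""
    tu, tv, vx, _ = confusion_counts(mask, pred)
    denom = 2 * tu + tv + vx
    # Two empty masks overlap perfectly by convention.
    return 1.0 if denom == 0 else 2 * tu / denom


def accuracy(mask, pred):
    """Pixel accuracy: (TP + TN) / all pixels."""
    tu, tv, vx, vw = confusion_counts(mask, pred)
    total = tu + tv + vx + vw
    if total == 0:
        raise ValueError("empty input")
    return (tu + vw) / total


mask = [1, 1, 1, 0, 0, 0, 0, 0]
pred = [1, 1, 0, 1, 0, 0, 0, 0]
print(dice(mask, pred), accuracy(mask, pred))
```

In this toy case there are two true positives, one false positive, one false negative, and four true negatives, so the Dice coefficient is 4/6 and the accuracy is 6/8. On mammograms, where the lesion covers few pixels, accuracy is dominated by background, which is one reason to track Dice alongside it.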

Architectural representation of atrous convolution-based attentive and adaptive breast cancer detection model using mammogram images

ACA-AMDN-based breast cancer detection

The segmented images from the ACA-ATRUNet \(S{I}_{ad}^{TRU}\) are fed to the developed ACA-AMDN structure for BC image classification. ACA-AMDN is developed by replacing the normal convolutional layers in the DenseNet with atrous convolutional layers and adding an attention mechanism. The process is repeated several times (multi-scale) in the DenseNet structure before producing the final classification output. The classified output of the BC mammogram images is given by \(C{I}_{hs}^{MDN}\). The parameters of the ACA-AMDN structure, namely the epochs, batch size, and hidden neurons in the Multi-scale DenseNet, are optimally tuned with the help of the proposed MML-EOO algorithm. This optimization aims at maximizing accuracy and minimizing the False Positive Rate (FPR). The main idea behind this parameter optimization is formulated in Eq. (4).

The term \(S2\) in Eq. (4) denotes the objective function of the developed ACA-AMDN, \(h{i}_{ml}^{MDN}\) denotes the optimally adjusted number of hidden neurons, \(e{h}_{lk}^{MDN}\) denotes the optimally adjusted number of epochs, \(b{s}_{kj}^{MDN}\) denotes the optimally adjusted batch size, and \(XY\) denotes the FPR. The batch size is tuned over the values \(\left[2, 4, 8, 16, 32, 64\right]\), the hidden neurons are tuned in the range \(\left[5, 255\right]\), and the epochs are tuned in the range \(\left[5, 50\right]\). These parameters are optimized to maximize the accuracy and minimize the FPR. The FPR is computed using Eq. (5) as follows.
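With the confusion-matrix symbols used for the accuracy (\(TV\) for false positives and \(VW\) for true negatives), the standard definition of the FPR is shown below. That Eq. (5) matches this standard form is an assumption.

```latex
\[
XY = \frac{TV}{TV + VW}
\]
```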

The pictorial illustration of the implemented ACA-AMDN-based BC classification is shown in Fig. 2.
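Both ACA-ATRUNet and ACA-AMDN replace ordinary convolutions with atrous (dilated) ones. As a rough illustration of what that substitution changes, the sketch below implements a one-dimensional, valid-mode atrous convolution in plain Python. It is a toy under stated assumptions (1D input, no padding, cross-correlation form as used by deep learning libraries) and not the authors' layer.

```python
def atrous_conv1d(signal, kernel, rate=1):
    """Valid-mode 1D atrous convolution (cross-correlation form).

    Kernel taps are spaced `rate` samples apart, so a kernel with k taps
    covers (k - 1) * rate + 1 input samples without adding any weights.
    """
    if rate < 1:
        raise ValueError("rate must be a positive integer")
    span = (len(kernel) - 1) * rate + 1  # effective receptive field
    if len(signal) < span:
        return []
    return [
        sum(w * signal[i + k * rate] for k, w in enumerate(kernel))
        for i in range(len(signal) - span + 1)
    ]


x = [1, 2, 3, 4, 5, 6, 7]
w = [1, 0, -1]
print(atrous_conv1d(x, w, rate=1))  # each output spans 3 input samples
print(atrous_conv1d(x, w, rate=2))  # same 3 weights, spans 5 input samples
```

With `rate=2` the same three weights look at samples four positions apart, which is how atrous convolution enlarges the field of view at no extra parameter cost.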

Proposed MML-EOO

By optimizing the epochs, hidden neurons, and steps per epoch of the Trans-Res-UNet and the epochs, hidden neurons, and batch size of the Multi-scale DenseNet, the final prediction result of the BC classification model can be improved. The suggested MML-EOO algorithm achieves this parameter optimization. The EOO31 algorithm is used in this paper because of its balanced exploitation and exploration and its capacity to escape local optima. Due to its oyster size selection constraint, however, this technique cannot resolve challenging real-time problems. As a result, the EOO algorithm's oyster size \(O\) is updated using the formula provided in Eq. (6).

The term \(r\) in Eq. (6) represents the current iteration value, \(R\) symbolizes the maximum iteration count, and \(O\) denotes the size of the oyster. The value of \(O\) is in the range \(\left[3, 5\right]\) in the traditional EOO algorithm, and it is updated using Eq. (6) in the developed MML-EOO algorithm, where \(O\) decreases linearly from 50 to 30 mm. The value of \(O\) from Eq. (6) is used to update the size of the oyster in Eqs. (7), (8), (9), and (10). The exploration of the Eurasian oystercatcher (EO) is described as follows. The amount of energy \(K\) available in the EO at the final stage of hunting the oyster is given by Eq. (7).
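A linear decrease of the oyster size over the iterations can be sketched as follows. The text states only the endpoints (50 mm and 30 mm) and that the decrease is linear in the iteration count; the exact expression below, and the choice to count iterations from 0 to \(R\), are assumptions made for illustration.

```python
def mussel_length(r, R, start_mm=50.0, end_mm=30.0):
    """Linearly interpolate the oyster size O from start_mm to end_mm.

    r is the current iteration (assumed here to run from 0 to R) and
    R is the maximum iteration count.
    """
    if R <= 0:
        raise ValueError("R must be positive")
    if not 0 <= r <= R:
        raise ValueError("r must lie in [0, R]")
    return start_mm + (end_mm - start_mm) * r / R


# With the 50-iteration budget from the experimental setup:
for r in (0, 25, 50):
    print(r, mussel_length(r, 50))
```

Replacing a randomly drawn size with a deterministic schedule of this kind makes early iterations take larger steps and later iterations smaller ones.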

The size of the oyster \(O\) in Eq. (7) is updated using the fitness-based concept provided in Eq. (6). In Eq. (7), \(N\) denotes the current energy requirement, \(J\) denotes the time the EO requires to open the ideal oyster, and \(f\) represents a random number in the range \(\left[0, 1\right]\) that is selected to increase the randomness in the search area. The value of the available energy \(K\) in the EO varies inversely with the iteration count \(r\). The position at which the ideal oyster is found is provided in Eq. (8).

The term \(Q\) in Eq. (8) represents the amount of energy the EO obtains from eating the ideal oyster of size \(O\), and \({P}_{r}\) represents the position of the ideal oyster. The values of \(J\) and \(Q\) both rely on \(O\). The time \(J\) required to open a selected oyster is formulated as in Eq. (9).

The value of \(O\) in Eq. (9) is updated using the fitness-based concept provided in Eq. (6). The energy presently available in the bird is computed as in Eq. (10).

The calorie that can be obtained by consuming the oyster \(Q\) is given in Eq. (11).

The value of \(Q\) in Eq. (11) is updated using Eq. (6). If the time is negative, the bird has reached its maximum capacity for opening the oyster and cannot spend further energy on it. This is considered an exceptional case. \(N\) remains constant in the last iteration and its preceding iteration. Thus \(N\) and \(J\) will have a negative value. The main contributions of the EOO algorithm are as follows.

1. The time needed to break a mussel is calculated from the bird's energy and the mussel's size, and this estimate is used to select a mussel precisely and to predict the location of the desired food.

2. The random numbers introduced during optimization help explore new areas in each cycle, which avoids becoming trapped in a local minimum.

3. The random numbers used at each optimization stage ensure both exploration and exploitation.

Algorithm 1: Proposed MML-EOO pseudocode

The flowchart of the suggested MML-EOO algorithm is given in Appendix (A) supplementary information. The pseudocode of the proposed MML-EOO algorithm is presented in Algorithm 1. In our model, the linear activation function is employed in the regression-based output layers to directly output unbounded numerical values, crucial for maintaining the scale of our target variable. Conversely, the softmax activation function, used in the classification layers, transforms raw neural network scores into probabilities, essential for distinguishing among categories like benign, malignant, or normal in mammogram imaging. While linear functions help preserve output consistency, softmax is vital for accurate multi-class classification, facilitating definitive diagnostic decisions.
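The difference between the two output activations can be seen in a few lines of plain Python. This is a generic sketch of the standard softmax and identity (linear) functions, not code from the described model; the three example scores are made up for illustration.

```python
import math


def linear(scores):
    """Identity activation: raw values pass through unchanged."""
    return list(scores)


def softmax(scores):
    """Numerically stable softmax: non-negative outputs that sum to 1."""
    peak = max(scores)  # subtracting the maximum avoids overflow in exp
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical raw scores for three classes: benign, malignant, normal.
raw = [2.0, 1.0, 0.1]
probs = softmax(raw)
print(linear(raw))
print(probs, sum(probs))
```

The softmax output keeps the ranking of the raw scores, so the predicted class is the same as the index of the largest raw score, but the values can now be read as class probabilities.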

Results and discussion

Mammogram images collection

Two major BC mammography image databases provided the input BC pictures needed to carry out the segmentation and detection functions in the implemented BC detection model. Table 2 contains information about the database and the sources from which the images are available. The term \(B{C}_{fs}^{img}\) represents the collected images from the two standard databases.

Experimental setup

The constructed DL-based BC detection model was implemented and assessed in Python, and the experimental results are discussed below. The model was run with a maximum of 50 iterations and a maximum population size of 10. The MML-EOO-ACA-ATRUNet-AMDN-based BC detection framework was assessed against different classifiers, namely UNet32, KNN29, CNN25, XGBoost24, ResUNet++33, GPA-TUNet34, and Deeplab35, and contrasted with existing meta-heuristic algorithms, namely Grey Wolf Optimization (GWO)-ACA-ATRUNet-AMDN36, Honey Badger Algorithm (HBA)-ACA-ATRUNet-AMDN37, JAYA-ACA-ATRUNet-AMDN38, and EOO-ACA-ATRUNet-AMDN39, to establish the accuracy of the developed deep learning-based BC detection model.

Validation metrics used in evaluation

The following metrics are used to assess the implemented BC detection framework.

\(Np=\frac{VW}{VW+VX}\quad\quad (12)\)

\(pcn=\frac{TU}{TU+TV}\quad\quad (13)\)

\(Fd=\frac{TV}{TV+TU}\quad\quad (14)\)

\(Sp=\frac{VW}{VW+TV}\quad\quad (15)\)

\(Se=\frac{TU}{TU+VX}\quad\quad (16)\)

\(Fs=\frac{2\,TU}{2\,TU+TV+VX}\quad\quad (17)\)

\(Fn=\frac{VX}{TU+VX}\quad\quad (18)\)

\(Jd\left(M{I}_{ma}^{am},S{I}_{ad}^{TRU}\right)=\frac{\left|M{I}_{ma}^{am}\cap S{I}_{ad}^{TRU}\right|}{\left|M{I}_{ma}^{am}\cup S{I}_{ad}^{TRU}\right|}\quad\quad (19)\)

\(Mc=\frac{TU\cdot VW-TV\cdot VX}{\sqrt{\left(TU+TV\right)\left(TU+VX\right)\left(VW+TV\right)\left(VW+VX\right)}}\quad\quad (20)\)

Negative Predictive Value (NPV) \(Np\) is determined by Eq. (12). The precision \({\text{pcn}}\) is evaluated based on Eq. (13). False Discovery Rate (FDR) \(Fd\) is computed as in Eq. (14). Specificity \(Sp\) is determined as in Eq. (15). Sensitivity \(Se\) is calculated using Eq. (16). The F1 score \({\text{Fs}}\) is determined using Eq. (17). False Negative Rate (FNR) \(Fn\) is evaluated using Eq. (18). The Jaccard index \(Jd\) between the ground truth mask image and the segmented image is computed using Eq. (19). The Matthews Correlation Coefficient (MCC) \(Mc\) is evaluated as provided in Eq. (20).
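The count-based metrics can be computed together from the four confusion-matrix entries. The sketch below uses the standard definitions of each metric and the document's symbols as argument names (`tu` true positives, `tv` false positives, `vx` false negatives, `vw` true negatives). The function name and the example counts are illustrative, not results from the paper.

```python
import math


def screening_metrics(tu, tv, vx, vw):
    """Standard confusion-matrix metrics; undefined ratios become NaN."""

    def ratio(num, den):
        return num / den if den else float("nan")

    mcc_den = math.sqrt((tu + tv) * (tu + vx) * (vw + tv) * (vw + vx))
    return {
        "npv": ratio(vw, vw + vx),          # negative predictive value
        "precision": ratio(tu, tu + tv),
        "fdr": ratio(tv, tv + tu),          # false discovery rate
        "specificity": ratio(vw, vw + tv),
        "sensitivity": ratio(tu, tu + vx),
        "f1": ratio(2 * tu, 2 * tu + tv + vx),
        "fnr": ratio(vx, tu + vx),          # false negative rate
        "mcc": ratio(tu * vw - tv * vx, mcc_den),
    }


# Made-up counts purely to exercise the function.
for name, value in screening_metrics(tu=40, tv=10, vx=5, vw=45).items():
    print(f"{name}: {value:.4f}")
```

Several pairs are complements of each other (precision and FDR, sensitivity and FNR), which gives a quick sanity check on any reported table.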

The images gathered from the two databases are shown in Fig. 3.

Experimental outcome

The segmented BC mammogram images obtained from various deep learning techniques and the ground truth comparison with the suggested MML-EOO-ACA-ATRUNet technique output are shown in Fig. 4.

Performance comparison of the developed BC detection model with conventional classifiers

The performance comparison of the developed MML-EOO-ACA-ATRUNet-MDN BC detection model with conventional classifiers on the MIAS Mammography Dataset and the CBIS-DDSM Breast Cancer Image Dataset is given in Figs. 5 and 6, respectively. With the ReLU activation function on the MIAS Mammography Dataset, the precision of the implemented MML-EOO-ACA-ATRUNet-MDN is 5%, 2.56%, 3.8%, and 5.56% higher than that of the KNN, CNN, RAN, and RAN-LSTM classifiers, respectively. Only the precision and accuracy results are reported here; comparison results for the other evaluation measures can be found in Appendix A of the supplementary material.

Comparison of the proposed BC detection framework with existing algorithms

The performance comparison of the proposed MML-EOO-ACA-ATRUNet-MDN BC detection model with various existing algorithms on the MIAS Mammography Dataset and the CBIS-DDSM Breast Cancer Image Dataset is given in Figs. 7 and 8, respectively. With the Leaky ReLU activation function on the CBIS-DDSM Breast Cancer Image Dataset, the accuracy of the proposed MML-EOO-ACA-ATRUNet-MDN-based BC detection framework is 2.32%, 3.27%, 3.39%, and 3.63% better than that of the EOO-ACA-ATRUNet-MDN, JAYA-ACA-ATRUNet-MDN, HBA-ACA-ATRUNet-MDN, and GWO-ACA-ATRUNet-MDN algorithms, respectively.

Statistical examination of the implemented BC detection framework with traditional classifiers

The statistical examination of the implemented MML-EOO-ACA-ATRUNet-MDN-based BC detection framework against different traditional classifiers on the MIAS Mammography Dataset and the CBIS-DDSM Breast Cancer Image Dataset is shown in Figs. 9 and 10, respectively. On the MIAS Mammography Dataset, the precision of the implemented MML-EOO-ACA-ATRUNet-MDN BC detection framework is 2.63%, 1.33%, 5.13%, and 5.71% better than that of the GPA-TUNet, Deeplab, ResUNet++, and UNet classifiers, respectively.

Statistical assessment of the constructed BC detection model with other heuristic algorithms

The statistical assessment of the constructed MML-EOO-ACA-ATRUNet-MDN BC detection model against other heuristic algorithms on the MIAS Mammography Dataset and the CBIS-DDSM Breast Cancer Image Dataset is illustrated in Figs. 11 and 12, respectively. On the MIAS Mammography Dataset, the sensitivity of the constructed MML-EOO-ACA-ATRUNet-MDN BC detection model is 1.04%, 2.11%, 2.65%, and 2.11% higher than that of the EOO-ACA-ATRUNet-MDN, JAYA-ACA-ATRUNet-MDN, HBA-ACA-ATRUNet-MDN, and GWO-ACA-ATRUNet-MDN algorithms, respectively.

Cost function analysis of the generated BC detection framework

As shown in Fig. 13, the cost function of the generated MML-EOO-ACA-ATRUNet-MDN-based BC detection framework on the CBIS-DDSM Breast Cancer Image Dataset is 7.87%, 9.4%, 13.11%, and 23.19% lower than that of the JAYA-ACA-ATRUNet-MDN, GWO-ACA-ATRUNet-MDN, HBA-ACA-ATRUNet-MDN, and EOO-ACA-ATRUNet-MDN algorithms, respectively.

Classifier analysis of the suggested MML-EOO-ACA-ATRUNet-MDN-based BC detection framework

The performance comparison of the suggested MML-EOO-ACA-ATRUNet-MDN-based BC detection framework is tabulated in Table 3. The precision of the suggested MML-EOO-ACA-ATRUNet-MDN-based BC detection framework is 34.52%, 18.41%, 19.32%, and 22.49% higher than the KNN, CNN, XGBoost, and ACA-ATRUNet-MDN classifiers respectively for MIAS Mammography Dataset.

Algorithmic evaluation of the recommended MML-EOO-ACA-ATRUNet-MDN-based BC detection model

The algorithmic evaluation of the recommended MML-EOO-ACA-ATRUNet-MDN-based BC detection framework is tabulated in Table 4. On the CBIS-DDSM Breast Cancer Image Dataset, the NPV of the recommended MML-EOO-ACA-ATRUNet-MDN-based BC detection model is 1.94%, 1.64%, 1.68%, and 1.2% better than that of the GWO-ACA-ATRUNet-MDN, HBA-ACA-ATRUNet-MDN, JAYA-ACA-ATRUNet-MDN, and EOO-ACA-ATRUNet-MDN algorithms, respectively.

Conclusion

In this study, we have successfully developed and validated a highly accurate deep learning-based framework for breast cancer (BC) detection. The framework's robustness was first established through the collection of mammography images from benchmark datasets. A key innovation in our approach is the ACA-ATRUNet, a novel architecture combining Transformer blocks, ResNet, and UNet, which was meticulously tuned using the modified MML-EOO algorithm. This was crucial for effective segmentation, a foundational step in our two-phase detection process. The subsequent phase, actual BC detection, was executed using the ACA-AMDN, also fine-tuned with the MML-EOO algorithm. The combined use of these advanced technologies not only enhances the detection efficiency and precision but also addresses the common limitation in feature region size selection typically encountered in feature extraction networks.

Data availability

The datasets used and analyzed in this study are publicly available. The MIAS Mammography dataset, accessed on 2023-01-11, is available at https://www.kaggle.com/datasets/kmader/mias-mammography, and the CBIS-DDSM: Breast Cancer Image Dataset, accessed on 2023-03-07, is available at https://www.kaggle.com/datasets/awsaf49/cbis-ddsm-breast-cancer-image-dataset.

References

Wu, N. et al. Deep neural networks improve radiologists’ performance in breast cancer screening. IEEE Trans. Med. Imaging 39(4), 1184–1194 (2019).

Sameti, M. et al. Image feature extraction in the last screening mammograms prior to detection of breast cancer. IEEE J. Sel. Top. Signal Process. 3(1), 46–52 (2009).

Geweid, G. G. & Abdallah, M. A. A novel approach for breast cancer investigation and recognition using M-level set-based optimization functions. IEEE Access 7, 136343–136357 (2019).

Yaqub, M. et al. GAN-TL: Generative adversarial networks with transfer learning for MRI reconstruction. Appl. Sci. 12(17), 8841 (2022).

Lee, J. & Nishikawa, R. M. Identifying women with mammographically-occult breast cancer leveraging GAN-simulated mammograms. IEEE Trans. Med. Imaging 41(1), 225–236 (2021).

Lu, M. et al. Detection and localization of breast cancer using UWB microwave technology and CNN-LSTM framework. IEEE Trans. Microwave Theory Tech. 70(11), 5085–5094 (2022).

Singla, C., Sarangi, P. K., Sahoo, A. K. & Singh, P. K. Deep learning enhancement on mammogram images for breast cancer detection. Mater. Today Proc. 49, 3098–3104 (2022).

Su, Y., Liu, Q., Xie, W. & Hu, P. YOLO-LOGO: A transformer-based YOLO segmentation model for breast mass detection and segmentation in digital mammograms. Comput. Methods Program. Biomed. 221, 106903 (2022).

Raaj, R. S. Breast cancer detection and diagnosis using hybrid deep learning architecture. Biomed. Signal Process. Control 82, 104558 (2023).

Gurudas, V. R., Shaila, S. G. & Vadivel, A. J. S. C. S. Breast cancer detection and classification from mammogram images using multi-model shape features. SN Comput. Sci. 3(5), 404 (2022).

Rehman, K. U., Li, J., Pei, Y. & Yasin, A. A review on machine learning techniques for the assessment of image grading in breast mammogram. Int. J. Mach. Learn. Cybern. 13(9), 2609–2635 (2022).

Kalita, D. J., Singh, V. P. & Kumar, V. Detection of breast cancer through mammogram using wavelet-based LBP features and IWD feature selection technique. SN Comput. Sci. 3(2), 175 (2022).

Melekoodappattu, J. G., Dhas, A. S., Kandathil, B. K. & Adarsh, K. S. Breast cancer detection in mammogram: Combining modified CNN and texture feature based approach. J. Ambient Intell. Hum. Comput. 14(9), 11397–11406 (2023).

Suganthi, M. & Madheswaran, M. An improved medical decision support system to identify the breast cancer using mammogram. J. Med. Syst. 36, 79–91 (2012).

Sarvestani, Z. M. et al. A novel machine learning approach on texture analysis for automatic breast microcalcification diagnosis classification of mammogram images. J. Cancer Res. Clin. Oncol. 149(9), 6151–6170 (2023).

Yaqub, M. et al. State-of-the-art CNN optimizer for brain tumor segmentation in magnetic resonance images. Brain Sci. 10(7), 427 (2020).

Singh, L. & Alam, A. An efficient hybrid methodology for an early detection of breast cancer in digital mammograms. J. Ambient Intell. Hum. Comput. 15(1), 337–360 (2024).

Ketabi, H. et al. A computer-aided approach for automatic detection of breast masses in digital mammogram via spectral clustering and support vector machine. Phys. Eng. Sci. Med. 44(1), 277–290 (2021).

Ittannavar, S. S. & Havaldar, R. H. Detection of breast cancer using the infinite feature selection with genetic algorithm and deep neural network. Distrib. Parallel Databases 40(4), 675–697 (2022).

Das, A. et al. Breast cancer detection using an ensemble deep learning method. Biomed. Signal Process. Control 70, 103009 (2021).

Saber, A., Sakr, M., Abo-Seida, O. M., Keshk, A. & Chen, H. A novel deep-learning model for automatic detection and classification of breast cancer using the transfer-learning technique. IEEE Access 9, 71194–71209 (2021).

Jiang, J. et al. Breast cancer detection and classification in mammogram using a three-stage deep learning framework based on PAA algorithm. Artif. Intell. Med. 134, 102419 (2022).

Kavitha, T. et al. Deep learning based capsule neural network model for breast cancer diagnosis using mammogram images. Interdiscip. Sci. Comput. Life Sci. 14, 113–129 (2021).

Kanya-Kumari, L. & Naga Jagadesh, B. An adaptive teaching learning based optimization technique for feature selection to classify mammogram medical images in breast cancer detection. Int. J. Syst. Assur. Eng. Manag. 15(1), 35–48 (2024).

Patil, R. S. & Biradar, N. Automated mammogram breast cancer detection using the optimized combination of convolutional and recurrent neural network. Evol. Intell. 14(4), 1459–1474 (2021).

Khan, T. M., Robles-Kelly, A. & Naqvi, S. S. T-Net: A resource-constrained tiny convolutional neural network for medical image segmentation. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (2022).

Khan, T. M. et al. MKIS-net: A light-weight multi-kernel network for medical image segmentation. In 2022 International Conference on Digital Image Computing: Techniques and Applications (DICTA) (IEEE, 2022).

Yaqub, M. et al. DeepLabV3, IBCO-based ALCResNet: A fully automated classification, and grading system for brain tumor. Alex. Eng. J. 76, 609–627 (2023).

Pramanik, P. et al. Deep feature selection using local search embedded social ski-driver optimization algorithm for breast cancer detection in mammograms. Neural Comput. Appl. 35(7), 5479–5499 (2023).

Zheng, J. et al. Deep learning assisted efficient AdaBoost algorithm for breast cancer detection and early diagnosis. IEEE Access 8, 96946–96954 (2020).

Yousif, M. et al. Eurasian oystercatcher optimiser: New meta-heuristic algorithm (2022).

Anaya-Isaza, A. et al. Comparison of current deep convolutional neural networks for the segmentation of breast masses in mammograms. IEEE Access 9, 152206–152225 (2021).

Hizukuri, A. et al. Computerized segmentation method for nonmasses on breast DCE-MRI images using ResUNet++ with slice sequence learning and cross-phase convolution. J. Imaging Inform. Med. https://doi.org/10.1007/s10278-024-01053-6 (2024).

Li, C., Wang, L. & Li, Y. Transformer and group parallel axial attention co-encoder for medical image segmentation. Sci. Rep. 12(1), 16117 (2022).

Niu, Z. et al. DeepLab-based spatial feature extraction for hyperspectral image classification. IEEE Geosci. Remote Sens. Lett. 16(2), 251–255 (2018).

Dhiman, R. Motor imagery signal classification using Wavelet packet decomposition and modified binary grey wolf optimization. Meas. Sens. 24, 100553 (2022).

Hashim, F. A. et al. Honey badger algorithm: New metaheuristic algorithm for solving optimization problems. Math. Comput. Simul. 192, 84–110 (2022).

Rao, R. V. & Saroj, A. An elitism-based self-adaptive multi-population Jaya algorithm and its applications. Soft Comput. 23, 4383–4406 (2019).

Salim, A. et al. Eurasian oystercatcher optimiser: New meta-heuristic algorithm. J. Intell. Syst. 31(1), 332–344 (2022).

Pang, L. et al. CD-TransUNet: a hybrid transformer network for the change detection of urban buildings using L-band SAR images. Sustainability 14(16), 9847 (2022).

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Grant No. 32000622). The authors would like to thank the School of Biomedical Science, Hunan University, and the funding organizations.

Author information

Contributions

M.Y: Conceptualization, Formal analysis, Methodology, Validation, Visualization, Writing – original draft, Writing – review & editing. F.J: Investigation, Formal analysis, Validation, Writing – review & editing. N.A: Analysis, Visualization, Writing – review & editing. S.A: Software, Formatting, Writing – original draft, Writing – review & editing, Validation. A.M: Investigation, Validation, Writing – review & editing. H.J: Validation, Review & editing. L.H: Conceptualization, Investigation, Project administration, Writing – review & editing. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Yaqub, M., Jinchao, F., Aijaz, N. et al. Intelligent breast cancer diagnosis with two-stage using mammogram images. Sci Rep 14, 16672 (2024). https://doi.org/10.1038/s41598-024-65926-0
