Abstract

Intracranial aneurysms (IAs) are a common vascular pathology and are associated with a risk of rupture, which is often fatal. Aneurysm growth of more than 1 mm is considered a surrogate of rupture risk, therefore, this study presents a comprehensive analysis of intracranial aneurysm measurements utilizing a dataset comprising 358 IA from 248 computed tomography angiography (CTA) scans measured by four junior raters and one senior rater. The study explores the variability in sizing assessments by employing both human raters and an Artificial Intelligence (AI) system. Our findings reveal substantial inter- and intra-rater variability among junior raters, contrasting with the lower intra-rater variability observed in the senior rater. Standard deviations of all raters were above the threshold for IA growth (1 mm). Additionally, the study identifies a systemic bias, indicating a tendency for human experts to measure aneurysms smaller than the AI system. Our findings emphasize the challenges in human assessment while also showcasing the capacity of AI technology to improve the precision and reliability of intracranial aneurysm assessments, especially beneficial for junior raters. The potential of AI was particularly evident in the task of monitoring IA at various intervals, where the AI-based approach surpassed junior raters and achieved performance comparable to senior raters.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Introduction

Intra- and inter-rater differences in intracranial aneurysm (IA) size measurement can affect their radiological characterization1,2,3 and upon based treatment decision. Clinical practice is generally inclined towards preventive endovascular or neurosurgical IA treatment, but these options come with a high risk of procedure-related complications4. Hence, small IAs frequently go untreated in patients because the risk of complications from treatment is thought to be higher than the risk of rupture. The majority of patients with small IAs undergo frequent computed tomography or magnetic resonance angiographies (CTA and MRA, resp.) to monitor the development of IA over time. If a size increase (i.e. growth) of an IA is observed, it is usually prioritized for treatment because growing IAs are more likely to rupture than the stable ones5,6,7,8,9.

Growth of an aneurysm is determined by assessing its size from consecutive angiographic scans. The primary parameter for measurement is the aneurysm’s dome diameter. The determination of whether an aneurysm has undergone growth relies on the disparity between the measurements performed in baseline and follow-up angiographic scans. If the IA size measurements increase for at least 1 mm in any direction10, the aneurysm is categorized as having undergone growth and is therefore associated with an elevated risk of rupture. However, the established size increase threshold for identifying aneurysm growth is positioned well within the range of potential intra- and inter-rater variability, therefore determining the IA growth through manual size measurement seems rather unreliable.

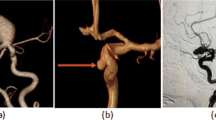

In practice, the clinicians typically use manual annotation tools and a 2D slice-by-slice viewer to select two dome wall endpoints and thereby measure the IA size on the three-dimensional (3D) MRA and CTA scans, or if in the interventional suite, on 3D digital subtraction angiography (DSA) or even two-dimensional (2D) DSA. The use of manual 2D measurements has inherent limitations, such as limited perspectives of the aneurysm that might not accurately represent the entirety of its dimensions11,12. The variability in the 2D measurement plane selection further contributes to the variability of size measurement, in addition of the variability of endpoint selection, both within the same and among different raters13,14.

In contemporary research, numerous engineering research teams have introduced computational methodologies and software solutions to offer a more objective, precise, and consistent assessment of the IA morphology15,16. Nevertheless, in clinical practice, the healthcare professionals continue to rely predominantly on the manual 2D size measurements. This prevalent reliance on traditional techniques might stem from the lack of (i) comprehensive comparative analysis between the manual measurements and those obtained using these emerging computerized tools, and (ii) experimental evidence of enhancing the accuracy and/or consistency in morphological evaluation, and (iii) their alignment with the prevailing norms and practices in the clinical domain.

This study focuses on the accuracy and reproducibility aspects of IA size measurement with three key objectives: (1) quantification of intra- and inter-rater variability of IA size measurements of one senior and four junior raters, (2) comparison of the accuracy and precision of computerized 3D versus the manual 2D IA size measurements, and (3) validation of the proficiency of junior versus senior raters’, and versus the computerized 3D measurement approach for assessing aneurysm growth. The implications of this study’s findings highlight the potential of computerized 3D IA size measurements to provide a valuable assistance to clinicians, enhancing the standardization of aneurysm morphology evaluation.

Experiments and results

This study assesses and compares IA size measurements obtained from various human raters, using 2D manual size assessment, and a computerized 3D measurement. Human raters that participated in the assessment of IA size were young doctors or medical students in their final years (junior raters 1–4) and one neuroradiologist with > 15 years of experience in the field (senior rater).

Each junior rater independently performed 484 IA size measurements on 248 CTA images, measuring 126 IAs twice. The senior rater conducted 246 IA measurements, including 63 instances where the IAs were measured twice. The CTA data were anonymized and presented to the raters in randomized order; hence, during the experiment the raters were not aware they were performing repeated measurements. The computerized 3D IA measurements were obtained for all IA. For more details regarding the dataset, refer to Sect. 0.1.

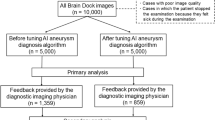

The study encompassed four experiments that evaluated (1) intra-rater variability, (2) inter-rater variability, (3) a comparative analysis between the computerized and manual measurements, and (4) impact of the measurement values on the assessment of IA growth status (see Fig. 1).

Inter-rater analysis

The first set of experiments compared the performance of junior raters versus the senior rater. Figure 2 shows the scatter diagrams and reports the pairwise intra-class correlation coefficient (ICC) values, separately for the ruptured and unruptured IAs. Similarly, the Bland-Altman plots report corresponding systematic (average difference) and random errors (standard deviation).

The average differences between junior raters and the senior rater in the manual IA size measurements were smaller for ruptured cases compared to stable cases (0.51 vs 0.8, − 0.13 vs 0.62, − 0.18 vs 0.27, 0.78 vs 0.96, respectively). Conversely, the standard deviations of the measurements were generally higher for the ruptured cases than for the stable ones. Figure 2 illustrates that the variability of the manual measurements was generally higher for the larger ruptured IAs but not for the unruptured ones.

We conducted comparisons between all raters by computing Cohen’s d effect size for the IA size measurements across all combinations of raters (see Table 1). Comparing senior rater to junior raters 1, 2, 3 and 4 we obtained Cohen’s values 0.18, 0.07, 0.02 and 0.25, respectively. The measurements for junior raters 1 and 4 had somewhat larger discrepancies to the senior rater’s measurements, while the measurements by junior raters 2 and 3 were well aligned with senior’s ones.

When comparing the measurements between the junior raters we observed that the comparison between junior rater 1 and 4, as well as between junior rater 2 and 3, yielded a rather low Cohen’s d value of 0.06. Other combinations of junior raters yielded Cohen’s d values between 0.12 and 0.24 (see Table 1).

The ICC2 analysis, as outlined by Koo et al.17, for inter-rater variability indicates good agreement (0.75–0.90) or excellent agreement (above 0.9) between junior raters and the senior rater (0.89, 0.89, 0.91, and 0.91 for raters 1–4, respectively). The ICC2 analysis based on the rupture vs. unruptured IA groups reveals excellent agreement for all the raters in the unruptured group (0.92, 0.92, 0.95, and 0.96 for raters 1–4, respectively) and good agreement for the ruptured group (0.82, 0.83, 0.88, 0.85 for raters 1–4, respectively). Note that throughout this paper, we adhere to the interpretation guidelines for the ICC2 values as provided by Koo et al.17.

Intra-rater analysis

For the intra-rater analysis, each junior rater conducted repeated measurements on a set of 126 intracranial aneurysms (IAs), while the senior rater undertook the same for 63 IAs. To prevent measurement memorization effects, cases were anonymized using random names. The order of assessment was randomized in the first and second assessment round, performed with a three weeks break.

The intra-rater variability analysis is provided through scatter and Bland-Altman plots for each rater shown in Fig. 3. Junior rater 1 exhibited an average intra-rater difference of 0.23 mm and 0.08 mm, with standard deviations of 1.67 mm and 3.8 mm for unruptured and ruptured cases, respectively. Junior rater 2 demonstrated mean differences of 0.05 mm and − 0.19 mm, with standard deviations of 1.65 mm and 3.07 mm for unruptured and ruptured groups. Junior rater 3 showed mean differences of 0.35 mm and − 0.09 mm, with standard deviations of 2.68 mm and 5.12 mm for unruptured and ruptured groups, respectively. Junior rater 4 achieved differences of 0.34 mm and − 0.31 mm, with standard deviations of 1.87 mm and 2.72 mm for unruptured and ruptured groups, respectively. The senior rater exhibited differences of 0.29 mm and 0.01 mm, with standard deviations of 1.23 mm and 1.73 mm for unruptured and ruptured groups, respectively.

The intra-rater Cohen’s d analysis revealed low values for all raters (0.06, 0.03, 0.07, 0.01, and 0.07 for Junior raters 1, 2, 3, 4, and the senior rater, respectively). The analysis indicated generally smaller Cohen’s d values for the stable group (0.01, 0.07, 0.04, 0.02, and 0.01 for Junior raters 1, 2, 3, 4, and the senior rater, respectively) compared to the ruptured group (0.17, 0.03, 0.10, 0.02, and 0.03 for Junior raters 1, 2, 3, 4, and the senior rater, respectively).

The intra-rater ICC2 variability analysis demonstrates good agreement (0.75–0.90) or excellent agreement (above 0.9) for all raters (0.91, 0.91, 0.88, and 0.93 for junior raters 1–4, respectively, and 0.94 for the senior rater). The ICC2 analysis, based on the rupture vs. unruptured group, indicates excellent correlation for all junior raters in the unruptured group (0.96, 0.94, 0.91, and 0.95 for junior raters 1–4, respectively) and good correlation for the ruptured group (0.81, 0.87, 0.85, 0.89 for junior raters 1–4, respectively). The senior rater achieved excellent correlation for both groups (0.96 for the unruptured group and 0.91 for the ruptured group).

Validation of computerized measurements

The automated measurements were compared to manual measurements, and the agreement and consistency can be observed from the scatter and Bland-Altman plots in Fig. 4 for each rater versus the automated approach. The results indicated that junior raters 1, 2, 3, and 4 had mean differences of 2.44 mm, 2.82 mm, 2.36 mm, and 3.05 mm for the unruptured group and 3.01 mm, 3.58 mm, 2.82 mm, and 3.56 mm for the ruptured group, respectively, when compared to the computerized measurements. The corresponding standard deviations were 2.48 mm, 2.64 mm, 2.66 mm, and 2.33 mm for the unruptured group and 3.66 mm, 3.5 mm, 3.12 mm, and 3.39 mm for the ruptured group, respectively. For the senior rater, mean differences of 3.32 mm and 3.57 mm were achieved for the unruptured and ruptured groups, respectively, with standard deviations of 2.32 mm and 3.55 mm for the unruptured and ruptured groups, respectively.

To further evaluate the mean disparity between measurements conducted by the senior rater and computerized measurements, we display box-and-whisker plots in Fig. 5 that illustrate the distribution of these measurements and associate the corresponding measurements via connecting lines. The consistent inclination of the connecting lines on Fig. 5 implies a variance between the computerized measurements and those conducted by the senior rater. This corroborates the findings from the Bland-Altman plots (see Fig. 4), indicating a systematic bias between the senior rater and computerized measurements (3.32 and 3.57 for unruptured and ruptured aneurysms, respectively).

The agreement and consistency between the computerized measurements and the manual measurements was assessed using the ICC. The senior rater demonstrated a robust ICC of 0.90, while the junior raters achieved comparable ICC values of 0.92, 0.88, 0.91, and 0.88, respectively.

Monitoring size over time

For a small group of 10 patients in the dataset, who had been imaged at multiple time-points, we have analyzed the performed manual and computerized measurements over time with the aim to establish the validity of size measurements to identify IA’s morphological status (e.g. growing, stable or shrinking). Figure 6 depicts the obtained size measurements for the 10 cases. With the exception of cases 3 and 10, the disparity between the measurements between the senior and the junior raters is smaller than the SD of the senior rater’s measurements. Notably, the measurements provided by junior raters exhibit more substantial deviations from the senior rater’s measurements in contrast to the computerized measurements.

Discussion

In current clinical practice, the risk of rupture for IAs is closely linked to factors like aneurysm size, its growth rate, and prior instances of subarachnoid hemorrhage (SAH), among others. Well-known predictive models for rupture, such as ELAPSS18 and PHASES10, primarily rely on the size of the IA. Additionally, when surgery is not recommended, regular monitoring of IA shape is advised. If an intracranial aneurysm grows more than 1 mm10, it is generally classified as a growing intracranial aneurysm. It is established that growing IA are more likely to rupture, meaning measurements of baseline and follow-up aneurysms need to be precise and repeatable. This underscores the need for precise and repeatable measurements of baseline and follow-up aneurysm sizes.

However, manual measurements of intracranial aneurysms can vary due to factors like image quality, imaging modality, the experience of the rater, and external elements such as fatigue, night-time conditions, and heavy workload19. Furthermore, the variability in measurements arising from manual annotation that involves subjective judgement, for instance in 2D viewing plane selection and endpoint localization, can either obfuscate the actual changes in size in growing IAs, or lead to false growth indications in stable IAs. In this study we conducted an analysis of the intra-rater variability involving four junior raters and one senior rater.

The examination of inter-rater variability through Bland-Altman plots in Fig. 2, comparing junior raters to the senior rater, revealed that all junior raters exhibited a low mean value (average 0.66 [0.27–0.96] for the nonruptured group and average 0.25 [-0.18–0.78] for the ruptured group). The standard deviation ranged from 0.5 to 2.56 mm for the nonruptured group and from 3.27 to 3.63 mm for the ruptured group. D’Argento et al.20, who also explored inter-rater variability among experienced raters, reported a corresponding standard deviation value of approximately 1.5 mm. This discrepancy is anticipated given the difference in the raters’ experience levels. The agreement among the five raters was assessed through Cohen’s d effect size. According to Cohen’s original work21, effect sizes of 0.2 are considered small, 0.5 medium, and 0.8 large; however, these benchmarks are somewhat arbitrary and should not be strictly adhered to22. In the comparison of raters (see Table 1), the observed effect sizes ranged from 0.06 to 0.24, signifying small effect sizes. This indicates that there is no substantial difference between the raters.

The junior raters exhibited rather large standard deviations in their measurements (avg. 1.96 [1.65–2.68] for unruptured and 3.67 [2.72–5.12] for ruptured group), while the senior rater displayed a substantially smaller standard deviation of 1.23 mm for unruptured and 1.73 for ruptured group. The nearly doubled standard deviation observed in the ruptured group of junior raters compared to senior raters can be attributed to the challenge of distinguishing the aneurysm’s edge from the adjacent blood pool, introducing ambiguity into the measurements. While this discrepancy is expected due to differences in experience between the junior and senior raters, the results underscore that the intra-rater variability for all raters exceeds the threshold set for determining aneurysm growth.

An alternative to manual measurement is the use of deep learning-based segmentation and measurement for IA. The computerized measurement process for IA size involved four main steps: vascular segmentation, selection of the region of interest, isolation of the IA, and measurement of IA size by determining the largest diameter of the isolated dome. By comparing our automatic measurements to manual measurements conducted by a senior rater (who demonstrated an intra-rater standard deviation of 1.23 mm), we observe a standard deviation of 2.32 mm for the unruptured group and 3.55 mm for the ruptured group. Mean differences are 3.32 and 3.57 for the ruptured and unruptured groups, respectively. This pattern mirrors findings from a previous study by Rajabzadeh-Oghaz et al.23, where a mean difference of 2.01 mm was noted between computerized and corresponding manual measurements. This variability may be attributed to the fact that computerized measurements were conducted in 3D space, whereas manual measurements relied on a selected 2D viewing plane, likely introducing inconsistencies.

While we recognize that the standard deviation of automatic artificial intelligence-based algorithms is not yet at the level of a senior rater, it underscores an area that requires improvement. However, the algorithm excels in monitoring changes in IA size, where the emphasis is on aneurysm change rather than standard deviation. We assume that when assessing IA growth, the intelligence-based algorithms will consistently produce a similar deviation at each time point. This consistency can be attributed to the similar IA morphology observed across all time points. To evaluate and compare artificial intelligence-based measurements with human raters, we conducted measurements on a group of 10 patients who underwent angiographic scanning at multiple time points. This examination aimed to assess the level of agreement among junior raters, senior raters, and computerized measurements, with the senior rater’s measurements serving as the established benchmark. Visual observations revealed considerable variability in the measurements of junior raters. In Fig. 6, six instances (cases 1, 2, 5, 6, 8, 9) showed inconsistent IA morphology changes compared to the senior rater. Conversely, computerized measurements were inconsistent in only one, possibly two cases. Specifically, in case 3, computerized measurements indicated growth, while the senior rater classified the aneurysm as stable. In case 10, both the senior rater and the computerized method characterized the aneurysm as stable. However, the computerized measurement indicated a slight decrease in IA size, while the senior rater’s measurements indicated a minor increase. Thus, the assessment of IA change using artificial intelligence-based measurements not only proves robust and repeatable but also surpasses the performance of junior raters, underscoring its superior reliability.

Accurate assessment of intracranial aneurysm growth, often defined by a mere 1 mm change in aneurysm size18, is pivotal. The intricacies of intra- and inter-rater variability, however, can introduce the potential for wrong indication, falsely categorizing an aneurysm’s status as growing, stable or shrinking in follow-up images. Even though the senior raters exhibit lower variability, it remains slightly above the 1 mm threshold for growth indication. Such erroneous determinations hold the grave consequences of both surgical complications and spontaneous rupture. Addressing these concerns, a computerized aneurysm size measurement approach emerges as a reliable and consistent tool for quantifying intracranial aneurysm morphology change. This approach not only aids in precise measurements but also offers an invaluable second opinion. Particularly beneficial for junior raters, computerized approaches like the one proposed can significantly enhance the evaluation of intracranial aneurysms. Furthermore, by embracing computerized measurements, the monitoring of intracranial growth can be effectively shielded from the influences of intra- and inter-rater variability, thus ushering in a new era of reliability and accuracy in clinical decision-making.

Methods

Data

We collected a set of 248 CTA scans from the ASAN medical center, Seoul, Republic of Korea, all of which featured at least one untreated IA. Among these scans, there were 145 male and 90 female patients, with a median age of 57 for males and 51 for females. Among 235 patients assessed, 8 received a single follow-up scan, while one patient underwent two follow-up scans, and another underwent three. The average duration between successive scans was 1340 days, with a range from 547 to 2738 days. These 10 patients underwent follow-up scans to monitor the potential growth of aneurysms throughout their lives.

The CTA acquisitions were conducted on 11 CT scanners, specifically the models GE LightSpeed Pro 16, GE LightSpeed QX/i, GE Optima CT660, GE LightSpeed VCT, GE Discovery CT750 HD, GE HiSpeed CT/I, GE Optima CT660, and GE Revolution CT.

A proficient radiologist with over 15 years of experience, who was not involved as a rater in this study, meticulously analyzed the CTA scans. The analysis involved locating the aneurysms within the scans and determining whether they had previously ruptured based on the patient’s clinical records. The study identified a total of 358 IAs, among which 201 had experienced rupture, while 157 were unruptured. The observed IAs had a median diameter of 3.38 mm, with 52% categorized as small (diameter \(<3\) mm), 35% as medium-sized (3 mm < diameter \(<7\) mm), and 13% as large-sized (diameter \(>7\) mm). Aneurysm sizes were categorized as small, medium, or large using measurements from clinical center reports. None of our raters were involved in generating these original reports, ensuring unbiased assessment.

Importantly, the ruptured IAs present in the dataset were all imaged within a week after the rupture event, and previous research had indicated minimal morphological changes in the aneurysm shape prior to rupture, as compared to their appearance after rupture24. Notably, previously treated aneurysms were not included in this study even though they were present in the dataset.

The study encompassed aneurysms from five distinct anatomical locations. The largest subset of IAs was located at the middle cerebral artery bifurcation, totaling 119 cases. Additionally, there were 69 aneurysms on the anterior communicating artery, 5 on the posterior communicating artery, and 53 on the anterior choroidal artery. In the posterior circulation, 39 cases were identified, while the anterior cerebral artery featured 21 cases. Further details regarding the dataset can be found in Table 2.

Human raters

In this investigation, measurements were conducted by a team consisting of four junior raters and one senior rater. The junior raters, comprising young doctors or students in the final years of their medical studies, lacked prior specialized training in IA measurements. This scenario simulated the conditions typically encountered by radiologists at the outset of their specialization, as formal training for IA size measurement is generally not provided, based on our experience. Nonetheless, to ensure consistency in this study, our senior rater, possessing over 15 years of experience, conducted an instructional session for the junior raters before commencing with the measurements. The initial segment of the session encompassed a comprehensive overview of general IA morphology, definition of IA neck, potential case variations, fundamental aspects of the CTA modality, and the fundamental principles underlying the adjustment of window and width settings for obtaining accurate measurements. The subsequent phase of training consisted of live demonstrations illustrating the localization of endpoints and the extraction of the IA size measurement, all in diverse measurement scenarios and with the utilization of the designated software25. During this phase, the raters were introduced the dataset without revealing any particulars about which cases were repetitions or involved multiple scans from the same patients. Subsequently, all junior raters participated in a practical training session supervised by the senior rater. This hands-on session allowed them to seek clarification by posing questions while conducting the IA size measurements. Moreover, if uncertain, they were given the option to skip a particular aneurysm, and to later engage in a follow-up session with the senior rater for clarification. During this session, the focus was on refining their ability to identify the CTA slice that best visualizes the aneurysm, although the senior rater refrained from assisting in localizing the endpoints needed for the size measurement or confirming measurement accuracy.

Manual measurement protocol

All CTA imaging data for the cases underwent anonymization and were stored on a single computer equipped with an integrated Digital Imaging and Communications in Medicine (DICOM) viewer25 capable of performing the requisite measurements. To ensure any potential bias stemming from the use of different workstations was eliminated, uniformity was maintained as all raters employed the identical setup. The data for each cases encompassed source CTA scan, patient gender and age information, coordinates denoting the global location of the aneurysm, and the aneurysm’s status (ruptured or unruptured). To obviate software-related divergences in image assessment among raters, exclusive utilization of the provided DICOM viewer was mandated.

A standardized measurement protocol was adhered to by each rater. Initially, the DICOM image was loaded into the software using drag-and-drop actions. While raters were instructed to solely employ 1 mm sliced images for performing measurements, the option to examine 5 mm sliced images was available upon preference. Subsequently, the rater input coordinates specifying the global location of the aneurysm, which consequently adjusted the software’s field of view to the approximate aneurysm position. Once the appropriate 3D volume view slice and angle were selected, the rater initiated the measurement of the intracranial aneurysm by holding down the left mouse button, thereby selecting the first endpoint. The second endpoint was recorded when the left mouse button was released. Next to the line connecting the two endpoints, the measured distance between the two was displayed and automatically copied to the clipboard. In the event that a rater deemed the IA measurement acceptable, they proceeded to paste the corresponding value into the Microsoft Excel table of measurements.

Computerized measurement

The computerized measurement process for IA size involved four main steps: (1) vascular segmentation, (2) selection of the region of interest (ROI), (3) isolation of the IA, and (4) measurement of IA size by determining the largest diameter of the isolated dome. In steps 1 and 3, trained and validated models from prior studies were utilized26,27. No further training was conducted specifically for this study. Hence, in this section, we provide a condensed overview of the methodology and findings.

For vascular segmentation, we employed a self-adapting framework named nnU-Net28. The preprocessing was fully automated following the nnU-Net pipeline. Initially, all cases underwent cropping based on a minimal bounding box encapsulating foreground intensity values (i.e., CTA intensity \(>-1024\)). This reduction in computational load was achieved without loss of relevant information. The CTA’s intensity values were resampled using third-order spline interpolation, while resampling of associated vessel masks employed nearest neighbor interpolation. Normalization was applied to all non-zero voxels by mean subtraction and division by standard deviation. Given our high-resolution 3D scan datasets, we configured the nnU-Net architecture as “3dfullres” to deploy a 3D U-Net CNN model29. In this encoder-decoder architecture, downsampling in the encoder utilized convolution layers with a stride of 2, while upsampling in the decoder employed transposed convolutions with a stride of 2. The nnU-Net framework automatically selected patches of size 96\(\times \)160\(\times \)160 and a batch size of 2. The Adam optimizer was employed for 150 epochs with an initial learning rate of \(0.9 \times 10^3\). A training and validation dataset of 210 CTA cases from the same dataset were used. The mean Dice similarity coefficient (DSC) was reported and used for further analysis across all test folds (trained using 5 folds), yielding a mean DSC of 0.91 for the nnUnet model trained and tested on the 210 CTAs. Following the nnUnet vessel segmentation, the marching cubes and the smooth non-shrinking algorithms30,31 were applied so as to reconstruct corresponding 3D surface mesh of the aneurysm and surrounding vessels.

Extraction of the ROI on the vascular mesh surrounding the particular IA was based on the coordinates of each IA’s center, as previously annotated by a radiologist. The nearest point to the global IA coordinates on the vascular segmentation served as the starting point to identify 5000 mesh points closest according to geodesic distance. This strategy ensured the inclusion of proximal vessels connected to the IA, while excluding nearby peripheral vessels. While the selected number of 5000 connected points sufficed for all the aneurysms within this dataset, the number would likely need to be increased in case of giant aneurysms.

Isolation of the IA was performed by a geometric classifier learning approach proposed in a prior study27. The approach was trained and validated on an expanded dataset, utilizing the publicly available dataset named IntrA32 for this purpose. The IntrA consists of 103 3D models of entire brain vessels extracted from the MRA scans, featuring 116 IAs. Surface meshes from the IntrA dataset were directly employed without reprocessing. The procedure for IA detection and isolation was not explicitly detailed in the paper.

For our purposes a skilled neurosurgeon manually isolated the aneurysm from surrounding vessels by delineating a closed curve on each extracted surface mesh. The closed curve was annotated as an ordered sequence of points on the surface mesh, with the last point connecting the first to obtain a closed curve. The line segments connecting the two point in a sequence where projected to the surface mesh according to smallest geodesic distance, thus yielding the final closed curve isolating the mesh points on either side. Those points closest to IA center were labeled as the aneurysm, while the other as vessel points. Using the above procedure the surgeon’s flexibility in annotating the points and the closed curve was not confined to a specific aneurysm neck shape. The obtained manual IA isolations were used for training and validation of a multi-layer neural network (MNN) point classification model named PointNet++33. The achieved true positive rate (TPR) for the point classification task on the IntrA dataset was 0.96. The obtained point classication model was then applied to the surface mesh in the CTA-extrated ROI.

Automated computation of IA size measurement involved finding the maximum distance (MaxLen) between two points of the isolated IA dome mesh. Notably, the MaxLen cannot be directly compared to rater measurements of IA size due to its consideration of the entire 3D space as opposed to a single 2D slice, potentially leading to systematially larger, but consistent measurements.

Evaluation metrics

In this study, we employed various data visualization techniques to analyze intra- and inter-rater differences, assess the correlation between raters using the interclass correlation score, and establish trends in measurement comparison through box plots enhanced with visual connections.

A fundamental technique in analytical chemistry and biomedicine, the Bland–Altman plot34 or difference plot, was utilized for evaluating the concordance between distinct assays. The Bland-Altman plot enables the identification of potential biases in mean differences and estimation of the range within which 95% of the disparities between the second and first methods reside. Both unit differences and percentage differences plots were employed to scrutinize the data. Importantly, the Bland-Altman plot method solely delineates the extent of agreements and refrains from rendering judgments about the acceptability of these ranges.

To quantify the correlation among all raters, the interclass correlation score (ICC)35 was harnessed. We adopted the implementation provided by Pingouin36. As we utilized a fixed group of k raters assessing each target, the application of ICC to a broader rater population is not applicable. Although we eliminated mean differences among raters, we retained sensitivity to interactions. This customized modification of the ICC score is denoted as ICC2 in Pingouin.

In the context of comparing two measurement groups, a comparative strategy involved plotting two adjacent box plots. Notably, these box plots were augmented with visual connections that provide insights into measurement trends, thereby facilitating the estimation of prevailing tendencies between measurements.Č

Data availibility

The data used in this study are available upon a reasonable request to the corresponding author.

Change history

06 August 2024

A Correction to this paper has been published: https://doi.org/10.1038/s41598-024-69217-6

References

Dimmick, S., Jones, M., Steinfort, B., Pines, C. & Faulder, K. Accuracy and interobserver reliability of three-dimensional rotational angiography versus mathematical models for volumetric measurement of intracranial aneurysms. J. Clin. Neurosci. 16, 1195–1198 (2009).

Forbes, G., Fox, A. J., Huston, J., Wiebers, D. O. & Torner, J. Interobserver variability in angiographic measurement and morphologic characterization of intracranial aneurysms: a report from the international study of unruptured intracranial aneurysms. Am. J. Neuroradiol. 17, 1407–1415 (1996).

Suh, S. H., Cloft, H. J., Huston, J., Han, K. H. & Kallmes, D. F. Interobserver variability of aneurysm morphology: Discrimination of the daughter sac. J. Neurointervent. Surg. 8, 38–41 (2016).

Algra, A. M. et al. Procedural clinical complications, case-fatality risks, and risk factors in endovascular and neurosurgical treatment of unruptured intracranial aneurysms: A systematic review and meta-analysis. JAMA Neurol. 76, 282–293 (2019).

Villablanca, J. P. et al. Natural history of asymptomatic unruptured cerebral aneurysms evaluated at ct angiography: growth and rupture incidence and correlation with epidemiologic risk factors. Radiology 269, 258–265 (2013).

Mehan, W. A. et al. Unruptured intracranial aneurysms conservatively followed with serial ct angiography: could morphology and growth predict rupture?. J. Neurointervent. Surg. 6, 761–766 (2014).

Inoue, T., Shimizu, H., Fujimura, M., Saito, A. & Tominaga, T. Annual rupture risk of growing unruptured cerebral aneurysms detected by magnetic resonance angiography. J. Neurosurg. 117, 20–25 (2012).

Brinjikji, W. et al. Risk factors for growth of intracranial aneurysms: A systematic review and meta-analysis. Am. J. Neuroradiol. 37, 615–620 (2016).

Korja, M., Lehto, H. & Juvela, S. Lifelong rupture risk of intracranial aneurysms depends on risk factors: A prospective finnish cohort study. Stroke 45, 1958–1963 (2014).

Greving, J. P. et al. Development of the phases score for prediction of risk of rupture of intracranial aneurysms: a pooled analysis of six prospective cohort studies. Lancet Neurol. 13, 59–66 (2014).

Wong, S. C., Nawawi, O., Ramli, N. & Abd Kadir, K. A. Benefits of 3d rotational dsa compared with 2d dsa in the evaluation of intracranial aneurysm. Acad. Radiol. 19, 701–707 (2012).

Kawashima, M. et al. Three-dimensional digital subtraction angiography vs two-dimensional digital subtraction angiography for detection of ruptured intracranial aneurysms: a study of 86 aneurysms. Neurol. India 53, 287 (2005).

Maldaner, N. et al. Interrater agreement in the radiologic characterization of ruptured intracranial aneurysms based on computed tomography angiography. World Neurosurg. 103, 876–882 (2017).

Wostrack, M. et al. Interobserver variability in the characterization of giant intracranial aneurysms with special emphasis on aneurysm diameter and shape. Acta Neurochir. 157, 1859–1865 (2015).

Piccinelli, M. et al. Automatic neck plane detection and 3d geometric characterization of aneurysmal sacs. Ann. Biomed. Eng. 40, 2188–2211 (2012).

Xiang, J. et al. Aview: An image-based clinical computational tool for intracranial aneurysm flow visualization and clinical management. Ann. Biomed. Eng. 44, 1085–1096 (2016).

Koo, T. K. & Li, M. Y. A guideline of selecting and reporting intraclass correlation coefficients for reliability research. J. Chiropr. Med. 15, 155–163 (2016).

Backes, D. et al. Elapss score for prediction of risk of growth of unruptured intracranial aneurysms. Neurology 88, 1600–1606 (2017).

Waite, S. et al. Tired in the reading room: The influence of fatigue in radiology. J. Am. Coll. Radiol. 14, 191–197 (2017).

D’Argento, F. et al. Intra-and inter-observer variability in intracranial aneurysm segmentation: comparison between ct angiography (semi-automated segmentation software stroke vcar) and digital subtraction angiography (3d rotational angiography). Radiol. Med. (Torino) 126, 484–493 (2021).

Cohen, J. Statistical power analysis for the behavioral sciences, Rev (Lawrence Erlbaum Associates Inc, 1977).

Thompson, B. Effect sizes, confidence intervals, and confidence intervals for effect sizes. Psychol. Sch. 44, 423–432 (2007).

Rajabzadeh-Oghaz, H. et al. Computer-assisted three-dimensional morphology evaluation of intracranial aneurysms. World Neurosurg. 119, e541–e550 (2018).

Rahman, M. et al. Unruptured cerebral aneurysms do not shrink when they rupture: Multicenter collaborative aneurysm study group. Neurosurgery 68, 155–161 (2011).

Kos, E. Grafični vmesnik za računalniško-podprto [!] analizo angiografskih slik z modeli globokega učenja: magistrsko delo: magistrski študijski program druge stopnje Elektrotehnika. Ph.D. thesis, [E. Kos] (2022). Na spremnem listu tudi nasl. v angl.: Graphical user interface for computer-assisted analysis of angiographic scans based on deep learning models.

Bizjak, Ž., Choi, J. H., Park, W., Pernuš, F. & Špiclin, Ž. Deep geometric learning for intracranial aneurysm detection: Towards expert rater performance. J. NeuroIntervent. Surg. (2023).

Bizjak, Ž., Likar, B., Pernuš, F. & Špiclin, Ž. Vascular surface segmentation for intracranial aneurysm isolation and quantification. In Medical Image Computing and Computer Assisted Intervention–MICCAI 2020: 23rd International Conference, Lima, Peru, October 4–8, 2020, Proceedings, Part VI 23, 128–137 (Springer, 2020).

Isensee, F., Jaeger, P. F., Kohl, S. A., Petersen, J. & Maier-Hein, K. H. nnu-net: a self-configuring method for deep learning-based biomedical image segmentation. Nat. Methods 18, 203–211 (2021).

Ronneberger, O., Fischer, P. & Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Navab, N., Hornegger, J., Wells, W. M. & Frangi, A. F. (eds.) Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015, pp. 234–241 (Springer International Publishing, Cham, 2015).

Larrabide, I. et al. Three-dimensional morphological analysis of intracranial aneurysms: A fully automated method for aneurysm sac isolation and quantification. Med. Phys. 38, 2439–2449 (2011).

Cebral, J. R. & Löhner, R. From medical images to anatomically accurate finite element grids. Int. J. Numer. Meth. Eng. 51, 985–1008 (2001).

Yang, X., Xia, D., Kin, T. & Igarashi, T. Intra: 3d intracranial aneurysm dataset for deep learning. In The IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2020).

Qi, C. R., Su, H., Mo, K. & Guibas, L. J. Pointnet: Deep learning on point sets for 3d classification and segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 652–660 (2017).

Altman, D. G. & Bland, J. M. Measurement in medicine: The analysis of method comparison studies. J. R. Stat. Soc. Ser. D (The Statistician) 32, 307–317 (1983).

Shrout, P. E. & Fleiss, J. L. Intraclass correlations: Uses in assessing rater reliability. Psychol. Bull. 86, 420 (1979).

Vallat, R. Pingouin: Statistics in python. J. Open Source Softw. 3, 1026 (2018).

Author information

Authors and Affiliations

Contributions

A.P. helped with study design and manual measurements. N.Š., Z.P., U.Š. and P.S. helped with manual measurements, study design and implementation. J.H.C and W.P. managed data collection and helped with study design. T.R., N.T. and L.J. helped with writing and editing the manuscript, preparing figures, Ž.Š. helped with writing, editing, study design, figure preparation and founding Ž.B. designed study, helped with data preparation, wrote original manuscript, prepared figures. All authors reviewed manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The original online version of this Article was revised: In the original version of this Article Nina Špegel, Zala Podobnik, Uroš Šinigoj, Petra Skubic, Tina Robič & Nika Tabor were incorrectly affiliated with ‘Medilab Diagnostic Imaging, Vodovodna 100, 1000, Ljubljana, Slovenia’. The correct affiliation is listed here: Faculty of Medicine, University of Ljubljana, Ljubljana, Slovenia.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Planinc, A., Špegel, N., Podobnik, Z. et al. Assessing accuracy and consistency in intracranial aneurysm sizing: human expertise vs. artificial intelligence. Sci Rep 14, 16080 (2024). https://doi.org/10.1038/s41598-024-65825-4

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-024-65825-4

- Springer Nature Limited