Abstract

IgA nephropathy can progress to kidney failure, making early detection important. However, definitive diagnosis depends on invasive kidney biopsy. This study aimed to develop non-invasive prediction models for IgA nephropathy using machine learning. We retrospectively collected demographic characteristics, blood test results, and urine test results of patients who underwent kidney biopsy. The dataset was divided temporally into derivation and validation cohorts. We developed five machine learning models, namely eXtreme Gradient Boosting (XGBoost), LightGBM, Random Forest, Artificial Neural Network, and one-dimensional convolutional neural network (1D-CNN), as well as a logistic regression model. Performance was evaluated using the area under the receiver operating characteristic curve (AUROC), and variable importance was explored using the SHapley Additive exPlanations (SHAP) method. The study included 1268 participants, of whom 353 (28%) were diagnosed with IgA nephropathy. In the derivation cohort, LightGBM achieved the highest AUROC of 0.913 (95% CI 0.906–0.919), which was significantly higher than those of logistic regression, Artificial Neural Network, and 1D-CNN and not significantly different from those of XGBoost and Random Forest. In the validation cohort, XGBoost achieved the highest AUROC of 0.894 (95% CI 0.850–0.935). Key predictors were age, serum albumin, IgA/C3, and urine red blood cells, consistent with existing clinical knowledge. Machine learning may be a valuable non-invasive tool for diagnosing IgA nephropathy.


Introduction

IgA nephropathy (IgAN) is the most common primary glomerulonephritis worldwide and leads to end-stage kidney failure in 30–40% of patients within two decades of diagnosis1. Early detection and timely treatment are essential for favorable outcomes. The clinical course of IgAN is variable, with varying degrees of hematuria and/or proteinuria, which complicates diagnosis based on general laboratory tests2,3. Definitive diagnosis requires kidney biopsy; however, biopsy has several contraindications and carries risks such as significant bleeding4, which in severe cases may require transfusion, arterial embolization, or surgery5. These risks represent a crucial clinical challenge.

The possibility of predicting an IgAN diagnosis before or without kidney biopsy has been discussed6. In particular, non-invasive diagnostic approaches based on blood and urine biomarkers have gained attention. Biomarkers such as microscopic hematuria, persistent proteinuria, serum IgA level, and the serum IgA/C3 ratio have been reported to help distinguish IgAN from other kidney diseases7,8. Although these variables are measurable in routine clinical practice, their diagnostic capability is limited, and they serve primarily as aids to differential diagnosis. Recent studies have emphasized the roles of galactose-deficient IgA1 (Gd-IgA1), Gd-IgA1-specific IgG, and Gd-IgA1-containing immune complexes in IgAN pathogenesis9,10. Elevated serum levels of these markers have been observed in IgAN, suggesting their potential as specific biomarkers11. However, their practical application is limited because their measurement requires advanced equipment that is not available in general medical facilities.

Machine learning, a subset of artificial intelligence, is well suited to analyzing extensive clinical data from electronic health records and developing predictive models12,13. In nephrology, it has been applied to predicting the onset of acute kidney injury14, the prognosis of chronic kidney disease15, and the onset of intradialytic hypotension16, and to assisting in kidney pathological diagnosis17. Diagnostic prediction studies of IgAN have predominantly employed logistic regression8,18,19, a conventional statistical model that assumes a linear relationship between the predictors and the log-odds of the outcome, which can limit predictive performance. Advanced machine learning algorithms, capable of modeling non-linear relationships and complex interactions, could improve predictive performance20. However, the efficacy of machine learning in predicting the diagnosis of IgAN has not been systematically evaluated.
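For reference, a standard logistic regression model can be written as follows, where p is the probability of IgAN given predictors x_1, ..., x_m and β_0, ..., β_m are estimated coefficients (notation introduced here for illustration only). The right-hand side is linear in the predictors, so a non-linear effect or an interaction enters the model only if it is specified explicitly, for example as a spline or product term.

```latex
\log\frac{p}{1-p} = \beta_0 + \sum_{j=1}^{m} \beta_j x_j,
\qquad
p = \frac{1}{1 + \exp\left(-\left(\beta_0 + \sum_{j=1}^{m} \beta_j x_j\right)\right)}
```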

This study aims to develop and validate diagnostic prediction models for IgAN using machine learning, based on patient demographics, blood tests, and urine tests that are readily available in clinical practice. We also aim to evaluate whether machine learning models can provide a non-invasive, accurate, and reliable diagnostic approach for IgAN compared with conventional clinical parameters or conventional statistical models.

Methods

Study design and study participants

This retrospective cohort study was conducted at St. Marianna University Hospital and included all adult patients who underwent native kidney biopsy between January 1, 2006, and September 30, 2022. Patients with inconclusive diagnoses and those with multiple primary diagnoses were excluded. Data were collected from electronic health records. Patients who underwent kidney biopsy between January 1, 2006, and December 31, 2019, were assigned to the derivation cohort, and those who underwent biopsy between January 1, 2020, and September 30, 2022, were assigned to the validation cohort. Details of patient selection for both cohorts are shown in Fig. 1.
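The temporal split amounts to a simple date rule. The following Python sketch assumes each record is a dictionary with a hypothetical `biopsy_date` field holding a `datetime.date`; the cut-off dates are those given above, and the exclusion of inconclusive or multiple diagnoses is assumed to have been applied beforehand.

```python
from datetime import date

# Study period and cut-off taken from the text; "biopsy_date" is a hypothetical field name.
STUDY_START = date(2006, 1, 1)
DERIVATION_END = date(2019, 12, 31)
VALIDATION_END = date(2022, 9, 30)


def temporal_split(records):
    """Assign each biopsy record to the derivation or validation cohort by biopsy date.

    Records dated outside the study period are dropped.
    """
    derivation, validation = [], []
    for rec in records:
        d = rec["biopsy_date"]
        if STUDY_START <= d <= DERIVATION_END:
            derivation.append(rec)
        elif DERIVATION_END < d <= VALIDATION_END:
            validation.append(rec)
    return derivation, validation


# Usage with three hypothetical records.
example = [
    {"id": 1, "biopsy_date": date(2019, 12, 31)},
    {"id": 2, "biopsy_date": date(2020, 1, 1)},
    {"id": 3, "biopsy_date": date(2023, 1, 1)},
]
derivation, validation = temporal_split(example)
```

Splitting by calendar time rather than at random means the validation cohort tests whether the models hold up for patients biopsied after model development.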

Ethics approval

This study was conducted in accordance with the Declaration of Helsinki, the Transparent Reporting of a Multivariable Prediction Model for Individual Prognosis or Diagnosis (TRIPOD) statement21, and the Guidelines for Developing and Reporting Machine Learning Predictive Models in Biomedical Research: A Multidisciplinary View22. The study protocol was approved by the institutional review board of St. Marianna University Hospital (approval number 6025). Because the study was retrospective and involved minimal risk, the requirement for informed consent was waived.

Predictor variables

We used information routinely measured in clinical practice as candidate predictor variables. Baseline data obtained before native kidney biopsy, comprising demographic characteristics, blood tests, and urine tests, were retrospectively collected from electronic health records. Demographic characteristics included age, sex, height, weight, and body mass index. Blood test items comprised white blood cells, hemoglobin, total protein, albumin, blood urea nitrogen, creatinine, uric acid, aspartate aminotransferase, alanine aminotransferase, alkaline phosphatase, lactate dehydrogenase (LDH), creatine kinase (CK), total cholesterol, glucose, hemoglobin A1c, C-reactive protein, immunoglobulin G (IgG), immunoglobulin A (IgA), immunoglobulin M (IgM), complement C3, complement C4, the IgA/complement C3 ratio (IgA/C3), and antinuclear antibodies. Urine test items included the urine protein/creatinine ratio (UPCR) and urine red blood cells (Urine RBC). Urine RBC was scored semi-quantitatively as follows: < 1/high power field (HPF) = 0; 1–4/HPF = 2.5; 5–9/HPF = 7.5; 10–29/HPF = 20; 30–49/HPF = 40; 50–99/HPF = 75; and ≥ 100/HPF = 100.
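Because the Urine RBC score is a step function of the sediment count, it can be encoded as a lookup on the lower bound of each category. The sketch below assumes a numeric count per HPF is available (in practice the sediment report may already give the category), and the function name `urine_rbc_score` is hypothetical.

```python
from bisect import bisect_right

# Lower bounds (cells/HPF) of the categories 1-4, 5-9, 10-29, 30-49, 50-99 and >=100;
# anything below 1/HPF falls into the first score.
_LOWER_BOUNDS = [1, 5, 10, 30, 50, 100]
_SCORES = [0.0, 2.5, 7.5, 20.0, 40.0, 75.0, 100.0]


def urine_rbc_score(rbc_per_hpf: float) -> float:
    """Convert a urine sediment RBC count (cells per HPF) to the semi-quantitative score."""
    if rbc_per_hpf < 0:
        raise ValueError("RBC count cannot be negative")
    # bisect_right counts how many lower bounds are <= the count, i.e. the category index.
    return _SCORES[bisect_right(_LOWER_BOUNDS, rbc_per_hpf)]
```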

Outcome measures

The outcome was a diagnosis of IgA nephropathy, defined as the definitive diagnosis made by nephrologists based on kidney biopsy. IgA nephropathy was labeled as positive (1) and all other diagnoses as negative (0).

Data preprocessing

The number and proportion of missing values for each variable are shown in Supplementary Table S1. Variables with more than 20% missing values were excluded from the analysis. For the remaining variables, missing values were imputed rather than excluding patients with missing data, to avoid the potential bias of a complete-case analysis. Missing values of continuous variables were imputed using the k-nearest neighbor algorithm. The variables were then standardized to a mean of 0 and a standard deviation of 1.
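A minimal sketch of these three preprocessing steps in plain Python follows, with `None` marking a missing value. The number of neighbours (k = 5, the scikit-learn `KNNImputer` default) and the NaN-aware Euclidean distance are assumptions, because the text does not state them. The sketch imputes on the raw scale before standardizing, following the order described above, and returns the means and standard deviations so that the same transformation could be reused on another cohort; whether the authors fitted these statistics on the derivation cohort only is not stated.

```python
import math


def drop_sparse_columns(rows, names, max_missing=0.20):
    """Remove variables whose proportion of missing values (None) exceeds max_missing."""
    n = len(rows)
    keep = [j for j in range(len(names))
            if sum(r[j] is None for r in rows) / n <= max_missing]
    return [[r[j] for j in keep] for r in rows], [names[j] for j in keep]


def nan_euclidean(a, b):
    """Euclidean distance over coordinates observed in both rows, scaled up by
    (total coordinates / shared coordinates), similar to scikit-learn's nan_euclidean."""
    shared = [(x, y) for x, y in zip(a, b) if x is not None and y is not None]
    if not shared:
        return math.inf
    return math.sqrt(sum((x - y) ** 2 for x, y in shared) * len(a) / len(shared))


def knn_impute(rows, k=5):
    """Replace each missing entry with the mean of that variable over the k nearest
    rows (by nan_euclidean on the original data) in which it is observed."""
    out = [list(r) for r in rows]
    for i, row in enumerate(rows):
        for j, value in enumerate(row):
            if value is not None:
                continue
            candidates = sorted(
                (nan_euclidean(row, other), other[j])
                for m, other in enumerate(rows)
                if m != i and other[j] is not None
            )
            donors = [v for d, v in candidates if d < math.inf][:k]
            if not donors:  # no comparable neighbour: fall back to the column mean
                donors = [v for _, v in candidates]
            if donors:
                out[i][j] = sum(donors) / len(donors)
    return out


def standardize(rows):
    """Z-score each column (population SD, as in scikit-learn's StandardScaler);
    returns the scaled rows and the (mean, sd) pairs used."""
    stats = []
    for col in zip(*rows):
        mu = sum(col) / len(col)
        sd = math.sqrt(sum((x - mu) ** 2 for x in col) / len(col)) or 1.0
        stats.append((mu, sd))
    scaled = [[(x - mu) / sd for x, (mu, sd) in zip(r, stats)] for r in rows]
    return scaled, stats
```

Computing distances on the raw scale lets large-valued variables dominate the neighbour search; scaling before imputation is a common alternative, but the sketch keeps the order stated in the text.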

Variable selection

Variable selection can improve model learning and make the results more accurate and robust. We reduced the number of variables to prevent overfitting of the machine learning models and to reduce computational cost. Because model reliability and trustworthiness are particularly important in the medical domain, integrating the results of multiple feature selection methods is preferable to relying on a single method23. First, predictor variables with near-zero variance, defined as variables whose proportion of unique values was less than 5%, were excluded. Then, four variable selection methods were applied to identify subsets of predictor variables: Least Absolute Shrinkage and Selection Operator (LASSO), Random Forest-Recursive Feature Elimination, Random Forest-Filtering, and SelectFromModel with Extra Trees. The final predictor variables for model development were those selected by at least three of the four methods.
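The filtering and voting steps can be sketched as below. Taken literally, a 5% unique-value threshold would also flag low-cardinality variables such as sex or the seven-level Urine RBC score in a cohort of about 1000 patients, yet Urine RBC was retained; the sketch therefore assumes a two-part rule like that of caret's `nearZeroVar`, which additionally requires the most common value to be much more frequent than the second most common (ratio above 95/5, caret's default). The four selection methods themselves are not reproduced, and their outputs are represented by invented lists of variable names.

```python
from collections import Counter


def is_near_zero_variance(values, unique_cut=0.05, freq_cut=95 / 5):
    """Flag a variable as near-zero variance.

    unique_cut: proportion of distinct values below which a variable is suspect (5%, from the text).
    freq_cut: ratio of the most to the second most common value above which it is flagged
    (caret's nearZeroVar default; an assumption here).
    """
    counts = Counter(values).most_common()
    if len(counts) == 1:
        return True  # constant variable
    pct_unique = len(counts) / len(values)
    freq_ratio = counts[0][1] / counts[1][1]
    return pct_unique < unique_cut and freq_ratio > freq_cut


def majority_vote(selections, min_votes=3):
    """Keep variables chosen by at least min_votes of the selection methods."""
    votes = Counter(name for chosen in selections for name in set(chosen))
    return sorted(name for name, n in votes.items() if n >= min_votes)


# Hypothetical outputs of the four selection methods, invented for illustration.
method_outputs = [
    ["age", "albumin", "IgA/C3", "Urine RBC"],
    ["age", "albumin", "Urine RBC"],
    ["age", "IgA/C3", "CK"],
    ["age", "albumin", "Urine RBC", "LDH"],
]
final_predictors = majority_vote(method_outputs)
```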

Model development and evaluation

For model development, five machine learning algorithms, namely eXtreme Gradient Boosting (XGBoost), LightGBM, Random Forest, Artificial Neural Network, and one-dimensional convolutional neural network (1D-CNN), as well as logistic regression, were applied to the derivation cohort data. XGBoost, LightGBM, and Random Forest are tree-based algorithms that combine decision trees through ensemble learning. XGBoost and LightGBM use boosting: decision trees are built sequentially, with each new tree correcting the errors of the preceding ensemble24,25. Random Forest mitigates overfitting by training many decision trees independently on bootstrap samples and aggregating their predictions (bagging)26. Artificial Neural Networks consist of an input layer, hidden layers, and an output layer and can capture complex relationships between inputs and outputs through non-linear activation functions27. 1D-CNNs are neural networks that apply convolutional filters along one dimension to capture local patterns, typically in sequential data such as time series, and have been applied to sequential medical data28. Logistic regression is a generalized linear model widely used for binary classification; it outputs a probability, which is converted into a positive or negative class using a threshold29. Hyperparameters for each model were tuned by Bayesian optimization, with candidate settings trained and validated by five-fold cross-validation repeated five times within the derivation cohort. The tuned hyperparameters for each model are listed in Supplementary Table S2.
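The resampling scheme used for tuning can be illustrated with a plain-Python generator for repeated k-fold splits. Stratification by outcome is an assumption here (the text does not state it), as is dealing shuffled indices round-robin into folds. A tuning loop such as Bayesian optimization would typically average a validation metric over all 25 train/test splits for each candidate hyperparameter setting.

```python
import random
from collections import defaultdict


def repeated_stratified_kfold(labels, n_splits=5, n_repeats=5, seed=0):
    """Yield (repeat, fold, train_idx, test_idx) for repeated stratified k-fold CV.

    Within each repeat, the indices of each class are shuffled and dealt round-robin
    into n_splits folds, so every fold keeps roughly the overall class ratio.
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for i, y in enumerate(labels):
        by_class[y].append(i)
    all_idx = set(range(len(labels)))
    for r in range(n_repeats):
        folds = [[] for _ in range(n_splits)]
        offset = 0
        for y in sorted(by_class):
            idx = by_class[y][:]
            rng.shuffle(idx)
            for k, i in enumerate(idx):
                folds[(k + offset) % n_splits].append(i)
            offset += len(idx)  # continue dealing where the previous class stopped
        for f, test in enumerate(folds):
            train = sorted(all_idx - set(test))
            yield r, f, train, sorted(test)
```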

The performance of the final prediction models was evaluated in both the derivation and validation cohorts using the area under the receiver operating characteristic curve (AUROC) and the area under the precision-recall curve (AUPRC). The 95% confidence interval (95% CI) for each metric was estimated from 1000 bootstrap iterations, each with a unique random seed. Both metrics summarize performance across all classification thresholds; AUROC is insensitive to the prevalence of the outcome, whereas AUPRC focuses on the positive class and is therefore informative under class imbalance. Deep ROC analysis was also performed to assess discriminability in more detail30: the ROC curve was divided into three groups according to the false positive rate, and the normalized group AUROC, mean sensitivity, and mean specificity were calculated for each group. Model calibration was evaluated using calibration plots and the Brier score. Calibration plots compare the observed proportion of positive cases with the mean predicted probability within each quintile of predicted probability. The Brier score, the mean squared difference between predicted probabilities and observed outcomes, reflects both discrimination and calibration.
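The discrimination and calibration metrics above can be computed directly, as in the sketch below. AUROC uses the rank (Mann–Whitney) formulation, AUPRC is estimated here by average precision (a step-wise sum, as in scikit-learn's `average_precision_score`), and the confidence interval uses the percentile bootstrap. The percentile method and the redrawing of resamples that contain only one class are assumptions, since the text specifies only 1000 bootstrap iterations.

```python
import random


def auroc(y_true, scores):
    """AUROC via the Mann-Whitney rank statistic; tied scores get average ranks."""
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    ranks = [0.0] * len(scores)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and scores[order[j + 1]] == scores[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # average 1-based rank of the tie block
        i = j + 1
    n_pos = sum(y_true)
    n_neg = len(y_true) - n_pos
    pos_rank_sum = sum(r for r, y in zip(ranks, y_true) if y == 1)
    return (pos_rank_sum - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg)


def average_precision(y_true, scores):
    """Step-wise AUPRC estimate: sum of (recall increase) x precision over
    thresholds, treating tied scores as a single threshold."""
    pairs = sorted(zip(scores, y_true), key=lambda t: -t[0])
    n_pos = sum(y_true)
    tp = fp = 0
    prev_recall = ap = 0.0
    for idx, (s, y) in enumerate(pairs):
        tp += y
        fp += 1 - y
        if idx == len(pairs) - 1 or pairs[idx + 1][0] != s:
            recall = tp / n_pos
            ap += (recall - prev_recall) * tp / (tp + fp)
            prev_recall = recall
    return ap


def brier_score(y_true, probs):
    """Mean squared difference between predicted probability and observed outcome."""
    return sum((p - y) ** 2 for p, y in zip(probs, y_true)) / len(y_true)


def bootstrap_ci(metric, y_true, scores, n_boot=1000, alpha=0.05, seed=0):
    """Percentile bootstrap CI; resamples lacking either class are redrawn."""
    rng = random.Random(seed)  # one seeded generator; each resample is a fresh draw
    n = len(y_true)
    values = []
    while len(values) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]
        yb = [y_true[i] for i in idx]
        if 0 < sum(yb) < n:
            values.append(metric(yb, [scores[i] for i in idx]))
    values.sort()
    lo = values[int(round(alpha / 2 * n_boot))]
    hi = values[int(round((1 - alpha / 2) * n_boot)) - 1]
    return lo, hi
```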

Model interpretations

The SHapley Additive exPlanations (SHAP) method was used to interpret the models with high diagnostic performance. SHAP provides a unified approach to interpreting model predictions by assigning each variable a consistent and locally accurate attribution value (SHAP value) for each prediction31. Because the SHAP values of all variables sum to the difference between the model output for a given case and the baseline (expected) output, the contribution of each variable to the predicted risk of IgA nephropathy can be assessed case by case.
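The additivity property can be checked on a toy model by computing Shapley values exactly: every subset of variables is enumerated, and absent variables are replaced by a single baseline vector. This brute-force sketch only illustrates the definition; the shap library uses much faster algorithms for tree ensembles (TreeSHAP) and treats absent variables differently from the single-baseline rule used here. The toy model `toy_model` is hypothetical.

```python
from itertools import combinations
from math import factorial


def shapley_values(f, x, baseline):
    """Exact Shapley values of f at x, where absent features take baseline values.

    Enumerates all 2**M coalitions, so it is only practical for a handful of features.
    """
    m = len(x)

    def value(coalition):
        z = [x[j] if j in coalition else baseline[j] for j in range(m)]
        return f(z)

    phi = []
    for i in range(m):
        others = [j for j in range(m) if j != i]
        total = 0.0
        for size in range(m):
            weight = factorial(size) * factorial(m - size - 1) / factorial(m)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


def toy_model(z):
    # Hypothetical model: two linear terms plus an interaction between features 0 and 2.
    return 0.5 * z[0] - 0.3 * z[1] + 0.2 * z[0] * z[2]


phi = shapley_values(toy_model, [1.0, 2.0, 3.0], [0.0, 0.0, 0.0])
```

The interaction term is split equally between the two features involved, and the values sum to `toy_model(x) - toy_model(baseline)`, which is the local accuracy property described above.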

Sensitivity analysis

A sensitivity analysis was performed to examine whether the results depended on the data split. In this analysis, the data were not divided into derivation and validation cohorts; instead, ten-fold cross-validation was applied to the entire dataset, within which hyperparameter tuning, training, and performance evaluation were performed.

Statistical analysis

Continuous variables are presented as mean and standard deviation when normally distributed and as median and interquartile range otherwise. Categorical variables are presented as counts and percentages. Normally distributed continuous variables were compared using Student's t-test, non-normally distributed continuous variables using the Mann–Whitney U test, and categorical variables using the chi-square test. A two-tailed p-value of less than 0.05 was considered statistically significant. Variable selection was performed using the scikit-learn (sklearn) library in Python (version 3.10.12). Models were developed using the sklearn, xgboost, lightgbm, and torch libraries, and hyperparameter tuning, evaluation, and interpretation were conducted using the sklearn, optuna, deeproc, and shap libraries. Statistical analyses were performed using R (version 4.2.2).
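As an example of one of these tests, the Pearson chi-square test for a 2 × 2 table has a closed form with one degree of freedom, whose upper-tail probability equals erfc(√(x/2)). The sketch below is illustrative only (the analyses themselves were run in R); the optional Yates continuity correction mirrors the default of R's `chisq.test` for 2 × 2 tables, and the counts in the usage line are hypothetical.

```python
import math


def chi_square_2x2(a, b, c, d, yates=False):
    """Pearson chi-square test for the 2x2 table [[a, b], [c, d]] (1 degree of freedom).

    Returns (statistic, p-value). With yates=True the continuity correction
    is applied, shrinking |ad - bc| by n/2 but not below zero.
    """
    n = a + b + c + d
    diff = abs(a * d - b * c)
    if yates:
        diff = max(0.0, diff - n / 2)
    stat = n * diff ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    p = math.erfc(math.sqrt(stat / 2))  # upper tail of chi-square with 1 df
    return stat, p


# Hypothetical table: 30/100 positives in one group versus 20/100 in the other.
stat, p = chi_square_2x2(30, 70, 20, 80)
```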

Ethics approval and consent to participate

The study was performed in accordance with the Declaration of Helsinki and Ethical Guidelines for Medical and Health Research Involving Human Subjects. The study was approved by the St. Marianna University Hospital Institutional Review Board (approval number: 6025) which allowed for analysis of patient-level data with a waiver of informed consent.

Results

Patient characteristics

After excluding participants with multiple primary diagnoses or without a definitive diagnosis, 1268 participants were included: 1027 in the derivation cohort and 241 in the validation cohort. Baseline characteristics and outcomes for both cohorts are presented in Table 1. In the derivation cohort, 294 participants (28.6%) were diagnosed with IgA nephropathy, compared with 59 (24.5%) in the validation cohort. Baseline characteristics of the IgA nephropathy and non-IgA nephropathy groups in each cohort are detailed in Supplementary Tables S3 and S4.

Predictor variables

Predictor variables were those selected by at least three of the four feature selection methods (Least Absolute Shrinkage and Selection Operator, Random Forest-Recursive Feature Elimination, Random Forest-Filtering, and SelectFromModel with Extra Trees). In total, 14 variables were selected and included in the machine learning models: age, hemoglobin, total protein, albumin, LDH, CK, C-reactive protein, IgG, IgA, complement C3, complement C4, IgA/C3, Urine RBC, and UPCR (Supplementary Table S5).

Model performance

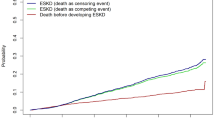

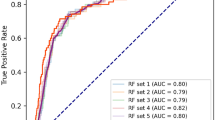

In the derivation cohort, LightGBM achieved the highest AUROC, 0.913 (95% CI 0.906–0.919), which was significantly higher than those of logistic regression, Artificial Neural Network, and 1D-CNN and not significantly different from those of XGBoost and Random Forest (Fig. 2). In the validation cohort, XGBoost had the highest AUROC, 0.894 (95% CI 0.850–0.935), although it did not differ significantly from any other model. In the derivation cohort, the AUPRC of XGBoost was 0.779 (95% CI 0.771–0.794), significantly higher than those of logistic regression, Artificial Neural Network, and 1D-CNN and not significantly different from those of LightGBM and Random Forest (Fig. 3). In the validation cohort, XGBoost also had the highest AUPRC, 0.748 (95% CI 0.630–0.846), but no significant differences were found among the models. Table 2 shows the normalized group AUROC, mean sensitivity, and mean specificity of each model in the derivation and validation cohorts from the deep ROC analysis. XGBoost and LightGBM had favorable normalized group AUROCs in all three false positive rate groups. The calibration plots showed good calibration for all models, with Brier scores ranging from 0.107 to 0.131 (Supplementary Fig. S1).

Model interpretations

SHAP values were calculated to interpret the high-performing XGBoost, LightGBM, and Random Forest models. The SHAP bar plots show the variables with the greatest influence on each model's predictions (Supplementary Fig. S2). Age, albumin, IgA/C3, and Urine RBC were consistently among the top five predictor variables in all three models. Figure 4 shows the SHAP beeswarm plots: higher age was associated with negative SHAP values, that is, a lower predicted likelihood of IgAN, whereas higher albumin, IgA/C3, and Urine RBC values were associated with positive SHAP values. The SHAP dependence plots indicated complex relationships between the variables and the prediction of IgAN, with similar patterns across the models (Supplementary Figs. S3–S5).

Figure 4. SHapley Additive exPlanations (SHAP) beeswarm plots of (a) XGBoost, (b) LightGBM, and (c) Random Forest for the prediction of IgA nephropathy. LDH, lactate dehydrogenase; CK, creatine kinase; IgG, immunoglobulin G; IgA, immunoglobulin A; IgA/C3, immunoglobulin A/complement C3 ratio; Urine RBC, urine red blood cells; UPCR, urine protein to creatinine ratio.

Sensitivity analysis

For the sensitivity analysis, performance was evaluated using ten-fold cross-validation on the entire dataset. LightGBM achieved the highest AUROC, 0.913 (95% CI 0.906–0.921), which was significantly higher than those of logistic regression, Artificial Neural Network, and 1D-CNN and not significantly different from those of XGBoost and Random Forest (Supplementary Fig. S6). The AUPRC of XGBoost was 0.785 (95% CI 0.775–0.809), significantly higher than those of logistic regression, Artificial Neural Network, and 1D-CNN and not significantly different from those of LightGBM and Random Forest (Supplementary Fig. S7). The calibration plots showed good calibration for all models, with Brier scores ranging from 0.106 to 0.129 (Supplementary Fig. S8).

Discussion

In this study, we developed and validated machine learning-based models for predicting the diagnosis of IgA nephropathy. To the best of our knowledge, this is the first study to compare the performance of multiple machine learning models for diagnosing IgA nephropathy. Among the algorithms evaluated, XGBoost, LightGBM, and Random Forest performed well. We confirmed key predictors, including age, serum albumin, the serum IgA/C3 ratio, and urine red blood cells, consistent with previous findings. In addition, the SHAP analysis suggested that the relationships between predictors and IgAN predictions may extend beyond simple linearity, highlighting the importance of capturing such patterns for accurate diagnosis. These findings indicate the potential of machine learning for non-invasive and reliable diagnosis of IgA nephropathy.

Among the models evaluated, XGBoost, LightGBM, and Random Forest showed higher predictive performance in the derivation cohort than conventional logistic regression, the model traditionally used for predicting IgAN8,18,19, although no significant differences among the models were observed in the validation cohort. While logistic regression is highly transparent and interpretable, it assumes a linear relationship between the predictor variables and the log-odds of the outcome, which can limit its performance20,29. The utility of machine learning-based clinical prediction models has recently been discussed, but few studies have addressed the diagnosis of IgAN32,33,34. A study of 155 patients undergoing kidney biopsy showed the utility of machine learning for IgAN prediction, with a Bayesian network achieving an AUROC of 0.83 compared with 0.75 for logistic regression32. Another study of 519 IgAN and 211 non-IgAN patients indicated the potential of Artificial Neural Networks, with AUROCs of 0.881 for the Artificial Neural Network and 0.839 for logistic regression33. However, these studies compared only two models and did not report statistical comparisons with 95% confidence intervals, which limits the generalizability of their results. In a study of 120 patients with primary glomerular diseases, a prediction model combining superb microvascular imaging-based deep learning, radiomics features, and clinical factors achieved an AUROC of 0.884 (95% CI 0.773–0.996)34. However, the sample size was small and the model was not compared with other models, so its robustness remains unclear. Therefore, we evaluated multiple models in a larger patient population than previous studies.
Specifically, we compared six models (five machine learning models and a conventional logistic regression model) in a cohort of 1,268 participants. LightGBM performed best in the derivation cohort, with an AUROC significantly higher than those of the Artificial Neural Network, 1D-CNN, and logistic regression and not significantly different from those of XGBoost and Random Forest. Previous studies evaluating various tabular datasets showed that machine learning methods frequently outperformed logistic regression35,36. Recent studies have also shown that tree-based models such as XGBoost, LightGBM, and Random Forest outperform neural networks in prediction tasks on general tabular data37,38. The superior performance of XGBoost, LightGBM, and Random Forest in predicting IgA nephropathy is consistent with these findings and underscores the potential value of tree-based machine learning models for the non-invasive diagnosis of IgAN. However, no significant differences in model performance were observed in the validation cohort, indicating that further verification of model generalizability is needed.

Using the SHAP method, we examined the “black box” of XGBoost, LightGBM, and Random Forest and identified age, albumin, IgA/C3, and Urine RBC as important predictor variables. SHAP is a widely used technique for explaining the contribution of predictor variables to model outputs31,39,40. Previous studies have reported that microscopic hematuria and/or persistent proteinuria, serum IgA, and IgA/C3 are useful for distinguishing IgA nephropathy from other kidney diseases7,8. Another study using multivariable logistic regression identified age, IgA/C3, albumin, IgA, IgG, estimated glomerular filtration rate (eGFR), and hematuria as independent predictors of IgAN33. The key predictor variables identified in our study are consistent with these studies. We also visualized the relationships between each variable and the model predictions using SHAP dependence plots, which suggested various complex relationships. The similar results across all three models further support the importance and robustness of age, albumin, IgA/C3, and Urine RBC as predictor variables. These insights may improve our understanding of how these variables relate to IgAN.

Our findings have important clinical implications. First, simple, accurate, and non-invasive predictive models for IgAN can be developed using similar methods and may be applicable in clinical practice. Second, our models use variables that are routinely collected in clinical settings, so their adoption requires no additional tests or costs beyond standard clinical care. Third, identifying key variables and visualizing their relationships with IgAN predictions could provide new perspectives for distinguishing IgAN in clinical settings.

This study has several limitations. First, it relied on data from a single center, and external validation at other institutions was not performed. Assessing the external validity of our models in diverse patient populations remains essential. Second, the limited sample size, particularly in the validation cohort, may have resulted in inadequate statistical power, so the performance of each model should be interpreted with caution. Third, our cohort included all patients who underwent kidney biopsy rather than only those with clinical presentations suggestive of IgAN, such as chronic glomerulonephritis. This broad scope requires careful consideration before our models are implemented in clinical settings. Given these limitations, future multicenter studies are needed to assess the generalizability and potential clinical utility of the models.

In conclusion, this study demonstrated the utility of machine learning models based on common clinical data for the diagnostic prediction of IgA nephropathy. The machine learning models XGBoost, LightGBM, and Random Forest showed higher diagnostic performance than a conventional statistical model and were able to capture complex relationships between the predictors and the outcome. These models may serve as a non-invasive and reliable aid for predicting IgAN.

Data availability

The dataset cannot be disclosed because approval has not been received from the Ethics Committee of St. Marianna University Hospital. The code underlying this article will be shared on reasonable request to the corresponding author.

References

Chauveau, D. & Droz, D. Follow-up evaluation of the first patients with IgA nephropathy described at Necker Hospital. Contrib. Nephrol. 104, 1–5 (1993).

Rovin, B. H. et al. Executive summary of the KDIGO 2021 guideline for the management of glomerular diseases. Kidney Int. 100, 753–779 (2021).

Rodrigues, J. C., Haas, M. & Reich, H. N. IgA nephropathy. Clin. J. Am. Soc. Nephrol. 12, 677–686 (2017).

Eiro, M., Katoh, T. & Watanabe, T. Risk factors for bleeding complications in percutaneous renal biopsy. Clin. Exp. Nephrol. 9, 40–45 (2005).

Poggio, E. D. et al. Systematic review and meta-analysis of native kidney biopsy complications. Clin. J. Am. Soc. Nephrol. 15, 1595 (2020).

Tomino, Y. et al. Measurement of serum IgA and C3 may predict the diagnosis of patients with IgA nephropathy prior to renal biopsy. J. Clin. Lab. Anal. 14, 220–223 (2000).

Maeda, A. et al. Significance of serum IgA levels and serum IgA/C3 ratio in diagnostic analysis of patients with IgA nephropathy. J. Clin. Lab. Anal. 17, 73–76 (2003).

Nakayama, K. et al. Prediction of diagnosis of immunoglobulin A nephropathy prior to renal biopsy and correlation with urinary sediment findings and prognostic grading. J. Clin. Lab. Anal. 22, 114–118 (2008).

Kiryluk, K. et al. Aberrant glycosylation of IgA1 is inherited in pediatric IgA nephropathy and Henoch–Schönlein purpura nephritis. Kidney Int. 80, 79–87 (2011).

Magistroni, R., D’Agati, V. D., Appel, G. B. & Kiryluk, K. New developments in the genetics, pathogenesis, and therapy of IgA nephropathy. Kidney Int. 88, 974–989 (2015).

Yanagawa, H. et al. A panel of serum biomarkers differentiates IgA nephropathy from other renal diseases. PLoS ONE 9, e98081 (2014).

Wong, J., Horwitz, M. M., Zhou, L. & Toh, S. Using machine learning to identify health outcomes from electronic health record data. Curr. Epidemiol. Rep. 5, 331–342 (2018).

Hobensack, M., Song, J., Scharp, D., Bowles, K. H. & Topaz, M. Machine learning applied to electronic health record data in home healthcare: A scoping review. Int. J. Med. Inform. 170, 104978 (2023).

Tomašev, N. et al. A clinically applicable approach to continuous prediction of future acute kidney injury. Nature 572, 116–119 (2019).

Kanda, E., Epureanu, B. I., Adachi, T. & Kashihara, N. Machine-learning-based Web system for the prediction of chronic kidney disease progression and mortality. PLOS Digit Health 2, e0000188 (2023).

Lee, H. et al. Deep learning model for real-time prediction of intradialytic hypotension. Clin. J. Am. Soc. Nephrol. 16, 396 (2021).

Jayapandian, C. P. et al. Development and evaluation of deep learning–based segmentation of histologic structures in the kidney cortex with multiple histologic stains. Kidney Int. 99, 86–101 (2021).

Gao, J. et al. A novel differential diagnostic model based on multiple biological parameters for immunoglobulin A nephropathy. BMC Med. Inform. Decis. Mak. 12, 58 (2012).

Han, Q.-X. et al. A non-invasive diagnostic model of immunoglobulin A nephropathy and serological markers for evaluating disease severity. Chin. Med. J. 132, 647 (2019).

Goldstein, B. A., Navar, A. M. & Carter, R. E. Moving beyond regression techniques in cardiovascular risk prediction: Applying machine learning to address analytic challenges. Eur. Heart J. 38, 1805–1814 (2017).

Collins, G. S., Reitsma, J. B., Altman, D. G. & Moons, K. G. M. Transparent reporting of a multivariable prediction model for individual prognosis or diagnosis (TRIPOD): The TRIPOD statement. Ann. Intern. Med. 162, 55–63 (2015).

Luo, W. et al. Guidelines for developing and reporting machine learning predictive models in biomedical research: A multidisciplinary view. J. Med. Internet Res. 18, e323 (2016).

Pfeifer, B., Holzinger, A. & Schimek, M. G. Robust random forest-based all-relevant feature ranks for trustworthy AI. Stud. Health Technol. Inform. 294, 137–138 (2022).

Chen, T. & Guestrin, C. XGBoost: A scalable tree boosting system. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining 785–794 (ACM, 2016).

Ke, G. et al. LightGBM: A highly efficient gradient boosting decision tree. In Advances in Neural Information Processing Systems vol. 30 (Curran Associates, Inc., 2017).

Breiman, L. Random forests. Mach. Learn. 45, 5–32 (2001).

Jain, A. K., Mao, J. & Mohiuddin, K. M. Artificial neural networks: A tutorial. Computer 29, 31–44 (1996).

Kiranyaz, S. et al. 1D convolutional neural networks and applications: A survey. Mech. Syst. Signal Process. 151, 107398 (2021).

Cox, D. R. The regression analysis of binary sequences. J. R. Stat. Soc. Ser. B 20, 215–242 (1958).

Carrington, A. M. et al. Deep ROC analysis and AUC as balanced average accuracy, for improved classifier selection, audit and explanation. IEEE Trans. Pattern Anal. Mach. Intell. 45, 329–341 (2023).

Lundberg, S. M. & Lee, S.-I. A unified approach to interpreting model predictions. In Proceedings of the 31st International Conference on Neural Information Processing Systems 4768–4777 (Curran Associates Inc., 2017).

Ducher, M. et al. Comparison of a Bayesian network with a logistic regression model to forecast IgA nephropathy. BioMed Res. Int. 2013, 1–6 (2013).

Hou, J., Fu, S., Wang, X., Liu, J. & Xu, Z. A noninvasive artificial neural network model to predict IgA nephropathy risk in Chinese population. Sci. Rep. 12, 8296 (2022).

Qin, X., Xia, L., Ma, Q., Cheng, D. & Zhang, C. Development of a novel combined nomogram model integrating deep learning radiomics to diagnose IgA nephropathy clinically. Ren. Fail. 45, 2271104 (2023).

Caruana, R. & Niculescu-Mizil, A. An empirical comparison of supervised learning algorithms. In Proceedings of the 23rd International Conference On Machine Learning—ICML ’06 161–168 (ACM Press, 2006).

Fernández-Delgado, M., Cernadas, E., Barro, S. & Amorim, D. Do we need hundreds of classifiers to solve real world classification problems? J. Mach. Learn. Res. 15, 3133–3181 (2014).

Borisov, V. et al. Deep neural networks and tabular data: A survey. IEEE Trans. Neural Netw. Learn. Syst. 2022, 1–21 (2022).

Grinsztajn, L., Oyallon, E. & Varoquaux, G. Why do tree-based models still outperform deep learning on typical tabular data? Adv. Neural Inf. Process. Syst. https://doi.org/10.48550/arXiv.2207.08815 (2022).

Lv, Z., Cui, F., Zou, Q., Zhang, L. & Xu, L. Anticancer peptides prediction with deep representation learning features. Brief Bioinform. 22, bbab008 (2021).

Thorsen-Meyer, H.-C. et al. Dynamic and explainable machine learning prediction of mortality in patients in the intensive care unit: A retrospective study of high-frequency data in electronic patient records. Lancet Digit Health 2, e179–e191 (2020).

Acknowledgements

We extend our heartfelt gratitude to Ms. Yoshiko Ono and Ms. Mami Ohori for their significant contributions to the collection of patient data. Their dedication and efforts were instrumental in the advancement of our research. We wish to express our sincere gratitude to the Tateishi Science and Technology Foundation (Grant no. 2237009) and the Nishikawa Medical Foundation (Grant no. 202201) for their generous support of our research. Their financial contributions were instrumental in enabling us to pursue this project and have made a significant impact on our ability to advance in our field.

Author information

Contributions

R.N. designed the research plan and analyzed the data. R.N., D.I., and Y.S. participated in the writing of the paper. R.N., D.I., and Y.S. participated in the approval of the final manuscript.

Ethics declarations

Competing interests

R.N. was financially supported by the Tateishi Science and Technology Foundation (Grant ID: 2237009) and the Nishikawa Medical Foundation (Grant ID: 202201).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Noda, R., Ichikawa, D. & Shibagaki, Y. Machine learning-based diagnostic prediction of IgA nephropathy: model development and validation study. Sci Rep 14, 12426 (2024). https://doi.org/10.1038/s41598-024-63339-7

DOI: https://doi.org/10.1038/s41598-024-63339-7

- Springer Nature Limited