Abstract

This research conducts a detailed analysis of a nonlinear mathematical model representing COVID-19, incorporating both environmental factors and social distancing measures. It thoroughly analyzes the model’s equilibrium points, computes the basic reproductive rate, and evaluates the stability of the model at disease-free and endemic equilibrium states, both locally and globally. Additionally, sensitivity analysis is carried out. The study develops a sophisticated stability theory, primarily focusing on the characteristics of the Volterra–Lyapunov (V–L) matrices method. To understand the dynamic behavior of COVID-19, numerical simulations are essential. For this purpose, the study employs a robust numerical technique known as the non-standard finite difference (NSFD) method, introduced by Mickens. Various results are visually presented through graphical representations across different parameter values to illustrate the impact of environmental factors and social distancing measures.


Introduction

The novel coronavirus SARS-CoV-2, which causes COVID-19, first appeared in Wuhan, China, at the end of 2019 and quickly spread across continents, with profound social, economic, and health effects worldwide. The virus spreads mainly through respiratory droplets released when an infected person coughs, sneezes, talks, or breathes. It can cause minor respiratory problems, severe pneumonia, acute respiratory distress syndrome (ARDS), and, in rare cases, death. Severe illness is more common in some populations, such as the elderly and people with underlying medical conditions. For details, we refer to1,2.

Mathematicians have played a pivotal role in studying COVID-19 through diverse strategies. These include developing epidemiological models such as the susceptible, infected, and recovered (SIR) and susceptible, exposed, infected, and recovered (SEIR) models; using statistical analysis and machine learning for data interpretation and forecasting; applying optimization techniques to devise control measures and vaccination strategies; using network theory to identify transmission patterns; and developing mathematical immunology models to predict vaccine efficacy and immune response dynamics. Collaborating across disciplines, mathematicians have worked closely with epidemiologists, virologists, healthcare professionals, and policymakers to integrate mathematical models with empirical data, guiding public health responses and supporting informed decisions to mitigate the spread of the virus. In this context, we refer to3,4,5,6.

Mathematical modeling is among the most effective tools for predicting the prevalence of infectious diseases. Forecasts of infection rates provide valuable insights for formulating disease control strategies. For instance, researchers have done important work in7,8,9. The field of mathematical models for infectious diseases has advanced rapidly in recent decades, a progress attributed in part to the enhanced capacity of researchers to gather relevant data10,11,12.

An essential focus in epidemiology is the global stability of the endemic equilibrium in mathematical models of infectious diseases. Numerous results on global and local stability analysis have been established; we refer to13,14,15,16. Researchers have introduced various methods to establish the stability of equilibrium points, including monotone dynamical systems, the geometric approach, and Lyapunov functions; see the significant contributions in17,18,19. For further contributions on stability, readers may consult20,21. The V–L approach is a mathematical framework used to analyze the dynamics of complex systems, including infectious disease models such as those used to study COVID-19; see22,23. The study of infectious diseases like COVID-19 typically involves mathematical modeling to understand how the disease spreads through populations. Differential equation models, such as compartmental models (e.g., SIR and SEIR models), are commonly used in epidemiology to predict disease dynamics and assess the impact of interventions; see24,25,26.

However, the V–L approach, originating from fields like control theory and nonlinear dynamics, can offer insights into the stability and behavior of dynamical systems. In the context of infectious diseases like COVID-19, it could be used to analyze the stability of disease equilibria, study the effects of parameter variations or interventions on disease dynamics, and understand the resilience or vulnerability of the system to perturbations. Researchers continuously explore new mathematical and computational techniques to enhance our understanding of infectious diseases, and the V–L approach may merit more in-depth investigation in the future. The authors of27 introduced a mathematical model for an infectious disease. They analyzed the stability of both the disease-free and endemic equilibria, considering fixed controls. Additionally, they explored optimal control strategies for the disease, incorporating time-dependent controls. Liao and Wang28 proposed a method for ensuring global stability of the endemic equilibria, employing a combination of the Lyapunov function method and V–L matrices properties. However, this approach faced challenges, such as determining suitable Lyapunov functions and coefficients.

In our study, we propose a modification to the Lyapunov function method, integrating the theory of V–L stable matrices28,29,30. The key distinction lies in our modified method’s repeated use of Lemma 2.4 and Lemma 2.8 (presented in28), along with the reduction of matrix dimensions. This departure from the original method, which sometimes omitted this technique, shifts the analysis from differentiable functions to matrices associated with them. Notably, our modified approach leverages Lemma 2.4, Lemma 2.8, and matrix dimension reduction in each step, simplifying the implementation of higher-dimensional matrices. This reduction in complexity can enhance the computational efficiency compared to the original method28.

Moreover, the authors in27 employed intricate concepts and theorems to establish the global stability of the endemic equilibrium. In contrast, Tian and Wang31 investigated the global stability of cholera epidemic models using a modified approach. They relied on monotone dynamical systems, the geometric approach, and V–L stable matrices. Their models encompassed various functions, transmission pathways, and pathogen growth rates. The modified method presented here offers a straightforward means of proving the global stability of models featuring nonlinear incidence rates. Numerical analysis is performed using the NSFD method introduced by Mickens32. This method has advantages over the usual Euler and RK4 methods; in particular, its preservation of positivity has been proved. For fundamental work on the method, we refer to33,36. For the application of fractional calculus in disease modeling, we consult37,38,39. Fractional calculus has also been extended from HIV and dengue dynamics to COVID-19, incorporating environmental factors and social distancing measures for a comprehensive analysis of disease transmission and control interventions40,41,42,43. Applying fractional calculus to COVID-19 research can give a deeper understanding of the epidemic’s dynamics, leading to more accurate predictive models and better strategies for control and mitigation44,45,46,47.
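The positivity property attributed to the NSFD method can be illustrated on a scalar test problem. The following is a minimal sketch, not the scheme used for the COVID-19 model: it assumes the decay equation \(y^{\prime }=-\lambda y\) and the commonly used denominator function \(\phi (h)=(1-e^{-\lambda h})/\lambda\); the function names and the values of `lam` and `h` are illustrative assumptions.

```python
import math

def euler_step(y, lam, h):
    # Standard forward Euler step for dy/dt = -lam * y
    return y + h * (-lam * y)

def nsfd_step(y, lam, h):
    # Mickens-type step: the step size h in the difference quotient is
    # replaced by the denominator function phi(h) (assumed form).
    phi = (1.0 - math.exp(-lam * h)) / lam
    return y * (1.0 - lam * phi)  # algebraically equal to y * exp(-lam * h)

def iterate(step, y0, lam, h, n):
    # Apply the one-step map n times and collect the trajectory
    ys = [y0]
    for _ in range(n):
        ys.append(step(ys[-1], lam, h))
    return ys

lam, h = 2.0, 1.5  # lam * h = 3 > 1: a deliberately large step
euler = iterate(euler_step, 1.0, lam, h, 5)
nsfd = iterate(nsfd_step, 1.0, lam, h, 5)
print("Euler:", euler)
print("NSFD :", nsfd)
```

With this step size the Euler iterates change sign, while the NSFD iterates stay positive for any \(h>0\), because the update factor \(1-\lambda \phi (h)=e^{-\lambda h}\) lies in \((0,1)\).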

Here, the following questions arise:

1. Why is it timely to study COVID-19 in this way?
2. What makes this study different from previously published COVID-19 studies?
3. Are there any findings in line with previously published studies?

Regarding the first question, COVID-19 demands urgent study because of its global impact, which calls for a deeper understanding to inform effective intervention strategies. Regarding the second question, this study differs from previous COVID-19 research by proposing a modification to stability analysis methods that integrates V–L stable matrices, offering a more efficient approach to analyzing disease models.

Researchers from various fields aim to enhance understanding and support medical researchers in responding effectively to both current and future pandemics. In this context, mathematical researchers use previous data to modify and advance models, providing deeper insights into pandemics. Regarding the third question, our study, in line with previous work, uses compartmental models of SIR and SEIR type to analyze disease dynamics and assess interventions. It focuses on global stability analysis within infectious disease models and on advances in methodology. The NSFD method is employed for the numerical study of disease dynamics and to ensure numerical stability.

We introduce Table 1 summarizing the primary findings from relevant literature sources that we have incorporated into our research. This table offers a concise summary of the literature sources supplying the essential results cited in this paper. By having this table, readers could quickly glance at it to understand the main findings of each literature source without having to dig through the main text for that information. This approach helps to organize and present the information in a more digestible format, enhancing the overall readability and accessibility of the document.

Manuscript organization: Section "Introduction" contains the literature review. Section "Research and data methodology" contains the formulation of the model. In section "Local stability of DFE and EE", we assess the local stability of the model at the disease-free equilibrium (DFE) and endemic equilibrium (EE) points. Section "Global stability of DFE and EE" provides the background needed to establish the global stability of the model. In section "Sensitivity analysis", we present the sensitivity of the model. Section "Numerical results and discussion" contains the numerical analysis and discussion. Section "Conclusion" contains the conclusion.

Research and data methodology

In epidemiology, comprehending the dynamics of infectious diseases is paramount for effective public health interventions and policy formulation. Mathematical models serve as indispensable tools in simulating and predicting disease spread within populations. The model described herein, represented by the system of differential equations in Eq. (2), embodies a compartmental model widely utilized in epidemiology known as the SEIR model. This model has been extended to encompass separate compartments for asymptomatic and symptomatic infections, prophylactic treatment, and the pathogen population.

In this section, we outline the investigation conducted by authors referenced in1 on the subsequent mathematical framework given by the following system.

Model building process

Upon a comprehensive review of the research paper as cited above, we came to the conclusion that their suggested model was not adequately scrutinized and needs further investigation and analysis. We refined the model by reverting the recovered class into the susceptible class due to considerations regarding the weakened immune system, and subsequently made additional adjustments to the model outlined in system (1). We then revisit the updated model given in (2) and establish a detailed analysis for the stability and numerical results by using NSFD method.

Compartmental structure

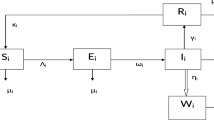

The population is compartmentalized based on disease status and the pathogen population into susceptible (S), exposed (E), symptomatic infectious (\(I_{S}\)), asymptomatic infectious (\(I_{A}\)), recovered (R), and pathogen (P) categories, defined in Table 2. A graphical representation illustrating the proposed model governing the system of differential equations outlined in (2) is shown in Fig. 1.

Each compartment is governed by a differential equation describing the rate of change of individuals or the pathogen population over time. Susceptible individuals can transition to exposed through contact with infected individuals or the pathogen, influenced by transmission dynamics. Exposed individuals progress to infectious states, symptomatic or asymptomatic, at specific rates. Recovery and disease-induced mortality rates determine transitions from infectious to recovered states. Pathogen population dynamics are affected by transmission from infectious individuals and decay processes.

The model integrates parameters governing disease transmission, progression rates, recovery rates, mortality rates, and pathogen dynamics; the nomenclature is given in Table 2. This model construction process allows for a comprehensive representation of infectious disease dynamics, facilitating insights into transmission patterns, intervention strategies, and disease control measures.

The dynamics of the refined model are governed by the system of differential equations given in (2).

In summary, our model offers a robust framework for studying and understanding the complexities of COVID-19 dynamics, providing valuable insights for informing public health policies and interventions.

Model testing approach

To ensure the reliability and applicability of our proposed model, we conducted a rigorous testing process, employing various methodologies to assess its predictive capabilities and robustness. The testing framework comprised the following:

The model’s predictive accuracy has been validated by comparing its simulations with past epidemiological data obtained from1. Through ongoing adjustments to the model’s parameters, we ensured its close alignment with observed trends in disease incidence, prevalence, and other relevant metrics. This process confirmed the reliability of our model in forecasting epidemiological outcomes.

A comprehensive sensitivity analysis has been conducted to evaluate the impact of parameter variations on model outcomes. By systematically varying key parameters within plausible ranges, we assessed the sensitivity of the model to different inputs and identified parameters with the most significant influence on model predictions. This analysis enabled us to better understand the model’s behavior under varying conditions and to identify potential sources of uncertainty.

Finally, to ensure the robustness and credibility of our model, we took into account the feedback received from peer reviewers, which strengthened the validity and reliability of the model and its implications for public health policy and practice.
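The sensitivity analysis described above can be sketched with a normalized sensitivity index, \((\partial f/\partial p)(p/f)\), estimated by central differences. This is an illustration only: the helper `sensitivity_index`, the toy threshold quantity `toy_R0` \(=\beta /(\gamma +\mu )\), and the parameter values are hypothetical and are not the model's \(R_{0}\) or its fitted parameters.

```python
def sensitivity_index(f, params, name, rel_step=1e-6):
    """Normalized sensitivity index (df/dp) * (p / f), via central differences."""
    p0 = params[name]
    dp = rel_step * abs(p0)
    up = dict(params, **{name: p0 + dp})      # parameter nudged upward
    down = dict(params, **{name: p0 - dp})    # parameter nudged downward
    derivative = (f(up) - f(down)) / (2 * dp)
    return derivative * p0 / f(params)

def toy_R0(p):
    # Hypothetical stand-in for a reproduction number: beta / (gamma + mu)
    return p["beta"] / (p["gamma"] + p["mu"])

params = {"beta": 0.3, "gamma": 0.1, "mu": 0.02}  # illustrative values
indices = {k: sensitivity_index(toy_R0, params, k) for k in params}
for name, value in indices.items():
    print(f"{name}: {value:+.4f}")
```

An index of \(+1\) for `beta` means that a 1% increase in `beta` raises the toy quantity by about 1%; negative indices mark parameters whose increase lowers it.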

Schematic diagram of our proposed model (2).

Feasibility of solution

After describing the human population in system (2), it is imperative to demonstrate that, for all \(t\ge 0\), the state variables \(S\), \(E\), \(I_{A}\), \(I_{S}\), \(R\), and \(P\) remain positive. For every \(t\ge 0\), the solution with positive initial data remains positive and bounded. From system (2), one has \(\frac{dN}{dt}=b-\mu N-\left((\delta -\tau _{S})+(\delta -\tau _{A})+\mu _{P}P\right)\) and \(\limsup _{t\rightarrow +\infty } N\le \frac{b}{\mu }\). The feasible region is described as

At this point, (3) is positively invariant with respect to (2). This indicates that all solutions of the system with \((S, E, I_{A}, I_{S}, R, P)\in R^{6}_{+}\) remain in \(\Omega\) and thus the suggested model (2) is epidemiologically well formulated.
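The bound on \(N\) follows from a standard comparison argument. As a sketch, assuming that the disease-related terms subtracted in \(\frac{dN}{dt}\) are nonnegative (an assumption made here for illustration):

```latex
\frac{dN}{dt} \le b - \mu N
\quad\Longrightarrow\quad
N(t) \le N(0)\,e^{-\mu t} + \frac{b}{\mu}\left(1 - e^{-\mu t}\right),
\qquad\text{hence}\qquad
\limsup_{t\to\infty} N(t) \le \frac{b}{\mu}.
```

In particular, if \(N(0)\le \frac{b}{\mu }\), then \(N(t)\le \frac{b}{\mu }\) for all \(t\ge 0\), which is the positive invariance property used above.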

DFE

Setting \(S^{\prime }\), \(E^{\prime }\), \(I^{\prime }_{A}\), \(I^{\prime }_{S}\), \(R^{\prime }\) and \(P^{\prime }\) all equal to zero in system (2) and solving gives the DFE point. In the absence of disease, \(I_{A}=I_{S}=P=0\), which implies that \(E=0\) and \(R=0\) too. Hence, we get the DFE \(E_{0}=(\frac{b}{\mu },0,0,0,0,0)\).

EE

To deduce the EE point, we set \(S^{\prime }\), \(E^{\prime }\), \(I^{\prime }_{A}\), \(I^{\prime }_{S}\), \(R^{\prime }\) and \(P^{\prime }\) in system (2) equal to zero, so that the right-hand sides vanish, and consider

From 3rd and 4th equations in the system (4), we get

and

So

or

or

For simplicity let

which implies that

Furthermore

Let

then

Furthermore

For simplicity let

then

From second equation of system (2), we have

Adding first two equations of system (4) while using \(R^*=\omega E^*\), we get

Solving Eqs. (5) and (6), we get

Computation of \(R_{0}\)

The basic reproduction number, often denoted \(R_{0}\), quantifies the average number of secondary infections produced by one infected person in an entirely susceptible population. It serves as a gauge of whether the infection is likely to propagate within the population. We calculate \(R_{0}\) using the next generation matrix approach.
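The next generation matrix computation can be sketched numerically as the spectral radius of \(FV^{-1}\). The code below is a sketch under stated assumptions: the entries of `F` and `V` are inferred from the infected compartments \((E, I_{A}, I_{S}, P)\) and the combinations \(c_{1}\), \(c_{2}\), \(c_{3}\) used in this paper, with \(\tau _{A}\) and \(\tau _{S}\) assumed to act as shedding rates into the pathogen compartment; all parameter values are hypothetical.

```python
import numpy as np

# Hypothetical parameter values, for illustration only.
p = dict(b=0.5, mu=0.05, beta1=0.002, beta2=0.003, upsilon=0.2, eta=0.6,
         delta=0.01, gamma_S=0.1, gamma_A=0.12, tau_S=0.05, tau_A=0.03, mu_P=0.2)

def next_generation_R0(p):
    S0 = p["b"] / p["mu"]  # susceptible level at the DFE
    c1 = p["mu"] + p["upsilon"]
    c2 = p["mu"] + p["delta"] + p["gamma_S"] + p["tau_S"]
    c3 = p["mu"] + p["delta"] + p["gamma_A"] + p["tau_A"]
    # Ordering x = (E, I_A, I_S, P); new infections enter E only (assumed).
    F = np.zeros((4, 4))
    F[0, 1] = F[0, 2] = p["beta2"] * S0   # human-to-human transmission
    F[0, 3] = p["beta1"] * S0             # environment-to-human transmission
    # Transition matrix (assumed structure).
    V = np.array([
        [c1, 0.0, 0.0, 0.0],
        [-(1 - p["eta"]) * p["upsilon"], c3, 0.0, 0.0],
        [-p["eta"] * p["upsilon"], 0.0, c2, 0.0],
        [0.0, -p["tau_A"], -p["tau_S"], p["mu_P"]],
    ])
    K = F @ np.linalg.inv(V)              # next generation matrix
    return float(np.max(np.abs(np.linalg.eigvals(K))))

R0 = next_generation_R0(p)
print("R0 =", R0)
```

Because only the first row of `F` is nonzero in this assumed structure, the spectral radius reduces to the single entry \((FV^{-1})_{11}\), which splits into a human-to-human part and an environment-to-human part.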

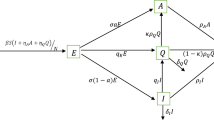

Since the first and fifth equations in system (2) are independent of each other, we have consequently reduced system (2) to the following:

Let \(x=(E,I_{A}, I_{S}, P)^{T},\) then the model can be written as \(\frac{d}{dt}(x)=\mathcal{F}(x)-\mathcal{V}(x)\), where

Suppose F and V represent the Jacobian matrices of \(\mathcal{F}\) and \(\mathcal{V}\) at DFE respectively, then we obtain \(FV^{-1}\) as

where \(c_{1}=\mu +\upsilon\), \(c_{2}=\mu +\delta +\gamma _{S}+\tau _{S}\), and \(c_{3}=\mu +\delta +\gamma _{A}+\tau _{A}\). The reproduction number \(R_{0}\) is the largest eigenvalue of \(FV^{-1}\), given by

Here \(R_{0}^{H}\) denotes the basic reproduction number for humans and \(R_{0}^{P}\) that for pathogens. We record:

and

therefore

Here, \(R_0\) is composed of two parts, which correspond to the two COVID-19 transmission modes. In addition, Fig. 2 presents 3D profiles of \(R_0\) against various parameters to show the trends of the parameters involved in (13).

Local stability of DFE and EE

Theorem 3.1

The DFE is locally asymptotically stable for \(R_{0}<1\).

Proof

The Jacobian matrix of system (2) is given by

The characteristic polynomial of \(J_{0}\) about \((S^{0}, E^{0}, I_S^{0}, I_A^{0}, R^0, P^0)\) is given by

Here

with

It follows from (14) that \(\lambda _{1}=-\mu\), \(\lambda _{2}=-(\mu +\varphi )\) and \(\lambda _{3}=-\mu _P\) are eigenvalues of \(J_{0}\); its remaining eigenvalues are the roots of (15). Furthermore, it is clear that both \(a>0\) and \(b>0\). Given the high sensitivity of the parameters \(\gamma _{S}\), \(\tau _{S}\), and \(\beta _{2}\), we note that the conditions \((\gamma _{S}+\tau _{S})>(\gamma _{A}+\tau _{A})\) and \(S^{0}\beta _{2}<(\delta +\mu +\gamma _{A}+\tau _{A})\) are needed to maintain the local asymptotic stability of the DFE when \(R_{0}<1\). If these conditions fail and \(R_{0}>1\), the DFE is unstable. Under these conditions, we conclude that \(c>0\) and that \(ab-c>0\). By the Routh–Hurwitz criterion, all roots of (15) therefore have negative real parts, so the DFE of system (2) is locally asymptotically stable. This completes the proof. \(\square\)
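The Routh–Hurwitz step used in the proof can be checked numerically. The sketch below assumes that (15) is a monic cubic \(\lambda ^{3}+a\lambda ^{2}+b\lambda +c=0\), for which the criterion reads \(a>0\), \(c>0\), and \(ab-c>0\); the coefficient values are illustrative and are not computed from the model.

```python
import numpy as np

def routh_hurwitz_cubic(a, b, c):
    """True when lambda^3 + a*lambda^2 + b*lambda + c has all roots
    in the open left half-plane (Routh-Hurwitz conditions for a cubic)."""
    return a > 0 and c > 0 and a * b - c > 0

def max_real_part(a, b, c):
    # Largest real part among the roots, computed directly for comparison
    return float(np.max(np.roots([1.0, a, b, c]).real))

# Illustrative coefficients: roots -1, -2, -3 (stable) and an unstable case.
for coeffs in [(6.0, 11.0, 6.0), (1.0, 1.0, 3.0)]:
    print(coeffs, routh_hurwitz_cubic(*coeffs), max_real_part(*coeffs))
```

The criterion and the directly computed roots agree in both cases: the first cubic has only negative real parts, while the second violates \(ab-c>0\) and has a pair of roots with positive real part.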

Local stability analysis of EE at \(E_{*}\)

In this subsection, we establish the asymptotic stability of (2) at \(E_{*}\).

Theorem 3.2

The EE denoted by \(E_{*}\) is locally asymptotically stable whenever \(R_{0}>1\).

Proof

At \(E_{*}\), the Jacobian matrix of (2) can be deduced as

For simplicity, let \(k_{1}=\frac{\beta _{1}P^*}{1+\alpha _{1}P^*}+\frac{\beta _{2}(I_{A}^*+I_{S}^*)}{1+\alpha _{2}(I_{A}^*+I_{S}^*)}\), \(k_{2}=\frac{\beta _{2}S^*}{(1+\alpha _{2}(I_{A}^*+I_{S}^*))^2}\), \(k_{3}=\frac{\beta _{1}S^*}{(1+\alpha _{1}P^*)^{2}}\), \(c_{1}=\mu +\upsilon\), \(c_{2}=\mu +\delta +\gamma _{S}+\tau _{S}\) and \(c_{3}=\mu +\delta +\gamma _{A}+\tau _{A}\). The matrix shown above can be expressed in the following manner:

here in this case

We see that one eigenvalue of the Jacobian matrix \(J_{*}\) is clearly negative, i.e. \(\lambda _{1}=-\mu _{P}<0\). The remaining eigenvalues can be tested by observing that \(\det (J_{*})\) and \(\textrm{Tr}(J_{*})\) have different signs. Now from the above Jacobian matrix \(J_{*}\), we have

Given the high sensitivity of the parameters \(\eta\), \(c_{2}\), and \(c_{3}\), we note that the condition \(\eta >\frac{c_{2}}{c_{2}-c_{3}}\) (assuming that \(c_{2}\ne c_{3}\)) is needed to maintain the local asymptotic stability of the EE when \(R_{0}>1\). If this condition fails, the EE is unstable. In this context, we see that \(\det (J_{*})\) and \(\textrm{Tr}(J_{*})\) have opposite signs, which ensures that the eigenvalues of \(J_{*}\) have negative real parts. This implies that the system (2) is locally asymptotically stable at \(E_{*}\). \(\square\)

Global stability of DFE and EE

In this section, we investigate the V–L stability of matrix A defined in (16) to determine the global stability of the endemic equilibrium \(E_{*}\). The following definitions and preliminary lemmas are necessary prerequisites for this examination.

Definition 4.1

30,48,49,50. Consider a square matrix, denoted as M, endowed with the property of symmetry and positive (negative) definiteness. In this context, the matrix M is succinctly expressed as \(M>0\) or (\(M<0\)).

Definition 4.2

30,48,49,50. We write a matrix \(A_{n\times n}>0(A_{n\times n}<0)\) if \(A_{n\times n}\) is symmetric positive (negative) definite.

Definition 4.3

30,48,49,50. If a positive diagonal matrix \(H_{n\times n}\) exists, such that \(HA+A^{T}H^{T}<0\) then a non-singular matrix \(A_{n\times n}\) is V–L stable.

Definition 4.4

30,48,49,50. If a positive diagonal matrix \(H_{n\times n}\) exists, such that \(HA+A^{T}H^{T}<0\) \((>0)\), then a nonsingular matrix \(A_{n\times n}\) is diagonal stable.

The \(2\times 2\) V–L stable matrix is determined by the following lemma.

Lemma 4.1

30,48,49,50. The matrix \(B=\begin{bmatrix} B_{11} & B_{12} \\ B_{21} & B_{22} \end{bmatrix}\) is V–L stable if and only if \(B_{11}<0\), \(B_{22}<0\), and \(\det (B)=B_{11}B_{22}-B_{12}B_{21}>0\).
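The \(2\times 2\) criterion can be compared numerically with the definition of V–L stability. The following sketch searches for a positive diagonal matrix \(H=\textrm{diag}(1,h)\) such that \(HB+B^{T}H\) is negative definite; fixing the first diagonal entry to 1 loses no generality because \(H\) can be rescaled by a positive factor. The grid search and the two test matrices are illustrative assumptions, not part of the cited lemma.

```python
import numpy as np

def lemma_conditions(B):
    """Sign conditions of the 2x2 lemma: B11 < 0, B22 < 0, det(B) > 0."""
    return bool(B[0, 0] < 0 and B[1, 1] < 0 and np.linalg.det(B) > 0)

def find_scaling(B, grid=np.logspace(-4, 4, 801)):
    """Grid search for h > 0 such that H = diag(1, h) makes
    H B + B^T H negative definite. Returns the first h found, else None."""
    for h in grid:
        H = np.diag([1.0, h])
        Q = H @ B + B.T @ H                 # symmetric by construction
        if np.linalg.eigvalsh(Q).max() < 0:
            return float(h)
    return None

B_stable = np.array([[-2.0, 1.0], [1.0, -3.0]])    # satisfies the lemma
B_unstable = np.array([[-1.0, 3.0], [2.0, -1.0]])  # det < 0, violates it

for B in (B_stable, B_unstable):
    print(lemma_conditions(B), find_scaling(B))
```

For the first matrix the sign conditions hold and a suitable \(h\) is found; for the second, the determinant is negative and no \(h\) on the grid works, in agreement with the lemma.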

Lemma 4.2

30,48,49,50. Consider the nonsingular \(A_{n\times n}=[A_{ij}]\), where \((n\ge 2)\), the positive diagonal matrix \(C_{n\times n}=\textrm{diag}(C_{1},\ldots , C_{n})\), and \(D=A^{-1}\) such that

Then, for \(H_{n}>0\), it ensures that the matrix expression \(HA + A^{T}H^{T}\) is positive definite.

Global stability of DFE

We employ the same methodology as in51 to establish global asymptotic stability.

Theorem 4.5

If \(R_{0}<1\), the system (2) is globally asymptotically stable at \({E}_{0}=\left( \frac{b}{\mu },0,0,0,0,0 \right)\).

Proof

Taking the Lyapunov function corresponding to all dependent classes of the model

Taking derivative with respect to t yields

After utilizing the values derived from system (2) and performing requisite calculations, we obtain:

Using \(m_1=m_2=m_3=m_4=m_5=1\) in the above equation gives

Thus, the system (2) is globally asymptotically stable at \(E_{0}\), whenever \(R_{0}<1\). \(\square\)

Global stability analysis of the EE at \(E_{*}\)

We propose the following Lyapunov function in order to prove the global stability at \(E_{*}\).

Here, \(m_{1}\), \(m_{2}\), \(m_{3}\), \(m_{4}\), \(m_{5}\), and \(m_{6}\) are non-negative constants. By taking the derivative of L with respect to time along the trajectories of the model (8), one has

Here

Substituting these values into \(L^\prime\) gives \(L^\prime =Z(MA+A^{T}M)Z^{T}.\)

Here, \(Z=[S-S^*, E-E^*, I_S-I_S^*, I_A-I_A^*, R-R^*, P-P^*]\), \(M=\textrm{diag}(m_1, m_2, m_3, m_4, m_5, m_6)\), and

It is important to note that matrix \(A_{(n-1)\times (n-1)}\) is derived from matrix A by removing its final row and column. In accordance with the works of Zahedi and Kargar48, Shao and Shateyi49, Masoumnezhad et al.50, Chien and Shateyi30, and Parsaei et al.29, we introduce a series of lemmas and theorems to examine the global stability of the EE at \(E_{*}\).
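The dimension-reduction step described above, removing the final row and column of a matrix and of its inverse, can be sketched as a recursion that stops at \(2\times 2\) matrices. The code only enumerates the matrices visited and applies the \(2\times 2\) sign test at the bottom; it does not track the diagonal multipliers required by the lemmas. The \(6\times 6\) matrix `minus_A` is a hypothetical symmetric positive definite stand-in, not the matrix of the model.

```python
import numpy as np

def tilde(M):
    """Delete the final row and column of M."""
    return M[:-1, :-1]

def reduction_tree(M, label="-A"):
    """Yield (label, matrix) for M and for every matrix reached by repeatedly
    taking tilde(M) and tilde(inverse(M)) until the size is 2x2."""
    yield label, M
    if M.shape[0] > 2:
        yield from reduction_tree(tilde(M), f"tilde({label})")
        yield from reduction_tree(tilde(np.linalg.inv(M)), f"tilde(inv({label}))")

def leaf_ok(B):
    """2x2 test at the bottom of the recursion: positive diagonal and determinant."""
    return bool(B[0, 0] > 0 and B[1, 1] > 0 and np.linalg.det(B) > 0)

rng = np.random.default_rng(0)
X = rng.normal(size=(6, 6))
minus_A = X @ X.T + 6 * np.eye(6)   # hypothetical stand-in for -A

nodes = list(reduction_tree(minus_A))
leaves = [M for _, M in nodes if M.shape == (2, 2)]
print(len(nodes), "matrices visited;", len(leaves), "of size 2x2")
print("all 2x2 tests pass:", all(leaf_ok(M) for M in leaves))
```

Starting from a \(6\times 6\) matrix, the recursion visits two \(5\times 5\), four \(4\times 4\), eight \(3\times 3\), and sixteen \(2\times 2\) matrices, which gives a sense of how many reduced matrices a full argument of this kind has to address.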

In order to make the calculation easier, we present the matrix (16) in more simplified form as given below:

Here, \(a=\frac{\beta _{1}P}{1+\alpha _{1}P}+\frac{\beta _{2}(I_A+I_S)}{1+\alpha _{2}(I_A+I_S)}+\mu\), \(b=\frac{\beta _{2}S^*}{(1+\alpha _{2}(I_A+I_S))(1+\alpha _{2}(I_A^*+I_S^*))}\), \(c=\varphi\), \(d=\frac{\beta _{1}S^*}{(1+\alpha _{1}P)(1+\alpha _{1}P^*)}\), \(e=\frac{\beta _{1}P}{1+\alpha _{1}P}+\frac{\beta _{2}(I_A+I_S)}{1+\alpha _{2}(I_A+I_S)}\), \(f=(\mu +\upsilon )\), \(g=\eta \upsilon\), \(h=(\mu +\delta +\gamma _S+\tau _{S})\), \(i=(1-\eta )\upsilon\), \(j=(\mu +\delta +\gamma _A+\tau _{A})\), \(k=\gamma _S\), \(l=\gamma _A\), \(m=(\mu +\varphi )\), \(n=\tau _S\), \(p=\tau _A\), and \(q=\mu _P\).

Note that all the parameters a, b, c, d, e, f, g, h, i, j, k, l, m, n, p, and q are positive, and that \(a-e\) and \(-a+e\) always equal \(\mu\) and \(-\mu\), respectively. Moreover, all the diagonal elements of the matrix A are negative, which is consistent with stability. A definitive conclusion about the global stability of \(E_{*}\) requires knowing whether all eigenvalues of A have negative real parts, but computing the eigenvalues of A directly is time consuming. Therefore, we introduce the series of lemmas and theorems below to examine whether all the eigenvalues of the matrix A have negative real parts.

Theorem 4.6

The matrix \(A_{6\times 6}\) defined in (17) is V–L stable.

Proof

Obviously \(-A_{66}>0\). Let \(C=-\widetilde{A}\) be the \(5\times 5\) matrix produced by eliminating the final row and column of \(-A\). By Lemma 4.2, it suffices to demonstrate the diagonal stability of the matrices \(C=-\widetilde{A}\) and \(\mathbb{C}=-\widetilde{A^{-1}}\). We now examine the matrices \(C=-\widetilde{A}\) and \(\mathbb{C}=-\widetilde{A^{-1}}\); from (17) we obtain

Here

and

Since

and

Therefore

which implies that

Using the information provided in Lemma 4.2, we assert and demonstrate that \(C=-\widetilde{A}\) and \(\mathbb{C}=-\widetilde{A^{-1}}\) exhibit diagonal stability, thereby establishing the V–L stability of the matrix A. \(\square\)

Lemma 4.3

In accordance with Theorem 4.6, the matrix \(C=-\widetilde{A}\) is affirmed to possess diagonal stability.

Proof

As \(C_{55}>0\), using Lemma 4.2, we must demonstrate the diagonal stability of the reduced matrix \(D=\widetilde{C}\) and its inverse \(\mathbb{D}=\widetilde{C^{-1}}\) to complete the proof. Thus the matrix D can be obtained from the matrix C given in Theorem 4.6, we have

here

and

Since

and

Therefore

\(\square\)

Lemma 4.4

The matrix \(\mathbb{C}=-\widetilde{A^{-1}}\) defined in Theorem 4.6 is diagonal stable.

Proof

Consider the matrix \(\mathbb{C}\) in Theorem 4.6. We see that \(\mathbb{C}_{55}>0\). Using Lemma 4.2, we must demonstrate that the reduced matrix \(E=\widetilde{\mathbb{C}}\) and the matrix \(\mathbb{E}=\widetilde{\mathbb{C}^{-1}}\) exhibit diagonal stability, which completes the proof. The matrix E can be obtained from the matrix \(\mathbb{C}\) given in Theorem 4.6; we have

Here

and

Here

Since

and

Therefore

where \(\det (-A)<0\) and \(\ell >0\). \(\square\)

Lemma 4.5

The matrix D, as described in Lemma 4.3 and denoted by \(\widetilde{C}\), exhibits diagonal stability.

Proof

It is clear that \(D_{44}>0\). By applying Lemma 4.2, our task is to demonstrate the diagonal stability of the reduced matrix \(F=\widetilde{D}\) and its inverse \(\mathbb{F}=\widetilde{D^{-1}}\), thereby completing the proof. Thus from Lemma 4.3, we have

Here

and \(\mathbf{f_{11}}=f h j - b (h i + g j)>0 \ \ (\textrm{see}\ \ \textrm{Proposition}\ \ 4.1\ \ (iv))\), \(\mathbf{f_{12}}=-b (h i + g j)\), \(\mathbf{f_{13}}=b f j\), \(\mathbf{f_{21}}=e h j\), \(\mathbf{f_{22}}=a h j\), \(\mathbf{f_{23}}=b (a - e) j\), \(\mathbf{f_{31}}=e g j\), \(\mathbf{f_{32}}=a g j\), \(\mathbf{f_{33}}=(-a + e) b i + a f j>0 \ \ (\textrm{see}\ \ \textrm{Proposition}\ \ 4.1\ \ (v))\).

Since \(\det (D)>0\), and \(\mathbf{f_{33}}>0\), therefore \(\mathbb{F}_{33}>0\). \(\square\)

Lemma 4.6

The matrix \(\mathbb{D}\), as defined in Lemma 4.3 denoted by \(\widetilde{C^{-1}}\), exhibits diagonal stability.

Proof

It is obvious that \(\mathbb{D}_{44}>0\), by employing Lemma 4.2, our task is to demonstrate the diagonal stability of a modified matrix \(G=\widetilde{\mathbb{D}}\) and its inverse \(\mathbb{G}=\widetilde{\mathbb{D}^{-1}}\). This verification serves as the completion of the proof. Thus from Lemma 4.3, we have

Here

and

Here

Since \(\det (\mathbb{D})>0\), and \(\mathbf{g_{33}}>0\). Therefore \(\mathbb{G}_{33}>0.\) \(\square\)

Lemma 4.7

The matrix denoted as E, which is defined in Lemma 4.4 as \(\widetilde{\mathbb{C}}\), exhibits diagonal stability.

Proof

Since

and

So it is obvious that \(E_{44}>0\). Using Lemma 4.2, our objective is to demonstrate that the reduced matrix \(H=\widetilde{E}\) and the matrix \(\mathbb{H}=\widetilde{E^{-1}}\) exhibit diagonal stability. The matrix H can be obtained from the matrix E given in Lemma 4.4; we have

Here

and

Since \(\det (E)>0\), and \(\mathbf{h_{33}}>0\). Therefore \(\mathbb{H}_{33}>0.\) \(\square\)

Lemma 4.8

The matrix \(\mathbb{E}\) given by \(\widetilde{\mathbb{C}^{-1}}\) in Lemma 4.4 exhibits diagonal stability.

Proof

It is obvious that \(\mathbb{E}_{44}>0\), by applying Lemma 4.2, our task is to establish the diagonal stability of the reduced matrix \(I=\widetilde{\mathbb{E}}\) and its inverse \(\mathbb{I}=\widetilde{\mathbb{E}^{-1}}\). This demonstration serves as the completion of the proof. Thus the matrix I and \(\mathbb{I}\) can be obtained from the matrix \(\mathbb{E}\) given in Lemma 4.4, we have

Here

and

Since \(\det (\mathbb{E})>0\), and \(\mathbf{i_{33}}>0\). Therefore \(\mathbb{I}_{33}>0.\) \(\square\)

Proposition 4.1

Let \(a=\frac{\beta _{1}P}{1+\alpha _{1}P}+\frac{\beta _{2}(I_A+I_S)}{1+\alpha _{2}(I_A+I_S)}+\mu\), \(b=\frac{\beta _{2}S^*}{(1+\alpha _{2}(I_A+I_S))(1+\alpha _{2}(I_A^*+I_S^*))}\), \(f=(\mu +\upsilon )\), \(g=\eta \upsilon\) and \(h=(\mu +\delta +\gamma _S+\tau _{S})\) in (17). If \((\mu +\delta +\gamma _S+\tau _{S})>\frac{\beta _{2}S^*}{(1+\alpha _{2}(I_A+I_S))(1+\alpha _{2}(I_A^*+I_S^*))}\), then the following conditions hold:

- (i) \(fh>bg\),
- (ii) \(afh>\mu bg\),
- (iii) \(afhj>\mu b(hi+gj),\)
- (iv) \(fhj>b(hi+gj),\)
- (v) \(afj>\mu bi\).

Assume that the pairs \(fh\), \(bg\) and \(afh\), \(\mu bg\) are nearly equal, such that \(bg-fh\simeq 0\) and \(\mu bg-afh\simeq 0\).

Proof

(i) Since

as \(0<\eta <1\), so \(\mu +\upsilon >\eta \upsilon ,\) this implies that \(f>g\). Furthermore, for

which implies that

therefore

This implies that

(ii) One can easily see that

and for

from (i), we know that

Therefore

this implies that \(afh>\mu bg.\)

(iii) Since \(a>\mu\) and \(h>b\). This implies that

Now as \((\mu +\upsilon )>(1-\eta )\upsilon\) and \((\mu +\delta +\gamma _{A}+\tau _{A})>(\mu +\delta +\gamma _{S}+\tau _{S}).\) This implies that

or

Also \((\mu +\upsilon )>\eta \upsilon\). Therefore \((\mu +\upsilon )(\mu +\delta +\gamma _{A}+\tau _{A})>\eta \upsilon (\mu +\delta +\gamma _{A}+\tau _{A})\). This implies that

From (18) and (21), we deduce that

(iv) From (i) and (iii), one can easily see that \(fhj>b(hi+gj).\)

(v) Since \(a>\mu\), \((\mu +\upsilon )>(1-\eta )\upsilon\), and for

This implies that

which means that

\(\square\)
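Conditions (i) and (ii) of Proposition 4.1 can be checked numerically for a given parameter set. The following is a minimal sketch: the quantities \(a\), \(b\), \(f\), \(g\), \(h\) follow the definitions in the proposition, while every numerical value (parameters, state values, and the function name `proposition_terms`) is a hypothetical choice for illustration and is not taken from Table 3.

```python
def proposition_terms(p, S_star, P, I_total, I_total_star):
    """Return (a, b, f, g, h) as defined in Proposition 4.1.

    I_total stands for I_A + I_S and I_total_star for I_A^* + I_S^*.
    """
    a = (p["beta1"] * P / (1 + p["alpha1"] * P)
         + p["beta2"] * I_total / (1 + p["alpha2"] * I_total)
         + p["mu"])
    b = p["beta2"] * S_star / ((1 + p["alpha2"] * I_total)
                               * (1 + p["alpha2"] * I_total_star))
    f = p["mu"] + p["upsilon"]
    g = p["eta"] * p["upsilon"]
    h = p["mu"] + p["delta"] + p["gamma_S"] + p["tau_S"]
    return a, b, f, g, h


# Hypothetical values, chosen only to illustrate the check.
params = {
    "beta1": 0.01, "beta2": 0.05, "alpha1": 0.1, "alpha2": 0.1,
    "mu": 0.02, "upsilon": 0.2, "eta": 0.5,
    "delta": 0.01, "gamma_S": 0.1, "tau_S": 0.05,
}
a, b, f, g, h = proposition_terms(
    params, S_star=2.0, P=10.0, I_total=5.0, I_total_star=4.0
)

hypothesis_holds = h > b                      # assumption of the proposition
cond_i = f * h > b * g                        # condition (i)
cond_ii = a * f * h > params["mu"] * b * g    # condition (ii)

print("h > b:", hypothesis_holds)
print("(i)  fh > bg:", cond_i)
print("(ii) afh > mu*bg:", cond_ii)
```

Such a check does not replace the proof; it only confirms that a chosen parameter set lies in the region where the hypothesis \(h>b\) is satisfied.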

Lemma 4.9

The matrix denoted as F and defined as \(\widetilde{D}\) in Lemma 4.5 exhibits diagonal stability.

Proof

It is obvious that \(F_{33}>0\). By Lemma 4.1, we must establish the diagonal stability of both the reduced matrix \(J=\widetilde{F}\) and the matrix \(\mathbb{J}=\widetilde{F^{-1}}\). From Lemma 4.5 we have

Both \(J_{11}>0\) and \(J_{22}>0\); furthermore, \(\det (J)=af>0\) because both \(a\) and \(f\) are positive. Now

Since

Thus using Lemma 4.1, the matrix F is diagonally stable. \(\square\)
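The \(2\times 2\) reductions used in these lemmas can be explored numerically. The sketch below assumes the criterion commonly stated in the Volterra–Lyapunov literature: a real \(2\times 2\) matrix \(A\) is V–L stable if and only if \(a_{11}<0\), \(a_{22}<0\) and \(\det (A)>0\), equivalently if a positive diagonal \(W\) exists with \(WA+A^{T}W\) negative definite. The exact statement and sign convention of Lemma 4.1 are not reproduced in this part of the paper, so they may differ; all function names and the test matrix are hypothetical.

```python
def is_vl_stable_2x2(A):
    """Assumed 2x2 criterion: a11 < 0, a22 < 0 and det(A) > 0."""
    (a11, a12), (a21, a22) = A
    return a11 < 0 and a22 < 0 and a11 * a22 - a12 * a21 > 0


def lyapunov_form(A, w1, w2):
    """Return the symmetric matrix W A + A^T W for W = diag(w1, w2)."""
    (a11, a12), (a21, a22) = A
    off = w1 * a12 + w2 * a21
    return ((2 * w1 * a11, off), (off, 2 * w2 * a22))


def is_negative_definite_2x2(M):
    """A symmetric 2x2 matrix is negative definite iff m11 < 0 and det > 0."""
    (m11, m12), (m21, m22) = M
    return m11 < 0 and m11 * m22 - m12 * m21 > 0


def find_diagonal_weights(A, grid=200):
    """Scan the ratio w2/w1 on a log grid (1e-4 to 1e4) for a valid W."""
    for k in range(-grid, grid + 1):
        w2 = 10 ** (k / 50)
        if is_negative_definite_2x2(lyapunov_form(A, 1.0, w2)):
            return (1.0, w2)
    return None


# Hypothetical test matrix, not one of the matrices of the paper.
A = ((-1.0, 2.0), (-1.0, -1.0))
weights = find_diagonal_weights(A)
print("criterion satisfied:", is_vl_stable_2x2(A))
print("diagonal weights found:", weights)
```

The grid search is only a sanity check on the closed-form criterion; for the symbolic matrices of the lemmas the sign conditions must still be verified analytically.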

Lemma 4.10

The matrix \(\mathbb{F}\), as defined in Lemma 4.5 with \(\widetilde{D^{-1}}\), exhibits diagonal stability.

Proof

It is obvious that \(\mathbb{F}_{33}>0\). By Lemma 4.1, our goal is to establish the diagonal stability of both the reduced matrix \(K=\widetilde{\mathbb{F}}\) and the matrix \(\mathbb{K}=\widetilde{\mathbb{F}^{-1}}\). From Lemma 4.5 we have

Since

Furthermore

Now

here

and

Since

furthermore

Therefore

Thus using Lemma 4.1, the matrix \(\mathbb{F}\) is diagonally stable. \(\square\)

Lemma 4.11

The matrix G given by \(\widetilde{\mathbb{D}}\) in Lemma 4.6 exhibits diagonal stability.

Proof

It is obvious that \(G_{33}>0\). By Lemma 4.1, our goal is to establish the diagonal stability of both the reduced matrix \(L=\widetilde{G}\) and \(\mathbb{L}=\widetilde{G^{-1}}\). From Lemma 4.6 we have

Since

furthermore

Therefore

Now

here

and

Since

furthermore

Therefore

Thus using Lemma 4.1, the matrix G is diagonally stable. \(\square\)

Lemma 4.12

The matrix \(\mathbb{G}\) given by \(\widetilde{\mathbb{D}^{-1}}\) in Lemma 4.6 exhibits diagonal stability.

Proof

It is obvious that \(\mathbb{G}_{33}>0\). By Lemma 4.1, our goal is to establish the diagonal stability of both the reduced matrix \(M=\widetilde{\mathbb{G}}\) and \(\mathbb{M}=\widetilde{\mathbb{G}^{-1}}\). From Lemma 4.6 we have

Since

furthermore

Therefore

Now

here

and

Since

furthermore

Therefore

Thus using Lemma 4.1, the matrix \(\mathbb{G}\) is diagonally stable. \(\square\)

Lemma 4.13

The matrix H given by \(\widetilde{E}\) in Lemma 4.7 exhibits diagonal stability.

Proof

It is obvious that \(H_{33}>0\). By Lemma 4.1, our goal is to establish the diagonal stability of both the reduced matrix \(N=\widetilde{H}\) and the matrix \(\mathbb{N}=\widetilde{H^{-1}}\). From Lemma 4.7 we have

Since

furthermore

Therefore

Now

here

and \(\mathbf{n_{11}}=-a j m q\ell <0,\) \(\mathbf{n_{12}}=-i (d m p - c l q + b m q)\ell <0,\) \(\mathbf{n_{21}}=e j m q\ell >0,\) \(\mathbf{n_{22}}=m (d i p + b i q - f j q)\ell <0\) if \(f j q>d i p + b i q.\)

Since

furthermore

Therefore

Thus using Lemma 4.1, the matrix H is diagonally stable. \(\square\)

Lemma 4.14

The matrix \(\mathbb{H}\) given by \(\widetilde{E^{-1}}\) in Lemma 4.7 exhibits diagonal stability.

Proof

It is obvious that \(\mathbb{H}_{33}>0\). By Lemma 4.1, our goal is to establish the diagonal stability of both the reduced matrix \(P=\widetilde{\mathbb{H}}\) and \(\mathbb{P}=\widetilde{\mathbb{H}^{-1}}\). From Lemma 4.7, one has

Since

furthermore

Therefore

Now

here

where

Since

furthermore

Therefore

Thus using Lemma 4.1, the matrix \(\mathbb{H}\) is diagonally stable. \(\square\)

Lemma 4.15

The matrix I given by \(\widetilde{\mathbb{E}}\) in Lemma 4.9 exhibits diagonal stability.

Proof

It is obvious that \(I_{33}>0\). By Lemma 4.1, our goal is to establish the diagonal stability of both the reduced matrix \(Q=\widetilde{I}\) and \(\mathbb{Q}=\widetilde{I^{-1}}\). From Lemma 4.9 we have

Since

furthermore

Therefore,

Now

here

where

Since

furthermore

Therefore

Thus using Lemma 4.1, the matrix I is diagonally stable. \(\square\)

Lemma 4.16

The matrix \(\mathbb{I}\) given by \(\widetilde{\mathbb{E}^{-1}}\) in Lemma 4.8 exhibits diagonal stability.

Proof

It is obvious that \(\mathbb{I}_{33}>0\). By Lemma 4.1, our goal is to establish the diagonal stability of both the reduced matrix \(T=\widetilde{\mathbb{I}}\) and the matrix \(\mathbb{T}=\widetilde{\mathbb{I}^{-1}}\). From Lemma 4.8 we have

Since

furthermore

Therefore

Now

here

and

Since

furthermore

Therefore

Thus using Lemma 4.1, the matrix \(\mathbb{I}\) is diagonally stable. \(\square\)

Summarizing the preceding discussion, we draw the following conclusion regarding the global asymptotic stability of the EE.

Theorem 4.7

When the value of \(R_{0}\) is greater than 1, the endemic equilibrium \(E_{*}=(S^*, E^*, I_{S}^*, I_{A}^*, R^*, P^*)\) in model (2) exhibits global asymptotic stability within \(\Omega\).

Proof

By the preceding theorem and the sequence of lemmas above, the EE of model (2) is globally asymptotically stable. \(\square\)

Sensitivity analysis

In this section, we conduct a sensitivity analysis to explore how the model responds to variations in parameter values, aiming to identify which parameters significantly influence the transmission of the disease, specifically the reproductive number (\(R_{0}\)). To perform this analysis, we employ the normalized forward sensitivity index method, as outlined in52. This method determines the ratio of the relative change in a variable to the relative change in the corresponding parameter. Alternatively, sensitivity indices can be derived using partial derivatives when the variable is a differentiable function of the parameter.

Therefore, for our proposed model, we present the normalized forward sensitivity index of \(R_{0}\) using the following rule: for a parameter \(p\), the index is \(\frac{\partial R_{0}}{\partial p}\times \frac{p}{R_{0}}\).
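The normalized forward sensitivity index described in this section, the relative change in \(R_{0}\) divided by the relative change in a parameter, can be approximated numerically. The following is a minimal sketch using central differences. The function `toy_R0` is a hypothetical placeholder and is not the \(R_{0}\) of model (2); the parameter values and all names are assumptions made for illustration.

```python
def sensitivity_index(R0, params, name, rel_step=1e-6):
    """Approximate (dR0/dp) * (p / R0) by a central difference in p.

    Assumes params[name] is nonzero, so that the step size is positive.
    """
    p = params[name]
    dp = rel_step * abs(p)
    up = dict(params, **{name: p + dp})
    down = dict(params, **{name: p - dp})
    derivative = (R0(**up) - R0(**down)) / (2 * dp)
    return derivative * p / R0(**params)


def toy_R0(beta, gamma, mu):
    """Hypothetical reproduction number, used only to exercise the routine."""
    return beta / (gamma + mu)


# Hypothetical parameter values.
values = {"beta": 0.3, "gamma": 0.1, "mu": 0.02}
indices = {name: sensitivity_index(toy_R0, values, name) for name in values}

for name, index in indices.items():
    print(f"index of R0 with respect to {name}: {index:+.4f}")
```

A positive index means that \(R_{0}\) increases with the parameter, and a negative index means that it decreases; for the toy expression the index of `beta` is exactly \(+1\), while those of `gamma` and `mu` are \(-\gamma /(\gamma +\mu )\) and \(-\mu /(\gamma +\mu )\).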

Numerical results and discussion

We simulate our results by extending the NSFD method. The scheme is convergent and consistent and has many desirable dynamical properties (see32). Mickens34 established a general NSFD method for coupled systems. Such schemes were developed to avoid the inaccuracies of standard methods such as the Euler and Runge–Kutta methods. Here, consider a problem35 described by

We define \(t_{n}=t_0+nh\), where \(h\) is a positive step size, and let the discretization of \(y\) at \(t_n\) be \(y_n\approx y(t_n)\). Then problem (25) becomes

The procedure (26) is called an NSFD method because:

- In the discretized version (26), the usual denominator \(h\) is replaced by a nonnegative function \(\phi (h)\) such that

$$\begin{aligned} \phi (h)=h+O(h^2),\ \text{as}\ h\rightarrow 0. \end{aligned}$$

- The nonlinear function \(\theta\) is approximated in a nonlocal way.
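Both rules can be seen on a scalar test problem. The following is a minimal sketch that assumes the logistic equation \(y'=y(1-y)\), which is not part of the paper, as a test case; it uses the denominator function \(\phi (h)=1-\exp (-h)\) and the nonlocal approximation \(y^{2}\approx y_{n}y_{n+1}\), and compares the result with the explicit Euler scheme at a large step size. All function names are hypothetical.

```python
import math


def phi(h):
    """Denominator function with phi(h) = h + O(h^2) as h -> 0."""
    return 1.0 - math.exp(-h)


def euler_logistic(y0, h, steps):
    """Explicit Euler for y' = y (1 - y)."""
    ys = [y0]
    for _ in range(steps):
        y = ys[-1]
        ys.append(y + h * y * (1.0 - y))
    return ys


def nsfd_logistic(y0, h, steps):
    """NSFD scheme (y_{n+1} - y_n) / phi(h) = y_n (1 - y_{n+1}).

    The quadratic term is treated nonlocally (y_n * y_{n+1}), so the update
    can be solved explicitly: y_{n+1} = y_n (1 + phi) / (1 + phi * y_n).
    """
    p = phi(h)
    ys = [y0]
    for _ in range(steps):
        y = ys[-1]
        ys.append(y * (1.0 + p) / (1.0 + p * y))
    return ys


# Deliberately large step size to expose the difference between the schemes.
euler = euler_logistic(0.1, 3.0, 20)
nsfd = nsfd_logistic(0.1, 3.0, 20)

print("Euler leaves the interval (0, 1):", max(euler) > 1.0)
print("NSFD stays in (0, 1):", all(0.0 < y < 1.0 for y in nsfd))
```

For initial data in \((0,1)\) the NSFD iterates increase monotonically towards the equilibrium \(y=1\) for any \(h>0\), mirroring the exact solution, whereas the Euler iterates overshoot the equilibrium at this step size.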

The major advantages of the aforesaid method are as follows (see33 for details):

- The NSFD method avoids numerical instabilities without requiring a very small step size to implement the scheme.

- The NSFD method performs as well as or better than a fourth-order Runge–Kutta (RK4) technique most of the time, while its algorithmic complexity is similar to that of the Euler scheme, which gives a significant computational benefit.

- Because the dynamical constraints are maintained, the scheme exhibits good dynamic behavior even for large time increments, which offers a further computational and implementation advantage.

To derive the NSFD algorithm for our model (2), we write \(S^{\prime }\) in difference form as

where \(\zeta (h)=1-\exp (-h)\) (see36), so that \(\zeta (h)\rightarrow 0\) as \(h\rightarrow 0\). After rearranging the terms, we get the algorithm in simplified form as

From the numerical scheme (30), the positivity of the NSFD method can clearly be observed. We use this method with the numerical values given in Table 3 for the simulations and present our results graphically.
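The positivity property can be illustrated on a single equation of susceptible type. The sketch below assumes an equation of the form \(S'=\Lambda -aS\), where \(a\) plays the role of the loss rate defined in Proposition 4.1 and \(\Lambda\) is a hypothetical constant inflow; it is not the full right-hand side of model (2), and the numerical values and function names are assumptions. The loss term is evaluated at the new time level, so the update is a ratio of nonnegative quantities.

```python
import math


def zeta(h):
    """Denominator function zeta(h) = 1 - exp(-h)."""
    return 1.0 - math.exp(-h)


def nsfd_step(S_n, inflow, a_n, h):
    """One step of (S_{n+1} - S_n) / zeta(h) = inflow - a_n * S_{n+1}.

    Solving for S_{n+1} gives a quotient with nonnegative numerator and
    positive denominator, so S_{n+1} >= 0 whenever S_n >= 0.
    """
    z = zeta(h)
    return (S_n + z * inflow) / (1.0 + z * a_n)


# Hypothetical values: constant inflow and loss rate, large step size.
inflow, a, h = 0.5, 0.25, 5.0
trajectory = [10.0]
for _ in range(100):
    trajectory.append(nsfd_step(trajectory[-1], inflow, a, h))

print("all iterates positive:", all(s > 0 for s in trajectory))
print("final value:", trajectory[-1], "equilibrium inflow / a:", inflow / a)
```

With constant coefficients the fixed point of the update is \(\Lambda /a\), the equilibrium of the continuous equation, independently of the step size.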

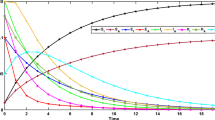

The population dynamics are presented graphically in Fig. 3 using the numerical values of Table 3, with \(\alpha _{1}=0.1\) and \(\alpha _2=0.1\).

Figure 3. The population dynamics of the proposed model (2).

Figure 3 displays the combined dynamics of all compartments for these parameter values.

Case I

For \(\alpha _1=0.05, \ 0.10, \ 0.15\) and fixed \(\alpha _2=0.10\), the population dynamics of the various classes are shown in Figs. 4, 5, 6, 7, 8, 9.

Figure 4. Dynamics of the susceptible class of the proposed model (2).

Figure 5. Dynamics of the exposed class of the proposed model (2).

Figure 6. Dynamics of the symptomatic infected compartment of the proposed model (2).

Figure 7. Dynamics of the asymptomatic infected compartment of the proposed model (2).

Figure 8. Dynamics of the recovered class of the proposed model (2).

Figure 9. Dynamics of the compartment of organism that causes disease of the proposed model (2).

Figure 4 shows that, as time increases from 0 to 100 days, the number of susceptible people decreases quickly over the first ten days, while the number of exposed people rises quickly during the first 20 days as a result of contact with infected people (Fig. 5). The symptomatic and asymptomatic infected classes likewise increase rapidly during the first 20 days (see Figs. 6 and 7). The pathogen concentration also increases, as shown in Fig. 9, and the recovered class rises smoothly (Fig. 8). As the infectious environment ratio increases during the first 25 days, the spread of COVID-19 through interactions in homes, schools, workplaces, restaurants, or during commutes increases; after 25 days, a decline can be seen in the transmission dynamics. When \(\alpha _1=0.05\), the chance of infection is high; increasing it to \(\alpha _1=0.10\) lowers that chance, and for \(\alpha _1=0.15\) the chance of infection through contact with an infectious environment, such as institutions, markets, and food shops, is lower still.
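The effect of \(\alpha _1\) described here follows from the saturated form of the environmental transmission term, \(\frac{\beta _{1}P}{1+\alpha _{1}P}\), which appears in the definition of \(a\) in Proposition 4.1. The following minimal sketch evaluates this term for the three values of \(\alpha _1\) used in Case I; the values of \(\beta _1\) and \(P\) and the function name are hypothetical and are not taken from Table 3.

```python
def saturated_force(beta, alpha, x):
    """Saturated incidence term beta * x / (1 + alpha * x)."""
    return beta * x / (1.0 + alpha * x)


beta1 = 0.01   # hypothetical transmission coefficient
P = 100.0      # hypothetical pathogen concentration

forces = {
    alpha1: saturated_force(beta1, alpha1, P)
    for alpha1 in (0.05, 0.10, 0.15)
}

for alpha1, force in forces.items():
    print(f"alpha1 = {alpha1:.2f}: per-capita infection pressure = {force:.4f}")
```

For fixed \(\beta _1\) and \(P\), the term decreases monotonically in \(\alpha _1\), which is consistent with the lower infection levels observed for larger \(\alpha _1\); the same reasoning applies to \(\alpha _2\) and the term \(\frac{\beta _{2}(I_A+I_S)}{1+\alpha _{2}(I_A+I_S)}\).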

Case II

For \(\alpha _2=0.05, \ 0.10, \ 0.15\) and fixed \(\alpha _1=0.10\), the population dynamics of the various classes are shown in Figs. 10, 11, 12, 13, 14, 15.

Figure 10. Dynamics of the susceptible class of the proposed model (2).

Figure 11. Dynamics of the exposed class of the proposed model (2).

Figure 12. Dynamics of the symptomatic infected compartment of the proposed model (2).

Figure 13. Dynamics of the asymptomatic infected compartment of the proposed model (2).

Figure 14. Dynamics of the recovered class of the proposed model (2).

Figure 15. Dynamics of the compartment of organism that causes disease of the proposed model (2).

As time increases from 0 to 100 days, the number of susceptible individuals drops rapidly during the first ten days (Fig. 10), while the number of exposed individuals increases rapidly during the first twenty days as a result of interaction with infected individuals (Fig. 11). Both the symptomatic and asymptomatic infected classes also grow quickly during the first 20 days (see Figs. 12 and 13). As seen in Fig. 15, the pathogen concentration rises as well, and the recovered class grows steadily (Fig. 14). For the first 25 days, the spread of the disease increases as the infectious environment ratio does. Reducing social interaction with infectious individuals lowers the chance of infection: at \(\alpha _2=0.05\) the risk of infection is high, whereas increasing \(\alpha _2\) to 0.10 and 0.15, which corresponds to greater social distancing, decreases the chance of infection.

Conclusion

The present article conducts a comprehensive analysis aimed at understanding the global dynamics of COVID-19. We identified the equilibrium points, calculated the basic reproductive rate, and assessed the model’s stability at the disease-free and endemic equilibrium states, both locally and globally. The main focus lies in examining COVID-19 dynamics using the proposed model, with particular attention to the characteristics of V–L matrices. A series of lemmas and theorems based on V–L matrix theory are presented to study the global stability of the endemic equilibrium. Non-standard finite difference techniques are employed for the numerical analysis, contributing to ongoing efforts to comprehend the pandemic’s intricacies. The paper also elaborates on the effects of social distancing and of interaction with infected environments. Precautionary measures such as increasing social distance, wearing face masks, sanitation, and avoiding large gatherings are highlighted as effective strategies for reducing the risk of infection. These findings contribute to the broader understanding of COVID-19 dynamics and provide insights into potential strategies for mitigating its spread.

As future work, the authors plan to utilize the Volterra–Lyapunov (V–L) matrix theory approach to investigate the global stability of other infectious disease models that have not yet been thoroughly studied using the said methodology.

Data availability

All the data used is included in the paper.

References

Mwalili, S., Kimathi, M., Ojiambo, V., Gathungu, D. & Mbogo, R. SEIR model for COVID-19 dynamics incorporating the environment and social distancing. BMC Res. Notes 13(1), 1–5 (2020).

Anderson, R. M. & May, R. M. Infectious Diseases of Humans: Dynamics and Control (Oxford University Press, Oxford, 1991).

Hethcote, H. W. The mathematics of infectious diseases. SIAM Rev. 42(4), 599–653 (2000).

Korobeinikov, A. Global properties of basic virus dynamics models. Bull. Math. Biol. 66(4), 879–883 (2004).

Abouelkheir, I., El Kihal, F., Rachik, M. & Elmouki, I. Time needed to control an epidemic with restricted resources in SIR model with short-term controlled population: A fixed point method for a free isoperimetric optimal control problem. Math. Comput. Appl. 23(4), 64 (2018).

De la Sen, M., Ibeas, A., Alonso-Quesada, S. & Nistal, R. On a SIR model in a patchy environment under constant and feedback decentralized controls with asymmetric parameterizations. Symmetry 11(3), 430 (2019).

Hethcote, H. W. & van den Driessche, P. Some epidemiological models with nonlinear incidence. J. Math. Biol. 29(3), 271–287 (1991).

Zhao, Y., Li, M. & Yuan, S. Analysis of transmission and control of tuberculosis in Mainland China, 2005–2016, based on the age-structure mathematical model. Int. J. Environ. Res. Publ. Health 14(10), 1192 (2017).

Agaba, G. O., Kyrychko, Y. N. & Blyuss, K. B. Time-delayed SIS epidemic model with population awareness. Ecol. Complex. 31, 50–56 (2017).

Bairagi, N. & Adak, D. Role of precautionary measures in HIV epidemics: A mathematical assessment. Int. J. Biomath. 9(06), 1650096 (2016).

Sayan, M. et al. Dynamics of HIV/AIDS in Turkey from 1985 to 2016. Qual. Quant. 52, 711–723 (2018).

Liu, X., Takeuchi, Y. & Iwami, S. SVIR epidemic models with vaccination strategies. J. Theor. Biol. 253(1), 1–11 (2008).

Ma, Y., Liu, J. B. & Li, H. Global dynamics of an SIQR model with vaccination and elimination hybrid strategies. Mathematics 6(12), 328 (2018).

Upadhyay, R. K., Pal, A. K., Kumari, S. & Roy, P. Dynamics of an SEIR epidemic model with nonlinear incidence and treatment rates. Nonlinear Dyn. 96, 2351–2368 (2019).

Mwasa, A. & Tchuenche, J. M. Mathematical analysis of a cholera model with public health interventions. Biosystems 105(3), 190–200 (2011).

Wang, L. & Xu, R. Global stability of an SEIR epidemic model with vaccination. Int. J. Biomath. 9(06), 1650082 (2016).

Bentaleb, D. & Amine, S. Lyapunov function and global stability for a two-strain SEIR model with bilinear and non-monotone incidence. Int. J. Biomath. 12(02), 1950021 (2019).

Chen, X., Cao, J., Park, J. H. & Qiu, J. Stability analysis and estimation of domain of attraction for the endemic equilibrium of an SEIQ epidemic model. Nonlinear Dyn. 87, 975–985 (2017).

Baba, I. A. & Hincal, E. Global stability analysis of two-strain epidemic model with bilinear and non-monotone incidence rates. Eur. Phys. J. Plus 132, 1–10 (2017).

Geng, Y. & Xu, J. Stability preserving NSFD scheme for a multi-group SVIR epidemic model. Math. Methods Appl. Sci. 40(13), 4917–4927 (2017).

Zaman, G., Kang, Y. H. & Jung, I. H. Stability analysis and optimal vaccination of an SIR epidemic model. Biosystems 93(3), 240–249 (2008).

Yi, L., Liu, Y. & Yu, W. Combination of improved OGY and guiding orbit method for chaos control. J. Adv. Comput. Intell. Intell. Inform. 23(5), 847–855 (2019).

Wang, X., Liu, X., Xie, W. C., Xu, W. & Xu, Y. Global stability and persistence of HIV models with switching parameters and pulse control. Math. Comput. Simul. 123, 53–67 (2016).

Hu, Z., Ma, W. & Ruan, S. Analysis of SIR epidemic models with nonlinear incidence rate and treatment. Math. Biosci. 238(1), 12–20 (2012).

Misra, A. K., Sharma, A. & Shukla, J. B. Stability analysis and optimal control of an epidemic model with awareness programs by media. Biosystems 138, 53–62 (2015).

Thieme, H. R. Global stability of the endemic equilibrium in infinite dimension: Lyapunov functions and positive operators. J. Differ. Equ. 250(9), 3772–3801 (2011).

Kar, T. K. & Jana, S. A theoretical study on mathematical modelling of an infectious disease with application of optimal control. Biosystems 111(1), 37–50 (2013).

Liao, S. & Wang, J. Global stability analysis of epidemiological models based on Volterra–Lyapunov stable matrices. Chaos Solitons Fractals 45(7), 966–977 (2012).

Parsaei, M. R., Javidan, R., Shayegh Kargar, N. & Saberi Nik, H. On the global stability of an epidemic model of computer viruses. Theory Biosci. 136, 169–178 (2017).

Chien, F. & Shateyi, S. Volterra–Lyapunov stability analysis of the solutions of babesiosis disease model. Symmetry 13(7), 1272 (2021).

Tian, J. P. & Wang, J. Global stability for cholera epidemic models. Math. Biosci. 232(1), 31–41 (2011).

Verma, A. K. & Kayenat, S. On the convergence of Mickens’ type nonstandard finite difference schemes on Lane–Emden type equations. J. Math. Chem. 56, 1667–1706 (2018).

Cresson, J. & Pierret, F. Non standard finite difference scheme preserving dynamical properties. J. Comput. Appl. Math. 303, 15–30 (2016).

Mickens, R. E. Numerical integration of population models satisfying conservation laws: NSFD methods. J. Biol. Dyn. 1(4), 427–436 (2007).

Gurski, K. F. A simple construction of nonstandard finite-difference schemes for small nonlinear systems applied to SIR models. Comput. Math. Appl. 66, 2166–2177 (2013).

Mickens, R. E. & Washington, T. M. A note on an NSFD scheme for a mathematical model of respiratory virus transmission. J. Differ. Equ. Appl. 18(3), 525–529 (2012).

Baishya, C., Achar, S. J. & Veeresha, P. An application of the Caputo fractional domain in the analysis of a COVID-19 mathematical model. Contemp. Math. 255–283 (2024).

Gao, W., Veeresha, P., Cattani, C., Baishya, C. & Baskonus, H. M. Modified predictor–corrector method for the numerical solution of a fractional-order SIR model with 2019-nCoV. Fractal Fract. 6(2), 92 (2022).

Achar, S. J., Baishya, C., Veeresha, P. & Akinyemi, L. Dynamics of fractional model of biological pest control in tea plants with Beddington–DeAngelis functional response. Fractal Fract. 6(1), 1 (2021).

Jan, R., Khan, A., Boulaaras, S. & Ahmed Zubair, S. Dynamical behaviour and chaotic phenomena of HIV infection through fractional calculus. Discrete Dyn. Nat. Soc. 2022, Article ID 5937420 (2022).

Tang, T. Q., Jan, R., Bonyah, E., Shah, Z. & Alzahrani, E. Qualitative analysis of the transmission dynamics of dengue with the effect of memory, reinfection, and vaccination. Comput. Math. Methods Med. 2022, Article ID 7893570 (2022).

Jan, R., Boulaaras, S. & Shah, S. A. A. Fractional-calculus analysis of human immunodeficiency virus and CD4+ T-cells with control interventions. Commun. Theor. Phys. 74(10), 105001 (2022).

Jan, A., Boulaaras, S., Abdullah, F. A. & Jan, R. Dynamical analysis, infections in plants, and preventive policies utilizing the theory of fractional calculus. Eur. Phys. J. Spec. Top. 232(14), 2497–2512 (2023).

Shah, Z., Bonyah, E., Alzahrani, E., Jan, R. & Aedh Alreshidi, N. Chaotic phenomena and oscillations in dynamical behaviour of financial system via fractional calculus. Complexity 2022, Article ID 8113760 (2022).

Jan, R., Boulaaras, S., Alyobi, S. & Jawad, M. Transmission dynamics of Hand-Foot-Mouth Disease with partial immunity through non-integer derivative. Int. J. Biomath. 16(06), 2250115 (2023).

Jan, R. et al. The investigation of the fractional-view dynamics of Helmholtz equations within Caputo operator. Comput. Mater. Continua 68(3), 3185–3201 (2021).

Jan, R., Razak, N. N. A., Boulaaras, S., Rehman, Z. U. & Bahramand, S. Mathematical analysis of the transmission dynamics of viral infection with effective control policies via fractional derivative. Nonlinear Eng. 12(1), 20220342 (2023).

Zahedi, M. S. & Kargar, N. S. The Volterra–Lyapunov matrix theory for global stability analysis of a model of the HIV/AIDS. Int. J. Biomath. 10(01), 1750002 (2017).

Shao, P. & Shateyi, S. Stability analysis of SEIRS epidemic model with nonlinear incidence rate function. Mathematics 9(21), 2644 (2021).

Masoumnezhad, M. et al. An approach for the global stability of mathematical model of an infectious disease. Symmetry 12(11), 1778 (2020).

Yusuf, T. T. On global stability of disease-free equilibrium in epidemiological models. Eur. J. Math. Stat. 2(3), 37–42 (2021).

Maji, C., Al Basir, F., Mukherjee, D., Ravichandran, C. & Nisar, K. COVID-19 propagation and the usefulness of awareness-based control measures: A mathematical model with delay. AIMS Math. 7(7), 12091–12105 (2022).

Acknowledgements

Authors Kamal Shah and Thabet Abdeljawad would like to thank Prince Sultan University for support through TAS research lab. The author Asma Al-Jaser would like to thank Princess Nourah bint Abdulrahman University Researchers Supporting Project number (PNURSP2024R406), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia.

Author information

Contributions

M.R. wrote the draft and did the revision. K.S. did the numerical part. T.A. contributed the theoretical part. I.A. contributed to the literature review and funding. A.A.-J. contributed the fundamental analysis and helped in revision. M.A. edited the final version.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Riaz, M., Shah, K., Abdeljawad, T. et al. A comprehensive analysis of COVID-19 nonlinear mathematical model by incorporating the environment and social distancing. Sci Rep 14, 12238 (2024). https://doi.org/10.1038/s41598-024-61730-y
