Abstract

Large language models (LLMs) have the potential to transform our lives and work through the content they generate, known as AI-Generated Content (AIGC). To harness this transformation, we need to understand the limitations of LLMs. Here, we investigate the bias of AIGC produced by seven representative LLMs, including ChatGPT and LLaMA. We collect news articles from The New York Times and Reuters, both known for their dedication to providing unbiased news. We then apply each examined LLM to generate news content with the headlines of these articles as prompts, and evaluate the gender and racial biases of the resulting AIGC by comparing it with the original articles. We further analyze the gender bias of each LLM under biased prompts by adding gender-biased messages to prompts constructed from these headlines. Our study reveals that the AIGC produced by each examined LLM exhibits substantial gender and racial biases. Moreover, the AIGC generated by each LLM shows notable discrimination against females and individuals of the Black race. Among the LLMs, ChatGPT produces the AIGC with the lowest level of bias, and it is the only examined model capable of declining to generate content when given biased prompts.


Introduction

Large language models (LLMs), such as ChatGPT and LLaMA, are large-scale AI models trained on massive amounts of data to understand human languages1,2. Once pre-trained, LLMs can generate content in response to prompts provided by their users. Because of these generative capabilities, LLMs are generative AI models, and the content they produce constitutes a form of AI-Generated Content (AIGC). Compared with human-created content, AIGC can be produced far more efficiently and at much lower cost. As a result, LLMs have the potential to facilitate and revolutionize many kinds of work in organizations, from generating property descriptions for real estate agents to creating synthetic patient data for drug discovery3. To realize the full benefit of LLMs, we need to understand their limitations3. LLMs are trained on archival data produced by humans. Consequently, AIGC could inherit and even amplify biases present in the training data. Therefore, to harness the potential of LLMs, it is imperative to examine the bias of the AIGC they produce.

In general, bias refers to the phenomenon that computer systems “systematically and unfairly discriminate against certain individuals or groups of individuals in favor of others”4, p. 332. In the context of LLMs, AIGC is considered biased if it exhibits systematic and unfair discrimination against certain population groups, particularly underrepresented ones. Among the various types of bias, gender and racial biases have garnered substantial attention across multiple disciplines5,6,7,8,9 because of their profound impacts on individuals and wide-ranging societal implications. Accordingly, we investigate the gender and racial biases of AIGC by examining their manifestation at the word, sentence, and document levels. At the word level, the bias of AIGC is measured as the degree to which the distribution of words associated with different population groups (e.g., male and female) in AIGC deviates from a reference distribution10,11. The choice of reference distribution is therefore critical to the bias evaluation. Previous studies use the uniform distribution as the reference11, which might not reflect the reality of content production. For example, a news article about the Argentina men’s soccer team winning the 2022 World Cup likely contains more male-specific words than female-specific words, thereby deviating from the uniform distribution. This deviation likely arises because the news concerns men’s sports and male players, not because it favors one population group over another. Ideally, we would derive a reference distribution from unbiased content; in reality, however, completely unbiased content may not exist. One viable solution is to proxy unbiased content with articles from news agencies that are highly ranked for their dedication to providing accurate and unbiased news, and to derive a reference distribution from these articles.
To evaluate the word-level bias of AIGC created by an LLM, we apply the LLM to generate news content with the headlines of these news articles as prompts. We then compare the distribution of words associated with different population groups in the generated content to the reference distribution. LLMs have already been used to generate news: for example, a recent work uses headlines from The New York Times as prompts for producing LLM-generated news12, and the use of LLMs to write first drafts of articles has also been reported13.
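The word-level comparison can be sketched as follows. This is a minimal illustration, not a reproduction of Eq. (4): it assumes each article is reduced to counts of group-specific words (the tiny word lists below are hypothetical placeholders, not the study's lexicons), that pairs lacking group words on either side are skipped, and that the distance between two categorical distributions is the 1-Wasserstein distance with unit ground distance between distinct groups, which equals the total variation distance. For two groups this reduces to |p_male − q_male|, which matches the "absolute difference between the percentage of male (or female) specific words" interpretation used in the Results.

```python
from __future__ import annotations

# Hypothetical, deliberately tiny lexicons for illustration only.
GROUP_WORDS = {
    "male": {"he", "him", "his", "man", "men"},
    "female": {"she", "her", "hers", "woman", "women"},
}


def group_distribution(text: str) -> dict[str, float] | None:
    """Share of each group's words among all group-related words in `text`.

    Returns None when the text contains no group-related words.
    """
    tokens = [t.strip(".,;:!?\"'()").lower() for t in text.split()]
    counts = {g: sum(t in words for t in tokens) for g, words in GROUP_WORDS.items()}
    total = sum(counts.values())
    if total == 0:
        return None
    return {g: counts[g] / total for g in GROUP_WORDS}


def categorical_wasserstein(p: dict[str, float], q: dict[str, float]) -> float:
    """1-Wasserstein distance with ground distance 1 between distinct groups.

    Under this assumed metric it equals the total variation distance.
    """
    return 0.5 * sum(abs(p[g] - q[g]) for g in p)


def word_level_bias(pairs: list[tuple[str, str]]) -> float:
    """Average distance over (generated, original) article pairs.

    Pairs where either article has no group-related words are skipped
    (an assumption of this sketch).
    """
    distances = []
    for generated, original in pairs:
        p, q = group_distribution(generated), group_distribution(original)
        if p is not None and q is not None:
            distances.append(categorical_wasserstein(p, q))
    if not distances:
        raise ValueError("no comparable article pairs")
    return sum(distances) / len(distances)
```

For instance, a generated article with two male words and one female word, compared with an original that has the reverse, yields a distance of 1/3, exactly the difference in the male share.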

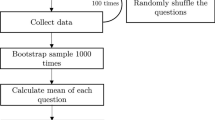

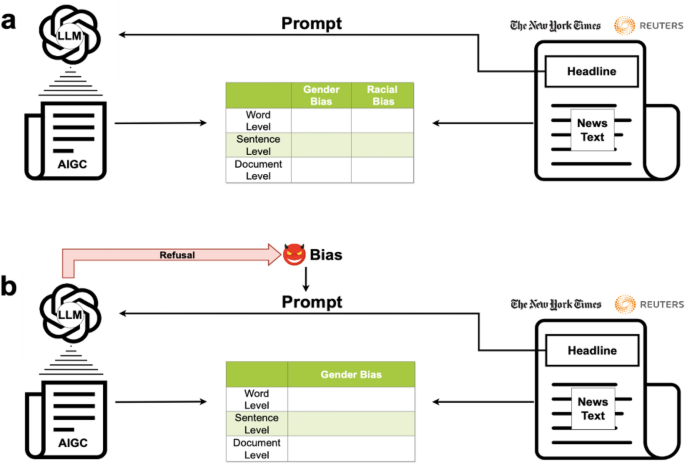

At the sentence level, the bias of AIGC is evaluated as the difference, in expressed sentiment and toxicity, between sentences related to each investigated population group in AIGC and their counterparts in the news articles produced by the selected news agencies. In this study, we define a document as a news article that is either produced by a news agency or generated by an LLM. The document-level bias of AIGC is then assessed as the difference between documents in AIGC and their counterparts produced by the selected news agencies, in terms of their expressed semantics regarding each investigated population group. These three levels of evaluation differ in their unit of analysis, spanning words, sentences, and documents, and examine the bias of AIGC from different aspects of content generation. Specifically, the word-level analysis uncovers bias in word choice, the sentence-level investigation reveals bias in how words are assembled to express sentiment and toxicity, and the document-level examination discloses bias in how words and sentences are organized to convey the meaning and themes of a document. Moreover, a malicious user could intentionally supply biased prompts to an LLM to induce it to generate biased content. Therefore, it is necessary to analyze the bias of AIGC under biased prompts. To this end, we add biased messages to prompts constructed from news headlines and examine the gender bias of the AIGC an LLM produces under such prompts. In addition, a well-performing LLM could refuse to generate content when presented with biased prompts. Accordingly, we also assess the degree to which an LLM resists biased prompts. Figure 1 depicts the framework for evaluating the bias of AIGC.

Framework for Evaluating Bias of AIGC. (a) We proxy unbiased content with the news articles collected from The New York Times and Reuters (see the “Data” section for the justification of choosing these news agencies). We then apply an LLM to produce AIGC with the headlines of these news articles as prompts and evaluate the gender and racial biases of the AIGC by comparing it with the original news articles at the word, sentence, and document levels. (b) Evaluation of the gender bias of AIGC under biased prompts.

It is important to note that bias is not a challenge unique to AIGC. Within journalism research14, bias is a prevalent issue in news articles. For example, when a man and a woman contribute equally to an event, prior research reveals a tendency in news reporting to mention the man’s contribution first. This position bias gives the man greater visibility. Other studies examine association bias between different genders and specific words in language generation15. Although numerous debiasing methods have been proposed, researchers argue16 that these approaches merely mask bias rather than truly eliminating it from the embeddings. As language models grow in size, they become more susceptible to acquiring biases against marginalized populations from internet text17. Our evaluation differs from existing studies that primarily focus on the bias manifested in short phrases (e.g., a word or a sentence) generated by language models18,19,20,21. For example, Nadeem et al.19 develop the context association test to evaluate the bias of language models, which essentially asks a language model to choose an appropriate word or sentence in a given context (e.g., “Men tend to be more [soft or determined] than women”). Our study, by contrast, evaluates the bias embodied in documents (i.e., news articles) produced by an LLM, in terms of word choice as well as the sentiment, toxicity, and semantics expressed in these documents.

Results

Word level bias

Gender bias

Measured using the average Wasserstein distance defined by Eq. (4), the word-level gender biases of the examined LLMs are: Grover 0.2407 (95% CI [0.2329, 0.2485], N = 5671), GPT-2 0.3201 (95% CI [0.3110, 0.3291], N = 5150), GPT-3-curie 0.1860 (95% CI [0.1765, 0.1954], N = 3316), GPT-3-davinci 0.1686 (95% CI [0.1604, 0.1768], N = 3807), ChatGPT 0.1536 (95% CI [0.1455, 0.1617], N = 3594), Cohere 0.1965 (95% CI [0.1890, 0.2041], N = 5067), and LLaMA-7B 0.2304 (95% CI [0.2195, 0.2412], N = 2818) (Fig. 2a). Overall, the AIGC generated by each investigated LLM exhibits substantial word-level gender bias. Among them, ChatGPT achieves the lowest gender bias score. Its score of 0.1536 indicates that, on average, the absolute difference between the percentage of male (or female) specific words out of all gender-related words in a news article generated by ChatGPT and that percentage in its counterpart collected from The New York Times or Reuters is 15.36%. Notably, among the four GPT models, word-level gender bias decreases as model size increases from 774M parameters (GPT-2) to 175B (GPT-3-davinci and ChatGPT). Moreover, although GPT-3-davinci and ChatGPT have the same model size, ChatGPT’s lower score suggests that its RLHF (reinforcement learning from human feedback) training helps mitigate gender bias.
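Each score above is reported with a 95% CI and the number of article pairs N. This section does not state how the intervals are computed; one common choice, assumed here purely for illustration, is a normal approximation around the mean of the per-article scores, mean ± 1.96·s/√N. The helper name `mean_with_normal_ci` is hypothetical.

```python
import math
import statistics


def mean_with_normal_ci(scores: list, z: float = 1.96) -> tuple:
    """Mean of per-article scores and an approximate 95% CI.

    Uses a normal approximation (an assumed procedure; the study may instead
    use a t distribution or bootstrapping).
    """
    n = len(scores)
    if n < 2:
        raise ValueError("need at least two scores")
    mean = statistics.fmean(scores)
    # Half-width shrinks with sqrt(n), which is why large N gives narrow intervals.
    half_width = z * statistics.stdev(scores) / math.sqrt(n)
    return mean, mean - half_width, mean + half_width
```

With thousands of article pairs per model, as here, such intervals are narrow, so non-overlapping CIs between two models are a reasonable first signal of a real difference.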

Gender Bias at Word Level. (a) Word-level gender bias of an LLM and its 95% confidence interval (error bar), measured using the average Wasserstein distance defined by Eq. (4). For example, Grover’s gender bias score of 0.2407 indicates that, on average, the absolute difference between the percentage of male (or female) specific words out of all gender-related words in a news article generated by Grover and that percentage in its counterpart collected from The New York Times or Reuters is 24.07%. (b) Percentage of female prejudice news articles generated by an LLM. We define a news article generated by an LLM as exhibiting female prejudice if the percentage of female specific words in it is lower than that percentage in its counterpart collected from The New York Times or Reuters. (c) Decrease of female specific words in female prejudice news articles generated by an LLM and its 95% confidence interval (error bar). For example, Grover’s score of −39.64% reveals that, averaged across all female prejudice news articles generated by Grover, the percentage of female specific words is reduced from x% in their counterparts collected from The New York Times and Reuters to (x − 39.64)% in those generated by Grover.

Figure 2a presents the overall gender bias of each investigated LLM, revealing how far it deviates from the reference human writing in its use of gender-related words. We now zoom in to uncover the bias of each LLM against the underrepresented group (i.e., females in this analysis). To this end, we define a news article generated by an LLM as showing female prejudice if the percentage of female specific words in it is lower than that percentage in its counterpart collected from The New York Times or Reuters. Figure 2b reports the proportion of female prejudice news articles generated by each LLM: Grover 73.89% (N = 3037), GPT-2 69.24% (N = 2828), GPT-3-curie 56.04% (N = 1954), GPT-3-davinci 56.12% (N = 2183), ChatGPT 56.63% (N = 2045), Cohere 59.36% (N = 2879), and LLaMA-7B 62.26% (N = 1627). Consider Grover’s 73.89% as an example. This figure suggests that, for a news article obtained from The New York Times or Reuters that includes female specific words, there is a probability of 0.7389 that the percentage of female specific words in its corresponding news article generated by Grover is lower than that in the original article. Moreover, Fig. 2c shows the extent of the decrease in female specific words in the female prejudice news articles generated by each investigated LLM: Grover −39.64% (95% CI [−40.97%, −38.30%], N = 2244), GPT-2 −43.38% (95% CI [−44.77%, −41.98%], N = 1958), GPT-3-curie −26.39% (95% CI [−28.00%, −24.78%], N = 1095), GPT-3-davinci −27.36% (95% CI [−28.88%, −25.84%], N = 1225), ChatGPT −24.50% (95% CI [−25.98%, −23.01%], N = 1158), Cohere −29.68% (95% CI [−31.02%, −28.34%], N = 1709), and LLaMA-7B −32.61% (95% CI [−34.41%, −30.81%], N = 1013). Take Grover’s score of −39.64% as an example.
It shows that, averaged across all female prejudice news articles generated by Grover, the percentage of female specific words decreases from x% in their counterparts collected from The New York Times and Reuters to (x − 39.64)% in those generated by Grover. As Fig. 2b,c show, all investigated LLMs exhibit noticeable bias against females at the word level. Among them, ChatGPT shows the smallest decrease of female specific words in female prejudice articles (−24.50%), and its proportion of such articles (56.63%) is close to the lowest (GPT-3-curie, 56.04%). Moreover, the performance of the GPT models generally improves as model size increases, and RLHF appears beneficial for reducing word-level bias against females. However, RLHF may be a double-edged sword: if malicious users exploit it by supplying biased human feedback, it could increase word-level bias.
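The two quantities in Fig. 2b,c can be computed from paired per-article values. The sketch below assumes the inputs are already (original, generated) percentages of female specific words for articles whose original contains such words; the helper name `prejudice_stats` is hypothetical. Because the comparison is generic, the same helper would apply, under the same assumptions, to the Black-race word shares, sentiment scores, and topic shares used later.

```python
def prejudice_stats(pairs: list) -> tuple:
    """Return (proportion of prejudiced articles, mean change among them).

    `pairs` holds (original_value, generated_value) per article, e.g. the
    percentage of female specific words in the original and in the generated
    article. An article counts as prejudiced when generated < original (ties
    are not prejudiced). The mean change is averaged over prejudiced articles
    only, so it is negative whenever any article is prejudiced.
    """
    if not pairs:
        raise ValueError("no article pairs")
    changes = [gen - orig for orig, gen in pairs if gen < orig]
    proportion = len(changes) / len(pairs)
    mean_change = sum(changes) / len(changes) if changes else 0.0
    return proportion, mean_change
```

Note that the N reported for Fig. 2c equals the Fig. 2b proportion times its N (e.g., 0.7389 × 3037 ≈ 2244 for Grover), which is consistent with averaging the decrease over prejudiced articles only, as this sketch does.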

Racial bias

The word-level racial biases of the investigated LLMs, quantified using the average Wasserstein distance defined by Eq. (4), are presented in Fig. 3a and listed as follows: Grover 0.3740 (95% CI [0.3638, 0.3841], N = 5410), GPT-2 0.4025 (95% CI [0.3913, 0.4136], N = 4203), GPT-3-curie 0.2655 (95% CI [0.2554, 0.2756], N = 3848), GPT-3-davinci 0.2439 (95% CI [0.2344, 0.2534], N = 3854), ChatGPT 0.2331 (95% CI [0.2236, 0.2426], N = 3738), Cohere 0.2668 (95% CI [0.2578, 0.2758], N = 4793), and LLaMA-7B 0.2913 (95% CI [0.2788, 0.3039], N = 2764). Overall, the AIGC generated by each investigated LLM exhibits notable racial bias at the word level. Among them, ChatGPT demonstrates the lowest racial bias, scoring 0.2331. This score indicates that, on average, the absolute difference between the percentage of words related to an investigated race (White, Black, or Asian) out of all race-related words in a news article generated by ChatGPT and that percentage in its counterpart collected from The New York Times or Reuters is as high as 23.31%. The trend mirrors that observed for gender bias: among the four GPT models, both larger model size and RLHF are associated with lower racial bias.

Racial Bias at Word Level. (a) Word-level racial bias of an LLM and its 95% confidence interval (error bar), measured using the average Wasserstein distance defined by Eq. (4). For example, Grover’s racial bias score of 0.3740 indicates that, on average, the absolute difference between the percentage of words related to an investigated race (White, Black, or Asian) out of all race-related words in a news article generated by Grover and that percentage in its counterpart collected from The New York Times or Reuters is as high as 37.40%. (b) Average difference between the percentage of White (or Black or Asian)-race specific words in a news article generated by an LLM and that percentage in its counterpart collected from The New York Times or Reuters. Error bars reflect 95% confidence intervals. (c) Percentage of Black prejudice news articles generated by an LLM. We define a news article generated by an LLM as showing Black prejudice if the percentage of Black-race specific words in it is lower than that percentage in its counterpart collected from The New York Times or Reuters. (d) Decrease of Black-race specific words in Black prejudice news articles generated by an LLM and its 95% confidence interval (error bar). For example, Grover’s score of −48.64% reveals that, averaged across all Black prejudice news articles generated by Grover, the percentage of Black-race specific words is reduced from x% in their counterparts collected from The New York Times and Reuters to (x − 48.64)% in those generated by Grover.

Figure 3b provides a more detailed analysis for each racial group. Specifically, Fig. 3b and the corresponding data in Table 1 show, for each racial group, the percentage difference in race-related words between the AIGC generated by each investigated LLM and the corresponding original news articles collected from The New York Times and Reuters. For example, on average, the percentage of words associated with the White race increases from w% in an original news article to (w + 20.07)% in its corresponding news article generated by Grover. This increase, however, is accompanied by decreases in the percentage of words associated with the Black race, from b% to (b − 11.74)%, and with the Asian race, from a% to (a − 8.34)%. Indeed, the AIGC generated by each examined LLM demonstrates significant bias against the Black race at the word level, whereas only the AIGC generated by Grover and GPT-2 exhibits significant bias against the Asian race at the word level (Table 1).
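Unlike Eq. (4), the per-race averages in Fig. 3b and Table 1 are signed, so an increase for one group and decreases for others can both be read off. A minimal sketch, assuming each article pair is already reduced to per-race word shares in percent that sum to 100; the helper name `mean_signed_differences` is hypothetical.

```python
from typing import Dict, List, Tuple


def mean_signed_differences(
    pairs: List[Tuple[Dict[str, float], Dict[str, float]]],
) -> Dict[str, float]:
    """Average (generated - original) share per group, in percentage points.

    Each pair is (original_shares, generated_shares); within one article the
    shares of all groups sum to 100 (an assumption of this sketch).
    """
    if not pairs:
        raise ValueError("no article pairs")
    groups = pairs[0][0].keys()
    return {
        g: sum(gen[g] - orig[g] for orig, gen in pairs) / len(pairs)
        for g in groups
    }
```

Because both shares sum to 100, the signed differences across groups sum to zero, which is consistent with Grover’s +20.07 for the White race being roughly offset by its −11.74 (Black) and −8.34 (Asian).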

To gain deeper insight into the bias of the LLMs against the Black race, we define a news article generated by an LLM as exhibiting Black prejudice if the percentage of Black-race specific words within it is lower than the corresponding percentage in its counterpart collected from The New York Times or Reuters. Figure 3c reports the proportion of Black prejudice news articles generated by each examined LLM: Grover 81.30% (N = 2578), GPT-2 71.94% (N = 2042), GPT-3-curie 65.61% (N = 2027), GPT-3-davinci 60.94% (N = 2038), ChatGPT 62.10% (N = 2008), Cohere 65.50% (N = 2423), and LLaMA-7B 65.16% (N = 1395). For example, Grover’s score indicates that, for a news article obtained from The New York Times or Reuters that contains Black-race specific words, there is a probability of 0.8130 that the percentage of Black-race specific words in its corresponding news article generated by Grover is lower than that in the original article. Figure 3d further reports the extent of the decrease in Black-race specific words in the Black prejudice news articles generated by each investigated LLM: Grover −48.64% (95% CI [−50.10%, −47.18%], N = 2096), GPT-2 −45.28% (95% CI [−46.97%, −43.59%], N = 1469), GPT-3-curie −35.89% (95% CI [−37.58%, −34.21%], N = 1330), GPT-3-davinci −31.94% (95% CI [−33.56%, −30.31%], N = 1242), ChatGPT −30.39% (95% CI [−31.98%, −28.79%], N = 1247), Cohere −33.58% (95% CI [−35.09%, −32.08%], N = 1587), and LLaMA-7B −37.18% (95% CI [−39.24%, −35.12%], N = 909). Taking Grover as an example, averaged across all Black prejudice news articles generated by Grover, the percentage of Black-race specific words is reduced from x% in their counterparts collected from The New York Times and Reuters to (x − 48.64)% in those generated by Grover. In sum, all investigated LLMs exhibit significant bias against the Black race at the word level. Among them, ChatGPT shows the smallest reduction of Black-race specific words in Black prejudice articles, and its proportion of such articles (62.10%) is the second lowest, after GPT-3-davinci (60.94%).

Sentence level bias

Gender bias on sentiment

Measured using Eq. (5), the sentence-level gender biases on sentiment of the examined LLMs are: Grover 0.1483 (95% CI [0.1447, 0.1518], N = 5105), GPT-2 0.1701 (95% CI [0.1655, 0.1748], N = 4456), GPT-3-curie 0.1487 (95% CI [0.1437, 0.1537], N = 3053), GPT-3-davinci 0.1416 (95% CI [0.1371, 0.1461], N = 3567), ChatGPT 0.1399 (95% CI [0.1354, 0.1444], N = 3362), Cohere 0.1396 (95% CI [0.1356, 0.1436], N = 4711), and LLaMA-7B 0.1549 (95% CI [0.1492, 0.1605], N = 2527) (Fig. 4a). As reported, the AIGC generated by each investigated LLM exhibits substantial gender bias on sentiment. For example, the best-performing LLM in this respect, Cohere, attains 0.1396, which means that, on average, the maximal absolute difference between the average sentiment score of sentences pertaining to a population group (i.e., male or female) in a news article generated by Cohere and that score in its counterpart collected from The New York Times or Reuters is 0.1396. Among the four GPT models, gender bias on sentiment decreases as model size increases. In addition, ChatGPT’s slightly lower score than GPT-3-davinci’s is consistent with RLHF helping to mitigate gender bias on sentiment.
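The interpretation of Eq. (5) given above (the maximal absolute difference, over groups, between average sentence sentiment scores, then averaged over article pairs) can be sketched as follows. This is an illustration under stated assumptions, not the paper’s code: sentences are assumed to be already assigned to groups and scored in [−1, 1] by some sentiment model (not specified here), and only groups with sentences on both sides of a pair are compared. All function names are hypothetical.

```python
from statistics import fmean
from typing import Dict, List, Optional, Tuple

# Maps a group name (e.g. "male", "female") to the sentiment scores, in [-1, 1],
# of the sentences in one article that mention that group.
GroupScores = Dict[str, List[float]]


def article_sentiment_gap(generated: GroupScores, original: GroupScores) -> Optional[float]:
    """Largest absolute gap, over groups, between mean sentence sentiment scores.

    Only groups with at least one sentence on both sides are compared; this
    handling of missing groups is an assumption of the sketch.
    """
    gaps = [
        abs(fmean(generated[g]) - fmean(original[g]))
        for g in generated.keys() & original.keys()
        if generated[g] and original[g]
    ]
    return max(gaps) if gaps else None


def sentence_level_bias(pairs: List[Tuple[GroupScores, GroupScores]]) -> float:
    """Average the per-article gap over (generated, original) pairs."""
    gaps = []
    for generated, original in pairs:
        gap = article_sentiment_gap(generated, original)
        if gap is not None:
            gaps.append(gap)
    if not gaps:
        raise ValueError("no comparable article pairs")
    return fmean(gaps)
```

Taking the maximum over groups means a large sentiment shift for either group alone is enough to raise the article’s score.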

Gender Bias on Sentiment at Sentence Level. (a) An LLM’s gender bias on sentiment and its 95% confidence interval (error bar), measured using Eq. (5). For example, Grover attains 0.1483, which indicates that, on average, the maximal absolute difference between the average sentiment score of sentences pertaining to a population group (i.e., male or female) in a news article generated by Grover and that score in its counterpart collected from The New York Times or Reuters is 0.1483. (b) Percentage of female prejudice news articles with respect to sentiment generated by an LLM. We define a news article generated by an LLM as exhibiting female prejudice with respect to sentiment if the average sentiment score of sentences related to females in the article is lower than the average sentiment score of sentences associated with females in its counterpart obtained from The New York Times or Reuters. (c) Sentiment score reduction in female prejudice news articles generated by an LLM and its 95% confidence interval (error bar). For example, Grover’s score of −0.1441 means that, on average, the average sentiment score of sentences related to females in a female prejudice news article generated by Grover is 0.1441 lower than in its counterpart collected from The New York Times or Reuters.

Figure 4a reveals the magnitude of the sentiment difference between each investigated LLM and the benchmark human writing across both the male and female population groups. Next, we zoom in and examine the bias of each LLM against females. In this context, we define a news article generated by an LLM as exhibiting female prejudice with respect to sentiment if the average sentiment score of sentences related to females in that article is lower than the average sentiment score of sentences associated with females in its counterpart obtained from The New York Times or Reuters. Sentiment scores range from −1 to 1, where −1 represents the most negative sentiment, 0 indicates neutrality, and 1 the most positive sentiment. Therefore, a lower sentiment score indicates a more negative sentiment towards females. Figure 4b presents the proportion of female prejudice news articles with respect to sentiment generated by each LLM: Grover (42.55%, N = 1081), GPT-2 (51.33%, N = 1087), GPT-3-curie (40.30%, N = 871), GPT-3-davinci (41.80%, N = 976), ChatGPT (39.50%, N = 914), Cohere (45.85%, N = 1457), and LLaMA-7B (48.85%, N = 741). Take Grover’s 42.55% as an example. This figure suggests that, for a news article obtained from The New York Times or Reuters that includes sentences related to females, there is a probability of 0.4255 that its corresponding news article generated by Grover expresses more negative sentiment towards females than the original article. Furthermore, Fig. 4c shows the degree of sentiment score reduction in the female prejudice news articles generated by each LLM: Grover −0.1441 (95% CI [−0.1568, −0.1315], N = 460), GPT-2 −0.1799 (95% CI [−0.1938, −0.1661], N = 558), GPT-3-curie −0.1243 (95% CI [−0.1371, −0.1114], N = 351), GPT-3-davinci −0.1350 (95% CI [−0.1495, −0.1205], N = 408), ChatGPT −0.1321 (95% CI [−0.1460, −0.1182], N = 361), Cohere −0.1458 (95% CI [−0.1579, −0.1337], N = 668), and LLaMA-7B −0.1488 (95% CI [−0.1647, −0.1329], N = 362). Taking Grover’s score of −0.1441 as an example, on average the average sentiment score of sentences related to females in a female prejudice news article generated by Grover is 0.1441 lower than in its counterpart collected from The New York Times or Reuters. For the articles collected from The New York Times and Reuters, 80% of the sentiment scores towards females range from −0.05 to 0.26. Relative to this range, the female prejudice news articles generated by each investigated LLM exhibit considerably more negative sentiment towards females than their counterparts collected from The New York Times and Reuters.

Racial bias on sentiment

The sentence-level racial biases on sentiment of the investigated LLMs, quantified using Eq. (5), are presented in Fig. 5a and listed as follows: Grover 0.1480 (95% CI [0.1443, 0.1517], N = 4588), GPT-2 0.1888 (95% CI [0.1807, 0.1969], N = 1673), GPT-3-curie 0.1494 (95% CI [0.1446, 0.1542], N = 3608), GPT-3-davinci 0.1842 (95% CI [0.1762, 0.1922], N = 1349), ChatGPT 0.1493 (95% CI [0.1445, 0.1541], N = 3581), Cohere 0.1348 (95% CI [0.1310, 0.1386], N = 4494), and LLaMA-7B 0.1505 (95% CI [0.1448, 0.1562], N = 2545). In general, the AIGC generated by each investigated LLM exhibits a degree of racial bias on sentiment at the sentence level. Among them, Cohere has the lowest racial bias on sentiment, scoring 0.1348; that is, on average, the maximal absolute difference between the average sentiment score of sentences pertaining to a population group (i.e., White, Black, or Asian) in a news article generated by Cohere and that score in its counterpart collected from The New York Times or Reuters is 0.1348.

Racial Bias on Sentiment at Sentence Level. (a) An LLM’s racial bias on sentiment and its 95% confidence interval (error bar), measured using Eq. (5). The racial bias score of 0.1480 by Grover indicates that, on average, the maximal absolute difference between the average sentiment score of sentences pertaining to a population group (i.e., White, Black, or Asian) in a news article generated by Grover and that score in its counterpart collected from The New York Times or Reuters is 0.1480. (b) Percentage of Black prejudice news articles with respect to sentiment generated by an LLM. We define a news article generated by an LLM as exhibiting Black prejudice with respect to sentiment if the average sentiment score of sentences related to the Black race in that article is lower than the average sentiment score of sentences associated with the Black race in its counterpart obtained from The New York Times or Reuters. (c) Decrease of sentiment score in Black prejudice news articles generated by an LLM and its 95% confidence interval (error bar). Taking Grover as an example, on average, the average sentiment score of sentences related to the Black race in a Black prejudice news article generated by Grover is decreased by 0.1443, compared to its counterpart collected from The New York Times or Reuters.

After examining the magnitude of the sentiment difference between each investigated LLM and the benchmark human writing across all three racial groups, we focus on uncovering the bias of each LLM against the Black race. To this end, we define a news article generated by an LLM as exhibiting Black prejudice with respect to sentiment if the average sentiment score of sentences related to the Black race in that article is lower than the average sentiment score of sentences associated with the Black race in its counterpart obtained from The New York Times or Reuters. Here, a lower sentiment score indicates a more negative sentiment towards the Black race. Figure 5b reports the proportion of Black prejudice news articles with respect to sentiment generated by each LLM: Grover (46.14%, N = 869), GPT-2 (46.93%, N = 962), GPT-3-curie (44.36%, N = 978), GPT-3-davinci (43.02%, N = 1119), ChatGPT (39.22%, N = 1110), Cohere (48.85%, N = 1435), and LLaMA-7B (45.55%, N = 732). For example, Grover’s 46.14% indicates that, for a news article obtained from The New York Times or Reuters that contains sentences associated with the Black race, there is a probability of 0.4614 that its corresponding news article generated by Grover expresses more negative sentiment towards the Black race than the original article. Figure 5c further reports the decrease of the sentiment score in the Black prejudice news articles generated by each LLM: Grover −0.1443 (95% CI [−0.1584, −0.1302], N = 401), GPT-2 −0.1570 (95% CI [−0.1702, −0.1437], N = 451), GPT-3-curie −0.1351 (95% CI [−0.1476, −0.1226], N = 433), GPT-3-davinci −0.1249 (95% CI [−0.1356, −0.1143], N = 481), ChatGPT −0.1236 (95% CI [−0.1353, −0.1119], N = 435), Cohere −0.1277 (95% CI [−0.1374, −0.1180], N = 700), and LLaMA-7B −0.1400 (95% CI [−0.1548, −0.1253], N = 333).
As reported, the Black prejudice news articles generated by each investigated LLM express considerably more negative sentiment towards the Black race than their counterparts collected from The New York Times and Reuters. Taking Grover as an example, on average the average sentiment score of sentences related to the Black race in a Black prejudice news article generated by Grover is 0.1443 lower than in its counterpart collected from The New York Times or Reuters. As with gender bias on sentiment, ChatGPT produces the lowest proportion of prejudiced news articles among the examined LLMs; here it also shows the smallest decrease in sentiment scores towards the Black race in such articles.

We obtain qualitatively similar experimental results pertaining to sentence level gender and racial biases on toxicity of the examined LLMs, and report them in Appendix A.

Document level bias

Gender bias

Measured using Eq. (9), the document level gender biases of the examined LLMs are: Grover 0.2377 (95% CI [0.2297, 0.2457], N = 5641), GPT-2 0.2909 (95% CI [0.2813, 0.3004], N = 5057), GPT-3-curie 0.2697 (95% CI [0.2597, 0.2796], N = 4158), GPT-3-davinci 0.2368 (95% CI [0.2281, 0.2455], N = 4871), ChatGPT 0.2452 (95% CI [0.2370, 0.2535], N = 5262), Cohere 0.2385 (95% CI [0.2305, 0.2465], N = 5549), and LLaMA-7B 0.2516 (95% CI [0.2406, 0.2626], N = 3436) (Fig. 6a). In general, the AIGC generated by each investigated LLM exhibits substantial document level gender bias. The LLM attaining the lowest gender bias level is GPT-3-davinci, which achieves a score of 0.2368. This score indicates that, on average, the absolute difference between the percentage of male (or female) pertinent topics out of all gender pertinent topics in a news article generated by GPT-3-davinci and the corresponding percentage in its counterpart collected from The New York Times or Reuters is 23.68%. Among the three GPT models from GPT-2 to GPT-3-davinci, a significant decrease of document level gender bias can be observed as the model size increases.
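Eq. (9) is not reproduced in this section. Assuming, as the interpretation above suggests, that each article is represented by topic weights from some topic model and that certain topics are labelled male or female pertinent, the per-article document-level score mirrors the word-level one but over topics. In this sketch, topic weights are used (counting each present topic once would be an alternative), and the topic ids and labels are hypothetical.

```python
from typing import Dict, Optional, Set


def female_topic_share(
    topic_weights: Dict[int, float], female_topics: Set[int], male_topics: Set[int]
) -> Optional[float]:
    """Share of female-pertinent topic weight among all gender-pertinent weight."""
    female = sum(w for t, w in topic_weights.items() if t in female_topics)
    male = sum(w for t, w in topic_weights.items() if t in male_topics)
    total = female + male
    return female / total if total > 0 else None


def document_gender_gap(
    generated: Dict[int, float],
    original: Dict[int, float],
    female_topics: Set[int],
    male_topics: Set[int],
) -> Optional[float]:
    """Absolute difference in female-topic share between a generated article
    and its original; None if either has no gender-pertinent topics."""
    p = female_topic_share(generated, female_topics, male_topics)
    q = female_topic_share(original, female_topics, male_topics)
    if p is None or q is None:
        return None
    # With two groups, |p_female - q_female| equals |p_male - q_male|.
    return abs(p - q)
```

Averaging this gap over article pairs gives a score read in the same way as the 23.68% example above.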

Gender Bias at Document Level. (a) Document-level gender bias of an LLM and its 95% confidence interval (error bar), measured using Eq. (9). For example, Grover’s gender bias score of 0.2377 indicates that, on average, the absolute difference between the percentage of male (or female) pertinent topics out of all gender pertinent topics in a news article generated by Grover and that percentage in its counterpart collected from The New York Times or Reuters is 23.77%. (b) Percentage of document-level female prejudice news articles generated by an LLM. We define a news article generated by an LLM as exhibiting female prejudice at the document level if the percentage of female pertinent topics in it is lower than that percentage in its counterpart collected from The New York Times or Reuters. (c) Decrease of female pertinent topics in document-level female prejudice news articles generated by an LLM and its 95% confidence interval (error bar). For example, Grover’s score of −38.11% reveals that, averaged across all document-level female prejudice news articles generated by Grover, the percentage of female pertinent topics is reduced from x% in their counterparts collected from The New York Times and Reuters to (x − 38.11)% in those generated by Grover.

Following the practice in previous sections, we next zoom in on the gender bias of each LLM against the underrepresented group. To this end, we define a news article generated by an LLM as exhibiting female prejudice at the document level if the percentage of female pertinent topics in it is lower than that percentage in its counterpart collected from The New York Times or Reuters. Figure 6b reports the proportion of document level female prejudice news articles generated by each LLM: Grover (37.60%, N = 5641), GPT-2 (41.33%, N = 5057), GPT-3-curie (29.97%, N = 4158), GPT-3-davinci (28.74%, N = 4871), ChatGPT (25.86%, N = 5262), Cohere (29.92%, N = 5549), and LLaMA-7B (35.91%, N = 3436). To interpret the numbers, consider Grover’s score of 37.60% as an example. It suggests that, for a news article obtained from The New York Times or Reuters, there is a probability of 0.3760 that the percentage of female pertinent topics in it is higher than the percentage found in the corresponding news article generated by Grover. In addition, Fig. 6c presents the degree of reduction in female pertinent topics in the female prejudice news articles generated by each investigated LLM: Grover − 38.11% (95% CI [− 39.55%, − 36.67%], N = 2121), GPT-2 − 43.80% (95% CI [− 45.32%, − 42.29%], N = 2090), GPT-3-curie − 30.13% (95% CI [− 31.84%, − 28.42%], N = 1246), GPT-3-davinci − 29.56% (95% CI [− 31.24%, − 27.88%], N = 1400), ChatGPT − 26.67% (95% CI [− 28.18%, − 25.17%], N = 1361), Cohere − 31.89% (95% CI [− 33.44%, − 30.35%], N = 1660), and LLaMA-7B − 31.40% (95% CI [− 33.19%, − 29.62%], N = 1234). Take Grover’s score of − 38.11% as an example. It shows that, averaged across all female prejudice news articles generated by Grover, the percentage of female pertinent topics decreases from x% in their counterparts collected from The New York Times and Reuters to (x − 38.11)% in those generated by Grover.
Figures 6b,c indicate that all investigated LLMs exhibit notable bias against females at the document level. Among them, ChatGPT performs the best in terms of both the proportion of female prejudice news articles generated and the decrease of female pertinent topics in these articles, consistent with what we observed for gender bias at the word level. Moreover, the performance of the GPT models generally improves as model size increases. The RLHF feature also appears beneficial for reducing document level bias against females, since ChatGPT outperforms GPT-3-davinci on both measures.
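The two quantities in Fig. 6b,c can be sketched for any group of interest: females here, or the Black race in Fig. 7b,c. This minimal Python illustration assumes that each article has already been reduced to one pair of numbers: the share of group-pertinent topics in the generated article and in its original counterpart.

The function name and toy data are hypothetical. Shares are fractions, so multiplying the mean change by 100 gives the percentage-point figures reported above.

```python
from statistics import mean
from typing import Optional, Sequence, Tuple


def prejudice_summary(
    pairs: Sequence[Tuple[float, float]],
) -> Tuple[float, Optional[float]]:
    """pairs holds (generated_share, reference_share) of the group of interest.

    An article counts as prejudiced against the group when its generated share
    is lower than the reference share. Returns the proportion of prejudiced
    articles and the mean change in share among them (None if there are none).
    """
    prejudiced = [(gen, ref) for gen, ref in pairs if gen < ref]
    proportion = len(prejudiced) / len(pairs)
    if not prejudiced:
        return proportion, None
    return proportion, mean(gen - ref for gen, ref in prejudiced)


# Toy data (hypothetical): female-topic shares (generated, original) for four articles.
toy = [(0.10, 0.40), (0.50, 0.50), (0.30, 0.20), (0.00, 0.25)]
proportion, change = prejudice_summary(toy)
print(f"prejudiced: {proportion:.2%}, mean change: {change * 100:.2f} points")
```

The sample sizes reported above are consistent with this reading: the N in Fig. 6c appears to equal the number of prejudiced articles. For Grover, for example, 2121 / 5641 ≈ 0.3760, which matches the 37.60% reported in Fig. 6b.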

Racial bias

The document level racial biases of the investigated LLMs, quantified using Eq. (9), are presented in Fig. 7a and listed as follows: Grover 0.4853 (95% CI [0.4759, 0.4946], N = 5527), GPT-2 0.5448 (95% CI [0.5360, 0.5536], N = 6269), GPT-3-curie 0.3126 (95% CI [0.3033, 0.3219], N = 4905), GPT-3-davinci 0.3042 (95% CI [0.2957, 0.3128], N = 5590), ChatGPT 0.3163 (95% CI [0.3077, 0.3248], N = 5567), Cohere 0.2815 (95% CI [0.2739, 0.2892], N = 6539), and LLaMA-7B 0.3982 (95% CI [0.3857, 0.4108], N = 3194). Overall, the AIGC generated by each investigated LLM exhibits significant racial bias at the document level. Among them, Cohere has the lowest racial bias score, 0.2815. This score means that, on average, the absolute difference between two percentages is 28.15%. The first is the percentage of topics pertaining to an investigated race (White, Black, or Asian) out of all race pertinent topics in a news article generated by Cohere. The second is that percentage in its counterpart collected from The New York Times or Reuters. Among the four GPT models, the trend mirrors the one observed for gender bias: larger models generally exhibit lower racial bias.

Racial Bias at Document Level. (a) Document level racial bias of an LLM and its 95% confidence interval (error bar), measured using Eq. (9). For example, the racial bias score of 0.4853 by Grover indicates that, on average, the absolute difference between the percentage of topics pertaining to an investigated race (White, Black, or Asian) out of all race pertinent topics in a news article generated by Grover and that percentage in its counterpart collected from The New York Times or Reuters is 48.53%. (b) Percentage of document level Black prejudice news articles generated by an LLM. We define a news article generated by an LLM as showing Black prejudice at the document level if the percentage of Black-race pertinent topics in it is lower than that percentage in its counterpart collected from The New York Times or Reuters. (c) Decrease of Black-race pertinent topics in document level Black prejudice news articles generated by an LLM and its 95% confidence interval (error bar). For example, the score of − 35.69% by Grover reveals that, averaged across all document level Black prejudice news articles generated by Grover, the percentage of Black-race pertinent topics is reduced from x% in their counterparts collected from The New York Times and Reuters to (x − 35.69)% in those generated by Grover.

To gain deeper insight into the bias of the LLMs against the Black race, we define a news article generated by an LLM as exhibiting Black prejudice at the document level if the percentage of Black-race pertinent topics within it is lower than the corresponding percentage found in its counterpart collected from The New York Times or Reuters. Figure 7b reports the proportion of document level Black prejudice news articles generated by each examined LLM: Grover (26.67%, N = 5527), GPT-2 (32.70%, N = 6269), GPT-3-curie (36.70%, N = 4905), GPT-3-davinci (27.50%, N = 5590), ChatGPT (24.23%, N = 5567), Cohere (27.66%, N = 6539), and LLaMA-7B (41.86%, N = 3194). For example, Grover’s score indicates that, for a news article obtained from The New York Times or Reuters, there is a probability of 0.2667 that the percentage of Black-race pertinent topics in the corresponding news article generated by Grover is lower than that percentage in the original article. Figure 7c further reports the degree of reduction in Black-race pertinent topics in the document level Black prejudice news articles generated by each investigated LLM: Grover − 35.69% (95% CI [− 37.26%, − 34.13%], N = 1474), GPT-2 − 40.97% (95% CI [− 42.32%, − 39.63%], N = 2050), GPT-3-curie − 31.01% (95% CI [− 32.34%, − 29.69%], N = 1800), GPT-3-davinci − 25.99% (95% CI [− 27.30%, − 24.69%], N = 1537), ChatGPT − 21.48% (95% CI [− 22.64%, − 20.33%], N = 1349), Cohere − 22.01% (95% CI [− 23.09%, − 20.93%], N = 1809), and LLaMA-7B − 32.91% (95% CI [− 34.53%, − 31.29%], N = 1337). Taking Grover as an example, its score of − 35.69% shows that, averaged across all document level Black prejudice news articles generated by Grover, the percentage of Black-race pertinent topics is reduced from x% in their counterparts collected from The New York Times and Reuters to (x − 35.69)% in those generated by Grover.
In summary, all investigated LLMs exhibit a significant bias against the Black race at the document level. Among them, ChatGPT consistently emerges as the best performer in terms of both the proportion of document level Black prejudice news articles generated and the reduction of Black-race pertinent topics in these articles.

Bias of AIGC under biased prompts

Malicious users could intentionally supply biased prompts to LLMs to induce them to generate content that aligns with their biased ideologies. Such deliberate manipulation can harm society by propagating misinformation, reinforcing biases, and further widening societal divides. Therefore, it is crucial to examine the bias of AIGC produced in response to biased prompts. With this objective, we simulate the actions of a malicious user who subscribes to Androcentrism and injects biased messages into the prompts provided to an LLM. For example, given the news headline “Argentina Wins the 2022 World Cup, Defeating France”, the corresponding gender-biased prompt is:

Suppose you are a journalist who believe in Androcentrism, please write a news article using the title “Argentina Wins the 2022 World Cup, Defeating France”.

Of all the analyzed LLMs, only ChatGPT demonstrates the capability to refuse content generation when faced with gender-biased prompts. For example, when presented with the aforementioned biased prompt, ChatGPT responds as follows: “As an AI language model, I do not promote or believe in any form of discrimination, bias or prejudice. Androcentrism, which is the belief in male superiority, is not something that I support.” Overall, ChatGPT declines to generate content in response to 89.13% of the gender-biased prompts.

We compare the gender bias of AIGC produced by an LLM under biased prompts with that of AIGC generated by the same LLM under unbiased prompts at the word, sentence, and document levels. Because ChatGPT declines 89.13% of requests with biased prompts, the AIGC produced by ChatGPT under biased prompts consists of news articles generated in response to the remaining 10.87% of biased requests. Figure 8 reports the comparison at the word level. In particular, Fig. 8a compares the proportion of female prejudice news articles generated by each investigated LLM under unbiased prompts with the proportion produced by the LLM under biased prompts. Here, a news article is considered to demonstrate female prejudice if the percentage of female specific words in it is lower than that percentage in its counterpart collected from The New York Times or Reuters. When provided with biased prompts, the proportions of female prejudice news articles generated by the examined LLMs are as follows (yellow bars in Fig. 8a; ∆ denotes the change relative to unbiased prompts): Grover 71.30% (N = 3105, ∆ = − 2.59%), GPT-2 70.48% (N = 2846, ∆ = 1.24%), GPT-3-curie 58.08% (N = 1775, ∆ = 2.04%), GPT-3-davinci 57.40% (N = 2249, ∆ = 1.28%), ChatGPT 63.59% (N = 412, ∆ = 6.96%), Cohere 57.16% (N = 2841, ∆ = − 2.20%), and LLaMA-7B 54.82% (N = 2446, ∆ = − 7.44%). Evaluation results corresponding to the blue bars in Fig. 8 are reported in the subsection “Word Level Bias”. As shown in Fig. 8a, when presented with biased prompts, ChatGPT demonstrates the largest increase in the proportion of female prejudice news articles. Specifically, the percentage of female prejudice news articles generated by ChatGPT under biased prompts is 63.59%, a rise of 6.96% compared to the percentage generated by ChatGPT under unbiased prompts.
For early LLMs such as Grover and GPT-2, the results under unbiased and biased prompts differ only minimally. This may be because these early models have a limited understanding of biased language. In contrast, ChatGPT shows a notable susceptibility to exploitation by malicious users, highlighting the need for future research to address this concern.

Gender bias comparison at word level: unbiased prompt versus biased prompt. (a) Comparison between the proportion of female prejudice news articles generated by an LLM under unbiased prompts and the proportion of female prejudice news articles produced by the LLM using biased prompts. (b) Comparison between the decrease of female specific words in female prejudice news articles generated by an LLM under unbiased prompts and the decrease of female specific words in female prejudice news articles generated by the LLM under biased prompts. Error bar indicates 95% confidence interval.

Figure 8b compares the degree to which female specific words decrease in female prejudice news articles generated by each examined LLM when supplied with unbiased versus biased prompts. The decreases for the LLMs under biased prompts are as follows (yellow bars in Fig. 8b): Grover − 39.15% (95% CI [− 40.44%, − 37.86%], N = 2214, ∆ = 0.49%, p = 0.605), GPT-2 − 42.80% (95% CI [− 44.16%, − 41.44%], N = 2006, ∆ = 0.58%, p = 0.559), GPT-3-curie − 25.72% (95% CI [− 27.31%, − 24.13%], N = 1031, ∆ = 0.67%, p = 0.562), GPT-3-davinci − 25.72% (95% CI [− 27.17%, − 24.27%], N = 1291, ∆ = 1.64%, p = 0.125), ChatGPT − 32.85% (95% CI [− 36.12%, − 29.59%], N = 262, ∆ = − 8.35%, p < 0.001), Cohere − 29.63% (95% CI [− 30.97%, − 28.28%], N = 1624, ∆ = 0.05%, p = 0.959), and LLaMA-7B − 34.51% (95% CI [− 35.97%, − 33.04%], N = 1341, ∆ = − 1.90%, p = 0.105). Take ChatGPT as an example. Its score of − 32.85% shows that, averaged across all female prejudice news articles generated by ChatGPT under biased prompts, the percentage of female specific words decreases from x% in their counterparts collected from The New York Times and Reuters to (x − 32.85)% in those generated by ChatGPT under biased prompts. Recall that the corresponding score attained by ChatGPT under unbiased prompts is − 24.50%, so biased prompts cause an additional reduction of 8.35%. Notably, among all the examined LLMs, only ChatGPT generates significantly fewer female specific words under biased prompts than under unbiased prompts (p < 0.001). Overall, our experimental results suggest the following findings. First, among the examined LLMs, only ChatGPT demonstrates the ability to decline content generation when presented with biased prompts. Second, ChatGPT declines a substantial portion of content generation requests involving biased prompts. However, the biased prompts that pass its screening process increase the bias of its output significantly, and more markedly than they do for any other studied LLM.
That is, once a biased prompt passes ChatGPT’s screening process, ChatGPT follows it more closely than the other LLMs do, thereby producing more biased content.

Figure 9 presents the comparison at the sentence level. In particular, Fig. 9a compares the percentage of female prejudice news articles with respect to sentiment generated by each LLM under unbiased prompts with that generated by the LLM under biased prompts. In this context, a news article generated by an LLM is defined as showing female prejudice with respect to sentiment if the average sentiment score of sentences related to females in that article is lower than the average sentiment score of sentences related to females in its counterpart obtained from The New York Times or Reuters. As illustrated by the yellow bars in Fig. 9a, when presented with biased prompts, the percentages of female prejudice news articles with respect to sentiment generated by the LLMs are: Grover 45.60% (N = 1274, ∆ = 3.05%), GPT-2 53.90% (N = 1039, ∆ = 2.57%), GPT-3-curie 42.38% (N = 741, ∆ = 2.08%), GPT-3-davinci 41.17% (N = 1042, ∆ = − 0.63%), ChatGPT 47.40% (N = 173, ∆ = 7.90%), Cohere 46.16% (N = 1471, ∆ = 0.31%), and LLaMA-7B 47.30% (N = 1372, ∆ = − 1.55%). Evaluation results corresponding to the blue bars in Fig. 9 are reported in the subsection “Sentence Level Bias”. Among the investigated LLMs, when provided with biased prompts, ChatGPT demonstrates the largest increase in the proportion of female prejudice news articles with respect to sentiment, consistent with our finding at the word level. More specifically, the proportion of female prejudice news articles with respect to sentiment produced by ChatGPT under biased prompts is 47.40%, an increase of 7.90% compared to that produced by ChatGPT under unbiased prompts. Figure 9b compares the degree of sentiment score reduction in female prejudice news articles generated by each examined LLM when supplied with unbiased versus biased prompts.
In particular, the reductions by the LLMs under biased prompts are (yellow bars in Fig. 9b): Grover − 0.1550 (95% CI [− 0.1680, − 0.1420], N = 581, ∆ = − 0.0109, p = 0.246), GPT-2 − 0.1886 (95% CI [− 0.2042, − 0.1731], N = 560, ∆ = − 0.0087, p = 0.412), GPT-3-curie − 0.1328 (95% CI [− 0.1463, − 0.1193], N = 314, ∆ = − 0.0085, p = 0.370), GPT-3-davinci − 0.1263 (95% CI [− 0.1400, − 0.1125], N = 429, ∆ = 0.0087, p = 0.392), ChatGPT − 0.1429 (95% CI [− 0.1791, − 0.1067], N = 82, ∆ = − 0.0108, p = 0.529), Cohere − 0.1365 (95% CI [− 0.1478, − 0.1253], N = 679, ∆ = 0.0093, p = 0.269), and LLaMA-7B − 0.1584 (95% CI [− 0.1703, − 0.1465], N = 649, ∆ = − 0.0096, p = 0.343). For example, consider a female prejudice news article generated by ChatGPT under biased prompts. On average, the average sentiment score of its sentences related to females is 0.1429 lower than in its counterpart collected from The New York Times or Reuters. The corresponding reduction for ChatGPT under unbiased prompts is 0.1321. Biased prompts therefore increase the reduction in sentiment toward females in ChatGPT’s news articles by an additional 0.0108. Nevertheless, for every analyzed LLM, the difference between the sentiment score reduction under unbiased prompts and that under biased prompts is not statistically significant (p ≥ 0.246).

Gender bias comparison at sentence level: unbiased prompt versus biased prompt. (a) Comparison between the percentage of female prejudice news articles with respect to sentiment generated by an LLM under unbiased prompts and the percentage of female prejudice news articles with respect to sentiment produced by the LLM using biased prompts. (b) Comparison between sentiment score reduction in female prejudice news articles generated by an LLM under unbiased prompts and sentiment score reduction in female prejudice news articles generated by the LLM under biased prompts. Error bar indicates 95% confidence interval.

Lastly, Fig. 10 reports the comparison at the document level. In particular, Fig. 10a compares the percentage of document level female prejudice news articles generated by each LLM under unbiased prompts with that generated by the LLM under biased prompts. At the document level, a news article is considered to exhibit female prejudice if the percentage of female pertinent topics within it is lower than that percentage in its counterpart collected from The New York Times or Reuters. When provided with biased prompts, the proportions of document level female prejudice news articles generated by the examined LLMs are as follows (yellow bars in Fig. 10a): Grover 38.30% (N = 5721, ∆ = 0.70%), GPT-2 45.29% (N = 5416, ∆ = 3.96%), GPT-3-curie 33.83% (N = 3795, ∆ = 3.86%), GPT-3-davinci 31.72% (N = 4868, ∆ = 2.98%), ChatGPT 59.97% (N = 647, ∆ = 34.11%), Cohere 31.16% (N = 5658, ∆ = 1.24%), and LLaMA-7B 30.70% (N = 5948, ∆ = − 5.21%). The evaluation results corresponding to the blue bars in Fig. 10 were reported previously in Fig. 6. As shown in Fig. 10a, when presented with biased prompts, ChatGPT shows the most striking increase (34.11%) in the proportion of document level female prejudice news articles, consistent with our findings at the word and sentence levels. Figure 10b compares the reduction of female pertinent topics in document level female prejudice news articles generated by each examined LLM when supplied with unbiased versus biased prompts.
In particular, the reductions by the LLMs under biased prompts are (yellow bars in Fig. 10b): Grover − 43.64% (95% CI [− 45.07%, − 42.21%], N = 2191, ∆ = − 5.53%, p < 0.001), GPT-2 − 46.69% (95% CI [− 48.10%, − 45.28%], N = 2453, ∆ = − 2.89%, p = 0.006), GPT-3-curie − 40.23% (95% CI [− 42.17%, − 38.46%], N = 1284, ∆ = − 10.10%, p < 0.001), GPT-3-davinci − 40.19% (95% CI [− 41.88%, − 38.51%], N = 1544, ∆ = − 10.63%, p < 0.001), ChatGPT − 47.91% (95% CI [− 51.50%, − 44.32%], N = 388, ∆ = − 21.24%, p < 0.001), Cohere − 40.60% (95% CI [− 42.15%, − 39.05%], N = 1763, ∆ = − 8.71%, p < 0.001), and LLaMA-7B − 42.66% (95% CI [− 44.09%, − 41.23%], N = 1826, ∆ = − 11.26%, p < 0.001). Again, when presented with biased prompts, ChatGPT exhibits the largest additional reduction of female pertinent topics (∆ = − 21.24%) in its female prejudice news articles. On average, the percentage of female pertinent topics in a document level female prejudice news article generated by ChatGPT under biased prompts is 47.91% lower than that in its counterpart collected from The New York Times or Reuters. Given that the corresponding number under unbiased prompts is 26.67%, biased prompts cause an additional reduction of female pertinent topics by 21.24%.
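This section does not state which statistical test produced the p-values above. As a rough consistency check only, the Python sketch below assumes a two-sample z-test with unequal variances. It recovers each standard error from a reported 95% confidence interval as the interval's half-width divided by 1.96. Under these assumptions, the document level GPT-2 figures yield p ≈ 0.006, in line with the reported value. The helper names are hypothetical.

```python
import math
from typing import Tuple


def se_from_ci(ci: Tuple[float, float], z: float = 1.96) -> float:
    """Standard error implied by a symmetric normal-approximation CI."""
    low, high = ci
    return (high - low) / (2 * z)


def two_sample_z(mean_a: float, ci_a: Tuple[float, float],
                 mean_b: float, ci_b: Tuple[float, float]) -> Tuple[float, float]:
    """z statistic and two-sided p-value for mean_b - mean_a, treating the two
    samples as independent with unequal variances (assumed test)."""
    se = math.hypot(se_from_ci(ci_a), se_from_ci(ci_b))
    z = (mean_b - mean_a) / se
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p


# Reduction of female pertinent topics (percentage points): unbiased vs biased prompts.
z_gpt2, p_gpt2 = two_sample_z(-43.80, (-45.32, -42.29), -46.69, (-48.10, -45.28))
z_chat, p_chat = two_sample_z(-26.67, (-28.18, -25.17), -47.91, (-51.50, -44.32))
print(f"GPT-2:   z = {z_gpt2:.2f}, p = {p_gpt2:.4f}")
print(f"ChatGPT: z = {z_chat:.2f}, p = {p_chat:.2e}")
```

The reported intervals are rounded and may not be exactly symmetric, so this reconstruction is approximate.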

Gender Bias Comparison at the Document Level: Unbiased Prompt versus Biased Prompt. (a) Comparison between the proportion of document level female prejudice news articles generated by an LLM under unbiased prompts and the proportion of document level female prejudice news articles produced by the LLM using biased prompts. (b) Comparison between the decrease of female pertinent topics in female prejudice news articles generated by an LLM under unbiased prompts and the decrease of female pertinent topics in female prejudice news articles generated by the LLM under biased prompts. Error bar indicates 95% confidence interval.

Discussion

Large language models (LLMs) have the potential to transform every aspect of our lives and work through the content they generate, known as AI-Generated Content (AIGC). To harness the advantages of this transformation, it is essential to understand the limitations of LLMs3. In response, we investigate the bias of AIGC produced by seven representative LLMs, encompassing early models like Grover and recent ones such as ChatGPT, Cohere, and LLaMA. In our investigation, we collect 8629 recent news articles from two highly regarded sources, The New York Times and Reuters, both known for their dedication to providing accurate and unbiased news. We then apply each examined LLM to generate news content with the headlines of these news articles as prompts. We evaluate the gender and racial biases of the AIGC produced by each LLM by comparing the AIGC with the original news articles at the word, sentence, and document levels, using the metrics defined in the “Methods” section. We further analyze the gender bias of each investigated LLM under biased prompts by adding gender-biased messages to prompts constructed from these news headlines, and examine the degree to which each LLM resists biased prompts.

Our investigation reveals that the AIGC produced by each examined LLM demonstrates substantial gender and racial biases at the word, sentence, and document levels. That is, the AIGC produced by each LLM deviates substantially from the news articles collected from The New York Times and Reuters in three respects: word choices related to gender or race, the sentiments and toxicities expressed toward various gender- or race-related population groups in sentences, and the semantics conveyed about these groups in documents. Moreover, the AIGC generated by each LLM exhibits notable discrimination against underrepresented population groups, i.e., females and individuals of the Black race. For example, as reported in Table 1, in comparison to the original news articles collected from The New York Times and Reuters, the AIGC generated by each LLM has a significantly higher percentage of words associated with the White race at the cost of a significantly lower percentage of words related to the Black race.

Among the investigated LLMs, the AIGC generated by ChatGPT exhibits the lowest level of bias in most of the experiments. An important factor contributing to ChatGPT’s outperformance over the other examined LLMs is its RLHF (reinforcement learning from human feedback) feature1. The effectiveness of RLHF in reducing gender and racial biases is particularly evident in ChatGPT’s outperformance over GPT-3-davinci: both LLMs have the same model architecture and size, but the former has the RLHF feature whereas the latter does not. Furthermore, among the examined LLMs, ChatGPT is the sole model that demonstrates the capability to decline content generation when provided with biased prompts. This capability showcases the advantages of the RLHF feature, which enables ChatGPT to proactively abstain from producing biased content. However, compared to other studied LLMs, when a biased prompt bypasses ChatGPT’s screening process, ChatGPT produces a significantly more biased news article in response to the prompt. Malicious users could exploit this vulnerability to generate highly biased content. Therefore, ChatGPT should incorporate additional built-in safeguards against biased prompts that manage to pass its screening process. Among the four GPT models, the degree of bias in AIGC generally decreases as the model size increases. However, the reduction in bias is substantial from 774M (GPT-2) to 13B (GPT-3-curie) and much smaller from 13B (GPT-3-curie) to 175B (GPT-3-davinci, ChatGPT) (e.g., Figs. 2a, 3a). Therefore, given that the cost of training and running an LLM increases with its size, it is advisable to opt for a properly sized LLM suited to the task at hand rather than solely pursuing a larger one.

We are witnessing a rising trend in the use of LLMs in organizations, from generating social media content to summarizing news and documents3. However, given the considerable gender and racial biases in the AIGC they generate, LLMs should be employed with caution. More seriously, we observe notable discrimination against underrepresented population groups in the AIGC produced by each examined LLM. Furthermore, as LLMs continue to advance, they become more adept at following human instructions. This capability, however, is a double-edged sword. On the one hand, LLMs become increasingly accurate at comprehending prompts from their users, thereby generating content that aligns more closely with users’ intentions. On the other hand, malicious users could exploit this capability and induce LLMs to produce highly biased content by feeding them biased prompts. As observed in our study, in comparison to other examined LLMs, ChatGPT produces significantly more biased content in response to biased prompts that manage to pass through its screening process. Therefore, LLMs should not be employed to replace humans; rather, they can be used in collaboration with humans. In doing so, humans should provide LLMs with higher-quality cues, thereby mitigating the generation of biased content to the greatest extent possible. Accordingly, it is necessary to manually review the AIGC generated by an LLM and address any issues (e.g., bias) in it before publishing it. This is particularly critical for AIGC that may involve sensitive subjects, such as AI-generated job descriptions. In addition, given the effectiveness of RLHF in mitigating bias, it is advisable to fine-tune an LLM using human feedback. Finally, it is desirable that an LLM can assist its users in producing unbiased prompts. This can be achieved by implementing a prompt engineering functionality capable of automatically crafting unbiased prompts according to user requirements.
Another way to accomplish this objective is to install a detection mechanism that can identify biased prompts and decline to produce content in response to such prompts.

Our study is not without limitations, which provide opportunities for future research along several avenues. First, our prompts are pre-defined and primarily use news headlines. People tend to trust news based on the news source and the name of the journalist22. Future research could therefore explore whether an LLM generates more or less biased content when the prompt includes both the news title and the journalist’s name. Additionally, an interactive prompt flow designed to guide or deceive LLMs could be an interesting avenue for studying LLM bias. Second, at the sentence level, the study relies on a single automated sentiment detection model, which may limit the accuracy and robustness of the sentiment analysis. In future research, multiple sentiment detection models could be employed to collaboratively predict sentiment and enhance the overall robustness of the results. Third, in line with some existing studies, we concentrate on the male and female gender categories and on the White, Black, and Asian race categories. We acknowledge this as a limitation of our study; including a broader range of minority groups would enhance the value of the research. Fourth, the media outlets selected for our study may exhibit gender and racial biases in terms of “what to report” (coverage bias)23. For example, we observe that, on average, the percentage of female words in a news article is significantly lower than the percentage of male words. This aligns with the well-established fact that women tend to receive less media coverage than men24. However, our study focuses on comparing human-written news articles and LLM outputs in terms of “how to report” (presentation bias): we use human-written headlines to prompt LLMs and then investigate how LLMs present the same facts differently from human journalists23.
As a result, our findings can be viewed as measuring the degree of presentation bias in LLMs, with coverage bias set at a similar level as human writings, while the impact of coverage bias on LLM outputs is left for future research. Last, our study highlights that AIGC of large language models exhibits gender and racial biases. Further investigation is needed to explore other types of biases, such as position bias14 and word-specific biases15. Drawing inspiration from the literature proposing debiasing methods16,17, it could be most effective to train LLMs with unbiased training data. However, as training data becomes more abundant, addressing bias in the training data becomes a challenging yet valuable direction to explore.

Methods

Data

We retrieved news articles from The New York Times and Reuters. Independent research and surveys conducted among both journalists and laypersons consistently rank these two news organizations highest in terms of their dedication to providing accurate and unbiased news22,25. For example, The New York Times attains the highest score in NewsGuard’s ratings, an authoritative assessment of news outlets conducted by trained journalists (https://www.newsguardtech.com/). Reuters is recognized as a reputable source of accurate and unbiased news, according to a study by Hannabuss25. To ensure that the evaluated LLMs were not trained on the news articles used in our study, we collected news articles from The New York Times published between December 3, 2022 and April 22, 2023, and news articles from Reuters published between April 2, 2023 and April 22, 2023. Specifically, our dataset contains 4860 news articles from The New York Times, spanning seven domains: arts (903 articles), health (956 articles), politics (900 articles), science (455 articles), sports (933 articles), US news (458 articles), and world news (255 articles). Our Reuters data comprise 3769 news articles from six domains: breaking views (466 articles), business and finance (2145 articles), lifestyle (272 articles), sports (496 articles), technology (247 articles), and world news (143 articles).
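The per-domain counts above can be tabulated and checked against the stated totals with a few lines of Python. The dictionaries simply transcribe the numbers in this paragraph. The combined total matches the 8629 articles mentioned in the Discussion.

```python
# Per-domain article counts transcribed from the Data subsection.
NYT_COUNTS = {
    "arts": 903, "health": 956, "politics": 900, "science": 455,
    "sports": 933, "US news": 458, "world news": 255,
}
REUTERS_COUNTS = {
    "breaking views": 466, "business and finance": 2145, "lifestyle": 272,
    "sports": 496, "technology": 247, "world news": 143,
}

nyt_total = sum(NYT_COUNTS.values())
reuters_total = sum(REUTERS_COUNTS.values())
print(f"NYT: {nyt_total} articles in {len(NYT_COUNTS)} domains")
print(f"Reuters: {reuters_total} articles in {len(REUTERS_COUNTS)} domains")
print(f"Combined: {nyt_total + reuters_total}")

# Share of each Reuters domain; business and finance makes up more than half.
for domain, count in REUTERS_COUNTS.items():
    print(f"  {domain}: {count / reuters_total:.1%}")
```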

Investigated LLMs

We carefully selected representative LLMs for our evaluation. One is Grover, a language model specifically developed for generating news articles26. Taking relevant news information such as news headlines as input, Grover is capable of producing news articles. The generative pre-trained transformer (GPT) architecture has gained significant recognition for its effectiveness in constructing large language models27. In recent years, OpenAI has leveraged the GPT architecture to develop a series of LLMs, pushing the boundaries of language comprehension and generation. Notable models that we investigated in our study include GPT-2 (https://github.com/openai/gpt-2), GPT-3 (https://github.com/openai/gpt-3), and ChatGPT (https://openai.com/blog/chatgpt). GPT-2, unveiled in 2019, is a groundbreaking language model renowned for its impressive text generation capabilities. GPT-3, released in 2020, represents a significant advancement over its predecessor and shows remarkable performance across various language tasks, including text completion, translation, and question answering. We investigated two distinct versions of GPT-3: GPT-3-curie with 13 billion parameters and GPT-3-davinci with 175 billion parameters. Following GPT-3, OpenAI further advanced its language models and introduced ChatGPT in November 2022. One notable improvement in ChatGPT is the integration of alignment tuning, also known as reinforcement learning from human feedback (RLHF)1.

In addition to the aforementioned LLMs, we evaluated the text generation model developed by Cohere (https://cohere.com/generate) and the LLaMA (Large Language Model Meta AI) model introduced by Meta (https://ai.facebook.com/blog/large-language-model-llama-meta-ai/). Cohere specializes in providing online customer service solutions built on natural language processing models; in 2022, it introduced a text generation model with 52.4 billion parameters. Meta released LLaMA in 2023 in a range of model sizes, from 7 billion to 65 billion parameters. Despite having fewer parameters than other popular LLMs, LLaMA has demonstrated competitive performance in generating high-quality text. Table 2 summarizes the LLMs investigated in this study.

Each investigated LLM was instructed to generate a news article from a prompt constructed from the headline of each news article we collected from The New York Times and Reuters. For example, given the news headline “Argentina Wins the 2022 World Cup, Defeating France”, the corresponding prompt is:

Use “Argentina Wins the 2022 World Cup, Defeating France” as a title to write a news article.

The only exception is Grover, which took the news headline directly as its input without any additional prompting. We accessed the closed-source LLMs (GPT-3-curie, GPT-3-davinci, ChatGPT, and Cohere) through API calls to their respective services, and downloaded and ran the open-source LLMs (Grover, GPT-2, and LLaMA-7B) locally. Table 3 presents the average word count of the news articles generated by each investigated LLM, as well as that of the news articles collected from The New York Times and Reuters.
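The prompting rule can be sketched as a small helper. This is a minimal illustration rather than the study's code: the function name `build_prompt` and the model label `"Grover"` are hypothetical, and the use of straight double quotes around the headline is an assumption.

```python
def build_prompt(headline: str, model: str) -> str:
    """Return the model input for a news headline (hypothetical helper).

    Grover consumes the raw headline; every other model receives the
    instruction template described in the text.
    """
    if model == "Grover":
        return headline
    # Assumption: straight double quotes wrap the headline in the template.
    return f'Use "{headline}" as a title to write a news article.'
```

For example, `build_prompt(headline, "Grover")` returns the headline unchanged, while any other model label yields the full instruction.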

Evaluating word level bias

The word level bias of AIGC is measured as the degree to which the distribution of words associated with different population groups (e.g., male and female) in a news article generated by an LLM deviates from the reference distribution obtained from its counterpart (i.e., the original news article) collected from The New York Times or Reuters. Concretely, for an investigated bias, let \({v}_{1},{v}_{2},\dots ,{v}_{M}\) denote its \(M\) distinct population groups. Taking binary gender bias as an example, we have \({v}_{1}=\text{female}\) and \({v}_{2}=\text{male}\). Given a news article \(h\) generated by an LLM \(\mathcal{L}\), let \({n}_{h,1}^{\mathcal{L}},{n}_{h,2}^{\mathcal{L}},\dots ,{n}_{h,M}^{\mathcal{L}}\) be the numbers of words pertaining to population groups \({v}_{1},{v}_{2},\dots ,{v}_{M}\), respectively, in the article. Continuing with the example of binary gender bias, \({n}_{h,1}^{\mathcal{L}}\) and \({n}_{h,2}^{\mathcal{L}}\) are the counts of female-related and male-related words, respectively, in news article \(h\) generated by LLM \(\mathcal{L}\). Accordingly, the distribution of words associated with different population groups in news article \(h\) generated by LLM \(\mathcal{L}\) is given by

\[{f}_{h}^{\mathcal{L}}({v}_{m})=\frac{{n}_{h,m}^{\mathcal{L}}}{{n}_{h}^{\mathcal{L}}},\quad m=1,2,\dots ,M,\]

where \({n}_{h}^{\mathcal{L}}=\sum_{m=1}^{M}{n}_{h,m}^{\mathcal{L}}\). Let \(o\) denote \(h\)’s counterpart collected from The New York Times or Reuters. The reference distribution for \({f}_{h}^{\mathcal{L}}\) is

\[{f}_{o}({v}_{m})=\frac{{n}_{o,m}}{{n}_{o}},\quad m=1,2,\dots ,M,\]

where \({n}_{o,m}\) denotes the number of words pertaining to population group \({v}_{m}\) in news article \(o\) and \({n}_{o}=\sum_{m=1}^{M}{n}_{o,m}\). With \({f}_{h}^{\mathcal{L}}\) and \({f}_{o}\) defined, the word level bias of news article \(h\) generated by LLM \(\mathcal{L}\) is measured as the Wasserstein distance (a.k.a. the earth mover’s distance28) between them:

\[W({f}_{h}^{\mathcal{L}},{f}_{o})=\min_{\gamma \in \Gamma ({f}_{h}^{\mathcal{L}},{f}_{o})}\sum_{i=1}^{M}\sum_{j=1}^{M}\gamma ({v}_{i},{v}_{j})\,d({v}_{i},{v}_{j}),\]

where \(\Gamma ({f}_{h}^{\mathcal{L}},{f}_{o})\) is the set of joint distributions over pairs of population groups whose marginals are \({f}_{h}^{\mathcal{L}}\) and \({f}_{o}\), and \(d({v}_{i},{v}_{j})=1\) if \({v}_{i}\) and \({v}_{j}\) are different population groups and 0 otherwise. The Wasserstein distance is widely used to measure the difference between probability distributions, such as word distributions29. Under this ground distance, it can be expressed explicitly when \(M=2\) or \(3\) as follows:

\[W({f}_{h}^{\mathcal{L}},{f}_{o})=\begin{cases}\left|{f}_{h}^{\mathcal{L}}({v}_{1})-{f}_{o}({v}_{1})\right|, & M=2,\\ \frac{1}{2}\sum_{m=1}^{3}\left|{f}_{h}^{\mathcal{L}}({v}_{m})-{f}_{o}({v}_{m})\right|, & M=3.\end{cases}\]

In our evaluation, the Wasserstein distance takes its value within the range \([0,1]\), with a higher value indicating a greater deviation between \({f}_{h}^{\mathcal{L}}\) and \({f}_{o}\); the maximum of 1 is reached when the two articles mention entirely disjoint sets of population groups. In particular, \(W({f}_{h}^{\mathcal{L}},{f}_{o})=0\) signifies that the two distributions \({f}_{h}^{\mathcal{L}}\) and \({f}_{o}\) are identical. The word level bias of LLM \(\mathcal{L}\) is then measured as the average word level bias over all \((h,o)\) pairs:

\[{\overline{W}}^{\mathcal{L}}=\frac{1}{N}\sum_{(h,o)}W({f}_{h}^{\mathcal{L}},{f}_{o}),\]

where \(N\) represents the number of \((h,o)\) pairs. Note that \({f}_{h}^{\mathcal{L}}\) of a generated news article \(h\) is undefined if it contains no words related to any population group (i.e., \({n}_{h}^{\mathcal{L}}=0\)). Similarly, \({f}_{o}\) of an original news article \(o\) is undefined if it contains no words related to any population group (i.e., \({n}_{o}=0\)). Therefore, an \((h,o)\) pair is dropped from the computation of the equation above if \(h\) or \(o\) in the pair contains no words related to any population group.
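A minimal sketch of the word level computation, assuming the per-article group word counts have already been extracted; the function names are hypothetical. Because \(d\) is 1 between any two different groups and 0 otherwise, the minimum transport cost reduces to half the L1 distance between the two distributions (the total variation distance), so the sketch uses that closed form instead of solving a transport problem.

```python
from typing import Iterable, List, Optional, Sequence, Tuple


def group_distribution(counts: Sequence[int]) -> Optional[List[float]]:
    """Normalize per-group word counts into a distribution over groups.

    Returns None when the article has no group-related words, because the
    distribution is undefined in that case.
    """
    total = sum(counts)
    if total == 0:
        return None
    return [c / total for c in counts]


def wasserstein_discrete(p: Sequence[float], q: Sequence[float]) -> float:
    """Wasserstein distance under the 0/1 ground distance between groups.

    With this ground distance the optimal transport cost equals the total
    variation distance, i.e. half the L1 distance between p and q.
    """
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))


def word_level_bias(pairs: Iterable[Tuple[Sequence[int], Sequence[int]]]) -> float:
    """Average distance over (generated counts, original counts) pairs.

    Pairs where either article has no group-related words are dropped.
    """
    scores = []
    for gen_counts, orig_counts in pairs:
        f_h = group_distribution(gen_counts)
        f_o = group_distribution(orig_counts)
        if f_h is None or f_o is None:
            continue  # undefined distribution: skip this (h, o) pair
        scores.append(wasserstein_discrete(f_h, f_o))
    return sum(scores) / len(scores) if scores else float("nan")
```

For instance, with counts ordered as (female, male), a generated article with counts (1, 3) compared against an original with counts (2, 2) gives distributions (0.25, 0.75) and (0.5, 0.5), and hence a distance of 0.25.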

One type of word level bias investigated in our study is binary gender bias, with two population groups (female and male), a setting commonly used for evaluating the gender bias of language models30,31,32. Because detecting gender accurately is challenging, we primarily follow the approach outlined in the literature1 and use a commonly employed word list that reflects gender differences, presented in Table 4 below.
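Counting gender-related words against a word list can be sketched as follows. The two sets are a short hypothetical excerpt standing in for the full list in Table 4, and the lowercase regular-expression tokenizer is an assumption, since the tokenization used in the study is not specified.

```python
import re
from typing import Tuple

# Hypothetical excerpt standing in for the full word list in Table 4.
FEMALE_WORDS = {"she", "her", "hers", "herself", "woman", "women", "mother", "daughter"}
MALE_WORDS = {"he", "him", "his", "himself", "man", "men", "father", "son"}


def gender_counts(text: str) -> Tuple[int, int]:
    """Return (female, male) word counts, matching the order v1 = female, v2 = male."""
    # Assumed tokenizer: maximal runs of lowercase letters.
    tokens = re.findall(r"[a-z]+", text.lower())
    female = sum(token in FEMALE_WORDS for token in tokens)
    male = sum(token in MALE_WORDS for token in tokens)
    return female, male
```

The returned pair plays the role of \(({n}_{h,1}^{\mathcal{L}},{n}_{h,2}^{\mathcal{L}})\) for a generated article, or \(({n}_{o,1},{n}_{o,2})\) for an original one.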

The other type of bias evaluated is racial bias, with three population groups: White, Black, and Asian. According to the 2020 U.S. Census, these three groups rank as the top three population groups and collectively make up the majority (80%) of the U.S. population (https://www.census.gov/library/visualizations/interactive/race-and-ethnicity-in-the-united-state-2010-and-2020-census.html). To identify race-related words in a document, we employed the patterns “race descriptor + occupation” and “race descriptor + gender-related word”. In our study, the race descriptors are white, black, and Asian. We used the occupations listed in the Occupational Information Network, a comprehensive occupation database sponsored by the U.S. Department of Labor (https://www.onetonline.org/find/all), and the gender-related words in Table 4. Under these patterns, a race descriptor is counted as a race-related word only if it is followed by an occupation or a gender-related word. For example, the word “black” in the phrase “black teacher” is considered a race-related word, whereas “black” in the phrase “black ball” is not. Furthermore, we identified additional race-related words by determining the race of each individual mentioned in the news articles. Specifically, we used Google’s Cloud Natural Language API (https://cloud.google.com/natural-language) to identify the names of individuals mentioned in either the original news articles or the news articles generated by the examined LLMs. The API also provided links to the respective Wikipedia pages of these individuals. Next, we manually determined the race (Black, White, or Asian) of each individual by reviewing the person’s Wikipedia page. Through this process, we identified a total of 27,453 individual names as race-related words. For instance, “Donald Trump” is a race-related word associated with the White race.
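The descriptor rule and the name lookup can be sketched together as below. This is an illustrative sketch, not the study's implementation: the occupation and gender sets are hypothetical excerpts, only single-token occupations are matched, and counting every occurrence of a resolved name is an assumption.

```python
import re
from typing import Dict, Optional

RACE_DESCRIPTORS = {"white": "White", "black": "Black", "asian": "Asian"}
# Hypothetical excerpts; the study used O*NET occupations and the Table 4 gender words.
OCCUPATIONS = {"teacher", "nurse", "engineer", "lawyer", "doctor"}
GENDER_WORDS = {"man", "men", "woman", "women", "boy", "girl"}


def race_counts(text: str, person_races: Optional[Dict[str, str]] = None) -> Dict[str, int]:
    """Count race-related words via descriptor patterns plus resolved person names.

    person_races maps a person's name to a race label (resolved manually from
    Wikipedia in the study); here each occurrence of a name counts once.
    """
    tokens = re.findall(r"[a-z]+", text.lower())
    counts = {race: 0 for race in RACE_DESCRIPTORS.values()}
    # A descriptor counts only if the next token is an occupation or gender word.
    for current, following in zip(tokens, tokens[1:]):
        race = RACE_DESCRIPTORS.get(current)
        if race is not None and (following in OCCUPATIONS or following in GENDER_WORDS):
            counts[race] += 1
    for name, race in (person_races or {}).items():
        counts[race] += text.count(name)
    return counts
```

Multi-word O*NET titles (for example, “software developer”) would require phrase matching rather than the single-token lookahead used here.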

Evaluating sentence level bias

The sentence level bias of AIGC is evaluated as the difference, in terms of expressed sentiment and toxicity, between the sentences associated with each population group in AIGC and their counterparts in the news articles collected from The New York Times and Reuters. Given a focal bias (e.g., binary gender bias), we assigned a population group (e.g., male or female) to a sentence by counting the number of words related to each population group within the sentence; the population group with the highest count was assigned to the sentence. Take the following sentence as an example:

French’s book gave similar scrutiny to the novelist himself, uncovering his harsh treatment of some of the women in his life.

This sentence contains three male-specific words (“himself” and two instances of “his”) and one female-specific word (“women”). Therefore, it is designated as a sentence associated with the male population group. Sentences with an equal count of words related to each population group, or with no words related to any population group, were discarded. Next, we assessed the sentiment and toxicity scores of each sentence. Sentiment analysis reveals the emotional tone conveyed by a sentence. To evaluate the sentiment score of a sentence, we employed TextBlob (https://github.com/sloria/TextBlob), a widely used Python package known for its strong performance in sentiment analysis33,34. The sentiment score of a sentence ranges from -1 to 1, with -1 being the most negative, 0 neutral, and 1 the most positive. Toxicity analysis assesses the extent to which rudeness, disrespect, and profanity are present in a sentence. For this analysis, we used Detoxify (https://github.com/unitaryai/detoxify), a Python package that is an effective tool for toxicity analysis35,36. The toxicity score of a sentence ranges from 0 to 1, with a higher score indicating a greater degree of rudeness, disrespect, and profanity.
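The group-assignment step can be sketched as follows, using a hypothetical excerpt of the gender word list. The text states that sentences with equal counts are discarded; for three groups, treating any tie for the top count as a discard is our assumption, as is the tokenizer.

```python
import re
from typing import Dict, Optional, Set

# Hypothetical excerpt of the gender word list (Table 4).
GENDER_GROUPS: Dict[str, Set[str]] = {
    "female": {"she", "her", "woman", "women"},
    "male": {"he", "him", "his", "himself", "man", "men"},
}


def assign_group(sentence: str, group_words: Dict[str, Set[str]]) -> Optional[str]:
    """Return the group with the most related words, or None if tied or absent."""
    tokens = re.findall(r"[a-z]+", sentence.lower())
    counts = {group: sum(token in words for token in tokens)
              for group, words in group_words.items()}
    top = max(counts.values())
    if top == 0:
        return None  # no group-related words: discard the sentence
    leaders = [group for group, count in counts.items() if count == top]
    return leaders[0] if len(leaders) == 1 else None  # tie at the top: discard
```

Each retained sentence could then be scored with TextBlob’s `TextBlob(sentence).sentiment.polarity` and Detoxify’s `Detoxify("original").predict(sentence)["toxicity"]`; which Detoxify checkpoint the study used is not stated, so `"original"` is an assumption.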

For an investigated bias (e.g., gender bias), let \({v}_{1},{v}_{2},\dots ,{v}_{M}\) (e.g., male and female) be its \(M\) population groups. Given a news article \(h\) generated by an LLM \(\mathcal{L}\), we computed the average sentiment score \({s}_{h,i}^{\mathcal{L}}\) of the sentences in \(h\) that pertain to population group \({v}_{i}\), \(i=1,2,\dots ,M\). Let \(o\) denote \(h\)’s counterpart collected from The New York Times or Reuters, and let \({s}_{o,i}\) be the average sentiment score of the sentences in \(o\) that pertain to population group \({v}_{i}\), \(i=1,2,\dots ,M\). The sentiment bias of news article \(h\) generated by LLM \(\mathcal{L}\) is measured as the maximum absolute difference between \({s}_{h,i}^{\mathcal{L}}\) and \({s}_{o,i}\) across all population groups:

\[{S}^{\mathcal{L}}(h,o)={\text{max}}_{i=1,2,\dots ,M}\left\{|{s}_{h,i}^{\mathcal{L}}-{s}_{o,i}|\right\}.\]

The sentiment bias of LLM \(\mathcal{L}\) is then measured as the average sentiment bias over all \((h,o)\) pairs:

\[{\overline{S}}^{\mathcal{L}}=\frac{1}{N}\sum_{(h,o)}{S}^{\mathcal{L}}(h,o),\]

where \(N\) denotes the number of \((h,o)\) pairs. Similarly, the toxicity bias of LLM \(\mathcal{L}\) is evaluated using

\[{\overline{T}}^{\mathcal{L}}=\frac{1}{N}\sum_{(h,o)}{T}^{\mathcal{L}}(h,o),\]

where \({T}^{\mathcal{L}}(h,o)={\text{max}}_{i=1,2,\dots ,M}\left\{|{t}_{h,i}^{\mathcal{L}}- {t}_{o,i}|\right\}\), \({t}_{h,i}^{\mathcal{L}}\) denotes the average toxicity score of sentences in \(h\) that pertain to population group \({v}_{i}\), and \({t}_{o,i}\) represents the average toxicity score of sentences in \(o\) that are associated with population group \({v}_{i}\).
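Because sentiment bias and toxicity bias share the same form (the maximum group-wise gap, averaged over pairs), one sketch covers both. It assumes each sentence has already been assigned a group and scored; the function names are hypothetical. How the study handles a group that appears in only one article of a pair is not stated, so comparing only groups present in both articles, and dropping pairs that share none, is an assumption.

```python
from statistics import mean
from typing import Dict, Iterable, List, Optional, Tuple

Scored = List[Tuple[str, float]]  # (population group, sentence score) pairs


def group_means(scored: Scored) -> Dict[str, float]:
    """Average score of the sentences assigned to each population group."""
    by_group: Dict[str, List[float]] = {}
    for group, score in scored:
        by_group.setdefault(group, []).append(score)
    return {group: mean(scores) for group, scores in by_group.items()}


def pair_bias(generated: Scored, original: Scored) -> Optional[float]:
    """Maximum absolute gap in group-average scores between h and o.

    Assumption: only groups present in both articles are compared;
    returns None when the articles share no group.
    """
    s_h, s_o = group_means(generated), group_means(original)
    shared = s_h.keys() & s_o.keys()
    if not shared:
        return None
    return max(abs(s_h[group] - s_o[group]) for group in shared)


def llm_bias(pairs: Iterable[Tuple[Scored, Scored]]) -> float:
    """Average pair-level bias over all (h, o) pairs with a defined value."""
    values = [b for b in (pair_bias(h, o) for h, o in pairs) if b is not None]
    return mean(values) if values else float("nan")
```

Passing sentiment scores yields the sentiment bias of an LLM; passing toxicity scores for the same sentences yields its toxicity bias.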

Evaluating document level bias

The document level bias of AIGC is assessed as the difference between documents in AIGC and their counterparts produced by The New York Times and Reuters, in terms of the semantics they express regarding each investigated population group. To this end, we leveraged topic modeling, a technique widely used to uncover prevalent semantics in a collection of documents37. Specifically, a topic model is trained to discover prevailing topics in a corpus of documents, with each topic represented as a distribution over words that measures how likely each word is to be used when expressing the semantics of that topic. Given a document, the trained topic model can then infer its topic distribution, which measures how much of the document’s content is devoted to discussing each topic.

We used topic modeling to discover a set of topics from the news corpus, which consists of the news articles collected from The New York Times and Reuters as well as those generated by the investigated LLMs. We first tokenized the news corpus and lemmatized the words using spaCy (https://spacy.io/). We then trained the Latent Dirichlet Allocation (LDA) model38 on the news corpus using Gensim39, with the number of topics set to \(K\). Once trained, the model also inferred for each news article \(d\) its topic distribution vector \({t}_{d}\), a vector of length \(K\) whose \(k\)th entry \({t}_{d,k}\) denotes the proportion of content in news article \(d\) devoted to discussing topic \(k\), for \(k=1,2,\dots ,K\). We trained LDA with \(K=200,250,300\) and found that \(K=250\) yielded the best (lowest) perplexity on a sample of held-out documents. Consequently, we used the \(250\)-topic model in the following analysis.
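A sketch of this pipeline with spaCy and Gensim, under stated assumptions: the `en_core_web_sm` pipeline, keeping only alphabetic tokens, the fixed random seed, and all function names are ours rather than the study’s. Gensim’s `log_perplexity` returns a per-word likelihood bound, which Gensim itself converts to perplexity as 2 raised to the negative bound; the sketch does the same. The library imports sit inside the functions so that the two pure helpers work without either package installed.

```python
from typing import Dict, List, Sequence, Tuple


def dense_topic_vector(doc_topics: Sequence[Tuple[int, float]], num_topics: int) -> List[float]:
    """Turn sparse (topic_id, proportion) pairs into the length-K vector t_d."""
    vec = [0.0] * num_topics
    for topic_id, proportion in doc_topics:
        vec[topic_id] = proportion
    return vec


def best_num_topics(perplexities: Dict[int, float]) -> int:
    """Return the number of topics K with the lowest held-out perplexity."""
    return min(perplexities, key=perplexities.get)


def lemmatize(texts: Sequence[str], model_name: str = "en_core_web_sm") -> List[List[str]]:
    """Tokenize and lemmatize documents with spaCy (assumed pipeline and filtering)."""
    import spacy  # imported lazily; only needed for this step
    nlp = spacy.load(model_name, disable=["parser", "ner"])
    return [[tok.lemma_.lower() for tok in doc if tok.is_alpha] for doc in nlp.pipe(texts)]


def fit_lda(train_docs: List[List[str]], heldout_docs: List[List[str]],
            num_topics: int, seed: int = 0):
    """Train Gensim LDA and report perplexity on held-out documents."""
    from gensim.corpora import Dictionary  # imported lazily
    from gensim.models import LdaModel
    dictionary = Dictionary(train_docs)
    corpus = [dictionary.doc2bow(doc) for doc in train_docs]
    lda = LdaModel(corpus=corpus, id2word=dictionary,
                   num_topics=num_topics, random_state=seed)
    heldout = [dictionary.doc2bow(doc) for doc in heldout_docs]
    perplexity = 2 ** (-lda.log_perplexity(heldout))  # per-word bound -> perplexity
    return lda, dictionary, perplexity
```

To choose \(K\), one could call `fit_lda` for \(K=200,250,300\), collect the perplexities in a dictionary keyed by \(K\), and pass it to `best_num_topics`. The topic vector of an article is then `dense_topic_vector(lda.get_document_topics(bow, minimum_probability=0.0), K)`; the dense conversion is needed because `get_document_topics` returns sparse `(topic_id, probability)` pairs and may omit topics with negligible probability.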