Abstract

Refractive distortions in underwater images usually occur when the images are captured through a dynamic refractive water surface, for example when unmanned aerial vehicles capture shallow underwater scenes from the surface of the water or when autonomous underwater vehicles observe floating platforms in the air. We propose an end-to-end deep neural network that learns to remove refractive distortions and restore real scene images. The network adopts an encoder-decoder architecture with a specially designed attention module. The attention image and the distortion field generated by the network allow the distorted areas to be restored in more detail. Qualitative and quantitative experimental results show that the proposed framework effectively eliminates refractive distortions and refines image details. We also test the proposed framework in practical applications by embedding it into an NVIDIA JETSON TX2 platform, and the results demonstrate its practical value.

1 Introduction

Underwater images usually suffer from refractive distortions when they are observed through a dynamic refractive water surface (Seemakurthy and Rajagopalan 2015; Xiong and Heidrich 2021). This condition can occur when certain unmanned systems capture images along either the water-to-air or the air-to-water path, such as unmanned aerial vehicles (UAVs) capturing shallow underwater scenes from the surface of the water, or autonomous underwater vehicles (AUVs) observing floating platforms above the water (Fig. 1). Many existing algorithms for underwater image enhancement focus on severe color distortions (Peng and Cosman 2017; Zhou et al. 2023, 2024), which arise because different wavelengths of light attenuate at different rates during underwater propagation (Li et al. 2022b). Color distortion worsens as the detection distance increases. By contrast, refractive imaging tends to involve shorter detection distances, so the captured images suffer from only slight color distortions. However, the refraction of light causes serious refractive distortions, which result in significant losses of the objects’ morphological information. Therefore, removing refractive distortions is important.

Over the past decades, numerous methods have been proposed to address the issue of refractive distortions. Many works rely on videos or consecutive images and obtain the refractive geometry information of objects at different time points to restore the distorted scenes (Efros et al. 2004; Donate et al. 2006; Donate and Ribeiro 2007; Zhang and Yang 2019; Zhang et al. 2021). These methods place high demands on equipment installation and data acquisition, which limits their practical value. The deep learning-based method of Li et al. (2018) achieves competitive restoration results using a single distorted image without relying on videos or consecutive frames. Inspired by that work, a self-attention generative adversarial network (Li et al. 2020) was proposed to remove distortions in underwater images. However, these approaches process the data offline after collection. The motion platform lacks portability, and deep learning-based methods still need improvements in computing efficiency. Therefore, deep learning models that process distorted underwater images efficiently and rapidly are needed to enable mobile online detection by unmanned systems.

In this study, we propose an effective and efficient deep neural network for nonrigid refractive distortion removal. This network builds upon the insights from previous works and aims to satisfy the requirements in practical applications. The main contributions of this study are summarized as follows:

(1) We propose an attention-based deep neural network for the removal of refractive distortions. This network offers a more lightweight and computationally efficient solution for practical applications in underwater scenes.

(2) We deploy the deep network into practical applications by embedding a pretrained model into an NVIDIA JETSON TX2 installed on underwater vehicles.

The remainder of the paper is organized as follows. Section 2 introduces the related works. Section 3 presents the detailed method. Section 4 reports and analyzes the experimental results. Section 5 elaborates on the conclusions.

2 Related works

The removal of refractive distortions from underwater images can be divided into two categories: video sequence- and deep learning-based methods.

In video sequence-based methods, researchers record a video of a stationary scene under varying distortions to obtain a sequence of distorted images across consecutive frames. Then, they estimate a nondistorted image by averaging the distorted consecutive image frames (Levin et al. 2008). To make fuller use of the information in multiple frames, the concept of ‘lucky patches’ was proposed as a solution for removing distortions. Researchers attempt to locate the ‘lucky patches’ in the multiframe inputs using manifold embedding (Efros et al. 2004), clustering (Donate et al. 2006; Donate and Ribeiro 2007), and Fourier-based averaging (Wen et al. 2007) algorithms. However, deploying this solution in practical applications is difficult due to the stringent data acquisition requirements and the lack of flexibility in operation.

Meanwhile, deep learning-based methods have been widely used in numerous computer vision tasks, and they have significantly advanced underwater image restoration. Li et al. (2018) proposed a deep learning framework for correcting distortions in a single underwater image, which is trained on sharp and distorted image pairs. Thapa et al. (2021) presented the distortion-guided network (DG-Net), which employs a physically constrained convolutional network to estimate the distortion map from the refracted image. The two works inherit the advantages of generative adversarial networks (GANs) (Goodfellow et al. 2014). All these approaches perform learning-based single-image restoration by training a model on a large-scale dataset, and the resulting models are superior to video sequence-based methods. However, no significant breakthrough has been made to date on the portability of deep models for real-world applications. Other algorithms, such as restoration methods (Zhang and Yang 2019) and compressed sensing methods (James et al. 2019; Zhang et al. 2021), show great potential in restoring distorted underwater images. The hierarchical structure optimization-based approach introduces a novel strategy to estimate deformations in an image using nearest neighbor estimators (Tian and Narasimhan 2012, 2015). However, these algorithms either require a long sequence of distorted images as input or spend significant time estimating a nondistorted image.

Deep neural networks, especially GANs, have achieved remarkable success in numerous image processing tasks, such as image generation (De Souza et al. 2023), medical image enhancement (Zhong et al. 2023), image fusion (Rao et al. 2023a, b), super resolution (Wang et al. 2018; Xie et al. 2018), and image translation (Ko et al. 2023; Boroujeni and Razi 2024). Encoder-decoder convolutional networks have proven effective in learning discriminative features across various applications (Isola et al. 2017; Li et al. 2018; Thapa et al. 2020). The attention mechanism has also played an important role in improving network architectures in recent years (Li et al. 2022a; Roy and Bhaduri 2023). Many previous works have utilized fundamental network architectures and augmented them with novel components. These approaches can remove refractive distortions. However, we encountered several challenges, such as computational efficiency and platform compatibility, when deploying them in our practical applications.

Therefore, we build upon the insights from previous work (Li et al. 2018) and introduce several improvements, proposing a deep neural network that meets the requirements of practical applications while retaining the advantages of deep learning. The proposed network rapidly removes nonrigid refractive distortions and offers a more lightweight and computationally efficient solution. Moreover, it can be deployed on an embedded NVIDIA JETSON TX2 platform, which demonstrates its practical value in unmanned systems such as AUVs or UAVs.

3 Proposed method

3.1 Wavy water surface imaging model

According to Snell’s law, certain phenomena occur at the interface as light travels from one medium to another (Cap et al. 2003). Some of the light undergoes refraction, while the rest is reflected (De Greve 2006). When an optical camera is deployed under the water to capture a natural scene in the air or when the camera is in the air to capture an underwater scene (Fig. 1), the information captured by the imaging plane of the camera comes directly from the refracted light. When the water-air interface is in a natural, undisturbed, still condition, the image captured by a camera perpendicular to the interface still shows the scene in its natural state. However, the water is always fluctuating in practical applications. The shooting angle of the camera, the three-dimensional structure of the scene, and the degree of water surface fluctuation all affect the refractive imaging. These factors lead to a shift in the pixel positions of the captured image, so the imaging results differ from the natural state of the scene. This phenomenon is known as refractive distortion in underwater imaging. Refractive distortions can hinder the effectiveness of practical applications, such as checking the corrosion of bridges using an underwater optical camera installed on AUVs or performing underwater missions to shoot near-coastal scenes. We suppose that the optical axis of the camera is perpendicular to the surface of the water, and that the formation of a distorted image is mainly caused by refraction at the fluctuating water surface. The overview of the refractive imaging model is shown in Fig. 2. The imaging model can be mathematically expressed as

\[ I_d\left(x,t\right) = I\left(x + W(x,t)\right) \quad (1) \]

where \(I_d\left(x,t\right)\) represents the distorted underwater image observed at time t, W(x, t) represents the unknown distortion field causing distortion at time t, I(x) represents a clear image without any distortion.

W(x, t) is a two-dimensional vector that is linearly related to the gradient of the height of the water surface at time t. Therefore, the distortion field can be determined by the height of the water surface h(x, t) at time t

\[ W(x,t) = \alpha \nabla h(x,t) \quad (2) \]

where \(\alpha\) is a constant and \(\nabla\) denotes the gradient. The incidence vector \(v_{\text{in}}\) and the normal vector \(\vec{n}\) together affect the projective vector \(v_{\text{out}}\). The normal vector \(\vec{n}\) in the refractive plane can be represented by two tangent vectors as

A constraint \(\frac{{v_{{{\text{out}}}}^{x} }}{{v_{{{\text{out}}}}^{y} }} = \frac{{h_{x} }}{{h_{y} }}\) holds between the x and y components of the projective vector and the components \((h_x, h_y)\) derived from the height of the water surface h. The projective vector directly influences the direction of the water surface. Therefore, \((h_x, h_y)\) directly affects the direction of W(x, t). The magnitude of W(x, t) can be obtained from Snell’s law.

If the mean value of the water depth \(h_0\) in the refraction scene is known a priori, then \(\left\| W \right\| = h_{0} \cdot \tan (\theta - \theta ^{\prime})\). However, \(\nabla h \ll 1\) and \(\sin \theta \approx \tan \theta \approx \theta\) when water surface fluctuations are close to calm conditions. Thus, this case can be expressed as
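The small-angle step can be sketched as follows. This is a hedged reconstruction, not the authors' own expression: the relative refractive index \(n_r\) (about 1.33 for water against air), the orientation of Snell's law as \(\sin\theta = n_r \sin\theta'\), and the identification of the incidence angle with the surface slope are all assumptions introduced here.

```latex
\begin{aligned}
\sin\theta = n_r \sin\theta' \;&\Rightarrow\; \theta' \approx \frac{\theta}{n_r}, \\
\theta \approx \tan\theta \;&\approx\; \left\| \nabla h \right\|, \\
\left\| W \right\| = h_0 \tan(\theta - \theta') \;&\approx\; h_0 \left(1 - \frac{1}{n_r}\right) \left\| \nabla h \right\|, \\
W(x,t) \;&\approx\; h_0 \left(1 - \frac{1}{n_r}\right) \nabla h(x,t).
\end{aligned}
```

Under these assumptions, the constant in the linear relation between W(x, t) and the surface gradient would be \(\alpha \approx h_0 (1 - 1/n_r)\), roughly \(0.25\,h_0\) for water.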

Equations (1) to (5) describe the nonrigid mathematical formulation of the wavy water surface imaging model (the refractive imaging model), in which the distortion field is determined by the height of the water surface h(x, t) at time t. Given that \(\alpha\) and \(\nabla h\) are unknown, W(x, t) cannot be obtained directly, and the nondistorted underwater image I(x) therefore cannot be derived analytically. Because a deep learning network enables end-to-end image restoration, the nonrigid distortion can instead be removed by estimating the warping field with a deep learning model. Thus, Eq. (1) can be rewritten as

where \(\widetilde{W}(x)\) represents the deep learning mapping distortion field. Distorted images can be restored simply by estimating \(\widetilde{W}(x)\) from an end-to-end network model.
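The warping model above can be illustrated with a minimal NumPy sketch. It assumes the forward model samples the clean image at x + W(x), that the field is a scaled gradient of the surface height, and that border pixels are clamped; the function names `distortion_field` and `warp_bilinear` are hypothetical and are not taken from the authors' code.

```python
import numpy as np


def distortion_field(height, alpha):
    """Return alpha * grad(h) as an (H, W, 2) array of (dx, dy) pixel offsets."""
    # np.gradient returns the derivative along rows (y) first, then columns (x).
    dh_dy, dh_dx = np.gradient(height)
    return alpha * np.stack([dh_dx, dh_dy], axis=-1)


def warp_bilinear(image, field):
    """Sample `image` at x + field(x) using bilinear interpolation.

    Sampling positions that fall outside the image are clamped to the border
    (an assumption made for this sketch).
    """
    rows, cols = image.shape[:2]
    yy, xx = np.meshgrid(np.arange(rows), np.arange(cols), indexing="ij")
    x = np.clip(xx + field[..., 0], 0, cols - 1)
    y = np.clip(yy + field[..., 1], 0, rows - 1)

    # Integer corners surrounding each sampling position.
    x0 = np.floor(x).astype(int)
    y0 = np.floor(y).astype(int)
    x1 = np.minimum(x0 + 1, cols - 1)
    y1 = np.minimum(y0 + 1, rows - 1)

    # Fractional parts act as interpolation weights.
    wx = x - x0
    wy = y - y0
    if image.ndim == 3:  # broadcast the weights over color channels
        wx = wx[..., None]
        wy = wy[..., None]

    top = (1 - wx) * image[y0, x0] + wx * image[y0, x1]
    bottom = (1 - wx) * image[y1, x0] + wx * image[y1, x1]
    return (1 - wy) * top + wy * bottom
```

Calling `warp_bilinear(clean, distortion_field(h, alpha))` simulates a distorted frame from a height map `h`. A field estimated by a network could be applied through the same sampling routine, although the sign convention used for restoration is not fixed by this sketch.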

3.2 Proposed network

The proposed network is a GAN with an attention-based encoder-decoder architecture.

3.2.1 Generative adversarial networks

A GAN consists of a generator model and a discriminator model, and its core idea is to train the generative model adversarially against the discriminator (Goodfellow et al. 2014). As shown in Fig. 3, the generator learns the distribution of the sample data, with the goal of generating samples that can deceive the discriminator. The discriminator estimates the probability that its input is real rather than generated. During network training, the generator takes random noise sampled from a Gaussian distribution as input and generates instances similar to the original data. The generated and real data are then forwarded to the discriminator, which determines whether each input is real or was produced by the generator. The generator and the discriminator are trained alternately until the generated results closely approximate the real data.
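For reference, the adversarial game described above is commonly written as the minimax objective of Goodfellow et al. (2014). The symbols \(p_{\text{data}}\) (real data distribution) and \(p_z\) (noise prior) follow that paper and are not defined elsewhere in this article.

```latex
\min_G \max_D \; V(D, G) =
\mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log D(x)\right]
+ \mathbb{E}_{z \sim p_z(z)}\left[\log\left(1 - D(G(z))\right)\right]
```

The discriminator D is updated to increase V, and the generator G is updated to decrease it, which corresponds to the alternating training described in the text.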

3.2.2 Encoder-decoder architecture

Encoder-decoder network-based architectures are widely used in the field of image translation, such as style transfer (Li and Wand 2016) and super resolution (Ledig et al. 2017). The GAN model pix2pix (Isola et al. 2017) has been particularly influential in image processing tasks, and its architecture is shown in Fig. 4. The encoder encodes the input image into a compressed vector and performs dimensionality reduction. Then, the decoder transforms the compressed vector into an output suited to the specific task.

3.2.3 Attention mechanism

In the field of deep learning, the attention mechanism is a weighting operation that enhances the performance of the network. It works similarly to human vision, which selectively pays attention to important regions. The attention mechanism aims to extract the most important and beneficial information from a large amount of complex information (Niu et al. 2021). This aim is consistent with the goal of this study, which is to restore distorted regions in underwater images. The structure of the channel attention module used in this study is shown in Fig. 5. First, Global MaxPool (1 × 1 × C) and Global AvgPool (1 × 1 × C) operations are performed simultaneously on the input feature map (H × W × C). Then, the outputs of the two branches are forwarded to the fully connected layer and summed, and the sum is passed to the Sigmoid activation function to generate the weights (1 × 1 × C). Finally, the input feature map is multiplied by the weights. The channel attention mechanism thus combines matrix multiplication in the fully connected layer with element-wise nonlinear operations in the activation and reweighting steps. These nonlinear operations allow the feature elements to interact with each other. Consequently, the network automatically learns where to focus for the distortion removal task.
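The channel attention steps described above can be sketched in NumPy as follows. The two-layer shared fully connected block with a ReLU bottleneck is an assumption borrowed from common channel attention designs, since the text only mentions "the fully connected layer"; the weight matrices `w1` and `w2` and the function names are hypothetical.

```python
import numpy as np


def sigmoid(z):
    """Element-wise logistic function."""
    return 1.0 / (1.0 + np.exp(-z))


def channel_attention(feature_map, w1, w2):
    """Reweight the channels of an (H, W, C) feature map.

    w1 has shape (C, C_r) and w2 has shape (C_r, C); both are shared by the
    max-pooled and average-pooled branches (an assumed design).
    """
    # Global pooling reduces each channel to one descriptor: shape (C,).
    max_desc = feature_map.max(axis=(0, 1))
    avg_desc = feature_map.mean(axis=(0, 1))

    def shared_fc(v):
        # Bottleneck: linear -> ReLU -> linear.
        return np.maximum(v @ w1, 0.0) @ w2

    # Sum the two branches, then squash to channel weights in [0, 1].
    weights = sigmoid(shared_fc(max_desc) + shared_fc(avg_desc))

    # Broadcasting multiplies every spatial position by its channel weight.
    return feature_map * weights, weights
```

The output keeps the input shape, so a module of this kind can be inserted between existing layers without changing the surrounding architecture.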

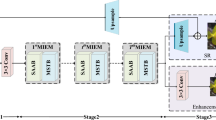

3.2.4 Proposed network architecture

The pipeline of the proposed network architecture is shown in Fig. 6, which integrates several crucial components. Inspired by the previous works (Li et al. 2018, 2020), we make improvements in several aspects, such as network size and computational efficiency. The network consists of convolution layers, residual networks, and deconvolution layers arranged in sequence within an encoder-decoder framework. The encoder consists of three convolution layers with a stride of 2 and a kernel size of 4, while the decoder comprises deconvolution layers with the same stride and kernel size. The channel configuration for this module is {32, 64, 128, 64, 32, 2}. Eight residual blocks are situated between the encoder and decoder. Each block contains two convolution layers with a stride of 1, a kernel size of 3, and 128 dimensions, along with an additive skip connection. In addition, an attention mechanism is integrated into the network. The encoder-decoder structure outputs a two-dimensional distortion field. According to the work of Li et al. (2018), an initial recovery image is produced via bilinear interpolation. Underwater images exhibit varying degrees of distortion across different regions. The proposed method generates an attention image alongside the distortion field, which allows the network to concentrate on correcting the distorted areas. The attention image is derived by measuring the distance of each pixel from its original position based on the two-channel distortion field. This mechanism directs the network to prioritize highly distorted regions while paying less attention to mildly distorted ones. Consequently, the network learns to address major and minor distortions through the attention mechanism. The proposed network produces the restored image by merging the attention image with the initial recovery image. The generator network of the proposed method can be expressed as

where G represents the generator network, and θ represents the learning parameter of the network.
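The attention image described above, which measures how far each pixel is displaced by the two-channel distortion field, can be sketched as a per-pixel magnitude map. Scaling the map to [0, 1] by its maximum is an assumption made here for illustration; the text does not state how the attention image is normalized or exactly how it is merged with the initial recovery image, and `attention_image` is a hypothetical name.

```python
import numpy as np


def attention_image(field, eps=1e-8):
    """Map an (H, W, 2) distortion field to an (H, W) attention image.

    Each value is the Euclidean distance of a pixel from its original
    position, divided by the largest displacement (assumed normalization).
    """
    magnitude = np.linalg.norm(field, axis=-1)
    return magnitude / (magnitude.max() + eps)
```

Pixels with larger displacements receive values closer to 1, which matches the stated intent of prioritizing highly distorted regions over mildly distorted ones.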

3.2.5 Loss function

Designing an effective loss function is crucial for deep neural networks to meet their training objectives. The term ‘loss’ refers to the discrepancy between the predicted value and the true value for a single sample within the deep learning model framework. A smaller loss indicates a better-performing model. This study builds upon previous works (Li et al. 2018, 2020) to train a deep neural network using a combination of content loss, adversarial loss, and perceptual loss. Given that underwater images often suffer from one or more refractive distortions, we use a pixel-level loss function as the content loss to achieve more accurate median image predictions. This function effectively restores the refractive distortion regions, which brings the predicted image closer to the real scene’s morphology. For the adversarial loss, we employ the relativistic average least squares GAN (RaLSGAN). Compared with the standard least squares GAN, RaLSGAN enhances the capability of the network to learn sharper edges and produce more detailed textures. This loss compensates for the limitations of the content loss function, particularly in addressing local blurriness and preserving high-frequency information. The perceptual loss function relies on the difference between the generated image and the target image in the feature space of a neural network, which helps minimize or eliminate artifacts in the output of the network.
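The adversarial term can be illustrated with the commonly cited relativistic average least squares formulation. This is a minimal sketch on plain lists of discriminator scores, assuming that formulation applies here; the weights used to combine it with the content and perceptual losses are not given in the text, so they are left out, and the function names are hypothetical.

```python
def _mean(values):
    """Arithmetic mean of a non-empty sequence of floats."""
    return sum(values) / len(values)


def ralsgan_discriminator_loss(real_scores, fake_scores):
    """Push real scores one unit above the average fake score, and vice versa."""
    mean_real = _mean(real_scores)
    mean_fake = _mean(fake_scores)
    real_term = _mean([(r - mean_fake - 1.0) ** 2 for r in real_scores])
    fake_term = _mean([(f - mean_real + 1.0) ** 2 for f in fake_scores])
    return real_term + fake_term


def ralsgan_generator_loss(real_scores, fake_scores):
    """Mirror image of the discriminator loss: the targets are swapped."""
    mean_real = _mean(real_scores)
    mean_fake = _mean(fake_scores)
    fake_term = _mean([(f - mean_real - 1.0) ** 2 for f in fake_scores])
    real_term = _mean([(r - mean_fake + 1.0) ** 2 for r in real_scores])
    return fake_term + real_term
```

Because each score is compared with the average score of the opposite class, the generator receives gradients from real samples as well as generated ones, which is the property usually credited for sharper outputs.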

4 Experimental results and analysis

We experimentally verify the effectiveness of the proposed method. Several image restoration algorithms are selected as the comparison methods, which specialize in removing underwater image distortions, enhancing underwater images, and eliminating image blurs. The comparison methods include the method of Li et al. (2022a, b), the method of Seemakurthy and Rajagopalan (2015), the method of Li et al. (2018), and the method of Peng and Cosman (2017). Among these comparison methods, the methods of Li et al. (2022a, b) and Li et al. (2018) are deep learning-based frameworks, and the methods of Seemakurthy and Rajagopalan (2015) and Peng and Cosman (2017) are traditional image restoration methods.

4.1 Implementation details and dataset

We run the comparison methods and the proposed method on a PC with a 13th Gen Intel(R) Core(TM) i7-13700KF CPU (3.4 GHz) and one NVIDIA GTX 1070Ti GPU. This PC has a dual-boot installation of Windows 10 and Ubuntu 16.04. The methods of Li et al. (2018) and Li et al. (2022a, b) and the proposed method are implemented on the Ubuntu 16.04 platform with PyTorch (https://pytorch.org/). Meanwhile, the methods of Seemakurthy and Rajagopalan (2015) and Peng and Cosman (2017) are implemented on the Windows 10 Pro platform with MATLAB R2019b (https://ww2.mathworks.cn/).

The dataset we used was released by Li et al. (2018), which provides a training set and a testing set for comparing different deep neural networks in the field of underwater image restoration. The dataset is constructed based on the ImageNet dataset (https://www.image-net.org/). The data providers capture distorted images through a transparent tank filled with clear water using a Canon 5D Mark IV camera. The parameters of the HD camera are listed in Table 1. The fluctuating water flow inside the tank is generated by a small agitating pump, and a monitor placed below the transparent tank displays the ImageNet images. Through this displaying and recapturing strategy, 324,452 images from all 1000 ImageNet categories are collected, which is sufficient to train a deep neural network. We train our deep neural network using the dataset released by Li et al. (2018) and select 1000 images with 256 × 256 pixels from the testing dataset to compare the performance of the proposed method and the comparison methods.

4.2 Experimental results

We evaluate the proposed method and the comparison methods qualitatively and quantitatively. The corresponding results are presented in Fig. 7 and Table 2.

Fig. 7 Visual comparisons of different image restoration methods: (a) input images; (b) ground truth images; (c) results of our proposed method; (d) results of Li et al. (2022a, b); (e) results of Li et al. (2018); (f) results of Peng and Cosman (2017); (g) results of Seemakurthy and Rajagopalan (2015). The SSIM and PSNR values are given for each image. Best viewed on a high-resolution display with zoom-in capability

The qualitative results in Fig. 7 show that our method and the methods of Li et al. (2018) and Seemakurthy and Rajagopalan (2015) have a significant effect in restoring refractive distortions. The results of these three image restoration methods are all close to the geometric shape of the ground truth images. However, the method of Seemakurthy and Rajagopalan (2015) has limited ability to eliminate distortions, and the qualitative results show that it usually introduces circular artifacts. The method of Li et al. (2018) and ours both show potential in removing image distortions. However, the results of Li et al. (2018) deviate slightly from the hue of the ground truth images. The reason is that the method of Li et al. (2018) consists of a combined U-Net architecture that contains a warpNet and a colorNet, and the color deviation is mainly introduced by the colorNet. The method of Li et al. (2022a, b), which is designed for underwater blur removal, is less effective for distortion correction but yields significant improvements in sharpness. The method of Peng and Cosman (2017) is designed to remove color distortion and improve image sharpness. This method compensates for the information lost during underwater imaging, such as the loss of red signals, as confirmed by its quantitative results. The proposed method restores the distorted content in an image while preserving the hue of the input image. Our overall quantitative results are competitive, but some improvements in terms of sharpness can still be made.

Regarding the quantitative results, we employ two classic evaluation metrics: structural similarity index (SSIM) (Wang et al. 2004) and peak signal-to-noise ratio (PSNR). SSIM is an image quality evaluation metric based on structural information. It evaluates the similarity of two images by comparing their brightness, contrast, and structural information. The value of SSIM lies between 0 and 1, where 1 means that the two images are identical. An SSIM value closer to 1 means higher image quality and greater similarity to the ground truth image. PSNR is an error-based metric for evaluating image quality. It evaluates the image quality by calculating the ratio between the peak signal and the noise of the image. A higher PSNR value signifies better quality and less error relative to the ground truth image. Several distorted single underwater images with SSIM and PSNR values are shown in Fig. 7. The proposed method outperforms the comparison methods in terms of SSIM, which suggests that the images restored by the proposed method are closer to the ground truth images with regard to structural information. Among the six images presented in Fig. 7, five images processed by the proposed method obtain the best PSNR values. Only one image ranks second in terms of the PSNR value, with a small difference from the first-ranked method. We process a total of 1000 images using the proposed method and the comparison methods. Then, we take the mean values of PSNR and SSIM over the 1000 images, and the results are shown in Table 2. The proposed method ranks first in terms of both PSNR and SSIM. The results of Li et al. (2018) follow closely behind ours, which is consistent with the qualitative analysis. The proposed method restores images closer to the ground truth images in terms of brightness, contrast, and structural information. Therefore, only a small difference exists between the restored and ground truth images, whereas the other comparison methods vary more in these aspects and consequently perform relatively poorly on the SSIM and PSNR metrics.
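The PSNR computation and its averaging over a test set can be sketched as below. The sketch assumes 8-bit images with a peak value of 255 and uses the standard definition based on the mean squared error; the function names are hypothetical, and SSIM is omitted because it requires local windowed statistics that are better taken from an established library.

```python
import numpy as np


def psnr(reference, restored, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two images of equal shape."""
    reference = np.asarray(reference, dtype=np.float64)
    restored = np.asarray(restored, dtype=np.float64)
    mse = np.mean((reference - restored) ** 2)
    if mse == 0:
        return float("inf")  # identical images have no error
    return float(10.0 * np.log10(max_value ** 2 / mse))


def mean_psnr(pairs, max_value=255.0):
    """Average PSNR over an iterable of (reference, restored) image pairs."""
    return float(np.mean([psnr(ref, out, max_value) for ref, out in pairs]))
```

Because PSNR depends only on pixel-wise error, it is usually reported alongside SSIM, which captures structural agreement that a pure error measure can miss.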

4.3 Ablation study

We conduct extensive ablation studies on each component, including the network structure, the normalization strategy (instance normalization, IN, or group normalization, GN), and the attention mechanism (ATE), to investigate the impact of these components on our method. The quantitative results of the ablation study in terms of SSIM and PSNR on the testing set are listed in Table 3. IN and GN are two typical normalization methods used in deep neural networks (Li et al. 2020). IN normalizes the features of each sample individually, while GN divides the feature channels into groups and normalizes the features within each group. As shown in Table 3, GN works slightly better than IN, but only a small quantitative difference is observed between the two normalization methods. The ATE plays a vital role in boosting the quantitative scores. With the attention mechanism, the complete GAN + GN + ATE framework achieves much higher SSIM and PSNR scores than the GAN + GN framework.
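The difference between the two normalization strategies can be made concrete with a short NumPy sketch. It omits the learnable scale and shift parameters that deep learning frameworks normally attach to these layers, and it assumes an (N, C, H, W) tensor layout; the function names are hypothetical.

```python
import numpy as np


def group_norm(x, num_groups, eps=1e-5):
    """Normalize an (N, C, H, W) array within groups of channels, per sample."""
    n, c, h, w = x.shape
    if c % num_groups != 0:
        raise ValueError("channel count must be divisible by num_groups")
    grouped = x.reshape(n, num_groups, c // num_groups, h, w)
    # Statistics are shared by all channels and positions inside one group.
    mean = grouped.mean(axis=(2, 3, 4), keepdims=True)
    var = grouped.var(axis=(2, 3, 4), keepdims=True)
    normalized = (grouped - mean) / np.sqrt(var + eps)
    return normalized.reshape(n, c, h, w)


def instance_norm(x, eps=1e-5):
    """Instance normalization is the special case of one channel per group."""
    return group_norm(x, num_groups=x.shape[1], eps=eps)
```

Neither variant depends on batch statistics, which makes both suitable for the small batch sizes typical of image restoration training.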

4.4 Practical application

We test the proposed method in a practical application by porting the pretrained model to an embedded NVIDIA JETSON TX2 platform. Our deep framework can be widely utilized in the field of autonomous robotics and other AI applications, as shown in Fig. 8. To evaluate the processing efficiency of the algorithm after porting, we calculate the average processing time per image on the testing set (Table 4). In the experiments, the comparison methods run on the PC described in Sect. 4.1 (13th Gen Intel(R) Core(TM) i7-13700KF CPU at 3.4 GHz and one NVIDIA GTX 1070Ti GPU), while our ported algorithm runs on the NVIDIA JETSON TX2 platform. Table 4 shows that the proposed method has a significant advantage over the other methods in terms of image processing speed.
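The average per-image processing time can be measured with a small helper like the one below. It is a sketch of one reasonable protocol rather than the authors' benchmarking script: the warm-up pass and the `process` callable are assumptions, and `average_time_per_image` is a hypothetical name.

```python
import time


def average_time_per_image(process, images, warmup=1):
    """Return the mean wall-clock seconds that `process` spends per image.

    The first `warmup` images are processed once beforehand without timing,
    so that one-off costs such as lazy initialization are excluded.
    """
    for image in images[:warmup]:
        process(image)

    start = time.perf_counter()
    for image in images:
        process(image)
    elapsed = time.perf_counter() - start
    return elapsed / len(images)
```

When the model runs on a GPU, the device typically has to be synchronized before the timer is read (for example with `torch.cuda.synchronize()` in PyTorch); otherwise asynchronous kernel launches can make the measured time appear shorter than it is.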

5 Conclusions

In this study, we propose a deep neural network for the removal of refractive distortions in underwater images. The network is computationally efficient and portable. The proposed method adopts a U-Net-based architecture and an attention mechanism. The end-to-end model can pay close attention to the distorted areas of images during network training. It removes refractive distortions in images caused by light refraction while maintaining a consistent color hue with the input images. Qualitative and quantitative experimental results demonstrate the effectiveness of our proposed method in eliminating refractive distortions and enhancing image details. We also conduct practical application experiments in an embedded NVIDIA JETSON TX2 platform, and the results prove the practical value of the proposed method.

Despite the competitive performance, the proposed method still has several limitations. First, we notice that the restored underwater images still suffer from the sharpness issue. Second, the slight color degradation of the underwater images has been ignored, although the light travels a short distance under the water when studying refractive imaging. Third, we have not yet realized the NVIDIA JETSON TX2 embedded solution for robotic platforms (e.g., AUVs and ROVs). We plan to address these issues in our future work.

Availability of data and materials

The data and source codes presented in this study may be available from the corresponding author upon reasonable request.

References

Boroujeni S, Razi A (2024) IC-GAN: an improved conditional generative adversarial network for RGB-to-IR image translation with applications to forest fire monitoring. Expert Syst Appl 238:121962. https://doi.org/10.1016/j.eswa.2023.121962

Cap N, Ruiz B, Rabal H (2003) Refraction holodiagrams and Snell’s law. Optik 114(2):89–94. https://doi.org/10.1078/0030-4026-00227

De Greve B (2006) Reflections and refractions in ray tracing. pp 1–6. Retrieved from https://graphics.stanford.edu/courses/cs148-10-summer/docs/2006--degreve--reflection_refraction.pdf

De Souza VLT, Marques BAD, Batagelo HC, Gois JP (2023) A review on generative adversarial networks for image generation. Comput Graph-UK 114:13–25. https://doi.org/10.1016/j.cag.2023.05.010

Donate A, Dahme G, Ribeiro E (2006) Classification of textures distorted by waterwaves. In: 18th International Conference on Pattern Recognition (ICPR’06), Hong Kong, pp 421–424. https://doi.org/10.1109/ICPR.2006.371

Donate A, Ribeiro E (2007) Improved reconstruction of images distorted by water waves. In: Braz J et al (eds) Advances in computer graphics and computer vision. Springer, Berlin, pp 264–277. https://doi.org/10.1007/978-3-540-75274-5_18

Efros A, Isler V, Shi J, Visontai M (2004) Seeing through water. In: NIPS’04: Proceedings of the 17th International Conference on Neural Information Processing Systems, Vancouver, pp 393–400. https://proceedings.neurips.cc/paper_files/paper/2004

Goodfellow I, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D, Ozair S et al (2014) Generative adversarial nets. In: NIPS’14: Proceedings of the 27th International Conference on Neural Information Processing Systems, Montreal, pp 2672–2680. https://proceedings.neurips.cc/paper_files/paper/2014/file/5ca3e9b122f61f8f06494c97b1afccf3-Paper.pdf

Isola P, Zhu JY, Zhou TH, Efros AA (2017) Image-to-image translation with conditional adversarial networks. In: 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, pp 5967–5976. https://doi.org/10.1109/CVPR.2017.632

James JG, Agrawal P, Rajwade A (2019) Restoration of non-rigidly distorted underwater images using a combination of compressive sensing and local polynomial image representations. In: 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, pp 7839–7848. https://doi.org/10.1109/ICCV.2019.00793

Ko K, Yeom T, Lee M (2023) SuperstarGAN: generative adversarial networks for image-to-image translation in large-scale domains. Neural Netw 162:330–339. https://doi.org/10.1016/j.neunet.2023.02.042

Ledig C, Theis L, Huszár F, Caballero J, Cunningham A, Acosta A et al (2017) Photo-realistic single image super-resolution using a generative adversarial network. In: 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, pp 105–114. https://doi.org/10.1109/CVPR.2017.19

Levin IM, Savchenko VV, Osadchy VJ (2008) Correction of an image distorted by a wavy water surface: laboratory experiment. Appl Opt 47(35):6650–6655. https://doi.org/10.1364/AO.47.006650

Li C, Wand M (2016) Precomputed real-time texture synthesis with Markovian generative adversarial networks. In: 14th European Conference on Computer Vision (ECCV), Amsterdam, pp 702–716. https://doi.org/10.1007/978-3-319-46487-9_43

Li TY, Rong SH, Chen L, Zhou HY, He B (2022a) Underwater motion deblurring based on cascaded attention mechanism. IEEE J Ocean Eng 49(1):262–278. https://doi.org/10.1109/JOE.2022.3192047

Li TY, Rong SH, Zhao WF, Chen L, Liu YB, Zhou HY et al (2022b) Underwater image enhancement using adaptive color restoration and dehazing. Opt Express 30(4):6216–6235. https://doi.org/10.1364/OE.449930

Li TY, Yang QQ, Rong SH, Chen L, He B (2020) Distorted underwater image reconstruction for an autonomous underwater vehicle based on a self-attention generative adversarial network. Appl Opt 59(32):10049–10060. https://doi.org/10.1364/AO.402024

Li ZQ, Murez Z, Kriegman D, Ramamoorthi R, Chandraker M (2018) Learning to see through turbulent water. In: 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, pp 512–520. https://doi.org/10.1109/WACV.2018.00062

Niu ZY, Zhong GQ, Yu H (2021) A review on the attention mechanism of deep learning. Neurocomputing 452:48–62. https://doi.org/10.1016/j.neucom.2021.03.091

Peng YT, Cosman PC (2017) Underwater image restoration based on image blurriness and light absorption. IEEE Trans Image Proc 26(4):1579–1594. https://doi.org/10.1109/TIP.2017.2663846

Rao DY, Xu TY, Wu XJ (2023a) TGFuse: an infrared and visible image fusion approach based on transformer and generative adversarial network. IEEE Trans Image Proc. https://doi.org/10.1109/TIP.2023.3273451

Rao YJ, Wu D, Han MA, Wang T, Yang Y, Lei T et al (2023b) AT-GAN: a generative adversarial network with attention and transition for infrared and visible image fusion. Inf Fusion 92:336–349. https://doi.org/10.1016/j.inffus.2022.12.007

Roy AM, Bhaduri J (2023) DenseSPH-YOLOv5: an automated damage detection model based on DenseNet and Swin-Transformer prediction head-enabled YOLOv5 with attention mechanism. Adv Eng Inf 56:102007. https://doi.org/10.1016/j.aei.2023.102007

Seemakurthy K, Rajagopalan AN (2015) Deskewing of underwater images. IEEE Trans Image Proc 24(3):1046–1059. https://doi.org/10.1109/TIP.2015.2395814

Thapa S, Li N, Ye J (2020) Dynamic fluid surface reconstruction using deep neural network. In: 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, pp 21–30. https://doi.org/10.1109/CVPR42600.2020.00010

Thapa S, Li N, Ye J (2021) Learning to remove refractive distortions from underwater images. In: 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, pp 5007–5016. https://doi.org/10.1109/ICCV48922.2021.00496

Tian YD, Narasimhan SG (2012) Globally optimal estimation of nonrigid image distortion. Int J Comput Vis 98(3):279–302. https://doi.org/10.1007/s11263-011-0509-0

Tian YD, Narasimhan SG (2015) Theory and practice of hierarchical data-driven descent for optimal deformation estimation. Int J Comput Vis 115(1):44–67. https://doi.org/10.1007/s11263-015-0838-5

Wang XT, Yu K, Wu SX, Gu JJ, Liu YH, Dong C et al (2018) ESRGAN: enhanced super-resolution generative adversarial networks. In: 15th European Conference on Computer Vision (ECCV), Munich, pp 63–79. https://doi.org/10.1007/978-3-030-11021-5_5

Wang Z, Bovik AC, Sheikh HR, Simoncelli EP (2004) Image quality assessment: from error visibility to structural similarity. IEEE Trans Image Proc 13(4):600–612. https://doi.org/10.1109/TIP.2003.819861

Wen ZY, Fraser D, Lambert A, Li HD (2007) Reconstruction of underwater image by bispectrum. In: IEEE International Conference on Image Processing, San Antonio, pp 1–4. https://doi.org/10.1109/ICIP.2007.4379367

Xie Y, Franz E, Chu M, Thuerey N (2018) tempoGAN: a temporally coherent, volumetric GAN for super-resolution fluid flow. ACM Trans Graph 37(4):95. https://doi.org/10.1145/3197517.3201304

Xiong J, Heidrich W (2021) In-the-wild single camera 3D reconstruction through moving water surfaces. In: 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, pp 12558–12567. https://doi.org/10.1109/ICCV48922.2021.01233

Zhang Z, Tang YG, Yang K (2021) A two-stage restoration of distorted underwater images using compressive sensing and image registration. Adv Manuf 9(2):273–285. https://doi.org/10.1007/s40436-020-00340-z

Zhang Z, Yang X (2019) Reconstruction of distorted underwater images using robust registration. Opt Express 27(7):9996–10008. https://doi.org/10.1364/OE.27.009996

Zhong G, Ding W, Chen L, Wang Y, Yu Y (2023) Multi-scale attention generative adversarial network for medical image enhancement. IEEE Trans Emerg Top Comput Intell 7(4):1113–1125. https://doi.org/10.1109/TETCI.2023.3243920

Zhou J, Gai Q, Zhang D, Lam KM, Zhang W, Fu X (2024) IACC: cross-illumination awareness and color correction for underwater images under mixed natural and artificial lighting. IEEE Trans Geosci Remote Sens 62:4201115. https://doi.org/10.1109/TGRS.2023.3346384

Zhou JC, Sun JM, Zhang WS, Lin ZF (2023) Multi-view underwater image enhancement method via embedded fusion mechanism. Eng Appl Artif Intell 121:105946. https://doi.org/10.1016/j.engappai.2023.105946

Acknowledgements

The authors would like to thank the anonymous reviewers for their constructive suggestions for revision.

Additional information

Edited by: Wenwen Chen.

Author information

Contributions

All authors contributed to the conceptualization and design of the research. Tengyue Li and Long Chen conceived the idea of this paper on the wavy water surface imaging model and deep neural network and made critical modifications. Jiayi Song and Tengyue Li drafted the manuscript. Zhiyu Song carried out the NVIDIA JETSON TX2 experiments. Arapat Ablimit and Jiayi Song conducted the literature search and data analysis. All authors made critical revisions and corrections to the manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Informed consent for publication was obtained from all participants.

Competing interests

The authors declare that they have no competing interests. Long Chen is one of the Editorial Board Members, but he was not involved in the journal’s review of, or decision related to, this manuscript.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Li, T., Song, J., Song, Z. et al. Removing nonrigid refractive distortions for underwater images using an attention-based deep neural network. Intell. Mar. Technol. Syst. 2, 25 (2024). https://doi.org/10.1007/s44295-024-00038-z

DOI: https://doi.org/10.1007/s44295-024-00038-z