Abstract

Exploring the underwater world presents significant physical and financial barriers. Telepresence technologies offer a potential solution by providing a more accessible version of an underwater experience. Our research involved a scoping review to consolidate previous findings on vision-based technologies that aim to recreate underwater experiences and their user evaluations. We searched 5 academic databases for papers describing or evaluating technologies providing visual underwater experiences without actual submersion. We systematically searched YouTube to include immersive experiences not documented in academic publications. Our review included 45 academic papers and 23 YouTube videos classified by their level of ‘reality’, ‘degrees of freedom’, and presence of interactive elements. The technologies reviewed included virtual reality, 360\(^{\circ }\) video and imaging, augmented and mixed reality, head-mounted displays, mobile devices, cameras, sensors, remotely operated vehicles (ROVs), and games. Half of the selected papers featured user evaluations (with sample sizes ranging from 5 to 1006 participants); the evaluation methods included interviews, performance tracking, and questionnaires. We identified six main application areas for these technologies: (i) general environmental awareness, (ii) formal education about marine life, (iii) therapeutic interventions, (iv) access to underwater heritage sites, (v) ROV teleoperation and simulations, and (vi) entertainment. Immersive technologies, such as head-mounted displays and augmented reality, were prevalent across all application categories, though their usability varied. Costs also varied widely, from expensive ROVs and simulated environments to cheaper 360\(^{\circ }\) videos. Our findings indicate a need for more robust user studies, including long-term research and comparisons among real-time, pre-recorded, and simulated experiences. 
A better understanding of entertainment-driven applications could benefit education, environmental conservation, and healthcare. The findings of the scoping review are discussed with respect to the technologies identified and the corresponding user studies.

1 Introduction

The vast depths of our oceans and seas are home to a wealth of natural wonders and historical artefacts. However, the nature of these environments, characterised by extreme pressure, darkness, and remoteness, hinders our ability to fully understand and interact with the underwater world. There are numerous barriers for those seeking to experience underwater spaces, especially for sensitive populations, such as individuals with physical limitations (for example, older people) or those facing financial constraints, given the cost of diving equipment and travel to dive sites. In response to these challenges, many solutions drawing on a range of technologies have been developed to simulate the underwater experience from the shore for various applications, ranging from aiding marine researchers to improving health outcomes.

This review summarises the different technologies designed to bridge the gap between terrestrial observers and underwater spaces, highlighting their applications across different fields. Our own interest (as explained in more detail in Hagen et al. (2024) and Jones et al. (2024)) focuses especially on designing an underwater telepresence application for digitally underserved people, hence our interest in easy-to-use, scalable, and cost-efficient solutions. Nonetheless, this scoping review includes all relevant technologies, offering readers a broad spectrum of different perspectives on the design.

The concept of telepresence first appeared in the human-computer interaction literature, defining the illusion of being present in a distant location (for example, by Minsky (1980)) and is closely connected to the concept of ‘presence’, introduced around the same time by Corker et al. (1980). In a strictly technical sense, telepresence involves using sensors and effects to link the user’s body via telecommunication channels to a robotic system, enabling the user to be remotely embodied in the robot. Sheridan (1992) defines telepresence as the feeling experienced by a teleoperator of being phenomenally ‘there’ at the remote operation site. In this paper, we will engage with the more phenomenological treatment of the term telepresence, rather than the strictly technical, as the feeling of being present in a remote location. This encompasses not just teleoperation but also simulated virtual reality (VR) experiences that enhance users’ sense of presence in underwater spaces, whether through simulated or pre-recorded content, without necessarily involving real-time communication.

Successful implementations of underwater telepresence already have a long history, with teleoperated remotely operated vehicles (ROVs) in use since the 1960s (Perkins and Brady 1984) and VR added in the 1990s (Schebor 1995). Previous reviews, such as those by Capocci et al. (2017), Sivčev et al. (2018), Theodoropoulos and Antoniou (2022), and Xia et al. (2022), have primarily focused on technical aspects or on a single application. This review aims to explore underwater telepresence from the perspective of the ‘feeling of being underwater’, emphasizing a user-induced sense of presence (Slater 2018). In this scoping review, we will examine different aspects of immersion, exploring its psychological characteristics and its objective properties as a technology itself (Agrawal et al. 2020). Our ultimate goal is to guide the development of future designs that enhance the accessibility of underwater experiences through telepresence and virtual underwater environments. This review aims to identify and analyse studies exploring the use of technology to simulate the experience of being underwater for individuals not physically submerged. This objective is addressed in two key components:

(1) To investigate the technology used for creating underwater experiences.

(2) To examine the reported experiences of individuals engaging with such technology.

The findings are split between three sections of the paper. Focusing on the first objective of the review, Section 4 describes underwater telepresence experiences identified in the studies. This includes a proposed classification of the nature of the experiences (Section 4.1), along with the main technologies identified (Section 4.2).

Section 5 focuses on the methodology of user evaluations conducted in the studies considered in this review, detailing the samples (Section 5.1) and the qualitative (Section 5.2) and quantitative (Section 5.3) methods, with a particular focus on standardised scales.

Section 6 provides further details on how underwater telepresence is used and evaluated in different application contexts. This includes raising environmental awareness (Section 6.1), educational applications (Section 6.2), healthcare interventions (Section 6.3), underwater heritage outreach projects (Section 6.4), ROV operations and simulations (Section 6.5), and entertainment purposes (Section 6.6).

2 Methodology

This work follows the scoping review methodology outlined by Munn et al. (2018). We used two primary sources: academic databases and grey literature.

2.1 Academic databases records

We used Web of Science (WoS), IEEE Xplore, ACM Library, Scopus and PubMed to obtain academic records for this review. We searched all of these libraries except PubMed using author keywords. As PubMed does not support authors’ keywords, we used Medical Subject Headings (MeSH) terms to capture relevant medical literature. The search queries are summarised in Table 1.

The inclusion and exclusion criteria were formulated to serve the overall conceptual goal of describing complete user experiences that deliver the feeling of being underwater from on-shore locations, and to address the two objectives of the review by requiring that the technology be described in sufficient detail. Papers were included if they met the following criteria:

(1) The paper describes or evaluates technology capable of providing individuals with the experience of being underwater without actual submersion. We excluded papers describing VR or augmented reality (AR) devices that are meant to be used underwater, such as AR for diving applications or underwater VR experiences tailored to water parks.

(2) The technology incorporates a visual element that allows users to perceive an underwater environment. While there could be scope for reviewing, for example, purely audio experiences of being underwater, in this review we focused on the visual aspect as the primary sensory modality for creating a sense of presence.

On the other hand, papers were excluded if they:

(1) Did not provide adequate descriptions or evaluations of technology related to the underwater experience: in some cases, the description was not sufficient for a clear understanding of what the technology entailed and how it was meant to be delivered.

(2) Focused solely on measuring parameters, such as salinity or temperature, as these do not contribute to the user’s underwater experience.

(3) Featured only still photographs without offering substantial technological insights into creating the underwater experience. We concluded that flat still photographs are not sufficient to create a sense of presence. We did, however, include a paper that used 360\(^{\circ }\) photographs.

(4) Concentrated exclusively on computational methods unrelated to image interpretation in underwater settings, without user experience elements.

(5) Described systems with synthetic VR unrelated to the sensation of being underwater.

(6) Described haptic interfaces for ROV teleoperation without a direct visual element. We also excluded papers describing synthetic VR interfaces created from, for example, underwater sonar data, as they are not aimed at creating the feeling of being underwater.

(7) Were position papers with no empirical research or technological focus.

2.2 YouTube as a primary grey literature source

By recognizing YouTube as a source of ‘grey literature’, we capture the broader dimensions of the topic. YouTube videos can illustrate practical demonstrations of underwater spaces and immersive technologies that may not have been identified in the academic literature. Although other video hosting platforms (such as Vimeo) offer diverse forms of content, we chose YouTube as the largest.

For the systematic search of the videos, we employed an experimental protocol adapted to the limitations of the YouTube search engine: the platform does not report how many videos were found, nor does it support search operators in the way most academic databases do. We used specific keywords for our search, which can be found in Table 2. These keywords were created based on two considerations: (1) creators usually prefer very short titles for their videos, and (2) the keywords had to include a reference to underwater spaces. Additionally, as YouTube hosts many videos of personal diving experiences, we had to exclude videos relating to snorkeling or scuba diving. Before finalizing these keywords, we validated them using a test set of known relevant videos. We examined the top 30 results for each keyword search but left out any promotional videos.

The process for selecting videos from YouTube was straightforward. We applied the same exclusion criteria that were used for academic papers and additionally excluded any footage of diving or aquariums, as it did not align with our research objectives. To ensure consistent quality, three reviewers independently reviewed each video. A video was included in the review only when all three agreed on its relevance (agreement rate \(\kappa = 0.73\)).
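
As an illustration of how an agreement statistic of this kind can be computed for three reviewers, the following is a minimal sketch that assumes Fleiss' kappa; the text does not state which kappa variant was used, and the votes below are hypothetical rather than the review's actual screening data.

```python
def fleiss_kappa(counts):
    """Fleiss' kappa for a table of per-item category counts.

    counts[i][j] is the number of raters who put item i in category j;
    every item must be rated by the same number of raters.
    The statistic is undefined when all ratings fall in a single category.
    """
    n_items = len(counts)
    n_raters = sum(counts[0])
    if any(sum(row) != n_raters for row in counts):
        raise ValueError("each item needs the same number of ratings")

    # Observed agreement: share of agreeing rater pairs, averaged over items.
    per_item = [
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ]
    observed = sum(per_item) / n_items

    # Chance agreement from the overall proportion of each category.
    n_categories = len(counts[0])
    proportions = [
        sum(row[j] for row in counts) / (n_items * n_raters)
        for j in range(n_categories)
    ]
    expected = sum(p * p for p in proportions)

    return (observed - expected) / (1 - expected)


# Hypothetical votes for four videos, as (include, exclude) counts
# from three reviewers. These are NOT the review's screening data.
votes = [(3, 0), (0, 3), (2, 1), (1, 2)]
kappa = fleiss_kappa(votes)
print(round(kappa, 2))
```

Values close to 1 indicate agreement well above chance, while values near 0 indicate agreement no better than chance.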

2.3 Limitations of the search methodology

While our search strategy was designed to be specific, we acknowledge its limitations. A more sensitive approach, including abstract and title searches for the academic literature libraries, revealed some papers that the keyword and MeSH terms search missed. However, this broader approach yielded an unmanageable number of papers. As for the grey literature, the review also has a set of limitations. Firstly, by focusing solely on the initial 30 results, our review of ‘grey literature’ videos lacks comprehensiveness. Additionally, since we relied only on the video titles and descriptions as searchable descriptors, some relevant videos may have been overlooked due to inadequate descriptions provided by the creators. Understanding the limitations, we treated our findings qualitatively, revealing the diversity of the technologies, applications and methodologies.

3 Summary of the identified records

The PRISMA (preferred reporting items for systematic reviews and meta-analyses) framework guided our reporting process as summarised in Fig. 1. We identified 1732 papers through the keyword search (as of 31/08/2023, with amended keyword ‘immers*’ on 7/12/2023), from the PubMed (882), IEEE Xplore (106), Scopus (459), WoS (268), and ACM (17) databases. After all stages of exclusion, 45 academic papers were included in the review. The YouTube search followed a similar pathway, with 90 records identified and 23 final videos, describing 14 distinct underwater experiences, selected through agreement over the inclusion criteria by three independent reviewers.

Flow diagram of inclusion and exclusion of sources. Out of the 1732 identified records, we excluded 349 duplicates. After title screening, 1300 more papers were excluded; 3 papers could not be obtained, and 35 papers were excluded during full report assessment according to our criteria. Finally, 45 academic papers were selected for the full review. Out of the 90 YouTube videos initially identified, 65 were excluded after screening and 2 could not be retrieved. In total, we considered 68 records for this review
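
The counts reported in the text and in the flow diagram can be cross-checked with simple arithmetic. The sketch below uses only figures stated above; the variable names are illustrative.

```python
# Records identified per database, as reported in the text.
records_per_database = {
    "PubMed": 882,
    "IEEE Xplore": 106,
    "Scopus": 459,
    "WoS": 268,
    "ACM": 17,
}
identified = sum(records_per_database.values())

# Academic records removed at each stage of the flow diagram.
academic_exclusions = {
    "duplicates": 349,
    "title_screening": 1300,
    "not_obtained": 3,
    "full_report_assessment": 35,
}
academic_included = identified - sum(academic_exclusions.values())

# YouTube records removed at each stage.
youtube_identified = 90
youtube_exclusions = {"screening": 65, "not_retrieved": 2}
youtube_included = youtube_identified - sum(youtube_exclusions.values())

total_included = academic_included + youtube_included
print(identified, academic_included, youtube_included, total_included)
# prints: 1732 45 23 68
```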

4 Underwater telepresence experiences

The experiences we identified through our search were classified based on the nature of the content, the degree of freedom involved, and the existence of interactive elements, as summarised in Tables 3 and 4. These categories allow us to understand the nature of the experiences provided in different contexts and how they influence the user experience.

4.1 Overview and classification of the experiences

The content ranges from fully simulated to real; real content is delivered either as real-time (or close to real-time) streams or as pre-recorded footage. The experiences are also categorised according to the degrees of freedom involved: the ability to ‘look around’ corresponds to three degrees of freedom (3DoF), covering rotation only, while the ability to ‘move around’ corresponds to six degrees of freedom (6DoF), covering rotation and translation. The presence of interactivity is an important feature for many experiences, reported to enhance the sense of presence when implemented within appropriate contextual parameters (Slater 2018).

According to the level of realism, we split the experiences into three categories. ‘Real’ experiences consist of real-time or pre-recorded footage from an existing ocean or sea location or scene. On the other end, there are fully ‘simulated’ experiences. ‘Hybrid’ experiences ‘augment’ scanned 3D scenes of existing locations with digital elements, such as simulated marine life and digital light effects to increase users’ sense of presence (as in, for example, Thompson et al. (2019)).
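
The three classification dimensions (level of reality, degrees of freedom, and interactivity) can be represented as a small data structure. The sketch below is purely illustrative: the type and field names are our own assumptions and do not correspond to any coding scheme used in the reviewed studies.

```python
from dataclasses import dataclass
from enum import Enum


class Reality(Enum):
    REAL = "real"            # real-time or pre-recorded footage of an existing location
    HYBRID = "hybrid"        # scanned real scenes augmented with digital elements
    SIMULATED = "simulated"  # fully synthetic environment


class Freedom(Enum):
    LOOK_AROUND = 3  # 3DoF: rotation only
    MOVE_AROUND = 6  # 6DoF: rotation and translation


@dataclass(frozen=True)
class Experience:
    name: str
    reality: Reality
    freedom: Freedom
    interactive: bool

    def label(self) -> str:
        interaction = "interactive" if self.interactive else "non-interactive"
        return f"{self.reality.value}, {self.freedom.value}DoF, {interaction}"


# Illustrative entry: 360-degree video is described in Section 4.2.2 as real
# footage that lets viewers look around, without interactivity.
video_360 = Experience("360-degree reef video", Reality.REAL, Freedom.LOOK_AROUND, False)
print(video_360.label())  # real, 3DoF, non-interactive
```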

‘Real’ experiences, such as educational data collection using underwater ROVs (Gerringer et al. 2023), are prevalent in research applications (denoted as RI in Table 3) where telepresence, defined as a ‘mediated perception of an environment’ (Steuer 1992) facilitates teleoperation, and interaction with the environment plays a crucial role. Fully ‘simulated’ experiences create an underwater environment that resembles real marine spaces, offering the ability to create beautiful and diverse scenes that can be delivered reliably. Such experiences are used, for example, in healthcare applications: the therapeutic effect is achieved by creating a particular experience for the patients that takes place in the immediate physical environment (Steuer 1992). For example, a visually rich and aesthetically pleasing fully simulated underwater experience ‘theBlu’ was used in a healthcare scenario by Yeo et al. (2020). A ‘hybrid’ approach is common for applications in underwater heritage, where it serves to create an immersive virtual exploration of an accurate representation of an underwater heritage site, as used in Bruno et al. (2017) (Fig. 2). This approach provides a middle ground between representing the dive site setting authentically and ensuring the engagement and reliability of the simulated environment.

Example of a hybrid experience based on a representation of a real underwater heritage site augmented by a set of synthetic elements to create a game, reproduced from Bruno et al. (2018)

All the experiences are located on a conceptual scale called the ‘reality-virtuality continuum’ as described by Milgram and Kishino (1994) and feature various degrees of interaction (Fig. 3). At one end of the scale, we have pre-recorded open-source video streams from NOAA OCEANOS missions in Gerringer et al. (2023) that offer limited interactive elements. Moving along the continuum, there are live-stream ROV teleoperations through a computer screen in Omerdic et al. (2014), 3D TVs, and 360\(^{\circ }\) videos used in many environmental awareness campaigns and healthcare interventions, leading to interactive simulated VR experiences like ‘theBlu’ or ROV control within a stereoscopic VR (Elor et al. 2021).

Milgram and Kishino’s reality-virtuality continuum, adapted from Milgram and Kishino (1994). In our case, ‘real’ experiences are situated on the left side of the spectrum, corresponding to the real environment, and ‘simulated’ experiences are positioned on the right, corresponding to the virtual environment. Both augmented virtuality (AV) and augmented reality correspond to the ‘hybrid’ experiences

The level of interaction or the capacity to naturally explore the virtual environment alongside ‘immersion’ (the capacity to isolate from the external world) and ‘imagination’ (an individual’s capacity for mental imagery) contribute significantly to the overall sense of presence (Burdea and Coiffet 2003). Although few studies have investigated the relationship between mental imagery and the sense of presence, our review concentrates on immersion as both a psychological effect (Agrawal et al. 2020) and as an objective property of the system (Slater 2009). We also explore ‘interaction’ more precisely, specifically user tracking and interactive elements within the virtual environment.

4.2 Technologies used in underwater telepresence

Several technologies and devices are employed across the papers, contributing to the creation of underwater telepresence. They all relate to the different ways of delivering the underwater experience to the target audience on the front end or data collection on the opposite side.

4.2.1 Virtual reality (VR)

This technology creates a perceptual illusion of being in a location other than one’s physical one. This illusion is primarily perceptual, not cognitive. The perceptual system reacts to stimuli from a mediated environment before the cognitive system can discern the experience as not originating from the real world. The real power of VR lies in its ability to create experiences that convincingly make users feel present in an artificial environment despite their awareness of its virtual nature (Slater 2018). These experiences range from underwater archaeological site tours to simulating ROV operations. VR environments empower users to interact with and explore underwater settings in a controlled and, at times, realistic manner. In underwater heritage outreach, projects such as those by Bruno et al. (2017, 2018) and Liarokapis et al. (2020) used VR to virtually transport users to underwater archaeological sites. These VR experiences offered an immersive way of exploring shipwrecks and historical ruins without the physical constraints of diving. Moreover, VR has been instrumental in environmental education and advocacy. Studies like Markowitz et al. (2018), Vercelloni et al. (2018), and Nelson et al. (2020) used virtual environments to raise awareness about marine ecosystem issues, such as coral reef acidification. Despite its immersive capability, rendering VR environments can be computationally intensive, as noted in McCarthy and Martin (2019). Immersive VR systems such as Sensorama (1956) have been around since the 1950s. Recent advancements in hardware and rendering techniques have made VR systems using relatively inexpensive head-mounted displays (HMDs) more accessible to a broader consumer audience, such as gamers. This relative drop in price for consumer HMDs led to an increase in research projects focusing on applications built for the general audience, such as ‘theBlu’ and ‘Titanic VR’. An emerging trend in certain studies, like Jain et al. (2016), is the transition toward extended reality (XR), which enhances VR by introducing additional sensory modalities beyond traditional controllers. For instance, making the scene respond to the user’s pattern of breathing enriches the sense of presence. Despite concerns about VR-induced nausea, known as cybersickness (McCauley and Sharkey 1992), this issue was not identified as a significant limitation of the technology.

4.2.2 360\(^{\circ }\) video and imaging

This technology involves capturing video and photographic content in a way that records a full 360\(^{\circ }\) field of view simultaneously, enabling the creation of immersive experiences from natural underwater scenes, as opposed to the simulated experiences created using VR. The resulting panoramic videos and images let viewers look around in every direction, feeling as though they are physically present at the location where the content was recorded. Modern 360\(^{\circ }\) cameras, equipped with multiple lenses to cover the entire field of view, can capture high-resolution content. These cameras have become increasingly compact, user-friendly, and accessible to both professionals and enthusiasts. Vercelloni et al. (2018) used 360\(^{\circ }\) images of coral reefs for an aesthetic evaluation study, Gerringer et al. (2023) aimed to increase environmental awareness, and Verdes et al. (2021) sought to augment an online graduate course on Marine Biodiversity. 360\(^{\circ }\) video and imaging strike a balance in immersive experiences, sitting between fully interactive synthetic VR environments and traditional flat video. Their uniqueness lies in the ease of creation compared to building simulated VR environments and in the inherent realism they offer. Unlike VR, which requires complex programming, 360\(^{\circ }\) content is simpler to produce. Specialized cameras capture real-world environments, providing naturally realistic experiences, albeit without interactivity.

4.2.3 Augmented reality (AR) and mixed reality (MR)

These techniques overlay digital information, such as images, text, or animations, onto real-world, live, or pre-recorded video. For underwater telepresence, AR and MR play a prominent role by increasing the interactivity of the captured underwater footage. Thompson et al. (2019) demonstrate MR with underwater 360\(^{\circ }\) footage augmented with digital elements to ensure visual consistency and enhance users’ illusion of presence. Liestøl et al. (2021) allow users to observe shipwrecks from an on-shore location using AR, interacting with additional digital information overlaid on the underwater scene for educational and informative purposes. In an educational context, for example, AR marine creatures were recreated in a mobile application by Verdes et al. (2021) to enhance student online learning. AR can be delivered by HMDs or mobile devices. Recent advances in computer vision greatly enhance the ease of implementation of such techniques.

4.2.4 Head-mounted displays (HMDs)

A key factor in immersing users within a virtual environment lies in the ability to isolate them from physical surroundings and replace these with sensory triggers from the virtual environment. This is often achieved through HMDs, which provide head tracking, stereoscopic views, and sometimes 3D surround sound to increase the immersive levels of VR systems. Devices such as the HTC Vive, Oculus Rift, and Zeiss VR One are commonly employed in studies to provide users with an immersive visual experience. These devices, worn on the head, deliver 3D visuals, such as VR and AR experiences, as well as 360\(^{\circ }\) videos and photos, enhancing the sense of presence and immersion. The actual devices have changed over time, from the nVisor helmet augmented by a set of trackers in Ryan et al. (2010) and Haydar et al. (2011) to the Oculus Rift in Jain et al. (2016). The cost of these technologies varies; for example, Ougradar and Ahmed (2019) successfully used a low-cost, screenless Virtoba VR HMD (retail price at the time of release in 2016 was about 30 USD) together with an iPhone 6 for a study on anxiety reduction during dental extractions, as opposed to the Oculus Rift (retail price at the time of release in 2016 was around 500 USD), used by many other studies (e.g., Magrini et al. (2016); Markowitz et al. (2018); Secci et al. (2019)). In some instances (e.g., Yeo et al. (2020)), participants reported that the headsets were uncomfortable and bulky, highlighting that while effective in creating immersive environments, HMDs are not without their drawbacks.

4.2.5 Mobile devices

In scenarios where broadening the accessibility of the experience to a larger population is a priority, AR experiences and 3D reconstructions can be presented via mobile devices rather than HMDs. This approach provides a straightforward and low-cost alternative, enabling users to interact with three-dimensional content without the need to acquire and set up more complex HMD systems. For instance, in an educational setting, Verdes et al. (2021) employed mobile devices to allow students to engage with 3D reconstructions of marine creatures on their own devices. On the other hand, Barrile et al. (2021) and Liestøl et al. (2021) created AR experiences of underwater heritage sites that visitors can interact with on-site using their mobile devices. While the level of immersion is very limited in this case, the use of mobile devices is particularly beneficial in contexts where the primary objective is to introduce the experience to a broader, potentially less technologically equipped audience or in the location of the site, accessible via visitors’ own devices.

Moreover, as highlighted earlier, mobile devices may also function as cost-effective HMDs. By using the device’s screen, low-cost HMDs such as Virtoba VR (and similar VR adapters for smartphones) provide a VR experience. Although these may not match the visual quality and immersion level of standalone VR systems, they offer a more accessible and budget-friendly alternative.

4.2.6 Cameras and sensors

Underwater cameras and sensors are employed for data collection, capturing real-time imagery of underwater environments and generating hybrid experiences by reconstructing real underwater scenes using photogrammetry. FishCam showcases an example of a cost-effective solution for recording underwater footage (Mouy et al. 2020). Continuous improvement in modern consumer-level camera technology enhances its accessibility and widespread use, reducing the need to introduce custom-built devices for many applications. For example, GoPro camera footage was used to create the shipwreck 3D model by McCarthy and Martin (2019). In simulated scenarios, sensors may take on a different role. A notable example is the MIT Media Lab’s scuba diving simulator project, ‘Amphibian’. This project employed multiple sensors to simulate a more realistic diving experience, such as a breathing sensor to detect the user’s breathing patterns for accurate buoyancy control, motion sensors to detect leg movements, as well as haptic gloves with motion detection and physical feedback (Jain et al. 2016).

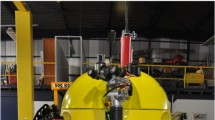

4.2.7 Remotely operated vehicles (ROVs)

Unmanned submersible devices equipped with sensors, motors and, most notably, cameras for underwater telepresence are invaluable in exploring underwater environments. They offer us the opportunity to observe, interact with and record data, all without requiring a human operator to be physically present underwater. ROVs are pivotal both in facilitating real-time interactions with the environment (as in Pallant et al. (2016)) and in creating reconstructed underwater scenes (Magrini et al. 2016). Some papers feature complex professional ROVs, such as NAUTIUS, accessible only to large organisations (as in Jenkyns et al. (2015) and Brennan et al. (2018)). However, the general trend towards the democratisation of ROV technology is evident in papers such as Chouiten et al. (2012), where a low-cost consumer-level ROV web-based remote teleoperation experience is presented. While capable of providing the most direct (real and close to real-time) interaction with underwater environments, the use of ROVs is limited owing to logistical difficulties: ROVs can only be deployed by personnel close to the sea and can only be used under favourable weather conditions. Most importantly, the complexity of controlling ROVs means that, in the studies identified, they were operated by trained operators, thereby limiting their broader application in some domains.

4.2.8 Games and storytelling

While not a technology, building narratives through gaming and storytelling plays a continuous and critical role in underwater heritage (Liarokapis et al. 2020; Liestøl et al. 2021) and environmental outreach projects (Calvi et al. 2017), serving as an essential factor in increasing engagement with the underwater scenes. Numerous studies, as well as the games and experiences identified through reviews on platforms like YouTube, demonstrate the significance of narrative elements in fostering user engagement, which results in positive outcomes in educational and environmental projects. It has already been established that accurate sensory inputs can trigger a sense of physical presence even though users might cognitively recognize their current reality. Yet the cognitive side is not always anchored in reality, as the user can exercise their imagination based on a narrative (Agrawal et al. 2020).

The impact of narrative elements on viewer response was highlighted by Nelson et al. (2020). In this study, participants were presented with the same underwater video footage accompanied by either a positively or negatively valenced environmental message. The variation in the storytelling significantly influenced the participants’ reactions, as evidenced by the differing amounts they donated to a local charity after viewing the videos.

5 User studies in underwater telepresence

Underwater telepresence, at its core, is a human-computer interaction technology. As such, the development of any underwater experience is not complete without making the technology accessible to end users. The insights gained from user studies can be used not only for further improving the technology but also for gauging its acceptance and adoption and evaluating the impact of the technology on healthcare or educational outcomes. Around half of the papers featured a user study, as summarised in Tables 5 and 6. Among the experiences derived from grey literature, ‘theBlu’ was formally evaluated in a few academic studies.

5.1 Sample

Characteristics of participant samples play an important role in establishing the depth and detail of the study. Given the diverse objectives and methodologies of the studies analysed, there was a significant variation in sample sizes and populations. The median sample size across these studies was 25, the mean was approximately 90, and the standard deviation was 213. The smallest sample size was five individuals in Pallant et al. (2016), while the largest encompassed 1006 participants in Nelson et al. (2020). As a rule, usability studies, designed to get initial feedback and validation of the prototype from the target audience, feature much smaller sample sizes. This approach is deemed sufficient for their purposes and facilitates qualitative data collection. Conversely, healthcare interventions, or larger outreach projects, featured larger sample sizes. Studies feature populations of different ages, with Calvi et al. (2017) reporting the youngest participant of the evaluation on an immersive underwater game experience to be under one year old, whereas Yeo et al. (2020) documented the oldest participant, aged 75, in a study evaluating coral reef aesthetics. Numerous studies recruited students from schools and higher education institutions. Heritage and environmental outreach projects often involved event and exhibition visitors in user studies (e.g., Markowitz et al. (2018)). While many studies recruited members of the general population, there were instances where it was crucial to recruit individuals with a particular professional background. For example, a group of divers was enlisted to evaluate the realism of a diving simulator ‘Amphibian’ (Jain et al. 2016), and a group of 11 archaeologists participated in Haydar et al. (2011) to evaluate an interactive simulated platform for marine archaeology research.
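The gap between the reported median and mean points to a strongly right-skewed distribution of sample sizes, in which a few very large studies pull the mean upwards. The minimal sketch below illustrates this effect. Apart from the smallest (5) and largest (1006) values reported above, the sample sizes in the list are hypothetical placeholders and not the values extracted in this review; `summarise` is an illustrative helper name.

```python
import statistics

# Hypothetical sample sizes for illustration only. Only the minimum (5)
# and maximum (1006) correspond to values reported in the review.
sample_sizes = [5, 8, 12, 20, 25, 30, 50, 1006]


def summarise(sizes):
    """Return the median, mean, and sample standard deviation of the sizes."""
    return {
        "median": statistics.median(sizes),
        "mean": statistics.mean(sizes),
        "sd": statistics.stdev(sizes),
    }


summary = summarise(sample_sizes)
print(summary)
```

With a single very large study in the list, the mean and standard deviation exceed the median by a wide margin, which is why the median is the more representative summary of a typical study size.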

5.2 Qualitative analysis

Qualitative analysis plays an important role in evaluating user experiences with underwater telepresence technologies, offering deep insights into the user experiences, elucidating quantitative outcomes, and gathering suggestions for improvement. The majority of user studies incorporated some form of qualitative data collection, primarily utilizing open-ended questionnaires or semi-structured interviews, enabling participants to express their views, impressions, and feedback in their own words. A common element across many studies has been the use of exit interviews, conducted either alongside quantitative data collection or independently (as in Jain et al. (2016); Agrawal et al. (2019); Liarokapis et al. (2020); Kelleher et al. (2022)). A few studies undertook formal thematic analysis to categorize and interpret qualitative data systematically. For example, Gerringer et al. (2023) highlighted key themes in student reflections on their educational experiences with underwater presence technologies, such as transformed ocean perceptions, insights into scientific processes, and ‘it is okay to ask questions’. Many studies (e.g., Pallant et al. (2016); Agrawal et al. (2019)) opted to present direct quotations from participants that primarily captured general impressions, suggestions, and feedback without delving into the underlying themes or patterns in the data. For example, in the context of evaluation of a simulated remotely operated vehicle (ROV) training engine, de la Cruz et al. (2020a) collected user feedback, where users rated its potential usefulness and provided concrete suggestions for improvement, such as introducing delays in the communication between the ROV and operator to enhance the realism of the simulation. The qualitative findings also play a crucial role in understanding the quantitative outcomes better. For instance, Bruno et al. (2018) used one-on-one interviews to explore preferences for HMDs vs. 3D TVs in studying interactions with an underwater heritage site.

5.3 Quantitative analysis and standardised scales

The usability studies featured a mix of task performance logs and questionnaires. The task performance was evaluated by various metrics, such as the duration of task completion, achieved scores, or other quantifiable characteristics. In many cases, standardised tools were used to evaluate user experience, attitudes, or psychological states. A comparative experimental setup was often used in the user studies identified in this review. Such comparisons spanned different populations and different types of content and, in healthcare and educational studies, examined changes pre- and post-intervention. Notably, comparisons also focused on different levels of immersion, such as 2D TV displays, 360-degree videos, and interactive VR.

5.3.1 General usability measures

The Client Satisfaction Questionnaire is a tool designed to evaluate client satisfaction with services received, particularly in healthcare and social service settings (Attkisson and Zwick 1982). It was featured by Kelleher et al. (2022) as part of a pilot trial focusing on the feasibility and acceptability of using ‘theBlu’ virtual experience for reducing pain in cancer patients. The National Aeronautics and Space Administration Task Load Index (NASA-TLX) (Hart and Staveland 1988) is extensively recognised as a multi-faceted tool for assessing workload, effectiveness, and performance in system evaluations. It was used by Liarokapis et al. (2020) to compare different delivery modalities of a serious game dedicated to underwater heritage exploration and by Elor et al. (2021) to compare different levels of immersion for ROV control tasks. Aside from NASA-TLX, Elor et al. (2021) used multiple other standard usability evaluation tools: John Brooke’s system usability scale (SUS) (Brooke 1996), recognised for its straightforward and efficient ‘quick-and-dirty’ approach to measuring system usability through a short, 10-question survey, and the Intrinsic Motivation Inventory, a validated questionnaire for measuring a user’s internalised motivations when interacting with a system (Markland and Hardy 1997). The virtual reality sickness questionnaire (Kim et al. 2018), a tool for evaluating cybersickness symptoms, was also used by Elor et al. (2021) for measuring the discomfort experienced by participants while using the virtual reality system. This questionnaire was designed to assess adverse symptoms of VR usage, such as nausea, dizziness, disorientation, and headache. An adaptation for VR usability, the virtual reality user satisfaction evaluation-advanced version (Kalawsky 1999), was used by Katsouri et al. (2015) to assess the effectiveness and user-friendliness of virtual reality applications in researching an underwater heritage site.
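To make concrete how the 10-question SUS survey yields a single usability score, the sketch below applies the standard SUS scoring rule: odd-numbered items contribute the response minus 1, even-numbered items contribute 5 minus the response, and the sum is multiplied by 2.5 to give a score from 0 to 100. The function name `sus_score` and the example responses are illustrative assumptions and are not taken from any of the reviewed studies.

```python
def sus_score(responses):
    """Compute a System Usability Scale score (0-100).

    `responses` holds ten answers in questionnaire order, each on a
    1-5 scale (1 = strongly disagree, 5 = strongly agree).
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 responses")
    total = 0
    for item, answer in enumerate(responses, start=1):
        if not 1 <= answer <= 5:
            raise ValueError("each response must be between 1 and 5")
        # Odd items are positively worded, even items negatively worded.
        total += (answer - 1) if item % 2 == 1 else (5 - answer)
    return total * 2.5


# Hypothetical participant answering neutrally (3) on every item.
print(sus_score([3] * 10))
```

Because negatively worded items are reverse-scored, a participant who answers every item neutrally lands at the midpoint of the scale.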

5.3.2 Immersion and presence evaluation

In the realm of VR technologies, several standard tools have been identified for evaluating immersion and presence in user studies. The Slater-Usoh-Steed presence questionnaire (SUS-P) (Slater et al. 1994) was used by Katsouri et al. (2015) and Elor et al. (2021) for measuring immersion. This tool focuses on how users perceive their existence within a virtual space, particularly their sense of ‘being there’ and the extent to which the virtual environment becomes the dominant reality. The presence questionnaire (PQ) by Witmer and Singer (1998) assesses various aspects of presence in more detail than SUS-P, covering aspects such as involvement, adaptation, sensory fidelity, and interface quality, using a series of questions that respondents rate based on their virtual reality experiences. This questionnaire was used in the studies conducted by Katsouri et al. (2015), Jain et al. (2016), and Calvi et al. (2017). The iGroup presence questionnaire (IPQ) represents the most recent advancement among questionnaires designed to measure presence. Building upon both PQ and SUS-P (Schubert et al. 2001), it was applied in Jain et al. (2016) to assess the impact of various components of the underwater diving simulator ‘Amphibian’, such as the breathing simulator and haptic feedback, on different dimensions of user presence, using IPQ and PQ together. The presence and reality judgement questionnaire (Baños et al. 2000) is designed to measure two key constructs in virtual environments: presence (the subjective experience of being in one place or environment even when physically situated in another) and reality judgment (how users perceive and interpret the realism or believability of the virtual environment). This questionnaire is particularly useful in studies aimed at understanding users’ perception of the virtual environment’s realism and their emotional and cognitive engagement. Yeo et al. (2020) employed it to explore different aspects of how immersive underwater experiences affect feelings of boredom.

5.3.3 User’s internal state evaluation

Healthcare applications use standard tools to evaluate the user’s internal state and the efficiency of the intervention. In evaluating the effect of VR on pain management in cancer patients, Kelleher et al. (2022) conducted a comprehensive evaluation of the user experience using a wide range of specialized tools: the brief pain inventory (Cleeland and Ryan 1994), the coping strategies questionnaire (Rosenstiel and Keefe 1983), the chronic pain self-efficacy scale (Anderson et al. 1995), and a visual analogue scale (Delgado et al. 2018). In Agrawal et al. (2019), the adolescent paediatric pain tool (Savedra et al. 1993), a specialised pain questionnaire designed for young patients, was used to assess the effectiveness of a relaxing underwater VR experience for pain management in children with sickle cell disease. To explore how underwater VR experiences can alleviate boredom, Yeo et al. (2020) used two standardised scales: the summary of positive and negative experiences scale (Diener et al. 2010) and the multidimensional state boredom scale (Fahlman et al. 2013). Ougradar and Ahmed (2019) evaluated how a similar experience can help with anxiety during dental extractions using the modified dental anxiety scale (Humphris et al. 1995).

5.3.4 Evaluating environmental attitudes

An inherent feature of underwater telepresence is its ability to bring the marine environment closer to the user. In Markowitz et al. (2018), the new ecological paradigm (NEP) (Dunlap et al. 2000) and connectedness to nature scale (CNS) (Mayer and Frantz 2004) were used to measure changes in environmental attitudes and a sense of connectedness to nature before and after experiencing immersive VR content about ocean acidification. Yeo et al. (2020) used the Inclusion of Nature in Self scale (Schultz 2002), along with other measures, to evaluate how underwater experiences with different levels of immersion could improve mood and reduce boredom.

6 Applications of underwater telepresence technologies

Out of the forty-three academic publications and twenty-three videos, we identified six main applications. It was common for a paper to encompass elements of more than one application, resulting in significant overlap. Owing to the methodology of the review, the number of studies identified for each application is not indicative of the development of the field. However, it provides an overview of the technologies used and user study practices and methods across the different application domains. Here, we present some key patterns in the technologies employed, user study methodologies, and outcomes for each application, illustrated through the most notable examples.

6.1 Environmental awareness

The studies examined in this section sought to enhance public knowledge and understanding of marine ecosystems and their associated challenges. A common underlying hypothesis across these papers is that by raising public awareness about the hidden wonders and issues beneath the ocean surface, people’s environmental attitudes and behaviours will subsequently be positively influenced. The key papers for this application focus on improving public awareness using simulated VR experiences (Calvi et al. 2017; Markowitz et al. 2018) or 360\(^{\circ }\) images or video footage (Vercelloni et al. 2018; Nelson et al. 2020) that depict underwater environments. The visual experience was often enriched with a narrative or a game focusing on a particular aspect of environmental protection, such as ocean acidification (Markowitz et al. 2018; Nelson et al. 2020) or sustainable diving practices (Calvi et al. 2017). All the interactions designed for these user evaluations were short (\(< 10\) min). The user studies explored various aspects of user involvement in environmental awareness issues. They measured general experience engagement by using PQ (Calvi et al. 2017), aesthetic perceptions of the environment using statistical modelling (Vercelloni et al. 2018), educational outcomes and environmental attitudes by employing NEP and CNS (Markowitz et al. 2018), and behaviour changes by tracking donation amounts (Nelson et al. 2020). These studies exhibit a strong commitment to detailing their user study designs. As an example, Nelson et al. (2020) conducted the most extensive study reviewed, with a total of 1006 participants, to compare the effect of a flat vs. 360\(^{\circ }\) video on ocean acidification. The participants’ demographics were well recorded: age, sex, proximity to the sea, income, education level, and religiosity were all reported. Underwater experiences have been shown to enhance environmental awareness and concern in the identified studies. Markowitz et al. (2018) found that immersive VR experiences were particularly effective in conveying complex scientific information about marine life and ocean acidification. This led to increased learning and awareness among various groups of young adults. VR experiences have proven to effectively communicate conservation messages, with Nelson et al. (2020) showing that such experiences can increase donations to environmental causes more effectively than traditional media. These studies indicate that underwater experiences, especially immersive ones, can raise environmental awareness and promote pro-environmental attitudes and behaviours. However, as these experiences were short and mostly occurred on a one-off basis, there is room for further research to evaluate the effect of long-term interactions on environmental attitudes.

6.2 Education

Underwater telepresence is highly effective for educational purposes, enabling students to engage with marine environments beyond traditional field trips. Quite a few studies, as well as educational games identified on YouTube, incorporated educational elements (see Tables 3 and 4). Here, we overview the papers that have educational goals as their primary objective and focus on delivering information about marine life in the higher education context (Pallant et al. 2016; Verdes et al. 2021; Gerringer et al. 2023). For educational purposes, pre-recorded underwater footage of actual marine environments provided students with an opportunity to engage with the marine environments. Gerringer et al. (2023) used open-access video from NOAA OCEANOS ROV missions. Pallant et al. (2016) enhanced student engagement in the TREET project by involving them in designing ROV dive plans and actively participating in data collection. Verdes et al. (2021) used 360\(^{\circ }\) diving video and AR representations of marine specimens to substitute field trips during COVID restrictions. While the technologies involved are less immersive (2D videos in Pallant et al. (2016) and Gerringer et al. (2023), and a 360\(^{\circ }\) video in Verdes et al. (2021)), the interactions were grounded in the educational context, offered real footage, and lasted longer. The three studies exploring the potential of underwater telepresence in higher education directly evaluated students’ experiences using a mix of surveys and interviews. Gerringer et al. (2023) involved 20 undergraduate biology students in learning from NOAA videos, and Verdes et al. (2021) engaged 23 master-level students studying biodiversity with virtual field trips. In the context of the TREET project, Pallant et al. (2016) adopted a live-streaming approach, bringing the undersea world to 8 undergraduate students via an ROV. A key limitation identified across these studies is their reliance on self-reported findings rather than objective educational outcome evaluations. In all cases, the students reported positive outcomes, such as increased understanding of the research process and increased motivation. In particular, Gerringer et al. (2023) noted that students expanded their view of the oceans. Having initially perceived the ocean as empty, after the project they described this deep-water habitat with terms such as ‘diverse population’, ‘fantastic sites’, and ‘full of life’. Although the experiences were positive in terms of engagement and self-reported understanding of the research process, Verdes et al. (2021) noted that, while their VR scuba diving video provided a valuable experience, the students mentioned that it could not replace the impact of an actual field trip.

6.3 Healthcare applications

We identified several studies focusing on therapeutic interventions in the healthcare sector. The studies used underwater VR experiences for various purposes, such as pain relief distraction (Agrawal et al. 2019; Ougradar and Ahmed 2019; Kelleher et al. 2022), mood improvement (Yeo et al. 2020), and even serving as a neutral control environment (Ryan et al. 2010), highlighting the relaxing nature of underwater scenes. Beyond academic research, the VR application ‘Holofit’ exemplifies the innovative application of underwater experiences in healthcare. In all studies, participants were introduced to a simulated underwater environment through the use of an HMD. Yeo et al. (2020) and Kelleher et al. (2022) employed ‘theBlu’ VR experience for alleviating pain and improving mood. The KindVR Aqua VR paediatric experience was used by Agrawal et al. (2019) for pain management in children and young adults. A set-up combining the Aquarium VR mobile application with the Virtoba VR headset showcased a cost-efficient VR solution in Ougradar and Ahmed (2019) for reducing anxiety during tooth extractions. While most of the technologies used offered some level of interactivity, this aspect was not the primary focus of the studies.

‘Holofit’ also includes an HMD. It tracks the user’s performance on compatible training equipment and changes the environment accordingly, incorporating gamification elements to increase engagement and motivation. The primary goal of most studies was to test a specific intervention in a clinical setting among targeted populations. These included 20 adults with stage IV colorectal cancer experiencing moderate pain (Kelleher et al. 2022), 50 dental patients (Ougradar and Ahmed 2019), and 30 paediatric patients with sickle cell disease hospitalised for vaso-occlusive events (Agrawal et al. 2019). Yeo et al. (2020) compared the efficacy of increased immersion for mood improvement with the general adult public, even though the intervention was primarily intended for use among individuals in long-term care facilities. The studies used validated tools to evaluate the intervention effects by measuring self-reported pain or anxiety levels. While most of the studies were focused on feasibility, some employed control groups or comparison conditions; for example, Yeo et al. (2020) used a between-participant design comparing theBlu with 360\(^{\circ }\) video and traditional 2D media for mood enhancement. Their findings indicated that increased immersion is associated with greater improvements in mood. In all the user studies conducted, the desired effect was achieved: the underwater experience successfully helped alleviate boredom, served as a welcome distraction during anxiety or pain episodes, or acted as a relaxation tool. The rigorous reporting of user demographics, typical for the healthcare domain, could be used as an example to improve the reporting and evaluation of user experiences in other applications. However, some studies lacked control groups, and the short duration of the experiences used for these studies limited our understanding of how effective these interventions might be when applied repeatedly as a part of a regular pain, boredom, or anxiety management plan.

6.4 Access to underwater heritage

While underwater heritage has significant historical value, it often remains inaccessible to the general public and is challenging for researchers to access. The digital exploration of underwater heritage sites serves a dual purpose: preserving cultural relics and democratizing access to these often hard-to-reach sites. Underwater heritage outreach was one of the most represented fields, with almost half of the studies identified in the review falling under this category. Underwater heritage exploration experiences are typically hybrid. They are created by visualising 3D reconstructions (obtained via acoustic or optical methods by divers, autonomous underwater vehicles, or ROVs) of the underwater sites augmented by a dynamic simulated environment (e.g., marine life, light effects). One remarkable example is the study by Nawaf et al. (2021), where the authors described the use of photogrammetry for continuously monitoring the excavation of the Xlendi site, an Archaic period shipwreck in the western Mediterranean, over ten years. They further developed a VR and a mobile application to enable the general public to explore the site.

The delivery of these experiences relied on some mode of visualization of the 3D reconstructions of an underwater site, such as through a mobile application or computer screen (e.g., Barrile et al. (2021)), sometimes incorporating AR elements (Barrile et al. 2020; Liestøl et al. 2021), 3D TV (e.g., Bruno et al. (2018)), or, in most studies, with HMD (as in McCarthy and Martin (2019), as shown in Fig. 4). In general, the experiences were either static or relied on controllers to allow users to move around the site; notably, Skamantzari et al. (2020) implemented the ability of the visitors to navigate the site by physically walking. The experiences were often presented in a broader context, either at a location close to the site or at a dedicated exhibition. As the experience of exploring underwater heritage is based on a combination of interaction and learning, most of the experiences incorporate a tour, story-telling elements, or gamification. For example, Plecher et al. (2022) developed a VR simulator for diving with game-like interactive elements designed to explore the wreck of a Roman merchant ship, while Bruno et al. (2017) offered a similar experience for interacting with the underwater archaeological site of Cala Minnola (Fig. 2). The increasing availability of immersive technology and the growing general interest in the topic are evident from an overview of non-academic literature. Two games ‘Titanic VR’ and ‘SubROV: Underwater Discoveries’ were developed that both allow the users to interact with underwater heritage while blending entertainment with educational elements.

Fig. 4 (a) Project leader Kevin Martin takes local residents working in the slaughterhouse directly opposite the wreck site on a virtual dive during archaeological fieldwork in 2018. Although the residents knew the basic history and location of the wreck, none of them had ever seen the wreck. (b) A scan of a 17th-century ship model was used as the basis of the reconstruction, with small alterations such as Vermeer’s Milkmaid added to the stern (McCarthy and Martin 2019)

The studies that include formal evaluation mainly involved members of the general public, such as museum visitors (McCarthy and Martin 2019). The goal of the studies was to evaluate the overall experience and usability aspects as well as the degree of immersion. The methods used included a mix of qualitative impressions, surveys and questionnaires, as well as logs of interactions. Notably, Haydar et al. (2011) evaluated three platforms in an underwater archaeology navigation scenario: an immersive system with an HMD, a semi-immersive system with a large 3D screen, and an AR map system. The results indicated that immersive conditions improved navigation task performance and, in general, were preferred by the participants.

In this section, we can trace the development of underwater telepresence for heritage outreach projects from the early ones described in Haydar et al. (2011) to more mature applications, some of which have been implemented in local museums and exhibitions (for example, Bruno et al. (2017); McCarthy and Martin (2019); Secci et al. (2019)). This application has extended beyond academic studies, finding commercial success in products like the video game ‘Titanic VR’, identified through a YouTube search, which includes elements of Titanic shipwreck exploration. Overall, the widespread application of underwater telepresence is well-established, and we expect it to continue expanding beyond academia, driven by the growing accessibility of related technologies.

6.5 ROV teleoperation and simulations

ROV control is a complex domain with ongoing research dedicated to creating better control and training tools. We will consider papers that describe underwater telepresence in the context of operating underwater vehicles, including ROV operation design (Omerdic et al. 2014; Elor et al. 2021), overviews of ROV operations for marine science (Bruzzone et al. 2003; Jenkyns et al. 2015), and a large number of training simulators, like de la Cruz et al. (2020a), and many more found in the grey literature. The Internet has revolutionized marine science by enabling researchers worldwide to collaboratively participate in field research. Jenkyns et al. (2015) and Bruzzone et al. (2003) describe large-scale institutional efforts for ROV teleoperation in marine science. On a smaller scale, Omerdic et al. (2014) described using an internet connection with a mini ROV, including multiple user interface elements like voice, Kinect, and joystick.

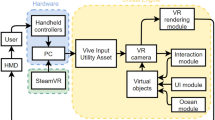

Complete systems with HMD (Fig. 5) interfaces were built for underwater exploration as early as the 1990s (Hine et al. 1994). However, ROV user interface design is still an ongoing research area, as demonstrated by Elor et al. (2021). In their study, the authors evaluated the user performance for fine ROV control tasks using stereoscopic VR vs. monoscopic VR, and desktop teleoperation conditions.

Fig. 5 ROV involved in Antarctic exploration and an operator in an HMD. The operator can choose to display either live stereo video, computer-generated graphics, or a mixture of the two in the helmet (Hine et al. 1994)

de la Cruz et al. (2020a) described an immersive VR-simulated environment of an ROV with a physically limited actuated hand. Outside of the academic literature, we identified a few other simulated ROV environments, such as ‘Sentio’ by SMD Subsea Technology. Most of the commercial solutions did not use VR, offering a 2D experience.

The design of user studies varies significantly in all papers. For example, Bruzzone et al. (2003) demonstrated that the system functioned in principle by engaging marine scientists worldwide in remote research through telepresence application. On the other side of the scale, de la Cruz et al. (2020a) and Elor et al. (2021) provided detailed evaluations with 12 and 25 participants, respectively. The studies mainly engaged individuals with a high level of prior technology usage experience since the technologies developed are intended for professional applications. de la Cruz et al. (2020a) differentiated between experienced ROV operators and novices to estimate the simulation effectiveness as a training tool, finding that novices took approximately five times longer than experts to complete tasks.

Elor et al. (2021) demonstrated that increased immersion improves control performance, thus showing that using HMDs for ROV control, particularly stereoscopic VR, can improve the ROV control performance of experienced operators. When it comes to simulations, it is intriguing that the realism of the simulation is perceived differently from the realism of the experience. Participants in the study by de la Cruz et al. (2020a) suggested reducing the image update rate to match the normally available bandwidth. It is evident that the use of ROVs has long moved from the laboratory setting to become a daily necessity in many domains. Despite their widespread adoption, challenges in operating ROVs persist. Every year, advancements are made in user interfaces, human-robot interaction solutions, and simulations, aiming to enhance safety, improve user experience, and reduce the learning curve for operators. While there is a trend towards smaller, more affordable ROVs and improvements in teleoperation interfaces, operating ROVs remains complex, limiting its accessibility to a broader audience.

6.6 Entertainment

Underwater telepresence inherently holds significant entertainment value. It is not surprising that most of the sources from YouTube were dedicated to the entertainment purpose of the experience, such as games, as shown in Table 4. While many experiences discussed in other sections include elements of gamification and storytelling (Calvi et al. 2017; Bruno et al. 2019; Liarokapis et al. 2020; Plecher et al. 2022), this section is dedicated to those that identify entertainment as their primary goal.

Many of the technologies employed in these papers demonstrate a commitment to immersive and interactive experiences. For example, the ‘Amphibian’ diving simulator by Jain et al. (2016) replicated a range of underwater sensations using a motion platform, HMD, and sensory feedback devices to simulate scuba diving, resulting in a multi-modal system shown in its prototype form in Fig. 6. Meanwhile, without employing an HMD, the ‘physical simulation’ ‘Bathysphere Project’ by Explorandia also utilized a motion platform. This system offers users an immersive experience without relying on VR through the synchronised motion of a mock-up ‘submarine’ on a motion platform, user controls, and a live stream from a camera situated in a nearby pond.

Among the experiences identified on YouTube, ‘theBlu’ was by far the most referenced, including in academic literature. Both Yeo et al. (2020) and Kelleher et al. (2022) have used it in therapeutic settings. The other two noteworthy mentions are games ‘Titanic VR’ (Immersive VR Education Ltd.) and ‘SubROV’ (sqr3lab, in collaboration with the Bermuda Institute of Ocean Sciences and the Schmidt Ocean Institute). These games feature highly realistic environments that incorporate underwater heritage and educational elements. Many of the games use the submarine as the user’s point of view (POV).

Out of all the experiences with entertainment potential, ‘Amphibian’ was most extensively tested, involving a group of 12 experienced divers. This study, conducted by Jain et al. (2016), aimed to compare the simulator with real-life scuba diving experiences. It utilized standard tools for evaluating presence and realism and gathered qualitative data through interviews.

We can draw several broad conclusions from the extensively studied multi-modal ‘Amphibian’. The breathing simulation, which closely replicated the user’s body movements during inhalation and exhalation, was deemed the most realistic aspect by participants of the user studies. Users appreciated the audio effects and the visuals, although they were not perceived as entirely realistic. However, the kinesthetic feedback, particularly the hand-swimming motions, received less favourable reviews owing to discomfort and unnatural feel. The tactile interaction with marine life received mixed responses, and the temperature simulation aspect was largely unnoticed by participants. This study, alongside the success of commercially available experiences, suggests that the interactivity of audio and visual elements is crucial for creating an engaging experience. This section did not include many academic sources, resulting in a shortage of formal user studies to explore the mechanics of engagement within these experiences, aside from the ones performed for ‘Amphibian’ (Jain et al. 2016) or ‘theBlu’ (Yeo et al. 2020; Kelleher et al. 2022). However, the commercial success of the other games could be an indicator of their engaging nature. The high level of interactivity creates a sense of presence and immersion, leading to more satisfying and long-term interactions. There is potential to apply the features found in entertainment applications to other areas, including healthcare, education, and environmental outreach, to create similarly engaging experiences.

Multi-modal system for virtual diving developed at the MIT Media Laboratory (Jain et al. 2016)

7 Discussion

Based on the patterns identified in our review, we have formulated a set of design recommendations and future directions. These recommendations are intended to guide the development of underwater telepresence applications, with a focus on inclusivity and engagement, particularly for older populations. Beyond our project’s specific objectives, these recommendations also aim to enhance digital accessibility and environmental connectivity. They cover the main aspects of creating immersive experiences, practical considerations, and potential areas for future research. By adhering to these guidelines, developers can create more accessible, engaging, and impactful underwater telepresence experiences that help bridge the digital divide and create a deeper connection with marine environments.

7.1 Prioritize immersive technologies

The extensive use of immersive technology highlights its importance in delivering an engaging user experience, particularly in the context of underwater telepresence. Immersive technologies, such as HMDs, 3D screens, and AR, were used consistently across all the applications, encompassing both real and simulated experiences.

Our review reveals a trend towards examining the impact of an enhanced sense of presence by comparing different delivery modes. A positive impact was explicitly confirmed in certain studies across various applications: Haydar et al. (2011) examined interactions with underwater heritage reconstructions; Elor et al. (2021) focused on aiding ROV operator tasks; Nelson et al. (2020) worked on improving environmental attitudes by demonstrating a change in the amount donated to conservation organisations; and Yeo et al. (2020) found that an increased sense of presence was linked to improved mood. In some cases, the preference for greater physical immersion could be explained by the effect of novelty (Berlyne 1970; Silvia 2006), as mentioned in Bruno et al. (2018), and evaluations of long-term interactions would be necessary to establish the effect definitively. Nevertheless, these studies collectively demonstrate that increased immersion enriches the user experience and leads to more effective outcomes in educational and operational contexts. We argue that new applications should consider incorporating immersive technologies, as they can significantly improve the user experience.

7.2 Leverage interactivity

Several examples demonstrate the importance of audio-visual interactivity in building a sense of presence and engaging experiences. The most physically immersive technologies, such as ‘Amphibian’ (Jain et al. 2016) and ‘Bathysphere Project’, exemplify this by aiming to stimulate multiple senses and blending physical with virtual interactions. User testing by Jain et al. (2016) for ‘Amphibian’ revealed that interactive audio and visual elements (even if they are not particularly realistic) are crucial for maintaining a strong sense of immersion. Other features, such as motion platforms, were found to be less important. ‘Holofit’ effectively uses interactive responsiveness, gamification, and compelling visuals to enhance exercise motivation. Interactivity emerges as a core feature in cultivating a sense of presence and immersion, which in turn can heighten engagement. Responsive audio-visual cues in the environment should therefore be considered, particularly when designing experiences for healthcare, environmental, and educational contexts.

7.3 Incorporate narrative elements into interaction

Not all engagement with underwater environments requires physical immersion delivered by immersive technologies. At the other end of the spectrum, in educational settings (exemplified by Pallant et al. (2016); Gerringer et al. (2023)), engagement with the underwater environment often did not rely on immersive technology to change students’ relationship with the sea. This effect was likely achieved through a long-term experience of interacting with actual marine data, akin to the work of marine scientists. Similarly, the success of commercially available games that do not use VR (see Table 4) suggests that narrative elements are effective tools for building user engagement, even without direct physical immersion.

7.4 Factor in accessibility

While increased immersion through immersive technology and interactivity can generally improve outcomes, it should be used with accessibility in mind. Some studies (e.g., Jain et al. (2016); Yeo et al. (2020)) noted the discomfort associated with HMDs owing to their weight and bulkiness. This is a particularly important accessibility factor to consider when designing the technology for healthcare settings.

Another aspect of accessibility is the associated cost of equipment. Although the cost of hardware has been decreasing, making HMDs more accessible to a wider audience, exploring alternatives like smartphone VR headsets (as in Ougradar and Ahmed (2019)) or augmented reality applications (like the ones in Verdes et al. (2021)) could provide even more cost-effective and accessible options when the technology has to be applied at scale. This factor is also of particular importance for practical applications in healthcare or education.

7.5 Balance the practical constraints

Although the technologies discussed in this review have the potential to enhance users’ experiences of feeling underwater, the studies examined highlight practical concerns, particularly regarding the use of ROVs and VR experiences. These concerns were specifically identified from the studies we have reviewed, but it is likely that additional feasibility concerns were not covered in the reviewed material.

While ROVs could provide a high level of engagement and interactivity, an attractive feature for applications such as education and environmental outreach, they are challenging to apply outside professional settings. Admittedly, low-cost set-ups, like those mentioned by Chouiten et al. (2012), are becoming more prevalent. However, in the studies considered, ROVs were only used by trained operators (such as in Bruzzone et al. (2003); Pallant et al. (2016); Elor et al. (2021)), which points to the practical barriers associated with ROV operation.

On the other hand, while simulated environments are reliable and logistically easier to deploy, creating them takes significant time and resources. Studies of environmental awareness and healthcare interventions have shown that, despite being less immersive than fully interactive VR, 360\(^{\circ }\) videos (such as those used in Yeo et al. (2020)) may be a cost-effective way to implement underwater experiences on a larger scale. This is especially the case when they are augmented with synthetic elements for enhanced interactivity, as described by Thompson et al. (2019).

In the future, advancements in human-robot interaction, artificial intelligence, and reductions in computing costs could mitigate these limitations. However, for the time being, these factors should be acknowledged as important considerations in the design process.

7.6 Use a wide range of evaluation methods

The design of a technology with user experience at its core is incomplete without the ability to evaluate the impact on users. In general, the diversity in user study methodologies prevented us from making a direct comparison between different technologies. In many cases (for example Kouřil and Liarokapis (2018); Pehlivanides et al. (2020); Nawaf et al. (2021)), the studies could benefit from more extensive user testing, a larger participant population, or better demographic reporting. We argue that by adopting a more uniform approach to evaluation involving widely recognised and validated instruments, researchers and designers can improve the quality of assessments and contribute to a more cohesive body of knowledge. For instance, using established scales for measuring user experience, such as John Brooke’s System Usability Scale (SUS) or the Presence Questionnaire (PQ), could provide consistent metrics comparable across different studies. Similarly, standard psychological scales could be employed to assess the emotional and cognitive impacts of underwater telepresence, ensuring that findings are reliable and can be compared across different contexts. Finally, qualitative methods should not be overlooked, especially for pilot and small-scale studies, or to augment quantitative findings.
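To illustrate how a standardised instrument yields a single comparable number, the following is a minimal sketch of the conventional SUS scoring rule: ten items rated from 1 to 5, odd-numbered items contributing the rating minus 1, even-numbered items contributing 5 minus the rating, and the sum multiplied by 2.5 to give a score from 0 to 100. The function name `sus_score` and the input format are assumptions made for this example; they are not taken from any of the reviewed studies.

```python
def sus_score(responses):
    """Return the 0-100 SUS score for one participant.

    `responses` is a sequence of ten integer ratings (1-5), in
    questionnaire order. This helper is illustrative only.
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 item responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")

    total = 0
    for item_number, rating in enumerate(responses, start=1):
        if item_number % 2 == 1:
            # Odd-numbered items are positively worded.
            total += rating - 1
        else:
            # Even-numbered items are negatively worded.
            total += 5 - rating
    return total * 2.5


# A participant answering "neutral" (3) to every item.
neutral = sus_score([3] * 10)
```

Because every study that applies this rule reports scores on the same 0 to 100 scale, results can in principle be compared across technologies, which is the kind of consistency argued for above.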

7.7 Explore long-term and real-time interactions

Most studies focus on single, short interactions with an underwater environment, as seen in Jain et al. (2016), Markowitz et al. (2018), and Kelleher et al. (2022), with notable exceptions in educational settings, such as Pallant et al. (2016), Verdes et al. (2021), and Gerringer et al. (2023). We believe there is a place for a long-term study that investigates how repeated interactions influence users.

Similarly, while many studies (as shown in Tables 5 and 6) highlight the role of immersive technology (such as HMDs and 3D screens) in building a sense of connection to the environment or providing therapeutic effects, there remains a gap in understanding how real-time interactions may influence the sense of presence compared to pre-recorded and simulated experiences. We also believe that entertainment-driven applications of underwater telepresence technology can offer valuable insights and inspiration. Video games showcase creative design solutions for immersion and interactivity that encourage long-term interaction and high levels of user engagement. These solutions could benefit other applications, such as education, environmental outreach, and healthcare.

7.8 Consider the potential of artificial intelligence

We did not identify the explicit use of AI tools (beyond some computer vision involved in 3D reconstructions of underwater heritage sites) in any of the technologies reviewed. The potential of AI to transform the field of underwater telepresence therefore remains largely untapped. Currently available AI capabilities could support features that would greatly enhance underwater telepresence technology. These include identifying marine life species (Jalal et al. 2020; Raza and Song 2020), creating interactive stories or visual elements with generative models (Zhu et al. 2023; Bruce et al. 2024), improving underwater footage with advanced computer vision techniques (Singh and Bhat 2023), and refining ROV control (Christensen et al. 2022). All these capabilities are well within the scope of today’s state-of-the-art AI and offer significant benefits for various applications.

8 Conclusions

This review presents a detailed examination of the current state of technology aimed at bridging the gap between individuals onshore and underwater environments, fostering a deeper bond between humanity and the underwater world. It explores the array of technological solutions that have been proposed to convey the underwater experience to those on land for various applications, as well as the impact of these technologies on users. Our study includes a scoping review of relevant literature and an examination of YouTube videos. The resulting findings were synthesised into an overview of the technological advancements, diverse applications, and user experiences, as showcased in a large variety of academic studies and YouTube videos, unified by the general aim of bringing the marine environment closer to individuals onshore. Technological implementations span a complete toolkit of devices engineered to create immersive experiences, such as VR and HMDs, haptic devices, interactive motion platforms, as well as elements of storytelling and gamification tailored to underwater experiences. On the backend, ROVs and cameras are indispensable tools for creating these experiences. Historically, underwater telepresence originated with the operation of ROVs for extensive underwater exploration missions. Over time, enhancements in immersion, presence, affordability, and accessibility have been observed. In professional settings, such as marine research and underwater archaeology, the integration of ROVs with VR and photogrammetry has revolutionised the capacity to discover and document underwater sites on a larger scale than was possible before. On the other hand, simulated or pre-recorded underwater experiences found their application in healthcare, education, and entertainment. In educational and environmental awareness applications, immersive experiences have proven to significantly enhance learning outcomes and foster an emotional connection with the marine environment. 
In healthcare, underwater VR experiences offer a non-pharmacological alternative for managing pain and anxiety, enhancing patient care. This review aims to serve as comprehensive design guidance, covering both the array of technologies available and the methods for evaluating user interaction. Insights from various sources have been distilled into a coherent set of recommendations. Technologically, we emphasize the importance of immersive technologies, interactive engagement, and narrative elements. The technological design must consider practical limitations, such as affordability and user comfort, alongside addressing the challenges inherent in developing and deploying technologies like ROVs for non-professional use. We also highlight areas ripe for further exploration, including long-term and real-time applications in fields such as healthcare, education, and environmental awareness. Additionally, we suggest that AI can significantly improve user experiences. This synthesis is intended to inform and guide future developments in underwater telepresence technology and its applications.

Availability of data and materials

Not applicable.

Code availability

Not applicable.

Notes

NOAAS Okeanos Explorer is a converted United States Navy ship, now an exploratory vessel for the National Oceanic and Atmospheric Administration (further referred to as NOAA).

References