Abstract

Coral reefs are essential ecosystems in the vast expanses of the oceans, nurturing various forms of marine life within their vibrant and expansive structures. However, these underwater paradises face a considerable threat from population explosions of the crown-of-thorns starfish (COTS), which cause extensive damage to scleractinian corals across the Indo-Pacific region. This study addresses a limitation of earlier approaches that relied solely on texture analysis for COTS detection, which is insufficient given the variability of reef substrates. The proposed approach integrates multiresolution analysis using the wavelet transform, edge information, and texture analysis using gray-level co-occurrence probability, from which key Haralick features are extracted for pattern recognition. This captures COTS traits in greater detail, including the numerous sharp spines that cover their upper bodies. The approach considerably improves classification reliability, achieving an accuracy of 95.00% with the eXtreme Gradient Boosting (XGBoost) classifier. Moreover, the model reduces computational and memory requirements, making it more resource-efficient than current models. This advancement improves detection and opens avenues for early intervention and future research. Furthermore, integrating the model with underwater imagery could support citizen science initiatives and autonomous underwater vehicle (AUV) surveys. Empowering trained volunteers and equipping AUVs with this technology could considerably expand coral reef monitoring efforts. Early detection of COTS outbreaks allows shorter response times, potentially mitigating damage and facilitating targeted conservation strategies.


1 Introduction

Coral reefs, built by tiny polyps, are essential underwater ecosystems that host an astounding diversity of marine life within their vibrant, expansive structures. Despite covering less than 0.1% of the ocean floor, they support more than 25% of marine species (Fisher et al. 2015), making them a biodiversity hub of critical importance. Moreover, coral reef organisms are a promising source of potential medical breakthroughs, with possible applications in treatments for such ailments as cancer, arthritis, human bacterial infections, and viral diseases. Beyond their medicinal potential, reefs serve as crucial coastal barriers, offer insights into past climate, and contribute nearly 30 billion USD annually through tourism, fisheries, and coastal protection (Cesar et al. 2003). However, these ecosystems face mounting threats from climate change, unsustainable practices, overfishing of a few species, and growing populations of the crown-of-thorns starfish (Acanthaster planci, COTS), resulting in extensive coral degradation (Van den Hoek and Bayoumi 2017).

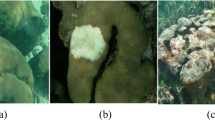

Population explosions of COTS are a leading cause of scleractinian coral mortality across the vast Indo-Pacific coral reefs. These outbreaks, shown in Fig. 1, play a pivotal role in reef degradation and pose considerable ecological and conservation challenges (Deaker and Byrne 2022). Densities between 15 and 100 COTS per hectare indicate a potential outbreak, while densities above 100 COTS per hectare indicate a confirmed outbreak (Dumas et al. 2020). A single adult starfish can consume approximately 10 m\(^2\) of coral per year, and large outbreak populations, which can persist for more than a decade, considerably reduce local coral cover (Keesing and Lucas 1992). Owing to their high reproductive rate, COTS populations in certain regions can grow approximately 5–10 times within 2 to 3 years (Chandler et al. 2023), with notable surges during outbreaks.
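The density thresholds and consumption rate quoted above lend themselves to a quick back-of-the-envelope check. The sketch below encodes the thresholds of Dumas et al. (2020) and the figure of roughly 10 m\(^2\) per adult per year; the function names and status labels are hypothetical, and the area estimate deliberately ignores actual coral cover, regrowth, and the patchy distribution of starfish across a reef.

```python
# Thresholds from Dumas et al. (2020), in COTS per hectare.
POTENTIAL_OUTBREAK_MIN = 15.0   # 15-100 COTS/ha: potential outbreak
CONFIRMED_OUTBREAK_MIN = 100.0  # above 100 COTS/ha: confirmed outbreak

# Approximate coral area eaten per adult per year (Keesing and Lucas 1992), in m^2.
CORAL_EATEN_PER_COTS_M2 = 10.0
HECTARE_M2 = 10_000.0


def outbreak_status(density_per_ha: float) -> str:
    """Map a COTS density (individuals per hectare) onto the cited outbreak categories."""
    if density_per_ha < 0:
        raise ValueError("density must be non-negative")
    if density_per_ha > CONFIRMED_OUTBREAK_MIN:
        return "confirmed outbreak"
    if density_per_ha >= POTENTIAL_OUTBREAK_MIN:
        return "potential outbreak"
    return "below outbreak threshold"


def annual_area_fraction(density_per_ha: float) -> float:
    """Naive fraction of one hectare's area eaten per year.

    Ignores actual coral cover, regrowth, and patchy starfish distribution.
    """
    return density_per_ha * CORAL_EATEN_PER_COTS_M2 / HECTARE_M2


for density in (5, 15, 60, 100, 150):
    print(density, outbreak_status(density), f"{annual_area_fraction(density):.1%}")
```

At 100 COTS per hectare, for example, this naive estimate amounts to 1000 m\(^2\) of coral per hectare per year, or 10% of the area.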

Timely identification, or prevention, of rising starfish densities is the best way to avert or minimize their harmful effects on coral communities. However, our capacity to detect emerging population surges has been notably limited by variations among the methods used to survey COTS. Traditionally, two primary techniques are used. One involves recording reef sections for subsequent COTS counting (Jan et al. 2007; Dirnwoeber et al. 2012), while the other, the Manta Tow Survey, involves counting COTS while being towed behind a boat (Liu et al. 2021). Despite their widespread use, these approaches are labor-intensive and error-prone, frequently underestimating COTS populations in specific regions (Dayoub et al. 2015). With recent advances in edge devices and image analysis, interest in automated systems for surveying COTS has grown (Arima et al. 2014; Bonin-Font et al. 2017; Abbasi et al. 2022), leading to the development of sophisticated survey machines that incorporate these technologies.

The main contributions of this study can be summarized as follows:

(1) An efficient, real-time machine learning framework for COTS classification in reef environments is developed, leveraging a discrete wavelet transform (DWT) to support streamlined surveys, early outbreak identification, and targeted deployment of COTS control teams. The framework reduces computational complexity by using principal component analysis (PCA) to reduce the dimensionality of the feature vector, which speeds up classification and minimizes the model's size for better performance on resource-constrained devices.

(2) Color information and spatial texture analysis using gray-level co-occurrence probability (GLCP) are used to characterize texture and spatial relationships within coral habitats that host COTS and other marine species. Key Haralick features refine pattern recognition to identify characteristic COTS traits, including the sharp spines covering their upper bodies.

(3) The proposed solution exploits the ability of eXtreme Gradient Boosting (XGBoost) to handle many variables, which is important in reef environments where features are often correlated. This considerably increases both the accuracy and speed of COTS classification in reef environments with similar structures, providing researchers with a reliable and effective approach for such ecosystems.

This paper is structured as follows. Section 1 introduces the research scope. Section 2 reviews related work, highlighting existing approaches and their limitations. Section 3 presents the proposed system for recognizing COTS, detailing its architecture, components, and design rationale. Section 4 describes the methodology used to recognize COTS in the dataset, including the techniques and algorithms employed. Section 5 presents the experimental results and findings obtained with this methodology. Finally, Section 6 summarizes the key points, draws conclusions, and proposes avenues for future research.

2 Related work

Existing research faces considerable challenges from color variability and shape complexity in COTS detection. To address these issues, Clement et al. (2005) pioneered the use of local binary patterns (LBPs) for texture-based classification. Recognizing the limitations of color segmentation and shape matching, they identified the distinct thorns as reliable features, prompting detailed texture analysis. However, LBP does not effectively encode color information, which is an effective cue for pattern recognition tasks such as object and scene image classification (Banerji et al. 2013). LBP's focus on local texture patterns may also limit its ability to capture larger spatial context or structural information, potentially hindering the differentiation of COTS from other marine elements. Similarly, Dayoub et al. (2015) observed the shortcomings of prior methods that relied on shape and color for COTS identification. They therefore combined uniform patterns, an extension of LBP, for texture with a histogram of oriented gradients (HOG) for edge information, leveraging the sharpness of the long thorns. By training a random forest classifier on numerous features extracted from images, their approach recognized and even tracked these starfish with high accuracy.
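For readers unfamiliar with LBP, the sketch below computes the basic 8-neighbor LBP code of each interior pixel and checks the "uniform" property (at most two 0/1 transitions around the circle) that underlies the uniform patterns used by Dayoub et al. (2015). It is a minimal NumPy illustration under our own assumptions (3 × 3 neighborhood, radius 1, no interpolation), not the implementation used in either cited study; scikit-image's `skimage.feature.local_binary_pattern` provides a library version with a `method="uniform"` option.

```python
import numpy as np

# Neighbor offsets (dy, dx), clockwise from the top-left pixel; bit k of the code
# is set when neighbor k is at least as bright as the center pixel.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_3x3(gray: np.ndarray) -> np.ndarray:
    """Basic 8-neighbor LBP codes (0-255) for the interior pixels of a 2-D array."""
    g = gray.astype(np.int32)
    h, w = g.shape
    center = g[1:-1, 1:-1]
    codes = np.zeros_like(center)
    for bit, (dy, dx) in enumerate(OFFSETS):
        neighbor = g[1 + dy : h - 1 + dy, 1 + dx : w - 1 + dx]
        codes |= (neighbor >= center).astype(np.int32) << bit
    return codes


def is_uniform(code: int, bits: int = 8) -> bool:
    """True if the circular bit pattern has at most two 0/1 transitions."""
    transitions = sum(
        ((code >> i) & 1) != ((code >> ((i + 1) % bits)) & 1) for i in range(bits)
    )
    return transitions <= 2


rng = np.random.default_rng(0)
patch = rng.integers(0, 256, size=(64, 64))
codes = lbp_3x3(patch)
hist = np.bincount(codes.ravel(), minlength=256)  # 256-bin texture descriptor
uniform_share = sum(hist[c] for c in range(256) if is_uniform(c)) / hist.sum()
print(hist.shape, round(float(uniform_share), 3))
```

Of the 256 possible 8-bit codes, 58 are uniform; in uniform-pattern descriptors these typically get their own histogram bins while all remaining codes share a single bin.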

Efficient and precise object detection methods are central to the evolution of computer vision (Zhao et al. 2019) and have shaped the diverse algorithms integrated into COTS detection methodologies. Pooloo et al. (2021) contributed to this evolution by applying EfficientDet-D0 to their custom dataset for COTS detection; this model is characterized by its weighted bidirectional feature pyramid network and compound scaling strategy (Tan et al. 2020). Sheth and Prajapati (2022) comprehensively compared various object detection models, including YOLOv5, YOLOX, Detectron2, the faster region-based convolutional neural network (Faster R-CNN), and RetinaNet, further broadening the exploration of detection techniques in this domain. Li et al. (2022) proposed deploying YOLOv4 on edge devices, particularly the Nvidia Jetson Xavier, to enable real-time COTS monitoring. Similarly, Nguyen (2022) aimed to optimize the YOLOv5 model for edge deployment using TensorFlow Lite to support a variety of devices. However, both faced strikingly similar obstacles: adapting sophisticated YOLO models to edge devices, where computational demands and hardware compatibility posed considerable difficulties. Moreover, Heenaye-Mamode Khan et al. (2023) introduced a convolutional neural network (CNN) approach with an enhanced attention module. They applied various pretrained CNN models, including VGG19 and MobileNetV2, to their dataset to detect and classify COTS using transfer learning, providing insights into the causal features associated with COTS.

Feature extraction is a crucial step in pattern analysis, as it captures essential shape information to facilitate classification in a formalized way. Its aim is to extract from raw data the information best suited for classification. Image feature extraction techniques include statistical features (first- and second-order statistics), global transformation and series expansion features (such as Fourier transforms, wavelet transforms, gray-level co-occurrence matrices, and LBP), and geometrical and topological features (Kumar and Bhatia 2014). However, certain methods have drawbacks. Gabor filters lack complete shift invariance, making them sensitive to small changes in object position. Similarly, Fourier transforms, although widely used, struggle to capture localized information because their basis functions extend over the entire signal. Notably, the wavelet transform is a promising approach for overcoming the limitations of both Gabor filters and Fourier transforms in image feature extraction.
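The locality argument can be seen on a one-dimensional signal. The NumPy sketch below places a single spike in an otherwise flat 64-sample signal: every Fourier coefficient responds, whereas only one first-level Haar detail coefficient does. The Haar wavelet is used here only because it is the simplest to write out; it is not the wavelet selected later in this study.

```python
import numpy as np


def haar_detail(x: np.ndarray) -> np.ndarray:
    """First-level Haar detail coefficients of an even-length 1-D signal."""
    return (x[0::2] - x[1::2]) / np.sqrt(2.0)


n = 64
signal = np.zeros(n)
signal[37] = 1.0  # a single localized event, e.g. one bright spine tip along a scan line

fourier = np.fft.fft(signal)
details = haar_detail(signal)

# All 64 Fourier coefficients have magnitude 1: the event is spread over every
# frequency, and its position is encoded only in the phases.
print(np.count_nonzero(np.abs(fourier) > 1e-12))
# Only the Haar coefficient whose support covers samples 36 and 37 responds.
print(np.flatnonzero(np.abs(details) > 1e-12))
```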

DWT is a promising approach for improving image classification in intricate underwater settings. Current techniques that rely on shape and color have difficulty distinguishing objects whose textures resemble their surroundings (Dayoub et al. 2015). To address this, prioritizing the analysis of prominent edges, particularly in intricate patterns, becomes crucial. The 2-dimensional discrete wavelet transform (2-D DWT) decomposes an image into one approximation image and three detail images that reveal edge and texture information (Huang and Aviyente 2008), improving detection and segmentation across various scenarios. This adaptability and the detailed information it provides may offer valuable advances, potentially extending to the detection of challenging marine organisms.

3 Proposed working system

Fig. 2 presents the systematic approach for identifying COTS in reef environments. First, preprocessing standardizes all images to a height (h) of 720 pixels, a width (w) of 720 pixels, and 3 color channels, ensuring uniformity across a dataset with varying image sizes. After the training and test images are partitioned, a DWT with Daubechies wavelets is applied, enabling multiresolution analysis while preserving edge detail. This step is crucial for capturing intricate underwater features, improving classification accuracy, and addressing challenges such as varying scales and lighting conditions. In addition, the approach goes beyond traditional grayscale imagery by applying the DWT separately to the red, green, and blue (RGB) channels, deepening the analysis of COTS traits in reef environments. The decomposition is carried out up to level 4, yielding approximation coefficients (cA) and detail coefficients (cD) at each level for the subsequent feature extraction.

Following the wavelet-based analysis, gray-level co-occurrence matrix (GLCM) analysis is applied across the individual RGB channels as the first step of GLCP, extracting key textural and spatial features: 'homogeneity', 'contrast', 'dissimilarity', and 'energy'. Feature normalization and PCA then refine the extracted features for the classification task; PCA retains the dominant structure of the features while simplifying computation, improving the precision and robustness of the classification model. This feature extraction process enables a detailed characterization of COTS traits within intricate reef ecosystems. Finally, the machine learning model is trained with the XGB algorithm on the wavelet-based features complemented by spatial texture analysis. The resulting framework occupies less than a megabyte of storage and requires minimal computational resources, making it well suited to integration with edge devices.
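The normalization and PCA step can be sketched in a few lines of NumPy. This is a minimal illustration under our own assumptions: z-score normalization, PCA computed by singular value decomposition, statistics estimated on the training split only and reused for the test split, and an arbitrary choice of 20 components. The text does not state the normalization scheme or the number of components retained, and the random matrices merely stand in for the extracted features.

```python
import numpy as np


def standardize_fit(X: np.ndarray):
    """Per-feature mean and standard deviation estimated on the training set only."""
    mean = X.mean(axis=0)
    std = X.std(axis=0)
    std[std == 0] = 1.0  # leave constant features unscaled instead of dividing by zero
    return mean, std


def pca_fit(Z: np.ndarray, n_components: int):
    """Principal axes of a standardized (hence zero-mean) matrix via SVD."""
    _, s, vt = np.linalg.svd(Z, full_matrices=False)
    explained_ratio = s**2 / np.sum(s**2)
    return vt[:n_components], explained_ratio[:n_components]


rng = np.random.default_rng(0)
X_train = rng.normal(size=(559, 192))  # placeholder for the 192 extracted features
X_test = rng.normal(size=(140, 192))

mean, std = standardize_fit(X_train)
components, ratio = pca_fit((X_train - mean) / std, n_components=20)  # 20 is arbitrary

Z_train = ((X_train - mean) / std) @ components.T
Z_test = ((X_test - mean) / std) @ components.T  # reuse training statistics
print(Z_train.shape, Z_test.shape, round(float(ratio.sum()), 3))
```

Fitting the scaler and PCA on the training split only keeps information from the test images out of the preprocessing.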

4 Methodology for proposed framework

4.1 Multiresolution analysis with wavelets

This study draws inspiration from Beijbom et al. (2012), who explored coral image classification using color and texture filter banks at multiple scales. The DWT-based methodology shares their emphasis on multiscale analysis while addressing a different task. The DWT is a powerful tool for image analysis that decomposes an image by iteratively applying low- and high-pass filters, as shown in Fig. 3. The low-pass filter condenses the smooth, redundant content of the signal, while the high-pass filter captures fine signal detail. Applying one-dimensional filtering twice, first along one image axis and then along the other, subdivides the image into four distinct sub-bands, low-low (LL), low-high (LH), high-low (HL), and high-high (HH) (Kumar and Bhatia 2014), each representing a different frequency component. For the \(w \times h \times 3\) images in this underwater dataset, the DWT is applied across the three image channels, generating four \(\frac{w}{2} \times \frac{h}{2} \times 3\) sub-band images.
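A single decomposition level can be written out with the Haar filter pair, the shortest orthonormal wavelet, to show how each of the three channels of a \(w \times h \times 3\) image yields four half-size sub-bands. Haar is used here only for brevity; the study itself uses Daubechies wavelets, for which a library such as PyWavelets would typically be used (e.g. `pywt.dwt2(image, "db2", axes=(0, 1))`, which returns `(cA, (cH, cV, cD))` and produces slightly larger sub-bands because of boundary extension). The mapping of the LH and HL labels onto horizontal and vertical detail also varies between references.

```python
import numpy as np

INV_SQRT2 = 1.0 / np.sqrt(2.0)


def haar_dwt2(img: np.ndarray):
    """One level of the orthonormal 2-D Haar DWT, applied independently to each channel.

    img has shape (h, w, c) with even h and w. Filtering runs along the height axis
    first and then along the width axis. Returns (LL, LH, HL, HH), each (h/2, w/2, c).
    """
    x = img.astype(np.float64)
    lo = (x[0::2] + x[1::2]) * INV_SQRT2  # low-pass along height
    hi = (x[0::2] - x[1::2]) * INV_SQRT2  # high-pass along height
    ll = (lo[:, 0::2] + lo[:, 1::2]) * INV_SQRT2  # low along height, low along width
    lh = (lo[:, 0::2] - lo[:, 1::2]) * INV_SQRT2  # low along height, high along width
    hl = (hi[:, 0::2] + hi[:, 1::2]) * INV_SQRT2  # high along height, low along width
    hh = (hi[:, 0::2] - hi[:, 1::2]) * INV_SQRT2  # high along both axes
    return ll, lh, hl, hh


rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(720, 720, 3))  # stand-in for a resized RGB frame
bands = haar_dwt2(image)
print([b.shape for b in bands])  # four (360, 360, 3) sub-band images
```

Because the filters are orthonormal, the total energy (sum of squared values) of the four sub-bands equals that of the input image, which is one way to see that the decomposition retains all of the original signal information.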

Each level thus splits the image into frequency sub-bands while halving its width and height; the process is iterated four times, so the final sub-bands are approximately \(\frac{1}{16}\) of the original width and height. The DWT is used because of its multiresolution properties: it preserves both high- and low-frequency content and facilitates the extraction of discriminative multiscale characteristics. Because fine-scale and large-scale information are separated into the wavelet detail and approximation coefficients, respectively, and the decomposition coefficients retain all of the original signal information, multiscale features can be extracted from them directly. While inspired by the multiscale analysis of Beijbom et al. (2012), the present approach differs in using the DWT to split image details into distinct frequency sub-bands. It also incorporates color information by decomposing each RGB channel and then extracting wavelet and GLCM texture features, an approach intended to preserve discriminative features across scales in underwater image analysis and evaluated in Section 5.

Wavelet transforms involve two types of functions: scaling (or approximation) functions, denoted by \(\varphi\), and wavelet functions, denoted by \(\psi\). In the DWT, the scaling function \(\varphi\) produces the approximation coefficients and the wavelet function \(\psi\) produces the detail coefficients; the particular pair used depends on the wavelet family and the properties desired for the analysis.

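The DWT approximation coefficients of a discrete signal \(f(x)\) can be written as follows:

$$\begin{aligned} W_{\varphi }(a, b) = \frac{1}{\sqrt{M}} \sum \limits _{x} f(x)\, \varphi _{a, b}(x), \qquad \varphi _{a, b}(x) = 2^{a/2}\, \varphi \left( 2^{a} x - b\right) , \end{aligned}$$

where \(\frac{1}{\sqrt{M}}\) is a normalization factor (M being the number of signal samples) that makes the wavelet transform energy-preserving, or unitary. In addition, a and b control the scaling and translation of \(\varphi\), respectively.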

In exploring DWTs, various wavelets, each characterized by its scaling function \(\varphi\) and offering distinct properties for signal decomposition, were considered. This study explores six common wavelet families: the Haar wavelet (haar), biorthogonal wavelets ('biorX.Y', where X and Y refer to the orders), Symlets (symN), the Meyer wavelet (dmey), Coiflets (coifN), and Daubechies wavelets ('dbN'), whose filter length increases with the order N. The Haar and biorthogonal wavelets are symmetric, whereas the standard Daubechies wavelets (apart from the Haar case) are asymmetric; Symlets are a nearly symmetric modification of them. Symmetric Daubechies wavelets, in particular, have attracted attention for efficiently representing real signals in multiresolution analysis and for effective image enhancement and restoration (Lina 1997; Stanković and Falkowski 2003). Although biorthogonal wavelets offer advantages for image compression (Krishna et al. 2022), they were not chosen as the primary wavelet because the focus was on image classification. Daubechies wavelets were also preferred over Haar wavelets for several reasons: they offer superior frequency localization (Sharif and Khare 2014), represent image features more efficiently because they have more vanishing moments, capture finer details and variations, and generally yield smoother signal reconstructions (Park et al. 2001).

Daubechies wavelets

This study emphasizes Daubechies (db) wavelets, denoted by dbN, where N represents the number of points in the basic filter; they belong to the family of orthogonal wavelets (Daubechies 1990). Among compactly supported orthogonal wavelets, Daubechies wavelets have the maximum number of vanishing moments for a given filter length. A wavelet with p vanishing moments is orthogonal to all polynomials of degree less than p, so such polynomial components of a signal are represented entirely by the approximation coefficients and produce no detail coefficients. In the notation used here, the number of vanishing moments is half the number of filter points (\(\frac{N}{2}\)). For instance, db2, with a two-point filter, has one vanishing moment and represents constant signal components; db4 has two, covering constant and linear components; and db6 has three, covering constant, linear, and quadratic components. This property supports both precision and computational efficiency in signal and image processing, as it determines which signal components each member of the family represents compactly.
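Vanishing moments can be checked numerically: a wavelet with p vanishing moments yields zero detail coefficients on any polynomial of degree below p. The NumPy sketch below compares the two-point (Haar) filter with the four-point Daubechies filter, which in the point-count notation used here have one and two vanishing moments, respectively. The filter values are the standard closed-form Daubechies coefficients; the function names are illustrative. Naming conventions differ between sources: PyWavelets, for example, indexes Daubechies wavelets by their number of vanishing moments, so there the four-point filter is called `db2` and the two-point filter `db1` (equivalent to `haar`).

```python
import numpy as np

SQRT3 = np.sqrt(3.0)
# Low-pass (scaling) filters: two-point Haar and four-point Daubechies.
HAAR_LOW = np.array([1.0, 1.0]) / np.sqrt(2.0)
DAUB4_LOW = np.array([1 + SQRT3, 3 + SQRT3, 3 - SQRT3, 1 - SQRT3]) / (4 * np.sqrt(2.0))


def high_pass(low: np.ndarray) -> np.ndarray:
    """Quadrature mirror wavelet filter g[k] = (-1)^k * h[L-1-k]."""
    L = len(low)
    return np.array([(-1) ** k * low[L - 1 - k] for k in range(L)])


def detail_coeffs(x: np.ndarray, low: np.ndarray) -> np.ndarray:
    """Downsampled detail coefficients d[n] = sum_k g[k] * x[2n + k].

    Only positions where the filter fits inside the signal are kept, so no
    boundary extension is involved.
    """
    g = high_pass(low)
    L = len(g)
    return np.array([g @ x[2 * n : 2 * n + L] for n in range((len(x) - L) // 2 + 1)])


t = np.arange(32, dtype=float)
signals = {"constant": np.ones_like(t), "linear": 2 * t + 1, "quadratic": t**2}
for name, low in (("Haar", HAAR_LOW), ("4-point Daubechies", DAUB4_LOW)):
    for label, x in signals.items():
        print(f"{name:18s} {label:9s} max|d| = {np.max(np.abs(detail_coeffs(x, low))):.2e}")
```

The loop shows near-zero detail coefficients for the constant signal with both filters and for the linear ramp with the four-point filter only, while the quadratic signal leaves nonzero details for both.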

To exploit both spectral and spatial information, we decomposed the three color channels (red, green, and blue) of each image using a single-level 2-D wavelet transform with a Daubechies (db2) filter. This decomposition split each channel into four sub-bands: LL, LH, HL, and HH (see Fig. 3). The LL sub-band primarily contains a smoothed approximation, while the LH, HL, and HH sub-bands contain detail information at different orientations. This process yielded 12 sub-band images per image (3 color channels \(\times \;4\) sub-bands). We repeated the procedure for a second, third, and fourth level of decomposition, yielding a further 36 sub-band images. In total, 48 wavelet sub-bands were extracted per image, forming the basis for texture feature generation.
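The bookkeeping behind the 48 sub-bands can be sketched as a loop that decomposes each channel and then re-decomposes only the LL band at the next level, as in a standard wavelet pyramid (our reading of the text). Haar again stands in for db2 to keep the sketch short; with a Daubechies filter and library boundary extension (for example PyWavelets' default `symmetric` mode), the sub-bands come out slightly larger than the exact halves shown here. If each sub-band is later summarized by the four GLCP measures of Section 4.2, the result is 48 × 4 = 192 values, matching the feature count given in Section 4.3; this mapping is our interpretation.

```python
import numpy as np

INV_SQRT2 = 1.0 / np.sqrt(2.0)


def haar_dwt2(x: np.ndarray) -> dict:
    """One orthonormal 2-D Haar level on an (h, w, c) array with even h and w."""
    lo = (x[0::2] + x[1::2]) * INV_SQRT2
    hi = (x[0::2] - x[1::2]) * INV_SQRT2
    return {
        "LL": (lo[:, 0::2] + lo[:, 1::2]) * INV_SQRT2,
        "LH": (lo[:, 0::2] - lo[:, 1::2]) * INV_SQRT2,
        "HL": (hi[:, 0::2] + hi[:, 1::2]) * INV_SQRT2,
        "HH": (hi[:, 0::2] - hi[:, 1::2]) * INV_SQRT2,
    }


def multilevel_subbands(img: np.ndarray, levels: int = 4) -> list:
    """Return (level, channel, sub-band name, 2-D array) for every channel and level.

    Only the LL band is decomposed again at the next level.
    """
    out = []
    approx = img.astype(np.float64)
    for level in range(1, levels + 1):
        bands = haar_dwt2(approx)
        for name, band in bands.items():
            for ch, ch_name in enumerate("RGB"):
                out.append((level, ch_name, name, band[:, :, ch]))
        approx = bands["LL"]
    return out


image = np.random.default_rng(0).integers(0, 256, size=(720, 720, 3))
subbands = multilevel_subbands(image, levels=4)
print(len(subbands))  # 3 channels x 4 sub-bands x 4 levels = 48
print(sorted({(level, band.shape) for level, _, _, band in subbands}))
```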

4.2 Texture feature generation

This study uses GLCP for precise texture feature extraction from COTS images. GLCP is a robust statistical method that accounts for spatial relationships between pixels by capturing how often pixel pairs with specific gray-level values occur at a predefined spatial offset. It is computed from the GLCM, which encodes the spatial distribution of gray-level values within the image. Combined with wavelet decomposition, which captures detailed texture information, this approach extracts edge features from the long thorns of the starfish, increasing the robustness of the classification. The probabilistic interpretation provided by GLCP further refines the description of spatial texture and enables quantitative measures such as contrast, energy, homogeneity, and dissimilarity, which, as supported by the literature (Mokji and Bakar 2007; Kiaee et al. 2019), capture intricate texture and edge information.

Rather than merely counting co-occurrences within a fixed window, this method describes spatial texture through \(C_{ij}\), the conditional probability of the gray-level pair (i, j) occurring at a specific displacement. G denotes the number of quantized gray levels, spanning the tonal range of the image from 0 (absolute black) to \(G-1\) (pure white), and \(P_{i,\,j}\) counts how often gray levels i and j occur as neighboring pixels separated by a specific displacement in the x- and y-directions. The GLCP is obtained by normalizing these counts:

$$\begin{aligned} C_{ij} = \frac{P_{i,\,j}}{\sum \limits _{m, n=0}^{G-1} P_{m,\,n}}. \end{aligned}$$

To characterize COTS texture beyond simple intensity counts, four key Haralick features were studied: contrast, energy, homogeneity, and dissimilarity (Haralick et al. 1973). These features are computed from the GLCP of the sub-bands at all four wavelet decomposition levels to summarize their texture information.

(1) Contrast (CON): COTS often exhibit more distinctive features and color variations than the surrounding coral reef (Dayoub et al. 2015). Contrast captures these local variations, making it easier to differentiate the starfish from the background.

$$\begin{aligned} \text {Contrast}(\text {CON}) = \sum \limits _{i, j=0}^{G-1} C_{i j}(i-j)^2. \end{aligned}$$(4)

(2) Energy (EN): Energy is high when a few gray-level pairs dominate the GLCP, that is, for orderly, regular textures. It can therefore highlight the structured appearance of COTS and help identify regions where the distinctive patterns of the starfish stand out from the coral reef background.

$$\begin{aligned} \text {Energy}(\text {EN}) = \sum \limits _{i, j=0}^{G-1} C_{i j}^2. \end{aligned}$$(5)

(3) Homogeneity (HOM): COTS may present regions with uniform color or texture. By emphasizing areas with similar gray levels, homogeneity helps identify these uniform patches on the starfish, contributing to its recognition.

$$\begin{aligned} \text {Homogeneity}(\text {HOM}) = \sum \limits _{i, j=0}^{G-1} \frac{C_{i j}}{1 + (i - j)^2}. \end{aligned}$$(6)

(4) Dissimilarity (DIS): Dissimilarity measures the differences between adjacent pixel values. For COTS identification, it helps highlight areas where the texture of the starfish deviates considerably from that of the surrounding coral, aiding anomaly detection.

$$\begin{aligned} \text {Dissimilarity}(\text {DIS}) = \sum \limits _{i, j=0}^{G-1} C_{i j} |i - j|. \end{aligned}$$(7)
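The GLCP normalization and Eqs. (4)-(7) can be sketched directly in NumPy. The number of gray levels (8 in the second example), the single horizontal offset of one pixel, the linear quantization of real-valued wavelet coefficients, and the non-symmetric pair counting are our assumptions for illustration; the text does not specify them. Library alternatives exist, such as scikit-image's `graycomatrix` and `graycoprops`, but `graycoprops` defines "energy" as the square root of Eq. (5) and reports Eq. (5) itself as "ASM".

```python
import numpy as np


def quantize(band: np.ndarray, levels: int = 8) -> np.ndarray:
    """Linearly map a real-valued sub-band onto integer gray levels 0..levels-1."""
    lo, hi = float(band.min()), float(band.max())
    if hi == lo:
        return np.zeros(band.shape, dtype=np.int64)
    q = np.floor((band - lo) / (hi - lo) * levels).astype(np.int64)
    return np.clip(q, 0, levels - 1)


def glcp(q: np.ndarray, levels: int, dy: int = 0, dx: int = 1) -> np.ndarray:
    """Count pairs (q[y, x], q[y + dy, x + dx]) into P and normalize to C = P / sum(P).

    Only non-negative offsets are handled; pairs are not symmetrized.
    """
    h, w = q.shape
    first = q[: h - dy, : w - dx]
    second = q[dy:, dx:]
    P = np.zeros((levels, levels))
    np.add.at(P, (first.ravel(), second.ravel()), 1.0)
    return P / P.sum()


def haralick4(C: np.ndarray) -> dict:
    """Contrast, energy, homogeneity, and dissimilarity as in Eqs. (4)-(7)."""
    i, j = np.indices(C.shape)
    return {
        "contrast": float(np.sum(C * (i - j) ** 2)),
        "energy": float(np.sum(C**2)),
        "homogeneity": float(np.sum(C / (1.0 + (i - j) ** 2))),
        "dissimilarity": float(np.sum(C * np.abs(i - j))),
    }


# Small 4-level example image; horizontal neighbors at distance 1.
q = np.array([[0, 0, 1, 1], [0, 0, 1, 1], [0, 2, 2, 2], [2, 2, 3, 3]])
print(haralick4(glcp(q, levels=4)))

# The same features for a (random stand-in) wavelet sub-band quantized to 8 levels.
band = np.random.default_rng(0).normal(size=(45, 45))
print(haralick4(glcp(quantize(band, 8), levels=8)))
```

Whether the original implementation averages over several offsets or angles is not stated; computing one set of four values per sub-band is the simplest reading.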

Algorithm 1 Feature extraction from images

4.3 Classification

Classification, a core machine learning task, assigns data to predefined classes based on their features. This study compares four supervised classifiers: (1) XGB, (2) random forest classifier (RFC), (3) logistic regression (LR), and (4) support vector machines (SVMs) with a radial basis function kernel. XGB is highly effective because of its ensemble learning approach: the iterative boosting process combines predictions from multiple decision trees, each correcting the errors of its predecessors, to increase overall accuracy (Chen and Guestrin 2016). Its strength lies in handling intricate feature interactions and complex relationships, which makes it advantageous for diverse classification tasks. Using the 192 features generated by combining multiresolution and texture analysis across all four levels of wavelet decomposition, XGB exploits this comprehensive information to improve its predictions. Algorithmically, it minimizes a regularized cost function by adding decision trees sequentially; each tree is fitted to the errors of its predecessors, and the regularization term, together with gradient-based optimization using first- and second-order derivatives of the loss, lets it model complex relationships within the feature space. This capability yields superior classification performance in the reef ecosystem study and, because XGB handles many correlated variables well, increases both the accuracy and speed of COTS classification, providing researchers with a dependable and efficient approach for such environments.
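For reference, the regularized objective given by Chen and Guestrin (2016) makes the sequential tree fitting described above concrete. At boosting round \(t\), a new tree \(f_t\) with \(T\) leaves and leaf weights \(w_j\) is fitted to a second-order approximation of the loss around the previous prediction \(\hat{y}_i^{(t-1)}\) (up to a constant), which yields a closed-form optimal weight for each leaf \(j\) containing the training instances \(I_j\); \(\gamma\) and \(\lambda\) are regularization hyperparameters. For the binary cross-entropy loss on raw scores, with predicted probability \(p_i\), the derivatives are \(g_i = p_i - y_i\) and \(h_i = p_i(1 - p_i)\).

$$\begin{aligned} \tilde{\mathcal {L}}^{(t)}&= \sum \limits _{i=1}^{n} \left[ g_i f_t(\mathbf {x}_i) + \tfrac{1}{2} h_i f_t^2(\mathbf {x}_i)\right] + \Omega (f_t), \qquad \Omega (f) = \gamma T + \tfrac{1}{2}\lambda \sum \limits _{j=1}^{T} w_j^2,\\ g_i&= \frac{\partial \, l\big (y_i, \hat{y}_i^{(t-1)}\big )}{\partial \hat{y}_i^{(t-1)}}, \qquad h_i = \frac{\partial ^2 l\big (y_i, \hat{y}_i^{(t-1)}\big )}{\partial \big (\hat{y}_i^{(t-1)}\big )^2},\\ w_j^{*}&= -\frac{\sum _{i \in I_j} g_i}{\sum _{i \in I_j} h_i + \lambda }. \end{aligned}$$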

5 Results and discussion

5.1 Datasets and evaluation metrics

Three distinct datasets were combined into a consolidated dataset of 699 images, almost evenly split between the two classes: 350 COTS and 349 non-COTS images. The primary source was the CSIRO COTS Detection Dataset by Liu et al. (2021), designed to address coral loss induced by COTS outbreaks on the Great Barrier Reef. It comprises 23,500 underwater images with a width (\(\widehat{w}\)) of 1280 pixels and a height (\(\widehat{h}\)) of 720 pixels, 4919 of which depict COTS, including frames with multiple starfish. A subset of 140 images (70 COTS and 70 coral environments/non-COTS) was taken from this collection, excluding consecutive frames to reduce the risk of the model learning near-identical viewing angles during training, which could result in overfitting during testing. The second source was the Heron Island Coral Reef Dataset (HICRD), comprising 6003 low-quality, 3673 good-quality, and 2000 restored images. This dataset contains only underwater coral environments, with no COTS in any frame. From HICRD, 100 coral reef images were selected, emphasizing low-light conditions in line with findings that COTS are 27% more likely to be found in low-brightness areas than in well-lit areas (Kayal et al. 2017; Ling et al. 2020). The third source, an open Kaggle page also used by Heenaye-Mamode Khan et al. (2023), contributed 280 COTS and 179 non-COTS images. The curated dataset, assembled with a deliberate strategy to prevent overfitting, enabled training and evaluation of the model across diverse scenarios, including various tonalities and textures that depend on environment and depth.
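The per-source counts above can be tallied directly. The short plain-Python sketch below sums them and reproduces the 80/20 partition described in the next paragraph, assuming the test share is rounded up, as scikit-learn's `train_test_split` does for a fractional `test_size`; the dictionary keys are only labels.

```python
import math

# (COTS, non-COTS) image counts per source, as stated in Section 5.1.
sources = {
    "CSIRO COTS Detection Dataset": (70, 70),
    "HICRD": (0, 100),
    "Kaggle": (280, 179),
}

cots = sum(c for c, _ in sources.values())
non_cots = sum(n for _, n in sources.values())
total = cots + non_cots
print(cots, non_cots, total)

# 80/20 split with the test share rounded up.
n_test = math.ceil(0.2 * total)
n_train = total - n_test
print(n_train, n_test)
```

Twenty percent of 699 is 139.8, which rounds up to 140 test images and leaves 559 for training.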

The classifier models are evaluated using accuracy, precision (P), recall (R), and F1 score, which provide insight into the effectiveness and real-time performance of the classification process. The dataset is split into 80% for training and 20% for testing, giving 559 training images and 140 test images. During the initial experiments, accuracy was the primary metric across all algorithms (XGB, RFC, LR, and SVM) and across the various wavelet families, allowing their effectiveness and suitability for this classification task to be assessed. In this setup, XGB, RFC, and LR used the binary cross-entropy loss function, while SVM used the hinge loss function.
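The four metrics and the two loss functions named above follow standard definitions, sketched below in NumPy for binary labels with COTS as the positive class. The label encoding and function names are our illustrative choices; in practice, library implementations such as scikit-learn's `accuracy_score`, `precision_score`, `recall_score`, `f1_score`, `log_loss`, and `hinge_loss` would typically be used.

```python
import numpy as np


def classification_metrics(y_true, y_pred) -> dict:
    """Accuracy, precision, recall, and F1 for binary labels (1 = COTS, 0 = non-COTS)."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    tp = int(np.sum((y_pred == 1) & (y_true == 1)))
    fp = int(np.sum((y_pred == 1) & (y_true == 0)))
    fn = int(np.sum((y_pred == 0) & (y_true == 1)))
    tn = int(np.sum((y_pred == 0) & (y_true == 0)))
    accuracy = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


def binary_cross_entropy(y_true, p, eps=1e-12) -> float:
    """Mean log loss for predicted COTS probabilities p (clipped to avoid log(0))."""
    y = np.asarray(y_true, dtype=float)
    p = np.clip(np.asarray(p, dtype=float), eps, 1.0 - eps)
    return float(-np.mean(y * np.log(p) + (1.0 - y) * np.log(1.0 - p)))


def hinge_loss(y_true, scores) -> float:
    """Mean hinge loss; labels {0, 1} are mapped to {-1, +1}, scores are raw SVM margins."""
    y = 2.0 * np.asarray(y_true, dtype=float) - 1.0
    return float(np.mean(np.maximum(0.0, 1.0 - y * np.asarray(scores, dtype=float))))


y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 1, 0, 0, 1, 1, 1, 0]
print(classification_metrics(y_true, y_pred))
print(binary_cross_entropy([1, 0], [0.9, 0.2]), hinge_loss([1, 0], [2.0, -0.5]))
```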

5.2 Wavelet selection and evaluation

Experiments were conducted with various wavelets from different families to evaluate the effectiveness of wavelet-based and GLCP statistical measures for COTS detection, as presented in Table 1. After assessing performance at the first level of decomposition, several promising wavelets were selected for further analysis at higher decomposition levels: Haar, Symlets (sym2, sym4, sym6, sym8), Coiflets (coif2), biorthogonal (bior2.2), and Daubechies (db2, db4, db6). Wavelets with poor performance and high computational costs were excluded from further consideration.

Wavelets with higher vanishing moments, such as Symlet variants with more than 5 vanishing moments (10 filter points) and Daubechies wavelets with more than 4 vanishing moments (8 filter points), were excluded for several technical reasons. Although higher-order wavelets may capture more detailed information, not all of it is relevant or discriminative for the classification task; some of the finer details may be noise or irrelevant variations that confuse the classifier. In addition, because the starfish appear relatively small in the dataset, wavelets with higher vanishing moments, and hence longer filters, may fail to capture the subtle texture details required for accurate classification. Meyer wavelets were likewise excluded because they performed poorly at capturing features relevant to COTS detection. This evaluation made it possible to identify and focus on the most effective wavelet variants for the task, considering both performance and computational cost.

5.3 Higher-order decomposition analysis

Wavelet decomposition reveals successive layers of fine detail (textures, edges) alongside progressively smoother representations, and this layer-by-layer analysis provides richer information for diverse image-processing tasks. Initially, we used level 1 decomposition, which captures the overall structure and dominant textures of the starfish. At higher levels (2, 3, 4), hidden details such as subtle variations in spine texture, slight differences in arm shape, and even internal structures became more visible in high-resolution images, as presented in Table 2. Because each level represents the image at a different resolution, features can be analyzed at multiple scales, and integrating features from higher decomposition levels can give the machine learning model richer information, potentially improving discrimination and classification accuracy.

After evaluating performance across decomposition levels, we observed that several wavelet variants, namely 'sym2', 'sym4', 'sym8', and 'db2', yielded notable results with decomposition up to level 4. Of these, the 'db2' Daubechies wavelet (two filter coefficients, one vanishing moment) was the most effective. Beyond level 4, however, the approximation image had shrunk to less than approximately \(\frac{1}{64}\) of its original size, rendering further decomposition ineffective: additional levels contributed mainly redundant features, so accuracy held steady up to level 5 and then declined, prompting us to discontinue further decomposition levels.
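
The shrinkage can be made concrete with a little arithmetic. Assuming each level halves both image dimensions (exact for dyadic sizes under a periodized transform; padded transforms with longer filters keep slightly more samples), the level-L approximation retains \(4^{-L}\) of the original pixels, that is, 1/64 at level 3 and 1/256 at level 4. The 256x256 patch size below is hypothetical and is not taken from the paper.

```python
from fractions import Fraction


def approx_shape(height, width, level):
    """Approximation-subband shape after `level` DWT steps, assuming each step
    halves both dimensions (rounding up), as in a periodized transform."""
    for _ in range(level):
        height, width = (height + 1) // 2, (width + 1) // 2
    return height, width


def pixel_fraction(height, width, level):
    """Fraction of the original pixel count retained by the approximation."""
    h, w = approx_shape(height, width, level)
    return Fraction(h * w, height * width)


H, W = 256, 256  # hypothetical patch size, for illustration only
for level in range(1, 7):
    h, w = approx_shape(H, W, level)
    print(f"level {level}: {h}x{w}, {pixel_fraction(H, W, level)} of the pixels")
```

By level 5 a 256x256 patch is reduced to an 8x8 approximation, which leaves very few samples from which to estimate co-occurrence statistics.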

The models were then evaluated on the refined feature set. The XGB and RFC models performed strongly, and both showed promise for further improvement with larger training datasets. In contrast, the performance of the SVM model plateaued beyond a certain training-set size, indicating that additional data yielded little further gain (Fig. 4); we therefore discontinued its use in subsequent analyses. In the receiver operating characteristic (ROC) analysis, XGB achieved an area under the curve (AUC) of 0.96, demonstrating its strength in distinguishing COTS from other entities in the reef ecosystem (see Fig. 5). This performance supports the efficacy of XGB and suggests its potential as a cornerstone of future coral reef monitoring and management.
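
The AUC can be interpreted as the probability that a randomly chosen COTS image receives a higher classifier score than a randomly chosen non-COTS image, with ties counted as one half. The minimal sketch below computes it directly from that pairwise definition; the labels and scores are made up for illustration and are not outputs of the paper's model. In practice, `sklearn.metrics.roc_auc_score` computes the same quantity more efficiently.

```python
def roc_auc(labels, scores):
    """AUC as the probability that a random positive outranks a random negative.

    `labels` are 1 (COTS) or 0 (non-COTS); `scores` are classifier outputs.
    Ties contribute one half. Runs in O(P * N), which is fine for illustration.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(pos) * len(neg))


# Hypothetical scores for six images (three COTS, three background).
labels = [1, 1, 0, 0, 1, 0]
scores = [0.9, 0.8, 0.7, 0.3, 0.6, 0.65]
print(f"AUC = {roc_auc(labels, scores):.3f}")
```

Here seven of the nine positive-negative pairs are ranked correctly, giving an AUC of 7/9. An AUC of 0.96 therefore means that about 96% of such pairs are ordered correctly by the classifier's scores.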

5.4 Quality feature analysis

To address redundancy among the 192 generated features, PCA was employed to streamline the feature vector. A series of experiments was then conducted with feature subsets obtained via information gain feature selection: the Top-1, 3, 5, 10, 25, 50, 100, and 150 subsets, each containing the corresponding number of features with the highest information gain, as presented in Table 3. For instance, the Top-1 subset comprises only the single feature with the highest information gain, whereas the Top-50 subset comprises the 50 highest-ranked features.
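
Information gain measures how much knowing a feature reduces uncertainty about the class label, \(IG(Y;X) = H(Y) - H(Y \mid X)\). The sketch below ranks features by this quantity and returns a Top-k subset. The discretization of continuous features into equal-width bins, the bin count, and the function names are assumptions made for illustration; the paper does not specify how its information gain was computed.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (bits) of a list of class labels."""
    total = len(labels)
    return -sum((c / total) * math.log2(c / total) for c in Counter(labels).values())


def information_gain(values, labels, n_bins=4):
    """IG of one continuous feature after equal-width binning (an assumption)."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / n_bins or 1.0  # constant feature: everything in one bin
    bins = [min(int((v - lo) / width), n_bins - 1) for v in values]
    conditional = 0.0
    for b in set(bins):
        subset = [y for y, bb in zip(labels, bins) if bb == b]
        conditional += len(subset) / len(labels) * entropy(subset)
    return entropy(labels) - conditional


def top_k_features(feature_matrix, labels, k):
    """Indices of the k columns with the highest information gain."""
    n_features = len(feature_matrix[0])
    gains = [information_gain([row[j] for row in feature_matrix], labels)
             for j in range(n_features)]
    return sorted(range(n_features), key=lambda j: gains[j], reverse=True)[:k]


# Toy data: column 0 separates the classes, column 1 is noisy, column 2 is constant.
y = [0, 0, 0, 1, 1, 1]
X = [[0.1, 0.5, 1.0], [0.2, 0.1, 1.0], [0.15, 0.9, 1.0],
     [0.9, 0.4, 1.0], [0.8, 0.8, 1.0], [0.95, 0.2, 1.0]]
print(top_k_features(X, y, 2))
```

With the 192-feature matrix in place of the toy data, calling `top_k_features(features, labels, 50)` would yield a Top-50 subset in the sense used in Table 3.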

Systematically reducing the dimensionality of the feature space showed that the models, particularly XGB and RFC, maintained high classification accuracy even with a considerably reduced number of features. Notably, the models consistently achieved high accuracy with only 50 features, confirming that the approach streamlines the classification process without sacrificing predictive performance. This makes the approach practical in scenarios where computational resources are limited or ease of model interpretation is important.

5.5 Model comparison

Thus far, COTS detection has received only limited exploration, with deep learning models showing promise of achieving high testing accuracy. However, these existing models do not fully explain the complexities involved in COTS image classification. Table 4 compares diverse techniques for COTS detection on key metrics, namely accuracy, precision, recall, and F1 score. The proposed method (labeled [Proposed] in Table 4) stands out with accuracy rates of 95.00% and 93.57%, leveraging colored wavelet-guided GLCP in conjunction with XGB. This result is underpinned by integrating features drawn from edge-oriented texture descriptors, which accentuate edge responses in COTS images and thereby supplement texture-based analysis. The approach also achieves high precision, meaning that few non-COTS images are misidentified as COTS. Its substantial performance margin over prior methods, such as the RFC and particle filter (Dayoub et al. 2015) and the enhanced VGG19 with a convolutional block attention module (CBAM) (Heenaye-Mamode Khan et al. 2023), highlights the efficacy of this approach. The framework also outperforms models such as EfficientDet-D0 (Pooloo et al. 2021), further supporting its advantage and representing a considerable advance in COTS detection.
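
For reference, the four metrics compared in Table 4 follow directly from the confusion-matrix counts of a binary COTS versus non-COTS classifier. The sketch below uses the standard definitions; the counts in the usage line are hypothetical and do not come from the paper.

```python
def classification_metrics(tp, fp, fn, tn):
    """Accuracy, precision, recall, and F1 from binary confusion-matrix counts.

    tp: COTS images predicted as COTS     fp: non-COTS predicted as COTS
    fn: COTS images predicted as non-COTS tn: non-COTS predicted as non-COTS
    """
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp) if tp + fp else 0.0  # share of COTS predictions that are correct
    recall = tp / (tp + fn) if tp + fn else 0.0     # share of actual COTS that are found
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)           # harmonic mean of the two
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical counts for a 200-image test set.
for name, value in classification_metrics(tp=90, fp=5, fn=10, tn=95).items():
    print(f"{name}: {value:.4f}")
```

Because F1 is the harmonic mean of precision and recall, it equals \(2TP / (2TP + FP + FN)\), so a model can report high accuracy and precision while a lower recall pulls its F1 score down.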

6 Conclusions

This study introduced a robust framework for enhancing the effectiveness of COTS control programs, which are crucial for maintaining reef resilience in the Indo-Pacific region. By combining texture-based and edge-based features extracted from color images, the method achieved an accuracy of 95.00% in identifying and categorizing COTS across diverse reef structures. This performance, benchmarked against established techniques, can help direct COTS control strategies while keeping computational resource requirements low. Tests of multiresolution analysis using different wavelet families showed that Daubechies (db) wavelets outperformed the others, suppressing smooth background variation while preserving sharp edges and other image features. Because db wavelets have compact support, that is, finite-length filters, their influence is localized within the image, which keeps them compact and reduces computational complexity. These characteristics make db wavelets particularly advantageous for this application.
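
Compact support means that each detail coefficient depends on only a few neighboring samples, so a sharp edge affects only the coefficients whose filter window straddles it, while the vanishing moments suppress a smooth background. The 1-D sketch below illustrates both properties on a synthetic ramp-plus-step signal. It assumes the standard four-tap Daubechies filter and is not the 2-D configuration used in this study; the signal generator is hypothetical.

```python
import math

# Daubechies four-tap (D4) filters: support of four samples, two vanishing moments.
_S3 = math.sqrt(3)
_NORM = 4 * math.sqrt(2)
D4_H = [(1 + _S3) / _NORM, (3 + _S3) / _NORM, (3 - _S3) / _NORM, (1 - _S3) / _NORM]
D4_G = [(-1) ** k * D4_H[3 - k] for k in range(4)]


def detail_coeffs(signal, g):
    """d[n] = sum_k g[k] * x[2n + k] over windows lying inside the signal."""
    n_out = (len(signal) - len(g)) // 2 + 1
    return [sum(g[k] * signal[2 * n + k] for k in range(len(g))) for n in range(n_out)]


def step_on_ramp(length=40, step_at=21, height=10.0, slope=0.5):
    """Smooth linear background with a sharp jump, a 1-D stand-in for an edge."""
    return [slope * m + (height if m >= step_at else 0.0) for m in range(length)]


details = detail_coeffs(step_on_ramp(), D4_G)
edge_hits = [n for n, c in enumerate(details) if abs(c) > 1e-9]
print(f"{len(details)} detail coefficients, nonzero at {edge_hits}")
```

Of the 19 detail coefficients, only those at positions 9 and 10, whose four-sample windows cover the jump at sample 21, are nonzero; the linear background on either side produces no detail response. A longer filter would widen the set of coefficients touched by each edge, which is the localization cost of higher-order wavelets.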

Future improvements, particularly integration with neural network approaches and expansion of the image dataset, are expected to further increase the adaptability and effectiveness of this method in marine conservation. In addition, employing wavelet descriptors for object recognition could extend this technology to real-time video processing. Because wavelet descriptors capture fine details and textures, they are well suited to detecting various marine species and objects within complex underwater environments. Further exploring this approach for video processing is therefore a promising direction.

Availability of data and materials

The data is openly available at https://github.com/dsatyam09/Beyond-Texture.

References

Abbasi A, MahmoudZadeh S, Yazdani A (2022) A cooperative dynamic task assignment framework for COTSbot AUVs. IEEE Trans Autom Sci Eng 19(2):1163–1179. https://doi.org/10.1109/TASE.2020.3044155

Arima M, Yoshida K, Tonai H (2014) Development of a coral monitoring system for the use of underwater vehicle. In: OCEANS 2014-Taipei, Taipei, pp 1–6. https://doi.org/10.1109/OCEANS-TAIPEI.2014.6964462

Banerji S, Sinha A, Liu C (2013) New image descriptors based on color, texture, shape, and wavelets for object and scene image classification. Neurocomputing 117:173–185. https://doi.org/10.1016/j.neucom.2013.02.014

Beijbom O, Edmunds PJ, Kline DI, Mitchell BG, Kriegman D (2012) Automated annotation of coral reef survey images. In: 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, pp 1170–1177. https://doi.org/10.1109/CVPR.2012.6247798

Bonin-Font F, Burguera A, Lisani JL (2017) Visual discrimination and large area mapping of Posidonia Oceanica using a lightweight AUV. IEEE Access 5:24479–24494. https://doi.org/10.1109/ACCESS.2017.2764998

Cesar H, Burke L, Pet-Soede L (2003) The economics of worldwide coral reef degradation. CEEC, Arnhem

Chandler JF, Burn D, Caballes CF, Doll P, Kwong S, Lang B et al (2023) Increasing densities of Pacific crown-of-thorns starfish (Acanthaster cf. solaris) at Lizard Island, northern Great Barrier Reef, resolved using a novel survey method. Sci Rep 13(1):19306

Chen T, Guestrin C (2016) XGBoost: a scalable tree boosting system. In: Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD), San Francisco, pp 785–794. https://doi.org/10.1145/2939672.2939785

Clement R, Dunbabin M, Wyeth G (2005) Towards robust image detection of crown-of-thorns starfish for autonomous population monitoring. In: Proceedings of the 2005 Australasian Conference on Robotics & Automation (ACRA 2005), Sydney, pp 1–8. https://doi.org/10.1109/ACRA.2005.1616460

Daubechies I (1990) The wavelet transform, time-frequency localization and signal analysis. IEEE Trans Inf Theory 36(5):961–1005. https://doi.org/10.1109/18.57199

Dayoub F, Dunbabin M, Corke P (2015) Robotic detection and tracking of crown-of-thorns starfish. In: 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, pp 1921–1928. https://doi.org/10.1109/IROS.2015.7353629

Deaker DJ, Byrne M (2022) Crown of thorns starfish life-history traits contribute to outbreaks, a continuing concern for coral reefs. Emerg Top Life Sci 6(1):67–79. https://doi.org/10.1042/ETLS20210239

Dirnwoeber M, Machan R, Herler J (2012) Coral reef surveillance: infrared-sensitive video surveillance technology as a new tool for diurnal and nocturnal long-term field observations. Remote Sens 4(11):3346–3362. https://doi.org/10.3390/rs4113346

Dumas P, Fiat S, Durbano A, Peignon C, Mou-Tham G, Ham J et al (2020) Citizen science, a promising tool for detecting and monitoring outbreaks of the crown-of-thorns starfish Acanthaster spp. Sci Rep 10:291. https://doi.org/10.1038/s41598-019-57251-8

Fisher R, O’Leary RA, Low-Choy S, Mengersen K, Knowlton N, Brainard RE et al (2015) Species richness on coral reefs and the pursuit of convergent global estimates. Curr Biol 25(4):500–505. https://doi.org/10.1016/j.cub.2014.12.022

Haralick RM, Shanmugam K, Dinstein I (1973) Textural features for image classification. IEEE Trans Syst Man Cybern SMC-3(6):610–621. https://doi.org/10.1109/TSMC.1973.4309314

Heenaye-Mamode Khan M, Makoonlall A, Nazurally N, Mungloo-Dilmohamud Z (2023) Identification of crown of thorns starfish (COTS) using convolutional neural network (CNN) and attention model. PLoS ONE 18(4):e0283121

Huang K, Aviyente S (2008) Wavelet feature selection for image classification. IEEE Trans Image Process 17(9):1709–1720. https://doi.org/10.1109/TIP.2008.2001050

Jan RQ, Shao YT, Lin FP, Fan TY, Tu YY, Tsai HS et al (2007) An underwater camera system for real-time coral reef fish monitoring. Raffles Bull Zool 14:273–279

Kayal M, Bosserelle P, Adjeroud M (2017) Bias associated with the detectability of the coral-eating pest crown-of-thorns seastar and implications for reef management. R Soc Open Sci 4:170396. https://doi.org/10.1098/rsos.170396

Keesing JK, Lucas JS (1992) Field measurement of feeding and movement rates of the crown-of-thorns starfish Acanthaster planci (L.). J Exp Mar Biol Ecol 156(1):89–104. https://doi.org/10.1016/0022-0981(92)90018-6

Kiaee N, Hashemizadeh E, Zarrinpanjeh N (2019) Using GLCM features in Haar wavelet transformed space for moving object classification. IET Intell Transp Syst 13(7):1148–1153. https://doi.org/10.1049/iet-its.2018.5192

Krishna S, Kumar D, Dwivedi VK (2022) Biorthogonal wavelets for multiresolution image compression. In: 2022 2nd International Conference on Power Electronics & IoT Applications in Renewable Energy and its Control (PARC), Mathura, pp 1–7. https://doi.org/10.1109/PARC52418.2022.9726558

Kumar G, Bhatia PK (2014) A detailed review of feature extraction in image processing systems. In: 2014 Fourth International Conference on Advanced Computing & Communication Technologies, Rohtak, pp 5–12. https://doi.org/10.1109/ACCT.2014.74

Li Y, Liu J, Kusy B, Marchant R, Do B, Merz T et al (2022) A real-time edge-AI system for reef surveys. In: Proceedings of the 28th Annual International Conference on Mobile Computing and Networking, Sydney, pp 903–906. https://doi.org/10.1145/3495243.3558278

Lina JM (1997) Image processing with complex Daubechies wavelets. J Math Imaging Vis 7:211–223. https://doi.org/10.1023/A:1008274210946

Ling S, Cowan ZL, Boada J, Flukes EB, Pratchett MS (2020) Homing behaviour by destructive crown-of-thorns starfish is triggered by local availability of coral prey. Proc R Soc B-Biol Sci 287:20201341. https://doi.org/10.1098/rspb.2020.1341

Liu J, Kusy B, Marchant R, Do B, Merz T, Crosswell J et al (2021) The CSIRO crown-of-thorn starfish detection dataset. Preprint at arXiv:2111.14311

Mokji MM, Bakar SARA (2007) Gray level co-occurrence matrix computation based on Haar wavelet. In: Computer Graphics, Imaging and Visualisation (CGIV 2007), Bangkok, pp 273–279. https://doi.org/10.1109/CGIV.2007.45

Nguyen QT (2022) Detrimental starfish detection on embedded system: a case study of YOLOv5 deep learning algorithm and TensorFlow lite framework. J Comput Sci Inst 23:105–111. https://doi.org/10.35784/jcsi.2896

Park JH, Kim KO, Yang YK (2001) Image fusion using multiresolution analysis. In: IGARSS 2001. Scanning the Present and Resolving the Future. Proceedings. IEEE 2001 International Geoscience and Remote Sensing Symposium (Cat. No.01CH37217), Sydney, pp 864–866. https://doi.org/10.1109/IGARSS.2001.976662

Pooloo N, Aumeer W, Khoodeeram R (2021) Monitoring coral reefs death causes with artificial intelligence. In: 2021 IST-Africa Conference (IST-Africa), South Africa, pp 1–9

Sharif I, Khare S (2014) Comparative analysis of Haar and Daubechies wavelet for hyper spectral image classification. Int Arch Photogramm Remote Sens Spatial Inf Sci XL-8:937–941. https://doi.org/10.5194/isprsarchives-XL-8-937-2014

Sheth S, Prajapati DJ (2022) Recognition of underwater starfishes using deep learning. In: 2022 Second International Conference on Next Generation Intelligent Systems (ICNGIS), Kottayam, pp 1–5. https://doi.org/10.1109/ICNGIS54955.2022.10079811

Stanković RS, Falkowski BJ (2003) The Haar wavelet transform: its status and achievements. Comput Electr Eng 29(1):25–44. https://doi.org/10.1016/S0045-7906(01)00011-8

Tan M, Pang R, Le QV (2020) EfficientDet: scalable and efficient object detection. In: 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, pp 10778–10787. https://doi.org/10.1109/CVPR42600.2020.01079

Van den Hoek LS, Bayoumi EK (2017) Importance, destruction and recovery of coral reefs. IOSR J Pharm Biol Sci 12(2):59–63

Zhao ZQ, Zheng P, Xu ST, Wu X (2019) Object detection with deep learning: a review. IEEE Trans Neural Netw Learn Syst 30(11):3212–3232

Acknowledgements

The authors sincerely thank the faculties at K. J. Somaiya College of Engineering, Vidyavihar, for their invaluable guidance throughout the project. Special thanks are also extended to CSIRO and their team for open-sourcing crucial datasets, which enabled global collaboration and facilitated substantial contributions to this work.

Additional information

Edited by: Lin Gao.

Author information

Contributions

Satyam Dubey conceived the research idea, developed the methodology, and drafted the manuscript with critical modifications. Jagannath Nirmal provided guidance and insights throughout the research process, particularly in implementing wavelet transform techniques. Both authors contributed to the conceptualization and design of the study, as well as the analysis and interpretation of data. Satyam Dubey served as the corresponding author, while Jagannath Nirmal provided critical revisions and corrections to the manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Informed consent for publication was obtained from all participants.

Competing interests

No potential conflict of interest was reported by the author(s).

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Dubey, S., Nirmal, J. Beyond texture: unveiling spiny crown-of-thorns starfish with multiresolution analysis. Intell. Mar. Technol. Syst. 2, 16 (2024). https://doi.org/10.1007/s44295-024-00033-4

DOI: https://doi.org/10.1007/s44295-024-00033-4