Abstract

Structural integrity is essential to infrastructure safety, as it helps prevent catastrophic failures and financial losses. Vibration-based damage detection has grown substantially in importance in fields such as civil and mechanical engineering. Concurrently, advances in computational capacity have facilitated the integration of machine learning into damage detection through post-processing algorithms. Nevertheless, these algorithms require extensive data from structure-affixed sensors, which raises computational requirements. To address this challenge, we propose a novel approach that uses pre-trained, image-based convolutional neural networks (CNNs) to identify and assess structural damage. The method employs the wavelet transform and scalograms to convert numerical acceleration data into image data, preserving spatial and temporal information more effectively than conventional Fourier-transform frequency analysis. Six acceleration data channels are collected from carefully chosen nodes on a mini bridge model and a corresponding finite element bridge model to train the CNNs. Training efficiency is further enhanced by applying transfer learning through two pre-trained CNNs, namely Alexnet and Resnet. We evaluate our method using different damage scenarios, and both Alexnet and Resnet show prediction accuracies over 90%.

1 Introduction

The infrastructure in the United States has been a topic of concern in recent years due to its aging and deteriorating condition. A significant number of roads, bridges, and other critical infrastructure assets need repair or replacement. The cost of these necessary improvements is estimated to be in the trillions of dollars, an assertion supported by the American Society of Civil Engineers (ASCE) [2]. The ASCE 2021 Report Card [2] gives a comprehensive evaluation of the country's infrastructure needs, emphasizing the urgency of this issue. For instance, many of the country's roads and bridges are in poor condition, and ASCE gave the nation's roads a grade of D and its bridges a grade of C+. Overall, the state of America's infrastructure is a significant challenge for policymakers and infrastructure stakeholders. Addressing the nation's infrastructure needs will require significant investment, innovative solutions, and long-term planning to ensure the safety, reliability, and sustainability of critical infrastructure. Ensuring the safety and reliability of infrastructure systems therefore relies heavily on effective structural damage detection [11]. By detecting and addressing structural damage early on, engineers and maintenance teams can prevent further deterioration and potentially catastrophic failures [14]. This can help to extend the lifespan of infrastructure assets, reduce maintenance and repair costs, and, most importantly, protect public safety. With the increasing demands on infrastructure systems and the effects of natural disasters and climate change, structural damage detection is more important than ever to ensure that infrastructure systems can continue to serve their intended purposes and provide for the needs of society [35].

Over several decades, many researchers have made significant contributions to the development of techniques for structural damage detection. These methods include vibration-based damage detection, acoustic emission testing, and non-destructive evaluation using advanced sensors and imaging technologies. One study developed a wireless smart sensor system for bridge health monitoring, allowing for real-time data collection and analysis [30]. A review paper discussed various structural health monitoring techniques, including sensors, signal processing, and machine learning algorithms [23]. Nonlinear vibration-based damage detection using variational mode decomposition was explored as another technique [36]. A recent study used ambient vibration measurements and Kalman filtering to detect damage in a laboratory structure in real time [24]. These efforts have helped to advance the field of structural health monitoring and improve the ability of engineers and maintenance teams to detect and address structural damage in a timely and effective manner. Among these methods, modal analysis is recognized as one of the most prevalent techniques for structural damage detection within the field of structural health monitoring [13]. This approach relies on vibration-based structural dynamic behavior: when a structure is damaged, its natural frequencies and mode shapes change.

Researchers have investigated different modal analysis methods, including operational modal analysis [40], empirical mode decomposition [38], and the Hilbert-Huang transform [37], for more accurate and robust damage detection. Li et al. [28] utilized modal analysis to detect damage in a steel frame structure. In addition, a recent study proposed a novel technique for damage detection in a composite structure using a combination of vibration-based modal analysis and a deep learning-based pattern recognition approach [34]. The research showed that changes in the natural frequencies and mode shapes of the structure serve as indicators of the location and severity of the damage. Although vibration-based modal analysis is a powerful tool for detecting structural damage, the technique is subject to limitations that can affect its accuracy and usefulness in certain situations. Modal analysis is highly sensitive to noise and environmental factors (non-stationary signals), which can affect the accuracy of the results [10]. Another challenge is that modal analysis typically demands a large number of sensors and specialized algorithms, rendering it less feasible for smaller entities or those with constrained resources.

Therefore, the method proposed in this research bypasses the need to extract higher mode shapes, as required in modal analysis, which converts the structural dynamic behavior into a numerical representation (i.e., frequencies and mode shapes). To mitigate the high computational requirements of modal extraction and to address the challenge of analyzing non-stationary signals, a graphical representation in the form of scalograms generated from the wavelet transform can be employed to detect structural damage. Unlike modal analysis, which requires complicated algorithms and calculations, scalograms simply represent the measured dynamic signal as graphical information with varying frequencies and durations [1]. These images can then be analyzed to identify changes in the graphical information resulting from structural damage. For example, in a study by Liu and Chang [29], the wavelet transform was used to detect damage in a concrete beam based on changes in the wavelet coefficients. The results showed that the method was effective in identifying the location and severity of damage. Similarly, in a study by Eslami and Ahmadi [12], the wavelet transform was used to detect damage in a steel frame structure. The study demonstrated that the method was able to detect the location of damage with high accuracy. The wavelet transform also provides temporal resolution, which enables the detection of changes in dynamic characteristics over time. These studies demonstrate the potential of the wavelet transform for spatially and temporally resolving damage in structures.

Meanwhile, artificial intelligence (AI) has shown promising results in structural damage detection by enabling automated and intelligent analysis of the large amounts of data generated by sensors installed on structures [28]. AI techniques such as machine learning (ML) and deep learning have been applied to analyze various types of data, including vibration, acoustic, and image data, for detecting structural damage [39]. These methods can learn to identify patterns and anomalies in the data that are indicative of damage. ML models require less computational effort while maintaining a similar or higher level of accuracy compared with traditional approaches such as model updating through finite element methods [4]. Deep learning, in particular, has emerged as a popular technique due to its ability to learn complex features and patterns from large amounts of data. Gu and Cao [18] used convolutional neural networks (CNNs) to detect structural damage, achieving high accuracy on both simulated and experimental data. Chen and Hua [7] utilized transfer learning-based deep neural networks to detect damage in bridge structures. These studies demonstrate the potential of ML-based methods for accurate and efficient structural damage detection.

The proposed method combines the benefits of the wavelet transform and machine learning techniques to detect structural damage. A set of scalograms, which contains the structural frequency domain information, is used as the input features for the ML network. By analyzing the dynamic response of the structure under different damage conditions, machine learning algorithms can predict the severity and location of structural damage. To obtain the dynamic behavior of the structure, a set of accelerometers is attached to it, and the acceleration data collected from various locations are used to generate the scalogram learning features. To decode the structural implications of the scalograms, image-based machine learning algorithms such as CNNs are incorporated into the training process. CNNs are a type of deep learning algorithm that has been highly successful in image and signal processing tasks, including computer vision and image recognition.

The proposed approach for structural damage detection adopts two pre-trained networks, Alexnet and Resnet, as damage detection models. These networks are known for their high efficiency and accurate performance in image detection tasks [20, 25]. By leveraging pre-trained networks, the prior knowledge and parameters of the network can be retained and utilized for new training tasks. This technique is known as transfer learning and can significantly improve the training speed and accuracy of the models. The approach is then evaluated through case studies on a frame bridge model structure. The results demonstrate the effectiveness of the proposed method in accurately identifying and localizing structural damage.

The main original contributions of this research are summarized as follows:

1) Assessing the viability of graphically interpreting structural dynamic behavior under various damage scenarios in laboratory-scale bridge and building models.

2) Examining the effectiveness of employing pre-trained CNNs for image-based machine learning to improve the efficiency and precision of structural damage detection.

3) Investigating the possibility of bypassing current methods for detecting structural damage and establishing a straightforward paradigm for structural health monitoring. This standard is not only beneficial for academic research but also applicable in industrial settings for conducting extensive investigations.

The remaining chapters are structured as follows. Chapter 2 covers the methodology of the proposed research, specifically focusing on frequency domain analysis utilizing wavelet transform. It also delves into the architecture of convolutional neural networks and investigates the application of transfer learning and pre-trained models. Chapter 3 is dedicated to the validation of the proposed method, involving numerical and experimental analysis conducted using a miniature bridge model. Finally, Chapter 4 provides a comprehensive discussion of the research findings, highlighting any limitations encountered and proposing potential avenues for improvement.

2 Methodology

2.1 Vibration-based structural frequency domain analysis

Within structural dynamics, frequency domain analysis is a technique employed to examine how structures respond under harmonic loading conditions. This method is extensively utilized for detecting structural damage. Frequency domain analysis entails converting the time-domain response of a structure into a frequency-domain representation through algorithms such as the Fourier transform, wavelet transform, Laplace transform, Z-transform, and others. The most widely used frequency domain analysis method in structural dynamics is the fast Fourier transform (FFT) algorithm [19]. FFT analysis allows a broader spectrum of signal characteristics to be investigated than time-domain analysis alone. In the frequency domain, individual frequency components can be extracted from a combined signal, allowing specific characteristics to be analyzed one at a time. The FFT has emerged as a fundamental method for system identification and structural health monitoring. It facilitates the extraction of a system's structural responses, including natural frequencies and mode shapes. Figure 1 shows an FFT converting a signal composed of sinusoids to its representation in the frequency domain.

In Fig. 1, a time signal composed of three sinusoidal waves with different frequencies shows three distinct spikes (frequencies) after the FFT. Equation (1) expresses the transformation of the signal from the time domain \(x(t)\) to the frequency domain \(X\left(\omega \right)\):

\[X\left(\omega \right)=\int_{-\infty }^{\infty }x(t)\,{e}^{-i\omega t}\,dt \tag{1}\]

where \(X\left(\omega \right)\) is the complex-valued function in the frequency domain, \(x(t)\) is the function in the time domain, \(\omega\) is the angular frequency, \(t\) is the time variable, and \(i\) is the imaginary unit.
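As a hedged illustration of the three-sinusoid case described for Fig. 1, the following NumPy sketch builds such a signal and recovers its spectral peaks. The sampling rate, record length, and the three component frequencies (2, 5, and 12 Hz) are assumed values chosen for illustration; they are not taken from the figure.

```python
import numpy as np

fs = 100.0                       # assumed sampling rate in Hz
n = 1000                         # assumed record length (10 s of data)
t = np.arange(n) / fs
component_hz = [2.0, 5.0, 12.0]  # assumed frequencies of the three sinusoids

# Time-domain signal: sum of three unit-amplitude sine waves.
x = np.zeros(n)
for f in component_hz:
    x += np.sin(2 * np.pi * f * t)

# One-sided discrete Fourier transform and its frequency axis.
X = np.fft.rfft(x)
freq_axis = np.fft.rfftfreq(n, d=1 / fs)
amplitude = 2 * np.abs(X) / n    # scaled so a unit sine gives a peak near 1

# The three largest spectral lines correspond to the three spikes.
peak_hz = np.sort(freq_axis[np.argsort(amplitude)[-3:]])
print(peak_hz)
```

Because each assumed frequency completes a whole number of cycles in the record, the spikes fall on exact frequency bins; with arbitrary frequencies, spectral leakage would spread each spike over neighboring bins.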

The FFT algorithm is effective at capturing the overall frequency characteristics of a signal that persist throughout its entirety. However, this approach may not be suitable for all signal types, such as signals with short intervals of characteristic oscillation or with amplitudes that vary along the sequence. For example, when a structure is subjected to an earthquake, its dynamic properties may change while the acceleration data are being recorded. These changes can serve as critical indicators of potential structural damage, so measured structural responses may contain anomalous, time-localized characteristics. Because the FFT is only suitable for analyzing stationary signals, it may not accurately capture such anomalous features.

The wavelet transform serves as an alternative method involving the decomposition of a signal into a set of wavelets. In contrast to FFT, the wavelet transform has the capability to furnish both frequency and the corresponding time information linked to these frequencies. This feature enables the analysis of how frequencies evolve over time, particularly evident during seismic events. This characteristic can be achieved by various wavelet shapes in the wavelet transform.

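A wavelet is a wave-like oscillation localized in time [9]. In contrast to the FFT, the wavelet transform has two basic properties, scale and shift, as expressed in Eq. (2):

\[W\left(s,\tau \right)=\frac{1}{\sqrt{\left|s\right|}}\int_{-\infty }^{\infty }x(t)\,{\psi }^{*}\left(\frac{t-\tau }{s}\right)dt \tag{2}\]

where \(\tau\) is the time shifting factor, \(s\) is the scaling factor, \(\psi\) is the wavelet function, and \({\psi }^{*}\) denotes its complex conjugate.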

In Eq. (2), the wavelet transform starts with a wavelet function \(\psi\), which is a fixed waveform that is shifted and scaled to analyze the signal at different time locations and scales.

The process of time shifting entails moving the wavelet function along the time axis, resulting in a change in the wavelet's position on the time domain plot. Shifting the wavelet function enables the analysis of the signal at various time points, facilitating the identification of variations in the signal's frequency content over time. Conversely, scaling is achieved by time dilation of the wavelet function, which modifies the wavelet's size on the time domain plot. Scaling the wavelet function allows the analysis of the signal at different frequency scales, making it possible to determine the frequency content of the signal at varying resolutions. Applying time shifting and scaling concurrently enables the assessment of the signal's frequency content across different time scales and resolutions simultaneously. This facilitates the identification of changes in the frequency content of the signal over time as well as localized changes at specific points in time.
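The shift-and-scale operation can be sketched numerically. The following is a minimal NumPy illustration, assuming the standard continuous wavelet transform with \(1/\sqrt{s}\) normalization and a complex Morlet wavelet with center frequency parameter `omega0 = 6`; the function names, test signal, and frequency grid are hypothetical and are not the implementation used in this study.

```python
import numpy as np

def morlet(t, omega0=6.0):
    """Complex Morlet mother wavelet: a sinusoid inside a Gaussian window."""
    return np.pi ** -0.25 * np.exp(1j * omega0 * t) * np.exp(-0.5 * t ** 2)

def cwt_morlet(x, fs, freqs, omega0=6.0):
    """Return coefficients W[k, n] for pseudo-frequency freqs[k] at sample n."""
    dt = 1.0 / fs
    coeffs = np.empty((len(freqs), len(x)), dtype=complex)
    for k, f in enumerate(freqs):
        s = omega0 / (2 * np.pi * f)        # scale with centre frequency ~ f
        half = int(np.ceil(4 * s * fs))     # truncate the Gaussian at +/- 4 s
        tk = np.arange(-half, half + 1) * dt
        psi = morlet(tk / s, omega0)        # scaled wavelet
        # Shifting: correlating x with conj(psi) at every time shift is a
        # convolution with the time-reversed conjugate kernel.
        kernel = np.conj(psi)[::-1]
        coeffs[k] = np.convolve(x, kernel, mode="same") * dt / np.sqrt(s)
    return coeffs

# Assumed non-stationary test signal: 3 Hz for 5 s, then 8 Hz for 5 s.
fs = 100.0
t = np.arange(1000) / fs
x = np.where(t < 5.0, np.sin(2 * np.pi * 3.0 * t), np.sin(2 * np.pi * 8.0 * t))

freqs = np.linspace(1.0, 12.0, 45)
scalogram = np.abs(cwt_morlet(x, fs, freqs))

# Dominant pseudo-frequency at t = 2.5 s and at t = 7.5 s.
f_early = freqs[np.argmax(scalogram[:, 250])]
f_late = freqs[np.argmax(scalogram[:, 750])]
print(f_early, f_late)
```

The magnitude array `scalogram` has one row per frequency and one column per time sample, so the frequency jump at 5 s appears as a change in the dominant row. The scale-to-frequency conversion used here is approximate, and the kernel must be shorter than the signal for `mode="same"` to return one coefficient per sample.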

There are many different types of wavelet functions (shown in Fig. 2), each with their own unique properties [17]. Among the frequently utilized wavelet functions, the Morlet wavelet family stands out. This complex-valued wavelet is defined by a sinusoidal wave enveloped within a Gaussian window. Its distinctive attribute of effective localization in both the time and frequency domains makes it particularly advantageous for signal analysis. The Daubechies wavelet, a wavelet function family known for exceptional frequency localization, is commonly used for image noise reduction. The Coiflet wavelet, with its smoother and more regular properties compared to the Daubechies wavelet, is frequently applied in data compression and noise reduction. Additional well-known wavelet functions encompass the Gaussian wavelet, the Symlets wavelet, and the Mexican hat wavelet, each distinguished by unique properties and corresponding uses. The selection of a wavelet function is contingent on the particular necessities of the signal or image processing operation being performed.

For instance, Fig. 3a presents a typical free vibration response from a cantilever structure. Scalograms derived from the analytic Morlet wavelet and the bump wavelet are compared. As depicted in Fig. 3b, the scalogram derived from the analytic Morlet wavelet presents an uneven surface. This is indicative of slight changes in the structural frequency bandwidth over time, a feature attributed to the wavelet's strong localization in both the time and frequency domains. This characteristic makes the Morlet wavelet particularly effective in analyzing non-stationary signals [26]. Moreover, as a complex-valued wavelet, it captures not only the amplitude but also the phase information of the signal, a property that proves beneficial in the study of seismic or structural dynamic fields. Conversely, as demonstrated in Fig. 3c, the scalogram derived from the bump wavelet exhibits a notably smooth edge. Characterized by a wider variance in time and a narrower variance in frequency, this wavelet tends to smooth out the input signal over time, which may result in some loss of time-localized information. Given these results and considerations, the analytic Morlet wavelet has been selected for generating scalograms in this research.

During the transformation of a signal from the time domain to the frequency domain, the Continuous Wavelet Transform (CWT) and Discrete Wavelet Transform (DWT) are often the methods of choice [1]. CWT provides a continuous time–frequency representation of a signal, while DWT provides a discrete time–frequency representation of a signal. CWT is more computationally intensive than DWT, but it can capture high-frequency details with higher accuracy. In the realm of structural damage detection, the CWT is a particularly effective method for processing dynamic responses from structures. This is due to its ability to retain the maximum amount of damage-related information from the building.

A scalogram is a graphical representation of the wavelet transform that illustrates the time–frequency characteristics of the signal (as shown in Fig. 4). The horizontal axis represents time, the vertical axis represents frequency, and the color or intensity of each point in the plot reflects the strength of the wavelet coefficient at that specific time and frequency. The scalogram provides a two-dimensional view of the signal's time-varying frequency content, allowing for easier identification of frequency changes over time. It is important to note that the dashed white line marks the cone of influence: in the gray region outside it, edge effects on the input signal become significant. The unshaded area inside the dashed white line provides an accurate time–frequency representation of the structural response.

Figure 4a shows a non-stationary signal that contains noise and frequencies that vary over time. As the signal changes over time, its frequency components also change (as illustrated in Fig. 4b). These changes appear in the scalogram (Fig. 4c) as changes in the intensity or color of each point along the time and frequency axes. Areas with intense colors correspond to high wavelet coefficients, indicating the presence of significant signal components. Conversely, areas with less intense colors represent lower coefficients, indicating less significant signal components.

An illustration of the use of scalograms for structural damage identification is presented in Fig. 5. For the pilot study, a three-story building is modeled, and three channels of acceleration data are measured under a ground excitation. The acceleration records are converted to 2-D scalograms, and these scalograms are stitched together as a training image feature. The structural damage is simulated through member stiffness loss. Figure 5 shows two sets of scalograms for the non-damaged and damaged conditions of the system. Comparing Fig. 5a and b, it is observable that the second frequency bars (in red rectangular frames) change significantly due to the stiffness degradation of the first-floor column (80% of the undamaged stiffness). Based on this finding, structural damage is depicted by alterations in the scalograms. Different damage locations and severities appear differently in the scalograms. When scalograms from specific locations are compared with one another, the changes in intensity and magnitude represent changes in mode shape and frequency. However, interpreting and summarizing such differences manually is difficult. Machine learning is more effective in identifying them, since it can review large volumes of data and discover specific trends and patterns that would not be apparent to humans [3].
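The stitching step can be sketched as follows. This is a minimal illustration under stated assumptions: the per-channel scalograms are stacked vertically, scaled jointly to 8-bit grey levels, resized by nearest-neighbor sampling to 227 by 227 pixels, and replicated over three color channels. The layout, the grey-scale rendering, and all function names are hypothetical; the study may use a different arrangement or a color map.

```python
import numpy as np

def stitch_scalograms(scalograms):
    """Stack per-channel scalogram magnitudes vertically as one 8-bit image."""
    stacked = np.vstack(scalograms)
    lo, hi = stacked.min(), stacked.max()
    if hi == lo:                            # flat input: avoid division by zero
        return np.zeros(stacked.shape, dtype=np.uint8)
    # Joint scaling keeps the relative amplitude between channels.
    return np.round(255 * (stacked - lo) / (hi - lo)).astype(np.uint8)

def resize_nearest(img, out_h, out_w):
    """Nearest-neighbour resize using integer index maps."""
    rows = np.arange(out_h) * img.shape[0] // out_h
    cols = np.arange(out_w) * img.shape[1] // out_w
    return img[np.ix_(rows, cols)]

def to_network_input(scalograms, size=227):
    """Build a size x size x 3 image from a list of 2-D scalograms."""
    grey = resize_nearest(stitch_scalograms(scalograms), size, size)
    return np.stack([grey, grey, grey], axis=-1)

# Stand-in data: three random "scalograms" (40 frequencies x 500 samples).
rng = np.random.default_rng(0)
channels = [rng.random((40, 500)) for _ in range(3)]
image = to_network_input(channels)
print(image.shape, image.dtype)
```

Scaling all channels with a single minimum and maximum is a design choice: per-channel scaling would hide amplitude differences between sensor locations, which may carry mode-shape information.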

2.2 Convolutional Neural Network overall architecture

Convolutional neural networks, a subset of artificial neural networks, are frequently employed for image recognition, classification, and segmentation tasks [25]. A CNN is composed of multiple layers of small filters that learn to extract meaningful features from the input data, with each layer learning more complex representations of the data. The filters are convolved over the input data, computing a dot product between the filter weights and a small patch of the input at each location. The resulting outputs are then passed through a non-linear activation function, such as the Rectified Linear Unit (ReLU), to introduce non-linearity into the model. The outputs of each layer are passed to the next layer, so that by stacking multiple layers the network gradually learns hierarchical representations of the input data, from simple edges and textures to higher-level objects and shapes [27].

One of the main advantages of CNNs is their ability to automatically learn features from raw data without the need for hand-engineered features [27]. This makes them highly effective in many image processing applications, as they can identify patterns and structures that might be difficult for humans to detect. The overall architecture of a CNN typically consists of the following layers (as illustrated in Fig. 6):

- Input layer: Accepts the input image and passes it on to the next layer.
- Convolutional layer: Convolves the input image with multiple filters to learn local features and reduce the dimensionality of the data.
- Activation layer: Applies an activation function to introduce non-linearity into the network and allow it to learn more complex features.
- Pooling layer: Down-samples the feature maps produced by the convolutional layer to reduce the spatial dimensions and control overfitting.
- Fully connected layer: Processes the high-level features from the previous layers and produces the final output using conventional neural network activation functions such as ReLU, softmax, and sigmoid.
- Output layer: Produces the desired outputs, such as class probabilities for image classification.

These layers can be repeated and stacked on top of each other to form a deep neural network, allowing the network to learn increasingly complex features at higher levels of abstraction.

In a CNN, the convolutional layer is where most of the computational heavy lifting happens. This layer performs a mathematical operation known as "convolution" that is used to extract features from the input data. A convolution involves sliding a filter (or kernel), which is a small matrix of weights, over the input data (such as an image). At each position of the filter, a dot product operation is performed between the filter and the part of the input it currently overlaps. The results of these operations across the input generate a "feature map" or "convolved feature". Each feature map represents a specific feature extracted from the input.
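The sliding dot product can be made concrete with a small NumPy sketch. The 4 by 4 input and the 2 by 2 vertical-edge filter below are assumed toy values; as in most CNN libraries, the filter is applied without flipping (strictly speaking, a cross-correlation), with no padding and a stride of one.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide the kernel over the image and take a dot product at each position."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    feature_map = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            patch = image[i:i + kh, j:j + kw]   # region the filter overlaps
            feature_map[i, j] = np.sum(patch * kernel)
    return feature_map

# Toy input: dark left half, bright right half.
image = np.array([[0, 0, 1, 1],
                  [0, 0, 1, 1],
                  [0, 0, 1, 1],
                  [0, 0, 1, 1]], dtype=float)

# Assumed filter that responds to a dark-to-bright vertical edge.
kernel = np.array([[-1, 1],
                   [-1, 1]], dtype=float)

feature_map = conv2d_valid(image, kernel)
print(feature_map)
```

The resulting 3 by 3 feature map is non-zero only in its middle column, where the filter straddles the edge; in a trained CNN the filter weights are learned rather than hand-picked.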

Activation layers in neural networks introduce non-linearity into the model, enabling it to learn complex patterns from the data. They operate like gates, assessing and determining what information should be transmitted onward to subsequent layers in the network. Commonly used activation functions are ReLU, Sigmoid, Tanh, and Softmax. In our study, all the input data have positive values without normalization, and the activation function selected is ReLU. As shown in Fig. 7, ReLU is a piecewise linear function that outputs the input directly if it is positive and outputs 0 otherwise. ReLU is therefore monotonically non-decreasing, and its gradient equals 1 for all positive inputs, which reduces the likelihood of a vanishing gradient. In practice, networks with ReLU tend to converge better than those with other activation functions [25].
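A minimal NumPy sketch of ReLU and its gradient is given below for illustration; the sample inputs are arbitrary, and taking the gradient at exactly zero to be 0 is a common convention rather than something specified in this study.

```python
import numpy as np

def relu(z):
    """Output the input where it is positive and 0 elsewhere."""
    return np.maximum(z, 0.0)

def relu_grad(z):
    """Gradient of ReLU: 1 for positive inputs, 0 otherwise (0 at z = 0 by convention)."""
    return (z > 0).astype(float)

z = np.array([-2.0, -0.5, 0.0, 1.5, 3.0])
activated = relu(z)
gradient = relu_grad(z)
print(activated, gradient)
```

Because the gradient does not shrink for large positive inputs, repeated multiplication through many layers does not drive it toward zero in the way that saturating functions such as Sigmoid or Tanh can.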

In CNNs, the pooling layer is a key component that follows the convolutional layer. Its main function is to reduce the spatial size (width and height) of the input representation, which in turn reduces the number of parameters and the amount of computation required in the network. This not only makes the network computationally efficient but also aids in controlling overfitting.

The operation performed by a pooling layer is referred to as "down-sampling" or "sub-sampling" [16]. This involves summarizing a region of the input image (or the preceding layer's feature map) using a specific aggregation operation, as illustrated in Fig. 8. The most common types of pooling are max pooling and average pooling:

1. Max Pooling: Max pooling returns the maximum value from a particular window (usually 2 × 2 or 3 × 3) of the input representation. It effectively retains the most prominent feature from the considered window, helping the network to be somewhat invariant to small translations.

2. Average Pooling: Average pooling calculates the average value for each patch of the feature map. It is often used in natural language processing tasks but less frequently in image recognition tasks due to its tendency to blur features.

Because it preserves the most prominent details of the scalograms, max pooling is chosen for the image-based damage detection training in this research.
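The two pooling operations can be compared on a small example. The sketch below assumes non-overlapping 2 × 2 windows (window size equal to stride) and an arbitrary 4 × 4 feature map; the function name is hypothetical.

```python
import numpy as np

def pool2d(feature_map, size=2, mode="max"):
    """Down-sample a 2-D feature map with non-overlapping size x size windows."""
    h, w = feature_map.shape
    out_h, out_w = h // size, w // size
    # Drop any incomplete border, then expose each window on axes 1 and 3.
    blocks = feature_map[:out_h * size, :out_w * size]
    blocks = blocks.reshape(out_h, size, out_w, size)
    if mode == "max":
        return blocks.max(axis=(1, 3))
    if mode == "avg":
        return blocks.mean(axis=(1, 3))
    raise ValueError("mode must be 'max' or 'avg'")

feature_map = np.array([[1, 3, 2, 0],
                        [4, 2, 1, 1],
                        [0, 1, 5, 6],
                        [2, 1, 7, 8]], dtype=float)

max_pooled = pool2d(feature_map, mode="max")
avg_pooled = pool2d(feature_map, mode="avg")
print(max_pooled)
print(avg_pooled)
```

Max pooling keeps the strongest response in each window, whereas average pooling blends it with its neighbors, which illustrates why the former is better at retaining sharp features in a scalogram.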

2.3 Transfer learning and pre-trained models

Recently, among the various applications of deep learning, image detection and computer vision have been receiving increasing attention [32]. Different varieties of CNN models have been developed to accommodate the varying requirements of input data. These models are trained on large visual databases and were initially applied to object recognition [25]. Although the training image types and classes of these models differ from the images in this study, their architectures and learned layer parameters can still be adopted to avoid the initial training cost. Therefore, to achieve high training efficiency and accuracy, this study is conducted based on transfer learning.

Transfer learning is a subfield of machine learning which applies the previous achievements to a different but similar task [5]. With the knowledge from a related task that has already been learned, learning efficiency in a new task will be significantly improved. Usually, transfer learning uses a pre-trained model that is created and trained by a group of researchers or a well-established institute with an extremely large dataset. Currently, transfer learning has been commonly used for computer vision, such as visual recognition, image captioning and object classification [33].

There is a wide range of pre-trained models, some of which are winners of the ImageNet [15] classification benchmarks [21], such as Alexnet, VGG, GoogLeNet, and Resnet, in chronological order. Among these models, a simple model (Alexnet) and a comprehensive model (Resnet) are selected in this study, and their performances are presented in the next chapter. The characteristics of these two models are introduced as follows:

The Alexnet model [25] was the first large network to perform well, outperforming all previous non-deep-learning-based models by a significant margin in the 2012 competition. Alexnet is a convolutional neural network with pooling, normalization, and fully connected layers. It was trained on images with a size of 227 by 227 by 3, and the depth of the model is eight layers. The Resnet model [20] is the 2015 winner of the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) [21], and its depth is increased to 50 layers. Instead of directly stacking layers as in a plain CNN and learning the underlying mapping, Resnet stacks layers that fit a residual mapping. A shortcut connection serves as a direct pathway between the input and output of each block, so the stacked layers only learn the features that must be added to the provided input. The residual network has shown a greater ability to optimize and to gain higher accuracy [8].
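The difference between a plain block and a residual block can be sketched in a few lines. The example below is a simplified illustration that uses small fully connected layers in place of convolutions; the weights, sizes, and function names are assumptions and do not reproduce the actual Resnet architecture.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def plain_block(x, w1, w2):
    """Two stacked layers that must learn the full mapping directly."""
    return relu(w2 @ relu(w1 @ x))

def residual_block(x, w1, w2):
    """The same two layers fit only the residual; the shortcut adds x back."""
    residual = w2 @ relu(w1 @ x)
    return relu(residual + x)

x = np.array([1.0, 2.0, 3.0])
w_zero = np.zeros((3, 3))        # weights that contribute nothing

plain_out = plain_block(x, w_zero, w_zero)
residual_out = residual_block(x, w_zero, w_zero)
print(plain_out, residual_out)
```

With zero weights, the plain block loses the input entirely, while the residual block passes it through unchanged. This is one way to see why very deep residual networks are easier to optimize: an added block can default to the identity instead of degrading the signal.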

2.4 Image-based ML approach procedure

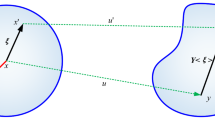

Figure 9 demonstrates the proposed image-based ML procedure. First, acceleration data from selected nodes of the structure are recorded for all damage scenarios. Second, the scalograms generated by the wavelet transform are categorized into groups and labeled accordingly. Third, all the training examples are fed into the pre-trained networks for transfer learning until the prediction accuracy on the training examples reaches 100%. Finally, the damage location and severity of the testing examples are predicted for performance evaluation.

3 Numerical and experimental validation

To validate the proposed method, a scaled-down frame-bridge model is established, as shown in Fig. 10. The four-bay bridge model is composed of 20 nodes and 36 frame members. On each bay of the bridge, there are four horizontal flat members with a length of 180 mm, giving a total length of 720 mm. The joints of the lower and upper levels are connected with I-shape beam members, and the two parallel sides of the bridge are connected through I-shape beam members, making the total height and width of the bridge 125 mm and 105 mm, respectively. The material properties and section properties will be introduced in the model updating section. Meanwhile, to create a comprehensive representation of the proposed method, a numerical finite element (FE) model is built using OpenSees software [31], as shown in Fig. 11.

Accelerometers (Model 2260 1-Axis Module from Silicon Designs, Inc.) are strategically deployed on the bridge model to acquire dynamic structural responses (as circled in Fig. 10). Specifically, six accelerometers are attached diagonally along the two sides of the bridge to measure nodal acceleration data at a sampling rate of 100 Hz. In the numerical model, data are collected from the corresponding nodes (Nodes 2, 4, 8, 13, 17, and 19).

An accurate FE model is formulated to generate reliable training data (e.g., various data patterns) for the numerical validation. To begin with, dynamic properties of the laboratory bridge model, such as natural frequencies and mode vectors, are extracted by the peak-picking method or the eigensystem realization algorithm. Then, an FEM model is built with the same dimensions and material properties as the miniature bridge model. Although this FEM model follows the same design specification as the miniature model, its dynamic properties are expected to deviate from the experimental results because of inherent differences in nominal material properties, idealized boundary conditions, fixed damping ratio, etc. In this study, it is presumed that only the stiffness values of the model require updating. A linear structure with N degrees of freedom is considered. The stiffness matrix \(\mathbf{K}\in {\mathbb{R}}^{{\it {N}}\times {\it {N}}}\) of the structure can therefore be parameterized by an updating vector \({\varvec{\upalpha}}\in {\mathbb{R}}^{{{\it{N}}}_{\boldsymbol{\alpha }}}\):

\[\mathbf{K}\left({\varvec{\upalpha}}\right)={\mathbf{K}}_{0}+\sum_{i=1}^{{{\it{N}}}_{\boldsymbol{\alpha }}}{\alpha }_{i}{\mathbf{K}}_{i} \qquad (3)\]

where \({\mathbf{K}}_{0}\in {\mathbb{R}}^{{\it{N}}\times {\it{N}}}\) is the initial structural stiffness matrix, and \({\mathbf{K}}_{i}\in {\mathbb{R}}^{{\it{N}}\times {\it{N}}}\) is the \({i}^{\mathrm{th}}\) influence matrix corresponding to the updating parameter \({\alpha }_{i}\).
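As an illustration of this parameterization, the sketch below assembles a stiffness matrix from an initial matrix and a set of influence matrices, assuming the common affine form K(α) = K0 + Σ αi Ki. The two-degree-of-freedom spring chain, the spring constants, and the function name `assemble_stiffness` are hypothetical and are not taken from the bridge model; each αi is assumed here to be the relative stiffness change of one spring.

```python
def assemble_stiffness(k0, influence, alpha):
    """Return K(alpha) = K0 + sum_i alpha_i * K_i for square list-of-lists matrices."""
    n = len(k0)
    k = [row[:] for row in k0]  # copy so that K0 is left untouched
    for a_i, k_i in zip(alpha, influence):
        for r in range(n):
            for c in range(n):
                k[r][c] += a_i * k_i[r][c]
    return k


# Hypothetical 2-DOF spring chain: spring 1 ties DOF 1 to ground, spring 2 ties DOF 1 to DOF 2.
K1 = [[1000.0, 0.0], [0.0, 0.0]]
K2 = [[1000.0, -1000.0], [-1000.0, 1000.0]]
K0 = assemble_stiffness([[0.0, 0.0], [0.0, 0.0]], [K1, K2], [1.0, 1.0])  # nominal stiffness

# alpha = [0, -0.25]: spring 1 unchanged, spring 2 loses 25% of its stiffness.
K_damaged = assemble_stiffness(K0, [K1, K2], [0.0, -0.25])
print(K_damaged)  # [[1750.0, -750.0], [-750.0, 750.0]]
```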

To minimize discrepancies between the actual and FEM models, an optimization problem is formulated as shown in Eq. (4). Through successive iterations of the optimization process, the FEM model is tuned to match the actual model.

where \(i\) denotes the mode number; n is the total number of modes (generally considered up to 90% mass participation); \({\lambda }_{i}^{\mathrm{EXP}}\) is the eigenvalue at the \({i}^{\mathrm{th}}\) mode extracted from the experiment; \({\lambda }_{i}\left(\mathbf{K}\left({\varvec{\upalpha}}\right)\right)\) is the eigenvalue at the \({i}^{\mathrm{th}}\) mode calculated from the FEM model when the stiffness matrix is \(\mathbf{K}\left({\varvec{\upalpha}}\right)\); \({\mathrm{MAC}}_{i}\left(\mathbf{K}\left({\varvec{\upalpha}}\right)\right)\) denotes the modal assurance criterion (MAC), i.e., the ratio of mode-shape similarity, at the \({i}^{\mathrm{th}}\) mode; \({w}_{1}\) and \({w}_{2}\) are weighting factors; and \({{\varvec{\upalpha}}}_{L}\) and \({{\varvec{\upalpha}}}_{U}\) are the lower and upper bounds for the updating vector \({\varvec{\upalpha}}\), respectively.
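A model updating problem built from the quantities defined above can be sketched in a few lines. The exact objective used in this study is not reproduced here: the sketch assumes a sum of squared relative eigenvalue errors weighted by w1 plus squared (1 − MAC) terms weighted by w2, applies it to a hypothetical two-degree-of-freedom spring chain with unit masses, and uses a simple bounded compass search from random starting points as a stand-in for the actual optimizer. All function names and numerical values are illustrative.

```python
import math
import random


def stiffness(alpha):
    """K(alpha) of a hypothetical 2-DOF spring chain; alpha_i is the relative change of spring i."""
    k1, k2 = 1000.0 * (1.0 + alpha[0]), 1000.0 * (1.0 + alpha[1])
    return [[k1 + k2, -k2], [-k2, k2]]


def eig2(k):
    """Eigenvalues (ascending) and eigenvectors of a symmetric 2x2 matrix, unit masses assumed."""
    a, b, d = k[0][0], k[0][1], k[1][1]
    mean, rad = (a + d) / 2.0, math.hypot((a - d) / 2.0, b)
    lams = [mean - rad, mean + rad]
    vecs = [(b, lam - a) for lam in lams]  # valid because the off-diagonal term b is nonzero
    return lams, vecs


def mac(u, v):
    """Modal assurance criterion between two mode-shape vectors."""
    dot = u[0] * v[0] + u[1] * v[1]
    return dot * dot / ((u[0] ** 2 + u[1] ** 2) * (v[0] ** 2 + v[1] ** 2))


def objective(alpha, lam_exp, vec_exp, w1=1.0, w2=1.0):
    """Assumed objective: weighted eigenvalue mismatch plus weighted mode-shape mismatch."""
    lams, vecs = eig2(stiffness(alpha))
    total = 0.0
    for le, ve, lam, vec in zip(lam_exp, vec_exp, lams, vecs):
        total += w1 * ((le - lam) / le) ** 2 + w2 * (1.0 - mac(ve, vec)) ** 2
    return total


def compass_search(f, x0, lower, upper, step=0.2, tol=1e-6):
    """Derivative-free local search that respects the bounds alpha_L <= alpha <= alpha_U."""
    x, fx = list(x0), f(x0)
    while step > tol:
        improved = False
        for i in range(len(x)):
            for delta in (step, -step):
                trial = list(x)
                trial[i] = min(upper[i], max(lower[i], x[i] + delta))
                f_trial = f(trial)
                if f_trial < fx:
                    x, fx, improved = trial, f_trial, True
        if not improved:
            step /= 2.0  # refine the step once no coordinate move helps
    return x, fx


def multi_start(f, lower, upper, n_starts=10, seed=0):
    """Run the local search from several random starting points and keep the best result."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_starts):
        x0 = [rng.uniform(lo, hi) for lo, hi in zip(lower, upper)]
        candidate = compass_search(f, x0, lower, upper)
        if best is None or candidate[1] < best[1]:
            best = candidate
    return best


# Synthetic "experimental" modal data generated from a known stiffness change.
true_alpha = [-0.10, 0.15]
lam_exp, vec_exp = eig2(stiffness(true_alpha))
alpha_hat, f_min = multi_start(lambda a: objective(a, lam_exp, vec_exp), [-0.3, -0.3], [0.3, 0.3])
print(alpha_hat, f_min)
```

Because the target modal data are generated by the same model, the recovered parameters should approach the assumed stiffness changes; with real measurements the minimum of the objective would generally be nonzero.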

In this optimization procedure, a multi-start approach is employed to explore potential global minima. By solving the optimization problem, we obtained an accurate FEM model that faithfully emulates the real structural responses. The updating procedure is separated into three steps (as shown in Fig. 12). The multi-step model updating reduces the computational demands of model updating, and it accommodates the different analysis methods required by the numerical model and the bridge model. The first step updates the natural frequencies by conducting eigen analysis in the numerical model, while the frequency responses of the bridge model are derived with the peak-picking method (in the frequency domain). After the bridge frequencies are matched in Step 1, the mode shapes are updated by performing transient analysis in the numerical model; an imposed load is applied to the bridge model to gather bridge acceleration data. The measured acceleration data are processed into frequency-domain information through the Fourier transform, and the normalized mode shapes are then updated in Step 2. Finally, in Step 3, a randomly selected horizontal bridge member is replaced by a 3D printed bridge member (Figs. 13 and 14) to emulate a damage scenario for the final model updating. As shown in Fig. 14, two flat beams with different thicknesses are printed to represent moderate and severe damage. In the numerical model, a member stiffness percentage is assumed to classify damage severity (members retaining 100%-90%, 89%-50%, and 49%-10% of their stiffness are considered undamaged, moderately damaged, and severely damaged, respectively). All the updating parameters and targets are listed in Table 1.
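The severity bands quoted above can be written as a small lookup. This is a sketch that assumes the percentages denote the stiffness a member retains; the function name `classify_severity` and the handling of non-integer values between bands (for example 89.5%) are illustrative choices, not part of the study.

```python
def classify_severity(stiffness_pct):
    """Map the retained member stiffness (in percent) to a damage severity band."""
    if not 10 <= stiffness_pct <= 100:
        raise ValueError("stiffness percentage outside the range considered (10-100%)")
    if stiffness_pct >= 90:
        return "undamaged"
    if stiffness_pct >= 50:
        return "moderate"
    return "severe"


for pct in (100, 90, 89, 50, 49, 10):
    print(pct, classify_severity(pct))
```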

3.1 Numerical validation

To emulate structural damage, changes in member stiffness are simulated in the FE analysis. Single-member damage scenarios are considered in this project. To verify the training process efficiently, only damage in the horizontal bridge members is considered. Therefore, there are 16 members in total, each with undamaged, moderately damaged, and severely damaged scenarios. Table 2 lists the classification attributes and labels. The frame member damage cases are categorized into three levels: undamaged (100%-90%), moderate damage (89%-50%), and severe damage (49%-10%). A 2% stiffness degradation interval is applied within each damage level to generate distinct learning examples. A total of 688 machine learning examples are created using this method. All the member arrangements are shown in Fig. 15.
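One way to enumerate the classification labels for these scenarios is sketched below. It assumes a single shared undamaged class plus a moderate and a severe class for each of the 16 horizontal members; the label strings (`U`, `3-M`, `3-S`) are hypothetical and do not reproduce Table 2.

```python
N_MEMBERS = 16  # horizontal members considered in the numerical validation


def build_labels(n_members=N_MEMBERS):
    """Return one shared undamaged label plus moderate/severe labels per member."""
    labels = ["U"]
    for member in range(1, n_members + 1):
        labels.append(f"{member}-M")  # moderate damage in this member
        labels.append(f"{member}-S")  # severe damage in this member
    return labels


labels = build_labels()
print(len(labels))  # 33
```

Under this assumption the number of classes is 16 × 2 + 1 = 33.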

To generate learning features, acceleration data under a random external excitation are recorded at selected joints of a numerical model or a real model. In this project, six acceleration records from Nodes 2, 4, 8, 13, 17, and 19 are collected by running the OpenSees simulation. From the node data, six scalograms obtained through the wavelet transform are stitched into one image to serve as a learning feature (Fig. 16). The resolution of the scalogram is determined by the available computational resources. Specifically, in our study, Alexnet and Resnet require input sizes of 227 × 227 × 3 and 224 × 224 × 3, respectively, and the images are resized to these dimensions when they are input to the network. Therefore, to ensure the learning accuracy, the initial size of the input scalogram in this study ranges from approximately 230 × 300 to 250 × 320. The six scalograms are arranged as follows: Node 2 and Node 17 in the first row (from left to right), Node 8 and Node 13 in the second row, and Node 4 and Node 19 in the third row. Figure 16 illustrates four different damage cases: (a) moderate damage in Member 12; (b) severe damage in Member 12; (c) severe damage in Member 11; and (d) an undamaged case. For machine learning purposes, the 688 learning examples are randomly separated into 95% training examples (654) and 5% testing examples (34).
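The conversion of node accelerations into a stitched scalogram image can be sketched as follows. This is a minimal pure-Python illustration rather than the processing chain used in the study: it assumes a complex Morlet wavelet with centre parameter `w0 = 6` (normalization constants omitted), uses synthetic sine waves with hypothetical frequencies in place of measured accelerations, and evaluates only three frequencies per node so that it runs quickly. Only the 100 Hz sampling rate and the 3 × 2 node layout come from the text.

```python
import cmath
import math

DT = 0.01  # sampling period in seconds (100 Hz sampling rate)
GRID = [[2, 17], [8, 13], [4, 19]]  # node layout of the stitched image, row by row


def morlet_scalogram(signal, freqs, dt=DT, w0=6.0):
    """Return |CWT| as a list of rows (one per frequency) using a complex Morlet wavelet."""
    n = len(signal)
    rows = []
    for f in freqs:
        s = w0 / (2.0 * math.pi * f)         # scale whose centre frequency is f
        half = int(math.ceil(4.0 * s / dt))  # truncate the wavelet at +/- 4 scale widths
        # Samples of the complex-conjugated wavelet at the shifted and scaled times.
        kernel = [cmath.exp(-1j * w0 * (k * dt / s)) * math.exp(-0.5 * (k * dt / s) ** 2)
                  for k in range(-half, half + 1)]
        row = []
        for b in range(n):
            acc = 0j
            for k in range(max(-half, -b), min(half, n - 1 - b) + 1):
                acc += signal[b + k] * kernel[k + half]
            row.append(abs(acc) * dt / math.sqrt(s))
        rows.append(row)
    return rows


def stitch(scalograms):
    """Tile per-node scalograms (dict: node -> 2-D list) into one image following GRID."""
    image = []
    for left, right in GRID:
        for row_left, row_right in zip(scalograms[left], scalograms[right]):
            image.append(row_left + row_right)
    return image


# Synthetic stand-in for measured accelerations: one sine per node (hypothetical frequencies).
node_freq = {2: 5.0, 17: 5.0, 8: 10.0, 13: 10.0, 4: 20.0, 19: 20.0}
freqs = [5.0, 10.0, 20.0]
signals = {node: [math.sin(2.0 * math.pi * f * i * DT) for i in range(100)]
           for node, f in node_freq.items()}
scalograms = {node: morlet_scalogram(sig, freqs) for node, sig in signals.items()}
image = stitch(scalograms)
print(len(image), len(image[0]))  # 9 200
```

In practice the stitched array would be rendered with a colormap and resized to the network input size; a wavelet library would typically replace the hand-written transform.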

In this study, the efficiency of the pre-trained networks is tested. To accommodate the classification requirement, the last fully connected layer is modified to output 33 classes (labels). The knowledge previously learned by Alexnet and Resnet is preserved in the transfer learning process.

The stochastic gradient descent with momentum (SGDM) optimization algorithm is selected through trial and error; the other optimizers considered are adaptive moment estimation (Adam) and root mean square propagation (RMSProp). Stochastic gradient descent updates the network parameters (weights and biases) to minimize the loss function (cross-entropy, shown in Eq. (5)) by taking small steps at each iteration in the direction of the negative gradient of the loss [6]. Momentum accelerates gradient descent and leads to faster convergence. Equations (5) and (6) describe the loss function and the SGDM update, respectively [22].

where N is the number of prediction classes, \({y}_{c}\) is the indicator for each class, and \({p}_{c}\) is the predicted probability.

where θ is the weight parameter; α is the learning rate; \(\nabla\) is the gradient operator; and \(\gamma\) is the momentum term, which takes the gradients of previous time steps into consideration.
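The loss and the update rule can be illustrated with a short sketch. It assumes the standard categorical cross-entropy, the negative sum over classes of y_c log(p_c), and one common way of writing the momentum update (a velocity that accumulates past gradients); the notation in Eqs. (5) and (6) may differ. The quadratic toy loss, the learning rate of 0.1, and the momentum of 0.9 are hypothetical values chosen for the demonstration.

```python
import math


def cross_entropy(y, p, eps=1e-12):
    """Categorical cross-entropy for one example: -sum_c y_c * log(p_c)."""
    return -sum(yc * math.log(max(pc, eps)) for yc, pc in zip(y, p))


def sgdm_step(theta, velocity, grad, lr=0.1, momentum=0.9):
    """One SGDM update: v <- momentum * v - lr * grad, then theta <- theta + v."""
    new_velocity = [momentum * v - lr * g for v, g in zip(velocity, grad)]
    new_theta = [t + v for t, v in zip(theta, new_velocity)]
    return new_theta, new_velocity


# Loss of a three-class prediction whose true class is the second one.
print(cross_entropy([0, 1, 0], [0.2, 0.7, 0.1]))

# Minimize the toy loss (theta - 3)^2, whose gradient is 2 * (theta - 3).
theta, velocity = [0.0], [0.0]
for _ in range(300):
    grad = [2.0 * (theta[0] - 3.0)]
    theta, velocity = sgdm_step(theta, velocity, grad)
print(theta[0])  # approaches 3
```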

Since both networks are trained with prior knowledge (weight and bias parameters), the training accuracies rise rapidly after the first epoch. Alexnet is a relatively shallow network: its accuracy reached 20% within the first epoch, and it took 250 epochs to reach 100% accuracy. Resnet has a more advanced network architecture: its accuracy rose to 60% in the first epoch, and a total of eight epochs was required to achieve 100% training accuracy. Figure 17 summarizes the training progress of Alexnet and Resnet. Notably, the training rates of both networks remain constant between 50% and 80% accuracy and decelerate when approaching 100%.

Equation (7) calculates the prediction accuracy as a percentage. The accuracy is defined as the number of correctly predicted labels divided by the total number of labels.

The testing results of the Alexnet model are presented in Fig. 18a, which includes four incorrect predictions: moderate damage to Member 1 was identified as moderate damage to Member 9; moderate damage to Member 3 was attributed to Member 11; moderate damage to Member 7 was identified as damage to Member 15; and moderate damage to Member 9 was misclassified as damage to Member 1. With 30 of the 34 testing examples predicted correctly, Eq. (7) yields a prediction accuracy of 88%.

The testing results of the Resnet model are shown in Fig. 18b, which includes two incorrect predictions: moderate damage to Member 14 is predicted as moderate damage to Member 6, and moderate damage to Member 4 is predicted as moderate damage to Member 12. The resulting prediction accuracy is 94%. Therefore, the Resnet model outperforms Alexnet in this study, and it is employed in the subsequent experimental validation and prediction.
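The reported accuracies follow directly from the error counts and the 34 testing examples. The short sketch below recomputes them; the function name `prediction_accuracy` and the placeholder label lists are illustrative, and only the counts (34 examples, four and two misclassifications) come from the text.

```python
def prediction_accuracy(predicted, actual):
    """Percentage of predicted labels that match the actual labels."""
    correct = sum(p == a for p, a in zip(predicted, actual))
    return 100.0 * correct / len(actual)


actual = ["ok"] * 34                          # placeholder ground-truth labels
alexnet_pred = ["ok"] * 30 + ["wrong"] * 4    # four misclassified examples
resnet_pred = ["ok"] * 32 + ["wrong"] * 2     # two misclassified examples

print(round(prediction_accuracy(alexnet_pred, actual)))  # 88
print(round(prediction_accuracy(resnet_pred, actual)))   # 94
```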

3.2 Experimental validation

To validate the proposed methodology, a miniaturized bridge model is utilized to generate experimental testing cases. Acceleration data are measured under random excitations applied to the bridge model. The bridge is securely positioned on a test platform, maintaining a predetermined fixed boundary condition. Four randomly selected damage scenarios are imposed on the bridge model with two sizes of 3D printed flat beam (as shown in Figs. 13 and 14), and an undamaged case is included for reference. The cases are: Member 1 with severe damage (labeled E1-S), Member 11 with severe damage (labeled E11-S), Member 12 with moderate damage (labeled E12-M), Member 14 with moderate damage (labeled E14-M), and undamaged (labeled E-U). Figure 19 compares the measured structural behavior with the simulated model output for the E-U and E14-M cases. In Fig. 19a and b, the undamaged bridge scalograms are plotted; the measured and simulated cases show a similar first-mode trend (the brightest bar in the plot). Discrepancies occur at the second mode (in the red rectangular frames), where the measured case shows a slightly higher magnitude. In Fig. 19c and d, the measured case shows a higher first-mode magnitude at Node 4 and Node 19 (in the red rectangular frames). Apart from these differences, however, most features of the measured data are preserved.

The experimental validation results are shown in Fig. 20. To reduce the influence of measurement error, three measurements of each damage scenario are processed by the trained ML network for damage prediction. In Fig. 20, one of the E12-M (Member 12 moderate damage) measurements is wrongly predicted as the E-U (undamaged) case; however, the other two measurements are predicted correctly. Therefore, in a real-time bridge damage prediction environment, this result would indicate a high probability of moderate damage at Member 12.
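One simple way to combine repeated measurements into a single decision is a majority vote, sketched below. The text does not specify a combination rule, so the voting scheme and the function name `majority_vote` are assumptions; the three example predictions mirror the E12-M case described above.

```python
from collections import Counter


def majority_vote(predictions):
    """Return the most frequent predicted label and its share of the measurements."""
    label, count = Counter(predictions).most_common(1)[0]
    return label, count / len(predictions)


# Three repeated measurements of the same scenario, one of them misclassified.
label, share = majority_vote(["E12-M", "E-U", "E12-M"])
print(label, round(share, 2))  # E12-M 0.67
```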

4 Discussion

This research presents a new approach to ML-based structural damage detection. Through the wavelet transform, vibration measurements are readily converted from time-domain data into joint time–frequency information. This approach avoids the high computational demands of modal extraction. Moreover, utilizing pre-trained models enhances prediction accuracy and training efficiency. The incorporation of an image-based deep learning network further improves the speed and effectiveness of damage detection.

To validate the proposed idea, a miniature bridge model was prepared, and its structural responses were matched by a numerical FEM model through the model updating technique. As a result, the FEM model emulated the real model’s structural behavior. With the updated model, various damage scenarios were simulated, and the corresponding data were generated for ML training. Two pre-trained models, Alexnet and Resnet, were selected to perform transfer learning. The testing accuracies in the numerical validation are both above 85% (Alexnet: 88%, Resnet: 94%).

Furthermore, advancements in computation have made possible an innovative approach that allows for the real-time implementation of structural health monitoring. This approach fills the inspection gap by identifying critical damage so that it can be mitigated before it spirals out of control. Our findings from the project are as follows: 1) The proposed method, integrated with a sensor-based system, enables continuous monitoring of structural integrity. As a result, when critical structural elements are damaged, the proposed method clearly reports the damage locations (global inspection) and their severity (local inspection). 2) The proposed continuous monitoring fills the inspection gap in a timely manner by identifying critical damage before it gets out of control, and it serves as a reliable indicator for evaluating structural damage after extreme natural disasters. 3) No additional cost for system improvement is required if a sensing system is already installed on a bridge structure.

Availability of data and materials

Some or all the data, models, or codes that support the findings of this study are available from the corresponding author upon reasonable request.

References

Addison PS (2002) The illustrated wavelet transform handbook: Introductory theory and applications in science, engineering, medicine, and finance. Signal Processing Series. CRC Press

ASCE’s 2021 infrastructure report card (2021) Infrastructure investment gap 2020–2029. Retrieved from https://infrastructurereportcard.org/resources/investment-gap-2020-2029/

Asquero (2022) Advantages and disadvantages of machine learning. Retrieved from https://www.asquero.com/article/advantages-and-disadvantages-of-machine-learning/

Avci O, Abdeljaber O, Kiranyaz S, Hussein M, Gabbouj M, Inman DJ (2021) A review of vibration-based damage detection in civil structures: From traditional methods to machine learning and deep learning applications. Mech Syst Signal Process 147:107077

Brownlee J (2019) A gentle introduction to transfer learning for deep learning. Machine learning mastery. Retrieved from https://machinelearningmastery.com/transfer-learning-for-deep-learning/

Brownlee J (2021) How to choose an optimization algorithm. Machine learning mastery. Retrieved from https://machinelearningmastery.com/tour-of-optimization-algorithms

Chen W, Hua X (2019) Structural damage detection of bridge structures using transfer learning-based deep neural networks. J Sound Vib 461:114994

Dash D, Ferrari P, Wang J (2020) Decoding imagined and spoken phrases from non-invasive neural (MEG) signals. Front Neurosci 14

Daubechies I (1992) Ten lectures on wavelets. Society for Industrial and Applied Mathematics

Dervilis N, Worden K, Cross EJ (2016) On robust regression analysis as a means of exploring environmental and operational conditions for SHM data. J Sound Vib 370:349–367

Engineering News-Record (2019) The importance of structural health monitoring. Retrieved from https://www.enr.com/articles/46857-the-importance-of-structural-health-monitoring

Eslami MR, Ahmadi H (2017) Damage detection in a steel frame structure using wavelet transform. J Constr Steel Res 134:98–107. https://doi.org/10.1016/j.jcsr.2017.05.024

Farrar CR, Worden K (2012) Structural health monitoring: A machine learning perspective. John Wiley & Sons

Federal Highway Administration (2017) Bridge preservation guide: Inspection, evaluation, and repair. Retrieved from https://www.fhwa.dot.gov/bridge/preservation/guide/guidetoc.cfm

Fei-Fei L, Deng J, Li K (2010) ImageNet: Constructing a large-scale image database. J Vis 9(8):1037

Goodfellow I, Bengio Y, Courville A (2016) Deep learning. MIT press

Goswami JC, Chan AK (2020) Wavelets and signal processing. Wiley

Gu H, Cao M (2018) Damage detection of structures using convolutional neural networks. J Sound Vib 422:229–245

Hall M (2008) Fast Fourier transform in digital signal processing. Elsevier

He K, Zhang X, Ren S, Sun J (2016) Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp. 770–778)

ImageNet (2015). Retrieved from https://www.image-net.org/challenges/LSVRC/index.php

Khandelwal R (2021) Overview of different optimizers for neural networks. Retrieved from https://medium.datadriveninvestor.com/overview-of-different-optimizers-for-neural-networks-e0ed119440c3

Khodaparast HH, Eslami MR, Moradi B (2014) A review of structural health monitoring techniques for smart structures. Measurement 51:98–114. https://doi.org/10.1016/j.measurement.2014.01.014

Kim D, Kim JT, Lee J (2019) A study on real-time structural damage detection using an ambient vibration measurement and Kalman filter. Mech Syst Signal Process 119:73–86. https://doi.org/10.1016/j.ymssp.2018.07.030

Krizhevsky A, Sutskever I, Hinton GE (2012) Imagenet classification with deep convolutional neural networks. In Advances in neural information processing systems (Vol. 25)

Kumar P, Gabor V, Singh GK (2017) Morlet wavelet-based ECG signal analysis for detection of cardiac anomalies. Biomed Signal Process Control 33:241–254. https://doi.org/10.1016/j.bspc.2016.11.014

LeCun Y, Bengio Y, Hinton G (2015) Deep learning. Nature 521(7553):436–444. https://doi.org/10.1038/nature14539

Li Y, Liu S, Qiao P, Wang G (2019) A review on deep learning for structural health monitoring. Mech Syst Signal Process 115:494–508. https://doi.org/10.1016/j.ymssp.2018.06.027

Liu H, Chang CC (2015) Damage detection in concrete beams using wavelet transform. J Comput Civ Eng 29(2):04014047. https://doi.org/10.1061/(ASCE)CP.1943-5487.0000397

Loh CH, Yang JN (2010) Structural health monitoring for bridges using wireless smart sensor technology. J Bridg Eng 15(3):313–320. https://doi.org/10.1061/(ASCE)BE.1943-5592.0000099

McKenna F, Fenves GL, Scott MH (2000) Open system for earthquake engineering simulation. University of California, Berkeley. Retrieved from http://opensees.berkeley.edu

Mahapatra S (2019) Why deep learning over traditional machine learning? Retrieved from https://towardsdatascience.com/why-deep-learning-is-needed-over-traditional-machine-learning-1b6a99177063

Sarkar D (2018) A comprehensive hands-on guide to transfer learning with real-world applications in deep learning. Retrieved from https://towardsdatascience.com/a-comprehensive-hands-on-guide-to-transfer-learning-with-real-world-applications-in-deep-learning-212bf3b2f27a

Shao X, Zhang J, Chen J, Chen W (2021) Structural damage detection in a composite structure using vibration-based modal analysis and deep learning-based pattern recognition approach. Compos Struct 262:113764. https://doi.org/10.1016/j.compstruct.2020.113764

U.S. Department of Homeland Security (2018) Critical infrastructure: The national risk management center. Retrieved from https://www.dhs.gov/critical-infrastructure-national-risk-management-center

Wang Y, Li L, Li J, Li X, Li L (2016) Nonlinear vibration-based structural damage detection with incomplete mode shapes using variational mode decomposition. Mech Syst Signal Process 66–67:698–710. https://doi.org/10.1016/j.ymssp.2015.04.035

Wei Z, Liu L, Feng Y (2017) Vibration-based damage detection method for a steel arch bridge based on frequency change and mode shape curvature. Measurement 102:101–110. https://doi.org/10.1016/j.measurement.2017.02

Wu Z, Wang Y, Ren J, Zhang C (2015) Vibration-based damage detection for bridge structures using empirical mode decomposition. Struct Control Health Monit 22(4):630–646. https://doi.org/10.1002/stc.1758

Xu K, Zhang X, Wu W (2021) A survey of artificial intelligence-based approaches for structural damage detection. Arch Comput Methods Eng 28(1):31–54. https://doi.org/10.1007/s11831-020-09487-5

Xu W, Zhang S, Zhang X (2014) Operational modal analysis for damage detection of bridge structure based on EMD and improved Hilbert transform. Smart Struct Syst 14(6):981–1004. https://doi.org/10.12989/sss.2014.14.6.981

Acknowledgements

We thank Mr. Joushua Dyogi for his assistance with the experimental setup and structural element fabrication using a 3D printer.

Funding

This work was supported by the ATC + Program (20014127, Development of a smart monitoring system integrating 3D printed battery-free antenna sensor technology with AI optimization) funded by the Ministry of Trade, Industry & Energy (MOTIE, Korea).

Author information

Contributions

Xi Song (contribution rate: 45%): contributed to data collection, data analysis, the major experiment, and manuscript writing. Dan Li (contribution rate: 25%): contributed to designing the experiment, analyzing and interpreting the data, and manuscript writing. Chunhe Cho (contribution rate: 30%): contributed to guiding the research direction, providing critical revisions, and ensuring the accuracy of the work.

Ethics declarations

Competing interests

Dan Li serves on the journal secretariat for Urban Lifeline and was not involved in the editorial review, or the decision to publish, this article. All authors declare that there are no other competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Song, X., Li, D. & Cho, C. Image-based machine learning approach for structural damage detection through wavelet transforms. Urban Lifeline 2, 4 (2024). https://doi.org/10.1007/s44285-023-00010-z

DOI: https://doi.org/10.1007/s44285-023-00010-z