Abstract

Vehicle detection in dim light has always been a challenging task. In addition to the unavoidable noise, the uneven spatial distribution of light and dark caused by vehicle lights and street lamps makes the problem more difficult. Conventional image enhancement methods may over-smooth or over-expose the image, causing irreversible information loss in the vehicle targets that are subsequently detected. Therefore, we propose a multi-exposure generation and fusion network. In the multi-exposure generation network, we employ a single gated convolutional recurrent network with two-stream progressive exposure input to generate intermediate images with gradually increasing exposure, which are provided to the multi-exposure fusion network after a spatial attention mechanism. Then, a vehicle detection model pre-trained in normal light is used as the basis of the fusion network, and the two models are connected using the convolutional kernel channel dimension expansion technique. This allows the fusion module to provide vehicle detection information, which guides the generation network in fine-tuning its parameters and thus completes end-to-end enhancement and training. By coupling the two parts, we achieve detail interaction and feature fusion under different lighting conditions. Experimental results on the ODDS dataset demonstrate that the proposed method outperforms state-of-the-art detection pipelines that apply image luminance enhancement before detection.

1 Introduction

Vehicle detection [1–4] is a fundamental step in various intelligent transportation tasks, including vehicle type recognition [5] and license plate detection [6–8]. Although there are many successes in vehicle detection, there are still many problems to be solved. In practice, it is a challenging task to identify and detect vehicles in dim light.

Low brightness and low contrast often affect the extraction of target features in images and reduce the performance of object detection. Meanwhile, additional noise is generated in dim light, which further affects and impairs the information structure of the target in the image. What is worse in the task of vehicle detection is that vehicles are illuminated with large fluctuations on the road due to the presence of vehicle lights and street lamps.

People usually choose image enhancement methods [9–15] to improve the quality of images in dim light conditions. For example, RetinexNet [14] uses a decomposition network and an enhancement network to enhance images illuminated with dim backlight by taking advantage of the fact that the reflectance of images is the same under different illuminations. However, it is difficult to ensure that a single image enhancement can handle the vehicle images in different areas with different brightnesses due to the uneven light and darkness caused by factors such as vehicle lights and street lamps. Furthermore, it may even produce over exposure in some areas, which further damages the original detection feature. Therefore, the multi-exposure method used for high dynamic range (HDR) images is a good reference. The method uses different camera parameters for several different shots, and then fuses them to learn the complementary features to detect the vehicle to cover different exposure conditions.

In addition, the purpose of enhancing images in dim light conditions is to improve the visual quality of the images, which is not the same as the goal of vehicle detection. For example, the smoothing operation commonly used for image enhancement may have an impact on features that are essential for detection. Therefore, the impact of image enhancement should be taken into consideration in the end-to-end vehicle detection network under dim light conditions.

Combining the above motives, this paper proposes a multi-exposure generation fusion network (MEGF-Net). The method consists of two parts as the name implies. First, a generative network is used to simulate the effect of multiple nonlinear exposures in HDR cameras, using historical information to maintain details in key areas of vehicle detection on the one hand, and to generate images with progressively higher exposures on the other hand. Next, a fusion network is used to smoothly fuse multiple images to detect vehicles in dim light. From an end-to-end point of view, the multi-exposure fusion network (MEF-Net) provides vehicle detection information to guide the generation network to fine-tune the parameters so that the generation network can be effectively optimized for the vehicle detection problem, while the multi-exposure generation network (MEG-Net) provides pre-trained enhanced images for the fusion network. In our experiments, we also control and optimize the additional parameters needed, resulting in a significant performance gain with only a small increase in parameters.

To summarize, this paper makes the following contributions:

1) For the vehicle detection problem in dim light, we propose an end-to-end MEGF-Net, which couples image enhancement and vehicle detection together for joint training.

2) In MEG-Net, a single gated memory unit with two inputs and one output is used to solve the over smoothing and over exposure problems, and the network performance can be significantly improved by adding only a few parameters.

3) Our method has excellent performance in vehicle detection under dim light conditions on the ODDS dataset, reaching 78.09% mAP. It is 5.90% higher than the fine-tuned object detection baseline, and 2.91% higher than the state-of-the-art method BIMEF [15]. At the same time, the number of parameters and the detection time are basically unchanged.

2 Related works

2.1 Image enhancement under dim light conditions

Image enhancement under dim light conditions has been a longstanding challenge in computer vision, with early solutions often relying on local statistics or intensity mapping techniques such as histogram equalization [16–18] and gamma correction [19]. More recent approaches have focused on Retinex theory [14, 20], aiming to address the relationship between illumination and reflectance. For example, BIMEF [15] designed a weight matrix for image fusion using light estimation techniques and fused the input image with the synthetic image based on the weight matrix. The fusion process uses multi-scale Retinex decomposition to adjust the brightness and contrast of different exposure images, while using pixel-level weighting to retain the detailed information of each exposure. After that, deep learning further advanced the field of image enhancement [9–13, 21]. Although state-of-the-art methods demonstrate superior results in enhancing images in dim light, the performance gain is still limited when they are applied to vehicle detection under dim light conditions. This is partly because image enhancement and vehicle detection have different goals, and neural networks alone may struggle to address the uneven illumination within an image. Therefore, it is important to explore new approaches that can effectively enhance images in dim light while also facilitating accurate vehicle detection.

2.2 Vehicle detection under dim light conditions

In the past few years, deep learning has emerged as a powerful technique for vehicle detection under dim light conditions. Li et al. [22] proposed a nighttime framework that employs multiple means to enhance nighttime vehicle information. First, a multi-exposure fusion BIMEF algorithm was used to enhance the quality of nighttime images. Then, multi-scale salient feature maps of highlighted vehicle regions were combined with vehicle visual feature maps to enhance the vehicle information during detection. Finally, an integration algorithm was designed to combine multiple networks to provide richer vehicle visual features. Gao et al. [23] fused infrared and visible images to improve the detection performance of nighttime vehicles. This article proposed a vehicle detection model of generative adversarial network (GAN) fusing infrared and visible images, named GF-detection, with different feature extraction strategies according to the differences between the two. Similarly, Shao et al. [24] proposed a cascaded detection network framework, FteGanOd, in which the FTE module was built based on CycleGAN, and proposed multi-scale feature fusion to enhance the detection of nighttime vehicle features. Additionally, the OD module based on the existing object detection network was improved by cascading with the FTE module to detect vehicles on EF maps. Furthermore, Mo et al. [25] concluded that vehicle highlight information such as vehicle lights was a highly credible visual feature at night. Therefore, a novel framework for nighttime vehicle detection aided by vehicle highlight information was proposed. They proposed a feature aggregation mechanism that combined multi-scale highlighting features with the visual features of the vehicle to make full use of the vehicle highlighting location cues. 
Our algorithm is inspired by the multi-exposure concept in BIMEF, but we perform end-to-end joint learning of image enhancement and vehicle detection and compare its performance with existing methods.

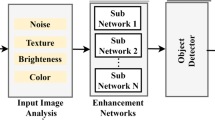

3 MEGF-Net

As illustrated in Fig. 1, the proposed method consists of two modules: a multi-exposure generation net (MEG-Net) and a multi-exposure fusion net (MEF-Net). MEG-Net uses a neural network to imitate the high dynamic range image, which provides the fusion module with multiple enhanced images and performs adaptive fusion and detection during the multi-exposure fusion stage. MEF-Net provides vehicle detection information to guide MEG-Net in fine-tuning the parameters so that the generation network can be effectively optimized for the problem of vehicle detection.

3.1 MEG-Net

In MEG-Net, in order to obtain different levels of exposure images from the input images in dim light, the easiest solution is to use the previous image as input and obtain the next image through a convolutional neural network, forming a single-input, single-output structure. However, the use of the above strategy will most likely lead to localized over smoothing or over exposure of the images due to the more severe illumination and darkness inhomogeneity problem on dark roads with vehicle lights and street lamps, thus compromising the detailed information in the vehicle detection task.

To address this problem, a convolutional recurrent neural network (ConvRNN) [26] based on two-stream progressive exposure is applied to the encoder of MEG-Net. On the one hand, each module receives the information of the previous stage, which gives the network a memory function; by using this historical information to maintain the details of key regions, the network retains information from more distant stages and reduces the probability of extreme values at key positions in the image. On the other hand, the previous layer of the same stage is used to complete the encoding process: the features of the input image at that stage are extracted by increasing the number of channels, and the decoder then restores the size of the input image to generate images with gradually higher exposure. This design effectively avoids the over smoothing or over exposure caused by the single-input, single-output structure of conventional convolutional neural networks. As presented in Fig. 1, the encoder has multiple stages, each of which consists of multiple convolutional recurrent modules (CRM) connected in series, and each module is a convolutional block with two inputs. The encoding and decoding process of each stage can be represented by Eq. (1), so that the output of each stage can be used as the input of the next stage.

where I represents the picture of each stage, H represents an intermediate hidden layer module CRM, and \({D_{\theta }}\) and \({E_{\omega }}\) represent the decoder and encoder in MEG-Net with corresponding parameters θ and ω. t represents the current stage.

The process of each encoder is illustrated in Eq. (2), and the input of each CRM consists of two parts, i.e., previous layer of this stage and same layer of the previous stage.

where \(H_{t}^{l}\) represents the l layer of the t stage in CRM. CRM is initialized as \({H_{0}} = 0\) and \({I_{0}}\) is the original input image. The input of the first layer is the output image of the previous stage, expressed by the formula \(H_{t}^{0} = {I_{t - 1}}\).
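The data flow that these definitions describe can be sketched as a plain loop over stages and layers. This is only an illustration of the ordering of the two input streams, not the authors' implementation: `crm` and `decode` are hypothetical placeholders for a convolutional recurrent module and the decoder, and scalars stand in for feature maps.

```python
def run_two_stream(i0, num_stages, num_layers, crm, decode):
    """Unroll the stage/layer recurrence described for the MEG-Net encoder.

    crm(prev_layer, prev_stage) is a placeholder for one CRM;
    decode(hidden) is a placeholder for the decoder.
    """
    prev_stage_hidden = [0.0] * num_layers   # H_0 = 0 for every layer
    image = i0                               # I_0 is the original input image
    outputs = []
    for _ in range(num_stages):
        h = image                            # H_t^0 = I_{t-1}
        current_hidden = []
        for layer in range(num_layers):
            # Two inputs: previous layer of this stage,
            # and the same layer of the previous stage.
            h = crm(h, prev_stage_hidden[layer])
            current_hidden.append(h)
        image = decode(h)                    # I_t, fed to the next stage
        outputs.append(image)
        prev_stage_hidden = current_hidden
    return outputs


def toy_crm(prev_layer, prev_stage):
    # Toy stand-in so the loop can be run; a real CRM is a gated convolution.
    return prev_layer + 0.5 * prev_stage


exposures = run_two_stream(1.0, num_stages=2, num_layers=2,
                           crm=toy_crm, decode=lambda h: h)
```

With the settings reported later in the paper, `num_stages` and `num_layers` would both be 4.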

Since vehicle detection tasks are often the cornerstone of intelligent transportation systems and many vehicle tasks need to be performed on the basis of obtaining vehicle locations, it is crucial to design lightweight ConvRNN structures with fewer parameters in less time without losing accuracy.

We design a lightweight CRM composed of a single gated memory unit and a few additional parameters so that long-term key information is retained; the structure is illustrated in Fig. 2. There is no need to use the common convolutional long short-term memory (ConvLSTM) network or convolutional gated recurrent unit (ConvGRU) network, because the number of stages required for MEG-Net is much smaller than the sequence lengths typical of natural language processing. The single gated memory unit has only half as many parameters as ConvLSTM, which makes both training and inference faster. The memory unit is calculated using Eqs. (3)–(5).

where f represents the unique gating module that determines the extent of resetting its history encoding. The value of the gating function is controlled by a sigmoid function. W and U represent dilated convolution and regular convolution in Fig. 2, respectively. ⊙ represents Hadamard product. \(\hat{H}_{t}^{l}\) is the output result of a single gate memory unit.
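Eqs. (3)–(5) are not reproduced in this text. As a rough illustration of what a single-gate recurrent update can look like, the sketch below follows the style of a minimal gated unit: one sigmoid gate, one tanh candidate, and a Hadamard-style blend. The exact form, the scalar weights `w_f`, `u_f`, `w_h`, `u_h` (standing in for the dilated and regular convolutions) and the use of tanh are all assumptions, not the authors' formulation.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def single_gate_update(x, h_prev, w_f, u_f, w_h, u_h):
    """One assumed single-gate update on scalars.

    x      : input from the previous layer of the current stage
    h_prev : hidden state from the same layer of the previous stage
    """
    # The only gate: decides how much of the history is reset.
    f = sigmoid(w_f * x + u_f * h_prev)
    # Candidate state computed from the input and the gated history.
    candidate = math.tanh(w_h * x + u_h * (f * h_prev))
    # Blend of old state and candidate (element-wise in the tensor case).
    return (1.0 - f) * h_prev + f * candidate


h_new = single_gate_update(x=2.0, h_prev=0.5, w_f=1.0, u_f=1.0, w_h=1.0, u_h=1.0)
```

A unit of this shape has two weight groups (gate and candidate) where ConvLSTM has four, which matches the halved parameter count stated above.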

In addition, as shown in Eq. (6), the lightweight channel attention module \({A_{c}}\) and spatial attention module \({A_{s}}\) are used within CRM to focus on important features and suppress unnecessary ones [27].

Specifically, in the channel dimension, the global maximum pooling (GMP) and global average pooling (GAP) are used in parallel to compress the length and width of the image, and the weight calibration of the channel dimension is obtained by sharing a multilayer perceptron. In the spatial dimension, the average and maximum values in the channel dimension need to be taken separately for information extraction. After that, the number of channels is compressed by a custom-sized convolution kernel. Finally, a nonlinear function is used to weight the original feature map weight coefficients to complete the whole attention module.
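The channel branch described above can be sketched in a few lines of plain Python. This covers only the channel dimension (GAP and GMP in parallel, a shared two-layer perceptron, then a sigmoid weighting); the spatial branch is omitted. The weight matrices `w1` and `w2`, the ReLU in the hidden layer and the sigmoid are illustrative assumptions.

```python
import math


def channel_attention(feature, w1, w2):
    """feature: list of C channels, each an H x W list of lists.
    w1: (hidden x C) and w2: (C x hidden) weights of the shared perceptron.
    Returns the re-weighted feature map and the per-channel weights."""
    def shared_mlp(vector):
        hidden = [max(0.0, sum(w * v for w, v in zip(row, vector))) for row in w1]
        return [sum(w * h for w, h in zip(row, hidden)) for row in w2]

    flat = [[p for row in channel for p in row] for channel in feature]
    gap = [sum(c) / len(c) for c in flat]   # global average pooling
    gmp = [max(c) for c in flat]            # global maximum pooling

    # Both pooled descriptors pass through the same perceptron and are summed.
    logits = [a + m for a, m in zip(shared_mlp(gap), shared_mlp(gmp))]
    weights = [1.0 / (1.0 + math.exp(-z)) for z in logits]

    scaled = [[[p * w for p in row] for row in channel]
              for channel, w in zip(feature, weights)]
    return scaled, weights


feature = [[[1.0, 3.0]], [[0.0, 0.0]]]      # 2 channels, 1 x 2 each
scaled, weights = channel_attention(feature, w1=[[1.0, 0.0]], w2=[[1.0], [0.0]])
```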

3.2 Pseudo supervised pre-training of MEG-Net

To achieve a better complementary end-to-end training, we first pre-train MEG-Net. However, in practice, it is difficult to collect image pairs with different exposures of the same scene at the same time. Therefore, we first need to generate pseudo ground-truth images with different exposure levels for images illuminated with dim backlight. We select the camera response model [15] to generate the required pseudo ground-truth images, which can well simulate the nonlinear function relationship between each pixel value of the image and the irradiance, and also has excellent scalability. The model can be expressed as:

where \({P_{x}}\) and \({P_{0}}\) are the pixel values of the desired image and the input image, the exposure rate \({k_{x}}\) is the ratio of their irradiance, and the function g is the exposure transformation function. The parameters \(a= -\)0.32 and \(b = 1.12\) are internal parameters of the used camera and are camera dependent only. The values are obtained by fitting all camera response curves in the DoRF dataset [28]. The parameter k is related to the desired exposure rate of the dataset images and the value of the approximate desired exposure rate needs to be solved and counted.
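For illustration, the sketch below generates pseudo exposures for a single normalized pixel value. It assumes that Eq. (7) takes the form published with the camera response model of [15], \(g(P, k) = e^{b(1 - k^{a})} P^{k^{a}}\); that form, the function name and the clipping to [0, 1] are assumptions here. The values of a and b are those stated above, and the four k values are the ones reported in the pre-training settings.

```python
import math

A, B = -0.32, 1.12  # camera parameters a and b as given in the text


def exposure_transform(p0, k, a=A, b=B):
    """Map a pixel value p0 in [0, 1] to its value at exposure ratio k.

    Assumed form: g(P, k) = exp(b * (1 - k**a)) * P ** (k**a).
    """
    gamma = k ** a
    beta = math.exp(b * (1.0 - gamma))
    return min(1.0, beta * (p0 ** gamma))   # clip to the valid range


exposure_rates = [3.8328, 4.0223, 4.3367, 6.9852]
pseudo_values = [exposure_transform(0.1, k) for k in exposure_rates]
```

With k = 1 the transform leaves the pixel unchanged, and larger k brightens dark pixels progressively, which is the behavior the pseudo ground-truth images rely on.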

After that, MEG-Net is pre-trained in a pseudo-supervised manner on the images produced by the above model so that it generates images with the desired exposure. To measure the difference between the network-generated image \({I_{t}}\) and the pseudo exposure image \({\hat{I}_{t}}\), we construct a loss function as follows. Because light and darkness are unevenly distributed in the image, using only the common mean square error (MSE) loss would let overexposed outliers dominate the loss. Therefore, the first part of the loss function combines MSE and mean absolute error (MAE) into a loss named SMAE. For locations with small errors, MSE is used so that the loss converges easily and stably, while MAE is used to reduce the impact of outliers with large errors. As displayed in Eq. (8), the distance between the generated image \({{I_{t}}}\) and the pseudo ground-truth image \({{{\hat{I}}_{t}}}\) is calculated by SMAE. δ is a hyperparameter whose magnitude determines the balance of SMAE between MSE and MAE. We use H to represent SMAE [29]; this H is distinct from the hidden-layer module H in MEG-Net.
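The behavior described for SMAE (quadratic for small errors, linear for large ones, with δ as the switch point) matches the standard Huber loss. The sketch below assumes that Eq. (8) has this standard form; the function names and the default δ = 1 are illustrative.

```python
def smae(error, delta=1.0):
    """Huber-style loss for a single pixel error (assumed form of SMAE)."""
    magnitude = abs(error)
    if magnitude <= delta:
        return 0.5 * error * error              # MSE-like region
    return delta * (magnitude - 0.5 * delta)    # MAE-like region


def mean_smae(generated, target, delta=1.0):
    """Average SMAE over two equally long sequences of pixel values."""
    return sum(smae(g - t, delta) for g, t in zip(generated, target)) / len(generated)


small = smae(0.5)    # quadratic branch
large = smae(3.0)    # linear branch, grows more slowly than 0.5 * 3.0**2
```

The two branches meet at |error| = δ, so the loss stays continuous while an overexposed outlier contributes linearly rather than quadratically.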

In addition to the pixel-wise regression loss, we add a measure of the overall quality of the generated image to the loss function. The structural similarity (\(SSIM\)) [30] index reflects perceived visual quality and is calculated using Eq. (9). By applying a Gaussian filter at pixel \({p_{t}}\), we obtain the variance δ and the mean μ (here δ denotes the variance, not the SMAE hyperparameter). \({\mathrm{C}_{1}}\) and \({\mathrm{C}_{2}}\) are constants.

Therefore, we finally adopt a loss function combining \(SSIM\) and SMAE, as presented in Eq. (10). The \(SSIM\) at stage t is subtracted when computing the loss. N denotes the number of pixels in an image.
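A simplified sketch of such a combined loss for one stage is given below. It departs from the description in two labeled ways: SSIM is computed from global image statistics instead of Gaussian-filtered local windows, and the two terms are combined with equal weight. The constants `c1` and `c2` use the common defaults for images scaled to [0, 1]; all of these are assumptions rather than the settings of Eqs. (9) and (10).

```python
def smae(error, delta=1.0):
    """Huber-style pixel loss (assumed form of SMAE)."""
    magnitude = abs(error)
    if magnitude <= delta:
        return 0.5 * error * error
    return delta * (magnitude - 0.5 * delta)


def global_ssim(x, y, c1=0.01 ** 2, c2=0.03 ** 2):
    """SSIM from whole-image statistics (no Gaussian window)."""
    n = len(x)
    mu_x, mu_y = sum(x) / n, sum(y) / n
    var_x = sum((v - mu_x) ** 2 for v in x) / n
    var_y = sum((v - mu_y) ** 2 for v in y) / n
    cov = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / n
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator


def stage_loss(generated, target, delta=1.0):
    """Mean SMAE over N pixels minus SSIM, for one stage t."""
    n = len(generated)
    pixel_term = sum(smae(g - t, delta) for g, t in zip(generated, target)) / n
    return pixel_term - global_ssim(generated, target)


target = [0.2, 0.4, 0.6, 0.8]
perfect = stage_loss(target, target)
degraded = stage_loss([0.5, 0.5, 0.5, 0.5], target)
```

Under this combination the loss is lowest (close to -1) when the generated image equals the pseudo ground truth, and it rises as either pixel error grows or structure is lost.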

It is possible to make our model scalable without relying on image pairs of different luminance by pseudo supervised pre-training. In addition, this section demonstrates the performance of the final vehicle detection in dim light.

3.3 MEF-Net

For MEF-Net, we choose the commonly used object detection framework in convolutional neural networks as the basis of the network. This allows us to place MEG-Net in front of MEF-Net by only dealing with the channel dimensions at the connection of the two networks and ultimately enables end-to-end learning and makes our method compatible with any detection framework.

Among object detection frameworks, a one-stage network has no region proposal network, unlike a two-stage network. It is not only faster but also better suited to the chips used in cameras. Therefore, we use the one-stage detector RetinaNet for training, whose focal loss is useful for detecting difficult vehicles in the dark. In addition, we use a variant of the residual network as the backbone of RetinaNet. Compared to the most commonly used ResNet [31], this variant moves the batch normalization (BN) and ReLU operations before the convolutional layer (see Fig. 3), making it pre-activated and making the whole network easier to train.

At the interface between MEF-Net and MEG-Net, we crop the first layer of the detector framework and use convolutional kernel channel dimension expansion [32], which allows the detector to process multiple images from the generation module simultaneously and perform adaptive fusion. Specifically, for the pre-trained vehicle detector, we replicate the weights of the first-layer convolutional kernel T times along the channel dimension and normalize them, so that MEF-Net has the same channel dimension as the output of the generative network while the differences and complementary region information across the pseudo exposures are preserved. For RetinaNet, after we expand the channel dimension of the first convolutional layer in Fig. 3, we can plug it into the whole network framework displayed in Fig. 1 to implement the multi-exposure fusion function.
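The channel dimension expansion can be illustrated on nested lists. The sketch assumes that "replicating and normalizing" means tiling the pre-trained first-layer weights T times along the input-channel axis and dividing by T, so that T identical input images reproduce the original response; the function names and this choice of normalization are assumptions.

```python
def expand_first_layer(weight, t):
    """weight[out][in][kh][kw] -> same filters with in * t input channels.

    Each copy is scaled by 1 / t so the summed response keeps its magnitude.
    """
    return [
        [[[w / t for w in row] for row in kernel]
         for _ in range(t)              # one copy per generated exposure
         for kernel in out_filter]      # original input channels, in order
        for out_filter in weight
    ]


def response(filter_weights, patch):
    """Response of one filter on a patch with matching channels and size."""
    return sum(
        w * x
        for kernel, channel in zip(filter_weights, patch)
        for w_row, x_row in zip(kernel, channel)
        for w, x in zip(w_row, x_row)
    )


weight = [[[[1.0, 2.0]], [[3.0, 4.0]]]]      # 1 filter, 2 channels, 1 x 2 kernel
patch = [[[1.0, 1.0]], [[0.5, 0.5]]]         # one 2-channel input patch
expanded = expand_first_layer(weight, t=2)
original_out = response(weight[0], patch)
expanded_out = response(expanded[0], patch + patch)   # two stacked exposures
```

Starting from this initialization, the detector behaves as before when all exposures are identical, and joint training is then free to learn different weights for each exposure.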

In addition, since the final output of MEG-Net is T tri-color pictures, we can feed the generated pictures into the squeeze-excitation net (SENet) [33] before the information is fed into the vehicle detector, so that it acts as a “bridge” connecting the two networks. This allows the learning process to use global information to strengthen useful channel features and weaken useless ones to obtain channel dimensional weights when 3T channels are fused.

In this phase of vehicle detection, the loss function consists of two parts, confidence loss \({L_{\mathrm{conf}}}\) and regression loss \({L_{\mathrm{reg}}}\).

where \({{N_{a}}}\) represents the full number of samples, \({{N_{p}}}\) represents the number of positive samples, and the parameter λ is used to balance \({L_{\mathrm{conf}}}\) with \({L_{\mathrm{reg}}}\). \({{{\hat{p}}_{i}}}\) is 1 for the vehicle, \({{{\hat{p}}_{i}}}\) is 0 for the background, and \({{p_{i}}}\) is the confidence score between 0 and 1. \({{g_{i}}}\) denotes the vector consisting of the coordinates of the four parameters of the predicted vehicle boxes, and \({{{\hat{g}}_{i}}}\) is the ground-truth bounding box.

\({L_{\mathrm{conf}}}\) is a binary loss function. The proportion of vehicle targets in the dataset is much smaller than the proportion of background, so the targets of the anchor will be mainly negative samples. Our selected focal loss function adds two dynamic scaling factors to the binary cross-entropy to dynamically and significantly reduce the loss caused by the easily distinguishable negative samples in the learning process, thus focusing the loss on the part of the positive samples that are difficult to distinguish. This is extremely critical for difficult object detection under dim light conditions. The confidence loss is given by Eq. (12), where the parameters α and γ are designed as double-balancing factors that smoothly adjust the simple samples so that their weight loss is significantly reduced, which allows the loss function to focus on the vehicle samples that are difficult to detect in dim light.
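As an illustration of how the two factors act, the sketch below implements the standard focal loss for a single anchor, assuming Eq. (12) follows that standard form. The defaults α = 0.25 and γ = 3 are the values reported in the training settings; the clamping constant `eps` is an implementation detail added here.

```python
import math


def focal_loss(p, label, alpha=0.25, gamma=3.0, eps=1e-12):
    """Focal loss for one anchor.

    p     : predicted vehicle confidence in (0, 1)
    label : 1 for vehicle, 0 for background
    """
    p = min(max(p, eps), 1.0 - eps)          # avoid log(0)
    if label == 1:
        return -alpha * (1.0 - p) ** gamma * math.log(p)
    return -(1.0 - alpha) * p ** gamma * math.log(1.0 - p)


easy_negative = focal_loss(0.01, 0)   # background scored as background
hard_positive = focal_loss(0.10, 1)   # dim vehicle scored with low confidence
```

The easy negative contributes almost nothing, while the hard positive keeps a loss several orders of magnitude larger, which is the rebalancing the paragraph above describes.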

The regression loss is another part of the loss function, which is computed by positive samples only. We use the SMAE loss to calculate the distance between \({{g_{i}}}\) and \({{{\hat{g}}_{i}}}\), which is expressed by Eq. (13):

Finally, MEG-Net and MEF-Net are tied together for joint training so that the two parts form an end-to-end learning system. Analysis from a functional perspective: MEF-Net provides vehicle detection information to guide the generative network to fine-tune parameters so that vehicle regions can be specifically enhanced to accomplish detection; MEG-Net provides pre-trained enhanced images for the fusion network to perform adaptive fusion and detection after the channel dimension attention mechanism. After coupling the two parts for training, we can achieve detailed interaction and feature fusion under different lighting conditions, and end-to-end learning for vehicle detection under abnormal lighting.

4 Evaluation

4.1 Setup

4.1.1 Datasets and evaluation metrics

We select 15,000 images of vehicles under darkness from surveillance photos as our ODDS (object detection under dim light surveillance) dataset. Each of these images has from 1 to 23 labeled vehicles. The size of the labeled vehicles also has a relatively large variance. We divide the training and test sets in a 9:1 ratio, and use 10-fold cross-validation to split a portion of the training set as the validation set. In addition, we also use the large-scale normal-light vehicle dataset UA-DETRAC [34] to pre-train the object detection framework. We select 80,000 photos and more than 500,000 vehicles for pre-training. For the evaluation metrics, the overall performance of the vehicle detection model is measured using mean average precision (mAP), which is calculated as the area under the precision-recall curve.
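The area under the precision-recall curve can be computed as sketched below for one class. The sketch assumes detections have already been sorted by descending confidence and matched to ground truth, and it uses the common monotone precision envelope; the matching rule and IoU threshold are not stated here and are left out. mAP is then the mean of this value over classes.

```python
def average_precision(is_true_positive, num_ground_truth):
    """Area under the precision-recall curve for one class.

    is_true_positive : flags for detections sorted by descending confidence
    num_ground_truth : number of labeled objects of this class
    """
    tp = fp = 0
    recalls, precisions = [], []
    for flag in is_true_positive:
        if flag:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_ground_truth)
        precisions.append(tp / (tp + fp))

    # Make precision non-increasing from right to left (envelope).
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])

    area, previous_recall = 0.0, 0.0
    for recall, precision in zip(recalls, precisions):
        area += (recall - previous_recall) * precision
        previous_recall = recall
    return area


ap = average_precision([True, False, True], num_ground_truth=2)
```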

4.1.2 Pre-training settings

In the pre-training phase, we first set the number of modules for MEG-Net. We set the number of stages to 4 and the number of coding modules for each stage to 4. For all the nighttime images in the dataset, we solve for the desired optimal exposure rate and collect statistics. The resulting histogram is shown in Fig. 4. We choose the first quartile, second quartile, third quartile and maximum value of the distribution as the four exposure rates. The four stages are therefore set, in order, to \({{k_{1}}} = 3.8328\), \({{k_{2}}} = 4.0223\), \({{k_{3}}} = 4.3367\) and \({{k_{4}}} = 6.9852\). The four k values are substituted into Eq. (7) to generate the pseudo exposure images. Because the images generated by the camera response model unavoidably contain noise and artifacts, early stopping [35] is used so that the network does not learn these unfavorable factors during pre-training. Specifically, we stop pre-training when the average peak signal-to-noise ratio (PSNR) between \({{I_{t}}}\) and \({{{\hat{I}}_{t}}}\) reaches 25 dB. The deep learning part is implemented with the PyTorch library.
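The stopping rule can be sketched with the usual PSNR definition. The sketch assumes pixel values scaled to [0, 1] and a threshold of 25 expressed in dB; the function names and the way the average is taken over stages are illustrative.

```python
import math


def psnr(generated, target, max_value=1.0):
    """Peak signal-to-noise ratio in dB between two flattened images."""
    mse = sum((g - t) ** 2 for g, t in zip(generated, target)) / len(generated)
    if mse == 0.0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / mse)


def should_stop(psnr_values, threshold=25.0):
    """Stop pre-training once the average PSNR reaches the threshold."""
    return sum(psnr_values) / len(psnr_values) >= threshold


value = psnr([0.5, 0.5], [0.6, 0.4])   # MSE of 0.01
```

Stopping at a moderate PSNR rather than training to convergence keeps the network from reproducing the noise and artifacts present in the pseudo ground truth.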

4.1.3 Data augmentation

We use three common data augmentation methods in our experiments. First, each image is flipped horizontally with a probability of 50%. Then, the images are rotated randomly, clockwise or counterclockwise, by up to 15 degrees. Finally, the images are randomly cropped. These methods increase the number of training samples.

4.1.4 Training parameter setting

For the anchor used in the detection, we set the anchor scales to 8, 16, 32, 64, 128 and 256. We also set the anchor ratio to 0.75, 1.00 and 1.33 depending on the actual situation. These anchors cover a very wide range of vehicle sizes.
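The 18 anchor shapes implied by these settings can be enumerated as below. Two conventions are assumed because they are not stated here: the scale is the square root of the anchor area in pixels, and the ratio is height divided by width.

```python
import math

SCALES = [8, 16, 32, 64, 128, 256]
RATIOS = [0.75, 1.00, 1.33]


def anchor_shapes(scales=SCALES, ratios=RATIOS):
    """Return (width, height) for every scale/ratio pair.

    Assumes scale = sqrt(width * height) and ratio = height / width.
    """
    shapes = []
    for scale in scales:
        for ratio in ratios:
            width = scale / math.sqrt(ratio)
            height = scale * math.sqrt(ratio)
            shapes.append((width, height))
    return shapes


shapes = anchor_shapes()
```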

For the training of the model, we use an initial learning rate of 0.01 and a learning rate decay factor of 0.1. We set the batch size to 16 and epoch to 160, while using the state-of-the-art rectified Adam optimizer [36]. The focal loss parameter is set to \(\alpha =0.25\) and \(\gamma =3\). The GPU devices used in our experiments are two Tesla V100.

4.2 Performance of the proposed method

We pre-train RetinaNet on the UA-DETRAC dataset and then fine-tune it on the ODDS dataset. The resulting model is defined as the baseline. Our method is compared with the fine-tuned baseline network in terms of the number of parameters and overall accuracy. The comparison images of the results are presented in Fig. 5. It can be seen that our method successfully detects more vehicles in the dark. Fig. 6 shows a significant improvement in mAP for our method, which increases by 5.90% to 78.09%. In terms of the number of parameters, the lightweight convolutional recurrent module adds only 0.49 M parameters to the original 33.60 M, an increase of about 1.5%.

Qualitative comparison of vehicle detection in dim light. The left side shows the result of baseline detection after fine-tuning. The right side shows the result of detection by the method in this paper. The number in the lower right corner of each image is the total number of vehicles detected in darkness.

Meanwhile, we compare our MEGF-Net with state-of-the-art methods for improving the quality of images illuminated with dim backlight. We select LLNet [9], KinD [10], RRDNet [11], DeepUPE [12], DSLR [13], RetinexNet [14] and BIMEF [15] to pre-process the images. These are all image enhancement methods separate from object detection, unlike our end-to-end training. The quantitative comparison of different methods under the RetinaNet detector is visualized in Fig. 6. The pre-trained baseline reaches 38.51% mAP and the fine-tuned baseline reaches 72.19%. The fine-tuned methods show a higher mAP than all pre-trained results, which also confirms the large difference in light distribution between the UA-DETRAC dataset under normal light and the ODDS dataset under dim light. With pre-processing only, the mAP using LLNet, DeepUPE, DSLR and BIMEF improves by 4.55%, 6.72%, 5.52% and 8.12%, respectively, over the baseline. After fine-tuning with ODDS, the mAP of LLNet, DeepUPE, DSLR and BIMEF improves by 1.79%, 2.27%, 2.72% and 2.99%, respectively, over the baseline. This is because fine-tuning greatly reduces the difference between normal-light and dim-light data, so these methods obtain smaller performance gains with fine-tuning than with pre-training alone.

It is worth noting that KinD and RetinexNet degrade the performance in some way. This may be caused by excessive smoothing (KinD) or artifacts (RetinexNet) in the vehicle detection region when the image is enhanced.

From Fig. 6, we can see that the multiple exposure method BIMEF [15] is second to our method and superior to other image enhancement methods. This also verifies that it is reasonable and effective to use the multiple exposure method in our detection task. Finally, it is easy to see that our mAP values are higher than any of the methods for image enhancement, which also verifies that this task performs better using an end-to-end method than a method that separates enhancement from detection.

We also replace RetinaNet with other detectors to compare vehicle detection performance in darkness. As illustrated in Fig. 7, the detector was replaced with YOLOv3 [37] and FPN [38]. The mAP of the pre-trained baseline for these two detectors reached 36.46% and 35.56%, respectively, and the fine-tuned baseline reached 69.97% and 69.33%. In the framework of our method, the mAP values reached 76.18% and 75.83%. RetinaNet therefore gives the best performance within our method on this task, which also supports the view that focal loss is critical for detecting difficult vehicle samples under low illumination. In summary, we validate our method from different perspectives, and its higher mAP indicates that it outperforms the compared state-of-the-art techniques.

4.3 Ablation studies

Channel dimension attention

We added the channel-dimension attention mechanism SENet between MEG-Net and MEF-Net. We perform ablation experiments on this part, and the results are displayed in the first row of Table 1. The results show that adding the channel-dimension attention mechanism increases the mAP by 0.38%.

Joint training

For different lighting conditions, the exposure required for the images is also different. Meanwhile, before end-to-end training, MEG-Net cannot determine which features will be helpful for vehicle recognition, and MEF-Net can provide assistance for it. To verify the effectiveness of joint training, we freeze the weight values of MEG-Net after pre-training, i.e., the final training only fine-tunes the weight values of MEF-Net. The comparison results are summarized in the second row of Table 1. The value of mAP becomes 73.89%, which is much lower than 78.09%. This verifies that end-to-end joint training is essential.

Single gated memory unit

To verify the performance of our proposed single gated memory unit, we perform ablation experiments on this part. The experiments replace the coding part of all MEG-Net with convolutional recurrent neural networks without gating. The results are presented in the third row of Table 1. It can be seen that using a single gated memory unit can increase the performance by 3.24%.

Attention mechanism within the convolutional recurrent module

Within the convolutional recurrent module of MEG-Net, we include lightweight channel-dimensional and spatial-dimensional attention mechanisms. We remove all the attention mechanisms within the module for comparison in the ablation experiments. The results are shown in the fourth row of Table 1. This part of mixed attention can improve the mAP of the network by 0.88%.

Convolutional kernel expansion

We use convolutional kernel channel dimension expansion in the first layer of the detector in MEF-Net to handle the interface between the two networks. Adaptive fusion is achieved by replicating the first-layer parameters T times and normalizing them. As an alternative, the channel dimension interface can be handled with a post-fusion method: the images are fed into the detector separately, and the corresponding detection boxes are then fused. The results of this ablation experiment are presented in the fifth row of Table 1. Kernel expansion improves the performance by 0.46% compared with post-fusion.
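A minimal sketch of the replicate-and-normalize step, written in plain Python on nested lists. It assumes that normalization means dividing each replicated copy by T and that the T generated images are concatenated along the channel axis. The function names `expand_first_layer` and `response`, the toy kernel and the toy patch are hypothetical and are not taken from the implementation used in the experiments.

```python
import math


def expand_first_layer(weight, T):
    """Replicate the input-channel axis T times and scale every copy by 1/T.

    weight: nested list [out_ch][in_ch][kh][kw] from a pre-trained detector.
    Returns a kernel of shape [out_ch][in_ch * T][kh][kw].
    Dividing by T is an assumed form of the normalization.
    """
    return [
        [[[w / T for w in row] for row in in_k]
         for _ in range(T) for in_k in out_k]
        for out_k in weight
    ]


def response(weight, patch):
    """Response of each output channel at one location (patch: [in_ch][kh][kw])."""
    return [
        sum(w * x
            for k_c, p_c in zip(out_k, patch)
            for k_row, p_row in zip(k_c, p_c)
            for w, x in zip(k_row, p_row))
        for out_k in weight
    ]


# Toy "pre-trained" kernel: 2 output channels, 3 input channels, 2x2 window.
weight = [[[[0.1 * (o + 1) * (c - 1) + 0.05 * (2 * i + j) for j in range(2)]
            for i in range(2)] for c in range(3)] for o in range(2)]
T = 4
expanded = expand_first_layer(weight, T)

# T toy exposures of one 3-channel patch, each a scaled copy of the base patch.
base = [[[0.2, 0.4], [0.6, 0.8]] for _ in range(3)]
exposures = [[[[x * (t + 1) for x in row] for row in ch] for ch in base]
             for t in range(T)]
stacked = [ch for img in exposures for ch in img]  # concatenate along channels

fused = response(expanded, stacked)
per_exposure = [response(weight, img) for img in exposures]
mean_response = [sum(r[o] for r in per_exposure) / T for o in range(len(weight))]
```

With this scaling, the expanded layer initially returns the mean of the responses that the original layer gives to each exposure separately, so feeding T identical images reproduces the pre-trained response. Fine-tuning can then reweight the individual exposures.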

Model design of MEG-Net

We designed several modules for comparison with our method to prove that our proposed two-input single-output recurrent module is effective, as illustrated in Fig. 8. The methods are listed below.

1) Branched exposure generation (BEG). The stages run in parallel and do not affect each other; each stage generates an image with a different exposure from \({I_{0}}\).

2) Serial exposure generation (SEG). The input of each stage is the output of the previous stage. Unlike our approach, the two-stream progressive exposure input strategy is not used in the encoding stage.

3) Recurrent exposure generation (REG). This is the method proposed in this paper, which uses a two-stream progressive exposure input to avoid information loss due to over smoothing or over exposure.
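The difference between the three designs lies in which images each stage reads. The following sketch only records that wiring. The wiring for BEG and SEG follows the descriptions above; for REG, the sketch assumes that the two input streams are \({I_{0}}\) and the output of the previous stage, which is one possible reading and not a specification of our module. The function name `stage_inputs` is hypothetical.

```python
def stage_inputs(scheme, num_stages):
    """For stages t = 1..num_stages, list the image indices each stage reads.

    Index 0 denotes the dim input I_0; index t denotes the output of stage t.
    """
    if scheme == "BEG":
        # Branched: every stage reads I_0 directly and ignores other stages.
        return [[0] for _ in range(num_stages)]
    if scheme == "SEG":
        # Serial: stage t reads only the output of stage t - 1.
        return [[t - 1] for t in range(1, num_stages + 1)]
    if scheme == "REG":
        # ASSUMPTION: the two streams are I_0 and the previous stage's output.
        # At stage 1 both streams are therefore I_0.
        return [[0, t - 1] for t in range(1, num_stages + 1)]
    raise ValueError(f"unknown scheme: {scheme}")


for scheme in ("BEG", "SEG", "REG"):
    print(scheme, stage_inputs(scheme, 4))
```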

We conducted experiments on each of the three methods mentioned above, and the results are presented in Table 2. All three methods add only a small number of parameters, but they improve the detection results to different degrees. Specifically, the BEG module improves image quality, which verifies that MEF-Net can provide useful guidance to the enhancement module so that it generates complementary information at different exposures. SEG produces a more erratic performance gain, which may be due to the loss of critical information caused by over exposure or over smoothing in the intermediate stages. Our proposed REG method performs best among the three, which validates that a two-stream progressive exposure input structure with memory capability can perform this task effectively. Finally, Fig. 9 visualizes the four intermediate multi-exposure outputs of the camera response model, BEG, SEG and REG, providing visual support for our method.

4.4 Hyper-parameter analysis

For MEG-Net, we choose four stages as the number of multi-exposures, and four convolutional recurrent modules are selected as encoders for each stage. In general, increasing the number of stages not only increases the number of parameters and the computational cost of the model, but also requires more storage space for the additional pseudo-exposure images that must be generated in advance to support learning. Increasing the number of encoders in each stage may result in overfitting due to the depth of the layers. To select these two numbers, we conducted a comparison experiment, and the results are presented in Fig. 10. After trading off accuracy against efficiency, we chose a 4 × 4 coding structure. In addition, our network becomes a single-exposure enhanced detection network when the number of stages of MEG-Net is 1. The results in Fig. 10 also verify that our multi-exposure method improves by approximately 2% compared to the single-exposure method.

5 Conclusion

In this paper, we propose an end-to-end method to improve vehicle detection performance under dim light conditions through a multi-exposure generation and fusion network. To address the uneven distribution of light and dark during vehicle detection in darkness, we introduce a single gated memory unit with two-stream progressive exposure input in MEG-Net and perform ablation experiments on this part. The experimental results demonstrate that end-to-end joint training can boost the mAP to 78.09% on the ODDS dataset, a dataset with poor lighting conditions, while the number of parameters remains almost constant. Our method outperforms a variety of common image enhancement methods. In addition, the ablation experiments on each part also support the rationality of each module in the network.

Availability of data and materials

The UA-DETRAC dataset analyzed during the current study is available in the UA-DETRAC repository, https://detrac-db.rit.albany.edu/Detection. The ODDS dataset belongs to Beijing iTarge Technology Co., Ltd. and is not publicly available.

Abbreviations

- BEG: branched exposure generation
- ConvRNN: convolutional recurrent neural network
- CRM: convolutional recurrent modules
- GAP: global average pooling
- GMP: global maximum pooling
- mAP: mean average precision
- MEF-Net: multi-exposure fusion network
- MEG-Net: multi-exposure generation network
- MEGF-Net: multi-exposure generation fusion network
- REG: recurrent exposure generation
- SEG: serial exposure generation
- SENet: squeeze-excitation net
- SSIM: structural similarity

References

Xiao, J., Cheng, H., Sawhney, H. S., & Han, F. (2010). Vehicle detection and tracking in wide field-of-view aerial video. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 679–684). Piscataway: IEEE.

Yuan, M., Wang, Y., & Wei, X. (2022). Translation, scale and rotation: cross-modal alignment meets RGB-infrared vehicle detection. In S. Avidan, G. J. Brostow, M. Cissé, et al. (Eds.), Proceedings of the 17th European conference on computer vision (pp. 509–525). Cham: Springer.

Yayla, R., & Albayrak, E. (2022). Vehicle detection from unmanned aerial images with deep mask R-CNN. Computer Science Journal of Moldova, 30(2), 148–169.

Charouh, Z., Ezzouhri, A., Ghogho, M., & Guennoun, Z. (2022). A resource-efficient CNN-based method for moving vehicle detection. Sensors, 22(3), 1193.

Liao, B., He, H., Du, Y., & Guan, S. (2022). Multi-component vehicle type recognition using adapted CNN by optimal transport. Signal, Image and Video Processing, 16(4), 975–982.

Al-batat, R., Angelopoulou, A., Premkumar, K. S., Hemanth, D. J., & Kapetanios, E. (2022). An end-to-end automated license plate recognition system using YOLO based vehicle and license plate detection with vehicle classification. Sensors, 22(23), 9477.

Park, S.-H., Yu, S.-B., Kim, J.-A., & Yoon, H. (2022). An all-in-one vehicle type and license plate recognition system using YOLOv4. Sensors, 22(3), 921.

Wang, Q., Lu, X., Zhang, C., Yuan, Y., & Li, X. (2023). LSV-LP: large-scale video-based license plate detection and recognition. IEEE Transactions on Pattern Analysis and Machine Intelligence, 45(1), 752–767.

Lore, K. G., Akintayo, A., & Sarkar, S. (2017). LLNet: a deep autoencoder approach to natural low-light image enhancement. Pattern Recognition, 61, 650–662.

Zhang, Y., Zhang, J., & Guo, X. (2019). Kindling the darkness: a practical low-light image enhancer. In L. Amsaleg, B. Huet, M. A. Larson, et al. (Eds.), Proceedings of the 27th ACM international conference on multimedia (pp. 1632–1640). New York: ACM.

Zhu, A., Zhang, L., Shen, Y., Ma, Y., Zhao, S., & Zhou, Y. (2020). Zero-shot restoration of underexposed images via robust retinex decomposition. In Proceedings of the IEEE international conference on multimedia and expo (pp. 1–6). Piscataway: IEEE.

Wang, R., Zhang, Q., Fu, C.-W., Shen, X., Zheng, W.-S., & Jia, J. (2019). Underexposed photo enhancement using deep illumination estimation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 6849–6857). Piscataway: IEEE.

Ignatov, A., Kobyshev, N., Timofte, R., Vanhoey, K., & van Gool, L. (2017). DSLR-quality photos on mobile devices with deep convolutional networks. In Proceedings of the IEEE international conference on computer vision (pp. 3297–3305). Piscataway: IEEE.

Wei, C., Wang, W., Yang, W., & Liu, J. (2018). Deep retinex decomposition for low-light enhancement. In Proceedings of the British machine vision conference 2018 (pp. 1–12). Swansea: BMVA Press.

Ying, Z., Li, G., & Gao, W. (2017). A bio-inspired multi-exposure fusion framework for low-light image enhancement. arXiv preprint. arXiv:1711.00591.

Jebadass, J. R., & Balasubramaniam, P. (2022). Low light enhancement algorithm for color images using intuitionistic fuzzy sets with histogram equalization. Multimedia Tools and Applications, 81(6), 8093–8106.

Sobbahim, R. A., & Tekli, J. (2022). Low-light homomorphic filtering network for integrating image enhancement and classification. Signal Processing: Image Communication, 100, 116527.

Zhang, F., Shao, Y., Sun, Y., Zhu, K., Gao, C., & Sang, N. (2021). Unsupervised low-light image enhancement via histogram equalization prior. arXiv preprint. arXiv:2112.01766.

Jeong, I., & Lee, C. (2021). An optimization-based approach to gamma correction parameter estimation for low-light image enhancement. Multimedia Tools and Applications, 80(12), 18027–18042.

Guo, X., Li, Y., & Ling, H. (2017). LIME: low-light image enhancement via illumination map estimation. IEEE Transactions on Image Processing, 26(2), 982–993.

Fu, X., Liang, B., Huang, Y., Ding, X., & Paisley, J. W. (2020). Lightweight pyramid networks for image deraining. IEEE Transactions on Neural Networks and Learning Systems, 31(6), 1794–1807.

Li, J., Xiao, D., & Yang, Q. (2022). Efficient multi-model integration neural network framework for nighttime vehicle detection. Multimedia Tools and Applications, 81(22), 32675–32699.

Gao, P., Tian, T., Zhao, T., Li, L., Zhang, N., & Tian, J. (2022). GF-detection: fusion with GAN of infrared and visible images for vehicle detection at nighttime. Remote Sensing, 14(12), 2771.

Shao, X., Wei, C., Shen, Y., & Wang, Z. (2021). Feature enhancement based on cyclegan for nighttime vehicle detection. IEEE Access, 9, 849–859.

Mo, Y., Han, G., Zhang, H., Xu, X., & Qu, W. (2019). Highlight-assisted nighttime vehicle detection using a multi-level fusion network and label hierarchy. Neurocomputing, 355, 13–23.

Ballas, N., Yao, L., Pal, C., & Courville, A. C. (2016). Delving deeper into convolutional networks for learning video representations. [Paper presentation]. In Proceedings of the 4th international conference on learning representations, San Juan, Puerto Rico.

Ma, B., Wang, X., Zhang, H., Li, F., & Dan, J. (2019). CBAM-GAN: generative adversarial networks based on convolutional block attention module. In X. Sun, Z. Pan, & E. Bertino (Eds.), Proceedings of the 5th international conference on artificial intelligence and security (pp. 227–236). Piscataway: IEEE.

Grossberg, M. D., & Nayar, S. K. (2004). Modeling the space of camera response functions. IEEE Transactions on Pattern Analysis and Machine Intelligence, 26(10), 1272–1282.

Laina, I., Rupprecht, C., Belagiannis, V., Tombari, F., & Navab, N. (2016). Deeper depth prediction with fully convolutional residual networks. arXiv preprint. arXiv:1606.00373.

Wang, Z., Bovik, A. C., Sheikh, H. R., & Simoncelli, E. P. (2004). Image quality assessment: from error visibility to structural similarity. IEEE Transactions on Image Processing, 13(4), 600–612.

He, K., Zhang, X., Ren, S., & Sun, J. (2016). Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 770–778). Piscataway: IEEE.

Gupta, P., Thatipelli, A., Aggarwal, A., Maheshwari, S., Trivedi, N., Das, S., et al. (2021). Quo vadis, skeleton action recognition? International Journal of Computer Vision, 129(7), 2097–2112.

Hu, J., Shen, J., & Sun, G. (2018). Squeeze-and-excitation networks. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 7132–7141). Piscataway: IEEE.

Wen, L., Du, D., Cai, Z., Lei, Z., Chang, M. C., Qi, H., et al. (2020). UA-DETRAC: a new benchmark and protocol for multi-object detection and tracking. Computer Vision and Image Understanding, 193, 102907.

Prechelt, L. (2012). Early stopping – but when? In G. Montavon, G. B. Orr, & K.-R. Müller (Eds.), Neural networks: tricks of the trade (2nd ed., pp. 53–67). Berlin: Springer.

Liu, L., Jiang, H., He, P., Chen, W., Liu, X., Gao, J., et al. (2020). On the variance of the adaptive learning rate and beyond. In Proceedings of the 8th international conference on learning representations (pp. 1–13). Retrieved October 5, 2023, from https://openreview.net/forum?id=rkgz2aEKDr.

Redmon, J., & Farhadi, A. (2018). YOLOv3: an incremental improvement. arXiv preprint. arXiv:1804.02767.

Min, K., Lee, G.-H., & Lee, S.-W. (2022). Attentional feature pyramid network for small object detection. Neural Networks, 155, 439–450.

Acknowledgements

Not applicable.

Funding

This work was supported in part by the Science and Technology Innovation foundation (No. JSGG20210802152811033).

Author information

Contributions

All authors contributed to the study conception and design. Material preparation, data collection and analysis were performed by BD. The first draft of the manuscript was written by BD and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Du, B., Du, C. & Yu, L. MEGF-Net: multi-exposure generation and fusion network for vehicle detection under dim light conditions. Vis. Intell. 1, 28 (2023). https://doi.org/10.1007/s44267-023-00030-x

DOI: https://doi.org/10.1007/s44267-023-00030-x