Abstract

The finite-time Lyapunov exponent (FTLE) is widely used for understanding the Lagrangian behavior of unsteady flow fields. The FTLE field contains many important fine-level structures (e.g., Lagrangian coherent structures). These structures are often thin, requiring Monte Carlo rendering for unbiased visualization. However, Monte Carlo rendering requires hundreds of billions of samples for a high-resolution FTLE visualization, which can take up to hundreds of hours to render a single frame on a multi-core CPU. In this paper, we propose a neural representation of the flow map and the FTLE field to reduce the cost of FTLE computation. We demonstrate that a simple multi-layer perceptron (MLP)-based network can accelerate the FTLE computation by up to hundreds of times and speed up the rendering by tens of times, while producing satisfactory rendering results. We also study the impact of the network size, the amount of training, and the predicted property, which may serve as guidance for selecting appropriate network structures.


1 Introduction

Computational fluid dynamics is widely used to simulate flow phenomena in various domains, such as oceanology, aerodynamics, and chemical reactions. Powered by high-performance computing (HPC), scientific simulations generate an overwhelming amount of time-varying flow data, posing great challenges in storing, processing, and understanding the data. For unsteady flows, scientists often study the structures and fields that reveal the Lagrangian flow behavior, such as pathlines (trajectories of particles), the finite-time Lyapunov exponent (FTLE), and Lagrangian coherent structures (LCSs). Among them, the FTLE field is of particular interest, as it describes how nearby particles diverge over time. This allows scientists to focus on important regions, such as coherent flow behaviors and transport barriers (e.g., LCSs).

However, visualizing all the complex and fine-level structures in an FTLE field often involves prohibitive computation cost. These structures can be thin and subtle, so regularly sampling FTLE values along rays is insufficient to capture them. Monte Carlo rendering can preserve these structures in the visualization, but the amount of computation required may increase dramatically: a visualization image at a standard resolution already contains millions of pixels, and each pixel may need thousands of random samples to achieve a smooth rendering. This leads to billions of samples in the FTLE field, where each sample requires tracing six particles for hundreds or even thousands of steps. For example, the MCFTLE approach [1] used 200 to 388 billion FTLE samples to produce impressive FTLE rendering results, which required up to hundreds of hours on a multi-core CPU. Moreover, once the viewing or lighting parameters change, this procedure may need to restart from scratch.

In this paper, we aim to reduce the computation cost of the FTLE field based on one observation: an unsteady field can be densely filled with millions of pathlines to produce a high-resolution FTLE field. Therefore, with hundreds of billions of FTLE samples, much of the computation is likely to be redundant. By leveraging the information in previously traced particles, we may greatly reduce the tracing time and therefore the overall FTLE computation cost. Toward this goal, we design a neural network that “remembers” all samples by approximating the FTLE computation. We use a neural network instead of traditional spatial data structures, as the neural network can naturally focus on complex regions and provide higher accuracy in interpolation [2]. Previous work [3] also demonstrated that neural networks can describe complex functions and transitions. Once trained, our neural network can take the initial location of a sampled particle and predict its location after a certain tracing interval, or it can predict the FTLE value at a sampled location directly. This replaces the costly FTLE computation with relatively fast network inference. Our experiments demonstrate that this strategy can accelerate the Monte Carlo rendering of FTLE fields by tens of times.

Our contributions can be summarized as follows:

(1) We leverage the power of the neural network to accelerate the costly FTLE computation in Monte Carlo rendering.

(2) We propose two strategies (i.e., approximating the flow map and the FTLE field) using a simple yet effective neural network structure.

(3) We study the impact of the network size, the number of training epochs, and the predicted property. This may guide readers in selecting appropriate network structures and hyperparameters.

(4) We demonstrate the effectiveness and efficiency of our approach through experiments.

2 Related work

Our technique is closely related to the computation of the finite-time Lyapunov exponent (FTLE) field, the rendering of the FTLE field, and the neural representation of scientific data. In this section, we will discuss recent work in these three directions. Note that we do not discuss the neural rendering approaches (e.g., NeRF [4]), as we use a neural network to represent and access data and follow a traditional Monte Carlo framework for rendering. Therefore, the neural rendering approaches are less relevant.

2.1 Computation of finite-time Lyapunov exponent fields

An unsteady vector field \(v(\mathbf{x}, t)\) describes the time-varying flow in a certain domain [5, 6]. To understand the Lagrangian behavior of a flow, the trajectories of massless particles are often analyzed. In unsteady vector fields, these trajectories are called pathlines. The pathlines are everywhere tangent to the vector field, so they reflect the flow directions and the movements of particles. The pathline of a particle can be described by the following:

\[\phi_{t}^{\tau}(\mathbf{x}) = \mathbf{x} + \int_{t}^{t+\tau} v\left(\phi_{t}^{s-t}(\mathbf{x}), s\right) \mathrm{d}s,\]

where τ is the duration of movement starting from time t at location x. This function, which maps a particle to its location after a given integration duration, is also known as the flow map.

To understand the Lagrangian characteristics of a flow, especially the difference in behavior among nearby particles, it is common to study how the flow map changes with the seeding location by taking the gradient of the flow map with respect to the location:

\[\nabla \phi_{t}^{\tau}(\mathbf{x}) = \frac{\partial \phi_{t}^{\tau}(\mathbf{x})}{\partial \mathbf{x}},\]

where \(\nabla\) denotes the gradient with respect to the seeding location \(\mathbf{x}\). The gradient of the flow map gives a Jacobian matrix at every point in the domain. Because such a matrix-valued field is hard to interpret directly, it is more practical to study the magnitude of separation using the FTLE:

\[\delta_{t}^{\tau}(\mathbf{x}) = \frac{1}{|\tau|} \ln \sqrt{\lambda_{\max}\left(\left(\nabla \phi_{t}^{\tau}(\mathbf{x})\right)^{\top} \nabla \phi_{t}^{\tau}(\mathbf{x})\right)},\]

where the positive eigenvalue \(\lambda _{\max}\), the largest eigenvalue of the right Cauchy-Green deformation tensor \((\nabla \phi_{t}^{\tau})^{\top} \nabla \phi_{t}^{\tau}\), denotes the (squared) largest magnitude of separation. The ridges of the FTLE field are often used for extracting Lagrangian coherent structures (LCSs). LCSs are the most repelling, attracting, and shearing material lines that form the centerpieces of observed tracer patterns in unsteady dynamical systems, which makes them useful for identifying the Lagrangian properties of the flow around tracer trajectories [6]. LCS and FTLE are powerful tools for analyzing the flow behavior and visualizing the flow characteristics of unsteady vector fields.

However, the computation of the FTLE is costly. The gradient of the flow map at a point x is estimated by tracing three pairs of extremely close particles centered at that point, where each pair of particles provides the finite difference along one axis [7, 8]. The particles are traced by curve integration (typically with the fourth-order Runge–Kutta method), and the cost is high because it is proportional to the integration time τ. To accelerate the FTLE computation, it is crucial to develop robust approaches for approximating the flow map from sampled integral curves or estimating the FTLE values directly. For estimating the FTLE, Garth et al. [9] introduced an adaptive refinement of the flow map computation for fast FTLE computation by Catmull–Rom interpolation. Sadlo et al. [10] presented a method for filtered ridge extraction based on adaptive mesh refinement. Üffinger et al. [11] further extended previous approaches to higher-order approximation. Barakat et al. [12] modeled the flow behavior around automatically detected geometric structures embedded in the flow. Kuhn et al. [13] proposed timeline refinement schemes. For integral curve approximation, hierarchical line integration [14], interpolation based on dynamic partitioning [15] or multi-resolution [16], and edge maps [17] were developed. Barakat et al. [18] presented a technique that coupled the computation and the visualization of salient flow structures at interactive frame rates using a hierarchical representation of the FTLE field, which is adaptively sampled and rendered by ray marching. Different from these methods, our approach uses a neural network to “remember” the previous FTLE (or flow map) samples and interpolate new values in the deep latent space. Therefore, it avoids costly curve integration in the Monte Carlo rendering stage while maintaining a relatively accurate rendering result.
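The six-particle central-difference scheme described above can be sketched in a few lines to make the cost structure concrete. This is a minimal illustration, not the MCFTLE implementation: it assumes the velocity is available as a Python callable `velocity(p, t)` returning a 3-vector, uses fixed-step RK4, and obtains \(\lambda_{\max}\) by power iteration; the helper names `rk4_trace`, `largest_eigenvalue`, and `ftle` are hypothetical.

```python
import math


def rk4_trace(velocity, x, t0, tau, n_steps):
    """Advect one particle from time t0 over duration tau with fixed-step RK4."""
    h = tau / n_steps
    p, t = list(x), t0
    for _ in range(n_steps):
        k1 = velocity(p, t)
        k2 = velocity([p[i] + 0.5 * h * k1[i] for i in range(3)], t + 0.5 * h)
        k3 = velocity([p[i] + 0.5 * h * k2[i] for i in range(3)], t + 0.5 * h)
        k4 = velocity([p[i] + h * k3[i] for i in range(3)], t + h)
        p = [p[i] + h / 6.0 * (k1[i] + 2.0 * k2[i] + 2.0 * k3[i] + k4[i])
             for i in range(3)]
        t += h
    return p


def largest_eigenvalue(m, iters=100):
    """Power iteration; valid because the Cauchy-Green tensor is symmetric PSD."""
    v = [1.0, 1.0, 1.0]  # assumed not orthogonal to the dominant eigenvector
    lam = 0.0
    for _ in range(iters):
        w = [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]
        lam = math.sqrt(sum(c * c for c in w))
        v = [c / lam for c in w]
    return lam


def ftle(velocity, x, t0, tau, n_steps=100, gap=1e-6):
    """FTLE at x from three particle pairs offset by +/- gap along each axis."""
    jac = [[0.0] * 3 for _ in range(3)]  # jac[i][j] = d phi_i / d x_j
    for j in range(3):
        xp, xm = list(x), list(x)
        xp[j] += gap
        xm[j] -= gap
        pp = rk4_trace(velocity, xp, t0, tau, n_steps)
        pm = rk4_trace(velocity, xm, t0, tau, n_steps)
        for i in range(3):
            jac[i][j] = (pp[i] - pm[i]) / (2.0 * gap)
    # Right Cauchy-Green deformation tensor C = J^T J
    cg = [[sum(jac[k][i] * jac[k][j] for k in range(3)) for j in range(3)]
          for i in range(3)]
    return math.log(math.sqrt(largest_eigenvalue(cg))) / abs(tau)
```

For the linear saddle flow \(v = (x, -y, 0)\), the flow map is \((x e^{\tau}, y e^{-\tau}, z)\), so the FTLE equals 1 everywhere, and the sketch reproduces this up to integration and finite-difference error. Every FTLE sample costs six full particle traces of `n_steps` RK4 steps each, which is the work the neural network is meant to replace.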

2.2 Neural representation

Scientific data are usually simulated and stored on grid-based representations. However, as grid-based representations may suffer from high storage and computation costs, researchers have explored different schemes for representing scientific data. Earlier work in this area focused on handcrafted designs such as discrete cosine transforms [19] or wavelet-based representations [20–23]. With these methods, the data can be operated on in the transformed space, so that end users may remove high frequencies while preserving low frequencies for storage efficiency. More recent techniques, such as TAMRESH [24] and TTHRESH [25], employ tensor decomposition for data reduction. However, these methods require careful design of the algorithm, considering the data dimension and coefficient selection.

Inspired by work in image processing, researchers exploited 3D convolution [2] to characterize spatial scientific data. In practice, only sampled data can be effectively encoded, because 3D convolution kernels have many more parameters than their 2D counterparts. However, we also note that different forms of scientific data share a common definition: volume data can be considered a mapping from a spatial-temporal point to a scalar value, regardless of the storage format (i.e., structured or unstructured grids). Neural networks naturally conform to this definition. Lu et al. [26] proposed a neural representation of scalar fields by learning a mapping from data coordinates to scalar values and achieved data compression by controlling the network size and quantizing the weights. Sitzmann et al. [27] leveraged MLPs with periodic activation functions for implicit neural representations, which outperformed ReLU-MLPs. By passing input points through a Fourier feature mapping, Tancik et al. [28] designed an MLP-based network to learn high-frequency functions in low-dimensional problem domains. This approach performs well in representing complex 3D objects and scenes. With neural mapping, the volumetric data can be accessed at any resolution given spatial coordinates.
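To make the Fourier feature idea concrete, a random Fourier feature mapping in the style of [28] can be sketched as follows: coordinates are projected by a Gaussian random matrix \(B\) and replaced by \([\cos(2\pi B\mathbf{v}), \sin(2\pi B\mathbf{v})]\) before entering the MLP. The scale `sigma`, the number of features, and the helper names are illustrative assumptions, not values taken from [28] or from this paper.

```python
import math
import random


def make_fourier_matrix(n_features, in_dim=3, sigma=10.0, seed=0):
    """Gaussian projection matrix B (n_features x in_dim); sigma sets the bandwidth."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, sigma) for _ in range(in_dim)] for _ in range(n_features)]


def fourier_features(v, b):
    """gamma(v) = [cos(2 pi B v), sin(2 pi B v)], used as MLP input instead of v."""
    proj = [2.0 * math.pi * sum(bij * vj for bij, vj in zip(row, v)) for row in b]
    return [math.cos(a) for a in proj] + [math.sin(a) for a in proj]
```

A larger `sigma` lets the downstream MLP fit higher frequencies at the risk of noisier interpolation, which is the trade-off such mappings expose.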

Neural representation provides efficient data representation and speeds up computation. It facilitates downstream tasks such as flow map reconstruction [29], rendering [30], and others. In this paper, we introduce neural representation in the computation and rendering of the FTLE to deliver superior performance in both efficiency and accuracy.

2.3 Monte Carlo rendering

In scientific visualization, ray marching is the most widely used technique to display 3D volumes. However, it may introduce unpredictable bias [31]. To resolve this issue, Monte Carlo rendering was developed to produce unbiased results and exploit the power of parallelization on GPUs [32]. With enough samples, Monte Carlo rendering converges to the exact solution. The intensity of a pixel is computed by integrating the contributions from thousands of light paths [33–35]. Therefore, Monte Carlo rendering is ideal for producing realistic rendering results from scenes with heterogeneous participating media, such as water, steam, smoke, fire, explosions, clouds, and the atmosphere [32].

The FTLE field often contains thin structures, which are likely to be missed in standard ray tracing. Therefore, the FTLE field is often regarded as a heterogeneous medium [1, 36] and rendered by the Monte Carlo method with a single-scattering model [37]. Monte Carlo methods are also generally applicable to the progressive and consistent rendering of integration-based scalar fields [1], where constructing a light path by randomly generating successive scattering events is called free-flight sampling. As an alternative, delta tracking [38] can be adapted to determine the location of a new scattering event and estimate transmittance for an unbiased solution. However, delta tracking is a binary estimator with high variance, which leads to long rendering times or artifacts in the form of bias or noise [39]. Several approaches have been proposed to lower the variance, such as partitioning the medium by kd-trees [32] or grids [40], efficient beam estimates [41–43], efficient distance sampling [44–48], non-exponential transmittance models [49–51], and neural rendering [52, 53]. Our approach differs from these approaches, as it combines neural representation and Monte Carlo rendering. The neural representation provides an accurate description of the complicated flow map or FTLE field, while Monte Carlo rendering retrieves data efficiently through this neural representation and preserves the fine-level structures in the visualization.

3 Approach

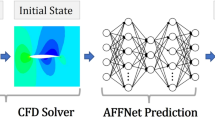

Our approach is motivated by a simple observation: many redundant samples are produced during the Monte Carlo rendering of FTLE fields. Unlike the rendering of an existing volume, sampling an FTLE field is expensive due to the iterative curve integration. To eliminate the costly integration, we leverage a neural network to “remember” the previously generated samples by using them to train the network. During Monte Carlo rendering, we use the trained network to approximate the FTLE value or flow map without computing the integral curve, as shown in Fig. 1. In this section, we explain our Monte Carlo rendering of the FTLE field, the neural network for approximating FTLE and flow maps, and the implementation details.

The workflow of our approach. The conventional Monte Carlo rendering of the FTLE traces particles (blue box), computes the FTLE value (red box), and feeds the FTLE to the renderer. Our approach uses an MLP to either predict the flow map (blue dashed line) and replace the particle tracing, or predict the FTLE value directly (red dashed line) and replace both the particle tracing and the FTLE computation.

3.1 Monte Carlo rendering of FTLE

We treat the rendering of FTLE scalar fields as a simulation of light transport in volumetric data, following MCFTLE [1, 36]. This simulation regards a participating medium as a collection of microscopic particles that either scatter or absorb photons. The chance of different interactions between the ray and the particles is described by a series of coefficients. The absorption coefficient \(\mu _{a}\) and scattering coefficient \(\mu _{s}\) are the probability densities of absorption and scattering events per unit distance, respectively. The extinction coefficient \(\mu _{t} = \mu _{a} + \mu _{s}\) is the sum of the absorption and scattering coefficients, indicating the probability of either a scattering or an absorption event happening per unit distance [31]. The single-scattering albedo \(\alpha = \mu _{s} / \mu _{t}\) denotes the probability that a collision is a scattering event. The transmittance \(T(\mathbf{x},\mathbf{y})\) expresses the fraction of radiance remaining after traveling a finite distance through the medium and is obtained by integrating the extinction coefficient along the ray, i.e., \(T(\mathbf{x}, \mathbf{y})=e^{-\int _{x}^{y} \mu _{t}(\mathrm{t}) \mathrm{dt}}\). Given the above definitions, the light integration through the participating medium [31] can be formalized as:

where ω denotes the light direction, and \(L_{e}(\mathbf{y}, \omega )\) and \(L_{s}(\mathbf{y}, \omega )\) are the emission and scattering radiance, respectively.

For unbiased integration along the ray, we further leverage delta tracking and ratio tracking. Delta tracking introduces fictitious media, represented by the null-collision coefficient \(\mu _{n}(x)\), to homogenize the heterogeneous medium. The local density is set to the combined extinction coefficient \(\overline{\mu} = \mu _{n}(x) + \mu _{t}(x)\), also known as the majorant extinction coefficient. Photons interacting with null-collision particles simply continue in the original ray direction. In our implementation, we use free-flight sampling, which resembles a random walk along the ray. At each step, the algorithm uses a stochastic scheme to determine whether the collision is real or fictitious. Specifically, a random number ξ is generated and compared with the ratio \(\mu _{t}(x)/\overline{\mu}\). When the random number is smaller than the ratio, the collision is considered to be real. This free-flight sampling process repeats until a real collision occurs or the ray exits the domain. Formally, this can be expressed as:

where \(L_{e}\) is the radiance emitted by the light source, \(\boldsymbol{\alpha } (\mathbf{x}_{s} )\) reflects the FTLE value at point \(\mathbf{x}_{s}\), and \(T(\mathbf{x}_{s},\mathbf{x}_{l})\) is the transmittance. Note that we do not consider the emissive medium and surface point in Equation (4).
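The free-flight (delta-tracking) step described above can be sketched as follows. This is a minimal sketch, not the paper's CUDA renderer: it assumes a scalar extinction function `mu_t(t)` along the ray parameter and a constant majorant `mu_bar` that bounds it everywhere; in the FTLE setting each call to `mu_t` would hide an FTLE evaluation (particle tracing or network inference). The function name is hypothetical.

```python
import math
import random


def delta_tracking(mu_t, mu_bar, t_max, rng):
    """Sample the distance to the first real collision along a ray of length t_max.

    mu_t(t): extinction at ray parameter t; mu_bar: majorant, mu_t(t) <= mu_bar.
    Returns the collision distance, or None if the ray leaves the domain.
    """
    t = 0.0
    while True:
        # Tentative collision in the homogenized medium of density mu_bar.
        t -= math.log(1.0 - rng.random()) / mu_bar
        if t >= t_max:
            return None  # escaped without a real collision
        # Real collision with probability mu_t / mu_bar, otherwise a null collision.
        if rng.random() < mu_t(t) / mu_bar:
            return t
```

The probability that the ray escapes equals the transmittance over the segment, which gives a quick sanity check of the sampler. Every null collision still costs one evaluation of `mu_t`, so a loose majorant is expensive when each evaluation requires an FTLE computation.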

For the estimation of the transmittance \(T(\mathbf{x}_{s},\mathbf{x}_{l})\), delta tracking may introduce high variance, because it is a track-length transmittance estimator, a form of Russian roulette [31]. In our application, we use ratio tracking [39], which accumulates a fractional weight instead of returning a binary result that depends on whether a real collision occurs. In this scheme, the tracking does not terminate until it reaches the bounds of the domain. This process can be expressed by the following equation:

Combining Equations (5) and (6), we obtain an unbiased estimate of the volume rendering integral by Monte Carlo rendering (e.g., Fig. 2). Nevertheless, we note that the tentative collisions require the evaluation of spatially varying coefficients. Therefore, although accurate, ratio tracking may lead to a large number of null collisions, especially when the majorant extinction is too loose. Given that accessing FTLE values requires expensive computation, the performance of the tracker also relies on a majorant extinction that tightly bounds the extinction function. This is an optimization direction orthogonal to our approach; typical solutions include spatial subdivision schemes [32, 40].
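Ratio tracking differs from delta tracking only in how tentative collisions are treated. The following minimal sketch of the estimator from [39] assumes an extinction function `mu_t(t)` along the ray segment and a constant majorant `mu_bar`; it is an illustration, not the paper's implementation, and the function name is hypothetical.

```python
import math
import random


def ratio_tracking(mu_t, mu_bar, t_a, t_b, rng):
    """Unbiased estimate of the transmittance between ray parameters t_a and t_b.

    Every tentative collision multiplies the weight by the null-collision
    probability 1 - mu_t / mu_bar; tracking only stops at the segment end.
    """
    t, weight = t_a, 1.0
    while True:
        t -= math.log(1.0 - rng.random()) / mu_bar
        if t >= t_b:
            return weight
        weight *= 1.0 - mu_t(t) / mu_bar
```

Averaging many estimates converges to \(e^{-\int \mu_t}\), as with delta tracking, but each estimate is a fraction in \([0, 1]\) rather than 0 or 1, which lowers the variance at the price of always marching to the end of the segment.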

Single-scattered volumetric light transport simulation in heterogeneous participating media. The gray arrow from the camera shows the sampling ray traced with delta tracking. The white circles denote null-collision events and the blue circle denotes a scattering event. The line from the light bulb shows the transmittance \(T(\mathbf{x}_{s},\mathbf{x}_{l})\) between the light source and the scattering point \(\mathbf{x}_{s}\), estimated by ratio tracking.

3.2 Neural compression for FTLE scalar fields

The time-consuming pathline computation is involved both in delta tracking, when locating a scattering point \(x_{s}\), and in ratio tracking, when estimating the transmittance \(T(x_{s},x_{l})\). Inspired by the recent advancement of neural radiance fields in representing scenes [4, 54], we train a neural network to represent the FTLE field. The network is an MLP whose input is a single spatial location \(\mathbf{p}=(x,y,z)\) and whose output is the corresponding FTLE value \(\delta (\mathbf{p})\) (or the flow map \(\phi _{t}^{\tau}(\mathbf{p})\)) at point p. We use a large number of samples (i.e., pairs of points and the corresponding outcomes) to optimize the network so that it can represent the FTLE or flow map. During Monte Carlo rendering, we feed the 3D coordinates generated by free-flight sampling to the neural network and acquire the scattering albedo \(\alpha (\mathbf{x}_{s})\). As inference with the neural network is much faster than pathline integration, using the network to approximate the FTLE or flow map accelerates the Monte Carlo rendering.

Formally, we consider the FTLE field to be a mapping from a point \(\mathbf{p}=(x,y,z)\) in the domain to a scalar value \(\delta (\mathbf{p})\), the flow map to be a mapping \(\phi (\mathbf{p})\) from a seed point to its advected location, and the neural network to be a function \(F_{\Theta }(\mathbf{p})\), where Θ denotes the parameters of the neural network that characterize the function F. The goal of the neural network training is to produce a function F that approximates the FTLE mapping δ or the flow map ϕ:

\[F_{\Theta}(\mathbf{p}) \approx \delta(\mathbf{p}) \quad \text{or} \quad F_{\Theta}(\mathbf{p}) \approx \phi(\mathbf{p}).\]

The Lagrangian coherent structures correspond to high-frequency components, as they are often detected as the ridges of the FTLE field. To preserve these structures, we apply a positional encoding to a point p before feeding the coordinates to the neural network. This strategy has been shown to be effective for representing data with high-frequency variation in NeRF [4]. In this setting, the input of the neural network becomes:

\[\varphi(\mathbf{p}) = \left(\sin(2^{0}\pi \mathbf{p}), \cos(2^{0}\pi \mathbf{p}), \ldots, \sin(2^{L-1}\pi \mathbf{p}), \cos(2^{L-1}\pi \mathbf{p})\right),\]

where L denotes the maximum level of the frequency encoding. In our implementation, we use \(L=10\). With this input, the approximation process using the neural network can be expressed as \(F_{\Theta}(\varphi (\mathbf{p}))\).
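The data flow of \(F_{\Theta}(\varphi(\mathbf{p}))\) can be illustrated in plain Python. This sketch assumes the NeRF-style sin/cos encoding with \(L = 10\) (60 features for a 3D point, without appending the raw coordinates) and an untrained ReLU MLP with randomly initialized weights; it shows only the shapes and the forward pass, not the paper's PyTorch model or its training, and the helper names are hypothetical.

```python
import math
import random


def positional_encoding(p, num_levels=10):
    """sin/cos of 2^k * pi * c for k = 0..L-1 and each coordinate c of p."""
    out = []
    for k in range(num_levels):
        freq = (2.0 ** k) * math.pi
        for c in p:
            out.append(math.sin(freq * c))
            out.append(math.cos(freq * c))
    return out


def init_mlp(in_dim, width, depth, out_dim=1, seed=0):
    """`depth` hidden ReLU layers of `width` units, then a linear output layer."""
    rng = random.Random(seed)
    dims = [in_dim] + [width] * depth + [out_dim]
    layers = []
    for fan_in, fan_out in zip(dims[:-1], dims[1:]):
        scale = math.sqrt(2.0 / fan_in)  # He initialization, suited to ReLU
        w = [[rng.gauss(0.0, scale) for _ in range(fan_in)] for _ in range(fan_out)]
        layers.append((w, [0.0] * fan_out))
    return layers


def mlp_forward(layers, x):
    """F_Theta(x): ReLU after every layer except the last, which stays linear."""
    for idx, (w, b) in enumerate(layers):
        x = [sum(wi * xi for wi, xi in zip(row, x)) + bi for row, bi in zip(w, b)]
        if idx < len(layers) - 1:
            x = [max(0.0, v) for v in x]
    return x
```

For neural-FTLE the output has one channel (the log-scale FTLE); a flow-map network would use `out_dim=3`. The ordering of the encoded features differs from NeRF's but does not matter to a fully connected layer.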

3.3 Implementation details

We implemented our network using the PyTorch framework. The MLP networks have 8 or 16 fully connected layers with the ReLU activation function and 256 or 512 channels per layer. Please refer to Sect. 4.2 for a discussion of the impact of the network size. We use the Adam optimizer with moment parameters \(\beta _{1} = 0.9\) and \(\beta _{2} = 0.999\). The learning rate is initialized to 0.0001 and decays exponentially over epochs. To handle the high dynamic range of the FTLE field, we use the neural network to predict FTLE in log scale and evaluate the loss in both the log scale and the original linear scale, following Bako et al. [55]. Specifically, we transform the ground truth to log scale using \(\log (x+1)\) and use the neural network to approximate this transformed value. Our loss is the mean squared error (MSE) between the sampled and predicted values at both scales:

where N is the number of samples, \(\hat{y}_{i}\) is the predicted FTLE or flow map, and \({y}_{i}\) is the ground truth. Note that for the linear scale, we only enforce a constraint on the average of predicted values to avoid a global mean shift, as the log scale loss term has already placed local constraints on individual voxels.
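The loss equation itself is not reproduced in this text, so the following is only one plausible reading of the description: a per-sample MSE in log scale plus a linear-scale term that compares only the batch means. Equal weighting of the two terms and the use of a squared difference of means are assumptions, and `ftle_loss` is a hypothetical name.

```python
import math


def ftle_loss(pred_log, target):
    """pred_log: network outputs, i.e. estimates of log(y + 1).
    target: ground-truth FTLE values y in linear scale (y > -1)."""
    n = len(target)
    target_log = [math.log(y + 1.0) for y in target]
    # Log scale: per-sample MSE, a local constraint on every sample.
    log_term = sum((p - t) ** 2 for p, t in zip(pred_log, target_log)) / n
    # Linear scale: compare only the batch means to prevent a global mean shift.
    pred_mean = sum(math.exp(p) - 1.0 for p in pred_log) / n
    target_mean = sum(target) / n
    return log_term + (pred_mean - target_mean) ** 2
```

Because the linear term only sees the mean, large FTLE values on ridges cannot dominate the gradient sample by sample, while the prediction as a whole is still kept from drifting.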

Our implementation leverages the power of GPUs to sample the FTLE field, train the neural network, and perform inference in parallel. Specifically, we implement an offline Monte Carlo FTLE sampler using CUDA to generate the reference images and training data. For the online computation, we use NVIDIA TensorRT for parallel inference inside the renderer to replace the particle tracer. At each step of free-flight sampling, we compute the collision points along the pixel rays using CUDA and store the points in a GPU buffer. Then, we perform inference using TensorRT with the trained neural network and produce the next tracking events in another GPU buffer using CUDA.

4 Results and evaluation

We examine our approach using three unsteady flow datasets under different settings. Specifically, we compare our approach with traditional Monte Carlo rendering [1], which serves as the ground truth. We also compare the results produced by networks of different sizes and with different numbers of training epochs, and compare networks that predict different fields. All results are collected on a single NVIDIA A100 GPU with 80 GB of graphics memory. We denote the network that predicts the FTLE directly as neural-FTLE, and the network that predicts the flow map for conventional FTLE computation as neural-FM. As neural-FTLE outperforms neural-FM in almost all settings, we mainly focus on the performance of neural-FTLE. For each dataset under each setting, we train the network for approximately 1000 epochs until full convergence, which takes 14 to 28 hours. However, we note that far fewer epochs (usually within 100) are required to achieve satisfactory rendering results. In the following, we focus on the timing performance of rendering without further discussing the training time, as the training is performed only once while the rendering needs to be performed many times.

4.1 Datasets

In this section, we describe the datasets with their respective experimental settings and explain how the training data are produced for these datasets.

Double gyre

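The double gyre [6] is a classic 2D unsteady vector field used to evaluate the performance of FTLE calculations, as illustrated in Fig. 3. Following MCFTLE [1], we use the domain \([0,2]\times [0,1]\times [0,1]\) of this time-periodic flow. Note that the velocity field does not change over z and the w-component is fixed at zero because the flow is two-dimensional. We produce the FTLE field with an integration duration \(\tau = 10\). In the experiment, we do not explicitly store the vector field but produce the vectors on the fly using the following equation:

\[
\begin{aligned}
u(x,y,t) &= -\pi A \sin\left(\pi f(x,t)\right) \cos(\pi y),\\
v(x,y,t) &= \pi A \cos\left(\pi f(x,t)\right) \sin(\pi y) \frac{\partial f}{\partial x}(x,t),\\
w &= 0,
\end{aligned}
\]

with \(f(x,t) = a(t)x^{2} + b(t)x\), \(a(t) = \epsilon \sin(\omega t)\), and \(b(t) = 1 - 2\epsilon \sin(\omega t)\), where A, ε, and ω are the amplitude, perturbation, and angular-frequency parameters of the double gyre.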
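Evaluating the double gyre on the fly amounts to a small function. This sketch assumes the standard double gyre form from [6] with the parameter values frequently used in the literature (\(A = 0.1\), \(\epsilon = 0.25\), \(\omega = 2\pi/10\)); the values used in MCFTLE [1] and in these experiments are not stated in this section and may differ. The function name is hypothetical.

```python
import math


def double_gyre(p, t, a=0.1, eps=0.25, omega=2.0 * math.pi / 10.0):
    """Velocity (u, v, w) of the time-periodic double gyre at p = (x, y, z).

    The flow is 2D: the z-coordinate is ignored and w is always zero.
    Default parameters are commonly used literature values (an assumption here).
    """
    x, y = p[0], p[1]
    s = eps * math.sin(omega * t)
    f = s * x * x + (1.0 - 2.0 * s) * x   # f(x, t) = a(t) x^2 + b(t) x
    df_dx = 2.0 * s * x + (1.0 - 2.0 * s)
    u = -math.pi * a * math.sin(math.pi * f) * math.cos(math.pi * y)
    v = math.pi * a * math.cos(math.pi * f) * math.sin(math.pi * y) * df_dx
    return [u, v, 0.0]
```

Computing velocities analytically avoids storing and interpolating a time-varying grid, so the only cost left in each FTLE sample is the particle tracing itself.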

The double gyre dataset rendered by MCFTLE [1] and our approach with 1000 samples per pixel (spp). The rendering results in the green and red rectangles are enlarged for detailed investigation. The first row shows the result of MCFTLE as the ground truth. The second row shows the result of our approach using an MLP network to represent the FTLE field. At a resolution of \(4000 \times 2000\), our approach is 17.04 times faster than MCFTLE while preserving most fine-level structures. The color distributions of the two images (produced by tev) are similar.

Hydrocarbon flame

The hydrocarbon flame [56] is a direct numerical simulation performed for incompressible homogeneous flow with decaying isotropic turbulence. The domain is \([0,599]\times [0,191]\times [0,191]\) and the FTLE integration duration is \(\tau = 11\). As demonstrated in Fig. 4, the flow is more turbulent on the left side and mostly laminar on the right side.

ECMWF

The European Centre for Medium-Range Weather Forecasts (ECMWF) dataset is a simulation of the global weather. In our experiment, we use the daily flow field in October 2018 over the Northern Hemisphere. The domain is \([0,359]\times [0,180]\times [0,36]\) and the integration duration is \(\tau = 11\). The ECMWF dataset exhibits complex Lagrangian coherent structures because of the transport patterns and mixing behavior of atmospheric flows [7], which pose a great challenge for our method to capture.

Training data

We randomly sample hundreds of millions of points in the flow field and compute the corresponding FTLE values. Note that we can reuse the samples from conventional Monte Carlo rendering without creating extra samples. For each sampled point, we generate six particles along the three axes with a very small gap (\(10^{-6}\) in our experiment) and trace the particles to compute their flow maps and the partial derivatives, as depicted in Fig. 1. In this way, we produce one FTLE sample and six flow map samples for each sampled point. The FTLE samples and flow map samples are stored in separate files, where each file contains \(1024^{2}\) samples. During the training stage, we randomly select 100 files for each epoch.
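The bookkeeping of the training data can be sketched as follows. The sketch reads the per-file count as \(1024^{2}\) samples and assumes the 100 files of an epoch are drawn without replacement; the helper names are hypothetical, and the tracing of the six seeds is omitted.

```python
import random

GAP = 1e-6                    # offset between paired particles
SAMPLES_PER_FILE = 1024 ** 2  # samples stored in each training file
FILES_PER_EPOCH = 100         # files drawn at random for every epoch


def offset_particles(x):
    """The six seeds x +/- GAP * e_j; their traced end points give six flow-map samples."""
    seeds = []
    for j in range(3):
        for sign in (1.0, -1.0):
            s = list(x)
            s[j] += sign * GAP
            seeds.append(s)
    return seeds


def epoch_files(num_files, rng):
    """Draw FILES_PER_EPOCH distinct training files for one epoch."""
    return rng.sample(range(num_files), FILES_PER_EPOCH)
```

Under these assumptions, one epoch visits \(100 \times 1024^{2} = 104{,}857{,}600\) samples, so even a 100-epoch run sees on the order of \(10^{10}\) samples.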

4.2 Rendering quality study

Overall performance

We find that our neural-FTLE network produces satisfactory results with a sufficiently large network (16 layers by 512 channels) and sufficient training (1000 epochs). The network generates accurate FTLE values, with PSNRs of 30.02 dB for the double gyre, 23.12 dB for the ECMWF, and 21.62 dB for the hydrocarbon flame datasets. The rendered images are also similar to the ground truth produced by MCFTLE [1], with SSIMs of 0.82 for the double gyre, 0.78 for the ECMWF, and 0.8657 for the hydrocarbon flame datasets.
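For reference, a minimal PSNR sketch, assuming the usual definition \(10\log_{10}(R^{2}/\mathrm{MSE})\) in decibels; the peak range \(R\) (`data_range`) used for FTLE values is not stated in this section, so it is left as a parameter.

```python
import math


def psnr(pred, ref, data_range):
    """Peak signal-to-noise ratio in dB between two equally long sequences."""
    mse = sum((p - r) ** 2 for p, r in zip(pred, ref)) / len(ref)
    if mse == 0.0:
        return math.inf  # identical inputs
    return 10.0 * math.log10(data_range ** 2 / mse)
```

Because PSNR depends on the chosen peak range, values are comparable across networks on one dataset but not necessarily across datasets with different FTLE ranges.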

Qualitative comparison

We then study the rendering results perceptually for a qualitative comparison. For the double gyre dataset, the rendering result produced by our neural-FTLE and the ground truth are nearly identical, as shown in Fig. 3. The difference in their color histograms is barely observable as well. When magnifying the regions in the red and green rectangles, we find that the fine-level structures (e.g., the blue, orange, and brown layers) are also quite similar. Nevertheless, the ground truth appears smoother, which may partly explain the lower SSIM.

For the ECMWF dataset, we also find that the rendering results are similar to the ground truth, as displayed in the last row of Fig. 5. As the Lagrangian behavior of the ECMWF dataset is complicated, both visualizations exhibit many fine-level structures. The structures in the two visualizations are similar in shape, although the color may shift slightly, which lowers the PSNR. One possible explanation is that the FTLE field contains many more samples with small values, which dominate the training process, so the neural network tends to predict smaller FTLE values. These errors accumulate during Monte Carlo sampling, leading to a bluer visual impression. Meanwhile, although the average shifts, the maximum is less affected. Therefore, we can still perceive the most important structures (i.e., the ridges in the FTLE field) in both visualizations. The rendering results of the hydrocarbon flame dataset lead to similar observations: the structures are similar, but the colors in our result lean toward blue.

Impact of training epochs

We study the validation losses over epochs for networks of four different sizes on the three datasets, as shown in Fig. 6. All loss curves exhibit a similar pattern: the loss drops steeply at the beginning and then becomes mostly flat. The elbow point usually appears within the first 100 epochs. The loss curve for the hydrocarbon flame dataset fluctuates more than those of the other two datasets, but still exhibits a similar pattern in general.

Then, we study the impact of the number of epochs qualitatively using the ECMWF dataset, as shown in Fig. 5. Although the validation loss only decreases from 0.115 (in the first epoch) to 0.062 (in the 1000th epoch), the difference in the rendering results is striking. The change is especially dramatic in the first 100 epochs, before the validation loss curve becomes flat. After 100 epochs, the network can produce the rough patterns of the FTLE field, although the structures appear blurred, as illustrated in Fig. 5(d). With further training, the final network produces structures with particularly sharp edges, as illustrated in Fig. 5(e). These structures are similar to the ground truth in Fig. 5(f).

Impact of network size

In general, we find that increasing the size of the neural network improves both representation power and rendering quality. In Fig. 6, the width of the network (i.e., the number of channels) appears to play the major role. The gray and yellow loss curves (corresponding to the wider networks with 512 channels) lie below the blue and orange curves, indicating that the wider networks have smaller validation losses. This is especially easy to observe for the double gyre dataset. For the hydrocarbon flame dataset, the trend is not clear in the line chart, so we further verify it with the rendering results and the PSNR below. Increasing the depth of the network (i.e., the number of layers) also enhances performance: the orange curve (\(16\times 256\)) appears below the blue curve (\(8 \times 256\)), and the yellow curve (\(16\times 512\)) below the orange curve (\(16\times 256\)). However, the impact of the depth seems to be smaller than that of the width.

We then investigate the rendering results produced by networks of different sizes on the hydrocarbon flame dataset, as illustrated in Fig. 4. The visualizations show similar structures, but those generated by the wider networks (in the second row) appear smoother. The PSNR and SSIM show the same trend. The wider networks have higher PSNRs (22.45 and 22.57 for 512 channels, compared to 21.62 and 21.74 for 256 channels) and higher SSIMs (0.888 and 0.887 for 512 channels, compared to 0.866 and 0.871 for 256 channels). Similarly, the deeper networks perform better, but the improvement is marginal compared to that from increasing the width. This is expected, as the number of parameters of an MLP grows linearly with its depth but quadratically with its width.
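To make the last point concrete, the sketch below counts the weights and biases of a fully connected MLP. It assumes `depth` hidden layers of equal `width`, with one input and one output linear layer; `in_dim` (the size of the possibly encoded input) and `out_dim` are illustrative placeholders, not necessarily the configuration used in the experiments above.

```python
def mlp_param_count(in_dim: int, width: int, depth: int, out_dim: int = 1) -> int:
    """Count weights and biases of a fully connected MLP.

    Assumed (hypothetical) layout: in_dim -> width, then (depth - 1)
    hidden layers width -> width, then width -> out_dim.
    """
    if depth < 1:
        raise ValueError("depth must be at least 1")
    first = in_dim * width + width                  # input layer
    hidden = (depth - 1) * (width * width + width)  # width x width blocks dominate
    last = width * out_dim + out_dim                # output layer
    return first + hidden + last


if __name__ == "__main__":
    base = mlp_param_count(3, 256, 8)
    for depth, width in [(8, 256), (16, 256), (8, 512), (16, 512)]:
        n = mlp_param_count(3, width, depth)
        print(f"{depth:>2} x {width}: {n:>9,d} params ({n / base:.2f}x)")
```

With `in_dim=3`, doubling the depth of the \(8\times 256\) network multiplies the count by about 2.14, whereas doubling its width multiplies it by about 3.99.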

Impact of the predicted property

We find that neural-FTLE outperforms neural-FM in terms of FTLE rendering results in almost all cases. For example, Fig. 7 shows that neural-FM also produces Lagrangian coherent structures (the red voxels) similar to those in Fig. 3. However, the FTLE values computed from the predicted flow map appear to suffer from a global mean shift towards large values. A possible explanation is that the flow map varies very sharply across LCSs (e.g., separation lines), which makes it challenging for the neural network to approximate. In addition, errors in the flow map may be magnified when the FTLE is computed from its spatial gradient, which also leads to obvious visual differences in the rendering results. Therefore, we conclude that neural-FTLE produces superior results for FTLE rendering. Nevertheless, a neural representation of the flow map may benefit a range of downstream tasks beyond FTLE computation and rendering.
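The magnification follows from the standard way the FTLE is obtained from a flow map \(\Phi\): the gradient \(\nabla\Phi\) is estimated by finite differences, the right Cauchy-Green tensor \(C = \nabla\Phi^{\top}\nabla\Phi\) is formed, and \(\sigma = \frac{1}{|\tau|}\ln\sqrt{\lambda_{\max}(C)}\). The sketch below assumes the flow map is available as a Python callable (for example, a wrapper around a trained network); the names `ftle_from_flow_map`, `flow_map`, and the step `h` are hypothetical. Because each central difference divides by \(2h\), an error of size \(\delta\) in the predicted positions can perturb the gradient by up to about \(\delta/h\).

```python
import numpy as np


def ftle_from_flow_map(flow_map, x, tau, h=1e-3):
    """FTLE at point x from a callable flow map (assumed interface: R^d -> R^d)."""
    x = np.asarray(x, dtype=float)
    dim = x.size
    grad = np.empty((dim, dim))
    for j in range(dim):
        offset = np.zeros(dim)
        offset[j] = h
        # Central difference: column j of the flow-map Jacobian.
        # Any error in flow_map is divided by 2h here, hence amplified for small h.
        grad[:, j] = (flow_map(x + offset) - flow_map(x - offset)) / (2.0 * h)
    cauchy_green = grad.T @ grad                    # right Cauchy-Green tensor
    lam_max = np.linalg.eigvalsh(cauchy_green)[-1]  # eigenvalues come in ascending order
    return np.log(np.sqrt(lam_max)) / abs(tau)


if __name__ == "__main__":
    # Linear saddle flow map over tau = 1: stretches by e^2 along x.
    stretch = np.diag([np.exp(2.0), np.exp(-2.0)])
    print(ftle_from_flow_map(lambda p: stretch @ p, [0.3, 0.7], tau=1.0))  # approximately 2.0
```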

4.3 Efficiency study

Time efficiency

We compare the time for FTLE computation and Monte Carlo rendering using neural inference versus particle tracing (RK4, as used in MCFTLE [1]), as listed in Table 1. We only discuss the timings of neural-FTLE, as it is used to produce our results. For FTLE computation, the cost of neural inference depends only on the size of the network and is independent of the dataset, whereas the cost of particle tracing varies across datasets. In our experiment, neural inference provides a speedup ranging from 46.4 to 270.9 times, depending on the network size and the dataset. For Monte Carlo rendering, the speedup ranges from 10.2 to 135.3 times, depending on the fraction of the rendering time spent on FTLE computation. As the double gyre dataset is relatively simple, fewer samples (i.e., FTLE evaluations) are needed in Monte Carlo rendering. Therefore, FTLE computation accounts for a smaller portion of the total rendering time, leading to a smaller speedup. For more complex datasets, the speedup tends to be higher. For example, on the ECMWF dataset, the largest network is still 50.5 times faster than the conventional approach.
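The dependence of the rendering speedup on the share of time spent in FTLE evaluation is an instance of Amdahl's law. If a fraction \(p\) of the conventional rendering time is spent computing FTLE and that part is accelerated by a factor \(s\), the overall speedup is \(1/((1-p) + p/s)\). The sketch below evaluates this relation and inverts it; the inputs in the example are hypothetical and are not taken from Table 1.

```python
def overall_speedup(p: float, s: float) -> float:
    """Amdahl's law: a fraction p of the work is accelerated by a factor s."""
    if not 0.0 <= p <= 1.0 or s <= 0.0:
        raise ValueError("need 0 <= p <= 1 and s > 0")
    return 1.0 / ((1.0 - p) + p / s)


def implied_fraction(overall: float, s: float) -> float:
    """Invert Amdahl's law: the fraction p that yields a given overall speedup."""
    return (1.0 - 1.0 / overall) / (1.0 - 1.0 / s)


if __name__ == "__main__":
    s = 200.0  # hypothetical FTLE speedup from neural inference
    for p in (0.90, 0.99, 0.999):
        print(f"FTLE share {p:.3f} -> rendering speedup {overall_speedup(p, s):6.1f}x")
```

With a 200-fold faster FTLE evaluation, rendering becomes only about 9.6 times faster when 90% of the time goes to FTLE, but about 67 times faster at 99%, which is consistent with simpler datasets showing smaller rendering speedups.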

Storage efficiency

Neural representation also reduces the space required to store the flow field, as shown in Table 2. In our experiment, the smallest network (\(8\times 256\)) requires 2.47 MB of storage and the largest (\(16 \times 512\)) requires 16.46 MB, both much smaller than the original datasets (2278.13 MB for hydrocarbon flame and 1995.74 MB for ECMWF). The only exceptions are synthetic datasets such as double gyre, whose vectors can be computed on the fly without any storage.
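The compression ratios implied by the sizes reported above can be checked with a few lines of arithmetic; the snippet only reuses those figures.

```python
# Sizes in MB as reported in the text (Table 2).
networks = {"8x256": 2.47, "16x512": 16.46}
datasets = {"hydrocarbon flame": 2278.13, "ECMWF": 1995.74}

for data_name, data_mb in datasets.items():
    for net_name, net_mb in networks.items():
        ratio = data_mb / net_mb  # how many times smaller the network is
        print(f"{data_name:>17} vs {net_name:>6}: {ratio:7.1f}x smaller")
```

The ratios range from about 121 (ECMWF with the \(16\times 512\) network) to about 922 (hydrocarbon flame with the \(8\times 256\) network).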

4.4 Discussion

First, using neural networks as surrogate models for the prohibitively expensive computation of physical attributes may provide significant performance gains. Besides FTLE computation, a similar example is the use of a convolutional neural network (CNN) to approximate the instantaneous vorticity deviation (IVD) for vortex detection [57]. However, we should also make sure that the reduced inference cost justifies the additional training cost. Additionally, we should be cautious about physical constraints. For example, we observe a clear gap between neural-FTLE and neural-FM, as the neural network is completely unaware of the connection between these two fields. By enforcing physical constraints, we may improve the generalizability of the network across physical attributes. In the future, we would like to further study the performance of physics-informed neural networks on FTLE rendering and similar tasks.
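Whether the training cost pays off can be estimated with a simple break-even count: if training takes \(T_{\text{train}}\) and each rendered frame saves \(T_{\text{conv}} - T_{\text{neural}}\), the network is worthwhile once at least \(T_{\text{train}} / (T_{\text{conv}} - T_{\text{neural}})\) frames are rendered. The function below is a hypothetical helper, and the timings in the example are made up for illustration.

```python
import math


def break_even_frames(train_hours: float, conv_hours: float, neural_hours: float) -> int:
    """Smallest number of frames for which training plus neural rendering
    is no slower than conventional rendering (all times in hours)."""
    saving = conv_hours - neural_hours
    if saving <= 0:
        raise ValueError("neural rendering is not faster; training never pays off")
    return math.ceil(train_hours / saving)


if __name__ == "__main__":
    # Hypothetical timings: 20 h of training, 5 h vs. 0.5 h per frame.
    print(break_even_frames(20.0, 5.0, 0.5))  # 5
```

With these made-up numbers the network pays off from the fifth frame onward; for frames that take hundreds of hours with particle tracing, a single frame may already suffice.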

Second, it is critical to select appropriate targets for the surrogate models. On the one hand, errors may accumulate during computation, so predicting the final results directly may be more practical. For example, our attempt at neural-FM was unsuccessful. A possible explanation is that errors in the flow map may be magnified during the FTLE computation in the absence of any physical constraint. In contrast, predicting the FTLE values directly bypasses this limitation. On the other hand, the final results are often task-specific, which may prevent the trained network from being adapted to other tasks. Neural-FM may benefit a range of downstream tasks beyond FTLE computation, whereas neural-FTLE can only be used for this specific task. Similarly, predicting FTLE values for Monte Carlo rendering may lead to a lower PSNR than predicting the rendering results directly. However, a network that predicts the visualization results may fail to produce results for other view angles, where the integration rays are completely different.

Third, in our current experiment, we only examine the performance of predicting FTLE values with a fixed integration interval τ. This greatly reduces the amount of data to be learned, but it also means that the network needs to be retrained whenever the interval changes. Although it is possible to include the interval as an input parameter of the neural network, the performance may not be ideal when the space to learn is overly complicated: a small network may not be able to describe the complex physical phenomenon, while a large network may lead to longer inference time and thus longer rendering time. In addition, using large networks to approximate complex transformations may require more sophisticated training strategies. For this specific task (i.e., FTLE rendering), we recommend training multiple small networks for different intervals. These networks may share particle trajectories as training data, as well as initial parameters, to reduce the training cost.
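One way such sharing could work is sketched below, assuming trajectories are traced once from the seed points at \(t_0\) and stored at a fixed time step `dt`. Since \(\Phi_{t_0}^{\tau}(\mathbf{x})\) is simply the position reached at \(t_0+\tau\), flow-map samples (from which FTLE targets can then be derived) for every interval that is a multiple of `dt` can be sliced from the same trajectories, and a new network can be warm-started from the one trained for the nearest interval. The function names and the array layout are hypothetical.

```python
import numpy as np


def flow_map_samples(trajectories: np.ndarray, dt: float, taus):
    """Slice flow-map training pairs for several intervals from shared trajectories.

    trajectories: shape (num_seeds, num_steps, dim); entry [:, k] holds the
    positions at time t0 + k * dt (assumed layout).
    Returns the seed positions and a dict mapping tau -> positions at t0 + tau.
    """
    seeds = trajectories[:, 0, :]
    targets = {}
    for tau in taus:
        k = int(round(tau / dt))
        if not np.isclose(k * dt, tau) or not 0 < k < trajectories.shape[1]:
            raise ValueError(f"tau={tau} is not a stored multiple of dt={dt}")
        targets[tau] = trajectories[:, k, :]
    return seeds, targets


def nearest_trained_tau(trained_taus, tau):
    """Pick the already-trained interval closest to tau for warm-starting."""
    return min(trained_taus, key=lambda t: abs(t - tau))


if __name__ == "__main__":
    # Toy trajectories: uniform motion x(t) = x0 + v * t, traced once.
    dt, steps = 0.5, 9
    x0 = np.array([[0.0, 0.0], [1.0, 2.0]])
    v = np.array([[1.0, 0.0], [0.0, -1.0]])
    times = dt * np.arange(steps)
    traj = x0[:, None, :] + v[:, None, :] * times[None, :, None]
    seeds, targets = flow_map_samples(traj, dt, taus=[1.0, 2.0, 4.0])
    print(targets[2.0])                          # positions at t0 + 2.0
    print(nearest_trained_tau([1.0, 4.0], 3.0))  # 4.0
```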

5 Conclusions and future work

We propose a neural representation to accelerate the Monte Carlo rendering of finite-time Lyapunov exponent fields. Our approach uses MLPs to represent the FTLE field or the flow map as a continuous implicit function, eliminating the time-consuming particle tracing during rendering. Our approach accelerates the FTLE computation by up to hundreds of times and the entire Monte Carlo rendering by tens of times. The neural network also reduces the storage by two orders of magnitude. We compare predicting the FTLE directly against an alternative that predicts the flow map, and find that predicting the FTLE directly yields superior rendering results.

In the future, we would like to explore the following directions. First, we would like to further improve the neural representation in general. Our current approach uses frequency encoding to capture high-frequency signals, which does not take their spatial distribution into account. We would like to leverage spatial hashing, space partitioning, and parametric encoding to better fit the data characteristics. Second, we want to further reduce the network inference time by reducing the size of the networks. To maintain the representation quality, we may replace a single large network with multiple smaller networks. Third, we would like to use neural networks to accelerate the tracking step of Monte Carlo rendering. Specifically, a neural network might learn how to sample along a ray, reducing the number of samples needed to produce a high-fidelity visualization.
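For reference, a common form of frequency encoding (the NeRF-style positional encoding of Mildenhall et al.) maps each input coordinate \(p\) to \(\sin(2^k\pi p)\) and \(\cos(2^k\pi p)\) for \(k = 0,\dots,L-1\). The sketch below assumes this form with a hypothetical default of \(L=6\); the exact encoding and number of frequencies used in this work may differ. Every frequency is applied uniformly over the whole domain, which is the limitation that spatially adaptive encodings, such as the multiresolution hash encoding of Müller et al., aim to address.

```python
import numpy as np


def frequency_encoding(x, num_freqs=6, include_input=True):
    """NeRF-style positional encoding (assumed form; num_freqs is hypothetical).

    x: array of shape (n, d). Returns shape (n, d + 2 * d * num_freqs)
    if include_input, else (n, 2 * d * num_freqs).
    """
    x = np.atleast_2d(np.asarray(x, dtype=float))                     # (n, d)
    freqs = np.pi * 2.0 ** np.arange(num_freqs)                       # pi, 2pi, 4pi, ...
    angles = x[:, :, None] * freqs                                    # (n, d, L)
    enc = np.concatenate([np.sin(angles), np.cos(angles)], axis=-1)   # (n, d, 2L)
    enc = enc.reshape(x.shape[0], -1)                                 # flatten per sample
    return np.concatenate([x, enc], axis=-1) if include_input else enc


if __name__ == "__main__":
    pts = np.random.default_rng(0).uniform(0.0, 1.0, size=(4, 3))  # (x, y, z) samples
    print(frequency_encoding(pts).shape)  # (4, 39)
```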

Availability of data and materials

All data generated or analyzed during this study are included in this published article.

References

Günther, T., Kuhn, A., & Theisel, H. (2016). MCFTLE: Monte Carlo rendering of finite-time Lyapunov exponent fields. Computer Graphics Forum, 35(3), 381–390.

Han, J., & Wang, C. (2020). SSR-TVD: spatial super-resolution for time-varying data analysis and visualization. IEEE Transactions on Visualization and Computer Graphics, 28(6), 2445–2456.

Han, J., Zheng, H., Xing, Y., Chen, D. Z., & Wang, C. (2020). V2V: a deep learning approach to variable-to-variable selection and translation for multivariate time-varying data. IEEE Transactions on Visualization and Computer Graphics, 27(2), 1290–1300.

Mildenhall, B., Srinivasan, P. P., Tancik, M., Barron, J. T., Ramamoorthi, R., & Ng, R. (2020). NeRF: Representing scenes as neural radiance fields for view synthesis. In A. Vedaldi, H. Bischof, T. Brox et al. (Eds.), Proceedings of the 16th European conference on computer vision (pp. 405–421). Berlin: Springer.

Pobitzer, A., Peikert, R., Fuchs, R., Schindler, B., Kuhn, A., Theisel, H., et al. (2011). The state of the art in topology-based visualization of unsteady flow. Computer Graphics Forum, 30(6), 1789–1811.

Shadden, S. C., Lekien, F., & Marsden, J. E. (2005). Definition and properties of Lagrangian coherent structures from finite-time Lyapunov exponents in two-dimensional aperiodic flows. Physica D: Nonlinear Phenomena, 212(3–4), 271–304.

Haller, G., & Yuan, G. (2000). Lagrangian coherent structures and mixing in two-dimensional turbulence. Physica D: Nonlinear Phenomena, 147(3–4), 352–370.

Haller, G. (2001). Distinguished material surfaces and coherent structures in three-dimensional fluid flows. Physica D: Nonlinear Phenomena, 149(4), 248–277.

Garth, C., Gerhardt, F., Tricoche, X., & Hagen, H. (2007). Efficient computation and visualization of coherent structures in fluid flow applications. IEEE Transactions on Visualization and Computer Graphics, 13(6), 1464–1471.

Sadlo, F., & Peikert, R. (2007). Efficient visualization of Lagrangian coherent structures by filtered AMR ridge extraction. IEEE Transactions on Visualization and Computer Graphics, 13(6), 1456–1463.

Üffinger, M., Sadlo, F., Kirby, M., Hansen, C. D., & Ertl, T. (2012). FTLE computation beyond first-order approximation. In C. Andujar & E. Puppo (Eds.), The 33rd annual conference of the European association for computer graphics, eurographics 2012-short papers (pp. 61–64). Eindhoven: The Eurographics Association.

Barakat, S. S., & Tricoche, X. (2013). Adaptive refinement of the flow map using sparse samples. IEEE Transactions on Visualization and Computer Graphics, 19(12), 2753–2762.

Kuhn, A., Engelke, W., Rössl, C., Hadwiger, M., & Theisel, H. (2014). Time line cell tracking for the approximation of Lagrangian coherent structures with subgrid accuracy. Computer Graphics Forum, 33(1), 222–234.

Hlawatsch, M., Sadlo, F., & Weiskopf, D. (2010). Hierarchical line integration. IEEE Transactions on Visualization and Computer Graphics, 17(8), 1148–1163.

Chandler, J., Obermaier, H., & Joy, K. I. (2014). Interpolation-based pathline tracing in particle-based flow visualization. IEEE Transactions on Visualization and Computer Graphics, 21(1), 68–80.

Agranovsky, A., Obermaier, H., Garth, C., & Joy, K. I. (2015). A multi-resolution interpolation scheme for pathline based Lagrangian flow representations. In D. L. Kao, M. C. Hao, M. A. Livingston, & T. Wischgoll (Eds.), Visualization and data analysis 2015 (Article No. 93970K), Bellingham: SPIE.

Bhatia, H., Jadhav, S., Bremer, P.-T., Chen, G., Levine, J. A., Nonato, L. G., & Pascucci, V. (2011). Flow visualization with quantified spatial and temporal errors using edge maps. IEEE Transactions on Visualization and Computer Graphics, 18(9), 1383–1396.

Barakat, S. S., Garth, C., & Tricoche, X. (2012). Interactive computation and rendering of finite-time Lyapunov exponent fields. IEEE Transactions on Visualization and Computer Graphics, 18(8), 1368–1380.

Yeo, B.-L., & Liu, B. (1995). Volume rendering of DCT-based compressed 3D scalar data. IEEE Transactions on Visualization and Computer Graphics, 1(1), 29–43.

Guthe, S., Wand, M., Gonser, J., & Straßer, W. (2002). Interactive rendering of large volume data sets. In The 13th IEEE visualization conference (pp. 53–60). Los Alamitos: IEEE.

Ihm, I., & Park, S. (1999). Wavelet-based 3D compression scheme for interactive visualization of very large volume data. Computer Graphics Forum, 18(1), 3–15.

Muraki, S. (1993). Volume data and wavelet transforms. IEEE Computer Graphics and Applications, 13(4), 50–56.

Woodring, J., Mniszewski, S., Brislawn, C., DeMarle, D., & Ahrens, J. (2011). Revisiting wavelet compression for large-scale climate data using JPEG 2000 and ensuring data precision. In D. H. Rogers & C. T. Silva (Eds.), The 1st IEEE symposium on large-scale data analysis and visualization 2011, LDAV 2011 (pp. 31–38). Los Alamitos: IEEE.

Suter, S. K., Makhynia, M., & Pajarola, R. (2013). TAMRESH: tensor approximation multiresolution hierarchy for interactive volume visualization. Computer Graphics Forum, 32(3), 151–160.

Ballester-Ripoll, R., Lindstrom, P., & Pajarola, R. (2020). TTHRESH: tensor compression for multidimensional visual data. IEEE Transactions on Visualization and Computer Graphics, 26(9), 2891–2903.

Lu, Y., Jiang, K., Levine, J. A., & Berger, M. (2021). Compressive neural representations of volumetric scalar fields. Computer Graphics Forum, 40(3), 135–146.

Sitzmann, V., Martel, J., Bergman, A., Lindell, D., & Wetzstein, G. (2020). Implicit neural representations with periodic activation functions. In H. Larochelle, M. Ranzato, R. Hadsell et al. (Eds.), Advances in neural information processing systems 33: annual conference on neural information processing systems 2020 (NeurIPS 2020) (pp. 7462–7473).

Tancik, M., Srinivasan, P., Mildenhall, B., Fridovich-Keil, S., Raghavan, N., Singhal, U., et al. (2020). Fourier features let networks learn high frequency functions in low dimensional domains. Advances in Neural Information Processing Systems, 33, 7537–7547.

Sahoo, S., Lu, Y., & Berger, M. (2022). Neural Flow Map Reconstruction. Computer Graphics Forum, 41(3), 391–402.

Yariv, L., Gu, J., Kasten, Y., & Lipman, Y. (2021). Volume rendering of neural implicit surfaces. In M. Ranzato, A. Beygelzimer, P. S. Liang et al. (Eds.), Advances in neural information processing systems 34: annual conference on neural information processing systems 2021 (NeurIPS 2021) (pp. 4805–4815).

Novák, J., Georgiev, I., Hanika, J., & Jarosz, W. (2018). Monte Carlo methods for volumetric light transport simulation. Computer Graphics Forum, 37(2), 551–576.

Yue, Y., Iwasaki, K., Chen, B.-Y., Dobashi, Y., & Nishita, T. (2010). Unbiased, adaptive stochastic sampling for rendering inhomogeneous participating media. ACM Transactions on Graphics, 29(6), 1–8.

Chandrasekhar, S. (1960). Radiative transfer. New York: Dover.

Kajiya, J. T. (1986). The rendering equation. In D. C. Evans & R. J. Athay (Eds.), Proceedings of the 13th annual conference on computer graphics and interactive techniques, (SIGGRAPH 1986) (pp. 143–150). New York: SIGGRAPH.

Veach, E. (1997). Robust Monte Carlo methods for light transport simulation. Ph.D. dissertation, Stanford University.

Baeza Rojo, I., Gross, M., & Günther, T. (2019). Accelerated Monte Carlo rendering of finite-time Lyapunov exponents. IEEE Transactions on Visualization and Computer Graphics, 26(1), 708–718.

Max, N. (1995). Optical models for direct volume rendering. IEEE Transactions on Visualization and Computer Graphics, 1(2), 99–108.

Woodcock, E., Murphy, T., Hemmings, P., & Longworth, S. (1965). Techniques used in the GEM code for Monte Carlo neutronics calculations in reactors and other systems of complex geometry. In Proceedings of the conference on applications of computing methods to reactor problems (pp. 1–23). Lemont: Argonne National Laboratory.

Novák, J., Selle, A., & Jarosz, W. (2014). Residual ratio tracking for estimating attenuation in participating media. ACM Transactions on Graphics, 33(6), 1–11.

Szirmay-Kalos, L., Tóth, B., & Magdics, M. (2011). Free path sampling in high resolution inhomogeneous participating media. Computer Graphics Forum, 30(1), 85–97.

Novák, J., Engelhardt, T., & Dachsbacher, C. (2011). Screen-space bias compensation for interactive high-quality global illumination with virtual point lights. In M. Garland & R. Wang (Eds.), Symposium on interactive 3D graphics and games (pp. 119–124). New York: ACM.

Novák, J., Nowrouzezahrai, D., Dachsbacher, C., & Jarosz, W. (2012). Virtual ray lights for rendering scenes with participating media. ACM Transactions on Graphics, 31(4), Article No. 60.

Bitterli, B., & Jarosz, W. (2017). Beyond points and beams: higher-dimensional photon samples for volumetric light transport. ACM Transactions on Graphics, 36(4), Article No. 112.

Kulla, C., & Fajardo, M. (2012). Importance sampling techniques for path tracing in participating media. Computer Graphics Forum, 31(4), 1519–1528.

Marco, J., Jarabo, A., Jarosz, W., & Gutierrez, D. (2018). Second-order occlusion-aware volumetric radiance caching. ACM Transactions on Graphics, 37(2), Article No. 20.

Kutz, P., Habel, R., Li, Y. K., & Novák, J. (2017). Spectral and decomposition tracking for rendering heterogeneous volumes. ACM Transactions on Graphics, 36(4), Article No. 111.

Miller, B., Georgiev, I., & Jarosz, W. (2019). A null-scattering path integral formulation of light transport. ACM Transactions on Graphics, 38(4), 1–13.

Lin, D., Wyman, C., & Yuksel, C. (2021). Fast volume rendering with spatiotemporal reservoir resampling. ACM Transactions on Graphics, 40(6), Article No. 279.

Bitterli, B., Ravichandran, S., Müller, T., Wrenninge, M., Novák, J., Marschner, S., & Jarosz, W. (2018). A radiative transfer framework for non-exponential media. ACM Transactions on Graphics, 37(6), Article No. 225.

Vicini, D., Jakob, W., & Kaplanyan, A. (2021). A non-exponential transmittance model for volumetric scene representations. ACM Transactions on Graphics, 40(4), Article No. 136.

Kettunen, M., d’Eon, E., Pantaleoni, J., & Novák, J. (2021). An unbiased ray-marching transmittance estimator. ACM Transactions on Graphics, 40(4), Article No. 137.

Zhang, C., Yu, Z., & Zhao, S. (2021). Path-space differentiable rendering of participating media. ACM Transactions on Graphics, 40(4), Article No. 137.

Nimier-David, M., Müller, T., Keller, A., & Jakob, W. (2022). Unbiased inverse volume rendering with differential trackers. ACM Transactions on Graphics, 41(4), Article No. 44.

Müller, T., Evans, A., Schied, C., & Keller, A. (2022). Instant neural graphics primitives with a multiresolution hash encoding. ACM Transactions on Graphics, 41(4), Article No. 102.

Bako, S., Vogels, T., McWilliams, B., Meyer, M., Novák, J., Harvill, A., et al. (2017). Kernel-predicting convolutional networks for denoising Monte Carlo renderings. ACM Transactions on Graphics, 36(4), Article No. 97.

Kim, S. H., Huh, K. Y., & Bilger, R. W. (2002). Second-order conditional moment closure modeling of local extinction and reignition in turbulent non-premixed hydrocarbon flames. Proceedings of the Combustion Institute, 29(2), 2131–2137.

Deng, L., Wang, Y., Liu, Y., Wang, F., Li, S., & Liu, J. (2019). A CNN-based vortex identification method. Journal of Visualization, 22(1), 65–78.

Funding

This work is supported by National Key R&D Program of China (No. 2021YFB0300103), and the National Natural Science Foundation of China (Nos. 61902446, 62172456, and 91937302).

Author information

Contributions

All authors contributed to the study conception and design. YX prepared the material and collected the experiment data. All authors contributed to the analysis of experiment results and the first draft equally. They all read and approved the final manuscript.

Ethics declarations

Competing interests

The authors have no financial or proprietary interests in any material discussed in this article.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Xi, Y., Luan, W. & Tao, J. Neural Monte Carlo rendering of finite-time Lyapunov exponent fields. Vis. Intell. 1, 10 (2023). https://doi.org/10.1007/s44267-023-00014-x

DOI: https://doi.org/10.1007/s44267-023-00014-x