Abstract

Governments are issuing regulations and laws that require companies to protect the personal data they collect and process. In Brazil, the federal government sanctioned the General Personal Data Protection Law, which defines personal and sensitive data associated with Brazilian citizens. One barrier to compliance is identifying where personal data is stored within a company's infrastructure, particularly personal data embedded in unstructured documents. Named Entity Recognition and Classification (NERC) can support companies in this task through supervised learning models that identify personal data. In this study, we designed an experiment to evaluate machine learning-based NERC using BERT and LSTM approaches to recognize personal data in the Brazilian context. We built a generic training corpus from online documents and trained two models for each approach, one on the original corpus and another on a lowercased version of it. The study also assessed relation extraction to differentiate personal entities from others. For the NERC and relation extraction evaluations, we built a testing corpus comprising documents from two organizations in the education and health sectors. BERT fine-tuned on the uncased corpus achieved the best performance in recognizing entities, with an F1 score of 0.8, followed by LSTM trained on the same corpus. After relation extraction, BERT models achieved higher F1 scores than LSTM models, and the uncased BERT model achieved the best F1 score, 0.85. The results also indicate that relation extraction improves the performance of BERT models in discovering personal entities.

1 Introduction

Advances in computing power, storage capacity, and network technologies have increased companies’ capabilities to collect and process massive amounts of data [1]. This capability enabled new business models based on online services, most of which share a common characteristic: the large volume of data they collect and process. Within the digital data that companies are responsible for, specific types of information have gained attention: Personally Identifiable Information (PII) and Sensitive PII. The U.S. Department of Homeland Security (DHS) defines PII as any information that allows one to infer an individual’s identity, and Sensitive PII as a particular category of PII that "if lost, compromised, or disclosed without authorization, could result in substantial harm, embarrassment, inconvenience, or unfairness to an individual" [2]. The literature commonly refers to PII as personal data, and this paper uses both terms interchangeably.

Concerns about the protection of collected and processed personal data began in the 1960s, and the first legislation addressing this concern was the Data Protection Act, passed by a German state in 1970 [1]. The debate on privacy has intensified in recent years, and new laws and regulations imposing obligations on companies regarding personal data have been issued. Well-known examples include the General Data Protection Regulation (GDPR) [3], created by the European Union, and the California Consumer Privacy Act (CCPA) [4]. In Brazil, the federal government sanctioned a specific data protection law known, in Portuguese, as ‘Lei Geral de Proteção de Dados’ (LGPD) [5]. The LGPD was inspired by the European Union regulation, reproduces most of its rules, and closely follows its structure [6].

The LGPD, like similar laws and regulations worldwide, imposes penalties on non-compliant companies, which raises concerns and drives the implementation of information security controls to protect personal data. However, one of companies’ most significant challenges is discovering which information should be protected [7]. This challenge stems from collaborative work environments and the myriad of tools used at work, which allow employees to handle PII related to themselves, other employees, or customers [8]. One consequence of handling PII in a shared environment without knowing where it is stored is the overexposure of personal data [9]. Korba et al. [8] also pointed out that this scenario increases the likelihood of personal data leaks.

To mitigate overexposure and breaches, the starting point is to discover the PII a company holds in its technological infrastructure [8], and this challenge is greater when PII appears in documents written in natural language, a type of unstructured data. The Natural Language Processing (NLP) task applicable to this scenario is information extraction, more specifically its sub-tasks Named Entity Recognition and Classification (NERC) and relation extraction. Early NERC approaches used rule-based methods to identify entities [10], and Korba et al. [8] implemented regular expressions and dictionaries to recognize personal data.

Later, NERC was treated as a classification problem, and shallow classifiers such as Naive Bayes and Conditional Random Fields (CRF) were applied to identify entities [11]. Dias et al. [12] evaluated shallow classifiers for identifying PII in European Portuguese documents. More recently, research has focused on deep learning-based NERC implementations [13]. Yayik et al. [14] applied a Bi-LSTM classifier to recognize personal data in Turkish texts, and Petrolini et al. [15] assessed two BERT-based models for identifying sensitive data.

Although research has discussed the discovery of PII in unstructured data for a considerable time, we believe it needs to evolve in some aspects concerning the applicability of NLP in real-world scenarios. For example, some studies analyze machine learning-based NERC for identifying coarse-grained, well-known entities [12, 16, 17], whereas we believe it is essential to evaluate machine learning approaches for discovering fine-grained entities. Additionally, only a few studies include relation extraction in the NLP pipeline, even though it is essential for minimizing false positives. Finally, there is a lack of studies evaluating NLP tasks in Portuguese [18], which highlights the importance of new research focusing on the language, and we found no study targeting the discovery of Brazilian PII entities.

Therefore, this work aims to answer the research question: "How accurately can learning-based approaches and entity relations identify fine-grained entities related to Brazilian PII?" To answer this question, we designed an experiment to evaluate NERC approaches for recognizing PII entities in Brazilian Portuguese documents. Additionally, the experiment applies a relation extraction strategy to determine which entities represent sensitive content and need protection. To assess the experiment’s performance in real-world applications, the training and testing datasets come from different domains. We tagged PII entities in generic documents and templates collected from the internet to train the NERC models, and we evaluated the models on documents provided by two organizations from the education and health sectors. The main contributions of this paper are:

-

To the best of our knowledge, we present the first evaluation of NERC models to identify fine-grained entities related to Brazilian PII.

-

We evaluate the contribution of token distance-based relation extraction to discarding entities identified by NERC models that are not related to personal data.

The remainder of this manuscript is organized as follows. “Related work” presents the related work applying NERC approaches to identify PII and sensitive topics in unstructured data. “Experiment design” describes the experiment designed to recognize PII in Brazilian Portuguese documents. “Results” presents the metrics collected in the experiment and discusses the results. Finally, “Conclusion” concludes the paper.

2 Related work

We observed that entity recognition approaches for identifying PII are primarily evaluated in data discovery and privacy-related studies. Several studies described rule-based approaches, but since this work focuses on machine learning approaches, we selected studies with similar proposals. We also describe hybrid approaches that combine rule-based and machine learning techniques to identify personal data.

Studies have applied machine learning classifiers to identify sets of coarse-grained entities. Geng et al. [19] implemented a hybrid approach to classify emails containing personal data. The study used a rule-based approach to identify four coarse-grained entities (email, phone number, address, and money) in the Enron email dataset. The authors built a table using association rules based on the presence of these entities and implemented Decision Tree and SVM classifiers to predict whether a message includes personal information.

Another study tested NERC for de-identifying PII and PHI, evaluating the OpenNLP and spaCy libraries for recognizing five entities (name, place, address, dates, and numbers) in English medical documents [16]. Kaplan [17] analyzed the performance of a Bi-LSTM-CRF architecture for recognizing six personal data entities in call center transcriptions. The experiment used transcriptions of English-language calls received by a call center and implemented the deep learning architecture using FLAIR. The study also evaluated different embeddings in combination with FLAIR.

Although analyzing NERC’s performance in identifying the most common entity types is important, the current regulatory scenario requires recognizing a wider range of personal data-related entities. Aiming at GDPR compliance, Dasgupta et al. [20] proposed a set of 134 Personal Data Entity Types (PDET) and evaluated the performance of neural network-based models in recognizing fine-grained entities in three English datasets. Nagpal et al. [21] used the same set of 134 PDETs to analyze the performance of BERTEC, a language model based on DistilBERT that incorporates embeddings as side information. The study evaluated BERTEC on three English datasets: OntoNotes, IMDB reviews, and Jigsaw Toxicity.

Yayik et al. [14] proposed a solution based on a Bi-LSTM neural network, a rule-based model, and a dictionary to extract 77 personal data entities from a dataset of Turkish sentences. The study also proposed profile-based entity relation extraction using window sizes, which considers all entities within a window to belong to the same profile.

Two studies analyzed NERC approaches for recognizing fine-grained entities in German datasets [22, 23]. Fenz et al. [22] proposed a system to identify 18 types of patient data defined by HIPAA in health records, aiming at document de-identification. The system implemented a hybrid approach combining an SVM classifier with dictionaries and pattern matching, and it applied handcrafted relation extraction rules based on the order of entities in a document. Leitner et al. [23] implemented a NERC approach to recognize 19 fine-grained entities in legal documents. The authors created a dataset containing 750 German court decisions and evaluated the performance of six models comprising CRF and Bi-LSTM architectures.

Dias et al. [12] focused on the Portuguese language and proposed a hybrid system for discovering 18 types of personal data by combining machine learning with rule-based and lexicon-based models. The personal information considered in the study follows Portugal’s data formats. The study evaluated CRF, Random Forest, and Bi-LSTM classifiers on three datasets, one of which was created specifically for the assessment because public datasets did not contain all the target entities.

Like Yayik et al. [14], Fenz et al. [22], Leitner et al. [23], and Dias et al. [12], our work targets a set of fine-grained personal data entities; unlike them, these entities are the ones covered by a Brazilian law, which differentiates this study from others. Although Dias et al. [12] implemented a system to discover entities in Portuguese texts, their study targeted entities related to documents issued in Portugal, whereas this study focuses on entities related to Brazilian documents. Additionally, unlike Yayik et al. [14], Fenz et al. [22], and Dias et al. [12], this study evaluates NERC approaches based exclusively on machine learning classifiers; we do not implement a hybrid approach to recognize entities.

Another aspect differentiating this work is the use of relation extraction to filter, from the set of entities discovered by NERC, those that need to be protected. As noted by Nagpal et al. [21], the presence of an entity in a text does not necessarily characterize PII, so, like Yayik et al. [14], we implement entity relation extraction based on the distance between tokens, along with rules to remove entities that might not be associated with PII.

3 Experiment design

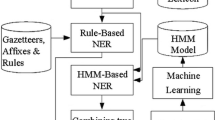

In this section, we detail the experiment, which followed the process presented in Fig. 1. In the first stage, we created a training corpus from online documents containing PII and from unfilled document templates. We aimed to build a corpus unrelated to the domains evaluated in the experiment. For the testing corpus, we used documents provided by two organizations from the education and health sectors. Both corpora consist exclusively of documents written in Brazilian Portuguese.

The next stage comprised preprocessing the training and testing corpora. We created a normalized version of the training corpus and, for the testing corpus, implemented an OCR script to convert digitized documents to text. In the last stage, we performed two evaluations of the NERC approaches: (i) performance before relation extraction, considering both the personal data and non-personal data entities contained in the documents; (ii) performance after relation extraction, considering only PII entities. The experiment design and settings are detailed in the following subsections.

3.1 Annotated entities and datasets

The LGPD uses generic definitions and does not specify which information should be considered personally identifiable. Therefore, we built the label set based on the Personal Data Entity Types taxonomy proposed by Dasgupta et al. [20], and we used a NIST guide for double-checking [24]. To define the entity set, we analyzed documents provided by the two organizations, interviewed employees, and compared the entities identified with those in the taxonomy and the NIST guide. The result was a set of 23 personal data tags identifying Brazilian PII, presented in Fig. 2.

Additionally, we needed a 24th tag because many Brazilian documents write the city and state names as a single word joined by a separator character (e.g., a hyphen), which the tokenizer does not split into two tokens. Thus, we defined a total of 24 tags. Notably, the documents provided by the organizations for the testing corpus did not contain four of the entities shown in Fig. 2: voter ID number, bank, bank agency, and account number. Therefore, although we trained the models on 24 entities, the evaluation covered only 20 of them.
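The tokenization issue behind the 24th tag can be illustrated with a minimal sketch. The regular expression below is a hypothetical stand-in for the tokenizer used in the experiment, which is not specified here; it keeps hyphen-joined words together, so a city-state pair such as "Campinas-SP" becomes a single token that can carry only one label. The tag name `CITY_STATE` is likewise illustrative, not the paper's label.

```python
import re

# Hypothetical tokenizer (an assumption, not the experiment's tokenizer):
# word characters joined by hyphens form one token; every other
# punctuation mark becomes its own single-character token.
TOKEN_RE = re.compile(r"\w+(?:-\w+)*|[^\w\s]")


def tokenize(text: str) -> list[str]:
    """Split text into word and punctuation tokens."""
    return TOKEN_RE.findall(text)


tokens = tokenize("Residente em Campinas-SP, Brasil.")
print(tokens)  # ['Residente', 'em', 'Campinas-SP', ',', 'Brasil', '.']

# In token-level (BIO) annotation each token receives exactly one label, so
# "Campinas-SP" cannot be tagged as both a city and a state; a combined tag
# (illustratively named CITY_STATE) covers the joined form.
labels = ["O", "O", "B-CITY_STATE", "O", "O", "O"]
for token, label in zip(tokens, labels):
    print(f"{token}\t{label}")
```

Splitting on hyphens instead would break hyphenated names and IDs, which is one reason a dedicated tag can be simpler than changing the tokenizer.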

Since PII identification is necessary in different industry domains, we built an initial training corpus from documents from distinct sectors. This strategy also aimed to avoid bias toward a particular domain in the trained models. We applied two approaches based on publicly available information to build the training dataset: (i) we downloaded documents written in natural language containing PII; (ii) we downloaded templates of documents requesting PII and filled them with personal data. For the second approach, we searched the internet for files containing lists of PII. The searches returned many documents with publicly available personal data, primarily on government websites. We also found samples of leaked databases available on the internet. We then inserted the collected PII into the templates to create natural language documents containing PII. The two approaches produced 1,109 annotated documents.

During tagging, we found that some number-based entities appeared in different formats, some less frequent than others, so we decided to apply data augmentation. Data augmentation encompasses strategies for increasing the number of texts in a training corpus through diverse new textual content [25]. It constructs new synthetic or modified texts from existing content, increasing the size and enhancing the quality of the training corpus to improve the machine learning model [26, 27]. Data augmentation is valuable in scenarios where the volume of data is restricted, as is usually the case with personal data.

The data augmentation performed in this study aimed to increase the number of entities in less frequent formats, focusing on ID-number entities such as the federal ID and state ID. We implemented Python scripts that created copies of some documents in the training corpus and, in each copy, rewrote the ID numbers in less frequent formats. For each ID-related entity, we created a list of possible formats and implemented a pseudorandom function to select one of them. The scripts generated 122 new documents containing the target entities in different formats. Because an entity’s length could differ depending on the format picked by the scripts, the new documents had to be tagged.
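The format-swapping augmentation described above can be sketched as follows, using the CPF (the Brazilian federal taxpayer ID) as the example entity. The format templates, the `CPF_RE` pattern, and the helper names are illustrative assumptions rather than the scripts used in the study; `#` marks a digit slot in each template, and a seeded `random.Random` plays the role of the pseudorandom selector.

```python
import random
import re

# Hypothetical format templates for a CPF; '#' marks a digit slot.
CPF_FORMATS = ["###.###.###-##", "###########", "#########-##", "### ### ### ##"]

# Matches a CPF written with or without the usual dots and hyphen.
CPF_RE = re.compile(r"\b\d{3}\.?\d{3}\.?\d{3}-?\d{2}\b")


def reformat_id(digits: str, template: str) -> str:
    """Fill the '#' slots of a template with the given digits, in order."""
    if template.count("#") != len(digits):
        raise ValueError("template and digit count do not match")
    it = iter(digits)
    return "".join(next(it) if ch == "#" else ch for ch in template)


def augment(text: str, id_pattern: re.Pattern, formats: list[str],
            rng: random.Random) -> str:
    """Return a copy of text with every matched ID rewritten in a random format."""
    def repl(match: re.Match) -> str:
        digits = re.sub(r"\D", "", match.group(0))  # keep the digits only
        return reformat_id(digits, rng.choice(formats))
    return id_pattern.sub(repl, text)


rng = random.Random(42)  # fixed seed so the augmented copies are reproducible
doc = "Paciente: Maria Silva, CPF 123.456.789-09."
for _ in range(3):
    print(augment(doc, CPF_RE, CPF_FORMATS, rng))
```

Because the rewritten ID can have a different character length, any character-offset annotations on the copy are no longer valid, which matches the need to tag the new documents.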

We also tried a second data augmentation approach that expanded the corpus by modifying the documents’ text. We evaluated using Brazilian Portuguese word embeddings to replace nouns in the original documents with others that fit the context, using GloVe embeddings trained on publicly available Brazilian documents (Footnote 1). However, this attempt did not succeed because noun substitution produced documents that did not correspond to the context of real-world documents, so we discarded them and did not include them in the training corpus.

Two annotators manually tagged the final training corpus of 1,231 documents (the 1,109 original documents plus the 122 augmented ones), resulting in 6,371 sentences for training the machine learning models. The primary annotator was one of the authors, assisted by a professional hired for the task. To ensure that annotations followed the same pattern, the primary annotator reviewed all tags. The total number of annotated tags was 16,551. Although the documents’ structure made it impossible to ensure a balanced tag distribution, we required a minimum of one hundred occurrences for each annotated tag.

To evaluate the trained models, we created a test set of 80 real-world documents containing PII from two organizations in the health and education sectors, selecting 40 distinct documents from each. In these 80 documents, we located 906 entities corresponding to the 23 personal data types evaluated. Of these, 566 entities are actually associated with people and must be protected under Brazilian law.

3.2 Preprocessing

The preprocessing stage applies techniques to the raw text to improve the quality of the unstructured data processed by an NLP task [28], aiming to enhance pattern extraction and improve the task’s performance. In this experiment, we applied different preprocessing techniques to the training and testing corpora. The training corpus involved documents in PDF and DOC formats, so we first converted all of them to plain text. Since Castro et al. [29] concluded that normalization was the preprocessing technique producing the most significant improvement in NERC performance, we decided to evaluate the impact of lowercasing the text when training the models. We therefore kept one training corpus with the original text of each document and created a second training corpus with all text converted to lowercase. Consequently, we trained two models for each evaluated approach: one on the original texts, named cased, and one on the normalized texts, named uncased.
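The cased/uncased split can be sketched as below, assuming (hypothetically) that the annotated corpus is stored in a two-column CoNLL-style format with one `token label` pair per line and blank lines between sentences, a layout that FLAIR's column-corpus reader accepts. Only the token column is lowercased, so both corpora share identical annotations; the label names in the example are illustrative.

```python
def to_uncased(conll_text: str) -> str:
    """Lowercase the token column of a 'token label' corpus, keeping labels intact."""
    out_lines = []
    for line in conll_text.splitlines():
        if not line.strip():  # a blank line marks a sentence boundary
            out_lines.append(line)
            continue
        token, *rest = line.split(" ")
        out_lines.append(" ".join([token.lower(), *rest]))
    return "\n".join(out_lines)


cased = "Maria B-NAME\nSilva I-NAME\nmora O\nem O\nSantos B-CITY"
print(to_uncased(cased))
```

Python's `str.lower()` is Unicode-aware, so accented capitals common in Portuguese (for example "SÃO") are lowercased correctly without extra handling.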

The testing corpus was also preprocessed. The two organizations delivered PDF documents, which we converted to plain text. Some PDF documents were generated by digitization (scanning), so we implemented a script using Tesseract to perform OCR and convert them to plain text. In some cases, OCR introduced errors into the plain text, which we corrected manually. Although such errors will occur in real-world applications, we believe procedures can be implemented to correct most of them automatically, and we will address this in future work. The most common error was the misrecognition of electronic addresses.

Because some PDFs result from the digitization of semi-structured physical forms (e.g., medical records), their conversion often placed the form field names on one line and the associated values on the line below. We considered removing these documents from the test corpus; however, we kept them because they are examples of real-world documents, and we wanted to evaluate the models for real-world application. Figure 3 presents an example of a digitized form-based document in its original format and after conversion to plain text. Another conversion issue with this document type is that it produced short paragraphs. We handled these cases by merging paragraphs with fewer than 40 characters into the next paragraph.
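The short-paragraph merge can be sketched as follows. Two details are assumptions not stated in the text: paragraphs are taken to be separated by blank lines, and merging repeats until the accumulated text reaches the 40-character threshold, so a run of short form fragments collapses into one paragraph. A short fragment at the very end has no successor and is kept as is.

```python
import re


def split_paragraphs(text: str) -> list[str]:
    """Split plain text on blank lines, dropping empty fragments."""
    return [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]


def merge_short_paragraphs(paragraphs: list[str], min_len: int = 40) -> list[str]:
    """Merge each paragraph shorter than min_len characters into the next one."""
    merged: list[str] = []
    buffer = ""
    for para in paragraphs:
        buffer = f"{buffer} {para}" if buffer else para
        if len(buffer) >= min_len:  # long enough: emit and start over
            merged.append(buffer)
            buffer = ""
    if buffer:  # trailing short paragraph with no successor
        merged.append(buffer)
    return merged


text = "Nome:\n\nMaria da Silva\n\nEndereço residencial completo informado pela paciente."
print(merge_short_paragraphs(split_paragraphs(text)))
```

Merging brings a field label and its value back into the same context window that the NERC model sees, which is the practical motivation for the step.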

3.3 NERC approaches

Recent surveys have analyzed traditional machine learning and deep learning approaches for recognizing entities [13, 30]. Ehrmann et al. [30] pointed out CRF as the leading traditional classifier for NERC. Regarding deep learning approaches, Li et al. [13] and Ehrmann et al. [30] emphasized LSTM and Transformers for entity recognition. We also analyzed a survey on NERC in the cybersecurity domain, in which CRF, LSTM, and Transformer approaches were applied to recognize entities in different cybersecurity activities [31].

While designing the experiment, we considered implementing the CRF classifier, since Amaral and Vieira [32] showed that a CRF-based system outperformed other systems in identifying entities in Brazilian Portuguese texts. We implemented CRF using spaCy-CRFsuite (Footnote 2). However, the model performed poorly in some tests, so we excluded it from the experiment.

With CRF removed, the experiment evaluated only deep learning approaches. The first was Long Short-Term Memory (LSTM), specifically the Bidirectional LSTM+CRF combination proposed by Huang et al. [33]. We chose this combination because it achieved the best overall performance in a NERC contest on Brazilian Portuguese texts [34].

In addition, Akbik et al. [35] demonstrated that stacked embeddings improve the performance of the Bi-LSTM+CRF approach in NERC, so we stacked Portuguese-trained Flair forward and backward contextual embeddings with GloVe (Footnote 3) word embeddings as input for our models. The GloVe vectors had 300 dimensions. The implementation used the FLAIR framework [36], with the hidden size (number of hidden units) set to 256, the number of epochs to 100, and the learning rate to 0.1.

The Transformer architecture has also achieved significant results in NERC on Brazilian Portuguese texts [37]. For the Transformer approach, we fine-tuned the BERTimbau (Footnote 4) model because it outperformed other models in a NERC experiment [38]. We fine-tuned it with a learning rate of 0.0001, a batch size of 16, and three epochs.

Unlike English, Portuguese is considered a low-resource language [39], and consequently there is a lack of resources for applying NERC to Portuguese [40]. To the best of our knowledge, this study pioneers the discovery of Brazilian PII, so we could not find other machine learning models to compare with our trained models. Therefore, we did not establish baselines and instead compared the models trained on the corpus crafted for this study.

3.4 Post-processing and relation extraction

Before applying relation extraction, we validated some entity types. All entities recognized with the city tag were checked against a dictionary of all Brazilian cities, using Levenshtein distance in the comparison to handle typos and abbreviations. Since many entities are Brazilian ID numbers with a fixed number of digits, we validated entity lengths to reduce the chance of identifying a wrong value as an entity. Additionally, some ID numbers contain check digits, and for these we implemented functions to validate the entity’s value.
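The validations above can be sketched with the standard library alone. The city check normalizes accents and case and accepts the closest dictionary entry within a maximum edit distance; the threshold of 2 and the three-city list are hypothetical stand-ins for the full city dictionary. The check-digit example uses the CPF, whose two check digits follow the well-known mod-11 scheme; this function covers both the length validation and the check-digit validation for that one entity type, and is not the study's own code.

```python
import re
import unicodedata
from typing import Optional


def normalize(name: str) -> str:
    """Lowercase and strip accents so 'São Paulo' and 'Sao Paulo' compare equal."""
    decomposed = unicodedata.normalize("NFKD", name)
    return "".join(c for c in decomposed if not unicodedata.combining(c)).lower().strip()


def levenshtein(a: str, b: str) -> int:
    """Dynamic-programming edit distance (insertions, deletions, substitutions)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,               # deletion
                            curr[j - 1] + 1,           # insertion
                            prev[j - 1] + (ca != cb)))  # substitution
        prev = curr
    return prev[-1]


def match_city(candidate: str, cities: list[str], max_dist: int = 2) -> Optional[str]:
    """Return the closest dictionary city, or None if none is within max_dist edits."""
    target = normalize(candidate)
    best = min(cities, key=lambda city: levenshtein(target, normalize(city)))
    return best if levenshtein(target, normalize(best)) <= max_dist else None


def cpf_is_valid(value: str) -> bool:
    """Check the length and the two mod-11 check digits of a CPF number."""
    digits = [int(d) for d in re.sub(r"\D", "", value)]
    if len(digits) != 11 or len(set(digits)) == 1:  # wrong length or e.g. 111.111.111-11
        return False
    for n in (9, 10):  # the check digits sit at indexes 9 and 10
        total = sum(d * w for d, w in zip(digits[:n], range(n + 1, 1, -1)))
        if (total * 10) % 11 % 10 != digits[n]:  # a remainder of 10 maps to 0
            return False
    return True


CITIES = ["São Paulo", "Campinas", "Santos"]  # tiny stand-in for the full city list
print(match_city("Sao Paolo", CITIES))   # São Paulo
print(match_city("Empresa X", CITIES))   # None
print(cpf_is_valid("529.982.247-25"))    # True
print(cpf_is_valid("529.982.247-26"))    # False
```

A fixed absolute threshold is a simplification; a threshold relative to the name's length would tolerate more typos in long city names without admitting false matches for short ones.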

Relation extraction played a primary role in this study because we targeted the recognition of PII entities related to Brazilian data protection law. We considered relation extraction relevant for reducing false positives in PII discovery because it allows recognizing entities associated with an individual. Accordingly, our proposal identifies an entity and then determines whether it is related to a person. For example, a phone number can be associated with a person or a company, and under the LGPD it must be protected only in the former case.

We employed the entity relation method proposed by El-Assady et al. [41], which uses a distance restriction to define relationships: two or more entities must be close to each other (i.e., in the same paragraph) in the document to be considered related. Similarly to Yayik et al. [14], we defined a window to establish whether an entity is PII, where the window is the number of tokens between two tokens in the text. However, whereas Yayik et al. [14] evaluated the window distance between predefined keywords and an entity, our approach was based primarily on the distance between two recognized entities. We defined the token distances based on an analysis of the documents in the training corpus.
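A minimal sketch of a token-distance filter in the spirit of the description above follows. It assumes NERC output is available as token spans and keeps a non-name entity only if some recognized person name lies within a per-type window. The anchor label `PERSON`, the gap values in `MAX_GAP`, and the "keep if near a name" rule are illustrative assumptions: the actual distances were derived from the training corpus and are not reported in this section, and the study also applied additional rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Entity:
    label: str
    start: int  # index of the first token
    end: int    # index one past the last token


def token_gap(a: Entity, b: Entity) -> int:
    """Number of tokens strictly between two spans (0 if adjacent or overlapping)."""
    if a.end <= b.start:
        return b.start - a.end
    if b.end <= a.start:
        return a.start - b.end
    return 0


# Hypothetical maximum gaps per entity type (not the values used in the study).
MAX_GAP = {"PHONE": 15, "ADDRESS": 20, "CPF": 10}
DEFAULT_GAP = 10
ANCHOR_LABEL = "PERSON"


def keep_pii(entities: list[Entity]) -> list[Entity]:
    """Keep person names, plus other entities that lie close enough to a name."""
    persons = [e for e in entities if e.label == ANCHOR_LABEL]
    kept = []
    for e in entities:
        if e.label == ANCHOR_LABEL:
            kept.append(e)
            continue
        limit = MAX_GAP.get(e.label, DEFAULT_GAP)
        if any(token_gap(e, p) <= limit for p in persons):
            kept.append(e)
    return kept


# A name at tokens 0-1, a phone 3 tokens later, and a company phone far away.
ents = [Entity("PERSON", 0, 2), Entity("PHONE", 5, 6), Entity("PHONE", 40, 41)]
print(keep_pii(ents))
```

The distant phone number is discarded, mirroring the paper's example of a company phone that NERC recognizes correctly but that does not require protection.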

3.5 Experiment evaluation

We evaluated the experiment at two stages of the process. First, we assessed the named entity recognition task, considering the approaches’ performance in identifying the entity types tagged in the training corpus. At this stage, we did not consider whether a system must protect the recognized entity. For example, identifying a company’s phone number in a document was considered correct at this stage because phone numbers were among the entity types tagged in the training data, even though that entity is not PII.

In the second stage, we evaluated performance after applying relation extraction. In this assessment, we considered only the entities that a company must protect. Thus, the company phone number from the previous example is not a target at this stage, and we focused only on PII-related entities.

The evaluation metrics were precision, recall, and F1-measure. We adopted the metric sets defined by Segura-Bedmar et al. [42] because they allowed us to analyze whether the approaches identified entity boundaries correctly or assigned tags incorrectly. Segura-Bedmar et al. [42] presented four approaches to calculating precision and recall: Strict, Exact, Partial, and Type.

The Strict approach requires both that the boundaries of an identified entity span exactly the correct tokens and that the tag is correctly assigned. The Exact approach considers only the boundaries, so an entity counts as correct even if its tag is wrong, as long as its tokens were identified correctly. The Partial approach accepts a partial match of the entity boundaries and ignores the assigned tag. Finally, the Type approach verifies only whether the entity’s type was assigned correctly and does not check whether the boundaries match exactly [42].
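Once the numbers of correct (COR), incorrect (INC), partial (PAR), missed (MIS), and spurious (SPU) entities are tallied for a scheme, precision, recall, and F1 follow directly. The sketch below uses the commonly cited formulation of these SemEval-2013-style schemes, in which Strict and Exact give no credit for partial matches while Partial and Type give half credit; treat it as an illustration, not the exact evaluation script used here. The counts must be tallied separately per scheme, since the same prediction can be correct under one scheme and incorrect under another. POS is the number of gold entities (906 in the NERC evaluation and 566 after relation extraction); the counts in the usage lines are hypothetical.

```python
from typing import NamedTuple


class Scores(NamedTuple):
    precision: float
    recall: float
    f1: float


def entity_scores(cor: int, inc: int, par: int, mis: int, spu: int,
                  partial_credit: bool) -> Scores:
    """Precision/recall/F1 from entity-level counts for one evaluation scheme.

    partial_credit=False corresponds to Strict and Exact;
    partial_credit=True to Partial and Type (half credit per partial match).
    """
    possible = cor + inc + par + mis  # gold entities (POS)
    actual = cor + inc + par + spu    # system predictions (ACT)
    hits = cor + 0.5 * par if partial_credit else float(cor)
    precision = hits / actual if actual else 0.0
    recall = hits / possible if possible else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return Scores(precision, recall, f1)


# Hypothetical counts, not taken from the paper's tables.
print(entity_scores(cor=6, inc=1, par=2, mis=1, spu=0, partial_credit=False))
print(entity_scores(cor=6, inc=1, par=2, mis=1, spu=0, partial_credit=True))
```

With these counts, the no-credit scheme yields P = 6/9 and R = 6/10, while half credit for the two partial matches raises both to 7/9 and 7/10, which is why lenient schemes score higher in the results that follow.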

Since the experiment’s objective was to analyze NERC and relation extraction for identifying personal data in documents, we believe evaluating the models only with the Strict approach is inappropriate: in real-world applications, recognizing partial entities is enough to discover documents that need protection. Therefore, to compute the four approaches, we manually extracted from each document the entities that NERC should recognize and, among these, the PII entities that should remain after the relation extraction stage.

4 Results

In this section, we first compare the four trained models considering only NERC, without validation rules or relation extraction. We then present the final result of the proposed relation extraction for identifying only PII entities. Note that the NERC evaluation considered all entities in the testing corpus belonging to the 23 personal data types illustrated in Fig. 2, whereas the second comparison considered only the entities in the testing corpus that should be protected. This section also analyzes the impact of NERC and relation extraction on discovering documents containing PII entities, focusing on identifying documents that include personal data. Finally, we discuss the overall results and present the study’s limitations.

4.1 NERC

After running the NERC approaches, we obtained the first result set. Table 1 presents the number of entities recognized correctly, incorrectly, and partially by each model, as well as the number of entities each model missed and the total number of false positives (spurious). The 80 documents contain 906 entities in total.

The results show that all models missed a significant number of entities in the documents. The model that best discovered entities was \(\hbox {BERT}_{\textrm{uncased}}\). Although the number of missed entities is worrying for using the models for information security purposes, an analysis of the entities identified in each document shows that most missed entities were address data unrelated to PII. Additionally, the models did not recognize several people’s names in longer documents.

Table 1 also shows that the BERT and LSTM models identified only a few spurious entities, which is highly relevant in this study’s context, since the objective of NERC is to identify documents that need protection because they hold personal data. The small number of spurious entities suggests that the models generate few false positives, which matters because it reduces the number of documents that information security personnel need to analyze.

Figure 4 and Table 2 present the selected metrics for the documents from the health and education sectors. For the document sample assessed in this study, \(\hbox {BERT}_{\textrm{uncased}}\) under the Type approach achieved the best F1 score, 0.8. Two models achieved the second-highest score, 0.775: \(\hbox {BERT}_{\textrm{uncased}}\) and \(\hbox {LSTM}_{\textrm{uncased}}\). The difference is that \(\hbox {BERT}_{\textrm{uncased}}\) achieved it under the Partial approach and \(\hbox {LSTM}_{\textrm{uncased}}\) under the Type approach.

4.2 Relation extraction

The proposed process fed the NERC outcome into the next stage, which comprises validation functions and relation extraction rules. Unlike the NERC stage, which aimed to recognize any of the 23 entity types assessed in this study even when an entity was unrelated to a person, this second stage should identify only PII entities. Its result should indicate whether information security personnel must protect a specific document. Table 3 presents the first result of this stage: the number of correct, incorrect, partially correct, missed, and spurious entities for each evaluated model. Since this stage must recognize only PII-related entities, the 80 documents contain 566 entities in total.
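
This section does not detail the validation functions. As an illustration only, one plausible validation function for the Brazilian CPF (individual taxpayer number) checks its two mod-11 check digits, so that a span tagged as a CPF whose digits are inconsistent could be discarded. The function name `is_valid_cpf` is hypothetical, and this is not necessarily the check the authors implemented.

```python
import re


def is_valid_cpf(text: str) -> bool:
    """Return True if `text` is a CPF whose two check digits are consistent.

    Accepts formatted ("111.444.777-35") or bare ("11144477735") input.
    """
    digits = [int(ch) for ch in re.sub(r"\D", "", text)]
    if len(digits) != 11 or len(set(digits)) == 1:
        # Wrong length, or a repeated-digit number such as 111.111.111-11,
        # which passes the arithmetic but is commonly rejected by validators.
        return False

    for position in (9, 10):
        # Weights run from position + 1 down to 2 over the preceding digits.
        weights = range(position + 1, 1, -1)
        total = sum(d * w for d, w in zip(digits[:position], weights))
        check = (total * 10) % 11 % 10  # a remainder of 10 maps to 0
        if check != digits[position]:
            return False
    return True


print(is_valid_cpf("111.444.777-35"))  # True
print(is_valid_cpf("111.444.777-36"))  # False
```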

We observed that the number of spurious entities increased for all models because, in this stage, a correctly recognized entity counts as spurious if it is not related to personal data. For example, if NERC recognized a company's address and the entity relation rules did not exclude it, the address was counted as spurious since it is not associated with a person. Table 3 shows that the implemented rules failed to remove some entities recognized in the NERC stage. \(\hbox {BERT}_{\textrm{cased}}\) produced the fewest spurious entities (22), followed by \(\hbox {BERT}_{\textrm{uncased}}\) (48). Therefore, in our assessment, the fine-tuned BERT models produced the fewest spurious entities.

On the other hand, the number of missed entities decreased. As mentioned in “NERC”, the NERC stage missed many entities unrelated to personal data, and these were not considered in this evaluation. Regarding missed entities, the fine-tuned BERT models also performed better than LSTM. However, unlike the spurious-entity result, \(\hbox {BERT}_{\textrm{uncased}}\) had the fewest missed entities, 89 in total, whereas \(\hbox {BERT}_{\textrm{cased}}\) missed 121 PII entities.

Figure 5 and Table 4 present the metrics obtained after performing relation extraction. The BERT models achieved higher F1 scores when only PII entities were considered, whereas the F1 scores of the LSTM models worsened. Again, \(\hbox {BERT}_{\textrm{uncased}}\) under the Type approach achieved the best performance, with an F1 score of 0.851. Its Precision and Recall were close to each other and both above 0.8, which suggests that the numbers of missed and spurious entities were low. \(\hbox {BERT}_{\textrm{uncased}}\) also achieved an F1 score of 0.84 under the Partial approach, the second highest. After \(\hbox {BERT}_{\textrm{uncased}}\), \(\hbox {BERT}_{\textrm{cased}}\) achieved the highest F1 score, 0.825, under the Partial approach. In this case, however, Precision and Recall were not balanced: the model achieved a Precision of 0.912, the best of all models, but a Recall of only 0.753.
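
Recomputing the F1 score of \(\hbox {BERT}_{\textrm{cased}}\) from its reported Precision and Recall shows how the harmonic mean penalizes this imbalance:

\[ F_1 = \frac{2PR}{P+R} = \frac{2 \times 0.912 \times 0.753}{0.912 + 0.753} = \frac{1.3735}{1.665} \approx 0.825 \]

The arithmetic mean of the same two values would be about 0.83; the harmonic mean is pulled toward the lower Recall, so the high Precision does not compensate for the entities the model missed.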

As mentioned in “Annotated entities and datasets”, two document sets were evaluated in the experiment. Tables 5 and 6 present the metrics for each set individually. As in the consolidated result, \(\hbox {BERT}_{\textrm{uncased}}\) achieved the best performance in each set. For the education documents, it obtained an F1 score of 0.904 under the Partial approach, slightly higher than the 0.901 obtained under the Type approach. Since the Partial approach does not require the entity type to match while the Type approach does, this small gap suggests that \(\hbox {BERT}_{\textrm{uncased}}\) assigned incorrect labels to a small set of recognized entities. Even so, we consider the model's performance on education documents good, given the number of distinct PII entity types in the evaluation.

\(\hbox {BERT}_{\textrm{uncased}}\) obtained an F1 score of 0.828 on the health documents, so it performed better on education documents than on health documents. As mentioned in “Annotated entities and datasets”, the health organization provided digitized documents based on physical forms, and we observed that the BERT models did not perform well on this type of document. \(\hbox {BERT}_{\textrm{cased}}\) also performed better on education documents. This pattern did not hold for the LSTM models: the cased LSTM obtained a better F1 score on health documents.

4.3 Undiscovered documents

A known issue of traditional discovery approaches, mainly based on regular expressions and dictionaries, is the large number of false positives among the discovered entities. At the same time, documents containing PII must not be missed. Therefore, for our sample of 80 documents, we analyzed whether each evaluated model would miss documents containing PII entities. Table 7 shows the number of documents in which the NERC models recognized no entity and the corresponding share of the sample. The table also presents the same data after applying the relation extraction stage.

The table shows that \(\hbox {BERT}_{\textrm{uncased}}\) did not miss any document in the NERC stage, while \(\hbox {BERT}_{\textrm{cased}}\) missed one document. Both LSTM models, cased and uncased, missed three documents.

After applying the entity relation rules, the number of undiscovered documents increased. \(\hbox {BERT}_{\textrm{uncased}}\) presented the best scenario: its missed documents increased from zero to three, representing 3.75% of the sample. There are two reasons for this increase after applying relation extraction to the entities recognized by \(\hbox {BERT}_{\textrm{uncased}}\).
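
At the document level, discovery reduces to checking whether any entity survives in each document. A minimal sketch, assuming each document's output is a list of its remaining entity labels (the `undiscovered` function and the document IDs are hypothetical), reproduces the share reported for three missed documents out of 80:

```python
def undiscovered(doc_entities: dict[str, list[str]]) -> tuple[list[str], float]:
    """Return the IDs of documents with no remaining entities and their share (%)."""
    missed = sorted(doc_id for doc_id, ents in doc_entities.items() if not ents)
    share = 100 * len(missed) / len(doc_entities) if doc_entities else 0.0
    return missed, share


# Toy input: 80 documents, 3 of which end up with no entities after the rules.
docs = {f"doc{i:02d}": ["PERSON"] for i in range(80)}
for doc_id in ("doc07", "doc31", "doc64"):
    docs[doc_id] = []
print(undiscovered(docs))  # (['doc07', 'doc31', 'doc64'], 3.75)
```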

The first concerns the recognition, in the NERC stage, of entities unrelated to PII. For example, one of the missed documents contained four annotated entities: three related to personal data and one corresponding to the city where the health organization is located, which has no relation to personal data. In the NERC stage, the model recognized only the city entity, so the document was detected through that single entity. However, when relation extraction was applied, the city name appeared alone in the document, so the rules excluded it and no entities remained.

The second reason is associated with the design of the entity relation rules, which consider the distance between entities to decide whether to keep or remove them. In two cases, \(\hbox {BERT}_{\textrm{uncased}}\) missed PII entities, so the entities it did recognize were far from each other, and as a consequence both were excluded.

In one example, a document contained nine annotated entities: two related to personal data, positioned at the beginning of the document, and seven corresponding to the address and contact details of the educational organization. \(\hbox {BERT}_{\textrm{uncased}}\) recognized only two entities: the person's name (one of the two personal data entities) and the organization's phone number, located at the end of the document. Because of the distance between them, the entity relation rules excluded both. These two reasons also affected the other models.
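
Both failure cases can be reproduced with a simplified distance rule. In this sketch, an entity is kept only if at least one other recognized entity lies within a fixed number of tokens. The `Entity` type, the `token_gap` and `filter_by_distance` names, the label strings, and the 20-token threshold are all assumptions for illustration; the actual rules and threshold are not given in this section.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Entity:
    label: str  # hypothetical tag name, e.g. "PERSON", "CITY", "PHONE"
    start: int  # index of the first token of the span
    end: int    # index of the last token of the span (inclusive)


def token_gap(a: Entity, b: Entity) -> int:
    """Number of tokens strictly between two spans (0 if they touch or overlap)."""
    if a.end < b.start:
        return b.start - a.end - 1
    if b.end < a.start:
        return a.start - b.end - 1
    return 0


def filter_by_distance(entities: list[Entity], max_gap: int = 20) -> list[Entity]:
    """Keep an entity only if another recognized entity lies within max_gap tokens."""
    return [
        ent
        for i, ent in enumerate(entities)
        if any(token_gap(ent, other) <= max_gap
               for j, other in enumerate(entities) if j != i)
    ]


# First failure case: a city name recognized alone is discarded.
print(filter_by_distance([Entity("CITY", 120, 120)]))  # []

# Second failure case: a name at the start and a phone number at the end.
print(filter_by_distance([Entity("PERSON", 2, 3), Entity("PHONE", 850, 850)]))  # []

# A name followed closely by an ID number: both spans are kept.
print(filter_by_distance([Entity("PERSON", 2, 3), Entity("CPF", 8, 8)]))
```

Because this simplified rule is symmetric, an entity survives only in the company of another one, so a single missed PII entity can also remove a correctly recognized neighbor.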

4.4 Discussion and limitations

In this section, we discuss the results of the experiment in relation to the discovery of personal data, the primary motivation for this study. We also explore the results in the context of recognizing fine-grained entities and of applying a generic training corpus to different domains. Finally, we present some limitations of the experimental design.

In the NERC evaluation, the BERT model fine-tuned with lowercased documents achieved the best performance, with an F1 score of 0.8, and the \(\hbox {LSTM}_{\textrm{uncased}}\) model also obtained an F1 score of 0.775. For the sample of documents evaluated, both BERT and LSTM performed better when trained on the uncased corpus. Although this study considered only 24 entity types, fewer than other fine-grained studies, the results suggest that BERT and LSTM should be considered for recognizing fine-grained entities.
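
The cased and uncased models differ only in the training text. A minimal sketch of that preprocessing step, assuming a corpus stored as (token, BIO tag) pairs (the format, the `lowercase_corpus` name, and the tag names are hypothetical): tokens are lowercased while tags are left untouched, so the annotations stay aligned.

```python
def lowercase_corpus(
    sentences: list[list[tuple[str, str]]],
) -> list[list[tuple[str, str]]]:
    """Lowercase every token while leaving its BIO tag unchanged."""
    return [[(token.lower(), tag) for token, tag in sentence] for sentence in sentences]


# Toy sentence with hypothetical tag names.
sample = [[("Maria", "B-PERSON"), ("Conceição", "I-PERSON"), ("mora", "O"),
           ("em", "O"), ("Porto", "B-CITY"), ("Alegre", "I-CITY")]]
print(lowercase_corpus(sample))
```

Python's `str.lower()` maps accented capitals such as "Ç" to their lowercase forms and keeps the accents, so Portuguese tokens need no extra handling in this step.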

In a broader analysis of the NERC results, we observed that the Type approach obtained the highest F1 score for all evaluated models. This indicates that the models tend to assign the correct entity tags but often fail to delimit the entity boundaries exactly. This result is encouraging for using NERC to identify PII entities in documents: from an information security perspective, knowing the correct PII type for most entities is very relevant, even if some entity spans are not delimited exactly. The primary reason is that some data types are more valuable than others. For example, many government and bank websites use the federal ID number to identify users, so cybercriminals constantly target this type of data, and it should receive special attention from cybersecurity teams.
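
The difference between the two approaches is easiest to see on a single prediction. A minimal sketch, assuming the SemEval-2013 Task 9 style definitions [42], under which the Type approach requires the correct label and some boundary overlap, while the Partial approach ignores the label and gives partial credit for overlapping but inexact boundaries. Spans are (start, end, label) with inclusive token offsets; the `categorize` name and the labels are hypothetical.

```python
def categorize(gold: tuple[int, int, str], pred: tuple[int, int, str], mode: str) -> str:
    """Classify one predicted span against one gold span.

    Returns "COR", "INC", or "PAR"; non-overlapping spans return "MIS+SPU"
    (the gold entity is missed and the prediction is spurious).
    """
    g_start, g_end, g_label = gold
    p_start, p_end, p_label = pred
    if p_end < g_start or g_end < p_start:
        return "MIS+SPU"
    same_bounds = (g_start, g_end) == (p_start, p_end)
    if mode == "type":     # label must match; any overlap is enough
        return "COR" if g_label == p_label else "INC"
    if mode == "partial":  # label ignored; inexact boundaries earn partial credit
        return "COR" if same_bounds else "PAR"
    raise ValueError(f"unsupported mode: {mode}")


gold = (10, 15, "ADDRESS")
# Right type, truncated span: full credit in Type, partial credit in Partial.
print(categorize(gold, (10, 13, "ADDRESS"), "type"), categorize(gold, (10, 13, "ADDRESS"), "partial"))  # COR PAR
# Exact span, wrong type: penalized in Type, full credit in Partial.
print(categorize(gold, (10, 15, "CITY"), "type"), categorize(gold, (10, 15, "CITY"), "partial"))  # INC COR
```

Under these definitions, a model whose errors are mostly truncated spans scores higher in Type, while one whose errors are mostly wrong labels scores higher in Partial.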

A central point of the experiment was evaluating how much relation extraction contributes to discarding entities that were identified in the NERC stage but are unrelated to personal data. To this end, the token distance approach used the surrounding text as context to decide whether an entity refers to PII. Again, \(\hbox {BERT}_{\textrm{uncased}}\) under the Type approach achieved the best performance after relation extraction.

Considering only PII-related entities, \(\hbox {BERT}_{\textrm{uncased}}\) achieved an F1 score of 0.851, up from 0.8 in the NERC evaluation. This improvement resulted from a substantial increase in Recall, since many entities missed in the NERC stage were non-PII entities, which are not counted in this evaluation. On the other hand, Precision was lower after relation extraction because the implemented token distance approach did not remove many non-PII entities. We therefore believe that Precision could be improved by fine-tuning the relation extraction rules. However, this would require a larger testing corpus, because crafting rules based on the current corpus could introduce bias.

The training corpus used in the experiment comprised documents from different sectors, and we were careful not to include documents from the domains of the testing corpus. Cross-domain NER is a well-known challenge because it is difficult for a trained model to generalize entity recognition to distinct domains [43, 44]. The results indicate that \(\hbox {BERT}_{\textrm{uncased}}\), fine-tuned with a generic labeled corpus, can recognize entities well in documents from domains not seen during training. In “Relation extraction”, we presented the results for each domain individually: \(\hbox {BERT}_{\textrm{uncased}}\) achieved F1 scores above 0.8 in both domains, despite a difference of about 0.07 between them.

Although \(\hbox {BERT}_{\textrm{cased}}\) and the LSTM models did not perform best, we believe these approaches should be evaluated with a training corpus comprising a larger number of entities. On the other hand, as mentioned in “Experiment design”, the CRF-trained model performed poorly in an initial assessment, so we removed it from the experiment. Based on that initial evaluation, we understand that CRF models do not perform well in recognizing fine-grained entities across multiple domains.

Discovering personal data entities is crucial for companies to overcome their lack of knowledge about the PII stored in their infrastructure. It is also necessary because companies can be penalized and fined for violating laws and regulations such as the LGPD. On the testing corpus of 80 documents, only the \(\hbox {BERT}_{\textrm{uncased}}\) model recognized entities in all documents in the NERC evaluation. After relation extraction, every model failed to identify PII entities in at least one document; consequently, organizations would leave some documents unprotected. On the other hand, the models identified only a small number of spurious entities, so a real-world application should not flag many documents incorrectly. Given the number of documents stored in companies, a low number of false positives is very important: it keeps the review workload manageable for security teams and prevents companies from wasting resources and time protecting documents that do not need it.

Although we based the experiment on a well-known methodology to obtain consistent results, this study has some limitations. The first is the number of industry sectors that provided documents for the testing corpus: the experiment evaluated the NERC approaches on documents from only two sectors. Since each industry sector usually has its own vocabulary and handles different document structures, this limits our evaluation.

The number of entities evaluated in the experiment is also a limitation. We annotated 24 entities for training the models and evaluated them on the 20 entities present in the document sets provided by the organizations. In real-world applications, documents might contain entities not covered in the experiment, and models should deal with them. Finally, the number of annotations for some entities is also a limitation. The training corpus is imbalanced, and some entities appear much more frequently than others. This imbalance can mainly affect ID-related entities because some of them have similar features, which may bias the NERC models.
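
A simple way to quantify this kind of imbalance is to count annotated entities per type. A minimal sketch, assuming BIO-tagged training data in which each entity begins with a "B-" tag; the `entity_counts` name and the tag names (including CPF and RG) are hypothetical.

```python
from collections import Counter


def entity_counts(tag_sequences: list[list[str]]) -> Counter:
    """Count annotated entities per type by counting BIO begin (B-) tags."""
    return Counter(
        tag[2:] for tags in tag_sequences for tag in tags if tag.startswith("B-")
    )


# Toy BIO-tagged sentences with hypothetical tag names.
corpus_tags = [
    ["B-PERSON", "I-PERSON", "O", "B-CPF"],
    ["B-PERSON", "O", "B-RG"],
    ["B-PERSON", "I-PERSON", "O", "B-CPF"],
]
counts = entity_counts(corpus_tags)
print(counts.most_common())  # [('PERSON', 3), ('CPF', 2), ('RG', 1)]
print(max(counts.values()) / min(counts.values()))  # 3.0
```

The ratio between the most and least frequent types gives a single number to track when adding annotations or augmented documents.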

5 Conclusion

This study evaluated the application of information extraction sub-tasks to identify 24 PII entities in Brazilian Portuguese documents. The analysis covered four trained models: BERT and LSTM, each trained once on the cased corpus and once on the uncased corpus. In the first stage, we assessed the NERC performance of these models considering all entities in the documents, even those unrelated to personal data. In the second stage, we applied relation extraction rules based on token distance to evaluate whether they allowed us to identify only PII-related entities. We also discussed documents containing personal data that would not be discovered after relation extraction.

The experiment results demonstrated that NERC approaches perform well in discovering personal data and should be considered an alternative to traditional techniques. In the NERC evaluation, the models recognized only a few spurious entities, meaning they produced few false positives. This is very positive from the data discovery perspective, since an excess of false positives could create a heavy workload to protect documents, many of them unnecessarily. On the other hand, we also showed that the trained models missed entities in both the NERC and relation extraction stages. Although relation extraction reduced the number of missed entities, missing some entities means that some documents containing personal data would not be discovered.

Although this study presents valuable information about applying information extraction to discover PII, we identified several improvements for future work. First, the experiment evaluated the four models on documents from two organizations in distinct industry sectors; we propose expanding the number of documents and including organizations from other sectors to assess the models' performance. Second, applying relation extraction to differentiate PII entities from entities unrelated to personal data, and thus avoid false positives in discovery, is a relevant topic that needs further study, and we intend to compare new approaches with the one applied in this study.

The corpus used to train the models combines manually annotated documents and documents generated with data augmentation. Future work could evaluate new data augmentation techniques to expand the corpus with documents containing PII entities, primarily Natural Language Generation (NLG) based on fine-tuned Large Language Models (LLMs). In this study, we faced an issue in the preprocessing stage because OCR conversion introduced errors. The literature addresses different approaches to correcting OCR typos, so future work could evaluate them within the NERC process to avoid manual correction. Finally, we propose expanding the set of PII entities to cover the sensitive personal data defined in the LGPD, including, for example, religious and political opinions and protected health information (PHI).

Data availability

The corpora generated and analyzed during the current study are not publicly available because they are based on real documents from Brazilian organizations and contain personal data associated with Brazilian people. Additionally, Brazilian data protection law restricts making personal data publicly available.

References

Tikkinen-Piri C, Rohunen A, Markkula J. EU General Data Protection Regulation: changes and implications for personal data collecting companies. Comput Law Secur Rev. 2018;34(1):134–53.

DHS Privacy Office. Handbook for safeguarding sensitive PII. U.S. Department of Homeland Security; 2017.

European Parliament, Council of the European Union. Regulation (EU) 2016/679 of the European Parliament and of the Council. 2016. https://data.europa.eu/eli/reg/2016/679/oj. Accessed 13 Jun 2023.

California State Legislature. California Consumer Privacy Act of 2018. 2018. https://oag.ca.gov/privacy/ccpa. Accessed 13 Jun 2023.

Brasil. Lei nº 13.709, de 14 de agosto de 2018. Diário Oficial [da] República Federativa do Brasil. 2018.

Bertoni E. Convention 108 and the GDPR: trends and perspectives in Latin America. Comput Law Secur Rev. 2021;40:105516. https://doi.org/10.1016/j.clsr.2020.105516.

Ponemon Institute LLC. 2022 global encryption trends study. Technical report, Ponemon Institute; 2022.

Korba L, Wang Y, Geng L, Song R, Yee G, Patrick AS, Buffett S, Liu H, You Y. Private data discovery for privacy compliance in collaborative environments. In: International Conference on Cooperative Design, Visualization and Engineering. Springer; 2008. p. 142–150.

Symantec. 2018 shadow data report. Technical report, Symantec; 2018.

Nadeau D, Sekine S. A survey of named entity recognition and classification. Lingvist Investig. 2007;30(1):3–26.

Goyal A, Gupta V, Kumar M. Recent named entity recognition and classification techniques: a systematic review. Comput Sci Rev. 2018;29:21–43.

Dias M, Boné J, Ferreira JC, Ribeiro R, Maia R. Named entity recognition for sensitive data discovery in Portuguese. Appl Sci. 2020;10(7):2303.

Li J, Sun A, Han J, Li C. A survey on deep learning for named entity recognition. IEEE Transac Knowl Data Eng. 2020;34(1):50–70.

Yayik A, Aybar V, Apik HH, İçöz S, Bakar B, Güngör T. Deep learning-aided automated personal data discovery and profiling. Turk J Electr Eng Comput Sci. 2022;30(1):167–83.

Petrolini M, Cagnoni S, Mordonini M. Automatic detection of sensitive data using transformer-based classifiers. Future Internet. 2022;14(8):228.

Pearson C, Seliya N, Dave R. Named entity recognition in unstructured medical text documents. In: 2021 International Conference on Electrical, Computer and Energy Technologies (ICECET), 2021;1–6. IEEE.

Kaplan M. May I ask who's calling? Named entity recognition on call center transcripts for privacy law compliance. arXiv preprint arXiv:2010.15598. 2020.

Souza E, Costa D, Castro DW, Vitório D, Teles I, Almeida R, Alves T, Oliveira AL, Gusmão C. Characterising text mining: a systematic mapping review of the Portuguese language. IET Softw. 2018;12(2):49–75.

Geng L, Korba L, Wang X, Wang Y, Liu H, You Y. Using data mining methods to predict personally identifiable information in emails. In: Advanced Data Mining and Applications: 4th International Conference, ADMA 2008, Chengdu, China, October 8-10, 2008. Proceedings 4, Springer, p. 272–281; 2008.

Dasgupta R, Ganesan B, Kannan A, Reinwald B, Kumar A. Fine grained classification of personal data entities. arXiv preprint arXiv:1811.09368. 2018.

Nagpal A, Dasgupta R, Ganesan B. Fine grained classification of personal data entities with language models. In: 5th Joint International Conference on Data Science & Management of Data (9th ACM IKDD CODS and 27th COMAD). p. 130–134; 2022.

Fenz S, Heurix J, Neubauer T, Rella A. De-identification of unstructured paper-based health records for privacy-preserving secondary use. J Med Eng Technol. 2014;38(5):260–8.

Leitner E, Rehm G, Moreno-Schneider J. Fine-grained named entity recognition in legal documents. In: Semantic Systems. The Power of AI and Knowledge Graphs: 15th International Conference, SEMANTiCS 2019, Karlsruhe, Germany, September 9–12, 2019, Proceedings, Springer, p. 272–287; 2019.

McCallister E, Grance T, Scarfone K. Guide to protecting the confidentiality of personally identifiable information. NIST Special Publication 800-122. NIST; 2010.

Feng SY, Gangal V, Wei J, Chandar S, Vosoughi S, Mitamura T, Hovy E. A survey of data augmentation approaches for NLP. In: Findings of the Association for Computational Linguistics: ACL-IJCNLP 2021, 2021;968–988. Association for Computational Linguistics, Online. https://doi.org/10.18653/v1/2021.findings-acl.84. https://aclanthology.org/2021.findings-acl.84.

Shorten C, Khoshgoftaar TM, Furht B. Text data augmentation for deep learning. J Big Data. 2021;8:1–34.

Shorten C, Khoshgoftaar TM. A survey on image data augmentation for deep learning. J Big Data. 2019;6(1):1–48.

Uysal AK, Gunal S. The impact of preprocessing on text classification. Informat Process Manage. 2014;50(1):104–12.

Castro PV, Silva N, Silva Soares A. Portuguese named entity recognition using LSTM-CRF. In: Computational Processing of the Portuguese Language: 13th International Conference, PROPOR 2018, Canela, Brazil, September 24–26, 2018, Proceedings 13. Springer; 2018. p. 83–92.

Ehrmann M, Hamdi A, Pontes EL, Romanello M, Doucet A. Named entity recognition and classification in historical documents: a survey. ACM Comput Surv. 2023. https://doi.org/10.1145/3604931.

Gao C, Zhang X, Han M, Liu H. A review on cyber security named entity recognition. Front Informat Technol Electron Eng. 2021;22(9):1153–68.

Amaral DOF, Vieira R. NERP-CRF: uma ferramenta para o reconhecimento de entidades nomeadas por meio de conditional random fields. Linguamática. 2014;6(1):41–9.

Huang Z, Xu W, Yu K. Bidirectional LSTM-CRF models for sequence tagging. arXiv preprint arXiv:1508.01991. 2015.

Collovini S, Neto JFS, Consoli BS, Terra J, Vieira R, Quaresma P, Souza M, Claro DB, Glauber R. IberLEF 2019 Portuguese named entity recognition and relation extraction tasks. In: IberLEF@SEPLN. 2019. p. 390–410.

Akbik A, Blythe D, Vollgraf R. Contextual string embeddings for sequence labeling. In: COLING 2018, 27th International Conference on Computational Linguistics, 2018;1638–1649.

Akbik A, Bergmann T, Blythe D, Rasul K, Schweter S, Vollgraf R. FLAIR: An easy-to-use framework for state-of-the-art NLP. In: NAACL 2019, 2019 Annual Conference of the North American Chapter of the Association for Computational Linguistics (Demonstrations), 2019;54–59.

Souza F, Nogueira R, Lotufo R. Portuguese named entity recognition using BERT-CRF. arXiv preprint arXiv:1909.10649. 2019.

Souza F, Nogueira R, Lotufo R. BERTimbau: pretrained BERT models for Brazilian Portuguese. In: Brazilian Conference on Intelligent Systems. Springer; 2020. p. 403–417.

Santos Neto MV, Silva NFF, Silva Soares A. A survey and study impact of tweet sentiment analysis via transfer learning in low resource scenarios. Language Resources and Evaluation. 2023;1–42.

Rocha NC, Barbosa AMP, Schnr YO, Machado-Rugolo J, Andrade LGM, Corrente JE, Arruda Silveira LV. Natural language processing to extract information from Portuguese-language medical records. Data. 2022;8(1):11.

El-Assady M, Sevastjanova R, Gipp B, Keim D, Collins C. NEREx: named-entity relationship exploration in multi-party conversations. Comput Graph Forum. 2017;36(3):213–25.

Segura-Bedmar I, Martínez Fernández P, Herrero Zazo M. SemEval-2013 Task 9: extraction of drug-drug interactions from biomedical texts (DDIExtraction 2013). Association for Computational Linguistics; 2013.

Jia C, Liang X, Zhang Y. Cross-domain NER using cross-domain language modeling. In: Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics. 2019. p. 2464–2474.

Liu Z, Xu Y, Yu T, Dai W, Ji Z, Cahyawijaya S, Madotto A, Fung P. CrossNER: evaluating cross-domain named entity recognition. In: Proceedings of the AAAI Conference on Artificial Intelligence. 2021;35:13452–13460.

Author information

Contributions

LI and CAC conceived of the presented idea. LI and MGM carried out the experiment. LI wrote the manuscript with support from CAC and MGM. CAC supervised the project. All authors discussed the results and contributed to the final manuscript.

Ethics declarations

Ethics approval and consent to participate

This study was approved by the Grupo Hospitalar Conceição Research Ethics Committee, Reference CAAE number 52948621.8.2001.5530. The Unisinos University Research Ethics Committee also approved this study, Reference CAAE number 52948621.8.1001.5344.

Competing interests

The authors declare that they have no competing interests, and there has been no financial support for this work that could have influenced its outcome.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ignaczak, L., Martins, M.G., da Costa, C.A. et al. An evaluation of NERC learning-based approaches to discover personal data in Brazilian Portuguese documents. Discov Data 1, 5 (2023). https://doi.org/10.1007/s44248-023-00005-9
