Abstract

In Machine Learning, the datasets used to build models are one of the main factors limiting what these models can achieve and how good their predictive performance is. Machine Learning applications for cyber-security or computer security are numerous including cyber threat mitigation and security infrastructure enhancement through pattern recognition, real-time attack detection, and in-depth penetration testing. Therefore, for these applications in particular, the datasets used to build the models must be carefully thought to be representative of real-world data. However, because of the scarcity of labelled data and the cost of manually labelling positive examples, there is a growing corpus of literature utilizing Semi-Supervised Learning with cyber-security data repositories. In this work, we provide a comprehensive overview of publicly available data repositories and datasets used for building computer security or cyber-security systems based on Semi-Supervised Learning, where only a few labels are necessary or available for building strong models. We highlight the strengths and limitations of the data repositories and sets and provide an analysis of the performance assessment metrics used to evaluate the built models. Finally, we discuss open challenges and provide future research directions for using cyber-security datasets and evaluating models built upon them.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

As a result of the significant technological advancements made throughout the years, people’s lifestyles are shifting from traditional to more electronic. This shift has resulted in an increase in cybercrimes on the Internet. Therefore, adequate measures have to be put in place to secure computer systems. Moreover, computer security or cyber-security systems must be capable of detecting and preventing cyber-attacks in real-time. The intersection of the Machine Learning (ML) and cyber-security fields has recently been rapidly growing as researchers make use of either fully labelled datasets with Supervised Learning (SL), unlabeled datasets with Unsupervised Learning (UL) or combining labelled and unlabeled data with Semi-Supervised Learning (SSL) to identify the various types of cyber-attacks. Due to the high cost and scarcity of labelled data in the cyber-security domain, SSL applications for cyber-security tasks have gained traction. Several datasets have been made available to the public to build ML-based defensive mechanisms. In ML, the quality of the output is determined by the quality of the input [1]; in other words, for ML models to generalize effectively, the datasets upon which they are built must be representative of real-world data. Therefore, surveys on the available datasets and performance evaluation metrics used to build and evaluate SSL models are required to give up-to-date information on recent cyber-security datasets and suitable performance metrics used in SSL frameworks to provide a starting point for new researchers who wish to investigate this vital subject.

Several works focusing on cyber-security provide discussions of datasets and data repositories that can be used for building ML models. For instance, Ring et al. [2] presented an extensive survey on network-based intrusion detection datasets discussing datasets containing packet-based, flow-based and neither packet- nor flow-based data while Glass-Vanderlan et al. [3] focused on Host-Based Intrusion Detection Systems (HIDS) and touched upon datasets and sources mainly related to HIDS. Other articles described datasets for (i) intrusion, malware and spam detection (e.g. [4,5,6,7,8]); (ii) network anomaly detection (e.g. [9]); or (iii) phishing URL detection (e.g. [10]). However, these works often focus on a particular cyber-security domain and do not examine in detail the characteristics of the available datasets and the performance evaluation metrics that are suitable for the various research challenges.

Because of the expanding interest in this area and the rapid speed of research, these surveys quickly become outdated; there is, therefore, an obvious need for a comprehensive survey to present the most recent datasets and evaluation metrics and their usage in the literature. To fill this gap, we present an exhaustive evaluation of the cyber-security datasets used to build SSL models. In this paper, we conduct a systematic literature review (SLR) of publicly available cyber-security datasets and performance assessment metrics used for building and evaluating SSL models. To this end, we provide a summary of datasets used to construct models for cyber-security-related tasks; the covered areas include not only network- and host-based intrusion detection, but also spam and phishing detection, Sybil and botnet detection, Internet traffic and domain name classification, malware detection and categorization, and power grid attacks detection. Additionally, we examine the performance assessment metrics used to evaluate the SSL models and discuss their usage in the selected papers. Furthermore, we provide a list of datasets, tools, and resources used to collect and analyze the data that have been made publicly available in the literature. Finally, we provide a discussion on the open research challenges and a list of observations with regard to datasets and performance metrics. This is, to the best of our knowledge, the first SLR analyzing a wide array of cyber-security datasets and performance evaluation metrics for SSL tasks, as well as providing easy access to publicly available datasets.

Our key contributions are the following:

-

1.

We provide a description of the most commonly used SSL techniques.

-

2.

We provide insights on the major cybercrimes for which SSL solutions have been explored.

-

3.

We present a systematic literature review of the publicly available cyber-security datasets, repositories and performance evaluation metrics used.

-

4.

We analyze the open challenges found in the literature and provide a set of recommendations for future research.

The remaining sections are organized as follows. Section 2 presents the definitions, important concepts, and basic assumptions of SSL, as well as a brief introduction to the methods utilized in the literature we reviewed and an overview of the different cybercrimes the included articles’ authors propose to counter. Additionally, we provide examples that highlight successful industrial deployments of ML for countering cyber threats, demonstrating the practical applications of the methods discussed in the literature. In Sect. 3, we present the methodology we used to construct our survey and in Sect. 4, an in-depth analysis of the publicly available datasets and the different evaluation metrics used in the selected papers is presented. Section 5 discusses the open challenges faced by the reviewed methods applying SSL for cyber-security, with respect to the datasets and evaluation metrics, presents a set of observations and the lessons learned, and highlights strategies for bridging the gap between research and practice. Finally, Sect. 6 concludes the work.

2 Background on SSL and cyber-security

Machine Learning (ML), the core subset of Artificial Intelligence (AI), may be defined as the systematic study of computer algorithms and systems that allow computer programs to automatically improve their knowledge or performance through experience [11]. It is a branch of computer science where the goal is to teach computers with sample data, i.e., training data, to make predictions or decisions on unseen data. ML algorithms can be categorized into three main types: SL, UL, and Reinforcement Learning (RL). In SL, the task, i.e., the inference of the function to map input data points from an instance space to their corresponding labels in the output space using labelled examples [12, 13], can either be classification where the function being learned is discrete, i.e., input data points in the input space are mapped to categorical values, or regression where the function being learned is continuous, i.e., input data points are mapped to real values. In contrast to SL, in UL, there are no labels available, therefore the goal of UL algorithms is to capture important patterns or extract relationships from untagged (unlabeled) data as probability density distributions [14] and in RL, the algorithms’ goal is to attempt to maximize the feedback (reward) they are provided with. SSL conceptually stands between SL and UL, [15,16,17]. Out-of-core Learning (OL), or Incremental, or Online Learning, is a learning technique where the data becomes available in a sequential, one at a time, manner [18]. In OL, the model can learn from newly available data, in addition to making predictions from it. Information Technology (IT) security, Computer security or simply cyber-security is the protection of computer systems and networks from cyber-attacks, i.e., information disclosure, loss, theft, or damage to their hardware, software, or electronic data, as well as from the disruption or misdirection of the services they offer [19].

SSL and ML, in general, have brought significant benefits to the cyber-security domain, including improved detection capabilities, adaptive learning, automation, and threat intelligence [20] (see Sect. 2.3 for industrial examples). However, there are also challenges that need to be addressed, including the lack of quality data, adversarial attacks, model explainability, and bias and discrimination [21, 22]. Addressing these challenges will be critical to ensuring that ML remains a useful tool in the fight against cyber threats.

In the remainder of this section, we introduce the key principles and techniques of SSL, provide a summary of cybercrimes examined in the literature, and present examples that demonstrate the potential of ML in mitigating cyber threats in the real world.

2.1 SSL concepts and methods

We will first introduce some notations. Let \(\mathcal {D}_L=(x_i,l(x_i ))_{i=1}^k\) denote a labelled dataset where each sample \((x_i,l(x_i))\) consists of data point \(x_i\) from the instance space \(\mathcal {X}\) and a target variable \(l(x_i)\) in the output space \(\mathcal {Y}\). Let \(\mathcal {D}_U=(x_i)_{i=k+1}^{k+u}\) denote an unlabeled dataset. In SL, when \(l(x_i)\) consists of categorical values we face a classification task and when it consists of real values we have a regression task. In UL, the model is only provided with unlabeled data, i.e., \(\mathcal {D}_U\). SL can build strong models to predict labels for unlabeled samples, but it requires \(\mathcal {D}_L\) to contain diverse samples manually labelled by domain experts, which may not only be too costly but may also contain inaccurate labels due to human mistakes. Therefore, in practice, \(u \gg k\). On the other hand, even though UL does not require labelled samples to infer patterns, it is prone to overfitting. SSL makes use of both \(\mathcal {D}_L\) and \(\mathcal {D}_U\) to infer a function whose performance surpasses one built with either SL or UL by making use of at least one of the main learning assumptions, i.e., smoothness, low-density, manifold, [23], and cluster, [24], assumption.

The smoothness assumption is based on the notion that if two data points, \(x_1\) and \(x_2\), lie close in the instance space, \(\mathcal {X}\), their corresponding class labels, \(l(x_1)\) and \(l(x_2)\), should also be close (the same), in the output space \(\mathcal {Y}\); the transitivity assumption, that states that if \(x_1\) lies close to \(x_2\) and \(x_2\) lies close to \(x_3\), then \(x_1\) lies transitively close to \(x_3\), is an important idea in the smoothness assumption because “close points in \(\mathcal {X}\) have the same label,” thus this assumption implies that if \(x_2\) is a noisy version of \(x_1\), they should still have the same predicted label. In the low-density assumption, it is implied that data points with the same label are clustered in high-density sections of the instance space, i.e., the decision boundary must pass through a low-density region, \(\mathcal {R} \subset \mathcal {X}\), and the probability of any data point, \(p(x_i)\), being in the low-density region is low, i.e., \(p(x_i)\) in \(\mathcal {R}\) is low. This also verifies that the smoothness assumption is satisfied. In the manifold assumption, the instance space, \(\mathcal {X}\), consists of one or more Riemannian manifolds \(\mathcal {M}\) on which samples share the same label. According to the cluster assumption, which can be seen as a generalization of the other three assumptions mentioned earlier [16], if data points are in the same cluster, they are likely to share the same label, and there may be several clusters constituting the same class [15].

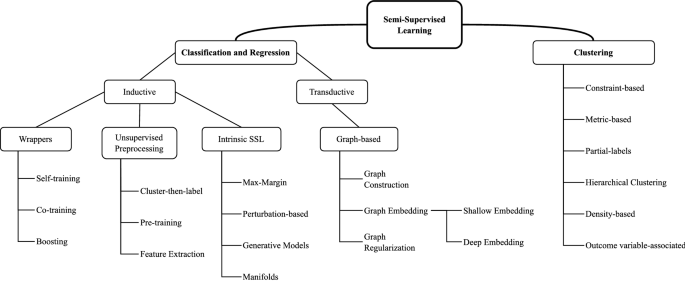

Based on [16, 25, 26], the taxonomy in Fig. 1 provides a general overview of the SSL approaches which will be described in more detail in Sects. 2.1.1 and 2.1.2. An overview of the key concepts in the taxonomy is presented next.

SS Classification and Regression methods can either be transductive or inductive [15, 27, 28]. In inductive SSL, the model is first built using information from \(\mathcal {D}_L\) and \(\mathcal {D}_U\) and it can then be used as one built with SL to generate predictions for previously unseen, unlabeled samples; there exists a clear distinction between a training phase and a testing phase. In transductive SSL, on the other hand, the goal is to generate labels for the unlabeled samples fed to the learner, therefore there is no clear distinction between a training and testing phase. Frequently, transductive approaches create a graph across all data points, including labelled and unlabeled, expressing the pairwise similarity of data points with weighted edges and are incapable of handling additional unseen data [17]. We group both SS Classification and Regression because they predict output values for input samples but note that most SS Classification approaches are incompatible with SS Regression, and we, therefore, specify when they may be compatible in Sect. 2.1.1.

In the SS Clustering assumption, the learner’s goal is clustering but a small amount of knowledge is available in the form of constraints, must-link constraints (two samples must be within the same cluster) and cannot-link constraints (two data points cannot be within the same cluster). It differs from traditional clustering in the way the constraints are accommodated: either by biasing the search for relevant clusters or altering a distance or similarity metric [29]. When it is not possible for an SL method to work, even in a transductive form, because the available knowledge is too far from being representative of a target classification of the items, the cluster assumption may allow the use of the available knowledge to guide the clustering process [30]. Bair [25] provides a survey on SS Clustering methods and groups them into constraint-based, partial-labels, SS hierarchical clustering and outcome variable associated methods.

A plethora of SSL approaches have been proposed in the literature, each making use of at least one of the SSL assumptions described. The following sections briefly describe the frequently used SSL methods showing how they relate to the SSL assumptions.

2.1.1 SSL for classification and regression

We divide the classification and regression methods between the two main classes: inductive SSL and transductive SSL.

2.1.1.1 Inductive methods

The goal of inductive methods is to build a model from labelled and unlabeled data and use the model as a built-in SL (only with labelled data) to make predictions on unlabeled data. Inductive methods can further be divided into wrapper methods, unsupervised preprocessing, and intrinsically semi-supervised methods. In wrapper methods, one or more supervised-based learners are first trained based on the labelled data only, then the learner or set of learners are applied to the unlabeled data to generate pseudo-labels which are used for training in the next iterations. Pseudo-labels, \(l(x_i)\), \(k<i<k+u\), are simply the most confident labels produced by the learner or set of learners for a set of unlabeled samples, \(\mathcal {X}_U \subset \mathcal {D}_U\), [31]. The wrapper methods we will consider are self-training and co-training. According to the way they make use of the unlabeled data, unsupervised preprocessing methods can be divided into feature extraction, unsupervised clustering and parameter initialization or pre-training.

2.1.1.1.1 Wrapper methods In wrapper methods, a model is first trained on labelled data to generate pseudo-labels for an unlabeled subset, \(\mathcal {X}_U \subset \mathcal {D}_U\), then the model is iteratively re-trained, until all unlabeled data are labelled or some stopping criterion is met, with a new dataset containing both the labelled dataset, \(\mathcal {D}_L\), and the pseudo-labels, \(l{(x_i)}\), \(k<i<k+u\), of the subset \(\mathcal {X}_U\), generated in previous iterations. They are the most well-known and oldest SSL methods [27, 31]. Wrapper methods may be used for classifictaion and regression and are divided into three categories: self-training, co-training, and boosting.

-

1.

Self-training. Self-training [32] also referred to as self-learning, are wrapper methods that consist of a single base SL learner that is iteratively trained on a training set consisting of the original labelled data and the high-confidence predictions, pseudo-label, from the previous iterations. They are the most basic wrapper methods [31] and may be applied to most, if not all, SL algorithms such as Random Forests (RF) [33], Support Vector Machines (SVM) [34], etc.

-

2.

Co-training. Co-training methods, [35, 36], assume that (i) features can be split into two or more distinct sets or views; (ii) each feature subset is sufficient to train a good classifier; (iii) the views are conditionally independent given the class label. Co-training extends the principle of self-training to multiple SL learners that are each iteratively trained with the pseudo-labels from the other learners, in other words, learners “teach” each other with the added pseudo-labels to improve global performance. For co-training to work well, the sufficiency (ii) and independence (iii) assumptions should be satisfied [35]. Multi-view co-training, the basic form of co-training, constructs two learners on distinct feature sets or views. When no natural feature split is known a priori, single-view co-training may be used to build two or more weak learners with different hyper-parameters on the same feature set. There exist several approaches based on single-view co-training such as tri-training [37], co-forest [38], co-regularization [39], etc. In co-regularization, the two terms of the objective function minimize the error rate and optimize the disagreement between base learners [39].

2.1.1.1.2 Unsupervised preprocessing The unsupervised preprocessing methods use \(\mathcal {D}_U\) and \(\mathcal {D}_L\) at two different steps. The first step often consists of extraction (feature extraction) or transformation (unsupervised clustering) of the feature space or for initialization of a model’s parameters (pre-training) while the second step consists of using knowledge from \(\mathcal {D}_L\) to label the unlabeled data points in \(\mathcal {D}_U\). We briefly describe the methods in the next points.

-

1.

Feature Extraction: Feature extraction is one of the most critical steps to take in ML. It consists of extracting a set of relevant features for ML models to work. Typically, SSL feature extraction methods consist of either finding lower-dimensional feature spaces, from \(\mathcal {X}\), without sacrificing significant amounts of information or finding lower-dimensional vector representations of highly dimensional data objects by considering the relationships between the inputs. Examples of SSL feature extraction methods are autoencoder (AE) [14] and a few of its variants, such as denoising autoencoder [40] and contractive autoencoder [41], and methods in NLP (Natural Language Processing) such as Word2Vec [42], GloVe [43], etc.

-

2.

Unsupervised clustering: Also referred to as cluster-then-label methods, these methods explicitly join the SL or SSL classification or regression algorithms and UL or SSL clustering algorithms. The UL or SSL clustering algorithm first clusters all the data points, then those clusters are fed to the SL or SSL classifier or regressor for label inference [44,45,46].

2.1.1.1.3 Intrinsically semi-supervised Intrinsically semi-supervised methods are typically extensions of existing SL methods to directly include the information from unlabeled data points in the loss function. Regarding the SSL assumption they rely on, these methods can be further grouped into four categories: (i) maximum-margin methods, where the goal is to maximize the distance between data points and the decision boundary (low density-assumption), (ii) perturbation-based methods, often implemented with neural networks (NN), rely directly on the smoothness assumption (a noisy, or perturbated, version of a data point should have the same predicted label, as the original data point), (iii) manifold-based methods either explicitly or implicitly estimate the manifolds on which the data points lie and (iv) generative models whose primary goal is to infer a function that can generate samples, similar to the available samples, from random noise.

2.1.1.2 Transductive methods

A learner is said to be transductive if it only works on the labelled and unlabeled data available at training and cannot handle unseen data [17]. The goal of a transductive learner is to infer labels for an unlabeled dataset \(\mathcal {D}_U\), using \(\mathcal {D}_L\). If a new unlabeled data point, \(x_u \notin \mathcal {D}_U\), is given, the learner must be reapplied, from scratch to all the data, i.e., \(\mathcal {D}_L\), \(\mathcal {D}_U\), and \(x_u\). Graph-based methods, which are often transductive in nature, define a graph where the nodes are labelled and unlabeled samples in the dataset, and edges (weighted) reflect the similarity of the samples. These methods usually assume label smoothness over the graph. Graph methods are non-parametric and discriminative [17]. The defined loss function is optimized to achieve two goals: (i) for already labelled samples, from \(\mathcal {D}_L\), the inferred labels should correspond to their true labels and (ii) the predicted labels of similar samples on the graph be the same. A transductive learner’s task may be classification or regression.

2.1.2 SSL for clustering

Semi-supervised clustering methods can be used with partially labelled data as well as other types of outcome measures. When cluster assignments, or partial labels, for a subset of the data, are known beforehand, the objective is to classify the unlabeled samples using the known cluster assignments [47], this is, in a sense, equivalent to an SL problem. When more complex relationships among the samples are known in the form of constraints, the problem becomes a generalization of the previous objective and is either called constrained clustering [48], i.e., an existing clustering method is modified to satisfy the constraints, or distance-based (metric-based) clustering, i.e., an alternative distance metric is used to satisfy the constraints [49, 50].

Hierarchical and partitional clustering techniques are the two main types of clustering algorithms. Hierarchical clustering methods recursively locate nested clusters in either agglomerative or divisive mode. In agglomerative mode, they start with each data point in its own cluster and merge the most similar clusters successively to form a cluster hierarchy and in divisive or top-down mode, they start with all the data points in one cluster and recursively divide each cluster into smaller clusters [51]. SS Hierarchical clustering methods group samples using a tree-like architecture, known as a hierarchy. They either built separate hierarchies for must-link and cannot-link constrained samples [52,53,54,55] or use other types of constraints [56,57,58,59,60]. Finally, SS Clustering may be used to build clusters related to a given outcome variable [61].

We refer the interested reader to [16, 25, 26, 29, 62] for detailed descriptions of the methods mentioned in this section.

2.2 Cybercrimes

As mentioned in Sect. 1, a cyber-attack is any offensive maneuver that targets computer systems aiming at information disclosure, theft of or damage to their hardware, software, or electronic data, as well as from the disruption or misdirection of the services they provide, and cyber-security can be defined as the protection of computer systems against cyber-attacks [19]. Cybercrimes are criminal activities that involve the use of digital technologies such as computers, smartphones, the internet, and other digital devices [63]. From a legal perspective, cybercrimes can be defined as criminal offences that involve the use of a computer or a computer network [64]. The cyber-attacks covered in this article can all be seen as specific types of cybercrime, we, therefore, use the two terms interchangeably. Note that different jurisdictions may have different laws regarding what constitutes a cybercrime or cyber-attack. Therefore, an activity that is considered a cyber-attack in one jurisdiction may not be considered a cybercrime in another jurisdiction, depending on the specific laws in each location but cybercrimes typically involve the illegal or unauthorized use of digital technologies such as computers [63, 64]. Additionally, some activities that are not considered cyber attacks in some jurisdictions may still be considered cybercrimes if they violate specific laws related to computer systems and networks [63]. Cybercrimes may also be viewed from technical [65] and procedural [66, 67] perspectives.

The IBM X-Force Incident Response and Intelligence Services (IRIS) estimated the profit made by a group of attackers to be over US$123 million in 2020 [68] and the Cost of a Data Breach report published in 2021 by IBM Security estimates the global average cost per incident to US$4.24 million [69]. Cybercriminals are always taking advantage of catastrophes, disasters, and hot events for their own gains. A clear example is the surge in cybercrimes of all sorts witnessed at the beginning of the pandemic.

The following subsections briefly describe the cybercrimes countered in the covered literature.

2.2.1 Network intrusion

Any unlawful action on a digital network is referred to as network intrusion. Network intrusions or breaches can be thought of as a succession of acts carried out one after the other, each dependent on the success of the last. The stages of the intrusion are sequential, beginning with reconnaissance and ending with the compromising of sensitive data [70]. These principles are useful for managing proactive measures and finding bad actors’ behaviour. Network intrusions often include the theft of valuable network resources and virtually always compromise network and/or data security [71, 72]. Living off the land, multi-routing, buffer overwriting, covert CGI scripts, protocol-specific attacks, traffic flooding, Trojan horse malware, and worms are the most frequent intrusion attacks.

Some intruders will attempt to implant code that cracks passwords, logs keystrokes, or imitates a website in order to lead unaware users to their own. Others will infiltrate the network and steal data on a regular basis or alter websites accessible to the public with a range of messages. Intruders may get access to a computer system in a number of ways, including internally, externally, or even physically.

2.2.2 Phishing

IBM X-Force identified phishing as one of the most used attack vectors in 2021 because of their ease of use and low resource requirements [73]. Phishing is a form of cybercrime where the attackers’ aim is to trick users into revealing sensitive data, including personal information, banking, and credit card details, IDs, passwords, and more valuable information via replicas of legitimate websites of trusted organizations. Phishing attacks can be grouped into deceptive phishing and technical subterfuge [74]. Deceptive phishing is often performed via emails, SMS, calendar invitations, using telephony, etc., and technical subterfuge is the act of tricking individuals into disclosing their sensitive information through technical subterfuge by downloading malicious code into the victim’s system. We refer the reader to a recent in-depth study on phishing attacks [74].

2.2.3 Spam

Spam, not to be mistaken for canned meat, may be defined as unsolicited and unwanted messages, typically sent in bulk, that can take several forms such as email, text messages, phone calls, or social media messages. The content of spam messages can vary widely, but they are often commercial in nature and aim to advertise a product or service or promote a fraudulent scheme or solicit donations [75].

2.2.4 Malware

Malware or malicious software is defined as any software that intentionally executes malicious payloads on victim machines (computers, smartphones, computer networks, and so on) to cause disruptions. There exist several varieties of malware, such as computer viruses, worms, Trojan horses, ransomware, spyware, adware, rogue software, wipers, and scareware. In the 2022 Threat Intelligence Index, IBM X-Force reported that ransomware, a type of malware, was again the top attack type in 2021, although decreasing from 23%, in 2020, to 21% [73]. Defensive tactics vary depending on the type of malware, but most may be avoided by installing antivirus software and firewalls, applying regular patches to decrease zero-day threats, safeguarding networks from intrusion, performing regular backups, and isolating infected devices.

2.2.5 Other cyber-attacks

In addition to intrusions, spam, phishing and malware, we also discuss SSL applications for:

-

1.

Traffic classification - traffic classification may be used to detect patterns suggestive of denial-of-service attacks, prompt automated re-allocation of network resources for priority customers, or identify customer use of network resources that in some manner violates the operator’s terms of service [76];

-

2.

Sybil detection—a Sybil attack may be defined as an attack against identity in which an individual entity masquerades as numerous identities at the same time [77];

-

3.

Stock market manipulation detection—market manipulation may be defined as an illegal practice in an attempt to boost or reduce stock prices by generating an illusion of an active trading [78, 79];

-

4.

Social bot detection—a social bot may be defined as a social media account that is operated by a computer algorithm to automatically generate content and interact with humans (or other bot users) on social media, in an attempt to mimic and possibly modify their behaviour [80, 81];

-

5.

Shilling attack detection—a Shilling attack is a particular type of attack in which a malicious user profile is injected into an existing collaborative filtering dataset to influence the recommender system’s outcome. The injected profiles explicitly rate items in a way that either promotes or demotes the target items [82];

-

6.

Pathogenic social media account detection—Pathogenic Social Media (PSM) accounts refer to accounts that have the capability to spread harmful misinformation on social media to viral proportions. Terrorist supporters, water armies, and fake news writers are among the accounts in this category [83, 84];

-

7.

Fraud detection—in the banking industry such as credit card fraud detection. Credit card fraud may happen when unauthorized individuals obtain access to a person’s credit card information and use it to make purchases, other transactions, or open new accounts [85]; and

-

8.

Detection of attacks on other platforms such as the power grid - the smart grid enables energy customers and providers to manage and generate electricity more effectively. The smart grid, like other emerging technology, raises new security issues [86].

2.3 Examples of industry deployments of ML in cyber-security

This section presents examples of successful industrial deployments of ML for countering cyber threats. The first example is “IBM X-Force Threat Management” [87], an ML platform deployed to counter cyber threats. IBM X-Force Threat Management is a cloud-based security platform that leverages ML to provide advanced threat detection and response capabilities. It analyzes massive amounts of security data, including network traffic, system logs, and user behaviour, to identify and respond to potential threats in real-time using ML algorithms. The ML models are trained on large datasets of historical security events, allowing the system to learn and adapt to new threats over time. Depending on the use case and data available, it is possible that IBM X-Force Threat Management may use a combination of ML techniques, such as SSL and Reinforcement Learning, in addition to other optimization methods for enhancing security policies. However, it should be noted that without specific information from IBM, it cannot be definitively confirmed whether these techniques are actually employed. Nonetheless, the platform has demonstrated success in detecting various types of cyber threats, including banking Trojans such as IcedID,Footnote 1 TrickBot and QakBot.

The second example is the Deep Packet Inspection (DPI) system developed by Darktrace, a cyber-security company. The system uses unsupervised ML algorithms to learn the expected behaviour of a network and detect anomalies that may indicate malicious activity. The system can also automatically respond to detected threats by initiating a range of actions, such as quarantining a device or blocking network traffic. Darktrace has deployed its DPI system in various industries, including healthcare, finance, and energy. In one instance, a UK construction company used the system to detect and respond to a ransomware attack.Footnote 2 The system identified the attack within minutes of it starting and initiated a range of responses, including blocking the attacker’s IP address and quarantining affected devices. The company was able to contain the attack and avoid paying the ransom demanded by the attackers.

Our third example is Feedzai, an ML platform that provides fraud prevention and anti-money laundering for financial institutions and businesses. Feedzai employs a variety of ML techniques, including Deep Learning and combining SL and UL (SSL),Footnote 3 to detect and prevent fraudulent activity in real-time. After partnering with a large European bank, Feedzai’s platform reduced false positives and accurately identified fraudulent activity, resulting in lower losses due to fraud.Footnote 4

Overall, IBM X-Force Threat Management, Darktrace, and Feedzai demonstrate how ML can be successfully deployed in the industry to counter cyber threats and provide advanced threat detection and response capabilities.

3 Review methodology

This section provides the details of the methodology we followed. To achieve our goal of reviewing the datasets and evaluation metrics used in the applications of SSL techniques to cyber-security, we followed the standard systematic literature review guidelines outlined in [88] for assessing the search’s completeness. The entire process was done on Covidence [89], an online tool for systematic review management and production. We first defined our three research questions shown below. These are motivated by the need to examine the efforts being made to safeguard users and computer systems against attacks using SSL. This stems from the fact that attacks are far more harmful than vulnerability scans or related operations. We intend to review the datasets as well as the evaluation metrics used in the literature identifying the cyber-attacks as soon as possible to take the necessary actions to reverse them.

-

RQ. 1:

With the introduction and use of SSL in cyber-security, what are the assessment metrics used to evaluate the built models?

-

RQ. 2:

What datasets are the proposed SSL approaches built upon? What are the most used datasets?

-

RQ. 3:

What are the open challenges with respect to the datasets and performance assessment metrics?

Our inclusion and exclusion criteria were then defined from the above research questions. A paper is included if it directly applies SSL for detecting at least one of the cyber-attacks mentioned in Sect. 2.2. with enough details to address our research questions. On the other hand, a paper is excluded if (i) another paper of the same authors superseded the work, in which case the latest work is considered, (ii) it does not use SSL for the inclusion criteria and (iii) the approach is discussed at a high level, with insufficient information to fulfill the research questions. The entire process was done on Covidence [89], an online tool for systematic review management and production. We then queried IEEE Xplore and ACM Digital Library for articles having (“semi-supervised learning” AND “cyber-security”), (“semi-supervised” AND “cyber-security”) and (“semi-supervised” AND “security”) anywhere within the article.

The keywords (“semi-supervised learning” AND “cyber-security”) have been chosen because SSL has been increasingly used in cyber-security to improve the accuracy of detection and classification systems [90]. This combination has been used to find articles that specifically focus on using SSL in cyber-security tasks such as intrusion detection, malware detection, network traffic analysis, etc. Similarly, the combination of (“semi-supervised” AND “cyber-security”) has been used to find articles that discuss semi-supervised learning in a cyber-security context, even if they do not explicitly mention the phrase “semi-supervised learning”. Finally, the combination (“semi-supervised” AND “security”) has been used to broaden the search beyond just cyber-security and potentially include other domains where SSL has been applied to security-related tasks.

Note that we did not limit the search to the title, abstract or keywords because it was essential to making sure to find all the articles discussing and applying SSL methods for cyber-security for screening. The reason we chose these databases is that they are among the top databases suggested by our university library for conducting Computer Science research and they also contain papers published in top-tier venues. To complement the results obtained from IEEE Xplore and ACM Digital Library, we submitted the same search queries to Google Scholar and extracted the top 200 search results sorted by relevance. The combinations mentioned earlier and this search strategy allowed us to find articles that are relevant to using SSL in cyber-security, and gain a better understanding of how it is being/has been used to improve security systems.

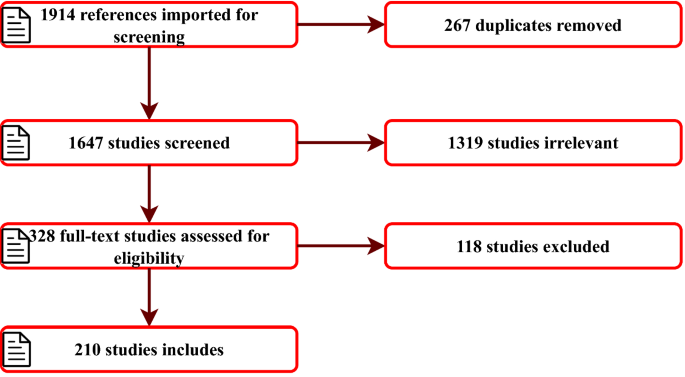

As seen in Fig. 2, in total, 1914 studies were imported for screening; 267 duplicates were automatically removed, and the remaining 1647 studies’ titles and abstracts were manually screened for relevance. Based on our inclusion and exclusion criteria, 1319 studies were found irrelevant, because they either did not discuss SSL methods or cyber-attack defences. The remaining 328 studies’ full texts were further assessed as they were either partially or fully related to our inclusion criteria, and finally, 210 relevant studies were included for data extraction. Furthermore, we used state-of-the-art surveys and review articles on SSL [16, 27] and ML for cyber-security [4] to construct this extensive review of cyber-security datasets and performance evaluation metrics for SSL models.

4 Datasets and performance assessment metrics

In this section, we summarize and analyze the public datasets and performance assessment metrics used in the selected papers.

4.1 Datasets and repositories

AI, especially ML, has proven itself a particularly useful tool in cyber-security as well as other fields of computer science and has extensively featured in the literature for cybercrime or malicious activity detection. “Cost of a Data Breach” [69], published by IBM Security, reported a US$3.81 million, or almost 80% difference between breach costs of companies with fully deployed security AI/ML and automation and companies without security AI/ML and automation. We present the public datasets used in the covered literature in this section, grouped by type of attack and show their usage in the selected papers in Figs. 3, 4, 5, and 6. Note that we acknowledge the difference between Spam and Phishing in Subsections 2.2.3 and 2.2.2 as they are different attack vectors but due to the scarcity of these datasets, we have combined them in a single section.

4.1.1 Network intrusion datasets and sources

In terms of network intrusion, we found a total of 18 public datasets and sources in the papers we reviewed. We begin by providing a brief description of each dataset; we, then, provide a summary of their main characteristics as well as some key data usage statistics.

-

1.

KDD’99 and NSL-KDD. The KDD’99 dataset is a statistically preprocessed dataset which has been available since 1999 from DARPA [91], it is an updated version of the DARPA98. It is the most used dataset in the selected papers. The dataset has three components, basic, content and traffic features, making a total of 41 features for normal and simulated attack traffic. The NSL-KDD dataset, proposed by Tavallaee [92], is a version of the KDD’99 dataset in which redundant records are removed to enable the classifiers to produce unbiased results. The two datasets contain various attack types such as Neptune-DoS, pod-DoS, Smurf-DoS, and buffer-overflow. Table 1 gives a brief composition of the KDD’99 and NSL-KDD datasets.

-

2.

Moore Set. The Moore Set [93] was prepared in 2005 by researchers at Intel Research. It comprises real-world traces collected by the high-performance network monitor. Each object in the Moore set represents a single flow of TCP packets between client and server, which consists of 248 characteristics. The information in the features is derived using packet header information alone, while the classification- class has been derived using content-based analysis. Table 2 shows a brief composition of the Moore Set.

-

3.

LBNL2005. The Lawrence Berkeley National Laboratory (LBNL) 2005 traffic traces were collected at the LBNL/ICSI under the Enterprise Tracing Project over a period of three months in 2004 and 2005 on two routers [94]. It contains full header network traffic recorded at a medium-sized enterprise covering 22 subnets and includes trace data for a wide range of traffic including web, email, backup, and streaming media. Because the traffic traces are completely anonymized, all the packets do not have a payload. As seen in Table 3, the LBNL trace consists of five datasets labelled: D0–D4. The “Per Tap” row specifies the number of traces collected on each monitored router port while the “Snaplen” row gives the maximum number of bytes recorded for each packet.

-

4.

CAIDA Datasets. The Centre for Applied Internet Data Analysis (CAIDA), based at the University of California’s San Diego Supercomputer Center, collects a variety of data from geographically and topologically diverse locations and makes it available to the research community to the extent possible while respecting the privacy of individuals and organizations who donate data or network access. The CAIDA-DDoS Dataset [95], comprises approximately one hour of anonymized traffic from a DDoS attack on August 4, 2007 (20:50:08 UTC to 21:56:16 UTC). This type of denial-of-service attack tries to prevent access to the targeted server by using all of the server’s computational power and all of the bandwidth on the network linking the server to the Internet. The traces only include attack traffic to the victim and responses to the attack from the victim. Non-attack traffic has been eliminated to the greatest extent practicable.

-

5.

Kyoto2006+. The Kyoto2006+ is a publicly available benchmark dataset, consisting of 24 statistical features, that is built on three years of network traffic, from November 2006 to August 2009 [96]. It covers both regular servers and honeypots deployed at Kyoto University in Japan labelled as normal (no attack), attack (known attack) and unknown attack. It includes a variety of attacks performed against the honeypots such as shellcode, exploits, DoS, port scans, backscatter, and malware, shown in Table 4. An updated version of the dataset contains additional data collected from November 2006 to December 2015 [97].

-

6.

UNIBS2009. The UNIBS-2009 trace [98], was compiled by the University of Brescia in 2009. It consists of traffic traces collected by running Tcpdump on the edge router of the university’s campus network on three consecutive working days (2009.9.30, 2009.10.1 and 2009.10.02) connecting the network to the Internet through a 100 Mbps uplink. As shown in Table 5, the dataset supplies the true labels, and the traffic trace includes Web (HTTP and HTTPS), Mail (POP3, IMAP4, SMTP and their Secure Sockets Layer variants), Skype, P2P (BitTorrent, Edonkey), SSH (Secure Shell), FTP (File Transfer Protocol) and MSN.

-

7.

UNB ISCX-2012. The Installation Support Center of Expertise (ISCX)-2012 dataset has been prepared at the ISCX at the University of New Brunswick [99]. It is built on 7 days of network traffic, shown in Table 6, and consists of over two million traffic packets characterized by 20 features taking nominal, integer, or float values. The dataset includes full packet payloads in pcap format.

-

8.

CTU-13. The CTU-13 dataset was compiled by the Czech Technical University [100]. It consists of botnet traffic captured in the university in 2011. The dataset includes thirteen scenarios, shown in Table 7, covering different botnet attacks, that use a variety of protocols and performing different actions, mixed with normal traffic and background traffic. The dataset is available in the forms of unidirectional flow, bidirectional flow, and packet capture.

-

9.

SCADA 2014. The Supervisory Control And Data Acquisition (SCADA) [101] is a database proposed by Mississippi State University Key Infrastructure Protection Center in 2014 to evaluate the industrial network intrusion detection model. It is one of the standard databases in the current industrial control network intrusion detection commonly used in experiments. It includes the Gas system dataset and Water storage system dataset from the Industrial Control System network layer.

-

10.

UNSW-NB15. The UNSW-NB15 dataset was compiled in 2015 by the University of New South Wales Canberra at the School of Engineering and IT, UNSW Canberra at ADFA, using a small, emulated network over 31 h by getting normal and malicious raw network packets. It consists of nine attack types: analysis, backdoors, DoS, exploits, generic, fuzzers, reconnaissance, shell code and worms. It consists of over two million records each characterized by 49 features taking nominal, integer, or float values. The dataset’s data distribution is shown in Table 8.

-

11.

AWID 2015. The Aegean Wi-Fi Intrusion Dataset (AWID), published in 2015 [102], comprises the largest amount of Wi-Fi network data (normal and attack) collected from real network environments. The 16 attack types can be grouped into flooding, impersonation, and injection. As seen in Table 9, the dataset contains over 5 million samples each characterized by 154 features, representing the WLAN frame fields along with physical layer meta-data.

-

12.

ISCXVPN2016. The ISCXVPN2016 [103], published by the UNB in 2016, comprises traffic captured using Wireshark and tcpdump, generating a total amount of 28GB of data. For the VPN, an external VPN service provider connected to using OpenVPN (UDP mode) was used. To generate SFTP and FTPS traffic an external service provider and Filezilla as a client was used. Table 10 shows the data distribution in the ISCXVPN2016 dataset.

-

13.

CIDDS. The Coburg Intrusion Detection Datasets (CIDDS), prepared at Coburg University of Applied Sciences (Hochschule Coburg), consist of several labelled flow-based datasets created in virtual environments using OpenStack. The CIDDS database’s most used dataset, CIDDS-001, released in 2017, covers four weeks of unidirectional traffic flows each characterized by 19 features taking nominal, integer, or float values. As seen in Table 11, the dataset includes attacks such as DoS, port scan and SSH brute force.

-

14.

CICIDS2017. The Canadian Institute for Cyber-security Intrusion - Evaluation Dataset (CIC-IDS)-2017 was produced in an emulated network environment at the CIC [104]. It is built on 5 days (July 3 to July 7, 2017) of network traffic, shown in Table 12, and includes a variety of most common attack types including FTP patator, SSH patator, DoS slowloris, DoS Slowhttptest, DoS Hulk, DoS GoldenEye, Heartbleed, Brute force, XSS, SQL Injection, Infiltration, Bot, DDoS (Distributed denial of service), and Port Scan each characterized by 80 features extracted using CICFlowMeter [103, 105]. The dataset also includes full packet payloads in pcap format.

-

15.

UGR’16. The UGR’16 dataset, proposed in 2018 by Maciá-Fernández et al. [106], comprises NetFlow network traces collected from a real Tier 3 ISP network made up of several organizations’ and clients’ virtualized and hosted services including WordPress, Joomla, email, FTP, etc. NetFlow sensors were installed in the network’s border routers to capture all incoming and outgoing traffic from the ISP. As seen in Table 13, two sets of data are provided: one for training models (calibration set) and the other for testing the models’ outputs (test set).

-

16.

Kitsune2019. The Kitsune Network Attack Dataset, Kitsune2019, has been prepared at Ben-Gurion University of the Negev, Israel and was released in May 2018 [107]. The dataset is composed of 9 files covering 9 distinct attacks situations on a commercial IP-based video surveillance system and an IoT network: OS (Operating System) Scan, Fuzzing, Video Injection, ARP Man in the Middle, Active Wiretap, SSDP Flood, SYN DoS, Secure Sockets Layer Renegotiation and Mirai Botnet. It contains 27,170,754 samples each characterized by 115 real features. The violation column in Table 14 indicates the attacker’s security violation on the network’s confidentiality (C), integrity (I), and availability (A).

-

17.

NETRESEC is a software company that specializes in network security monitoring and forensics. They also maintain.pcap repository files gathered from various Internet sources [108]. It is a list of freely accessible public packet capture repositories on the Internet. Most of the websites listed on their website provide Full Packet Capture (FPC) files, however, others only provide truncated frames.

-

18.

MAWI archive. The MAWI archive [109] consists of an ongoing collection of daily Internet traffic traces captured within the WIDE backbone network at several sampling points. Tcpdump is used to retrieve traffic traces, and the IP (Internet Protocol) addresses in the traces are encrypted using a modified version of Tcpdpriv (MAWI Working Group Traffic Archive (http://www.wide.ad.jp)). The samplepoint-F consists of daily traces at the transit link of WIDE to the upstream ISP and has been in operation since 01/07/2006.

-

19.

KaggleFootnote 5 is an online data sharing and publishing platform. It includes security-based datasets such as KDD’99 and NSL-KDD. Registered users can also upload and explore data analysis models.

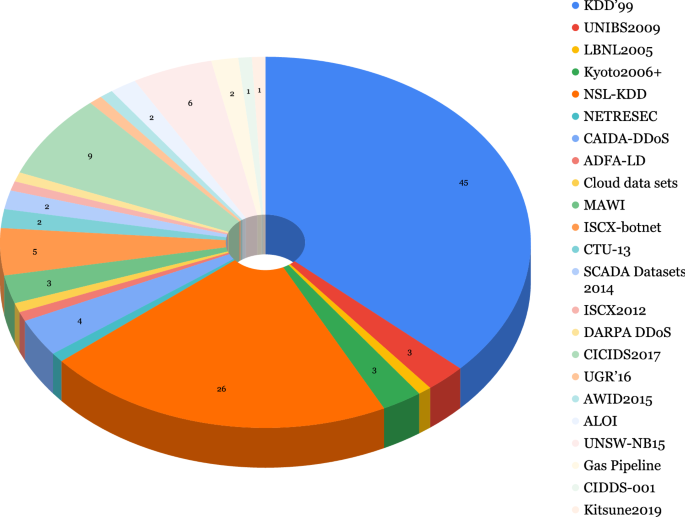

A breakdown of the usage of the Intrusion Detection datasets in the selected papers is shown in Fig. 3, we also provide an overview of the Network Intrusion datasets in Table 15. As seen in Fig. 3, the KDD’99 dataset, despite being old and containing redundant and noisy records, is the most used of the 17 intrusion detection datasets described in this section. 45 out of the 100 selected papers used either the KDD’99 alone or in conjunction with some other intrusion detection dataset. This dataset is followed by the NSL-KDD dataset which is only a smaller version without the redundant and noisy records present in KDD’99. Additionally, none of these datasets are balanced, therefore suitable evaluation metrics should be used when evaluating models built on these datasets. We must highlight that the four most recent datasets used in the papers reviewed were already published in 2017 and 2018 and they have not been extensively explored in an SSL context. Finally, we refer the interested reader to a recent comprehensive survey of Network-based Intrusion datasets [2].

4.1.2 Spam and phishing datasets and sources

-

1.

Spam Email. The SPAM Email Dataset contains a total of 4601 emails including 1813 spam emails and 2788 legitimate emails each characterized by 58 attributes. It was donated to the UCI Machine Learning Repository by Hewlett Packard in 1999 [110].

-

2.

Ling-Spam. The Ling-Spam dataset, proposed by Androutsopoulos et al. [111] in 2000, contains both spam and legitimate emails retrieved from an email distribution list, the Linguistic list, focusing on linguistic interests around research opportunities, job postings, and software discussion. The dataset contains 2,893 different emails, of which 2,412 are genuine emails collected from the list’s digests and 481 are spam emails retrieved from one of the corpus’ authors.

-

3.

WEBSPAM-UK2006. The WEBSPAM-UK2006 dataset was obtained using a set of.UK pages downloaded by the Laboratory of Web Algorithmics of the University of Milan (Università degli Studi di Milano) and manually assessed by a group of volunteers in 2006. The dataset consists of labels, URLs and hyperlinks and HTML page contents of 77,741,046 Web pages [112].

-

4.

SpamAssassin (spamassassin.apache.org). Apache SpamAssassin is an Open-Source anti-spam platform providing a filter to classify email and block spam. The SpamAssassin Public mail corpus is a selection of 6,047 emails prepared by SpamAssassin in 2006. Of the total count, there are 1,897 spam messages and 4,150 legitimate emails.

-

5.

TREC2007 Public Corpus. The TREC 2007 Public Corpus contains all email messages delivered to a particular server. The server contained several accounts, fallen into disuse and several ‘honeypot’ accounts published on the web, which were used to sign up for a few services, some legitimate and some not. The TREC dataset contains 75,419 messages, of which 25,220 are legitimate emails and 50,199 are junk messages; the messages are divided into three subcorpora [113].

-

6.

SMS Spam Collection. The SMS Spam Collection Dataset is a publicly available dataset created by Almeida et al. [114,115,116] in 2011. It is a labelled dataset of 5574 SMS messages, 747 spam and 4827 ham, collected from mobile phones.

-

7.

“Gold standard” opinion spam. The “gold standard” opinion spam dataset was proposed by Ott et al. [117] in 2011. The corpus comprises 1,600 review texts, 800 deceptive and 800 genuine, on 20 hotels in the Chicago area. The genuine reviews were obtained from reviewing websites such as TripAdvisor, Expedia and Yelp and the deceptive ones were rendered using Amazon Mechanical Turk (AMT). In the dataset, 400 reviews are written with a negative sentimental polarity and 400 depict a positive sentimental polarity.

-

8.

Spear phishing email dataset (2011) & Benign email dataset (2013). These two datasets have been prepared by Symantec’s enterprise mail scanning service. The spear phishing email dataset contains 1,467 emails from 8 campaigns and the benign email dataset contains 14,043 emails. The emails were sent between 2011 and 2013, and have attachments, anonymous customer information and PII. The extraction process is described in [118, 119].

-

9.

MovieLens Dataset. The GroupLens Research has collected and made available rating datasets from the MovieLens website (https://movielens.org). The datasets were collected over various periods of time, depending on the size of the set. The MovieLens 20 M contains 20 million ratings and 465,000 tag applications applied to 27,000 movies by 138,000 users collected from January 1995 to March 2015 [120].

-

10.

Netflix. The Netflix datasetFootnote 6 consists of listings of all the movies and TV shows available on Netflix, along with details such as - cast, directors, ratings, release year, duration, etc.

-

11.

Twitter and Sina Weibo are two of the most influential social network media platforms in the world. Authors in the selected papers have either used crawlers or APIs to get sample data from these sources.

-

12.

PhishTank,Footnote 7DeltaPhish [121], Phish-LablsFootnote 8 and Anti-Phishing Working Group(APWGFootnote 9) are anti-phishing resources that publicly report phishing web pages in an effort to reduce fraud and identity theft caused by phishing and related incidents.

-

13.

YELPFootnote 10 and delicious.comFootnote 11 publish crowd-sourced reviews about businesses. Similar to Twitter and Sina Weibo, APIs and crawlers may be used to extract data from these sources.

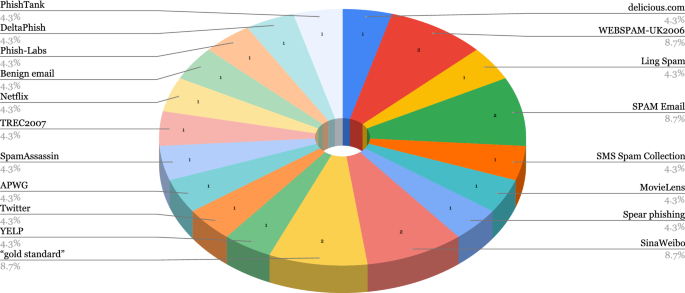

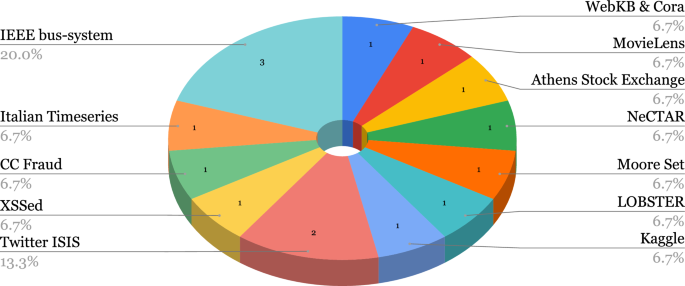

A breakdown of the usage of the described Spam and Phishing datasets in the selected papers is shown in Fig. 4, we also provide an overview of the Spam and Phishing datasets in Table 16. We observe that, in the revised works, there is no tendency towards using one or two specific datasets when tackling spam and/or phishing. In effect, the majority of the datasets are used in a single publication and only four, i.e. WEBSPAM-UK2006, Spam Email, SinaWeibo and “gold standard,” out of nineteen are used in two papers as shown in Fig. 4. Additionally, except for the “gold standard” dataset, none of these datasets is balanced.

4.1.3 Malware datasets and sources

-

1.

Georgia Tech Packed-Executable Dataset. The Georgia Tech Packed-Executable dataset [122] was published in 2008. It consists of 2598 packed viruses collected from the Malfease Project dataset (http://malfease.oarci.net), and 2231 non-packed benign executables collected from a clean installation of Windows XP Home plus several common user applications. The authors also generated 669 packed benign executables by applying 17 different executable packing tools freely available on the Internet to the executables in the Windows XP start menu. Of the 3267 packed executables in their collection, PEiD (http://peid.has.it), one of the most used signature-based detectors for packed executables, was able to detect only 2262 of them, whereas 1005 remained undetected. Therefore, those 1005 undetected samples were kept in the test and the train set contains 4493 samples: 2231 samples related to the non-packed benign executables and 2262 patterns related to the packed executables detected using PEiD.

-

2.

The Malimg Dataset [123], proposed in 2011 by the University of California, Santa Barbara, contains 9458 malware images from 25 families.

-

3.

The Malware Genome Project [124], proposed by researchers at the North Carolina State University in 2011, contains 1260 Android Malware samples belonging to 49 different malware families collected from August 2010 to October 2011.

-

4.

Malheur [125, 126], proposed in 2011, is a tool for the automatic analysis of malware behaviour in a sandbox environment.

-

5.

Malicia Dataset. The Malicia dataset [127, 128], published in 2013, comprises 11,688 malware binaries collected from 500 drive-by download servers over a period of 11 months in Windows Portable Executable format. The objective of their work was to identify hosts which spread malware in the wild and to collect samples of malware. In order to collect the samples of malware they set up a honeypot and clients in this honeypot were referring to the malware URL database for downloading and milking the website by resolving the IP address.

-

6.

CTU-Malware. The CTU-Malware dataset [129], also compiled by the Czech Technical University, consists of hundreds of captures (called scenarios) of different malware communication samples. Both malware and normal samples are included in the dataset as shown in Table 17.

-

7.

In 2015, Microsoft launched the Microsoft Malware Classification Challenge, along with the release of a dataset [130] consisting of over 20,000 malware samples belonging to nine families. Each malware file includes an identifier, which is a 20-character hash value that uniquely identifies the file, and a class label, which is an integer that represents one of the nine families to which the malware may belong.

-

8.

USTC-TFC2016. The USTC-TFC2016 dataset [131], published in 2017, consists of ten types of malware traffic from public websites which were collected from a real network environment from 2011 to 2015. Along with such malicious traffic, the benign part contains ten types of normal traffic which were collected using IXIA BPS, a professional network traffic simulation equipment. The dataset’s size is 3.71 GB in the pcap format. The dataset's composition is shown in Table 18.

-

9.

CICAndMal2017. The CICAndMal2017 android malware dataset, published in 2018 by the CIC [132], consists of four malware categories namely Adware, Ransomware, Scareware, and SMS Malware and 80 traffic features extracted using CICFlowMeter [103, 105]. The dataset includes 5,065 benign apps from the Google play market published in 2015, 2016, and 2017 and 426 malware samples belonging to 42 unique malware families. The dataset is fully labelled and contains network traffic, logs, API/SYS calls, phone statistics, and memory dumps of malware families shown in Table 19.

-

10.

CICMalDroid2020. Also published by the CIC in 2020, the CICMalDroid2020 dataset [133, 134] consists of more than 17,341 Android samples from several sources collected from December 2017 to December 2018. It includes complete capture of static and dynamic features and contains samples spanning between five distinct categories: Adware, Banking malware, SMS malware, Riskware and Benign. Out of 17,341 samples, 13,077 samples ran successfully while the rest failed due to errors such as time-out, invalid APK files, and memory allocation failures. Of the 13,077 samples, 12% failed to be opened mostly due to an “unterminated string” error. From the 11,598 remaining samples, 470 extracted features comprise frequencies of system calls, binders, and composite behaviours, 139 extracted features comprise frequencies of system calls and 50,621 extracted features comprise static information, such as intent actions, permissions, permissions, sensitive APIs, receivers, etc. A brief composition of the dataset is shown in Table 20.

-

11.

VxHeavensFootnote 12 is a website dedicated to providing information about malware. The archive comprises over 17,000 programs belonging to 585 malware families (Trojan, viruses, worms).

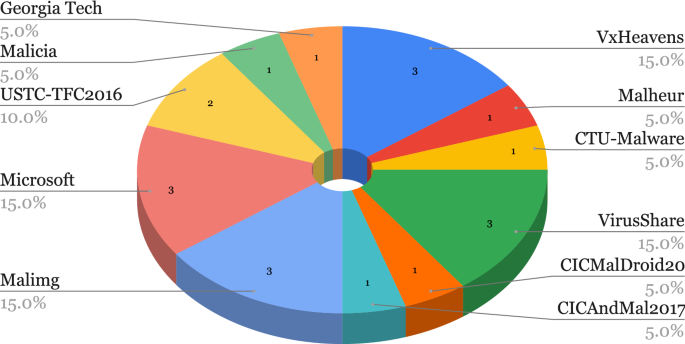

We provide an overview of the Malware datasets in Table 21. In Fig. 5, we also show a breakdown of the usage of the described Malware datasets in the selected papers. For these datasets, we observe that out of eleven datasets four have been used in three publications, one was used in two publications and the remaining six have been used only once. In addition, none of these datasets are balanced.

4.1.4 Additional datasets and sources

-

1.

IEEE Test Feeders. For nearly two decades, the Distribution System Analysis (DSA) Subcommittee’s Test Feeder Working Group (TFWG) has been constructing publicly available distribution test feeders for use by academics. These test feeders aim to create distribution system models that reflect a wide range of design options and analytic issues. The 13-bus and 123-bus Feeders are part of the Test Feeder systems created in 1992 to evaluate and benchmark algorithms in solving unbalanced three-phase radial systems. The DSA Subcommittee approved them during the 2000 Power and Energy Society (PES) Summer Meeting. Schneider et al. [135] summarize the TFWG efforts and intended uses of Test Feeders.

-

2.

XSSedFootnote 13 project was created in February 2007. It is an archive of cross-site scripting (XSS) vulnerable websites and provides information on things related to XSS vulnerabilities.

-

3.

The NeCTAR (National eResearch Collaboration Tools and Resources) cloud platform,Footnote 14 launched in 2012 by the Australian Research Data Commons, provides Australia’s research community with fast, interactive, self-service access to large-scale computing infrastructure, software and data.

-

4.

The Mobile-Sandbox [136] proposed by the University of Erlangen-Nurember, Germany, in 2014 is a static and dynamic analyzer system designed to support analysts detect malicious behaviours of malware.

-

5.

Credit Card Fraud. The dataset has been collected and analyzed during a research collaboration of Worldline and the Machine Learning Group (http://mlg.ulb.ac.be) of ULB (Université Libre de Bruxelles) on big data mining and fraud detection [137,138,139,140,141,142,143,144,145]. The dataset contains transactions made by credit cards in September 2013 by European cardholders. This dataset presents transactions that occurred in two days, where we have 492 frauds out of 284,807 transactions. The dataset is highly unbalanced, the positive class (frauds) accounts for 0.172% of all transactions. It contains only numerical input variables which are the result of a PCA transformation. Unfortunately, the original features and more background information about the data are not provided due to confidentiality issues. The only features not transformed with PCA are ’Time’ and ’Amount’. Feature ’Time’ contains the seconds elapsed between each transaction and the first transaction in the dataset. The feature ’Amount’ is the transaction Amount.

-

6.

Twitter ISIS Dataset. The Twitter ISIS dataset [84], published in 2018, consists of ISIS-related tweets/retweets in Arabic gathered from Feb. 2016 to May 2016. The dataset includes tweets and the associated information such as user ID, re-tweet ID, hashtags, number of followers, number of followees, content, date, and time. About 53 M tweets are collected based on the 290 hashtags such as State of the Islamic-Caliphate, and Islamic State. Table 22 provides a brief overview of the Twitter ISIS dataset composition.

-

7.

Italian Retweets Timeseries. The Italian Retweets Timeseries dataset [146], published in 2019, contains temporal data of about 5,121,132 retweets from 47,947 users taken from the Italian Twittersphere published between 18/06/2018 and 01/07/2018.

The breakdown of the usage of the additional datasets in the selected papers is shown in Fig. 6.

4.2 Performance assessment metrics

Frequently, a model’s performance is evaluated by constructing a confusion matrix [147], shown in Table 23, and calculating several metrics from the values of the confusion matrix. Table 24 shows the metrics commonly used to evaluate the performance of ML models. TP represents the true positives, the samples predicted as malicious or attacks that were truly malicious, TN the true negatives, the samples predicted as benign that were truly benign, FP the false positives, the samples predicted as attacks that were in fact benign, and FN the false negatives, the samples predicted as benign that were in fact attacks or malicious.

The accuracy score represents the fraction of correctly predicted samples, benign and malicious, and the error rate considers the misclassified samples. The accuracy metric may be misleading, especially when classes are highly imbalanced. The precision rate is the ratio of correctly predicted benign samples to all samples predicted as benign, and the sensitivity is the ratio of correctly predicted benign samples to samples to all benign samples. The Negative Predictive Value relates to the precision but considers the malicious samples; similarly, the specificity relates to the sensitivity but also considers the malicious samples. The False Positive (Negative) Rate is the ratio of malicious (benign) samples predicted as benign (malicious) to all the malicious (benign) samples. The \(F_1\)-score is the harmonic mean of the precision and recall scores. This metric aggregates two metrics to provide a more global view of the performance. The Geometric-Mean measures how balanced the prediction performances are on both the majority and minority classes.

The kappa (\(\kappa \)) statistic, introduced in [148], considers a model prequential accuracy, \(p_0\), and the probability of randomly guessing a correct prediction, \(p_c\). If the model is always correct, \(\kappa =1\), and if the predictions are similar to random guessing, then \(\kappa =0\). A \(\kappa < 0\) indicates less agreement than would be expected by chance alone. The Matthews Correlation Coefficient (also known as phi coefficient or mean square contingency coefficient), introduced in [149], may be seen as a discretization of the Pearson Correlation Coefficient [150], or Pearson’s r, for a binary confusion matrix. It measures the difference between predicted and actual values and returns a value between \(-1\) and \(+1\), where \(-1\) indicates a completely incorrect classifier and 1 indicates the exact opposite.

Researchers also use graphical-based metrics to observe the performance. However, these metrics make the comparison between different models more complex. For this reason, summarizations of graphical-based metrics are used. An example of such metrics is the receiver operating characteristic curve, or ROC curve, which provides a graphical representation of a binary classifier system’s diagnostic performance when its discrimination threshold is modified. The Area Under the ROC (AUC ROC or AUROC) represents the probability that a uniformly drawn random positive sample is ranked higher than a consistently drawn random negative sample. Like the ROC, the Precision-Recall Curve (PRC) employs multiple thresholds on the model’s predictions to compute distinct scores for precision and recall. Because computing the Area Under the PRC (AUPRC) is not as straightforward as the AUROC computation process, the interested reader is referred to [151] where a review of the main solutions proposed to compute the AUPRC is presented. Finally, training time and inference time are the time required to build a model and provide predictions, respectively.

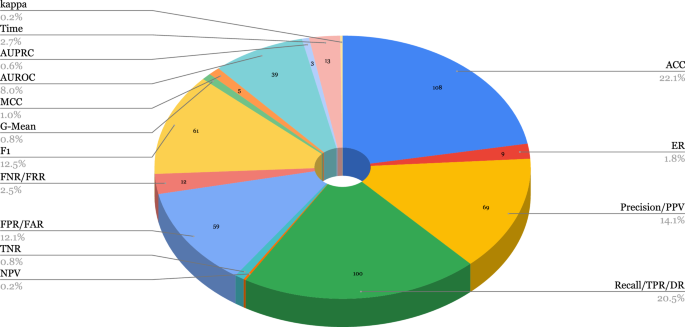

As seen in Fig. 7, where we present a breakdown of the usage of the evaluation metrics in the selected papers, the ACC is the most used of the 15 metrics considered for evaluation in the selected papers. In 108 out of the 210 selected papers, or 22.1%, the ACC is used for evaluation. It is followed by the DR which has been used in 100 papers, or 20.5%, the PPV which has been used in 69 papers, or 14.1% and the \(F_1\)-score which has been used in 61 papers, or 12.5%. As highlighted in Sect. 4.1, except for the “gold standard” dataset, none of the presented datasets is balanced, which points that the ACC measure is not a suitable metric for performance assessment. The DR, PPV and \(F_1\)-score, however, are more suitable metrics than the accuracy as they consider the class imbalance in datasets. In cyber-security, the DR is useful as there is a high cost associated to attacks, similarly the PPV is an important metric to consider as a low PPV indicates that benign samples or transactions are being flagged as attacks which renders the ML model useless. Due to the imbalanced nature of cyber-security datasets as seen in Sect. 4.1, the \(F_1\)-score is a useful assessment metric as it simply balances the DR and PPV. The least used metrics are the NPV and \(\kappa \)-score, which have both been used only once in the selected papers. The NPV is proportional to the frequency of attacks in the dataset, in other words, it is sensitive to imbalanced datasets. As a result, if the prevalence of attacks in the training dataset differs from the prevalence of attacks in the actual world, the computed NPV may be inaccurate. That is, as the prevalence of attacks decreases, the NPV increases because there are more true negatives for every false negative. This is because a false negative would imply that a data point is actually an attack, which is improbable given the scarcity of attacks [152]. Similarly, the \(\kappa \)-score is also sensitive to imbalanced datasets, therefore it is not suitable in the cyber-security domain where attacks are less frequent than benign samples or transactions. Finally, the time complexity (training and inference) is only reported in 2.7% of the selected papers.

5 Open issues and challenges

This section answers our third research question and presents the open challenges found in the literature. We cover open issues and challenges in the areas of the datasets and assessment metrics used, review the learnt lessons and recommend future research directions. Finally, we also discuss the challenge of the gap between research and practice in the field of cyber-security, particularly in the application of ML.

5.1 Datasets and repositories

In Sect. 4.1, we have described 45 datasets, repositories and sources. We summarize the key issues found related to the datasets in this subsection.

- Outdated datasets.:

-

Over 70 of the 100 reviewed articles focusing on intrusion detection used either the KDD’99 or the NSL-KDD datasets which are closed, anonymized, and outdated (over 20 years old) datasets. Similarly, the most recent Spam and Phishing email dataset used in the selected papers is from 2013. Therefore it is possible that some of the parts under consideration are no longer relevant due to changes in attack vectors and additional factors such as availability and comparability. Additionally, the use of outdated datasets hinders the ability to generalize the results to current real-world scenarios [153].

- Limited number of samples.:

-

Besides being outdated, both the Spam and Phishing datasets used in the selected papers, except for the TREC and WEBSPAM-UK, contain less data when compared to the intrusion datasets. They comprise 5000 or fewer samples, with the “gold standard” dataset containing only 1600 samples.

- Non-representative data distribution.:

-

Moreover, in addition to not only containing synthetically generated but also manually labelled data, the class imbalance in these datasets is not representative when compared to real-world scenarios, rendering the proposed approaches ineffective when applied to real data. This is one of the primary reasons why most academic methods are not implemented in practice.

- Lack of train/test splits to allow comparison of results.:

-

As shown in Table 15, apart from the KDD’99, NSL-KDD, UNIBS2009, AWID2015, UNSW-NB15 and UGR’16 datasets, the datasets in the selected papers are not originally split into train and test partitions, but even then, authors train and test their proposed approaches on random and narrower partitions of these datasets or train/test partitions

- Several datasets used are kept private.:

-

Most of the data collected from traffic or spam and/or phishing feeds are frequently kept private, making it impossible for other authors to reproduce results.

- Lack of benchmark datasets.:

-

There are no updated, standard and public benchmark datasets for the different cyber-security problems. Due to these facts, accurate comparisons of the approaches are impossible without having to re-implement them and obtain the data from sources such as traffic or phishing feeds.

In computer science, the quality of the output is decided by the quality of the input, as stated by George Fuechsel in the concept “Garbage in, Garbage out.”. We acknowledge the limitations of the reviewed datasets and repositories and advocate the need for the development of more up-to-date, standardized, and open benchmark cyber-security datasets that reflect the current state of cyber threats and attack vectors, those datasets should also be adequately separated into training/testing and validation partitions. Additionally, we recommend that future studies should consider using multiple datasets and testing the models on a variety of scenarios to improve the generalizability of the results and allow proper evaluation, comparison, and real-world applications.

5.2 Performance assessment metrics

In Sect. 4.2, we presented the 15 metrics used in the selected papers for assessing the performance of the SSL models built on the datasets presented in Sect. 4.1. In this subsection, we present an overview of the significant issues identified in relation to the performance assessment metrics.

- Overlooked/unused metrics.:

-

Throughout the selected papers, we have noticed that certain important assessment metrics are not used in most of the papers. For example, in [154], only the AUROC is reported, in [155, 156], only DR and FPR or FAR are reported, and in [157] only DR and ACC are reported. This shows that authors are giving more importance to certain metrics while overlooking others, such as PPV and \(F_1\)-score, which should be used in conjunction as they consider the class imbalance in datasets.

- Inadequate assessment frameworks.:

-

The accuracy is a misleading metric in imbalanced settings, however, it has been used alone in [158,159,160,161]. Furthermore, the accuracy can be inadequate for use in the real world, where data is typically unbalanced. In light of this, it is important to conduct assessments using realistic deployment situations with unbalanced data and adequate assessment frameworks. The chosen metrics must accommodate the needs of the target audience.

- Training and Inference time.:

-

Only 2.7% reported time complexity measurements, which is an important metric in the cyber-security domain where attack should be detected as soon as possible and static models often need to be rebuilt from scratch to detect unseen attacks, more importance should be given to this assessment metric as it is imperative to detect and mitigate those attacks in a timely manner.

- False alarm rate.:

-