Abstract

To process sequential data such as time series, and to avoid manually tuning the architecture of traditional recurrent neural networks (RNNs), this paper introduces a novel approach: the recurrent stochastic configuration network (ReSCN). The network is built on the random incremental algorithm of stochastic configuration networks (SCNs). Starting from the basic structure of an RNN, the learning model begins with a small recurrent network that has a single hidden layer containing a single hidden node. Hidden nodes are then added incrementally: the parameters of each new node are assigned by a random configuration process, and the corresponding output weights are determined constructively. This expansion continues until the network satisfies predefined termination criteria. The algorithm is well suited to time series data and outperforms traditional RNNs with similar architectures. The experimental results demonstrate the efficacy of the proposed ReSCN for sequence data processing and its advantages over conventional RNNs in the experiments performed.

1 Introduction

In practice, statistical learning problems across diverse domains, including financial economics, retail sales, and medical monitoring, frequently entail the analysis of time series data [1, 2]. The unique nature of time series data, characterized by the temporal ordering of observations, inherently poses challenges to their analysis. The intricacies involved in unraveling the patterns within time series data have led many to recognize time series analysis as a pivotal challenge within the realms of machine learning and data mining.

To process time series data effectively and exploit their historical information, a network must have short-term memory. Traditional feedforward networks are static and lack this memory. Machine learning approaches such as sliding-window methods, hidden Markov models, conditional random fields, and graph transformer networks offer notable advantages in handling complex data and building high-precision models, but practical experience has shown their limitations in dealing with natural data in raw form. Recurrent neural networks (RNNs), introduced by Rumelhart for sequential data processing [3], are a crucial deep learning methodology. Just as convolutional networks are specialized for grid-structured data such as images, RNNs are specialized for processing and predicting sequences. Notably, an RNN can take one sequence as input and generate another sequence as output. Extensive empirical evidence supports the efficacy of RNNs as learning models for time series prediction [1], and RNNs have achieved state-of-the-art results in sequence data applications, including sequence-to-sequence learning such as machine translation [4, 5] and speech recognition [6, 7]. This versatility underscores the pivotal role of RNNs in applications involving sequential data.

However, conventional RNNs face numerous challenges in design and implementation. The predominant training method for RNNs is backpropagation through time (BPTT) [8], a gradient-based optimization algorithm. Because it is a local optimization method, it often fails to cope with the vanishing and exploding gradient problems. To address these issues, Sepp Hochreiter and Jürgen Schmidhuber introduced the long short-term memory (LSTM) network in 1997 [9]. LSTM largely mitigated the vanishing gradient problem of earlier RNNs and made more complex tasks solvable, although exploding gradients can still occur. Moreover, determining a correct and efficient RNN architecture, speeding up learning, and reducing computational overhead remain significant challenges. Configuring RNNs manually by trial and error, without principled guidance, can be very time-consuming and may reduce the efficiency of the learning model. Addressing these issues is crucial for making RNNs more effective and practical.

A significant challenge for RNNs is that gradients may vanish or explode, depending on the choice of activation function: as sequences grow longer, the gradient may shrink or grow exponentially. Improvements to RNNs have therefore been widely studied, including echo state networks (ESNs) and liquid state machines (LSMs). ESNs [10] model time series without gradient-based training of their recurrent part: the input and reservoir weights are fixed at random, the echo states are obtained simply by running the reservoir forward, and only the linear readout is trained, so no gradients need to be propagated through time. ESNs can thus avoid the local minima and vanishing gradients that arise during backpropagation [11]. LSMs [12] likewise harness the computational power of recurrent networks without training the recurrent network directly. An LSM is a type of spiking (pulsed) neural network in which the conventional sigmoid activation function is replaced by a threshold function and each neuron acts as an accumulating memory unit. Consequently, when a neuron is updated, its value depends not only on the current input from neighboring neurons but also on its own accumulated history. When the accumulated value reaches a predefined threshold, the neuron releases its stored energy to downstream neurons, producing a pulsed pattern of quiescence interrupted by abrupt firing. LSMs follow the reservoir computing (RC) paradigm [13]; a comprehensive review of developments in this research area is given in [14].
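To make the gradient-free idea concrete, the following is a minimal echo state network sketch in NumPy. The reservoir size, the spectral radius of 0.9, the ridge penalty, the washout length, and the sine-prediction task are illustrative assumptions, not settings from [10]; the point is that the recurrent weights are never trained and only the linear readout is fitted, here by ridge regression.

```python
import numpy as np

def esn_fit_predict(u, y, n_res=100, rho=0.9, ridge=1e-6, washout=50, seed=0):
    """Drive a fixed random reservoir with input u and fit only a linear readout to y."""
    rng = np.random.default_rng(seed)
    # Assumption: uniform random weights and spectral-radius scaling (a common ESN recipe).
    w_in = rng.uniform(-0.5, 0.5, size=n_res)
    w_res = rng.uniform(-0.5, 0.5, size=(n_res, n_res))
    w_res *= rho / np.max(np.abs(np.linalg.eigvals(w_res)))

    # Forward pass only: reservoir states are computed, never trained.
    states = np.zeros((len(u), n_res))
    x = np.zeros(n_res)
    for t, u_t in enumerate(u):
        x = np.tanh(w_in * u_t + w_res @ x)
        states[t] = x

    # Drop the initial transient, then solve the ridge-regression normal equations.
    s, target = states[washout:], y[washout:]
    w_out = np.linalg.solve(s.T @ s + ridge * np.eye(n_res), s.T @ target)
    return s @ w_out, target

steps = np.arange(1000)
series = np.sin(0.1 * steps)
pred, target = esn_fit_predict(series[:-1], series[1:])   # one-step-ahead prediction
rmse = float(np.sqrt(np.mean((pred - target) ** 2)))
print(f"training RMSE: {rmse:.2e}")
```

Because the readout is linear in the states, training reduces to one linear solve, which is exactly why no gradient has to be propagated through time.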

In machine learning, the tendency of gradient-trained neural networks to converge slowly and get stuck in local minima has prompted interest in neural networks with random weights (NNRWs). NNRWs address these problems by assigning the input weights randomly and training only the output weights [15, 16]. Early studies highlighted the importance of the output-layer weights, suggesting that the other weights need not be adjusted once they are properly initialized. This idea appears in the seminal work of Broomhead and Lowe, which showed NNRWs outperforming conventional training methods such as backpropagation [17]. Randomized learning techniques for neural networks attracted scholarly attention in the late 1980s and continued to evolve with the introduction of various models. For instance, Igelnik and Pao introduced the random vector functional link (RVFL) model, which features a direct link between the input and output layers [18]. Although this model is a universal approximator for continuous functions with probability one, it has inherent limitations. In 2017, Wang Dianhui and colleagues proposed stochastic configuration networks (SCNs) [19] and later extended the concept to deep stochastic configuration networks [20]. SCNs significantly reduce the time required for network configuration while maintaining the accuracy and generalization ability of neural networks for regression and classification. SCNs have been applied successfully in diverse fields, including soft sensing, industrial process modeling, and robotic grasp recognition [21,22,23,24].
For learning sequence data with randomized neural network models, Ref. [25] uses SCNs with a deep stacked structure to handle unstable data streams, further demonstrating the versatility of this approach. Motivated by the broad learning system architecture, a broad SCN is presented in [26], which achieves higher regression accuracy and stability than the original SCN.
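The NNRW principle described above (random, fixed hidden parameters; output weights by least squares) can be sketched as an RVFL-style network with a direct input-output link. The tanh activation, the uniform range \([-2, 2]\), the 100 hidden nodes, and the test function are assumptions made for illustration, not settings from [18].

```python
import numpy as np

def rvfl_fit(x, y, n_hidden=100, scale=2.0, seed=0):
    """Random hidden weights stay fixed; only the output weights are solved by least squares."""
    rng = np.random.default_rng(seed)
    # Assumption: uniform weights in [-scale, scale] and tanh enhancement nodes.
    w = rng.uniform(-scale, scale, size=(x.shape[1], n_hidden))
    b = rng.uniform(-scale, scale, size=n_hidden)

    def features(z):
        # Enhancement nodes plus the direct input-to-output link of the RVFL model.
        return np.hstack([np.tanh(z @ w + b), z])

    beta, *_ = np.linalg.lstsq(features(x), y, rcond=None)
    return lambda z: features(z) @ beta

x = np.linspace(-1, 1, 200).reshape(-1, 1)
y = np.sin(3 * x)
model = rvfl_fit(x, y)
rmse = float(np.sqrt(np.mean((model(x) - y) ** 2)))
print(f"training RMSE: {rmse:.2e}")
```

The sketch also exposes the practical weakness the SCN line of work targets: nothing here says how to pick `scale` or `n_hidden`; they are fixed by hand.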

Inspired by the above discussion, we introduce a novel methodology tailored to time series data. The approach retains the conventional recurrent neural network (RNN) framework but differs in how the network is designed and trained. Its main innovations and benefits are as follows:

1. We introduce an RNN based on the stochastic configuration algorithm that automatically constructs an RNN with a single hidden layer. The model builds its network structure autonomously and determines a suitable number of hidden nodes.

2. Traditional RNNs usually require repeated training runs on experimental data to select the number of nodes; our algorithm avoids this repeated node selection. A single training run typically yields results for multiple datasets, which significantly reduces the time spent on network configuration experiments.

3. Compared with traditional neural networks and stochastic configuration networks (SCNs), our algorithm achieves comparable fitting with fewer nodes. By learning representations and processing sequential data with a sliding-window approach, it yields a more compact network with fewer parameters to configure. When training performance is comparable, the recurrent SCN achieves higher accuracy on test sequence data, indicating better generalization.

4. The recurrent SCN is trained end to end, without modular or staged training, so the learning process directly optimizes the overall task objective. For both univariate and higher-dimensional multivariate time series, the recurrent SCN outperforms both traditional RNNs and SCNs in the reported experiments.

2 Preliminaries

2.1 Artificial neural networks

Artificial neural networks (ANNs), also known as neural networks (NNs) or connectionist models, abstract and emulate key characteristics of the human brain or natural neural networks. In recent years, ANNs have attracted substantial attention owing to the following advantages: (1) Universal approximation: ANNs can approximate complex nonlinear relationships. (2) Robustness and fault tolerance: information is stored in a distributed manner across the neurons of the network, so ANNs are robust and tolerant to faults. (3) Parallel distributed processing: ANNs can perform a large number of operations quickly in parallel. (4) Learning and adaptability: ANNs can learn and adapt to unknown or uncertain systems. (5) Integration of quantitative and qualitative knowledge: ANNs can handle both kinds of knowledge simultaneously.

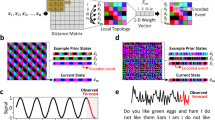

In terms of structure, a neural network is typically divided into an input layer, an output layer, and hidden layers (see Fig. 1). Each input-layer node corresponds to a predictor variable, and the output layer may contain multiple nodes representing target variables. The hidden layers lie between the input and output layers, and the complexity of the network is determined by the number of hidden layers and the number of nodes in each. The topology, or architecture, of a neural network is shaped by the number of hidden layers, the number of nodes, and the connectivity pattern between nodes. Building a neural network from scratch involves choosing the number of hidden layers and nodes, the activation function, and any constraints on the weights. Except for simple problems, training a neural network can be time-consuming.

2.2 Stochastic configuration networks

A stochastic configuration network (SCN) is an incremental learning method that adds hidden nodes one by one under a supervisory mechanism until preset conditions are met. Consider a training dataset with inputs X and outputs Y containing N samples, where each input \(x_i, i=1,2,\ldots ,N\), has d variables and each output \(y_i, i=1,2,\ldots ,N\), has m variables. Assume that the target function is \(f: \mathbb {R}^{d} \rightarrow \mathbb {R}^{m}\). An SCN starts from a single-hidden-layer feedforward network with one hidden node, configures the parameters of each new hidden node randomly, and gradually increases the number of hidden nodes. Denote the input weight matrix, the bias vector, and the output weight matrix by \(W, b, \beta\), respectively. Suppose that a single-hidden-layer feedforward network with \(L-1\) hidden nodes has been established, namely,
\[ f_{L-1}(x)=\sum _{j=1}^{L-1}\beta _j\, g_j\!\left( w_j^{\textrm{T}}x+b_j\right) , \qquad \beta _j=\left[ \beta _{j,1},\ldots ,\beta _{j,m}\right] ^{\textrm{T}}, \]

and the current residual error is \(e_{L-1}=f-f_{L-1}=\left[ e_{L-1,1},e_{L-1,2}, \ldots , e_{L-1,m}\right]\). If the current network does not meet the error tolerance, nodes are added one at a time and configured in a structured way to improve convergence, until the convergence conditions are finally met. Under the supervisory mechanism, the SCN randomly assigns the parameters of the basis function \(g_L\) of the new hidden node and then evaluates the output weights constructively. The output function \(f_L(x)\) is a linear combination of the previous network \(f_{L-1}\) and the newly appended node \(g_L\), i.e., \(f_L=f_{L-1}+\beta _L g_L\). Figure 2 shows the structure of SCNs.

To simplify the algorithm, for \(q=1,2,\ldots ,m\), define the output weights as follows:
\[ \beta _{L,q}=\frac{\left\langle e_{L-1,q},\, h_L\right\rangle }{h_L^{\textrm{T}}h_L}, \qquad q=1,2,\ldots ,m, \tag{3} \]

where the activation output of the \(L\)th hidden node over the N training inputs is expressed as
\[ h_L=\left[ g_L\!\left( w_L^{\textrm{T}}x_1+b_L\right) ,\ldots ,g_L\!\left( w_L^{\textrm{T}}x_N+b_L\right) \right] ^{\textrm{T}}. \tag{4} \]

Adding network nodes one at a time in this way yields the SCN construction algorithm. Each step has two parts: first, \(h_L\) is calculated by formula (4) for randomly generated candidate parameters, and the input weights and bias of the \(L\)th node that best satisfy the supervisory conditions are selected; second, the output weight of the \(L\)th node is calculated by formula (3). As the number of nodes increases, the network fits the dataset increasingly well, until it finally meets the error requirement.
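The construction loop just described can be sketched in NumPy as follows. This is an illustration rather than the reference implementation: it assumes a sigmoid activation, an inequality of the form used in the original SCN work [19], \(\xi _{L,q}=\langle e_{L-1,q},h_L\rangle ^2/(h_L^{\textrm{T}}h_L)-(1-r-\mu _L)\Vert e_{L-1,q}\Vert ^2\ge 0\) with \(\mu _L=(1-r)/(L+1)\), and the constructive output weight of formula (3). The candidate count, the scale set, and the schedule of \(r\) values are hypothetical choices.

```python
import numpy as np

def sigmoid(z):
    # Overflow-free logistic function.
    return 0.5 * (1.0 + np.tanh(0.5 * z))

def scn_fit(x, y, max_nodes=50, tol=1e-3, n_cand=100,
            scales=(0.5, 1, 5, 10, 30, 50), rs=(0.9, 0.99, 0.999, 0.9999), seed=0):
    """Grow an SCN node by node with constructive output weights (formula (3))."""
    rng = np.random.default_rng(seed)
    d = x.shape[1]
    e = y.astype(float).copy()                     # residual e_{L-1}, shape (N, m)
    W, B, betas, errors = [], [], [], []
    for L in range(1, max_nodes + 1):
        chosen = None
        for r in rs:                               # assumption: relax r when no candidate qualifies
            mu = (1 - r) / (L + 1)
            for lam in scales:                     # assumption: hypothetical scale set
                w = rng.uniform(-lam, lam, size=(d, n_cand))
                b = rng.uniform(-lam, lam, size=n_cand)
                h = sigmoid(x @ w + b)             # candidate outputs h_L, formula (4)
                proj = (e.T @ h) ** 2 / (np.sum(h * h, axis=0) + 1e-12)
                xi = proj - (1 - r - mu) * np.sum(e * e, axis=0)[:, None]
                ok = np.all(xi >= 0, axis=0)       # inequality must hold for every q
                if ok.any():
                    k = int(np.argmax(np.where(ok, xi.sum(axis=0), -np.inf)))
                    chosen = (w[:, k], b[k], h[:, k])
                    break
            if chosen is not None:
                break
        if chosen is None:
            break                                  # no admissible node found
        w_L, b_L, h_L = chosen
        beta_L = (e.T @ h_L) / (h_L @ h_L)         # formula (3), one value per output q
        e = e - np.outer(h_L, beta_L)              # e_L = e_{L-1} - beta_L h_L
        W.append(w_L)
        B.append(b_L)
        betas.append(beta_L)
        errors.append(float(np.sqrt(np.mean(e ** 2))))
        if errors[-1] < tol:
            break
    return np.array(W).T, np.array(B), np.array(betas), errors

x = np.linspace(0, 1, 200).reshape(-1, 1)
y = np.sin(2 * np.pi * x)
W, B, betas, errors = scn_fit(x, y, max_nodes=30)
print(len(errors), errors[-1])
```

Because each output weight is the projection coefficient of the residual onto \(h_L\), the recorded training error can never increase from one node to the next.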

2.3 Recurrent neural network

Recurrent neural networks process sequential data of arbitrary length by feeding the hidden layer's output back into the network at the next time step. In the classical fully connected network, signals flow from the input layer through the hidden layer to the output layer; adjacent layers are fully or partially connected, but nodes within the same layer are not connected. In an RNN, by contrast, the hidden nodes are connected across time steps, so the input to the hidden layer includes not only the output of the input layer but also the hidden layer's own output at the previous time step. At each time step the RNN receives an input vector \(X^t\), and the current hidden state \(S^t\) is determined jointly by the previous state \(S^{t-1}\) and the input \(X^t\). For a data stream, observations from successive time steps are fed to the input layer in turn, and the output layer predicts the next value in the stream or the result of processing the current data. An RNN needs an input at every time step, but an output is not required at every time step; that is, the input and output sequences need not have the same length. The classic RNN structure is shown in Fig. 3.

The specific algorithm is described below. Given the input set \(X=\{x_1, x_2, \ldots , x_N\}\) and the output set \(Y=\{y_1,y_2,\ldots , y_N\}\) of the sequence data, a standard RNN computes the hidden state S and the predicted value of the data. The network computation at time t is as follows:

where U is the input weight matrix from the input layer to the hidden layer, W is the hidden-to-hidden (recurrent) weight matrix shared across time steps, b is the bias vector, V is the output weight matrix from the hidden layer to the output layer, and h and g are two activation functions.
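A minimal forward pass consistent with these definitions is sketched below. It assumes the common form \(S^t=h(UX^t+WS^{t-1}+b)\) and \(\hat{Y}^t=g(VS^t)\), with \(h=\tanh\) and g the identity; these activation choices, the zero initial state, and the sizes are illustrative assumptions.

```python
import numpy as np

def rnn_forward(xs, U, W, V, b, s0):
    """Run a vanilla RNN over a sequence xs of shape (tau, d).

    Assumption: S^t = tanh(U x^t + W S^{t-1} + b) and y_hat^t = V S^t.
    The same U, W, V, b are reused at every time step (parameter sharing).
    """
    s = s0
    states, outputs = [], []
    for x_t in xs:
        s = np.tanh(U @ x_t + W @ s + b)
        states.append(s)
        outputs.append(V @ s)
    return np.array(states), np.array(outputs)

rng = np.random.default_rng(0)
d, n_hidden, m, tau = 3, 5, 2, 4
U = rng.normal(size=(n_hidden, d))
W = rng.normal(size=(n_hidden, n_hidden)) * 0.5
V = rng.normal(size=(m, n_hidden))
b = np.zeros(n_hidden)
states, outputs = rnn_forward(rng.normal(size=(tau, d)), U, W, V, b, np.zeros(n_hidden))
print(states.shape, outputs.shape)  # (4, 5) (4, 2)
```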

Parameter sharing is a key statistical technique in RNNs: it lets the model be applied to, and generalize across, examples of different forms, so a single model that works for all time steps and all sequence lengths needs to be learned. The most popular training strategy is backpropagation through time. Like the standard backpropagation algorithm, it consists of three steps: (1) compute the output value of each neuron in a forward pass; (2) compute the error term of each neuron backward, i.e., the partial derivative of the error function with respect to the weighted input of the \(j\)th neuron; (3) compute the gradient of each weight and update the weights with the stochastic gradient descent algorithm.
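The three BPTT steps can be illustrated on a hypothetical scalar RNN \(s_t=\tanh(u x_t+w s_{t-1})\) with a squared-error loss on the final state only; the model, the loss, and the numbers are simplifying assumptions. The analytic gradient from step (2) is checked against central finite differences before the update in step (3). The factor \(w(1-s_t^2)\) applied at every backward step is also the repeated product that makes gradients vanish or explode over long sequences.

```python
import numpy as np

def loss_and_grads(u, w, xs, target):
    """Forward pass, then backpropagation through time for a scalar RNN."""
    # Step (1): forward pass, storing every state (s_0 = 0 is an assumption).
    s = [0.0]
    for x in xs:
        s.append(np.tanh(u * x + w * s[-1]))
    loss = 0.5 * (s[-1] - target) ** 2

    # Step (2): propagate the error term backward through time.
    grad_u = grad_w = 0.0
    delta = s[-1] - target                 # dLoss/ds_T
    for t in range(len(xs), 0, -1):
        da = delta * (1 - s[t] ** 2)       # dLoss/d(weighted input at step t)
        grad_u += da * xs[t - 1]
        grad_w += da * s[t - 1]
        delta = da * w                     # dLoss/ds_{t-1}
    return loss, grad_u, grad_w

xs = np.array([0.5, -0.3, 0.8, 0.1])
u, w, target = 0.7, 0.4, 0.2
loss, gu, gw = loss_and_grads(u, w, xs, target)

# Check step (2) against central finite differences.
eps = 1e-6
num_gu = (loss_and_grads(u + eps, w, xs, target)[0]
          - loss_and_grads(u - eps, w, xs, target)[0]) / (2 * eps)
num_gw = (loss_and_grads(u, w + eps, xs, target)[0]
          - loss_and_grads(u, w - eps, xs, target)[0]) / (2 * eps)

# Step (3): one gradient descent update with a hypothetical learning rate.
lr = 0.1
u, w = u - lr * gu, w - lr * gw
```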

3 Stochastic configuration networks for time series

3.1 Definitions of recurrent stochastic configuration network

First, some basic definitions are presented. For ease of exposition, assume that all sequences have the same fixed length \(\tau\). Assume that the input and output samples are drawn from the target function \(f:\mathbb {R}^{\tau \times d}\rightarrow \mathbb {R}^ {\tau \times m}\). Let \(L_2 (D)\) be the Hilbert space of Lebesgue-measurable vector functions of the form:

where D is a compact subset of \(\mathbb {R}^{\tau \times d}\), and both the target function and the learning model are elements of \(L_2 (D)\). Define the state vector with a given initial state \(S^0\); then, for each time step \(t \in \{1, 2, \ldots ,\tau \}\), we have

Then suppose that a recurrent stochastic configuration network with \(L-1\) hidden nodes has been built as follows:

where \(\beta =\left[ \beta _{1}, \ldots , \beta _{L-1}\right] ^\textrm{T}\), with \(\beta _{j}=\left[ \beta _{j,1}, \ldots ,\beta _{j,m}\right] , j=1, 2,\ldots ,L-1\), is the output weight matrix from the hidden layer to the output layer.

The residual error for this \(L-1\) nodes network is denoted as

where

denotes the residual error at time t. The mean squared error is used as the loss function, with the Frobenius norm. If there exists a t such that \(\Vert e_{L-1}^t \Vert\) does not satisfy the given tolerance, a new node, that is, a new random basis function \(g_L\) with suitable weights \(U_L\), \(W_L\), and \(b_L\), must be added to the network. For \(t \in \{1, 2, \ldots ,\tau \}\), the existing nodes \(g_j\), \(j=1, \ldots ,L-1\), are not changed by the newly added node: the argument of \(g_j\) is still a linear combination of the input at time step t and the states of the first j nodes at the previous time step. The argument of the new node \(g_L\) additionally takes into account the state of the newly added node itself at the previous time step. Finally, the output weight \(\beta _L\) is estimated through the formula

In the end, we obtain a recurrent stochastic configuration network with a satisfactory fit. The sequence-to-sequence recurrent stochastic configuration network structure is shown in Fig. 4, where red marks the newly added node and the weights that need to be set. In this example, \(d=m=2\), i.e., there are two input nodes and two output nodes, and there are two time steps, each with one output.
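One way to read the node-addition rule above is that node j at time t sees the input \(x^t\) and the previous-step states of nodes \(1,\ldots ,j\) only, so the recurrent weights form a lower-triangular structure and adding node L never changes the states of nodes \(1,\ldots ,L-1\). The sketch below is a hypothetical illustration of that reading for a single sequence; tanh activation, a zero initial state \(S^0\), and the helper names `new_node_states` and `add_node` are assumptions.

```python
import numpy as np

def new_node_states(xs, prev_states, u, w, b):
    """States S_L^t of a new node over one sequence (hypothetical helper).

    xs: (tau, d) inputs; prev_states: (tau, L-1) states of the existing nodes;
    w: (L,) recurrent weights on the previous-step states of nodes 1..L,
    whose last entry is the new node's self-connection. Assumes S^0 = 0.
    """
    tau, n_old = xs.shape[0], prev_states.shape[1]
    s_new = np.zeros(tau)
    for t in range(tau):
        old_prev = prev_states[t - 1] if t > 0 else np.zeros(n_old)  # S_1..S_{L-1} at t-1
        self_prev = s_new[t - 1] if t > 0 else 0.0                    # S_L at t-1
        s_new[t] = np.tanh(xs[t] @ u + old_prev @ w[:n_old] + w[n_old] * self_prev + b)
    return s_new

def add_node(xs, states, u, w, b):
    """Append one node; the existing columns of `states` are left untouched."""
    return np.column_stack([states, new_node_states(xs, states, u, w, b)])

rng = np.random.default_rng(1)
tau, d = 6, 2
xs = rng.normal(size=(tau, d))
states = np.zeros((tau, 0))            # no hidden nodes yet
for L in range(1, 4):                  # grow to three nodes
    states = add_node(xs, states, rng.uniform(-1, 1, d), rng.uniform(-1, 1, L), rng.uniform(-1, 1))
```

Under this structure, the hidden states already computed for the existing nodes can be cached and reused, and only the new node's column has to be computed during construction.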

Remark 1

In both the echo state network and the recurrent SCN, the input vector and the hidden state depend on time, and hidden nodes are connected to other nodes in the same layer. However, the recurrent SCN and the ESN differ in several ways. In the recurrent SCN, the number of nodes in each layer and the connections between nodes are configured stochastically according to actual needs, whereas in the ESN the total number of nodes is fixed in advance and there is no distinction between one hidden layer and another.

3.2 Universal approximation property

This section discusses the theoretical feasibility of recurrent stochastic configuration networks in detail and establishes the universal approximation property of the algorithm through a theorem and its proof. Although only the sequence-to-sequence recurrent stochastic configuration network is established here, it can also be extended to sequence-to-vector models. A sequence-to-sequence model addresses problems whose inputs and outputs are sequences of the same length, the most classic RNN structure, whereas a sequence-to-vector model addresses problems whose output is a single vector at a particular time step.

Under the definitions given in the previous section, we now introduce the parameters \(\delta _{L, q}^{t}\) required to construct the sequence-to-sequence model. Let \(\varGamma\) be a set of functions such that \({\text {span}}(\varGamma )\) is dense in \(L_2(D)\), and suppose that for each \(g \in \varGamma\) there exists \(b_{g} \in \mathbb { R }^+\) with \(0<\Vert g \Vert < b_{g}\). Given \(k \in (0,1)\) and a non-negative real sequence \(\{\nu _L\}\) with \(\lim _{L\rightarrow \infty } \nu _L=0\) and \(\nu _L\le 1-k\), define, for \(t \in \{1, 2, \ldots ,\tau \}\), \(q=1, \ldots ,m\), and \(L = 1, 2, \ldots\),

When t is fixed, the random basis function \({g}_L\) can also be viewed as the hidden state \(S_L^t\) of the \(L\)th node. For a ReSCN modeling sequence data with \(\tau\) time steps, the following theorem ensures the universal approximation property.

Theorem 1

If the hidden state output \(S_L^t\) of the \(L\)th node satisfies the following inequality constraints

and the output weights are given by

then we have \(\lim _{L \rightarrow \infty }\left\| f-f_{L-1}\right\| =0\).

Proof

The residual error of the network with \(L-1\) nodes is related to the residual error of the network with L nodes as follows:

Since the total error is the sum of the squared errors over all time steps, \({\Vert e_{L}\Vert }^{2}=\sum \limits _{t=1}^{\tau } \sum \limits _{q=1}^{m}{\Vert e_{L,q}^t\Vert }^{2}\), it follows from the output weight formula (11) that \(\{\Vert e_L \Vert ^2\}\) is monotonically non-increasing,

Substituting the output weight formula gives

Since the sequence \(\{\Vert e_L \Vert \}\) is non-increasing and bounded below by zero (norms are non-negative), it converges by the monotone convergence theorem.

By substituting the inequality condition (10) into (14), one has

Consequently, the inequality (16) holds,

Note that \(\gamma _L=\nu _L {\Vert e_{L-1}\Vert }^{2}\) is non-negative and, because \(\nu _L \rightarrow 0\) while \(\Vert e_{L-1}\Vert\) is bounded, \(\lim _{L \rightarrow \infty }\gamma _L=0\); hence \(\lim _{L \rightarrow \infty } {\Vert e_{L}\Vert }=0\). \(\square\)
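The key step of the proof, that adding a node cannot increase the residual, can be checked numerically. The sketch assumes the time-aggregated projection \(\beta _{L,q}=\sum _t\langle e_{L-1,q}^t,S_L^t\rangle /\sum _t\Vert S_L^t\Vert ^2\) as one natural reading of the output weight formula; under it, \(\Vert e_L\Vert ^2=\Vert e_{L-1}\Vert ^2-\sum _q\big (\sum _t\langle e_{L-1,q}^t,S_L^t\rangle \big )^2/\sum _t\Vert S_L^t\Vert ^2\). The random arrays are stand-ins for a real residual tensor and hidden-state slice.

```python
import numpy as np

rng = np.random.default_rng(0)
N, tau, m = 50, 8, 2
e_prev = rng.normal(size=(N, tau, m))        # stand-in residual e_{L-1}, indexed (sample, t, q)
s_new = np.tanh(rng.normal(size=(N, tau)))   # stand-in hidden state S_L^t of the new node

# Assumed output weight: projection aggregated over samples and time, one value per q.
inner = np.einsum("ntq,nt->q", e_prev, s_new)
beta = inner / np.sum(s_new ** 2)

e_new = e_prev - s_new[:, :, None] * beta    # e_L = e_{L-1} - beta_L * S_L
predicted_drop = np.sum(inner ** 2) / np.sum(s_new ** 2)
actual_drop = np.sum(e_prev ** 2) - np.sum(e_new ** 2)
print(np.isclose(actual_drop, predicted_drop), actual_drop >= 0)  # True True
```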

3.3 Algorithm description

This subsection describes the implementation of the ReSCN algorithm in detail. The algorithm consists of two main steps:

1. Configure hidden parameters: generate a new hidden node and add it to the model by randomly assigning input weights, recurrent weights, and a bias that satisfy the inequality constraints.

2. Estimate the output weights: determine the output weights of the current learning model constructively.
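The two steps can be combined into a compact, hypothetical NumPy sketch of the construction loop for the sequence-to-sequence case. It is not the authors' pseudo-code. It assumes tanh nodes, zero initial states, the lower-triangular recurrent structure in which node j reads the previous-step states of nodes \(1,\ldots ,j\), an SCN-style supervisory check \(\xi _{L,q}\ge 0\) aggregated over samples and time steps (with \(\mu _L=(1-r)/(L+1)\)), and a least-squares refit of all output weights after each addition. All parameter values and function names are illustrative.

```python
import numpy as np

def node_states(X, S_prev, u, w, b):
    """Hidden states of one node for all samples (hypothetical helper).

    X: (N, tau, d) inputs; S_prev: (N, tau, L-1) states of existing nodes;
    w: (L,) recurrent weights on nodes 1..L at t-1 (last entry = self-connection).
    """
    N, tau, _ = X.shape
    s = np.zeros((N, tau))
    for t in range(tau):
        prev = S_prev[:, t - 1, :] if t > 0 else np.zeros((N, S_prev.shape[2]))
        prev_self = s[:, t - 1] if t > 0 else np.zeros(N)
        s[:, t] = np.tanh(X[:, t, :] @ u + prev @ w[:-1] + w[-1] * prev_self + b)
    return s

def rescn_fit(X, Y, max_nodes=20, tol=1e-2, n_cand=50,
              scales=(0.5, 1, 5, 10), rs=(0.9, 0.99, 0.999), seed=0):
    """Grow a hypothetical ReSCN node by node; returns node params, output weights, RMSE history."""
    rng = np.random.default_rng(seed)
    N, tau, d = X.shape
    S = np.zeros((N, tau, 0))                  # hidden-state tensor, one slice per node
    E = Y.copy()                               # residual tensor, shape (N, tau, m)
    params, history = [], []
    beta = np.zeros((0, Y.shape[2]))
    for L in range(1, max_nodes + 1):
        best = None
        for r in rs:                           # assumption: relax r if no candidate qualifies
            mu = (1 - r) / (L + 1)
            for lam in scales:                 # assumption: hypothetical scale set
                for _ in range(n_cand):
                    u = rng.uniform(-lam, lam, d)
                    w = rng.uniform(-lam, lam, L)
                    b = rng.uniform(-lam, lam)
                    s = node_states(X, S, u, w, b)
                    # Supervisory quantity per output q, summed over samples and time steps.
                    inner = np.einsum("ntq,nt->q", E, s)
                    xi = inner ** 2 / (np.sum(s ** 2) + 1e-12) \
                        - (1 - r - mu) * np.sum(E ** 2, axis=(0, 1))
                    if np.all(xi >= 0) and (best is None or xi.sum() > best[0]):
                        best = (xi.sum(), s, (u, w, b))
                if best is not None:
                    break
            if best is not None:
                break
        if best is None:                       # no admissible node: stop growing
            break
        S = np.concatenate([S, best[1][:, :, None]], axis=2)
        params.append(best[2])
        # Output weights by least squares over all samples and time steps.
        H = S.reshape(N * tau, L)
        beta, *_ = np.linalg.lstsq(H, Y.reshape(N * tau, -1), rcond=None)
        E = Y - (H @ beta).reshape(Y.shape)
        history.append(float(np.sqrt(np.mean(E ** 2))))
        if history[-1] < tol:
            break
    return params, beta, history

def rescn_predict(X, params, beta):
    """Recompute node states in construction order, then apply the output weights."""
    S = np.zeros((X.shape[0], X.shape[1], 0))
    for u, w, b in params:
        S = np.concatenate([S, node_states(X, S, u, w, b)[:, :, None]], axis=2)
    return S @ beta

rng = np.random.default_rng(42)
t = np.linspace(0, 2 * np.pi, 11)
series = np.sin(t + rng.uniform(0, 2 * np.pi, size=(64, 1)))   # (64, 11) toy sequences
X, Y = series[:, :-1, None], series[:, 1:, None]                # one-step-ahead, tau = 10
params, beta, history = rescn_fit(X, Y)
pred = rescn_predict(X, params, beta)
print(len(params), history[-1])
```

Refitting all output weights by least squares (rather than only the newest one) guarantees that the training error is non-increasing as nodes are added, since each new column can only enlarge the space the targets are projected onto.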

For a given training sequence dataset with input \(X=\{x_1,x_2,\ldots ,x_N\}\) and output \(Y = \{ y_1, y_2, \ldots ,y_N\}\), for the \(i\)th sample (\(i=1,2,\ldots ,N\)),

Given the initial hidden state \(S^0=\left[ S_1^0, S_2^0, \ldots \right]\), the residual tensor of the network with \(L-1\) nodes is denoted as \(e_{L-1}(X)=\{e_{L-1,q}^t\} \in \mathbb {R}^{N \times \tau \times m}\). Let \(h_L (X)\) denote the activation outputs of the N inputs \(\{x_1, x_2, \ldots , x_N \}\) at the \(L\)th hidden node,

Thus, the output of the hidden layer is a 3-mode tensor composed of L slices, \(H_L(X)=\left[ h_1, h_2, \ldots , h_L \right] \in \mathbb {R}^{N \times \tau \times L}\). For all data, \(S_L^t (X)=\left[ s_1^t, s_2^t, \ldots ,s_N^t \right]\) represents the hidden states of the N samples at time t under the \(L\)th hidden node, which can also be expressed as a 3-mode tensor. With the variables required by the activation function defined above, the output weight from the hidden layer to the output layer is defined as follows:

where \({\Vert \cdot \Vert }_2\) is the 2-norm of the matrix.

For computational convenience, we introduce a set of variables \(\xi _{L,q}, q=1, \ldots , m\), in the algorithm description:

In light of the above theorem, the optimal output weights at each step are estimated as

To find the optimal solution \(\beta ^*\) by least squares, define the residual sum of squares:

Setting its derivative with respect to \(\beta\) to zero gives the stationary point:

Finally, one gets \(\beta ^{*}=\left( \sum \limits _{t=1}^{\tau } H_{L}^{t^{\textrm{T}}} H_{L}^{t}\right) ^{-1} \sum \limits _{t=1}^{\tau } H_{L}^{t^{\textrm{T}}} Y^{t}\).
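Numerically, this closed form is the ordinary least-squares solution on the time-stacked hidden-state matrix. The sketch below uses random stand-ins for \(H_L^t\in \mathbb {R}^{N\times L}\) and \(Y^t\in \mathbb {R}^{N\times m}\) and checks that the sum-over-time normal equations agree with `np.linalg.lstsq` applied to the stacked system. In practice, a least-squares solver avoids forming and inverting \(\sum _t H_L^{t^{\textrm{T}}}H_L^t\) explicitly, which matters when hidden-state columns become nearly collinear.

```python
import numpy as np

rng = np.random.default_rng(0)
N, tau, L, m = 40, 6, 5, 2
H = rng.normal(size=(tau, N, L))     # H[t] stands in for H_L^t
Y = rng.normal(size=(tau, N, m))     # Y[t] stands in for Y^t

# Closed form: (sum_t H^t' H^t)^{-1} sum_t H^t' Y^t, via solve instead of an explicit inverse.
gram = np.einsum("tnl,tnk->lk", H, H)
rhs = np.einsum("tnl,tnm->lm", H, Y)
beta_closed = np.linalg.solve(gram, rhs)

# Equivalent: stack the time steps vertically and solve one least-squares problem.
beta_lstsq, *_ = np.linalg.lstsq(H.reshape(tau * N, L), Y.reshape(tau * N, m), rcond=None)
print(np.allclose(beta_closed, beta_lstsq))  # True
```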

When the output does not depend on time t, i.e., \(y_{i}=\left[ y_{i, 1}, y_{i, 2}, \ldots , y_{i, m}\right] \in \mathbb {R}^{m}, i=1,2, \ldots , N\), the outputs of the hidden nodes (hidden states) are defined as in the previous model, and the output at the last time step is taken as the output of the hidden layer. The resulting matrix is \(H_L^\tau (X)=\left[ S_1^\tau ,S_2^\tau ,\ldots ,S_L^\tau \right] \in \mathbb {R}^{N \times L}\); for the \(q\)th output dimension, the output weight of the \(L\)th node is as follows:

In this case, the variables \(\xi _{L,q}, q = 1, \ldots , m\), in the algorithm description are

The optimal output weights at each step can then be obtained by least squares using the Moore–Penrose (MP) pseudoinverse:
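For the sequence-to-vector case, only the last-step states \(H_L^\tau \in \mathbb {R}^{N\times L}\) enter the least-squares problem, and the Moore–Penrose solution is \(\beta ^*=(H_L^\tau )^{\dagger }Y\). A short sketch with random stand-ins for the hidden states and targets, which also checks the normal-equation property that the residual is orthogonal to every hidden-state column:

```python
import numpy as np

rng = np.random.default_rng(0)
N, L, m = 30, 4, 2
H_tau = rng.normal(size=(N, L))   # stand-in for [S_1^tau, ..., S_L^tau], shape (N, L)
Y = rng.normal(size=(N, m))       # stand-in for the targets y_i, shape (N, m)

beta = np.linalg.pinv(H_tau) @ Y  # beta* = (H^tau)^dagger Y
beta_ref, *_ = np.linalg.lstsq(H_tau, Y, rcond=None)
print(beta.shape, np.allclose(beta, beta_ref))                # (4, 2) True
print(np.allclose(H_tau.T @ (Y - H_tau @ beta), 0))           # True: residual orthogonal to columns
```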

The pseudo-code of the algorithm is as follows:

Remark 2

When selecting the parameters of the above algorithm, the following factors should be considered. First, the number of data batches, the number of sequences in each batch, and the length of each sequence. Second, the number of layers of the neural network and the number of neurons in the hidden layers. Finally, the number of training epochs for the RNN and LSTM.

4 Empirical demonstration

Building on the stochastic configuration network, this paper introduces recurrent stochastic configuration networks (ReSCNs) and establishes their universal approximation property for a recurrent structure. Algorithmically, a ReSCN starts from a small recurrent neural network with a single hidden layer and a single hidden node. Hidden nodes are added progressively through random and constructive configuration until the network satisfies predetermined termination criteria. Throughout the construction, the hidden parameters, including the recurrent parameters, are configured randomly under a supervisory mechanism, and the output-layer weights are determined by minimizing the loss function with the least-squares method. For the target function, given a recurrent network with a single hidden layer of \(L-1\) nodes, the input weights, recurrent weights, and biases of each new node are assigned randomly, and the output weights \(\beta _j\) are computed by least squares. Nodes are added until the residual \(e_{L-1}\) meets the defined conditions or the maximum number of nodes is reached, yielding a neural network that fits the target function effectively.

4.1 Function approximation

The first dataset used for time series prediction and analysis is synthetic, constructed by combining two sinusoidal functions with noise. One sinusoid has an amplitude of 0.5, a random frequency, and an initial phase of 10; the other has an amplitude of 0.2, a random frequency, and an initial phase of 20. Random noise is added to the data. Each simulated time series has 500 time steps, and 10,000 time series are generated in total. The first 7000 series form the training set, the next 2000 series the validation set, and the last 1000 series the test set. This split supports training, validation, and evaluation of the time series prediction model (Fig. 5).
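A generator following this description might look as follows. The text does not pin down the frequency ranges or how the values 10 and 20 enter the formula, so the sketch makes a loud assumption: it treats them as offsets in the angular frequency (10f + 10 and 20f + 20 for a random f in [0, 1)) with random time offsets, and it adds uniform noise of amplitude 0.05. Only the amplitudes, the series length, the number of series, and the 7000/2000/1000 split come from the text.

```python
import numpy as np

def generate_series(n_series, n_steps, seed=0):
    """Two noisy sinusoids per series (amplitudes 0.5 and 0.2); shape (n_series, n_steps)."""
    rng = np.random.default_rng(seed)
    freq1, freq2, offset1, offset2 = rng.random((4, n_series, 1))
    time = np.linspace(0, 1, n_steps)
    # Assumption: 10 and 20 enter as frequency offsets; not confirmed by the text.
    series = 0.5 * np.sin((time - offset1) * (freq1 * 10 + 10))
    series += 0.2 * np.sin((time - offset2) * (freq2 * 20 + 20))
    series += 0.1 * (rng.random((n_series, n_steps)) - 0.5)      # uniform noise in [-0.05, 0.05)
    return series.astype(np.float32)

series = generate_series(10_000, 500)
train, valid, test = series[:7000], series[7000:9000], series[9000:]
print(train.shape, valid.shape, test.shape)  # (7000, 500) (2000, 500) (1000, 500)
```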

In this simulation experiment, the ReSCN is configured as follows: the search range for the input weights, recurrent weights, and error is \(\Theta = \{0.5, 1, 5, 10, 30, 50, 100, 150, 200, 250, 300, 350\}\), and the maximum number of network nodes is 20. For comparison, a linear model is used for prediction, giving a baseline root mean square error (RMSE) of 0.0683. Additionally, two SCNs are built, with maximum numbers of nodes of 40 and 100, respectively. For the SCNs, the training and test data are reshaped into two-dimensional arrays; the RNN includes a dense layer, uses the mean squared error (MSE) loss, and is trained with the Adam optimizer.

As Fig. 6 shows, the recurrent stochastic configuration network trains notably faster than the SCN. The results in Table 1 further show that the recurrent stochastic configuration network with 20 nodes achieves better training performance than the SCN with 100 nodes, while requiring substantially fewer parameters to be configured. When processing sequence data, the ReSCN exploits the memory built into its recurrent structure, which substantially reduces the overall complexity of the network architecture.

4.2 Benchmark datasets

This section utilizes four real-world datasets, encompassing clothing retail sales, the unemployment rate, sunspot numbers, and minimum temperature change data in Melbourne. The first two datasets are sourced from the Federal Reserve’s economic data, capturing non-seasonally adjusted monthly retail sales of clothing and clothing accessories stores (in millions of dollars) from 1992 to 2022, and seasonally adjusted monthly unemployment rates from 1948 to 2022, respectively. The unemployment rate reflects the percentage of the unemployed in the labor force, with the labor force data encompassing individuals aged 16 and older residing in the United States or the District of Columbia. The third dataset represents the monthly count of sunspots observed over more than 230 years (1749–1983), as documented by Andrews and Herzberg. The final dataset records the lowest daily temperature recorded by the Australian Meteorological Service in Melbourne over a span of 10 years (1981–1990), measured in degrees Celsius. For the first three monthly time series datasets, each comprising 12 months (1 year) as a group, the sliding window method is employed to establish the training set, verification set, and test set. The last daily dataset, covering 14 days (2 weeks) as a cycle, utilizes the verification set to assess the model’s overfitting degree, aiding in the appropriate selection of the number of nodes or epochs. In this experiment, the chronological division of the dataset is intentional. Utilizing data from previous decades to predict current results aligns with logical reasoning, providing insights into the model’s effectiveness. Short-term predictions are established through model fitting, and the results on the test set are presented. Given the significant changes in the data trend for the first two datasets in 2020 due to the COVID-19 pandemic, further model fitting is deemed inappropriate. Consequently, the corresponding data for this period are removed from consideration. 
Table 2 summarizes the sizes of the datasets after min–max normalization.
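The window construction and chronological split described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code. The target is taken to be the single value following each window (one-step-ahead). The split ratios (70/15/15) are illustrative. The min–max scaling is fitted on the training portion only. The paper does not specify any of these details here. The helper names are hypothetical, and the series is synthetic.

```python
import numpy as np

def minmax_scale(series, n_fit):
    """Scale a 1-D series to [0, 1] using the min/max of its first n_fit values
    (assumed training portion), so no later information leaks into the scaling."""
    series = np.asarray(series, dtype=float)
    lo, hi = series[:n_fit].min(), series[:n_fit].max()
    return (series - lo) / (hi - lo), (lo, hi)

def make_windows(series, window):
    """Each input row holds `window` consecutive values; the target is the next value."""
    X = np.stack([series[i:i + window] for i in range(len(series) - window)])
    y = series[window:]
    return X, y

def chrono_split(X, y, train_frac=0.7, val_frac=0.15):
    """Split samples in time order (no shuffling): train, then validation, then test."""
    i_tr = int(len(X) * train_frac)
    i_va = int(len(X) * (train_frac + val_frac))
    return (X[:i_tr], y[:i_tr]), (X[i_tr:i_va], y[i_tr:i_va]), (X[i_va:], y[i_va:])

# Synthetic 10-year monthly series (placeholder, not one of the paper's datasets)
months = np.arange(120)
raw = 50 + 10 * np.sin(2 * np.pi * months / 12) + 0.1 * months
n_fit = 84                                   # first 70% of the 120 months
scaled, (lo, hi) = minmax_scale(raw, n_fit=n_fit)
X, y = make_windows(scaled, window=12)       # 12-month window for monthly data
train, val, test = chrono_split(X, y)
```

For the daily temperature series, the same functions would be called with `window=14`.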

For the clothing retail sales dataset, both ReSCN and SCN are limited to a maximum of 200 hidden nodes, with an allowable error of 0.01. The resulting ReSCN has 132 nodes and the SCN has 105 nodes, while the RNN is configured with 100 nodes. For this and all subsequent datasets, the RNN is trained for 3 epochs.

For the unemployment rate and Melbourne minimum temperature datasets, the maximum number of nodes for both stochastic algorithms (ReSCN and SCN) is set to 100, and the RNN also uses 100 nodes. For the sunspot dataset, the maximum number of nodes for the stochastic algorithms is 60, and the RNN uses 64 nodes.

Figures 7–10 show the predictions of ReSCN on the four test sets. The blue line is the actual time series and the orange line is the prediction. ReSCN fits all four types of data well and handles both stable and seasonal time series. On the last two datasets (Figs. 9 and 10), the predictions show a slight time lag, as do those of the RNN, but the overall trend agrees with the actual data.

Finally, four evaluation metrics are used to compare ReSCN and SCN with the classical RNN (Table 3). Overall, ReSCN fits the data better than both SCN and RNN. One case is particularly notable: RNN and SCN perform comparably on the training set, yet the former predicts the test data more accurately, indicating better learning.

These experiments indicate that ReSCN consistently achieves a lower root mean square error (RMSE) and mean absolute error (MAE) than an RNN with a similar number of nodes. The approach also compares favorably with conventional neural networks and the stochastic configuration algorithm. It learns representations effectively, processes sequential data through a sliding window, and reaches comparable fits with fewer nodes. The result is a more compact network with fewer parameters to configure. Notably, when training performance is comparable, ReSCN is more accurate on the test sequences, indicating stronger generalization.
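The two error metrics used throughout the comparison follow their standard definitions, \(\mathrm{RMSE}=\sqrt{\tfrac{1}{n}\sum_i (y_i-\hat y_i)^2}\) and \(\mathrm{MAE}=\tfrac{1}{n}\sum_i |y_i-\hat y_i|\). A minimal NumPy sketch is shown below. The function names are illustrative and not taken from the paper.

```python
import numpy as np

def rmse(y_true, y_pred):
    """Root mean square error: sqrt(mean((y - y_hat)^2))."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))

def mae(y_true, y_pred):
    """Mean absolute error: mean(|y - y_hat|)."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.mean(np.abs(y_true - y_pred)))

# One large error among three predictions
example_rmse = rmse([1, 2, 3], [1, 2, 5])   # sqrt(4/3), about 1.155
example_mae = mae([1, 2, 3], [1, 2, 5])     # 2/3, about 0.667
```

RMSE is always at least as large as MAE, and the gap widens when a few large errors dominate. Reporting both therefore shows whether a model's error is concentrated in occasional large misses.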

4.3 Stock price prediction

In recent years, rapid economic development has drawn more and more individuals into stock market investment. Accurate prediction of stock price movements can reduce investors' risk and improve their returns. Stock prices are influenced by many factors, so simple models are often inadequate for precise prediction. This section applies the Recurrent Stochastic Configuration Network (ReSCN) to 5203 daily records of Google stock from January 2001 to September 2021. The dataset includes five variables: opening price, highest price, lowest price, closing price, and adjusted closing price. To predict the opening price on the next day (day 31), the model uses the previous 30 days of data for all five features. The data are standardized. The first 80% of the records form the training set, and the remaining, later records form the test set.
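The input construction described above (30 days × 5 features in, next-day opening price out) can be sketched as follows. This rests on several assumptions. The opening price is taken to be column 0. Standardization is taken to be a z-score fitted on the training days only. Each 30-day window is flattened into one input vector. The split is made on samples in time order. The helper names are hypothetical, and the price path is synthetic, not the Google data.

```python
import numpy as np

def standardize(features, n_fit):
    """z-score each column using the mean/std of the first n_fit rows (training days)."""
    mean = features[:n_fit].mean(axis=0)
    std = features[:n_fit].std(axis=0)
    return (features - mean) / std

def stock_windows(features, lookback=30, target_col=0):
    """Flatten `lookback` days x all features into one input row; the target is
    the next day's value in `target_col` (opening price assumed in column 0)."""
    X = np.stack([features[i:i + lookback].ravel()
                  for i in range(len(features) - lookback)])
    y = features[lookback:, target_col]
    return X, y

# Synthetic price path as a stand-in for the real series
rng = np.random.default_rng(0)
close = 100 + np.cumsum(rng.normal(size=300))
# Columns: open, high, low, close, adjusted close
prices = np.column_stack([close - 0.2, close + 1.0, close - 1.0, close, close])
z = standardize(prices, n_fit=int(0.8 * len(prices)))
X, y = stock_windows(z, lookback=30)
n_train = int(0.8 * len(X))               # first 80% of samples, in time order
X_train, y_train, X_test, y_test = X[:n_train], y[:n_train], X[n_train:], y[n_train:]
```

The split is not shuffled, so every test sample lies after every training sample. This matches the "remaining temporal data" used as the test set.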

The set of scale factors for the ReSCN random parameter search is \(\Theta = \{0.5, 1, 2, 4, 10, 15, 32, 64, 100, 150, 200\}\), and the error tolerance is 0.009. Three activation functions are tested separately: relu, tanh, and sigmoid. The final ReSCN models use six hidden nodes with relu or tanh and only four with sigmoid. Figure 11 shows the training speed and performance for the three activation functions. The relu model converges most slowly, whereas the sigmoid model fits the training data well with only four nodes. The rate at which the RMSE decreases differs, but all three activation functions converge quickly.
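Random configuration with a scale set \(\Theta\) and an error tolerance can be illustrated with the standard, non-recurrent SCN construction in the style of the SC-III algorithm of Wang and Li (2017). The sketch below is a simplification and does not reproduce the recurrent connections that distinguish ReSCN. It makes several assumptions. The activation is sigmoid. The tolerance is treated as training RMSE. The relaxation parameter r runs through a fixed increasing sequence. At most `max_tries` candidates are drawn per scale. All function and parameter names are hypothetical. Each candidate node must satisfy the supervisory inequality \(\xi_q = \langle e_q, g\rangle^2/\langle g, g\rangle - (1-r-\mu_L)\lVert e_q\rVert^2 \ge 0\). The output weights are then recomputed by least squares.

```python
import numpy as np

def sigmoid(z):
    # Numerically stable logistic function: 1/(1+exp(-z)) == 0.5*(1+tanh(z/2))
    return 0.5 * (1.0 + np.tanh(0.5 * z))

def scn_fit(X, T, max_nodes=50, tol=0.01,
            scales=(0.5, 1, 2, 4, 10, 15, 32, 64, 100, 150, 200),
            r_values=(0.9, 0.99, 0.999, 0.9999, 0.99999, 0.999999),
            max_tries=100, seed=0):
    """Grow a single-hidden-layer SCN node by node (SC-III style sketch)."""
    rng = np.random.default_rng(seed)
    n, d = X.shape
    T = np.asarray(T, dtype=float).reshape(n, -1)
    W, b, H = np.empty((d, 0)), np.empty(0), np.empty((n, 0))
    beta = np.zeros((0, T.shape[1]))
    e = T.copy()                                    # current residual
    while H.shape[1] < max_nodes and np.sqrt(np.mean(e ** 2)) > tol:
        L = H.shape[1] + 1                          # index of the node being added
        best = None
        for r in r_values:                          # relax r only if no node qualifies
            mu = (1.0 - r) / (L + 1)
            for lam in scales:                      # weights and bias drawn from [-lam, lam]
                for _ in range(max_tries):
                    w = rng.uniform(-lam, lam, size=d)
                    bias = rng.uniform(-lam, lam)
                    g = sigmoid(X @ w + bias)
                    gg = g @ g
                    if gg < 1e-12:
                        continue                    # fully saturated node, skip it
                    # Supervisory inequality, one value per output column q
                    xi = (e.T @ g) ** 2 / gg - (1.0 - r - mu) * np.sum(e ** 2, axis=0)
                    if np.all(xi >= 0) and (best is None or xi.sum() > best[0]):
                        best = (xi.sum(), w, bias, g)
                if best is not None:
                    break                           # smallest scale that yields a valid node
            if best is not None:
                break
        if best is None:
            break                                   # no admissible node: stop growing
        _, w, bias, g = best
        W, b = np.column_stack([W, w]), np.append(b, bias)
        H = np.column_stack([H, g])
        beta = np.linalg.lstsq(H, T, rcond=None)[0]   # global least-squares output weights
        e = T - H @ beta
    return W, b, beta

def scn_predict(X, W, b, beta):
    return sigmoid(X @ W + b) @ beta

# Toy usage: fit one period of a sine wave
X = np.linspace(0, 1, 200).reshape(-1, 1)
T = np.sin(2 * np.pi * X)
W, b, beta = scn_fit(X, T, max_nodes=30, tol=0.05)
pred = scn_predict(X, W, b, beta)
```

The network stops growing either when the tolerance is met or when `max_nodes` is reached. This is why the number of nodes is an output of the procedure rather than a hand-tuned hyperparameter.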

To validate the algorithm more objectively under similar conditions, two further models, SCN and LSTM, are built alongside ReSCN. The SCN uses the same parameters but needs 36 nodes to achieve an equivalent fit on the training set. For the LSTM model, GridSearchCV is used to tune the hyperparameters. The network consists of two LSTM layers with 50 units each, one dropout layer, and one dense layer. The grid covers batch sizes of 16 and 20, 8 and 10 epochs, and the Adam and Adadelta optimizers. The selected model uses a batch size of 20, 10 epochs, and the Adam optimizer.

Figure 12 compares the test-set predictions of the three models with the actual data. The opening prices predicted by SCN are consistently higher than the actual values, indicating a significant deviation. The selected LSTM performs better than SCN overall and captures the trend of the stock price. However, its predictions show a noticeable lag and only moderate accuracy. In contrast, the ReSCN predictions closely follow the actual series. Some predicted opening prices are lower than the actual values, but the discrepancies are small, and the overall prediction is more accurate (Table 4).

In the Google stock price experiment, ReSCN adapts well to the data. It needs only a single-digit number of hidden nodes, yet it achieves clearly better test-set results than the more complex SCN and LSTM models. In addition, using GridSearchCV to determine the LSTM hyperparameters increased computation time and cost. ReSCN accurately predicts the next day's opening price. It has the smallest RMSE and MAE among the compared methods, 2.557639 and 1.99725, respectively. These results suggest that ReSCN is well suited to stock price prediction and could give investors a useful tool for informed investment decisions.

For the time series above, ReSCN is trained end to end. It directly optimizes the overall task objective, without training separate modules or stages. Unlike an RNN, it does not require repeated trials to choose the number of nodes, which reduces the time spent on network configuration experiments. An RNN needs several training runs over the experimental data to optimize the learning model. By contrast, ReSCN typically achieves comparable results on each dataset after a single training run.

4.4 Household power consumption prediction

The spread of new energy generation technologies, such as solar power, and of new electrical equipment has produced a wealth of power consumption data. This experiment uses the Individual Household Electric Power Consumption dataset from the UCI Machine Learning Repository. The data are used to model and predict household power consumption for the upcoming week, a short-term multi-step prediction task. The dataset contains about 2 million measurements of electricity consumption, recorded every minute from December 2006 to November 2010, in a house in Sceaux, 7 km from Paris. Excluding date and time, this multivariate series has seven variables. Such forecasting models can help households with financial planning and help suppliers plan power demand for specific households (Fig. 13).

First, missing values are marked and filled with the observation recorded at the same time on the previous day. To reduce the impact of noise on the analysis, the minute-by-minute observations are resampled into daily values. The task is multi-step: the power consumption of the preceding week is used to predict the upcoming week, with seven output time steps, one for each day. The data are divided into standard weeks, giving 159 complete weeks for training. To enlarge the training data, the window is moved forward one time step at a time. This produces overlapping windows, each of which predicts the next seven days. After training, the average error of the model on the training set is calculated for each day from Sunday to Saturday. Analyzing the error at each predicted time step makes it possible to weigh the strengths and weaknesses of ReSCN against other machine learning and deep learning methods.

For ReSCN, the maximum number of nodes is set to 80. The parameter search range is \([-\lambda, \lambda]\) with \(\lambda \in \{0.5, 1, 5, 10, 30, 50, 100, 150, 200\}\), and the activation function is relu. A naive model provides a baseline and serves as a quantitative measure of how difficult the prediction problem is. It assumes that the upcoming week will resemble the same week one year earlier, which yields a benchmark error of 465 kW. Several machine learning methods are also compared: elastic net, random sample consensus (RANSAC), and passive-aggressive regression. So are two deep learning methods, RNN and LSTM.
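The preprocessing steps above can be sketched in NumPy. The sketch rests on three assumptions. The minute series is taken to start at midnight and cover whole days; the raw UCI file does not start at midnight, so real use would need alignment first. Daily values are taken as plain sums of the minute readings. Windows slide one day at a time. The function names are hypothetical, and the data are synthetic.

```python
import numpy as np

MINUTES_PER_DAY = 1440

def fill_from_previous_day(minutes):
    """Replace NaNs with the reading from the same minute on the previous day.
    Indices are visited in increasing order, so gaps longer than a day are filled
    from already-filled values; gaps within the first day stay NaN."""
    x = np.array(minutes, dtype=float)
    for t in np.flatnonzero(np.isnan(x)):
        if t >= MINUTES_PER_DAY:
            x[t] = x[t - MINUTES_PER_DAY]
    return x

def to_daily_totals(minutes):
    """Sum minute readings into daily totals (assumes whole days from midnight)."""
    return minutes.reshape(-1, MINUTES_PER_DAY).sum(axis=1)

def weekly_windows(daily, n_in=7, n_out=7):
    """Overlapping windows slid one day at a time: 7 days in, the next 7 days out."""
    n = len(daily) - n_in - n_out + 1
    X = np.stack([daily[i:i + n_in] for i in range(n)])
    Y = np.stack([daily[i + n_in:i + n_in + n_out] for i in range(n)])
    return X, Y

# Synthetic 28-day minute series with a 5-hour gap on day 4 (placeholder data)
rng = np.random.default_rng(1)
minutes = rng.uniform(0.2, 3.0, size=28 * MINUTES_PER_DAY)
minutes[3 * MINUTES_PER_DAY + 100:3 * MINUTES_PER_DAY + 400] = np.nan
filled = fill_from_previous_day(minutes)
daily = to_daily_totals(filled)
X, Y = weekly_windows(daily)
```

Sliding by one day instead of one week multiplies the number of training pairs roughly sevenfold. The cost is that neighboring samples overlap heavily.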
The deep learning network comprises three layers. The first is a hidden layer of 100 nodes with relu activation, the second a dense layer of 50 nodes with relu activation, and the third the output layer. It is trained with the Adam optimizer and MSE loss for 70 epochs with a batch size of 16. Figure 14 shows, for each learning model, the mean square error of the predicted daily power consumption for the next week on the test set.
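Per-day evaluation and the naive baseline can be sketched as follows. The sketch makes three assumptions. Forecasts are stored as arrays of shape (number of weeks, 7), so column j holds day j of each forecast week (Sunday to Saturday when windows start on standard weeks). "The same week a year ago" is taken as the seven days 364 days earlier, which keeps the weekday alignment. The error is reported as RMSE. Function names are hypothetical.

```python
import numpy as np

def per_day_rmse(actual, predicted):
    """actual, predicted: shape (n_weeks, 7). Returns one RMSE per forecast day
    (column) and the overall RMSE across all days."""
    err = np.asarray(actual, float) - np.asarray(predicted, float)
    per_day = np.sqrt(np.mean(err ** 2, axis=0))
    overall = float(np.sqrt(np.mean(err ** 2)))
    return per_day, overall

def naive_year_ago(daily, starts, horizon=7, lag=364):
    """Forecast each week as the same 7 days 52 weeks (364 days) earlier."""
    return np.stack([daily[s - lag:s - lag + horizon] for s in starts])

# A purely weekly pattern repeats exactly, so the naive forecast is perfect here
pattern = np.array([5.0, 6, 7, 6, 5, 9, 10])
daily = np.tile(pattern, 3 * 52)                 # 1092 synthetic days
starts = np.arange(364, len(daily) - 7 + 1, 7)   # week starts with a full year of history
actual = np.stack([daily[s:s + 7] for s in starts])
per_day, overall = per_day_rmse(actual, naive_year_ago(daily, starts))
```

Any learning model only adds value if its per-day errors fall below those of this naive forecast on the same test weeks.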

Prediction performance is notably better on Tuesday and Friday. Overall, the deep learning methods predict better than the machine learning methods. The error of ReSCN in predicting daily power consumption is lower than that of the RNN, and lower than that of the LSTM except on Mondays. ReSCN is then compared with the two deep learning methods, RNN and LSTM, using two evaluation indicators, RMSE and MAE. The comparison shows that the overall test performance of ReSCN surpasses that of the other two algorithms.

5 Conclusions

This paper focuses on the RNN algorithm and introduces the recurrent stochastic configuration network, built on the incremental random algorithm of SCN. Existing machine learning and deep learning algorithms often use shallow networks or cannot adjust the network architecture automatically. In contrast, our approach uses the SCN algorithm to determine the RNN structure, enabling effective time series analysis. Experimental results validate the efficacy of ReSCN. Enhancing the representation and learning abilities of recurrent neural networks for natural language and other sequence data remains a topic for further investigation. Continued exploration is expected to yield more effective sequence data processing algorithms with stronger learning capabilities.

Data availability

No data are associated with this manuscript.

References

Lara-Benítez P, Carranza-García M, Riquelme JC (2021) An experimental review on deep learning architectures for time series forecasting. Int J Neural Syst 31(03):2130001

LeCun Y, Bengio Y, Hinton G (2015) Deep learning. Nature 521(7553):436–444

Rumelhart DE, Hinton GE, Williams RJ (1985) Learning internal representations by error propagation. Technical report, Institute for Cognitive Science, University of California, San Diego, La Jolla

Sutskever I, Vinyals O, Le QV (2014) Sequence to sequence learning with neural networks. In: Advances in neural information processing systems, vol 27

Cho K, Van Merriënboer B, Gulcehre C et al (2014) Learning phrase representations using RNN encoder-decoder for statistical machine translation. arXiv preprint arXiv:1406.1078

Hinton G, Deng L, Yu D et al (2012) Deep neural networks for acoustic modeling in speech recognition: the shared views of four research groups. IEEE Signal Process Mag 29(6):82–97

Hori T, Cho J, Watanabe S (2018) End-to-end speech recognition with word-based RNN language models. In: 2018 IEEE spoken language technology workshop (SLT). IEEE, pp 389–396

Werbos PJ (1990) Backpropagation through time: what it does and how to do it. Proc IEEE 78(10):1550–1560

Hochreiter S, Schmidhuber J (1997) Long short-term memory. Neural Comput 9(8):1735–1780

Hu R, Huang Q, Wang H, Chang S (2019) Monitor-based spiking recurrent network for the representation of complex dynamic patterns. Int J Neural Syst 29:1950006–1950023

Jaeger H, Haas H (2004) Harnessing nonlinearity: predicting chaotic systems and saving energy in wireless communication. Science 304:78–80

Maass W, Natschläger T, Markram H (2002) Real-time computing without stable states: a new framework for neural computation based on perturbations. Neural Comput 14(11):2531–2560

Verstraeten D, Schrauwen B, D'Haene M, Stroobandt D (2007) An experimental unification of reservoir computing methods. Neural Netw 20(3):391–403

Schrauwen B, Verstraeten D, Van Campenhout J (2007) An overview of reservoir computing: theory, applications and implementations. In: Proceedings of the 15th European symposium on artificial neural networks, pp 471–482

Mesquita DPP, Gomes JPP, Rodrigues LR (2019) Artificial neural networks with random weights for incomplete datasets. Neural Process Lett 50:2345–2372

Scardapane S, Wang D (2017) Randomness in neural networks: an overview. John Wiley & Sons, Inc., New York

Broomhead DS, Lowe D (1988) Multivariable functional interpolation and adaptive networks. Complex Syst 2:321–355

Pao YH, Takefuji Y (1992) Functional-link net computing: theory, system architecture, and functionalities. Computer 25(5):76–79

Wang D, Li M (2017) Stochastic configuration networks: fundamentals and algorithms. IEEE Trans Cybern 47(10):3466–3479

Wang D, Li M (2018) Deep stochastic configuration networks with universal approximation property. In: 2018 international joint conference on neural networks (IJCNN). IEEE, pp 1–8

Tian P, Sun K, Wang D (2022) Performance of soft sensors based on stochastic configuration networks with nonnegative garrote. Neural Comput Appl 34:1–11

Dai W, Li D, Zhou P et al (2019) Stochastic configuration networks with block increments for data modeling in process industries. Inf Sci 484:367–386

Dai W, Zhou X, Li D et al (2021) Hybrid parallel stochastic configuration networks for industrial data analytics. IEEE Trans Ind Inf 18(4):2331–2341

Gao Y, Luan F, Pan J et al (2020) FPGA-based implementation of stochastic configuration network for robotic grasping recognition. IEEE Access 8:139966–139973

Pratama M, Wang D (2019) Deep stacked stochastic configuration networks for lifelong learning of non-stationary data streams. Inf Sci 495:150–174

Zhang C, Ding S, Du W (2022) Broad stochastic configuration network for regression. Knowl Based Syst 243:108403

Funding

This study was supported by National Natural Science Foundation of China (62103093), Fundamental Research Funds for the Central Universities (N2108003), National Key Research and Development Program of China (2022YFB3305905), Xingliao Talent Program of Liaoning Province of China (XLYC2203130), Natural Science Foundation of Liaoning Province of China (2023-MS-087).

Author information


Contributions

JZ: funding acquisition, project administration, supervision and writing—review and editing. HZ: conceptualization, formal analysis, methodology, and writing—original draft, visualization. XZ: writing—review and editing. HZ: writing—review and editing. All authors read and approved the final manuscript.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhang, JX., Zhao, H. & Zhang, X. Universal approximation property of stochastic configuration networks for time series. Industrial Artificial Intelligence 2, 3 (2024). https://doi.org/10.1007/s44244-024-00017-7
