Abstract

As a randomized learner model, stochastic configuration networks (SCNs) are notable in that their random weights and biases are assigned under a supervisory mechanism that ensures universal approximation and fast learning. However, this randomness makes SCNs prone to generating approximately linearly correlated nodes that are redundant and of low quality, which results in a non-compact network structure. In light of a fundamental principle of machine learning, namely that a model with fewer parameters tends to generalize better, this paper proposes an orthogonal SCN, termed OSCN, which filters out low-quality hidden nodes to reduce the network structure by incorporating the Gram–Schmidt orthogonalization technique. The universal approximation property of OSCN and an adaptive setting for the key construction parameters are presented in detail. In addition, an incremental updating scheme is developed to dynamically determine the output weights, which improves computational efficiency. Finally, experimental results on two numerical examples and several real-world regression and classification datasets substantiate the effectiveness and feasibility of the proposed approach.

1 Introduction

Neural networks (NNs) are an essential part of machine learning because they provide efficient solutions to complex data analysis tasks that are difficult to solve with conventional methods [1,2,3,4]. Feedforward neural networks (FNNs), a classical type of NN, have been widely used and studied owing to their simple construction and strong nonlinear mapping ability [5, 6]. However, because FNNs are trained with gradient descent algorithms, their generalization is very sensitive to parameter settings such as the learning rate [7,8,9]. This training approach is also prone to local minima, long training times, and other limitations [9, 10].

Randomized algorithms have shown great potential for fast learning at low computational cost [11,12,13,14]. Random vector functional link networks (RVFLNs), a kind of single-hidden-layer FNN trained with randomized algorithms, were therefore proposed [15,16,17,18,19,20,21]. In RVFLNs, the input weights and biases are randomly assigned from a fixed interval and remain unchanged, and the output weights are obtained by solving a system of linear equations [22]. Although RVFLNs have demonstrated significant potential, it is difficult to construct an appropriate network structure for a given modeling task. In general, it is challenging, if not impossible, to obtain a proper network topology from human experience, and a network that is too large or too small suffers from degraded performance.

A constructive algorithm starts with a simple network and gradually adds hidden nodes (together with their weights) until a predefined condition is satisfied [23, 24]. This feature makes it possible for a constructive algorithm to find an appropriate network structure. RVFLNs built with constructive algorithms, called incremental RVFLNs (IRVFLNs), were subsequently proposed. However, recent work [25] indicates that these IRVFLN-based models have difficulty guaranteeing the universal approximation property, because the scope of the random parameters is set without scientific justification. The poor approximation performance of common RVFLNs with a fixed parameter scope is explained in more detail in [26, 27].

Building on this line of work, an advanced randomized learner model known as stochastic configuration networks (SCNs) was reported in [28]. Specifically, SCNs employ an incremental construction approach in which one hidden node, together with all its connected weights and bias, is added in each iteration. SCNs also use a scope setting vector to randomly select a set of candidate weights and biases under the constraints of a supervisory mechanism. This step enables SCNs and their variants, including deep [29,30,31], robust [32,33,34], ensemble [35], and 2-D [36] versions, to perform satisfactorily in big-data, uncertain-data, and image-data modeling tasks. However, even with the supervisory mechanism, the randomness makes SCNs likely to generate approximately linearly correlated nodes. These nodes with small outputs are redundant and of low quality; they can easily make the hidden output matrix ill-conditioned and degrade generalization performance. At the same time, redundancy among the many candidate nodes inflates the model size and works against a compact network structure.

To address these problems, we propose an improved SCN, termed orthogonal SCN (OSCN). The Gram–Schmidt orthogonalization technique is integrated into SCNs to evaluate the degree of correlation among randomly generated nodes, which filters out redundant nodes and improves performance. The main contributions and novelties of this paper are as follows:

1) The Gram–Schmidt orthogonalization technique is adopted to evaluate and filter out low-quality candidate nodes in the stochastic configuration process, thereby simplifying the network structure and enhancing generalization performance.

2) In the orthogonal framework, the optimal output weights can be determined by a constructive scheme, which avoids a complicated and time-consuming retraining procedure and improves computational efficiency to a certain extent.

3) The universal approximation property of OSCN is established through an orthogonal form of the supervisory mechanism. Additionally, an adaptive setting for the construction parameters used in the supervisory mechanism is given.

The rest of this paper is organized as follows. Section 2 revisits SCNs. Section 3 introduces OSCN in terms of theoretical analysis and algorithm implementation. Section 4 presents comparative experimental results and analysis. Section 5 analyzes the computational complexity, and Sect. 6 concludes the paper and indicates future work.

2 Brief reviews of SCNs

SCNs, as a class of advanced universal approximators, have demonstrated their superiority in a wide range of applications, owing to their fast learning speed and sound generalization [28].

Assume that a network with L−1 hidden nodes has been built: \(f_{L - 1} (x) = \sum\nolimits_{j = 1}^{L - 1} \beta_{j} g_{j} (w_{j}^{\text{T}} x + b_{j})\), and let \(e_{L - 1} = f - f_{L - 1}\) denote the residual error. If the current residual error does not satisfy the predefined condition, SCNs incrementally add the Lth hidden node, chosen from a set of candidate parameters, so as to drive the residual error toward the predefined condition.

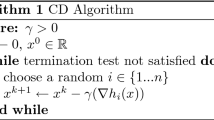

The SCN algorithm can be summarized as follows:

- Initialization: Let \(X = \{x_{1}, x_{2}, \ldots, x_{N}\}\), \(x_{i} \in \mathbb{R}^{d}\), be the N training inputs, and let \(T = \{t_{1}, t_{2}, \ldots, t_{N}\}\), \(t_{i} \in \mathbb{R}^{m}\), be the corresponding outputs. The parameters of the incremental construction process are detailed in the pseudocode of the OSCN Algorithm.

- Hidden parameter configuration: Assign \(w_{L}\) and \(b_{L}\) randomly from the support scope to obtain a set of candidate random basis functions \(h_{L} = g_{L}(w_{L}^{\text{T}} x + b_{L})\), \(0 < \|h_{L}\| \le b_{g}\), and keep the candidates that satisfy the following inequality:

\[ \langle e_{L-1,q}, h_{L} \rangle^{2} \ge b_{g}^{2}\,\delta_{L,q}, \quad \delta_{L,q} = (1 - r - \mu_{L})\,\|e_{L-1,q}\|^{2}, \quad q = 1, 2, \ldots, m, \qquad (1) \]

where \(r\) and \(\mu_{L}\) are contractive parameters.

- Output weight determination: There are three original schemes for evaluating the output weights, SC-I, SC-II, and SC-III, whose algorithmic implementations are illustrated in Fig. 1. Concretely, SC-I computes only the output weight of the newly added hidden node and does not recalculate the earlier ones. SC-II recalculates a portion of the existing output weights according to a predefined sliding-window size and obtains a suboptimal solution. SC-III assigns the output weights of all existing hidden nodes by solving a global optimization problem, which makes it more likely to yield an effective universal approximator during incremental learning.

- Calculate the current residual error \(e_{L}\), update \(e_{0} := e_{L}\) and \(L := L + 1\), and repeat until the network meets a stopping condition: \(L \ge L_{\max}\) or \(\|e_{0}\| \le \varepsilon\).
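The hidden parameter configuration step above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the reference SCN implementation: it uses the sigmoid activation from Sect. 4, draws each candidate uniformly from \([-\lambda, \lambda]\), and scores candidates with the practical quantity \(\xi_{L,q} = \langle e_{L-1,q}, h_{L}\rangle^{2}/\langle h_{L}, h_{L}\rangle - (1 - r - \mu_{L})\|e_{L-1,q}\|^{2}\) used by the SCN algorithms in [28], with \(\mu_{L} = (1 - r)/(L + 1)\) as stated in Sect. 3.4. The function names `xi_scores` and `configure_node` are hypothetical.

```python
import math
import random


def sigmoid(z):
    """Numerically stable logistic activation g(z) = 1 / (1 + exp(-z))."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def xi_scores(h, E, r, mu):
    """xi_{L,q} = <e_q, h>^2 / <h, h> - (1 - r - mu) * <e_q, e_q>, one value per output q.

    E holds the m residual columns e_{L-1,q}, each a list of N values.
    """
    hh = dot(h, h)
    return [dot(e, h) ** 2 / hh - (1.0 - r - mu) * dot(e, e) for e in E]


def configure_node(X, E, L, lam, t_max=20, r=0.999, rng=random):
    """Draw t_max random (w, b) from [-lam, lam] and return the best admissible candidate.

    A candidate is admissible when every xi_{L,q} >= 0; among admissible candidates the
    one with the largest xi_L = sum_q xi_{L,q} is returned as (w, b, h). Returns None if
    no candidate passes (SCN would then relax r or move on to the next scope value).
    """
    mu = (1.0 - r) / (L + 1)
    d = len(X[0])
    best, best_score = None, -math.inf
    for _ in range(t_max):
        w = [rng.uniform(-lam, lam) for _ in range(d)]
        b = rng.uniform(-lam, lam)
        h = [sigmoid(dot(w, x) + b) for x in X]
        if dot(h, h) == 0.0:  # every output underflowed to zero; skip this candidate
            continue
        xi = xi_scores(h, E, r, mu)
        if min(xi) >= 0 and sum(xi) > best_score:
            best, best_score = (w, b, h), sum(xi)
    return best


random.seed(0)
X = [[i / 10.0] for i in range(11)]
E = [[math.sin(3.0 * x[0]) for x in X]]  # e_0 = T before any hidden node is added
node = configure_node(X, E, L=1, lam=1.0, t_max=50, r=0.9)
print(node is not None)
```

In a full SCN loop, a `None` result would trigger the fallback described in [28] (a larger r or the next scope value); that fallback is left out here.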

Remark 1

SC-III outperforms SC-I and SC-II in terms of generalization and convergence, but suffers from the largest computational load because it computes a Moore–Penrose generalized inverse [37]. In SC-I, the output weight of each newly added node is determined by a constructive scheme, which gives the smallest computational load but the worst convergence. In terms of both computational load and convergence, SC-II is a compromise between SC-I and SC-III.
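The trade-off in Remark 1 can be illustrated on a toy problem. The sketch below fixes four sigmoid hidden nodes with hand-picked (hypothetical) parameters and compares the training residual obtained when each new output weight is computed once and then frozen, as in SC-I, with the residual of a global least-squares solve over all nodes, as in SC-III. For simplicity the global solve uses the normal equations and Gaussian elimination instead of the Moore–Penrose inverse [37]; this substitution is only reasonable for small, well-conditioned examples like this one.

```python
import math


def sigmoid(z):
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def solve(A, y):
    """Solve the small square system A x = y by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [list(row) + [y[i]] for i, row in enumerate(A)]
    for c in range(n):
        p = max(range(c, n), key=lambda i: abs(M[i][c]))
        M[c], M[p] = M[p], M[c]
        for i in range(c + 1, n):
            f = M[i][c] / M[c][c]
            for j in range(c, n + 1):
                M[i][j] -= f * M[c][j]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x


def sc1_residual(cols, t):
    """SC-I style: beta_L = <e_{L-1}, h_L> / <h_L, h_L> for the new node only; earlier weights stay frozen."""
    e = list(t)
    for h in cols:
        beta = dot(e, h) / dot(h, h)
        e = [ei - beta * hi for ei, hi in zip(e, h)]
    return math.sqrt(dot(e, e))


def sc3_residual(cols, t):
    """SC-III style: all output weights re-solved jointly by least squares (normal equations)."""
    beta = solve([[dot(a, b) for b in cols] for a in cols], [dot(a, t) for a in cols])
    fit = [sum(bj * col[i] for bj, col in zip(beta, cols)) for i in range(len(t))]
    return math.sqrt(sum((ti - fi) ** 2 for ti, fi in zip(t, fit)))


xs = [i / 20.0 for i in range(21)]
t = [math.sin(2.0 * math.pi * x) for x in xs]
params = [(1.0, 0.0), (4.0, -2.0), (-3.0, 1.0), (8.0, -6.0)]  # hypothetical (w, b) pairs
cols = [[sigmoid(w * x + b) for x in xs] for w, b in params]
print(f"SC-I residual:   {sc1_residual(cols, t):.4f}")
print(f"SC-III residual: {sc3_residual(cols, t):.4f}")
```

With the same hidden nodes, the global solve can never leave a larger residual than the frozen one-at-a-time weights, which is the convergence advantage of SC-III; the price is re-solving an L-by-L system after every new node.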

Remark 2

Note that the rate at which the residual error decreases depends on the construction parameters (r, μL), as shown in (1). Hence, the choice of these parameters directly affects model learning. Moreover, as construction proceeds, newly added hidden nodes whose outputs are small because of randomness contribute little to reducing the residual error. Even if such nodes are added to the existing network, they undermine its compactness and slow convergence. Therefore, the quality of the nodes needs to be improved.

To mitigate these weaknesses, we propose an orthogonal version of SCN, termed OSCN, which is built efficiently from high-quality nodes and attains globally optimal output weights.

3 Orthogonal stochastic configuration networks

In this section, the proposed OSCN is detailed. First, the OSCN model is described, followed by a theoretical analysis. Then, the overall procedure of OSCN is given in the OSCN Algorithm.

3.1 Description of OSCN model

The OSCN framework is summarized in Fig. 2. The random parameters of the first hidden node are configured first; each subsequent hidden node is then made orthogonal to the existing ones, so that the network converges more efficiently without redundant nodes. The details of constructing OSCN are outlined below.

Let \(X = \{x_{1}, x_{2}, \ldots, x_{N}\}\), \(x_{i} = [x_{i,1}, x_{i,2}, \ldots, x_{i,d}] \in \mathbb{R}^{d}\), be the N training inputs, and let \(T = \{t_{1}, t_{2}, \ldots, t_{N}\}\), \(t_{i} = [t_{i,1}, t_{i,2}, \ldots, t_{i,m}] \in \mathbb{R}^{m}\), be the corresponding outputs. Suppose that OSCN has already constructed L−1 hidden nodes. The candidate nodes in the stochastic configuration of the Lth hidden node can be written as follows:

Because of this randomness, we introduce Gram–Schmidt orthogonalization into the stochastic configuration process to control the quality of the candidate nodes with respect to collinearity [38]. The orthogonal vector of a candidate node is then calculated by

To avoid generating approximately linearly correlated hidden nodes with small output weights, which contribute little to reducing the residual error, a small positive threshold σ is used to judge whether a candidate node is redundant: if \(\|v_{L}\| \ge \sigma\), the candidate is considered acceptable. Among the acceptable candidates, the hidden node added to the network is the one that maximizes the supervisory-mechanism criterion. Because Gram–Schmidt orthogonalization preserves the span, \(\text{span}\{v_{1}, v_{2}, \ldots, v_{L}\} = \text{span}\{h_{1}, h_{2}, \ldots, h_{L}\}\), so \(v_{1}, v_{2}, \ldots, v_{L}\) span the same space as \(h_{1}, h_{2}, \ldots, h_{L}\). Therefore, the OSCN model can be written as \(f_{L} = f_{L - 1} + v_{L}\beta_{L}\), the current residual error is \(e_{L - 1} = f - f_{L - 1} = [e_{L - 1,1}, e_{L - 1,2}, \ldots, e_{L - 1,m}] \in \mathbb{R}^{N \times m}\), and the output weights are \(\beta = [\beta_{1}, \beta_{2}, \ldots, \beta_{L}]^{\text{T}}\), where \(\beta_{L} = [\beta_{L,1}, \beta_{L,2}, \ldots, \beta_{L,m}] \in \mathbb{R}^{1 \times m}\).
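The orthogonalization and σ-screening step can be sketched as below. The sketch assumes that the orthogonal vector takes the standard Gram–Schmidt form \(v_{L} = h_{L} - \sum_{j=1}^{L-1} \frac{\langle h_{L}, v_{j}\rangle}{\langle v_{j}, v_{j}\rangle} v_{j}\), implemented in its modified (sequential) variant, which is algebraically equivalent when the \(v_{j}\) are mutually orthogonal and is numerically more robust. The names `orthogonalize` and `screen_candidate` are hypothetical.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def orthogonalize(h, V):
    """Remove from h its components along the mutually orthogonal vectors in V (modified Gram-Schmidt)."""
    v = list(h)
    for vj in V:
        c = dot(v, vj) / dot(vj, vj)
        v = [vi - c * vji for vi, vji in zip(v, vj)]
    return v


def screen_candidate(h, V, sigma=1e-6):
    """Return the orthogonalized candidate v, or None if ||v|| < sigma (nearly collinear, redundant)."""
    v = orthogonalize(h, V)
    return v if math.sqrt(dot(v, v)) >= sigma else None


V = [[1.0, 0.0, 0.0]]
print(screen_candidate([2.0, 0.0, 0.0], V))  # None: collinear with v_1, so rejected
print(screen_candidate([1.0, 1.0, 0.0], V))  # [0.0, 1.0, 0.0]: only the new direction survives
```

The first candidate lies entirely in span{v_1}, so its orthogonal component vanishes and it is rejected; the second keeps only the component that can still reduce the residual error.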

3.2 Output weight evaluation

Although OSCN improves the quality of candidate nodes and helps build a compact network with better performance by filtering out redundant nodes, each orthogonalization adds some training time across the \(T_{\max}\) stochastic configurations. Fortunately, thanks to the orthogonal construction, OSCN can update the output weights in the same way as SC-I while achieving convergence similar to that of SC-III. We prove this property below.

In the orthogonal framework, the output weights are analytically determined by

Notice that, for an OSCN with L hidden nodes, we have \(f_{L} = f_{L - 1} + v_{L} \beta_{L}\) and \(< v_{i} ,v_{j} > = 0,i \ne j\) so that

Thus, for \(e_{i} = [e_{i,1}, e_{i,2}, \ldots, e_{i,m}]\), \(i = 1, 2, \ldots, L\), according to Eq. (5), we have

Substituting Eq. (4) into Eq. (6) gives

Then,

So we can get

The above equations show that \(e_{1} \perp \text{span}\{v_{1}\}\) and \(e_{2} \perp \text{span}\{v_{1}, v_{2}\}\). Proceeding by induction, suppose that for some \(k\) with \(2 \le k \le L\), \(e_{k - 1} \perp \text{span}\{v_{1}, v_{2}, \ldots, v_{k - 1}\}\). From \(\langle v_{k}, v_{j} \rangle = 0\) for \(k \ne j\) and Eq. (8), \(\langle e_{k}, v_{k} \rangle = 0\). For all \(1 \le j \le k - 1\),

Hence \(e_{k} \perp \text{span}\{v_{1}, v_{2}, \ldots, v_{k}\}\), and by induction \(e_{L} \perp \text{span}\{v_{1}, v_{2}, \ldots, v_{L}\}\).

For the least-squares solution \(\beta^{*} = \arg\min_{\beta} \|T - V_{L}\beta\|\), \(\beta^{*} \in \mathbb{R}^{L \times m}\), the deduction above gives

where \(V_{L} = [v_{1}, v_{2}, \ldots, v_{L}]\). Thus,

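Since the columns of \(V_{L}\) are nonzero and mutually orthogonal, \(V_{L}\) has full column rank and the least-squares solution is unique; hence \(\|T - V_{L}\beta^{*}\| = \|T - V_{L}\beta\|\) holds if and only if \(\beta = \beta^{*}\). Therefore, the \(\beta\) given by Eq. (4) is also the least-squares solution of \(\min_{\beta}\|T - V_{L}\beta\|\).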
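The key step can be written out explicitly. The derivation below is a sketch that assumes Eq. (4) has the form \(\beta_{L} = v_{L}^{\text{T}} e_{L-1} / (v_{L}^{\text{T}} v_{L})\), that \(T\) is the \(N \times m\) matrix of training targets (so that \(e_{L} = T - V_{L}\beta\) for the incrementally computed \(\beta\)), that every \(v_{j}\) is nonzero, and that norms are Frobenius norms when \(m > 1\). The first line shows that the least-squares weights decouple node by node because \(V_{L}^{\text{T}} V_{L}\) is diagonal; the second shows that any other \(\beta'\) leaves a residual at least as large, because \(V_{L}^{\text{T}} e_{L} = 0\) removes the cross term.

```latex
\begin{aligned}
\beta^{*} &= (V_{L}^{\text{T}} V_{L})^{-1} V_{L}^{\text{T}} T
  \;\Longrightarrow\;
  \beta^{*}_{j} = \frac{v_{j}^{\text{T}} T}{v_{j}^{\text{T}} v_{j}}
  = \frac{v_{j}^{\text{T}} e_{j-1}}{v_{j}^{\text{T}} v_{j}} = \beta_{j},
  \qquad e_{j-1} = T - \sum_{i<j} v_{i}\beta_{i}, \\
\|T - V_{L}\beta'\|^{2}
  &= \|e_{L} + V_{L}(\beta - \beta')\|^{2}
   = \|e_{L}\|^{2} + \|V_{L}(\beta - \beta')\|^{2}
  \;\ge\; \|e_{L}\|^{2}.
\end{aligned}
```

Equality holds if and only if \(V_{L}(\beta - \beta') = 0\), that is, \(\beta' = \beta\), since \(V_{L}\) has full column rank.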

Consequently, OSCN computes only the output weight of the newly added node, yet obtains the same result as a global method that recomputes all output weights after each node is added. OSCN therefore avoids the complicated and time-consuming retraining procedure, which partly offsets the extra time spent on orthogonalization.
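A quick numerical check of this equivalence is sketched below. It orthogonalizes a few fixed sigmoid columns (hypothetical parameters), computes each output weight once as \(\beta_{L} = \langle e_{L-1}, v_{L}\rangle / \langle v_{L}, v_{L}\rangle\) (our assumption for the form of Eq. (4)), and compares the final training residual with that of a global least-squares fit on the original, non-orthogonalized columns. The global fit uses normal equations with Gaussian elimination, which is adequate only for small, well-conditioned examples.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def solve(A, y):
    """Solve the small square system A x = y by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [list(row) + [y[i]] for i, row in enumerate(A)]
    for c in range(n):
        p = max(range(c, n), key=lambda i: abs(M[i][c]))
        M[c], M[p] = M[p], M[c]
        for i in range(c + 1, n):
            f = M[i][c] / M[c][c]
            for j in range(c, n + 1):
                M[i][j] -= f * M[c][j]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x


def incremental_orthogonal_residual(cols, t):
    """Orthogonalize each column against earlier ones, then set beta_L = <e_{L-1}, v_L>/<v_L, v_L> once."""
    V, e = [], list(t)
    for h in cols:
        v = list(h)
        for vj in V:
            c = dot(v, vj) / dot(vj, vj)
            v = [vi - c * vji for vi, vji in zip(v, vj)]
        beta = dot(e, v) / dot(v, v)
        e = [ei - beta * vi for ei, vi in zip(e, v)]
        V.append(v)
    return math.sqrt(dot(e, e))


def global_ls_residual(cols, t):
    """Solve min ||t - H beta|| over all original (non-orthogonalized) columns at once."""
    beta = solve([[dot(a, b) for b in cols] for a in cols], [dot(a, t) for a in cols])
    fit = [sum(bj * col[i] for bj, col in zip(beta, cols)) for i in range(len(t))]
    return math.sqrt(sum((ti - fi) ** 2 for ti, fi in zip(t, fit)))


xs = [i / 20.0 for i in range(21)]
t = [math.exp(-x) * math.cos(4.0 * x) for x in xs]
params = [(2.0, -1.0), (-5.0, 2.0), (6.0, -4.0)]  # hypothetical (w, b) pairs
cols = [[1.0 / (1.0 + math.exp(-(w * x + b))) for x in xs] for w, b in params]
r_inc = incremental_orthogonal_residual(cols, t)
r_ls = global_ls_residual(cols, t)
print(f"incremental (orthogonal): {r_inc:.10f}")
print(f"global least squares:     {r_ls:.10f}")
```

The two residuals agree up to rounding, even though the incremental path never revisits an earlier weight; without orthogonalization, the same one-at-a-time rule (SC-I) would generally leave a larger residual.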

3.3 Universal approximation property

This subsection analyzes the universal approximation property of OSCN, extending the analysis given in [28].

Suppose that an OSCN with L−1 hidden nodes has been constructed: \(f_{L - 1}(x) = \sum_{j = 1}^{L - 1} v_{j}\beta_{j}\) and \(e_{L - 1} = f - f_{L - 1} = [e_{L - 1,1}, e_{L - 1,2}, \ldots, e_{L - 1,m}]\), where \(v_{j}\) denotes the jth hidden output after orthogonalization. The current residual error is \(e_{L} = e_{L - 1} - v_{L}\beta_{L}\), with \(\beta_{L} = [\beta_{L,1}, \beta_{L,2}, \ldots, \beta_{L,q}, \ldots, \beta_{L,m}]\).

Theorem 1

Suppose that span(\(\Gamma\)) is dense in the \(L_{2}\) space. Given \(0 < r < 1\) and a nonnegative real sequence \(\{\mu_{L}\}\) with \(\lim_{L \to +\infty} \mu_{L} = 0\) and \(\mu_{L} \le 1 - r\), for \(L = 1, 2, \ldots\), denote

If \(V_{L} = [v_{1}, v_{2}, \ldots, v_{L}]\), with \(\text{span}\{h_{1}, h_{2}, \ldots, h_{L}\} = \text{span}\{v_{1}, v_{2}, \ldots, v_{L}\}\), satisfies the following orthogonal form of the inequality constraints (supervisory mechanism):

and the output weights are obtained by

Then, we have \(\lim_{L \to + \infty } \left\| {f - f_{L} } \right\| = 0\).

For simplicity, we introduce the auxiliary variables \(\xi_{L} = \sum\nolimits_{q = 1}^{m} \xi_{L,q}\), defined as follows:

Proof

According to Eq. (17), and following the same argument as for SCNs, it is easy to verify that the sequence \(\|e_{L}\|^{2}\) is monotonically decreasing and bounded below, and therefore converges.

From Eqs. (15)–(17), we can further obtain

Therefore, \(\|e_{L}\|^{2} - (r + \mu_{L})\|e_{L - 1}\|^{2} \le 0\). Since \(\|e_{L - 1}\|^{2}\) is bounded and \(\lim_{L \to +\infty} \mu_{L} = 0\), we have \(\lim_{L \to +\infty} \mu_{L}\|e_{L - 1}\|^{2} = 0\). Let \(a = \lim_{L \to +\infty} \|e_{L}\|^{2}\), which exists because the sequence is monotonically decreasing and bounded below. Taking limits in \(\|e_{L}\|^{2} \le r\|e_{L - 1}\|^{2} + \mu_{L}\|e_{L - 1}\|^{2}\) gives \(a \le r a\), and since \(0 < r < 1\), \(a = 0\); that is, \(\lim_{L \to +\infty} \|e_{L}\| = 0\). This completes the proof.
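The key inequality in the proof can be checked numerically for a single output column. Assuming the orthogonal supervisory quantity has the same form as in SCNs with \(h_{L}\) replaced by \(v_{L}\), namely \(\xi_{L,q} = \langle e_{L-1,q}, v_{L}\rangle^{2}/\langle v_{L}, v_{L}\rangle - (1 - r - \mu_{L})\|e_{L-1,q}\|^{2}\), and that \(\beta_{L,q} = \langle e_{L-1,q}, v_{L}\rangle/\langle v_{L}, v_{L}\rangle\), the update gives \(\|e_{L,q}\|^{2} = \|e_{L-1,q}\|^{2} - \langle e_{L-1,q}, v_{L}\rangle^{2}/\langle v_{L}, v_{L}\rangle\), so \(\xi_{L,q} \ge 0\) implies \(\|e_{L,q}\|^{2} \le (r + \mu_{L})\|e_{L-1,q}\|^{2}\). The sketch draws random residual and candidate vectors and asserts this bound for every admissible draw; the helper names are hypothetical.

```python
import math
import random


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def update_residual(e, v, r, mu):
    """One output column: return (xi, new residual) for the weight beta = <e, v> / <v, v>."""
    ev, vv, ee = dot(e, v), dot(v, v), dot(e, e)
    xi = ev ** 2 / vv - (1.0 - r - mu) * ee
    beta = ev / vv
    return xi, [ei - beta * vi for ei, vi in zip(e, v)]


def check_contraction(trials=2000, n=6, r=0.9, L=3, seed=0):
    """Verify ||e_L||^2 <= (r + mu_L) ||e_{L-1}||^2 on every admissible draw; return their count."""
    rng = random.Random(seed)
    mu = (1.0 - r) / (L + 1)
    admissible = 0
    for _ in range(trials):
        e = [rng.gauss(0.0, 1.0) for _ in range(n)]
        v = [rng.gauss(0.0, 1.0) for _ in range(n)]
        xi, e_new = update_residual(e, v, r, mu)
        if xi >= 0:
            admissible += 1
            assert dot(e_new, e_new) <= (r + mu) * dot(e, e) + 1e-12
    return admissible


print(check_contraction())
```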

3.4 Adaptive construction parameter

Theorem 1 provides inequality constraints (supervisory mechanism) that guarantee the universal approximation property. The hidden output \(v_{L}\) finally added to the network is configured by selecting, among a collection of candidate nodes, the one that maximizes \(\xi_{L}\). Eq. (18) shows that the construction parameters \(r\) and \(\mu_{L}\) are also key factors that shape the candidate set. In SCNs, \(r\) is taken from a manually specified increasing sequence in the interval (0.9, 1), and \(\mu_{L} = (1 - r)/(L + 1)\). Because r is set manually rather than adapted during the incremental construction process, this setting may reject many of the weights and biases drawn randomly within the restricted scope. Consequently, configuring the candidate random parameters can become time-consuming or even fail unnecessarily.

In Theorem 2, an adaptive setting for construction parameters is provided.

Theorem 2

Given a non-negative sequence \(\tau_{L} = r + \mu_{L} = (L/(L + 1) + 1/(L + 1)^{2} )\), we have \(\left\| {{\mathbf{e}}_{L}^{{}} } \right\|^{2} \le \tau_{L} \left\| {{\mathbf{e}}_{{L{ - }1}}^{{}} } \right\|^{2}\) and \(\lim_{L \to \infty } \left\| {{\mathbf{e}}_{L}^{{}} } \right\|{ = }0\).

Proof

Clearly, \(\lim_{L \to +\infty} \tau_{L} = 1\). From the result of Theorem 1, we obtain

Using the inequality \(1 - x < e^{-x}\) for \(x > 0\), we have:

Then, considering

and

We can further obtain:

Finally, Eq. (20) can be written as:

Hence, we can get \(\lim_{L \to \infty } \left\| {{\mathbf{e}}_{L}^{{}} } \right\| = 0\). This completes the proof.
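The arithmetic behind Theorem 2 is easy to check. From the definition, \(1 - \tau_{L} = L/(L+1)^{2}\), so \(0 < \tau_{L} < 1\); iterating \(\|e_{L}\|^{2} \le \tau_{L}\|e_{L-1}\|^{2}\) gives \(\|e_{L}\|^{2} \le \|e_{0}\|^{2}\prod_{l=1}^{L}\tau_{l}\), and \(1 - x \le e^{-x}\) bounds the product by \(\exp(-\sum_{l=1}^{L} l/(l+1)^{2})\). The sum grows like \(\ln L\), so the bound goes to zero, though slowly (roughly like \(1/L\)). The short script below evaluates both sides; it illustrates the bound and is not part of the training algorithm.

```python
import math


def tau(L):
    """Adaptive construction parameter of Theorem 2: tau_L = L/(L+1) + 1/(L+1)^2."""
    return L / (L + 1) + 1 / (L + 1) ** 2


def contraction_bounds(L_max):
    """Return (prod_{k<=L_max} tau_k, exp(-sum_{k<=L_max} k/(k+1)^2))."""
    prod, s = 1.0, 0.0
    for k in range(1, L_max + 1):
        prod *= tau(k)
        s += k / (k + 1) ** 2
    return prod, math.exp(-s)


for L_max in (10, 100, 1000, 10000):
    p, bound = contraction_bounds(L_max)
    print(f"L={L_max:>5}: prod tau = {p:.3e} <= exp bound = {bound:.3e}")
```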

The pseudocode of the proposed OSCN is given in the OSCN Algorithm.
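For orientation, the whole construction loop can be sketched in a few dozen lines of Python. This is a hypothetical single-output illustration assembled from Sects. 3.1–3.4, not the authors' MATLAB implementation. Its assumptions: candidates are drawn uniformly from a single scope \([-\lambda, \lambda]\) (no scope schedule Υ); they are orthogonalized by modified Gram–Schmidt and discarded if \(\|v_{L}\| < \sigma\); they are scored with \(\xi_{L}\) in the SCN form with \(h_{L}\) replaced by \(v_{L}\) and \(r + \mu_{L} = \tau_{L}\) from Theorem 2; and the best admissible one receives the weight \(\beta_{L} = \langle e_{L-1}, v_{L}\rangle / \langle v_{L}, v_{L}\rangle\). Predicting on new inputs would additionally require storing the Gram–Schmidt coefficients (or re-expressing the weights in the original \(h\) basis); that bookkeeping is omitted.

```python
import math
import random


def sigmoid(z):
    """Numerically stable logistic activation g(z) = 1 / (1 + exp(-z))."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def oscn_fit(X, t, L_max=50, t_max=20, lam=10.0, sigma=1e-6, tol=1e-3, seed=0):
    """Hypothetical single-output OSCN training loop; returns ||e_L|| after each added node."""
    rng = random.Random(seed)
    d = len(X[0])
    V, e, history = [], list(t), []
    for L in range(1, L_max + 1):
        tau = L / (L + 1) + 1 / (L + 1) ** 2   # r + mu_L from Theorem 2
        ee = dot(e, e)
        best, best_xi = None, -math.inf
        for _ in range(t_max):
            w = [rng.uniform(-lam, lam) for _ in range(d)]
            b = rng.uniform(-lam, lam)
            v = [sigmoid(dot(w, x) + b) for x in X]
            for vj in V:                         # orthogonalize against accepted nodes
                c = dot(v, vj) / dot(vj, vj)
                v = [vi - c * vji for vi, vji in zip(v, vj)]
            vv = dot(v, v)
            if math.sqrt(vv) < sigma:            # nearly collinear: redundant candidate
                continue
            xi = dot(e, v) ** 2 / vv - (1.0 - tau) * ee
            if xi >= 0 and xi > best_xi:
                best, best_xi = v, xi
        if best is None:                         # no admissible candidate in this scope
            break
        beta = dot(e, best) / dot(best, best)    # constructive weight, no retraining
        e = [ei - beta * vi for ei, vi in zip(e, best)]
        V.append(best)
        history.append(math.sqrt(dot(e, e)))
        if history[-1] / math.sqrt(len(t)) <= tol:   # training RMSE reached the tolerance
            break
    return history


X = [[i / 49.0] for i in range(50)]
t = [x[0] ** 2 for x in X]
hist = oscn_fit(X, t, L_max=20, seed=1)
print(len(hist), hist[-1] / math.sqrt(len(t)))
```

Because only the newest weight is computed, each iteration costs one orthogonalization per candidate plus two inner products, with no linear system to re-solve.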

4 Performance evaluation

In this section, to further substantiate the effectiveness and superiority of the proposed algorithm, OSCN, SCN (here referring to SC-III), and IRVFLN [24] are compared on two numerical examples and twenty real-world regression and classification cases. The real-world datasets are drawn from the UCI repository [39] and KEEL [40].

All algorithms use the sigmoid activation function g(x) = 1/(1 + exp(−x)). Following the experience reported in [28], r is set to 0.999 for SCN. For OSCN, \(\tau_{L} = r + \mu_{L}\) is set according to Theorem 2. The parameter σ is typically set to 1e−6 [41] but is adjusted for specific cases. All results are averaged over 50 trials. For each function approximation task and regression benchmark dataset, the average (AVE) and standard deviation (DEV) of the root mean square error (RMSE) are reported in the corresponding tables. For each classification case, the AVE and DEV of the accuracy are reported in the corresponding tables as well.
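The evaluation quantities can be pinned down with a short sketch. It assumes that RMSE is computed over all paired samples, that DEV is the sample standard deviation over the trials (the text does not say whether the sample or population form is used), and that a predicted class is the index of the largest network output; the helper names are hypothetical.

```python
import math
import statistics


def rmse(y_true, y_pred):
    """Root mean square error over paired samples (flatten multi-output targets first)."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(y_true, y_pred)) / len(y_true))


def accuracy(outputs, labels):
    """Fraction of samples whose arg-max output index equals the true class label."""
    hits = sum(max(range(len(o)), key=o.__getitem__) == y for o, y in zip(outputs, labels))
    return hits / len(labels)


def ave_dev(per_trial_scores):
    """AVE and DEV of a metric collected over independent trials (e.g., 50 runs)."""
    return statistics.mean(per_trial_scores), statistics.stdev(per_trial_scores)


print(rmse([1.0, 2.0], [1.0, 4.0]))                # sqrt(4 / 2), about 1.414
print(accuracy([[0.1, 0.9], [0.8, 0.2]], [1, 1]))  # 0.5
print(ave_dev([0.10, 0.20, 0.30]))                 # about (0.2, 0.1)
```

Swapping `statistics.stdev` for `statistics.pstdev` gives the population form if that is what a reproduction needs.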

All experiments are run in MATLAB R2017a on a PC with an Intel Xeon W-2123 3.6 GHz CPU and 16 GB RAM.

4.1 Regression cases

In this part, two numerical examples (one single-output and one multiple-output) and ten real-world cases are used to compare the regression performance of OSCN, SCN, and IRVFLN.

The single-output numerical example is the real-valued function defined by

The dataset contains 1000 samples drawn from the uniform distribution on [0, 1]; 800 samples are used for training and 200 for testing. For all algorithms, the training RMSE tolerance ε is 0.05, and σ = 1e−6 for OSCN. For the SCN-based algorithms, the maximum number of candidate nodes Tmax and the maximum number of hidden nodes Lmax are set to 20 and 100, respectively. The scope set is Υ = {150:10:200} (i.e., scales λ = 150, 160, …, 200) for the SCN-based algorithms and [− 150, 150] for IRVFLN. Table 1 reports the network size, training time, and the AVE and DEV of the training and testing RMSE for all algorithms.
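Two details of this setup are easy to get wrong when reproducing it outside MATLAB: Υ = {150:10:200} is MATLAB colon notation for the scale list 150, 160, …, 200 (endpoint included), and each scale λ defines the uniform sampling interval [−λ, λ] for the hidden weights and biases. The sketch below expands such a list and makes the 800/200 split of 1000 uniform inputs. It generates inputs only, since the targets depend on the example function; the helper names and seeds are hypothetical.

```python
import random


def colon_range(start, step, stop):
    """Expand MATLAB-style start:step:stop (positive integer step, endpoint inclusive)."""
    return list(range(start, stop + 1, step))


def split_uniform_inputs(n_total=1000, n_train=800, seed=0):
    """Draw n_total scalar inputs from U[0, 1) and split them into training and testing sets."""
    rng = random.Random(seed)
    xs = [rng.random() for _ in range(n_total)]
    return xs[:n_train], xs[n_train:]


scope = colon_range(150, 10, 200)
print(scope)                                  # [150, 160, 170, 180, 190, 200]
train_x, test_x = split_uniform_inputs()
print(len(train_x), len(test_x))              # 800 200
lam = scope[0]
w = random.Random(1).uniform(-lam, lam)       # one hidden weight drawn from [-150, 150]
```

The same helper expands the scope {10:5:50} used in the multiple-output example.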

As Table 1 shows, at the same stopping RMSE, OSCN requires fewer hidden nodes than SCN with about the same training time, while still achieving a smaller RMSE and DEV.

To compare approximation capability, we set the stopping RMSE to 0.01. Fig. 3 plots the convergence curves (RMSE versus the number of hidden nodes) and the corresponding fitting curves. For this function y, IRVFLN is worse than the others in both network size and approximation accuracy. Compared with SCN, OSCN achieves the same desired approximation while effectively reducing the number of iterations.

To further compare the three algorithms, a multiple-output numerical example [42] with two inputs x1 and x2, two intermediate variables x3 and x4, and two outputs y1 and y2 is used. The relationships are as follows:

The inputs are drawn from the normal distribution N(− 0.5, 0.2), with mean − 0.5 and variance 0.2; 600 samples are used for training and 400 for testing. The maximum number of random configurations Tmax and the scope set are 10 and {10:5:50}, respectively, and σ = 1e−8 for OSCN. To expose further differences among the three algorithms, the number of hidden nodes is set to 4, 6, and 8. Table 2 reports the training results for the two outputs at each network size.
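Note that N(− 0.5, 0.2) is specified by its variance, whereas Python's `random.gauss(mu, sigma)` expects a standard deviation, so √0.2 ≈ 0.447 must be passed. The sketch below generates the two-dimensional inputs (x1, x2) for the 600/400 split under that reading; the seeds and helper name are hypothetical, and the intermediate variables and outputs of the example are not computed.

```python
import math
import random


def gaussian_inputs(n, mean=-0.5, variance=0.2, dim=2, seed=0):
    """Draw n points in R^dim whose coordinates are i.i.d. N(mean, variance)."""
    rng = random.Random(seed)
    std = math.sqrt(variance)  # random.gauss takes the standard deviation, not the variance
    return [[rng.gauss(mean, std) for _ in range(dim)] for _ in range(n)]


train = gaussian_inputs(600, seed=0)
test = gaussian_inputs(400, seed=1)
values = [v for point in train + test for v in point]
print(len(train), len(test), round(sum(values) / len(values), 3))
```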

The algorithms are compared in terms of the AVE and DEV of the training RMSE with the same number of hidden nodes. OSCN clearly converges faster than SCN and IRVFLN at every stage, especially in the later stage for y2, which indicates that the nodes added by OSCN contribute more to reducing the residual error. Moreover, Figs. 4, 5, 6 show, as contour plots, the estimation variance (VAR) of y1 and y2 at each input point (x1, x2) over 50 trials. The variances of SCN and IRVFLN are clearly larger than those of OSCN. Together, Table 2 and Figs. 4, 5, 6 show that, for this numerical example, OSCN converges faster than SCN and IRVFLN and is more stable in terms of both the training DEV and the pointwise estimation VAR.

Finally, we evaluate the efficiency and feasibility of OSCN on ten more complex real-world regression problems, described in Table 3. Table 4 lists the detailed parameter settings, including the training RMSE tolerance ε for each dataset; σ = 1e−6 for OSCN.

Table 5 compares OSCN, SCN, and IRVFLN in terms of the number of hidden nodes and the AVE and DEV of the training RMSE under the predetermined error tolerance for each dataset. It is worth noting that, for the same target error, OSCN requires fewer hidden nodes than both IRVFLN and SCN on all real-world datasets, even though SCN's supervisory mechanism already yields relatively compact networks.

Tables 5 and 6 together show that OSCN and SCN clearly approximate better than IRVFLN in most cases; IRVFLN generally cannot reach the target error within the specified Lmax. At a similar average training RMSE, OSCN predicts better and is more compact than SCN. OSCN also has a clear advantage over SCN in overall testing RMSE, especially on complex real-world datasets such as Concrete and Compactiv, which suggests that OSCN may perform more favorably on complex data. On the Forestfire dataset, however, OSCN is inferior to IRVFLN, although still better than SCN.

4.2 Real-world classification cases

In this part, the classification performance of OSCN is compared with that of SCN and IRVFLN using the same number of hidden nodes. Training and testing accuracy are compared on ten real-world multiclass classification datasets, described in Table 7. Table 8 lists the parameter settings of the algorithms, and Table 9 reports the AVE and DEV of the training and testing accuracy.

In general, the improvements in the classification experiments are less pronounced than in the regression experiments. Nevertheless, Table 9 shows that, with the same number of hidden nodes, OSCN achieves better overall training and testing accuracy than both SCN and IRVFLN. On the Pima dataset, IRVFLN is markedly more stable than the other two algorithms, but its accuracy is much lower than that of SCN and OSCN. Since higher expected accuracy is generally preferable to highly stable but poor results, OSCN and SCN are the better choices.

5 Complexity analysis

In this section, we analyze the computational complexity of the OSCN, SCN, and IRVFLN algorithms. Suppose that the number of training instances is N, the dimension of each training instance is n, the number of class labels is m, and the number of hidden nodes is L. Then H is of size \(L \times N\) and T is of size \(m \times N\). The three algorithms differ significantly in the time needed to solve for the output weights. OSCN has a computational complexity of \(O\left( L^{3} + mNL^{2} \right)\), whereas that of SCN is in general approximately \(O\left( L^{3} + mNL \right)\). Similarly, it is straightforward to show that the computational complexity of IRVFLN is \(O(mN)\).

6 Conclusion

This paper proposes an orthogonalization-based learning approach for SCNs. The proposed orthogonal SCN (OSCN) avoids generating redundant nodes, which reduces network complexity and improves convergence. Concretely, OSCN makes the candidate nodes orthogonal to the existing nodes and discards poor candidates according to a node-quality criterion. An orthogonal form of the supervisory mechanism is then established to guarantee the universal approximation property. Within the OSCN framework, the globally optimal output weights can be determined analytically by an incremental updating scheme, which is described in detail in this paper. Theoretical and experimental results show that OSCN reduces the number of iterations needed for convergence and improves stability as well as approximation and estimation capability. Future work will combine the proposed approach with block increments to further reduce the number of iterations and improve modeling efficiency.

Data availability

The datasets generated and/or analyzed during the current study are available in the UCI and KEEL dataset repositories; the UCI repository is at http://www.ics.uci.edu/~mlearn/MLRepository.html.

References

Deshpande G, Wang P, Rangaprakash D, Wilamowski B (2015) Fully connected cascade artificial neural network architecture for attention deficit hyperactivity disorder classification from functional magnetic resonance imaging data. IEEE Trans Cybern 45(12):2668–2679

Dai W, Liu Q, Chai T-Y (2015) Particle size estimate of grinding processes using random vector functional link networks with improved robustness. Neurocomputing 169:361–372

Huang S-C, Do B-H (2014) Radial basis function based neural network for motion detection in dynamic scenes. IEEE Trans Cybern 44(1):114–125

Najmaei N, Kermani MR (2011) Applications of artificial intelligence in safe human-robot interactions. IEEE Trans Syst Man Cybern B Cybern 41(2):448–459

Dai K, Zhao J, Cao F (2015) A novel algorithm of extended neural networks for image recognition. Eng Appl Artif Intel 42:57–66

Ma L, Khorasani K (2004) Facial expression recognition using constructive feedforward neural networks. IEEE Trans Syst Man Cybern B Cybern 34(3):1588–1595

Wang M, Fu W, He X, Hao S, Wu X (2022) A survey on large-scale machine learning. IEEE Trans Knowl Data Eng 34(6):2574–2594

Ahmad F, Abbasi A, Kitchens B, Adjeroh D, Zeng D (2022) Deep learning for adverse event detection from web search. IEEE Trans Knowl Data Eng 34(6):2681–2695

Alhamdoosh M, Wang D (2014) Fast decorrelated neural network ensembles with random weights. Inf Sci 264:104–117

Lin S, Zeng J, Zhang X (2019) Constructive neural network learning. IEEE Trans Cybern 49(1):221–232

Cao F, Wang D, Zhu H (2016) An iterative learning algorithm for feedforward neural networks with random weights. Inf Sci 1(9):546–557

Schmidt WF, Kraaijveld MA, Duin RPW (1992) Feedforward neural networks with random weights. In: Proceedings of the 11th IAPR International Conference on Pattern Recognition, Vol. II, Conference B: Pattern Recognition Methodology and Systems, The Hague, Netherlands, pp 1–4

Scardapane S, Wang D (2017) Randomness in neural networks: an overview. Wiley Interdiscip Rev Data Min Knowl Discov 7(2):e1200

Wang X-Z, Zhang T, Wang R (2017) Noniterative deep learning: incorporating restricted Boltzmann machine into multilayer random weight neural networks. IEEE Trans Syst Man Cybern Syst 49(7):1299–1308

Chen CLP, Wan JZ (1999) A rapid learning and dynamic stepwise updating algorithm for flat neural networks and the application to time-series prediction. IEEE Trans Syst Man Cybern B Cybern 29(1):62–72

Igelnik B, Pao Y-H (1995) Stochastic choice of basis functions in adaptive function approximation and the functional-link net. IEEE Trans Neural Netw 6(6):1320–1329

Pao Y-H, Park G-H, Sobajic DJ (1994) Learning and generalization characteristics of the random vector functional-link net. Neurocomputing 6(2):163–180

Pao Y-H, Takefuji Y (1992) Functional-link net computing, theory, system architecture, and functionalities. IEEE Comput 3(5):76–79

Xu K, Li H, Yang H (2019) Kernel-based random vector functional-link network for fast learning of spatiotemporal dynamic processes. IEEE Trans Syst Man Cybern Syst 49(5):1016–1026

Ye H, Cao F, Wang D (2020) A hybrid regularization approach for random vector functional-link networks. Expert Syst Appl 140:12912

Scardapane S, Wang D, Uncini A (2018) Bayesian random vector functional-link networks for robust data modeling. IEEE Trans Cybern 48(7):2049–2059

Zhang L, Suganthan PN (2017) Visual tracking with convolutional random vector functional link network. IEEE Trans Cybern 47(10):3243–3253

Kwok T-Y, Yeung D-Y (1997) Constructive algorithms for structure learning in feedforward neural networks for regression problems. IEEE Trans Neural Netw 8(3):630–645

Kwok T-Y, Yeung D-Y (1997) Objective functions for training new hidden units in constructive neural networks. IEEE Trans Neural Netw 8(8):1131–1148

Li M, Wang D-H (2017) Insights into randomized algorithms for neural networks: practical issues and common pitfalls. Inf Sci 382–383:170–178

Gorban AN, Tyukin IY, Prokhorov DV, Sofeikov KI (2016) Approximation with random bases: Pro et contra. Inf Sci 364–365:129–145

Li M, Gnecco G, Sanguineti M (2022) Deeper insights into neural nets with random weights. In: Proceedings of the Australasian Joint Conference on Artificial Intelligence, Perth, WA, Australia, pp 129–140

Wang D, Li M (2017) Stochastic configuration networks: fundamentals and algorithms. IEEE Trans Cybern 47(10):3466–3479

Lu J, Ding J (2019) Construction of prediction intervals for carbon residual of crude oil based on deep stochastic configuration networks. Inf Sci 486:119–132

Wang D, Li M (2017) Deep stochastic configuration networks with universal approximation property. arXiv:1702.05639; to appear in IJCNN 2018

Felicetti MJ, Wang D (2022) Deep stochastic configuration networks with different random sampling strategies. Inf Sci 607:819–830

Lu J, Ding J (2020) Mixed-Distribution based robust stochastic configuration networks for prediction interval construction. IEEE Trans Ind Inform 16(8):5099–5109

Wang D, Li M (2017) Robust stochastic configuration networks with kernel density estimation for uncertain data regression. Inf Sci 412:210–222

Li M, Huang C, Wang D (2019) Robust stochastic configuration networks with maximum correntropy criterion for uncertain data regression. Inf Sci 473:73–86

Lu J, Ding J, Dai X, Chai T (2020) Ensemble stochastic configuration networks for estimating prediction intervals: a simultaneous robust training algorithm and its application. IEEE Trans Neural Netw Learn Syst 31(12):5426–5440

Li M, Wang D (2021) 2-D stochastic configuration networks for image data analytics. IEEE Trans Cybern 51(1):359–372

Lancaster P, Tismenetsky M (1985) The theory of matrices: with applications. Elsevier, Amsterdam

Shores TS (2018) Applied linear algebra and matrix analysis, 2nd edn. Springer International Publishing, Cham, pp 206–312

Blake CL, Merz CJ (1998) UCI repository of machine learning databases. Department of Information and Computer Science, University of California, Irvine, CA. http://www.ics.uci.edu/~mlearn/MLRepository.html

Alcalá-Fdez J, Fernández A, Luengo J, Derrac J, García S, Sánchez L, Herrera F (2011) Keel data-mining software tool: data set repository, integration of algorithms and experimental analysis framework. J Mult Valued Log Soft Comput 17(2–3):255–287

Zhou P, Jiang Y, Wen C, Chai T-Y (2019) Data modeling for quality prediction using improved orthogonal incremental random vector functional-link networks. Neurocomputing 365:1–9

Liu Y, Wu Q-Y, Chen J (2017) Active selection of informative data for sequential quality enhancement of soft sensor models with latent variables. Ind Eng Chem Res 56(16):4804–4817

Boyd S, Vandenberghe L (2004) Convex optimization. Cambridge University Press, Cambridge

Funding

This work was supported in part by the National Natural Science Foundation of China under Grant 61973306, in part by the Natural Science Foundation of Jiangsu Province under Grant BK20200086, and in part by the Postgraduate Research & Practice Innovation Program of Jiangsu Province under Grant KYCX22_2560.

Author information

Contributions

WD: funding acquisition, project administration, supervision, and writing (review and editing). ZJ: conceptualization, formal analysis, methodology, visualization, and writing (original draft). CN: writing (review and editing). SZ: writing (review and editing). XW: writing (review and editing). All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Dai, W., Ning, C., Pei, S. et al. Orthogonal stochastic configuration networks with adaptive construction parameter for data analytics. Industrial Artificial Intelligence 1, 8 (2023). https://doi.org/10.1007/s44244-023-00004-4

DOI: https://doi.org/10.1007/s44244-023-00004-4