Abstract

The unpredictability of artificial intelligence (AI) services and products poses major ethical concerns for multinational companies, as evidenced by the prevalence of unfair, biased, and discriminatory AI systems. Examples such as Amazon’s recruiting tool, Facebook’s biased ads, and racially biased healthcare risk algorithms have raised fundamental questions about what these systems should be used for, the inherent risks they pose, and how those risks can be mitigated. Unfortunately, these failures not only highlight the lack of regulation in AI development but also reveal how organisations are struggling to alleviate the dangers associated with this technology. We argue that to successfully implement ethical AI applications, developers need not only a deeper understanding of the implications of misuse but also a grounded approach to their conception. Judgement studies were therefore conducted with experts from data science backgrounds, who identified six performance areas, resulting in a theoretical framework for the development of ethically aligned AI systems. This framework also reveals that these performance areas require specific mechanisms which must be acted upon to ensure that an AI system implements and meets ethical requirements throughout its lifecycle. The findings also outline several constraints that present challenges in the manifestation of these elements. By implementing this framework, organisations can contribute to elevated trust between technology and people, with significant implications for both IS research and practice. This framework will further allow organisations to take a positive and proactive approach in ensuring they are best prepared for the ethical implications associated with the development, deployment, and use of AI systems.


1 Introduction

Emboldened by developments in predictive analysis and algorithmic processes, AI is being applied in domains ranging from finance [13] to tourism [32], prompting data scientists, designers, and engineers to propose guidelines for its responsible development and deployment. Unfortunately, AI may be misused or behave in unpredicted and potentially harmful ways [5]. Given the proliferation of AI, pressure is building to design AI systems that are accountable, transparent, and fair. While academic discussion on the relationship between AI and ethics has been ongoing for decades, recent literature addresses technical approaches [24], laws to govern the impact of AI [29], and building trustworthiness [14], and the debate over which approach is most relevant remains unresolved [18]. This is partly because the literature has primarily focused on the potential of AI at a theoretical level [4], leaving open the question of how ethics should be developed in conjunction with these systems (Vakkuri and Abrahamsson [28]). These gaps offer an opportunity to assess extant approaches to incorporating ethics and values into AI (Greene et al. [10]).

2 Literature Review

2.1 Understanding AI

Conceptualised in the 1940s [7], AI is still considered an emerging technology because the techniques used to implement it are continuously evolving [25, 26]. AI has since become a powerful driver of innovation and change, deciding what information we see on social media [23], when to brake our vehicles when obstacles appear [27], and how to move money around with little human intervention [3]. Trends suggest that the depth of AI utilisation and its integration into all aspects of daily life will rapidly increase as the technology develops and costs decline [31]. AI can thus be conceptualised as an ecosystem comprising three elements: data collection and storage, statistical and computational techniques, and output systems [1]. Data collection devices gather information from various sources, e.g., wearable devices capturing physical activity. Algorithms then use the gathered information to predict consumer demands, e.g., Spotify suggesting songs based on listening patterns. Finally, output systems produce a response or communicate with users through interfaces [21]. This provision of personal data gives users access to customised information, services, and entertainment, often for free, primarily through services rooted in quantitative analysis and logic. However, tracing the origins of moral reasoning behaviour during system development has made ethical questions more visible and pressing (Greene et al. [10]).
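To make the three-element ecosystem concrete, the following minimal Python sketch chains data collection, a simple computational technique, and an output system, loosely modelled on the song-suggestion example. Everything in it is hypothetical and for illustration only: the function names, the record fields, and the use of a plain frequency count as the "statistical technique" are assumptions, not a description of how any real service works.

```python
from collections import Counter

# Element 1: data collection and storage (hypothetical listening events).
def collect(events):
    """Keep only the (song, genre) pairs needed downstream."""
    return [(event["song"], event["genre"]) for event in events]

# Element 2: statistical/computational technique (a plain frequency count).
def predict_preferred_genre(records):
    """Predict demand as the genre the user has listened to most often."""
    counts = Counter(genre for _, genre in records)
    if not counts:
        raise ValueError("no listening history to learn from")
    genre, _ = counts.most_common(1)[0]
    return genre

# Element 3: output system (turn the prediction into a user-facing message).
def respond(genre, catalogue, already_heard):
    """Suggest unheard songs from the predicted genre."""
    picks = [song for song, g in catalogue if g == genre and song not in already_heard]
    return f"Because you listen to {genre}: " + ", ".join(picks)

events = [
    {"song": "A", "genre": "jazz"},
    {"song": "B", "genre": "jazz"},
    {"song": "C", "genre": "rock"},
]
catalogue = [("A", "jazz"), ("D", "jazz"), ("E", "rock")]
records = collect(events)
message = respond(predict_preferred_genre(records), catalogue, {song for song, _ in records})
print(message)  # Because you listen to jazz: D
```

Each stage only consumes the previous stage's output, which mirrors the separation of data, technique, and output in the conceptualisation above; real systems would replace the frequency count with a trained model.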

2.2 Ethical Concerns

As Leslie [15] puts it, “AI ethics is a set of values, principles, and techniques that employ widely accepted standards of right and wrong to guide moral conduct in the development and use of AI technologies” (p. 4). AI ethics as a research stream has emerged in response to several individual and societal harms that the misuse, poor design, or negative unintended consequences of AI systems may cause [15]. For example, bias and discrimination are a common danger within these systems, as data-driven technologies can replicate and amplify patterns of marginalisation, discrimination, and inequality that exist within society. Indeed, many of the features and metrics of models that facilitate data mining may inadvertently replicate the designers’ prejudices [16]. Data samples used to train and test AI systems can be insufficiently representative of the populations from which they draw inferences, making biased outcomes likely because flawed data is fed into the system from the start [11]. Similarly, users affected by classifications, predictions, or decisions produced by AI systems may be unable to hold the responsible parties accountable. Automating cognitive functions can complicate the designation of responsibility for algorithmically generated outcomes, because the complex production, design, and implementation of AI systems make it difficult to pinpoint accountable parties. Should a negative consequence arise from system use, accountability gaps may harm the developer’s autonomy [6] and violate user rights [12]. Another concern is that machine learning models deliver results by executing high-dimensional correlations beyond the interpretive capabilities of human reasoning, so the rationale for outcomes that directly affect users may remain obscure to those users. Where processed data might harbour traces of bias, discrimination, unfairness, or inequality, such a lack of explainability may even be illegal in some jurisdictions, e.g., the European Union [22]. Furthermore, careless data management, irresponsible design, and contentious deployment practices can result in AI systems that produce unsafe, unreliable, or poor-quality outcomes, which can damage the wellbeing of individuals and society and undermine public trust [17].

3 Research Gap

Ethical analyses are required to avoid the risks associated with a lack of developmental oversight of these systems. Otherwise, these risks may outweigh the benefits, and society may reject AI applications despite their potential to improve information systems [2]. Ethical development and deployment of AI is the first step in this direction, but more needs to be done [8]. Even though academic discussion on the relationship between AI and ethics has been ongoing for decades, there is no shared definition of what AI ethics is or what it should entail (Vakkuri and Abrahamsson [28]). Given this state of uncertainty, we pose the following research questions:

1. What performance areas are required for developing ethically aligned AI?
2. What constraints do organisations experience in these performance areas to develop ethically aligned AI?
3. What key mechanisms are used in these performance areas to develop ethically aligned AI?

4 Research Strategy

To identify the performance areas of ethically aligned AI systems, expert judgement studies were conducted via semi-structured interviews. This format enabled the researcher to explore emergent areas as they arose in the interviews and to pursue additional lines of questioning in areas where the experts had evident experience. For this investigation, we define experts as “persons to whom society and/or peers attribute special knowledge about matters being elicited”, as per [9] (p. 681). Following the selection methodologies described by Yin [30], the selection of experts ensured the following objectives:

a. A representative sample of experts was obtained.
b. Useful variations of theoretical interest were gathered.
c. Experts have several years of experience working with AI.
d. Given AI’s broad impact, ethical concerns can only be successfully addressed from a multi-disciplinary perspective.

Based on these selection criteria, ten experts across several organisations were selected (outlined in Table 1).

The collected data were coded before a matrix of categories corresponding to the emerging performance areas was created. Visualising the data in this manner allowed the researcher to combine, compare, and report findings, while also providing an initial high-level analysis. The data were then arranged into significant groups through coding for data reduction, in which irrelevant, overlapping, or repetitive data were removed (O'Flaherty and Whalley [19]). Re-checking data, regularities, and patterns, drawing explanations, and reviewing findings with third parties were also employed, as per Yin [30]. Content analysis was additionally performed within each interview to ensure consistency and regularity.

5 Findings

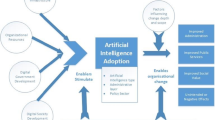

Only areas, constraints, and mechanisms that were observed multiple times by two or more experts formed the basis of these findings. Identified mechanisms are classified as either “essential”, meaning crucial to ensuring that an AI system meets ethical expectations throughout its lifecycle, or “advanced”, meaning able to deliver significant ethical value but potentially facing challenges in implementation. Figure 1 presents a preliminary theoretical framework for developing ethically aligned AI systems that emerged from this research.

5.1 Transparency

5.1.1 Constraints

Pursuing transparency can involve publicly disclosing intellectual property, which experts argued threatens competitive advantage. Instead, experts advised that developers provide their clients with an overview of their system, supplying customised code, creating plugins, and offering support in its application: “You should say, ‘This is a high-level view of how I built my model, and this is how it turned out’” (E-1).

5.1.2 Essential Mechanisms

The processes of an AI system need to be documented, with a focus on traceability. Systematic logging of information can mitigate future issues by supporting accountability and oversight: “There is always traceability from input to output” (E-1). This requirement includes recording variables such as datasets, algorithms, developers, and outcomes. Explainability can also instil confidence in the system and allay uncertainties about the decision-making process. Developers must be able to demonstrate that the system recognises appropriate ethical considerations: “You should be able to explain. Explainability.” (E-6). Striking a balance between data acquisition and service provision is essential to providing an excellent customer experience, and configurability emerged as essential for giving users the ability to set their own preferences regarding the use of their data. This begins with acquiring consent from users and ensuring they are informed about how their data will be utilised and why it is being collected: “If there’s targeted advertising algorithm run by Google, I probably don’t want to know why a certain app would be great for me. But if I do, it should be there. It’s important” (E-9).
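As an illustration of input-to-output traceability, the sketch below records, for each decision, the dataset, algorithm, developer, and outcome that the experts mentioned. It is a minimal sketch under stated assumptions: the `TraceRecord` fields, the `log_decision` and `find_trace` helpers, and the in-memory list standing in for append-only storage are all hypothetical choices, not a mechanism described by the experts.

```python
import json
import uuid
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone

@dataclass
class TraceRecord:
    """One input-to-output trace entry (hypothetical field names)."""
    dataset: str        # dataset the model was trained or evaluated on
    algorithm: str      # model name and version that produced the outcome
    developer: str      # person or team accountable for this model version
    model_input: dict   # input the system received
    outcome: str        # decision or output the system returned
    trace_id: str = field(default_factory=lambda: str(uuid.uuid4()))
    timestamp: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())

def log_decision(log, record):
    """Append the record as a JSON line; `log` stands in for append-only storage."""
    log.append(json.dumps(asdict(record), sort_keys=True))
    return record.trace_id

def find_trace(log, trace_id):
    """Trace a past outcome back to its input, dataset, algorithm, and developer."""
    for line in log:
        entry = json.loads(line)
        if entry["trace_id"] == trace_id:
            return entry
    return None
```

For example, `log_decision(log, TraceRecord("loans-2023", "credit-model v1.2", "team-a", {"income": 50000}, "approved"))` returns an identifier that `find_trace` can later resolve back to the full record; all of these values are invented for illustration.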

5.1.3 Advanced Mechanisms

Promoting open standards was identified as an advanced mechanism for developing transparency, with experts arguing that making source code publicly available can dispel presumptions of biased behaviour, intentional or otherwise, in AI systems used by organisations. Experts described this as a cost-effective solution that supports quality and interoperability and eliminates vendor lock-in for clients: “…promoting open standards we are sharing knowledge with others and learning from others” (E-3). Publishing algorithms also heightens transparency while providing the opportunity to identify any drawbacks or shortcomings, and establishing this process enables peer review of algorithms: “Publish the algorithms online, show the public how it is done in a way that they can have faith in the system” (E-3).

5.2 Accountability

5.2.1 Constraints

Government regulation of how accountability should be established for the activities performed by AI systems remains limited, and the area is subject to active debate. Experts noted that companies such as IBM have started adopting their own standards until legal requirements come into force, drawing parallels with other fields: “The Hippocratic oath: there should be an equivalent for data on an ongoing basis and strict regulations by law” (E-7). Experts recommended that organisations adopt accountability mechanisms for each use case of an AI system throughout its lifecycle by ensuring traceability. Experts also acknowledged that obtaining user consent to store activity logs, and therefore maintaining audit trails, may prove difficult once the system is under the client’s control, revealing a bias towards the providers of AI systems: “The biggest ethical consideration would be collecting and storing user data… You’ve got to trust the company enough to collect only the right data.” (E-9).

5.2.2 Essential Mechanisms

Shared responsibility is vital when it comes to accountability for flawed outputs: “If you are supplying the model, you should have accountability and ownership of it. If you are using the model, you should have the same accountability and ownership as well” (E-1). This is necessary because the data used in these algorithms passes through various stages involving multiple levels of stakeholders. The experts were unanimous that accountability also lies with the company responsible for the product or service producing the flawed outputs, highlighting that corporate responsibility is vital for providing oversight and direction of the project: “In the same way data breaches are a breach of customer data, the company itself is held responsible, so too should the company be held accountable for the outputs of the AI system” (E-2). While experts acknowledged the difficulty of providing accountability, they suggested conducting frequent system audits to ensure internal and external evaluation, and enforcing a common set of regulations for common practice across development teams: “Audits, there is a reporting obligation” (E-5).

5.2.3 Advanced Mechanisms

Companies were also advised to define the responsibility of each stakeholder throughout the AI system’s lifecycle. This is vital should legal issues arise concerning the development, distribution, or implementation of the system: “There has to be accountability. If cancer screening in Ireland sub-contract the work out, are the subcontractors held accountable? It must be defined” (E-6).
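Defining each stakeholder's responsibility can be made checkable. The hypothetical helper below flags lifecycle stages that have no named accountable party; the stage names follow the development, distribution, and implementation stages mentioned in the preceding paragraph, and the mapping format is an assumption for illustration.

```python
# Hypothetical lifecycle stages, following the stages named in the text.
LIFECYCLE_STAGES = ("development", "distribution", "implementation")

def unassigned_stages(responsibilities):
    """Return the stages with no named accountable party.

    `responsibilities` maps a stage to the party accountable for it; a
    missing key or an empty string both count as undefined.
    """
    return [stage for stage in LIFECYCLE_STAGES if not responsibilities.get(stage)]

# Example: subcontracted implementation, but no agreed owner for distribution.
responsibilities = {
    "development": "vendor ML team",
    "distribution": "",
    "implementation": "subcontractor",
}
print(unassigned_stages(responsibilities))  # ['distribution']
```

A check like this could run before a contract or deployment is signed off, so that gaps such as the subcontracting question raised by E-6 surface early.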

5.3 Diversity

5.3.1 Constraints

Catering to all cultural and geopolitical sensitivities was perceived to be a challenge, primarily because collecting diversified data across global locations requires contextual awareness: “In Europe, ethics might be different than in parts of China. How do you get that consistency?” (E-6). Compounding this constraint, jurisdiction-specific data protection regulations and AI legislation continue to emerge across global markets and should be taken into consideration.

5.3.2 Essential Mechanisms

Multicultural teams can make a system more ethically aligned: “If you have more diverse teams developing AI systems, you’ll catch the biases sooner” (E-5). Such diversity can help identify potential ethical issues early in development: “It’s not just one white male view of the world. Diversity is not just gender, it’s sexuality, race, ethnicity etc.… It’s a broader perspective.” (E-9). Experts also highlighted the importance of capturing datasets that are diverse in terms of gender, age, culture, and location: “Make sure the dataset you are using has enough diversity and inclusion… See how that correlates to what outputs emerge” (E-1). Eliminating bias is also necessary to prevent prejudice present in society from being fed into an AI system: “The most ethical decision is making sure the training dataset for building the models is free from bias as possible” (E-2). One suggested approach is to put supervision mechanisms in place to oversee the development process: “Human supervision is needed to see that we are not going over the line and are still ethically aligned” (E-8).
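One way to act on the advice to check dataset diversity is to report each group's share of the data for a given attribute and flag groups that fall below a chosen threshold. This is a minimal sketch: `representation_report`, the 10% default threshold, and the record layout are hypothetical, and a suitable threshold depends on the population the system serves. A group's share alone does not establish that a dataset is free from bias; it only highlights gaps worth investigating.

```python
from collections import Counter

def representation_report(records, attribute, expected_groups=(), min_share=0.10):
    """Report each group's share of `records` for one attribute.

    Groups listed in `expected_groups` but absent from the data are
    reported with a share of 0. `min_share` is a hypothetical threshold.
    """
    counts = Counter(record[attribute] for record in records)
    total = sum(counts.values())
    report = {}
    for group in set(counts) | set(expected_groups):
        share = counts.get(group, 0) / total if total else 0.0
        report[group] = {"share": round(share, 3), "under_represented": share < min_share}
    return report

# Hypothetical training records described only by an age band.
data = [{"age_band": "18-34"}] * 12 + [{"age_band": "35-54"}] * 7 + [{"age_band": "55+"}] * 1
report = representation_report(data, "age_band", expected_groups=["75+"])
for group in sorted(report):
    print(group, report[group])
```

The same report could be run per attribute (gender, age, culture, location) and, following E-1's suggestion, compared against the model's outputs per group.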

5.3.3 Advanced Mechanisms

Developers should focus on establishing universal system requirements that reflect a range of contextual factors: “Socioeconomical, geopolitical etc.… you must train these systems as every culture has differences and you must take those into account” (E-10). Developers should consider diverse use cases, the context in which these systems and models are created, and who is affected by their outputs, so that “…the data the machine uses caters to universal requirements” (E-2).

5.4 Data Governance

5.4.1 Constraints

Several layers of stakeholders, from developers and testers to data administrators, hold access rights to sensitive data on which the system might rely, creating a danger of privilege exploitation. Whether anonymised or pseudonymised, this data remains accessible to privileged users and may be subject to intentional or unintentional modification. The experts advised organisations to continually evolve their mechanisms for managing privileged accounts to minimise these risks. For example, “shared accounts should be eliminated, audit trails must be maintained, and automated auditing technologies should be utilised to raise the alarm against malicious activities” (E-1).
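The experts' call for maintained audit trails and automated alarms could be approached in many ways. The sketch below uses one technique the experts did not name, a hash chain, in which each log entry's SHA-256 digest covers the previous digest, so modifying an earlier entry makes verification fail from that point onward. The `AuditTrail` class, its fields, and the rejection of configured shared accounts are hypothetical illustrations; timestamps and persistent storage are omitted for brevity.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder digest for the first entry

def _digest(previous_digest, entry):
    """SHA-256 over the entry (canonical JSON) chained to the previous digest."""
    payload = previous_digest + json.dumps(entry, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()

class AuditTrail:
    """Append-only audit log whose entries are linked by a hash chain."""

    def __init__(self, shared_accounts=()):
        self.shared_accounts = set(shared_accounts)  # accounts that must not be used
        self.entries = []  # list of (entry, digest) pairs

    def record(self, user, action, resource):
        if user in self.shared_accounts:
            raise ValueError(f"shared account {user!r} is not permitted; use a named account")
        entry = {"user": user, "action": action, "resource": resource}
        previous = self.entries[-1][1] if self.entries else GENESIS
        self.entries.append((entry, _digest(previous, entry)))

    def first_tampered_index(self):
        """Return the index of the first entry that fails verification, or None."""
        previous = GENESIS
        for index, (entry, digest) in enumerate(self.entries):
            if _digest(previous, entry) != digest:
                return index
            previous = digest
        return None

trail = AuditTrail(shared_accounts={"admin_shared"})
trail.record("alice", "read", "patients.csv")
trail.record("bob", "update", "patients.csv")
print(trail.first_tampered_index())  # None
trail.entries[0][0]["action"] = "delete"  # simulate a silent modification
print(trail.first_tampered_index())  # 0
```

A privileged user who can rewrite the whole log could recompute every digest, so in practice the latest digest would also need to be stored somewhere that user cannot alter; an automated job calling `first_tampered_index` periodically could then raise the alarm.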

5.4.2 Essential Mechanisms

Data integrity must be retained throughout a system’s lifecycle, and measures should be taken for the anonymisation and cleansing of data wherever appropriate: “Before giving the data to the system, we do the cleaning of data and remove unwanted data” (E-8). This further mitigates the likelihood of bias and is achieved by using generalised datasets to train the systems and excluding datasets that do not represent all demographics. Notably, groupthink needs to be avoided at this stage, as like-minded team members might be biased towards certain datasets. Developers must also adhere to data utilisation principles, ensuring data is used only for the purpose for which it was collected. The data owners’ key concerns were identified as how, where, and why their data will be used, and addressing these concerns requires extensive work on the data itself: “80% of any AI project is spent looking at the data, the range of values within that data, and the features within that dataset. It is a huge piece of work that has to happen before building any model” (E-2). Obtaining the data owner’s consent was also identified as an essential means of ensuring an appropriate level of system governance, as systems should not fetch any data as input if the data owner’s consent is missing. Organisations must obtain and retain evidence of the data owner’s consent to eliminate the possibility of unintentional infringements: “you must show the characteristics of the data being used, showing them that is securely held, and that it is done in a way that they can have faith in the system” (E-3).
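The rule that systems should not fetch data as input without the owner's consent, combined with the principle that data is used only for the purpose for which it was collected, can be sketched as a gate applied before training or inference. The `ConsentRecord` structure, its `evidence_ref` field, and the `admissible_records` helper are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ConsentRecord:
    """Retained evidence of a data owner's consent (hypothetical fields)."""
    owner_id: str
    purposes: frozenset  # purposes the owner agreed to
    evidence_ref: str    # pointer to the stored consent form

def admissible_records(records, consents, purpose):
    """Split records into those usable for `purpose` and the owners excluded.

    A record is admitted only if its owner has a consent record covering
    this specific purpose; everything else is held back from the model.
    """
    admitted, excluded_owners = [], []
    for record in records:
        consent = consents.get(record["owner_id"])
        if consent is not None and purpose in consent.purposes:
            admitted.append(record)
        else:
            excluded_owners.append(record["owner_id"])
    return admitted, excluded_owners

consents = {
    "u1": ConsentRecord("u1", frozenset({"credit_scoring"}), "consent-form-001"),
    "u2": ConsentRecord("u2", frozenset({"marketing"}), "consent-form-002"),
}
records = [
    {"owner_id": "u1", "income": 52000},
    {"owner_id": "u2", "income": 61000},
    {"owner_id": "u3", "income": 47000},
]
admitted, excluded = admissible_records(records, consents, "credit_scoring")
print([r["owner_id"] for r in admitted], excluded)  # ['u1'] ['u2', 'u3']
```

Owner `u2` consented, but only to a different purpose, so the record is excluded just like `u3`, who has no consent on file; the `evidence_ref` field is where the retained proof of consent would be linked.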

5.4.3 Advanced Mechanisms

Embracing open-source development reduces the possibility of unintended data infringements. Although relying solely on this approach poses obstacles, it was argued: “AI should be there from an open-source perspective so that it can be reused by other data scientists, that is very important” (E-1). Prior to utilisation, the organisation should adopt procedures to test the confidentiality, integrity, and availability (CIA) requirements of the data in each use case required for the AI system: “Make sure the datasets are ethically aligned with your core ethos. If you are gathering data from data sources you barely even know, you can expect bad surprises” (E-9).

5.5 Security

5.5.1 Constraints

Experts highlighted that it is difficult to make a system fully secure because of the constantly evolving threats these systems face. Nevertheless, resilience capabilities should be incorporated where possible to counter malicious activity, for example by creating systems that allow the organisation to reconfigure them dynamically when a threat is detected. This can be made possible through in-house or outsourced security operations centres that continuously monitor AI systems. The organisation may also choose to adopt calibration as a service, which, among other offerings, serves to prevent falsified data.

5.5.2 Essential Mechanisms

Access controls should be granted according to user requirements, so that developers, administrators, managers, and others have different levels of permissions, reflecting “the human aspect of what people or teams have access to this system, and how we are auditing their access to it and the actions they take when they interact with it” (E-2). Experts advised establishing access control policies, procedures, and guidelines to ensure authorised use of the system. They recommended that a data privacy officer (DPO) be involved in structuring this level of governance for accessing sensitive data and in conducting regular security audits. Senior security leaders should create a defined audit programme, formulating security strategies, risk management policies, and procedures for performing risk assessments: “The key is auditing… We must ensure auditability” (E-4). Organisations may opt for control self-assessments or external security audits to ensure compliance where required. Experts also emphasised the importance of secure algorithms, stressing that effective security protocols should be followed “to make sure an organisation’s algorithms can’t be externally influenced” (E-1). Because these algorithms are responsible for manipulating and interpreting data, sufficient security is vital for system integrity.

5.5.3 Advanced Mechanisms

Experts recommended that organisations manage privileged accounts through identity and access management to minimise risk. Through this approach, organisations can apply roles, responsibilities, and policies to either individual or group-level accounts depending on their purpose: “Developers could be placed within the one team, with established permissions as to what actions they can take already allocated to that team account” (E-5). The experts described it as best practice to create users with a least-privilege mentality, giving no access when an individual account is first created. It is then up to senior security leaders to grant additional access to individual accounts based on need, and such permissions should be continually monitored.
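A least-privilege, default-deny access model with team-level permissions, as the experts describe, might look like the following minimal sketch. The `AccessManager` class and its methods are hypothetical; a production system would rely on an established identity and access management service rather than an in-memory structure like this.

```python
class AccessManager:
    """Default-deny access model: new accounts start with no permissions."""

    def __init__(self):
        self.team_permissions = {}  # team -> set of allowed actions
        self.user_teams = {}        # user -> set of teams the user belongs to
        self.user_grants = {}       # user -> individually granted actions
        self.grant_log = []         # every individual grant, kept for monitoring

    def create_user(self, user):
        # Least privilege: a new account has no teams and no grants.
        self.user_teams[user] = set()
        self.user_grants[user] = set()

    def define_team(self, team, actions):
        self.team_permissions[team] = set(actions)

    def add_to_team(self, user, team):
        self.user_teams[user].add(team)

    def grant(self, user, action, approved_by):
        """Individual grant approved by a named security lead, and logged."""
        self.user_grants[user].add(action)
        self.grant_log.append((user, action, approved_by))

    def is_allowed(self, user, action):
        if user not in self.user_teams:
            return False  # unknown accounts are denied
        from_teams = set().union(
            *(self.team_permissions.get(team, set()) for team in self.user_teams[user])
        )
        return action in from_teams or action in self.user_grants[user]

am = AccessManager()
am.create_user("dana")
print(am.is_allowed("dana", "train_model"))  # False: no access by default
am.define_team("developers", {"read_code", "train_model"})
am.add_to_team("dana", "developers")
print(am.is_allowed("dana", "train_model"))  # True: inherited from the team account
```

Keeping individual grants in `grant_log` reflects the experts' point that permissions added beyond the team baseline should be continually monitored.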

5.6 Culture

5.6.1 Constraints

Complacency emerged as the main constraint on creating an ethical culture; for example, organisations may deal reactively with major events by “coming up with minimum effort to fix something. That complacency is not good enough with AI.” (E-7). This complacency may result in neglectful behaviour that can lead to biased decision-making when developing these systems. Experts agreed that organisations need to act proactively: “…come up with our own standards, and that should definitely be on a proactive basis” (E-7).

5.6.2 Essential Mechanisms

When it comes to translating ethical expectations into practice, training emerged as a vital area for creating and sustaining an ethical work culture: “…training, simple as that. Developers should be trained so mistakes should be avoided” (E-4). When employees are trained in ethical guidelines, they learn the company’s ethos while also becoming more skilled at addressing flawed datasets or emerging bias. Education also emerged as integral to informing stakeholders of their responsibilities, not only the developers responsible for delivering an ethically aligned system but also the clients who will implement it: “Educating users plays an important role… the company must educate the clients on what responsibilities lie with them, and what the company is responsible for” (E-4). Consumers must understand what the AI system was developed for, as misconceptions hinder its deployment.

5.6.3 Advanced Mechanisms

Responsible innovation requires creators to anticipate, reflect, and proactively engage users. Engaging relevant stakeholders throughout the system’s lifecycle works to enhance technological acceptance and user awareness and to eliminate unintended ethical consequences. For example, because a poorly trained model that generates bad responses can substantially damage customer trust, it should be “in the company’s interest to think about system requirements proactively… You can’t wait and be reactive” (E-2). If something were to go wrong with the system, “there would be reputational damage, client damage, financial damage. You have to proactively manage your requirements” (E-3). It is essential that organisations “definitely come up with their own standards on a proactive basis” (E-7).

6 Discussion, Conclusions, and Implications

As AI becomes more prominent, organisations face more pressure than ever to uphold strict standards and produce ethically aligned AI systems. This research contributes to the still-emerging field of ethical AI by identifying six distinct performance areas that are crucial to its development. We further identify the main constraints organisations may encounter within these areas, along with the essential and advanced mechanisms required for their success. While we endeavoured to achieve the highest levels of objectivity, accuracy, and validity, as is true of any research, this study has several limitations that future research can address. Given the approach of this research, a relatively small sample of qualitative interviews was conducted, which may limit generalisability. While this research makes an initial foray into developing a preliminary theoretical framework for ethical AI development, future studies are advised to explore these issues further through large-scale quantitative investigations with larger populations. Future research is also advised to explore emerging key risk factors and key control indicators, to be combined with the output of this investigation to achieve greater alignment of AI systems.

Nonetheless, considering the lack of empirical studies exploring the mechanisms of ethical AI development, these findings make a novel contribution to the literature. This research shows several ways in which the framework can assist organisations in implementing a robust, ethically aligned compliance strategy, while also offering several future research directions. Firstly, this research identifies the spectrum of requirements for establishing ethically aligned AI systems. The framework provides organisations with a roadmap for creating their ethical strategy across six key areas, allowing a more granular perspective on what these systems require and a more detailed appreciation of how these performance areas may be developed and managed for strategy formulation. Secondly, from a practical point of view, these findings reveal the importance of being aware not only of which areas should be focused on during the development of these systems, but also of which constraints organisations might face. This research identifies the strategic value of countering these constraints, as it clarifies what components organisations lack, the capabilities they require, and the realistic approaches their execution demands. Thirdly, the analysis of these areas represents a level of complexity that has not yet been empirically examined, providing a sound basis for further work. We encourage future studies to explore this framework through various lines of enquiry.

Several managerial implications have also been outlined. Firstly, this research reinforces the strategic value of planning for ethically aligned AI systems, advocating that should developers neglect these areas, they are putting themselves at a distinct disadvantage. Secondly, organisations must recognise the inherent value offered by these performance areas, rather than considering them only to be supporting tools. Using this framework, organisations can contribute to elevated trust between technology and people, preventing harm (intentional or otherwise) to consumers and society. These novel findings offer value to both developers and practitioners in developing a systemic culture of AI innovation that is conducive towards ethically aligned principles, while identifying how they can be tangibly operationalised.

7 Availability of Material

This investigation adhered to a strict ethical approval process, subject to evaluation by an external department committee. The purpose of the investigation was provided to each participant, along with the interview protocol, before signed consent forms were obtained for study participation and the publication of any future materials from the participants. The author confirms that the data supporting the findings of this study are available within the article.

Data availability

The data that underlies the findings of this research paper are available upon request from the corresponding author for the purpose of replicating and verifying the results presented in this study. The data will be made available in compliance with the regulations and policies governing data sharing and confidentiality. Please note that some data may be subject to restrictions due to privacy and confidentiality concerns or legal agreements with data providers. In such cases, the corresponding author will work with interested parties to facilitate access to the data within the confines of these restrictions.

Abbreviations

- AI: Artificial intelligence
- CIA: Confidentiality, integrity, and availability
- DPO: Data privacy officer
- E: Expert
- IBM: International Business Machines

References

Agrawal A, Gans J, Goldfarb A. Prediction machines: the simple economics of artificial intelligence. Cambridge: Harvard Business Press; 2018.

Batista R, Villar O, Gonzalez H, Milian V. Cultural challenges of the malicious use of artificial intelligence in Latin American Regional Balance. Proceedings of the 2nd European Conference on the Impact of Artificial Intelligence and Robotics, ECIAIR. 2020. pp. 7–13.

Boison E, Tsao L. Money moves: following the money beyond the banking system. US Att’ys Bull. 2019;67:95.

Brundage M. Limitations and risks of machine ethics. J Exp Theor Artif Intell. 2014;26(3):355–72.

Cath C. Governing artificial intelligence: ethical, legal and technical opportunities and challenges. New York: The Royal Society Publishing; 2018.

Chen JQ. Who should be the boss? Machines or a human? ECIAIR 2019 European Conference on the Impact of Artificial Intelligence and Robotics. Academic Conferences and Publishing Limited; 2019. p. 71.

Copeland BJ. The essential Turing. Oxford: Clarendon Press; 2004.

Dwivedi YK, Hughes L, Ismagilova E, Aarts G, Coombs C, Crick T, Duan Y, Dwivedi R, Edwards J, Eirug A. Artificial intelligence (AI): multidisciplinary perspectives on emerging challenges, opportunities, and agenda for research, practice and policy. Int J Inf Manag. 2019. p. 101994.

Garthwaite PH, Kadane JB, O’Hagan A. Statistical methods for eliciting probability distributions. J Am Stat Assoc. 2005;100(470):680–701.

Greene D, Hoffmann AL, Stark L. Better, nicer, clearer, fairer: a critical assessment of the movement for ethical artificial intelligence and machine learning. Proceedings of the 52nd Hawaii International Conference on System Sciences. 2019.

Gurkaynak G, Yilmaz I, Haksever G. Stifling artificial intelligence: human perils. Comput Law Secur Rev. 2016;32(5):749–58.

Hu Q, Lu Y, Pan Z, Gong Y, Yang Z. Can AI artifacts influence human cognition? The effects of artificial autonomy in intelligent personal assistants. Int J Inf Manag. 2021;56:102250.

Königstorfer F, Thalmann S. Applications of artificial intelligence in commercial banks – a research agenda for behavioral finance. J Behav Exp Financ. 2020;27:100352.

Kroll JA. The fallacy of inscrutability. Philos Trans R Soc A Math, Phys Eng Sci. 2018;376(2133):20180084.

Leslie D. Understanding artificial intelligence ethics and safety: a guide for the responsible design and implementation of AI systems in the public sector. SSRN Electron J. 2019. https://doi.org/10.2139/ssrn.3403301.

Luengo-Oroz M, Bullock J, Pham KH, Lam CSN, Luccioni A. From artificial intelligence bias to inequality in the time of COVID-19. IEEE Technol Soc Mag. 2021;40(1):71–9.

Miraz MH, Excell PS, Ali M. Culturally inclusive adaptive user interface (CIAUI) framework: exploration of plasticity of user interface design. Int J Inf Technol Dec Mak (IJITDM). 2021;20(1):199–224.

Nemitz P. Constitutional democracy and technology in the age of artificial intelligence. Philos Trans R Soc A Math, Phys Eng Sci. 2018;376(2133):20180089.

O'Flaherty B, Whalley J. Qualitative analysis software applied to IS research: developing a coding strategy. ECIS 2004 Proceedings. 2004. p. 123.

Pereira A. Ethical Challenges in Collecting and Analysing Biometric Data. Proceedings of the 2nd European Conference on the Impact of Artificial Intelligence and Robotics, ECIAIR. 2020. p. 108–114.

Puntoni S, Reczek RW, Giesler M, Botti S. Consumers and artificial intelligence: an experiential perspective. J Mark. 2021;85(1):131–51.

Schmidt P, Biessmann F, Teubner T. Transparency and trust in artificial intelligence systems. J Decis Syst. 2020;29(4):260–78.

Schroeder J. Marketplace theory in the age of AI communicators. First Amend L Rev. 2018;17:22.

Selbst AD. Disparate impact in big data policing. Ga L Rev. 2017;52:109.

Stahl BC, Eden G, Jirotka M. Responsible research and innovation in information and communication technology: identifying and engaging with the ethical implications of ICTs. Responsible innovation: managing the responsible emergence of science and innovation in society. Chichester: John Wiley & Sons Ltd; 2013. p. 199–218.

Stahl BC, Timmermans J, Flick C. Ethics of emerging information and communication technologies: on the implementation of responsible research and innovation. Sci Public Policy. 2017;44(3):369–81.

Thierer A, Hagemann R. Removing roadblocks to intelligent vehicles and driverless cars. Wake Forest JL & Pol’y. 2015;5:339.

Vakkuri V, Abrahamsson P. The key concepts of ethics of artificial intelligence. 2018 IEEE International Conference on Engineering, Technology and Innovation (ICE/ITMC). IEEE; 2018. p. 1–6.

Wachter S, Mittelstadt B, Floridi L. Transparent, explainable, and accountable AI for robotics. Sci Robot. 2017;2(6):eaan6080.

Yin R. Case study research: design and methods. 4th ed. London: Sage Publications; 2008.

Zhang D, Mishra S, Brynjolfsson E, Etchemendy J, Ganguli D, Grosz B, Lyons T, Manyika J, Niebles JC, Sellitto M, et al. The AI Index 2021 annual report. arXiv preprint arXiv:2103.06312. 2021. Accessed 20 July 2023.

Zsarnoczky M. How does artificial intelligence affect the tourism industry? VADYBA. 2017;31(2):85–90.

Acknowledgements

This research was presented at the European Conference on the Impact of Artificial Intelligence and Robotics (2021).

Funding

This study received no funding.

Author information

Contributions

ST is the sole author of this study.

Ethics declarations

Conflict of Interest

The author has no competing interests to declare.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Treacy, S. Mechanisms and Constraints Underpinning Ethically Aligned Artificial Intelligence Systems: An Exploration of Key Performance Areas. Hum-Cent Intell Syst 3, 189–196 (2023). https://doi.org/10.1007/s44230-023-00036-0

DOI: https://doi.org/10.1007/s44230-023-00036-0