Abstract

Making responsible lending decisions involves many factors. There is a growing amount of research on machine learning applied to credit risk evaluation. By using data on previous lending decisions and their outcomes, this promises to enhance diversity in lending without impacting the quality of the credit available. However, the most accurate machine learning methods often predict in ways that are not transparent to human domain experts. A consequence is increasing regulation in jurisdictions across the world requiring automated decisions to be explainable. Before the emergence of data-driven technologies, lending decisions were based on human expertise, so explainable lending decisions can, in principle, be assessed by human domain experts to ensure they are fair and ethical. In this study we hypothesised that human expertise may be used to overcome the limitations of inadequate data. Using benchmark data, we investigated using machine learning on a small training set and then correcting errors in the training data with human expertise applied through Ripple-Down Rules. We found that the resulting combined model not only performed equivalently to a model learned from a large set of training data, but that the human expert’s rules also improved the decision making of the latter model. The approach is general, and can be used not only to improve the appropriateness of lending decisions, but also potentially to improve responsible decision making in any domain where machine learning training data is limited in quantity or quality.



1 Introduction

At the same time as banks and other lenders seek to make financial decisions more automated, there is an increasing demand from regulatory authorities for responsibility and accountability in these decisions. This is a particular challenge for banks’ credit risk management, where machine learning (ML) promises to improve on current techniques in applications such as credit scoring by leveraging the increasing availability of data and computation [1]. Credit scoring is typically based on models designed to classify applicants for credit as either “good” or “bad” risks [2]. Machine learning applications in credit scoring have been shown to improve accuracy, but the best-performing algorithms, such as ensemble classifiers and neural networks, require large datasets, which may not be easy to obtain, and lack interpretability, which presents issues for fairness, compliance and risk management [3, 4]. Owing to this, less accurate but more interpretable models are often still used in practice [5], yet these models can also be less accurate in ways that relate to fairness.

In this paper we introduce a way of addressing both issues by combining ML classification models trained on limited data with a well-established form of “human-in-the-loop” knowledge acquisition based on Ripple-Down Rules (RDR) [6] to construct fair and compliant rules that could also improve overall performance. The proposed framework, referred to as ML+RDR, enables a human domain expert to incrementally refine and improve a model trained using machine learning by adding rules based on domain knowledge applied to those cases where the machine learning model fails to fairly and correctly predict the outcome. If all cases processed are monitored in this way, then domain knowledge could be used to correct any error made by the machine learning model. In addition, when developing a machine learning model, there are usually training cases to which machine learning does not learn to assign the label given in the training data. This provides a pre-selected set of incorrectly classified cases for which the domain expert can add rules, and in the study here we have only added rules for these cases. That is, in this paper we have only applied RDR to correct such “data errors”, without considering whether these are specifically related to fairness, as our aim was specifically to evaluate the effectiveness of focusing on classification errors made by the ML model on the training data. The potential for extension of our approach to correcting “fairness errors” is, however, described when we analyse the error-correcting process of RDR in more detail later in the paper.

The implications of the approach are far wider than simple error correction in that the judgement of the human domain expert may be based on all sorts of considerations of what is “responsible” judgement by a financial institution about a particular case, such as the regulatory requirements for credit rating criteria. For example, according to the Australian Prudential Regulation Authority (APRA), such criteria must be fair, plausible, intuitive and result in a meaningful differentiation of risk [7]. Rules created by a domain expert can be expected to adhere to such criteria, including fairness of a decision and, due to their interpretability, can be easily checked against such requirements.

With such objectives in mind, we have designed our approach to be consistent with common requirements from lender institutions or banks. First, we retain the flexibility to customise the conversion from raw scores to calibrated probabilities, and then to binary outcomes, based on a cost/reward structure aligned to the business use case. Second, because the scores are calibrated, the decision strategy remains stable when the raw scores are updated after the ML model is improved by rule acquisition. Finally, we provide transparency on the process of converting raw scores to calibrated scores and on the decision strategy, with a declarative specification of how this is done. An overview of the framework for our proposed approach is shown in Fig. 1.

Fig. 1 A framework for responsible decision-making. The framework assumes that a machine learning algorithm (ML) runs on a training dataset to construct a model (top panel). As cases (either from the training set in our controlled experiments, or, in the intended use case, new applications for credit) come in for decisioning, the ML model is enhanced with a knowledge base (RDR) built incrementally through human interaction. In this way the knowledge acquisition process incrementally adds human expertise to the RDR so that it correctly handles cases that are wrongly classified by ML (cases correctly classified by ML do not require processing by RDR).

The remainder of the paper is structured as follows: Section 2 presents related work and the context for the framework proposed in this paper. Section 3 describes the experimental setup and the framework for domain-knowledge-driven credit risk assessment. Section 4 describes the experimental results. Section 5 illustrates how domain knowledge could be leveraged to improve model performance in the credit risk domain, using the knowledge acquisition framework (RDR) that enables a domain expert to construct the new features and rules that may be necessary. Section 6 provides concluding remarks and discusses future directions.

2 Related Work and Background

In this paper we address the issue of responsible AI systems, focusing on the area of financial technology (fintech). Dictionary definitions of “responsible” mention obligations to follow rules or laws, or having control over or being a cause of some outcome or action. A simple summary of these meanings is to be accountable or answerable for something, in the literal sense of being able to give an account of something that happened, i.e., to be able to give an explanation for it, which is a characteristic of human expertise [8].

2.1 Responsible AI and Fintech

In the context of AI, the term “responsible” typically relates to issues of algorithmic fairness, or the avoidance of various forms of prohibited or undesirable bias, in AI-mediated decision-making [9]. To ensure fairness, AI systems must be accountable in terms of legal and ethical requirements, and to be accountable they must be able to explain their decisions to human experts in the relevant domains [10]. One proposal is to view responsibility as the intersection of fairness and explainability, but both of these are largely open problems; for example, it is not straightforward to evaluate either [11], and there is a growing number of definitions of fairness in the literature [12].

Towards ensuring fairness through explainability, over the past decade there has been a rapid increase [13] in research on methods of making AI systems, the data on which they are trained, and the outputs that they generate, more intelligible to humans [14, 15]. Such research covers techniques referred to mainly either as explainable AI (XAI) [16] or interpretable ML (IML) [17] (these and other terms, such as transparent or intelligible, are also often used synonymously; in this paper we will use these terms interchangeably).

Furthermore, it is widely assumed that there is a trade-off in ML whereby increases in the predictive accuracy of models lead to decreases in their intelligibility, but this ignores the fact that, by applying additional explainability methods to performant black box models, their errors can be further analysed and potentially corrected [10].

Generally, the techniques currently used for explainability of ML models are applied as part of commercially available or open-source software tools [Footnote 1]. Some commonly available methods can be summarised as follows:

1. Variable or feature importance in model predictions, e.g., Shapley values [18], can be used to assess whether the output predicted by a black box model for a given input is consistent with human understanding in a domain.

2. Surrogate models that are chosen to be inherently interpretable, e.g., linear regression or decision tree models in LIME [19], can explain the prediction of a black box model for a test instance when trained on synthetic data that is generated to be similar to the instance and classified by the black box model.

3. Quantitative and qualitative standardized documentation from evaluation of ML models in the form of “model cards” [20] can highlight potential impacts on various groups due to characteristics of the data captured in black box models.

4. Fairness metrics can be used where membership of individuals in known protected or sensitive groups is indicated in the data by a variable; ML models can then be trained under the constraint that classification performance should be similar for individuals, whether or not they are members of sensitive groups [21].

5. Visualizations such as plots or dashboards based on any of these methods [22].
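As an illustration of item 1, the following Python sketch computes exact Shapley values by enumerating every coalition of features, using a fixed reference applicant to stand in for “absent” features (one of several possible value functions). The scoring function, the feature names (`dti`, `emp_length`, `recent_accounts`) and the reference values are hypothetical and are not taken from the models in this paper; practical tools approximate these values, because exact enumeration grows exponentially with the number of features.

```python
from itertools import combinations
from math import factorial


def shapley_values(score, x, baseline):
    """Exact Shapley values of score(x) relative to score(baseline).

    Features outside a coalition take their baseline value. The cost is
    exponential in the number of features, so this is for illustration only.
    """
    features = list(x)
    n = len(features)

    def value(coalition):
        # Use x for features in the coalition and the baseline elsewhere.
        z = {f: (x[f] if f in coalition else baseline[f]) for f in features}
        return score(z)

    phi = {}
    for f in features:
        others = [g for g in features if g != f]
        total = 0.0
        for k in range(n):  # coalition sizes 0 .. n-1 drawn from the others
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                s = set(subset)
                total += weight * (value(s | {f}) - value(s))
        phi[f] = total
    return phi


# Hypothetical toy risk score (higher means riskier), not a real model.
def toy_score(z):
    return 0.02 * z["dti"] - 0.05 * z["emp_length"] + 0.01 * z["dti"] * z["recent_accounts"]


applicant = {"dti": 30.0, "emp_length": 2.0, "recent_accounts": 4.0}
reference = {"dti": 15.0, "emp_length": 5.0, "recent_accounts": 1.0}
phi = shapley_values(toy_score, applicant, reference)
print(phi)
# Efficiency property: attributions sum to the difference in scores.
print(sum(phi.values()), toy_score(applicant) - toy_score(reference))
```

Here the interaction term between `dti` and `recent_accounts` is split between those two features, while `emp_length`, which enters linearly, receives exactly its own contribution.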

In previous work [23, 24], we have found feature importance measures to be useful to assess the performance of models in credit risk assessment, but these alone are not sufficient to address errors in prediction. Surrogate models are also only for use in explanation, and furthermore can have issues of stability (where different runs of an explainability method can generate different explanations for the same instance [25]), or faithfulness (where it is not clear how the explanation relates to the model’s prediction [26]).

Model cards are likely to play an increasingly important role in future, possibly as part of forthcoming legislation on AI systems. Fairness metrics, particularly those that can be implemented in ML models [12], can be investigated as part of our future research, but the data used in our experiments in this paper contain no information on protected attributes. In this paper we applied a new implementation of RDR, described in Section 3.2.2, with a GUI through which human experts can inspect the data on which ML makes errors and add or update rules. Visualizations that can assist human experts in the RDR process could be added to this GUI, but this is left for further work.

For the finance industry, it is critically important that the application of AI systems complies with regulatory and ethical standards, particularly in the area of credit decisioning [27]. A recent survey [3] reviewed 136 papers on applications of ML to problems of credit risk evaluation, covering mainly tasks of credit scoring, prediction of non-performing assets, and fraud detection. The most commonly applied ML methods were support vector machines, neural networks and ensemble methods. However, only one early work on explainability [28] was cited. Another recent survey reviewed 76 studies on statistical and ML approaches to credit scoring, finding that ensembles and neural networks could outperform traditional logistic regression, but that a key limitation was the inability of some ML models to explain their predictions [29].

Current research on credit decisioning tasks with explainable ML appears to mainly use methods to assess variable importance (for example [30,31,32]). Instead of using complex ML algorithms to increase accuracy for credit risk prediction and then applying explainability techniques, the opposite approach was investigated in [33]: a linear method such as logistic regression is used, where the impact of any variable on the output can be assessed within current regulatory requirements, but complex features are engineered manually, enabling accuracies similar to those of more complex algorithms to be achieved. However, this reliance on human feature engineering may be difficult to scale up, particularly when alternative data sources are used [34].

The approach we propose in this paper differs from previous work on explainable AI. Previous work on XAI focuses on providing explanations for an existing model trained by a machine learning algorithm, whereas what we propose in this paper is the addition of further knowledge in the form of rules to correct errors in an existing model. These data-driven rules are intrinsically understandable by someone with a relevant background, as they are provided by human experts. However, only the new knowledge added is intrinsically explicable, so other explainability techniques would be needed for the part of the model built by machine learning that performs correctly on the training data. We have not needed to use such techniques in this research, as our focus has been on demonstrating that human knowledge can be easily added to a model built by machine learning. This additional human knowledge not only improves the performance of the overall system, but can also be explicitly added to overcome issues of fairness, etc. that a human user might identify in the output of an ML-based system.

2.2 Knowledge Acquisition with Ripple-Down Rules

Ripple-Down Rules (RDR) are used in this study because RDR is a knowledge acquisition method for acquiring knowledge on a case-by-case basis [6] and so can be applied to the cases misclassified by the ML system used here. RDR is long established and has been used in a range of different industrial applications [6]. In particular, Beamtree (https://beamtree.com.au) provides RDR systems for medical application and appears to have about 1000 different RDR knowledge bases in use around the world, developed by individual chemical pathologists in customer laboratories rather than knowledge engineers, which provide clinical interpretations of laboratory results and audits of whether chemical pathology test requests are appropriate.

The various versions of RDR for knowledge acquisition from human domain experts have two central features. First, since a domain expert always provides a rule in a context, the rule should be used only in the same context. That is, if an RDR knowledge-based system (KBS) does not provide a conclusion for a case and a rule is added, this new rule should only be evaluated on any subsequent case after the previous KBS has again failed to provide a conclusion. Similarly, if a wrong conclusion is given, the new rule replacing this conclusion should only be evaluated after the wrong conclusion is again given via the same rule path.

Maintaining context is achieved by using linked rules, where each rule has two links (for whether it fires or not) which specify the next rule to be evaluated, or to exit inference. When a new rule is added, the exit is replaced by a link to the new rule. If no conclusion has been given [Footnote 2], the inference pathway will be entirely through the false branch of the rules evaluated, and the new rule will be linked to the false branch of the last rule evaluated, which was the previous exit point. If a conclusion is to be replaced [Footnote 3], then the new rule will be linked to the true branch of the rule giving the wrong conclusion; or, if other correction rules for this rule have been added but have failed to fire for this case, the new correction will be linked to the false branch of the last correction rule, which was the previous exit point.
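The linking scheme just described can be sketched in Python as a single-classification RDR in which every rule holds a true link and a false link, and a new rule replaces the exit reached by the case it is added for. This is a minimal illustration under our own assumptions: conditions are plain Python predicates, the class, method and feature names are hypothetical, and it is not the implementation used in this study.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, Optional

Case = Dict[str, Any]


@dataclass
class Rule:
    condition: Callable[[Case], bool]
    conclusion: Any
    cornerstone: Case                   # case for which the rule was added
    if_true: Optional["Rule"] = None    # next rule to evaluate if this rule fires
    if_false: Optional["Rule"] = None   # next rule to evaluate if it does not


class SCRDR:
    """Minimal single-classification RDR built from linked rules."""

    def __init__(self):
        self.root: Optional[Rule] = None

    def infer(self, case):
        """Return (conclusion, exit_rule, exit_on_true) for a case.

        The conclusion is that of the last rule that fired on the path;
        exit_rule is the last rule evaluated, and exit_on_true records
        which of its links led out of the knowledge base.
        """
        conclusion = None
        rule, exit_rule, exit_on_true = self.root, None, False
        while rule is not None:
            fired = rule.condition(case)
            if fired:
                conclusion = rule.conclusion
            exit_rule, exit_on_true = rule, fired
            rule = rule.if_true if fired else rule.if_false
        return conclusion, exit_rule, exit_on_true

    def add_rule(self, case, condition, conclusion):
        """Replace the exit reached by `case` with a link to a new rule."""
        if not condition(case):
            raise ValueError("a new rule must fire on the case it is added for")
        new = Rule(condition, conclusion, cornerstone=dict(case))
        _, exit_rule, exit_on_true = self.infer(case)
        if exit_rule is None:      # empty knowledge base: new rule is the root
            self.root = new
        elif exit_on_true:         # correction of the conclusion just given
            exit_rule.if_true = new
        else:                      # previous exit was a false branch
            exit_rule.if_false = new
        return new


kb = SCRDR()
a = {"dti": 35, "emp_length": 1}
kb.add_rule(a, lambda c: c["dti"] > 30, "default")        # becomes the root
b = {"dti": 35, "emp_length": 10}
kb.add_rule(b, lambda c: c["emp_length"] >= 8, "repaid")  # root's true branch
print(kb.infer(a)[0], kb.infer(b)[0])  # default repaid
```

Because every new rule is reached only along the path that produced the old exit, adding the correction for `b` cannot change the conclusion for `a`, which is the context-preserving property described above.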

The second central feature of RDR is that rules are provided for examples or cases, and the case for which a rule is added is stored as a “cornerstone case” along with the rule in the KBS. If the domain expert decides a wrong conclusion has been given and adds a new rule to override this conclusion, the cornerstone case for the rule giving the wrong conclusion is shown to the domain expert to help ensure they select at least one condition for the new rule that discriminates between the cases (unless they decide the conclusion for the cornerstone case is also wrong). Checking the single cornerstone case associated with the rule being corrected is generally sufficient to ensure correctness, but it is also a minimum requirement. In the study described in this paper, when an RDR rule is added to correct a conclusion that has been given by the ML model, which we term the machine learning knowledge base (MLKB), it is appropriate to consider all the training cases for which the MLKB gave the correct conclusion as cornerstone cases. This is because none of these conclusions should be changed unless, after very careful consideration, the domain expert decides the training data conclusion (label) was wrong and should be changed.

Since the rule is automatically linked into the KBS, and the domain expert is simply identifying features from the case which they believe led to the conclusion, including at least one feature which differentiates the new case from the cornerstone cases (which already have a correct conclusion), RDR rules can be added very rapidly, taking at most a few minutes to add a rule, even when a KBS already has thousands of rules [35] [Footnote 4]. In that study, and also for the Beamtree knowledge bases, multiple classification RDR was used, so thousands of cornerstone cases may need to be checked when a rule is added to give a new conclusion rather than correct a conclusion. In practice, the domain expert only has to see two or three of perhaps thousands of cornerstone cases to select sufficient features in the current case such that all the cornerstone cases are excluded.

In this paper, RDR rules are added to improve the performance of a KBS developed by machine learning. This approach was also used in [36]. In that study the authors developed knowledge bases using machine learning, which were then evaluated on test data. Any errors in the test data were corrected with RDR rules. Simulated experts were used to provide the rules, whereas we have used a human domain expert. Furthermore, in [36] the rules were added to correct errors in test cases, rather than in training cases as we propose in this paper. In a real world application, the advantage of using errors in the training data is that these cases are at hand, rather than having to wait for errors to occur. In other related work where RDRs are used to improve the performance of another system, they have been used to reduce the false positives from a system detecting duplicate invoices [37]. Also, in open information extraction tasks they have been used to customise named entity extraction [38] and relationship extraction [39] to specific domains.

3 Experimental Setup

To test the hypothesis that human knowledge acquired from domain experts can complement and improve machine learning to make more accurate and responsible decisions, we conducted two experiments with benchmark data: one with a dataset restricted to a relatively small number of training examples, and a second in which the training sample size was increased by a factor of ten.

3.1 Materials

In our experiments, we used Lending Club data [Footnote 5], which covers the years 2016 to 2018. Using domain knowledge, a subset of features was carefully chosen to consider only those features that would be available prior to deciding whether or not to offer a loan. A total of 16 features were selected, and the outcome (class) was binary, either “default” (1) or “repaid” (0). Both the machine learning algorithm and the human expert used the same set of features.

3.2 Methods

3.2.1 Machine Learning

In credit risk, binary prediction is not sufficient, since what is needed is a model score representing the Probability of Default (PD). To apply machine learning we need to train a model with a numeric output, i.e., a regression-type model. In this paper we used XGBoost [40], which learns an ensemble of regression trees using gradient boosting [41]. Although XGBoost is widely used, this paper does not present any argument about its usefulness for credit assessment; rather, it is used simply as an example of how a machine learning method can be improved using human expert domain knowledge.

3.2.2 Responsible Decision-Making with Knowledge Acquisition

We developed a new implementation of RDR based on the structure of the freely available code released in [6], with a new graphical user interface designed for this domain to enable the domain expert to quickly view data, add and evaluate rules. A rule covering an ML training case (example) that had been misclassified by the ML knowledge base was built by adding one condition at a time, until no cases for which ML gave the correct classification were misclassified by the evolving rule. The features to be added as rule conditions were determined by the domain expert based on their expertise. Our new RDR implementation has been designed to interact with the other components similar to a data and decisioning pipeline as used in the finance industry. This was used in all the experiments reported in this paper.
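The stopping criterion for building a rule can be sketched as follows. In this hypothetical Python example the expert’s choices are simulated by an ordered list of proposed conditions, the cornerstone cases are the training cases that the ML model already classifies correctly, and a conflict is a cornerstone case covered by every condition chosen so far whose label differs from the rule’s conclusion (labels follow Section 3.1: 1 = default, 0 = repaid). The feature names, thresholds and cases are illustrative only and are not rules from this study.

```python
from typing import Callable, Dict, List, Tuple

Case = Dict[str, float]
Condition = Tuple[str, Callable[[Case], bool]]


def conflicting_cases(conditions, conclusion, cornerstones):
    """Cornerstone cases that the evolving rule would now misclassify.

    `cornerstones` holds (case, label) pairs the ML model classifies
    correctly. A conflict is a case on which every chosen condition holds
    but whose label differs from the rule's conclusion.
    """
    return [
        (case, label)
        for case, label in cornerstones
        if label != conclusion and all(test(case) for _, test in conditions)
    ]


def build_rule(target, conclusion, proposals, cornerstones):
    """Add proposed conditions one at a time until no conflicts remain."""
    chosen: List[Condition] = []
    for name, test in proposals:
        if not test(target):
            raise ValueError(f"condition {name!r} does not hold for the target case")
        chosen.append((name, test))
        remaining = conflicting_cases(chosen, conclusion, cornerstones)
        print(f"after {name!r}: {len(remaining)} conflicting cornerstone case(s)")
        if not remaining:
            return chosen
    raise ValueError("the proposed conditions do not exclude every conflict")


# Hypothetical misclassified training case that the expert wants to mark as repaid (0).
target = {"dti": 12.0, "emp_length": 10.0, "acc_open_24m": 1.0}
cornerstones = [
    ({"dti": 14.0, "emp_length": 1.0, "acc_open_24m": 6.0}, 1),
    ({"dti": 11.0, "emp_length": 9.0, "acc_open_24m": 7.0}, 1),
    ({"dti": 25.0, "emp_length": 10.0, "acc_open_24m": 1.0}, 1),
    ({"dti": 10.0, "emp_length": 8.0, "acc_open_24m": 2.0}, 0),
]
proposals = [
    ("emp_length >= 8", lambda c: c["emp_length"] >= 8),
    ("acc_open_24m <= 2", lambda c: c["acc_open_24m"] <= 2),
    ("dti < 20", lambda c: c["dti"] < 20),
]
rule = build_rule(target, 0, proposals, cornerstones)
print([name for name, _ in rule])
```

In this toy run the number of conflicting cornerstone cases falls from 2 to 1 to 0 as each condition is added, so the rule ends with all three conditions.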

3.2.3 Prediction Costs and Profits

In order to determine the cutoff, the problem should be viewed as one of binary prediction, so there are four possible outcomes with respect to the labelled data. To be consistent with real-world applications a realistic cost structure should be used, and the following was adopted (other costs are of course possible). Since in production a prediction of default results in no loan being given, irrespective of the actual outcome, costs (or profits) of zero were assigned to both outcomes where default is predicted. The two remaining outcomes, where loans are predicted to be repaid, have non-zero values assigned. A “good” loan (one that was repaid) was assigned a profit of $300, whereas a “bad” loan (where there was a default) was assigned a cost of $1,000 [Footnote 6].

Given these cost assignments, the cutoff is determined as follows, using all of the n cases that were used during training (\(n = 500\)):

1. the predicted scores and actual binary outcomes are extracted into a table with two columns and n rows

2. this table is sorted in descending order of the predicted scores

3. we calculate \(n-1\) cutoffs, where the ith cutoff is calculated as the mean of the ith and \((i+1)\)th scores

4. cutoffs are used to convert scores to binary predictions based on the rule that if a score is greater than the cutoff the prediction is 1, and 0 otherwise

5. for each of the cutoffs, predictions are made for each of the n cases and compared to the actual outcomes

6. for each of the cutoffs, the total $ value is calculated as \(\textrm{TN} \times 300 - \textrm{FN} \times 1000\)

7. the selected cutoff is the cutoff with the highest $ value

The optimal cutoff selected by this method is used to convert scores output from the machine learning to the binary outcomes used in the decision strategy in all of the experiments. Here \(\textrm{TN}\) denotes the number of true negatives, where the loan was predicted to be repaid and this actually occurred, and \(\textrm{FN}\) is the number of false negatives, where the loan was predicted to be repaid, but actually there was a default.
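The seven steps can be sketched in Python as below. The function name and the toy scores are hypothetical, and the default unit values follow the $300 profit and $1,000 cost given above. Recounting TN and FN for every cutoff makes this quadratic in n, which is harmless for n = 500; keeping running counts while walking the sorted table would avoid the repeated passes.

```python
def select_cutoff(scores, outcomes, gain=300.0, loss=1000.0):
    """Choose the cutoff that maximises total dollar value on training cases.

    scores: predicted default scores (higher = riskier).
    outcomes: actual labels, 1 = default, 0 = repaid.
    A score above the cutoff predicts default (no loan, value 0); otherwise a
    loan is made and earns `gain` if repaid (TN) or costs `loss` if it
    defaults (FN).
    """
    # Steps 1-2: pair scores with outcomes and sort by descending score.
    table = sorted(zip(scores, outcomes), key=lambda row: row[0], reverse=True)
    n = len(table)
    # Step 3: n - 1 candidate cutoffs at the midpoints of adjacent scores.
    cutoffs = [(table[i][0] + table[i + 1][0]) / 2 for i in range(n - 1)]

    best_cutoff, best_value = None, float("-inf")
    for cutoff in cutoffs:
        # Steps 4-5: predict 1 if score > cutoff, else 0, and compare.
        tn = sum(1 for s, y in table if s <= cutoff and y == 0)
        fn = sum(1 for s, y in table if s <= cutoff and y == 1)
        # Step 6: total value = TN * gain - FN * loss.
        value = tn * gain - fn * loss
        # Step 7: keep the most profitable cutoff (the first one on ties).
        if value > best_value:
            best_cutoff, best_value = cutoff, value
    return best_cutoff, best_value


scores = [0.9, 0.8, 0.6, 0.4, 0.3, 0.1]
outcomes = [1, 1, 0, 1, 0, 0]
cutoff, value = select_cutoff(scores, outcomes)
print(round(cutoff, 2), value)  # 0.35 600.0
```

With these toy values, the cutoff of 0.35 approves only the two lowest-scoring applicants, both of whom repaid, which beats every cutoff that also admits the defaulter scored 0.4.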

3.3 Experiment 1

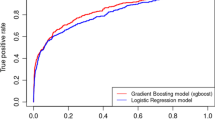

This experiment was devised to emulate real-world situations where only a small number of labelled examples is available to train a machine learning model. Although a much larger dataset is available, we initially selected only 500 stratified random samples from the credit card and debt consolidation categories in 2016, as shown in Fig. 2. XGBoost was then trained to build a predictive model called \(ML_{500}\). Specifically, \(ML_{500}\) is an ensemble of XGBoost models made up of five separate models obtained from each fold of a five-fold cross validation; ensembling of models has been shown to be among the best performing approaches to credit scoring [42]. The ensembled score is the average of the scores from the five models. The binary classification of \(ML_{500}\) is then determined using a cutoff calculated to maximise total dollar-value profit (see Section 3.2.3).
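The fold-ensembling step can be sketched in plain Python as follows. We assume that each of the five models is trained on the four folds other than its own, and that folds are drawn by simple shuffling rather than stratification; `fit` is a placeholder for the XGBoost learner (in practice it could wrap `xgboost.XGBRegressor`), and the toy learner used here, which estimates a default rate per loan grade, is purely hypothetical.

```python
import random
from statistics import mean


def kfold_indices(n, k=5, seed=0):
    """Shuffle 0..n-1 and split the indices into k roughly equal folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def fold_ensemble(X, y, fit, k=5, seed=0):
    """Train one model per fold (on the other k-1 folds) and average them.

    `fit(X_train, y_train)` must return a function mapping a case to a score;
    it stands in for the XGBoost learner used in the paper.
    """
    folds = kfold_indices(len(X), k, seed)
    models = []
    for held_out in folds:
        held = set(held_out)
        train = [i for i in range(len(X)) if i not in held]
        models.append(fit([X[i] for i in train], [y[i] for i in train]))
    # The ensemble score is the plain average of the k model scores.
    return lambda case: mean(m(case) for m in models)


def fit_rate_by_grade(X_train, y_train):
    """Toy learner (hypothetical): observed default rate per loan grade."""
    overall = mean(y_train)
    rates = {}
    for grade in {x["grade"] for x in X_train}:
        ys = [label for x, label in zip(X_train, y_train) if x["grade"] == grade]
        rates[grade] = mean(ys)
    return lambda case: rates.get(case["grade"], overall)


X = [{"grade": "A"}] * 10 + [{"grade": "C"}] * 10
y = [0] * 10 + [1] * 10
ensemble = fold_ensemble(X, y, fit_rate_by_grade)
print(ensemble({"grade": "A"}), ensemble({"grade": "C"}), ensemble({"grade": "B"}))
```

Averaging the fold models, rather than retraining a single model on all 500 cases, reuses the cross-validation models directly; the cutoff from Section 3.2.3 would then be applied to the averaged score.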

The \(ML_{500}\) predictions may be incorrect because the available features are not particularly relevant or because the data are noisy, but a small training dataset is also likely to contain cases for which there are too few other examples of that type for the statistical measure(s) employed by the machine learning to be effective. In contrast, a domain expert may be able to suggest a rule based on a single case using their insights about credit risk. The expert creates RDR rules using the features already present in the dataset, but can also derive more relevant features from them based on their prior experience in credit risk, and then use these new features in rule conditions.

The expert attempts to correct the errors made by the machine learning model on the 500 training cases by developing rules, case by case, for the incorrectly classified cases selected. Cases for which rules are developed are called “cornerstone cases” in the RDR literature. The resulting knowledge base is called \(RDR_{500}\), and the combined model is referred to as \(ML_{500} + RDR_{500}\). Of the 500 training cases, 195 were misclassified by \(ML_{500}\). Given that this is a difficult learning problem even with a large training dataset, we asked the expert to consider only those cases where the conclusion was wrong and different from the conclusion given by a model developed with a much larger training set (termed \(ML_{5000}\); see Section 3.4). It was expected that this would help select those cases for which there were not enough examples in the 500-case training set. There were 24 such cases. The expert could not construct meaningful rules for 4 of these, and only needed to construct rules for 11 of the remaining 20 cases, as some of the rules covered more than one of the error cases. After each rule was added, the initial error cases were checked to see what errors, if any, remained and required further rule addition. In real-world use, without a larger dataset also available, the expert would not be able to select which of the error cases to consider, but it seemed appropriate to reduce his task in this experiment. Using the RDR approach, the expert took an average of 4 minutes to add each rule. The rules also correctly classified other training cases that \(ML_{500}\) misclassified, and in total 41 of the 195 error cases were now correctly classified.
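The selection of cases shown to the expert can be expressed as a simple filter. The Python sketch below is hypothetical and assumes binary labels and binary conclusions from both models, in which case “wrong and different from \(ML_{5000}\)” is equivalent to “\(ML_{500}\) wrong and \(ML_{5000}\) right”; the toy label and prediction lists are illustrative only.

```python
def cases_for_expert(labels, pred_small, pred_large):
    """Indices where the small-data model errs and the large-data model disagrees."""
    return [
        i
        for i, (y, p_small, p_large) in enumerate(zip(labels, pred_small, pred_large))
        if p_small != y and p_small != p_large
    ]


labels = [0, 1, 1, 0, 1]
pred_500 = [1, 1, 0, 0, 0]   # hypothetical ML_500 conclusions
pred_5000 = [0, 1, 0, 1, 1]  # hypothetical ML_5000 conclusions
errors = [i for i, (y, p) in enumerate(zip(labels, pred_500)) if p != y]
print(errors, cases_for_expert(labels, pred_500, pred_5000))  # [0, 2, 4] [0, 4]
```

Case 2 is an error for both models and is therefore not shown to the expert, mirroring how the 195 errors were narrowed to 24 candidates.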

3.4 Experiment 2

In order to compare the results to machine learning from a large dataset, another 4500 stratified random samples from the 2017 credit card and debt consolidation categories were selected and added to the 500 cases used to build \(ML_{500}\), resulting in 5000 training cases overall. Similarly to \(ML_{500}\), we used XGBoost to build the prediction model \(ML_{5000}\) from the 5000 cases. The aim was to see how well the RDR rules added could compensate for a lack of training data, i.e., how well \(ML_{500} + RDR_{500}\) compared to \(ML_{5000}\). Additionally, we wanted to investigate whether the knowledge acquired from the expert using a small dataset (\(RDR_{500}\)) could improve a machine learning model constructed using a large dataset.

4 Results

We selected 10,000 unseen stratified random samples from the credit card and debt consolidation categories in 2018 to build a test dataset. We evaluated the performance of \(ML_{500}\) and \(ML_{5000}\) using these 10,000 test cases. We also augmented each machine learning model with \(RDR_{500}\) to evaluate the effectiveness of \(RDR_{500}\), the knowledge base acquired using only the error cases after machine learning from 500 training cases.
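Stratified random sampling of the kind used to draw the training and test sets can be sketched as below. The variables that defined the strata are not stated, so this Python sketch assumes strata formed by loan category and outcome, with each stratum contributing in proportion to its size; the row fields `purpose` and `default` are hypothetical. Because per-stratum counts are rounded, the sample size can differ slightly from n in general.

```python
import random
from collections import defaultdict


def stratified_sample(rows, key, n, seed=0):
    """Draw roughly n rows without replacement, proportionally per stratum."""
    if n > len(rows):
        raise ValueError("cannot sample more rows than are available")
    strata = defaultdict(list)
    for row in rows:
        strata[key(row)].append(row)
    rng = random.Random(seed)
    sample = []
    for stratum in sorted(strata):  # fixed order for reproducibility
        group = strata[stratum]
        take = round(n * len(group) / len(rows))  # proportional allocation
        sample.extend(rng.sample(group, take))
    return sample


rows = (
    [{"purpose": "credit_card", "default": 0}] * 60
    + [{"purpose": "credit_card", "default": 1}] * 15
    + [{"purpose": "debt_consolidation", "default": 0}] * 20
    + [{"purpose": "debt_consolidation", "default": 1}] * 5
)
sample = stratified_sample(rows, key=lambda r: (r["purpose"], r["default"]), n=20)
print(len(sample), sum(r["default"] for r in sample))
```

Proportional allocation keeps the default rate and the category mix of the sample close to those of the source population, which matters when the sample is small.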

The framework for testing \(ML_{500} + RDR_{500}\) is shown in Fig. 3. In these experiments the ML conclusion for a case was accepted, unless an RDR rule fired overriding the conclusion for that case, in which case the RDR conclusion was accepted. The same comparison was also carried out with \(ML_{5000}\) and \(ML_{5000}\) enhanced with \(RDR_{500}\). The four experiments are shown in Fig. 4.
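The decision rule used in these comparisons, and the loan counts and profit reported below, can be sketched as follows. The RDR knowledge base is abstracted as a function returning a conclusion or `None`; the score function, the cutoff of 0.3 and the rule in the usage example are hypothetical, while the labels (1 = default, 0 = repaid) and the $300/$1,000 values follow Section 3.

```python
def combined_decision(case, ml_score, cutoff, rdr):
    """Binary decision of ML + RDR: 1 = predict default (decline), 0 = repaid.

    The ML conclusion comes from thresholding its score at the selected
    cutoff; it is kept unless the RDR knowledge base returns a conclusion,
    in which case the RDR conclusion overrides it.
    """
    ml_conclusion = 1 if ml_score(case) > cutoff else 0
    rdr_conclusion = rdr(case)
    return ml_conclusion if rdr_conclusion is None else rdr_conclusion


def evaluate(cases, labels, decide, gain=300.0, loss=1000.0):
    """Count good and bad loans offered and the total value on labelled cases."""
    good = bad = 0
    for case, y in zip(cases, labels):
        if decide(case) == 0:  # a loan is offered only when repayment is predicted
            if y == 0:
                good += 1
            else:
                bad += 1
    return {"good_loans": good, "bad_loans": bad, "profit": good * gain - bad * loss}


def score(case):
    return case["dti"] / 100  # hypothetical stand-in for an ML score


def rdr(case):
    return 0 if case["emp_length"] >= 8 else None  # hypothetical rule: repaid


def ml_only(case):
    return combined_decision(case, score, 0.3, lambda _: None)


def ml_plus_rdr(case):
    return combined_decision(case, score, 0.3, rdr)


cases = [{"dti": 40, "emp_length": 10}, {"dti": 40, "emp_length": 1}, {"dti": 10, "emp_length": 2}]
labels = [0, 1, 0]
print(evaluate(cases, labels, ml_only))
print(evaluate(cases, labels, ml_plus_rdr))
```

In this toy example the rule overrides the ML decline for the first applicant, so one more good loan is offered and the total value rises accordingly.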

Fig. 5 presents the results when only a small dataset is available for machine learning and compares the outcomes of \(ML_{500}\) and \(ML_{500} + RDR_{500}\). When we augmented \(ML_{500}\) with \(RDR_{500}\), the number of good loans offered increased by 9.12%, the number of bad loans increased by 8.73%, and the profit increased by 10.3%, which is about half the increase obtained by using machine learning with 5000 training cases (see Fig. 6). This suggests that the RDR rules acquired based on past experience with risk assessment relax the requirements for approval, but in a way that also boosts overall profit. In developing the ML model, the cutoff between good and bad loans depended on the choice of a $1000 loss for a bad loan and a $300 profit for a good loan. We also tried other values, which changed the cutoff between good and bad loans, but this did not change the overall result that augmenting \(ML_{500}\) with \(RDR_{500}\) increased profit. Clearly, increasing the unit benefit of good loans, or increasing the unit cost of bad loans, will affect the overall profit. However, this will affect both \(ML_{500}\) and \(ML_{500} + RDR_{500}\). Our results clearly show an improvement for \(ML_{500} + RDR_{500}\) vs. \(ML_{500}\) in the number of good loans vs. bad loans, which is the basis of our claim that the technique is useful. The issue for applications of such predictive models is the relative cost of good vs. bad loans, so it is a business decision whether the relative costs make the predictive model useful or not. For example, increasing the profit to $400 while keeping the cost at $1000 results in an overall profit improvement of 9.63%, whereas keeping the profit at $300 and increasing the cost to $1100 results in an improvement of 10.93%.
In this paper, we have shown indicative costs based on typical real-world experience, and minor variations as would be reasonable in business applications have also shown a profit improvement, demonstrating the robustness of the approach. Of course, larger variations in relative costs will either increase or decrease the amount of overall profit, and this could be addressed by further work.

Fig. 6 shows the results when a large dataset is available, and compares the results of \(ML_{5000}\) and \(ML_{5000}\) with \(RDR_{500}\). A similar trend is observed, in that the numbers of both good and bad loans increase (by 3.7% and 3.6%, respectively), and the profit also increases, by 3.9%. The advantages offered by \(RDR_{500}\) are smaller for \(ML_{5000}\) than for \(ML_{500}\), first, because the machine learning model has access to a much larger training dataset and, second, because the RDR was developed not for training cases misclassified by \(ML_{5000}\) but for the \(ML_{500}\) training cases that were misclassified by \(ML_{500}\).

Let us now compare \(ML_{5000}\) and \(ML_{500}+RDR_{500}\). \(ML_{5000}\) increases profit by 21.5% over \(ML_{500}\), whereas \(ML_{500}+RDR_{500}\) increases profit by 10.3% over \(ML_{500}\). The increase in profit for \(ML_{500}+RDR_{500}\) is therefore nearly half the increase for \(ML_{5000}\), which was trained on the large dataset. In other words, domain knowledge can significantly improve performance even when only a small dataset is available. Because the small dataset is one tenth the size of the large dataset, the large dataset may contain cases that do not appear at all in the small dataset, so the expert will not have written rules for such cases.
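The "nearly half" comparison can be written out explicitly, where \(\Delta P\) denotes the percentage increase in profit over \(ML_{500}\):

```latex
\[
\frac{\Delta P\,(ML_{500}+RDR_{500})}{\Delta P\,(ML_{5000})}
  = \frac{10.3\%}{21.5\%} \approx 0.48
\]
```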

We believe this improvement even on a large training set is attributable to the fact that domain experts are able to construct RDR rules that capture core domain concepts that are useful in addressing the limitations of a broad range of machine learning models, including models built using a large dataset. Machine learning models can only learn from available data, which may be inadequate for learning all the essential domain concepts. A similar result was found in a study on data-cleansing for Indian street address data, where domain experts provided a more widely applicable knowledge base than could be developed by machine learning’s essentially statistical approach [43].

5 Domain Knowledge for Responsible Credit Risk Assessment

In this section we provide examples of the kinds of credit risk assessment knowledge that is acquired as RDR rules and used to enhance the machine learning model’s performance. The RDR rules capture some of the key insights from the expert’s experience with credit risk assessments. For example:

- Does the applicant have a willingness to repay?
  - Employment length is often inversely related to loan risk.
  - Normally, there is a positive correlation between the number of lending accounts opened in the past 24 months and lending risk.
- Does the applicant have the capacity to repay?
  - Loan risk is inversely correlated with cover of current balance and loan (annual income divided by the sum of the loan amount applied for and the average current balance of existing loans).

5.1 New Features

The domain expert introduced the following two additional features when constructing RDR rules to improve the performance of the model:

- cover of current balance and loan (called cover_bal_loan): annual income divided by the sum of the loan amount applied for and the average current balance of existing loans. A higher value means lower risk, and vice versa.
- cover of instalment amount (called cover_install_amnt): annual income divided by the requested loan's repayment amount. A higher value means lower risk, and vice versa.
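The two feature definitions above translate directly into code. A minimal sketch, assuming LendingClub-style field names (annual_inc, loan_amnt, avg_cur_bal, installment) that the paper does not list explicitly; it also does not say whether the instalment is monthly, so the ratio below simply follows the stated definition.

```python
# Sketch of the two expert-defined features. Field names are assumed
# LendingClub-style column names, not confirmed by the paper.

def cover_bal_loan(annual_inc: float, loan_amnt: float, avg_cur_bal: float) -> float:
    """Annual income / (requested loan amount + average current balance of existing loans)."""
    denominator = loan_amnt + avg_cur_bal
    if denominator <= 0:
        raise ValueError("loan_amnt + avg_cur_bal must be positive")
    return annual_inc / denominator


def cover_install_amnt(annual_inc: float, installment: float) -> float:
    """Annual income / repayment (instalment) amount of the requested loan."""
    if installment <= 0:
        raise ValueError("installment must be positive")
    return annual_inc / installment


def add_expert_features(case: dict) -> dict:
    """Return a copy of an applicant record with both cover features added.

    Higher values of either feature indicate lower risk.
    """
    enriched = dict(case)
    enriched["cover_bal_loan"] = cover_bal_loan(
        case["annual_inc"], case["loan_amnt"], case["avg_cur_bal"])
    enriched["cover_install_amnt"] = cover_install_amnt(
        case["annual_inc"], case["installment"])
    return enriched


if __name__ == "__main__":
    applicant = {"annual_inc": 60000, "loan_amnt": 10000,
                 "avg_cur_bal": 5000, "installment": 500}
    features = add_expert_features(applicant)
    print(features["cover_bal_loan"], features["cover_install_amnt"])  # 4.0 120.0
```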

5.2 RDR Rule

To illustrate the type of rules created by the domain expert, we show one RDR rule here. An RDR rule is constructed interactively by the domain expert by adding one attribute at a time, with the help of a user-friendly graphical interface (see Section 3.2.2). The expert constructed the following RDR rule to approve a loan that was refused by the machine learning algorithm.

Note that the features cover_bal_loan and cover_install_amnt used in the following rule are the new features introduced by the expert (for definitions see Section 5.1). The feature emp_length_n refers to the length of employment which, as noted earlier, is often inversely related to loan risk. The expert determines thresholds in the rule through inspection of the feature values in cases in the dataset, combined with knowledge from their experience of past cases and their outcomes.

The machine learning model does not benefit from the above domain insights and hence cannot use them to enhance its performance. Machine learning algorithms typically rely on statistical measures to discover and select the features used to build a model. Since a human expert can add new rules based on domain knowledge that may not be statistically justified by the training data, or define new features for use in rules that lie outside the machine learning algorithm's search space, RDR can clearly go beyond the capabilities of ML alone for a given task and dataset. For example, if only a small amount of data is available, machine learning approaches may fail to detect critical features or thresholds, resulting in reduced performance, as we showed experimentally in Section 4. The ML+RDR approach may also improve on ML alone in other ways, particularly with respect to criteria such as fairness, as argued in Section 6.
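How an expert rule of this kind can sit on top of the ML model is sketched below. This is a simplified illustration, assuming a flat list of rules in which the first rule that fires overrides the ML decision (a full RDR knowledge base is a tree of rules with exceptions). The rule name `approve_strong_cover` and all of its thresholds are hypothetical placeholders, not the expert's actual rule.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Case = Dict[str, float]


@dataclass
class Rule:
    """An expert rule: when condition(case) is true, conclude `conclusion`."""
    name: str
    condition: Callable[[Case], bool]
    conclusion: str  # "approve" or "refuse"


def combined_decision(ml_decision: str, case: Case, rules: List[Rule]) -> str:
    """ML+RDR sketch: the first expert rule that fires overrides the ML decision;
    if no rule fires, the ML decision stands unchanged."""
    for rule in rules:
        if rule.condition(case):
            return rule.conclusion
    return ml_decision


# Hypothetical rule in the spirit of Section 5.2. The thresholds are placeholders
# chosen for illustration only, NOT the values chosen by the domain expert.
approve_strong_cover = Rule(
    name="approve_strong_cover",
    condition=lambda c: (c["cover_bal_loan"] >= 3.0
                         and c["cover_install_amnt"] >= 100.0
                         and c["emp_length_n"] >= 5),
    conclusion="approve",
)

if __name__ == "__main__":
    rules = [approve_strong_cover]
    strong = {"cover_bal_loan": 4.0, "cover_install_amnt": 120.0, "emp_length_n": 8}
    weak = {"cover_bal_loan": 4.0, "cover_install_amnt": 120.0, "emp_length_n": 1}
    print(combined_decision("refuse", strong, rules))  # approve (rule overrides ML)
    print(combined_decision("refuse", weak, rules))    # refuse (ML decision stands)
```

Because rules only fire on the cases they describe, the ML model's decisions on all other cases are left untouched, which is what allows the expert to target specific errors.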

6 Discussion

Machine learning can produce irresponsible or unfair outcomes for various reasons related either to the data used for training or to the algorithm and how it interacts with that data [44, 45]. In this paper we have addressed only one, but nevertheless a central, reason why machine learning can produce unfair outcomes. The central issue is that learning methods are ultimately based on some sort of statistical assessment. That is, they are designed to ignore noise and anomalous data, i.e., patterns that do not occur repeatedly in the data. Conversely, the more often a pattern is repeated in the data, the more influence it will have on the model being learned. The obvious problem is that, while this reduces the impact of noise on the model, rare but genuine patterns will also be overlooked.
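The mechanism can be illustrated with a deliberately simplified toy learner. The sketch assumes a learner that keeps only patterns seen at least `min_support` times, a crude stand-in for the frequency-based safeguards real learners use (such as minimum leaf sizes); it is not XGBoost, and the pattern names and counts are invented for illustration.

```python
from collections import Counter, defaultdict
from typing import Dict, Hashable, List, Tuple


def learn_frequent_patterns(data: List[Tuple[Hashable, str]],
                            min_support: int) -> Dict[Hashable, str]:
    """Toy statistical learner: map each pattern seen at least min_support times
    to its majority label; rarer patterns are treated as noise and dropped."""
    counts: Dict[Hashable, Counter] = defaultdict(Counter)
    for pattern, label in data:
        counts[pattern][label] += 1
    return {pattern: labels.most_common(1)[0][0]
            for pattern, labels in counts.items()
            if sum(labels.values()) >= min_support}


def predict(model: Dict[Hashable, str], pattern: Hashable, default: str) -> str:
    """Predict the learned label, or fall back to a default for unlearned patterns."""
    return model.get(pattern, default)


if __name__ == "__main__":
    data = ([("common", "good")] * 50   # frequent pattern: learned
            + [("noise", "bad")] * 1    # anomalous case: correctly ignored
            + [("rare", "bad")] * 2)    # rare but genuine pattern: also ignored
    model = learn_frequent_patterns(data, min_support=5)
    print(model)                           # {'common': 'good'}
    print(predict(model, "rare", "good"))  # good: the rare pattern was lost
```

The same threshold that discards the single noisy case also discards the two genuine rare cases; only an external source of knowledge can tell them apart.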

Secondly, since machine learning can only rely on statistics, features that are frequent but irrelevant to the decision being made can be learned as apparently important features. For example, suppose a model trained by machine learning is used in hiring decisions, where most of the people in the training data are male; the model may then treat gender as predictive. A human will (or should) know whether or not gender has anything to do with the ability to do the job.

If the training data does not include any examples at all of patterns that need to be learned, the ML+RDR approach we have proposed will not help. But if the training data contains at least one example of a pattern that is important and needs to be learned, our method enables a domain expert to correct the knowledge base learned by machine learning so that it covers the pattern. Very likely there will also be noisy, anomalous cases in the training data, which machine learning has appropriately ignored. In the results above, there were a number of cases that our domain expert could not make sense of. We assume that a genuine domain expert, although they might puzzle over some anomalous cases, is well able to recognise which cases they understand and can write a rule for, and which they cannot.

In particular, the training data may be inadequate because it is historical, and our current understanding of best practice in avoiding unfair and unethical decisions may not be reflected in it. A human with an understanding of societal or organisational requirements would be expected to apply current ideas of best practice. The domain expert will need to search the training data for cases where a different decision is now warranted, wait for such cases to occur, or construct hypothetical cases for which they write a rule.

Thirdly, decisions need to be explainable if we are to be sure they are fair and reasonable, and, as noted, there are now regulations to this effect in many financial markets. Although there are various techniques to identify the relative importance of features in overall decision making (see Section 2.1), the advantage of using human knowledge, particularly with the RDR approach we have outlined, is that the rules involved in a decision can be identified immediately, together with the "cornerstone" cases that prompted their addition and the identity of the domain expert who added them (and potentially other information relevant for auditing purposes). If an RDR rule has been corrected by the addition of another rule, it is also clear which features in the data were responsible for the different decision.
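The kind of audit trail described above can be sketched as follows. This is a minimal illustration, assuming each rule stores a human-readable description, its cornerstone case and its author, and that rules are evaluated RDR-style (a fired rule's exceptions are checked and the deepest fired rule gives the conclusion). The class and function names, the rules, and the author label `expert_A` are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple

Case = Dict[str, float]


@dataclass
class RDRRule:
    """An RDR rule carrying the provenance needed to explain and audit a decision."""
    description: str                    # human-readable condition
    condition: Callable[[Case], bool]
    conclusion: str
    cornerstone: Case                   # case that prompted the rule
    author: str                         # expert who added the rule
    exceptions: List["RDRRule"] = field(default_factory=list)


def _evaluate(rule: RDRRule, case: Case, trace: List[RDRRule]) -> Optional[str]:
    """Conclusion of the deepest firing rule below `rule` (None if `rule` does not
    fire). Every rule that fires is appended to `trace`."""
    if not rule.condition(case):
        return None
    trace.append(rule)
    for exception in rule.exceptions:
        result = _evaluate(exception, case, trace)
        if result is not None:
            return result
    return rule.conclusion


def decide_with_explanation(rules: List[RDRRule], case: Case,
                            ml_decision: str) -> Tuple[str, List[dict]]:
    """Return the decision plus an audit trail of the rules that produced it.
    An empty trail means no expert rule fired and the ML decision was used."""
    for rule in rules:
        trace: List[RDRRule] = []
        result = _evaluate(rule, case, trace)
        if result is not None:
            return result, [{"rule": r.description, "author": r.author,
                             "cornerstone": r.cornerstone} for r in trace]
    return ml_decision, []


if __name__ == "__main__":
    # Hypothetical rules and cornerstone cases, for illustration only.
    root = RDRRule("cover_bal_loan >= 3", lambda c: c["cover_bal_loan"] >= 3,
                   "approve", {"cover_bal_loan": 4.0, "emp_length_n": 6}, "expert_A")
    root.exceptions.append(
        RDRRule("emp_length_n < 1", lambda c: c["emp_length_n"] < 1,
                "refuse", {"cover_bal_loan": 5.0, "emp_length_n": 0}, "expert_A"))
    decision, trail = decide_with_explanation(
        [root], {"cover_bal_loan": 4.5, "emp_length_n": 0}, ml_decision="approve")
    print(decision)  # refuse
    for step in trail:
        print(step["rule"], "| added by", step["author"], "| cornerstone", step["cornerstone"])
```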

Finally, when it is realised that an unfair decision has been made for a case, the knowledge base can be corrected immediately by a domain expert adding a rule. In contrast, a pure machine learning approach requires sufficient training examples before the system can be improved.
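Immediate correction can be sketched as adding an exception rule under the rule that gave the wrong conclusion. This follows the usual RDR validation idea that the new rule must fire on the case being corrected but not on the corrected rule's cornerstone case; it is a simplified sketch that checks only that one cornerstone, and the feature `delinq_2yrs` and all thresholds are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

Case = Dict[str, float]
Condition = Callable[[Case], bool]


@dataclass
class Rule:
    """RDR rule with its cornerstone case and exception (refinement) rules."""
    condition: Condition
    conclusion: str
    cornerstone: Case
    exceptions: List["Rule"] = field(default_factory=list)

    def classify(self, case: Case) -> Optional[str]:
        """Conclusion of the deepest firing rule, or None if this rule does not fire."""
        if not self.condition(case):
            return None
        for exception in self.exceptions:
            result = exception.classify(case)
            if result is not None:
                return result
        return self.conclusion


def add_exception(parent: Rule, wrong_case: Case,
                  condition: Condition, conclusion: str) -> Rule:
    """Correct a wrong decision immediately by adding an exception under `parent`.

    The new condition must be true for the case being corrected and false for
    the parent's cornerstone case, so the decision on the case that originally
    justified the parent rule is preserved.
    """
    if not parent.condition(wrong_case):
        raise ValueError("the parent rule does not fire on this case")
    if not condition(wrong_case):
        raise ValueError("the new rule must fire on the case being corrected")
    if condition(parent.cornerstone):
        raise ValueError("the new rule must not fire on the parent's cornerstone case")
    new_rule = Rule(condition, conclusion, cornerstone=wrong_case)
    parent.exceptions.append(new_rule)
    return new_rule


if __name__ == "__main__":
    # Hypothetical parent rule and cases; delinq_2yrs is an illustrative feature.
    parent = Rule(lambda c: c["cover_bal_loan"] >= 3.0, "approve",
                  cornerstone={"cover_bal_loan": 4.0, "delinq_2yrs": 0})
    bad_case = {"cover_bal_loan": 5.0, "delinq_2yrs": 3}
    print(parent.classify(bad_case))            # approve (wrong decision)
    add_exception(parent, bad_case, lambda c: c["delinq_2yrs"] >= 2, "refuse")
    print(parent.classify(bad_case))            # refuse (corrected)
    print(parent.classify(parent.cornerstone))  # approve (cornerstone unchanged)
```

A single case is enough to make the correction; no retraining, and no accumulation of further examples, is needed.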

Studies proposing new machine learning algorithms nearly always compare the new algorithm to other algorithms across a range of data sets of different types and sizes. We have not done that here as our aim instead has been to suggest an extension or addition to machine learning, which may apply to any machine learning algorithm that learns to discriminate between data instances, whether classifying them or ranking them, etc.

Any such machine learning method depends on there being sufficient instances in the training data of the different types of data. We are simply making the fairly obvious suggestion that, as well as trying to obtain more training data to get more examples of rarer data instances, a domain expert may be able to provide rules to cover examples in the training data that were too rare for machine learning to learn from. We have demonstrated this with one case study, and although the approach may work better or worse with other machine learning algorithms and other data sets, the approach must always work to some extent. That is: if a machine learning method fails to learn how to deal with rare cases in the training data, but a domain expert knows how to construct rules for such data, then the overall performance can be improved. We are not arguing for any advantage in using XGBoost with rules; XGBoost simply happens to be a suitable machine learning method for this domain.

7 Conclusion and Future Work

One of the most pressing challenges today, given the increasing application of AI (ML) in fintech, is to ensure that lending criteria are ethically sound and meet the requirements of the various authorities. The experimental results presented in this paper show that it is possible to acquire credit risk assessment insights from an expert to augment machine learning models and enhance their performance, which can include correcting unfair decisions. Although our study did not directly consider unfair decisions, it shows that human insights can be captured and reused to prevent or correct unethical lending by machine learning and to conform better to regulatory guidelines.

In this study we built ML models using XGBoost, and in further research we will evaluate our approach with other ML methods and training data, particularly simulated training data. The aim of these further studies is not to demonstrate that the approach will work with other methods or data, as it must by definition, but to investigate how its performance relates to both the size of training data set and the number of anomalous (rather than rare) patterns that the training data contains.

We suggested earlier that the significant advantage of manually adding rules for errors made by the ML on the training data is that this provides a selected or “curated” set of cases for which manually added rules are required, rather than having to wait for these errors to occur after deployment of the system, as in the approach of [36]. However, if the training data set is too small, not only will ML methods tend to work poorly, but only a few of the rare patterns that exist may be represented in the training data, so that although adding expert-generated RDR rules will still improve the performance over ML alone, the performance will not be as good as when more of the rare patterns are included in the training data.
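Selecting that curated set from the training data is straightforward. A minimal sketch, assuming each training case has an actual outcome and an ML prediction encoded as the strings "good" and "bad" (the encoding and function name are assumptions); grouping the errors by type lets the expert see costly approvals of bad loans separately from refusals of good ones.

```python
from typing import Dict, List, Sequence


def cases_for_expert_review(labels: Sequence[str],
                            ml_predictions: Sequence[str]) -> Dict[str, List[int]]:
    """Indices of training cases the ML model got wrong, grouped by error type.

    "approved_bad": actual bad loans the model would approve (losses).
    "refused_good": actual good loans the model would refuse (lost profit).
    The expert reviews these and writes rules only for cases they understand.
    """
    if len(labels) != len(ml_predictions):
        raise ValueError("labels and ml_predictions must have the same length")
    review: Dict[str, List[int]] = {"approved_bad": [], "refused_good": []}
    for i, (actual, predicted) in enumerate(zip(labels, ml_predictions)):
        if actual == "bad" and predicted == "good":
            review["approved_bad"].append(i)
        elif actual == "good" and predicted == "bad":
            review["refused_good"].append(i)
    return review


if __name__ == "__main__":
    labels = ["good", "bad", "good", "bad", "good"]
    preds = ["good", "good", "bad", "bad", "bad"]
    print(cases_for_expert_review(labels, preds))
    # {'approved_bad': [1], 'refused_good': [2, 4]}
```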

Using simulated data will allow us to inject varying numbers of rare patterns into the training data. We expect that including more rare patterns might degrade the ML performance while increasing the performance gain from adding the RDR rules. We will also evaluate varying the amount of data that is purely anomalous rather than representing a rare, but real, pattern in the data. The ML may try to learn from the anomalous data if its overfitting-avoidance methods do not work, whereas the expert should be able to identify that the data instance does not make sense.
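One way such a simulation might be set up is sketched below. Everything here is a hypothetical design for illustration: the features `cover` and `emp_years`, the main rule (good iff cover >= 2.0), the rare pattern (low cover but very long employment, labelled good) and the anomalies (common-looking cases with flipped labels) are invented, and the paper does not describe its planned simulation. The point is only that the numbers of rare and anomalous cases can be varied independently.

```python
import random
from typing import Dict, List


def simulate_training_data(n_common: int, n_rare: int, n_anomalous: int,
                           seed: int = 0) -> List[Dict]:
    """Hypothetical generator for a rare-pattern / anomaly simulation.

    - common: follow the main rule, good iff cover >= 2.0
    - rare: low cover but very long employment, labelled good (real but rare)
    - anomalous: drawn like common cases but with the label flipped (pure noise)
    """
    rng = random.Random(seed)
    data: List[Dict] = []
    for _ in range(n_common):
        cover = rng.uniform(0.5, 5.0)
        data.append({"cover": cover, "emp_years": rng.randint(0, 10),
                     "label": "good" if cover >= 2.0 else "bad", "kind": "common"})
    for _ in range(n_rare):
        data.append({"cover": rng.uniform(0.5, 1.9), "emp_years": rng.randint(15, 30),
                     "label": "good", "kind": "rare"})
    for _ in range(n_anomalous):
        cover = rng.uniform(0.5, 5.0)
        data.append({"cover": cover, "emp_years": rng.randint(0, 10),
                     "label": "bad" if cover >= 2.0 else "good", "kind": "anomalous"})
    rng.shuffle(data)
    return data


if __name__ == "__main__":
    data = simulate_training_data(n_common=1000, n_rare=20, n_anomalous=10, seed=42)
    print(len(data))  # 1030
```

Because the rare cases follow a consistent rule while the anomalous cases do not, a simulated or human expert could write a rule for the former but should leave the latter alone.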

Given that using a human expert in such studies is costly, we will also use simulated experts as far as possible. In this context, a simulated expert is a rule base extracted from an interpretable ML model trained on all possible data, so, like a human expert, it knows more than a machine learning method trained on limited data [46, 47].

We will also investigate building models with RDR alone, without ML. Although this will involve more effort from the domain expert, it may facilitate developing more ethical models, given that the model captures and expresses an expert's intuitions and knowledge, which is presumably more consistent with ethical lending practices and guidelines than knowledge gained purely from the essentially statistical assessment of data on which machine learning necessarily relies.

Notes

Many such tools are available, such as IBM AI Explainability 360 https://www.ibm.com/blogs/research/2019/08/ai-explainability-360/.

When no conclusion can be given for a case, this means the KBS is incomplete.

When the wrong conclusion is given for a case, this means the KBS is incorrect.

This assumes the person adding the rules is a domain expert and knows what decision should be made about a case without consulting other resources.

Available from: https://www.lendingclub.com/info/download-data.action.

These values are commonly used; the use of other values was also investigated but did not alter the overall outcomes in our experiments.

Abbreviations

- AI: Artificial Intelligence
- ML: Machine Learning
- PD: Probability of default
- FN: False negative
- TN: True negative
- \(ML_{500}\): ML model trained on a sample of 500
- \(RDR_{500}\): RDR model constructed on examples misclassified by \(ML_{500}\)
- \(ML_{5000}\): ML model trained on a sample of 5000
- \(ML_{500} + RDR_{500}\): Combined ML and RDR models trained on a sample of 500
- RDR: Ripple-down rules
- KBS: Knowledge-based system
- GUI: Graphical user interface
- XAI: Explainable AI
- IML: Interpretable ML
- MLKB: Machine learning derived knowledge base (i.e., model)
- ML+RDR: Combined ML model with RDR constructed on exceptions
- APRA: Australian Prudential Regulation Authority
- XGBoost: eXtreme Gradient Boosting algorithm
- fintech: Financial technology

References

Leo M, Sharma S, Maddulety K. Machine learning in banking risk management: a literature review. Risks. 2019;7:29. https://doi.org/10.3390/risks7010029.

Hand DJ, Henley WE. Statistical classification methods in consumer credit scoring: a review. J R Stat Soc Ser A. 1997;160(3):523–41.

Bhatore S, Mohan L, Reddy YR. Machine learning techniques for credit risk evaluation: a systematic literature review. J Bank Financ Technol. 2020;4:111–38. https://doi.org/10.1007/s42786-020-00020-3.

Kleinberg J, Mullainathan S, Raghavan M. Inherent trade-offs in the fair determination of risk scores; 2016. arXiv preprint arXiv:1609.05807v2

Dumitrescu E, Hué S, Hurlin C, Tokpavi S. Machine learning for credit scoring: improving logistic regression with non-linear decision-tree effects. Eur J Oper Res. 2022;297(3):1178–92.

Compton P, Kang BH. Ripple-down rules: the alternative to machine learning. CRC Press; 2021.

Australian Prudential Regulation Authority. Capital adequacy: internal ratings-based approach to credit risk. Prudential Standard APS 113; 2020.

Feltovich P, Prietula M, Ericsson K. Studies of expertise from psychological perspectives: historical foundations and recurrent themes. In: The Cambridge handbook of expertise and expert performance. Cambridge: CUP; 2018. p. 59–83.

Dignum V. Responsible artificial intelligence: how to develop and use AI in a responsible way. Springer; 2019. https://doi.org/10.1007/978-3-030-30371-6.

Barredo Arrieta A, Díaz-Rodríguez N, Del Ser J, Bennetot A, Tabik S, Barbado A, Garcia S, Gil-Lopez S, Molina D, Benjamins R, Chatila R, Herrera F. Explainable artificial intelligence (XAI): concepts, taxonomies, opportunities and challenges toward responsible AI. Inform Fusion. 2020;58:82–115.

Dai J, Upadhyay S, Aïvodji U, Bach S, Lakkaraju H. Fairness via explanation quality: evaluating disparities in the quality of post hoc explanations. In: AIES ’22: Proceedings of the AAAI/ACM Conference on Artificial Intelligence, Ethics, and Society; 2022. p. 203–214.

Toreini E, Aitken M, Coopamootoo K, Elliott K, Zelaya V, Missier P, Ng M, van Moorsel A. Technologies for trustworthy machine learning: A survey in a socio-technical context; 2022. arXiv preprint arXiv:2007.08911

Graziani M, Dutkiewicz L, Calvaresi D, Pereira Amorim J, Yordanova K, Vered M, Nair R, Henriques Abreu P, Blanke T, Pulignano V, Prior J, Lauwaert L, Reijers W, Depeursinge A, Andrearczyk V, Müller H. A global taxonomy of interpretable AI: unifying the terminology for the technical and social sciences. Artif Intell Rev. 2022.

Gunning D, Aha D. DARPA’s explainable artificial intelligence program. AI Mag. 2019;40(2):44–58.

Guidotti R, Monreale A, Ruggieri S, Turini F, Giannotti F, Pedreschi D. A survey of methods for explaining black box models. ACM Comput Surv. 2019;51(5):1–42. https://doi.org/10.1145/3236009.

Ding W, Abdel-Basset M, Hawash H, Ali A. Explainability of artificial intelligence methods, applications and challenges: a comprehensive survey. Inf Sci. 2022;615:238–92. https://doi.org/10.1016/j.ins.2022.10.013.

Chen V, Li J, Kim JS, Plumb G, Talwalkar A. Interpretable machine learning: moving from mythos to diagnostics. Commun ACM. 2022;65(8):43–50. https://doi.org/10.1145/3546036.

Lundberg S, Lee SI. A unified approach to interpreting model predictions. Adv Neural Inform Process Syst. 2017;30.

Ribeiro M, Singh S, Guestrin C. “Why should I trust you?”: explaining the predictions of any classifier. In: Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining; 2016. p. 1135–1144.

Mitchell M, Wu S, Zaldivar A, Barnes P, Vasserman L, Hutchinson B, Spitzer E, Raji I, Gebru T. Model cards for model reporting. In: FAT* ’19: Proceedings of the Conference on Fairness, Accountability, and Transparency; 2019. p. 220–229.

Vogel R, Bellet A, Clémençon S. Learning fair scoring functions: bipartite ranking under ROC-based fairness constraints. Proceed Int Conf Artif Intell Stat (AISTATS). 2021;130:784.

Baniecki H, Kretowicz W, Piatyszek P, Wisniewski J, Biecek P. dalex: responsible machine learning with interactive explainability and fairness in Python. J Mach Learn Res. 2021;22:1–7.

Suryanto H, Guan C, Voumard A, Beydoun G. Transfer learning in credit risk. In: ECML-PKDD 2019: Proceedings of European Conference on machine learning and knowledge discovery in databases, Part III; 2020. p. 483–498.

Suryanto H, Mahidadia A, Bain M, Guan C, Guan A. Credit risk modelling using transfer learning and domain adaptation. Front Artif Intell. 2022;70:1.

Alvarez-Melis D, Jaakkola T. On the robustness of interpretability methods; 2018. arXiv preprint arXiv:1806.08049.

Dasgupta S, Frost N, Moshkovitz M. Framework for evaluating faithfulness of local explanations. In: ICML 2022: Proceedings of the 39th International Conference on Machine Learning. PMLR 162; 2022.

Chen J. Fair lending needs explainable models for responsible recommendation; 2018. arXiv preprint arXiv:1809.04684.

Baesens B, Setiono R, Mues C, Vanthienen J. Using neural network rule extraction and decision tables for credit-risk evaluation. Manage Sci. 2003;49(3):312–29.

Dastile X, Celik T, Potsane M. Statistical and machine learning models in credit scoring: a systematic literature survey. Appl Soft Comput J. 2020;106263:1–21.

Bracke P, Datta A, Jung C, Sen S. Machine learning explainability in finance: an application to default risk analysis. Bank of England Staff Working Paper No. 816; 2019.

Bussmann N, Giudici P, Marinelli D, Papenbrock J. Explainable machine learning in credit risk management. Comput Econ. 2021;57:203–16.

Jammalamadaka K, Itapu S. Responsible AI in automated credit scoring systems. AI Ethics. 2022. https://doi.org/10.1007/s43681-022-00175-3.

Bücker M, Szepannek G, Gosiewska A, Biecek P. Transparency, auditability, and explainability of machine learning models in credit scoring. J Oper Res Soc. 2022;73(1):70–90.

Djeundje V, Crook J, Calabrese R, Hamid M. Enhancing credit scoring with alternative data. Expert Syst Appl. 2021;163:113766.

Compton P, Peters L, Lavers T, Kim Y. Experience with long-term knowledge acquisition. In: Proceedings of the Sixth International Conference on Knowledge Capture, KCAP 2011; 2011. p. 49–56.

Kim D, Han SC, Lin Y, Kang BH, Lee S. RDR-based knowledge based system to the failure detection in industrial cyber physical systems. Knowl-Based Syst. 2018;150:1–13.

Ho VH, Compton P, Benatallah B, Vayssière J, Menzel L, Vogler H. An incremental knowledge acquisition method for improving duplicate invoice detection. In: Ioannidis YE, Lee DL, Ng RT, editors. Proceedings of the 25th IEEE International Conference on Data Engineering, ICDE 2009. IEEE; 2009. p. 1415–1418.

Kim M, Compton P. Improving the performance of a named entity recognition system with knowledge acquisition. In: ten Teije A, Völker J, Handschuh S, Stuckenschmidt H, d’Aquin M, Nikolov A, Aussenac-Gilles N, Hernandez N, editors. Knowledge engineering and knowledge management: 18th international conference (EKAW 2012). Berlin: Springer; 2012. p. 97–113.

Kim M, Compton P. Improving open information extraction for informal web documents with ripple-down rules. In: Richards D, Kang BH, editors. Knowledge management and acquisition for intelligent systems (PKAW 2012). Berlin: Springer; 2012. p. 160–74.

Chen T, Guestrin C. XGBoost: a scalable tree boosting system. In: Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining; 2016. p. 785–794.

Friedman J. Greedy function approximation: a gradient boosting machine. Ann Stat. 2001;29(5):1189–232.

Lessmann S, Baesens B, Seow H-V, Thomas LC. Benchmarking state-of-the-art classification algorithms for credit scoring: an update of research. Eur J Oper Res. 2015;247(1):124–36.

Dani MN, Faruquie TA, Garg R, Kothari G, Mohania MK, Prasad KH, Subramaniam LV, Swamy VN. Knowledge acquisition method for improving data quality in services engagements. In: IEEE International Conference on Services Computing (SCC 2010). IEEE; 2010. p. 346–353.

Mehrabi N, Morstatter F, Saxena N, Lerman K, Galstyan A. A survey on bias and fairness in machine learning. ACM Comput Surv (CSUR). 2021;54(6):1–35.

Barocas S, Hardt M, Narayanan A. Fairness and Machine Learning: Limitations and Opportunities. fairmlbook.org (also MIT Press 2023); 2019. http://www.fairmlbook.org

Compton P, Cao TM. Evaluation of incremental knowledge acquisition with simulated experts. In: Sattar A, Kang BH, editors. AI 2006: Proceedings of the 19th Australian joint conference on artificial intelligence. LNAI, vol. 4304. Berlin: Springer; 2006. p. 39–48.

Compton P, Preston P, Kang B. The use of simulated experts in evaluating knowledge acquisition. In: Gaines B, Musen M, editors. Proceedings of the 9th AAAI-sponsored Banff knowledge acquisition for knowledge-based systems workshop, vol. 1. SRDG Publications, University of Calgary; 1995. p. 12-1–12-18.

Acknowledgements

The authors acknowledge helpful discussions on some of the topics in this paper with Ashwin Srinivasan.

Funding

This research was supported in part by the Australian Government’s Innovations Connections scheme (awards ICG001855 and ICG001858).

Author information

Contributions

All authors conceived the study and approved the experimental design. CG, HS, and AM carried out the experiments with the assistance of MB and PC. CG authored rules. CG, HS, and AM analyzed the results with the assistance of MB and PC. AM, HS, MB and PC wrote the manuscript. All authors read and approved the manuscript.

Ethics declarations

Conflict of Interest

Authors Charles Guan, Hendra Suryanto and Ashesh Mahidadia are employed by Rich Data Corporation, Sydney, Australia. Author Paul Compton is a member of the Advisory Board of Rich Data Corporation. All authors declare no other competing interests.

Ethical Approval and Consent to Participate

Not applicable.

Consent for publication

Not applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Guan, C., Suryanto, H., Mahidadia, A. et al. Responsible Credit Risk Assessment with Machine Learning and Knowledge Acquisition. Hum-Cent Intell Syst 3, 232–243 (2023). https://doi.org/10.1007/s44230-023-00035-1

DOI: https://doi.org/10.1007/s44230-023-00035-1