Abstract

The analysis of data streams offers a great opportunity for the development of new methodologies and applications in the area of Intelligent Transportation Systems. In this paper, we propose two new incremental learning approaches for the travel time prediction problem for taxi GPS data streams in different scenarios and compare them with three other existing methods. An extensive performance evaluation using four real-life datasets indicates that when the training data size is small, the Support Vector Regression method is the best choice considering both prediction accuracy and total computation time. However, when the training data size is moderate to large, the Randomized K-Nearest Neighbor Regression with Spherical Distance (RKNNRSD) and the Incremental Polynomial Regression become the methods of choice. When continuous prediction of the remaining travel time along the trajectory of a trip is considered, we find that the RKNNRSD is the method of choice. A Real-time Speeding Alert System (RSAS) and a Driver Suspected Speeding Scorecard (DSSS) using the RKNNRSD method are proposed, which have great potential for improving travel safety.



1 Introduction

Nowadays, GPS-based cab services such as Uber, Lyft, Didi and Ola are available almost anywhere in the world. A GPS-enabled taxi continuously collects and records geo-spatial location data for each trip it makes. These recorded geo-spatial location data are often referred to as GPS traces, which are a very rich data source for understanding both the mobility patterns of passengers and their demand.

GPS traces are a rich source of streaming data, i.e., a continuous inflow of data arriving at high speed [18]. Streaming data (a.k.a. data streams) allow real-time or near real-time insights to be gathered. The analysis of streaming data comes with many challenges, which we discuss in detail in Sect. 1.1.1.

The travel time prediction problem is a well-studied topic in the transportation domain [17, 36, 83, 86], but mostly in the context of batch learning. Little work on this problem has been reported in the literature for the streaming data set-up. In this paper, we develop new methodologies for dynamic travel time prediction that take into account the streaming nature of taxi GPS data. Please refer to Sect. 1.2 for more details.

In this paper, we are interested in the travel time prediction problem in the streaming data context. For a transport dispatch system, it is useful to know how long a cab will be occupied, since this helps in vehicle allocation and in improving service efficiency. We propose a new method, adapt four other methods already existing in the literature to the streaming data context, and carry out an extensive performance comparison on four real-world datasets. A similar performance comparison is conducted on a trajectory-level dataset, the details of which are discussed in Sect. 4.3. We also look at some applications of the proposed methods to travel safety.

The intelligent transportation literature is replete with work on applications of machine learning and data analytics to travel safety. Some recent examples are as follows. Regev et al. [65] proposed a new methodology for estimating crash risks and compared the resulting crash risk estimates with conventional crash rates across features such as the driver’s age, the driver’s gender and the time of day. Sivasankaran and Balasubramanian [70] studied the severity of bicycle-vehicle crashes using a latent class clustering approach.

Moreover, real-time analytics has important applications for improving travel safety. Shi and Abdel-Aty [69] proposed applications of data analytics in real-time traffic operation and safety monitoring on urban expressways. In fact, ride-sharing companies have recognized the importance and potential of real-time analytics for improving travel safety. Recently, Ola and Uber have enabled real-time ride monitoring and information sharing features in their apps in India [9]. Ola has also introduced a real-time selfie authentication feature to curb proxy driving, which has a direct impact on passenger safety [75]. Thus, there is considerable scope for developing new methodologies that can be applied to real-time travel safety monitoring. In this paper, we develop new methodologies for dynamic travel time prediction, as mentioned earlier, and explore some of their applications for improving travel safety (see Sect. 4.4).

The contributions of this paper lie in the development of new methods for travel time prediction in the streaming data context and their application to a real-time speeding alert system. This paper also compares the performance of our proposed methods with some well-known batch learning methods adapted to the streaming data set-up. The methods are evaluated on criteria that trade off prediction accuracy against computation time. From the extensive experiments reported later in this paper, we identify the best methods to use in different situations: (a) When the drop-off location is known and the training data size is moderate to large, the RKNNRSD and the PR methods are the best choices. However, when the training data size is small (say, fewer than 5,000 observations), the SVR method becomes the best choice (see Sect. 2.2 for the definitions of these methods). (b) When continuous prediction of the remaining travel time along the trajectory of a trip is considered, the RKNNRSD and the PR methods are the best performers.

In this paper, we address the need of taxi dispatch systems and cab aggregators to handle the problem of "speeding" by drivers, which poses a great risk to the safety of both passengers and drivers. We propose a Real-time Speeding Alert System (RSAS) using the RKNNRSD method, which has great potential for application to travel safety. This speeding notification system can help drivers take corrective measures. As a further application, we also propose a Driver Suspected Speeding Scorecard (DSSS) that scores drivers based on their speeding violations; these scores can be used by the taxi dispatch system or cab aggregator for the performance appraisal of its driving partners. This can help in identifying and taking action against rash drivers who pose a real threat to themselves and to their customers.

To summarize, the motivation of this study is to develop novel methods for travel time prediction in a streaming data context for public transport vehicles (taxis in this case) and to use them to improve travel safety for passengers. We propose a new method, RKNNRSD, for real-time travel time prediction with GPS taxi data streams and compare its performance against different benchmark methods by running experiments on multiple real-world datasets. Another innovation of this study is the Real-time Speeding Alert System (RSAS) built on the RKNNRSD method, which has great potential for application to travel safety: the RSAS sends speeding notifications to drivers and helps them take corrective measures. As a further application, the Driver Suspected Speeding Scorecard (DSSS) scores drivers based on their speeding violations. These scores can potentially be used by the taxi dispatch system or cab aggregator for the performance appraisal of its driving partners; in other words, the taxi dispatch system or cab aggregator is a potential customer of the DSSS, which helps in identifying rash drivers.

The rest of the paper is structured as follows. Section 1.1 gives background on the concepts used in this paper. This is followed by a brief review of the literature in Sect. 1.2. We then discuss the methodology and evaluation metrics and describe the datasets used in this study in Sect. 2. In Sect. 3, we give an overview of the concept drift phenomenon. Section 4 discusses the results of the various experiments, including the static data experiment conducted for the different methods. Section 4.3 presents the analysis of the continuous prediction of remaining travel time problem with a trajectory-level dataset. In Sect. 4.4, we discuss an application of our proposed method to travel safety. Finally, Sect. 5 concludes the paper.

1.1 Background

1.1.1 Streaming Data

Streaming data is defined as the continuous and sequential arrival of data at high velocity from a source [1, 59]. The analysis of streaming data comes with its share of challenges, which were identified by Aggarwal [1], Babcock et al. [3], and Gama [21] as (a) single-pass processing of data, (b) the presence of concept drift, i.e., the characteristics of the incoming data may change over time, and (c) fast, near real-time analysis due to the high speed of the incoming data. Streaming data is thus very different from static data. Conventional methods for static data analysis assume that the entire dataset is always available and that multiple passes over it are possible. Since neither assumption holds in a streaming data set-up, the analysis of streaming data needs new methods.

A major challenge for streaming data mining algorithms is the ability to tackle concept drift. Such algorithms often need to discard older data points and update the model parameters frequently to ensure that the model performs well. They learn continuously from the incoming data, which is very different from batch learning methods, whose parameters do not need to be updated repeatedly.

Based on the update frequency of the model parameters, streaming data mining algorithms can be broadly classified into online learning and incremental learning algorithms. Online learning methods update the model parameters as each new observation comes in, whereas an incremental learning algorithm updates the parameters when a batch of new training examples comes in (Büttcher et al. [5]). We discuss this further in the Methodology section of this paper (see Sect. 2.2).
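To make the distinction concrete, here is a minimal Python sketch using a deliberately trivial "learner" that predicts the mean travel time. The class names and the toy stream are hypothetical and are not taken from the paper: the online variant updates its state after every observation, while the incremental variant updates once per batch.

```python
class OnlineMeanPredictor:
    """Online learner: the model state is updated after every single observation."""

    def __init__(self):
        self.n = 0
        self.mean = 0.0

    def update(self, y):
        # Running-mean update: one observation at a time, in a single pass.
        self.n += 1
        self.mean += (y - self.mean) / self.n

    def predict(self):
        return self.mean


class IncrementalMeanPredictor:
    """Incremental learner: the model state is updated once per incoming batch."""

    def __init__(self):
        self.n = 0
        self.total = 0.0

    def update_batch(self, ys):
        # Fold a whole batch of new training examples into the state at once.
        self.n += len(ys)
        self.total += sum(ys)

    def predict(self):
        return self.total / self.n if self.n else 0.0


stream = [600, 720, 540, 900, 660, 780]  # hypothetical travel times in seconds

online = OnlineMeanPredictor()
for y in stream:
    online.update(y)

incremental = IncrementalMeanPredictor()
for start in range(0, len(stream), 3):  # batches of three observations
    incremental.update_batch(stream[start:start + 3])

print(online.predict(), incremental.predict())  # prints 700.0 700.0
```

For this toy learner both variants end in the same state; they differ only in how often the parameters are touched, which is the criterion used for the classification above.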

1.1.2 Spherical Data

Sometimes observations come in the form of directions. Such data are known as directional data, and the area of statistics that deals with them is known as directional data analysis. Directional data in two and three dimensions are known as circular data and spherical data, respectively [31, 47]. Mardia and Jupp [47] and Fisher et al. [19] give detailed accounts of different methods for the analysis of directional and spherical data. However, most of these methods are in the batch learning setting, and, to the best of our knowledge, their application to travel time prediction in the streaming data context has not been addressed in the literature.

In the context of this paper, we focus on the spherical data analysis for geo-spatial location coordinate data since these location coordinate points can be seen as points on earth (which is approximately spherical). We will incorporate the idea of spherical distance in k-NN regression (see Sect. 2.2.2).

1.2 Related Work

In this section, we present a brief review of the work done on the travel time prediction problem with GPS-enabled vehicles. GPS traces are instrumental in finding interesting insights, with applications such as passenger finding [77], hotspot identification [7], vacant taxi finding [58], trajectory mapping [44], traffic monitoring [27] and location prediction [37]. Travel time prediction, in particular, has many important applications within the field of intelligent transportation, such as vehicle routing and congestion and traffic management [22].

Most of the work in the literature on the travel time prediction problem has used batch learning methods. Zhang and Haghani [86] used a gradient boosting method to improve travel time prediction. Mendes-Moreira et al. [49] worked on the long-term travel time prediction problem; they compared three different methods and found that SVM gave the best results. Some of the commonly used methods reported in the literature for travel time prediction are localised regression models [67], artificial neural networks [32], support vector regression [84], random forests [72], KNN [34], and the k-medoid clustering technique [13].

In recent years, some work reported in the literature has focused on real-time or near real-time prediction. Lee et al. [41] developed a real-time knowledge-based travel time prediction model using OD-pairs and meta-rules. Tiesyte and Jensen [73] worked on real-time position tracking and travel time prediction of vehicles using the nearest neighbor technique (NNT). Hofleitner et al. [29] worked on arterial travel time forecasting with streaming data using a hybrid model approach. Some work has also been reported that uses artificial neural networks (ANN) for real-time travel time prediction from GPS data [40]. In fact, LSTM (Long Short-Term Memory) neural network models are quite popular for time series prediction in the context of travel time prediction in the intelligent transportation systems literature (Duan et al. [16], Liu et al. [45], and Qiu and Fan [60]).

Wang et al. [79] developed a real-time model for predicting the travel time of a vehicle in a city using the GPS trajectory data of vehicles. Luo et al. [46] worked on the travel time prediction problem based on the most frequently used path extracted from large trajectory data. Wibisono et al. [81] discuss prediction and visualization of traffic in a particular region using the FIMT-DD method with streaming data. Please see Laha and Putatunda [38] for a more comprehensive review of the literature. Table 1 compares some of the related works in this domain.

2 Data and Methods

2.1 Data

In this section we briefly discuss the datasets used in this study. Since most of the datasets used in this paper are the same as those used in Laha and Putatunda [37], the reader may refer to that paper for more details. Note, however, that [37] solves a different problem, i.e., real-time location prediction formulated as a multivariate regression, whereas in this paper we focus on incremental travel time prediction with a single target variable (please see Sect. 4 for more details).

2.1.1 NYC1 and NYC2

The NYC Yellow Taxi GPS dataset is publicly available on the "NYC Taxi and Limousine Commission" website [56]. We cleaned the dataset, i.e., removed anomalies such as erroneous GPS coordinate values and performed missing value treatment. We take one subset of the dataset for the period \(1^{st}\) to \(5^{th}\) January, 2013, containing 824,799 observations. This dataset serves as the primary dataset on which the different models are tested. We will refer to this dataset as nyc1.

We take another subset of this cleaned NYC Yellow Taxi GPS dataset for the period \(13^{th}\) to \(16^{th}\) January, 2013, containing 680,865 observations, which is also used for our experiments. We will refer to this dataset as nyc2. The attributes of both nyc1 and nyc2 that we use in our analysis are the pickup coordinates (start_latitude, start_longitude), the drop-off coordinates (dest_latitude, dest_longitude) and the time taken for the trip, for each taxi with a unique ID.

In Sect. 4.4 we use two subsets of the nyc1 data containing the first two days i.e. \(1^{st}\) January 2013 and \(2^{nd}\) January 2013. We will refer to these datasets as nyc1_day1 and nyc1_day2.

2.1.2 PORTO

The Porto GPS taxi dataset [15] was first used in Moreira-Matias et al. [51]. For each trip, this dataset contains information regarding the taxi stand, call origin details, a unique taxi ID, a unique trip ID and the Polyline. We take a subset of this dataset, i.e., trip details from \(1^{st}\) to \(7^{th}\) July, 2013, containing 34,768 observations. From this dataset we obtained the travel time, the pickup coordinates (start_latitude, start_longitude) and the drop-off coordinates (dest_latitude, dest_longitude), and we will refer to this dataset as porto.

2.1.3 SFBLACK1, SFBLACK2 and SFBLACK3

The publicly available "San Francisco black cars GPS traces" dataset consists of anonymized GPS traces of Uber black cars in San Francisco for one week (1st–7th January, 2007) [26]. As with the porto dataset, we obtained the travel time, pickup coordinates and drop-off coordinates for each trip from this dataset. This derived dataset has 24,552 observations after data cleaning, and we will refer to it as sfblack1.

For the continuous updating and continuous prediction problem in Sect. 4.3, we take the trajectory-level data for the first day of the week (1st January, 2007) of the black cars GPS trace data [26]. This dataset has 92,694 observations after data cleaning and comprises location details and the corresponding timestamps for 1925 unique trip IDs. We will refer to this dataset as sfblack2. In Sect. 4.4, we use the sfblack2 dataset together with the trajectory-level data for the second day of the week (2nd January, 2007) of the black cars GPS trace data. This second dataset has 88,447 observations after data cleaning and comprises location details and the corresponding timestamps for 1967 unique trip IDs. We will refer to it as sfblack3.

2.2 Methodology

In this section, we propose two methodologies for travel time prediction in the streaming data context, namely Randomized KNN Regression with Spherical Distance (RKNNRSD) and Incremental Polynomial Regression (PR). The performance of these methods is then compared with feed-forward neural networks (ANN), linear regression (LR) and support vector regression (SVR), suitably adapted to the streaming data setting (see Sect. 2.2.5).

In this paper, our focus is on developing incremental learning algorithms for travel time prediction, and it has been reported in the literature that an incremental learning algorithm can be approximated by a batch learner combined with a sliding window [5]. We implement this idea by using a batch learner \({\mathcal {L}}\) that is fed a sequence of data points \(p_1, p_2, \ldots, p_m\) through a damped window model (see Sect. 2.2.1), where the value of m varies from window to window.

Fig. 1 shows the model framework diagram. As shown in the diagram, we apply a windowing scheme (damped window model) together with a learner (RKNNRSD, PR, LR, and others) to the incoming data stream, where the predictor variables are the pickup and drop-off location coordinates and the target variable is the travel time. We discuss the windowing scheme and the machine learning methods in detail in Sections 2.2.1 to 2.2.5.

2.2.1 Damped Window Model

As discussed earlier, concept drift presents a formidable challenge when dealing with streaming data. One effective way of handling concept drift is windowing. Cao et al. [6] define the damped window model as a windowing technique that uses an exponential fading strategy to discard old data. For this purpose, a fading function is used in which the weight assigned to each data point decreases with time t. The fading function g(t) is

\[ g(t) = 2^{-\varLambda t}, \qquad \varLambda > 0. \]

The value of the decay factor \(\varLambda\) governs how older data are discarded. For example, if \(\varLambda\) is high, older data receive less importance relative to the more recent data. In this paper, we use a variation of the damped window model in which, instead of assigning a weight to each individual data point based on the fading function, we assign weights to batches of data points in an input data stream window and discard the older ones based on a user-defined cutoff, which in this paper is 0.09. The windowing scheme used in this paper is similar to the one used in Laha and Putatunda [37]. Note also that, because of this windowing scheme, our models do not simply put more importance on the recent data but also retain some of the older observations. Daily and weekly patterns are handled automatically in this scheme, because the windows on which the models are built are updated periodically and therefore accommodate the daily and weekly patterns in the dataset.
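The batch-level weighting can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: it assumes the fading function \(g(t) = 2^{-\varLambda t}\) of Cao et al. [6], assumes the age t of a batch is counted in windows (newest batch t = 0), and assumes the 0.09 cutoff is compared against that weight. The function names are hypothetical.

```python
def batch_weights(num_batches, decay):
    """Fading weight of each stored batch, oldest first: g(t) = 2 ** (-decay * t).

    The age t of a batch is counted in windows; the newest batch has t = 0.
    """
    ages = range(num_batches - 1, -1, -1)  # oldest batch has the largest age
    return [2.0 ** (-decay * t) for t in ages]


def retained_batches(batches, decay, cutoff=0.09):
    """Keep only the batches whose fading weight is at least `cutoff`."""
    weights = batch_weights(len(batches), decay)
    return [b for b, w in zip(batches, weights) if w >= cutoff]


batches = ["b1", "b2", "b3", "b4", "b5"]  # b1 oldest, b5 newest
print(batch_weights(len(batches), decay=1.0))  # [0.0625, 0.125, 0.25, 0.5, 1.0]
print(retained_batches(batches, decay=1.0))    # ['b2', 'b3', 'b4', 'b5']
print(retained_batches(batches, decay=2.0))    # ['b4', 'b5']
```

Raising the decay factor shrinks the retained window, which matches the statement above that a high \(\varLambda\) gives less importance to older data.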

Figure 2 shows how the sliding window and damped window models segment or use the input data. The vertical axis represents time. In a sliding window, the window size is fixed, so the algorithm discards the oldest observation for each new incoming observation; thus, the same number of observations is always used to retrain the model. As explained earlier, the damped window model uses a fading strategy to discard old data, so when a new observation arrives it uses some of the old data together with the recent data as input, as shown in Fig. 2b. For other types of windowing techniques (such as Landmark Windows, adapted Time Windows, and more), please refer to Clever et al. [11].

Fig. 2 Sliding window and damped window model. The more concentrated the grey, the more observations are considered in retraining the model. Image source: Clever et al. [11]

When a damped window model is used with a machine learning model, i.e., Learner \({\mathcal {L}}\) in Fig. 1, incrementality refers to the ability to process incoming data sequentially while discarding outdated data. This approach allows the model to adapt to changing patterns over time.

To illustrate this concept, consider Fig. 3, which shows which data are discarded and which are taken as input in a damped window model. In this figure, time progresses from left to right, and each vertical bar represents a data point or a time step. The window represents the active data that the damped window model uses for its predictions. As new data points arrive, the window slides forward and the oldest data points are discarded. The discarded data lie outside the window and are no longer considered by the model. The active data within the window serve as input to the machine learning method: as the window slides, the model takes the current window's data as input, updates its internal state and makes predictions based on the new information. By discarding the oldest data while retaining some older data points along with the most recent information, the damped window model adapts to changes in the underlying patterns, which makes the machine learning process incremental.

Thus, by leveraging windowing, machine learning models can capture temporal dependencies, track changes over time, and adapt to evolving patterns. In other words, as mentioned in Büttcher et al. [5], an incremental learning algorithm can be approximated by a machine learning model, i.e., Learner \({\mathcal {L}}\), combined with a windowing scheme. This approach is particularly useful when dealing with streaming data or scenarios where the underlying patterns exhibit temporal dynamics. In Sections 2.2.2 to 2.2.5, we describe how the various incremental methods used in this paper work.
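The "batch learner plus window" framework of Fig. 1 can be sketched as a test-then-train loop. This is a hedged sketch under stated assumptions: data arrive in batches, each new batch is first predicted by a learner fitted on the batches currently in the window and is then added to it, and batches fade out with the assumed weight \(2^{-\varLambda t}\) and the 0.09 cutoff. The stand-in learner `fit_mean` and the function names are hypothetical; any of the learners in Sections 2.2.2 to 2.2.5 could be plugged in as `fit`.

```python
def fit_mean(train_x, train_y):
    """Stand-in batch learner L: always predicts the mean training travel time."""
    mean = sum(train_y) / len(train_y)
    return lambda _x: mean


def run_windowed_learner(batches, fit, decay=1.0, cutoff=0.09):
    """Test-then-train loop: each batch is predicted by a model fitted on the window.

    `batches` is a list of (xs, ys) pairs in arrival order; returns one MAE per
    predicted batch (the first batch is only used for training).
    """
    window = []  # retained batches, oldest first
    maes = []
    for xs, ys in batches:
        if window:
            # Refit the batch learner on the data currently inside the window.
            train_x = [x for wxs, _ in window for x in wxs]
            train_y = [y for _, wys in window for y in wys]
            model = fit(train_x, train_y)
            errors = [abs(model(x) - y) for x, y in zip(xs, ys)]
            maes.append(sum(errors) / len(errors))
        # Slide forward: add the new batch, then drop batches whose weight has faded
        # below the cutoff (age 0 = newest batch, weight 2 ** (-decay * age)).
        window.append((xs, ys))
        ages = range(len(window) - 1, -1, -1)
        window = [b for b, age in zip(window, ages) if 2.0 ** (-decay * age) >= cutoff]
    return maes


# Hypothetical stream of (features, travel times in seconds); times jump in batch 3.
stream = [([0, 0], [100, 100]), ([0, 0], [100, 100]), ([0, 0], [200, 200])]
print(run_windowed_learner(stream, fit_mean))  # [0.0, 100.0]
```

Because the learner is refitted from scratch on each window, any batch learner can be reused unchanged, which is the point of the approximation attributed to Büttcher et al. [5].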

When we look at the average number of observations across the training and testing windows for the nyc1, nyc2, porto and sfblack1 datasets (see Sect. 2.1), we find that nyc1 and nyc2 contain many more observations per training and testing window than porto and sfblack1. For instance, the average number of observations across the training windows is 39,879 for nyc1, whereas it is 1233 and 869 for porto and sfblack1, respectively. Similarly, the average number of observations across the testing windows for nyc1, porto and sfblack1 is 6825, 208 and 146, respectively.

2.2.2 K-Nearest Neighbor Regression with Spherical Distance (KNNRSD)

In this paper we propose the K-Nearest Neighbor Regression with Spherical Distance (KNNRSD) method for solving the travel time prediction problem. We also propose a variant of the KNNRSD, which is discussed in Sect. 2.2.3. The KNNRSD has its origins in the literature on spherical data analysis (see Sect. 1.1.2) and in the K-Nearest Neighbor (K-NN) regression method [55]. In K-NN regression, for every observation the K nearest neighbors are selected using a distance metric (such as Euclidean distance, Mahalanobis distance and more), and the response for the observation is predicted by averaging the responses of those K nearest neighbors. Please see Cover and Hart [12] and Murty and Devi [54] for a detailed review of the K-Nearest Neighbor algorithm.

The motivation behind using this method i.e. KNNRSD is that the predictor variables i.e. the pickup and drop-off location coordinates are points on the surface of earth which can be taken approximately as a sphere. This idea of Spherical data is explored in Laha and Putatunda [37] for solving the location prediction problem in streaming data context. However, to the best of our knowledge, there has been no work reported in the literature that takes into account the spherical nature of the data while solving the travel time prediction problem for GPS enabled taxis in streaming data context.

In this paper, we treat the predictor variables as spherical data and the response variable as linear. We then perform KNN regression with spherical distance as the distance metric, where the spherical distance between two points M and N on the surface of a sphere is the length of the shortest route along the surface between them. For points M and N on a unit sphere, the spherical distance is given by

\[ d(M,N) = \cos^{-1}(M\cdot N), \]

where \(M\cdot N\) represents the dot product [64].
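A minimal Python sketch of the spherical distance, assuming coordinates arrive as geographic latitude/longitude in degrees (latitude measured from the equator). That convention, the helper names, and the Earth radius constant are assumptions of this sketch rather than details taken from the paper.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius, used only to convert radians to km


def to_unit_vector(lat_deg, lon_deg):
    """Geographic latitude/longitude in degrees -> Cartesian point (u, v, w) on the unit sphere."""
    lat, lon = math.radians(lat_deg), math.radians(lon_deg)
    return (math.cos(lat) * math.cos(lon), math.cos(lat) * math.sin(lon), math.sin(lat))


def spherical_distance(m, n):
    """Great-circle distance d(M, N) = arccos(M . N) between unit vectors, in radians."""
    dot = sum(a * b for a, b in zip(m, n))
    # Rounding can push the dot product just outside [-1, 1]; clamp before acos.
    return math.acos(max(-1.0, min(1.0, dot)))


pickup = to_unit_vector(40.7580, -73.9855)   # Times Square, New York
dropoff = to_unit_vector(40.6413, -73.7781)  # JFK Airport
print(spherical_distance(pickup, dropoff) * EARTH_RADIUS_KM)  # great-circle distance in km
```

For very short distances the arccos form loses precision (the dot product is very close to 1); the haversine formula is the usual numerically stable alternative and gives the same quantity.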

The KNNRSD algorithm is described in Algorithm 1. Here, k is the number of nearest neighbors, whose value is chosen from an array given by the user, and n represents the training window size.
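Since Algorithm 1 is not reproduced in this text, the following is only an illustrative Python sketch of KNN regression with a spherical distance. It assumes, for illustration, that the distance between two trips is the spherical distance between their pickups plus that between their drop-offs; the paper does not state how the two locations are combined, and all function names are hypothetical.

```python
import heapq
import math


def to_unit_vector(lat_deg, lon_deg):
    """Geographic latitude/longitude in degrees -> point on the unit sphere."""
    lat, lon = math.radians(lat_deg), math.radians(lon_deg)
    return (math.cos(lat) * math.cos(lon), math.cos(lat) * math.sin(lon), math.sin(lat))


def spherical_distance(m, n):
    """arccos of the dot product of two unit vectors (clamped against rounding)."""
    dot = sum(a * b for a, b in zip(m, n))
    return math.acos(max(-1.0, min(1.0, dot)))


def trip_distance(trip_a, trip_b):
    """Assumed trip-to-trip distance: pickup-to-pickup plus drop-off-to-drop-off.

    A trip is (pickup_lat, pickup_lon, dest_lat, dest_lon) in degrees.
    """
    pa, pb = to_unit_vector(*trip_a[:2]), to_unit_vector(*trip_b[:2])
    da, db = to_unit_vector(*trip_a[2:]), to_unit_vector(*trip_b[2:])
    return spherical_distance(pa, pb) + spherical_distance(da, db)


def knnrsd_predict(train_trips, train_times, query_trip, k):
    """Average the travel times of the k training trips nearest to `query_trip`."""
    nearest = heapq.nsmallest(
        k,
        range(len(train_trips)),
        key=lambda i: trip_distance(train_trips[i], query_trip),
    )
    return sum(train_times[i] for i in nearest) / len(nearest)


# Hypothetical training window: two Midtown-to-JFK trips and one short local trip.
trips = [(40.758, -73.985, 40.641, -73.778),
         (40.759, -73.984, 40.642, -73.779),
         (40.700, -74.000, 40.700, -74.010)]
times = [1800, 1900, 300]
print(knnrsd_predict(trips, times, (40.7585, -73.9845, 40.6415, -73.7785), k=2))  # 1850.0
```

This brute-force search computes one distance per training trip for each query, which is the cost that motivates the randomized variant described next.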

2.2.3 Randomized K-Nearest Neighbor Regression with Spherical Distance (RKNNRSD)

For large training datasets, the K-Nearest Neighbor method is computationally expensive, as it has a time complexity of \({\mathcal {O}}(np)\) per query, where n is the number of observations and p is the dimension of the training dataset [35]. It is a memory-based technique with no training cost, since the whole training dataset is kept in memory and used for finding similarity with test instances. The KNN method therefore has speed and memory issues, especially when the training dataset is large. One way to deal with this problem is to reduce the size of the training dataset without adversely affecting the prediction accuracy of the technique.

In this paper we propose a variant of the KNNRSD method, Randomized KNNRSD (RKNNRSD), described in Algorithm 2, in which we first draw a simple random sample of the training dataset. Note that, especially for sparse training datasets, the sample obtained with a sampling rate of \(r\%\) may contain fewer observations than k, the user-defined number of nearest neighbors. In such cases we run the KNNRSD on the whole training data.

Since this sample (mentioned in Algorithm 2) is representative of the whole training dataset, we replace the training dataset with this randomly sampled subset and apply the KNNRSD algorithm as discussed in Sect. 2.2.2. Here, the sampling rate, i.e., the proportion of the original dataset that is sampled, is a hyper-parameter of the proposed method. We find that this method greatly increases the computation speed compared with a stand-alone KNNRSD without compromising accuracy. The time complexity of the RKNNRSD method is \({\mathcal {O}}(mp)\), where m is the number of observations in the sample of the training dataset and p is the dimension of the training dataset.
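The sampling step of RKNNRSD can be sketched in Python as follows. This is an illustrative sketch, not Algorithm 2 itself: it assumes sampling without replacement, expresses the rate r% as a proportion (e.g. 0.1), and rounds the sample size to the nearest integer; the function name and the `seed` parameter are hypothetical. The returned subset would then replace the training window in the KNNRSD step of Sect. 2.2.2.

```python
import random


def rknnrsd_training_set(train_trips, train_times, rate, k, seed=None):
    """Simple random sample (without replacement) of the training window.

    `rate` is the sampling proportion (e.g. 0.1 for r = 10%). If the sample would
    contain fewer than k trips, the whole training window is returned instead.
    """
    n = len(train_trips)
    m = int(round(rate * n))
    if m < k:
        return train_trips, train_times  # fall back to plain KNNRSD
    rng = random.Random(seed)
    idx = rng.sample(range(n), m)  # m distinct indices
    return [train_trips[i] for i in idx], [train_times[i] for i in idx]


# Hypothetical window of 100 trips; a 10% sample keeps 10 of them.
trips = [(i, i) for i in range(100)]
times = [10 * i for i in range(100)]
sampled_trips, sampled_times = rknnrsd_training_set(trips, times, rate=0.1, k=5, seed=1)
print(len(sampled_trips))  # 10
```

Each query then scans only m instead of n trips, which is where the \({\mathcal {O}}(mp)\) cost comes from.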

2.2.4 Incremental Polynomial Regression (PR)

Polynomial regression is a type of non-linear regression method that can model a curvilinear relationship between the response and the predictor variables [25]. In this paper, we implement polynomial regression in a streaming data context by adapting it into an incremental method using a damped window model. This follows the framework diagram shown in Fig. 1, where the Learner \({\mathcal {L}}\) in this case is polynomial regression with degree 2 or 3.

Here, the travel time is the dependent variable and the pickup and drop-off latitudes and longitudes are the independent variables. The pickup and drop-off coordinates are spherical data, so we first convert them into Euclidean data using Eq. 3 before applying the polynomial regression model. Points on the surface of a unit sphere centered at the origin can be represented either by a unit vector (u, v, w) or by angles \((\lambda _1, \lambda _2)\). Here \(\lambda _1\) and \(\lambda _2\) are known as latitude and longitude, respectively, and \((\lambda _1, \lambda _2) \in [0,\pi ) \times [0,2\pi )\). We can convert between these two representations using the following equation, as described in Jammalamadaka and Sengupta [31]

We will refer to the above method as PR in this paper. We will implement the PR method with different degrees i.e. 2 and 3 as described in Table 4 in Sect. 4.1 and then choose the most suitable one.
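A minimal NumPy sketch of the PR learner on one window. It assumes the spherical-to-Euclidean step maps geographic latitude/longitude in degrees to \((\cos\,lat \cos\,lon, \cos\,lat \sin\,lon, \sin\,lat)\), which may differ from the exact convention of Eq. 3, and it builds every monomial of the six Cartesian components up to the chosen degree. The helper names are hypothetical; in the streaming setting this fit would simply be repeated on each damped window.

```python
from itertools import combinations_with_replacement

import numpy as np


def to_unit_vectors(lat_deg, lon_deg):
    """Vectorised latitude/longitude in degrees -> unit vectors (u, v, w), one per row."""
    lat, lon = np.radians(lat_deg), np.radians(lon_deg)
    return np.column_stack((np.cos(lat) * np.cos(lon), np.cos(lat) * np.sin(lon), np.sin(lat)))


def trip_features(trips):
    """trips: rows of (pickup_lat, pickup_lon, dest_lat, dest_lon) -> 6 Cartesian columns."""
    trips = np.asarray(trips, dtype=float)
    return np.hstack((to_unit_vectors(trips[:, 0], trips[:, 1]),
                      to_unit_vectors(trips[:, 2], trips[:, 3])))


def polynomial_features(x, degree):
    """All monomials of the columns of x up to `degree`, including the intercept."""
    cols = [np.ones(len(x))]
    for d in range(1, degree + 1):
        for combo in combinations_with_replacement(range(x.shape[1]), d):
            cols.append(np.prod(x[:, list(combo)], axis=1))
    return np.column_stack(cols)


def fit_pr(trips, times, degree=2):
    """Least-squares polynomial regression on the current window; returns coefficients."""
    design = polynomial_features(trip_features(trips), degree)
    coef, *_ = np.linalg.lstsq(design, np.asarray(times, dtype=float), rcond=None)
    return coef


def predict_pr(coef, trips, degree=2):
    """Predicted travel times for new trips using coefficients from `fit_pr`."""
    return polynomial_features(trip_features(trips), degree) @ coef
```

Because \(u^2 + v^2 + w^2 = 1\) for every point, the squared terms of each location sum to the intercept, so the degree-2 design matrix is rank-deficient; `np.linalg.lstsq` returns the minimum-norm solution in that case, and the fitted values are unaffected.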

2.2.5 Other Methods

In this paper, we compare the proposed methods with three other methods using the evaluation metrics discussed in Sect. 2.3. The methods are ANN [4], SVR [76], and linear regression [20]. All of these methods are run with a damped window model, as is the case with the RKNNRSD and PR methods discussed above.

LR Since we are working with streaming data we adapt the linear regression technique to devise an incremental method using a damped window model in this paper. We call this method LR.

ANN We apply a three-layer feed-forward neural network with one hidden layer and a sigmoid activation function. The number of hidden nodes is taken to be 2, in accordance with the geometric pyramid rule [48], which states that for a three-layer feed-forward neural network with p inputs and q outputs, the number of hidden nodes H in the hidden layer is \(\sqrt{pq}\).
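As a quick arithmetic check of the rule: the network has a single output (travel time), so q = 1. The text does not state p explicitly; if the inputs are the four raw pickup/drop-off coordinates, p = 4, and if they are the six Cartesian components obtained via Eq. 3 (three per location), p = 6. Assuming H is rounded to the nearest integer, both readings give H = 2:

```latex
H = \sqrt{pq}, \quad q = 1: \qquad
p = 4 \;\Rightarrow\; H = \sqrt{4} = 2, \qquad
p = 6 \;\Rightarrow\; H = \sqrt{6} \approx 2.45 \approx 2
```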

We adapt the standard ANN algorithm to the streaming data setting by using a damped window model. We call this method ANN in this paper.

SVR We have implemented an \(\epsilon\)-SVR method with an RBF kernel [30]. Henceforth we will call this method SVR.

Note that the inputs to the above methods, viz. LR, SVR and ANN, are the pickup and drop-off coordinates. Since these are spherical data, we first convert them into Euclidean data using Eq. 3 before applying these methods.

2.3 Evaluation Metrics

2.3.1 Prediction Accuracy

To compare the performance of different methods in terms of prediction accuracy, we use the Aggregated Mean Absolute Error (AMAE). If we record the Mean Absolute Error (MAE) for each prediction horizon, the AMAE can be defined as follows:

\[ AMAE = \frac{1}{n}\sum_{i=1}^{n} MAE_i, \]

where n is the number of prediction horizons and \(MAE_i\) is the MAE in the \(i^{th}\) prediction horizon.

The method with the least AMAE is considered the "best" method in terms of prediction accuracy. AMAE is measured in seconds.
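A minimal Python sketch of the metric, assuming that AMAE is the unweighted average of the per-horizon MAEs (every horizon counts equally, regardless of how many trips it contains). The function names are hypothetical.

```python
def mae(predicted, actual):
    """Mean absolute error, in seconds, over one prediction horizon."""
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(actual)


def amae(horizons):
    """Average of per-horizon MAEs; `horizons` is a list of (predicted, actual) pairs."""
    maes = [mae(p, a) for p, a in horizons]
    return sum(maes) / len(maes)


# Two hypothetical horizons: MAE = 10 s for the first and 30 s for the second.
print(amae([([100, 200], [110, 190]), ([300], [330])]))  # 20.0
```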

2.3.2 Accuracy-Time Trade-off Criteria

As in Laha and Putatunda [37], we use a computation time versus prediction accuracy trade-off criterion, TC:

\[ TC(n) = MAE(n) \times t(n), \]

where t(n) and MAE(n) are the total computation time (in seconds) and the mean absolute error, respectively, and n represents the training data size. This is a multiplicative criterion. Taking logarithms on both sides gives \(\ln TC(n) = \ln MAE(n) + \ln t(n)\), so on the log scale TC(n) gives equal weight to \(\ln MAE(n)\) and \(\ln t(n)\).

In this paper we introduce a new trade-off criterion, TOC, defined as a linear combination of MAE and time taken (both in “seconds”):

\[TOC(w_1, w_2, n) = w_1\, MAE(n) + w_2\, t(n)\]

where t(n) and MAE(n) are the total computation time (in seconds) and the Mean Absolute Error, respectively, when the training data size is n. Here, \(w_1>0\) and \(w_2>0\) are the weights and \(w_1 + w_2=1\). In Table 4, we report the results of a comparison study using various weight pairs \((w_1,w_2)\), viz. \(TOC1(n) = TOC(0.6,0.4,n)\), \(TOC2(n) = TOC(0.4,0.6,n)\), \(TOC3(n) = TOC(0.8,0.2,n)\), \(TOC4(n) = TOC(0.2,0.8,n)\) and \(TOC5(n) = TOC(0.5,0.5,n)\), together with TC(n).
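The two criteria can rank methods differently. The sketch below computes TC(n) and the five TOC weight pairs for two made-up methods, one fast but inaccurate and one slow but accurate. The function names and numbers are hypothetical.

```python
# Weight pairs (w1 on MAE, w2 on computation time) as listed for Table 4
TOC_WEIGHTS = {
    "TOC1": (0.6, 0.4),
    "TOC2": (0.4, 0.6),
    "TOC3": (0.8, 0.2),
    "TOC4": (0.2, 0.8),
    "TOC5": (0.5, 0.5),
}


def tc(mae_n: float, t_n: float) -> float:
    """Multiplicative trade-off criterion TC(n) = MAE(n) * t(n)."""
    return mae_n * t_n


def toc(w1: float, w2: float, mae_n: float, t_n: float) -> float:
    """Additive trade-off criterion TOC(w1, w2, n) = w1 * MAE(n) + w2 * t(n)."""
    if w1 <= 0 or w2 <= 0 or abs(w1 + w2 - 1.0) > 1e-9:
        raise ValueError("weights must be positive and sum to 1")
    return w1 * mae_n + w2 * t_n


# Hypothetical methods: (MAE in seconds, total computation time in seconds)
methods = {"fast_but_rough": (300.0, 5.0), "slow_but_accurate": (200.0, 60.0)}
for name, (m, t) in methods.items():
    print(name, "TC =", tc(m, t), "TOC1 =", round(toc(*TOC_WEIGHTS["TOC1"], m, t), 2))
```

With these numbers, TC prefers the fast method (1500 versus 12000), whereas TOC1 prefers the accurate one (144 versus 182). This is the same pattern as in Table 4, where TC(n) favours the fast ANN and LR methods.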

It is expected that an ideal algorithm in a streaming data context should have the best prediction accuracy and the least computation time [37]. So, while comparing the performances of multiple methods in the streaming data context, the one with the least value of TOC for all n can be considered as the "best" method.

2.3.3 Stochastic Dominance

Suppose there are two distributions P and Q with cumulative distribution functions (CDFs) \(F_P\) and \(F_Q\) respectively. The distribution Q is said to be "stochastically dominant" over the distribution P (at first order) if \(F_P(x) \ge F_Q(x)\) for every x [14]. This can be visualized by plotting the CDFs of P and Q together: if the CDF of P lies to the right of that of Q and the two curves do not cross, then P is stochastically dominant over Q at first order. However, in many real world situations the CDF is not known and must be estimated from the data. We therefore use the empirical cumulative distribution function (ECDF), which approximates the CDF well when the sample size is large, as guaranteed by the Dvoretzky-Kiefer-Wolfowitz inequality [80, p. 99]. In this paper, we use the ECDF to check first-order stochastic dominance graphically (see Sect. 4).
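The graphical check can also be done numerically. The following is a minimal sketch using ECDFs, with hypothetical function names and TOC values. ECDFs are step functions that jump only at observed values, so comparing them at the pooled sample points covers every x. TOC is a lower-is-better metric, so the preferred method is the one whose ECDF lies leftmost. In the terms of the definition above, that preferred method is the dominated one, because its values are stochastically smaller.

```python
import numpy as np


def ecdf(sample):
    """Return the ECDF of `sample` as a vectorised function F(x) = #{x_i <= x} / n."""
    xs = np.sort(np.asarray(sample, dtype=float))

    def F(x):
        return np.searchsorted(xs, x, side="right") / xs.size

    return F


def first_order_dominates(q_sample, p_sample):
    """Check F_P(x) >= F_Q(x) for all x using the ECDFs, i.e. Q dominates P at first order.

    Both ECDFs only jump at observed values, so comparing them at the
    pooled sample points covers every x.
    """
    grid = np.union1d(q_sample, p_sample)
    F_p, F_q = ecdf(p_sample), ecdf(q_sample)
    return bool(np.all(F_p(grid) >= F_q(grid)))


# Hypothetical TOC values (seconds) for two methods across prediction horizons
toc_a = [140.0, 150.0, 155.0, 170.0]
toc_b = [160.0, 175.0, 180.0, 200.0]
print(first_order_dominates(toc_b, toc_a))  # True: the ECDF of A lies to the left of B's
```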

3 Concept Drift

Concept drift refers to the change in the distribution of the data streams over a period of time (see Sect. 1.1.1). One of the consequences of concept drift is that the predictive performance of an off-line (batch) learning model changes unpredictably, and often deteriorates, as we move forward in time, as shown in Laha and Putatunda [37]. This creates a need to update the model using the recent data and information. In this context, Moreira-Matias et al. [52] noted that GPS trace data are inherently subject to concept drift, which can be clearly observed in scenarios such as unexpected weather events (e.g. rain or snow) or car accidents on a busy road. To illustrate this further, we show that concept drift is present in the nyc1 dataset by comparing the performance of batch learners with that of incremental learners using the windowing technique (discussed in Sect. 2.2) for travel time prediction from pickup and drop-off coordinates. In the absence of concept drift we expect the batch and incremental learners to perform similarly, whereas if concept drift is present we expect the incremental learner to perform better.

We consider a part of the nyc1 dataset (the time interval \(00:00-\; 01:00\) on \(1^{st}\) January, 2013) and use it as training data for the off-line learners. We then use these models for travel time prediction, given the pickup and drop-off coordinates, over the next five 1 hour prediction horizons. As off-line learners we consider Linear Regression and Support Vector Regression (see [20] and [76] for more details), which we refer to as \(LR\_batch\) and \(SVR\_batch\) respectively. We chose these two off-line learning methods because they are widely used in practice and showed good performance on a related problem in [66, 84]. We implement an \(\epsilon\)-SVR method with a radial basis function (RBF) kernel. We then plot the mean absolute error (MAE) of both methods over the prediction horizons, as shown in Fig. 4.

We have discussed in Sects. 1.1.1 and 2.2.1 that concept drift is one of the major challenges of streaming data: it causes a batch learning model to become outdated, i.e. its prediction error increases over time. One way to handle this is to use an incremental learning method that continuously learns from the incoming streaming data [21]. In Fig. 4, we observe that for both the LR and SVR methods the incremental learners perform much better than the batch learners in terms of prediction accuracy in all prediction horizons, thus establishing the presence of concept drift.
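The batch-versus-incremental argument can be reproduced on synthetic data. The sketch below is purely illustrative and does not use nyc1. The target depends linearly on one input, and in the drift scenario the slope changes abruptly after the first window. The batch learner is a straight line fitted once on window 0. The incremental learner is refitted on the most recent completed window before each new horizon. This is a simple stand-in for the windowing technique of Sect. 2.2, whose details may differ.

```python
import numpy as np


def per_horizon_mae(slopes, points_per_window=50, seed=0):
    """Return (batch_mae, incremental_mae) lists over horizons 1..len(slopes)-1.

    Window k holds points (x, slopes[k] * x + 100); window 0 is the initial training window.
    """
    rng = np.random.default_rng(seed)
    windows = []
    for s in slopes:
        x = rng.uniform(0.0, 10.0, points_per_window)
        windows.append((x, s * x + 100.0))

    # Batch learner: fitted once on the first window and never updated
    batch_coef = np.polyfit(*windows[0], deg=1)
    batch_mae, inc_mae = [], []
    for k in range(1, len(windows)):
        x, y = windows[k]
        # Incremental learner: refitted on the most recent completed window
        inc_coef = np.polyfit(*windows[k - 1], deg=1)
        batch_mae.append(float(np.mean(np.abs(np.polyval(batch_coef, x) - y))))
        inc_mae.append(float(np.mean(np.abs(np.polyval(inc_coef, x) - y))))
    return batch_mae, inc_mae


no_drift = per_horizon_mae([2.0, 2.0, 2.0, 2.0, 2.0])
drift = per_horizon_mae([2.0, 5.0, 5.0, 5.0, 5.0])
print("no drift:", np.round(no_drift, 3))
print("drift:   ", np.round(drift, 3))
```

Without drift, both learners have essentially zero error in every horizon. With drift, the batch error stays high in every later horizon. The incremental learner errs only in the first horizon after the change and then recovers.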

4 Results and Discussion

In this section, we address the problem of predicting the travel time of a vehicle when both the pickup and drop-off coordinates are known. We therefore fit a regression model with travel time as the target variable and pickup latitude, pickup longitude, drop-off latitude and drop-off longitude as the predictor variables. We report the findings of our experiments with the methods described in Sect. 2.2. We implement the data stream flow, build the models and perform the data analysis using R version 3.3.1 [61].

The R packages Directional [74], lubridate [24], nnet [78], e1071 [50] and ggplot2 [82] have been used in our experiments. The experiments were carried out on a system with 4 GB RAM, a 1.6 GHz Intel Core i5 processor and 64-bit Mac OS X.

4.1 Results-Travel Time Prediction When the Pickup and the Drop-off Coordinates are Available

In this section, we discuss the results of the experiments we conducted for the travel time estimation problem when both the pickup and drop-off coordinates are known. We first apply the KNNRSD method to the nyc1 dataset and perform a sensitivity analysis to select the most appropriate parameters by comparing the AMAE. We do the same for RKNNRSD. We then compare the results of RKNNRSD (with the chosen parameters) with those of the other methods, viz. LR, ANN and SVR, as discussed in Sect. 2.2.

We perform a sensitivity analysis of the KNNRSD method for different values of K, window size and \(\varLambda\). We vary K = 5, 10, 15, 20, 25, 50 and 100, window sizes = 15 min, 30 min and 1 h, and \(\varLambda\) = 0.25, 0.5 and 0.75. From the discussion in Sect. 2.2.1, the appropriate value of \(\varLambda\) depends on the amount of concept drift. We could also have taken much smaller window sizes, say 2 min or 5 min, especially for the nyc1 dataset. However, for sparse datasets such as sfblack1 and porto, some windows contain no or very few observations. For example, with a 5 min window size, around \(25\%\) of the 5 min windows in the 1st hour of the sfblack1 dataset contain no observations. This causes problems for our comparison, since in some cases the model would not be updated for more than 5 min. Hence, we focus on window sizes of 15 min, 30 min and 1 h (although for most of the experiments in this paper we take the window size as 1 h). Table 2 describes the results of this experiment: KNNRSD gives the best prediction accuracy for \(K=25\) with \(\varLambda =0.5\) and \(window \;size=1 \; hour\). We therefore fix K at 25 for the rest of this paper, and fix the window size at 1 hour and \(\varLambda\) at 0.5 for the subsequent experiments with the different datasets.
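The sparsity argument can be checked directly by counting how many windows of a given length contain no pickups. The following is a minimal sketch. The timestamps are hypothetical seconds since the start of the hour, and the function name is an assumption.

```python
import numpy as np


def empty_window_fraction(timestamps_s, window_s, start_s=0, end_s=3600):
    """Fraction of windows [start + j*window, start + (j+1)*window) that contain no observations."""
    t = np.asarray(timestamps_s, dtype=float)
    t = t[(t >= start_s) & (t < end_s)]
    n_windows = int(np.ceil((end_s - start_s) / window_s))
    idx = ((t - start_s) // window_s).astype(int)
    counts = np.bincount(idx, minlength=n_windows)
    return float(np.mean(counts[:n_windows] == 0))


# Hypothetical sparse hour with 4 pickups
pickups = [0, 10, 700, 3500]
print(empty_window_fraction(pickups, window_s=5 * 60))   # 0.75 (9 of 12 five-minute windows empty)
print(empty_window_fraction(pickups, window_s=15 * 60))  # 0.5 (2 of 4 fifteen-minute windows empty)
```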

In Table 3, we compare KNN with spherical distance (i.e. KNNRSD) against conventional KNN with Euclidean distance (i.e. KNNRED). KNNRSD performs better than KNNRED in terms of prediction accuracy, although the difference between their AMAE values is small. We nevertheless proceed with the spherical distance metric (i.e. KNNRSD), since it has not been explored much in the intelligent transportation domain. A possible reason why the AMAE values of KNNRSD and KNNRED differ little is that trip distances in the nyc1 dataset are generally quite small. We expect KNNRSD to outperform KNNRED by a wider margin when trip distances are large.

We then perform a TOC(0.6,0.4,n) analysis (see Sect. 2.3.2 for more details) for RKNNRSD (with \(K=25\)) with different sampling rates (viz. \(5\%, 10\%, 20\%, 40\%, 60\%, 80\%\) and \(100\%\)) on the nyc1 dataset, as shown in Fig. 5. We found a sampling rate of \(20\%\) to be optimal. Thus, we use R25NNRSD20P (i.e. RKNNRSD with \(K=25\) and a sampling rate of \(20\%\)) for further study.
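The following is a minimal sketch of randomized KNN regression with a spherical distance, built on explicit assumptions. First, the distance between two trips is taken as the sum of the haversine (great-circle) distances between their pickups and between their drop-offs. Second, randomization is taken to mean drawing a uniform random subset of the current training window at the given sampling rate before the neighbour search. Sect. 2.2 gives the paper's exact definitions, which may differ. All names are hypothetical. With `sampling_rate=1.0` the sketch reduces to plain KNN regression with this distance, which is the KNNRSD analogue.

```python
import numpy as np

EARTH_RADIUS_KM = 6371.0


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between points given in degrees (vectorised)."""
    lat1, lon1, lat2, lon2 = (np.radians(np.asarray(v, dtype=float))
                              for v in (lat1, lon1, lat2, lon2))
    a = (np.sin((lat2 - lat1) / 2) ** 2
         + np.cos(lat1) * np.cos(lat2) * np.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * np.arcsin(np.sqrt(np.clip(a, 0.0, 1.0)))


def rknn_spherical_predict(train_X, train_y, query, k=25, sampling_rate=0.2, seed=None):
    """Predict travel time as the mean of the k nearest trips in a random subsample.

    train_X rows and `query` are (pickup_lat, pickup_lon, dropoff_lat, dropoff_lon) in degrees.
    """
    train_X = np.asarray(train_X, dtype=float)
    train_y = np.asarray(train_y, dtype=float)
    rng = np.random.default_rng(seed)
    n = len(train_X)
    m = min(n, max(k, int(round(sampling_rate * n))))  # keep at least k trips when possible
    idx = rng.choice(n, size=m, replace=False)
    X, y = train_X[idx], train_y[idx]
    d = (haversine_km(X[:, 0], X[:, 1], query[0], query[1])
         + haversine_km(X[:, 2], X[:, 3], query[2], query[3]))
    nearest = np.argsort(d)[:k]
    return float(np.mean(y[nearest]))


# Hypothetical window: five short Midtown trips (600 s) and five long trips (3000 s)
train_X = [[40.750, -73.990, 40.760, -73.980]] * 5 + [[40.600, -73.800, 40.900, -73.700]] * 5
train_y = [600.0] * 5 + [3000.0] * 5
q = [40.751, -73.991, 40.761, -73.981]
print(rknn_spherical_predict(train_X, train_y, q, k=3, sampling_rate=1.0))  # 600.0
```

Subsampling cuts the cost of the distance computation roughly in proportion to the sampling rate. This is the accuracy-versus-time trade-off that the TOC analysis in Fig. 5 tunes.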

Next, we study five methods, viz. (i) R25NNRSD20P, (ii) PR, (iii) ANN, (iv) LR and (v) SVR, for predicting the travel time using both the pickup and drop-off latitude and longitude as predictor variables. A 1 hour damped window model with \(\varLambda =0.5\) is used throughout. This study is carried out on four different datasets, namely nyc1, nyc2, porto and sfblack1, and the results are given in Table 4. We applied the PR model with degrees 2 and 3. PR with \(degree= 3\) is the best performer for the nyc1 and nyc2 datasets, whereas PR with \(degree= 2\) is the best performer for the porto and sfblack1 datasets. This holds both in terms of prediction accuracy and in terms of the trade-off criteria explained below.
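The following is a minimal batch sketch of polynomial regression on the four coordinates. It expands the predictors into all monomials up to the chosen degree, including interaction terms, and fits them by least squares. How the paper makes PR incremental over the damped windows is described in Sect. 2.2 and is not reproduced here. The function names and the synthetic data are hypothetical. A full expansion of four predictors has 15 terms at degree 2 and 35 at degree 3, which is one reason higher degrees quickly become costly.

```python
from itertools import combinations_with_replacement

import numpy as np


def poly_features(X, degree):
    """All monomials of the columns of X up to `degree`, including the constant term."""
    X = np.asarray(X, dtype=float)
    n, p = X.shape
    cols = [np.ones(n)]
    for d in range(1, degree + 1):
        for combo in combinations_with_replacement(range(p), d):
            cols.append(np.prod(X[:, combo], axis=1))
    return np.column_stack(cols)


def fit_pr(X, y, degree):
    """Least-squares coefficients of the full polynomial expansion."""
    A = poly_features(X, degree)
    coef, *_ = np.linalg.lstsq(A, np.asarray(y, dtype=float), rcond=None)
    return coef


def predict_pr(coef, X, degree):
    return poly_features(X, degree) @ coef


# Synthetic, already-scaled predictors with a known degree-2 relationship
rng = np.random.default_rng(1)
X = rng.uniform(-1.0, 1.0, size=(200, 4))
y = 300 + 50 * X[:, 0] - 20 * X[:, 1] * X[:, 3] + 10 * X[:, 2] ** 2
coef = fit_pr(X, y, degree=2)
print(poly_features(X, 2).shape)  # (200, 15)
print(np.max(np.abs(predict_pr(coef, X, 2) - y)))  # close to 0
```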

Among the methods reported in Table 4, the SVR method has the best prediction accuracy, followed by the R25NNRSD20P and PR methods, but the ANN and LR methods are much faster. In terms of the multiplicative trade-off criterion TC(n) (see Sect. 2.3.2), ANN and LR are the better performers. This is primarily because ANN and LR are much faster than the other three methods, even though their accuracy is much lower than that of R25NNRSD20P, SVR and PR. Among the additive trade-off criteria, TOC2(n) and TOC4(n) put more weight on computation time than on predictive accuracy, TOC1(n) and TOC3(n) put more weight on predictive accuracy, and TOC5(n) weights both equally (see Sect. 2.3.2 and Table 4).

If we give more weight to predictive accuracy in the additive trade-off criteria (i.e. TOC1(n) and TOC3(n)), R25NNRSD20P is the best performer for nyc1 and nyc2. However, if we give equal weight to time and predictive accuracy (i.e. TOC5(n)) or more weight to time (i.e. TOC2(n) and TOC4(n)), the PR method is the best performer for both the nyc1 and nyc2 datasets. For the porto and sfblack1 datasets, the SVR method is the best performer, followed by R25NNRSD20P, under all five additive trade-off criteria.

The choice among the trade-off criteria above depends largely on the problem at hand. In the context of this paper, we believe that substantial weight needs to be given to predictive accuracy, and so we chose TOC1(n) (i.e. \(TOC(w_1,w_2,n)\) with \(w_1=0.6\) and \(w_2=0.4\)) as our evaluation metric. We refer to TOC1(n) as TOC(n) for the rest of this paper.

An alternative way to compare the performance of these five methods is through stochastic dominance, as discussed in Sect. 2.3.3. We compute TOC(n) for each prediction horizon and then use these values to compute the ECDF of TOC(n), which is defined as

\[\hat{F}_t(y) = \frac{\#\{TOC(n)_i \le y\}}{t}\]

where t is the number of prediction horizons, \(TOC(n)_i\) is the value of TOC(n) in the \(i^{th}\) prediction horizon and \(\#\{TOC(n)_i \le y\}\) is the number of \(TOC(n)_i\) that are less than or equal to y.

Figures 6 and 7 show the ECDF plots for the five methods on the four datasets discussed above. For nyc1, Fig. 6a shows that the curves for SVR, LR and ANN lie to the right of the other two. The curve for R25NNRSD20P is leftmost, followed by that of PR. Since lower values of TOC(n) are better, R25NNRSD20P is the best performer, followed by PR. A similar conclusion holds for nyc2 (see Fig. 6b). For porto (see Fig. 7a), the curve for SVR lies to the left of that of R25NNRSD20P, so SVR is the better performer here. A similar conclusion holds for the sfblack1 dataset (see Fig. 7b). This lends further support to the insights obtained from Table 4.

4.2 Static Data Experiment

In this section, we perform an experiment to demonstrate how the prediction accuracy and the execution time vary with the training data size for three methods, viz. SVR, PR (with \(degree=3\)) and R25NNRSD20P, for travel time prediction when both the pickup and drop-off location coordinates are known. In this experiment, we take random samples of different sizes from the nyc1 dataset. The size of the training data windows varies from 262 to 70,000 across the three datasets nyc1, porto and sfblack1. We therefore consider sample sizes of \(250, 500, 750, 1{,}000, 2{,}000, \dots , 10{,}000, 12{,}000, 14{,}000, \dots , 20{,}000, 25{,}000, 30{,}000, \dots , 70{,}000\) for this experiment. Since we want to perform sequence learning, we sort the observations in each sample in increasing order of pickup timestamp. We take the first \(80\%\) of each sample as training data and the remaining \(20\%\) as test data. For example, for the sample of size 250, the first 200 observations are used to train the method and the last 50 observations are used for testing. We then apply PR, R25NNRSD20P and SVR and record the prediction accuracy, i.e. the Mean Absolute Error (MAE), and the total time taken by each algorithm for training and prediction. We then calculate the trade-off criterion TOC(n) and plot it against the training data size n for these three methods, as shown in Fig. 8. We also compare our proposed methods with Long Short-Term Memory (LSTM), one of the most popular recent methods for sequence learning.
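The chronological 80/20 split described above can be sketched as follows. The function name and the random timestamps are hypothetical. Sorting before splitting ensures that every training trip starts no later than every test trip, so the test set always lies in the "future" of the training set.

```python
import numpy as np


def chronological_split(pickup_times, train_frac=0.8):
    """Sort a sample by pickup timestamp and return (train_idx, test_idx) positions.

    The first `train_frac` of the time-ordered observations form the training set
    and the remainder form the test set.
    """
    order = np.argsort(np.asarray(pickup_times), kind="stable")
    n_train = int(round(train_frac * len(order)))
    return order[:n_train], order[n_train:]


rng = np.random.default_rng(42)
# Hypothetical sample of 250 pickup timestamps (seconds), in arbitrary order
times = rng.integers(0, 3600, size=250)
train_idx, test_idx = chronological_split(times)
print(len(train_idx), len(test_idx))  # 200 50
```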

LSTM is a Recurrent Neural Network (RNN) architecture that can model temporal sequences and their long-range dependencies better than conventional RNNs [68] (see [28] and [42] for more details on RNNs and LSTMs). LSTMs have been very successful in various applications that need sequence-to-sequence learning, such as language modeling [53], natural language processing [71] and handwriting generation [23]. However, to the best of our knowledge, no work in the intelligent transportation literature implements LSTMs for travel time prediction in a streaming data context. On a related problem, [85] used RNNs and LSTMs for real-time prediction of taxi demand.

We perform the static data experiment described above; the results are shown in Fig. 8. We compare the three methods PR, R25NNRSD20P and SVR with two LSTM architectures. The first is an LSTM with one hidden layer containing 1200 hidden nodes. This architecture was used in [85], and we refer to it as LSTM1 for the rest of this paper. The second contains two hidden layers with 100 hidden nodes each, and we refer to it as LSTM2. The other hyperparameters for both LSTMs are the Adam optimizer, batch size = 1 and epochs = 2. Both LSTMs were implemented using the Keras [10] library in Python. Figure 8 compares TOC(n) across sample data sizes for all five methods, namely PR, R25NNRSD20P, SVR, LSTM1 and LSTM2. LSTM2 performs better than LSTM1. In Fig. 9, we therefore compare the four methods PR, R25NNRSD20P, SVR and LSTM2 in terms of prediction accuracy, i.e. MAE (Fig. 9a), and time taken (Fig. 9b).

In Fig. 8, we can see that for large training data sizes the TOC(n) values of the SVR method are much higher than those of the R25NNRSD20P, PR and LSTM2 methods. The SVR method can be recommended for training data sizes below 5,000, since in this range its TOC(n) values are lower than those of the LSTM2, R25NNRSD20P and PR methods. For moderate training data sizes, i.e. between 5,000 and 35,000, R25NNRSD20P is the better performer, with TOC(n) values much lower than those of the LSTM2, SVR and PR methods. However, for large training data sizes, i.e. more than 35,000, the PR method is the best performer, with TOC(n) values much lower than those of the LSTM2, SVR and R25NNRSD20P methods.

In Fig. 9b, we can see that for large training data sizes the time taken by LSTM2 is much higher than that of the other methods. Fig. 9a shows that the MAE of LSTM2 decreases as the sample size grows, which is expected since deep learning methods generally improve with more data. However, the MAE of LSTM2 is consistently higher than that of SVR and R25NNRSD20P for almost all sample sizes.

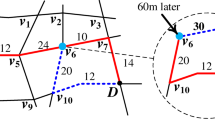

4.3 Continuous Prediction of Remaining Travel Time for Trajectory Data

In this section, we explore the problem of continuous prediction of the remaining travel time at different points on the trajectory of a vehicle when the drop-off coordinates are known. We discuss the performance of our proposed methods RKNNRSD and PR (with \(degree= 2\)), along with the SVR method, on the trajectory-level dataset sfblack2, details of which are given in Sect. 2.1.3. The dataset consists of location coordinates, i.e. latitude and longitude, recorded every 4 seconds for each trip over one day.

We intend to give continuous updates of the remaining travel time from the time of pickup until drop-off. We assume that the drop-off coordinates are known, since the passenger is expected to tell the driver the drop-off location at the time of pickup. A major update that may be useful both to the transport dispatch system and to the passenger is the remaining time to reach the destination. Here, we calculate the remaining travel time by treating the current latitude and longitude readings as the pickup point and applying the algorithms mentioned in Sect. 4, i.e. RKNNRSD, PR and SVR.

We apply and compare R25NNRSD20P, PR (with \(degree= 2\)) and SVR for the continuous prediction of remaining travel time. As before, we use a 1 hour damped window model with \(\varLambda =0.5\). For the remaining travel time problem, we obtain the accuracy vs. time trade-off criterion (TOC) for each trip ID L as follows:

\[TOC_L = w_1 \cdot \frac{1}{n_L - 1}\sum_{i=1}^{n_L - 1}\left|t_{Pred,i} - t_{Actual,i}\right| + w_2 \cdot T(n_L - 1)\]

where \(n_L\) is the number of recordings for trip ID L, \(t_{Pred,i}\) is the predicted remaining travel time at instant i, \(t_{Actual,i}\) is the actual remaining travel time, \(1 \le i \le n_L -1\), and \(T(n_L-1)\) is the total computation time (in seconds). Here, \(w_1>0\) and \(w_2>0\) are the weights and \(w_1 + w_2=1\). As discussed in Sect. 4, we give \(50\%\) more weight to accuracy than to computing time, which is reflected in the weights \(w_1=0.6\) and \(w_2=0.4\).
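The per-trip criterion can be sketched as follows. This assumes \(TOC_L\) takes the form \(w_1\) times the mean absolute error over the \(n_L - 1\) non-final recordings, plus \(w_2\) times the total computation time, by analogy with TOC(n). The final recording is the drop-off itself, so the actual remaining time at recording i is the drop-off timestamp minus the timestamp of recording i. The function names and the trip are hypothetical.

```python
import numpy as np


def actual_remaining_times(timestamps_s):
    """Actual remaining travel time at recordings 1..n_L-1 (the last recording is the drop-off)."""
    t = np.asarray(timestamps_s, dtype=float)
    return t[-1] - t[:-1]


def trip_toc(pred_remaining, timestamps_s, total_time_s, w1=0.6, w2=0.4):
    """Assumed TOC_L = w1 * mean |predicted - actual remaining time| + w2 * total computation time."""
    actual = actual_remaining_times(timestamps_s)
    pred = np.asarray(pred_remaining, dtype=float)
    if pred.shape != actual.shape:
        raise ValueError("need one prediction per non-final recording")
    return w1 * float(np.mean(np.abs(pred - actual))) + w2 * total_time_s


# Hypothetical trip with a GPS fix every 4 s (n_L = 4 recordings)
stamps = [0, 4, 8, 12]
pred = [14.0, 6.0, 4.0]                 # predicted remaining time at the first three fixes
print(actual_remaining_times(stamps))  # 12, 8 and 4 seconds remaining
print(trip_toc(pred, stamps, total_time_s=0.5))
```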

We define the ECDF of the TOC as

\[\hat{F}_p(k) = \frac{\#\{TOC_i \le k\}}{p}\]

where p is the number of trip IDs, \(TOC_i\) is the TOC of the \(i^{th}\) trip ID and \(\#\{TOC_i \le k\}\) is the number of \(TOC_i\) that are less than or equal to k.

Figure 10 shows the ECDF plots of \(TOC_L\) for the R25NNRSD20P, PR and SVR methods for continuous prediction of remaining travel time for each of the 1796 unique trip IDs in the prediction horizons of the trajectory dataset sfblack2. The ECDF of \(TOC_L\) for R25NNRSD20P is the leftmost, followed by those of PR and SVR. In other words, the \(TOC_L\) values of R25NNRSD20P are stochastically smaller than those of PR and SVR. Thus, the R25NNRSD20P method is the best performer for continuous prediction of remaining travel time for trajectory data.

4.4 Applications to Travel Safety: Real-time Speeding Alert System and Driver Suspected Speeding Scorecard

In this section, we propose an application of the proposed method R25NNRSD20P (see Sects. 2.2, 4 and 4.3) to travel safety. Speeding is one of the greatest contributory factors to traffic accidents [43, 57]. Speeding impairs the driver's ability to steer the vehicle properly on the road [43]. It therefore poses a serious risk to the driver, passengers, other vehicles and pedestrians. For more details on the relationship between speed and travel safety, please refer to [57] and [2].

We propose a Real-time Speeding Alert System (RSAS) in which we first predict the travel time for each trip using the R25NNRSD20P method proposed in this paper and then compare it with the actual time taken. We then compute a Speeding Index \((S_i)\) and determine a threshold for sending the driver a speeding warning notification. Such a notification can help drivers take corrective measures. The construction of the Speeding Index \((S_i)\) and the choice of threshold are explained below.

Let there be k trips \(T_1,..., T_k\). Consider trip \(T_i\).

Let the path of trip \(T_i\) be \((n_{i0}, n_{i1},..., n_{ij},..., n_{iJ_i}) = (n_{ij})_{j=0}^{J_i}\). Let the predicted time for traversing the path \((n_{ij})_{j=l-1}^{J_i}\) be \(E_l\) and the actual time taken be \(A_l\), where \(l=1,2,..., J_i\). The Speeding index \(S_i\) corresponding to trip \(T_i\) is defined as

We then look at the distribution of \(S_i\) where \(i = 1,..., k\) and base a cut-off or threshold on this distribution for sending the speeding warnings. In the context of this paper, we choose the \(99^{th}\) percentile of the distribution of \(S_i\) values as a cut-off but we also report the cut-off values corresponding to the \(95^{th}\) and \(99.73^{th}\) percentile of the distribution of \(S_i\). The threshold can be varied by the user based on the application context.
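Percentile-based thresholds can be calibrated on one day and applied to the next, as done below with sfblack2/sfblack3 and nyc1_day1/nyc1_day2. The sketch starts from already computed Speeding index values, because Eq. 10 itself is not reproduced here. The values and function names are hypothetical. NumPy's default linear interpolation in `np.percentile` matches R's default `quantile` type 7.

```python
import numpy as np

PERCENTILES = (95.0, 99.0, 99.73)


def speeding_thresholds(speeding_index, percentiles=PERCENTILES):
    """Cut-offs at the given percentiles of the calibration-day Speeding index values."""
    s = np.asarray(speeding_index, dtype=float)
    return {p: float(np.percentile(s, p)) for p in percentiles}


def flagged_share(speeding_index, threshold):
    """Share of trips (or medallions) whose Speeding index exceeds the threshold."""
    return float(np.mean(np.asarray(speeding_index, dtype=float) > threshold))


# Hypothetical Speeding index values: 1..100 on the calibration day, a shifted set the next day
day1 = np.arange(1, 101)
day2 = np.arange(11, 111)
thr = speeding_thresholds(day1)
print(round(thr[95.0], 2))               # 95.05
print(flagged_share(day1, thr[95.0]))    # 0.05
print(flagged_share(day2, thr[95.0]))    # 0.15
```

On the calibration day the flagged share matches the percentile by construction. On the next day it can drift in either direction, as with the 4.43% and 6% shares reported below for the 95th-percentile thresholds.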

As an illustration, we first apply R25NNRSD20P for continuous prediction of remaining travel time on the trajectory dataset sfblack2, as discussed in Sect. 4.3. We then calculate the Speeding index \(S_i\) for each of the 1796 unique trip IDs using Eq. 10. Figure 11 shows the density plot of the resulting Speeding index values. If we take the threshold as the \(99^{th}\) percentile of the \(S_i\) values, i.e. \(threshold= 187.15\), a warning notification needs to be sent to the drivers in \(1\%\) of cases. If we take the threshold as the \(95^{th}\) percentile, i.e. \(threshold= 94.92\), a warning notification needs to be sent in \(5\%\) of cases. The choice of threshold rests with the user (i.e. the taxi dispatch control system), which can decide based on the application context. In conventional control chart applications based on the normal distribution, the control limits are set at three standard deviations, which enclose \(99.73\%\) of the distribution [33, 63]. If we take the threshold as the \(99.73^{th}\) percentile of the \(S_i\) values, i.e. \(threshold= 245.87\), a warning notification needs to be sent in only \(0.28\%\) of cases.

To validate this further, we take the next day's trip details, i.e. the sfblack3 dataset (see Sect. 2.1.3). We calculate the Speeding index \(S_i\) for each of the 1892 unique trip IDs for all prediction horizons using Eq. 10. We then apply the previously generated thresholds for the \(99^{th}\), \(95^{th}\) and \(99.73^{th}\) percentiles. With \(threshold= 187.15\), a warning notification needs to be sent in \(0.53\%\) of cases. With \(threshold= 94.92\), it needs to be sent in \(4.43\%\) of cases. Finally, with \(threshold= 245.87\), it needs to be sent in \(0.31\%\) of cases. Figure 12 shows the speeding index for each trip ID with respect to the different thresholds. A warning notification needs to be sent to the medallions corresponding to the trip IDs whose Speeding index exceeds the chosen threshold, such as the \(95^{th}\), \(99^{th}\) or \(99.73^{th}\) percentile, as shown in Fig. 12. This control chart can be incorporated into apps that aid the taxi dispatch system or the cab aggregator in real-time monitoring.

4.4.1 Real-time Speeding Alert System for Non-Trajectory Level Dataset

We develop the Real-time Speeding Alert System (RSAS) for non-trajectory-level datasets as well. For example, for the nyc1 dataset we can aggregate the trips of a yellow taxi in a day by its "medallion number". Here, we take the nyc1_day1 dataset as discussed in Sect. 2.1.1. The procedure is the same as above: we first apply the R25NNRSD20P method for travel time prediction as discussed in Sect. 4. We then calculate the Speeding index \(S_i\) for each of the 6990 unique medallions for all prediction horizons using Eq. 10. Taking the threshold as the \(99^{th}\) percentile of the \(S_i\) values gives \(threshold= 306.52\), and a warning notification needs to be sent to the drivers in \(1\%\) of cases. Taking the \(95^{th}\) percentile gives \(threshold= 177.85\), with warnings in \(5\%\) of cases. Taking the \(99.73^{th}\) percentile gives \(threshold= 433.25\), with warnings in \(0.27\%\) of cases.

We validate this further using the next day's trip details, i.e. the nyc1_day2 dataset (see Sect. 2.1.1). We calculate the Speeding index \(S_i\) for each of the 7305 unique medallions using Eq. 10 and apply the previously generated thresholds for the \(99^{th}\), \(95^{th}\) and \(99.73^{th}\) percentiles. With \(threshold= 306.52\), a warning notification needs to be sent in \(1.39\%\) of cases. With \(threshold= 177.85\), it needs to be sent in \(6\%\) of cases, and with \(threshold= 433.25\), in \(0.53\%\) of cases.

4.4.2 Driver Suspected Speeding Scorecard

As a further application, we propose a Driver Suspected Speeding Scorecard (DSSS), in which we use the Real-time Speeding Alert System (RSAS) and map the trip IDs or medallion numbers to their respective drivers. We then score the drivers based on their suspected speeding instances, i.e. the number of instances in which a driver is suspected of exceeding the threshold for the Speeding index \(S_i\), as discussed in Sects. 4.4 and 4.4.1. We call it suspected speeding because a driver may occasionally complete a trip much earlier than predicted for reasons other than speeding. These scores can be used by the taxi dispatch system or the cab aggregator for the performance appraisal of driving partners. This can help in identifying and taking action against rash drivers, who pose a real threat to themselves and to their customers.

To illustrate, consider the nyc1_day2 dataset, for which the Speeding index \(S_i\) of each unique medallion was calculated in Sect. 4.4.1. With the threshold at the \(99^{th}\) percentile, i.e. \(threshold= 306.52\), 102 drivers/medallions (i.e. \(1.39\%\)) are suspected of speeding. The Driver Suspected Speeding Scorecard (DSSS) gives the details of these speeding instances, with the medallion IDs and the number of trips taken. Supervisors or managers in a taxi dispatch system or a cab aggregator can use these details for the performance appraisal of driving partners. On closer inspection, however, 43 of these 102 medallions undertook only one trip during the day, so only 59 of the 102 medallions made more than one trip.

Table 5 describes the Speeding Index and trip counts of the medallions suspected of speeding that made at least 10 trips over the day. These 11 medallions belong to high-frequency drivers who have been flagged for suspected speeding, and a warning should be sent to them. In Table 5, medallion-3F3BAFB2BE213B93C849663B195D89BE has the highest speeding index and medallion-70441B1EE93324621B443AA0142B111A has the maximum number of trips. In our opinion, these drivers should be appropriately warned against speeding.
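A scorecard like the one described in this section can be assembled from per-medallion Speeding index values and trip counts. The following is a minimal sketch. The record type, the function names and the medallion data are hypothetical, and Eq. 10 is assumed to have been evaluated already. `suspected_speeding_scorecard` ranks the flagged medallions and separates out the high-frequency ones, in the manner of Table 5. `suspected_speeding_counts` turns several monitoring periods into a per-medallion count of suspected speeding instances.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class MedallionDay:
    medallion: str
    speeding_index: float
    trips: int


def suspected_speeding_scorecard(records, threshold, min_trips=10):
    """Return (all_flagged, frequent_flagged), each sorted by descending Speeding index."""
    flagged = sorted(
        (r for r in records if r.speeding_index > threshold),
        key=lambda r: r.speeding_index,
        reverse=True,
    )
    frequent = [r for r in flagged if r.trips >= min_trips]
    return flagged, frequent


def suspected_speeding_counts(daily_records, threshold):
    """Number of monitoring periods in which each medallion exceeded the threshold."""
    return Counter(
        r.medallion for day in daily_records for r in day if r.speeding_index > threshold
    )


# Hypothetical medallions and values (not taken from nyc1_day2)
records = [
    MedallionDay("M001", 412.0, 14),
    MedallionDay("M002", 330.5, 1),
    MedallionDay("M003", 120.0, 22),
    MedallionDay("M004", 318.2, 11),
]
flagged, frequent = suspected_speeding_scorecard(records, threshold=306.52)
print([r.medallion for r in flagged])   # ['M001', 'M002', 'M004']
print([r.medallion for r in frequent])  # ['M001', 'M004']
```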

5 Conclusion

In this paper, we have examined the travel time prediction problem and suggested the best algorithm to use in different situations. (a) When the drop-off location is known and the training data size is moderate to large, the RKNNRSD and PR methods are the best choices; when the training data size is small (say, less than 5,000), the SVR method is the best choice. (b) For continuous prediction of remaining travel time along the trajectory of a trip, the RKNNRSD method is the best performer. In future work, we intend to explore a deep learning model that auto-tunes its hyperparameters in real time to give accurate predictions.

Finally, we propose a Real-time Speeding Alert System (RSAS) that can help drivers take corrective measures and thus can enhance travel safety. As a further application, we also propose a Driver Suspected Speeding Scorecard (DSSS) that scores the drivers based on their speeding violation and these scores can be used by the taxi dispatch system or the cab aggregator for the performance appraisal of the driving partners. This can help in identifying and taking action on rash drivers.

Data Availability Statement

All data used in this paper is public.

Abbreviations

- RKNNRSD: Randomized K-Nearest Neighbor Regression with Spherical Distance
- KNNRSD: K-Nearest Neighbor Regression with Spherical Distance
- PR: Polynomial Regression
- ANN: Artificial Neural Networks
- LR: Linear Regression
- SVR: Support Vector Regression
- RSAS: Real-time Speeding Alert System
- DSSS: Driver Suspected Speeding Scorecard

References

Aggarwal CC. Data streams: models and algorithms (advances in database systems). Secaucus: Springer-Verlag; 2006.

Archer J, Fotheringham N, Symmons M, Corben B. The impact of lowered speed limits in urban and metropolitan areas. Tech. Rep. 276. Monash University Accident Research Centre; 2008.

Babcock B, Babu S, Datar M, Motwani R, Widom J. Models and issues in data stream systems. In: Proceedings of the Twenty-First ACM SIGMOD-SIGACT-SIGART Symposium on Principles of Database Systems (PODS '02). New York: ACM; 2002. p. 1–16. https://doi.org/10.1145/543613.543615.

Bishop CM. Neural networks for pattern recognition. New York: Oxford University Press Inc; 1995.

Büttcher S, Clarke CLA, Cormack GV. Information retrieval: implementing and evaluating search engines. The MIT Press; 2010. ISBN 9780262026512.

Cao F, Ester M, Qian W, Zhou A. Density-based clustering over an evolving data stream with noise. In: Proceedings of the 2006 SIAM International Conference on Data Mining; 2006. p. 328–39.

Chang H, Tai Y, Hsu J. Context-aware taxi demand hotspots prediction. Int J Bus Intell Data Mining. 2010;5(1):3–18.

Chen H, Rakha HA. Real-time travel time prediction using particle filtering with a non-explicit state-transition model. Transp Res Part C Emerg Technol. 2014;43:112–26. https://doi.org/10.1016/j.trc.2014.02.008.

Chinnappa D. Ola takes big step to improve passenger safety. 2020. https://techstory.in/ [Accessed on: 10-May-2020]

Chollet F. Keras. 2015. https://github.com/fchollet/keras

Clever L, Pohl JS, Bossek J, Kerschke P, Trautmann H. Process-oriented stream classification pipeline: a literature review. Appl Sci. 2022;12:9094.

Cover T, Hart P. Nearest neighbor pattern classification. IEEE Trans Inf Theory. 1967;13(1):21–7.

Cristóbal T, Padrón G, Quesada-Arencibia A, F A, Blasio G, García C. A study on the behavior of clustering techniques for modeling travel time in road-based mass transit systems. Proceedings. 2019;31(1):18.

Davidson R. Stochastic dominance. 2nd ed. UK: Palgrave Macmillan; 2008.

Dheeru D, Karra Taniskidou E. UCI machine learning repository 2017. http://archive.ics.uci.edu/ml

Duan Y, Lv Y, Wang FY. Travel time prediction with LSTM neural network. In: 2016 IEEE 19th International Conference on Intelligent Transportation Systems (ITSC), 2016:1053–1058. https://doi.org/10.1109/ITSC.2016.7795686

Elhenawy M, Chen H, Rakha HA. Dynamic travel time prediction using data clustering and genetic programming. Transp Res Part C Emerg Technol. 2014;42:82–98. https://doi.org/10.1016/j.trc.2014.02.016.

Ellis B. Real-time analytics: techniques to analyze and visualize streaming data. 1st ed. Wiley Publishing; 2014. ISBN 1118837916.

Fisher NI, Lewis T, Embleton BJJ. Statistical analysis of spherical data. Cambridge: Cambridge University Press; 1993.

Fox J. Applied regression analysis and generalized linear models. SAGE Publications; 2008.

Gama J. Knowledge discovery from data streams. 1st ed. Chapman & Hall/CRC; 2010.

Goudarzi F. Travel time prediction: comparison of machine learning algorithms in a case study. In: 2018 IEEE 20th International Conference on High Performance Computing and Communications. IEEE; 2018. https://doi.org/10.1109/HPCC/SmartCity/DSS.2018.00232

Graves A. Generating sequences with recurrent neural networks. arXiv preprint arXiv:1308.0850 2013.

Grolemund G, Wickham H. Dates and times made easy with lubridate. J Stat Softw. 2011;40(3):1–25. http://www.jstatsoft.org/v40/i03/

Heiberger RM, Neuwirth E. R through Excel: a spreadsheet interface for statistics, data analysis, and graphics. 1st ed. Springer Publishing Company, Incorporated; 2009.

Henry. Uber GPS traces. GitHub; 2011. https://github.com/dima42/uber-gps-analysis/tree/master/gpsdata [Accessed on: 1-Sep-2015]

Herring R, Hofleitner A, Abbeel P, Bayen A. Estimating arterial traffic conditions using sparse probe data. In: Proceedings of the International IEEE Conference on Intelligent Transportation Systems, 2010:929–936.

Hochreiter S, Schmidhuber J. Long short-term memory. Neural Comput. 1997;9(8):1735–80.

Hofleitner A, Herring R, Bayen A. Arterial travel time forecast with streaming data: a hybrid approach of flow modeling and machine learning. Transp Res Part B. 2012;46(9):1097–122.

James G, Witten D, Hastie T, Tibshirani R. An introduction to statistical learning: with applications in R. Springer Publishing Company, Incorporated; 2014.

Jammalamadaka SR, Sengupta A. Topics in circular statistics. World Scientific Publishing Co. Pte. Ltd.; 2001. ISBN 9810237782.

Jeong R, Rilett LR. Bus arrival time prediction using artificial neural network model. In: Proceedings of the 7th International IEEE Conference on Intelligent Transportation Systems. IEEE; 2004:988–993.

Kantam R, Rao AV, Rao G. Control charts for the log-logistic distribution. Econ Quality Control. 2006;21(1):77–86.

Kumar BA, Jairam R, Arkatkar SS, Vanajakshi L. Real time bus travel time prediction using k-NN classifier. Transp Lett. 2019:362–372.

Kusner M, Tyree S, Weinberger KQ, Agrawal K. Stochastic neighbor compression. In: Jebara T, Xing EP, editors. Proceedings of the 31st International Conference on Machine Learning (ICML-14). JMLR Workshop and Conference Proceedings; 2014. p. 622–630.

Ladino A, Kibangou AY, de Wit CC, Fourati H. A real time forecasting tool for dynamic travel time from clustered time series. Transp Res Part C Emerg Technol. 2017;80:216–38. https://doi.org/10.1016/j.trc.2017.05.002.

Laha A, Putatunda S. Real time location prediction with taxi-gps data streams. Transp Res Part C Emerg Technol. 2018;92:298–322. https://doi.org/10.1016/j.trc.2018.05.005.

Laha AK, Putatunda S. Travel Time Prediction for GPS Taxi Data Streams. Indian Institute of Management Ahmedabad, Working Paper No. 2017-03-03 2017.

Lam HT, Diaz-Aviles E, Pascale A, Gkoufas Y, Chen B. (Blue) taxi destination and trip time prediction from partial trajectories. CoRR. 2015;abs/1509.05257.

Larsen GH, Yoshioka LR, Marte CL. Bus travel times prediction based on real-time traffic data forecast using artificial neural networks. In: 2020 International Conference on Electrical, Communication, and Computer Engineering (ICECCE), 2020:1–6. https://doi.org/10.1109/ICECCE49384.2020.9179382

Lee WH, Tseng SS, Tsai SH. A knowledge based real-time travel time prediction system for urban network. Expert Syst Appl. 2009;36(3):4239–47. https://doi.org/10.1016/j.eswa.2008.03.018.

Lipton ZC, Berkowitz J, Elkan C. A critical review of recurrent neural networks for sequence learning. arXiv:1506.00019v4 2015.

Liu C, Chen CL, Subramanian R, Utter D. Analysis of speeding-related fatal motor vehicle traffic crashes. Technical Report DOT HS 809 839. Washington, DC: National Highway Traffic Safety Administration (NHTSA); 2005.

Liu X, Zhu Y, Wang Y, Forman G, Ni LM, Fang Y, Li M. Road recognition using coarse-grained vehicular traces. Tech. rep. HP Labs; 2012.

Liu Y, Wang Y, Yang X, Zhang L. Short-term travel time prediction by deep learning: A comparison of different LSTM-DNN models. In: 2017 IEEE 20th International Conference on Intelligent Transportation Systems (ITSC), 2017:1–8. https://doi.org/10.1109/ITSC.2017.8317886

Luo W, Tan H, Chen L, Ni LM. Finding time period-based most frequent path in big trajectory data. In: Proceedings of the 2013 ACM SIGMOD International Conference on Management of Data. New York: ACM; 2013. p. 713–724. https://doi.org/10.1145/2463676.2465287

Mardia KV, Jupp PE. Directional statistics. Wiley series in probability and statistics. Chichester: Wiley; 2000. Previous ed. published as: Statistics of directional data. London: Academic Press; 1972.

Masters T. Practical neural network recipes in C++. San Diego: Academic Press Professional Inc; 1993.

Mendes-Moreira J, Jorge AM, de Sousa JF, Soares C. Comparing state-of-the-art regression methods for long term travel time prediction. Intell Data Anal. 2012;16(3):427–49. https://doi.org/10.3233/IDA-2012-0532

Meyer D, Dimitriadou E, Hornik K, Weingessel A, Leisch F. e1071: misc functions of the Department of Statistics, Probability Theory Group (formerly: E1071), TU Wien; 2015. https://CRAN.R-project.org/package=e1071

Moreira-Matias L, Gama J, Ferreira M, Mendes-Moreira J, Damas L. Predicting taxi-passenger demand using streaming data. IEEE Trans Intell Transp Syst. 2013;14(3):1393–402.

Moreira-Matias L, Gama J, Mendes-Moreira J, Ferreira M, Damas L. Time-evolving O-D matrix estimation using high-speed GPS data streams. Expert Syst Appl. 2016;44:275–88. https://doi.org/10.1016/j.eswa.2015.08.048.

Sundermeyer M, Schlüter R, Ney H. LSTM neural networks for language modeling. In: INTERSPEECH, 2012:194–197.

Murty MN, Devi VS. Pattern recognition: an algorithmic approach. Undergraduate topics in computer science. New York: Springer; 2011.

Navot A, Shpigelman L, Tishby N, Vaadia E. Nearest neighbor based feature selection for regression and its application to neural activity. In: Weiss Y, Schölkopf B, Platt JC, editors. Advances in Neural Information Processing Systems 18 (NIPS 2005, December 5–8, 2005, Vancouver, British Columbia, Canada). MIT Press; 2006. p. 996–1002.

NYC-TLC. TLC trip record data. 2014. [Accessed on: 17-Nov-2015]

Patterson T, Frith W, Small M. Down with speed: a review of the literature, and the impact of speed on New Zealanders. Accident Compensation Corporation and Land Transport Safety Authority; 2000. www.transport.govt.nz/research/Documents/ACC672-Down-with-speed.pdf

Phithakkitnukoon S, Veloso M, Bento C, Biderman A, Ratti C. Taxi-aware map: identifying and predicting vacant taxis in the city. Ambient Intell. 2010;6439:86–95.

Putatunda S. Practical machine learning for streaming data with Python: design, develop, and validate online learning models. Apress; 2021.

Qiu B, Fan W. Machine learning based short-term travel time prediction: numerical results and comparative analyses. Sustainability. 2021;13(13):7454. https://doi.org/10.3390/su13137454

R Core Team. R: a language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing; 2016. https://www.R-project.org/

Rahman MM, Wirasinghe S, Kattan L. Analysis of bus travel time distributions for varying horizons and real-time applications. Transp Res Part C Emerg Technol. 2018;86:453–66. https://doi.org/10.1016/j.trc.2017.11.023.

Rao S, Durgamamba A, Rao S. Variable control charts based on percentiles of size biased lomax distribution. ProbStat Forum. 2014;7:55–64.

Ratcliffe JG. Foundations of hyperbolic manifolds. 2nd ed. Graduate texts in mathematics, vol. 149. New York: Springer; 2006. https://doi.org/10.1007/978-0-387-47322-2

Regev S, Rolison JJ, Moutari S. Crash risk by driver age, gender, and time of day using a new exposure methodology. J Safety Res. 2018;66:131–40. https://doi.org/10.1016/j.jsr.2018.07.002.

Rice J, van Zwet E. A simple and effective method for predicting travel times on freeways. In: Proc. 4th IEEE Conf. Intelligent Transportation Systems, 2001:140–145.

Rupnik J, Davies J, Fortuna B, Duke A, Clarke S, Stincic C. Travel time prediction on highways. In: 2015 IEEE International Conference on Computer and Information Technology. Liverpool, UK; 2015:1435–1442.

Sak H, Senior A, Beaufays F. Long short-term memory recurrent neural network architectures for large scale acoustic modeling. In: Proceedings of Interspeech; 2014.

Shi Q, Abdel-Aty M. Big data applications in real-time traffic operation and safety monitoring and improvement on urban expressways. Transp Res Part C Emerg Technol. 2015;58:380–94. https://doi.org/10.1016/j.trc.2015.02.022.

Sivasankaran SK, Balasubramanian V. Exploring the severity of bicycle-vehicle crashes using latent class clustering approach in India. J Safety Res. 2020;72:127–38. https://doi.org/10.1016/j.jsr.2019.12.012.

Sutskever I, Vinyals O, Le QV. Sequence to sequence learning with neural networks. In: Proc. of NIPS’14, 2014:3104–3112.

Taghipour H, Parsa AB, Mohammadian AK. A dynamic approach to predict travel time in real time using data driven techniques and comprehensive data sources. Transportation Engineering. 2020;2.

Tiesyte D, Jensen CS. Similarity-based prediction of travel times for vehicles traveling on known routes. In: GIS, 2008:14:1–14:10.

Tsagris M, Athineou G. Directional: directional statistics. R package version 1.9; 2016. https://CRAN.R-project.org/package=Directional

Upadhyay H. Ola introduces real-time selfie authentication feature to curb proxy driving 2018. https://entrackr.com/2018/07/ola-real-time-selfie-proxy-driving/ [Accessed on: 10-May-2020]

Vapnik VN. The nature of statistical learning theory. New York: Springer-Verlag; 1995.

Veloso M, Phithakkitnukoon S, Bento C. Urban mobility study using taxi traces. In: Proceedings of the International Workshop on Trajectory Data Mining and Analysis, TDMA ’11, ACM, New York, NY, USA 2011:23–30. https://doi.org/10.1145/2030080.2030086.

Venables WN, Ripley BD. Modern applied statistics with S. New York: Springer; 2002.

Wang Y, Zheng Y, Xue Y. Travel time estimation of a path using sparse trajectories. In: Proceedings of the 20th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, KDD ’14, ACM, New York, NY, USA 2014:25–34. https://doi.org/10.1145/2623330.2623656

Wasserman L. All of statistics: a concise course in statistical inference. Springer Publishing Company, Incorporated; 2010.

Wibisono A, Jatmiko W, Wisesa HA, Hardjono B, Mursanto P. Traffic big data prediction and visualization using fast incremental model trees-drift detection (fimt-dd). Knowledge-Based Systems 2016;93(C):33–46. https://doi.org/10.1016/j.knosys.2015.10.028

Wickham H. ggplot2: elegant graphics for data analysis. New York: Springer-Verlag; 2009. http://ggplot2.org

Woodard D, Nogina G, Koch P, Racz D, Goldszmidt M, Horvitz E. Predicting travel time reliability using mobile phone GPS data. Transp Res Part C Emerg Technol. 2017;75:30–44. https://doi.org/10.1016/j.trc.2016.10.011.

Wu CH, Ho JM, Lee DT. Travel time prediction with support vector regression. IEEE Trans Intell Transp Syst. 2004;5(4):276–81.

Xu J, Rahmatizadeh R, Bölöni L, Turgut D. Real-time prediction of taxi demand using recurrent neural networks. IEEE Trans Intell Transp Syst. 2017;19(8):2572–81.

Zhang Y, Haghani A. A gradient boosting method to improve travel time prediction. Transp Res Part C Emerg Technol. 2015;58:308–24. https://doi.org/10.1016/j.trc.2015.02.019.

Acknowledgements

This is independent research carried out at IIM Ahmedabad. It has no connection with any work done by the first author (Sayan Putatunda) for any of his past or present industry employers. The authors thank the anonymous reviewers and the editor for their comments on an earlier version of the paper, which substantially improved it.

Funding

This research received no external funding.

Author information


Contributions

Here, we denote the authors as SP (Sayan Putatunda) and AKL (Arnab Kumar Laha). Methodology, SP and AKL; investigation, SP and AKL; writing (original draft preparation), SP; writing (review and editing), AKL and SP; supervision, AKL. All authors have read and agreed to the published version of the manuscript.