Abstract

Question generation (QG) from a given context paragraph is a demanding task in natural language processing for its practical applications and prospects in various fields. Several studies have been conducted on QG in high-resource languages like English, however, very few have been done on resource-poor languages like Arabic and Bangla. In this work, we propose a finetuning method for QG that uses pre-trained transformer-based language models to generate questions from a given context paragraph in Bangla. Our approach is based on the idea that a transformer-based language model can be used to learn the relationships between words and phrases in a context paragraph which allows the models to generate questions that are both relevant and grammatically correct. We finetuned three different transformer models: (1) BanglaT5, (2) mT5-base, (3) BanglaGPT2, and demonstrated their capabilities using two different data formatting techniques: (1) AQL—All Question Per Line, (2) OQL—One Question Per Line, making it a total of six different variations of QG models. For each of these variants, six different decoding algorithms: (1) Greedy search, (2) Beam search, (3) Random Sampling, (4) Top K sampling, (5) Top- p Sampling, 6) a combination of Top K and Top-p Sampling were used to generate questions from the test dataset. For evaluation of the quality of questions generated using different models and decoding techniques, we also fine-tuned another transformer model BanglaBert on two custom datasets of our own and created two question classifier (QC) models that check the relevancy and Grammatical correctness of the questions generated by our QG models. The QC models showed test accuracy of 88.54% and 95.76% in the case of correctness and relevancy checks, respectively. Our results show that among all the variants of the QG, the mT5 OQL approach and beam decoding algorithm outperformed all the other ones in terms of relevancy (77%) and correctness (96%) with 36.60 Bleu_4, 48.98 METEOR, and 63.38 ROUGE-L scores.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The increased research interest in question generation has been driven primarily by advancements in the effectiveness of deep learning. Writing effective questions that focus on key ideas takes effort and time. According to a systematic review, the time it takes to create these questions might be greatly reduced by using the effective technology known as Automatic Question Generation (AQG) [1]. A case study performed in Germany by Steuer et al. [2] shows that the generated questions had a positive effect on the participants’ learning outcomes and seemed to benefit their understanding. Though there are QG systems in resource-rich languages like English, there are very few systems that cover resource-poor languages. Bengali is a language that needs attention to ensure the opportunity to get efficient natural language understanding and generation systems to keep up with technological advancement around the world. This motivated and encouraged us to continue on this complex mission to provide a Question Generation model to generate questions in an efficient manner and achieve technological advancement for the Bengali language.

The question-generating problem comes in many forms, but they all have the same fundamental structure: given a selection of text as input, a question-generation system should create a pertinent, thought-provoking, and grammatically accurate question [3]. Among them, two forms are the most popular in Question Generation tasks. One is Answer Aware Question Generation—these models call for a certain answer span to be specifically chosen in the input context, typically utilizing highlight tokens. It is undesirable when there are no short lists of important key terms because this makes producing questions much more difficult [4]. The other one is Answer Agnostic Question Generation—where the model does not require a specifically selected answer span in the input and only generates questions from the given context. Within an Artificial Intelligence (AI) model, the ability to ask meaningful questions provides evidence of comprehension [5]. As a result, the task of QG is significant in the context of AI [6]. We investigate the viability of applying answer-agnostic question-generation models (models that do not require explicit answer span selection) in the Bangla language. The introduction of big crowdsourced extractive question answering (QA) datasets in recent years, like SQuAD, has made neural models the primary method for creating conventional interrogative-type questions. Enhancing reading comprehension and critical thinking abilities is one of the main goals of question-generating research [2]. Question Generation (QG) tasks can also be helpful in an educational context. Professors and educators might create quizzes more quickly, and students could remain interested in the content using a QG system that automatically makes pertinent questions from the course materials [4].

Question generation is essential in information retrieval and conversation-based information retrieval tasks. Finding specific information inside a given context can be made easier with the help of appropriate questions generated depending on a user’s inquiry [7]. Developing new content and its supplementation is another area where question-generating has found application [8]. Content producers can generate inquiries, evaluations, and educational resources more quickly by automatically generating questions. For neural QG, it is usual to formulate the problem as answer-aware, which means that given a context passage C = c0,…, cn and an answer span inside this context A = ck,…, ck+l, train a model to maximize P(Q|A, C), where Q = c0,…, cm are the tokens in the question (C = Context, Q = Question and A = Answer) [4]. The initial question was created by someone, according to the extractive QA dataset., served as the reference in the evaluation of these models using the metrics BLEU/ROUGE/METEOR for n-gram overlap [9,10,11]. The transition to answer-agnostic models brings new difficulties. According to Vanderwende [12], choosing what is and is not important is in and of itself a crucial effort. Without well-selected response spans to serve as a guide, an answer-agnostic system has to decide what is and is not substantial enough to raise a question. The Bangla language has QG models of the Answer Aware form, but there is no QG model of an Answer Agnostic form. In answer-aware question generation, the answer to the question has to be given with the context as an input to the mode. But if there was an Answer agnostic form of Question Generation in the Bangla language, then the AQG system would become more efficient and less time-consuming as the model would find the questions itself and there would be no requirement for giving answers for the questions too with the context as model input. So, we decided to conduct a study based on an Answer Agnostic Question Generation in the Bangla language.

Question Generation has numerous practical uses, including instructional technology, natural language comprehension, conversational agents, and information retrieval systems [13]. These systems can assess a user’s understanding, engage them in interactive learning, or assist in retrieving specific information from an enormous corpus of literature by creating questions. However, experimenting with Question Generation tasks for low-resource languages is quite challenging as it includes unique challenges and goals relevant to low-resource circumstances. To cope with this challenge, multilingual models like mT5, a multilingual variant of the T5 model, have been introduced. mT5 is a massively multilingual model pre-trained using fresh Common Crawl-based data that includes 101 languages [14]. Another model, BanglaT5, a Transformer model for the Bangla language, was trained using a 27.5 GB clean corpus of Bangla data. BanglaT5 outperforms in six challenging conditional text generation tasks, surpassing some multilingual models by up to 9% absolute gain and 32% relative gain [15]. We aim to finetune the pre-trained mT5, BanglaT5, and BanglaGPT models to create a robust Answer Agnostic Question Generation model to predict efficient, relevant, and grammatically correct questions in the Bangla language from a given context. Training such language models requires massive data, which is challenging to find for low-resource languages like the Bangla language. We used the SQuAD_bn dataset, published by Bhattacharjee et al. [18], a Bangla question-answering dataset curated and translated to Bangla from SQuAD 2.0 [16] and TyDI-QA [17] datasets. As it is mainly a Question Answering dataset and we are using it for Question Generation, we had to preprocess the dataset to make it feasible for the Question Generation task. We had to preprocess the data differently for three different models as their input format differs. BanglaT5 and mT5 use similar formats, but BanglaGPT has a different format for input, so we had to preprocess it differently for that. We have thoroughly discussed our data preparation process, model fine-tuning steps, experiments with various architectures, their evaluation, and how they fulfill our requirements of an efficient automatic Answer Agnostic Question Generation task. Overall, the key contribution to this research can be summarized as follows:

-

An automatic finetuned answer-agnostic question generation model that will predict questions from the given context in the Bangla language.

-

Two custom new datasets to create classification models for evaluating the prediction performance of the Question Generation model.

-

A classification model that will evaluate the generated question from the model and classify it into two categories in Bangla—প্রাসঙ্গিক (Relevant)/ অপ্রাসঙ্গিক (Irrelevant).

-

Another classification model that will evaluate the generated question from the model and classify it into two categories—সঠিক (Grammatically Correct)/ ভুল (Incorrect).

The paper is arranged in 10 sections and some sections have multiple subsections. The rest of the paper is organized as follows—related works and literature review is shown in Sect. 2. Here we discussed two forms of Question Generation tasks and some important works related to the topic. Section 3 contains discussion on our data collection and preprocessing techniques. Section 4 presents the methodology and description of the system, followed by the description of evaluation metrics used for the research in Sect. 5. Section 6 contains discussion on experimental results and Human evaluation of the model’s output has been stated in Sect. 7. “Practical Implementation” in Sect. 8 describes how the outcome of this research can be implemented with an interactive web interface. Our research findings has been discussed in Sect. 9. The last section concludes the paper with the direction of future works.

2 Related Works

2.1 Question Generation in NLP

The earliest days of question generation in natural language processing (NLP) can be traced back to the early 2000s when researchers began investigating ways for autonomously generating questions from the text. One of the earlier approaches relied on shallow parsing and template-based question production. These systems used predetermined question templates and pattern-matching techniques to extract relevant information from a text and generate questions accordingly. As the area developed, researchers began to investigate more advanced approaches for generating questions. In 2017, Du et al. [19] sought to generate questions for reading comprehension, they were the first to use a sequence-to-sequence approach to produce questions. Later in 2018, Radford et al. released the first version of the GPT model using the Transformer [20]. Although GPT-1 was not as well-known and noteworthy as its successor models, such as GPT-2 and GPT-3, it laid the groundwork for subsequent developments and paved the way for the tremendous progress made in later iterations. Then researchers started fine-tuning pre-trained transformer models like BERT and T5, for the Question Generation task, demonstrating improved performance.

In the Answer-Aware Question Generation task, the model is given the passage and the answer to it and is then asked to come up with a question. From a given context and answers, it attempts to produce questions that can be answered from the given passage. In resource-rich languages like English, the first Answer-Aware Question Generation (AAQG) model was proposed in research done by Sun et al. [21]. Later in 2018, Kim et al. [22] presented a model for question creation that integrates answer separation into the sequence-to-sequence framework. Their model is based on an RNN-based seq-to-seq learning model, the RNN encoder-decoder architecture. These two works laid the groundwork for seq2seq-model (sequence-to-sequence) based Question Generation tasks. Though transformers provide various benefits over seq-to-seq models. They are better at managing long-range dependence because of the self-attention mechanism. In 2019, Klein and Nabi [23] fine-tuned a transformer model, GPT2 of OpenAI to generate questions using SQuAD 1.1 dataset. Chan and Fan used the BERT transformer model for the Question Generation and Question Answering task. They provided three neural architectures based on BERT for the following task. The first one is a straightforward BERT implementation demonstrating the shortcomings of utilizing BERT directly for text generation. So, they offered two more models by sequentially restructuring their BERT employment to take knowledge from previously decoded outputs. Their models are trained and tested using the SQuAD 1.1 dataset. Experiment findings reveal that their best model produces state-of-the-art performance, increasing the BLEU 4 score of the existing best models from 16.85 to 22.17 [24]. Later on, other researchers explored the domain and used fine-tuned Transformer models to generate questions. In the Bangla language, Ruma et al. [25] used T5-based models like mT5-small, mT5-base, and BanglaT5 (A version of T5 trained on Bangla corpus) for the answer-aware question generation task. By far, it is the only work in the Bangla language for Question Generation.

2.2 Answer-Agnostic Question Generation

Answer-Agnostic Question Generation is a form of Question Generation task where the questions are predicted by the model from a given context or document without knowing the precise answers. There are a few works in this form of Question Generation even in resource-rich languages like English. In 2019, Scialom et al. [26] proposed a Question Generation task by studying several strategies, such as copy mechanisms, placeholders, and contextual word embeddings, to deal with out-of-vocabulary words. They used a transformer model for Answer-Agnostic Question Generation on the SQuAD dataset. In 2021, Lamba [28] conducted a study on Answer-Agnostic Question Generation, using the PolicyQA dataset published by Ahmad et al. [27] with seq-to-seq and the T5 model. In 2022, Dugan et al. [4] conducted a feasibility study on increasing the acceptability of generated questions by Answer-Agnostic models where he suggested giving a summary written by humans in place of the original text to Answer-Agnostic models which increases the acceptability rate of the output.

In resource-poor languages, there are a few noteworthy works for the Answer-Agnostic Question Generation task. In 2019, Kumar et al. [30] conducted research for cross-lingual question generation, where they used a model published by Vaswani et al. [29] using their custom dataset called HiQuAD. It was focused on Hindi and Chinese languages though they also used English data from the SQuAD dataset. In 2022, Wiwatbutsiri et al. [31] did research on Answer-Agnostic Question Generation in the Thai Language, where they proposed a method utilizing a single pre-trained model to produce synthetic data using an additional technique. The current research on answer agnostic QG has been depicted in Table 1. As per our knowledge, there is no work on Answer-Agnostic Question Generation in the Bangla language, which motivated us to do this study for the Bangla language.

3 Data Collection and Preparation

3.1 Dataset Description

3.1.1 Dataset for Question Generation Model

We used the squad_bn dataset by “csebuetnlp” for training our Question Generation Models [32]. squad_bn is a QA dataset created by translating SQuAD 2.0 [16] and TyDI-QA [17] using a state-of-the-art English-to-Bengali translation model. Train, test, and validation split of the following dataset contains 118 k, 2.5 k, and 2.5 k rows respectively. The complete SQUAD_2.0 dataset was translated for the training set. Selected instances (instances for which there were no answers to the given questions) from the TyDI-QA dataset were distributed to the test and validation set. The dataset contains five features where “id”, “title”, “context” and “question” are string type features. The ‘answer’ feature is a dictionary. An instance of the dataset is shown below (Table 2):

3.1.2 Dataset for Question Checking Model

We created two custom datasets for two different QC models: one for relevancy checking and another for grammatical correctness checking using an existing Bangla RC-based QA dataset named “UDDIPOK” [33]. UDDIPOK dataset was created by collecting Bangla passages from various Bangla Articles, Fiction, Biographies, etc. Questions and answers against each context were annotated cautiously taking into account real-life questions and answers. So, the dataset contains three features named ‘passage’, ‘question’, and ‘answer’ all of which are string type features in the Bengali language. The dataset has 3636 instances altogether. Table 3 demonstrates an example of the dataset.

For QC models, we removed the “Answer” column of the following dataset and added a new column named Label. The existing questions of the “UDDIPOK” dataset were Labeled as “সঠিক” (correct) and “প্রাসঙ্গিক” (relevant). We then added multiple other questions from the same contexts which were labeled as “ভুল” (incorrect) and “অপ্রাসঙ্গিক” (irrelevant). So, both the datasets contain two classes and we kept the number of instances from each class almost equal for the sake of better performance of the QC models. These two datasets are named as “Correctness_QC” and “Relevancy_QC”. “Correctness_QC” contains 1793 number of “সঠিক” and 1788 number of “ভুল” labeled instances making it a total of 3581 instances whereas “Relevancy_QC” contains 1302 number of “প্রাসঙ্গিক” and 1300 number of “অপ্রাসঙ্গিক” labeled instances making it a total of 2603 instances. Tables 4 and 5 demonstrate examples of the following datasets.

3.2 Data Pre-processing

3.2.1 Preprocessing for QG

We experimented with three different models which are BanglaT5, mT5-base, and, gpt2-bengali for question generation. Each of these models requires a particular format of data to be trained with for QG tasks. Gpt2-bengali is a GPT2-like model that performs language modeling; thus, we transformed the entire squad_bn dataset into a continuous body of text to finetune the gpt2-bengali model. The context and questions were separated by delimiters in between for the model to understand the difference. Special tokens such as ‘</s>’ for BanglaT5 and Mt5 and <|endoftext|> tokens were added at the required positions to indicate the end of the string to the models. We explored two different data formatting techniques for the representation of multiple questions per context to finetune the models. Figure 1 demonstrates the different data formats that we used to finetune the QG models.

AQL (All questions per Line): All questions from a particular context are brought together in a single data instance separated by (1) “প্রশ্ন:” (Question) delimiter in case of gpt2-bengali and (2) “<sep>” token in case of BanglaT5 and mT5 models (shown in Fig. 1). This significantly reduces the number of training data and results in faster training. One problem with this setup in the case of gpt2-bengali could be an increase in the input sequence length resulting in the model not being able to get to the earlier tokens. However, this issue has been handled by removing larger length contexts and questions from the dataset.

OQL (One question per Line): A single question per context is placed in a single data instance causing duplication of context data. This increases the size of the dataset significantly resulting in an increased training duration. For gpt2-bengali, number delimiters “১.” was used and BanglaT5 and MT5 did not require any delimiters in this format (see Fig. 1).

3.3 Data Reduction

Squad_bn dataset contains a lot of questions against particular contexts that cannot be answered from the context. These questions are considered irrelevant and thus, we removed all the elements of the dataset that had unanswerable questions. Some of the contexts contained “…” symbols and missing information. Rows containing such data were also removed. Doing so significantly reduced the amount of data. The max sequence length of the gpt2-bengali model is 768. T5-like models are able to handle inputs of any sequence length according to the original paper [34]. However, a larger sequence length demands greater memory requirements making the training process computationally expensive. Thus, we set the maximum length of the context (input) to be 512, the maximum length of the question (target) to be 45 in OQL, and 256 in AQL format keeping the sum ≤ 768 tokens for BanglaT5 and Mt5. For gpt2-bengali, the maximum length is set to 768. However, there were some contexts in our dataset where the number of words exceeds the maximum length. As we are using the default tokenizer, we need to find the maximum number of words each tokenizer can handle considering the above-mentioned setup. BanglaT5, MT5, and gpt2-bengali all use variants of sub-word tokenizers. Sub-word tokenizers split uncommon words into subwords resulting in multiple tokens from a single word. Commonly used words are kept intact and converted into a single token. Due to this behavior of the tokenizer, the number of words in the input sequence must be less than the number of max length set, otherwise, the tokenizer won’t be able to tokenize the whole input sequence resulting in missing information. An example below proves the claim:

We thoroughly analyzed this behavior for all three tokenizers of the three models and filtered the dataset based on a common number of words every tokenizer can handle. The optimal context length is found to be 175 words and the optimal target length for OQL and AQL format is 17 and 95 words respectively. After filtering 61,289 numbers of data remain in OQL format and In AQL format the number of data is 18,045. These datasets were then split into a train and validation set using the train_test_split function of scikit-learn taking test_size = 0.1(10%). The bar plot in Fig. 2 demonstrates the distribution of data in each format after splitting.

The test data was created by taking 100 unique short-length contexts from the validation split of the squad_bn dataset for making the output analysis and human evaluation of the outputs smoother and easier.

3.3.1 Pre-processing for QC

For QC models, we fine-tuned BanglaBert. The passage and question are concatenated with the “[SEP]” token in between for the model to be able to differentiate them during training. Figure 3 illustrates the data format for QC models.

The max_length was set to 512 and no reduction of data was required as all the contexts and question lengths of the dataset were short enough to be handled by the default tokenizer of the BanglaBERT model.

4 Methodology

This section provides a thorough description of our work, the transformer architectures that we used to train, the dataset that we trained the models with, and the different types of pre-trained models that we used.

4.1 Transformer Model Architecture

The most common sequence transduction models are built on large recurrent or convolutional neural networks with an encoder and a decoder. An attention mechanism is used to connect the encoder and the decoder in the best models. The Transformer [29] is a revolutionary simple network architecture based purely on attention mechanisms, with no repetition or convolutions. Research on two machine translation projects demonstrates that these models outperform others in terms of quality in addition to being more parallelizable and quick training period. Transformer models adhere to an encoder-decoder architecture and handle sequential inputs using fully connected layers and layered attention mechanisms for both the encoder and the decoder. An input sequence of symbol representations (a1, …, an) is converted to a sequence of continuous representations y = (y1, …, yn) by the encoder. The decoder is provided with z and returns a symbol output sequence (b1, …, bm) one element at a time. Autoregressive behavior at each step allows the model to use previously created sequences as additional inputs for the next.

4.1.1 Encoder and Decoder Stacks

Both the encoder and decoder are made up of N = 6 resembling layers, with 2 sublayers in the encoder and 3 sublayers in the decoder for each layer. A fully connected feed-forward network comes after a multi-head attention mechanism as the second layer. The decoder has a third sublayer in addition for performing multihead attention over the encoder outputs. Around each sublayer, a residual connection and layer normalization were used. LayerNorm(x + Sublayer(x)) represents the output of each sublayer and the function Sublayer(x) is employed by the sublayers itself. All sublayers and embedding layers provide outputs of dimension size, dmodel = 512 to support the residual connections. Decoder stacks self-attention sublayer is changed to refrain positions from attending to adjacent positions. This masking along with the offset of output embedding by one position ensures the dependency of predictions for position p on known outputs at positions < p.

Keys and queries in input are of dimension dk and values are of dimension dv. In Scaled dot product attention, to determine the weight of the values, divide each of the query's dot products with all of the keys by \(\sqrt{{d}_{k}}\) and apply a SoftMax function. Queries, keys, and values are condensed together in three matrices Q, K, and V respectively. The output matrix is calculated as follows:

Scaled dot product attention is similar to commonly used dot product attention except for \(\frac{1}{\sqrt{{d}_{k}}}\) scaling factor.

4.1.2 Multi-Head Attention

To dimensions dk, dq, and dv, respectively, queries, keys, and values are linearly projected for n times using different learned linear projections. Then, in parallel, the attention function is applied to each of these projected permutations of queries, keys, and values to provide output values for dimension dv. By combining these numbers and projecting them once again, the ultimate result is achieved. The model can simultaneously attend to information at different positions from distinct representation subspaces:

Here, the projections are parameter matrices \(W{Q}_{j} \in {R}^{{d}_{{\text{model}}} * {d}_{k}}\), \(W{K}_{j} \in {R}^{{d}_{{\text{model}}} * {d}_{k}}\), \(W{V}_{j} \in {R}^{{d}_{{\text{model}}} * {d}_{k}}\) and \({W}^{O}\in {R}^{{nd}_{v} * {d}_{{\text{model}}}}\).

4.2 Types of Transformers

A conventional approach of first pretraining and then fine-tuning transformer models is being followed for various NLP tasks. The goal of pretraining is to build a general model of language understanding on a large amount of unlabeled text so that it can be further fine-tuned on specific tasks such as Text generation, Question Answering, Dialogue generation, machine translation, Question Generation, etc. Masked Language Modeling (MLM) and Causal Language Modeling (CLM) are two well-known pre-training schemes. In MLM a certain percentage of words are kept hidden and these hidden words are supposed to be predicted by the model based on the other words of the sentence. Representation of the masked word is learned based on words at its left and right making the model bidirectional in nature. Example: BERT, T5. Example of MLM and CLM has been depicted in Fig. 4.

In CLM the model predicts the masked token in a sentence considering only the words at its left or right which makes the model unidirectional. According to the figure above the model is supposed to predict the masked token based on the words that occur to its left and depending on the prediction of the model against the actual label, cross-entropy loss is calculated and model parameters are trained through backpropagation, e.g., GPT2. Among these two, CLM is preferred for Text generation, Question answering, and Question Generation [35].

4.3 Tokenizers

The tokenizers play a crucial part in comprehending human language. Word, subword, or character-based tokenization techniques can be utilized. Different kinds of tokenizers assist the machines in processing the text in various ways. Each one is superior to the other in some way. OpenAI used the BPE tokenizer to train their GPT-2 model. The important thing is to utilize BPE tokenizers since they can tokenize any term in any language without utilizing the unknown token because they have been trained on massive corpora. BPE (Byte Pair Encoding) is a sub-word encoding. The most frequent byte pairings get combined into a single token, added to the list of tokens, and the frequency of occurrence of each token will be recalculated. It is a straightforward data compression method that repeatedly substitutes a single, unused byte for the most common pair of bytes in a sequence [36]. The subword-based tokenizer is the most often used of these tokenizers. Most state-of-the-art NLP models use this tokenizer. Subword-based tokenization is a middle ground between word-based tokenization and character-driven tokenization. Addressing the issues with character-driven tokenization and word-based tokenization is the main objective (very large vocabulary size, various OOV tokens, and inconsistent meaning of very similar words) (exceedingly extended sequences, and tokens that aren't as important individually). The commonly used terms are not divided into smaller subwords by the subword-based tokenization methods. This generally takes care of not treating different forms of words as different. The uncommon words are instead divided up into smaller, more readable subwords. For instance, “অক্ষ” and “অক্ষর” are divided into “অক্ষ” and “র” but “অক্ষ” is not separated. In doing so, the model is assisted in understanding how the word “অক্ষর” is derived from the word “অক্ষ” which has the same root word but significantly distinct connotations.

Another popular tokenizer that many models use is a sentence-piece tokenizer. Sentencepiece basically splits words into subwords during the tokenization process. After splitting the words into subwords, the separated words get mapped into numerical representation via a lookup table. A sample lookup table is shown in Fig. 5.

Sentencepiece comprises 4 different components: Normalizer, Encoder, Decoder, and Trainer. Normalization is the process of standardizing words so that they follow a suitable format. Sentence-piece changes words, characters, or letters into equivalent NFKC Unicode. Sentence-piece not only does direct training on raw sentences but also implements two main algorithms—BPE and the unigram language model. It doesn’t depend on language-specific pre-processing or post-processing.

The Encoder is the process of pre-processing and post-processing whereas the Decoder is implemented as an inverse operation of the Encoder in sentence-piece, i.e., Decode(Encode(Normalize(text))) = Normalize(text). Sentence-piece tokenizer named this specific processing design ‘lossless tokenization’, in which the encoder's output has all the necessary data to reproduce the normalized text. In this procedure, even whitespace is considered as a symbol and firstly gets tokenized with a meta symbol “_” (U + 2581), and then the input gets tokenized into an arbitrary subword sequence. For example, ‘হ্যালো_ওয়ার্ল্ড।’ in raw Bengali will be tokenized as ‘[হ্যা][লো][_][ওয়ার]['্][ল্ড][।]’. After all, sentence-piece offers many benefits in comparison to other implementations. The benefits SentencePiece brings in comparison to other implementations is summarized in the table below (Table 6).

4.4 NLP Architecture Description

4.4.1 Pre-trained Models

BanglaT5: BanglaT5 [15] is a seq2seq (sequence to sequence) transformer model pre-trained on the “Bangla2B+” dataset that contains 27.5 GB of Bangla data with 5.25 million documents crawled from 110 popular Bangla sites. The model was pretrained with a “span correction” objective that has been proven to be the optimal choice in encoder-decoder architecture. The model is trained to reconstruct spans of tokens that have been replaced with mask tokens. The model has the architecture of the T5-base model comprising 12 layers, 12 attention heads, 768 hidden size, 2048 feed-forward size with GeGLU activation with a batch size of 65,536 tokens for 3 million steps on a v3-8 TPU instance on Google Cloud Platform. As per hyperparameters, ‘Adam’ was used as the optimizer with a learning rate of 3e−4. Inverse square root was used as the learning rate decay mechanism and the liner warmup steps was 10,000.

BanglaT5 is the best performer among all existing multilingual models by a significant margin on Banglanlg (Bangla natural language generation) tasks. The margin is 4% on average over mT5, the second-best model (Fig. 6). It outperforms the other multilingual models in all Banglanlg tasks except MTD (multi-turn dialogue generation). The performance increase in QA (Question Answering) is up to 9.5% over others (Table 7). The model shows promising computation and memory efficiency due to its reduced size, almost half of the other multilingual models except indicbart, and is able to train 2–2.5 × faster compared to other multilingual models [15].

mT5: mT5 [14] is a multilingual text-to-text transformer model that serves as the multilingual version of the T5 architecture. It was pretrained using mC4—an extended version of the C4 dataset that includes 101 languages. The pretraining objective of the model was to construct a hugely multilingual model adhering to the T5 model as much as possible. The model architecture is closely similar to T5v1.1 which is an improved version of T5 with GeGLU activation, pretraining with no dropout on unlabeled data only, and scaling both dff and dmodel instead of just dff in larger models. The model consists of 12 layers, 12 attention heads, 768 hidden size, 2048 feed-forward size. Performance comparison of BanglaT5 and mT5 on various Banglanlg task is demonstrated in Table 7.

BanglaBERT: BanglaBERT [18] is a BERT-based natural language understanding model pretrained on the “Bangla2B+” dataset—the same dataset used to pre-train BanglaT5. The model was pretrained with Replaced token detection objective (RTD). A generator and a discriminator model are trained concurrently in RTD. Input sequences with a certain percentage of masked tokens (15% for Banglabert) are fed to the generator and the generator has to predict the masked tokens using the other portion of the input. Tokens drawn from the generator’s output distribution replace the masked tokens for corresponding masks and the discriminator predicts whether or not the replaced tokens are from the original sequence. The model has the same architecture as Electra-base comprising a 12-layer encoder, 12 attention heads, 768 hidden size, 768 embedding size, generator-to-discriminator ratio \(\frac{1}{3}\), 3072 feed-forward size, with 256 batch size for 2.5 M steps on a v3-8 TPU instance on Google Cloud Platform. BanglaBERT used ‘Adam’ as the optimizer with learning rate of 2e−4 and liner warmup steps of 10,000. BanglaBERT yields the best performance among all multilingual models on all the Bangla NLU tasks, achieving a 77.09 BLUB (Bangla Language Understanding Benchmark) [18] score. BanglaBERT also shows superiority in memory and computation efficiency. The model takes 2–3.5 × less time and occupies 2.4–3.33 × less memory than sahajBERT, a monolingual model which is smaller than BanglaBERT.

Gpt2-bengali: Gpt2-bengali [37] is a decoder-only transformer model pre-trained on the Bangla portion of the mC4 dataset. This pre-trained model shares the same configuration as the GPT2 model. It has 12 layers, 12 attention heads, 768 embedding size, and 768 hidden size. The model showed an evaluation loss of 1.45 and an evaluation perplexity of 3.141 after being trained for 250 k steps. The training time was approximately 3 days.

4.4.2 Fine-Tuning

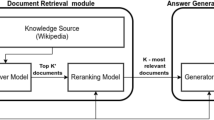

To fine-tune the pretrained models, we transformed the data into the required format for the QG task. We added ‘<sep>’ as sep_token to the default tokenizer and resized the token_embeddings size of the models. <sep> tokens helped the models to differentiate between the questions against each unique context in AQL format. ‘</s>’ token at the end of every element of the ‘context’ and ‘questions’ column indicates the end of the string and is absolutely necessary for training mT5 and BanglaT5 models. Elements of the ‘context’ column served as input encodings, and questions served as targets. tokenizer.batch_encode_plus was used to get the input encodings (‘input ids’, ‘attention mask’) and target_encodings(‘decoder_input ids’, ‘decoder_attention mask’). Then, the tokenized train, test, and validation dataset were set to ‘torch’ format with columns = [‘input_ids’, ‘decoder_input_ids’, ‘attention_mask’, ‘decoder_attention_mask’] for training using PyTorch and hugging face’s trainer class. After training, we saved the best model (lowest validation loss) and the tokenizer to use for question generation on test data. Figure 6 demonstrates the entire Finetuning process for QG model.

For evaluating generated questions, we fine-tuned BanglaBERT on two different datasets to determine if a question is contextually relevant and grammatically correct or not. So, our one fine-tuned model is for determining contextual irrelevance and the other one is for determining grammatical mistakes. [SEP] token in the preprocessed data allows the model to differentiate between context and question. tokenizer () function was used to get the input encodings (‘input ids’, token_type_ids, ‘attention mask’). Labels ({‘সঠিক’: 1, ‘ভুল’: 0}, {‘প্রাসঙ্গিক’: 1, ‘অপ্রাসঙ্গিক’: 0}) were also passed with the input encodings during training. For training, PyTorch and a hugging face’s trainer class were used just like before. After training, the best model was saved in terms of lowest validation loss and later on, used for label prediction on test data. Figure 7 demonstrates the entire Finetuning process for QC models.

5 Evaluation Metrics and Loss Function

5.1 Bleu

A statistic called BLEU (Bilingual Evaluation Understudy) is used to assess the effectiveness of machine translations which is now also used in several nlp tasks [9]. The shortness penalty and n-gram overlap are mathematically combined to define the BLEU score. The brevity penalty depends on how long the candidate translation is compared to how long the reference translation is. The number of n-grams in the candidate translation that is also present in the reference translation determines the extent of the n-gram overlap. The modified n-gram precision function is defined in Eq. 1 as follows

In Eq. (1), \({{\text{Count}}}_{{\text{clip}}}(n{\text{-gram}}\)) is the maximum number of times an n-gram appears in any single reference translation, and \({\text{Count}}\left(n{{\text{-gram}}}^{{{\prime}}}\right)\) is the total count of all n-grams in the candidate translation.

5.2 METEOR

METEOR (Metric for Evaluation of Translation with Explicit Ordering) is a metric for assessing the output of machine translation [11]. At first, it computes unigram precision (\(P\)) and unigram recall (\(R\)). Then it combines the computed precision and recall via a harmonic mean and computes \({F}_{{\text{mean}}}\). As precision, recall and \({F}_{{\text{mean}}}\) are based on unigram matches, another term is computed called \({\text{Penalty}}\) to account longer matches. Then it computes the final METEOR score by multiplying \({F}_{{\text{mean}}}\) with \((1-{\text{Penalty}})\).

In Eq. (2), \(m\) represents the number of unigrams in the candidate translation also found in reference, \({w}_{t}\) represents the number of unigrams in candidate translation and in Eq. (3), \({w}_{r}\) stands for the number of unigrams in reference translation. In Eq. (4), \(P\) is precision and \(R\) is recall. Along with the typical exact word matching, Meteor also offers a number of additional elements that are not present in other measures, like stemming and synonym matching.

5.3 Rouge

ROUGE (Recall-Oriented Understudy for Gisting Evaluation) is a set of metrics and software programs used to rate machine translation and automatic summarization programs in NLP [10]. A recall-focused statistic called the ROUGE score compares an automatically generated summary or translation against a set of reference summaries. The ROUGE score is, in its most basic form, the ratio of the matching words to the total number of words in the reference phrase. The ROUGE metric has a number of variants, including ROUGE-N, ROUGE-L, and ROUGE-S. Each variation uses a different equation to determine the score. For instance, Eq. (7) can be used to determine the ROUGE-1 score, which assesses the degree of unigram overlap between the system and reference summaries:

where \({{\text{Count}}}_{{\text{match}}}\left({\text{gram}}1\right)\) is the maximum number of co-occurring unigrams between the candidate summary and any reference summary, and \({\text{Count}}({\text{gram}}1\)) is the number of unigrams in the reference summary.

6 Results and Analysis

6.1 Experiment Results of QC Models

QC models that we trained for the evaluation purpose of QG models fall under the text classification domain. Thus, we finetuned a pre-trained NLU transformer model to serve the purpose. We named the models as Question_Classifier_IC (Correctness check) and Question_Classifier_IR (Relevancy check). Accuracy, Recall, F1 score, and Precision are the most commonly used evaluation metrics for classifiers. The datasets used to train the models were balanced. Therefore, we primarily focused on Accuracy as the performance measurer of the models. Training vs validation loss curve for each epoch also gives an insight into the underfitting and overfitting of the model. The models were finetuned for 10 epochs. Figure 8 demonstrates the training and validation loss of each epoch for both QC models.

From the following curve, it is observed that the training loss continued to decrease till the last epoch for both cases. However, the validation loss decreases until epoch 2 and epoch 3 in (a) and (b), respectively, and then increases. This behaviour denotes that the model is evolving into a function that more accurately captures patterns found in the training data rather than patterns found in the validation data, and so the overfitting. The increase–decrease cycle is slightly greater in (b) than (a). Therefore, the epoch with the lowest validation loss is chosen as the best performing model, yielding the highest accuracy in both cases. In case of (a) and (b), the least validation loss of 0.259 and 0.163 is attained at epochs 2 and 3, respectively. These models were then used to predict the labels of the test datasets-completely unfamiliar to the models. The models showed satisfactory test accuracy of 95.7% in the relevancy check and 88.5% in the correctness check. One possible reason of this performance difference between the two finetuned models is the data quality and data diversity. Transformer models performance on downstream tasks such as text classification highly depends on data. The number of data instances of the “Correctness_QC” is greater than that of “Relevancy_QC”. However, the higher data quality and the data being more representative of “Relevancy_QC” dataset has led to a greater test accuracy. Figure 9 illustrates weighted F1, weighted precision, weighted recall, macro f1, macro precision, macro recall, and accuracy scores of the models on the test set.

Each node of the figure corresponds to the value of the metric in its direction. The node’s position indicates the scores of the metrics. For example, the weighted F1 of the red model is very slightly extended from the 5th circle indicating that the score is slightly greater than 0.88, 0.885% in actual. The interactive use of the chart allows one to visualize the exact scores. In any case, from the following curve, it can be concluded that every metric score of the blue model is approx. 96%. The accuracy and weighted precision of the red model is approx. 89% whereas macro F1, weighted F1, weighted recall, and macro recall are approx. 88%. With these scores in line, the Question_Classifier_IC predicted 317 examples correctly and 41 incorrectly. The correct and incorrect number of predictions of Question_Classifier_IR is 249 and 11 respectively. Prediction results are shown in Fig. 10.

6.2 Experiment Results of QG Models

6.2.1 Model Generation

We have implemented six prominent decoding strategies at the current time for question generation using our fine-tuned models. These are:

-

(1)

Greedy decoding: Greedy decoding always selects the token with the highest probability as the next token. The generated words based on the context are suitable for greedy decoding. However, the model quickly begins to duplicate itself! Another significant disadvantage of greedy decoding is that it overlooks high-probability words concealed beneath a low-probability word.

-

(2)

Beam search: Beam search keeps the most probable num_beams of hypotheses at each time step and minimizes the risk of losing high-probability words hidden underneath low-probability ones. It selects the hypothesis that has the highest overall probability. It always provides an output sequence of higher probability compared to greedy but does not guarantee the desirable output. It also has the drawback of repeating itself.

-

(3)

Random sampling: Random sampling picks the next word based on its conditional probability distribution randomly.

-

(4)

Top K sampling: Top K sampling filters the K number of the next words and redistributes the probability mass among those filtered ones. GPT2 used this decoding technique and proved to be a success for open-end generation. One drawback of this approach is not adjusting the number of filtered words dynamically.

-

(5)

Top p sampling: Top p sampling picks the fewest number of words whose overall probability is higher than the probability p rather than choosing from K most likely words.

-

(6)

Combination: Theoretically, combining both top K and top P should yield the most optimal results.

Though top p and top k should outperform the other decoding algorithms by generating more humanlike sequences, recent studies involving human evaluation have shown that the beam search can yield better outputs. Decoding configurations that were used to achieve the predicted questions are illustrated in Table 8.

The max_length for all the decoding strategies was set to 45 for OQL and 256 for AQL data format. A comparison between generated questions among all three models for all the decoding techniques is shown below. For AQL setup, the best generated question is reported.

Greedy

mT5(OQL): প্রস্তর যুগকে কত পর্যায়ে ভাগ করা হয়? (Stone age is divided into how many stages?)

mT5(AQL): কোন যুগকে তিনটি পর্যায়ে ভাগ করা হয়? (Which age is divided into three stages?)

BanglaT5(OQL): প্রস্তর যুগকে কতটি পর্যায়ে ভাগ করা হয়? (Stone age is divided into how many stages?)

BanglaT5(AQL): প্রস্তর যুগকে কতটি পর্যায়ে ভাগ করা হয়? (Stone age is divided into how many stages?)

gpt2-bengali(OQL): যারা প্রথম পরিচিত হয়েছিল তাদের নাম কি ছিলনা? (What was the name of those who first met?)

gpt2-bengali(AQL): পৃথিবীর কোন পরিবর্তনকে বলা হতো পাথর যা পূর্বের যে কাজগুলোকে পুনরাবৃত্তি করেছিল, সেগুলরোর মধ্যবর্তমানে পরেরটাকে? (Which change in the world was called a stone that repeated the actions of the former, the latter in the middle of each one?)

Beam search

mT5(OQL): রাশিয়ার একমাত্র সরকারি ভাষা কী? (What is the only official language of Russia?)

mT5(AQL): রাশিয়ার একমাত্র সরকারি ভাষা কী? (What is the only official language of Russia?)

BanglaT5(OQL): মলদোভার দ্রান্সনিস্ত্রিয়ার সরকারী ভাষা কী? (What is the official language of Moldova Transnistria?)

BanglaT5(AQL): মলদোভার ক্রান্সনিস্ত্রিয়ার সরকারী ভাষা কি? (What is the official language of Moldova Transnistria?)

gpt2-bengali(OQL): কোন দেশে প্রতিষ্ঠা করা হয়েছিল, যেটা সাধারণভাবে ব্যবহৃত হয় না? (Which country was established, which is not commonly used?)

gpt2-bengali(AQL): কোন দেশগুলোতে বিশেষভাবে উল্লেখযোগ্য সংখ্যক রিপোর্ট করা হয়েছিল, যেটা সাধারণত ব্যবহৃত হয়নি? (In which countries was a particularly significant number reported, which was not commonly used?)

Random sampling

mT5(OQL): মুন জেইন কখন দক্ষিণ কোরিয়ার নতুন রাষ্ট্রপতির পদে নির্বাচিত হয়েছিলেন? (When was Moon Jae-in elected as the new president of South Korea?)

mT5(AQL): দক্ষিণ কোরিয়ার বর্তমান রাষ্ট্রপতি কে? (Who is the current president of South Korea?)

BanglaT5(OQL): ২০১৭ সাল থেকে দক্ষিণ কোরিয়ার প্রেসিডেন্ট কে? (Who is the president of South Korea since 2017?)

BanglaT5(AQL): ২০১৭ সালে কে প্রেসিডেন্ট নির্বাচিত হন? (Who was elected president in 2017?)

gpt2-bengali(OQL): জেইন কখন দক্ষিণ কোরিয়ার বর্তমান রাষ্ট্রপতি হবেন? (When Jain will be the current president of South Korea?)

gpt2-bengali(AQL): রাষ্ট্রপতির মেয়াদ কতদিন? (How long is the term of the president?)

Top K sampling

mT5(OQL): যে-ব্যক্তি আনুষ্ঠানিকভাবে ঘানাতে বাস করে কোন সময়ে সেখানে সীমাবদ্ধ হয়েছিল? (At what point was a person officially resident in Ghana restricted there?)

mT5(AQL): প্রথম আদমশুমারিতে ঘানাতে জনসংখ্যা কত? (What is the population of Ghana in the first census?)

BanglaT5(OQL): ১৯৬০-এর দশকে, এই অঞ্চলের জনসংখ্যা কত ছিল? (In the 1960s, what was the population of this region?)

BanglaT5(AQL): প্রথম আদমশুমারিতে কতজন লোক ছিল? (How many people were there in the first census?)

gpt2-bengali(OQL): াড্ডি নামটি কোন আইনের আওতায় পড়ে? (What law does the name Addy fall under?)

gpt2-bengali(AQL): তাদের জনসংখ্যা কত? (What is their population?)

Top p sampling

mT5(OQL): ভারতের রাজ্য উত্তরপ্রদেশের সাথে কতটি জেলা বিভাগ? (How many district divisions with the state of Uttar Pradesh in India?)

mT5(AQL): কোন রাজ্য উত্তরপ্রদেশের জেলাশাসক নিযুক্ত করে? (Which state appoints District Magistrate of Uttar Pradesh?)

BanglaT5(OQL): ভারতে কতগুলো জেলা আছে? (How many districts are there in India?)

BanglaT5(AQL): ভারতে কতগুলো জেলা আছে? (How many districts are there in India?)

gpt2-bengali(OQL): িবি রাজ্য কোন জেলাতে অবস্থিত? (B is located in which district of the state?)

gpt2-bengali(AQL): বিভাগীয় জেলাগুলো কিসের অন্তর্ভুক্ত? (What are the divisional districts?)

Combination of top k and top p sampling

mT5(OQL): চট্টগ্রামের মোট এলাকা কি? (What is the total area of Chittagong?)

mT5(AQL): চট্টগ্রাম জেলার মোট আয়তন কত? (What is the total area of Chittagong district?)

BanglaT5(OQL): এই শহরে কোন্ অবস্থান? (What location is this city?)

BanglaT5(AQL): বিশ্বের সবচেয়ে বড় স্থানীয় জেলা কি? (What is the largest local district in the world?)

gpt2-bengali(OQL): জেলার মোট দেশজ উৎপাদন কত? (What is the total domestic production of the district?)

gpt2-bengali(AQL): আয়তনের দিক থেকে বাংলাদেশের দ্বিতীয় বৃহত্তম জেলা কী? (What is the second largest district of Bangladesh in terms of size?)

From the model’s outputs, it is noticeable that the generations by gpt2-bengali in OQL are either meaningless or contain missing characters. In AQL format, sampling techniques generated meaningful questions. Different decoding techniques seem to do well for different data formats in the case of mT5 and banglaT5 models. However, it can be said as an initial impression of the above observation that the greedy and beam search works well with these two models rather than the other ones.

6.2.2 Relevancy and Correctness

We used our finetuned QC models to predict the labels of the questions generated by the model in terms of contextual relevance and grammatical correctness for both OQL and AQL formats. Tables 9 and 10 show the prediction results of the QC models on generated questions.

The data from Table 9 demonstrate that in both OQL and AQL format, mT5 beam search yields the highest correctness percentage which is 96% and 91.5% respectively. It is also observed that in OQL format mT5 achieved greater scores for all decoding techniques except the combination sampling. However, in AQL format BanglaT5 achieved better scores compared to mT5 for every algorithm except beam search and random sampling. The performance of gpt2-bengali is poor compared to the other two with the highest correctness percentage of 72.4% for random sampling in AQL format. Now if we look at the results of Table 10, it is evident that in both OQL and AQL format, mT5 outperformed the rest models with relevance scores of 77% and 67.7% respectively which is quite impressive considering the Answer agnostic setup. mT5 achieved better results and differed by a great margin in both formats for all decoding algorithms in relevancy as well. BanglaT5 could not keep up with mT5 and yielded the highest relevancy score of 28% for beam search in OQL. gpt2-bengali continues to maintain its legacy of poor performance here as well. Though gpt2-bengali performed better than BanglaT5 for top k, top p, and their combination sampling, it achieved 0% relevancy for beam search and greedy search in OQL format.

6.2.3 Optimal context length

Since mT5 performed better than the rest in terms of both relevance and correctness in both data formats, we decided to find the optimal context length for this model. The test data that we created from the squad_bn dataset contained short-length contexts, 5–50 words. So, we evaluated the performance of our best model on different context lengths based on word numbers in the context. We categorized context length into three different variants:

-

(1)

Short length context: 5–50 words.

-

(2)

Medium length context: 51–100 words.

-

(3)

Long length context: 101–150 words.

The reason behind keeping the context lengths between 5–150 words is that we filtered the training data by 175 words. We scraped 50 medium-length and 50 long-length contexts from HSC (equivalent to A level) Bangla ICT book and created two different test sets. We then generated questions on those two test sets and evaluated the quality of the questions using our QC models. The comparison of relevancy and correctness scores among different length contexts for the mT5 model is stated in Table 11.

According to Table 11, our selected model performs the best for short-length contexts containing 5–50 words. Surprisingly, beam and greedy decoding provide identical results (94% and 92% correctness and 46% and 24% relevancy) for both medium and long-length contexts. However, the sampling decoding differs in scores for different lengths. Overall, it can be concluded that the shorter the context is, the better the model will perform. Dugan et al. [4] demonstrated that providing human-written summaries of context rather than the actual context resulted in significantly higher levels of relevance (61% → 95%) for Answer Agnostic QG models. That justifies the optimal context length to be short.

6.3 Comparison with Existing Models

We chose the best performing model based on the results from the QC models. mT5 and beam decoding achieved the highest scores in both contextual relevance and grammatical correctness even with different context lengths. Therefore, we picked it as our best model and compared its performance with the existing QG models. For comparison, we calculated Blue_1, Bleu_2, Blue_3, Blue_4, METEOR, ROUGE_L using the nlg_eval [38] library function. Comparison of the mentioned metrics among our best model and existing QG models are shown in Table 12.

Table 12 shows that our model achieved greater scores in all the metrics. As our approach for question generation was answer agnostic, most of the predicted questions by the model and reference questions of the dataset were from different answer domains. Therefore, we had to annotate the reference questions ourselves for the generated questions. We cautiously annotated the reference questions so that it does not get biased. So, this might have led to a better representation of reference questions boosting the evaluation scores. Another reason might have been the context length. We evaluated the scores on the questions generated using optimal context length for our model. These higher scores were obtained as a result of the model's realistic and closely worded questions. Annotated reference texts were verified by one of our professors in Bangla.

7 Human Evaluation

Language understanding is a very difficult task in NLP. Though we finetuned a state-of-the-art transformer model to evaluate context relevance and the produced questions’ grammatical accuracy, human evaluation is still required for further analysis of the question quality because no model is 100% perfect in language understanding. Bangla is a resource-poor language and thus it is possible for the models to be more error-prone in predictions made in this language. Therefore, despite showing satisfactory test accuracy we analyzed the outputs given by our QC models. We labeled the generated questions ourselves and compared them with the prediction labels of the QC models. We verified our labeled data by a professor in Bangla for justification. We checked for three things in human evaluation:

-

1.

Relevance—whether the question can be answered from the context or not

-

2.

Correctness—whether the question is grammatically correct or not

-

3.

Meaningfulness—whether the question makes sense or not.

We did this analysis on the questions generated by the best-performing model and decoding technique to calculate the error percentage made by the QC models as well as analyze the absurd generation percentage by the QG model. As per our findings, 10% of questions are grammatically incorrect and 20% of questions are irrelevant among the generated questions. So, based on human evaluation, the relevance of the generated questions is about 80% and the correctness of generated questions is 90%. A total of 6% of questions are meaningless as well as irrelevant (meaningless questions cannot be relevant). Despite the human evaluation results being very close to the QC models results, we further analyzed the error rate made by the QC models to validate our evaluation process. Question_classifier_IC made 7% wrong predictions which is justified considering the test accuracy to be approximately 88%. However, Question_classifier_IR made 33% wrong predictions despite showing 96% test accuracy on a different test set. So, we dug down further to analyze this behavior and explored that among the 33% wrong predictions of Question_classifier_IR, 18% of relevant questions were predicted as irrelevant, and 15% of irrelevant questions were predicted as relevant. The error in the opposite label prediction is almost 50%. So, we checked the questions which were actually relevant but the model predicted them as irrelevant and discovered a pattern in the model’s prediction behavior. Questions that had answers in the first bracket and questions that had answers in percentage were mistakenly predicted to be irrelevant. Example of such predictions are shown below.

Actual Label: প্রাসঙ্গিক (Relevant)

Predicted Label: অপ্রাসঙ্গিক (Irrelevant)

Actual Label: প্রাসঙ্গিক (Relevant)

Predicted Label: অপ্রাসঙ্গিক (Irrelevant)

Actual Label: প্রাসঙ্গিক (Relevant)

Predicted Label: অপ্রাসঙ্গিক (Irrelevant)

Actual Label: প্রাসঙ্গিক (Relevant)

Predicted Label: অপ্রাসঙ্গিক (Irrelevant)

This behaviour can be the direct cause of the training data that we fed to finetune the model. Our training data lacked these sorts of context and question pairs. No specific patterns for vice-versa wrong predictions were found though. No models are expected to work 100% perfectly, though the error rate of the Question_classifier_IR is a bit higher than expected but the score found via QC_models deviate from the result of human evaluation by only 3%. Thus, it can be concluded that mT5, beam_decoding and OQL data format performs the best for short-length contexts in Bangla answer agnostic Question generation task.

8 Practical Implementation

To demonstrate the practical implementation, we have created a simple and easily understandable user interface for our Question generation system using gradio. Users can choose between the two-best scoring QG models (mT5-base and BanglaT5) and options for generating single (OQL) or multiple (AQL) questions. Users are also allowed to tweak some hyperparameters to analyze the hyperparameter’s effect on generation behavior. Figures 11 and 12 delineates the case of single and multiple question generation using our interface.

9 Discussion

Dataset plays a very important role in better performance of finetuned transformer models irrespective of task domain. These fine-tuned model predictions are highly biased towards training data and thus data quality and data preparation are crucial factors for achieving better results. Training with Low-quality data can confuse the model and result in unexpected generation behaviour. Data size is also important along with data quality because the more quality data the models are trained with the stronger the knowledge base it achieves for predictions. In QG tasks, ensuring proper tokenization is very important because if very long contexts are passed as inputs, then truncation by the tokenizer to max_length can lead to missing information and the corresponding question of the context might be derived from that particular part of the context. This can confuse the model during the training process and affect generation behaviour. Therefore, keeping context lengths that are properly tokenized under the max_length limitation of the tokenizer and removing any longer contexts from the dataset should be the optimal choice for tokenization.

10 Conclusion

The question generation task is a system where the context is given to the model and the model will generate relevant questions from the given context. The automatic question generation task has gained some attention in NLP over the past few years. Transformer-based question generation has many uses, including enhancing conversational agents, automated customer support systems, and educational platforms. It can be used to create interactive and interesting user experiences while also helping to automate the process of generating questions.

The dataset that has been used for this project was a translated version of the Squad dataset. Translations from English to Bangla are not always correct and sometimes even cause the addition of redundant words or meaning changes. Thus, for this research, a problem was the lack of a large quality pure Bangla QA dataset. Other limitations that this research faced were resource limitations and financial constraints. The models that have been finetuned for this particular task are very large models in size and require a tremendous amount of computational resources and power. Since all the experimentations were done in the Google Colab free version, limitations were faced for playing around with hyperparameters such as batch_size, learning_rate, epoch, gradient_accumulation_steps, etc. We also faced an obstacle in doing more experimentation with the code since running the code in the free version wasted a large amount of time. Also, the paid version of Google Colab was not used from the very beginning due to financial constraints. The colab pro+ was only used for the final code run for this project. So, for future work, pure Bangla texts from various sources can be collected to create a moderate-sized QA Bangla dataset. Instead of fine-tuning pre-trained models, creating a Bangla Question generation model from scratch using encoder-decoder architecture and self-attention would provide better results and that can be used in different circumstances too. Adding some more diverse data instances in the Relevancy_QC dataset can be another future work as it has fewer data instances than expected. We will label them cautiously to maintain data quality and create a less error-prone Question_Classifier_IR model for evaluation purposes. We also want to deploy it into a web application to make it more accessible in the future.

Data availability

Data associated with this study has been deposited at https://github.com/Abdur-Rahman-Fahad/QC_Datasets.

References

Kurdi G, Leo J, Parsia B, Sattler U, Al-Emari S (2020) A systematic review of automatic question generation for educational purposes. Int J Artif Intell Educ 30:121–204

Steuer T, Filighera A, Tregel T, Miede A (2022) Educational automatic question generation improves reading comprehension in non-native speakers: a learner-centric case study. Front Artif Intell 5:900304. https://doi.org/10.3389/frai.2022.900304

Emerson J (2023) Transformer-based multi-hop question generation. In: Proceedings of the AAAI conference on artificial intelligence, vol. 37, no. 13, 16206–16207. https://doi.org/10.1609/aaai.v37i13.26963.

Dugan L, Miltsakaki E, Upadhyay S, Ginsberg E, Gonzalez H, Choi D, Yuan C, Callison-Burch C (2022) A feasibility study of answer-agnostic question generation for education. Findings of the Association for Computational Linguistics: ACL 2022:1919–1926

Nappi JS (2017) The importance of questioning in developing critical thinking skills. Delta Kappa Gamma Bulletin 84(1):30

Lopez LE, Cruz DK, Cruz JCB, Cheng C (2020) Transformer-based end-to-end question generation. arXiv preprint arXiv:2005.01107

Zamani H, Dumais S, Craswell N, Bennett P, Lueck G (2020) Generating clarifying questions for information retrieval. In: Proceedings of the web conference 2020, WWW '20, 418–428. Association for Computing Machinery, New York, USA. https://doi.org/10.1145/3366423.3380126

Khan S, Hamer J, Almeida T (2021) Generate: a NLG system for educational content creation. Educational Data Mining. Retrieved from https://api.semanticscholar.org/CorpusID:246471896

Papineni K, Roukos S, Ward T, Zhu WJ (2002) Bleu: a method for automatic evaluation of machine translation. In: Proceedings of the 40th annual meeting of the Association for Computational Linguistics (pp. 311-318)

Lin CY (2004) Rouge: a package for automatic evaluation of summaries. In: Text summarization branches out, pp 74–81

Banerjee S, Lavie A (2005) METEOR: An automatic metric for MT evaluation with improved correlation with human judgments. In: Proceedings of the acl workshop on intrinsic and extrinsic evaluation measures for machine translation and/or summarization, pp 65-72

Vanderwende L (2007) Answering and questioning for machine reading. In: AAAI spring symposium: machine reading, p 91

Das B, Majumder M, Phadikar S, Sekh AA (2021) Automatic question generation and answer assessment: a survey. Res Pract Technol Enhanced Learn 16(1):1–15.

Xue L, Constant N, Roberts A, Kale M, Al-Rfou R, Siddhant A, Barua A, Raffel C (2020) mT5: A massively multilingual pre-trained text-to-text transformer. arXiv preprint arXiv:2010.11934

Bhattacharjee A, Hasan T, Ahmad W, Shahriyar R (2023) BanglaNLG and BanglaT5: benchmarks and resources for evaluating low-resource natural language generation in bangla. In: In findings of the association for computational linguistics: EACL 2023, pp 714–723

Rajpurkar P, Jia R, Liang P (2018) Know What You Don’t Know: unanswerable questions for SQuAD. ACLWeb. Association for Computational Linguistics, Melbourne, Australia, pp 784–789

Clark JH, Choi E, Collins M, Garette D, Kwiatkowski T, Nikolaev V, Palomaki J (2020) TYDI QA: a benchmark for information-seeking question answering in typologically diverse languages, vol 8. MIT Press, Cambridge, pp 454–470

Bhattacharjee A, Hasan T, Ahmad WU, Samin K, Islam MS, Iqbal A, Rahman S, Shahriyar R (2022) BanglaBERT: language model pretraining and benchmarks for low-resource language understanding evaluation in Bangla. In: Findings of the association for computational linguistics: NAACL 2022. https://doi.org/10.18653/v1/2022.findings-naacl.98

Du X, Shao J, Cardie C (2017) Learning to Ask: neural question generation for reading comprehension. ArXiv (Cornell University). https://doi.org/10.48550/arXiv.1705.00106

Radford A, Narasimhan K, Salimans T, Sutskever I (2018) Improving language understanding by generative pre-training

Sun X, Liu J, Lyu Y, He W, Ma Y, Wang S (2018) Answer-focused and position-aware neural question generation. In: Proceedings of the 2018 conference on empirical methods in natural language processing, pp 3930–3939

Kim Y, Lee H, Shin J, Jung K (2019) Improving neural question generation using answer separation. In: Proceedings of the AAAI conference on artificial intelligence, Vol. 33, No. 01, pp 6602–6609

Klein T, Nabi M (2019) Learning to answer by learning to ask: Getting the best of gpt-2 and bert worlds. arXiv preprint. ArXiv (Cornell University). arXiv:1911.02365

Chan YH, Fan YC (2019) A recurrent BERT-based model for question generation. In: Proceedings of the 2nd workshop on machine reading for question answering, pp 154–162

Ruma JF, Mayeesha TT, Rahman RM (2023) Transformer based answer-aware bengali question generation. Int J Cogn Comput Eng 4:314–326

Scialom T, Piwowarski B, Staiano J (2019) Self-attention architectures for answer-agnostic neural question generation. In: Proceedings of the 57th annual meeting of the association for computational linguistics, pp 6027–6032

Ahmad W, Chi J, Tian Y, Chang KW (2020) PolicyQA: a reading comprehension dataset for privacy policies. In: Findings of the association for computational linguistics: EMNLP 2020, pp 743–749

Lamba D (2021) Deep learning with constraints for answer-agnostic question generation in legal text understanding. Kansas State University, Manhattan

Vaswani A, Shazeer N, Pamar N, Uszkoreit J, Jones L, Gomez AN, Kaiser L, Polosukhin I (2017) Attention is all you need. In: Proceedings of the 31st international conference on neural information processing systems, vol 30, Curran Associates Inc, USA, pp 6000–6010

Kumar V, Joshi N, Mukherjee A, Ramakrishnan G, Jyothi P (2019) Cross-lingual training for automatic question generation. ArXiv (Cornell University) arXiv preprint. arXiv:1906.02525

Wiwatbutsiri N, Suchato A, Punyabukkana P, Tuaycharoen N (2022) Question generation in the thai language using MT5. In: 2022 19th international joint conference on computer science and software engineering (JCSSE). IEEE, USA, pp 1–6

Bhattacharjee A, Hasan T, Samin K, Rahman MS, Iqbal A, Shahriyar R (2021) BanglaBERT: Combating embedding barrier for low-resource language understanding. ArXiv (Cornell University). arXiv:2101.00204

Aurpa TT, Ahmed MS, Rifat RK, Anwar MM, Ali AS (2023) UDDIPOK: a reading comprehension based question answering dataset in Bangla language, vol 47. Elsevier, Amsterdam, p 108933

Raffel C, Shazeer N, Roberts A, Lee K, Narang S, Matena M, Zhou Y, Li W, Liu PJ (2020) Exploring the limits of transfer learning with a unified text-to-text transformer. J Mach Learn Res 21(1):5485–5551

Mishra S, Goel P, Sharma A, Jagannatha A, Jacobs DR, Daumé H (2020) Towards automatic generation of questions from long answers. ArXiv (Cornell University). https://doi.org/10.48550/arxiv.2004.05109

Sennrich R, Haddow B, Birch A (2016) Neural machine translation of rare words with subword units. In: Proceedings of the 54th annual meeting of the association for computational linguistics vol 1, Association for Computational Linguistics, Berlin, Germany. pp 1715–1725. https://doi.org/10.18653/v1/p16-1162

flax-community/gpt2-bengali (2023)· Hugging Face. (n.d.). Huggingface.co. https://huggingface.co/flax-community/gpt2-bengali

Sharma S, Asri LE, Schulz H, Zumer J (2017) Relevance of unsupervised metrics in task-oriented dialogue for evaluating natural language generation. ArXiv (Cornell University). https://doi.org/10.48550/arXiv.1706.09799

Acknowledgements

This work was supported by the Faculty Research Grant [CTRG-22-SEPS-07], North South University, Bashundhara, Dhaka 1229, Bangladesh.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Fahad, A.R., Al Nahian, N., Islam, M.A. et al. Answer Agnostic Question Generation in Bangla Language. Int J Netw Distrib Comput 12, 82–107 (2024). https://doi.org/10.1007/s44227-023-00018-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s44227-023-00018-5