Abstract

Artificial intelligence (AI) tools, notably ChatGPT, are increasingly recognised for their transformative potential in higher education. This study employs a detailed case study approach complemented by a survey to examine ChatGPT’s impact on pedagogical practices, student engagement, and academic performance. It involved 74 undergraduate and postgraduate students enrolled in data analytics courses in Australia. The quantitative analysis highlights ChatGPT’s role in providing personalised and on-demand support, which users value highly for its flexibility and responsiveness and which meets a critical demand in educational settings. Notably, the study identifies an effect size of \(\eta ^2 = 0.173\) for perceived benefits, indicating that approximately 17.3% of the variance in perceived benefits is associated with differences in ChatGPT usage. However, ChatGPT’s limited understanding of complex queries and the lack of human interaction are the primary concerns, with an effect size of \(\eta ^2 = 0.289\) for perceived challenges pointing to areas for improvement. Furthermore, statistical analyses reveal a clear relationship between the frequency of ChatGPT usage and the perception of its benefits, underscoring the transformative potential for users who have integrated it into their academic practices. Despite these challenges, the differential impact on users versus non-users highlights the potential for ChatGPT to foster more engaging and effective educational practices. The findings advocate for targeted strategies to optimise ChatGPT’s integration into educational settings, emphasising the need for ongoing research and the development of comprehensive guidelines to navigate its complexities and maximise its educational benefits.


1 Introduction

The rapid advance of artificial intelligence (AI) technologies, notably OpenAI’s Generative AI (GenAI) chatbot, ChatGPT, has precipitated numerous opportunities and challenges across sectors, especially in Information and Communication Technology (ICT) education [1]. GenAI tools offer a broad spectrum of functionalities, from producing detailed, coherent responses and condensing complex data to generating images and solving complex problems. Although these tools hold immense promise, their application in educational settings has spurred debate among educators, researchers, and policymakers due to potential concerns regarding academic integrity and misinformation [2, 3].

Proponents of such AI tools contend that they can encourage ethical and effective usage, enabling students to engage both creatively and critically [3]. This polarity mirrors the overarching debate on AI’s role in education, wherein educators seek to strike a balance between potential benefits and risks. Existing research on ChatGPT underscores its ability to emulate human tutor performance, delivering personalised instructions and feedback [4]. Research has shown that educators are using these AI tools for diverse purposes, such as creating lesson plans and tests [5]. AI tools are also being used to support students learning English as a foreign language [6]. Despite these promising outlooks, other research has revealed potential misuse, as students might resort to using ChatGPT for cheating, highlighting the demand for ethical guidelines and responsible usage in educational settings [7].

Apart from its potential classroom applications, ChatGPT’s utility in academic research has also been probed. Studies have shown that ChatGPT can accurately categorise open-text responses, streamline data analysis, and revolutionise academic writing and communication [8, 9]. Despite these findings, little existing research has examined the ways students interact with ChatGPT. Thus, there is a pressing need to investigate how students are using ChatGPT and to explore methods that would guide them to use it as a tutor or as an assistive digital tool, rather than simply relying on it to provide solutions.

To address this gap, the present study expands upon our previous work [10]. Our earlier research encompassed a controlled experiment involving three distinct case studies with human–computer interaction (HCI) students at both postgraduate and undergraduate levels. The current study builds on these prior efforts and introduces fresh insights into the area. The research began with the formulation of targeted research questions, which laid the groundwork for an experimental design and the subsequent development of an appropriate survey instrument. This instrument focused on students’ experiences in their interactions with ChatGPT, specifically while performing data analytics tasks. In this task-based experiment, the students were asked to use ChatGPT as an assistive tool. The survey then captured their evaluations of the tool, including its effectiveness in enhancing their learning process, their overall satisfaction with the educational experience provided, and any challenges they encountered during their interaction with ChatGPT.

To ensure a representative understanding of the subject, a stratified random sample of students in a selected Australian higher education institution was surveyed. This sampling technique, together with an appropriately determined sample size, made it feasible to identify significant group differences. Upon collection, responses were processed to ensure data quality and readiness for analysis. This involved discarding any incomplete responses and performing additional data cleaning and preparation as necessary. Several statistical tests, including Chi-Square and ANOVA, were then employed to examine the academic use of ChatGPT and to discern the potential benefits, challenges, and differences among groups or variables.

Building upon our prior research [10], this study aims to delve deeper into students’ interactions with ChatGPT, examining its use as an assistive tool in higher education. The research questions guiding this study are:

1. How do data analytics students use ChatGPT for academic purposes, and what tasks do they use it for?

2. What specific benefits and challenges do students perceive in using ChatGPT for academic purposes, and how do these perceptions align with their actual learning outcomes and engagement?

3. How does the use of ChatGPT differentially impact the academic performance and engagement of users versus non-users, and what implications do these findings have for enhancing educational practices and strategies?

To this end, Sect. 2 of this paper outlines the related work, while the specific methodological details and results of these analyses are detailed in Sects. 3 and 4, respectively. A comprehensive discussion of the results is provided in Sect. 5. Section 6 outlines the limitations of the study. Sections 7 and 8 offer recommendations and conclusions, respectively.

2 Related works

The discourse around AI tools, particularly ChatGPT, in educational settings has seen diverse perspectives focusing on their pedagogical utility, ethical considerations, and potential challenges. This section delineates the related works into subsections corresponding to the study’s objectives, offering a focused review of existing literature in these domains.

2.1 Pedagogical utility of ChatGPT in higher education

A growing body of research underscores the pedagogical potential of ChatGPT in fostering engaging and interactive learning experiences. Studies suggest that ChatGPT can serve as a versatile tool in education, offering personalised assistance, answering queries, providing feedback, and facilitating access to study resources [11,12,13]. Moreover, according to [14], the adoption of ChatGPT in blended learning methodologies, particularly in engineering education, reflects its capacity to enhance student confidence and engagement, albeit with concerns about its impact on developing core competencies. Similarly, its applicability in enhancing language learners’ performance highlights the breadth of ChatGPT’s utility across academic disciplines [15].

2.2 Reflective and critical thinking enhancement

The role of ChatGPT and similar tools in promoting reflective and critical thinking skills has been noted, with evidence suggesting their efficacy in stimulating creativity, problem-solving skills, and conceptual comprehension [16]. These findings position ChatGPT as a valuable “object-to-think-with,” capable of contributing to a deeper, more reflective learning process as reported in [17] and [18].

2.3 Challenges and ethical considerations

Despite the promising applications of ChatGPT, concerns regarding information accuracy, academic integrity, and the potential reduction in human interaction have emerged [16, 19]. The ethical implications of deploying AI tools in education such as those reported in [20] necessitate a careful examination of their impact on learning dynamics and the development of guidelines to ensure responsible use [19].

2.4 Perceptions of ChatGPT’s utility in education

An exploration of students’ perceptions reveals a generally favourable view of ChatGPT, attributed to its engaging, motivating nature and the quality of interaction it offers [21, 22]. Nonetheless, the tool’s occasional inaccuracies and the prerequisite of foundational knowledge for effective use present notable limitations [19]. These insights underscore the complexity of integrating ChatGPT into educational practices and highlight the need for continued improvement and ethical considerations [10].

2.5 Contributions of this study

This research builds upon and extends the findings of existing studies by delving deeper into the application, perceptions, and ethical dimensions of ChatGPT usage in an Australian higher education setting. Through empirical investigation and comprehensive analysis, this study aims to enrich the discourse on AI’s role in education, contributing to a more informed understanding and implementation of these technologies. It distinguishes itself by:

1. Providing a detailed exploration of ChatGPT’s pedagogical utility, offering empirical evidence to substantiate its impact on teaching and learning practices. The focus is on how ChatGPT supports and enhances academic outcomes.

2. Investigating the potential of ChatGPT to augment reflective and critical thinking skills, thereby contributing to the development of cognitive and creative competencies among students studying data analytics.

3. Addressing the multifaceted challenges and ethical considerations associated with ChatGPT’s use in education, including concerns related to accuracy, academic integrity, and the dynamics of human–AI interaction.

4. Capturing a wide range of student perceptions regarding ChatGPT’s utility, benefits, limitations, and its broader impact on the educational landscape. This includes an examination of how ChatGPT aligns with or diverges from existing pedagogical practices and student needs.

This study offers a comprehensive analysis that bridges the gap in the current literature. It also provides recommendations that set the foundation for further research into the effective integration of AI technologies in educational settings, to enhance student engagement, learning processes, and overall educational outcomes.

3 Research methodology

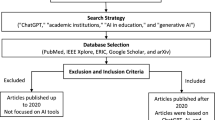

The research was designed to assess the impact of ChatGPT on students’ learning, with a specific focus on understanding students’ experiences, perceived effectiveness, and any challenges encountered with ChatGPT. The free version of ChatGPT 3.5 was used by students involved in this study. Figure 1 depicts the methodology used in this research. This methodology will be explained further in the subsequent sections.

3.1 Participants

The survey participants comprised students from an Australian higher education institution. In total, 74 students were surveyed, of whom 65% were from business courses and the remaining 35% were from ICT courses. Both groups of students were studying a data analytics unit. The majority of participants were undergraduate students (approximately 73%), while the remaining 27% were postgraduate students.

3.2 Method

In the study, a combination of methods was used to introduce students to ChatGPT and assess their understanding. A 10-minute video presentation was initially shown, covering the basics of ChatGPT, prompting, functionality, educational applications, and the ethical considerations and limitations of AI in education.

Following the video, a hands-on tutorial session was conducted, allowing for direct interaction with ChatGPT and exploration of its features. To measure the students’ knowledge before and after exposure to the video, a short quiz was administered. This quiz was designed to evaluate their understanding of ChatGPT’s capabilities, its relevance in their coursework, and awareness of its limitations. The study’s approach, integrating visual, interactive, and evaluative elements, aimed to enhance the overall learning experience and deepen the students’ comprehension of ChatGPT in a higher education context.

Because the students were studying data analytics, they were specifically asked to use ChatGPT for data exploration, hypothesis testing, and inferential analysis. Following these activities, students were asked to fill out an online survey that was distributed via the Learning Management System (LMS). In-class encouragement was provided for survey completion. The survey was conducted unsupervised and was not time-bound, allowing students to complete it at their convenience.

3.3 Data collection and handling

Of the 74 initial respondents, 67 completed the survey in full (approximately 91%). The remaining responses were removed during data cleaning, so the data set retained for analysis consists entirely of complete responses, which supports the reliability of the collected data.

3.4 Survey instrumentation and response analysis

The survey instrument was structured to capture a broad range of responses, using both categorical and Likert scale items. The detailed breakdown of responses includes:

- Age group: Coded from 1 to 4, with a mean of 1.90 and a standard deviation of 0.926. This coding aligns with the age brackets defined in the survey design, with ‘1’ representing the youngest bracket and ‘4’ the oldest, reflecting a young demographic skew.

- Gender identity: Responses ranged from 1 to 2, with a mean of 1.47 and a standard deviation of 0.503, suggesting a balanced gender representation across the binary options provided.

- Education level: Varied from 10 to 17 years of education, with a mean level of 15.08, highlighting a highly educated sample. This spread from secondary education to postgraduate studies indicates the educational diversity of the participants.
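Coded means of this kind can be read back as proportions. The following is a minimal sketch, assuming a hypothetical list of responses coded 1 or 2 (the study’s raw data are not reproduced here): for such a variable, the mean minus 1 equals the share of respondents coded 2, and the sample standard deviation follows from the same values.

```python
from statistics import mean, stdev

# Hypothetical responses coded 1 or 2 (illustrative only, not the study's data).
responses = [1] * 8 + [2] * 7

m = mean(responses)
share_coded_2 = m - 1   # with 1/2 coding, mean - 1 is the proportion coded 2
sd = stdev(responses)   # sample standard deviation (n - 1 denominator)

print(f"mean={m:.2f}, share coded 2={share_coded_2:.2f}, sd={sd:.3f}")
```

Read this way, a mean close to 1.5 corresponds to a near-even split between the two codes.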

3.5 Survey instrumentation

The survey instrument employed in this study was designed to capture comprehensive insights into students’ experiences, perceptions, and satisfaction levels with ChatGPT in academic settings. The survey consisted of multiple sections, each aimed at exploring different facets of ChatGPT usage within higher education.

3.5.1 Survey design

The survey comprised 27 items, incorporating both open-ended and closed-ended questions. Closed-ended questions predominantly utilised a 5-point Likert scale, ranging from “Strongly Disagree” to “Strongly Agree.” This scale facilitated the measurement of respondents’ attitudes towards ChatGPT’s impact on their learning and engagement, along with the perceived benefits and challenges of using AI tools in educational contexts. The Likert scale was chosen for its recognised psychometric properties, particularly its ability to measure the intensity of respondents’ feelings in a reliable manner.
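As an illustration of how Likert responses of this kind are typically turned into the means and standard deviations reported later, the sketch below codes each label from 1 to 5 and summarises a single item. The three intermediate labels, the function name `summarise_item`, and the answers are assumptions made for illustration; the source names only the two endpoints of the scale.

```python
from statistics import mean, stdev

# Assumed labels: only the two endpoints are named in the survey description.
LIKERT_CODES = {
    "Strongly Disagree": 1,
    "Disagree": 2,
    "Neutral": 3,
    "Agree": 4,
    "Strongly Agree": 5,
}

def summarise_item(answers):
    """Return (mean, sample SD) of one Likert item after numeric coding."""
    scores = [LIKERT_CODES[a] for a in answers]
    return mean(scores), stdev(scores)

# Hypothetical answers to a single item (not the study's data).
answers = ["Agree", "Strongly Agree", "Neutral", "Agree", "Disagree"]
item_mean, item_sd = summarise_item(answers)
print(f"mean={item_mean:.2f}, sd={item_sd:.3f}")
```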

3.5.2 Content of the survey

The questionnaire was segmented into sections that addressed:

- Demographic information (age, gender identity, highest level of education).

- Familiarity and prior usage of ChatGPT and other AI applications in educational settings.

- Specific targeted use-case based questions on ChatGPT, including academic guidance, course information, mental health support, and others.

- Satisfaction levels with the assistance provided by ChatGPT and its perceived impact on student engagement.

- Perceived benefits of using ChatGPT, including personalized assistance, on-demand support, improved academic results, and enhanced engagement.

- Challenges and limitations encountered while using ChatGPT, such as privacy concerns, technical issues, lack of human interaction, and limited understanding of complex queries.

3.5.3 Validity and reliability

Before launch, the survey underwent a rigorous validation process to ensure its appropriateness for the research objectives. The survey design and content were critically reviewed and refined by the research team to ensure clarity, relevance, and comprehensiveness, so that the instrument captured the data needed to fulfil the study’s aims. Additionally, to anchor the survey to the theoretical framework, items were developed based on constructs defined in the literature review, ensuring that each question reflected an aspect of the theoretical underpinnings discussed. Content validity, a crucial aspect of survey validation, was assessed through a process akin to the methodologies outlined in established literature, such as the sequential mixed-method approach advocated in [23]. This approach, as discussed in [24], emphasises the collaborative efforts between content experts and assessment specialists in refining and validating assessment tools. Moreover, in alignment with the argument presented in [25], which highlights the importance of expert judgement in establishing content validity for assessments related to vocational knowledge and skills, our study similarly engaged experts in the field to ensure the comprehensiveness and appropriateness of survey items. This alignment with established methodologies and best practices in survey validation was further affirmed by the approval of the ethical clearance department, underscoring the survey’s robust content validity.

3.5.4 Administration of the survey

The survey was administered electronically via the Learning Management System (LMS), ensuring broad reach and easy access. A detailed informed consent page prefaced the survey, informing participants about the study’s objectives, the voluntary nature of participation, and the anonymity of responses, alongside the measures taken to ensure data privacy and integrity.

4 Results

This section presents the findings from the survey, structured to address the main research questions concerning the impact of ChatGPT in higher education settings.

4.1 Demographic and general use information

We analysed the responses from 74 participants, leading to 67 valid responses after data cleaning. The demographic distribution, as summarised in Table 1, reflects the diverse sample in terms of age, gender, and education level.

4.2 Analysis of ChatGPT’s impact in higher education

This subsection synthesises the perceived benefits and challenges of ChatGPT as reported by participants, accompanied by statistical evidence.

4.2.1 Perceived benefits and challenges

In evaluating ChatGPT’s role within higher education, participants demonstrated strong approval for the assistance it provides, with an average satisfaction rate of 3.88 out of 5. This level of satisfaction reflects the considerable value that ChatGPT offers in facilitating academic pursuits. Furthermore, its impact on academic engagement, rated at 3.18, substantiates ChatGPT’s efficacy in creating an interactive and dynamic learning environment.

Figure 2 shows a clear preference for ChatGPT’s personalised assistance and on-demand support among users. This inclination toward personalised and instantaneous support indicates ChatGPT’s success in meeting a vital demand within the educational sector for flexible and responsive learning tools.

While ChatGPT significantly benefits users, it also introduces specific challenges, as illustrated in Fig. 3. Rather than technical issues, it was the limited understanding of complex queries and the lack of human interaction that stood out as the primary concerns among participants. This finding suggests areas of improvement for ChatGPT, particularly in enhancing its ability to process and respond to complex inquiries and in providing interactions that more closely mimic the nuances of human communication.

Addressing these challenges is crucial for developers and educators aiming to optimise the use of AI technologies in education. Improving ChatGPT’s comprehension capabilities and finding innovative ways to imbue AI interactions with a sense of human touch are essential steps forward.

The summarised data in Table 2 underscore these insights, with standard deviation scores reflecting the range of experiences among respondents. Despite the identified challenges, the overall benefit score of 4.14 highlights a predominantly positive attitude towards the inclusion of ChatGPT in educational settings. To interpret Table 2 more fully, it is useful to consider how perceptions of ChatGPT vary across the different metrics of utility and satisfaction.

For instance, the satisfaction score, which averages 3.88 (out of a maximum of 5) with a standard deviation of 0.896, suggests that while the majority of respondents are satisfied, the degree of satisfaction varies somewhat among users. This variability could stem from different expectations, user experiences, or even the specific educational contexts in which ChatGPT is employed.

Similarly, the Perceived Impact on Engagement score, averaging 3.18, indicates a positive but more moderate experience. The standard deviation of 0.765 points to a range of perceptions regarding how effectively ChatGPT engages users, although this spread is slightly narrower than that observed for satisfaction (0.896). This variance might reflect the diverse ways in which engagement is measured or experienced by individuals, highlighting the importance of personalising AI tools to suit varying educational needs and learning styles.

The overall benefit score, which stands at 4.14 with a standard deviation of 2.912, is particularly noteworthy. The relatively high standard deviation signifies a wide spectrum of perceived benefits among respondents.

4.2.2 Statistical evidence

The ANOVA results presented in Tables 3 and 4 offer a quantitative analysis of how the use of ChatGPT and similar AI tools affects users’ perceptions of benefits and challenges within the educational sector.

Table 3 focuses on the perceived benefits of ChatGPT, revealing a significant difference between groups with an F-value of 2.851 and a significance level of 0.012. This outcome suggests that the experience of using ChatGPT significantly varies across different user groups, indicating a pronounced effect on how its benefits are perceived. Notably, the partial eta squared (\(\eta ^2\)) value of 0.173 indicates that approximately 17.3% of the variance in perceived benefits can be attributed to differences in ChatGPT usage among various groups. This significant proportion underscores the tangible impact that ChatGPT can have on enhancing academic experiences, with its utilisation accounting for a substantial variance in how its benefits are perceived.

Conversely, Table 4 examines the perceived challenges associated with ChatGPT use, showing a lower F-value of 1.422 and a significance level of 0.194. This result suggests some variation in how users perceive challenges related to ChatGPT, although it does not reach statistical significance. The partial eta squared (\(\eta ^2\)) value of 0.289 nonetheless indicates that nearly 28.9% of the variance in perceived challenges in this sample is associated with the usage of ChatGPT. Because the group difference is not statistically significant, this proportion should be interpreted with caution, but it suggests that users’ experiences with ChatGPT may influence their perceptions of challenges, emphasising the need to address and mitigate these challenges to enhance the user experience further.

The statistical evidence shows a clear relationship between ChatGPT usage and the perception of its benefits and challenges. Users who engage more frequently with ChatGPT tend to report higher benefits, possibly due to increased familiarity and proficiency with the AI tool. On the other hand, the significant variance in perceived challenges suggests that more frequent users may also be more attuned to the limitations and areas for improvement within ChatGPT’s application in educational contexts.
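To make the reported quantities concrete, the following is a minimal sketch of a one-way between-groups ANOVA that returns the F-value and eta squared. It assumes a single grouping factor, in which case partial eta squared coincides with eta squared (the between-groups sum of squares divided by the total). The function name `one_way_anova`, the three groups, and the scores are hypothetical; the source does not specify the grouping variable or the software used.

```python
from statistics import mean

def one_way_anova(groups):
    """Return (F, eta_squared) for a one-way between-groups ANOVA."""
    all_values = [x for g in groups for x in g]
    grand_mean = mean(all_values)
    group_means = [mean(g) for g in groups]

    # Variation of group means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (gm - grand_mean) ** 2
                     for g, gm in zip(groups, group_means))
    # Variation of individual scores around their own group mean.
    ss_within = sum((x - gm) ** 2
                    for g, gm in zip(groups, group_means) for x in g)

    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_value = (ss_between / df_between) / (ss_within / df_within)
    eta_squared = ss_between / (ss_between + ss_within)
    return f_value, eta_squared

# Hypothetical benefit scores for three usage-frequency groups (not the study's data).
groups = [[2, 3, 3, 4], [3, 4, 4, 5], [4, 5, 5, 6]]
f_value, eta_squared = one_way_anova(groups)
print(f"F={f_value:.3f}, eta squared={eta_squared:.3f}")
```

In this setting the two statistics are linked by \(\eta ^2 = F \cdot df_1 / (F \cdot df_1 + df_2)\), where \(df_1\) and \(df_2\) are the between-groups and within-groups degrees of freedom, so the same F-value can correspond to different effect sizes depending on the number of groups and respondents.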

4.3 Comparative analysis of Chatbots/AI users and non-users

The investigation into the distinctions between users and non-users of ChatGPT and other AI applications reveals significant contrasts in both usage patterns and the perceived advantages of these technologies. The insights drawn from Tables 5 and 6 underscore not only the quantitative differences but also the qualitative impact of such technologies in educational environments.

The data captured in Table 5 demonstrate that users of Chatbots/AI engage with a wider range of tasks and perceive greater benefits, with mean scores indicating a substantial differential in both usage intensity and satisfaction levels. This variance suggests that exposure to and familiarity with ChatGPT significantly enhance users’ perceptions of its utility and effectiveness in supporting their academic endeavours.

The inferential statistics provided in Table 6 further solidify these observations. The significance levels obtained from Levene’s test and the t-tests point towards a robust disparity in the academic integration of ChatGPT. The mean difference values, in particular, highlight the gap in perceived benefits, underscoring the transformative potential of ChatGPT for users who have integrated it into their academic practices.
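The comparison described here can be sketched as follows, assuming two independent groups of scores. The sketch computes Levene’s W (in its mean-centred form, which is an assumption since the source does not state the variant), the pooled-variance t statistic, and Welch’s t statistic. The function names and the data are hypothetical, and p-values are omitted because they require the F and t distributions, which the Python standard library does not provide.

```python
from math import sqrt
from statistics import mean, variance

def levene_w(a, b):
    """Levene's W for two groups, using absolute deviations from group means."""
    mean_a, mean_b = mean(a), mean(b)
    za = [abs(x - mean_a) for x in a]
    zb = [abs(x - mean_b) for x in b]
    za_bar, zb_bar, z_bar = mean(za), mean(zb), mean(za + zb)
    between = len(za) * (za_bar - z_bar) ** 2 + len(zb) * (zb_bar - z_bar) ** 2
    within = (sum((z - za_bar) ** 2 for z in za)
              + sum((z - zb_bar) ** 2 for z in zb))
    n_total = len(za) + len(zb)
    # With k = 2 groups, the factor (N - k) / (k - 1) reduces to N - 2.
    return (n_total - 2) * between / within

def student_t(a, b):
    """Independent-samples t statistic with pooled (equal) variances."""
    pooled = (((len(a) - 1) * variance(a) + (len(b) - 1) * variance(b))
              / (len(a) + len(b) - 2))
    return (mean(a) - mean(b)) / sqrt(pooled * (1 / len(a) + 1 / len(b)))

def welch_t(a, b):
    """Independent-samples t statistic without assuming equal variances."""
    return (mean(a) - mean(b)) / sqrt(variance(a) / len(a) + variance(b) / len(b))

# Hypothetical perceived-benefit scores (not the study's data).
users = [3, 4, 5, 4]
non_users = [1, 4, 2, 1]

mean_difference = mean(users) - mean(non_users)
w = levene_w(users, non_users)
t_pooled = student_t(users, non_users)
t_welch = welch_t(users, non_users)
print(f"mean difference={mean_difference}, W={w:.3f}, "
      f"t (pooled)={t_pooled:.3f}, t (Welch)={t_welch:.3f}")
```

A common convention is to consult Levene’s test first and report the pooled t-test when equal variances are plausible and Welch’s version otherwise; with equal group sizes, as in this toy example, the two t statistics coincide.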

These statistical differences shed light on the potential barriers to adoption for non-users. The distinct variance in perceived benefits between the two groups may also reflect broader themes within the educational technology adoption landscape, including access to resources, digital literacy, and openness to technological innovation.

Implications for educational practice and policy

This comparative analysis reveals the necessity for targeted strategies to promote broader adoption and effective utilisation of AI technologies like ChatGPT in educational settings. Educators and policy makers should consider these findings in their planning and development of digital literacy programs, aiming to bridge the existing gap between users and non-users. Furthermore, the insights suggest a need for ongoing support and resources to help integrate GenAI tools like ChatGPT more fully into pedagogical strategies, thereby enhancing its perceived value and efficacy across a broader segment of the academic community.

4.4 Detailed analysis

This section extends the investigation into the utilisation patterns of ChatGPT and Chatbots within the academic environment, leveraging a Chi-Square test to elucidate the relationship between the usage of these AI technologies and the types of academic tasks they support.

Variables analysed

The analysis differentiates between respondents who have utilised ChatGPT or Chatbots (“Yes”) and those who have not (“No”), across various task categories such as academic guidance, course information retrieval, among others.

As shown in Table 7, the statistical significance indicated by the Pearson Chi-Square and Likelihood Ratio values (\(p < .05\)) confirms a distinct association between the usage of ChatGPT and the specific academic tasks it is employed for. This suggests not merely a widespread integration of ChatGPT into academic practices but also a discerning application tailored to particular educational needs and contexts.
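For readers less familiar with these two statistics, the sketch below computes the Pearson Chi-Square and the Likelihood Ratio statistic for a contingency table of counts. The function name `chi_square_tests`, the 2 × 3 table layout, and the counts are hypothetical and do not reproduce Table 7. The p-value line relies on the fact that, for exactly 2 degrees of freedom, the chi-square survival function has the closed form \(e^{-x/2}\); other degrees of freedom would need a statistics library.

```python
from math import exp, log

def chi_square_tests(table):
    """Return (pearson, likelihood_ratio, df) for an r x c table of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)

    pearson = 0.0
    likelihood_ratio = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence of rows and columns.
            expected = row_totals[i] * col_totals[j] / n
            pearson += (observed - expected) ** 2 / expected
            if observed > 0:  # a zero cell contributes nothing to G
                likelihood_ratio += 2 * observed * log(observed / expected)

    df = (len(table) - 1) * (len(table[0]) - 1)
    return pearson, likelihood_ratio, df

# Hypothetical counts: rows = used ChatGPT (Yes, No); columns = three task categories.
table = [[20, 15, 5], [5, 10, 15]]
pearson, likelihood_ratio, df = chi_square_tests(table)

# Closed-form p-value, valid only because df == 2 for this table.
p_value = exp(-pearson / 2)
print(f"Pearson={pearson:.3f}, G={likelihood_ratio:.3f}, df={df}, p={p_value:.4f}")
```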

Interpreting the findings

The diverse application of ChatGPT across academic tasks underscores its versatility and adaptability as a tool within higher education. The significant association found through the Chi-Square test may reflect broader trends in educational technology, where AI tools are increasingly designed or selected based on their capability to address specific academic challenges or enhance particular learning outcomes.

Moreover, the variance in usage patterns could indicate differing levels of acceptance or recognition of GenAI’s potential across disciplines, potentially influenced by the unique demands or technological readiness of those fields. It also highlights the opportunity for further research into best practices for integrating GenAI technologies like ChatGPT across various educational settings, aiming to maximise their benefits and mitigate any identified challenges.

5 Discussion

This study’s findings contribute significantly to our understanding of ChatGPT’s role in Australian higher education, aligning with and expanding upon existing literature in the domain of AI-assisted learning.

5.1 Perceived benefits and challenges

The empirical evidence suggests that ChatGPT enhances pedagogical practices through personalised and on-demand support, corroborating previous studies that highlight the potential of AI in creating customised learning experiences [26]. Specifically, the high levels of satisfaction (Mean = 3.88) among students regarding ChatGPT’s assistance underscore its effectiveness in engaging students and supporting diverse learning needs.

Consistently, the benefits recognised by participants, including improved academic results and student engagement, mirror findings from prior research indicating AI tools’ capability to positively influence educational outcomes [4]. However, the study also underscores challenges such as handling complex queries and the necessity of human interaction, emphasising the nuanced application of AI in education and resonating with discussions on AI’s limitations [27].

5.2 Synthesis with previous studies

Our findings align with the growing body of research advocating for AI’s integration into educational practices while also highlighting the importance of addressing associated challenges. For instance, the perceived challenges, particularly regarding complex queries and the lack of human interaction, suggest areas where ChatGPT could be further refined or complemented with traditional educational methods.

Furthermore, this study highlights the diverse applications of ChatGPT, from academic guidance to mental health support, showcasing the tool’s multifaceted potential in educational settings. This aligns with recent discussions on leveraging AI for comprehensive support beyond traditional academic boundaries, pushing for a broader conceptualisation of educational assistance.

5.3 Advancing AI integration in education: insights and implications

The exploration of ChatGPT’s role in this study yields concrete insights and practical implications, directly tied to our empirical findings. These insights relate to specific evidence from the study as follows:

- Empirical insight into student perceptions: Our findings revealed a satisfaction level of 3.88 (out of 5) for ChatGPT’s assistance and a perceived impact on engagement rated at 3.18, indicating a positive reception among students. This reflects students’ optimism but also the critical lens through which they evaluate the technology’s efficacy and limitations.

- Strategic deployment recommendations: The variance between groups in perceived benefits (\(\eta ^2 = 0.173\)) and challenges (\(\eta ^2 = 0.289\)) underscores the impact of ChatGPT in academic settings. These data support our recommendation for a balanced AI integration approach, advocating for training and guidelines that enhance utilisation while mitigating potential drawbacks.

- Policy and ethical considerations: The variance in perceived challenges highlights areas requiring attention, such as data privacy and academic integrity. These findings substantiate our call for comprehensive policies and ethical guidelines to navigate AI’s integration in education, ensuring a responsible and equitable use of technology.

- Future research directions: The statistically significant association between ChatGPT usage and diverse academic tasks, as revealed through our Chi-Square analysis, points to the adaptive application of AI tools. This variability underscores the necessity for ongoing research to explore further the longitudinal impacts of AI on pedagogical outcomes and student engagement.

These insights serve as a foundation for future research and dialogue among stakeholders, guiding the development of effective, ethical, and equitable AI-assisted pedagogical practices.
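As an illustrative aside, the two kinds of statistic cited in this section can be computed as in the following minimal Python sketch. It assumes a one-way design in which \(\eta ^2\) is the ratio of the between-group sum of squares to the total sum of squares, and it uses Pearson's formula for the Chi-Square statistic of a contingency table. The function names, group labels, and all numbers are hypothetical placeholders chosen for illustration; they are not data from this study.

```python
from statistics import mean


def eta_squared(groups):
    """Eta squared for a one-way design: SS_between / SS_total."""
    all_scores = [score for group in groups for score in group]
    grand_mean = mean(all_scores)
    # Between-group sum of squares: group size times squared deviation
    # of the group mean from the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Total sum of squares: squared deviation of every score from the grand mean.
    ss_total = sum((score - grand_mean) ** 2 for score in all_scores)
    return ss_between / ss_total


def chi_square_statistic(table):
    """Pearson Chi-Square statistic and degrees of freedom for a contingency table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            statistic += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return statistic, dof


if __name__ == "__main__":
    # Hypothetical 5-point ratings of perceived benefit for three usage groups.
    frequent_users = [4, 5, 4, 5, 4]
    occasional_users = [3, 4, 3, 4, 3]
    non_users = [2, 3, 3, 2, 3]
    print(eta_squared([frequent_users, occasional_users, non_users]))

    # Hypothetical counts: rows are usage groups, columns are academic tasks.
    usage_by_task = [[20, 10], [10, 20]]
    print(chi_square_statistic(usage_by_task))
```

Read this way, an \(\eta ^2\) of 0.173 means that about 17.3% of the total variation in the rated outcome lies between the groups being compared. The statistic describes association in the sample and does not by itself establish cause.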

6 Limitations

This study provides valuable insights but also carries limitations that warrant careful interpretation of the results. The restricted sample size, drawn from specific demographics, limits the generalisability of the findings, which may not apply across different contexts or populations. However, when these results are viewed in conjunction with our previous work [10], they contribute to a broader understanding of the dynamics at play, enhancing their applicability in similar educational settings. A more rigorous research methodology with larger and more varied samples would be necessary for a comprehensive understanding of how chatbots and ChatGPT affect student learning outcomes in Australian higher education at large. Despite these limitations, the study provides a foundation for educators and researchers in Australia and globally to explore the potential of chatbots and ChatGPT in enhancing student learning. The following key limitations of this study should be noted:

- Sample size: The small sample size and its lack of diversity may significantly limit the generalisability of the findings. Studies involving larger and more diverse samples could help to refine and validate the results.

- Self-report bias: The use of self-reported data introduces the potential for social desirability or recall biases. Future studies could benefit from supplementing self-reported data with objective measurements to mitigate these biases, as we did in our previous work [10] but not in this study.

- Lack of qualitative data: While quantitative data is excellent for establishing patterns and statistical relationships, qualitative data could provide more depth and context, especially when exploring perceptions, experiences, and attitudes towards AI in education. Future studies could use a mixed-methods approach or conduct follow-up interviews to gain richer, more nuanced insights.

- Confounding variables: Factors such as demographics, technological experience, and cultural background might have influenced the findings. Controlling for these potential confounders in future research could produce more precise results.

- Cross-sectional design: This study employs a cross-sectional design, which captures a specific point in time. Although this provides valuable insight, it does not account for changes over time or cause-effect relationships. Future research could employ longitudinal designs to investigate changes and trends over time.

7 Optimising Chatbot usage in higher education: reflections and recommendations

Reflecting on the insights garnered from this investigation, it becomes evident that ChatGPT and similar AI tools possess significant potential to enrich the educational landscape. This study sheds light on the multifaceted benefits these technologies can offer, from enhancing learning outcomes to facilitating engaging and personalised educational experiences.

Figure 4 presents a consolidated view of the recommended strategies for optimising ChatGPT’s integration into higher education. These interconnected strategies aim to enhance pedagogical practices, digital literacy, institutional support, and user-centred AI tool development, ultimately leading to an enriched educational experience.

7.1 Redefining digital competencies in the AI-enhanced educational landscape

Following the strategic overview depicted in Fig. 4, this subsection delves into the actionable insights for educators, institutional policies, and the broader educational community aiming to leverage ChatGPT within their pedagogical practices. The positive student perception towards ChatGPT, underscored by observed benefits in critical thinking, engagement, and academic performance, suggests a ripe opportunity for thoughtful integration into curricula. However, the balanced approach exhibited by students towards these tools highlights the importance of guiding students towards effective and ethical use.

7.1.1 Educational strategies

Educators are encouraged to integrate ChatGPT in ways that promote critical engagement, prompting students to question and critically evaluate AI-generated content. This can be facilitated through structured assignments that require students to cross-reference ChatGPT responses with credible sources or to use the tool to brainstorm and then critically assess the generated ideas. Furthermore, to fully realise the benefits of AI in education, a systemic redesign of the curriculum is essential. This involves fostering interdisciplinary collaborations that bridge technical expertise with the ethical, legal, and social implications of AI. For instance, computer science departments could partner with philosophy departments to offer courses on AI ethics, ensuring that students understand both the capabilities and the boundaries of AI applications.

Example

In a website design unit, students could use ChatGPT to generate initial design concepts based on user requirements. Subsequently, they would compare these AI-generated concepts against industry standards and best practices, encouraging a deep dive into design thinking and user experience principles.

7.1.2 Digital literacy and ethics

The inclusion of digital literacy modules that address the ethical use of AI tools, the evaluation of information accuracy, and the avoidance of over-reliance on AI for academic tasks is paramount. Such modules can help students navigate the digital landscape more effectively, making informed decisions about when and how to use AI tools like ChatGPT.

Example

In a programming unit, a module on “AI and Code Ethics” could be introduced, exploring the implications of using AI-generated code snippets. Students might examine case studies where AI-generated code led to security vulnerabilities, emphasising the importance of vetting and understanding code rather than copying and pasting AI suggestions blindly.
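A hypothetical classroom illustration of such a case, not drawn from this study, is sketched below in Python. It contrasts a query built by string interpolation, a pattern that a code suggestion accepted without review could contain, with a parameterised query using the standard `sqlite3` module. The table, the sample rows, and the function names are assumptions made purely for the exercise.

```python
import sqlite3


def make_demo_db():
    """Create an in-memory database with a small, made-up grades table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE students (name TEXT, grade TEXT)")
    conn.executemany(
        "INSERT INTO students VALUES (?, ?)",
        [("alice", "HD"), ("bob", "C")],
    )
    return conn


def find_grade_unsafe(conn, name):
    """Vulnerable: user input is pasted directly into the SQL text."""
    query = f"SELECT name, grade FROM students WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_grade_safe(conn, name):
    """Safer: the driver binds the input as a value, never as SQL."""
    query = "SELECT name, grade FROM students WHERE name = ?"
    return conn.execute(query, (name,)).fetchall()


if __name__ == "__main__":
    conn = make_demo_db()
    malicious_input = "x' OR '1'='1"
    # The interpolated query's WHERE clause becomes always true.
    print(find_grade_unsafe(conn, malicious_input))
    # The parameterised query treats the whole string as a literal name.
    print(find_grade_safe(conn, malicious_input))
```

Students could be asked to explain why the first function leaks every row for the crafted input while the second does not, which exercises the vetting habit described above.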

7.1.3 Institutional support and training

Institutions play a crucial role in providing the necessary support and training for both educators and students. This includes offering workshops on innovative AI applications in teaching and learning, developing policies around AI tool usage, and creating resources that guide ethical and effective use. Additionally, the role of educators is pivotal in this transition. As AI tools become more embedded in educational practices, educators themselves must be equipped with the necessary skills and knowledge. This includes professional development opportunities focused on AI applications in teaching and learning, as well as training on the ethical implications of AI. Such initiatives ensure that educators are not only users of AI technologies but are also competent in mentoring students on effective and ethical AI usage.

By redefining digital competencies to include AI-related skills, the educational landscape can adapt to better prepare students for a future where AI is ubiquitous. This adaptation not only enhances their learning experience but also ensures they are well-equipped to navigate and influence an increasingly digital world.

Example

A workshop series titled “Integrating AI into Computer Science Education” could be offered, showcasing practical ways to use ChatGPT in coding projects, website design, and algorithm development. These workshops could include sessions on evaluating AI-generated content’s reliability and integrating AI tools within the curriculum responsibly.

7.1.4 User-centred AI tool development

Engaging educators and students in the development process of AI tools can lead to more user-friendly and educationally effective solutions. Feedback loops between users and developers can inform enhancements that better align with educational needs and practices.

Example

A collaborative project between computer science students and ChatGPT developers could be initiated, aiming to create a customised version of ChatGPT tailored for coding education. Students could contribute by providing feedback on their learning experiences, suggesting new features like code debugging tips or personalised learning pathways based on the user’s coding skills.

7.1.5 Alignment with existing frameworks

In the evolving landscape of higher education, integrating Artificial Intelligence (AI) necessitates a reexamination of digital competencies within existing frameworks. This process aligns closely with recognised standards such as the European Digital Competence Framework (DigComp) and the UNESCO ICT Competency Framework for Teachers. These frameworks serve as a foundation, yet they require updates to address the nuances and complexities introduced by AI technologies.

The integration of AI-specific competencies into existing frameworks involves identifying gaps where AI can play a transformative role. Such frameworks could, for example, introduce modules focused on the ethical use of AI in education, preparing educators to handle AI tools responsibly and in an informed manner. For instance, the UNESCO ICT Competency Framework for Teachers is designed to help countries develop comprehensive teacher training programs that integrate ICT skills into educational practices. As AI becomes integral to various aspects of education, it is essential to expand this framework to include AI competencies, ensuring that teachers are prepared to use AI tools effectively and ethically in the classroom.

Another relevant framework is the European Digital Competence Framework (DigComp), which provides a detailed description of what it means to be digitally competent in today’s rapidly changing digital landscape. By incorporating AI-related competencies, such as understanding AI concepts, using AI tools for data analysis, and addressing ethical issues in AI, DigComp can offer a more comprehensive guide for individuals navigating digital environments across Europe and perhaps globally.

In Australia, the Australian Computer Society (ACS) sets standards and provides guidelines that emphasise the ethical development and use of technology, including AI. Aligning digital competency frameworks with ACS guidelines ensures that educational programs instil not only the technical skills necessary for handling AI but also the ethical reasoning and problem-solving abilities crucial in the face of AI-driven changes.

8 Conclusions

This study has shed light on the significant potential that ChatGPT and similar AI tools possess for transforming classroom practice in higher education. By examining student interactions, perceived benefits, and challenges with ChatGPT, we have unveiled its considerable impact on pedagogical practices, student engagement, and academic outcomes. Our findings highlight that, despite some challenges, students largely view ChatGPT as a beneficial tool for academic support, pointing towards its promising role in creating more inclusive and engaging learning environments.

Moreover, our research aligns with existing literature by underscoring the importance of ethical considerations and institutional support in the successful integration of AI tools into educational settings. It reaffirms the need for educational stakeholders to be proactive in addressing the challenges associated with these technologies, such as concerns over data security, plagiarism, and potential biases, to fully harness their pedagogical potential.

Looking forward, future research should examine in detail the impact of ChatGPT on student learning outcomes and explore how these tools can be integrated more effectively into classroom settings. Enhancing the external validity of future studies through larger, more diverse participant samples and broader demographic representation will be crucial in providing a more comprehensive understanding of ChatGPT’s educational implications. Additionally, adopting longitudinal studies and mixed-method research approaches could offer deeper insights into user experiences and the evolving perceptions of AI tools in education over time.

In conclusion, as the educational landscape continues to evolve with technological advancements, this study contributes valuable insights into the integration of AI tools like ChatGPT in higher education. It highlights the balance needed between leveraging the benefits of such technologies and addressing their limitations to foster ethical and effective educational practices. As we look towards the future, it is clear that there is a ripe opportunity for further research to explore the nuances of AI-assisted learning and to develop strategies that ensure its successful incorporation into educational frameworks, ultimately enriching the teaching and learning experience for all.

Data availability

The datasets generated and analysed during the current study are not publicly available due to the sensitive nature of the information, which includes responses from case studies conducted in a classroom setting and student-completed surveys. These activities were carried out with ethical approval and consent, adhering to strict confidentiality and privacy standards to protect participant anonymity. As such, the raw data are subject to ethical restrictions to prevent any potential breach of confidentiality. Aggregated data and findings that ensure participant anonymity can be made available upon reasonable request to the corresponding author, subject to compliance with ethical guidelines. Requests for access will be reviewed on a case-by-case basis to ensure they meet ethical standards for data sharing.

References

Alenizi MAK, Mohamed AM, Shaaban TS. Revolutionizing EFL special education: how ChatGPT is transforming the way teachers approach language learning. Innoeduca: Int J Technol Educ Innov. 2023;9(2):5–23.

Rudolph J, Tan S, Tan S. ChatGPT: bullshit spewer or the end of traditional assessments in higher education? J Appl Learn Teach. 2023;6:342–63.

Hostetter A, Call N, Frazier G, James T, Linnertz C, Nestle E, Tucci M. Student and faculty perceptions of artificial intelligence in student writing; 2023. https://doi.org/10.1021/acs.jchemed.3c00505

Adiguzel T, Kaya MH, Cansu FK. Revolutionizing education with AI: exploring the transformative potential of ChatGPT. Contemp Educ Technol. 2023;15:429.

Jimenez K. ChatGPT in the classroom: here’s what teachers and students are saying; 2023. https://www.mdpi.com/2071-1050/15/18/14025

Mohamed AM. Exploring the potential of an AI-based chatbot (ChatGPT) in enhancing English as a foreign language (EFL) teaching: perceptions of EFL faculty members. Educ Inf Technol. 2023;1–23. https://www.springerprofessional.de/en/exploring-the-potential-of-an-ai-based-chatbot-chatgpt-inenhanc/25518594

Study.Com: productive teaching tool or innovative cheating.

Mellon J, Bailey J, Scott R, Breckwoldt J, Miori M. Does GPT-3 know what the most important issue is? Using large language models to code open-text social survey responses at scale; 2022. https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4310154

Lund BD, Wang T, Mannuru NR, Nie B, Shimray S, Wang Z. ChatGPT and a new academic reality: artificial intelligence written research papers and the ethics of the large language models in scholarly publishing. J Assoc Inf Sci Technol. 2023;74:570–81.

Elkhodr M, Gide E, Wu R, Darwish O. ICT students’ perceptions towards ChatGPT: an experimental reflective lab analysis. STEM Educ. 2023;3(2):70–88.

Sandu N, Gide E. Adoption of AI-chatbots to enhance student learning experience in higher education in India. In: 2019 18th international conference on information technology based higher education and training (ITHET); 2019. p. 1–5.

Tang K-Y, Chang C-Y, Hwang G-J. Trends in artificial intelligence-supported e-learning: a systematic review and co-citation network analysis (1998–2019). Interact Learn Environ. 2021;1–19. https://doi.org/10.1080/10494820.2021.1875001

Yellow.ai: 10 powerful use cases of educational chatbots in 2022; 2022. https://yellow.ai/chatbots/use-cases-of-chatbots-in-education-industry/.

Sánchez-Ruiz LM, Moll-López S, Nuñez-Pérez A, Moraño-Fernández JA, Vega-Fleitas E. ChatGPT challenges blended learning methodologies in engineering education: a case study in mathematics. Appl Sci. 2023;13(10):6039.

Sakai N. Investigating the feasibility of ChatGPT for personalized English language learning: a case study on its applicability to Japanese students; 2023. https://doi.org/10.1007/s10639-024-12574-6

Rodrigues Vasconcelos MA, Santos R. Enhancing STEM learning with ChatGPT and Bing chat as objects-to-think-with: a case study (May 1, 2023); 2023.

Guo Y, Lee D. Leveraging ChatGPT for enhancing critical thinking skills. J Chem Educ. 2023;100(12):4876–83.

Minh AN. Leveraging ChatGPT for enhancing English writing skills and critical thinking in university freshmen. J Knowl Learn Sci Technol. 2024;3(2):51–62.

Shoufan A. Exploring students’ perceptions of ChatGPT: thematic analysis and follow-up survey. IEEE Access. 2023;11:38805–18. https://doi.org/10.1109/ACCESS.2023.3268224.

Panagopoulou F, Parpoula C, Karpouzis K. Legal and ethical considerations regarding the use of ChatGPT in education; 2023. arXiv preprint arXiv:2306.10037

Ngo TTA. The perception by university students of the use of ChatGPT in education. Int J Emerg Technol Learn (Online). 2023;18(17):4.

Mogavi RH, Deng C, Kim JJ, Zhou P, Kwon YD, Metwally AHS, Tlili A, Bassanelli S, Bucchiarone A, Gujar S, et al. ChatGPT in education: a blessing or a curse? A qualitative study exploring early adopters’ utilization and perceptions. Comput Hum Behav Artif Hum. 2024;2(1):100027.

Ermis-Demirtas H. Establishing content-related validity evidence for assessments in counseling: application of a sequential mixed-method approach. Int J Adv Couns. 2018;40(4):387–97.

Brijmohan A, Khan GA, Orpwood G, Brown ES, Childs RA. Collaboration between content experts and assessment specialists: using a validity argument framework to develop a college mathematics assessment. Can J Educ/Revue canadienne de l’éducation. 2018;41(2):584–600.

Suhaini M, Ahmad A, Bohari NM. Assessments on vocational knowledge and skills: a content validity analysis. Eur J Educ Res. 2021;10(3):1529–40.

Limo FAF, Tiza DRH, Roque MM, Herrera EE, Murillo JPM, Huallpa JJ, Flores VAA, Castillo AGR, Peña PFP, Carranza CPM, et al. Personalized tutoring: ChatGPT as a virtual tutor for personalized learning experiences. Przestrzeń Społeczna (Social Space). 2023;23(1):293–312.

Memarian B, Doleck T. ChatGPT in education: methods, potentials and limitations. Comput Hum Behav Artif Hum. 2023;1:100022.

Author information

Contributions

R.S. conceptualised the study, led the methodology design, and was involved in writing the original draft. E.G. contributed to the analysis and interpretation of data, and critically reviewed and revised the manuscript. E.G. and M.E. supervised the project and contributed to the conceptualisation of the work. M.E. was involved in the writing of the results and recommendation sections and the final draft of the manuscript. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Conflict of interest

The authors declare no conflict of interest related to the use of ChatGPT 4. The datasets generated during the current study are available from the corresponding author upon reasonable request. All procedures performed in the studies involving human participants were in accordance with the ethical standards of the relevant institution.

Artificial Intelligence

The authors declare that they have not used Artificial Intelligence (AI) tools in the writing of this article. However, ChatGPT 4 was employed for specific formatting tasks, such as summarising and converting tables into LaTeX and enhancing the readability of the tables. In addition, ChatGPT 4 was utilised for copyediting and proofreading certain sections, including the abstract, the limitations section, and the conclusion. The authors took precautions to ensure that ChatGPT 4 did not introduce any text that was not authored by the original writers.

ChatGPT

The authors further declare that ChatGPT 4 was used as an aid in formatting, proofreading, and improving the readability of the whole manuscript. While it assisted, the AI did not contribute to the conception, design, or intellectual content of the work. The authors were in full control of the content and had final approval of the version to be published. The use of ChatGPT 4 was strictly controlled and any suggestions it provided were reviewed and edited by the authors to ensure the final content reflects the authors’ perspectives and interpretations.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Sandu, R., Gide, E. & Elkhodr, M. The role and impact of ChatGPT in educational practices: insights from an Australian higher education case study. Discov Educ 3, 71 (2024). https://doi.org/10.1007/s44217-024-00126-6

DOI: https://doi.org/10.1007/s44217-024-00126-6